Abstract

Real-time monitoring of the weld pool during deposition is important for automatic control in plasma arc additive manufacturing. To achieve high deposition accuracy, it is essential to maintain a stable weld pool size. In this study, a novel passive visual method is proposed to measure the weld pool length. The method improves image quality through a purpose-designed visual system that combines an endoscope and a camera. It also includes pixel brightness-based and gradient-based algorithms that adaptively detect feature points on the pool boundary as the weld pool geometry changes, so that materials with different solidification characteristics can be handled. Calibration was performed to convert the measured weld pool length into world coordinates, and outlier rejection was applied to increase the accuracy of the algorithm. Finally, tests on intersection components showed that the proposed method tracked the changing weld pool length well and is applicable to the real-time monitoring of different types of materials.



1 Introduction

Plasma arc additive manufacturing (PAAM) is a promising additive manufacturing process that offers high resource efficiency and low equipment cost compared with conventional machining processes. A wide range of materials, including titanium alloys, steels, and aluminum alloys, can be used in PAAM processes [1, 2]. PAAM is characterized by a high deposition rate, which makes it suitable for large-scale components. During the deposition of mid-complexity components, the thermal masses of different parts of the component change with the geometry, which affects the thermal behavior of the weld pool. Consequently, the weld pool size does not remain constant during deposition, potentially resulting in defects in the components [3,4,5]. Some researchers have used path optimization methods to compensate for deposition defects caused by thermal mass changes. For example, a high-quality T-crossing component can be deposited by increasing the deposition distance to the intersecting area [6, 7]. Li et al. [8] proposed a new path strategy called end lateral extension (ELE) for additive manufacturing, while Davis and Shin [9] increased the wire feeding speed when depositing around an intersection to improve the deposition quality. However, these methods adjust parameters offline and do not maintain a stable weld pool size in real time. Real-time measurement of the weld pool size is therefore highly beneficial, because this information can serve as a feedback signal for deposition control. The weld pool size can then be stabilized, improving the geometric accuracy of the deposited components and preventing potential defects.

Several studies have addressed the monitoring of the weld pool size. Researchers have used additional light sources such as LED lights [10] or laser dot arrays [11] to illuminate the molten area and obtained the weld pool size by analyzing the reflected images. Dual cameras have also been used to obtain the pool width [12], and infrared cameras have been used to obtain the temperature distribution on the metal surface [13, 14]. However, the light emitted by the plasma arc has a high intensity over the entire waveband, which makes it difficult for the camera to record the LED illumination. Laser dot array systems and binocular vision systems require multiple cameras, so calibration errors accumulate across the cameras. Infrared measurement requires an estimate of the reflectivity of the weld pool surface, which changes with the pool temperature during deposition. Because of these factors, existing methods can introduce measurement errors in weld pool monitoring, resulting in low feedback control accuracy. Other researchers have focused on simpler and more practical monitoring systems. Thresholding pixel values for image segmentation can help extract the boundary of a steel weld pool [15,16,17]; however, this approach fails when the contrast around the boundary is weak [18]. Numerical simulation has been used to predict the size of titanium alloy weld pools in laser welding [19] and powder bed fusion [20], and the weld pool size has been controlled through calculations based on physical models [21]. However, various types of materials are used in plasma arc additive manufacturing, and they exhibit different reflection and radiation characteristics under the plasma arc during deposition, which limits the applicability of these pool size measurement methods.

For the deposition of mid-complexity components, such as the T-crossing component shown in Fig. 1b, the weld pool width is limited by the widths of the previously deposited layers. In this case, the weld pool size is governed primarily by the pool length. Therefore, this study proposes a novel method, suitable for different types of materials, for the real-time measurement of the weld pool length in plasma arc additive manufacturing. Using this method, the weld pool length was monitored and compared with reference data to determine the wire feeding speed that produced steady deposition and to ensure that the component was built to the proper size during the plasma arc additive manufacturing process. The remainder of this paper is structured as follows. Section 2 describes the visual monitoring system, which uses an endoscope to capture close-up, high-resolution images of the weld pool. Section 3 introduces algorithms that process the brightness and brightness gradients of the image pixels to detect the boundary between the solid and liquid metals at the rear of the weld pool. In Sect. 4, the visual system is calibrated to convert between image and world coordinates. Section 5 presents the measurement of the weld pool length from the detected feature points. Finally, in Sect. 6, the algorithms are integrated into software and tested by depositing different materials on intersection components, and a comparison of the results identifies the optimal algorithm for extracting the weld pool length for each material.

2 System overview

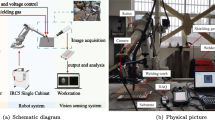

A schematic of the main components of the system is shown in Fig. 1a. The system includes a plasma arc torch, an endoscope, an XIRIS camera, and a metal blocking plate. The samples were sealed in a glovebox and moved using a 3-axis computer numerical control (CNC) system. The plasma power source, wire feeder, and computer were placed outside the glovebox and connected to the torch and camera by cables. The glovebox was filled with argon to provide global shielding and prevent oxidation of the deposited metal. During deposition, the CNC motion system moved the torch along the substrate while the endoscope and camera captured images of the weld pool. The images were transmitted to a computer, where the weld pool geometry was detected and the processed results were displayed on the monitoring screen.

The main technical specifications of the XIRIS camera are listed in Table 1. The dynamic range of the chosen camera was wide enough to record the entire plasma weld pool without over- or under-exposure. The emission spectrum of the plasma arc has several peaks within the 300–600 nm range [22], whereas the selected camera has a high spectral response within 400–650 nm. Therefore, a hard-coated bandpass interference filter with a center wavelength of 550 nm and a bandwidth of 10 nm was used to block extraneous light and record the image of the weld pool. To obtain a better image of the weld pool, a special vision system that includes a light-blocking plate was used. The endoscope was connected coaxially to the camera and turned the light path by 90°, allowing the camera to be placed beside the substrate while viewing the back of the weld pool at a vertical angle. This arrangement also protected the camera from the heat generated during deposition. To prevent the plasma arc light from degrading the image quality, a blocking plate was fixed between the endoscope and the torch. Adding the blocking plate and adjusting the position of the endoscope reduced the specular reflection of the arc light, which made the boundary of the weld pool detectable, as shown in Fig. 2. Figures 2a–c show the original image, the blocked titanium image, and the blocked steel image, respectively, and Fig. 2d plots the pixel brightness along the weld pool centerline for Figs. 2a–c. In Fig. 2a, the arc light reflected in the middle of and behind the pool hinders recognition of the boundary. The blocking plate prevents this reflection, as shown in Fig. 2b. In addition, Fig. 2d shows that the curvature of the brightness curve at the boundary position is larger in Fig. 2b than in Fig. 2a. Consequently, the change in brightness at the boundary is larger and more easily detected when the blocking plate is used. Figure 2c shows the plate applied to steel, where the boundary is even more evident than for the titanium alloy.

3 Image processing method

3.1 Problem analysis

To achieve the best detection performance at minimal computational cost, the extraction algorithm must be matched to the material. For some metals, such as steel, the boundary appears clearly in the image: the pixel brightness changes abruptly from the weld pool center to the edge, as shown in Fig. 2c. This is attributed to the rough metal surface that forms after the liquid solidifies. For other metals, such as titanium alloys, the surface brightness decreases gradually from the weld pool to the solid metal without a clear brightness contrast, as shown in Fig. 2b. A series of tests was conducted to obtain images of titanium alloy deposition at different torch speeds, currents, and wire feeding speeds, as shown in Fig. 3. These images show that the weld pool keeps its shape while its size changes, and that the pixel brightness along the pool length direction follows a consistent trend. Therefore, the weld pool boundary detection method proposed in this study includes a brightness-based algorithm and a gradient-based algorithm, corresponding to metals with different surface smoothness after solidification (steel and titanium alloy, respectively). The process is illustrated in Fig. 4.

3.2 Feature point detection

3.2.1 Determination of the ROI and CDL

Image processing was used to detect the weld pool boundary in the images captured by the monitoring system described above. In the images, the boundaries of both the steel and titanium weld pools are approximately parabolic, so the intersection between the center detecting line (CDL) and the boundary can be used as the feature point for determining the weld pool size. Image processing starts by determining the region of interest (ROI) and the CDL; the pixels on line segments parallel to the CDL are then extracted for data processing. Because useful information is contained only in the circular area of the image captured by the endoscope, the image is cropped to a rectangular ROI to reduce the computational cost. Finally, an image coordinate system is set up, as shown in Fig. 5.

The upper left corner of the image was taken as the origin \(o\), with the \(x\) axis along the image width and the \(y\) axis along the image height. Two points in the image were selected in advance and defined as the upper left corner \({P}_{\mathrm{R}1}\) and lower right corner \({P}_{\mathrm{R}3}\) of the ROI rectangle. The four corners of the ROI were defined as \({P}_{\mathrm{R}1}\left({x}_{\mathrm{R}1},{y}_{\mathrm{R}1}\right)\), \({P}_{\mathrm{R}2}\left({x}_{\mathrm{R}2},{y}_{\mathrm{R}1}\right)\), \({P}_{\mathrm{R}3}\left({x}_{\mathrm{R}2},{y}_{\mathrm{R}2}\right)\) and \({P}_{\mathrm{R}4}\left({x}_{\mathrm{R}1},{y}_{\mathrm{R}2}\right)\). Note that the ROI should enclose only the circular area in the image, as indicated by the red rectangle in Fig. 5. Moreover, because the boundary profile is parabolic, the boundary is farthest from the front of the weld pool on the centerline of the deposition layer. Therefore, a CDL can be drawn along the deposition layer to detect the feature point, that is, the farthest position of the boundary. Because the camera, endoscope, blocking plate, and torch were fixed relative to one another, the position of the CDL could be determined in advance and fixed along the deposition layer. Two points, \({P}_{\mathrm{c}1}\left({x}_{\mathrm{c}1},{y}_{\mathrm{c}1}\right)\) and \({P}_{\mathrm{c}2}\left({x}_{\mathrm{c}2},{y}_{\mathrm{c}2}\right)\), were then selected within the ROI as the start and end points of the CDL, respectively. The CDL should be as close as possible to the centerline of the deposition layer, as shown by the blue line segment in Fig. 5. The expression of the CDL in image coordinates is
\[ y={k}_{\mathrm{c}}x+{b}_{\mathrm{c}},\quad {k}_{\mathrm{c}}=\frac{{y}_{\mathrm{c}2}-{y}_{\mathrm{c}1}}{{x}_{\mathrm{c}2}-{x}_{\mathrm{c}1}},\quad {b}_{\mathrm{c}}={y}_{\mathrm{c}1}-{k}_{\mathrm{c}}{x}_{\mathrm{c}1} \]

where \({k}_{\mathrm{c}}\) is the slope of the CDL and \({b}_{\mathrm{c}}\) is the bias. A single feature point can thus be obtained from the CDL. To reduce the influence of individual extraction errors on accuracy, additional detecting lines (DLs) were drawn to obtain more feature points. Specifically, \(2N\) line segments were drawn parallel to the CDL and distributed equally on both sides of it, \(N\) on each side, as indicated by the green line segments in Fig. 5. The distance between adjacent detecting lines was fixed at \(d\). The endpoints of these line segments were obtained from the CDL endpoints by adding the increments \(\mathrm{d}x\) and \(\mathrm{d}y\). For the nth DL, the starting point \({P}_{\mathrm{L}1}^{n}\) and ending point \({P}_{\mathrm{L}2}^{n}\) can be expressed as
\[ {P}_{\mathrm{L}1}^{n}=\left({x}_{\mathrm{c}1}+n\,\mathrm{d}x,\;{y}_{\mathrm{c}1}+n\,\mathrm{d}y\right),\quad {P}_{\mathrm{L}2}^{n}=\left({x}_{\mathrm{c}2}+n\,\mathrm{d}x,\;{y}_{\mathrm{c}2}+n\,\mathrm{d}y\right) \]

where \(n\in \left[-N,N\right]\), and \({P}_{\mathrm{L}1}^{n}\) and \({P}_{\mathrm{L}2}^{n}\) are the endpoints of the nth detecting line. When \(n<0\), the detecting line lies on the left side of the CDL; when \(n>0\), it lies on the right side; and \(n=0\) refers to the CDL itself. The coordinates of the endpoints of each DL are derived below.

As shown in Fig. 6, the distance between the CDL and the nth DL is \(nd\), and the coordinate increments are \(n\cdot \mathrm{d}x\) and \(n\cdot \mathrm{d}y\), respectively. Drawing dashed lines parallel to the \(x\) and \(y\) axes forms two similar triangles, \(\Delta {P}_{\mathrm{c}1}{O}_{1}{P}_{\mathrm{L}1}^{n}\) and \(\Delta {P}_{\mathrm{c}2}{O}_{2}{P}_{\mathrm{c}1}\). Because the triangles are similar, the ratios of their corresponding sides are equal.

According to the Pythagorean theorem, the following equation holds:
\[ {\left(\mathrm{d}x\right)}^{2}+{\left(\mathrm{d}y\right)}^{2}={d}^{2} \]

By combining Eqs. (4) and (5), the increment in the endpoints can be obtained as follows

Consequently, when \(n\in \left[-N,N\right]\), the general expression for the nth DL is
\[ y={k}_{\mathrm{c}}x+{b}_{\mathrm{c}}+n\left(\mathrm{d}y-{k}_{\mathrm{c}}\,\mathrm{d}x\right) \]
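The detecting-line geometry above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the helper names (`cdl_slope_bias`, `dl_increments`, `detection_lines`, `dl_bias`) are hypothetical, and the sign convention for \(\mathrm{d}x\) and \(\mathrm{d}y\) (which side of the CDL counts as \(n>0\)) is an assumption. The increments are taken perpendicular to the CDL, which is what the similar-triangle argument implies, and they satisfy \((\mathrm{d}x)^2+(\mathrm{d}y)^2=d^2\).

```python
import math


def cdl_slope_bias(pc1, pc2):
    """Slope k_c and bias b_c of the CDL y = k_c * x + b_c through P_c1 and P_c2."""
    (x1, y1), (x2, y2) = pc1, pc2
    if x1 == x2:
        raise ValueError("vertical CDL: the slope form y = k*x + b is undefined")
    k_c = (y2 - y1) / (x2 - x1)
    return k_c, y1 - k_c * x1


def dl_increments(pc1, pc2, d):
    """Offset (dx, dy) between adjacent DLs: perpendicular to the CDL, length d.

    Assumption: n > 0 shifts the line along (y2 - y1, -(x2 - x1)); flip both
    signs if the opposite side should count as "right" in a given image.
    """
    (x1, y1), (x2, y2) = pc1, pc2
    length = math.hypot(x2 - x1, y2 - y1)
    return d * (y2 - y1) / length, -d * (x2 - x1) / length


def detection_lines(pc1, pc2, d, N):
    """Endpoints (P_L1^n, P_L2^n) of every DL for n = -N..N; n = 0 is the CDL."""
    dx, dy = dl_increments(pc1, pc2, d)
    return {
        n: ((pc1[0] + n * dx, pc1[1] + n * dy), (pc2[0] + n * dx, pc2[1] + n * dy))
        for n in range(-N, N + 1)
    }


def dl_bias(pc1, pc2, d, n):
    """Bias of the nth DL, written as y = k_c * x + b_c + n * (dy - k_c * dx)."""
    k_c, b_c = cdl_slope_bias(pc1, pc2)
    dx, dy = dl_increments(pc1, pc2, d)
    return b_c + n * (dy - k_c * dx)


# Example: CDL from (0, 0) to (3, 4) with 5-pixel spacing and N = 2.
lines = detection_lines((0, 0), (3, 4), d=5, N=2)
print(lines[1])                          # endpoints of the first DL on the n > 0 side
print(dl_bias((0, 0), (3, 4), 5, 1))     # bias of that DL
```

Working with the endpoint offsets rather than only the slope form keeps the construction valid for any CDL orientation; only `cdl_slope_bias` and `dl_bias` need the CDL to be non-vertical.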

3.2.2 Pixel extraction from the DL

Each DL intersects the boundary at a point that can be considered a feature point. To obtain this feature point, the pixels lying on the DL must first be extracted. Because pixels are discrete rather than continuous, pixels whose distance from the DL is less than \({L}_{\text{d}}\) were defined as falling on the DL. The pixels falling on each DL can therefore be extracted by traversing all pixels in the ROI. Let \(P(x,y)\) denote the pixel at position \((x,y)\) within the ROI, and let \({P}_\text{DL}^{n}\) be the set of all pixels that fall on the nth DL.

where \(x\in [{x}_{\mathrm{R}1},{x}_{\mathrm{R}2}]\), \(y\in [{y}_{\mathrm{R}1},{y}_{\mathrm{R}2}]\), and \(n\in \left[-N,N\right]\). There are \((2N+1)\) such sets \({P}_{\mathrm{DL}}^{n}\), one for each DL. The pixel values in each \({P}_{\mathrm{DL}}^{n}\) are then processed to obtain the feature points.
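A brute-force version of this pixel selection is sketched below under stated assumptions: the ROI bounds are inclusive integer pixel coordinates, the distance is the perpendicular distance to the infinite line through the DL endpoints (as the text describes), and the selected pixels are ordered by their position along the line from \({P}_{\mathrm{L}1}^{n}\) to \({P}_{\mathrm{L}2}^{n}\), so that they can be read in order as \({B}^{n}(1),\ldots,{B}^{n}({M}_{n})\). The ordering rule and the function name `pixels_on_dl` are hypothetical.

```python
import math


def pixels_on_dl(roi, start, end, L_d):
    """Return the ROI pixels whose distance to the DL through start/end is below L_d.

    roi = (x_R1, y_R1, x_R2, y_R2) with inclusive integer bounds.
    Pixels are sorted by their projection onto the start -> end direction.
    """
    x_r1, y_r1, x_r2, y_r2 = roi
    (x1, y1), (x2, y2) = start, end
    length = math.hypot(x2 - x1, y2 - y1)
    ux, uy = (x2 - x1) / length, (y2 - y1) / length    # unit direction of the DL
    hits = []
    for y in range(y_r1, y_r2 + 1):                     # traverse every ROI pixel
        for x in range(x_r1, x_r2 + 1):
            px, py = x - x1, y - y1
            if abs(px * uy - py * ux) < L_d:            # perpendicular distance test
                hits.append((px * ux + py * uy, x, y))  # keep position along the DL
    hits.sort()
    return [(x, y) for _, x, y in hits]


# Example: a horizontal DL through row 2 of a 5 x 5 ROI.
print(pixels_on_dl((0, 0, 4, 4), (0, 2), (4, 2), L_d=0.5))
# [(0, 2), (1, 2), (2, 2), (3, 2), (4, 2)]
```

With \(L_{\mathrm{d}}=0.5\) roughly one pixel per step along an axis-aligned line is kept; larger values keep a band of pixels. Scanning the whole ROI once per DL is simple but costs \((2N+1)\) full passes, so restricting the scan to the bounding box of each segment is a natural refinement.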

3.2.3 Brightness-based algorithm

An image of deposited steel is shown in Fig. 7a. The liquid and solid metals reflect and radiate different amounts of light from their surfaces, which produces a change in brightness at the weld pool boundary in the image.

In this section, a brightness-based algorithm is introduced to detect the boundaries of steel weld pools. Following the method described in Sect. 3.2.1, a group of DLs was determined along the deposited layer, and the pixel brightness along each DL was obtained. One DL, indicated by the blue line in Fig. 7a, is examined here as an example. The brightness of the pixels in \({P}_{\mathrm{DL}}^{n}\) is represented by \(\left\{{B}^{n}\left(1\right),{B}^{n}\left(2\right),\cdots ,{B}^{n}\left({M}_{n}\right)\right\}\), where \({M}_{n}\) is the number of pixels in \({P}_{\mathrm{DL}}^{n}\). For \(k\in \{1,2,\cdots ,{M}_{n}\}\), \({B}^{n}(k)\) is a discrete function describing the change in brightness along the nth DL. Because \({B}^{n}(k)\) contains signal noise produced by the camera, a smoothing function \({f}_{\mathrm{s}}\left(x\right)\) is used to reduce the noise. The smoothing function is defined as follows

where \(A(i)\) is a discrete array containing \(L\) elements and \((2a+1)\) is the number of adjacent values used for averaging. Taking \({B}_\text{a}^{n}\left(k\right)={f}_{\mathrm{s}}\left({B}^{n}\left(k\right),{M}_{n}\right)\), the filtered brightness curve is shown in Fig. 7b, where the first peak encountered when moving from the back to the front of the pool represents the change in brightness at the boundary. The peak position can therefore be regarded as a feature point. A peak search algorithm was accordingly proposed; its flowchart is shown in Fig. 8. An array \(W=\left\{W\left(1\right), W\left(2\right), \cdots ,W\left({w}_{\mathrm{d}}\right)\right\}\) of width \({w}_\text{d}\) is defined, which slides over \({B}_{\mathrm{a}}^{n}\left(k\right)\) with index \(j\). In each iteration, \(W\) stores the \({B}_{\mathrm{a}}^{n}\left(k\right)\) values from \(j-{w}_{\mathrm{d}}+1\) to \(j\). Initially, \(j={M}_{n}\), so \(W\) slides from the end of \({B}_{\mathrm{a}}^{n}\left(k\right)\) toward its beginning. In each iteration, the maximum value of \(W\), \(\mathrm{Max}\left\{W\right\}=W\left(i\right)\), is obtained, where \(i\) is the position of this maximum in \(W\); \({B}_{\mathrm{max}}\) denotes the global maximum over all iterations. When \(\mathrm{Max}\left\{W\right\}\) in an iteration is larger than \({B}_{\mathrm{max}}\), a new peak candidate has been found: \({B}_{\mathrm{max}}\) is updated to \(\mathrm{Max}\left\{W\right\}\), and \(j\) is updated to \(j-{w}_{\mathrm{d}}+i\) before the next iteration. The iterations stop when \({B}_{\mathrm{max}}\) no longer changes, which indicates that the peak position has been found. The final \(j\) is used as the output, and the pixel corresponding to the jth element of \({B}_{\mathrm{a}}^{n}\left(k\right)\) is taken as the feature point.
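The smoothing step and the peak search can be sketched as follows. Assumptions: \({f}_{\mathrm{s}}\) is taken to be a centered moving average whose window is truncated at the array ends (the boundary handling is not stated in the text); indices are 0-based, so the initial \(j={M}_{n}\) becomes `len(B_a) - 1`; and \(W\) holds the \({w}_{\mathrm{d}}\) values ending at \(j\), clipped at the start of the array. The function names `smooth` and `peak_search` are hypothetical, and `smooth(A, a)` takes the half-width \(a\) directly, with \(L\) implied by `len(A)`.

```python
def smooth(A, a):
    """Centered moving average over (2a + 1) neighbours, truncated at both ends."""
    L = len(A)
    out = []
    for k in range(L):
        lo, hi = max(0, k - a), min(L - 1, k + a)
        out.append(sum(A[lo:hi + 1]) / (hi - lo + 1))
    return out


def peak_search(B_a, w_d):
    """Slide a w_d-wide window W from the back (end) of B_a toward the front.

    Returns the 0-based index j of the first peak met when moving back -> front.
    """
    j = len(B_a) - 1                  # start at the back of the pool
    B_max = float("-inf")             # global maximum over all iterations
    while True:
        lo = max(0, j - w_d + 1)      # W = B_a[lo .. j]
        W = B_a[lo:j + 1]
        i = max(range(len(W)), key=W.__getitem__)   # position of Max{W} in W
        if W[i] > B_max:              # a higher value: move the window onto it
            B_max = W[i]
            j = lo + i                # same as j - w_d + i in the 1-based notation
        else:                         # B_max unchanged: peak found
            return j


# Example: a toy profile whose boundary peak (9) sits at index 4.
profile = [5, 6, 5, 4, 9, 7, 3, 2, 1, 0]
print(peak_search(profile, w_d=3))    # 4
```

Each accepted step moves \(j\) strictly toward the front, so the loop terminates. The returned sample is at least as bright as every sample behind it and as the \({w}_{\mathrm{d}}-1\) samples in front of it, so \({w}_{\mathrm{d}}\) controls how small a local bump the search steps over (the smaller bump at index 1 above is never reached).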

3.2.4 Gradient-based algorithm

For titanium alloys, the brightness of the weld pool is similar to that of the solid material. Figure 9a shows an example of Ti-6Al-4V deposition, and Fig. 9b shows its brightness curve. No peak appears at the feature point position in the brightness curve along the DL; instead, the brightness remains high in the molten area and then decreases slowly at the boundary. Hence, the brightness-based algorithm does not perform well for titanium alloys.

In this section, a gradient-based algorithm is proposed to detect the pool boundaries of titanium alloys. First, the pixel gradient \({G}^{n}\left(k\right)\) was defined to describe the slope of the brightness curve along the DL

where \(b\) is the interval between the two pixels and \(k\in [1,{M}_{n}-b]\). \({G}^{n}\left(k\right)\) therefore contains \({M}_{n}-b\) elements, and \({G}_{\mathrm{a}}^{n}\left(k\right)={f}_{\mathrm{s}}\left({G}^{n}\left(k\right),{M}_{n}-b\right)\) is used to smooth the gradient. The smoothed curve is shown in Fig. 10.
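The pixel gradient can be sketched as a forward difference over an interval of \(b\) pixels. The exact form of the paper's gradient equation is not reproduced here: this sketch assumes \({G}^{n}(k)={B}^{n}(k+b)-{B}^{n}(k)\) without division by \(b\), which only rescales the curve and does not move its trough. With indices running from the front of the pool (\(k=1\)) toward the back, the brightness drop at a titanium boundary then appears as a negative trough. The name `pixel_gradient` is hypothetical.

```python
def pixel_gradient(B, b):
    """Forward difference G(k) = B(k + b) - B(k); returns len(B) - b values."""
    if not 1 <= b < len(B):
        raise ValueError("b must satisfy 1 <= b < len(B)")
    return [B[k + b] - B[k] for k in range(len(B) - b)]


# Example: a titanium-like profile, a bright plateau in the pool then a gradual fall.
brightness = [10, 10, 10, 6, 2, 2]
print(pixel_gradient(brightness, b=2))   # [0, -4, -8, -4]
```

A larger \(b\) spreads the difference over more pixels, which suppresses pixel-level noise on a slowly varying titanium profile at the cost of a broader, less sharply located trough.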

The first trough encountered from the back to the front of the gradient curve corresponds to the position where the brightness changes sharply. Therefore, the position of the trough can be used to locate the feature point. To this end, a trough search algorithm similar to the peak search algorithm described in Sect. 3.2.3 was proposed; its flowchart is shown in Fig. 11. In the trough search algorithm, a \({w}_{\mathrm{d}}\)-wide \(W\) with index \(j\) slides over \({G}_{\mathrm{a}}^{n}\left(k\right)\). In each iteration, \(W\) stores the \({G}_{\mathrm{a}}^{n}\) values from \(j-{w}_{\mathrm{d}}+1\) to \(j\). Initially, \(j={M}_{n}-b\). \(\mathrm{Min}\left\{W\right\}=W(i)\) denotes the minimum value in each iteration, and \({G}_{\mathrm{min}}\) is the global minimum gradient over all iterations. When \(\mathrm{Min}\left\{W\right\}\) in an iteration is smaller than \({G}_{\mathrm{min}}\), \({G}_{\mathrm{min}}\) is updated to \(\mathrm{Min}\left\{W\right\}\), and \(j\) is updated to \(j-{w}_{\mathrm{d}}+i\) before the next iteration. The iterations stop when \({G}_{\mathrm{min}}\) no longer changes. The final \(j\) is used as the output, and the pixel corresponding to the jth element of \({G}_{\mathrm{a}}^{n}\left(k\right)\) is taken as a feature point.
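The trough search mirrors the peak search, following lower minima instead of higher maxima. The sketch below makes the same assumptions as a 0-based reading of the description (window of \({w}_{\mathrm{d}}\) values ending at \(j\), clipped at the array start; initial \(j\) at the last element, the 0-based counterpart of \({M}_{n}-b\)); `trough_search` is a hypothetical name.

```python
def trough_search(G_a, w_d):
    """Slide a w_d-wide window W from the back of G_a and follow lower minima.

    Returns the 0-based index of the first trough met when moving back -> front.
    """
    j = len(G_a) - 1                  # 0-based counterpart of j = M_n - b
    G_min = float("inf")              # global minimum over all iterations
    while True:
        lo = max(0, j - w_d + 1)      # W = G_a[lo .. j]
        W = G_a[lo:j + 1]
        i = min(range(len(W)), key=W.__getitem__)   # position of Min{W} in W
        if W[i] < G_min:              # a lower value: move the window onto it
            G_min = W[i]
            j = lo + i
        else:                         # G_min unchanged: trough found
            return j


# Example: a small dip near the back (index 5) and the boundary trough at index 2.
gradient = [0, -4, -8, -4, 0, -1, 0]
print(trough_search(gradient, w_d=3))   # 2
```

The window width matters here as well: with `w_d=1` the search stops immediately at the last sample, so \({w}_{\mathrm{d}}\) must exceed the width of any flat or noisy tail behind the pool.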

4 Calibration

The feature points are detected in image coordinates; therefore, they must be converted into world coordinates to obtain the real size of the weld pool [23]. For camera-based monitoring systems, the inherent characteristics of the lens and photosensitive element are described by the internal parameters, which determine the distortion produced in the captured image. In addition, the position of the camera relative to the weld pool is described by the external parameters, which represent the rotation and translation between the world and camera coordinate systems. Consequently, the mapping between three-dimensional world coordinates and two-dimensional image coordinates can be described by the following conversion formula:
\[ s\left[\begin{array}{c}x\\ y\\ 1\end{array}\right]=\left[\begin{array}{ccc}{f}_{x}&0&{c}_{x}\\ 0&{f}_{y}&{c}_{y}\\ 0&0&1\end{array}\right]\left[\begin{array}{cccc}{{\varvec{r}}}_{1}&{{\varvec{r}}}_{2}&{{\varvec{r}}}_{3}&{\varvec{t}}\end{array}\right]\left[\begin{array}{c}X\\ Y\\ Z\\ 1\end{array}\right] \]

where \(x\) and \(y\) are the image coordinates of the point, \(s\) is the scale factor, \({f}_{x}\) and \({f}_{y}\) are the focal lengths along the two image axes, and \({c}_{x}\) and \({c}_{y}\) are the coordinates of the principal point, that is, the offsets of the optical axis from the image origin. Together, these form the 3 × 3 internal parameter matrix. \({{\varvec{r}}}_{1}\), \({{\varvec{r}}}_{2}\), and \({{\varvec{r}}}_{3}\) are 3 × 1 vectors representing the rotational transformation, and \({\varvec{t}}\) is a 3 × 1 vector representing the translation transformation; together, they form the 3 × 4 external parameter matrix. \(X\), \(Y\), and \(Z\) denote the world coordinates of the feature points. In this study, the length of the weld pool is taken as the distance between the feature point and the front of the weld pool, so it can be measured on a plane parallel to the layer surface. Therefore, only two-dimensional world coordinates need to be considered, and the conversion formula can be simplified by setting \(Z=0\):
\[ s\left[\begin{array}{c}x\\ y\\ 1\end{array}\right]=\left[\begin{array}{ccc}{f}_{x}&0&{c}_{x}\\ 0&{f}_{y}&{c}_{y}\\ 0&0&1\end{array}\right]\left[\begin{array}{ccc}{{\varvec{r}}}_{1}&{{\varvec{r}}}_{2}&{\varvec{t}}\end{array}\right]\left[\begin{array}{c}X\\ Y\\ 1\end{array}\right]={\varvec{H}}\left[\begin{array}{c}X\\ Y\\ 1\end{array}\right] \]

where \({\varvec{H}}\) is a 3 × 3 matrix, called the homography matrix, that combines the internal and external parameters. In this study, the method described in Ref. [24] was used for calibration. As shown in Fig. 12, a chessboard was placed on the deposition plane, and the corner points of its squares were selected in the chessboard image. More corner points than strictly necessary were used so that the influence of noise was averaged out, and their coordinates were substituted into the formula to obtain a system of equations. Finally, \({\varvec{H}}\) was solved as follows
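The projection model and the planar homography can be sketched in plain Python. This sketch ignores lens distortion and assumes zero skew. It also replaces the calibration procedure of Ref. [24], which is not reproduced here, with a minimal direct linear solve that fixes \({h}_{33}=1\) and uses exactly four chessboard corners; with more corners, as in the text, the same equations would be solved in a least-squares sense. All function names are hypothetical.

```python
def matmul(A, B):
    """Plain-list matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def project(K, R, t, X, Y, Z):
    """Pinhole model s*[x, y, 1]^T = K [r1 r2 r3 t] [X, Y, Z, 1]^T (R columns are r1, r2, r3)."""
    Rt = [[R[i][0], R[i][1], R[i][2], t[i]] for i in range(3)]   # 3 x 4 external matrix
    p = matmul(matmul(K, Rt), [[X], [Y], [Z], [1.0]])
    return p[0][0] / p[2][0], p[1][0] / p[2][0]


def homography_from_pose(K, R, t):
    """With Z = 0 the r3 column drops out: H = K [r1 r2 t]."""
    return matmul(K, [[R[i][0], R[i][1], t[i]] for i in range(3)])


def apply_h(H, X, Y):
    """Map a world point on the Z = 0 plane to image coordinates."""
    p = matmul(H, [[X], [Y], [1.0]])
    return p[0][0] / p[2][0], p[1][0] / p[2][0]


def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small square system."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("degenerate point configuration (e.g. three collinear corners)")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def homography_4pt(world_pts, image_pts):
    """Solve H (with h33 = 1) from four (X, Y) -> (x, y) correspondences."""
    A, rhs = [], []
    for (X, Y), (x, y) in zip(world_pts, image_pts):
        A.append([X, Y, 1, 0, 0, 0, -X * x, -Y * x])
        rhs.append(x)
        A.append([0, 0, 0, X, Y, 1, -X * y, -Y * y])
        rhs.append(y)
    h = solve_linear(A, rhs)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


# Example: hypothetical intrinsics and pose, then recover H from four chessboard corners.
K = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 100.0]
H = homography_from_pose(K, R, t)
corners = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]   # corner positions in mm
pixels = [apply_h(H, X, Y) for X, Y in corners]
H_est = homography_4pt(corners, pixels)   # equals H / H[2][2], since h33 is fixed to 1
```

Because \({\varvec{H}}\) is only defined up to scale, fixing \({h}_{33}=1\) leaves eight unknowns, and each corner contributes two linear equations; four corners in general position are therefore the minimum.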

5 Weld pool length measurement

To measure the length of the weld pool, the positions of both the feature points and the front of the weld pool are required. Because the blocking plate and gas shield obstruct the view, only part of the weld pool can be recorded in the images. To solve this problem, the weld pool length \({P}_{\mathrm{L}}\) is divided into two portions by a reference line, defined as a line segment that passes through the CDL starting point, is orthogonal to the CDL, and is fixed in image coordinates. As shown in Fig. 13a, the first portion, \({P}_{\mathrm{L}1}\), is the distance between the feature point and the reference line, and the second portion, \({P}_{\mathrm{L}2}\), is the distance between the reference line and the front of the weld pool.

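To obtain \({P}_{\mathrm{L}1}\), the coordinates of the detected feature points and the reference line were converted from image coordinates to world coordinates using the conversion formula obtained during calibration:
\[ \left[\begin{array}{c}{X}_{n}\\ {Y}_{n}\\ 1\end{array}\right]=s\,{{\varvec{H}}}^{-1}\left[\begin{array}{c}{x}_{n}\\ {y}_{n}\\ 1\end{array}\right] \]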

where \({X}_{n}\) and \({Y}_{n}\) are the world coordinates of the detected feature points, \({x}_{n}\) and \({y}_{n}\) are their image coordinates, and \({{\varvec{H}}}^{-1}\) is the inverse of \({\varvec{H}}\). The positions of the detected points and the reference line before and after conversion are shown in Figs. 14 and 15, respectively. In world coordinates, the reference line is expressed as \(Y={k}_{\mathrm{r}}X+{b}_{\mathrm{r}}\), where \({k}_{\mathrm{r}}\) and \({b}_{\mathrm{r}}\) follow from the definition of the reference line. Some points may be detected incorrectly. To reduce the effect of these incorrect points on the measurement accuracy, an outlier elimination method was used [25]. First, the mean and standard deviation of \({Y}_{n}\) were obtained as follows

Subsequently, the points whose \(Y\) coordinate lies within \([{\mu }_{y}-3{\sigma }_{y},{\mu }_{y}+3{\sigma }_{y}]\) were retained, and the other points were removed as outliers. The means of the \(X\) and \(Y\) coordinates of the retained feature points were then computed and denoted \({X}_{\mathrm{m}}\) and \({Y}_{\mathrm{m}}\), respectively. \({P}_{\mathrm{L}1}\) is then the distance between the point \(({X}_{\mathrm{m}},{Y}_{\mathrm{m}})\) and the reference line.
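The conversion, outlier rejection, and distance steps can be sketched as follows, assuming that \({\sigma }_{y}\) is the population standard deviation (the paper's exact definition is not reproduced here) and that the reference line is not vertical in world coordinates, so that it can be written as \(Y={k}_{\mathrm{r}}X+{b}_{\mathrm{r}}\). The reference line is mapped by transforming two of its image points, which is valid because a homography maps straight lines to straight lines. All function names, and the value used for \({P}_{\mathrm{L}2}\) in the example, are hypothetical.

```python
import math


def invert3(H):
    """Inverse of a 3 x 3 matrix via the adjugate formula."""
    (a, b, c), (d, e, f), (g, h, i) = H
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("singular homography")
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[v / det for v in row] for row in adj]


def image_to_world(H_inv, x, y):
    """[X, Y, 1]^T = s H^-1 [x, y, 1]^T, with s fixed by normalising the third component."""
    p = [H_inv[r][0] * x + H_inv[r][1] * y + H_inv[r][2] for r in range(3)]
    return p[0] / p[2], p[1] / p[2]


def reference_line_world(H_inv, pc1, pc2):
    """(k_r, b_r) of the reference line: through P_c1 and orthogonal to the CDL in the image."""
    (x1, y1), (x2, y2) = pc1, pc2
    q = (x1 + (y2 - y1), y1 - (x2 - x1))        # second image point, perpendicular offset
    X1, Y1 = image_to_world(H_inv, x1, y1)
    X2, Y2 = image_to_world(H_inv, *q)
    k_r = (Y2 - Y1) / (X2 - X1)                 # assumes a non-vertical line in world coords
    return k_r, Y1 - k_r * X1


def reject_outliers(points):
    """Keep (X, Y) points whose Y lies within mu_y +/- 3 sigma_y."""
    ys = [Y for _, Y in points]
    mu = sum(ys) / len(ys)
    sigma = math.sqrt(sum((Y - mu) ** 2 for Y in ys) / len(ys))   # population std
    return [(X, Y) for X, Y in points if abs(Y - mu) <= 3 * sigma]


def length_part1(feature_pts_img, H, pc1, pc2):
    """P_L1: distance from the mean retained feature point to the reference line."""
    H_inv = invert3(H)
    world = [image_to_world(H_inv, x, y) for x, y in feature_pts_img]
    kept = reject_outliers(world)
    X_m = sum(X for X, _ in kept) / len(kept)
    Y_m = sum(Y for _, Y in kept) / len(kept)
    k_r, b_r = reference_line_world(H_inv, pc1, pc2)
    return abs(k_r * X_m - Y_m + b_r) / math.sqrt(k_r ** 2 + 1)


# Example: identity homography (image units = world units), twelve consistent feature
# points at Y = 10 plus one gross outlier at Y = 100 (13 = 2N + 1 points, N = 6).
pts = [(float(n), 10.0) for n in range(12)] + [(5.0, 100.0)]
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
P_L1 = length_part1(pts, I3, (0.0, 0.0), (0.0, 10.0))
P_L2 = 4.0                      # hypothetical value obtained beforehand through trials
print(P_L1, P_L1 + P_L2)        # P_L = P_L1 + P_L2
```

One design consequence worth checking: with the population standard deviation, no sample of \(m\) values can lie more than \(\sqrt{m-1}\) standard deviations from the mean (Samuelson's inequality), so the \(3\sigma\) rule can only ever reject a point when \(2N+1\ge 11\). With fewer detecting lines, a single gross outlier inflates \({\sigma }_{y}\) enough to survive the test.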

The reference line was fixed because the camera and torch positions were fixed. Although the position of the weld pool front shifted slightly when the deposition parameters changed, it remained stable for a fixed set of parameters, as listed in Table 2. The \({P}_{\mathrm{L}2}\) values were measured manually using the method shown in Fig. 13b. All test groups shared a current of 180 A and a wire feeding speed of 1800 mm/min. Table 2 shows that the position of the weld pool front was stable for a given set of deposition parameters. Therefore, \({P}_{\mathrm{L}2}\) can be obtained beforehand through trials, and the complete weld pool length is
\[ {P}_{\mathrm{L}}={P}_{\mathrm{L}1}+{P}_{\mathrm{L}2} \]

6 Test and verification

Tests were conducted to verify the performance of the proposed weld pool length detection algorithm. The substrates were machined to form an intersection on which metal layers were deposited, as shown in Figs. 16a and b. Two materials were tested: Ti-6Al-4V and S355 steel. The deposition parameters for the two materials are listed in Table 3. The proposed monitoring method was integrated into software that detected the weld pool length in real time during deposition. The software interface allowed the parameters to be set, displayed the detected length, and showed the raw image and detection results for monitoring.

The data for the different depositions are shown in Figs. 16c and d. During the deposition of both the titanium alloy and the steel, the software tracked the weld pool length at an update rate of approximately 2–3 Hz. After an initial change at the start of deposition, the pool length stabilized. When the torch passed through the intersection, the weld pool length decreased rapidly before slowly recovering. The detected data changed in step with the actual weld pool. Consequently, the proposed weld pool detection algorithm effectively tracked the change in weld pool size during intersection deposition, and the tests on Ti-6Al-4V alloy and S355 steel verified its feasibility and reliability.

7 Conclusions

This study proposes a novel method for monitoring the change in weld pool length caused by thermal mass changes in a component. The method can help ensure the geometric accuracy of deposited components by enabling stable control of the weld pool size. It comprises a special imaging system, rapid extraction of feature points on the weld pool boundary, outlier rejection for improved accuracy, calibration-based measurement of the weld pool length, and an analysis of the optimal algorithm for different materials. The main conclusions are as follows.

(i) An endoscope and a shielding plate were added to the monitoring system to obtain a vertical viewing angle and prevent arc interference, which improved the image quality at the weld pool boundary.

(ii) A gradient-based algorithm was proposed to detect the boundary of a titanium alloy weld pool, which solved the monitoring problem caused by the low brightness contrast on the pool surface.

(iii) Through comparison, the optimal extraction algorithms were determined for S355 steel (brightness-based) and Ti-6Al-4V alloy (gradient-based).

(iv) The pixel extraction method based on detecting lines reduced the computational cost of the entire algorithm, which is beneficial for real-time monitoring.

However, the proposed method requires some parameters to be set in advance, and more materials need to be tested. Therefore, future work will focus on (i) automatically setting the software parameters based on signals from the manipulation system and (ii) additional tests using other materials commonly used in plasma arc additive manufacturing.

Abbreviations

- CDL: Center detecting line

- CNC: Computer numerical control

- ROI: Region of interest

- DL: Detecting line

- \(b\): Interval between two pixels when determining the gradient

- \({b}_{\mathrm{c}}\): Bias of the CDL in the image coordinate

- \({b}_{\mathrm{r}}\): Bias of the reference line in the world coordinate

- \(B_{\text{a}}^n(k)\): Smoothed brightness

- \({B}_{\mathrm{max}}\): Maximum global brightness of all iterations

- \({B}^{n}(k)\): Discrete brightness function along the \({n}\text{th}\) DL

- \({c}_{x}\),\({c}_{y}\): Displacement away from the axis

- \(d\): Distance between each DL

- \(\mathrm{d}x\),\(\mathrm{d}y\): DL axis increment based on the CDL

- \({f}_{\mathrm{s}}(x)\): Smoothing function

- \({f}_{x}\),\({f}_{y}\): Focal lengths along the x and y image axes

- \({G}_{\mathrm{min}}\): Minimum global gradient of all iterations

- \({G}^{n}\left(k\right)\): Pixel gradient

- \({G}_{\rm{a}}^{n}(k)\): Smoothed gradient

- \(H\): 3×3 Homography matrix

- \(W\): Array sliding over \({B}_{\rm{a}}^{n}(k)\) and \({G}_{\rm{a}}^{n}(k)\)

- \(j\) :

-

Index in W

- \({k}_{\mathrm{c}}\) :

-

Bias of the CDL in the image coordinate

- \({k}_{\mathrm{r}}\) :

-

Slope of the reference line in the world coordinate

- \({L}_{\mathrm{d}}\) :

-

Distance used to define the points falling on DL

- \({M}_{n}\) :

-

Number of pixels points in \({P}_{\mathrm{DL}}^{n}\)

- \(n\) :

-

Index of the DLs

- \(N\) :

-

Number of DLs on one side of the CDL

- \(O,X,Y\) :

-

Origin and axis of the world coordinate

- \(o,x,y\) :

-

Origin and axis of the image coordinate

- \(P(x,y)\) :

-

Point within the ROI

- \({P}_{\mathrm{c}1}\left({x}_{\mathrm{c}1},{y}_{\mathrm{c}1}\right)\) :

-

Starting point of the CDL

- \({P}_{\mathrm{c}2}\left({x}_{\mathrm{c}2},{y}_{\mathrm{c}2}\right)\) :

-

Ending point of the CDL

- \({P}_{\mathrm{DL}}^{n}\) :

-

Points falling on the nth DL

- \({P}_{\mathrm{L}1}^{n}\),\({P}_{\mathrm{L}2}^{n}\) :

-

End points of the nth DL

- \({P}_{\mathrm{L}},{P}_{\mathrm{L}1},{P}_{\mathrm{L}2}\) :

-

Molten length and its two portions

- \({P}_{\mathrm{R}1}\left({x}_{\mathrm{R}1},{y}_{\mathrm{R}1}\right)\) :

-

Upper left corner point of the ROI

- \({P}_{\mathrm{R}2}\left({x}_{\mathrm{R}2},{y}_{\mathrm{R}1}\right)\) :

-

Upper right corner point of the ROI

- \({P}_{\mathrm{R}3}\left({x}_{\mathrm{R}2},{y}_{\mathrm{R}2}\right)\) :

-

Lower right corner point of the ROI

- \({P}_{\mathrm{R}4}\left({x}_{\mathrm{R}1},{y}_{\mathrm{R}2}\right)\) :

-

Lower left corner point of the ROI

- \({{\varvec{r}}}_{1}\), \({{\varvec{r}}}_{2}, \, {{\varvec{r}}}_{3}\) :

-

3×1 vectors of the rotation transformation

- \(s\) :

-

Scale factor

- \({\varvec{t}}\) :

-

3×1 vector of the translation transformation

- \({w}_{\mathrm{d}}\) :

-

Width of \(W\)

- \({X}_{\mathrm{m}}\),\({Y}_{\mathrm{m}}\) :

-

Mean coordinates of the remaining feature points

- x n, \({y}_{{n}}\) :

-

Image coordinates of the detected feature points

- X n, Y n :

-

World coordinates of the detected feature points

- \({\mu }_{y}\) :

-

Mean of \({Y}_{n}\)

- \({\sigma }_{y}\) :

-

Standard deviation of \({Y}_{n}\)
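The calibration and outlier-rejection symbols above can be tied together in a short sketch. In the standard planar model used by Zhang's calibration technique, a point \((X, Y)\) on the world plane \(Z = 0\) maps to an image point through \(s[x, y, 1]^{\mathrm{T}} = \mathbf{K}[\boldsymbol{r}_{1}\ \boldsymbol{r}_{2}\ \boldsymbol{t}][X, Y, 1]^{\mathrm{T}} = H[X, Y, 1]^{\mathrm{T}}\), where \(\mathbf{K}\) is the intrinsic matrix built from \(f_x\), \(f_y\), \(c_x\), \(c_y\) and \(\boldsymbol{r}_{3}\) drops out because \(Z = 0\). Detected feature points \((x_n, y_n)\) can therefore be mapped back to \((X_n, Y_n)\) with \(H^{-1}\) and a division that removes \(s\). The sketch assumes \(H\) has already been estimated and uses a hypothetical rejection rule, \(|Y_n - \mu_y| \le k\sigma_y\) with \(k = 2\); the threshold actually used by the authors is not stated here, and all function names are illustrative.

```python
"""Hypothetical sketch: image-to-world mapping with H and k-sigma rejection on Y_n."""
import statistics


def invert_3x3(m):
    """Inverse of a 3x3 matrix (list of rows) via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if det == 0:
        raise ValueError("homography is singular")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[v / det for v in row] for row in adj]


def to_world(h, image_pts):
    """Map image points (x_n, y_n) to world points (X_n, Y_n) on the calibration plane."""
    h_inv = invert_3x3(h)
    world = []
    for x, y in image_pts:
        u, v, w = (row[0] * x + row[1] * y + row[2] for row in h_inv)
        world.append((u / w, v / w))  # dividing by w removes the scale factor s
    return world


def reject_and_average(world_pts, k_sigma=2.0):
    """Drop points with |Y_n - mu_y| > k_sigma * sigma_y; return (X_m, Y_m) of the rest."""
    ys = [p[1] for p in world_pts]
    mu_y = statistics.mean(ys)
    sigma_y = statistics.pstdev(ys)  # population standard deviation of Y_n
    kept = [p for p in world_pts if abs(p[1] - mu_y) <= k_sigma * sigma_y]
    x_m = statistics.mean(p[0] for p in kept)
    y_m = statistics.mean(p[1] for p in kept)
    return x_m, y_m
```

A z-score rule on \(Y_n\) is the simplest reading of the \(\mu_y\) and \(\sigma_y\) symbols. With few feature points per frame, the threshold must be chosen with the point count in mind: with the population standard deviation, no single point among \(m\) can lie more than \(\sqrt{m-1}\,\sigma_y\) from the mean, so with \(k = 2\) an outlier cannot be rejected when \(m \le 5\).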

References

Wang C, Suder W, Ding J et al (2021) Wire based plasma arc and laser hybrid additive manufacture of Ti-6Al-4V. J Mater Process Tech 293:117080. https://doi.org/10.1016/j.jmatprotec.2021.117080

Artaza T, Alberdi A, Murua M et al (2017) Design and integration of WAAM technology and in situ monitoring system in a gantry machine. Procedia Manuf 13:778–785

Devesse W, Baere DD, Hinderdael M et al (2016) Hardware-in-the-loop control of additive manufacturing processes using temperature feedback. J Laser Appl 28(2):022302. https://doi.org/10.2351/1.4943911

Demir AG, Mazzoleni L, Caprio L et al (2019) Complementary use of pulsed and continuous wave emission modes to stabilize melt pool geometry in laser powder bed fusion. Opt Laser Technol 113:15–26

Hu D, Kovacevic R (2003) Modelling and measuring the thermal behaviour of the molten pool in closed-loop controlled laser-based additive manufacturing. Proc Inst Mech Eng B-J Eng 217(4):441–452

Venturini G, Montevecchi F, Scippa A et al (2016) Optimization of WAAM deposition patterns for T-crossing features. Procedia Cirp 55:95–100

McAndrew AR, Rosales MA, Colegrove PA et al (2018) Interpass rolling of Ti-6Al-4V wire+arc additively manufactured features for microstructural refinement. Addit Manuf 21:340–349

Li R, Wang G, Ding Y et al (2020) Optimization of the geometry for the end lateral extension path strategy to fabricate intersections using laser and cold metal transfer hybrid additive manufacturing. Addit Manuf 36:101546. https://doi.org/10.1016/j.addma.2020.101546

Davis T, Shin YC (2010) Vision-based clad height measurement. Mach Vision Appl 22(1):129–136

Mazzoleni L, Caprio L, Pacher M et al (2019) External illumination strategies for melt pool geometry monitoring in SLM. JOM 71:928–937

Wang ZZ (2014) Monitoring of GMAW weld pool from the reflected laser lines for real-time control. IEEE T Ind Inform 10(4):2073–2083

Xiong J, Shi M, Liu Y et al (2020) Virtual binocular vision sensing and control of weld pool width for gas metal arc additive manufactured thin-walled components. Addit Manuf 33:101121. https://doi.org/10.1016/j.addma.2020.101121

Yang D, Wang G, Zhang G (2017) Thermal analysis for single-pass multi-layer GMAW based additive manufacturing using infrared thermography. J Mater Process Tech 244:215–224

Chen ZQ, Gao XD (2014) Detection of weld pool width using infrared imaging during high-power fiber laser welding of type 304 austenitic stainless steel. Int J Adv Manuf Tech 74(9/12):1247–1254

Yamane S, Godo T, Hosoya T et al (2015) Detecting and tracking of welding line in visual plasma robotic welding. Weld Int 29(9):661–667

Zhang GK, Wu CS, Liu X (2020) Single vision system for simultaneous observation of keyhole and weld pool in plasma arc welding. J Mater Process Tech 215:71–78

Xiong J, Pi Y, Chen H (2019) Deposition height detection and feature point extraction in robotic GTA-based additive manufacturing using passive vision sensing. Robot Com-Int Manuf 59:326–334

Reisgen U, Purrio M, Buchholz G et al (2020) Machine vision system for online weld pool observation of gas metal arc welding processes. Weld World 58(5):707–711

Akbari M, Saedodin S, Toghraie D et al (2014) Experimental and numerical investigation of temperature distribution and melt pool geometry during pulsed laser welding of Ti6Al4V alloy. Opt Laser Technol 59:52–59

Liu BQ, Fang G, Lei LP (2021) An analytical model for rapid predicting molten pool geometry of selective laser melting (SLM). Appl Math Model 92:505–524

Wang Q, Li JY, Gouge M et al (2016) Physics-based multivariable modeling and feedback linearization control of melt-pool geometry and temperature in directed energy deposition. ASME J Manuf Sci Eng 139(2):021013. https://doi.org/10.1115/1.4034304

Weglowski MS (2007) Investigation on the electric arc light emission in TIG welding. Int J Comput Mat Sci Surface Eng 1(6):734–749

Zhao CX, Richardson IM, Kenjeres S et al (2009) A stereo vision method for tracking particle flow on the weld pool surface. J Appl Phys 105(12):2570–3147

Zhang Z (2000) A flexible new technique for camera calibration. IEEE T Pattern Anal 22(11):1330–1334

Bin Z, Sen C, Wang Ju et al (2019) Feature points extraction of laser vision weld seam based on genetic algorithm. Chin J Lasers 46(1):0102001. https://doi.org/10.3788/CJL201946.0102001

Acknowledgments

The authors are grateful for the financial support provided by the China Scholarship Council and the Basic and Applied Basic Research Foundation of Guangdong Province (Grant No. 2022A1515110733). Cranfield University and Ji Hua Laboratory are also gratefully acknowledged.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, BR., Shi, YH. A novel weld-pool-length monitoring method based on pixel analysis in plasma arc additive manufacturing. Adv. Manuf. 12, 335–348 (2024). https://doi.org/10.1007/s40436-023-00466-w

DOI: https://doi.org/10.1007/s40436-023-00466-w