Abstract

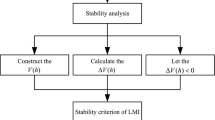

This paper is concerned with new approaches to the stability analysis of delayed neural networks. By modifying the non-orthogonal polynomial-based integral inequality (NPII), a new delay-product functional (DPF) is formulated. Based on the proposed DPF, a Lyapunov–Krasovskii functional (LKF) is constructed in which a new state arising in the second-order Bessel–Legendre integral inequality (BLII) is included in the augmented vector of the Lyapunov matrix term. Using this LKF, a delay-dependent stability criterion is derived in terms of linear matrix inequalities (LMIs) for neural networks with time-varying delay. Two commonly used numerical examples are considered to test the efficacy of the proposed stability criterion.


1 Introduction

Over the past few decades, neural networks (NNs) have been successfully used in many scientific and engineering systems such as image processing, associative memory, pattern recognition and optimization algorithms [1, 2]. Neural networks are typically implemented by very-large-scale integrated (VLSI) electronic circuits. Owing to the finite communication speed between neurons and the limited switching speed of electronic devices, time delays often arise in neural networks; they degrade performance and can even destabilize the network. Therefore, delay-dependent stability analysis of NNs with delay has become a key problem in recent decades; see [3,4,5,6,7].

The Lyapunov–Krasovskii (LK) approach is the most widely investigated method for the stability analysis of NNs with time delay. A variety of delay-dependent stability conditions have been proposed in the form of LMIs [8,9,10,11,12,13]. The main goal of these works is to develop new stability criteria that provide the largest upper bound (LUB) of the delay while guaranteeing that the time derivative of the LK functional (LKF) is negative definite. To obtain a large LUB for NNs with time-varying delay, two issues are crucial: the construction of an appropriate LKF, and a tight bound on the quadratic integral terms that appear in the time derivative of the LKF.

In earlier works, the Jensen-based integral inequality (JBII) [14] was widely used to bound such integral terms. To obtain less conservative stability conditions, several other integral inequalities have been used, such as the Wirtinger-based integral inequality (WBII) [15], the auxiliary function-based integral inequality (AFII) [16], the Bessel–Legendre-based integral inequality (BLII) [17] and the free-matrix-based integral inequality (FMII) [18]. These inequalities have contributed significantly to improved stability criteria. A careful look reveals that each of them introduces additional quadratic terms, and each term includes a new state vector. It was shown in [19] that the LKF must be augmented with the states involved in the inequality in order to reduce conservativeness.

A matrix-refined function was formulated in [20], in which slack variables are used to refine the Lyapunov matrix and provide more flexibility. In [21,22,23,24], LKFs were proposed in which the individual quadratic terms are not required to be positive definite, and it was demonstrated that such a relaxed condition yields improved results. To exploit the information of the delay and its derivative, a new type of LKF, known as the delay-product (DP) functional [25, 26], was introduced, which contains the time-varying delay as a coefficient of non-integral quadratic terms. In a similar fashion, new DP functionals were proposed in [22, 27, 28] by modifying the WBII and FMII to exploit the advantages of single-integral state vectors.

Motivated by these works, this article constructs a new delay-product LKF by modifying the non-orthogonal polynomial-based integral inequality (NPII) of [29]. The NPII is designed on the basis of a non-orthogonal polynomial sequence: the auxiliary sequence \(\{1, g(s), g^{2}(s)\}\) is non-orthogonal because \(\int _{a}^{b}g^{2}(s)\textrm{d}s\ne 0\), i.e., the functions 1 and \(g^{2}(s)\) are not orthogonal on \([a,b]\). Hence, the NPII contains an additional cross-term compared with orthogonal polynomial-based integral inequalities, and this cross-term is key to obtaining a less conservative stability condition. The improved reciprocally convex lemma of [19] and the second-order BLII are then jointly used to derive delay-dependent stability criteria for delayed NNs. Two numerical examples are considered to show the efficacy of the proposed stability conditions.

\(\textbf{Notations}\): \(\mathbb {R}^n\) and \(\mathbb {R}^{n \times m}\) denote the n-dimensional Euclidean space and the set of all real \(n \times m\) matrices, respectively. \(Col(\cdot )\) and \({\text {diag}}(\cdot )\) denote a column vector and a (block-)diagonal matrix, respectively. \(Q>0\) means that Q is a symmetric positive definite matrix. \(Sym\{N\}=N+N^\textrm{T}\). 0 and I denote the zero and identity matrices of appropriate dimensions. For a given function \(x: [-\hslash , +\infty ) \rightarrow \mathbb {R}^n\), \(x_{t}(s)\) denotes \(x(t + s)\) for all \(s \in [-\hslash , 0]\) and all \(t \ge 0\).

2 System description and preliminaries

The NN with its equilibrium point shifted to the origin can be represented as:

where \(x(t) \in \mathbb {R}^n\) is the state vector of the n neurons, \(\textit{A}= {\text {diag}}\{a_{1},\ldots , a_{n}\} >0\), and \(\textit{B}, \textit{C}\) and \(\textit{W} \in \mathbb {R}^{n \times n}\) are known interconnection weight matrices. The time-varying delay \(d_{t}\), also written d(t), satisfies the following conditions for given real scalars \(\nu _{1}\), \(\nu _{2}\) and \(\hslash \):

$$\begin{aligned} 0 \le d_{t} \le \hslash , \quad \nu _{1} \le \dot{d}_{t} \le \nu _{2}. \end{aligned}$$(2)

The activation function of the neurons is denoted by \(f(x(t))=Col\{f_{1}(x_{1}(t)),\ldots , f_{n}(x_{n}(t))\}\), and it satisfies

where \(\sigma _{i}^{-}\) and \(\sigma _{i}^{+}\) are known real scalars. Throughout the paper, let \(\varSigma _{1}={\text {diag}}\{\sigma _{1}^{-},\ldots ,\sigma _{n}^{-}\}\) and \(\varSigma _{2}={\text {diag}}\{\sigma _{1}^{+},\ldots ,\sigma _{n}^{+}\}\). The aim of this paper is to construct new functionals that yield improved stability criteria for the delayed NN (1). To this end, some key lemmas are recalled below, starting with the improved reciprocally convex lemma.

Lemma 1

[19] For matrices \(R_{i}>0\) and \(S_{i}\), \(i=1,2\), and positive scalars \(\alpha , \beta \) satisfying \(\alpha +\beta =1\), the following inequality holds:

where \(T_{1}= \beta S_{2}R_{2}^{-1}S_{2}^\textrm{T}\) and \(T_{2}= \alpha S_{1}^\textrm{T}R_{1}^{-1} S_{1}\). The following lemma presents the second-order BLII and the Jensen integral inequality (JII).

Lemma 2

[14, 17, 30] For any continuously differentiable function \(\textit{w}: [a,b] \rightarrow \mathbb {R}^n\) and any constant matrix \(R \ge 0\), the following inequalities hold:

(i) Second-order BLII:

$$\begin{aligned}&\int ^b_a \dot{\textit{w}}^\textrm{T}(s) R \dot{\textit{w}}(s) \textrm{d}s \ge \frac{1}{b-a}[ \mathcal {V}_{1}^\textrm{T} R \mathcal {V}_{1}+ 3\mathcal {V}_{2}^\textrm{T} R \mathcal {V}_{2} \\&\quad + 5\mathcal {V}_{3}^\textrm{T} R \mathcal {V}_{3}] \end{aligned}$$(5)

(ii) JII:

$$\begin{aligned} \int ^b_a \textit{w}^\textrm{T}(s) R \textit{w}(s) \textrm{d}s \ge \frac{1}{b-a} \vartheta _{0}^\textrm{T} R \vartheta _{0} \end{aligned}$$(6)

where \(\mathcal {V}_{1}= \textit{w}(b)-\textit{w}(a), \mathcal {V}_{2}=\textit{w}(a)+\textit{w}(b)-\frac{2}{(b-a)}\vartheta _{0}\), \(\mathcal {V}_{3}=\mathcal {V}_{1}+\frac{6}{(b-a)}\int _{a}^{b} \delta _{a}^{b}(s) \textit{w}(s)\textrm{d}s\), \(\vartheta _{0}=\int _{a}^{b} \textit{w}(s)\textrm{d}s\) and \(\delta _{a}^{b}(s)=-2\left( \frac{s-a}{b-a}\right) +1\).
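The two bounds in Lemma 2 can be checked numerically. The following Python sketch assumes NumPy is available; the test functions, the weight matrix R and the grid size are arbitrary illustrative choices, not taken from the paper. It evaluates both sides of (5) and (6) with the trapezoidal rule. For a cubic w, the derivative lies in the span of the shifted Legendre polynomials of degree 0, 1 and 2, so the second-order BLII should hold with equality up to quadrature error.

```python
import numpy as np

def trap(y, s):
    """Composite trapezoidal rule along axis 0 (scalar or vector samples)."""
    ds = np.diff(s)
    mid = 0.5 * (y[1:] + y[:-1])
    return np.sum(mid * ds, axis=0) if mid.ndim == 1 else np.sum(mid * ds[:, None], axis=0)

def blii_jii_sides(w, dw, R, a, b, num=20001):
    """Return ((lhs, rhs) of BLII (5), (lhs, rhs) of JII (6)) for w: [a, b] -> R^n."""
    s = np.linspace(a, b, num)
    W, dW = w(s), dw(s)                       # samples, shape (num, n)
    L = b - a
    theta0 = trap(W, s)                       # int_a^b w(s) ds
    delta = -2.0 * (s - a) / L + 1.0          # delta_a^b(s)
    V1 = W[-1] - W[0]
    V2 = W[0] + W[-1] - 2.0 / L * theta0
    V3 = V1 + 6.0 / L * trap(delta[:, None] * W, s)
    q = lambda v: v @ R @ v                   # quadratic form v^T R v
    lhs_bl = trap(np.einsum("ij,jk,ik->i", dW, R, dW), s)
    rhs_bl = (q(V1) + 3.0 * q(V2) + 5.0 * q(V3)) / L
    lhs_j = trap(np.einsum("ij,jk,ik->i", W, R, W), s)
    rhs_j = q(theta0) / L
    return (lhs_bl, rhs_bl), (lhs_j, rhs_j)

rng = np.random.default_rng(0)
M = rng.standard_normal((3, 3))
R = M @ M.T + np.eye(3)                       # symmetric positive definite weight

# Non-polynomial w: both inequalities should be strict.
w = lambda s: np.stack([np.sin(3 * s), s**3 - s, np.exp(-s)], axis=1)
dw = lambda s: np.stack([3 * np.cos(3 * s), 3 * s**2 - 1, -np.exp(-s)], axis=1)
bl, jn = blii_jii_sides(w, dw, R, 0.0, 2.0)
print(bl[0] >= bl[1], jn[0] >= jn[1])

# Cubic w: w' is a degree-2 polynomial, so (5) is tight.
wc = lambda s: np.stack([s**3, 1 - s, 2 * s**2 + s], axis=1)
dwc = lambda s: np.stack([3 * s**2, -np.ones_like(s), 4 * s + 1], axis=1)
blc, _ = blii_jii_sides(wc, dwc, R, 0.0, 2.0)
print(np.isclose(blc[0], blc[1], rtol=1e-6))
```

The tightness for cubic w reflects the Bessel-type structure of (5): the weights 1, 3, 5 are the reciprocals of the normalized squared norms of the shifted Legendre polynomials on \([a,b]\).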

The next lemma recalls another bound on quadratic integral functions, derived from a polynomial sequence that is non-orthogonal.

Lemma 3

[29] For real scalars a and b with \(b > a\), a differentiable function \(w: [a,b] \rightarrow \mathbb {R}^n\), a real matrix \(R > 0\) of dimension \(n \times n\), and matrices \(Z_{1}, Z_{2}, Z_{3}, L_{1}, L_{2}\) satisfying \(\mathcal {Z}=\begin{bmatrix} Z_{1} & Z_{2} & L_{1} \\ * & Z_{3} & L_{2} \\ * & * & R \end{bmatrix} \ge 0\), the following inequality holds

where \(\mathcal {V}_{4}=\frac{4}{b-a} \int _{a}^{b}w(s)\textrm{d}s-\frac{8}{(b-a)^2}\int _{a}^{b} \int _{\theta }^{b} w(s)\textrm{d}s\textrm{d}\theta \)

Remark 1

The right-hand side of the NPII (7) contains four quadratic terms. The first three are similar to those of the BLII, while the last one, the cross-term \(\mathcal {V}_{4}^\textrm{T} L_{2}\mathcal {V}_{1}\), arises from the non-orthogonality of the polynomial sequence. This cross-term involves the states \(\frac{4}{b-a} \int _{a}^{b}w(s)\textrm{d}s\), \(\frac{8}{(b-a)^2}\int _{a}^{b} \int _{\theta }^{b} w(s)\textrm{d}s\textrm{d}\theta \), \(\textit{w}(b)\) and \(\textit{w}(a)\). The additional interaction among these states in the cross-term reduces the conservativeness of the bound.
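The states entering the cross-term of Remark 1 can be computed directly. The Python sketch below (NumPy assumed; scalar w for readability; test functions and grid size are illustrative assumptions) evaluates \(\mathcal {V}_{1}\) and \(\mathcal {V}_{4}\) and checks the identity \(\int _{a}^{b}\int _{\theta }^{b} w(s)\textrm{d}s\textrm{d}\theta =\int _{a}^{b}(s-a)w(s)\textrm{d}s\), obtained by exchanging the order of integration, which turns the double integral in \(\mathcal {V}_{4}\) into a single weighted integral. It also confirms that \(\mathcal {V}_{4}\) vanishes for constant w.

```python
import numpy as np

def trap(y, s):
    """Composite trapezoidal rule for scalar samples y(s)."""
    return np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(s))

def double_integral_direct(w, a, b, num=4001):
    """int_a^b int_theta^b w(s) ds dtheta via a nested (cumulative) trapezoidal rule."""
    s = np.linspace(a, b, num)
    y = w(s)
    seg = 0.5 * (y[1:] + y[:-1]) * np.diff(s)          # integral over each cell
    inner = np.append(np.cumsum(seg[::-1])[::-1], 0.0)  # inner[k] = int_{s_k}^b w
    return trap(inner, s)

def double_integral_weighted(w, a, b, num=4001):
    """Same quantity through the identity int_a^b (s - a) w(s) ds."""
    s = np.linspace(a, b, num)
    return trap((s - a) * w(s), s)

def v1_v4(w, a, b, num=4001):
    """States V1 = w(b) - w(a) and V4 of Lemma 3 for a scalar w."""
    s = np.linspace(a, b, num)
    L = b - a
    v1 = w(b) - w(a)
    v4 = 4.0 / L * trap(w(s), s) - 8.0 / L**2 * double_integral_weighted(w, a, b, num)
    return v1, v4

a, b = 0.5, 2.0
w = lambda s: np.sin(s) + s**2
gap = abs(double_integral_direct(w, a, b) - double_integral_weighted(w, a, b))
_, v4_const = v1_v4(lambda s: 3.0 + 0.0 * s, a, b)   # constant w
_, v4_lin = v1_v4(lambda s: s - a, a, b)             # linear w: V4 = -2(b - a)/3
print(gap < 1e-5, abs(v4_const) < 1e-9, np.isclose(v4_lin, -2 * (b - a) / 3))
```

Using the weighted single integral avoids a nested quadrature, which is convenient when such states are sampled along a trajectory.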

To simplify the presentation, the following notation is used.

where \(e_{1}, e_{2},\ldots , e_{14} \in \mathbb {R}^{n \times 14n}\) are block entry matrices; for example, \(e_{2}=[0_{n \times n}, I_{n}, 0_{n \times 12n}]\).
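The block entry matrices \(e_{i}\) act as selectors on a stacked vector of 14 blocks of size n. The Python sketch below (NumPy assumed) builds them for an arbitrary n; the helper name `e` is hypothetical, and the vector used in the example is only a numeric placeholder for the augmented vector, whose block composition is given by the notation above.

```python
import numpy as np

def e(i, n, blocks=14):
    """Block entry matrix e_i in R^{n x (blocks*n)} with I_n in block i (1-based)."""
    if not 1 <= i <= blocks:
        raise ValueError("block index must lie in 1..blocks")
    E = np.zeros((n, blocks * n))
    E[:, (i - 1) * n : i * n] = np.eye(n)
    return E

n = 2
xi = np.arange(14.0 * n)           # placeholder for the stacked augmented vector
print(e(2, n) @ xi)                # selects the second n-block: [2. 3.]
# e_2 = [0_{n x n}, I_n, 0_{n x 12n}], as in the text
print(np.array_equal(e(2, n), np.hstack([np.zeros((n, n)), np.eye(n), np.zeros((n, 12 * n))])))
```

With such selectors, each quadratic term in the derivative of the LKF can be written as a product of \(e_{i}\) matrices and decision matrices acting on a single augmented vector.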

3 Main Results

In this section, we construct a delay-product functional (DPF) using the NPII in (7).

Proposition 1

If the matrices \(0 < N_{3}, N_{4} \in \mathbb {R}^{n \times n}\) and the symmetric matrices \(Z_{i}, Y_{i}, L_{j}, M_{j} \in \mathbb {R}^{n \times n}\), \(i=1,2,3\), \(j=1,2\), satisfy the following LMIs

then, the following function can be a DPF candidate:

where

Proof

By using the NPII of [29] (Lemma 3), one can write

$$\begin{aligned} \int ^{t}_{t-d_{t}} \dot{x}^\textrm{T}(s) N_{1} \dot{x}(s) \textrm{d}s&\ge \frac{1}{d_{t}}[ d_{t}\vartheta _{3}^\textrm{T} (t) \mathcal {L}(d_{t}) \vartheta _{3} (t)] - d_{t}\vartheta _{4}^\textrm{T} (t) \mathcal {Z} \vartheta _{4} (t),\\ \int ^{t-d_{t}}_{t-\hslash } \dot{x}^\textrm{T}(s) N_{2} \dot{x}(s)\textrm{d}s&\ge \frac{1}{\hslash _{d}(t)}[\hslash _{d}(t)\vartheta _{5}^\textrm{T} (t) \mathcal {M}(\hslash _{d}(t)) \vartheta _{5} (t)] - \hslash _{d}(t) \vartheta _{6}^\textrm{T} (t) \mathcal {Y} \vartheta _{6} (t). \end{aligned}$$

Since \(\hslash \ge d_{t} \ge 0\), it is clear that \(V_{N}(t) > 0\). \(\square \)

Remark 2

The new delay-product LKF is created by modifying the NPII introduced in [29]. The NPII is designed on the basis of a non-orthogonal polynomial sequence: the auxiliary sequence \(\{1, g(s), g^{2}(s)\}\) is non-orthogonal because \(\int _{a}^{b}g^{2}(s)\textrm{d}s\ne 0\). Hence, the NPII contains an additional cross-term compared with orthogonal polynomial-based integral inequalities, and this cross-term is key to obtaining a less conservative stability condition.
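The non-orthogonality stated in Remark 2 can be seen from Gram matrices. The polynomial g(s) of [29] is not specified in this section; purely for illustration, the Python sketch below (NumPy assumed) takes \(g(s)=\delta _{a}^{b}(s)\) from Lemma 2 and compares the Gram matrix of \(\{1, g, g^{2}\}\) with that of the shifted Legendre polynomials of degree 0, 1 and 2 that underlie the BLII. Under this assumption, the only nonzero off-diagonal entries are \(\langle 1, g^{2}\rangle =\int _{a}^{b}g^{2}(s)\textrm{d}s\).

```python
import numpy as np

def gram(funcs, a, b, num=20001):
    """Gram matrix G[i, j] = int_a^b f_i(s) f_j(s) ds (trapezoidal rule)."""
    s = np.linspace(a, b, num)
    F = np.stack([f(s) for f in funcs])          # shape (m, num)
    ds = np.diff(s)
    integ = lambda y: np.sum(0.5 * (y[1:] + y[:-1]) * ds)
    m = len(funcs)
    return np.array([[integ(F[i] * F[j]) for j in range(m)] for i in range(m)])

a, b = 0.0, 1.0
u = lambda s: (s - a) / (b - a)
one = lambda s: np.ones_like(s)
g = lambda s: 1.0 - 2.0 * u(s)                   # illustrative assumption: g = delta_a^b

G_np = gram([one, g, lambda s: g(s) ** 2], a, b)                       # {1, g, g^2}
G_leg = gram([one, lambda s: 2 * u(s) - 1,
              lambda s: 6 * u(s) ** 2 - 6 * u(s) + 1], a, b)           # shifted Legendre
print(np.round(G_np, 4))
print(np.round(G_leg, 4))
```

For this choice, \(g^{2}=(1+2L_{2})/3\), where \(L_{2}\) is the degree-2 shifted Legendre polynomial, so \(g^{2}\) carries a constant component; this coupling between the directions 1 and \(g^{2}\) is what an orthogonal basis removes and a non-orthogonal one retains.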

Remark 3

The DPF (9) consists of integral terms and non-integral terms with delay-dependent coefficients. The non-integral terms are non-positive, which relaxes the stability condition, and their time derivatives produce cross-terms involving the product of the delay and its derivative. Both the relaxed condition and the delay-product property make the DPF more effective in reducing conservatism.

By considering DPF (9), we have the following stability criterion.

Theorem 1

For given scalars \(\hslash , \nu _{1}\) and \(\nu _{2}\), the delayed NN (1) is asymptotically stable if there exist matrices \(0<P \in \mathbb {R}^{6n \times 6n}\), \(0<P_{i}\in \mathbb {R}^{4n \times 4n}\), \(0<N_{i},R_{i}\in \mathbb {R}^{n \times n}\), diagonal matrices \(0 < \varPi _{k}\), \(\varOmega _{j}, \varLambda _{j}\), and any matrices \(U_{i}\in \mathbb {R}^{3n \times 3n}\) and \(U_{i+2}\in \mathbb {R}^{n \times n}\), \(i=1,2\); \(j=1,2,3\); \(k=1,2,\ldots ,6\), such that the inequalities in (8) and the following LMIs hold for \(\dot{d}_{t} \in [\nu _{1}, \nu _{2}]\).

Proof

Consider the following LKF:

where \(V_{N}(t)\) is defined in Proposition 1 and

$$\begin{aligned} V_{1}(t)&=\varpi ^\textrm{T}_{0}(t)P\varpi _{0}(t)+\int _{t-{d}_{t}}^{t} \varpi ^\textrm{T}_{1}(s)P_{1}\varpi _{1}(s)\textrm{d}s +\int _{t-\hslash }^{t-{d}_{t}} \varpi ^\textrm{T}_{1}(s)P_{2}\varpi _{1}(s)\textrm{d}s,\\ V_{2}(t)&=2\sum _{i=1}^{n}\int _{0}^{\textit{W}_{i}x(t)}[\pi _{1i}f_{i}^{-}(s)+\pi _{2i}f_{i}^{+}(s)]\textrm{d}s+2\sum _{i=1}^{n}\int _{0}^{\textit{W}_{i}x_{d}(t)}[\pi _{3i}f_{i}^{-}(s)+\pi _{4i}f_{i}^{+}(s)]\textrm{d}s\\&\quad +2\sum _{i=1}^{n}\int _{0}^{\textit{W}_{i}x_{h}(t)}[\pi _{5i}f_{i}^{-}(s)+\pi _{6i}f_{i}^{+}(s)]\textrm{d}s,\\ V_{3}(t)&=\hslash \int _{-\hslash }^{0}\int _{t+u}^{t}\dot{x}^\textrm{T}(s)R_{1}\dot{x}(s)\textrm{d}s \textrm{d}u+\hslash \int _{-\hslash }^{0}\int _{t+u}^{t}f^\textrm{T}(\textit{W}x(s))R_{2}f(\textit{W}x(s))\textrm{d}s \textrm{d}u. \end{aligned}$$

Taking the time derivative of (18) along the solutions of the delayed NN (1), one obtains

where

where \(\varPhi _{0}(\dot{d}_{t},{d}_{t})\), \(\varPhi _{1}({d}_{t})\) and \(\varPhi _{2}(\dot{d}_{t},{d}_{t})\) are defined in (13), (14) and (15), respectively. The integral terms involving \(R_{1}\) and \(R_{2}\) in (23) are now bounded using Lemmas 1 and 2. Applying the integral inequality (5) of Lemma 2 to the integrals involving \(R_{1}\) yields the following inequalities.

where \(\mathcal {R}_1={\text {diag}}\{R_{1}, 3R_{1}, 5R_{1}\}\) and \(E_{1}, E_{2}\) are defined above. Similarly, applying (6) to the integrals involving \(R_{2}\), we have

Now, the right-hand sides (RHS) of (24) and (25) are estimated using Lemma 1 with \(\alpha =\frac{{d}_{t}}{\hslash }\) and \(\beta =\frac{\hslash _{d}(t)}{\hslash }\), where \(\hslash _{d}(t)=\hslash -{d}_{t}\), so that \(\alpha +\beta =1\). Finally, substituting these bounds into (23), we have

where \(\varPhi _{3}({d}_{t})\) is defined in (16) and

Now, from (3) with \(\varOmega ={\text {diag}}\{\omega _{1}, \omega _{2},\ldots , \omega _{n}\}\ge 0\) and \(\varLambda ={\text {diag}}\{\lambda _{1}, \lambda _{2},\ldots , \lambda _{n}\}\ge 0\), the following inequalities hold for \(s, s_{1}, s_{2} \in \mathbb {R}\):

where

Therefore, it follows from (27) that

so, one can write

where \(\varPhi _{4}\) is defined in (17). Using (20), (21), (22), (26) and (29), the time derivative of V(t) along the solutions of (1) satisfies

where \({\Upsilon }(\dot{d}_{t},{d}_{t})\) and \(\Gamma \) are defined in (30) and (27), respectively. The matrix \({\Upsilon }(\dot{d}_{t},{d}_{t})+\Gamma \) is linear in \(\dot{d}_{t}\) and \({d}_{t}\). Therefore, if \({\Upsilon }(\dot{d}_{t},{d}_{t})+\Gamma \) is negative definite for all \({d}_{t}\in [0,\hslash ]\) and \(\dot{d}_{t}\in [\nu _{1},\nu _{2}]\), then \(\dot{{V}}(t) < 0\); by linearity, it suffices to check this condition at the four vertices \(({d}_{t}, \dot{d}_{t}) \in \{0, \hslash \}\times \{\nu _{1}, \nu _{2}\}\). Finally, using the Schur complement, the matrix \(\bar{\Upsilon }(\dot{d}_{t},{d}_{t})+\Gamma \) can be transformed into the LMIs (10) and (11). \(\square \)
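The final step of the proof relies on a convexity argument: a matrix function that is affine in \({d}_{t}\) for fixed \(\dot{d}_{t}\) (and vice versa) is, on the rectangle \([0,\hslash ]\times [\nu _{1},\nu _{2}]\), a convex combination of its four vertex values, and since the largest eigenvalue is a convex function of a symmetric matrix, negative definiteness at the vertices carries over to the whole rectangle. The Python sketch below (NumPy assumed) illustrates this with randomly generated stand-in matrices \(M_{0},\ldots ,M_{3}\); these are hypothetical and are not the blocks of \({\Upsilon }+\Gamma \). It shifts \(M_{0}\) so that the worst vertex is just negative definite and then samples the interior. It does not solve any LMI; in practice, the vertex conditions are imposed as LMIs and handed to a semidefinite programming solver.

```python
import numpy as np

def is_neg_def(M, tol=1e-12):
    """A symmetric matrix is negative definite iff its largest eigenvalue is < 0."""
    return np.linalg.eigvalsh(0.5 * (M + M.T)).max() < -tol

def M_of(d, dd, M0, M1, M2, M3):
    """Hypothetical stand-in: affine in d for fixed dd and affine in dd for fixed d."""
    return M0 + d * M1 + dd * M2 + d * dd * M3

rng = np.random.default_rng(1)
n, h, nu1, nu2 = 4, 1.0, -0.5, 0.5
sym = lambda A: 0.5 * (A + A.T)
M1, M2, M3 = (sym(rng.standard_normal((n, n))) for _ in range(3))
vertices = [(d, dd) for d in (0.0, h) for dd in (nu1, nu2)]

# Shift M0 so that the worst vertex has largest eigenvalue exactly -0.1.
Z = np.zeros((n, n))
worst = max(np.linalg.eigvalsh(M_of(d, dd, Z, M1, M2, M3)).max() for d, dd in vertices)
Ms = (-(worst + 0.1) * np.eye(n), M1, M2, M3)

vertices_ok = all(is_neg_def(M_of(d, dd, *Ms)) for d, dd in vertices)
grid = [(d, dd) for d in np.linspace(0.0, h, 21) for dd in np.linspace(nu1, nu2, 21)]
interior_ok = all(is_neg_def(M_of(d, dd, *Ms)) for d, dd in grid)
print(vertices_ok, interior_ok)
```

The bilinear term \(d\,\dot{d}\,M_{3}\) is included on purpose: the vertex argument still holds for such delay-product terms, because the function remains affine in each variable separately.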

4 Numerical examples

In this section, the proposed criterion is compared with existing ones in the literature using two numerical examples.

Example 1

Consider the delayed NN (1), where the delay \(d_{t}\) and the activation function f(x(t)) satisfy (2) and (3), respectively, \(W=I\), and

The LUBs of the time delay \(\hslash \) for \(\nu =0.1, 0.5, 0.9\) obtained by the proposed criterion are listed in Table 1 together with existing results. Comparing Theorem 1 with the criteria listed in Table 1 shows that the proposed method is less conservative than all the listed approaches for slowly varying delay \((\nu =0.1)\). For fast-varying delay \((\nu =0.5, 0.9)\), however, Theorem 1 is more conservative than Theorem 1 of [34], Proposition 1 of [29] and Proposition 1 of [33]. On the other hand, the criterion in Theorem 1 involves fewer decision variables, which reduces its computational complexity.
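LUB values such as those in Table 1 are commonly obtained by searching over \(\hslash \) with a feasibility test. The search procedure and solver used for the tables are not stated here, so the following Python sketch only assumes that feasibility is monotone in \(\hslash \) (feasible below some threshold, infeasible above it) and implements bisection around a generic `feasible(h)` predicate. As a stand-in for the LMI test of Theorem 1, which would require an SDP solver, the hypothetical `stable_oracle` uses the classical exact condition for the scalar constant-delay equation \(\dot{x}(t)=-a\,x(t-h)\) with \(a>0\), which is asymptotically stable if and only if \(a h<\pi /2\); the bisection should therefore return approximately \(\pi /(2a)\).

```python
import math

def stable_oracle(h, a=2.0):
    """Stand-in feasibility test (hypothetical, not Theorem 1): x'(t) = -a x(t - h)
    with a > 0 is asymptotically stable iff a * h < pi / 2."""
    return a * h < math.pi / 2

def largest_upper_bound(feasible, h_lo=0.0, h_hi=1.0, tol=1e-6, h_max=1e6):
    """Bisection for the largest h with feasible(h) True, assuming feasibility
    holds below some threshold and fails above it (monotone in h)."""
    if not feasible(h_lo):
        raise ValueError("criterion is infeasible at the lower bound")
    # Enlarge the bracket until its upper end is infeasible.
    while feasible(h_hi):
        h_lo, h_hi = h_hi, 2.0 * h_hi
        if h_hi > h_max:
            raise ValueError("no infeasible point found below h_max")
    while h_hi - h_lo > tol:
        mid = 0.5 * (h_lo + h_hi)
        if feasible(mid):
            h_lo = mid
        else:
            h_hi = mid
    return h_lo                     # largest h certified feasible (within tol)

h_star = largest_upper_bound(stable_oracle)
print(round(h_star, 4))             # 0.7854 (pi/4 for a = 2)
```

Returning the lower end of the final bracket keeps the reported bound on the feasible side; replacing `stable_oracle` with a routine that assembles and solves the LMI conditions of Theorem 1 for a given \(\hslash \) would turn this into an LUB search for the proposed criterion.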

Example 2

Consider another delayed NN in the form of (1), where

with \(\varSigma _{1}=0, \varSigma _{2}={\text {diag}}(0.368, 0.1795, 0.2876)\). Also, the time-varying delay and the activation function satisfy (2) and (3), respectively.

The LUBs of the time-varying delay \(\hslash \) for various \(\nu =-\nu _{1}=\nu _{2}\) obtained by the proposed criterion and existing ones are listed in Table 2. Theorem 1 provides better results than all works listed in Table 2 except Theorem 1 (N=1) of [34] for \(\nu =0.5\). It may also be noted that the proposed method involves fewer LMI decision variables; therefore, the criterion introduced in this article reduces the computational burden and complexity.

5 Conclusion

In this paper, the stability of generalized delayed neural networks (DNNs) is analyzed using the LKF method. First, a delay-product type functional (DPF) is proposed using the cross-terms of the NPII. Second, an LKF is introduced using the newly developed DPF. Finally, an LMI-based stability criterion is derived in which information on the delay and its time derivative is exploited to obtain improved results. The effectiveness of the developed stability criterion is demonstrated by two numerical examples.

Availability of data and material

No datasets were generated or analyzed during the current study.

References

Chua LO, Yang L (1988) Cellular neural networks: applications. IEEE Trans Circuits Syst 35:1273–1290

Liu GP (2012) Nonlinear identification and control: a neural network approach. Springer, Berlin

Xie X, Ren Z (2014) Improved delay-dependent stability analysis for neural networks with time-varying delays. ISA Trans 53:1000–1005

Shao H, Li H, Shao L (2018) Improved delay-dependent stability result for neural networks with time-varying delays. ISA Trans 80:35–42

Kwon OM, Park MJ, Park JH, Lee SM, Cha EJ (2014) New and improved results on stability of static neural networks with interval time-varying delays. Appl Math Comput 239:346–357

Shi K, Zhu H, Zhong S, Zeng Y, Zhang Y, Wang W (2015) Stability analysis of neutral type neural networks with mixed time-varying delays using triple-integral and delay-partitioning methods. ISA Trans 58:85–95

Mahto SC, Elavarasan RM, Ghosh S, Saket R, Hossain E, Nagar SK (2020) Improved stability criteria for time-varying delay system using second and first order polynomials. IEEE Access 8:210961–210969

Kwon OM, Park MJ, Lee SM, Park JH, Cha EJ (2012) Stability for neural networks with time-varying delays via some new approaches. IEEE Trans Neural Netw Learn Syst 24:181–193

Hua C, Yang X, Yan J, Guan X (2012) New stability criteria for neural networks with time-varying delays. Appl Math Comput 218:5035–5042

Zhang XM, Han QL (2011) Global asymptotic stability for a class of generalized neural networks with interval time-varying delays. IEEE Trans Neural Netw 22:1180–1192

Zhang CK, He Y, Jiang L, Lin WJ, Wu M (2017) Delay-dependent stability analysis of neural networks with time-varying delay: a generalized free-weighting-matrix approach. Appl Math Comput 294:102–120

Yang B, Wang J, Wang J (2017) Stability analysis of delayed neural networks via a new integral inequality. Neural Netw 88:49–57

Mahto SC, Ghosh S, Nagar SK, Dworak P (2020) New delay product type Lyapunov–Krasovskii functional for stability analysis of time-delay system. In: Advanced, contemporary control: proceedings of KKA 2020—the 20th Polish control conference, Łódź, Poland. Springer, pp 372–383

Gu K, Chen J, Kharitonov VL (2003) Stability of time-delay systems. Springer, Berlin

Seuret A, Gouaisbaut F (2013) Wirtinger-based integral inequality: application to time-delay systems. Automatica 49:2860–2866

Park P, Lee WI, Lee SY (2015) Auxiliary function-based integral inequalities for quadratic functions and their applications to time-delay systems. J Frankl Inst 352:1378–1396

Seuret A, Gouaisbaut F (2015) Hierarchy of LMI conditions for stability analysis of time-delay systems. Syst Control Lett 81:1–7

Zeng HB, He Y, Wu M, She J (2015) Free-matrix-based integral inequality for stability analysis of systems with time-varying delay. IEEE Trans Autom Control 60:2768–2772

Zhang XM, Han QL, Seuret A, Gouaisbaut F (2017) An improved reciprocally convex inequality and an augmented Lyapunov–Krasovskii functional for stability of linear systems with time-varying delay. Automatica 84:221–226

Lee TH, Park JH (2017) A novel Lyapunov functional for stability of time-varying delay systems via matrix-refined-function. Automatica 80:239–242

Xu S, Lam J, Zhang B, Zou Y (2015) New insight into delay-dependent stability of time-delay systems. Int J Robust Nonlinear Control 25:961–970

Lee TH, Park JH, Xu S (2017) Relaxed conditions for stability of time-varying delay systems. Automatica 75:11–15

Zhang B, Lam J, Xu S (2014) Stability analysis of distributed delay neural networks based on relaxed Lyapunov–Krasovskii functionals. IEEE Trans Neural Netw Learn Syst 26:1480–1492

Zhang B, Lam J, Xu S (2016) Relaxed results on reachable set estimation of time-delay systems with bounded peak inputs. Int J Robust Nonlinear Control 26:1994–2007

Zhang CK, He Y, Jiang L, Wu M (2017) Notes on stability of time-delay systems: bounding inequalities and augmented Lyapunov–Krasovskii functionals. IEEE Trans Autom Control 62:5331–5336

Mahto SC, Ghosh S, Saket R, Nagar SK (2020) Stability analysis of delayed neural network using new delay-product based functionals. Neurocomputing 417:106–113

Lee TH, Park JH (2018) Improved stability conditions of time-varying delay systems based on new Lyapunov functionals. J Frankl Inst 355:1176–1191

Lee TH, Trinh HM, Park JH (2017) Stability analysis of neural networks with time-varying delay by constructing novel Lyapunov functionals. IEEE Trans Neural Netw Learn Syst 29:4238–4247

Zhang XM, Lin WJ, Han QL, He Y, Wu M (2017) Global asymptotic stability for delayed neural networks using an integral inequality based on nonorthogonal polynomials. IEEE Trans Neural Netw Learn Syst 29:4487–4493

Kim JH (2016) Further improvement of Jensen inequality and application to stability of time-delayed systems. Automatica 64:121–125

Yang B, Wang R, Dimirovski GM (2016) Delay-dependent stability for neural networks with time-varying delays via a novel partitioning method. Neurocomputing 173:1017–1027

Chen J, Park JH, Xu S (2018) Stability analysis for neural networks with time-varying delay via improved techniques. IEEE Trans Cybern 49:4495–4500

Zhang XM, Han QL, Zeng Z (2017) Hierarchical type stability criteria for delayed neural networks via canonical Bessel–Legendre inequalities. IEEE Trans Cybern 48:1660–1671

Chen J, Park JH, Xu S (2019) Stability analysis for delayed neural networks with an improved general free-matrix-based integral inequality. IEEE Trans Neural Netw Learn Syst 31:675–684

Yang B, Wang R, Shi P, Dimirovski GM (2015) New delay-dependent stability criteria for recurrent neural networks with time-varying delays. Neurocomputing 151:1414–1422

Acknowledgements

I would like to acknowledge that this paper was completed entirely by me and my co-author and not by someone else.

Funding

The authors did not receive support from any organization for the submitted work.

Author information


Contributions

Dr. Sharat Chandra Mahto contributed to the conceptualization of the study, the methodology and the software. Mr. Thakur Pranav Kumar Gautam contributed to data curation and writing (original draft preparation).


Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Mahto, S.C., Gautam, T.P.K. A new Lyapunov–Krasovskii functional for stability analysis of delayed neural network. Int. J. Dynam. Control (2024). https://doi.org/10.1007/s40435-024-01450-3


DOI: https://doi.org/10.1007/s40435-024-01450-3