Abstract

This paper presents an estimation algorithm for continuous-time stochastic systems under coloured perturbations using an extended form of the instrumental variable method. The extended and the simple forms were compared on two numerical examples to test their efficiency. The results showed that the simple version is able to estimate the parameters under coloured noise without additional information about the noise, and that it is easier to implement than the extended version.

1 Introduction

A model is a mathematical representation of a physical system (biological, electronic, mechanical). System modelling requires considering all the relevant factors involved in its description. When the model of a plant takes random variations into account, it is called a stochastic system; these variations can be present in the parameters, measurements, inputs or disturbances. If the inputs of the system are functions of time defined for all instants from some initial instant onward, as in analogue systems, the system is called continuous [1, 2]. Interest in stochastic models has increased recently, driven by the need to take into account the random effects present in complicated physical systems [3].

A fundamental task in engineering and science is the extraction of system information and models from measured data. Parameter estimation is the discipline that provides tools for the efficient use of data and for estimating the variables that appear in mathematical models [4]. Parameter identification for dynamic systems has been studied for at least the last three decades, motivated by the need to design more efficient control systems (see [5, 6]). In discrete-time systems, the most common technique is the Least Squares Method (LSM) (see [6,7,8]). This method has been very successful both in theory and in applications such as econometrics, robotics and mechanics.

Parameter estimation using instrumental variables has been used in problems where the regressors are correlated with the equation noise [9]. The instrumental variables can be formed from different combinations of inputs, delayed inputs and outputs, filtered inputs, etc.; a general analysis of various instrumental variable methods is presented in [10]. Systems with additive noise on the measured variables lead to errors-in-variables problems, and an instrumental variable estimator for them in a general framework is presented in [11]. In continuous time, the refined instrumental variable method has been implemented, and [12] presents a study of its convergence properties.
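The bias that motivates instrumental variables can be seen in a minimal static sketch. The data-generating setup below is hypothetical and is not one of the paper's models: the regressor \(x = z + u\) shares the disturbance \(u\) with the equation noise \(e = u + w\), while the instrument \(z\) drives \(x\) but is independent of \(e\). Least squares then converges to \(\theta + \mathrm{cov}(x,e)/\mathrm{var}(x)\), whereas the IV estimate \(\sum z y / \sum z x\) converges to \(\theta\).

```python
import random


def simulate(n, theta, seed=0):
    """Hypothetical data with a regressor correlated with the equation noise.

    x = z + u and e = u + w share the disturbance u, so x and e are
    correlated; the instrument z drives x but is independent of e.
    """
    rng = random.Random(seed)
    xs, ys, zs = [], [], []
    for _ in range(n):
        z = rng.gauss(0.0, 1.0)  # instrument
        u = rng.gauss(0.0, 1.0)  # disturbance shared by x and e
        w = rng.gauss(0.0, 1.0)  # extra noise, independent of everything else
        x = z + u
        xs.append(x)
        ys.append(theta * x + u + w)  # y = theta*x + e with e = u + w
        zs.append(z)
    return xs, ys, zs


def ls_estimate(xs, ys):
    """Least squares for y = theta*x + e: sum(x*y) / sum(x*x)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def iv_estimate(xs, ys, zs):
    """Instrumental variables: sum(z*y) / sum(z*x)."""
    return sum(z * y for z, y in zip(zs, ys)) / sum(z * x for z, x in zip(zs, xs))


xs, ys, zs = simulate(100_000, theta=2.0)
print(f"LS: {ls_estimate(xs, ys):.3f}  IV: {iv_estimate(xs, ys, zs):.3f}")
```

With \(\theta = 2\) and unit variances, \(\mathrm{cov}(x,e)/\mathrm{var}(x) = 1/2\), so the least-squares estimate settles near 2.5 while the IV estimate settles near 2.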

In this paper we address the parameter estimation problem for continuous-time stochastic systems under coloured perturbations. The instrumental variable method is implemented in two different ways: the simple estimation algorithm (see [13]) and an extended form of the algorithm, similar to the extended LSM algorithm presented in [14]. Until now, these algorithms have been extensively applied in discrete time, but their implementation in continuous-time stochastic systems is scarce. The main idea is to compare both algorithms in order to determine whether the simple version is good enough for estimation under coloured noise, or whether the algorithm must be extended when the system presents coloured noise.

Paper structure. The second section presents the problem formulation and the assumptions needed. The third section designs the extended instrumental variable algorithm. Finally, the estimation technique is illustrated with two numerical examples that show the performance of the extended IV algorithm and compare it with the simple version of the algorithm.

2 Problem formulation

Consider the stochastic correlated continuous-time system with state vector \(x_{t} \in \mathbf R ^{n}\), whose dynamics are given by the following system of stochastic differential equations:

where \(A_{t} \in \mathbf R ^{n \times m}\) is the unknown time-varying matrix to be identified; the matrix \(H_{t}:=H_{0}+\varDelta H_{t}\) has a nominal (central) part \(H_{0}\in \mathbf R ^{n \times n}\), which is assumed to be known a priori, and an unknown but bounded part \(\varDelta H_{t}\in \mathbf R ^{n\times n}\), i.e., \(\left\| \varDelta H_{t}\right\| \le \varDelta ^{+}\); \(B_{t}\in \mathbf R ^{n\times n}\) is a known deterministic matrix; \(\sigma _{t}\in \mathbf R ^{n\times l}\) is known; \(f_{t}\) is a measurable (available at any \(t\ge 0\)) deterministic bounded excitation input vector \(\left( \left\| f_{t}\right\| \le f^{+}\right) \); \(\zeta : \mathbf R ^{n}\rightarrow \mathbf R ^{m}\) is a known (measurable) nonlinear Lipschitz vector function, the regressor; \(W_{t}\) is a standard vector Wiener process; and \(s_{t}\) is the coloured perturbation, defined by the deterministic matrix \(H_{t}\) and driven by the Wiener process \(W_{t}\).
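To illustrate what "coloured" means here, the sketch below simulates a scalar perturbation produced by a linear shaping filter driven by a Wiener process. The filter form \(ds_{t} = (h_{0} + \Delta h)\, s_{t}\, dt + \sigma\, dW_{t}\), the values \(h_{0} = -1\), \(\sigma = 1\), and the Euler–Maruyama discretization are assumptions made for illustration, not a reproduction of Eq. (1). For a stable filter (\(h < 0\)) the stationary variance is \(\sigma^{2}/(2|h|)\) and the autocorrelation decays as \(e^{h\tau}\), whereas white noise would show zero correlation at any nonzero lag.

```python
import math
import random


def coloured_noise(h0, sigma, dt, n_steps, delta_h=0.0, seed=1):
    """Euler-Maruyama path of the assumed shaping filter
    ds = (h0 + delta_h) * s * dt + sigma * dW, started at s = 0.

    h0 stands for a known nominal value and delta_h for a bounded unknown
    deviation; both names are hypothetical.
    """
    rng = random.Random(seed)
    h = h0 + delta_h
    s = 0.0
    path = []
    for _ in range(n_steps):
        dw = rng.gauss(0.0, math.sqrt(dt))  # Wiener increment, variance dt
        s += h * s * dt + sigma * dw
        path.append(s)
    return path


def autocorrelation(path, lag):
    """Sample autocorrelation of a path at an integer lag."""
    mean = sum(path) / len(path)
    var = sum((p - mean) ** 2 for p in path) / len(path)
    n = len(path) - lag
    cov = sum((path[i] - mean) * (path[i + lag] - mean) for i in range(n)) / n
    return cov / var


dt = 0.01
path = coloured_noise(h0=-1.0, sigma=1.0, dt=dt, n_steps=400_000)
tail = path[1_000:]                      # discard the start-up transient
variance = sum(p * p for p in tail) / len(tail)
lag = round(1.0 / dt)                    # lag of 1 time unit
print(f"variance {variance:.3f} (continuous-time value 0.5), "
      f"correlation at lag 1.0: {autocorrelation(tail, lag):.3f} (exp(-1) = 0.368)")
```

The nonzero correlation at a lag of one time unit is what distinguishes this perturbation from the white increments \(dW_{t}\) that drive it.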

2.1 Problem description

The main problem in this paper is to design an estimate \(\hat{A}_{t}\) of the time-varying matrix \(A_{t} \in \mathbf R ^{n \times m}\) by an extended instrumental variable method, based only on the data available up to time t, which include all information about the state dynamics \(x_{t}\) and the excitation inputs. The subsystem that defines the coloured noise is formed by the known nominal matrix \(H_{0}\), the unknown but bounded perturbation matrix \(\varDelta H_{t}\), and a Wiener process. Here we revisit the algorithm presented in [13], extend it to the coloured noise case, compare both forms of the algorithm, and also compare their performance with the extended LSM algorithm presented in [14].

3 Instrumental variables algorithm for continuous-time

In [14], an algorithm for parameter estimation in stochastic systems under coloured perturbations using the LSM was presented. That method represented the plant dynamics in an extended form, and part of the structure of the noise had to be estimated in order to estimate \(A_{t}\). Here we include the instrumental variable in this algorithm and compare its performance with the simple instrumental variable algorithm, in order to analyse which version is more suitable for coloured perturbations.

3.1 Estimation algorithm in the extended form

For the extended version of the IV algorithm, first let us represent the plant dynamics of Eq. (1) in the following extended form

where \(C_{t}\) is the vector to be estimated, composed of the elements \(a_{ij,t}\) of the matrix \(A_{t}\) and the elements \(s_{i,t}\) of \(s_{t}\)

and the new regressor \(z_{t}\) is composed of the original regressor \(x_{t}\) and the available data \(g_{i,j}\) from \(B_{t}\) and \(H_{0}\)

First, notice that, by back-integrating, for \(t\ge h\) Eq. (4) can be expressed as

rearranging the terms of this equation into an extended output \(F_{t,t-h}\), an extended regressor \(Z_{_{t,t-h}}\) and an extended perturbation \(\xi _{t,t-h}\), we obtain the corresponding “regression form”

where

with \(h>0\) as a back-step, and \(Z_{_{t,t-h}}\) defined for \(\tau \ge h\). Here the “extended output” \(F_{t,t-h}\) and the “extended regressor” \(Z_{_{t,t-h}}\) are known at each \(t\ge 0\). The instrumental variable is now defined as follows

where, instead of the extended matrix \(z_{t}\), the following matrix is used

which is equivalent to (1) but without the Wiener process. In it, \(x_{t}\) is replaced by the instrument \(v_{t}\), which is noise-free and is generated by the following system

Since \(A_{t}\) is the quantity being estimated, it is not realistic to use its exact value in the instrumental variable; an approximate value \(\tilde{A}_{t}\) is used instead.
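Why an approximate \(\tilde{A}_{t}\) suffices can be checked on a discrete-time scalar analogue. The plant, the AR(1) coloured noise and the guess \(\tilde{a} = 0.3\) (with true value \(a = 0.5\)) are hypothetical choices. Because the instrument \(v\) is generated by a noise-free auxiliary model driven only by the known input, it is uncorrelated with the noise yet still correlated with \(x\), which is all the IV estimate needs, even when \(\tilde{a} \ne a\). Least squares, in contrast, is biased because the coloured noise makes \(x[k]\) correlated with the current noise sample.

```python
import random


def simulate_plant(a, c, n, seed=2):
    """Hypothetical scalar plant x[k+1] = a*x[k] + u[k] + e[k], driven by a
    known input u and coloured noise e[k] = c*e[k-1] + w[k]."""
    rng = random.Random(seed)
    u = [rng.gauss(0.0, 1.0) for _ in range(n)]
    x = [0.0] * (n + 1)
    e = 0.0
    for k in range(n):
        e = c * e + rng.gauss(0.0, 1.0)  # AR(1) coloured noise
        x[k + 1] = a * x[k] + u[k] + e
    return u, x


def auxiliary_instrument(a_tilde, u):
    """Noise-free auxiliary model v[k+1] = a_tilde*v[k] + u[k], driven only by
    the known input and an approximate parameter a_tilde."""
    v = [0.0] * (len(u) + 1)
    for k in range(len(u)):
        v[k + 1] = a_tilde * v[k] + u[k]
    return v


a_true, c = 0.5, 0.8
u, x = simulate_plant(a_true, c, n=50_000)
n = len(u)
y = [x[k + 1] - u[k] for k in range(n)]         # y[k] = a*x[k] + e[k]
v = auxiliary_instrument(a_tilde=0.3, u=u)      # deliberately inexact guess of a

a_ls = sum(x[k] * y[k] for k in range(n)) / sum(x[k] ** 2 for k in range(n))
a_iv = sum(v[k] * y[k] for k in range(n)) / sum(v[k] * x[k] for k in range(n))
print(f"LS: {a_ls:.3f}  IV: {a_iv:.3f}  (true a = {a_true})")
```

For \(a = 0.5\) and \(c = 0.8\) the least-squares estimate converges to roughly 0.87, while the IV estimate converges to 0.5 even though the instrument was generated with \(\tilde{a} = 0.3\).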

Multiplying (6) by the instrumental variable \(V_{_{t,t-h}}^{\top }\) and integrating back from \(t-h\) to t, we get

where

If the “rate of parameter change” \(\left\| C_{s^{\prime }} -C_{t}\right\| \) for any \(s^{\prime }\in \left[ t-h,t\right] \) is small enough (h can also be taken small enough), the current parameter estimate \(\hat{C}_{t}\) can be defined as the matrix satisfying the equalities

Then the “extended error vector” \(\bar{\xi }_{t,t-h}\) represents the current identification error corresponding to the parameter estimate \(\hat{C}_{t}\) satisfying (11). If the “persistent excitation condition” is fulfilled, i.e., if for any \(t\ge h>0\)

then the estimate \(\hat{C}_{t}\) can be represented by

that can be expressed, alternatively, as

Here, \(\chi \left( \tau \ge t-h\right) \) is the characteristic function defined by

In fact, this function characterizes the “window” \(\left[ t-h,t\right] \) within the integrals in (14). Instead of \(\chi \left( \tau \ge t-h\right) \), other kinds of “windows” can be applied there, for example the “window” corresponding to the “forgetting factor”, which leads to the following matrix estimate

with

where \(0<r<1\) is the forgetting factor. Below we analyse the differential form of the matrix estimating algorithm (16).
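The forgetting-factor window replaces the rectangular window of length h by the weight \(r^{t-\tau }\). Since \(\frac{d}{dt}r^{t-\tau }=r^{t-\tau }\ln r<0\) for \(0<r<1\), older data are discounted continuously, and the total weight \(\int_{0}^{t}r^{t-\tau }d\tau =(1-r^{t})/(-\ln r)\) tends to \(-1/\ln r\). The short sketch below computes this "effective memory" for the forgetting factors used in the numerical examples (\(r=0.3\) and \(r=0.2\)) and checks it with a midpoint rule; the function names are illustrative.

```python
import math


def effective_memory(r, t=math.inf):
    """Total weight of the window r**(t - tau) over [0, t]:
    (1 - r**t) / (-ln r), which tends to -1/ln r as t grows."""
    if not 0.0 < r < 1.0:
        raise ValueError("forgetting factor must satisfy 0 < r < 1")
    tail = 0.0 if math.isinf(t) else r ** t
    return (1.0 - tail) / -math.log(r)


def riemann_weight(r, t, n=100_000):
    """Midpoint-rule approximation of the integral of r**(t - tau) over [0, t]."""
    dt = t / n
    return sum(r ** (t - (i + 0.5) * dt) for i in range(n)) * dt


for r in (0.3, 0.2):  # forgetting factors of Examples A and B
    print(r, round(effective_memory(r), 4), round(riemann_weight(r, 50.0), 4))
```

Both columns agree, giving an effective memory of about 0.83 time units for \(r=0.3\) and about 0.62 for \(r=0.2\).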

3.2 Differential form of the estimating algorithm

Direct differentiation of (16) and (17) gives

where

Here \(\frac{d}{dt}r^{t-\tau }=r^{t-\tau }\ln r\). In view of this, (19) can be rewritten as

To calculate \(\dot{\varGamma }_{t}\), let us differentiate the identity \(\varGamma _{t}\varGamma _{t}^{-1}=I\) that leads to the following relations

The direct differentiation of (17) gives

Substituting \(\frac{d}{dt}\varGamma _{t}^{-1}\) from (22) into (21), we get

So, finally, the relations (23) and (20) constitute the following extended instrumental variable identification algorithm:

In fact, \(t_{0}\) is any time just after the moment when the matrix \(\varGamma _{t}^{-1}\) becomes non-singular. This algorithm will be implemented for the coloured noise case and compared with the simple IV algorithm presented in the following subsection.
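The continuous-time algorithm (24) propagates the estimate and \(\varGamma _{t}\) through differential equations; differentiating \(\varGamma _{t}\varGamma _{t}^{-1}=I\) gives \(\dot{\varGamma }_{t}=-\varGamma _{t}\left( \frac{d}{dt}\varGamma _{t}^{-1}\right) \varGamma _{t}\). A familiar discrete-time counterpart, shown here only as an analogue and not as the authors' implementation, is recursive instrumental variables with a forgetting factor, in which the Sherman–Morrison formula plays the role of that identity. The function `riv_step`, the two-parameter regression, the discrete forgetting factor `lam` and the step change in the first parameter are all hypothetical.

```python
import random


def riv_step(theta, P, phi, z, y, lam):
    """One step of recursive instrumental variables with forgetting factor lam.

    theta: current estimate; P: inverse of R = sum_i lam**(k-i) * z_i phi_i^T
    (plus a small initial term); phi: regressor; z: instrument; y: output.
    """
    n = len(theta)
    Pz = [sum(P[i][j] * z[j] for j in range(n)) for i in range(n)]      # P z
    phiP = [sum(phi[i] * P[i][j] for i in range(n)) for j in range(n)]  # phi^T P
    denom = lam + sum(phi[i] * Pz[i] for i in range(n))                 # lam + phi^T P z
    err = y - sum(phi[i] * theta[i] for i in range(n))                  # prediction error
    theta = [theta[i] + Pz[i] / denom * err for i in range(n)]
    # Sherman-Morrison: (lam*R + z phi^T)^{-1} = (P - P z phi^T P / denom) / lam
    P = [[(P[i][j] - Pz[i] * phiP[j] / denom) / lam for j in range(n)]
         for i in range(n)]
    return theta, P


rng = random.Random(3)
lam = 0.995                          # discrete forgetting factor (hypothetical value)
theta = [0.0, 0.0]
P = [[1e3, 0.0], [0.0, 1e3]]         # large initial P, i.e. small initial R
for k in range(10_000):
    a_k = 1.0 if k < 5_000 else 3.0  # hypothetical step change in the first parameter
    b = 0.5
    z_k = rng.gauss(0.0, 1.0)        # instrument, independent of the noise
    u_k = rng.gauss(0.0, 0.5)        # disturbance shared by regressor and noise
    x_k = z_k + u_k
    e_k = u_k + rng.gauss(0.0, 0.5)
    y_k = a_k * x_k + b + e_k
    theta, P = riv_step(theta, P, phi=[x_k, 1.0], z=[z_k, 1.0], y=y_k, lam=lam)

print([round(t, 2) for t in theta])
```

After the step, the first component moves from about 1 to about 3, with a memory of roughly \(1/(1-\lambda)=200\) samples, which is the discrete analogue of the effective memory of \(r^{t-\tau }\).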

3.3 The simple form of the IV algorithm

The IV algorithm in the simple form is based on the system

where the stochastic noise is only a Wiener process. Following the same procedure as above, we obtain the simple form of the IV method:

The analysis of the estimation error for both algorithms is similar to that presented in [13, 14].

4 Numerical examples

In this section, we present two numerical examples in order to show the performance of the estimation algorithms mentioned in the previous section.

Example A. In the first example, system (1) is defined as follows

where \(\sigma _{t}=1\), \(B_{t}=1\), \(h=0.0001\) and \(r=0.3\); the simulation time is \(t=100\), and the simulation method used in Matlab is ode1. The instrument \(v_{t}\) for the simple version (Eq. 25) is

and for the extended version (Eq. 24)

Fig. 1 compares the estimation algorithm using instrumental variables in the extended form with the least squares method, also in an extended form. Both algorithms have similar performance, and there is no visible benefit from implementing the instrumental variable.

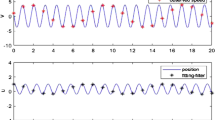

Fig. 2 presents the results of the estimation algorithm in the simple (IV2) and extended (IV1) versions of the instrumental variable method. Here the simple version of the algorithm performs better than the extended version. This figure shows that the simple version of the instrumental variable algorithm is robust enough to estimate the parameters even under coloured noise, and is more effective than extending the estimation algorithm with information about the coloured noise.

The quality of the parameter estimation algorithm has been evaluated using the following performance index

with \(\varepsilon = 0.0001\) and \(t=100\), where \(\varepsilon \) is a regularizing parameter that avoids singularities at the beginning of the process (\(t=0\)). The performance index results for this example are:

This index shows that the simple form of the instrumental variable method is indeed more effective for parameter estimation in systems under stochastic perturbations.

Example B. In this example, system (1) is defined by

where \(\sigma _{t}=1\), \(B_{t}=1\), \(h=0.02\) and \(r=0.2\); the simulation time is \(t=50\), and the simulation method used in Matlab is ode3. The instrument for the simple version (Eq. 25) is

and for the extended version

The estimated parameter is presented in Fig. 3, which displays the original parameter and the result of the estimation algorithm. This figure shows that the simple form of the algorithm, even though some noise is present in the estimated parameter, performs well and is able to estimate the time-varying parameter without any additional information about the coloured noise, and without any filtering or discretization of the system. The performance index results in this example are:

- For the simple case \(J_{t=50}^{IV}=0.0284\)
- For the extended case \(J_{t=50}^{IV}=0.0295\)

5 Conclusion

This study presented the instrumental variable (IV) technique for parameter estimation in continuous-time stochastic systems under coloured perturbations. The IV estimation algorithm was analysed in its simple form and in an extended form, which estimates part of the structure of the coloured noise. The performance index showed that, although the extended form performs well, the simple form is able to estimate the parameters under coloured noise without additional information, is easier to implement, and performs better than the extended version.

References

Pugachev VS, Sinitsyn IN (2001) Stochastic systems. Theory and applications. World Scientific, Singapore

Gard T (1988) Introduction to stochastic differential equations. Marcel Dekker, New York

Davis MHA (1977) Linear estimation and stochastic control. Chapman and Hall, London

Beck JV, Arnold KJ (1977) Parameter estimation in engineering and science. John Wiley & Sons, New York, Chichester, Brisbane, Toronto

Chen H, Guo L (1991) Identification and stochastic adaptive control. Birkhäuser, Boston, Basel, Berlin

Ljung L (1999) System identification. Theory for the user. Prentice Hall, Upper Saddle River

Poznyak A (1980) Estimating the parameters of autoregressing processes by the method of least squares. Int J Syst Sci 5(11):235–254

Kumar P, Varaiya P (1986) Stochastic systems: estimation, identification, and adaptive control. Prentice Hall, Upper Saddle River

Bowden RJ, Turkington DA (1984) Instrumental variables. Cambridge University Press, Cambridge

Söderström T, Stoica P (1981) Comparison of some instrumental variable methods: consistency and accuracy aspects. Automatica 17(1):101–115

Söderström T (2011) A generalized instrumental variable estimation method for errors-in-variables identification problems. Automatica 47:1656–1666

Liu X, Wang J, Zheng WX (2011) Convergence analysis of refined instrumental variable method for continuous-time system identification. IET Control Theory Appl 5(7):868–877

Escobar J, Enqvist M (2016) Instrumental variables and LSM in continuous-time parameter estimation. ESAIM: Control, Optimisation and Calculus of Variations

Escobar J, Poznyak A (2011) Time-varying matrix estimation in stochastic continuous-time models under coloured noise using LSM with forgetting factor. Int J Syst Sci 42(12):2009–2020


Cite this article

Escobar, J., Gallardo, A. Instrumental variables for parameter estimation in stochastic systems with coloured noise. Int. J. Dynam. Control 6, 1105–1110 (2018). https://doi.org/10.1007/s40435-017-0304-z
