Abstract

There are many challenges against an accurate gas turbine fault diagnostics, such as the nonlinearity of the engine health, the measurement uncertainty, and the occurrence of simultaneous faults. The conventional methods have limitations in effectively handling these challenges. In this paper, a hybrid intelligent technique is devised by integrating an autoassociative neural network (AANN), nested machine learning (ML) classifiers, and a multilayer perceptron (MLP). The AANN module is used as a data preprocessor to reduce measurement noise and extract the important features for visualisation and fault diagnostics. The features are extracted from the bottleneck layer output values based on the concept of the nonlinear principal component analysis (NLPCA). The nested classifier modules are then used in such a manner that fault and no-fault conditions, component and sensor faults, and different component faults are distinguished hierarchically. As part of the classification, evaluation of the fault classification performance of five widely used ML techniques aiming to identify alternative approaches is undertaken. In the end, the MLP approximator is utilised to estimate the magnitude of the isolated component faults in terms of flow capacity and isentropic efficiency indices. The developed system was implemented to diagnose up to three simultaneous faults in a two-shaft industrial gas turbine engine. Its robustness towards the measurement uncertainty was also evaluated based on Gaussian noise corrupted data. The test results show the derivable benefits of integrating two or more methods in engine diagnostics on the basis of offsetting the weakness of the one with the strength of another.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The performance of a gas turbine (GT) degrades through time due to different physical faults, including fouling, erosion, corrosion, foreign object damage, thermal stress, and an increase in blade tip clearance [1]. This would result in high energy consumption, loss of production, environmental pollution, and even major damage to the machinery. In order to avoid these faults and to maintain its best performance, the GT should be provided with an effective monitoring system. Degradations can be manifested by changes in the gas path measurements (such as pressure, temperature, spool speed, fuel flow) from a previously established baseline. Due to the reason that performance could also, equivalently, be changed owing to load or ambient condition variations, establishing the baseline is difficult. There are also other challenges against achieving sufficiently accurate fault diagnostic techniques, including measurement uncertainties; occurrence of multiple faults, simultaneously; and availability of limited numbers of sensors installed on the engine’s gas path [2].

In order to address these challenges, different studies have been conducted in the past [3, 4]. With regard to the baseline establishment, one of the most widely used approaches is correcting the measurements towards the ambient and load conditions [5]. Alternatively, Mohammadi and Montazeri-Gh [6] recommended a method that is able to deal with part loads. In this method, the input data generated at different part load conditions are first corrected towards the ambient conditions, and then, the diagnostic performance of the algorithm is analysed at each part load.

In reality, it is difficult to have error-free measurements [7]. The measurement uncertainty affects the value of the measurement deviations due to performance deterioration, thereby providing the wrong information in the fault diagnostics. As a result, sensor diagnostics is one of the most popular studies in GT engine condition monitoring. Noise reduction, outlier removal, and sensor fault diagnostics are some of the key objectives. Numerous techniques have been devised previously by different authors. For instance, authors in [8,9,10] proposed Kalman filter-based schemes, whilst others, as in Refs. [11, 12], used AANNs. In addition, a comprehensive explanation of more than ten different techniques had been presented by Ganguli [13]. Different factors, such as noise and bias levels, the possible existence of multiple sensors being simultaneously faulty, and sensor fault detection and isolation in a faulty engine, affect the diagnostic ability of sensor faults [14].

On the other hand, as the GT operating hours increase, it is more likely that two or more components could deteriorate simultaneously. The challenge to get an accurate diagnostic system increases with the increase in the number of faults. Some studies have focused on multiple fault diagnostics by neglecting noise effects. For instance, Kong et al. [15] contributed a GT performance diagnostic method using a user-friendly interface neural network that can deal with component faults only. A multilayer perceptron (MLP) [16] was also used for multiple component fault detection and isolation in a turboshaft engine. The reported results showed that, in the absence of noise, 99.4% of single component faults (SCFs) and double component faults (DCFs), and 94.6% of triple component faults (TCFs) were classified correctly.

Although several studies, as mentioned above, considered sensor and component problems separately, there are also numerous studies which have attempted to address component problems taking into account sensor noise and bias. Ogaji et al. [17] developed an artificial neural network (ANN)-based fault diagnostic method that can detect, isolate, and quantify both sensor and component faults on a single spool industrial GT. In the same manner, Joly et al. [18] utilised the ANN for component fault isolation and assessment of a high bypass military turbofan engine. The double shaft GT engine diagnostic performance of nested ANNs was also examined by Xiradakis and Li [19] and Fentaye et al. [20]. Generally, the reported results indicated that diagnostic accuracy deceases with the number of concurrent faults and the noise level considered.

Recently, Tayarani-Bathaie and Khorasani [21] proposed dynamic neural network (DNN)-based mechanisms that can detect and isolate both sensor and component faults. In the same year, Vanini et al. [22] proposed a new sensor and component fault diagnostic approach using the AANN. More recently, Amozegar et al. [23] and Li et al. [24] developed an ensemble DNN-based scheme for a GT engine fault diagnostics. However, these methods were limited to single and double faults and were qualitative. Moreover, they used efficiency and flow capacity changes separately to represent single and concurrent component faults although the component’s deterioration should, most significantly, be represented by at least the two health indices as a module [25].

In general, although the aforementioned techniques have exhibited remarkable performance for the task they were designed for, as far as multiple fault diagnostics is concerned, most of them were limited to dual faults only. On the other hand, in those studies where TCFs were considered, their accuracy needs some improvement.

In this research, a GT gas path component fault diagnostic system using a hybrid AI-based technique has been developed. The method has three integrated parts: the first part performs the task of reducing measurement noise and extracting the necessary features, the second part concerns fault detection and isolation, whilst the last part estimates the magnitude of the faults. For the first part, an AANN-based model was developed and the required features were extracted exploiting the concept of the NLPCA. A hierarchical multiple fault classifier was then modelled and integrated with the AANN model. In order to select the appropriate classifier that could effectively and efficiently perform the multi-class classification task, five different classifiers [decision tree (DT), linear and nonlinear support vector machines (SVMs), K-nearest neighbour (KNN), and ensemble classifier (EC)] were developed. Finally, the fault quantification scheme was developed using the MLP. The diagnostic performance of our proposed method has been tested for single, double, and triple component fault scenarios in a two-shaft stationary GT engine. The required data to train and test this method were generated from a GT simulation program (GSP). Finally, the performance of our method was compared with the state-of-the-art methods. To sum up, the main contributions of the research delivered in this paper can be listed as:

-

Combined usage of an AANN, an ML classifier, and an MLP: As a matter of fact, there is no single technique which addresses all the issues related to GT fault diagnostics; it would, however, be interesting to try and combine more than one technique to offset the limitations of the one with the advantages of the other [4]. Regarding this, although there are many different hybrid techniques available in the literature developed for a GT gas path diagnostic application, our proposed combination is new. Different alternative frameworks were considered and evaluated, and therefore, the most appropriate framework was selected. This framework was designed in such a way that the necessary tasks in fault diagnosis like noise filtration, feature extraction, and decision making can be performed step by step in an integrated manner. The comparison results confirmed that the proposed method has an obvious advantage over the individual methods, particularly over those designed to perform single and multiple faults together.

-

Usage of an AANN for noise reduction and faults visualisation: Previous studies, as in Ref. [22], have mainly focused on the use of the AANN for GT data validation based on a trend shift (threshold) and binary decision approaches. In both cases, the difference between the predicted and observed measurements, called residuals, is used. Unlike these approaches, in the new method, in addition to the noise reduction task, the AANN is used to extract the important features using the bottleneck layer neurons or principal components (PCs). The feature variables are used as axes to the visualisation plot. It is important to understand the relationship between the available fault classes and decide whether they are linearly or nonlinearly separable. Besides that, upon using the AANN for noise minimisation, the tolerance of the proposed method towards measurement noise is enhanced.

-

As discussed, in the literature review, the majority of the methods are limited to single fault analysis due to their frequency of existence. Moreover, except for a few authors like those in [18, 26] who considered modular DCFs (4 performance parameters at a time), most of the authors who studied DCF diagnostics, like for example [21,22,23], used 2 performance parameters as a DCF. In the new method up to 3 faulty components (6 performance parameters at a time) were diagnosed successfully.

2 Gas turbine description

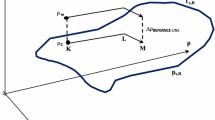

Although the fault diagnostics technique proposed in this paper is of general validity for an industrial two-shaft gas turbine applications, it will be easier to present it through its application to a particular engine type. The engine considered in this study is a two-shaft stationary gas turbine engine, which is thermodynamically similar to the LM2500. It consists of a 16-stage axial compressor with a pressure ratio of 17.2:1, a 2-stage high-pressure compressor turbine, and a 6-stage free power turbine (PT). Since the gas generator (GG) section and the power turbine are not mechanically coupled, the GG shaft and the PT shaft are free to rotate at different speeds. Some specifications of this engine in design point condition can be found in [27]. Figure 1 illustrates the schematic of such an engine.

Monitoring the health status of gas turbine components is accomplished based on gas path measurement deviations. For the current engine, eight (8) measurable parameters are used and their maximum nonrepeatability values are given in Table 1.

3 Gas path faults and their simulation

A gas turbine engine is subjected to deterioration due to its hostile operating environment. The deterioration can be recoverable, nonrecoverable, and permanent. Fouling, erosion, corrosion, blade tip clearance, and foreign object damage are the causes. Amongst these causes, fouling and erosion are the most common ones [28]. Fouling is accountable for more than 70% of the overall engine performance loss during its operation [1]. Particularly, maybe due to their location in the gas path, fouling is a common problem for compressors and erosion for turbines [29]. Consequently, in the current work, due to their frequency of existence and percentage contribution to the overall performance degradation, compressor fouling (CF), compressor turbine erosion (CTE), and power turbine erosion (PTE) were considered. As per past studies [30, 31], these component faults have the possibility to exist one at a time and simultaneously. Accordingly, seven fault scenarios, as listed in Table 2, were assumed as component faults that may possibly occur in a two-shaft engine during its operation. The performance model of the considered gas turbine was developed by means of one of the most popular engine performance modelling programs called GSP. It is a user-friendly component-based tool developed at the National Aerospace Laboratory (NLR), in the Netherlands. It can perform both design and off-design simulations of any kind of gas turbine in different configurations [32]. Before simulating deterioration, the “healthy” condition model was developed based on generic component maps scaled to the design point data of the engine under ISO conditions (Pamb = 1.01325 bar and Tamb = 288.15 K).

Summary of the data generation is given below.

-

First, 357 data samples were generated from the “healthy” engine conditions.

-

Next, as a component fault scenario, the seven component faults listed in Table 2 (CF, CTE, PTE, CF + CTE, CF + PTE, CTE + PTE, and CF + CTE + PTE) were taken into account. The generally accepted and implemented method to simulate deterioration is implanting sets of known fault case patterns associated with each fault scenario to the healthy engine model [33]. Accordingly, a total of 3570 fault case patterns (i.e. 510 patterns from each component fault type) were drawn based on Table 3. Generally, two data groups were generated: a 2499 data sample (we call it “main dataset” hereafter) for learning and a 1071 data sample (153 samples from each fault class) as a blind test case used to verify the diagnostic capability of the method for unknown conditions. The main dataset was further divided into three subsets: training, validation, and test.

Table 3 Relationship between the gas path faults and performance parameters -

In order to take into account the issue of measurement uncertainty, Gaussian noise was added into the data, which is the standard way of engine measurement noise representation [34]. The noise level for each measurement was assumed to be the standard deviation in per cent of the measured values, and this assumption is the recommended measurement noise assumption in gas turbines.

-

Finally, the percentage measurement deltas (Δz) were computed based on Eq. (1) where yb and yp are the baseline and the predicted value of the measurements, respectively.

$$\Delta z = \frac{{y_{\text{p}} - y_{\text{b}} }}{{y_{\text{b}} }}$$(1)

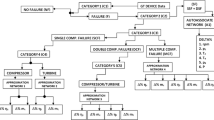

4 Gas path diagnostics method

According to the most widely used definition of a fault diagnostics in the military and other sectors [35], it is the procedure of fault detection (detecting an abnormal operating condition and reporting), isolation (determining the type and location of the detected fault), and identification (estimating the magnitude of the isolated fault). In the proposed method (Fig. 2), an AANN was used as a data preprocessor to minimise noise effects and extract features for visualisation and fault classification, nested ML-based classifiers were used as gas path faults classification scheme, and a MLP-based approximation network was used to estimate the magnitude of the isolated component faults. According to this framework, the component faults given in Table 2 were classified in such a way that engine operators could easily understand the type, nature, and location of these faults. The first module is the detection module where healthy and unhealthy data classes were separated. The second classifier module was accountable to split single, double, and triple component fault categories. The remaining two classification modules were trained to distinguish single component faults (F1, F2, and F3) and double component faults (F4, F5, and F6), respectively. Finally, a single approximation network applied to estimate the magnitude of the isolated component fault(s) in terms of flow capacity (Γ) and isentropic efficiency (η) deltas (Δ). Therefore, ΔΓC, ΔηC, ΔΓCT, ΔηCT, ΔΓPT, ΔηPT are outputs of the approximation network associated with the compressor, compressor turbine, and power turbine components. Using nested modules for classification aiming to divide and share complex diagnostic tasks is highly encouraged [2]. In particular, dividing the diagnostic tasks systematically and arranging the associated models hierarchically provide a more detailed information about the nature of the gas path problems. That may increase the understanding of the engine operator in the decision making. Moreover, this helps to avoid the possibility of searching a nonexisting fault by activating only the necessary module(s) in the hierarchy so that the testing time would be reduced. The advantage of this concept has also been evaluated for different classification methods [18, 36]. The fault diagnostic effectiveness of the proposed algorithm was demonstrated in the MATLAB programming language.

4.1 Noise reduction and visualisation using an AANN

AANNs are an important family of multilayer feed forward neural networks with equal input and output size. They are also known as autoencoder, bottleneck, NLPCA, replicator network, and sand-glass network due to its unique architecture [37]. The general structure, as shown in the upper part of Fig. 3, usually contains five layers: an input layer, three hidden layers, and an output layer. The hidden layer consists of mapping, bottleneck, and de-mapping layers. The encoder maps the high-dimensional input vector (i.e. sample of the measured deviations; ΔT3, ΔP3, ΔNCT, ΔWf, ΔT4, ΔP4, ΔT5, ΔP5, as shown in Fig. 3) into a low-dimensional feature vector and the decoder, taking the output of the encoder, demaps back to the input space. The bottleneck contains the minimum number of neurons, and those neurons correspond to the PCs. The compression process is performed based on the concept of the NLPCA. The compressed feature vector consists of the most relevant and meaningful representation of the input data, and the feature variables can be considered as PCs [22, 38]. Once we have this feature vector, we can easily visualise by plotting it on a suitable dimensional space for further analysis. Because the mapping and de-mapping layers should be able to efficiently represent data that lie on and close to nonlinear manifolds, the activation function for these layers ought to be nonlinear [38]. In this regard, for hidden layers with a suitable number of neurons, sigmoid activation functions can handle any type of multidimensional nonlinear function. The bottleneck and output layers can use either linear or nonlinear activation functions. In the present work, a Tan-Sigmoid (TANSIG) scaled into the range (− 1, 1) was the activation functions used in the hidden layers, whilst purelin was applied in the output layer [39]. The number of neurons per layer is determined through training. More details on AANNs about the mathematical analysis, architecture, learning process, and potential applications can be found in [38, 40].

The AANN architecture illustrated in Fig. 3 was used to reduce measurement noise and extract features for the visualisation and fault diagnostics. For the noise reduction network, noisy data were used as an input and noise-free data as an output. Whilst training, the network is accountable to produce an output as close to the original noise-free data as possible. Meanwhile, since the number of bottleneck neurons is less than the input neurons, the 8-dimensional input data is compressed to two or 3-dimensional feature vector by the bottleneck layer so that it can be visualised on a 2D or 3D space. In order to select the best structure with an acceptable noise reduction capability, the generated MATLAB code was run multiple times by changing the controlling factors including the number of hidden neurons, the activation functions, and the training function. An equal number of neurons were assumed for the mapping and de-mapping layers varied from 5 to 40. Mean squared error (MSE) was the cost function minimised by the backpropagation.

After a successful training, the network weights and biases were saved and the encoder and decoder parts separated. Simulation of the encoder was then followed using both the training and test data samples so as to extract the important features for the visualisation. Assuming that b1 is the mapping layer bias vector, LW1 is the mapping layer weight vector, b2 is the bottleneck layer bias vector, and LW2 is the bottleneck layer weight vector, for an input data X ∈ RM×N (where M and N are the numbers of input parameters and number of samples used, respectively) of the output of the bottleneck layer neuron or the feature vector can be computed by applying the Matlab code; h = tansig(repmat(b2, 1, N) + LW2 * tansig(b1 * ones(1, N) + LW1 * X)). In the end, for the visualisation, the feature vector was projected onto the feature space (x, y, and z-axis represent the 1st, 2nd, and 3rd bottleneck layer neuron output values, respectively). This enables a human observer and can be an engine health monitoring system developer or end user, to visualise and interpret the nature of the component(s) performance degradation. The complete process flow diagram of the noise reduction and feature extraction program is given in Fig. 4.

4.2 Gas turbine component FDI using nested ML classifiers

The FDI problem was treated as a multi-class classification problem. It is understandable that selecting an appropriate classification technique is critical for an effective FDI. Having this in mind, in this paper, the potential of the most popular ML classifiers, namely, the DT, SVM, KNN, and EC, was investigated aiming to determine which classification approach is more effective. The DT and KNN techniques are directly multi-class classification algorithms, whilst the other two are basically binary [41]. Decentralised and nested classifier models, arranged hierarchically aiming to divide and share the classification tasks, were developed in order to classify both single and multiple gas path component faults in a two-shaft industrial GT engine to the class level.

4.2.1 Machine learning classifiers

Each of the classifiers utilised in this work is briefly described below. Their mathematical details could be found in the cited references.

Decision tree Is a tree-like decision structure consisting of three types of nodes, namely, root node, decision node, and leaf nodes. The root node represents the origin of the entire input data, internal nodes represent branches of the root node, and the leaf nodes represent the class labels or the final outcomes that do not have child classes. The connections between the nodes, all the way from the root to the leaf nodes, represent classification rules derived from the training samples. The classification is then performed sequentially via the DT with its leaf nodes representing the engine faults. The accuracy of the subsequent branch depends on the previous accuracy, and a big change in the tree can result due to small changes in the data sample [42]. The detailed procedure to build a DT can be found in [43].

Support vector machine The SVM is a binary classifier that separates data classes by maximising the margin between them. Multi-class problems are treated as a binary classification by dividing them into several binary classes. The classification task can thus be performed using either the one-vs-all (OvA) or one-vs-one (OvO) approach [44]. In the former case, if one of the classes to be determined is taken as positive, the rest of the classes will be considered as negative. The classifier will then learn a boundary to separate the positive and negative classes. This needs to be performed for all the other classes too. In the latter scenario, for a k number of classes, k(k − 1)/2 binary classes will be formed and each of those classes trained against every one of the other classes. The final decision will then be performed combining the outputs of all the binary classifiers and applying the majority rule [36]. Previously, the SVM was used by several authors for different machinery condition monitoring and fault diagnostics [45].

K-nearest neighbour The KNN is one of the simplest and nonparametric classification algorithms [46]. Classification is performed based on the number of neighbours closest to the new point of interest p. This may be accomplished by ranking all of the data points according to the Euclidean distance from the new point p and selecting K vectors that are located closest to this point. The class type is determined based on the majority of the closest points. Its prediction accuracy can be influenced by the number of K [47].

Ensemble classifier EC is also a multi-class classifier which comprises two or more classifier models, which might be the same or different types. ECs have lower errors than the individual methods and offer less overfitting. For the classification decision, the mean of the individual classifier outcomes is used. An extensive survey of the state-of-the-art EC methods is available in [48].

4.2.2 FDI performance evaluation

The performance of the proposed method in FDI was evaluated based on the standard performance confusion metrics [49]. The important elements of the fault detection and isolation decision metrics (i.e. missed detections, false alarms, misclassifications, and correct classifications) were established for each classifier. Tables 4 and 5 show the general structure of the detection and isolation confusion metrics, respectively. Regarding Table 4, false negative (FN) implies that the condition was treated as normal although it was actually faulty [this will lead to a false alarm (FA)). Conversely, false positive (FP) refers to the prediction of the fault state as a normal state (this will cause a missed detection (MD)]. Similarly, true negative (TN) indicates that the condition was actually normal and predicted as normal and false positive (FP) indicates the condition was actually normal, but predicted as faulty. In both matrices, the diagonal values represent the number of states predicted correctly whilst the values other than the diagonal represents the number of incorrect detection/classification.

The rate of true positive (RTP), the rate of true negative (RTN), the rate of false positive (RFP), the rate of false negative (RFN), and the overall detection accuracy (OADA) can be computed as;

Similarly for the isolation confusion matrix, the individual fault classification accuracy (IFCA) and the overall classification accuracy (OACA) of the classifier can be computed as:

4.3 Gas turbine fault identification using MLP

Fault identification/quantification is the last stage of the fault diagnostics process, which is the task of estimating the magnitude of the isolated component fault in terms of the health indices (efficiency and flow capacity changes). For this purpose, the basic single-hidden-layer MLP architecture, as shown in Fig. 5, is used. The inputs are the eight measurement parameters’ changes (ΔT3, ΔP3, ΔNCT, ΔWf, ΔT4, ΔP4, ΔT5, and ΔP5), whereas the outputs are the six health parameter indices to be estimated (ΔΓC, ΔηC, ΔΓCT, ΔηCT, ΔΓPT, and ΔηPT). The task of the fault estimation network is to undertake the inverse process performed by a performance simulator. The performance simulator is used to simulate the effect of the components’ deterioration on the gas path measurements. The fault estimation network, in turn, utilises the measurement variations to assess the level of the deterioration.

In ANN training, a set of inputs is first forward propagated through the first hidden layer and the activation values of that layer are transferred to the following layer and so on, all the way to the output. The output from the forward propagation is compared to the target value, and the cost is the difference between these two. Here, the network involves iterative procedures, across the whole training data samples, for the minimisation of the error function using a backpropagation. An attempt to minimise the error function may lead to a phenomenon known as “overfitting”. A widely accepted method to fix this problem is called cross-validation [50]. This may be accomplished by splitting the learning data samples randomly into three groups: training, cross-validation, and test. Training the network on the first group to capture the useful patterns from that set and adjusting the connection weights is the first process. In the meantime, the status of the prediction accuracy of the network should be checked using both the training and validation data samples. The MSE and sum square error (SSE) are the two frequently used measures of network prediction accuracy in an ANN training. Network training should stop when the validation error begins to increase whilst the training error keeps on decreasing. At that point, so as to check how well the system functions, its prediction performance ought to be evaluated utilising the test data samples. To be more confidential, the prediction accuracy of the system can be verified using another data sample, which is not included in the first three data groups.

Since a single MLP net is used to estimate all the 7 component fault scenarios considered in this study, in the SCF cases, except for 2 of the health parameters associated with the faulty component, the other 4 parameters should be close to zero. Similarly, in the DCF case, the 4 health parameters will probably deviate from zero based on the fault level, whilst the rest of the parameters remain close to zero. The accuracy of the individual fault estimation accuracy of the network is evaluated.

The estimation accuracy of the network was evaluated based on the two popular statistical precision accuracy assessment approaches, namely, the standard deviation (σ, is the precision of the prediction, and also indicates how spread out the individual prediction values are from their mean) and correlation coefficient (r, measure of the degree of matching between network outputs and the target), as defined by Eqs. (9) and (10), respectively [17].

5 Results and discussion

5.1 Noise reduction and visualisation

The performance of the method was evaluated in different cases such as changing the sizes of the hidden layers, training and activation functions, and the number of epochs. The size of the bottleneck layer was determined based on the number of dimensions required for the visualisation and the capability of the network to extract sufficiently important features that can regenerate the original noise-free data with minimum error. The performance of some selected architectures amongst the several potential models is presented in Table 6. In general, since the faults were not coplanar, the features from 2 bottleneck nodes were unable to clearly distinguish and visualise them. Instead, since the performance and the noise reduction obtained from the 3 bottleneck neurons were sufficiently high and the 3D plot showed a better visualisation and separability, we selected Network 5 (8:10:3:10:8), which is the structure with the minimum hidden neurons.

Figures 6, 7, and 8 demonstrate the visualisation of the eight data classes based on the main dataset. The outputs of the bottleneck neurons (h1, h2, and h3) refer to the feature vector variables and were used as coordinates of the feature space. The groups of features (loci) with different colours represent the classes presented in Fig. 2. It can be observed that most of the data points corresponding to the assumed component fault classes were clearly separable from each other after the dimensionality reduction. At low severity, the fault categories became close to each other and the separation space between the adjacent fault groups decreased when the noise level increased. This indicates the difficulty in the fault separation when small faults, as close as the noise effect, were considered. 0.5% and 0.25% were the minimum flow capacity and isentropic efficiency deltas used in this analysis. One can see that the data features from 3 bottleneck neurons showed better separability than the 2 neurons. In the 3D case, since the view angle matters with the degree of visualisation, the plot could be rotated into the appropriate direction.

To verify the generalisation performance of the AANN-based model, the 1071 unseen data sample was simulated and plotted on the feature space (see Fig. 9). It can be observed that the test sample data classes were distinguished into their corresponding main classes.

5.2 Fault detection

Fault detection is the first step in fault diagnostics process, which is used to decide whether the operating system is healthy or unhealthy. A detection system with the minimum FA and MD is essential. In this work, the detection problem was considered as a binary class classification problem. Performance data that could represent both the healthy and faulty engine operating conditions were used to develop and demonstrate the detection model. The healthy operating condition was assigned as positive (1), whilst the faulty condition as negative (− 1). The classifier was then assigned to distinguish the positive class from the negative one by applying the binary decision logic. The detection accuracy was then computed based on the decision matrix approach as discussed in Sect. 4.2. Table 7 shows the percentage detection accuracy obtained from the five ML-based detection models. It can be seen that all the classifiers, except for the LSVM-based method, in general, were able to separate over 96% of the two data groups successfully. Specifically, a higher percentage of FA than MD was recorded.

5.3 Fault isolation/classification

For component fault classification, as shown in Fig. 10, three classifier modules have been developed. CLASSIFIER 2 is trained to classify SCF, DCF, and TCF categories. CLASSIFIER 3 and CLASSIFIER 4 are accountable to distinguish the faulty components to the class level. This kind of engine fault classification model is important to understand the nature of the fault categories and requires fewer classifiers than the conventional OvA techniques. Whenever misclassification occurs, the hierarchical classification scheme will tell us from which specific class comes the wrong classification or to which specific class it goes. Furthermore, as we go from top to bottom of this model, the number of classes decreases, and as a result, the probability of a successful classification increases.

Table 8 presents the test results found from the classification model corresponding to the DT, SVM, KNN, and EC-based classifiers. The best accuracy was obtained with the NLSVM (99.83), followed by EC (98.43%) and KNN (97.15%). Misclassification was observed when equivalent fault severity levels between the SCFs and DCFs and/or DCFs and TCFs were considered. This is because the corresponding input patterns became similar. Consequently, as shown in Table 8, some DCF patterns were misclassified as SCFs and TCF and some TCF patterns as DCFs. Specifically, when a small amount of fault is assumed for one of the components in a DCF, there is a probability that the classifier may recognise it as an SCF. Similarly, when small faults are assumed for any one of the components in a TCF, there is a chance that some TCF patterns might be considered as a DCF and vice versa [51].

5.3.1 Effect of measurement noise

In this section, to evaluate the ability of the current method to come with measurement noises, high-level noise values ranging up to ± 3σ was considered. Firstly, the noise corrupted data passed through the AANN noise reduction technique. The visualisation of the extracted feature vector is demonstrated in Fig. 11. The noise reduced features were then fed into the fault detection and isolation system. The test results, as presented in Table 9, showed that 97%, 99.2%, 98%, and 96.7% of the data was correctly detected by the DT, NLSVM, KNN, and EC-based classifiers, respectively. From the detection confusion matrix, we observed that the maximum detection accuracy resulted in a 1% MA and a 4.2% FD. By CLASSIFIER 2, excluding the LSVM-based classifier, on average, over 91.8% of the data were separated correctly into the fault categories, SCF, DCF, and TCF. Regarding CLASSIFIER 3 and CLASSIFIER 4, the SVM and KNN-based methods resulted in a better accuracy than the remaining two classifiers. Taking into account the amount of data used for training and testing, the obtained results were reasonably acceptable.

5.4 Fault identification

The identification network is accountable for estimating the magnitude of the isolated fault(s) in terms of efficiency and flow capacity deltas. It was trained on 2499 data samples until the MSE value of 0.00032 was attained after 1000 iterations. Figure 12 illustrates the absolute estimation error of the proposed algorithm, that is, the difference between the implanted and predicted values associated with the 1071 test points. For each test example, the flow capacity and efficiency deltas were implanted concurrently as single, double, and triple component faults. As it is shown in this figure, for each test fault case reported on the abscissa, two error values, corresponding to each component fault, were plotted on the y-axis. The first three sections refer to the estimation errors of the identification network, corresponding to each SCF, the next three sections were corresponding to each DCF, and the last section corresponds to the TCF. One can see that the estimation errors of the network for the compressor performance parameters were relatively high. There are also some estimation outliers for large fault case values. But the overall estimation accuracy of the module associated with each fault scenario should be determined based on the distribution of the errors of the test data samples. Relative to the total test results, the contribution of these individual prediction outliers to the overall estimation accuracy was insignificant. The error values in terms of range, for most of the test examples, fell in between -0.3 and 0.3. With regard to the ± 2σ error precision estimation accuracy applied by the authors in [51], on average, the MLP module is 97.3% confident to provide a fault estimation value of the actual value ± 0.113.

The influence of the number of health parameters on the measurements increases with the number of components deteriorated simultaneously. Particularly, when the number of performance parameters excides the number of gas path parameters, the diagnostic problem will become underdetermined. However, in all the fault scenarios considered in this paper, the maximum number of performance parameters to be estimated was less than the instrumentation suite used. Moreover, as reported in [4], in contrast to the model-based methods (like GPA and KF), one of the beauties of ANNs is their capability of undertaking diagnostic problems with a limited number of measurements. Maybe due to these two reasons, even for the TCF scenario, the instrumentation suite was enough to converge the solution.

Figure 13 presents the estimation error distribution of the test data sample along with their mean (µ), σ, and r values. The errors’ standard deviation determines how spread out the prediction values are from their mean. It allows us to quantify the level of confidence that the network produces estimation values in the given range of error distribution. As shown in Table 10, more than 77% of the test errors lie within the range of ± 1σ, over 95% within ± 2σ, and 100% within ± 3σ of the mean error. It is known that the approximation ability of AANN-based methods relies highly on the data size. Hence, increasing the input data size may significantly improve the approximation accuracy of this method.

6 Conclusions

In this paper, the gas path component fault diagnostic performance of a hybrid intelligent-based method for a two-shaft industrial GT engine was investigated. Towards this end, first, a five-layer AANN model was devised so as to reduce sensor noise and extract the important features for the visualisation and fault diagnostics. This process was accomplished using the bottleneck layer neurons based on the concept of the NLPCA. The visualisation helped us visualise the nature of the component faults and develop a hierarchically arranged classification framework. The data preprocessor AANN method was then integrated with the widely used ML classifiers trained for single and multiple fault classification. An MLP-based approximation technique was developed to assess the magnitudes of the isolated gas path component faults in terms of isentropic efficiency and flow capacity indices. The generalisation performance of these algorithms was evaluated using the performance data obtained from a gas turbine simulation program, tuned to the case engine which is thermodynamically similar to the GE LM2500. Moreover, in order to evaluate the robustness of the method towards measurement errors, random Gaussian noise values were added to the data. The test results showed that the hierarchical multi-class classification approach yielded better results than the conventional single technique approaches. Specifically, the NLSVM and KNN-based classifiers, in most instances, resulted the highest and nearly the same classification accuracies. On average, 99.9% of F1, F2, and F3, 99.8% of F4, F5, and F6, and 91.3% of F7 were classified successfully by the NLSVM-based classifiers. The TCF classification success rate achieved by this method was better than the single technique-based literature results. Regarding the fault approximation performance of the MLP-based scheme, in general, over 95% of the test errors, for all fault types, fell within the range of ± 2σ with a mean value of nearly zero.

Abbreviations

- AANN:

-

Autoassociative neural network

- ANN:

-

Artificial neural network

- AI:

-

Artificial intelligence

- CF:

-

Compressor fouling

- CTE:

-

Compressor turbine erosion

- DCF:

-

Double component fault

- DNN:

-

Dynamic neural network

- DT:

-

Decision tree

- EC:

-

Ensemble classification

- FA:

-

False alarm

- FN:

-

False negative

- FP:

-

False positive

- GG:

-

Gas generator

- GSP:

-

Gas turbine simulation program

- IFCA:

-

Individual fault classification accuracy

- KNN:

-

K-nearest neighbour

- LSVM:

-

Linear support vector machine

- LOGSIG:

-

Logistic sigmoid

- MD:

-

Missed detection

- ML:

-

Machine learning

- MLP:

-

Multilayer perceptron

- MSE:

-

Mean square error

- N :

-

Shaft speed

- NLR:

-

National aerospace laboratory

- NLSVM:

-

Nonlinear support vector machine

- OACA:

-

Over all classification accuracy

- OADA:

-

Over all detection accuracy

- OvA:

-

One-vs-all

- P :

-

Pressure (bar)

- PC:

-

Principal component

- PCA:

-

Principal component analysis

- PT:

-

Power turbine

- PTE:

-

Power turbine erosion

- RBFNN:

-

Radial basis function neural network

- RFA:

-

Rate of false alarm

- RFN:

-

Rate of false negative

- RTP:

-

Rate of true positive

- SCF:

-

Single component fault

- T :

-

Temperature (K)

- TCF:

-

Triple component fault

- Wf:

-

Fuel mass flow rate (kg/s)

- Γ :

-

Flow capacity

- Η :

-

Efficiency

- µ :

-

Mean

- σ :

-

Standard deviation

- r :

-

Correlation coefficient

- Δ :

-

Delta

References

Diakunchak IS (1992) Performance deterioration in industrial gas turbines. J Eng Gas Turbines Power 114:161–168

Singh R (2003) Advances and opportunities in gas path diagnostics. In: 15th ISABE, Paper No. ISABE-2003-1008

Amare DF, Gilani SIU-H, Aklilu TB (2016) Gas turbine gas path diagnostics: a review. In: MATEC web of conferences, p 00005

Marinai L, Probert D, Singh R (2004) Prospects for aero gas-turbine diagnostics: a review. Appl Energy 79:pp. 109–126, 9//

Volponi AJ (1999) Gas turbine parameter corrections. J Eng Gas Turbines Power 121:613–621

Mohammadi E, Montazeri-Gh M (2014) Simulation of full and part-load performance deterioration of industrial two-shaft gas turbine. J Eng Gas Turbines Power 136:092602

Abernathy R, Powell BD, Colbert DL, Sanders DG, Thompson Jr J (1973) Handbook, uncertainty in gas turbine measurements. DTIC Document

Lu F, Huang J, Lv Y (2013) Gas path health monitoring for a turbofan engine based on a nonlinear filtering approach. Energies 6:492–513

Meskin N, Naderi E, Khorasani K (2013) A multiple model-based approach for fault diagnosis of jet engines. IEEE Trans Control Syst Technol 21:254–262

Pourbabaee B, Meskin N, Khorasani K (2016) Robust sensor fault detection and isolation of gas turbine engines subjected to time-varying parameter uncertainties. Mech Syst Signal Process 76–77:136–156

Ogaji SOT, Singh R, Probert SD (2002) Multiple-sensor fault-diagnoses for a 2-shaft stationary gas-turbine. Appl Energy 71:321–339

Shah B, Sarvajith M, Sankar B, Thennavarajan S (2013) Multi-auto associative neural network based sensor validation and estimation for aero-engine. In: AUTOTESTCON (proceedings), pp 250–256

Ganguli R (2012) Gas turbine diagnostics: signal processing and fault isolation. CRC Press, Boca Raton

Romessis C, Mathioudakis K (2002) Setting up of a probabilistic neural network for sensor fault detection including operation with component faults. In: ASME Turbo Expo 2002: power for land, sea, and air, pp 101–108

Kong C, Ki J, Kang M, Kho S (2004) Intelligent performance diagnostics of a gas turbine engine using user-friendly interface neural networks. Aircr Eng Aerosp Technol 76:391–397

Matuck GR, Barbosa JR, Bringhenti C, Lima I (2009) Multiple faults detection of gas turbine by MLP neural network. In: ASME Turbo Expo 2009, power for land, sea, and air. American Society of Mechanical Engineers, pp 697–703

Ogaji SOT, Li YG, Sampath S, Singh R (2003) Gas path fault diagnosis of a turbofan engine from transient data using artificial neural networks. In: ASME Turbo Expo 2003, Atlanta, Georgia, USA, pp 405–414. https://doi.org/10.1115/GT2003-38423

Joly RB, Ogaji SOT, Singh R, Probert SD (2004) Gas-turbine diagnostics using artificial neural-networks for a high bypass ratio military turbofan engine. Appl Energy 78:397–418

Xiradakis N, Li YG (2004) Gas turbine and sensor fault diagnosis with nested artificial neural networks. In: Proceedings of the ASME Turbo Expo 2004, pp 351–359

Fentaye AD, Baheta AT, Gilani SIU-H (2018) Gas turbine gas-path fault identification using nested artificial neural networks. Aircr Eng Aerosp Technol 90:992–999

Sina Tayarani-Bathaie S, Khorasani K (2015) Fault detection and isolation of gas turbine engines using a bank of neural networks. J Process Control 36:22–41

Sadough Vanini ZN, Meskin N, Khorasani K (2014) Multiple-model sensor and components fault diagnosis in gas turbine engines using autoassociative neural networks. J Eng Gas Turbines Power 136:091603

Amozegar M, Khorasani K (2016) An ensemble of dynamic neural network identifiers for fault detection and isolation of gas turbine engines. Neural Netw 76:106–121

Li Z, Zhong S-S, Lin L (2016) Novel gas turbine fault diagnosis method based on performance deviation model. J Propul Power 33:730–739

Li Y (2010) Gas turbine performance and health status estimation using adaptive gas path analysis. J Eng Gas Turbines Power 132:041701

Marinai L, Singh R (2006) A fuzzy logic approach to gas path diagnostics in aero-engines. In: Palade V, Bocaniala CD (eds) Computational intelligence in fault diagnosis. Springer, Berlin, pp 37–79

Saravanamuttoo H, Lakshminarasimha A (1985) A preliminary assessment of compressor fouling. ASME paper, p 153

Lakshminarasimha AN, Boyce MP, Meher-Homji CB (1994) Modeling and analysis of gas turbine performance deterioration. J Eng Gas Turbines Power 116:46–52

Ogaji SOT, Sampath S, Singh R, Probert SD (2002) Parameter selection for diagnosing a gas-turbine’s performance-deterioration. Appl Energy 73:25–46

Ogaji SO, Singh R (2003) Advanced engine diagnostics using artificial neural networks. Appl Soft Comput 3:259–271

Zedda M, Singh R (1998) Fault diagnosis of a turbofan engine using neural networks—a quantitative approach. In: 34th AIAA/ASME/SAE/ASEE joint propulsion conference and exhibit, American Institute of Aeronautics and Astronautics

Visser WP, Broomhead MJ (2000) GSP, a generic object-oriented gas turbine simulation environment. In: ASME Turbo Expo 2000: power for land, sea, and air, p V001T01A002

Ogaji S, Singh R (2002) Study of the optimisation of measurement sets for gas path fault diagnosis in gas turbines. In: ASME Turbo Expo 2002: power for land, sea, and air, pp 271–280

Abernethy R, Thompson J (1973) Uncertainty in gas turbine measurements. In: 9th Propulsion conference, p 1230

Vachtsevanos GJ, Lewis F, Hess A, Wu B (2006) Intelligent fault diagnosis and prognosis for engineering systems. Wiley, Hoboken

Jaw LC, Lee Y-J (2014) Engine diagnostics in the eyes of machine learning. In: ASME Turbo Expo 2014: turbine technical conference and exposition, p V006T06A029

Scholz M (2012) Validation of nonlinear PCA. Neural Process Lett 36:21–30

Kramer MA (1991) Nonlinear principal component analysis using autoassociative neural networks. AIChE J 37:233

Demuth H, Beale M (2015) Neural network toolbox for use with MATLAB—user’s guide

Kramer MA (1992) Neutral network applications in chemical engineeringAutoassociative neural networks. Comput Chem Eng 16:313–328

Aly M (2005) Survey on multiclass classification methods. Neural Netw 19:1–9

Pekelis L (2013) Classification and regression trees: a practical guide for describing a dataset, Bicostal Datafest. Stanford University

Saimurugan M, Ramachandran K, Sugumaran V, Sakthivel N (2011) Multi component fault diagnosis of rotational mechanical system based on decision tree and support vector machine. Expert Syst Appl 38(4):3819–3826

Friedman J (1996) Another approach to polychotomous classification. Technical report, Department of Statistics, Stanford University

Widodo A, Yang B-S (2007) Support vector machine in machine condition monitoring and fault diagnosis. Mech Syst Signal Process 21:2560–2574

Shah JK, Smolenski BY, Yantorno RE, Iyer AN (2004) Sequential k-nearest neighbor pattern recognition for usable speech classification. In: Signal processing conference, 2004 12th European, pp 741–744

Wang D (2016) K-nearest neighbors based methods for identification of different gear crack levels under different motor speeds and loads: revisited. Mech Syst Signal Process 70:201–208

Ren Y, Zhang L, Suganthan PN (2016) Ensemble classification and regression-recent developments, applications and future directions. IEEE Comput Intell Mag 11:41–53

Simon DL, Bird J, Davison C, Volponi A, Iverson RE (2008) Benchmarking gas path diagnostic methods: a public approach. In: ASME Turbo Expo 2008: power for land, sea, and air, pp 325–336

Prechelt L (1998) Automatic early stopping using cross validation: quantifying the criteria. Neural Netw 11:761–767

Ogaji SOT (2003) Advanced gas-path fault diagnostics for stationary gas turbines. Ph.D. thesis, Cranfield University, UK

Acknowledgements

The authors would like to thank Universiti Teknologi PETRONAS for supporting this work financially.

Author information

Authors and Affiliations

Corresponding author

Additional information

Technical Editor: André Cavalieri.

Rights and permissions

About this article

Cite this article

Amare, D.F., Aklilu, T.B. & Gilani, S.I. Gas path fault diagnostics using a hybrid intelligent method for industrial gas turbine engines. J Braz. Soc. Mech. Sci. Eng. 40, 578 (2018). https://doi.org/10.1007/s40430-018-1497-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40430-018-1497-6