Abstract

Regime-switching models are able to capture clustering effects, nonlinearities in time series and jumps in volatility. In the present paper, we propose a broad class of Markov-switching AutoRegressive Stochastic Volatility \((MSAR-SV)\) models, in which the \(\log -\)volatility follows a \(p\)th-order \(MS-\)autoregression. The class can therefore be seen as an alternative to the general \(MS-GARCH\) model. This parameterization has drawn considerable attention in modeling structural changes in dependent data. The parameters of the \(\log -\)volatility are expressed as functions of a homogeneous Markov chain with a finite state space. The primary goal of the proposed model is to let the dynamics be driven by a Markov chain so as to capture both the usual changing behavior of volatility due to economic forces and the discrete shifts in volatility due to abrupt abnormal events. Several probabilistic properties of \(MSAR-SV\) models are obtained, in particular the existence of a strictly (resp. second-order) stationary, causal and ergodic solution, geometric ergodicity, and the computation of higher-order moments. Moreover, we derive the expression of the covariance function of the squared (resp. powers) process, and we show that the logarithm of the squared (resp. powers) process admits an ARMA representation. We then study the limit theory of the quasi-maximum likelihood estimator (QMLE) and establish its strong consistency. Finally, we present a simulation study on the performance of the proposed estimation method.


1 Introduction

Markov-switching models (MSM) have attracted a lot of research attention and have become a robust tool for modeling and describing asymmetric business cycles in the econometric literature (see Hamilton [14]). Such models continue to gain popularity, especially for financial data, because of their flexibility in capturing stability and/or asymmetric effects of volatility shocks and their ability to model time series. Linear and nonlinear MSM have been developed by many authors (for example, \(MS-ARMA\): Cavicchioli [3,4,5], nonlinear \( MS-ARMA\): Stelzer [17], \(MS-GARCH\): Haas et al. [13] and Cavicchioli [6], \(MS-BL\): Ghezal et al. [1] and [12], among others). Various authors have pointed out that allowing for occasional switching in the parameter values may provide a more appropriate model of volatility. In this paper, we instead present an \(MS-\)AutoRegressive Stochastic Volatility \((MSAR-SV)\) process, which follows locally (i.e., within each regime) an autoregressive stochastic volatility \((AR-SV)\) representation. In this model the \(\log -\)volatility process follows a \(p\)th-order \(MS-\)autoregression whose coefficients depend on a Markov chain. This model, in which the volatility is driven by an exogenous innovation, has been considered in the literature as the best alternative to \( MS-ARCH-\)type models. The model presented in this paper is a natural extension of the \( MS-SV\) model of So et al. [16], so that the observed process can be described by heavy-tailed innovations (see also Casarin [2] for a more qualitative discussion).
The main reasons for choosing an \(MSAR-SV\) model are that it significantly improves the predictive power of the \(AR-SV\) model, performs well in capturing the major events affecting the oil market, and simultaneously captures the usual changing behavior of volatility due to economic forces as well as the sudden discrete shifts in volatility due to abnormal events (see So et al. [16] for a more qualitative discussion). To evaluate the \(MSAR-SV\) model, its performance, in terms of goodness-of-fit and forecasting power, is compared to that of the standard \(AR-SV\) model. First, the probabilistic properties of the \( MSAR-SV\) model are investigated. To this end, we give sufficient and necessary conditions for the existence of a stationary solution, observing that the \(MSAR-SV\) coefficients in some regimes of the Markov chain may violate the usual stationarity conditions of standard \(AR-SV\) models. Second, this paper analyses the strong consistency of the QMLE of \(MSAR-SV\) models. Before proceeding, we introduce some notation.

1.1 Symbols

Throughout the paper, the following symbols are used.

-

\(I_{\left( .\right) }\) is the identity matrix of the indicated order, \( O_{\left( p,m\right) }\) is the \(p\times m\) matrix whose entries are all zero, and \({\underline{H}}^{\prime }:=\left( I_{\left( 1\right) },{\underline{O}} _{\left( p-1\right) }^{\prime }\right) .\)

-

\(\log {\underline{V}},\) \(\exp {\underline{V}}\) and \({\underline{V}}^{\frac{1 }{2}}\) denote the vectors formed by the logarithm, exponential and square root of the entries of the vector \({\underline{V}}\), respectively, \(diag\left( {\underline{V}}\right) \) denotes the diagonal matrix created by the entries of \({\underline{V}}\). \(\rho (A)\) is the spectral radius of a square matrix A.

-

\(\left\| .\right\| \) is any norm on \(m\times n\) matrices (resp. \(m\times 1\) vectors). \(\otimes \) is the Kronecker product, and \( A\left( 1\right) =a_{11}\) denotes the \((1,1)\) entry of \(A=(a_{ij})\).

-

\(\left( s_{t},t\in \mathbb {Z}\right) \) is a stationary, irreducible and aperiodic Markov chain.

-

\(\mathbb {P}^{n}=\left( p_{ij}^{(n)},(i,j)\in \mathbb {S\times S}\right) \) is the \(n-\)step transition probability matrix, where \(p_{ij}^{(n)}=P\left( s_{t}=j|s_{t-n}=i\right) \) with one-step transition probability matrix \( \mathbb {P}:=\left( p_{ij}\text {, }(i,j)\in \mathbb {S\times S}\right) \) where \(p_{ij}:=p_{ij}^{(1)}=P\left( s_{t}=j|s_{t-1}=i\right) \) for \(i,j\in \mathbb { S=}\left\{ 1,...,d\right\} .\)

-

\({\underline{\Pi }}^{\prime }=(\pi (1),...,\pi (d))\) is the initial stationary distribution, where \(\pi (i)=P\left( s_{0}=i\right) \), \( i=1,...,d, \) such that \({\underline{\Pi }}^{\prime }={\underline{\Pi }}^{\prime } \mathbb {P}\).

-

For any set of non-random matrices \(A:=\left\{ A(i),i\in \mathbb {S} \right\} \), we write

$$\begin{aligned} \mathbb {P}^{\left( n\right) }(A)=\left( \begin{array}{ccc} p_{11}^{\left( n\right) }A(1) &{} \ldots &{} p_{d1}^{\left( n\right) }A(1) \\ \vdots &{} \ldots &{} \vdots \\ p_{1d}^{\left( n\right) }A(d) &{} \ldots &{} p_{dd}^{\left( n\right) }A(d) \end{array} \right) ,\text { }{\underline{\Pi }}(A)=\left( \begin{array}{c} \pi (1)A(1) \\ \vdots \\ \pi (d)A(d) \end{array} \right) \text {,} \end{aligned}$$with \(\mathbb {P}^{\left( 1\right) }(A)=\mathbb {P}(A).\)
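The notation above can be made concrete numerically. The following is a minimal sketch, assuming NumPy and a hypothetical two-state chain; the function names `stationary_distribution`, `block_P` and `block_Pi` and all numerical values are illustrative assumptions, not part of the paper. It computes \({\underline{\Pi }}\) from \({\underline{\Pi }}^{\prime }={\underline{\Pi }}^{\prime }\mathbb {P}\) and assembles \(\mathbb {P}(A)\) and \({\underline{\Pi }}(A)\) block by block, with block \((i,j)\) of \(\mathbb {P}(A)\) equal to \(p_{ji}A(i)\) as in the display.

```python
import numpy as np

def stationary_distribution(P):
    """Solve Pi' = Pi' P together with sum(Pi) = 1 (irreducible chain assumed)."""
    d = P.shape[0]
    # Stack (P' - I) Pi = 0 with the normalisation 1' Pi = 1.
    A = np.vstack([P.T - np.eye(d), np.ones((1, d))])
    b = np.concatenate([np.zeros(d), [1.0]])
    pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return pi

def block_P(P, mats):
    """P(A): block (i, j) equals p_{ji} A(i), following the display above."""
    d = P.shape[0]
    return np.block([[P[j, i] * mats[i] for j in range(d)] for i in range(d)])

def block_Pi(pi, mats):
    """Pi(A): the blocks pi(i) A(i) stacked vertically."""
    return np.vstack([pi[i] * mats[i] for i in range(len(pi))])

# Hypothetical two-state example with 1 x 1 blocks.
P = np.array([[0.9, 0.1], [0.3, 0.7]])
pi = stationary_distribution(P)
mats = [np.array([[0.5]]), np.array([[1.2]])]
PA = block_P(P, mats)
PiA = block_Pi(pi, mats)
```

With scalar blocks, \(\mathbb {P}(A)\) reduces to the diagonal matrix of the \(A(i)\) multiplied by the transpose of \(\mathbb {P}\).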

The rest of the paper is organized as follows. In Sect. 2, we introduce the \(MSAR-SV\) model and present several of its probabilistic properties, specifically the existence of strictly and second (resp. higher)-order stationary solutions. Then the autocovariance functions of the squared and powers processes are derived. As a result, we find that the logarithm of the squared (resp. powers) process admits an ARMA representation. We also provide sufficient assumptions for the \(MSAR-SV\) model to be geometrically ergodic and \(\beta -\)mixing. In Sect. 3, we propose the QMLE for this model and establish its strong consistency. Simulation results are reported in Sect. 4.

2 \(MSAR-SV\) models

The Markov-switching autoregressive stochastic volatility model (denoted by \(MSAR-SV\left( p\right) \)) is given by

In Eq. (2.1), \(\left\{ \left( e_{t},\eta _{t}\right) ,t\in \mathbb {Z} \right\} \) is an independent and identically distributed (i.i.d.) sequence of random vectors with mean \({\underline{O}}_{\left( 2\right) }^{\prime }\) and covariance matrix \(I_{\left( 2\right) }\). The functions \( a_{i}\left( .\right) ,\) \(i=0,...,p\) and \(b_{0}\left( .\right) \) depend on the unobserved Markov chain \(\left( s_{t},t\in \mathbb {Z} \right) \). We also suppose that \(\left( e_{t},\eta _{t}\right) \) and \( \left\{ \left( \epsilon _{u-1},s_{t}\right) ,u\le t\right\} \) are independent. It is worth noting that \(h_{t}\) is conventionally called the volatility, although it is not the conditional variance of \(\epsilon _{t}\) given its past information up to time \(t-1\) (indeed, \(E\left\{ \epsilon _{t}^{2}\left| \sigma -\left\{ \left( \epsilon _{u},s_{u}\right) ,u<t\right\} \right. \right\} =E\left\{ h_{t}\left| \sigma -\left\{ \left( \epsilon _{u},s_{u}\right) ,u<t\right\} \right. \right\} \ne h_{t}\)). The aim of this section is to establish the main probabilistic properties of the \(MSAR-SV\) model. As is common in time-series analysis, it is helpful to write Eq. (2.1) in an equivalent state-space representation to simplify the study. Accordingly, we can write Eq. (2.1) in the MS multivariate stochastic volatility form

where

and

So, the process \(\left( \left( \log {\underline{H}}_{t}^{\prime },s_{t}\right) ^{\prime },t\in \mathbb {Z} \right) \) is a Markov chain on \(\mathbb {R}^{p}\times \mathbb {S}\). The probabilistic properties of model (2.1) are most easily studied through model (2.2). The second equation of Eq. (2.2) is the same as that defining the \(D-MSAR\) model recently studied by Ghezal [11]. We first state the following important result on strict stationarity.
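The displays (2.1) and (2.2) are not reproduced in this text. For orientation, one form that is consistent with the series solution (2.3), with the notation \({\underline{H}}\) and \(A(1)\) of Sect. 1.1 and with the triple \(\left\{ a_{0}\left( s_{t}\right) {\underline{H}},b_{0}\left( s_{t}\right) {\underline{H}},\Gamma \left( s_{t}\right) \right\} \) appearing in Theorem 2.1 is sketched here. It is a reconstruction under these assumptions, not a quotation of the original displays.

$$\begin{aligned} \epsilon _{t}=e_{t}h_{t}^{\frac{1}{2}},\qquad \log h_{t}=a_{0}\left( s_{t}\right) +\sum \limits _{i=1}^{p}a_{i}\left( s_{t}\right) \log h_{t-i}+b_{0}\left( s_{t}\right) \eta _{t}, \end{aligned}$$

$$\begin{aligned} \epsilon _{t}=e_{t}\exp \left\{ \frac{1}{2}{\underline{H}}^{\prime }\log {\underline{H}}_{t}\right\} ,\qquad \log {\underline{H}}_{t}=\Gamma \left( s_{t}\right) \log {\underline{H}}_{t-1}+\left( a_{0}\left( s_{t}\right) +b_{0}\left( s_{t}\right) \eta _{t}\right) {\underline{H}}, \end{aligned}$$

where, under this reading, \({\underline{H}}_{t}^{\prime }:=\left( h_{t},...,h_{t-p+1}\right) \) and \(\Gamma \left( s_{t}\right) \) is the \(p\times p\) companion matrix whose first row is \(\left( a_{1}\left( s_{t}\right) ,...,a_{p}\left( s_{t}\right) \right) \). Iterating the second recursion and taking the first component gives exactly the exponent in (2.3).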

Theorem 2.1

Consider the MS-multivariate stochastic volatility model (2.2). Then

-

1.

A sufficient condition for (2.2) to have a unique, strictly stationary, causal and ergodic solution, given by

$$\begin{aligned} \epsilon _{t}=e_{t}\exp \left\{ \frac{1}{2}\sum \limits _{k=0}^{\infty }\left\{ \prod \limits _{j=0}^{k-1}\Gamma \left( s_{t-j}\right) \right\} \left( 1\right) \left( a_{0}\left( s_{t-k}\right) +b_{0}\left( s_{t-k}\right) \eta _{t-k}\right) \right\} \end{aligned}\tag{2.3}$$

which converges absolutely almost surely (a.s.) for all \(t\in \mathbb {Z}\), is

$$\begin{aligned} \gamma _{L}\left( \Gamma \right) :=\lim \limits _{t\rightarrow \infty }E\left\{ \frac{1}{t}\log \left\| \prod \limits _{j=0}^{t-1}\Gamma \left( s_{t-j}\right) \right\| \right\} \overset{a.s}{=}\lim \limits _{t \rightarrow \infty }\left\{ \frac{1}{t}\log \left\| \prod \limits _{j=0}^{t-1}\Gamma \left( s_{t-j}\right) \right\| \right\} <0. \end{aligned}$$

-

2.

Conversely, assume that \(\left\{ a_{0}\left( s_{t}\right) {\underline{H}},b_{0}\left( s_{t}\right) {\underline{H}},\Gamma \left( s_{t}\right) \right\} \) is controllable (see Notes) and (2.2) has a strictly stationary solution. Then \(\gamma _{L}\left( \Gamma \right) <0\).

Remark 2.1

Using Jensen’s inequality, condition \(E\left\{ \left\| \prod \limits _{j=0}^{t-1}\Gamma \left( s_{t-j}\right) \right\| \right\} <1\) constitutes a sufficient condition for \(\gamma _{L}\left( \Gamma \right) <0\).

Example 2.1

Consider the \(MSAR-SV\left( 1\right) \) model. The sufficient condition is \( \gamma _{L}\left( \Gamma \right) =\) \(\sum \limits _{k=1}^{d}\pi (k)\log |a_{1}(k)|<0\). In this case there exists a unique strictly stationary, causal and ergodic solution

Therefore, local strict stationarity is not required: the presence of explosive regimes (i.e., \(\log |a_{1}(k)|\) \(>0\)) does not exclude global strict stationarity. In the special case of \(MSAR-SV\left( 1\right) \) with two regimes, we get

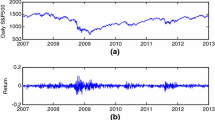

The region of strict stationarity is illustrated in Figure 1.
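The condition of Example 2.1 is easy to evaluate numerically. The sketch below, assuming NumPy and hypothetical parameter values (the transition matrix and the coefficients \(a_{1}(k)\) are illustrative choices, and the function name `lyapunov_msar_sv1` is not from the paper), computes \(\gamma _{L}\left( \Gamma \right) =\sum _{k}\pi (k)\log |a_{1}(k)|\) for a two-regime chain in which the second regime is explosive.

```python
import numpy as np

def lyapunov_msar_sv1(P, a1):
    """gamma_L = sum_k pi(k) log|a_1(k)| for the MSAR-SV(1) model."""
    d = P.shape[0]
    # Stationary distribution: solve Pi' = Pi' P with sum(Pi) = 1.
    A = np.vstack([P.T - np.eye(d), np.ones((1, d))])
    b = np.concatenate([np.zeros(d), [1.0]])
    pi, *_ = np.linalg.lstsq(A, b, rcond=None)
    return float(pi @ np.log(np.abs(a1)))

# Hypothetical values: regime 2 has |a_1(2)| > 1, i.e. it is locally explosive.
P = np.array([[0.9, 0.1], [0.3, 0.7]])
a1 = np.array([0.5, 1.5])
gamma = lyapunov_msar_sv1(P, a1)
print(gamma < 0)  # the global condition can hold despite the explosive regime
```

Here the chain spends three quarters of its time in the contracting regime, which is enough to make the weighted average of \(\log |a_{1}(k)|\) negative.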

Other properties such as second-order stationarity and the existence of moments are clear and easy to obtain.

Theorem 2.2

Consider the MS-univariate stochastic volatility model (2.1) with MS-multivariate stochastic volatility representation (2.2), and let \(\Gamma ^{(2)}:=\left\{ \Gamma ^{\otimes 2}(k),k\in \mathbb {S}\right\} \). If

$$\begin{aligned} \rho \left( \mathbb {P}\left( \Gamma ^{(2)}\right) \right) <1, \end{aligned}\tag{2.4}$$

then Eq. (2.2) has a unique second-order stationary solution given by the series (2.3), which converges absolutely a.s. and in \(\mathbb {L}_{2}\). Furthermore, this solution is strictly stationary and ergodic.

Proof

The outcome follows from second-order stationarity of the vector autoregression \(\left( \log {\underline{H}}_{t},t\in \mathbb {Z} \right) \) given by (2.2), which can be easily obtained by using the results of Ghezal et al. [1]. \(\square \)

The explicit expressions of the moments up to second order are given in the following result.

Proposition 2.1

Consider the MS-univariate stochastic volatility model (2.1), if \(\epsilon _{t}\in {\mathbb {L}}_{2}\), then

-

1.

\(E\left\{ \epsilon _{t}\right\} =0.\)

-

2.

\(\gamma _{\epsilon }\left( h\right) =E\left\{ \epsilon _{t}\epsilon _{t-h}\right\} \), where

$$\begin{aligned} \gamma _{\epsilon }\left( h\right) =\left\{ \begin{array}{ll} \sum \limits _{x_{t},x_{t-1},...\in \mathbb {S}}\prod \limits _{k\ge 0}p_{x_{t-k-1}x_{t-k}}E\left\{ \exp \left\{ \left\{ \prod \limits _{j=0}^{k-1}\Gamma \left( x_{t-j}\right) \right\} \left( 1\right) \left( a_{0}\left( x_{t-k}\right) +b_{0}\left( x_{t-k}\right) \eta _{0}\right) \right\} \right\} &{} \text {if }h=0, \\ 0 &{} \text {otherwise.} \end{array} \right. \end{aligned}$$

In the special case of normal innovations \(\left( \eta _{t},t\in \mathbb {Z} \right) ,\) we obtain

$$\begin{aligned}{} & {} \gamma _{\epsilon }\left( 0\right) =\sum \limits _{x_{t},x_{t-1},...\in \mathbb {S}}\prod \limits _{k\ge 0}p_{x_{t-k-1}x_{t-k}}\\{} & {} \quad \exp \left\{ \left\{ \prod \limits _{j=0}^{k-1}\Gamma \left( x_{t-j}\right) \right\} \left( 1\right) a_{0}\left( x_{t-k}\right) +\frac{1}{2}\left( \left\{ \prod \limits _{j=0}^{k-1}\Gamma \left( x_{t-j}\right) \right\} \left( 1\right) \right) ^{2}b_{0}^{2}\left( x_{t-k}\right) \right\} . \end{aligned}$$

Proof

Under the last condition, we can easily obtain the second-order moments and so the details are omitted. \(\square \)
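The step from the general expression to the Gaussian case in Proposition 2.1 rests on the moment generating function of a standard normal variable. As a worked reminder, with \(c\), \(a\) and \(b\) used here only as illustrative shorthand, for \(\eta \sim N(0,1)\) and constants \(a,b,c\),

$$\begin{aligned} E\left\{ \exp \left\{ c\left( a+b\eta \right) \right\} \right\} =\exp \left\{ ca\right\} E\left\{ \exp \left\{ cb\eta \right\} \right\} =\exp \left\{ ca+\frac{1}{2}c^{2}b^{2}\right\} . \end{aligned}$$

Taking \(c=\left\{ \prod \limits _{j=0}^{k-1}\Gamma \left( x_{t-j}\right) \right\} \left( 1\right) \), \(a=a_{0}\left( x_{t-k}\right) \) and \(b=b_{0}\left( x_{t-k}\right) \) gives the exponent appearing in the Gaussian expression for \(\gamma _{\epsilon }\left( 0\right) \).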

Example 2.2

Consider the \(MSAR-SV\left( 1\right) \) model. The condition (2.4) is reduced to \(\rho \left( \mathbb {P}({\underline{a}} _{1}^{(2)})\right) <1\) where \({\underline{a}}_{1}^{(2)}:=\left( a_{1}^{2}(k),k\in \mathbb {S}\right) ^{\prime }\). In particular, for two regimes with \(p_{11}=1-q=p_{22},\) \(p_{12}=q=p_{21},\) condition (2.4) is equivalent to the following two conditions

The region of second-order stationarity is shown in Figure 2 below.
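The spectral-radius condition of Example 2.2 can be checked numerically. The following is a minimal sketch, assuming NumPy and the symmetric two-regime chain of the example; the value of \(q\), the coefficients and the function name `second_order_radius` are hypothetical. For \(p=1\) the blocks are scalars, so \(\mathbb {P}({\underline{a}}_{1}^{(2)})\) has entry \((i,j)\) equal to \(p_{ji}a_{1}^{2}(i)\).

```python
import numpy as np

def second_order_radius(P, a1):
    """Spectral radius of P(a_1^(2)) for the MSAR-SV(1) model."""
    # Entry (i, j) is p_{ji} a_1(i)^2, i.e. diag(a_1^2) times the transpose of P.
    M = np.diag(np.asarray(a1, dtype=float) ** 2) @ P.T
    return float(np.max(np.abs(np.linalg.eigvals(M))))

# Symmetric chain: p_11 = p_22 = 1 - q, p_12 = p_21 = q (q is a hypothetical value).
q = 0.2
P = np.array([[1 - q, q], [q, 1 - q]])

rho_ok = second_order_radius(P, [0.5, 1.05])   # mildly explosive second regime
rho_bad = second_order_radius(P, [0.5, 1.5])   # strongly explosive second regime
print(rho_ok < 1, rho_bad < 1)
```

In this illustration a mildly explosive regime is compatible with the condition, while a strongly explosive one is not, even though the same chain drives both cases.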

We now turn to assumptions guaranteeing the existence of higher-order moments for the univariate \(MSAR-SV(p)\) model with multivariate \( MSAR-SV(1)\) representation (2.2).

Remark 2.2

The odd-order moments of \(\left( \epsilon _{t},t\in \mathbb {Z} \right) \) are null when they exist, while the existence of even-order moments of \(\left( \epsilon _{t},t\in \mathbb {Z} \right) \) is summarized in the following theorem

Theorem 2.3

Consider the MS-univariate stochastic volatility model (2.1) with MS-multivariate stochastic volatility model (2.2). For any integer \(m>0\), assume that \( E\left\{ e_{t}^{m}\right\} <+\infty ,\) \(E\left\{ \eta _{t}^{m}\right\} <+\infty \) and

where \(\Gamma ^{(m)}:=\left\{ \Gamma ^{\otimes m}\left( k\right) ,k\in \mathbb {S}\right\} \). Then the \(MSAR-SV\) model defined by the state-space representation (2.2) has a unique, causal, ergodic and strictly stationary solution given by (2.3), with moments up to order \(m\). Moreover, the closed form of the \(m\)th moment of \(\epsilon _{t}\) is given by

Proof

The proof follows the same lines as that of the previous theorem, whose arguments extend directly; the details are omitted. \(\square \)

Remark 2.3

Applying the normality of \(\left( \eta _{t},t\in \mathbb {Z} \right) \ \)yields

The autocovariance function of the squared process \(\left( \epsilon _{t}^{2},t\in \mathbb {Z} \right) \) is summarized in the following theorem

Theorem 2.4

Under the assumptions of the last theorem, we have

-

1.

If \((\epsilon _{t},t\in \mathbb {Z})\) follows the MS-univariate stochastic volatility model (2.1) and \(\epsilon _{t}\in \mathbb {L} _{4}\), then

$$\begin{aligned}{} & {} \gamma _{\epsilon ^{2}}\left( 0\right) =E\left\{ e_{t}^{4}\right\} \sum \limits _{x_{t},x_{t-1},...\in \mathbb {S}}\prod \limits _{k\ge 0}p_{x_{t-k-1}x_{t-k}}\\{} & {} \quad E\left\{ \exp \left\{ 2\left\{ \prod \limits _{j=0}^{k-1}\Gamma \left( x_{t-j}\right) \right\} \left( 1\right) \left( a_{0}\left( x_{t-k}\right) +b_{0}\left( x_{t-k}\right) \eta _{0}\right) \right\} \right\} -\gamma _{\epsilon }^{2}\left( 0\right) \text {, } \end{aligned}$$and \(\gamma _{\epsilon ^{2}}\left( h\right) =0\) otherwise.

-

2.

If \((\epsilon _{t},t\in \mathbb {Z})\) follows the MS-univariate stochastic volatility model (2.1) and \(\epsilon _{t}\in \mathbb {L} _{2\,m}\), then

$$\begin{aligned}{} & {} \gamma _{\epsilon ^{m}}\left( 0\right) =E\left\{ e_{t}^{2m}\right\} \sum \limits _{x_{t},x_{t-1},...\in \mathbb {S}}\prod \limits _{k\ge 0}p_{x_{t-k-1}x_{t-k}}\\{} & {} \quad E\left\{ \exp \left\{ m\left\{ \prod \limits _{j=0}^{k-1}\Gamma \left( x_{t-j}\right) \right\} \left( 1\right) \left( a_{0}\left( x_{t-k}\right) +b_{0}\left( x_{t-k}\right) \eta _{0}\right) \right\} \right\} -\left( E\left\{ \epsilon _{t}^{m}\right\} \right) ^{2}, \end{aligned}$$and \(\gamma _{\epsilon ^{m}}\left( h\right) =0\) otherwise.

Proof

It is enough to remark that

the processes \((\epsilon _{t}^{2})\) and \((\epsilon _{t}^{2\,m})\) are both white noise processes. \(\square \)

Remark 2.4

It is clear that the process \((\epsilon _{t}^{2})\) and its powers do not admit an ARMA representation. However, the logarithm process \((\log \epsilon _{t}^{2})\) has an ARMA autocovariance structure.

From the previous remark, we can obtain the following representation

with \(\omega _{t}:=\log e_{t}^{2}-E\left\{ \log e_{t}^{2}\right\} \) and \( \widetilde{a}_{0}\left( .\right) \) is an intercept, easily obtained. So Eq. (2.6) can be written in the following vectorial representation,

where \({\underline{M}}_{t}^{\prime }:=\left( \log \epsilon _{t}^{2},...,\log \epsilon _{t-p+1}^{2},\omega _{t},...,\omega _{t-p+1}\right) \) and \( {\underline{V}}_{t}\left( s_{t}\right) :={\underline{v}}_{0}+{\underline{v}} _{1}\omega _{t}+{\underline{v}}_{2}\left( s_{t}\right) \eta _{t},\) where \( {\underline{v}}_{0},\) \({\underline{v}}_{1},\) \({\underline{v}}_{2}\left( s_{t}\right) ,\) \(A\left( s_{t}\right) \) are appropriate vectors and a matrix, easily obtained and uniquely determined by \(\left\{ a_{i}\left( s_{t}\right) ,b_{0}\left( s_{t}\right) ,0\le i\le p\right\} .\) A feature of the vectorial representation (2.7) is that, when \(s_{t}=k\), the vector \({\underline{M}}_{t}\) is independent of \({\underline{V}}_{u}\left( k\right) \) for \(u>t.\) For convenience, we work with the centered version of the vector \({\underline{M}}_{t},\)

where \(\widetilde{{\underline{M}}}_{t}={\underline{M}}_{t}-E\left\{ {\underline{M}} _{t}\right\} \) and \(\widetilde{{\underline{V}}}_{t}\left( s_{t}\right) \) is the centered residual vector such that \(s_{t}=k\), \(\widetilde{{\underline{V}}} _{t}\left( k\right) \perp \widetilde{{\underline{M}}}_{u}\) for \(t>u.\)
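The centering constant in \(\omega _{t}=\log e_{t}^{2}-E\left\{ \log e_{t}^{2}\right\} \) is explicit when \(e_{t}\) is standard Gaussian, which is the case assumed later for estimation. The sketch below, assuming NumPy and a Gaussian \(e_{t}\) (an assumption of this illustration, since Sect. 2 only requires zero mean and unit variance), compares the closed-form mean and variance of \(\log e_{t}^{2}\) with Monte Carlo estimates.

```python
import numpy as np

# For e ~ N(0, 1), log e^2 is the log of a chi-square(1) variable:
# mean = psi(1/2) + log 2 = -euler_gamma - log 2, variance = pi^2 / 2.
mean_log_e2 = -np.euler_gamma - np.log(2.0)   # about -1.2704
var_log_e2 = np.pi ** 2 / 2.0                  # about 4.9348

rng = np.random.default_rng(0)
e = rng.standard_normal(400_000)
log_e2 = np.log(e ** 2)

# Centred noise omega_t used in the linearised representation.
omega = log_e2 - mean_log_e2
print(mean_log_e2, log_e2.mean())
print(var_log_e2, log_e2.var())
```

The noise \(\omega _{t}\) is centered but far from Gaussian, which is one reason the resulting likelihood is only a quasi-likelihood.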

Proposition 2.2

Consider the \(MSAR-SV\left( p\right) \) process (2.1) with vectorial representation (2.8). Then under the assumptions of Theorem 2.2, we get

where \(\underline{\widetilde{V}}^{\left( 2\right) }:=\left( \underline{ \widetilde{V}}_{k}^{\left( 2\right) }=E\left\{ \widetilde{{\underline{V}}} _{t}^{\otimes 2}\left( k\right) \right\} ,k\in \mathbb {S}\right) ,\) \( A^{\left( n\right) }:=\left( A^{\left( n\right) }(k)=A^{\otimes n}\left( k\right) ,k\in \mathbb {S}\right) ;\) \(n=1,2,{\underline{1}}=\left( 1,...,1\right) ^{\prime }\in \mathbb {R}^{d},{\underline{H}}_{0}^{\prime }:=\left( I_{\left( 1\right) },{\underline{O}}_{\left( 2p-1\right) }^{\prime }\right) .\)

Proof

Starting from (2.8), for \(h=0\) we get

and when \(h>0\),

Let \({\underline{\Lambda }}\left( h\right) =\left( E\left\{ \widetilde{ {\underline{M}}}_{t}\otimes \widetilde{{\underline{M}}}_{t-h}|s_{t}=k\right\} ,k\in \mathbb {S}\right) \). Then

\(\square \)

The following result gives an ARMA representation for the \( MSAR-SV\left( p\right) \) model.

Proposition 2.3

Under the conditions of Theorem 2.2, the \( MSAR-SV\left( p\right) \) process with vectorial representation (2.8) is an ARMA process.

Proof

To prove Proposition 2.3, we use the same technique as Ghezal et al. [1]. \(\square \)

It is also of interest to show that higher powers of the \( \log -\)squared observed process admit an ARMA representation. Let the vector \({\underline{Z}}_{t}^{\prime }:=\left( \log \epsilon _{t}^{2},...,\log \epsilon _{t-p+1}^{2},\omega _{t},...,\omega _{t-p+1}\right) \). Then the next result can be shown by a straightforward modification of the argument of Ghezal et al. [1].

Proposition 2.4

Consider the model (2.1), and suppose that \(\log e_{t}^{2}\), \(\eta _{t}\in \mathbb {L}_{2m}\) for any positive integer m. Then \(\left( {\underline{Z}}_{t}^{\otimes m}\right) \) is a solution of an \(ARMA(n_{1},n_{2})\) equation of the form

where \(\left( {\underline{v}}_{t}\right) \) is a white noise and \(\left( \Psi _{i}\right) ,\) \(\left( \Lambda _{j}\right) \) are sequences of \(\left( 2p\right) ^{m}\) \(\times \left( 2p\right) ^{m}\) matrices.

We close this section with a result on geometric ergodicity and \(\beta -\)mixing.

Theorem 2.5

Consider the model (2.1). Under the condition (2.4), \(\left( \left( \log {\underline{H}}_{t}\right) ^{\prime },s_{t}\right) ^{\prime }\) is a geometrically ergodic Markov chain. If it is initialized from the invariant measure, then \((\epsilon _{t})\) and \( \left( \log h_{t}\right) \) are strictly stationary and \(\beta -\)mixing with exponential rate.

Proof

The result follows from the geometric ergodicity of the process \((\log {\underline{H}}_{t}) \), which can be easily established using Ghezal et al. [1]. \(\square \)

3 QML estimation

The estimation of Markov-switching models is rather complex, so only some specific models have been considered in the literature (see, for example, Francq and Zakoian [9] and Ghezal [11] for further discussion). Markov Chain Monte Carlo (MCMC) procedures have already been established in the literature for estimating a few particular cases of Eq. (2.1); see [16, 18] among others. We now consider a given realization \(\left( \epsilon _{1},\epsilon _{2},...,\epsilon _{n}\right) \) generated from the unique, causal and strictly stationary \(MSAR-SV\) model, and suppose that p and d are known and that \( \left( e_{t}\right) \) is standard Gaussian. The unknown parameters \(a_{i}(.),b_{0}(.),\) \(i=0,...,p\) and \(\left( p_{i,j},i,j=1,...,s,i\ne j\right) \) are collected in a vector \({\underline{\theta }}\) belonging to the parameter space \(\Theta \), while \({\underline{\theta }}_{0}\) denotes the true value. Xie [19] advocated the QMLE and established its strong consistency for \(MS-GARCH_{s}\left( p,q\right) \) models, and Ghezal [11] gives assumptions under which the QMLE for the doubly \(MS-AR\) model is strongly consistent. The Gaussian likelihood function is given by

where

with the \(\log -\)transformed conditional stochastic variance process \( \log h_{s_{i}}(\epsilon _{1},...,\epsilon _{i-1})\) defined by the second equation in (2.1). This likelihood function can also be written in the following form

A QMLE of \({\underline{\theta }}_{0}\) is defined as any measurable solution \( \underline{\widehat{\theta }}_{n}\) of

In this section, let \(f_{s_{t}}\left( \left. \epsilon _{t}\right| \underleftarrow{\epsilon }_{t-1}\right) \) (resp. \(f_{s_{t}}\left( \left. \epsilon _{t}\right| {\underline{\epsilon }}_{1}\right) \)) be the density of \(\epsilon _{t}\) given all past observations (resp. the past observations back to \(\epsilon _{1}\)) and let \(g_{{\underline{\theta }}}\left( \left. \epsilon _{t}\right| \underleftarrow{\epsilon }_{t-1}\right) \) (resp. \( g_{{\underline{\theta }}}\left( \left. \epsilon _{t}\right| \underline{ \epsilon }_{1}\right) \)) be the corresponding logarithm of the conditional density of \(\epsilon _{t}\) given \(\left\{ \epsilon _{t-1},\epsilon _{t-2},...\right\} \) (resp. \(\left\{ \epsilon _{t-1},\epsilon _{t-2},...\epsilon _{1}\right\} \)). We now introduce the likelihood function \(\widetilde{L}_{n}\left( {\underline{\theta }}\right) \) based on all past observations, which is defined as \(L_{n}\left( {\underline{\theta }} \right) \) in (3.1) with the density \( f_{s_{t}}(\epsilon _{1},...\epsilon _{t})\) replaced by \(f_{s_{t}}\left( \left. \epsilon _{t}\right| \underleftarrow{\epsilon }_{t-1}\right) \). Furthermore, we can write \(\widetilde{L}_{n}\left( {\underline{\theta }} \right) \) as

where the matrix \(\mathbb {P}_{{\underline{\theta }}}\left( f\left( \left. \epsilon _{i}\right| \underleftarrow{\epsilon }_{i-1}\right) \right) \) (resp. the vector \({\underline{\Pi }}(f\left( \left. \epsilon _{1}\right| \underleftarrow{\epsilon }_{0}\right) ))\) replaces \(f_{s_{i}}(\epsilon _{1},...\epsilon _{i})\) by \(f_{s_{i}}\left( \left. \epsilon _{i}\right| \underleftarrow{\epsilon }_{i-1}\right) \), \(i=1,..,n\) in \(\mathbb {P}_{ {\underline{\theta }}}\left( f(\epsilon _{1},...\epsilon _{i}\right) )\) (resp. \({\underline{\Pi }}(f\left( \epsilon _{1}\right) )).\)
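The likelihood displays are not reproduced in this text, so the following is only a sketch of how a likelihood written as a product of matrices \(\mathbb {P}_{{\underline{\theta }}}(f(\cdot ))\) applied to \({\underline{\Pi }}(f(\cdot ))\) could be evaluated. It assumes the form \({\underline{1}}^{\prime }\mathbb {P}(f_{n})\cdots \mathbb {P}(f_{2}){\underline{\Pi }}(f_{1})\) with scalar blocks, and it takes the regime-specific density values as a given array `f` (hypothetical name); how these densities are computed for the \(MSAR-SV\) model is outside the sketch. Each step is rescaled to avoid numerical underflow.

```python
import numpy as np

def loglik_matrix_product(P, pi, f):
    """log of 1' P(f_n) ... P(f_2) Pi(f_1), where f[t, k] is the density value in regime k."""
    v = pi * f[0]                 # Pi(f_1): entries pi(k) f_1(k)
    c = v.sum()
    loglik = np.log(c)
    v = v / c
    for t in range(1, f.shape[0]):
        # Multiply by P(f_t), whose entry (i, j) is p_{ji} f_t(i).
        v = f[t] * (P.T @ v)
        c = v.sum()
        loglik += np.log(c)       # accumulate the log of the scaling constants
        v = v / c
    return float(loglik)

# Hypothetical inputs: two regimes, three observations.
P = np.array([[0.9, 0.1], [0.3, 0.7]])
pi = np.array([0.75, 0.25])
f = np.array([[0.2, 0.5], [0.4, 0.1], [0.3, 0.6]])
ll = loglik_matrix_product(P, pi, f)
```

The recursion costs on the order of \(nd^{2}\) operations, whereas summing over all regime paths would cost on the order of \(d^{n}\).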

3.1 Strong consistency of the QMLE

To prove the strong consistency of the QMLE, we use the following assumptions

- A1.:

-

\(\Theta \) is a compact subset of \(\mathbb {R}^{s}\) and the true value \({\underline{\theta }}_{0}\) belongs to \(\Theta .\)

- A2.:

-

\(\gamma _{L}\left( \Gamma ^{0}\right) <0\) for any \(\underline{ \theta }\in \Theta \), where \(\Gamma ^{0}\) is the sequence \(\left( \Gamma \left( s_{t}\right) ,t\in \mathbb {Z}\right) \) when the parameters \( {\underline{\theta }}\) are replaced by \({\underline{\theta }}_{0}\).

- A3.:

-

For any \({\underline{\theta }},{\underline{\theta }}^{*}\in \Theta \), if almost surely \(g_{{\underline{\theta }}}\left( \left. \epsilon _{t}\right| \underleftarrow{\epsilon }_{t-1}\right) =g_{\underline{ \theta }^{*}}\left( \left. \epsilon _{t}\right| \underleftarrow{ \epsilon }_{t-1}\right) \) then \({\underline{\theta }}={\underline{\theta }} ^{*}.\)

Assumption A1 is a standard assumption and it is used in many results of real analysis. Assumption A2 ensures the strict stationarity of the process \(\left( \epsilon _{t},t\in \mathbb {Z}\right) .\) Assumption A3 ensures that the parameter \({\underline{\theta }}\) is identifiable. First, we present the following key lemmas.

Lemma 3.1

Under Assumptions A2 and A3, almost surely, we have

Proof

Using the logarithmic function, we have \(\log \widetilde{L}_{n}\left( {\underline{\theta }}\right) \) \(=\sum \limits _{t=1}^{n}g_{{\underline{\theta }} }\left( \left. \epsilon _{t}\right| \underleftarrow{\epsilon } _{t-1}\right) \) and \(\log L_{n}\left( {\underline{\theta }}\right) \) \( =\sum \limits _{t=1}^{n}g_{{\underline{\theta }}}\left( \left. \epsilon _{t}\right| {\underline{\epsilon }}_{1}\right) \). Then

Now, for all \(\varsigma \ge 0\), the process \(\left( N_{t}\left( m\right) \right) \) is defined as \(N_{t}\left( m\right) =\underset{\varsigma \ge m}{ \sup }\left| g_{{\underline{\theta }}}\left( \epsilon _{t}|\underline{ \epsilon }_{t-\varsigma }\right) -g_{{\underline{\theta }}}\right. \)\( \left. \left( \epsilon _{t}|\underleftarrow{\epsilon }_{t-1}\right) \right| \). Then for fixed m, the process \(\left( N_{t}\left( m\right) \right) \) is also strictly stationary and ergodic with \(E_{{\underline{\theta }}_{0}}\left\{ N_{t}\left( m\right) \right\} <+\infty \). We have

the result follows. \(\square \)

The next lemma compares the ratios \(\frac{L_{n}\left( \underline{ \theta }\right) }{L_{n}\left( {\underline{\theta }}_{0}\right) }\) and \(\frac{ \widetilde{L}_{n}\left( {\underline{\theta }}\right) }{\widetilde{L}_{n}\left( {\underline{\theta }}_{0}\right) }\). Let \(Z_{n}\left( {\underline{\theta }} \right) =\dfrac{1}{n}\log \left( \frac{L_{n}\left( {\underline{\theta }} \right) }{L_{n}\left( {\underline{\theta }}_{0}\right) }\right) \). Then, we have

Lemma 3.2

Under Assumptions A1–A3, we have

with \(\underset{n\longrightarrow \infty }{\lim }Z_{n}\left( \underline{ \theta }\right) =0\) iff \({\underline{\theta }}={\underline{\theta }}_{0}\) for all \({\underline{\theta }}\in \Theta .\)

Proof

Under assumptions A1–A3, the function \(Z_{n}\left( \underline{ \theta }\right) \) is well defined. Moreover by lemma 3.1 and Jensen’s inequality, we get

Under Assumption A3, \(Z_{n}\left( {\underline{\theta }}\right) \) converges to the Kullback-Leibler information, which equals zero iff \(\underline{ \theta }={\underline{\theta }}_{0}\). \(\square \)

Lemma 3.3

Under A1–A3, for all \({\underline{\theta }} ^{*}\ne {\underline{\theta }}_{0},\) there exists a neighborhood \(\mathcal { V}\left( {\underline{\theta }}^{*}\right) \) of \({\underline{\theta }}^{*} \) such that

Proof

From Eq. (3.4), we obtain

So we obtain

Let \({\mathcal {V}}_{m}\left( {\underline{\theta }}^{*}\right) =\left\{ {\underline{\theta }}:\left\| {\underline{\theta }}-\underline{ \theta }^{*}\right\| \le \frac{1}{m}\right\} \) and \(\Sigma _{2:n}^{m}=\underset{{\underline{\theta }}\in {\mathcal {V}}_{m}\left( \underline{ \theta }^{*}\right) }{\sup }\left\| \prod \limits _{t=2}^{n}\mathbb {P} _{{\underline{\theta }}}\left( {\underline{f}}\left( \left. \epsilon _{t}\right| \underleftarrow{\epsilon }_{t-1}\right) \right) \right\| . \) Because the norm is multiplicative, we obtain on \({\mathcal {V}}_{m}\left( {\underline{\theta }}^{*}\right) \)

that implies

Now \(\left( \log \Sigma _{2:n}^{m}\right) \) is a strictly stationary and ergodic process with \(E_{{\underline{\theta }}_{0}}\left\{ \log \Sigma _{2:n}^{m}\right\} \) is finite. Then we get

where \(\xi \left( {\underline{\theta }}\right) \) is the Lyapunov exponent of the sequence \(\left( \mathbb {P}_{{\underline{\theta }}_{0}}\left( f\left( \left. \epsilon _{t}\right| \underleftarrow{\epsilon }_{t-1}\right) \right) ,t\in \mathbb {Z}\right) \), i.e.,

Hence, using Lemma 3.2, there exist \(\varepsilon >0\) and \( n_{\varepsilon }\in \mathbb {N}\) such that \(\dfrac{1}{n_{\varepsilon }}E_{ {\underline{\theta }}_{0}}\left\{ \log \left\| \prod \limits _{t=2}^{n_{\varepsilon }}\mathbb {P}_{{\underline{\theta }}^{*}}\left( f\left( \left. \epsilon _{t}\right| \underleftarrow{\epsilon } _{t-1}\right) \right) \right\| \right\} <\xi \left( {\underline{\theta }} _{0}\right) -\varepsilon \). From the dominated convergence theorem, it follows that for m large enough we get

The result follows by Lemma 3.1. \(\square \)

Second, we present the following main theorem.

Theorem 3.1

Under \(\textbf{A}1-\textbf{A}3,\) the sequence of QML estimators \(\left( \underline{\widehat{\theta }}_{n}\right) _{n}\) satisfying (3.3) is strongly consistent, i.e.,

Proof

Assume that \(\underline{\hat{\theta }}_{n}\) does not converge to \( {\underline{\theta }}_{0}\) a.s., i.e.,

Using Lemma 3.3, we have \(L_{n}\left( \underline{\hat{\theta }} _{n}\right)<\) \(L_{n}\left( {\underline{\theta }}_{0}\right) \). However, by the definition of the QMLE in (3.3), we get

for any compact subset \(\Theta ^{*}\) of \(\Theta \) containing \(\underline{ \theta }_{0}\). This contradiction yields the result. \(\square \)

4 Simulation study

In order to evaluate the performance of the QML method for parameter estimation, we carried out a simulation study based on the Gaussian \(MSAR-SV(p)\) model with \(d=2\). We simulated 1000 data samples for each of several lengths; the sample sizes examined are \(n\in \left\{ 500,1000,2000\right\} \). The parameter values are chosen to satisfy the stationarity condition \(\gamma _{L}\left( \Gamma \right) <0\). For each trajectory, the vector \({\underline{\theta }}\) of parameters of interest has been estimated by QMLE, the estimate being denoted by \(\widehat{{\underline{\theta }}}\). The QMLE algorithm has been run on these series in MATLAB 8, using "fminsearch.m" as the minimizer. In the tables below, the root mean square errors \(\left( RMSE\right) \) of \(\widehat{\theta }\) are displayed in parentheses, and the true values (TV) of the parameters of each of the considered data-generating processes are reported.
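The data-generating step of such a study can be sketched as follows. This is a minimal illustration in Python with NumPy, not the MATLAB code used for the reported results; it assumes a Gaussian \(MSAR-SV(1)\) recursion \(\log h_{t}=a_{0}(s_{t})+a_{1}(s_{t})\log h_{t-1}+b_{0}(s_{t})\eta _{t}\), \(\epsilon _{t}=e_{t}h_{t}^{1/2}\), with \(d=2\). The function names, the burn-in length and all parameter values are hypothetical.

```python
import numpy as np

def simulate_msar_sv1(n, P, a0, a1, b0, burn=500, seed=0):
    """Simulate n observations of a Gaussian MSAR-SV(1) process (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    d = P.shape[0]
    s, log_h = 0, 0.0
    eps = np.empty(n)
    states = np.empty(n, dtype=int)
    for t in range(-burn, n):
        s = rng.choice(d, p=P[s])                      # next regime of the Markov chain
        log_h = a0[s] + a1[s] * log_h + b0[s] * rng.standard_normal()
        if t >= 0:                                     # discard the burn-in period
            eps[t] = rng.standard_normal() * np.exp(0.5 * log_h)
            states[t] = s
    return eps, states

def rmse(estimates, true_value):
    """Root mean square error of replicated estimates around the true value."""
    estimates = np.asarray(estimates, dtype=float)
    return float(np.sqrt(np.mean((estimates - true_value) ** 2)))

# Hypothetical parameter values with both regimes locally stable.
P = np.array([[0.95, 0.05], [0.10, 0.90]])
a0 = np.array([-0.2, 0.1])
a1 = np.array([0.9, 0.5])
b0 = np.array([0.3, 0.6])
eps, states = simulate_msar_sv1(1000, P, a0, a1, b0)
```

In a full experiment, the simulation would be repeated over many seeds, the QMLE computed on each trajectory, and `rmse` applied to each parameter across replications.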

The root mean square errors are the main focus of this study. The results provide some preliminary evidence on the finite-sample properties of the QMLE in the \(MSAR-SV\) framework. Overall, the parameters are quite well estimated by the QMLE method. A few comments are in order. Table 1 shows that the finite-sample behavior of the QMLE is in line with strong consistency: the associated RMSE decreases as the sample size increases. The results for the \(MS-\)models reported in Table 2 support the same conclusion. Furthermore, even with a relatively small sample size, the estimation procedure gives good results.
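To make the RMSE criterion concrete, the sketch below computes \(RMSE=\sqrt{\frac{1}{N}\sum _{i=1}^{N}(\widehat{\theta }^{(i)}-\theta _{0})^{2}}\) over \(N\) Monte Carlo replications. The estimator used here is a deliberately simple stand-in (the sample mean of Gaussian draws), not the QMLE of the paper, and the helper names and the choice of 200 replications are assumptions made to keep the example short and fast.

```python
import math
import random


def rmse(estimates, true_value):
    """Root mean square error of a list of estimates around the true value."""
    squared_errors = [(est - true_value) ** 2 for est in estimates]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def monte_carlo_rmse(estimator, simulate, true_value, n, replications, seed=0):
    """Simulate `replications` samples of length n and return the estimator's RMSE."""
    rng = random.Random(seed)
    estimates = [estimator(simulate(n, rng)) for _ in range(replications)]
    return rmse(estimates, true_value)


# Stand-in data-generating process and estimator (hypothetical, not the QMLE):
# Gaussian draws with true mean 1.0, estimated by the sample mean.
TRUE_MEAN = 1.0


def simulate_gaussian(n, rng):
    return [rng.gauss(TRUE_MEAN, 1.0) for _ in range(n)]


def sample_mean(sample):
    return sum(sample) / len(sample)


results = {
    n: monte_carlo_rmse(sample_mean, simulate_gaussian, TRUE_MEAN, n, replications=200)
    for n in (500, 1000, 2000)
}
for n, value in results.items():
    print(f"n = {n:5d}  RMSE = {value:.4f}")
```

For a consistent estimator, the printed RMSE should shrink as \(n\) grows, which is the pattern the tables are meant to exhibit for the QMLE.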

Notes

The concept of controllability is defined in [1].

References

Bibi, A., Ghezal, A.: On the Markov-switching bilinear processes: stationarity, higher-order moments and \( \beta -\)mixing. Stochas. Int. J. Probab. Stochast. Process. 87(6), 919–945 (2015)

Casarin, R.: Bayesian inference for generalised Markov switching stochastic volatility models. Conference materials at the 4th International Workshop on Objective Bayesian Methodology, CNRS, Aussois (2003)

Cavicchioli, M.: Spectral density of Markov-switching \(VARMA\) models. Econ. Lett. 121, 218–220 (2013)

Cavicchioli, M.: Higher order moments of Markov switching \(VARMA\) models. Economet. Theory 33(6), 1502–1515 (2017)

Cavicchioli, M.: Asymptotic Fisher information matrix of Markov switching \(VARMA\) models. J. Multivar. Anal. 157, 124–135 (2017)

Cavicchioli, M.: Markov switching \(GARCH\) models: higher order moments, kurtosis measures and volatility evaluation in recessions and pandemic. J. Bus. Econ. Stat. 40(4), 1772–1783 (2022)

Douc, R., Moulines, E., Stoffer, D.: Nonlinear Time Series Theory, Methods, and Applications with R Examples. CRC Press, New York (2014)

Francq, C., Zakoïan, J.-M.: Linear representation based estimation of stochastic volatility models. Scand. J. Stat. 33(4), 785–806 (2006)

Francq, C., Zakoïan, J.-M.: Deriving the autocovariances of powers of Markov-switching \(GARCH\) models, with applications to statistical inference. Comput. Stat. Data Anal. 52(6), 3027–3046 (2008)

Francq, C., Zakoïan, J.-M.: \(QML\) estimation of a class of multivariate asymmetric \(GARCH\) models. Economet. Theory 28(1), 179–206 (2012)

Ghezal, A.: A doubly Markov switching AR model: some probabilistic properties and strong consistency. J. Math. Sci. (2023). https://doi.org/10.1007/s10958-023-06262-y

Ghezal, A., Zemmouri, I.: Estimating \(MS-BLGARCH\) models using recursive method. Pan-Am. J. Math. 2, 1–7 (2023)

Haas, M., Mittnik, S., Paolella, M.S.: A new approach to Markov-switching \(GARCH\) models. J. Financ. Economet. 2(4), 493–530 (2004)

Hamilton, J.D.: A new approach to the economic analysis of nonstationary time series and the business cycle. Econometrica 57(2), 357–384 (1989)

Leroux, B.G.: Maximum-likelihood estimation for hidden Markov models. Stochas. Process. Appl. 40, 127–143 (1992)

So, M.K.P., Lam, K., Li, W.K.: A stochastic volatility model with Markov switching. J. Bus. Econ. Stat. 16(2), 244–253 (1998)

Stelzer, R.: On Markov-switching \(ARMA\) processes: stationarity, existence of moments and geometric ergodicity. Economet. Theory 25(1), 43–62 (2009)

Vo, M.T.: Regime-switching stochastic volatility: evidence from the crude oil market. Energy Econ. 31(5), 779–788 (2009)

Xie, Y.: Consistency of maximum likelihood estimators for the regime-switching \(GARCH\) model. Stat. A J. Theor. Appl. Stat. 43(2), 153–165 (2009)

Acknowledgements

We should like to thank the Editor in Chief of the journal, an Associate Editor and the anonymous referees for their constructive comments and very useful suggestions and remarks which were most valuable for improvement in the final version of the paper. We also would like to thank our colleagues, Khadidja Himour and Dr. Khalil Zerari, who encouraged us a lot.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Ghezal, A., Zemmouri, I. On the Markov-switching autoregressive stochastic volatility processes. SeMA 81, 413–427 (2024). https://doi.org/10.1007/s40324-023-00329-1

DOI: https://doi.org/10.1007/s40324-023-00329-1

Keywords

- Stochastic volatility

- Markov-switching autoregression

- Strictly (second-order) stationarity

- QMLE

- Geometric ergodicity