Abstract

We consider the zero distribution of random polynomials of the form \(P_n(z) = \sum _{k=0}^n a_k B_k(z)\), where \(\{a_k\}_{k=0}^{\infty }\) are non-trivial i.i.d. complex random variables with mean 0 and finite variance. The polynomials \(\{B_k\}_{k=0}^{\infty }\) are selected from a standard basis such as the Szegő, Bergman, or Faber polynomials associated with a Jordan domain G whose boundary is \(C^{2, \alpha }\) smooth. We show that the zero counting measures of \(P_n\) converge almost surely to the equilibrium measure on the boundary of G. We also show that if \(\{a_k\}_{k=0}^{\infty }\) are i.i.d. random variables, and the domain G has analytic boundary, then for a random series of the form \(f(z) =\sum _{k=0}^{\infty }a_k B_k(z),\) \(\partial {G}\) is almost surely the natural boundary for f(z).

1 Introduction

This work is a sequel to [8], where we showed that the zeros of a sequence of random polynomials \(\{P_n\}_{n}\) (spanned by an appropriate basis) associated to a Jordan domain G with analytic boundary L equidistribute near L, i.e., are distributed according to the equilibrium measure of L. We refer the reader to [8] for references to the literature on random polynomials. In this note, we extend the above result to Jordan domains with lower regularity, namely domains with \(C^{2, \alpha }\) boundary; see Theorem 1.1 below.

To state our results we need to set up some notation. Let \(G\subset {{{\mathbb {C}}}}\) be a Jordan domain. We set \(\Omega ={\overline{{{\mathbb {C}}}}} {\setminus }{\overline{G}},\) the exterior of \({\overline{G}},\) and let \(\Delta \) denote the exterior of the closed unit disc. By the Riemann mapping theorem there is a unique conformal mapping \(\Phi :\Omega \rightarrow \Delta ,\ \Phi (\infty )=\infty ,\ \Phi '(\infty )>0.\) We denote the equilibrium measure of \(E = {\overline{G}}\) by \(\mu _E.\) For a polynomial \(P_n\) of degree n, with zeros at \(\{Z_{k,n}\}_{k=1}^n,\) let \(\tau _n = \dfrac{1}{n}\sum _{k =1}^{n}\delta _{Z_{k,n}}\) denote its normalized zero counting measure. For a sequence of positive measures \(\{\mu _n\}_{n=1}^{\infty },\) we write \(\mu _n {\mathop {\rightarrow }\limits ^{w}}\mu \) to denote weak convergence of these measures to \(\mu .\) A random variable X is called non-trivial if \({{\mathbb {P}}}(X=0)<1\).

Theorem 1.1

Let G be a Jordan domain in \({\mathbb {C}}\) whose boundary L is \(C^{2, \alpha }\) smooth for some \(0< \alpha < 1.\) Consider a sequence of random polynomials \(\{P_n\}_{n=0}^{\infty }\) defined by \(P_n(z) = \sum _{k=0}^{n}a_kB_k(z),\) where the \(\{a_i\}_{i=0}^{\infty }\) are non-trivial i.i.d. random variables with mean 0 and finite variance, with the basis \(\{B_n\}_{n=0}^{\infty }\) being given either by Szegő, or by Bergman, or by Faber polynomials. Then, \(\tau _n {\mathop {\rightarrow }\limits ^{w}} \mu _E\) a.s.
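To illustrate the statement numerically, the following is a minimal sketch for the simplest special case, assuming G is the unit disk, so that \(\Phi (z)=z\), the Faber polynomials are the monomials \(z^k\), and \(\mu _E\) is the normalized arc length on the unit circle. The Gaussian coefficients, the function names, and the annulus radii are illustrative assumptions and are not taken from the paper. Zeros are counted with the argument principle rather than located individually.

```python
import cmath
import math
import random


def eval_poly(coeffs, z):
    """Evaluate sum_k coeffs[k] * z**k by Horner's rule."""
    acc = 0j
    for c in reversed(coeffs):
        acc = acc * z + c
    return acc


def zeros_inside(coeffs, radius, samples=8000):
    """Count zeros in |z| < radius via the argument principle.

    The winding number of P around 0 along the circle |z| = radius is
    accumulated from phase increments between consecutive sample points.
    This assumes no zero lies on (or extremely close to) the circle.
    """
    total = 0.0
    prev = eval_poly(coeffs, complex(radius, 0.0))
    for j in range(1, samples + 1):
        z = radius * cmath.exp(2j * math.pi * j / samples)
        cur = eval_poly(coeffs, z)
        total += cmath.phase(cur / prev)
        prev = cur
    return round(total / (2 * math.pi))


def annulus_fraction(n, inner=0.8, outer=1.25, seed=0):
    """Share of the zeros of a random P_n lying in inner < |z| < outer.

    Assumption for illustration: standard complex Gaussian coefficients,
    which have mean 0 and finite variance as Theorem 1.1 requires.
    """
    rng = random.Random(seed)
    coeffs = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n + 1)]
    count = zeros_inside(coeffs, outer) - zeros_inside(coeffs, inner)
    return count / n
```

For instance, `annulus_fraction(40)` gives the share of zeros of one seeded sample in the annulus \(0.8<|z|<1.25\). Weak convergence of \(\tau _n\) to a measure supported on the unit circle suggests that this share should approach 1 as n grows, for any fixed open annulus containing the circle.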

We summarize some useful facts obtained in the proof of Theorem 1.1 below.

Corollary 1.2

Suppose that E is the closure of a Jordan domain G with \(C^{2, \alpha }\) boundary L, and that the basis \(\{B_k\}_{k=0}^{\infty }\) is given either by Szegő, or by Bergman, or by Faber polynomials. If \(\{a_k\}_{k=0}^{\infty }\) are non-trivial i.i.d. complex random variables with mean 0 and finite variance, then the random polynomials \(P_n(z) = \sum _{k=0}^n a_k B_k(z)\) converge almost surely to a random analytic function f that is not identically zero. Moreover,

holds with probability one.

As a consequence of Theorem 1.1, we show that the zeros of the sequence of derivatives \(\{P_n'\}_{n=0}^{\infty }\) also equidistribute.

Corollary 1.3

Let G, \(\{a_i\}_{i=0}^{\infty }\) and \(P_n\) be as in Theorem 1.1. Let \(\tau _n'\) denote the zero counting measures of \(P_n'.\) Then, \(\tau _n' {\mathop {\rightarrow }\limits ^{w}} \mu _E\) a.s.

The natural boundary for a random power series of the form \(\sum _{k=0}^{\infty }a_kz^k,\) where \(\{a_k\}_{k=0}^{\infty }\) are i.i.d. random variables, has been investigated by several authors. We refer especially to [2], but see also [6] and the references therein. The result there is that for such a random series, the circle of convergence is a.s. the natural boundary. Some extensions are possible when the \(\{a_k\}_{k=0}^{\infty }\) are merely independent. Therefore, it seems reasonable to ask if such a result holds when the random series is formed by other polynomial bases. In [8], we remarked (without proof) that the random series formed by the basis \(\{B_k\}_{k=0}^{\infty }\) has natural boundary L. We prove that result here.

Theorem 1.4

Suppose that E is the closure of a Jordan domain G with analytic boundary L, and that the basis \(\{B_k\}_{k=0}^{\infty }\) is given either by Szegő, or by Bergman, or by Faber polynomials. Assume that the random coefficients \(\{a_k\}_{k=0}^{\infty }\) are i.i.d. complex random variables which are not almost surely constant, and furthermore satisfy \({{\mathbb {E}}}[\log ^+|a_0|]<\infty .\) Then the series

\[f(z) = \sum _{k=0}^{\infty }a_k B_k(z)\]

converges a.s. to a random analytic function \(f\not \equiv 0\) in G, and moreover, with probability one, \(\partial G = L\) is the natural boundary for f.

We believe that Theorem 1.4 holds for any bounded Jordan domain. It would be interesting to see a proof of such a result, which we expect will need rather different techniques from the ones we use.

2 Proofs

Proof of Theorem 1.1 and of Corollary 1.2

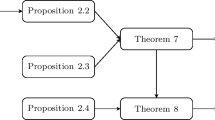

We closely follow the ideas in [8]. The proof consists of two probabilistic lemmas followed by the use of a deterministic theorem in potential theory. The first lemma below follows from a standard application of the Borel–Cantelli lemma.

Lemma 2.1

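If \(\{a_k\}_{k=0}^{\infty }\) are non-trivial, independent and identically distributed complex random variables that satisfy \({{\mathbb {E}}}[\log ^+|a_0|]<\infty \), then

\[\limsup _{n\rightarrow \infty } |a_n|^{1/n} = 1 \quad \text{a.s.,} \tag{2.1}\]

and

\[\limsup _{n\rightarrow \infty } \Big (\max _{0\le k\le n} |a_k|\Big )^{1/n} = 1 \quad \text{a.s.} \tag{2.2}\]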

A slightly more delicate application of Borel–Cantelli gives the following result. For the proof, we refer to [8].

Lemma 2.2

If \(\{a_k\}_{k=0}^{\infty }\) are non-trivial i.i.d. complex random variables, then there is a value \(b>0\) such that

We use the following theorem of Grothmann [4] which describes the zero distribution of deterministic polynomials.

Let \(E\subset {{\mathbb {C}}}\) be a compact set of positive capacity such that \(\Omega ={\overline{{{\mathbb {C}}}}} {\setminus } E\) is connected and regular. The Green function of \(\Omega \) with pole at \(\infty \) is denoted by \(g_\Omega (z,\infty )\). We use \(\Vert \cdot \Vert _K\) for the supremum norm on a compact set K.

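Theorem G. If a sequence of polynomials \(P_n(z),\ \deg (P_n)\le n\in {{\mathbb {N}}},\) satisfies

\[\limsup _{n\rightarrow \infty } \Vert P_n\Vert _E^{1/n} \le 1, \tag{2.4}\]

for any closed set \(K\subset E^\circ \)

\[\lim _{n\rightarrow \infty } \tau _n(K) = 0, \tag{2.5}\]

and there is a compact set \(S\subset \Omega \) such that

\[\liminf _{n\rightarrow \infty } \max _{z\in S} \left( \frac{1}{n}\log |P_n(z)| - g_\Omega (z,\infty )\right) \ge 0, \tag{2.6}\]

then the zero counting measures \(\tau _n\) of \(P_n\) converge weakly to \(\mu _E\) as \(n\rightarrow \infty .\)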

The idea now is to check that with probability 1, our sequence of polynomials satisfies the hypothesis in Grothmann’s theorem. Recall that in our setting \(g_\Omega (z,\infty )=\log |\Phi (z)|,\ z\in \Omega .\)

Note that (2.4) is satisfied for E almost surely by (2.2), and the estimate

as

This last fact follows from the well known result that in all three cases of polynomial bases we consider in this theorem, we have

holds uniformly on compact subsets of \(\Omega ,\) see [9]. To check that (2.5) holds, we use the following lemma from [5].

Lemma 2.3

Let \(\psi _n\) be holomorphic functions on a domain \(\Lambda .\) Assume that \(\sum _{n=0}^{\infty }|\psi _n|^2\) converges uniformly on compact sets of \(\Lambda .\) Let \(a_n\) be i.i.d. random variables with zero mean and finite variance. Then, almost surely, \(\sum _{n=0}^{\infty }a_n\psi _n(z)\) converges uniformly on compact subsets of \(\Lambda \) and hence defines a random analytic function.

It is well known that \(\sum _{n=0}^{\infty }|B_n(z)|^2 = K(z,z),\) where K(z, w) denotes the Bergman (or correspondingly Szegő) kernel of the domain G when \(\{B_i\}_{i=0}^{\infty }\) denotes the Bergman or Szegő basis respectively. For the case of the Faber polynomials, the convergence follows from the estimates of the \(\sup \) norm \(||P_n||_K\) on any compact \(K\subset G\), see [11, Ch. 1, Sec. 5]. With this knowledge, taking \(\psi _n = B_n\) and \(\Lambda = G\) in Lemma 2.3, we obtain that almost surely, \(\sum _{n=0}^{\infty }a_nB_n(z)\) converges uniformly on compact subsets of G and hence defines a random analytic function f. The uniqueness of series expansions in these polynomial bases ensures that f is not identically 0. Since \(P_n\rightarrow f,\) an application of Hurwitz’s theorem now proves (2.5). Incidentally, this also proves the corresponding part of Corollary 1.2.
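The kernel identity \(\sum _{n=0}^{\infty }|B_n(z)|^2 = K(z,z)\) can be checked by hand in one concrete case. The sketch below assumes that G is the unit disk, where the orthonormal Bergman polynomials are \(B_k(z)=\sqrt{(k+1)/\pi }\,z^k\) and the Bergman kernel on the diagonal is \(K(z,z)=1/(\pi (1-|z|^2)^2)\). The function names are hypothetical and chosen only for this illustration.

```python
import math


def bergman_basis_disk(k, z):
    """Orthonormal Bergman polynomial of the unit disk: sqrt((k+1)/pi) * z**k."""
    return math.sqrt((k + 1) / math.pi) * z ** k


def kernel_partial_sum(z, terms):
    """Partial sum of sum_k |B_k(z)|^2 over k = 0, ..., terms - 1."""
    return sum(abs(bergman_basis_disk(k, z)) ** 2 for k in range(terms))


def bergman_kernel_diagonal(z):
    """Closed form K(z, z) = 1 / (pi * (1 - |z|^2)^2) for the unit disk."""
    return 1.0 / (math.pi * (1.0 - abs(z) ** 2) ** 2)
```

On any compact subset of the disk the partial sums increase to the closed form at a geometric rate, which is the uniform convergence on compact sets that Lemma 2.3 asks for in this special case.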

If \(\tau _n\) do not converge to \(\mu _E\) a.s., then (2.6) cannot hold a.s. for any compact set S in \(\Omega .\) We choose \(S=L_R =\{z: g_{\Omega }(z,\infty )=\log R\},\) with \(R>1\), and find a subsequence \(n_m,\ m\in {{\mathbb {N}}},\) such that

holds with positive probability. It follows from a result of Suetin [11, Ch. 1], that for Bergman polynomials,

holds locally uniformly in \(\Omega \) where we recall that \(\Phi \) is the exterior conformal map, \(\Phi :\Omega \rightarrow \Delta ,\ \Phi (\infty )=\infty ,\ \Phi '(\infty )>0,\) and

Asymptotic formulas similar to (2.9) are valid for Szegő and Faber polynomials, but without the factor \(\sqrt{n+1},\) and the proofs for these bases have to be modified accordingly. Equation (2.9) implies that all zeros of \(B_n\) are contained inside \(L_R\) for all large n. This allows us to write an integral representation

which is valid for all large \(n\in {{\mathbb {N}}}\) because \(P_n(z)/(z B_n(z)) = a_n/z + O(1/z^2)\) for \(z\rightarrow \infty .\) The asymptotic on \(B_n\) from (2.9) implies that there are positive constants \(c_1\) and \(c_2\) that do not depend on n and z, such that

We estimate from (2.11) and (2.12) with \(\rho =R\) that

where \(|L_R|\) is the length of \(L_R\) and \(d:=\min _{z\in L_R} |z|.\) It follows that

Applying this estimate repeatedly, we obtain that

so that (2.11) yields

Choosing sufficiently small \(\varepsilon >0\) and using (2.8), we deduce from the previous inequality that

for some \(q\in (0,1)\) and all sufficiently large \(n_m\), with positive probability. The latter estimate clearly contradicts (2.3) of Lemma 2.2. Hence (2.6) holds for \(S=L_R,\) with any \(R>1\), and \(\tau _n\) converge weakly to \(\mu _E\) with probability one. Note that (2.6) for \(S=L_R,\) with \(R>1\), is equivalent to (1.1). Indeed, we have equality in (2.6), with \(\lim \) instead of \(\liminf \), by the Bernstein–Walsh inequality and (2.4), see [1, p. 51, Remark 1.2] for more details. This concludes the proof of Theorem 1.1 as well as the proof of Corollary 1.2. \(\square \)

Proof of Corollary 1.3

The method of proof is similar to that of Theorem 1.1: we check that the conditions in Grothmann’s result hold almost surely. First, we use a Markov–Bernstein result (cf. [7] and the references therein) to bound the sup norm of \(P_n'\) on E.

Therefore, with probability one,

This shows that (2.4) holds for \(P_n'.\) Next, we know from the proof of Theorem 1.1 that with probability one, \(P_n\rightarrow f\) uniformly on compacts, where f is a non-zero random analytic function. From this we obtain that \(P_n'\rightarrow f'\) also uniformly on compacts. The function \(f'\) is not identically 0, for if it were, \(f\equiv c\) for some constant c, and by the uniqueness of series expansion for the polynomial basis under consideration, this would imply that \(a_i =0\) for \(i\ge 1.\) This contradicts Lemma 2.2. From here, an application of Hurwitz’s theorem now yields that \(\tau _n'(K)\rightarrow 0\) for every compact set \(K\subset G.\) This proves Equation (2.5) for \(P_n'.\) Finally, recall that

where \(A_n(z)\) satisfies the estimate (2.10). Differentiating this, we obtain bounds for \(B_n'\) on \(L_R.\) Namely

To obtain this asymptotic, we have used a local Cauchy integral to estimate \(A_n'(z):\)

for \(z\in L_R\) with \(\delta > 0\) being chosen so that the ball \(B_{\delta }(z)\) stays away from the boundary, say \(\delta = \frac{1}{5}d(L_R, L).\) Using the uniform bound (2.10) in the above integral shows that an analogous estimate holds for \(A_n'(z).\) Once we obtain (2.14), we note that the proof for (2.6) for \(P_n'\) follows as in Theorem 1.1. All the conditions in Grothmann’s theorem are satisfied and hence we have the required convergence. \(\square \)

Remark

Although Theorem 1.1 and Corollary 1.3 have been stated for Jordan domains with \(C^{2, \alpha }\) boundary, it is easy to see that the same proof goes through if for instance G is a Jordan domain whose boundary is piecewise analytic (with angles at the corners satisfying certain conditions, see [10, Theorem 1.2]). The asymptotic Eq. (2.9) will then have to be replaced by an analogous one for piecewise analytic boundary.

Proof of Theorem 1.4

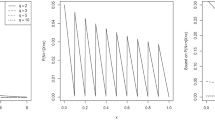

We have that E is the closure of a Jordan domain G bounded by an analytic curve L with exterior \(\Omega .\) It is well known that the conformal mapping \(\Phi :\Omega \rightarrow \Delta ,\ \Phi (\infty )=\infty ,\ \Phi '(\infty )>0,\) extends through L into G, so that \(\Phi \) maps a domain \(\Omega _r\) containing \({\overline{\Omega }}\) conformally onto \(\{|z|>r\}\) for some \(r\in (0,1).\) In particular, the level curves of \(\Phi \) denoted by \(L_\rho \) are contained in G for all \(\rho \in (r,1)\), \(L_1=L\) and \(L_\rho \subset \Omega \) for \(\rho >1.\)

For the proof that the series \(\sum _{k=0}^{\infty }a_k B_k(z)\) converges a.s. to an analytic function f, we refer the reader to [8, Corollary 2.2].

We now show the result about L being the natural boundary of f. We will give the proof for the basis of Faber and Bergman polynomials. The proof for the Szegő polynomials is similar to the Bergman case but simpler.

Let

for z in a neighborhood of infinity. Let \(F_n\) be the nth Faber polynomial. By definition, \(F_n\) is the polynomial part of the Laurent expansion of \(\Phi ^n\) at infinity,

where \(E_n\) is analytic, consisting of all the negative powers of z in the expansion of \(\Phi ^n.\) Fix \(\epsilon >0\) such that \(r+\epsilon < 1.\) It follows that

for \(r+\epsilon < \rho .\) From the above integral representation it is clear that

for \(z\in \Omega _{\rho }.\) Here \(d(\Gamma _{r+\epsilon }, \Gamma _{\rho })\) denotes the distance between \(\Gamma _{r+\epsilon }\) and \(\Gamma _{\rho }.\) Using (2.16) and the fact that \(\limsup |a_n|^{\frac{1}{n}} = 1\) a.s. (see Eq. (2.1)), we deduce that the series \(\sum _{k=0}^{\infty } a_kE_k(z)\) converges a.s. in \(\Omega _{\rho }\) and defines a random analytic function there. From Eq. (2.15) we know that for \(z\in G\cap \Omega _{\rho },\) \(r+\epsilon< \rho < 1,\)

Now suppose that the series \(f = \sum _{k=0}^{\infty }a_k F_k(z)\) has an analytic continuation across \(L = L_1.\) Then, together with the fact that the second series on the right defines an analytic function in \(\Omega _{\rho },\) this implies that \(\sum _{k=0}^{\infty }a_k w^k\) has an analytic continuation across \(|w| = 1,\) where \(w = \Phi (z).\) But this contradicts [2, Satz 8].
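The splitting of \(\Phi ^n\) into its polynomial part \(F_n\) and a remainder \(E_n\) made of negative powers can be made explicit in one classical case. The sketch below assumes G is the interior of the ellipse with foci \(\pm 1\) and semi-axes \((R\pm 1/R)/2\) for some \(R>1\) (an analytic boundary). In that case \(\Phi (z) = (z+\sqrt{z^2-1})/R\), the Faber polynomials are \(F_n = 2T_n/R^n\) for \(n\ge 1\) with \(T_n\) the Chebyshev polynomials, and \(E_n = \Phi ^n - F_n = -R^{-2n}\Phi ^{-n}\). The choice of domain, the restriction to real \(x>1\), and all function names are assumptions made for illustration only.

```python
import math


def chebyshev_T(n, x):
    """Chebyshev polynomial T_n(x) by the three-term recurrence."""
    t_prev, t_cur = 1.0, x
    if n == 0:
        return t_prev
    for _ in range(n - 1):
        t_prev, t_cur = t_cur, 2.0 * x * t_cur - t_prev
    return t_cur


def phi(x, R):
    """Exterior conformal map of the ellipse, evaluated at real x > 1."""
    return (x + math.sqrt(x * x - 1.0)) / R


def faber(n, x, R):
    """Faber polynomial F_n = 2 T_n / R^n of this ellipse, for n >= 1."""
    return 2.0 * chebyshev_T(n, x) / R ** n


def remainder(n, x, R):
    """E_n = Phi^n - F_n, which equals -R^(-2n) * Phi^(-n) for this ellipse."""
    return -(R ** (-2 * n)) * phi(x, R) ** (-n)
```

In this example the remainder decays geometrically wherever \(|\Phi (z)| > 1/R^2\), which is the kind of decay that makes the series \(\sum a_kE_k\) converge when \(\limsup |a_n|^{1/n}=1\). Here \(\Phi \) extends analytically to the complement of the segment \([-1,1]\), so the role of r in the proof is played by \(1/R\).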

If \(\{B_k\}_{k=0}^{\infty }\) denotes the Bergman basis, then Carleman’s asymptotic formula (see [3, Ch. 1]), yields

where

Using \(\limsup |a_n|^{\frac{1}{n}} = 1\) a.s. and estimates (2.18), we observe that the series

converges a.s. in a neighborhood of the boundary L, and defines an analytic function there. Now from (2.17), we have

for \(z\in G\cap \Omega _{\rho },\) \(r< \rho < 1.\) If the series \(\sum _{n=0}^{\infty }a_nB_n(z)\) has an analytic continuation across L, then combined with the fact that the second series on the right defines an analytic function near L, we would obtain that \(\sum _{n=0}^{\infty }a_n\sqrt{\frac{n+1}{\pi }}\Phi ^n(z)\Phi '(z)\) and hence \(\sum _{n=0}^{\infty }a_n\sqrt{\frac{n+1}{\pi }}\Phi ^n(z)\) has an analytic continuation across L. In other words, taking \(w = \Phi (z),\) the series \(\sum _{n=0}^{\infty }a_n\sqrt{\frac{n+1}{\pi }}w^n\) has an analytic continuation across \(|w| = 1.\) This contradicts known results on analytic continuation of power series stating that the unit circle must be the natural boundary for the latter power series with probability one, see [2, Satz 12]. Since the latter reference is not readily available, we give a statement and a brief proof of the claimed fact in the concluding lemma below. \(\square \)

Lemma 2.4

Let \(\{c_n\}_{n=0}^{\infty }\) be a sequence of complex numbers such that

If \(\{a_n\}_{n=0}^{\infty }\) are i.i.d. complex random variables that are not a.s. constant, and that satisfy \({{\mathbb {E}}}[\log ^+|a_0|]<\infty \), then the random power series

has the unit circle as its natural boundary with probability one.

Proof

We follow, in part, the argument of Kahane [6, p. 41]. Let \(\Omega \) be the common probability space in which all the random variables \(a_n\) are defined. Consider the “symmetrized” random power series

where \((\omega _1,\omega _2)\in \Omega \times \Omega .\) Note that the random variables \(\left( a_n(\omega _1)-a_n(\omega _2)\right) c_n,\ n=0,1,2,\dots ,\) are symmetric (a random variable X is symmetric if \(-X\) has the same distribution as X). Moreover, the random variables \(\left( a_n(\omega _1)-a_n(\omega _2)\right) \) are non-trivial and i.i.d., because the \(a_n\) are i.i.d. and are not almost surely constant. Since

we obtain from Lemma 2.1 that

Combining this fact with (2.19), we conclude that the random power series F has radius of convergence equal to 1 a.s. It is also clear that the same conclusion holds by Lemma 2.1 for the series \(f_1\) and \(f_2\) that represent the original series (2.20) for different events from \(\Omega \). Suppose to the contrary that the series (2.20) can be continued beyond the unit circle with positive probability, which then holds almost surely by a zero-one law as explained in [6, p. 39]. This means that there is an arc J of the unit circle such that both series \(f_1\) and \(f_2\) can be continued analytically beyond J with probability one. Hence F can be continued analytically beyond J with probability one, which is in direct contradiction with [6, p. 40, Theorem 1]. \(\square \)

Remark

Since the submission of this article, it has come to the authors’ notice that Theorem 1.1 has recently been shown in full generality in the preprint https://arxiv.org/pdf/1901.07614.pdf.

References

1. Andrievskii, V.V., Blatt, H.-P.: Discrepancy of Signed Measures and Polynomial Approximation. Springer, New York (2002)

2. Arnold, L.: Zur Konvergenz und Nichtfortsetzbarkeit zufälliger Potenzreihen, pp. 223–234. Statistical Decision Functions, Random Processes, Academia, Prague, Trans. Fourth Prague Conf. on Information Theory (1965)

3. Gaier, D.: Lectures on Complex Approximation. Birkhäuser, Boston (1987)

4. Grothmann, R.: On the zeros of sequences of polynomials. J. Approx. Theory 61, 351–359 (1990)

5. Hough, J.B., Krishnapur, M., Peres, Y., Virag, B.: Zeros of Gaussian Analytic Functions and Determinantal Point Processes. University Lecture Series, vol. 51. American Mathematical Society (2009)

6. Kahane, J.P.: Some Random Series of Functions. Cambridge University Press, Cambridge (1985)

7. Pritsker, I.: Comparing norms of polynomials in one and several variables. J. Math. Anal. Appl. 216, 685–695 (1997)

8. Pritsker, I., Ramachandran, K.: Equidistribution of zeros of random polynomials. J. Approx. Theory 215, 106–117 (2017)

9. Smirnov, V.I., Lebedev, N.A.: Functions of a Complex Variable: Constructive Theory. MIT Press, Cambridge (1968)

10. Stylianopoulos, N.: Strong asymptotics of Bergman polynomials over domains with corners and applications. Constr. Approx. 38(1), 59–100 (2013)

11. Suetin, P.K.: Polynomials Orthogonal Over a Region and Bieberbach Polynomials. American Mathematical Society, Providence (1974)

Acknowledgements

Research of the first author was partially supported by the National Security Agency (Grant H98230-15-1-0229) and by the American Institute of Mathematics. We are grateful to the referee for pointing out a correction in the statement of Theorem 1.4, and for other remarks and suggestions which helped in improving the exposition.

Additional information

Communicated by Doron Lubinsky.

Dedicated to Prof. R. S. Varga on his 90th birthday.

Cite this article

Pritsker, I., Ramachandran, K. Natural Boundary and Zero Distribution of Random Polynomials in Smooth Domains. Comput. Methods Funct. Theory 19, 401–410 (2019). https://doi.org/10.1007/s40315-019-00273-0
