Abstract

In this paper, we obtain a new estimate for the (product) \(\gamma \)-diagonally dominant degree of the Schur complement of matrices. As applications, we discuss the localization of eigenvalues of the Schur complement and present several upper and lower bounds for the determinant of strictly \(\gamma \)-diagonally dominant matrices, which generalize the corresponding results of Liu and Zhang (SIAM J. Matrix Anal. Appl. 27 (2005) 665-674).

1 Introduction

To begin with, we recall several well-known subclasses of nonsingular H-matrices. Let \(A\in \mathbb {C}^{n\times n}\) and \(N\equiv \{1,2,\ldots ,n\}\). Given \(\alpha \subseteq N\), denote

and

Given \(\gamma \in [0,1]\), take

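A is called a (row) strictly diagonally dominant matrix (\(SD_n\)) if \(|a_{ii}|>P_i(A)\) for all \(i\in N\).

A is called a doubly strictly diagonally dominant matrix if \(|a_{ii}a_{jj}|>P_i(A)P_j(A)\) for all \(i,j\in N\) with \(i\ne j\).

A is called a strictly \(\gamma \)-diagonally dominant matrix (\(SD^\gamma _n\)) if there exists \(\gamma \in [0,1]\) such that \(|a_{ii}|>\gamma P_i(A)+(1-\gamma )S_i(A)\) for all \(i\in N\).

A is called a product strictly \(\gamma \)-diagonally dominant matrix (\(SPD^\gamma _n\)) if there exists \(\gamma \in [0,1]\) such that \(|a_{ii}|>[P_i(A)]^\gamma [S_i(A)]^{1-\gamma }\) for all \(i\in N\).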

Given \(\gamma \in [0,1]\) and \(1\le i<j\le n\), define the diagonally dominant degree (Liu and Zhang 2005; Cui et al. 2017) with respect to the index i, the doubly diagonally dominant degree (Liu et al. 2012; Gu et al. 2021) with respect to the indices i and j, the \(\gamma \)-diagonally dominant degree (Liu and Huang 2010; Liu et al. 2010) with respect to the index i and the product \(\gamma \)-diagonally dominant degree (Liu and Huang 2010; Liu et al. 2010) with respect to the index i of A as \(|a_{ii}|-P_i(A)\), \(|a_{ii}a_{jj}|-P_i(A)P_j(A)\), \(|a_{ii}|-\gamma P_i(A)-(1-\gamma )S_i(A)\) and \(|a_{ii}|-[P_i(A)]^\gamma [S_i(A)]^{1-\gamma }\), respectively. Other kinds of dominant degrees can be defined in the same way, for example the Nekrasov diagonally dominant degree (Liu et al. 2022, 2018). Clearly, each of the corresponding classes of matrices is characterized by the positivity of its dominant degrees.
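The dominant degrees above are easy to evaluate numerically. The following is a minimal sketch, assuming that \(P_i(A)\) and \(S_i(A)\) denote the sums of the moduli of the off-diagonal entries in the i-th row and the i-th column, respectively; the function names, the 0-based indexing and the test matrix are illustrative and not taken from the paper.

```python
def row_sum(A, i):
    """P_i(A): sum of the moduli of the off-diagonal entries of row i (assumed definition)."""
    return sum(abs(A[i][j]) for j in range(len(A)) if j != i)

def col_sum(A, i):
    """S_i(A): sum of the moduli of the off-diagonal entries of column i (assumed definition)."""
    return sum(abs(A[j][i]) for j in range(len(A)) if j != i)

def gamma_degree(A, i, gamma):
    """|a_ii| - gamma * P_i(A) - (1 - gamma) * S_i(A)."""
    return abs(A[i][i]) - gamma * row_sum(A, i) - (1 - gamma) * col_sum(A, i)

def product_gamma_degree(A, i, gamma):
    """|a_ii| - P_i(A)^gamma * S_i(A)^(1 - gamma)."""
    return abs(A[i][i]) - row_sum(A, i) ** gamma * col_sum(A, i) ** (1 - gamma)

# Illustrative matrix: row 0 is not diagonally dominant by rows,
# yet every gamma-degree is positive for gamma = 0.5.
A = [[4, 3, 2],
     [0, 5, 1],
     [1, 0, 3]]
gamma = 0.5
row_degree_0 = abs(A[0][0]) - row_sum(A, 0)
degrees = [gamma_degree(A, i, gamma) for i in range(3)]
product_degrees = [product_gamma_degree(A, i, gamma) for i in range(3)]
print(row_degree_0, degrees, product_degrees)
```

In this sketch the ordinary diagonally dominant degree of the first row is negative, while all \(\gamma \)-degrees and product \(\gamma \)-degrees are positive, so the matrix is strictly \(\gamma \)-diagonally dominant for \(\gamma =0.5\) without being strictly diagonally dominant by rows.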

The Schur complement is a useful tool in many fields such as control theory, numerical algebra, big data, polynomial optimization, magnetic resonance imaging and simulation (Li 2000; Zhang 2006; Sang 2021). To solve large-scale linear systems efficiently, the authors of (Liu and Huang 2010; Liu et al. 2010) proposed a kind of iteration called the Schur-based iteration, which reduces the order of the involved matrices by the Schur complement. The closure property and the eigenvalue distribution of the Schur complement play an important role in determining the convergence of iteration methods. The properties of the eigenvalues of the Schur complement have been studied extensively in Liu and Zhang (2005); Li et al. (2017); Cvetković and Nedović (2012); Smith (1992); Zhang et al. (2007); Liu and Huang (2004); Li et al. (2022); Song and Gao (2023) and the references therein. It has been proved in Carlson and Markham (1979); Liu and Huang (2004); Li and Tsatsomeros (1997); Liu et al. (2004) that Schur complements of strictly diagonally dominant matrices are also strictly diagonally dominant, and the same property holds for nonsingular H-matrices, doubly strictly diagonally dominant matrices and generalized doubly diagonally dominant matrices (S-strictly diagonally dominant matrices). However, this closure property does not hold for (product) strictly \(\gamma \)-diagonally dominant matrices (Liu and Huang 2010), Nekrasov matrices (Liu et al. 2018) or, consequently, for further matrix classes based on these structures. Nevertheless, the authors of (Liu and Huang 2010; Liu et al. 2010; Zhou et al. 2022; Liu et al. 2018; Cvetković and Nedović 2009; Li et al. 2022; Song and Gao 2023) presented several sufficient conditions under which Schur complements of (product) strictly \(\gamma \)-diagonally dominant matrices, Nekrasov matrices, Dashnic-Zusmanovich type matrices and Cvetković-Kostić-Varga type matrices remain in their original classes, respectively.

The disc separation of the Schur complement compares the Geršgorin discs of the Schur complement with those of the original matrix and shows that each Geršgorin disc of the Schur complement is paired with a particular Geršgorin disc of the original matrix. For more details on Geršgorin discs, see Varga (2004). Alternatively, we may regard the disc separations as estimates (lower bounds) of the differences between the diagonally dominant degrees of the Schur complement and those of the original matrix. Roughly speaking, if these differences are all positive, the closure property holds; otherwise, the estimates still provide sufficient conditions ensuring that the Schur complement is in the same matrix class (Liu et al. 2022, 2018).

In Liu and Huang (2010); Liu et al. (2010); Zhang et al. (2013); Cui et al. (2017); Zhou et al. (2022) the authors improved the disc separations of strictly diagonally dominant matrices, and gave lower bounds for the \(\gamma \)-diagonally dominant degrees of the Schur complement minus those of the original matrix. These results require the involved indices to be strictly diagonally dominant in both rows and columns (the condition is slightly weaker in Zhou et al. (2022)). In this paper, we present several new estimates for the differences between the corresponding \(\gamma \)-diagonally dominant degrees of the Schur complement and of the original matrix under more natural conditions. As applications, we give the localization of eigenvalues of the Schur complement and present some upper and lower bounds for the determinant of strictly \(\gamma \)-diagonally dominant matrices.

The rest of the paper is organized as follows. In Sect. 2, we give some notations and technical lemmas. In Sect. 3, we present the \(\gamma \) (product \(\gamma \))-diagonally dominant degree on the Schur complement of \(\gamma \) (product \(\gamma \))-diagonally dominant matrices. In Sect. 4, the disc theorems for the Schur complements of \(\gamma \) (product \(\gamma \))-diagonally dominant matrices are obtained by applying the diagonally dominant degree on the Schur complement. In Sect. 5, we give some upper and lower bounds for the determinants of strictly \(\gamma \)-diagonally dominant matrices, which generalize the results in (Liu and Zhang 2005, Theorem 3).

2 Notations and Lemmas

For \(A=(a_{ij})\in \mathbb {C}^{n\times n}\), the comparison matrix \(\mu (A)=(m_{ij})\in \mathbb {C}^{n\times n}\) is defined as

A matrix A is an M-matrix if it can be split into the form \(A=sI-B\), where I is an identity matrix, B is a nonnegative matrix and \(s>\rho (B)\). A matrix A is an H-matrix if \(\mu (A)\) is an M-matrix.

Lemma 2.1

(Horn and Johnson 1990, p. 117) If A is an H-matrix, then \([\mu (A)]^{-1}\ge |A^{-1}|.\)
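Lemma 2.1 can be checked on a small example. The sketch below assumes the usual comparison matrix, with \(m_{ii}=|a_{ii}|\) and \(m_{ij}=-|a_{ij}|\) for \(i\ne j\), and a real test matrix that is strictly diagonally dominant (hence an H-matrix); the helper names and the matrix are illustrative only. Exact rational arithmetic avoids rounding issues in the entrywise comparison.

```python
from fractions import Fraction

def comparison_matrix(A):
    """mu(A): moduli on the diagonal, negated moduli off the diagonal (assumed definition)."""
    n = len(A)
    return [[abs(A[i][j]) if i == j else -abs(A[i][j]) for j in range(n)]
            for i in range(n)]

def inverse(M):
    """Gauss-Jordan inverse over exact rationals; assumes M is real and nonsingular."""
    n = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(M)]
    for c in range(n):
        p = next(r for r in range(c, n) if aug[r][c] != 0)  # first nonzero pivot
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [x / piv for x in aug[c]]
        for r in range(n):
            if r != c and aug[r][c] != 0:
                f = aug[r][c]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

# Illustrative strictly diagonally dominant matrix.
A = [[4, -1, 1],
     [2, 5, -1],
     [-1, 1, 3]]
mu_inv = inverse(comparison_matrix(A))
a_inv = inverse(A)
# Entrywise check of [mu(A)]^{-1} >= |A^{-1}|.
holds = all(mu_inv[i][j] >= abs(a_inv[i][j]) for i in range(3) for j in range(3))
print(holds)
```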

For non-empty index sets \(\alpha , \beta \subseteq N\), we denote by \(A(\alpha ,\beta )\) the submatrix of \(A\in \mathbb {C}^{n\times n}\) lying in the rows indexed by \(\alpha \) and the columns indexed by \(\beta \). In particular, \(A(\alpha ,\alpha )\) is abbreviated as \(A(\alpha )\). Assuming that \(A(\alpha )\) is nonsingular, the Schur complement of A with respect to \(A(\alpha )\), which is denoted by \(A/A(\alpha )\) or simply \(A/\alpha \), is defined to be
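The Schur complement can be computed directly on a small example. The following is a minimal sketch, assuming the standard formula \(A/\alpha =A(\bar{\alpha })-A(\bar{\alpha },\alpha )[A(\alpha )]^{-1}A(\alpha ,\bar{\alpha })\) with \(\bar{\alpha }=N-\alpha \); the function name `schur_complement`, the 0-based indexing and the test matrix are illustrative choices. It obtains \(A/\alpha \) as the trailing block that remains after Gaussian elimination on the rows and columns indexed by \(\alpha \), using exact rational arithmetic.

```python
from fractions import Fraction

def schur_complement(A, alpha):
    """Return A/alpha for a list of 0-based indices alpha, assuming A(alpha) is nonsingular."""
    n = len(A)
    rest = [i for i in range(n) if i not in alpha]
    order = list(alpha) + rest              # move the alpha block to the top-left corner
    M = [[Fraction(A[r][c]) for c in order] for r in order]
    k = len(alpha)
    for c in range(k):
        # Pivot only among the alpha rows so the trailing block is unaffected by swaps.
        p = next(r for r in range(c, k) if M[r][c] != 0)
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            M[r] = [x - f * y for x, y in zip(M[r], M[c])]
    return [row[k:] for row in M[k:]]

# Illustrative strictly diagonally dominant matrix with det(A) = 76.
A = [[4, -1, 1],
     [2, 5, -1],
     [-1, 1, 3]]
S = schur_complement(A, [0])
det_S = S[0][0] * S[1][1] - S[0][1] * S[1][0]
print(S, A[0][0] * det_S)
```

For this matrix the computed Schur complement is again strictly diagonally dominant, and the product of \(\det A(\alpha )\) and \(\det (A/\alpha )\) reproduces \(\det A\).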

Let \(\alpha =\{i_1,i_2,\ldots ,i_k\}\subseteq N\) and \(\bar{\alpha }=N-\alpha =\{j_1,j_2,\ldots ,j_l\}\). Denote by \(|\alpha |\) the cardinality of \(\alpha \). It is clear that \(|\alpha |=k\). For the sake of convenience, denote

Given any \(\gamma \in [0,1]\) and \(\alpha \subseteq N\), denote

Lemma 2.2

Let \(A \in \mathbb {C}^{n\times n}\), let \(\alpha =\{i_1,i_2,\ldots ,i_k\}\subseteq N_{\gamma }(A)\) and \(\bar{\alpha }=N-\alpha =\{j_1,j_2,\ldots ,j_l\}\) with \(1\le k<n\). For each \(t\in \{1,2,\ldots , l\}\), denote

Then \(B_t\) is an M-matrix and \(det(B_t)>0\), if

Proof

It is sufficient to show that there exists a positive diagonal matrix D such that \(DB_tD\) is in \(SD^\gamma _{k+1}\). Since \(x> mx^r_{j_t}(A,\alpha )\) and \(\alpha \subseteq N\), take \(\varepsilon >0\) such that

and

We construct \(D=diag(d_1,d_2,\ldots ,d_{k+1})\), where

Let \(C=DB_tD=(c_{ij})\). For \(u=1,2,\ldots , k+1\), by (2.6), we have

Let \(B_t=(b_{ij})\). Then for \(1\le u\le k\), we have

For \(u=k+1\), by (2.5) and (2.6) we have

Therefore, \(C\in SD_{k+1}^{\gamma }\), and hence \(B_t\) is a nonsingular H-matrix. Since \(B_t=\mu (B_t)\), \(B_t\) is an M-matrix. It follows from (Horn and Johnson 1990, Theorem 2.5.4) that \(det(B_t)>0\). \(\square \)

Lemma 2.3

Let \(a_1> a_2\ge 0\), \(b_1> b_2\ge 0\) and \(0\le \gamma \le 1\). Then

and

Proof

For \(\gamma =0\) and \(\gamma =1\), the above inequalities hold trivially. If \(a_2=0\) or \(b_2=0\), the above inequalities also hold trivially. Now suppose \(0<\gamma <1\), \(a_1> a_2> 0\) and \(b_1> b_2>0\). Let \(a_i=x_i^{1/\gamma }\) and \(b_i=y_i^{1/(1-\gamma )}\) for \(i=1,2\). By the well-known Hölder inequality (Horn and Johnson 1985, p. 536) we have

which leads to (2.8). Note that (2.8) only requires that \(a_1,a_2,b_1,b_2>0\).

Let \(s=a_1-a_2\) and \(t=b_1-b_2\). It is clear that \(s,t>0\). By (2.8) we have

which leads to (2.9). \(\square \)
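The two inequalities of Lemma 2.3 can be sanity-checked numerically. The sketch below assumes, following the proof, that (2.8) reads \(a_1^\gamma b_1^{1-\gamma }+a_2^\gamma b_2^{1-\gamma }\le (a_1+a_2)^\gamma (b_1+b_2)^{1-\gamma }\) and that (2.9) reads \(a_1^\gamma b_1^{1-\gamma }-a_2^\gamma b_2^{1-\gamma }\ge (a_1-a_2)^\gamma (b_1-b_2)^{1-\gamma }\); these forms are a reconstruction, and the function names and the random sampling are illustrative.

```python
import random

def sum_gap(a1, a2, b1, b2, g):
    """Right side minus left side of the assumed form of (2.8); expected to be >= 0."""
    lhs = a1 ** g * b1 ** (1 - g) + a2 ** g * b2 ** (1 - g)
    rhs = (a1 + a2) ** g * (b1 + b2) ** (1 - g)
    return rhs - lhs

def difference_gap(a1, a2, b1, b2, g):
    """Left side minus right side of the assumed form of (2.9); expected to be >= 0."""
    lhs = a1 ** g * b1 ** (1 - g) - a2 ** g * b2 ** (1 - g)
    rhs = (a1 - a2) ** g * (b1 - b2) ** (1 - g)
    return lhs - rhs

rng = random.Random(0)
min_sum_gap = float("inf")
min_difference_gap = float("inf")
for _ in range(1000):
    a2 = rng.uniform(0.1, 5.0)
    a1 = a2 + rng.uniform(0.1, 5.0)   # enforce a1 > a2 > 0
    b2 = rng.uniform(0.1, 5.0)
    b1 = b2 + rng.uniform(0.1, 5.0)   # enforce b1 > b2 > 0
    g = rng.uniform(0.0, 1.0)
    min_sum_gap = min(min_sum_gap, sum_gap(a1, a2, b1, b2, g))
    min_difference_gap = min(min_difference_gap, difference_gap(a1, a2, b1, b2, g))
print(min_sum_gap, min_difference_gap)
```

Both minima stay nonnegative up to rounding error, in line with the Hölder argument used in the proof.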

3 Disc separation of the Schur complement

In this section, we present lower and upper bounds for the \(\gamma \)- and the product \(\gamma \)-diagonally dominant degrees of the Schur complement in terms of the entries of the original matrix. We need the following notations. Given \(\gamma \in [0,1]\) and \(\alpha \subseteq N\), denote

One of our main results in this section is as follows.

Theorem 3.1

Let \(A =(a_{ij})\in \mathbb {C}^{n\times n}\), and let \(\alpha =\{i_1,i_2,\ldots ,i_k\}\subseteq N_{\gamma }(A)\) and \(\bar{\alpha }=N-\alpha =\{j_1,j_2,\ldots ,j_l\}\) with \(1\le k<n\). Set \(A/\alpha =(a'_{ts})\). For \(1\le t\le l\), we have

and

Proof

Let \(B_t\) be the matrix constructed in Lemma 2.2. First we prove

Since \(B_t/\{1,2,\ldots ,k\}=x-|\overrightarrow{x}_{j_t}^*|\{\mu [A(\alpha )]\}^{-1}\left( \sum \limits _{s=1}^{l}|\overrightarrow{y}_{j_s}|\right) \), it is sufficient to prove \(B_t/\{1,2,\ldots ,k\}\ge 0\) when \(x=mx^r_{j_t}(A,\alpha )\). Let \(x=mx^r_{j_t}(A,\alpha )+\varepsilon \) with \(\varepsilon >0\). By Lemma 2.2, \(det(B_{t})>0\). Note that \(\mu (A(\alpha ))=B_t(\{1,2,\ldots ,k\})\) is an M-matrix and hence \(det[\mu (A(\alpha ))]>0\). It is well-known that

which implies that \(B_t/\{1,2,\ldots ,k\}>0\). We obtain (3.3) when \(\varepsilon \rightarrow 0^+\). Adopting the same arguments, we obtain

Then we have

Similarly, we can get (3.2). The proof is completed. \(\square \)

Corollary 3.1

Given \(\gamma \in [0,1]\), let \(A \in SD_{n}^\gamma \), \(\alpha =\{i_1,i_2,\ldots ,i_k\}\subseteq N\) and \(\bar{\alpha }=N-\alpha =\{j_1,j_2,\ldots ,j_l\}\) with \(1\le k<n\). If \(w_{j_t}\ge 0\) for all \(t\in \{1,2,\ldots ,l\}\), then \(A/\alpha \in SD_{n-k}^{\gamma }\).

Corollary 3.2

Given \(\gamma \in [0,1]\), let \(A \in SD_{n}^\gamma \), \(\alpha =\{i_1,i_2,\ldots ,i_k\}\subseteq N\) and \(\bar{\alpha }=N-\alpha =\{j_1,j_2,\ldots ,j_l\}\) with \(1\le k<n\). If

then \(A/\alpha \in SD_{n-k}^\gamma \).

Recalling the definitions of \(w_j,w_j^r,w_j^c,mx_j^r(A,\alpha ),mx_j^c(A,\alpha ),t_i(A)\), we find that \(w_j\) increases as \(|a_{i_si_s}|\) grows, and that \(w_j\ge 0\) whenever all \(|a_{i_si_s}|\) are large enough. Hence Corollary 3.1 implies that if each \(|a_{i_si_s}|\) is sufficiently large, Schur complements of matrices in \(SD^{\gamma }_n\) are still in \(SD^{\gamma }_{n-k}\), while Corollary 3.2 tells us that the same result holds if each \(|a_{j_tj_t}|\) is sufficiently large.

Example 3.1

Let \(\gamma =0.7\), \(\alpha =\{3,4\}\) and

It is clear that \(\bar{\alpha }=\{1,2\}\). Then \(i_1=3\), \(i_2=4\), \(j_1=1\) and \(j_2=2\). By direct computation, we have

| | \(P_{j_t}(A)\) | \(S_{j_t}(A)\) | \(w_{j_t}\) |
|---|---|---|---|
| \(j_1=1\) | 7 | 3 | 0.3374 |
| \(j_2=2\) | 3 | 6 | −0.8972 |

Since

by Corollary 3.2, we have \(A/\alpha \in SD^\gamma _2\). Since \(|a_{33}|<P_3(A)\), the corresponding results in Cui et al. (2017); Liu and Huang (2010); Liu et al. (2010); Zhang et al. (2013) are not applicable. Since \(A(\alpha )\) is not diagonally dominant in column, the corresponding result in Zhou et al. (2022) is not applicable.

Notice that \(A/\alpha \) is a number and \(w_{n}^r=w_{n}^c=mx_{n}^r(A,\alpha )=mx_{n}^c(A,\alpha )\) when \(|\alpha |=n-1\). The following corollary follows immediately from Theorem 3.1.

Corollary 3.3

Given \(0\le \gamma \le 1\), let \(A =(a_{ij})\in SD_{n}^\gamma \), and take \(\alpha =\{1,2,\ldots ,n-1\}\). Then

Proof

Since \(A/\alpha =a'_{11}\) is a number, \(P_{1}(A/\alpha )=S_{1}(A/\alpha )=0\). Moreover, \(P_n(A)=P_n^{\alpha }(A)\) and \(S_n(A)=S_n^{\alpha }(A)\). By Theorem 3.1, we get (3.5). \(\square \)

To get the corresponding results for the product \(\gamma \)-diagonally dominant matrices, we need the following technical lemma.

Lemma 3.1

Let \(A =(a_{ij})\in \mathbb {C}^{n\times n}\). Given \(0\le \gamma \le 1\), let \(\alpha =\{i_1,i_2,\ldots ,i_k\}\subseteq N_{\gamma }(A)\) and \(\bar{\alpha }=N-\alpha =\{j_1,j_2,\ldots ,j_l\}\) with \(1\le k<n\). Set \(A/\alpha =(a'_{ts})\). If for all \(1\le t\le l\), \(P_{j_t}(A)>w^r_{j_t}\ge 0\) and \(S_{j_t}(A)>w^c_{j_t}\ge 0\), then we have

Proof

Since \(\alpha \subseteq N_\gamma (A)\), \(A(\alpha )\in SD_k^{\gamma }\). It follows that \(\{\mu [A(\alpha )]\}^{-1}\ge |A(\alpha )^{-1}|\). Then we have

By the definition of Schur complements, we have

Recalling the definition of \(w_{j_t}^r\) and (3.3), we have

Applying the same arguments, we obtain

By Lemma 2.3, we get

which leads to (3.6). \(\square \)

Theorem 3.2

Let \(A =(a_{ij})\in \mathbb {C}^{n\times n}\). Given \(0\le \gamma \le 1\), let \(\alpha =\{i_1,i_2,\ldots ,i_k\}\subseteq N_\gamma (A)\) and \(\bar{\alpha }=N-\alpha =\{j_1,j_2,\ldots ,j_l\}\) with \(1\le k<n\). Set \(A/\alpha =(a'_{ts})\). If for all \(1\le t\le l\), \(P_{j_t}(A)>w^r_{j_t}\ge 0\) and \(S_{j_t}(A)>w^c_{j_t}\ge 0\), then we have

Proof

By the definition of the Schur complement,

By Lemma 3.1 and Lemma 2.3 we get

Similarly, we obtain

The proof is completed. \(\square \)

We remark that the conditions \(P_{j_t}(A)>w^r_{j_t}\) and \(S_{j_t}(A)>w^c_{j_t}\) in Lemma 3.1 and Theorem 3.2 are easily satisfied, since we always have \(P_{j_t}(A)\ge P_{j_t}^\alpha (A)\ge w^r_{j_t}\) and \(S_{j_t}(A)\ge S_{j_t}^\alpha (A)\ge w^c_{j_t}\).

4 Distribution for eigenvalues

In this section, we present some locations for eigenvalues of the Schur complement by the entries of the original matrix.

Lemma 4.1

(Ostrowski 1951) Let \(A \in \mathbb {C}^{n\times n}\) and \( 0 \le \gamma \le 1\). Then, for every eigenvalue \( \lambda \) of A, there exists \( 1 \le i \le n \) such that \(|\lambda -a_{ii}|\le [P_i(A)]^\gamma [S_i(A)]^{1-\gamma }\).
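Ostrowski's localization can be illustrated on a \(2\times 2\) matrix, whose eigenvalues are available in closed form. The following is a minimal sketch, assuming that the i-th disc is centered at \(a_{ii}\) with radius \([P_i(A)]^\gamma [S_i(A)]^{1-\gamma }\), where \(P_i(A)\) and \(S_i(A)\) are the off-diagonal row and column sums of moduli; the function names and the test matrix are illustrative.

```python
import cmath

def eigenvalues_2x2(A):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    root = cmath.sqrt(tr * tr - 4 * det)
    return [(tr + root) / 2, (tr - root) / 2]

def ostrowski_discs(A, gamma):
    """List of (center, radius) pairs with radius P_i^gamma * S_i^(1 - gamma)."""
    n = len(A)
    discs = []
    for i in range(n):
        P = sum(abs(A[i][j]) for j in range(n) if j != i)
        S = sum(abs(A[j][i]) for j in range(n) if j != i)
        discs.append((A[i][i], P ** gamma * S ** (1 - gamma)))
    return discs

A = [[4, 3],
     [1, 5]]
gamma = 0.5
discs = ostrowski_discs(A, gamma)
# Every eigenvalue should lie in at least one disc (small tolerance for rounding).
covered = all(any(abs(lam - c) <= r + 1e-12 for c, r in discs)
              for lam in eigenvalues_2x2(A))
print(discs, covered)
```

With \(\gamma =0.5\) both radii equal \(\sqrt{3}\), which is smaller than the Geršgorin row radius 3 of the first disc obtained for \(\gamma =1\).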

Theorem 4.1

Let \(A=(a_{ij}) \in \mathbb {C}^{n\times n}\). Given \(0\le \gamma \le 1\), let \(\alpha =\{i_1,i_2,\ldots ,i_k\}\subseteq N_\gamma (A)\) and \(\bar{\alpha }=N-\alpha =\{j_1,j_2,\ldots ,j_l\}\) with \(1\le k<n\). Set \(A/\alpha =(a'_{ts})\). If for all \(1\le t\le l\), \(P_{j_t}(A)>w_{j_t}^r\ge 0\) and \(S_{j_t}(A)>w_{j_t}^c\ge 0\), then for every eigenvalue \(\lambda \) of \(A/\alpha \), there exists \(1\le t\le n-k\) such that

Proof

By Lemma 4.1, for any eigenvalue \( \lambda \) of \(A/\alpha \), there exists \( 1 \le t \le n-k \) such that

Since \(\alpha \subseteq N_\gamma (A)\), \(A(\alpha )\in SD_k^{\gamma }\). It follows that \(\{\mu [A(\alpha )]\}^{-1}\ge |A(\alpha )^{-1}|\). Now we have

which implies (4.1). Note that (4.2) is derived from Lemma 3.1. The proof is completed. \(\square \)

Applying the arithmetic-geometric mean inequality to (4.2), we get the following result immediately.

Corollary 4.1

Let \(A=(a_{ij}) \in \mathbb {C}^{n\times n}\). Given \(0\le \gamma \le 1\), let \(\alpha =\{i_1,i_2,\ldots ,i_k\}\subseteq N_\gamma (A)\) and \(\bar{\alpha }=N-\alpha =\{j_1,j_2,\ldots ,j_l\}\) with \(1\le k<n\). Set \(A/\alpha =(a'_{ts})\). If for all \(1\le t\le l\), \(P_{j_t}(A)>w_{j_t}^r\ge 0\) and \(S_{j_t}(A)>w_{j_t}^c\ge 0\), then for every eigenvalue \(\lambda \) of \(A/\alpha \), there exists \(1\le t\le n-k\) such that

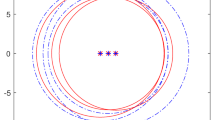

Next, we give a numerical example to estimate eigenvalues of the Schur complement with the entries of the original matrix to show the advantages of our results.

Example 4.1

Let

Then we get \(\bar{\alpha }=\{1,3,5\}\) and the following table.

| | \(P_{j_t}(A)\) | \(S_{j_t}(A)\) | \(w_{j_t}^r\) | \(w_{j_t}^c\) | \(w_{j_t}\) |
|---|---|---|---|---|---|
| \(j_1=1\) | 8 | 6 | 0.5511 | 3.5284 | 2.9329 |
| \(j_2=3\) | 11 | 11 | 3.3737 | 5.4547 | 5.0385 |
| \(j_3=5\) | 9 | 7 | 0.5511 | 3.8547 | 3.1940 |

By Theorem 4.1, every eigenvalue \(\lambda \) of \(A/\alpha \) lies in the union of the following three discs.

By Corollary 4.1, every eigenvalue \(\lambda \) of \(A/\alpha \) lies in the union of the following three discs.

Notice that \(\Gamma _2\) is not necessarily contained in \(\Gamma _1\). Since the second row is not diagonally dominant, the corresponding results in Cui et al. (2017); Liu and Huang (2010); Liu et al. (2010); Zhang et al. (2013) are not applicable. Since \(A(\alpha )\) is not diagonally dominant in row, the corresponding result in Zhou et al. (2022) is not applicable.

5 Bounds for determinants

Let \(\{j_1,j_2,\ldots ,j_n\}\) be a rearrangement of the elements in \(N=\{1,2,\ldots ,n\}\). Denote \(\alpha _1=\{j_n\},\alpha _2=\{j_{n-1},j_n\},\ldots ,\alpha _n=\{j_1,j_2,\ldots ,j_n\}=N\). Then \(\alpha _{n-k+1}- \alpha _{n-k}=\{j_k\}\), \(k=1,2,\ldots ,n\), with \(\alpha _0=\emptyset \). Let \(\mathcal {J}\) represent any rearrangement \(\{j_1,j_2,\ldots ,j_n\}\) of the elements in N with \(\alpha _1,\alpha _2,\ldots ,\alpha _n\) defined as above. By using disc separation of the Schur complement, Liu and Zhang (2005) presented the lower and upper bounds for determinants of the strictly diagonally dominant matrices as follows.

Theorem 5.1

Let \(A\in SD_n\). Then

and

In this section we present some upper and lower bounds for the determinants of the strictly \(\gamma \)-diagonally dominant matrices as an application of the new estimate of the \(\gamma \)-dominant degree of Schur complements.
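The nested index sets \(\alpha _0\subset \alpha _1\subset \cdots \subset \alpha _n\) attached to a rearrangement \(\{j_1,j_2,\ldots ,j_n\}\) at the beginning of this section can be generated mechanically. The sketch below is illustrative only (the function name `nested_index_sets` is hypothetical); it uses the rearrangement \(j_1=3\), \(j_2=1\), \(j_3=2\) and checks the relation \(\alpha _{n-k+1}-\alpha _{n-k}=\{j_k\}\).

```python
def nested_index_sets(order):
    """Return [alpha_0, alpha_1, ..., alpha_n] with alpha_m = {j_{n-m+1}, ..., j_n}.

    `order` is the rearrangement [j_1, ..., j_n]; alpha_0 is the empty set.
    """
    n = len(order)
    return [set(order[n - m:]) for m in range(n + 1)]

order = [3, 1, 2]                     # j_1 = 3, j_2 = 1, j_3 = 2
alphas = nested_index_sets(order)
n = len(order)
# alpha_{n-k+1} - alpha_{n-k} should be the singleton {j_k} for k = 1, ..., n.
differences = [alphas[n - k + 1] - alphas[n - k] for k in range(1, n + 1)]
print(alphas, differences)
```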

Theorem 5.2

Let \(A\in SD_n^{\gamma }\). Then

and

Proof

For the first inequality, since \(\alpha _{n-k}\) is contained in \(\alpha _{n-k+1}\) and \(\alpha _{n-k+1}- \alpha _{n-k}=\{j_k\}\), we have, by Corollary 3.3, for each k

Explicitly,

It follows that

This implies (5.1). The inequality (5.2) in the theorem is similarly proven. \(\square \)

We remark that if \(\gamma =1\), we obtain that

and

We can see that our results improve (Liu and Zhang 2005, Theorem 3) when \(\gamma =1\). Moreover, Theorem 5.2 has a wider range of applications.

Notice that when \(A\in SD_n^{\gamma }\), for any \(1\le k\le n\) and \(t\in \{k+1,k+2,\ldots ,n\}\),

We have the following claim immediately from the theorem.

Corollary 5.1

Let \(A\in SD_n^{\gamma }\). Then

and

where we let \(\sum \limits _{t=k+1}^n[\gamma |a_{j_kj_t}|+(1-\gamma )|a_{j_tj_k}|]=0\) when \(k=n\).
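The bounds of Corollary 5.1 can be evaluated for a concrete matrix. The sketch below assumes that they take the product form \(\prod _{k=1}^n\left( |a_{j_kj_k}|\mp \sum _{t=k+1}^n[\gamma |a_{j_kj_t}|+(1-\gamma )|a_{j_tj_k}|]\right) \), with the minus sign for the lower bound and the plus sign for the upper bound; this form is a reconstruction suggested by the sum in the closing convention above, and the function names, the 0-based indexing and the test matrix are illustrative.

```python
def det(M):
    """Determinant by Laplace expansion along the first row (fine for small matrices)."""
    if len(M) == 1:
        return M[0][0]
    return sum((-1) ** c * M[0][c] * det([row[:c] + row[c + 1:] for row in M[1:]])
               for c in range(len(M)))

def determinant_bounds(A, order, gamma):
    """Assumed product-form bounds for |det A| along the 0-based rearrangement `order`."""
    lower = upper = 1.0
    for k, jk in enumerate(order):
        # Weighted tail sum over the indices that come after j_k; empty (zero) for the last k.
        tail = sum(gamma * abs(A[jk][jt]) + (1 - gamma) * abs(A[jt][jk])
                   for jt in order[k + 1:])
        lower *= abs(A[jk][jk]) - tail
        upper *= abs(A[jk][jk]) + tail
    return lower, upper

# Illustrative matrix that is strictly gamma-diagonally dominant for gamma = 0.5.
A = [[4, -1, 1],
     [2, 5, -1],
     [-1, 1, 3]]
lower, upper = determinant_bounds(A, [0, 1, 2], 0.5)
print(lower, abs(det(A)), upper)
```

For this matrix and the natural ordering the sketch gives 18 and 117, which enclose \(|\det A|=76\); other rearrangements generally give different enclosing values.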

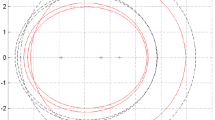

Example 5.1

Let \(n=3\) and take \(j_1=3\), \(j_2=1\) and \(j_3=2\). With \(\alpha _1=\{j_3\}=\{2\}\), \(\alpha _2=\{j_3,j_2\}=\{1,2\}\), and \(\alpha _3=\{j_3,j_2,j_1\}=\{1,2,3\}\), by Corollary 5.1, we have for any \(A\in SD_3^{\gamma }\),

and

Data availability

Not Applicable.

References

Carlson D, Markham T (1979) Schur complements of diagonally dominant matrices. Czech Math J 29(104):246–251

Cui J, Peng G, Lu Q, Huang Z (2017) The new improved estimates of the dominant degree and disc theorem for the Schur complement of matrices. Linear Multilinear Algebra 65:1329–1348

Cvetković L, Nedović M (2009) Special \(H\)-matrices and their Schur and diagonal-Schur complements. Appl Math Comput 208:225–230

Cvetković L, Nedović M (2012) Eigenvalue localization refinements for the Schur complement. Appl Math Comput 218:8341–8346

Gu J, Zhou S, Zhao J, Zhang J (2021) The doubly diagonally dominant degree of the Schur complement of strictly doubly diagonally dominant matrices and its applications. Bull Iran Math Soc 47:265–285

Horn RA, Johnson CR (1985) Matrix Analysis. Cambridge University Press, New York

Horn RA, Johnson CR (1990) Topics in Matrix Analysis. Cambridge University Press, New York

Li CK (2000) Extremal characterizations of the Schur complement and resulting inequalities. SIAM Rev 42:233–246

Li B, Tsatsomeros M (1997) Doubly diagonally dominant matrices. Linear Algebra Appl 261:221–235

Li GQ, Liu JZ, Zhang J (2017) The disc theorem for the Schur complement of two class submatrices with diagonally dominant properties. Numer Math Theor Meth Appl 10:84–97

Li C, Huang Z, Zhao J (2022) On Schur complements of Dashnic-Zusmanovich type matrices. Linear Multilinear Algebra 70:4071–4096

Liu JZ, Huang YQ (2004) Some properties on Schur complements of \(H\)-matrices and diagonally dominant matrices. Linear Algebra Appl 389:365–380

Liu JZ, Huang ZJ (2010) The Schur complements of \(\gamma \)-diagonally and product \(\gamma \)-diagonally dominant matrix and their disc separation. Linear Algebra Appl 432:1090–1104

Liu JZ, Zhang FZ (2005) Disc separation of the Schur complements of diagonally dominant matrices and determinantal bounds. SIAM J Matrix Anal Appl 27:665–674

Liu JZ, Huang YQ, Zhang FZ (2004) The Schur complements of generalized doubly diagonally dominant matrices. Linear Algebra Appl 378:231–244

Liu JZ, Huang ZJ, Zhang J (2010) The dominant degree and disc theorem for the Schur complement. Appl Math Comput 215:4055–4066

Liu JZ, Zhang J, Liu Y (2012) The Schur complement of strictly doubly diagonally dominant matrices and its application. Linear Algebra Appl 437:168–183

Liu JZ, Zhang J, Zhou LX, Tu G (2018) The Nekrasov diagonally dominant degree on the Schur complement of Nekrasov matrices and its applications. Appl Math Comput 320:251–263

Liu JZ, Xiong YB, Liu Y (2022) The closure property of the Schur complement for Nekrasove matrices and its applications in solving large linear systems with Schur-based method. Comput Appl Math 39:290

Ostrowski AM (1951) Über das Nichtverschwinden einer Klasse von Determinanten und die Lokalisierung der charakteristischen Wurzeln von Matrizen. Compositio Math 9:209–226

Sang C (2021) Schur complement-based infinity norm bounds for the inverse of DSDD matrices. Bull Iran Math Soc 47:1379–1398

Smith RL (1992) Some Interlacing Properties of the Schur Complement of a Hermitian Matrix. Linear Algebra Appl 177:137–144

Song X, Gao L (2023) On Schur Complements of Cvetković-Kostić-Varga Type Matrices. Bull Malays Math Sci Soc 46:49

Varga RS (2004) Geršgorin and his circles. Springer-Verlag, Berlin

Zhang FZ (2006) The Schur Complement and Its Applications. Springer, Berlin

Zhang C, Xu C, Li Y (2007) The eigenvalue distribution on Schur complements of \(H\)-matrices. Linear Algebra Appl 422:250–264

Zhang J, Liu JZ, Tu G (2013) The improved disc theorems for the Schur complements of diagonally dominant matrices. J Ineq Appl 2013:16

Zhou LX, Lyu ZH, Liu JZ (2022) The Schur Complement of \(\gamma \)-Dominant Matrices. Bull Iran Math Soc 48:3701–3725

Acknowledgements

The authors thank Dr. Shiyun Wang and Mr. Qi Li for their good suggestions which improve the presentation of this manuscript.

Funding

The work was supported by the Guangxi Provincial Natural Science Foundation of China (No. 2023GXNSFAA026514) and the National Natural Science Foundation of China (Nos. 12171323, 12301591).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

Not Applicable.

About this article

Cite this article

Lyu, Z., Zhou, L. & Ma, J. The \(\gamma \)-diagonally dominant degree of Schur complements and its applications. Comp. Appl. Math. 43, 342 (2024). https://doi.org/10.1007/s40314-024-02868-3
