Abstract

In this paper, several conditions are presented to keep the Schur complement via a non-leading principle submatrix of some special matrices including Nekrasov matrices being a Nekrasov matrix, which is useful in the Schur-based method for solving large linear equations. And we give some infinity norm bounds for the inverse of Nekrasov matrices and its Schur complement to help measure whether the classical iterative methods are convergent or not. At last, in the applications of solving large linear equations by Schur-based method, some numerical experiments are presented to show the efficiency and superiority of our results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In general, it is hard to obtain the exact solutions to all differential equations. Thus, variational method, finite difference method, and finite element method, etc. are applied to compute their numerical solutions. Then, the problem to solve some differential equations, such as the Euler equation, the elliptic equation and the Dirichlet problem (Elsner and Mehrmann 1991; Hadjidimos 1983), and so on often transforms into a problem of solving linear systems. Luckily, the coefficient matrix is usually a special matrix like diagonal dominant matrix, H-matrix or Nekrasov matrix, and so on (Forsythe 1953; Karlqvist 1952).

For instance, considering the two-point boundary value problem: (Ames 2014; Thomas 2013):

with \(u(a)=\alpha ,~u(b)=\beta \), and p is a first-order continuous differentiable function on the region [a, b], \(p(x)\ge p_{min}>0\), r, q, and f are continuous on the region [a, b], and \(\alpha \) and \(\beta \) are constant numbers. The two-point boundary problem is useful in automatic control, design of various electronic devices, calculation of trajectory, study of the stability of aircraft and missile flight, and study of the stability of chemical reaction process (Braun and Golubitsky 1983; Chicone 2006; Nieto and Rodríguez-López 2005). And when the direct differential method with uniform mesh is applied to the problem, we get a linear equations:

where \(h=\frac{b-a}{N}\), \(u_i=u(x_i)\), \(p_i=p(x_i)\), \(r_i=r(x_i)\), \(q_i=q(x_i)\), \(f_i=f(x_i)\), and \(a=x_0\), \(b=x_N\), \(x_{i}=x_{i-1}+h\), with \(x_{i-\frac{1}{2}}=\frac{1}{2}(x_{i-1}+x_i)\), \(i=1,2,\cdots , N\); for details, see Thomas (2013). In particular, consider the problem on the region [0, 10], if \(r=-3\), \(q=200\), and \(p(x)=0.01+0.005\sin (30\pi x+\omega )\), such that \(p_{\frac{2k-1}{2}}=0.01+0.005\cdot (-1)^{k}\), \(k=1,\cdots , N\). If we take \(N=10^{3}\), then the linear equations can be simplified as \(Ax=b\), where:

is not a strictly diagonally dominant matrix but a Nekrasov matrix. In general, an \(n\times n\) complex matrix \(A=(a_{ij})\) is a Nekrasov matrix Szulc (1995), simply for \(A\in {\mathbf {N}}_n\), if \(a_{ii}>R_i(A), i=1,2,\cdots ,n\), where

Nekrasov matrices are widely applied in linear system and control theory (Esnaola and Peña 2014; Pang and Li 2003). And many conclusions on Nekrasov matrices have been proposed, such as their properties of nonsingularity (Pang and Li 2003; Li 1998), the bounds for their determinant (Baily and Crabtree 1969; Yu 2015) and the infinity norm bounds for their inverse (Cvetkovic̆ et al. 2013, 2012; Gao et al. 2014), etc. On the other hand, Nekrasov matrices belong to H-matrices, which lead many classical iterative methods, such as Jacobi method, Gauss-Seidel method, SOR, SSOR, AOR, etc., to being convergent. In addition, Liu and Zhang et al. (Liu et al. (2018)) have proven that the Schur complement via a leading principle submatrix of Nekrasov matrix is still a Nekrasov matrix, and they also presented some bounds for the infinity norm of the inverse of the Schur complements and the determinants of Nekrasov matrices.

To solve linear equations \(Ax=b\), many classical iterative methods, such as Jacobi method, Gauss-Seidel method, SOR, SSOR, AOR, etc. Demmel (1997), have been proposed. However, if the coefficient matrix A is in large scale, the Schur-based method may be more efficient than using classical methods directly in some cases; see Example 5.1-5.3. Let us recall the Schur-based method; suppose P is a suitable permutation matrix, and then the linear system can be changed as \(PAP^{T}Px=Pb\), and partition the system as:

where \(A[\beta _1|\beta _2]\) is the submatrix of A whose rows and columns are determined by \(\beta _1\) and \(\beta _2\), respectively. If \(\beta _1=\beta _2\), \(A[\beta _1|\beta _2]\) is abbreviated as \(A[\beta _1]\). In addition, \(\alpha ^{c}\) is the complement set of \(\alpha \) via the natural set \(\{1,2,\cdots ,n\}\). Assume \(A[\alpha ]\) is nonsingular, then it is equivalent to solve the following two smaller equations (for details Liu and Zhang (2005); Liu et al. (2011)):

where \(A[\alpha ^{c}]-A[\alpha ^{c}|\alpha ](A[\alpha ])^{-1}A[\alpha |\alpha ^{c}]\) is just the Schur complement of A via \(A[\alpha ]\), abbreviated \(A/A[\alpha ]\), it can also be written as \(A/\alpha \). If \(\alpha =\{1,2,\cdots , s\}\), then \(A/\alpha \) is the Schur complement of A via its leading principle submatrix; otherwise, \(A/\alpha \) is the Schur complement of A via its non-leading principle submatrix. In general, the closure property of Nekrasov matrices in this paper means that there is a subset \(\alpha \), such that both \(A[\alpha ]\) and \(A/\alpha \) are Nekrasov matrices under some issues.

Since we need to compute the inverse of a principle submatrix when taking advantage of the Schur-based method, we would better make the principle submatrix to be sparse or simple. However, for a large Nekrasov matrix, according to Liu et al. (2018), both the leading principle submatrix of Nekrasov matrix and its Schur complement are Nekrasov matrices, and hence, we can apply the Schur-based methods to solve the linear equations by choosing the leading principle submatrix. Nevertheless, if there is a more simple sparse non-leading principle submatrix, such as a diagonal matrix, than the leading principle submatrix, we are more willing to choose the non-leading principle submatrix using the Schur-based method. For instance, consider a large-scaled Nekrasov matrix A in (1), and then, we are willing to use Schur-based method to solve \(Ax=b\) for reduction in size. Even the leading principle submatrix of A is a tridiagonal matrix, but observed that the submatrix formed with the odd rows and columns is a diagonal matrix, i.e., let \(\beta =\{1,3,5,9,\cdots ,N-1\}\), and then, \(A[\beta ]=diag(4,4,\cdots ,4)\), which must be more easy to compute its inverse. And then, it is cheaper to solve the large linear equations by choosing the non-leading principle submatrix \(A[\beta ]\) with Schur-based method, that will be verified in Example 5.3 later.

Unfortunately, the Schur complement via a non-leading principle submatrix of Nekrasov matrices may be not a Nekrasov matrix again. For example, given a Nekrasov matrix \(A=\begin{pmatrix}100&{}0&{}10\\ -10&{}20&{}0\\ 9&{}1&{}1 \end{pmatrix}\), let \(\alpha _1=\{3\}\), then  is clearly not a Nekrasov matrix. However, for another Nekrasov matrix \(B=\begin{pmatrix} 8&{}1&{}1&{}0\\ 12&{}8&{}0&{}1\\ 5&{}7&{}8&{}0\\ 16&{}7&{}0&{}8 \end{pmatrix}\), let \(\alpha _2=\{3,4\}\), and then, \(B/ \alpha _2=\left( \begin{array}{cc}7.375&{}0.125\\ 10&{}7.125 \end{array}\right) \) is a Nekrasov matrix. Therefore, we are motivated to find some restrictive conditions to keep the Schur complement via a non-leading principle submatrix of a Nekrasov matrix still being a Nekrasov matrix. In this way, we extend the conclusion in Liu et al. (2018), and for Nekrasov linear equations, we could use the Schur-based method in more ways, which avoid the awkward situation of choosing only the leading principle submatrix.

is clearly not a Nekrasov matrix. However, for another Nekrasov matrix \(B=\begin{pmatrix} 8&{}1&{}1&{}0\\ 12&{}8&{}0&{}1\\ 5&{}7&{}8&{}0\\ 16&{}7&{}0&{}8 \end{pmatrix}\), let \(\alpha _2=\{3,4\}\), and then, \(B/ \alpha _2=\left( \begin{array}{cc}7.375&{}0.125\\ 10&{}7.125 \end{array}\right) \) is a Nekrasov matrix. Therefore, we are motivated to find some restrictive conditions to keep the Schur complement via a non-leading principle submatrix of a Nekrasov matrix still being a Nekrasov matrix. In this way, we extend the conclusion in Liu et al. (2018), and for Nekrasov linear equations, we could use the Schur-based method in more ways, which avoid the awkward situation of choosing only the leading principle submatrix.

Furthermore, in problems of solving a linear system \(Ax=b\), the bound of \(\Vert A^{-1}\Vert _{\infty }\) plays a significant role. First, \(\Vert A^{-1}\Vert _{\infty }\) determines the condition number of A, that measure the error bounds of the solutions. Second, it helps estimate the spectrum radius of A, which is usually used to predict whether the classical iterative methods are convergent or not. Thus, if we use the Schur-based method to solve large-scale linear equations, for predicting the convergence of the classical iterative methods, we need to estimate \(\Vert (A[\alpha ])^{-1}\Vert _{\infty }\) and \(\Vert (A/\alpha )^{-1}\Vert _{\infty }\), where \(\alpha \subset \{1,2,\cdots ,n\}\). The former is easy to obtain by existed upper bounds, so we will put more emphasis on the latter one.

In this paper, we give several conditions such that the Schur complement of a Nekrasov matrix is still a Nekrasov matrix. And one of the conditions is established naturally when it is acted on the leading principle submatrix of Nekrasov matrices, which generalizes the conclusion of Theorem 2.1 in Liu et al. (2018). In addition, the bounds of \(\Vert (A/\alpha )^{-1}\Vert _{\infty }\) are presented with initial elements of A, bringing much convenience in computing. Excitedly, for a large-scale Nekrasov matrix A, even the bound of \(\Vert A^{-1}\Vert _{\infty }\) is lost to its exact value and much more than 1, but the bounds of \(\Vert (A[\alpha ])^{-1}\Vert _{\infty }\) and \(\Vert (A/\alpha )^{-1}\Vert _{\infty }\) are much more accuracy, which shows that the classical iterative methods are convergent to solve the smaller Eqs. (2) and (3). In the end, some numerical experiments of the applications in solving large linear equations with Schur-based method are presented to show the superiority of our results.

The rest of the paper is organized as follows. In Sect. 2, we introduce some definitions and lemmas. In Sect. 3, we present our main results on the Schur complement of some special matrices including Nekrasov matrices. In Sect. 4, some infinity norm upper bounds for the inverse of the Schur complements of Nekrasov matrices are presented. And in Sect. 5, we present some numerical experiments to show the effectiveness in the applications of solving large linear equations with Schur-based method by choosing a non-leading principle submatrix in some cases.

2 Preliminaries

To begin with our idea, we would present some designment about the symbols, \(A=(a_{ij})\in {\mathbb {C}}^{n\times n}\) \(({\mathbb {R}}^{n\times n})\) implies A is an \(n\times n\) complex (real) matrix, and \(A\ge ~(>) ~0\) means that A is a nonnegative (positive) matrix. In addition, \(|A|=(|a_{ij}|)\) is the matrix with all elements being its own length of that in A, and \(\rho (A)\) is the spectral radius of A. Let \({\mathbf {Z}}_n\) be the set for all matrices whose nondiagonal elements are nonpositive and we denote \({\mathbb {N}}=\{1,2,\cdots ,n\}\).

Definition 2.1

Zhang (2005) Let \(\alpha \subset {{\mathbb {N}}}\) be arranged in increasing sequence, \(\alpha ^{c}\) is the complement set of \(\alpha \) in \({{\mathbb {N}}}\), and \(A[\alpha |\alpha ^{c}]\) implies the submatrix of A with its rows and columns indicated by \(\alpha \) and \(\alpha ^{c}\), respectively. In particular, we simplify \(A[\alpha |\alpha ]\) for \(A[\alpha ]\), and if \(A[\alpha ]\) is nonsingular, the Schur complement of A with respect to \(A[\alpha ]\) is denoted by \(A/A[\alpha ]\) or simply \(A/\alpha \) to be:

If there is no specific explanation, in the rest of the paper, all sets are arranged in increasing sequence. Next, we would draw on some of the definitions and conclusions in Berman and Plemmons (1979).

Definition 2.2

Berman and Plemmons (1979) Let \(A=(a_{ij})\in {\mathbb {C}}^{n\times n}\), and denote \(P_i(A)=\sum \limits _{j\ne i}|a_{ij}|\); if

then A is a diagonal dominant matrix, denote \(A\in {\mathbf {D}}_n\). While if the inequalities are all held strictly, then A is said to be a strictly diagonal dominant matrix, and denote \(A\in {\mathbf {SD}}_n\). If there exists a positive diagonal dominant matrix D, such that \(AD\in {\mathbf {SD}}_n\), then A is a generalized diagonal dominant matrix.

Definition 2.3

Berman and Plemmons (1979) A matrix \(A\in {\mathbb {R}}^{n\times n}\) is an M-matrix if and only if \(A=sI-B\), where \(B\ge 0\) and \(s\ge \rho (B)\). In particular, A is a nonsingular M-matrix, if and only if \(s>\rho (B)\), simply for \(A\in {\mathbf {M}}_n\).

Actually, \(A\in {\mathbf {Z}}_n\) is a nonsingular M-matrix if and only if \(A^{-1}\ge 0\).

Definition 2.4

Berman and Plemmons (1979) For \(A=(a_{ij})\in {\mathbb {C}}^{n\times n}\), we define its comparison matrix \(\mu (A)=(\tilde{a}_{ij})\in {\mathbb {R}}^{n\times n}\) by \(\tilde{a}_{ii}=|a_{ii}|\), \(\tilde{a}_{ij}=-|a_{ij}|, ~i\ne j, ~i,j\in {{\mathbb {N}}}\). If \(A=\mu (A)\) is a nonsingular M-matrix, then A is an H-matrix, simply for \(A\in {\mathbf {H}}_n\).

Lemma 2.1

Horn and Johnson (1991) Let A be an H-matrix, and then, \(|A^{-1}|\le \{\mu (A)\}^{-1}\).

For the fact that \({\mathbf {N}}_n\subset {\mathbf {H}}_n\) Ostrowski (1937), if \(A\in {\mathbf {N}}_n\), \(|A^{-1}|\le \{\mu (A)\}^{-1}\).

Lemma 2.2

Let \(a>b>c>0\), and then, \(\frac{b-c}{a-c}\ge \frac{b}{a}\).

Proof

For simple computation, \(\frac{b-c}{a-c}=\frac{a(b-c)}{a(a-c)}\le \frac{ab-bc}{a(a-c)}=\frac{b}{a}\). \(\square \)

3 The main results

In this section, we would present three conditions to keep both the principles submatrix and its Schur complement being Nekrasov matrices for some special matrices including Nekrasov matrices. Since we need deal with the inverse matrix in the definition of the Schur complement, we will first present a lemma that would be used in the following theorems.

Lemma 3.1

Let \(A=(a_{ij})\in {\mathbb {C}}^{n\times n}\) be a Nekrasov matrix. Suppose \(D=diag(d_1,\cdots ,d_n)\), where \(d_i=\frac{R_i(A)+\tilde{\varepsilon _i}}{|a_{ii}|}\), and \(\tilde{\varepsilon }_i>\sum \nolimits _{j=1}^{i-1}\frac{|a_{ij}|}{|a_{jj}|}\tilde{\varepsilon }_{j}\ge 0\), with \(\tilde{\varepsilon _i}<|a_{ii}|-R_i(A)\), \(i\in {{\mathbb {N}}}\). If \(\mu (A)Dx=b\), where \(0\le b\in {\mathbb {R}}^{n}\), then:

that is:

Proof

It is obvious that \(0<d_i=\frac{R_i(A)+\tilde{\varepsilon }_i}{|a_{ii}|}<1, i=1,2,\cdots ,n\), and thus, for any \(i\in {{\mathbb {N}}}\):

Hence, \(AD\in {\mathbf {SD}}_n\). According to Lemma 2.1, \(x=D^{-1}\{\mu (A)\}^{-1}b\ge D^{-1}|A^{-1}|b \ge 0\). Suppose \(\Vert x\Vert _{\infty }=x_p\), and consider the pth row of the equation:

since \(AD\in {\mathbf {SD}}_n\), so \(|a_{pp}|d_p-\sum \nolimits _{j\ne p}|a_{pj}|d_j>0\). Then, by (6), we have:

which illustrates:

Then, the proof is completed. \(\square \)

Remark 3.1

Observed that \(\{\tilde{\varepsilon _t}\}_{1\le t\le n}\) in Lemma 3.1 are just for the positivity of the right hand in (6). Thus, if we can get rid of the numbers \(\{\tilde{\varepsilon _t}\}_{1\le t\le n}\) to compute the infinity norm bound in (4), the results would be simple and perfect. In fact, if \(b_h=0\), then \(\frac{b_h}{R_h(A)+\tilde{\varepsilon }_h-\sum \nolimits _{t\ne h}|a_{ht}|\frac{R_t(A)+\tilde{\varepsilon }_t}{|a_{tt}|}}=0\), and it could not be the maximum value. Therefore, if \(R_h(A)-\sum \nolimits _{t\ne h}|a_{ht}|\frac{R_t(A)}{|a_{tt}|}=0\) implies \(b_h=0\), we just denote \(\frac{b_h}{R_h(A)-\sum \nolimits _{t\ne h}|a_{ht}|\frac{R_t(A)}{|a_{tt}|}}=0\), and then, we could get rid of bringing in the numbers \(\{\tilde{\varepsilon _t}\}_{1\le t\le n}\). In this case, we set all numbers \(\{\tilde{\varepsilon _t}\}_{1\le t\le n}\) to be zero.

Next, we will give a restrictive condition for some special matrices, such that their Schur complement is a Nekrasov matrix.

Theorem 3.1

Let \(A\in {\mathbb {C}}^{n\times n}\), and \(A[\alpha ]\in {\mathbf {N}}_s\), where \(\alpha =\{i_1,\cdots ,i_s\}\), and \(\alpha ^{c}=\{j_1,\cdots ,j_{n-s}\}\), suppose \(\varepsilon _t>\sum \nolimits _{k=1}^{t-1}\frac{|a_{i_ti_k}|}{|a_{i_ki_k}|}\varepsilon _k\ge 0\), with \(0<\varepsilon _{i_k}<|a_{i_ki_k}|-R_k(A[\alpha ])\). If, for all \(u=1,\cdots ,n-s:\)

where

then, \(A/\alpha \) is a Nekrasov matrix.

Proof

Let \(A/\alpha =(a'_{pq})\in {\mathbb {C}}^{(n-s)\times (n-s)}\), and then, according to Definition 2.1:

As \(A[\alpha ]\in {\mathbf {N}}_s\), so \(|(A[\alpha ])^{-1}|\le \{\mu (A[\alpha ])\}^{-1}\) by Lemma 2.1, then:

where \(\Delta _{pq}=(|a_{j_pi_1}|,\cdots ,|a_{j_pi_s}|)\{\mu (A[\alpha ])\}^{-1}(|a_{i_1j_q}|, \cdots ,|a_{i_sj_q}|)^{T}\). Furthermore, if we denote a positive diagonal matrix \(D_{\alpha }=diag(\frac{R_1(A[\alpha ])+\varepsilon _1}{|a_{i_1i_1}|},\cdots , \frac{R_s(A[\alpha ])+\varepsilon _s}{|a_{i_si_s|}}),\) by (5) in Lemma 3.1, we obtain:

Next, we shall prove that \(A/\alpha \) is a Nekrasov matrix by definition with mathematical induction. For \(u=1\):

Suppose for \(1\le u\le p-1\):

then, for \(u=p\):

Thus, \(A/\alpha \) is a Nekrasov matrix by definition. And the proof is completed. \(\square \)

There is an example for demonstrating and verifying Theorem 3.1. Furthermore, for a matrix \(A=(a_{ij})\in {\mathbb {C}}^{n\times n}\), we would take \(\{\varepsilon _t\}_{1\le t\le n}\) as follows if necessary:

Suppose \(\varepsilon _n=L\varepsilon _1\), and then, let \(\varepsilon _1=\frac{\min \limits _{1\le i\le n}\{a_{ii}-R_i(A)\}}{2L}\).

Example 3.1

Let \(A=\begin{pmatrix} 3 &{}0.03 &{}2 &{}0&{} 0.9\\ 1 &{}2.2 &{}0 &{}1&{} 0.5\\ 1 &{}0.005 &{}2 &{}0.02&{} 1\\ 0.2&{}0 &{}1 &{}1.8&{} 0.7\\ 3 &{}0 &{}1 &{}0.005&{} 4\\ \end{pmatrix};\) it is obvious that \(A\notin {\mathbf {N}}_5\). However, if \(\alpha =\{1,3,5\}\), \(A[\alpha ]\in {\mathbf {N}}_3\). In addition, according to Theorem 3.1:

and

besides: \({\mathfrak {R}}_2(A)=2.1913<a_{22}, ~{\mathfrak {R}}_4(A)=1.5245<a_{44}.\) Hence, by Theorem 3.1, \(A/\alpha \in {\mathbf {N}}_2\). In fact, by simple computation, we have:

it is easy to see \(A/\alpha \in {\mathbf {N}}_2\). Hence, this example simply clarifies the above theorem.

It is easy to see that the matrix A in Theorem 3.1 needs not to be a Nekrasov matrix, as long as there exist \(\alpha \subset {{\mathbb {N}}}\), such that \(A[\alpha ]\in {\mathbf {N}}_s\) with the condition (8) held; we have \(A/\alpha \in {\mathbf {N}}_{n-s}\). Furthermore, if the rows with the index in \(\alpha \) are all strictly diagonal dominant, the conclusion in Theorem 3.1 stands still.

Corollary 3.1

Let \(A\in {\mathbb {C}}^{n\times n}\); if \(\alpha =\{i_1,\cdots ,i_s\}\), and \(\alpha ^{c}=\{j_1,\cdots ,j_{n-s}\}\) such that \(|a_{i_ti_t}|>R_{i_t}(A), ~t=1,\cdots ,s\), with (8) and (9) held, and \(\varepsilon _t\) are defined the same as that in Theorem 3.1. Then, \(A/\alpha \in {\mathbf {N}}_{n-s}\).

Proof

The proof is similar to that of Theorem 3.1, because \(A[\alpha ]\in {\mathbf {N}}_s\). \(\square \)

If A is a Nekrasov matrix, the conclusion also holds.

Corollary 3.2

Let \(A\in {\mathbf {N}}_n\), \(\alpha =\{i_1,\cdots ,i_s\}\), and \(\alpha ^{c}=\{j_1,\cdots ,j_{n-s}\}\); if (8) and (9) are held, and \(\varepsilon _t\) are defined the same as that in Theorem 3.1, then \(A/\alpha \in {\mathbf {N}}_{n-s}\).

Proof

Since \(A\in {\mathbf {N}}_n\), there must be a fact that \(A[\alpha ]\in {\mathbf {N}}_s\), and then, the proof is completed. \(\square \)

Actually, if A is a Nekrasov matrix, there is a more precise conclusion.

Theorem 3.2

Let \(A\in {\mathbf {N}}_n\), let \(\alpha =\{i_1,\cdots , i_s\}\), \(\alpha ^{c}=\{j_1,\cdots ,j_{n-s}\}\), and then, \(A/\alpha \in {\mathbf {N}}_{n-s}\) if (i) or (ii) holds for all \(j_u\in \alpha ^{c}\):

where

in particular, if \(R_{i_h}(A) -\sum \nolimits _{t\ne h} |a_{i_hi_t}|\frac{R_{i_t}(A) }{|a_{i_ti_t}|}=0, ~i_h\in \alpha \), there must be \(\sum \nolimits _{t=1}^{n-s}|a_{i_hj_t}|=0\); in this case, just design \(\frac{\sum \nolimits _{t=1}^{n-s}|a_{i_hj_t}|}{R_{i_h}(A) -\sum \nolimits _{t\ne h} |a_{i_hi_t}|\frac{R_{i_t}(A) }{|a_{i_ti_t}|}}=0\).

Proof

-

(i)

As A is a Nekrasov matrix, \(\frac{R_i(A)}{|a_{ii}|}<1, i=1,2,\cdots ,n\), thus \(R_{i_h}(A)\ge \sum \nolimits _{t\ne h} |a_{i_hi_t}|\frac{R_{i_t}(A) }{|a_{i_ti_t}|}\), and if \(R_{i_h}(A)-\sum \nolimits _{t\ne h}|a_{i_hi_t}|\frac{R_{i_t}(A) }{|a_{i_ti_t}|}=0\), there must be that \(\sum \nolimits _{t=1}^{n-s}|a_{i_hj_t}|=0\). Hence, if (11) holds, similar to the proof of Theorem 3.1, \(A/\alpha \) is a Nekrasov matrix.

-

(ii)

While if (12) holds, as \(A\in {\mathbf {N}}_n\), by Lemma 2.1, \(|A^{-1}|\le \{\mu (A)\}^{-1}\). Let \(A/\alpha =(a'_{pq})\), and so, \(a'_{pq}=a_{j_pj_q}-(a_{j_pi_1},\cdots ,a_{j_pi_s}) (A[\alpha ])^{-1}(a_{i_1j_q},\cdots ,a_{i_sj_q})^{T}\), then

$$\begin{aligned} |a_{j_pj_q}|-\Delta _{pq} \le |a'_{pq}|\le |a_{j_pj_q}|+\Delta _{pq}, \end{aligned}$$where \(\Delta _{pq}=(|a_{j_pi_1}|,\cdots ,|a_{j_pi_s}|)\{\mu (A[\alpha ])\}^{-1}(|a_{i_1j_q}|,\cdots , |a_{i_sj_q}|)^{T}\). Indeed, by Lemma 3.1 and Remark 3.1, for any \(p=1,2,\cdots , n-s\):

$$\begin{aligned} \sum \limits _{t=1}^{n-s}\Delta _{pt}&=(|a_{j_pi_1}|,\cdots ,|a_{j_pi_s}|)D_sD_s^{-1}\{\mu (A[\alpha ])\}^{-1}\begin{pmatrix}\sum \limits _{t=1}^{n-s}|a_{i_1j_t}|\\ \vdots \\ \sum \limits _{t=1}^{n-s}|a_{i_sj_t}|\\ \end{pmatrix}\\&\le (|a_{j_pi_1}|\frac{R_{i_1}(A)}{|a_{i_1i_1}|},\cdots ,|a_{j_pi_s}|\frac{R_{i_s}(A)}{|a_{i_si_s}|})\cdot M_0\begin{pmatrix}1\\ \vdots \\ 1\end{pmatrix}\\&\le M_0\sum \limits _{t=1}^{s}|a_{j_pi_t}|\frac{R_{i_t}(A)}{|a_{i_ti_t}|}, \end{aligned}$$where \(D_s=diag(\frac{R_{i_1}(A)}{|a_{i_1i_1}|},\cdots ,\frac{R_{i_s}(A)}{|a_{i_si_s}|})\). Hence, for all \(p=1,2,\cdots ,n-s\), we obtain:

$$\begin{aligned} |a_{j_pj_p}|-\Delta _{pp}>&R_{j_p}(A)-M_0 \sum \limits _{t=1}^{s}|a_{j_pi_t}|\frac{R_{i_t}(A)}{|a_{i_ti_t}|} \ge {\mathcal {R}}_{j_p}(A)-M_0\sum \limits _{t=1}^{s}|a_{j_pi_t}|\frac{R_{i_t}(A)}{|a_{i_ti_t}|}\ge 0. \end{aligned}$$Then, by absolute inequalities and Lemma 2.2, for \(p=1\):

$$\begin{aligned} \frac{R_1(A/\alpha )}{|a'_{11}|}=&\frac{\sum \limits ^{n-s}_{t=2}|a_{j_1j_t}-\Delta _{1t}|}{|a_{j_1j_1}-\Delta _{11}|}\le \frac{\sum \limits ^{n-s}_{t=2}(a_{j_1j_t}+\Delta _{1t})}{|a_{j_1j_1}|-\Delta _{11}} =\frac{\sum \limits ^{n-s}_{t=2}|a_{j_1j_t}|+\sum \limits ^{n-s}_{t=1}\Delta _{1t}-\Delta _{11}}{|a_{j_1j_1}|-\Delta _{11}}\\ \le&\frac{\sum \limits ^{n-s}_{t=2}|a_{j_1j_t}|+M_0\sum \limits _{t=1}^{s}|a_{j_1i_t}|\frac{R_{i_t}(A)}{|a_{i_ti_t}|}-\Delta _{11}}{|a_{j_1j_1}|-\Delta _{11}} \le \frac{R_{j_1}(A)-\Delta _{11}}{|a_{j_1j_1}|-\Delta _{11}}\le \frac{R_{j_1}(A)}{|a_{j_1j_1}|}<1, \end{aligned}$$suppose for \( 2\le p\le u-1\):

$$\begin{aligned} \frac{R_p(A/\alpha )}{|a'_{pp}|}\le \frac{R_{j_p}(A)}{|a_{j_pj_p}|}<1; \end{aligned}$$then, for \(p=u\):

$$\begin{aligned} \frac{R_u(A/\alpha )}{|a'_{uu}|}&=\frac{\sum \limits ^{u-1}_{t=1}|a'_{ut}|\frac{R_t(A/\alpha )}{|a'_{tt}|}+\sum \limits ^{n-s}_{t=u+1}|a'_{ut}|}{|a'_{uu}|}\\&\le \frac{{\sum \limits _{t = 1}^{u - 1} {\left( {|{a_{{j_u}{j_t}}}| + {\Delta _{ut}}} \right) } \frac{{{R_{{j_t}}}(A)}}{{|{a_{{j_t}{j_t}}}|}} + \sum \limits _{\mathrm{{t}} = u + 1}^{n - s} {\left( {|{a_{{j_uj_t}}}| + {\Delta _{ut}}} \right) } }}{{|{a_{{j_u}{j_u}}}| - {\Delta _{uu}}}}\\&\le \frac{{\sum \limits _{t = 1}^{u - 1} | {a_{{j_u}{j_t}}}|\frac{{{R_{{j_t}}}(A)}}{{|{a_{{j_t}{j_t}}}|}} + \sum \limits _{t = u + 1}^{n - s} | {a_{{j_u}{j_t}}}| + \sum \limits _{t = 1}^{u - 1} {{\Delta _{ut}}} + \sum \limits _{t = u + 1}^{n - s} {{\Delta _{ut}}} }}{{|{a_{{j_u}{j_u}}}| - {\Delta _{uu}}}}\\&=\frac{\sum \limits ^{u-1}_{t=1}|a_{j_uj_t}|\frac{R_{j_t}(A)}{|a_{j_tj_t}|}+\sum \limits ^{n-s}_{t=u+1}|a_{j_uj_t}|+\sum \limits _{t=1}^{n-s}\Delta _{ut}-\Delta _{uu}}{|a_{j_uj_u}|-\Delta _{uu}}\\&\le \frac{R_{j_u}(A)-\Delta _{uu}}{|a_{j_uj_u}|-\Delta _{uu}} \le \frac{R_{j_u}(A)}{|a_{j_uj_u}|}<1; \end{aligned}$$so, \(A/\alpha \) is a Nekrasov matrix. Then, the proof is finished.

\(\square \)

Actually, if \(\alpha =\{1, 2, \cdots , k\}\), (12) is naturally established, then \(A/\alpha \) is a Nekrasov matrix. And in this case, the theorem is a generalization of that in Liu et al. (2018).

Corollary 3.3

Let \(A\in {\mathbf {N}}_n\) and \(\alpha =\{1,2,\cdots ,s\}\), and then, \(A/\alpha \in {\mathbf {N}}_{n-s}\).

Proof

If \(\alpha =\{1,2,\cdots ,s\}\), consider the inequalities in (12), in this situation, \(M_0=1\), and for \(u=s+1,\cdots ,n\):

Hence,if \(\alpha =\{1,2,\cdots ,s\}\), (12) is established naturally, then by Theorem 3.2, the proof is completed. \(\square \)

4 Upper bounds of \(\Vert (A/\alpha )^{-1}\Vert _{\infty }\)

In this section, we would first estimate the infinity norm bound for the inverse of Nekrasov matrices and its Schur complements, which could be used to predict whether the classical iterative methods are suitable for the smaller equations (2) and (3). In general, the classical iterative methods for linear systems are convergent if and only if the spectrum radius of the inverse of iterative matrix is strictly less than 1. Furthermore, the spectrum radius of the inverse of iterative matrix can be measured by the infinity norm of the inverse of the matrix, and the smaller the spectrum radius of the inverse of iterative matrix is, the faster the classical methods are when they are applied to the system. However, it is difficult to compute the infinity norm bounds for the inverse of Nekrasov matrices; we usually try to estimate them with upper bounds expressed by the initial elements of the original matrices.

Lemma 4.1

Cvetkovic̆ et al. (2012) Let \(A=(a_{ij})\in {\mathbb {C}}^{n\times n}\) be a Nekrasov matrix; then:

and

where

Lemma 4.2

Liu et al. (2018) Let M be a nonsingular matrix and m be a real number; for any vectors x and y, we have:

Based on Lemma 4.1 and Lemma 4.2, we get an infinity norm upper bound for the inverse of the Schur complement.

Theorem 4.1

Let \(A=(a_{ij})\in {\mathbb {C}}^{n\times n}\); if \(A[\alpha ]\in {\mathbf {N}}_s\), where \(\alpha =\{i_1,i_2,\cdots ,i_s\}\subset {{\mathbb {N}}}\), and \(\alpha ^{c}=\{j_1,j_2,\cdots ,j_{n-s}\}\) with (8) held, and \(\varepsilon _i\) are obedient to that in Theorem 3.1, then:

where

M and \({\mathfrak {R}}_{j_t}\) are defined as that in Theorem 3.1, and

Proof

By Theorem 3.1, \(A/\alpha =(a'_{uv})\in {\mathbf {N}}_{n-s}\) and \(|a'_{tt}|-R_t(A/\alpha )<|a_{j_tj_t}|-{\mathfrak {R}}_{j_t}(A))\). Thus:

On the other hand, \(\zeta _1(A/\alpha )=1\) and by Lemma 4.2:

for all \(p=1,2,\cdots ,n-s\). Then, the proof is completed. \(\square \)

Actually, if A is a Nekrasov matrix, we could get a more precise bound.

Corollary 4.1

If \(A\in {\mathbf {N}}_n\) with (11) or (12) held, \(\alpha =\{i_1,i_2,\cdots ,i_s\}\) and \(\alpha ^{c}=\{j_1,j_2,\cdots ,j_{n-s}\}\), then:

where \(\zeta _t(A/\alpha )\) is defined as that in Theorem 4.1; \({\mathcal {R}}_{j_t}(A))\) is defined as that in Theorem 3.2.

Proof

The proof is similar to that of Theorem 4.1; since \({\mathcal {R}}_{j_t}(A)\le {\mathfrak {R}}_{j_t}(A)\), we get a more precise bound for the inverse of the Schur complement. \(\square \)

Theorem 4.2

Let \(A\in {\mathbf {N}}_n\) with (11) or (12) standing, and suppose \(\alpha =\{i_1,\cdots ,i_s\}\) and \(\alpha ^{c}=\{j_1,\cdots ,j_{n-s}\}\), then:

where \(\zeta _t(A/\alpha )\) and \(A_{tt}\) are defined as that in Theorem 4.1 and \({\mathcal {R}}_{j_u}(A)\) is defined as that in Theorem 3.2.

Proof

Since \(A/\alpha \in {\mathbf {N}}_{n-s}\) from Theorem 3.2, let \(A/\alpha =(a'_{uv})\), by (13) and the proof of Theorem 3.2:

Then, the proof is completed. \(\square \)

Lemma 4.3

Li et al. (2016) Let \(A\in {\mathbf {N}}_n\), and then, for \(\mu _1>\frac{R_1(A)}{|a_{11}|}\), and \(\mu _2>\frac{R_1(A)}{|a_{11}|}\):

and

where \(z_i(A)\) are defined the same as that in Lemma 4.1.

In addition, it has been shown in Li et al. (2016) that the parameters would better be taken as:

By Lemma 4.3, we obtain a new infinity norm upper bound for the inverse of the Schur complement.

Theorem 4.3

Let \(A=(a_{ij})\in {\mathbf {N}}_n\) with (11) or (12) established, suppose \(\alpha =\{i_1,i_2,\cdots ,i_s\}\subset {{\mathbb {N}}}\), and \(\alpha ^{c}=\{j_1,j_2,\cdots ,j_{n-s}\}\), and then, for \(\mu '>\frac{{\mathcal {R}}_{j_1}(A)}{|a_{j_1j_1}|}\):

where \(\zeta _t(A/\alpha )\) and \(A_{11}\) are defined as that in Theorem 4.1, \(M_0\) and \({\mathcal {R}}_{j_u}(A)\) are defined in Theorem 3.2.

Proof

It has been proved in Theorem 3.2 that \(A/\alpha \in {\mathbf {N}}_s\), and let \(A/\alpha =(a'_{uv})\); then, by (19), for \(\mu '>\frac{{\mathcal {R}}_1(A/\alpha )}{|a'_{11}|}\):

actually, if \(\mu '>\frac{{\mathcal {R}}_{j_1}(A)}{|a_{j_1j_1}|}\):

On the other hand, \(\zeta _1(A/\alpha )=1\), and by Lemma 4.2:

for all \(p=1,2,\cdots ,n-s\). Then, the proof is completed. \(\square \)

5 Applications in solving large linear systems with Schur-based method

In this section, we will give several numerical experiments to demonstrate the applications of the closure property in solving large linear systems with Schur-based method. All numerical experiments are finished with Matlab \(\hbox {R}2016a\) on a computer with Intel(R) Core(TM) \(\hbox {i}5-6500\) CPU @ \(3.2\hbox {GHz}\). And, the superiority of our results are presented is some cases.

In general, if there is a symmetric Nekrasov matrix A that satisfies Theorem 3.1 and Theorem 3.2 for some \(\alpha \), then we can get upper bounds for \(\Vert A^{-1}\Vert _{\infty }\) by (13), (14), (18), and (19); in addition, we can also obtain the upper bounds for \(\Vert (A/\alpha )^{-1}\Vert _{\infty }\) by (15), (16), (17), and (20). Furthermore, for solving linear equations \(Ax=b\), we can apply Conjugate Gradient (CG) method, Successive Overrelaxation (SOR) method, Symmetric Successive Overrelaxation (SSOR) method, and Accelerated Overrelaxation (AOR) method. And if we apply Schur-based method, those classical iterative method can be also applied to the smaller two equations (2) and (3), and we name them as Schur-based CG (S-CG) method, S-SOR method, S-SSOR method, and S-AOR method, respectively. And for better comparing the results, the tolerable errors of theses classical iterative methods are all set as \(10^{-6}\).

Example 5.1

Suppose \(A=\left( \begin{array}{lll}A_1&{}A_2&{}A_3\\ A_4&{}A_5&{}A_6\\ A_7&{}A_8&{}A_9 \end{array}\right) \in {\mathbb {C}}^{n\times n}\), where \(n=3k\), \(k\ge 1\) is an integer and \(A_i\in {\mathbb {R}}^{k\times k}\), \(i=1,2,\cdots ,9\). Let \(b=(b_j)\in {\mathbb {R}}^{n}\), where \(b_j=j\cdot (-1)^j\), \(j\in {{\mathbb {N}}}\). Assume \(A_6=A_8={\mathbf {0}}\), and \(A_2=A_4^{T}\), \(A_3=A_7^{T}\) are taken as follows:

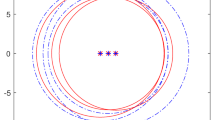

It is not hard to see A is always a symmetric Nekrasov matrix when \(n\ge 3\). Thus, we can use CG method, SOR method, SSOR method, and AOR method directly to solve \(Ax=b\). If we take \(\alpha _1=\{1,2,\cdots ,k\}\) and \(\alpha _2=\{k+1,k+2,\cdots ,2k\}\), then the matrix A with \(\alpha _2\) satisfied the control conditions (8) and (12), and by Corollary 3.2 and Theorem 3.2, we have \(A/\alpha _2\in {\mathbf {N}}_{2k}\), and thus, we can use the Schur-based methods described before by choosing \(\alpha _2\) to solve the linear equations. On the other hand, according to Theorem 2.1 in Liu et al. (2018), i.e., the Corollary 3.3 in this paper, we are sure \(A/\alpha _1\in {\mathbf {N}}_{2k}\), and then, we can also use these Schur-based methods by choosing \(\alpha _1\). However, when we use the upper bounds in Lemma 4.1 to estimate the infinity norm of the inverse of A, we find that the estimations are far from the exact values of \(\Vert A^{-1}\Vert _{\infty }\), see left of Fig. 1.

From the bounds for the inverse of Nekrasov matrices in Lemmas 4.1 and 4.3 in Fig. 1, \(\Vert A^{-1}\Vert _{\infty }\le 8000\) (more than 1), which cannot derives whether those classical iterative methods are suitable or not to solve \(Ax=b\). While we are pleased to find the estimations of \(\Vert (A[\alpha _2)]^{-1}\Vert _{\infty }\) and \(\Vert (A/\alpha _2)^{-1}\Vert _{\infty }\) with \(\Vert (A[\alpha _1)]^{-1}\Vert _{\infty }\) and \(\Vert (A/\alpha _1)^{-1}\Vert _{\infty }\) are much more accuracy, see the center and right of Fig. 1. It is clearly to see \(\Vert (A[\alpha _2)]^{-1}\Vert _{\infty }\le 0.005\) (less than 1) with \(\Vert (A/\alpha _2)^{-1}\Vert _{\infty }\le 0.1\) (less than 1), and \(\Vert (A[\alpha _1)]^{-1}\Vert _{\infty }\le 0.03\) (less than 1) with \(\Vert (A/\alpha _1)^{-1}\Vert _{\infty }\le 0.3\) (less than 1), which illustrates that the CG method, SOR method, SSOR method, and AOR method are convergent when they are applied to the two smaller linear equations by Schur-based method. In addition, from this example, we find the estimations of \(\Vert (A/\alpha _2)^{-1}\Vert _{\infty }\) are stable to be less than 1 even the order of A increases. And, the iterative steps, CPU time, and the residuals of these methods are presented in Fig. 2.

From the numerical results, we could see the S-CG method by selecting \(\alpha _2\) costs less CPU time than that of the other methods; even it costs more iterative steps, and the residuals of the solutions are mussy, but they are at the same order of magnitude. In addition, we also find from the results that the S-SOR method by choosing \(\alpha _2\) costs less CPU time than that by choosing \(\alpha _1\) and SOR method directly, so is the S-AOR method. That illustrates choosing a non-leading principle submatrix of Nekrasov matrix to apply the Schur-based method may be cheaper in some special cases.

Actually, if \(A\notin {\mathbf {N}}_n\), but it is suitable for Theorem 3.1, then we could use Schur-based method to solve \(Ax=b\), but the classical iterative methods may be out of work.

Example 5.2

Let \(\tilde{A}=(\tilde{a}_{ij})\in {\mathbb {R}}^{n\times n}\) be the matrix in example 5.1 except for \(\tilde{a}_{11}=6n-10\), and let \(\tilde{b}=b\). In this case, \(\tilde{A}\notin {\mathbf {N}}_{n}\), but if we take \(\alpha _1=\{1,2,\cdots ,k\}\) and \(\alpha _2=\{k+1,k+2,\cdots ,2k\}\), then the matrix \(\tilde{A}\) with \(\alpha _2\) satisfies conditions (8) in Theorem 3.1. Thus, both \(A[\alpha _2]\) and \(A/\alpha _2\) are Nekrasov matrices, and then, we could apply the Schur-based method to solve the linear equations \(Ax=b\). In this case, the upper bounds in (13), (14), (18), and (19) cannot be use to estimate \(\Vert A^{-1}\Vert _{\infty }\), but they can be used to compute the upper bounds for \(\Vert (A[\alpha _2])^{-1}\Vert _{\infty }\). In addition, the upper bound in (15) can be used to compute the upper bounds for \(\Vert (A/\alpha _2)^{-1}\Vert _{\infty }\). And the estimations of \(\Vert (A[\alpha _2])^{-1}\Vert _{\infty }\) and \(\Vert (A/\alpha _2)^{-1}\Vert _{\infty }\) can be seen in Fig. 3.

In addition, observe that A is an H-matrix, and thus, we can also use CG, SOR, SSOR, and AOR methods directly, and the S-CG, S-SOR, S-SSOR, and S-AOR methods by choosing \(\alpha _1\) are suitable to solve \(Ax=b\), too. From the numerical results from Fig. 3, with the increasing of n, \(\Vert (A[\alpha _2])^{-1}\Vert _{\infty }\le 2.5\times 10^{-4}\) (less than 1), \(\Vert (A/\alpha _2)^{-1}\Vert _{\infty }\le 0.8\), which further illustrates that the CG method, SOR method, SSOR method, and AOR method are convergent to solve the two smaller equations by Schur-based method with choosing \(\alpha _2\). And the iterative steps, CPU times, and residuals of these different methods are presented in Fig. 4.

From Fig. 4, we can see the CPU time of the S-CG, S-SOR, and S-AOR methods by choosing \(\alpha _2\) is less than that by choosing \(\alpha _1\) and the directly classical methods. In addition, the residuals of solutions by different methods are at the same order of magnitude. Thus, this example shows the efficiency and superiority of our results in this paper.

At last, let us recall the problem in the introduction.

Example 5.3

As introduced before, the problem of solving differential equations transforms into a problem of solving some linear equations. And the smaller the step length h is, the larger the order of the coefficient matrix A is. Surely, there must be many other situations that lead the coefficient matrix like the series matrices in (1), but the orders may be changed. In this example, we would consider the linear systems \(Ax=b\), where A is an \(n\times n\) Nekrasov matrix described in (1), let \(b=(b_i)\in {\mathbb {R}}^{n}\), where \(b_i=(-1)^{i}\), \(i\in {{\mathbb {N}}}\). We increase n from 100 to 2000 by 20 once, and set \(\alpha _1=\{1,2,\cdots ,\frac{n}{2}\}\) and \(\alpha _2=\{1,3,\cdots ,2n-1\}\). First, A is a Nekrasov matrix, and thus, we can use SOR method to solve the linear equations. And according to corollary 3.3, i.e., a conclusion in Liu et al. (2018), both \(A[\alpha _1]\) and \(A/\alpha _1\) are Nekrasov matrices, and thus, the Schur-based method with SOR method by selecting \(\alpha _1\) can be useful. Furthermore, \(A[\alpha _2]\) is a diagonal matrix, and A with \(\alpha _2\) satisfy the conditions in Theorem 3.2, and then, the Schur-based method with SOR method by selecting \(\alpha _2\) is also suitable. In addition, some simple estimations of \(\Vert A^{-1}\Vert _{\infty }\), \(\Vert (A/\alpha _1)^{-1}\Vert _{\infty }\), \(\Vert (A/\alpha _2)^{-1}\Vert _{\infty }\), \(\Vert A[\alpha _1]^{-1}\Vert _{\infty }\), and \(\Vert A[\alpha _2]^{-1}\Vert _{\infty }\) are presented in Fig. 5.

From Fig. 5, \(\Vert A^{-1}\Vert _{\infty }\le 2.5\) (more than 1), and we cannot determine the convergence of the SOR method or AOR method from this estimation. While \(\Vert A[\alpha _2]^{-1}\Vert _{\infty }\le 0.25\) (less than 1) and \(\Vert (A/\alpha _2)^{-1}\Vert _{\infty }\le 0.5\) (less than 1), which illustrated that the Schur-based SOR method and Schur-based AOR method are convergent to solve \(Ax=b\). Furthermore, the estimations of \(\Vert (A/\alpha _2)^{-1}\Vert _{\infty }\) is stable and less than 1 as the order of A increases, but upper bounds of \(\Vert (A/\alpha _1)^{-1}\Vert _{\infty }\) are more than 1. In addition, \(\Vert A[\alpha _1]^{-1}\Vert _{\infty }\le 2.5\) (more than 1) and \(\Vert (A/\alpha _1)^{-1}\Vert _{\infty }\le 2.5\) (more than 1) are not sufficient to determine whether the SOR method or the AOR method is convergent or not to solve the two smaller equations. At last, the iterative steps, the CPU time, and the residuals of the solutions by different methods are shown in Fig. 6.

It is clearly to see that the CPU time, iterative steps, and residuals of the Schur-based methods with SOR and AOR methods by choosing \(\alpha _2\) are better than that by choosing \(\alpha _1\) and the directly classical iterative methods. Then, this example further demonstrates the necessity for the results in this paper.

6 Conclusions

In this paper, we present three conditions, such that the Schur complements of some special matrices including Nekrasov matrices via the non-leading principle submatrix are Nekrasov matrices. In large scale problems of linear equations, when the Schur-based method is used to solve the system \(Ax=b\), selecting a non-leading principles submatrix may be more efficient and cheaper in some special cases. Generally, if the leading principle submatrix of A is not sparse, and there exists a non-leading principle submatrix of A being sparse or diagonal, such that Theorem 3.1 or Theorem 3.2 is held, then we would prefer a non-leading principle submatrix using the Schur-based method to solve \(Ax=b\). Some numerical experiments in this paper are presented to show the efficiency and superiority of our results.

References

Ames W (2014) Numerical methods for partial differential equations. Academic Press, New York

Baily D, Crabtree D (1969) Bounds for determinants. Linear Algebra Appl 2(3):303–309

Berman A, Plemmons R (1979) Nonnegative matrices in the mathematical sciences. Academic Press, New York

Braun M, Golubitsky M (1983) Differential equations and their applications. Springer, New York

Chicone C (2006) Ordinary differential equations with applications. Springer Science and Business Media, New York

L. Cvetkovic̆ P. Dai, K. Doroslovac̆ki, Y. Li, (2013) Infinity norm bounds for the inverse of Nekrasov matrices. Appl Math Comput 219:10 (5020–5024)

L. Cvetkovic̆ V. Kostić, K. Doroslovac̆ki, (2012) Max-norm bounds for the inverse of S-Nekrasov matrices. Appl Math Comput 218:18 (9498–9503)

Demmel J (1997) Applied numerical linear algebra. Society For Industrial and Applied Mathematics

Esnaola M, Peña J (2014) Error bounds for linear complementarity problems of Nekrasov matrices. Numer Algor 67(3):655–667

Elsner L, Mehrmann V (1991) Convergence of block iterative methods for linear systems arising in the numerical solution of Euler equations. Numerische Mathematik 59(1):541–559

Fiedler M, Markham T (1988) An inequality for the Hadamard product of an M-matrix and an inverse M-matrix. Linear Algebra Appl 101:1–8

Forsythe G (1953) Solving linear algebraic equations can be interesting. Bull Am Math Soc 59(4):299–329

Gao L, Li C, Li Y (2014) A new upper bound on the infinity norm of the inverse of Nekrasov matrices. J Appl Math 2014

Horn R, Johnson C (1991) Topics in matrix analysis. Cambridge University Press, New York

Hadjidimos A (1983) On the generalization of the basic iterative methods for the solution of linear systems. Int J Comput Math 14(3–4):355–369

Karlqvist O (1952) Numerical solution of elliptic difference equations by matrix methods. Tellus 4(4):374–384

Li C, Pei H, Gao A, Li Y (2016) Improvements on the infinity norm bound for the inverse of Nekrasov matrices. Numer Algor 71(3):613–630

Li W (1998) On Nekrasov matrices. Linear Algebra Appl 218(1–3):87–96

Liu J, Zhang F (2005) Disc seperation of the Schur complements of diagonally dominant matrices and determinant bounds. SIAM J Matrix Anal Appl 27(3):665–674

Liu J, Zhang J, Zhou L, Tu G (2018) The Nekrasov diagonally dominant degree on the Schur complement of Nekrasov matrices and its applications. Appl Math Comput 320:251–263

Liu J, Huang Z, Zhu L, Huang Z (2011) Theorems on Schur complement of block diagonally dominant matrices and their application in reducing the order for the solution of large scale linear systems. Linear Algebra Appl 435(12):3085–3100

Nieto J, Rodríguez-López R (2005) Contractive mapping theorems in partially ordered sets and applications to ordinary differential equations. Order 22(3):223–239

Ostrowski A (1937) Über Die Determinanten Mit Überwiegender Hauptdiagonale. Commentarii Mathematici Helvetici 10:69–96

Pang M, Li Z (2003) Generalized Nekrasov matrices and applications. J Comput Math 21:183–188

Szulc T (1995) Some remarks on a theorem of Gudkov. Linear Algebra Appl 225:221–235

Szulc T, Cvetković L, Nedović M (2015) Scaling technique for partition-Nekrasov matrices. Appl Math Comput 271:201–208

Thomas J (2013) Numerical partial differential equations: finite difference methods. Springer Science and Business Media, New York

Yu L (2015) Kolotilina, bounds for the determinants of Nekrasov and S-Nekrasov matrices. J Math Sci 207(5):776–785

Zhang F (2005) The Schur complements and its applications. Springer, New York

Acknowledgements

The work was supported by the National Natural Science Foundation of China (grant 11971413, 11571292) and by the Hunan Provincial Innovation Foundation for Postgraduate (grant CX20190461).

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Zhong-Zhi Bai.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Liu, J., Xiong, Y. & Liu, Y. The closure property of the Schur complement for Nekrasove matrices and its applications in solving large linear systems with Schur-based method. Comp. Appl. Math. 39, 290 (2020). https://doi.org/10.1007/s40314-020-01342-0

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40314-020-01342-0