Abstract

This study is devoted to finding the numerical solution for the surface heat flux history and temperature distribution in an inverse heat conduction problem (IHCP) with a nonlinear source term. This type of inverse problem is investigated either with a temperature overspecification condition at a specific point or with an energy overspecification condition over the computational domain. A combination of the meshless local radial point interpolation method and the finite difference method is used to solve the IHCP. The proposed method does not require any mesh generation, and since the method is local, a system with a sparse coefficient matrix is solved at each time step. Hence, the computational cost is low. This nonlinear inverse problem has a unique solution, but it is still ill-posed since small errors in the input data cause large errors in the output solution. Consequently, when the input data are contaminated with noise, we use the Tikhonov regularization method in order to obtain a stable solution. Three different schemes are applied to choose the regularization parameter, and their results are in agreement with each other. Numerical results show that the solution is accurate for exact data and stable for noisy data.

1 Introduction

Parabolic inverse problems play a major role in modeling some physical phenomena. They appear in various fields of physics and engineering such as the analysis of heat conduction, thermoplasticity, chemical diffusion and control theory.

Inverse problems are by nature 'unstable' because the unknown solutions and parameters have to be determined from indirect observable data which contain measurement errors. The main difficulty in establishing any numerical algorithm for approximating the solution is due to the severe ill-posedness of the problem and the ill-conditioning of the resultant discretized matrix (Hon and Wei 2004).

Among the classes of parabolic inverse problems studied in works such as (Cannon 1984; Cannon and Du Chateau 1998; Kanca and Ismailov 2012; Yousefi et al. 2012; Hao and Reinhardt 1996; Shivanian and Jafarabadi 2018b), the inverse heat conduction problem (IHCP) is severely ill-posed in the sense that any small change in the input data can result in a sizeable change in the solution. The IHCP arises in the modelling and control of processes with heat propagation in thermophysics and the mechanics of continuous media. It occurs whenever surface temperatures and surface heat fluxes must be determined at inaccessible portions of the surface from the corresponding measurements at accessible parts (Hon and Wei 2004). For instance, physicists and engineers often come across problems such as the estimation of boundary conditions and bulk radiation properties in emitting, absorbing and scattering translucent materials, and the approximation of the boundary heat flux and inlet condition inside ducts arising due to forced convection. In the direct problem, errors arising from the boundary or interior measurements are reduced as a result of the diffusive nature of heat conduction. Hence the inverse problem, which is under-determined in nature, is considered highly ill-posed. The mathematical techniques employed for solving heat conduction problems in anisotropic bodies differ considerably from those used for isotropic media. The tensorial character of the heat transfer differs between the anisotropic and isotropic cases, resulting in the presence of mixed partial derivatives in the corresponding differential equation. This complicates the task of obtaining a solution, be it analytical or numerical. In particular, the method of separation of variables becomes inapplicable because the mixed derivatives with respect to the space variables cannot be separated. Moreover, the application of integral methods such as Green’s functions or Laplace and Fourier transforms requires that the computational region be unbounded on at least one side (Arora and Dabas 2019).

As a non-exhaustive list of more recent works, we mention the following studies of various forms of the inverse heat conduction problem. A more complete list is given in Arora and Dabas (2019). The authors of Arora and Dabas (2019) proposed a linear combination of fundamental solutions and heat polynomials for a two-dimensional inverse heat conduction problem in an anisotropic medium. Shidfar et al. (2006) approximated the heat flux through Chebyshev polynomials. Two numerical procedures based upon the Bernstein multi-scaling approximation and the cubic B-spline scaling functions for solving the IHCP with a nonlinear source term were presented by Dehghan et al. (2013). The authors of Xiong et al. (2020) presented a sequential conjugate gradient method to reconstruct the undetermined surface heat flux for the nonlinear IHCP. Their modified method combines the merits of the sequential function specification method (SFSM) and the conjugate gradient method (CGM). Fernandes et al. (2015) proposed a transfer function identification (or impulse response) method to solve inverse heat conduction problems; the technique is based on Green’s function and the equivalence between thermal and dynamic systems. The authors of Cheng-Yu et al. (2021) applied a collocation method using the space-time radial polynomial series function (SRPSF) to two-dimensional IHCPs with arbitrary shapes. Different approaches to solving the boundary-value inverse heat conduction problem based on the use of Laplace and Fourier transforms are proposed in Yaparova (2014).

During the last few decades, numerous meshless approaches have been developed for tackling IHCPs (Hon and Wei 2004; Cheng-Yu et al. 2021; Hussen et al. 2022; Ismail 2017; Deng et al. 2020; Grabski et al. 2019), among which (Hon and Wei 2004; Cheng-Yu et al. 2021; Hussen et al. 2022) investigate the IHCP in one dimension. Many researchers have studied IHCPs in one dimension through diverse techniques (Hon and Wei 2004; Frankel and Keyhani 1997; Jonas and Louis 2000; Lesnic and Elliott 1999; Lesnic et al. 1996; Liu 1996; Shen 1999). Here we consider the following one-dimensional time-dependent parabolic heat equation, which was already introduced in Shidfar et al. (2006) and Dehghan et al. (2013):

$$\begin{aligned} \partial _t u(x,t)=\partial _{xx}u(x,t)+F(u(x,t)),\qquad 0<x<1,\ 0<t<T, \end{aligned}$$(1)

with the initial condition

$$\begin{aligned} u(x,0)=u_0(x),\qquad 0\le x\le 1, \end{aligned}$$(2)

and Neumann boundary conditions

$$\begin{aligned} \partial _x u(0,t)=a(t),\qquad 0<t<T, \end{aligned}$$(3)

$$\begin{aligned} \partial _x u(1,t)=g(t),\qquad 0<t<T, \end{aligned}$$(4)

where u(x, t) is the temperature distribution and T represents the maximum time of interest for the time evolution of the problem. Also, F, \(u_0(x)\) and g(t) are considered as known functions. Equations (1)–(4) represent the Neumann direct problem, which is nonlinear and well-posed if the function a(t) is given. The function F(u(x, t)) is interpreted as a heat or material source, while in a chemical or biochemical application it may be interpreted as a reaction term (Shidfar et al. 2006). The conditions under which one can find the solution u(x, t) to the direct problem are given in Cannon (1984). A brief review of the solution of the direct heat conduction problem is as follows. Let \(\theta (x, t)\) denote the theta function defined by

where K(x, t) is the free-space heat kernel

Also, assume that the functions F, \(u_0\), g and a in the direct problem have the following properties:

(a) \(u_0(x), a(t), g(t)\in C[0,\infty ),\)

(b) the function F(u(x, t)) is a given piecewise differentiable function on the set \(\{u | -\infty< u < \infty \}\),

(c) there exists a constant \(C_F\) such that \(|F(u)-F(v)|\le C_F|u-v|\),

(d) F is a bounded and uniformly continuous function in u.

Then the unique solution u(x, t) of the direct problem (1)–(4) has the form (Cannon 1984; Shidfar et al. 2006)

The function \(p(t) = u(1, t; F, a)\) will be viewed as an output corresponding to the input g(t), in the presence of the heat flux \(\partial _{x}u(0,t)=a(t)\). Now, we look at the interaction of a change \(\Delta a\) with the corresponding change \(\Delta p\) in the measured output p(t).

Theorem 1

Suppose that the data functions \(g = g(t)\), \(F = F(u)\) and the heat flux terms \(a_1\) and \(a_2\) at \(x = 0\) satisfy assumptions (a)-(d). Let \(u_n = u(x, t; F, g, u_0, a_n)\) and \(p_n(t) = u_n(1, t)\), for \(n = 1, 2\). Then for any \(\tau \), \(0\le \tau \le T\),

where \(\phi (x, t)\) denotes a solution of the following suitable adjoint problem with input data \(\nu (t)\):

\(\partial _t\phi (x,t)+\partial _{xx}\phi (x,t)+\lambda (x,t)\phi (x,t)=0,\hspace{.5cm}0<x<1, 0<t<\tau ,\)

\(\phi (x,\tau )=0,\hspace{.5cm}0<x<1,\)

\(\phi _x(1,t)=\nu (t),\hspace{.5cm}0<t<\tau ,\)

\(\phi _{x}(0,t)=0,\hspace{.5cm}0<t<\tau .\)

Proof

Refer to Shidfar et al. (2006). \(\square \)

The above adjoint problem is backward in time but well posed (Shidfar et al. 2006); thus, the solution is controlled by the inhomogeneous Neumann condition at \(x= 0\).

In contrast to the direct problem, in the inverse problem the heat flux a(t) is unknown and, clearly, additional information is required in order to obtain a unique solution. Depending on what additional information can be provided by experiment, in this study we specify two inverse problems, as follows:

1. Inverse Problem 1 (IP1). If the additional measurement is the internal temperature measured in time at \(x^*\in [0, 1]\), namely

$$\begin{aligned} u(x^*, t)=p(t),\qquad t\in [0, T], \end{aligned}$$(5)

then Eqs. (1)–(5) are called the IP1. Notice that if the internal point is \(x^*=0\), then problem (1)–(2) with (4)–(5) is a well-posed forward heat conduction problem. Furthermore, if \(x^*=1\), problem (1)–(2) with (4)–(5) is called a Cauchy problem for the heat equation.

2. Inverse Problem 2 (IP2). If the additional measurement is the \(L^1\)-norm of u(x, t) over the space domain (0, 1), i.e.

$$\begin{aligned} \int \limits _0^1 u(x, t) dx=E(t),\qquad t\in [0, T], \end{aligned}$$(6)

then Eqs. (1)–(4) and (6) are called the IP2.

A general IHCP is then to determine the temperature and heat flux on the surface \(x=0\). This problem is well known for being highly ill-posed since small errors in the input data cause large errors in the output solution.

The IHCP is difficult because it is extremely sensitive to measurement errors. Other important effects can be the presence of lag and damping in the experimental data. Another difficulty that appears in the IHCP is related to the time sampling. For example, the use of small time steps frequently introduces instabilities in the solution of the IHCP. Thus small time steps have the opposite effect in the IHCP compared to their effect in the numerical solution of the direct heat conduction equation.

This study has three main objectives. The first is to provide a local radial point interpolation meshless method to obtain the numerical solution of the IP1 and IP2. This method has been widely applied to several kinds of partial differential equations (PDEs), such as fractional PDEs and inverse problems in two and three dimensions (Shivanian and Jafarabadi 2017a, 2017b, 2018a). The second objective is to demonstrate the efficiency of the proposed meshless method in dealing with the highly ill-posed IP1 and IP2 through detailed numerical results, together with the underlying techniques such as forward finite differences and Tikhonov regularization. In Shidfar et al. (2006) and Dehghan et al. (2013), the authors investigated only the IP1 and, in addition, did not provide a comparison with other methods, whereas in the current work we study both the IP1 and the IP2 and compare our numerical results with theirs. As the final aim of this study, we obtain a simple scheme to find the regularization parameter, which can be a good competitor to well-known methods such as the L-curve and the discrepancy principle.

The structure of the article is as follows. In Sect. 2, the method is briefly described and some preliminaries are given which are useful for our main results. In Sects. 3 and 4 the numerical solutions of the direct and inverse problems are given, respectively. In Sect. 5, the main results of the approach are demonstrated through some examples to show its validity and efficiency, and a brief conclusion is given in Sect. 6.

2 Proposed method

To prevent the singularity problem in the polynomial point interpolation method (PIM), the radial basis function (RBF) is used to construct the radial point interpolation method (RPIM) shape functions for meshless weak and strong form methods (Wang and Liu 2002). Consider a continuous function u(x) defined in a domain \(\Omega \subset {\mathbb {R}}\), which is represented by a set of field nodes. The function u(x) at a point of interest x is approximated in the form

where \(R_{i}({x})\) is a radial basis function (RBF), n is the number of RBFs, \(P_{j}({x})\) is monomial in the space coordinate x, and np is the number of polynomial basis functions. For the second-order partial differential equation, in this case the IHCP, we choose the second-order thin plate spline (TPS) as radial basis functions in Eq. (7). This RBF is defined as follows:

In the radial basis function \(R_i(x)\), the variable is only the distance between the point of interest x and a node at \(x_i\) which is defined as

Also, the polynomial \(P_j(x)\) in Eq. (7) is built using Pascal’s triangle, and a complete basis is usually preferred. In the current work, we consider

To obtain the unknown coefficients \(a_{i}\) and \(b_{j}\) in Eq. (7), a support domain is built at the point of interest x, with n field nodes included in the support domain. The coefficients \(a_{i}\) and \(b_{j}\) can be determined by enforcing Eq. (7) at these n nodes surrounding the point of interest x, i.e. \(s(x_i)=u(x_i), i=1, 2,..., n\). So, we have a linear equation corresponding to each node. These equations in matrix form are as follows:

where the vector of function values \({\textbf {U}}_{s}\) is

the RBFs moment matrix is

and the polynomial moment matrix is

Also, the vector of unknown coefficients for RBFs is

and the vector of unknown coefficients for polynomial is

Since there are \(n+np\) unknown variables in Eq. (7), to guarantee the uniqueness of the approximation, we add np conditions as follows

Combining Eqs. (7) and (17) yields the following system of equations in matrix form:

where

By solving Eq. (18), we obtain

Eq. (7) can be rewritten as

where

The first n functions of the above vector function are called the radial point interpolation (RPIM) shape functions corresponding to the nodal displacements, and we denote

then Eq. (21) is transformed to the following one

The derivative of s(x) is easily obtained as

It is significant that the RPIM shape functions have the Kronecker delta function property, that is

This is because the RPIM shape functions are created to pass through nodal values. Moreover, the shape functions are the partitions of unity, that is,

The present method does not require any integration over local quadrature domains, because we choose the Dirac delta function instead of the Heaviside step function, the Gaussian weight function, etc. Therefore, the computational cost of the present method is lower.
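The construction of the RPIM shape functions described in this section can be illustrated with a minimal sketch. It assumes the thin plate spline \(R(r)=r^4\ln r\) and a quadratic polynomial basis \([1, x, x^2]\); both choices are assumptions made for illustration, since the exact definitions are not reproduced in this text. The function names `tps` and `rpim_shape_functions` are hypothetical.

```python
import numpy as np

def tps(r):
    """Thin plate spline r^4 * ln(r) (assumed RBF); the limit value 0 is used at r = 0."""
    r = np.asarray(r, dtype=float)
    out = np.zeros_like(r)
    pos = r > 0
    out[pos] = r[pos] ** 4 * np.log(r[pos])
    return out

def rpim_shape_functions(x, nodes):
    """Values of the RPIM shape functions phi_1(x), ..., phi_n(x) for the support nodes."""
    n = nodes.size
    R0 = tps(np.abs(nodes[:, None] - nodes[None, :]))      # RBF moment matrix
    P = np.column_stack([np.ones(n), nodes, nodes ** 2])   # polynomial moment matrix (assumed basis)
    G = np.block([[R0, P], [P.T, np.zeros((3, 3))]])
    rhs = np.concatenate([tps(np.abs(x - nodes)), [1.0, x, x ** 2]])
    # G is symmetric, so [R(x)^T, P(x)^T] G^{-1} is the solution w of G w = rhs;
    # its first n entries are the shape functions.
    return np.linalg.solve(G, rhs)[:n]

nodes = np.linspace(0.0, 1.0, 9)
phi_at_node = rpim_shape_functions(nodes[3], nodes)   # should reproduce a unit vector
phi_between = rpim_shape_functions(0.3, nodes)        # should sum to one
print(np.round(phi_at_node, 8))
print(phi_between.sum())
```

Evaluating at a node illustrates the Kronecker delta property, and summing the values at an arbitrary point illustrates the partition of unity.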

When the Lagrangian form of the proposed method is used in the context of solving a time-dependent PDE problem, the solution u(x, t) is approximated by

where \(u_i(t) \simeq u(x_i, t)\) are the unknown functions to determine.

Finally, we assume that the total number of nodes covering \({\overline{\Omega }}=(\Omega \cup \partial \Omega )\) is N. Also, let \(n_{x}\), instead of n, denote the number of nodes included in the support domain \(\Omega _{x}\) corresponding to the point of interest x. For example, \(\Omega _{x}\) can be an interval centered at x with radius \(r_s\). Therefore, we have \(n_x \le N\) and Eq. (24) can be modified as

If we define \(\Omega ^c_{x}=\lbrace {x}_j:{x}_j\notin \Omega _{x}\rbrace \) and since corresponding to node \({x}_j\) there is a shape function \(\phi _j({x}), j=1, 2,...,N\), then from Eq. (26) we have

The derivative of s(x) is easily obtained as

and generally higher order derivative of s(x) is given as

where \(\dfrac{d ^m(.)}{d x^m}\) is m’th derivative. If we substitute \({x}=x_i\) in Eq. (32), then we have the following matrix–vector multiplication

where

and

Also from Eq. (30), it is clear that \(\forall {x}_j\in \Omega ^c_{x}: d^m \phi _j({x})/dx^m=0, m=1, 2,...\).
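The local derivative matrices can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the thin plate spline \(r^4\ln r\), a quadratic polynomial basis, and an interval support domain of radius \(r_s\). The names `tps_derivs` and `derivative_matrices` are hypothetical, and dense arrays are used for brevity although each row has only \(n_x\) nonzero entries.

```python
import numpy as np

def tps_derivs(d):
    """Value, first and second x-derivative of r^4 ln r, where r = |d| and d = x - x_i."""
    d = np.asarray(d, dtype=float)
    r = np.abs(d)
    val, d1, d2 = np.zeros_like(r), np.zeros_like(r), np.zeros_like(r)
    pos = r > 0
    lr = np.log(r[pos])
    val[pos] = r[pos] ** 4 * lr
    d1[pos] = np.sign(d[pos]) * r[pos] ** 3 * (4.0 * lr + 1.0)
    d2[pos] = r[pos] ** 2 * (12.0 * lr + 7.0)
    return val, d1, d2

def derivative_matrices(nodes, r_s):
    """Matrices D1, D2 such that (Dm u)_i approximates the m-th derivative at node x_i."""
    N = nodes.size
    D1, D2 = np.zeros((N, N)), np.zeros((N, N))
    for i, xi in enumerate(nodes):
        idx = np.where(np.abs(nodes - xi) <= r_s)[0]   # nodes inside the support domain
        xs = nodes[idx]
        n = xs.size
        R0, _, _ = tps_derivs(xs[:, None] - xs[None, :])
        P = np.column_stack([np.ones(n), xs, xs ** 2])
        G = np.block([[R0, P], [P.T, np.zeros((3, 3))]])
        _, r1, r2 = tps_derivs(xi - xs)
        rhs1 = np.concatenate([r1, [0.0, 1.0, 2.0 * xi]])   # derivatives of [1, x, x^2]
        rhs2 = np.concatenate([r2, [0.0, 0.0, 2.0]])
        D1[i, idx] = np.linalg.solve(G, rhs1)[:n]
        D2[i, idx] = np.linalg.solve(G, rhs2)[:n]           # entries outside the support stay zero
    return D1, D2

h = 1.0 / 20.0
nodes = np.linspace(0.0, 1.0, 21)
D1, D2 = derivative_matrices(nodes, r_s=4.2 * h)
u = nodes ** 2
print(np.max(np.abs(D1 @ u - 2.0 * nodes)), np.max(np.abs(D2 @ u - 2.0)))
```

With this assumed basis, quadratic functions are reproduced by the interpolant, so the derivative matrices should differentiate \(x^2\) accurately up to rounding errors.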

3 Numerical solution of the direct problem

In this section, we consider the direct initial boundary value problem (1)–(4). To introduce a finite difference approximation in order to discretize the time derivative, we need some preliminaries. Here, the time derivatives are approximated by the time-stepping method. For this purpose the following approximation can be used

Also the Crank–Nicolson scheme is used to approximate the Laplacian operator \(\partial ^2 (.)/\partial x^2\) at two respective times, as follows

where \(u^k(x)=u(x, k\delta t)\) and \(\delta t\) is the time step. To eliminate the nonlinearity, an iterative approach, in this case a simple predictor-corrector (P-C) scheme, is used. Using the above discussion, Eq. (1) can be written as

We can rewrite Eq. (39) as follows

where \(\lambda =\delta t/2\) and \({\tilde{u}}\) is the latest available approximation of u. We assume that \(u^{(k)}({x}_i)\) are known, and our aim is to compute \(u^{(k+1)}({x}_i)\) for \(i=1, 2,..., N\). Substituting the approximate formulas (29) and (32) into Eq. (40) yields

In the previous equation, we put \({x}={x}_i\), \(i=1, 2,..., N_{\Omega },\) (\(N_{\Omega }\) is the number of nodes in \(\Omega \)), and then applying the notations (33)–(36) gives

For \(x_i=0\) and \(x_i=1\), i.e. the nodal points on the boundary of \(\Omega \), which we denote by \(\partial \Omega \), using Eqs. (3) and (4) we have the following relations

Also, we have the following equation to compute the desired output (6) using Simpson’s composite numerical integration rule

where \(d_j\)’s are the coefficients in the Simpson rule and N is the total number of nodes covering \({\bar{\Omega }}\), i.e. \(N=N_{\Omega }+N_{\partial \Omega }\). According to the above discussions, we consider the following system

where the matrices \({\textbf {A}}\) and \({\textbf {B}}\) are N by N, whereas \({\textbf {C}}\) and \(U^{(k+1)}\) are column vectors. We define these matrices as follows:

In Eqs. (47) and (48), \(\delta \) is the Kronecker delta function, i.e.

To deal with the nonlinear term in system (46), we explain a simple predictor-corrector scheme. At the first time level, when \(k=0\), according to the initial condition (2), we apply the following assumption

Now, we perform the following procedure (Dehghan and Ghesmati 2010):

First we put \({\tilde{u}}=U^{(k)}\), \(k=0\), so that system (46) can be solved as a system of linear algebraic equations for the unknown \(U^{(1)}\). Then we use the following Crank–Nicolson scheme

From Eq. (53), we set \({\tilde{u}}=U\) and, using the new \({\tilde{u}}\), solve Eq. (46) again for the unknown \(U^{(1)}\) in iteration (\(l=2\)). We are still at the time level \((k + 1)\), and we iterate between calculating \({\tilde{u}}\) and computing the approximate values of the unknown \(U_l^{(k+1)}\) (where l denotes the iteration number within each time level). We put

In the current work, we iterate this process until the following condition is true for each time level

Finally, we put \(U^{(k+1)}=U^{(k+1)}_l\). Then we can go to the next time level. In this way the nonlinearity of system (46) is eliminated. This process is repeated until the desired time t is reached.
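The time-stepping and predictor-corrector procedure can be sketched as follows. This is a simplified illustration under stated assumptions rather than the proposed method itself: standard central finite differences replace the RPIM derivative matrices, the heat equation is assumed to have the form \(u_t=u_{xx}+F(u)\), the averaging step \({\tilde{u}}=(U_l^{(k+1)}+U^{(k)})/2\) is an assumed reading of the Crank–Nicolson step, and the Neumann conditions are imposed by second-order one-sided differences. The name `solve_direct` and its parameters are hypothetical.

```python
import numpy as np

def solve_direct(F, u0, a, g, N=21, dt=0.01, T=1.0, tol=1e-10, max_iter=50):
    """Crank-Nicolson in time with a predictor-corrector iteration for the source F(u)."""
    x = np.linspace(0.0, 1.0, N)
    h = x[1] - x[0]
    lam = dt / 2.0
    D2 = np.zeros((N, N))                       # central second differences (interior rows)
    for i in range(1, N - 1):
        D2[i, i - 1:i + 2] = np.array([1.0, -2.0, 1.0]) / h ** 2
    A = np.eye(N) - lam * D2
    B = np.eye(N) + lam * D2
    # Boundary rows: one-sided second-order approximations of u_x at x = 0 and x = 1.
    A[0, :] = 0.0
    A[0, :3] = np.array([-3.0, 4.0, -1.0]) / (2.0 * h)
    A[-1, :] = 0.0
    A[-1, -3:] = np.array([1.0, -4.0, 3.0]) / (2.0 * h)
    B[0, :] = 0.0
    B[-1, :] = 0.0
    U = u0(x)
    for k in range(int(round(T / dt))):
        t_new = (k + 1) * dt
        u_tilde = U.copy()                      # predictor: latest available approximation
        U_new = U.copy()
        for _ in range(max_iter):
            rhs = B @ U + dt * F(u_tilde)
            rhs[0], rhs[-1] = a(t_new), g(t_new)
            U_next = np.linalg.solve(A, rhs)
            converged = np.max(np.abs(U_next - U_new)) < tol
            U_new = U_next
            if converged:
                break
            u_tilde = 0.5 * (U_new + U)         # corrector: average of the two time levels
        U = U_new
    return x, U

# Data of Example 1: u = x e^{2t}, F(u) = 2u, so a(t) = g(t) = e^{2t}.
x, U = solve_direct(F=lambda u: 2.0 * u, u0=lambda x: x.copy(),
                    a=lambda t: np.exp(2.0 * t), g=lambda t: np.exp(2.0 * t))
err = np.max(np.abs(U - x * np.exp(2.0)))
print(err)
```

The inner loop stops once two successive iterates agree to the tolerance, which mirrors the stopping condition described above.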

4 Numerical solution of the inverse problem

We now consider the inverse initial boundary value problem (1)–(4) with either of the additional conditions (5) or (6), when both the surface heat flux a(t) and the temperature distribution u(x, t) are unknown. In this case, Eqs. (42) and (44) hold as in the direct problem, but Eq. (43) is replaced by the following equality

In practice, either the additional observations (5) or (6) come from measurements which are inherently contaminated with errors. We therefore model this by replacing the exact data \(p\bigl ((k+1)\delta t\bigr )\) and \(E\bigl ((k+1)\delta t\bigr )\) with the noisy data

where \(\gamma \) is the percentage of noise and \({\underline{\epsilon }}\) are random variables generated from a uniform distribution in the interval \([-1, 1]\). Overall, we have \(N_{\Omega }+N_{\partial \Omega }+1\) equations, i.e. \(N+1\) equations, and \(N+1\) unknowns, namely \(\lbrace u^{(k+1)}_1, u^{(k+1)}_2,..., u^{(k+1)}_N\rbrace \) and \(a^{(k+1)}\). If we write the sparse system in the following matrix form

then the matrices \({\textbf {A}}\) and \({\textbf {B}}\) are \((N+1)\) by \((N+1)\), and \({\textbf {C}}\) and \(U^{(k+1)}\) are column vectors, defined as follows

where \(n_0\) is the index of \({x}^*\) in the nodal points.

At the first time level, i.e. when \(k=0\), we need the value a(0); combining Eqs. (2) and (3) gives \(a(0)=u'_0(0).\)

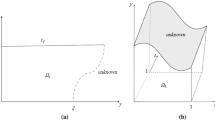

Condition numbers of the matrix \({\textbf {A}}\) in Eq. (59) given in Table 1 are of \({\mathcal {O}}(10^2)\) to \({\mathcal {O}}(10^{17})\). These large condition numbers express that the system of equations (59) is highly ill-conditioned. The ill-conditioning nature of the matrix \({\textbf {A}}\) can also be revealed by plotting its normalised singular values \(\sigma _n/\sigma _1\) for \(n=1:T/\delta t\), in Fig. 1.
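The diagnostics mentioned above, the condition number and the normalised singular values, can be computed as in the following sketch. The matrix of Eq. (59) is not reproduced here, so a Hilbert matrix is used purely as a stand-in for an ill-conditioned matrix; the helper `hilbert` is hypothetical.

```python
import numpy as np

def hilbert(n):
    """Hilbert matrix H_ij = 1 / (i + j - 1), a classical ill-conditioned stand-in."""
    i = np.arange(1, n + 1)
    return 1.0 / (i[:, None] + i[None, :] - 1.0)

A = hilbert(10)
sigma = np.linalg.svd(A, compute_uv=False)   # singular values in descending order
cond = sigma[0] / sigma[-1]                  # 2-norm condition number
normalised = sigma / sigma[0]                # sigma_n / sigma_1
print(f"cond(A) = {cond:.3e}")
print(normalised)
```

A rapid decay of the normalised singular values towards machine precision is the signature of an ill-conditioned system.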

5 Numerical results

For numerical purposes, we take \(T = 1\) as the final time, \({\bar{\Omega }}=[0, 1]\) as the whole domain and \(r_s=4.2h\) as the radius of the support domain, where h is the spatial step. In the two test problems that will be discussed, we use the following convention: if we consider the IP1, we set \(x^*=1\) unless its value is stated otherwise. We implement the method proposed in the foregoing sections with MATLAB 7.0 on a CI5 machine with 4 GB of memory.

5.1 Example 1

We consider Eqs. (1)–(4) with the exact solution

where the other functions can be obtained using the exact solution. Moreover, the source term is \(F(u)=2u\). Also, the desired output (6) is given by \(E(t)=0.5e^{2t}\) where \(t\in [0, 1].\) The absolute errors between the numerical and exact solutions for u(x, t) and E(t) with \(h=1/20\), \(\delta t=0.01\) (a, b) and \(h=1/26\), \(\delta t=0.0005\) (c, d), are shown in Fig. 2.

Fig. 2 The absolute errors between the exact (66) and numerical solutions u(x, t) and E(t) obtained by solving the direct problem with \(h=1/20\), \(\delta t=0.01\) (a, b), and \(h=1/26\), \(\delta t=0.0005\) (c, d) for Example 1
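As a small check of the output (6) for Example 1, Simpson’s composite rule can be applied to the exact solution \(u(x,t)=xe^{2t}\) and compared with \(E(t)=0.5e^{2t}\). The sketch below assumes equally spaced nodes and the standard Simpson weights; the helper `simpson_weights` is a hypothetical name.

```python
import numpy as np

def simpson_weights(N, h):
    """Composite Simpson weights d_j for N equally spaced nodes (N must be odd)."""
    if N < 3 or N % 2 == 0:
        raise ValueError("Simpson's composite rule needs an odd number of nodes >= 3")
    d = np.ones(N)
    d[1:-1:2] = 4.0   # odd interior indices
    d[2:-1:2] = 2.0   # even interior indices
    return d * h / 3.0

N = 21                                   # h = 1/20
x = np.linspace(0.0, 1.0, N)
h = x[1] - x[0]
t = 1.0
u = x * np.exp(2.0 * t)                  # exact solution of Example 1 at time t
E_num = simpson_weights(N, h) @ u
E_exact = 0.5 * np.exp(2.0 * t)
print(E_num, E_exact)
```

Since the integrand is linear in x, the rule is exact here up to rounding errors.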

5.1.1 Exact data

In this subsection, we consider the case of exact data, i.e. there is no noise in the input data (5) and (6), so that the exact solutions are \(\{u(x, t), a(t)\}=\{x e^{2t}, e^{2t}\}\). In other words, we assume that \(\gamma =0\) in Eqs. (57) and (58). The absolute errors corresponding to a(t) and u(x, T) obtained by solving the IP1 and IP2 are plotted in Fig. 3 with \(h=1/50, \delta t=1/7\). The results in Fig. 3 related to the IP1 are the best results that we could obtain. This is not surprising in view of the condition numbers corresponding to the IP1 given in Table 1. More accurate numerical results corresponding to the IP2 are depicted in Fig. 4 with \(h=1/50, \delta t=0.001\). In order to compare the proposed meshless method with another numerical technique, we simulate the well-known implicit finite difference method, adapted from Shidfar et al. (2006). We assume that \(\{0=t_0<t_1<...<t_M\}\) and \(\{0=x_0<x_1<...<x_N=1\}\) are two partitions of [0, T] and [0, 1], respectively. The implicit finite difference approximation for the IP1 may be written in the following form

where \(\delta t\) and h are the step lengths in the time and space coordinates, respectively, and \(U_{i,j}\) is the approximate value of \(u(x_i, t_j)\). Solving (67) for \(U_{i-1,j+1}\) and setting \(r =\frac{\delta t}{h^2}\), we have

Here, as in the previous sections, we apply the predictor-corrector scheme to deal with the nonlinear term in the above system. Finally, the approximate values of the heat flux at \(x = 0\) can be obtained using the second-order finite-difference approximation (Smith 1985)

In Shidfar et al. (2006) the heat flux is approximated with Chebyshev polynomials by using the least-squares method. The numerical results for the functions u(x, T) and a(t) obtained through the proposed meshless method and the implicit finite difference method are depicted in Fig. 5. The absolute errors of u(x, T) for the proposed meshless method and the implicit finite difference method are \(4.3412\times 10^{-2}\) and \(3.1109\times 10^{-2}\), respectively. The corresponding errors for a(t) are \(1.2172\times 10^{-1}\) for the proposed meshless method and \(8.1011\times 10^{-2}\) for the implicit finite difference method. In Fig. 5, the parameters are \(h=1/32\), \(\delta t=0.2\) for the proposed meshless method, and \(N=41\), \(M=31\) for the implicit finite difference method.
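A second-order finite-difference approximation of the heat flux at \(x=0\) can be sketched as below. The formula used in the paper is not reproduced in this text, so the standard three-point one-sided difference is assumed; the name `flux_at_left_boundary` is hypothetical.

```python
import numpy as np

def flux_at_left_boundary(U, h):
    """One-sided second-order approximation of u_x(0) from the first three nodal values."""
    return (-3.0 * U[0] + 4.0 * U[1] - U[2]) / (2.0 * h)

h = 1.0 / 40.0
x = np.linspace(0.0, 1.0, 41)
T = 1.0
a_lin = flux_at_left_boundary(x * np.exp(2.0 * T), h)   # profile of Example 1 at t = T
a_sin = flux_at_left_boundary(np.sin(x), h)             # smooth test profile with u_x(0) = 1
print(a_lin, np.exp(2.0 * T))
print(a_sin)
```

The formula is exact for profiles that are at most quadratic in x, and its error decreases like \(h^2\) for smooth profiles.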

5.1.2 Noisy data

In order to investigate the stability of the numerical solution, we include \(\gamma =1\%\) noise into the input data (5) and (6), as given by Eqs. (57) and (58). The numerical solution for a(t) obtained with \(h=1/40\), \(\delta t=1/20\) and no regularization has been found highly oscillatory and unstable, as shown in Fig. 6.
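The contamination of the input data can be sketched as follows. The exact form of Eqs. (57) and (58) is not reproduced in this text, so a multiplicative perturbation \(p^{\epsilon }=p\,(1+\gamma \epsilon )\) with \(\epsilon \) uniform in \([-1, 1]\) is assumed here; the name `add_noise` is hypothetical.

```python
import numpy as np

def add_noise(data, gamma_percent, rng):
    """Perturb data by gamma percent relative noise, uniform in [-1, 1] (assumed model)."""
    eps = rng.uniform(-1.0, 1.0, size=np.shape(data))
    return data * (1.0 + gamma_percent / 100.0 * eps)

rng = np.random.default_rng(0)           # fixed seed so that the sketch is reproducible
t = np.linspace(0.05, 1.0, 20)           # time levels for delta t = 1/20
p_exact = np.exp(2.0 * t)                # p(t) = u(1, t) for u = x e^{2t}
p_noisy = add_noise(p_exact, 1.0, rng)   # gamma = 1 %
print(np.max(np.abs(p_noisy - p_exact) / p_exact))
```

With this model, the relative perturbation of every data point is bounded by \(\gamma \).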

The numerical methods for solving discrete ill-posed problems have been presented in many papers. These methods are based on the so-called regularization techniques and the main aim of the regularization is to stabilize the problem and find a stable solution (Krawczyk-Stańdo and Rudnicki 2007). The most usual form of regularization is that of Tikhonov. In order to apply the Tikhonov regularization in the time level “\(k+1\)”, we first rewrite the system (59) as follows

where \(\underline{{\textbf {a}}}=[u_1^{(k+1)}, u_2^{(k+1)},...,u_N^{(k+1)}, a^{(k+1)}]^{tr}\) and \(\underline{{\textbf {b}}}^{\epsilon }={\textbf {B}}U^{(k)}+{\textbf {C}}\) in which the right hand side of Eq. (74) includes the noisy data (57) or (58). Now, the Tikhonov regularization gives the regularized solution as

where \(D_j\) is the regularization derivative operator of order \(j \in \{0, 1, 2\}\) and \(\lambda \ge 0\) is the regularization parameter. In the current work, we set \(j=0\), i.e. we use the zeroth-order Tikhonov regularization, namely \(D_0=I\). The regularization parameter \(\lambda \) can be chosen according to either the L-curve method (Hansen 1999) or the discrepancy principle (Morozov 1966). The L-curve method suggests choosing \(\lambda \) at the corner of the L-curve, which is a plot of the norm of the residual \(\Vert {\textbf {A}}\underline{{\textbf {a}}}_{\lambda }-\underline{{\textbf {b}}}^{\epsilon }\Vert \) versus the solution norm \(\Vert \underline{{\textbf {a}}}_{\lambda }\Vert \). Alternatively, the discrepancy principle chooses \(\lambda >0\) such that the residual \(\Vert {\textbf {A}}\underline{{\textbf {a}}}_{\lambda }-\underline{{\textbf {b}}}^{\epsilon }\Vert \approx \epsilon .\) Since, in this paper, the exact solution is available, a simple procedure to choose the regularization parameter is proposed as follows. First, using the knowledge of the desired solution \(\underline{{\textbf {a}}}_{ex}\), we compute the norm \(\Vert \underline{{\textbf {a}}}_{ex}-\underline{{\textbf {a}}}_{\lambda }\Vert \) as a function of the regularization parameter \(\lambda \). Then we take the \(\lambda _{opt}\) corresponding to the smallest norm \(\Vert \underline{{\textbf {a}}}_{ex}-\underline{{\textbf {a}}}_{\lambda }\Vert \) as the optimal regularization parameter. We apply the above procedure to obtain the regularized solution at every time level.
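The selection procedure based on the known exact solution can be sketched as follows. The sketch assumes the zeroth-order Tikhonov functional in the form \(\Vert {\textbf {A}}\underline{{\textbf {a}}}-\underline{{\textbf {b}}}^{\epsilon }\Vert ^2+\lambda \Vert \underline{{\textbf {a}}}\Vert ^2\) (whether the paper weights the penalty by \(\lambda \) or \(\lambda ^2\) is not visible in this text), and it uses a Hilbert matrix as a stand-in for the ill-conditioned system matrix. The names `tikhonov` and `best_lambda` are hypothetical.

```python
import numpy as np

def tikhonov(A, b, lam):
    """Zeroth-order Tikhonov solution of min ||A a - b||^2 + lam ||a||^2 (normal equations)."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ b)

def best_lambda(A, b, a_exact, lambdas):
    """Return the lambda on the grid minimising ||a_exact - a_lambda||, and all errors."""
    errors = np.array([np.linalg.norm(a_exact - tikhonov(A, b, lam)) for lam in lambdas])
    return lambdas[int(np.argmin(errors))], errors

n = 10
i = np.arange(1, n + 1)
A = 1.0 / (i[:, None] + i[None, :] - 1.0)        # stand-in ill-conditioned matrix
a_exact = np.ones(n)
rng = np.random.default_rng(1)
b_noisy = (A @ a_exact) * (1.0 + 0.01 * rng.uniform(-1.0, 1.0, n))   # 1 % noise

lambdas = np.logspace(-12, 0, 49)
lam_opt, errors = best_lambda(A, b_noisy, a_exact, lambdas)
err_unreg = np.linalg.norm(a_exact - np.linalg.solve(A, b_noisy))
print(lam_opt, errors.min(), err_unreg)
```

This rule needs the exact solution and is therefore only available in test problems; it serves as a reference against which the L-curve and the discrepancy principle can be compared.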

Figure 7 shows the analytical and numerical solutions of a(t) together with the regularization parameter selection curve for \(\gamma =1\%\) and \(\gamma =5\%\), obtained by solving the IP1 with \(h=1/40\), \(\delta t=1/10\). The two optimal regularization parameters \(\lambda _{opt}\) in Fig. 7 correspond to the last time level. Table 2 lists the maximum norms for a(t) and u(x, T) with \(h=1/45\), \(\delta t=1/10\) and various percentages of noise \(\gamma \in \{ 10^{-2}, 10^{-1}, 1, 5\}\%\) for the IP1 and IP2. Figures 8 and 9 correspond to Table 2 and exhibit a comparison between the exact a(t) and its regularized solutions for different percentages of noise. The aim of Fig. 10 is to compare the discrepancy principle curve, the L-curve and the proposed method for obtaining the regularization parameters at the first and last time levels, i.e. \(t_{first}=\delta t\) and \(t_{last}=T\). It is worth noting that the discrepancy principle uses the knowledge of the level of noise.

Fig. 11 The absolute errors between the exact (77) and numerical solutions u(x, t) and E(t) obtained by solving the direct problem with \(h=1/20\), \(\delta t=0.01\) (a, b), and \(h=1/26\), \(\delta t=0.0005\) (c, d) for Example 2

Also, Fig. 10 displays a comparison between the exact solutions u(x, T) and a(t) and their regularized solutions with \(h=1/46, \delta t=1/10\) and \(\gamma =1\%\), obtained by solving the IP1. Clearly, the numerical solutions are stable and become more accurate as the amount of noise \(\gamma \) decreases. Also from Fig. 10, the method proposed in this work to obtain the regularization parameter is in good agreement with the discrepancy principle curve and the L-curve. Finally, in Table 3 we compare the results obtained via the proposed method with \(h=1/32\), \(\delta t=1/5\) and \(\gamma \in \{1, 5\}\%\) with the method of Dehghan et al. (2013), by solving the IP1. From Table 3, the higher accuracy of our proposed method with respect to CBF (Dehghan et al. 2013) is evident: the maximum norms corresponding to the columns \(1\%\) and \(5\%\) are \(7.9718\times 10^{-2}\) and \(4.2318\times 10^{-1}\), respectively.
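The quantities behind the discrepancy principle and the L-curve can be sketched as follows. This is an illustration under assumptions, not the implementation used for Fig. 10: the Tikhonov functional is taken as \(\Vert {\textbf {A}}\underline{{\textbf {a}}}-\underline{{\textbf {b}}}^{\epsilon }\Vert ^2+\lambda \Vert \underline{{\textbf {a}}}\Vert ^2\), a Hilbert matrix stands in for the system matrix, and the discrepancy principle is realised by taking the largest grid value of \(\lambda \) whose residual does not exceed the noise level \(\epsilon \). The name `tikhonov_path` is hypothetical.

```python
import numpy as np

def tikhonov_path(A, b, lambdas):
    """Residual norms and solution norms of Tikhonov solutions, computed via the SVD."""
    U, s, Vt = np.linalg.svd(A)
    beta = U.T @ b
    res, sol = [], []
    for lam in lambdas:
        res.append(np.linalg.norm(lam / (s ** 2 + lam) * beta))   # ||A a_lam - b||
        sol.append(np.linalg.norm(s / (s ** 2 + lam) * beta))     # ||a_lam||
    return np.array(res), np.array(sol)

n = 10
i = np.arange(1, n + 1)
A = 1.0 / (i[:, None] + i[None, :] - 1.0)       # stand-in ill-conditioned matrix
b_exact = A @ np.ones(n)
rng = np.random.default_rng(1)
b_noisy = b_exact * (1.0 + 0.01 * rng.uniform(-1.0, 1.0, n))
eps = np.linalg.norm(b_noisy - b_exact)         # noise level, assumed known

lambdas = np.logspace(-12, 0, 49)
res, sol = tikhonov_path(A, b_noisy, lambdas)   # the L-curve is the plot of sol against res

ok = res <= eps                                 # discrepancy principle on the grid
lam_dp = lambdas[ok][-1] if ok.any() else lambdas[0]
print(lam_dp, eps)
```

The residual norm grows and the solution norm shrinks as \(\lambda \) increases, which is what gives the L-curve its characteristic shape on a log-log plot.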

5.2 Example 2

As another example, we first consider the direct problem (1)–(4) with the exact solution

We can obtain the required functions using the exact solution as Eq. (77). Moreover, the nonlinear source term is \(F(u)=u-\sqrt{1-u^2}\). Also, the desired output (6) is given by \(E(t)=\sin (1+t)-\sin (t)\) where \(t\in [0, 1].\) The absolute errors between the numerical and exact solutions for u(x, t) and E(t) with \(h=1/20\), \(\delta t=1/100\) (a, b) and \(h=1/26\), \(\delta t=1/2000\) (c, d), have been depicted in Fig. 11.
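The consistency of the data of Example 2 can be checked with a short sketch. Two assumptions are made because the corresponding equations are not reproduced in this text: the heat equation is taken in the form \(u_t=u_{xx}+F(u)\), and the exact solution is taken as \(u(x,t)=\cos (x+t)\), which is consistent with the stated output \(E(t)=\sin (1+t)-\sin (t)\).

```python
import numpy as np

def F(u):
    """Nonlinear source term of Example 2."""
    return u - np.sqrt(1.0 - u ** 2)

def u_exact(x, t):
    """Assumed exact solution, consistent with E(t) = sin(1 + t) - sin(t)."""
    return np.cos(x + t)

x, t = np.meshgrid(np.linspace(0.0, 1.0, 21), np.linspace(0.0, 1.0, 21))
u_t = -np.sin(x + t)                      # analytic time derivative of cos(x + t)
u_xx = -np.cos(x + t)                     # analytic second space derivative
residual = u_t - u_xx - F(u_exact(x, t))  # should vanish if the assumed data are consistent
print(np.max(np.abs(residual)))
```

On \(0\le x+t\le 2\) the sine is nonnegative, so \(\sqrt{1-u^2}=\sin (x+t)\) and the residual vanishes up to rounding errors.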

5.2.1 Exact data

The inverse problem is given by IP1 and IP2 with the exact solutions as follows

We first consider the case of exact data, i.e. \(\gamma =0\) in Eqs. (57) and (58). The absolute errors corresponding to a(t) and u(x, T), obtained by solving the IP1 and IP2 with \(h=1/50, \delta t=1/7\), are displayed in Fig. 12. Also, the acceptable errors corresponding to the IP2 with \(h=1/50\) and \(\delta t=0.001\) are shown in Fig. 13, which confirms the efficiency of the present method. Once again, we compare the proposed meshless method with the implicit finite difference method in Fig. 14. There we simulate u(x, T) and a(t) in the IP1 with \(h=1/40\), \(\delta t=0.2\) for the proposed meshless method, and N=41, M=31 for the implicit finite difference method. The absolute errors corresponding to Fig. 14 are \(2.1804\times 10^{-2}\) and \(1.4206\times 10^{-2}\) for a(t) and u(x, T), respectively, for the proposed meshless method. The corresponding values for the implicit finite difference method are \(7.7569\times 10^{-3}\) and \(1.7396\times 10^{-3}\) for a(t) and u(x, T), respectively.

5.2.2 Noisy data

In order to investigate the stability of the numerical solution, we include some \(\gamma \in \{ 1, 5\}\%\) noise into the input data (6), as given by Eq. (58). So in Fig. 15, we exhibit the analytical and numerical solutions of a(t) beside the choice regularization parameter curve, by solving the IP2 with \(h=1/26\), \(\delta t=1/10\). Here, the optimal regularization parameters \(\lambda _{opt}\), in Fig. 15, are corresponding to last iteration of time level, moreover the maximum norms corresponding to percentages of noise \(\gamma =1\%\) and \(5\%\) are \(4.5783\times 10^{-2}\) and \(1.5792\times 10^{-1}\), respectively. Table 4 collects the maximum norms for a(t) and u(x, T) with \(h=1/56\), \(\delta t=1/5\) and different percentages of noise \(\gamma \in \{ 10^{-2}, 10^{-1}, 1, 5\} \%\). Corresponding to Table 4, we produce a comparison between the exact a(t) and its regularized solution in Figs. 16 and 17 by solving the IP1 and IP2. Obviously, it can be seen that the numerical solutions are stable and that they are more accurate as the amount of noise \(\gamma \) decreases. Figure 18 displays a comparison between the discrepancy principle curve, L-curve and proposed method to obtain the regularization parameters in the first and last time level with \(h=1/56\), \(\delta t=1/5\) and \(\gamma =1\%\) by solving the IP1. Moreover in Fig. 18, the exact solutions of u(x, T) and a(t) have been shown versus their approximate solutions with maximum norms \(1.1930\times 10^{-2}\) and \(9.9502\times 10^{-2}\) for u(x, T) and a(t), respectively. Once we can see the proposed method to obtain the regularization parameter, in this work, is confirmed by the discrepancy principle curve and L-curve. We compare the numerical results of heat flux a(t) through the present method with \(h=1/20\), \(\delta t=1/10\) and \(\gamma =1\%\) to method of Dehghan et al. (2013), by solving the IP1 and IP2 in Table 5. 
The present method is clearly more accurate than the method of Dehghan et al. (2013): the maximum norms corresponding to the columns IP1 and IP2 are \(4.6141\times 10^{-2}\) and \(1.9396\times 10^{-2}\), respectively. As the last simulation, we consider IP1 with \(x^*=0.2\) and \(x^*=0.5\); the numerical results are presented in Table 6. Figures 19 and 20 correspond to Table 6 with \(h=1/20\), \(\delta t=1/7\), for \(x^*=0.2\) and \(x^*=0.5\), respectively. Figure 21 depicts the exact solutions of u(0.2, t) and u(0.5, t) versus the approximate solutions for the noise levels \(\gamma =1\%\) and \(2\%\) with \(h=1/20\), \(\delta t=1/10\), obtained by solving IP1 in the cases \(x^*=0.2\) and \(x^*=0.5\).
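
To make the parameter-selection step concrete, the following is a minimal sketch of Tikhonov regularization with the regularization parameter chosen by the discrepancy principle (Morozov 1966). It is not the solver used in this work: the test operator (a discretized integration operator), the additive uniform noise model, the grid of candidate parameters and the names `tikhonov_solve` and `discrepancy_lambda` are all assumptions made for illustration, and the noise model is not claimed to coincide with Eq. (58).

```python
import numpy as np


def tikhonov_solve(A, b, lam):
    """Minimise ||A x - b||^2 + lam^2 ||x||^2 through the normal equations."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + lam**2 * np.eye(n), A.T @ b)


def discrepancy_lambda(A, b, noise_norm, lambdas, tau=1.0):
    """Return the largest lam on the grid with ||A x_lam - b|| <= tau * noise_norm.

    The Tikhonov residual grows monotonically with lam, so the scan can stop
    at the first grid value that violates the bound.
    """
    grid = np.sort(np.asarray(lambdas, dtype=float))
    chosen = grid[0]  # fallback if no grid value satisfies the bound
    for lam in grid:
        residual = np.linalg.norm(A @ tikhonov_solve(A, b, lam) - b)
        if residual > tau * noise_norm:
            break
        chosen = lam
    return chosen


# Hypothetical ill-conditioned test problem (not the IHCP of this paper):
# A is a discretized integration operator, so recovering x from b = A x
# amounts to numerical differentiation of noisy data.
n = 50
h = 1.0 / n
t = h * np.arange(1, n + 1)
A = h * np.tril(np.ones((n, n)))
x_true = np.sin(np.pi * t)
b_exact = A @ x_true

# Assumed noise model: additive, uniform, scaled by gamma * max|b|.
gamma = 0.05
rng = np.random.default_rng(0)
noise = gamma * np.abs(b_exact).max() * rng.uniform(-1.0, 1.0, size=n)
b_noisy = b_exact + noise
noise_norm = np.linalg.norm(noise)

lambdas = np.logspace(-6, 1, 60)
lam_star = discrepancy_lambda(A, b_noisy, noise_norm, lambdas)
x_reg = tikhonov_solve(A, b_noisy, lam_star)
x_naive = np.linalg.solve(A, b_noisy)  # no regularization

residual = np.linalg.norm(A @ x_reg - b_noisy)
err_reg = np.abs(x_reg - x_true).max()
err_naive = np.abs(x_naive - x_true).max()
print(f"lambda = {lam_star:.3e}, residual = {residual:.3e}")
print(f"max error: regularized = {err_reg:.3e}, unregularized = {err_naive:.3e}")
```

In this sketch the noise norm is known exactly because the noise is synthetic. With measured data it can only be estimated, and a safety factor `tau` slightly larger than 1 is a common choice. An L-curve criterion could be substituted for `discrepancy_lambda` without changing `tikhonov_solve`.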

6 Conclusion

In this work, a meshless local radial point interpolation method was successfully employed to solve the IHCP with a nonlinear source term. The problem is discretized numerically using the FDM, and the Tikhonov regularization method is used to stabilize the solution. Two illustrative examples were solved numerically, and they demonstrate the validity and applicability of the technique. Although the numerical method and results have been presented for the one-dimensional IHCP, the proposed meshless method can be extended to higher dimensions in a similar way. Future work will investigate the IHCP in higher dimensions (Arora and Dabas 2019).

Data availability

The data that support the findings of this study are available from the corresponding author, Ahmad Jafarabadi, upon reasonable request.

References

Arora S, Dabas J (2019) Inverse heat conduction problem in two-dimensional anisotropic medium. Int J Appl Comput Math 5(6):161

Cannon JR (1984) The one-dimensional heat equation, vol 23. Cambridge University Press, Cambridge

Cannon JR, Du Chateau P (1998) Structural identification of an unknown source term in a heat equation. Inverse Problems 14(3):535

Dehghan M, Ghesmati A (2010) Numerical simulation of two-dimensional sine-Gordon solitons via a local weak meshless technique based on the radial point interpolation method (RPIM). Comput Phys Commun 181(4):772–786

Dehghan M, Yousefi SA, Rashedi K (2013) Ritz–Galerkin method for solving an inverse heat conduction problem with a nonlinear source term via Bernstein multi-scaling functions and cubic B-spline functions. Inverse Problems Sci Eng 21(3):500–523

Deng C, Zheng H, Fu M, Xiong J, Chen CS (2020) An efficient method of approximate particular solutions using polynomial basis functions. Eng Anal Boundary Elem 111:1–8

Fernandes AP, dos Santos MB, Guimarães G (2015) An analytical transfer function method to solve inverse heat conduction problems. Appl Math Model 39(22):6897–6914

Frankel JI, Keyhani M (1997) A global time treatment for inverse heat conduction problems. J Heat Trans 119:673–683

Grabski JK (2019) Numerical solution of non-Newtonian fluid flow and heat transfer problems in ducts with sharp corners by the modified method of fundamental solutions and radial basis function collocation. Eng Anal Boundary Elem 109:143–152

Hansen PC (1999) The L-curve and its use in the numerical treatment of inverse problems

Hao DN, Reinhardt H-J (1996) Recent contributions to linear inverse heat conduction problems. J Inverse Ill-Posed Problems 4:23–32

Hon YC, Wei T (2004) A fundamental solution method for inverse heat conduction problem. Eng Anal Boundary Elem 28(5):489–495

Hussen G et al (2022) Meshless and homotopy perturbation methods for one dimensional inverse heat conduction problem with Neumann and Robin boundary conditions. J Appl Math Inf 40(3–4):675–694

Ismail S et al (2017) Meshless collocation procedures for time-dependent inverse heat problems. Int J Heat Mass Transf 113:1152–1167

Jonas P, Louis AK (2000) Approximate inverse for a one-dimensional inverse heat conduction problem. Inverse Problems 16(1):175

Kanca F, Ismailov MI (2012) The inverse problem of finding the time-dependent diffusion coefficient of the heat equation from integral overdetermination data. Inverse Problems Sci Eng 20(4):463–476

Krawczyk-Stańdo D, Rudnicki M (2007) Regularization parameter selection in discrete ill-posed problems—the use of the U-curve. Int J Appl Math Comput Sci 17(2):157–164

Ku C-Y, Liu C-Y, Xiao J-E, Hsu S-M, Yeih W (2021) A collocation method with space–time radial polynomials for inverse heat conduction problems. Eng Anal Boundary Elem 122:117–131

Lesnic D, Elliott L (1999) The decomposition approach to inverse heat conduction. J Math Anal Appl 232(1):82–98

Lesnic D, Elliott L, Ingham DB (1996) Application of the boundary element method to inverse heat conduction problems. Int J Heat Mass Transf 39(7):1503–1517

Liu J (1996) A stability analysis on Beck’s procedure for inverse heat conduction problems. J Comput Phys 123(1):65–73

Morozov VA (1966) On the solution of functional equations by the method of regularization. Dokl Akad Nauk 167:510–512

Shen S-Y (1999) A numerical study of inverse heat conduction problems. Comput Math Appl 38(7–8):173–188

Shidfar A, Karamali GR, Damirchi J (2006) An inverse heat conduction problem with a nonlinear source term. Nonlinear Anal Theory Methods Appl 65(3):615–621

Shivanian E, Jafarabadi A (2017a) Inverse Cauchy problem of annulus domains in the framework of spectral meshless radial point interpolation. Eng Comput 33(3):431–442

Shivanian E, Jafarabadi A (2017b) Numerical solution of two-dimensional inverse force function in the wave equation with nonlocal boundary conditions. Inverse Problems Sci Eng 25(12):1743–1767

Shivanian E, Jafarabadi A (2018a) The numerical solution for the time-fractional inverse problem of diffusion equation. Eng Anal Boundary Elem 91:50–59

Shivanian E, Jafarabadi A (2018b) An inverse problem of identifying the control function in two and three-dimensional parabolic equations through the spectral meshless radial point interpolation. Appl Math Comput 325:82–101

Smith GD (1985) Numerical solution of partial differential equations: finite difference methods. Oxford University Press, Oxford

Wang JG, Liu GR (2002) A point interpolation meshless method based on radial basis functions. Int J Numer Methods Eng 54(11):1623–1648

Xiong P, Deng J, Tao L, Qi L, Liu Y, Zhang Y (2020) A sequential conjugate gradient method to estimate heat flux for nonlinear inverse heat conduction problem. Ann Nucl Energy 149:107798

Yaparova N (2014) Numerical methods for solving a boundary-value inverse heat conduction problem. Inverse Problems Sci Eng 22(5):832–847

Yousefi SA, Lesnic D, Barikbin Z (2012) Satisfier function in Ritz–Galerkin method for the identification of a time-dependent diffusivity. J Inverse Ill-Posed Problems 20(5–6):701–722

Acknowledgements

The authors would like to thank the anonymous referees for their valuable comments and helpful suggestions, which improved the quality of this paper.

Additional information

Communicated by Antonio José Silva Neto.

About this article

Cite this article

Dinmohammadi, A., Jafarabadi, A. Inverse heat conduction problem with a nonlinear source term by a local strong form of meshless technique based on radial point interpolation method. Comp. Appl. Math. 42, 284 (2023). https://doi.org/10.1007/s40314-023-02414-7

DOI: https://doi.org/10.1007/s40314-023-02414-7

Keywords

- Meshless local radial point interpolation method

- Radial basis function

- Inverse heat conduction problem

- Surface heat flux