Abstract

In this paper, we introduce a new iterative algorithm for approximating a common element of the set of solutions of an equilibrium problem, a common zero of a finite family of monotone operators and the set of fixed points of nonexpansive mappings in Hadamard spaces. We also give numerical examples to solve a nonconvex optimization problem in a Hadamard space to support our main result.


1 Introduction

Equilibrium problems were originally studied in Blum and Oettli (1994) as a unifying class of variational problems. Let K be a nonempty closed convex subset of a Hadamard space X and let \(f: K\times K\rightarrow {\mathbb {R}}\) be a bifunction. The equilibrium problem is to find \(x\in K\) such that

$$\begin{aligned} f(x,y)\ge 0\quad \text {for all }y\in K. \end{aligned} \qquad (1.1)$$

The solution set of the equilibrium problem (1.1) is denoted by EP(f, K). Equilibrium problems and their generalizations are important tools for solving problems arising in linear and nonlinear programming, variational inequalities, complementarity problems, optimization problems and fixed point problems, and they have been widely applied in physics, structural analysis, management science and economics. An extragradient method for equilibrium problems in a Hilbert space has been studied in Quoc et al. (2008). It has the following form:

Under certain assumptions, the weak convergence of the sequence \(\{x_n\}\) to a solution of the equilibrium problem has been established. In recent years, several algorithms for solving equilibrium problems, variational inequalities and minimization problems have been extended from the Hilbert space framework to the more general setting of Riemannian manifolds, especially Hadamard manifolds and the Hilbert unit ball. This generalization is motivated by the fact that several nonconvex problems may be viewed as convex problems from this perspective. Equilibrium problems in Hadamard spaces were recently investigated in (Iusem and Mohebbi 2020; Khatibzadeh and Mohebbi 2019, 2021; Khatibzadeh and Ranjbar 2017; Kumam and Chaipunya 2017). Khatibzadeh and Mohebbi (2019) studied \(\Delta \)-convergence and strong convergence of the sequence generated by the extragradient method for pseudo-monotone equilibrium problems in Hadamard spaces. Furthermore, Khatibzadeh and Mohebbi (2021) proved that the sequence generated by the proximal point algorithm \(\Delta \)-converges to an equilibrium point of a pseudo-monotone bifunction in Hadamard spaces, and that it converges strongly under additional assumptions on the bifunction.
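To make the two-step structure of an extragradient iteration concrete, the following minimal Python sketch runs it in the simplest Hilbert space, \(X={\mathbb {R}}\), with \(K=[-1,2]\). The bifunction \(f(x,y)=x(y-x)\), the constant step size and all function names are assumptions chosen for illustration; they are not taken from Quoc et al. (2008) or from the algorithm proposed in this paper. For this f, each subproblem \(\textrm{argmin}_{y\in K}\{\lambda f(u,y)+\frac{1}{2}|y-x_n|^2\}\) has the closed form \(P_K(x_n-\lambda u)\), where \(P_K\) is the projection onto K.

```python
def project(v, lo=-1.0, hi=2.0):
    """Metric projection of v onto the interval K = [lo, hi]."""
    return min(max(v, lo), hi)


def extragradient(x1, lam=0.5, iters=60):
    """Two-step extragradient iteration for f(x, y) = x * (y - x) on K.

    Hypothetical illustration: lam is a constant step size in (0, 1).
    """
    x = x1
    for _ in range(iters):
        # y_n = argmin over K of lam * f(x_n, y) + |y - x_n|^2 / 2
        y = project(x - lam * x)
        # x_{n+1} = argmin over K of lam * f(y_n, y) + |y - x_n|^2 / 2
        x = project(x - lam * y)
    return x


x_final = extragradient(1.8)
print(x_final)
```

For this choice the only equilibrium point in K is 0, and with \(\lambda =0.5\) each iteration multiplies the iterate by 0.75, so the sequence contracts to 0 geometrically.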

One of the most important problems in monotone operator theory is approximating a zero of a monotone operator. Martinet (1970) introduced one of the most popular methods for this problem in Hilbert spaces, the proximal point algorithm; see also (Bruck and Reich 1977; Rockafellar 1976). Khatibzadeh and Ranjbar (2017) generalized monotone operators and their resolvents to Hadamard spaces by using duality theory. Very recently, Moharami and Eskandani (2020) proposed the following hybrid extragradient method for approximating a common element of the set of solutions of an equilibrium problem for a single bifunction f and the set of common zeros of a finite family of monotone operators \(A_1,A_2,\ldots ,A_N\) in Hadamard spaces:

where \(\{\alpha _n\}\), \(\{\lambda _n\}\) and \(\{\gamma _n^i\}\) are sequences satisfying some conditions. They proved a strong convergence theorem for the sequence \(\{x_n\}\) generated by the above scheme.

In recent years, the problem of finding a common element of the sets of solutions of equilibrium problems, zero-point problems and fixed point problems in the framework of Hilbert spaces, Banach spaces and Hadamard spaces has been intensively studied by many authors; see, for instance, (Alakoya and Mewomo 2022; Alakoya et al. 2022a, b; Eskandani and Raeisi 2019; Iusem and Mohebbi 2020; Khatibzadeh and Ranjbar 2017; Kumam and Chaipunya 2017; Li et al. 2009; Moharami and Eskandani 2020; Ogwo et al. 2021; Uzor et al. 2022).

Motivated by the above results, in this paper we propose a new iterative algorithm, based on a modified hybrid extragradient method, for finding a common element of the set of solutions of an equilibrium problem, the set of common zeros of a finite family of monotone operators and the set of fixed points of a nonexpansive mapping in Hadamard spaces. A \(\Delta \)-convergence theorem is established under suitable assumptions. We also provide numerical examples that illustrate the efficiency of the proposed algorithm and support our main results.

2 Preliminaries

In this section, we collect basic concepts, definitions, notations and some useful lemmas on Hadamard spaces for use in the next sections. Let (X, d) be a metric space. A geodesic from x to y is a map \(\gamma \) from the closed interval \([0,d(x,y)]\subset {\mathbb {R}}\) to X such that \(\gamma (0)=x,\gamma (d(x,y))=y\) and \(d(\gamma (t_1),\gamma (t_2))=|t_1-t_2|\) for all \(t_1,t_2\in [0,d(x,y)].\) The image of \(\gamma \) is called a geodesic (or metric) segment joining x and y. When it is unique, this geodesic segment is denoted by [x, y]. The space X is said to be a geodesic metric space if every two points of X are joined by a geodesic, and X is said to be a uniquely geodesic metric space if there is exactly one geodesic joining x and y for each \(x,y\in X\). A subset C of X is said to be convex if, for any two points \(x,y\in C\), the geodesic joining x and y is contained in C. Let X be a uniquely geodesic metric space. For each \(x,y\in X\) and for each \(\alpha \in [0,1]\), there exists a unique point \(z\in [x,y]\) such that \(d(x, z) = (1-\alpha ) d(x, y)\) and \(d(y, z) = \alpha d(x, y)\). We denote the unique point z by \(\alpha x \oplus (1-\alpha )y\). A geodesic metric space (X, d) is a CAT(0) space if it satisfies the \((CN^*)\) inequality (Dhompongsa and Panyanak 2008):

$$\begin{aligned} d(z,\alpha x\oplus (1-\alpha )y)^2\le \alpha d(z,x)^2+(1-\alpha )d(z,y)^2-\alpha (1-\alpha )d(x,y)^2 \end{aligned}$$

for all \(x,y,z\in X\) and \(\alpha \in [0,1]\). In particular, if x, y, z are points in X and \(\alpha \in [0,1]\), then we have

It is well known that a CAT(0) space is a uniquely geodesic space. A complete CAT(0) space is called a Hadamard space. Hilbert spaces and \({\mathbb {R}}\)-trees are two basic examples of Hadamard spaces, which in some sense represent the most extreme cases: curvature 0 and curvature \(-\infty \). The most illuminating instances of Hadamard spaces are Hadamard manifolds. A Hadamard manifold is a complete simply connected Riemannian manifold of nonpositive sectional curvature. The class of Hadamard manifolds includes hyperbolic spaces, manifolds of positive definite matrices, the complex Hilbert ball with the hyperbolic metric and many other spaces (see Goebel and Reich 1984; Kohlenbach 2015; Tits 1977).
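As a quick sanity check of the geodesic notation, the following Python sketch evaluates the \((CN^*)\) inequality in its usual form, \(d(z,\alpha x\oplus (1-\alpha )y)^2\le \alpha d(z,x)^2+(1-\alpha )d(z,y)^2-\alpha (1-\alpha )d(x,y)^2\), in the Euclidean plane. The choice of \({\mathbb {R}}^2\) and all function names are assumptions made for illustration. In a Hilbert space the point \(\alpha x\oplus (1-\alpha )y\) is the ordinary convex combination and the inequality holds with equality, so the gap computed below should vanish up to rounding.

```python
import math
import random


def dist(p, q):
    """Euclidean distance in R^2 (a Hilbert space, hence a CAT(0) space)."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def geodesic_point(alpha, x, y):
    """The point alpha*x (+) (1-alpha)*y on the segment [x, y]."""
    return (alpha * x[0] + (1 - alpha) * y[0],
            alpha * x[1] + (1 - alpha) * y[1])


def cn_star_gap(x, y, z, alpha):
    """Right-hand side minus left-hand side of the (CN*) inequality."""
    m = geodesic_point(alpha, x, y)
    rhs = (alpha * dist(z, x) ** 2 + (1 - alpha) * dist(z, y) ** 2
           - alpha * (1 - alpha) * dist(x, y) ** 2)
    return rhs - dist(z, m) ** 2


random.seed(0)
gaps = []
for _ in range(1000):
    x, y, z = [(random.uniform(-5, 5), random.uniform(-5, 5)) for _ in range(3)]
    gaps.append(cn_star_gap(x, y, z, random.random()))
max_abs_gap = max(abs(g) for g in gaps)
print(max_abs_gap)
```

In a space of strictly negative curvature the gap would be positive rather than zero; the flat case is used here only because the geodesics have a closed form.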

In the following examples, we give some Hadamard spaces.

Example 2.1

Consider \({\mathcal {H}}=\{(x,y)\in {\mathbb {R}}^2: y^2-x^2=1\,\text {and }y>0\}\). Let d be a metric defined by the function \(d:{\mathcal {H}}\times {\mathcal {H}}\rightarrow {\mathbb {R}}\) that assigns to each pair of vectors \(u=(u_1,u_2)\) and \(v=(v_1,v_2)\) the unique nonnegative number \(d(u,v)\ge 0\) such that

It is known that, in general, the metric space \(({\mathcal {H}},d)\) is a Hadamard space and also a one-dimensional hyperbolic space (see Bridson and Haefliger 1999; Kaewkhao et al. 2015). Furthermore, it is easy to see that \(({\mathcal {H}},d)\) is an \({\mathbb {R}}\)-tree.

Example 2.2

Let \({\mathcal {M}}=P(n,{\mathbb {R}})\) be the space of \((n\times n)\) symmetric positive definite matrices endowed with the Riemannian metric

for all \(A,B\in T_E({\mathcal {M}})\) and every \(E\in {\mathcal {M}}\), where \(T_E({\mathcal {M}})\) denotes the tangent space at \(E\in {\mathcal {M}}\). Then \(({\mathcal {M}},\langle A,B \rangle _E)\) is a Hadamard space (see Khatibzadeh and Mohebbi 2019).

Example 2.3

Let \(Y=\{(x,e^x): x\in {\mathbb {R}}\}\) and \(X_n=\{(n,y): y\ge e^n\}\) for each \(n\in {\mathbb {Z}}\). Set \({\mathcal {M}}=Y\cup \bigcup \limits _{n \in {{\mathbb {Z}}}} {X_n }\), equipped with a metric \(d: {\mathcal {M}}\times {\mathcal {M}} \rightarrow [0,\infty )\) defined for all \(x=(x_1,x_2),y=(y_1,y_2)\in {\mathcal {M}}\) by

where \({\dot{\gamma }}\) is the derivative of the curve \(\gamma : {\mathbb {R}}\rightarrow {\mathcal {M}}\) given by \(\gamma (t)=(t,e^t)\) for each \(t\in {\mathbb {R}}\). Then \(({\mathcal {M}},d)\) is a Hadamard space (see Chaipunya and Kumam 2017).

Let K be a nonempty subset of a Hadamard space X and \(T: K\rightarrow K\) be a mapping. The fixed point set of T is denoted by F(T), that is, \(F(T) = \{x\in K: x = Tx\}\). Recall that a mapping T is called nonexpansive if

$$\begin{aligned} d(Tx,Ty)\le d(x,y) \end{aligned}$$

for all \(x,y\in K\).

Fixed point theory in Hadamard spaces was first studied by Kirk (2003). Many authors have since published papers on the existence and convergence of fixed points for nonlinear mappings in such spaces (e.g., see Bačák and Reich 2014; Nanjaras et al. 2010; Phuengrattana and Suantai 2012, 2013; Reich and Salinas 2015, 2016, 2017; Reich and Shafrir 1990; Sopha and Phuengrattana 2015).

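The notion of the asymptotic center can be introduced in the general setting of a Hadamard space X as follows: Let \(\{x_n\}\) be a bounded sequence in X. We define a mapping \(r\left( \cdot ,\{x_{n}\} \right) :X\rightarrow [0,\infty )\) by

$$\begin{aligned} r\left( x,\{x_{n}\} \right) =\limsup _{n\rightarrow \infty }d(x,x_n). \end{aligned}$$

The asymptotic radius of \(\{x_{n}\} \) is given by

$$\begin{aligned} r\left( \{x_{n}\} \right) =\inf \left\{ r\left( x,\{x_{n}\} \right) : x\in X\right\} , \end{aligned}$$

and the asymptotic center of \(\{x_{n}\}\) is the set

$$\begin{aligned} A\left( \{x_{n}\} \right) =\left\{ x\in X: r\left( x,\{x_{n}\} \right) =r\left( \{x_{n}\} \right) \right\} . \end{aligned}$$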

It is known that in a Hadamard space, the asymptotic center \(A\left( {\left\{ {x_n } \right\} } \right) \) consists of exactly one point (Dhompongsa et al. 2006). A sequence \(\{x_n\}\) in a Hadamard space X is said to \(\Delta \)-converge to \(x\in X\) if x is the unique asymptotic center of \(\{u_n\}\) for every subsequence \(\{u_n\}\) of \(\{x_n\}\). It is well known that every bounded sequence in a Hadamard space has a \(\Delta \)-convergent subsequence (Kirk and Panyanak 2008).

Lemma 2.4

(Ranjbar and Khatibzadeh 2016) Let X be a Hadamard space and \(\{x_n\}\) be a sequence in X. Suppose there exists a nonempty subset F of X satisfying:

- (i) For every \(z\in F\), \(\lim _{n\rightarrow \infty }d(x_n,z)\) exists.

- (ii) If a subsequence \(\{x_{n_k}\}\) of \(\{x_n\}\) \(\Delta \)-converges to \(x\in X\), then \(x\in F\).

Then there exists \(p\in F\) such that \(\{x_n\}\) \(\Delta \)-converges to p.

Lemma 2.5

(Dhompongsa and Panyanak 2008) Let K be a nonempty closed and convex subset of a Hadamard space X, \(T:K\rightarrow K\) be a nonexpansive mapping and \(\{x_n\}\) be a bounded sequence in K such that \(\lim _{n\rightarrow \infty } d(x_n,Tx_n)=0\) and \(\{x_n\}\) \(\Delta \)-converges to x. Then \(x=Tx\).

A function \(g:K\rightarrow (-\infty ,\infty ]\) defined on a nonempty convex subset K of a Hadamard space is convex if \(g(tx\oplus (1-t)y)\le tg(x) + (1-t)g(y)\) for all \(x,y\in K\) and \(t\in (0,1)\). We say that a function g defined on K is lower semicontinuous (or upper semicontinuous) at a point \(x\in K\) if

$$\begin{aligned} g(x)\le \liminf _{n\rightarrow \infty }g(x_n)\quad \left( \text {or }g(x)\ge \limsup _{n\rightarrow \infty }g(x_n)\right) \end{aligned}$$

for each sequence \(\{x_n\}\) such that \(\lim _{n\rightarrow \infty }x_n= x\). A function g is said to be lower semicontinuous (or upper semicontinuous) on K if it is lower semicontinuous (or upper semicontinuous) at every point of K.

In 2010, Kakavandi and Amini (2010) introduced the concept of quasilinearization in a Hadamard space X, see also (Berg and Nikolaev 2008), as follows:

Denote a pair \((a,b)\in X\times X\) by \(\overrightarrow{ab}\) and call it a vector. The quasilinearization is a map \(\langle \cdot ,\cdot \rangle : (X\times X)\times (X\times X)\rightarrow {\mathbb {R}}\) defined by

$$\begin{aligned} \langle \overrightarrow{ab},\overrightarrow{cd} \rangle =\frac{1}{2}\left( d(a,d)^2+d(b,c)^2-d(a,c)^2-d(b,d)^2\right) \end{aligned}$$

for any \(a,b,c,d\in X\). We say that X satisfies the Cauchy–Schwarz inequality if

$$\begin{aligned} \langle \overrightarrow{ab},\overrightarrow{cd} \rangle \le d(a,b)d(c,d) \end{aligned}$$

for any \(a,b,c,d\in X\). It is known that a geodesically connected metric space is a CAT(0) space if and only if it satisfies the Cauchy–Schwarz inequality; see (Berg and Nikolaev 2008). Later, Kakavandi and Amini (2010) defined a pseudometric D on \({\mathbb {R}}\times X\times X\) by

where \(\Theta : {\mathbb {R}}\times X\times X\rightarrow C(X;{\mathbb {R}})\) is defined by \(\Theta (t,a,b)(x) = t \langle \overrightarrow{ab},\overrightarrow{ax} \rangle \) for all \(x\in X\), and \(C(X;{\mathbb {R}})\) is the space of all continuous real-valued functions on X. For a Hadamard space X, one obtains that \(D((t,a,b),(s,u,v))=0\) if and only if \(t \langle \overrightarrow{ab},\overrightarrow{xy} \rangle = s \langle \overrightarrow{uv},\overrightarrow{xy} \rangle \) for all \(x,y\in X\). Thus, D induces an equivalence relation on \({\mathbb {R}}\times X\times X\), where the equivalence class of (t, a, b) is

The set \(X^* = \{[t \overrightarrow{ab}]: (t,a,b)\in {\mathbb {R}}\times X\times X\}\) is a metric space with metric \(D([t\overrightarrow{ab}],[s\overrightarrow{cd}]):=D((t,a,b),(s,c,d))\), which is called the dual metric space of X. It is clear that \([\overrightarrow{aa}]=[\overrightarrow{bb}]\) for all \(a,b\in X\). Fixing \(o\in X\), we write \(0=[\overrightarrow{oo}]\) for the zero of the dual space. Note that \(X^*\) acts on \(X\times X\) by \(\langle x^*,\overrightarrow{xy} \rangle = t \langle \overrightarrow{ab},\overrightarrow{xy} \rangle \), \((x^*=[t\overrightarrow{ab}]\in X^*,\,x,y\in X)\).
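To connect the quasilinearization with a familiar object, the following Python sketch evaluates its standard form, \(\langle \overrightarrow{ab},\overrightarrow{cd} \rangle =\frac{1}{2}(d(a,d)^2+d(b,c)^2-d(a,c)^2-d(b,d)^2)\), in the Euclidean plane, where it should coincide with the inner product of the vectors \(b-a\) and \(d-c\). The sample points and the function names are assumptions chosen for illustration.

```python
import math


def dist(p, q):
    """Euclidean distance in R^2."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def quasilinearization(a, b, c, d):
    """<ab, cd> = (d(a,d)^2 + d(b,c)^2 - d(a,c)^2 - d(b,d)^2) / 2."""
    return 0.5 * (dist(a, d) ** 2 + dist(b, c) ** 2
                  - dist(a, c) ** 2 - dist(b, d) ** 2)


def inner(a, b, c, d):
    """Euclidean inner product of the vectors b - a and d - c."""
    return (b[0] - a[0]) * (d[0] - c[0]) + (b[1] - a[1]) * (d[1] - c[1])


a, b, c, d = (0.0, 0.0), (3.0, 4.0), (1.0, -2.0), (2.0, 5.0)
q = quasilinearization(a, b, c, d)
ip = inner(a, b, c, d)
cauchy_schwarz_holds = q <= dist(a, b) * dist(c, d)
print(q, ip, cauchy_schwarz_holds)
```

The quasilinearization uses only distances, which is why it remains meaningful in a Hadamard space where no linear structure is available.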

Let X be a Hadamard space with dual \(X^*\) and let \(A: X \rightrightarrows X^*\) be a multi-valued operator with domain \(D(A):=\{x\in X| Ax\ne \emptyset \}\), range \(R(A):=\cup _{x\in X}Ax\), \(A^{-1}(x^*):=\{x\in X| x^*\in Ax\}\) and graph \(gra(A):=\{(x,x^*)\in X\times X^* | x\in D(A), x^*\in Ax\}\). A multi-valued operator \(A: X \rightrightarrows X^*\) is said to be monotone (Khatibzadeh and Mohebbi 2019) if the inequality \(\langle x^*-y^*, \overrightarrow{yx}\rangle \ge 0\) holds for every \((x,x^*),(y,y^*)\in gra(A)\). A monotone operator \(A: X \rightrightarrows X^*\) is maximal if there exists no monotone operator \(B: X \rightrightarrows X^*\) such that gra(B) properly contains gra(A), that is, for any \((y,y^*)\in X\times X^*\), the inequality \(\langle x^*-y^*, \overrightarrow{yx}\rangle \ge 0\) for all \((x,x^*)\in gra(A)\) implies that \(y^*\in Ay\). The resolvent of A of order \(\gamma >0\) is the multi-valued mapping \(J^A_{\gamma }: X \rightrightarrows X\) defined by \(J^A_{\gamma }(x):=\{z\in X | [\frac{1}{\gamma } \overrightarrow{zx}]\in Az\}\). Indeed,

where o is an arbitrary member of X and \(\overrightarrow{oI}(x):=[\overrightarrow{ox}]\). It is obvious that this definition is independent of the choice of o.

Theorem 2.6

(Khatibzadeh and Ranjbar 2017) Let X be a Hadamard space with dual \(X^*\) and let \(A: X \rightrightarrows X^*\) be a multi-valued mapping. Then

- (i) For any \(\gamma >0\), \(R(J_\gamma ^A)\subset D(A)\) and \(F(J_\gamma ^A)=A^{-1}(0)\).

- (ii) If A is monotone, then \(J_\gamma ^A\) is single-valued on its domain and

$$\begin{aligned} d(J_\gamma ^Ax,J_\gamma ^Ay)^2\le \langle \overrightarrow{J_\gamma ^AxJ_\gamma ^Ay},\overrightarrow{xy}\rangle ,\, \forall x,y\in D(J_\gamma ^A). \end{aligned}$$

In particular, \(J_\gamma ^A\) is a nonexpansive mapping.

- (iii) If A is monotone and \(0<\gamma \le \mu \), then \(d(J_\gamma ^Ax,J_\mu ^Ax)^2\le \frac{\mu -\gamma }{\mu +\gamma }d(x,J_\mu ^Ax)^2\), which implies that \(d(x,J_\gamma ^Ax)\le 2d(x,J_\mu ^Ax)\).
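The three statements of Theorem 2.6 can be checked numerically in the Hilbert space \(X={\mathbb {R}}\). The sketch below is an illustration under stated assumptions: it takes \(A=\partial |\cdot |\), identifies \(X^*\) with \({\mathbb {R}}\) so that \([\frac{1}{\gamma }\overrightarrow{zx}]\in Az\) reads \((x-z)/\gamma \in Az\), and uses the well-known closed form of the resulting resolvent (soft-thresholding). The sample grid, the tolerance and the function name are hypothetical choices.

```python
def resolvent_abs(x, gamma):
    """Resolvent J_gamma of A = subdifferential of |.| on R (soft-thresholding)."""
    if x > gamma:
        return x - gamma
    if x < -gamma:
        return x + gamma
    return 0.0


points = [k / 4.0 for k in range(-20, 21)]      # sample points in [-5, 5]
pairs = [(0.5, 0.5), (0.5, 1.0), (1.0, 3.0)]    # (gamma, mu) with gamma <= mu
tol = 1e-12

# Part (i): the fixed points of J_gamma are the zeros of A, here only 0.
fixed_points = [x for x in points if resolvent_abs(x, 1.0) == x]

# Part (ii): d(Jx, Jy)^2 <= <JxJy, xy>, which in R is (Jx - Jy) * (x - y).
firmly_nonexpansive = all(
    (resolvent_abs(x, g) - resolvent_abs(y, g)) ** 2
    <= (resolvent_abs(x, g) - resolvent_abs(y, g)) * (x - y) + tol
    for g, _ in pairs for x in points for y in points
)

# Part (iii): d(J_gamma x, J_mu x)^2 <= (mu - gamma)/(mu + gamma) * d(x, J_mu x)^2.
resolvent_comparison = all(
    (resolvent_abs(x, g) - resolvent_abs(x, m)) ** 2
    <= (m - g) / (m + g) * (x - resolvent_abs(x, m)) ** 2 + tol
    for g, m in pairs for x in points
)
print(fixed_points, firmly_nonexpansive, resolvent_comparison)
```

A grid check of this kind is only evidence on the sampled points; the theorem itself covers all points and all monotone operators.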

It is well known that if T is a nonexpansive mapping on a subset K of a Hadamard space X, then F(T) is closed and convex. Thus, if A is a monotone operator on a Hadamard space, then, by parts (i) and (ii) of Theorem 2.6, \(A^{-1}(0)\) is closed and convex. Also, by using part (ii) of Theorem 2.6 together with the definition of the quasilinearization, for all \(u\in F(J_\gamma ^A)\) and \(x\in D(J_\gamma ^A)\), we have

$$\begin{aligned} d(J_\gamma ^Ax,x)^2\le d(u,x)^2-d(u,J_\gamma ^Ax)^2. \end{aligned}$$

We say that \(A: X \rightrightarrows X^*\) satisfies the range condition if, for every \(\lambda >0\), \(D(J^A_{\lambda })=X\). It is known that if A is a maximal monotone operator on a Hilbert space H, then \(R(I+\lambda A)=H\) for all \(\lambda >0\). Thus, every maximal monotone operator A on a Hilbert space satisfies the range condition. Also, as shown in Li et al. (2009), if A is a maximal monotone operator on a Hadamard manifold, then A satisfies the range condition.

For solving the equilibrium problem, we assume that the bifunction \(f:K\times K \rightarrow {\mathbb {R}}\) satisfies the following assumption.

Assumption 2.7

Let K be a nonempty closed convex subset of a Hadamard space X. Let \(f:K\times K \rightarrow {\mathbb {R}}\) be a bifunction satisfying the following conditions:

- \((B_1)\): \(f(x,\cdot ):K\rightarrow {\mathbb {R}}\) is convex and lower semicontinuous for all \(x\in K\).

- \((B_2)\): \(f(\cdot ,y)\) is \(\Delta \)-upper semicontinuous for all \(y\in K\).

- \((B_3)\): f is Lipschitz-type continuous, i.e. there exist two positive constants \(c_1\) and \(c_2\) such that

$$\begin{aligned} f(x,y) + f(y,z) \ge f(x,z) - c_1d(x,y)^2-c_2d(y,z)^2,\,\,\text {for all }x,y,z\in K. \end{aligned}$$

- \((B_4)\): f is pseudo-monotone, i.e. for every \(x,y\in K\), \(f(x,y)\ge 0\) implies \(f(y,x)\le 0\).
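For intuition about conditions \((B_3)\) and \((B_4)\), the following Python sketch tests them on a grid for the bifunction \(f(x,y)=x(y-x)\) on \({\mathbb {R}}\). This bifunction, the constants \(c_1=c_2=\frac{1}{2}\) and the grid are assumptions chosen for illustration and do not come from the paper. For this f one has \(f(x,y)+f(y,z)-f(x,z)=(x-y)(y-z)\ge -\frac{1}{2}(x-y)^2-\frac{1}{2}(y-z)^2\) and \(f(x,y)+f(y,x)=-(x-y)^2\le 0\), so both checks are expected to succeed.

```python
import itertools


def f(x, y):
    """Illustrative bifunction on R (an assumption, not the paper's example)."""
    return x * (y - x)


grid = [k / 2.0 for k in range(-6, 7)]   # sample points in [-3, 3]
c1 = c2 = 0.5
tol = 1e-12

# (B3): f(x,y) + f(y,z) >= f(x,z) - c1*d(x,y)^2 - c2*d(y,z)^2 on the grid.
lipschitz_type = all(
    f(x, y) + f(y, z) >= f(x, z) - c1 * (x - y) ** 2 - c2 * (y - z) ** 2 - tol
    for x, y, z in itertools.product(grid, repeat=3)
)

# (B4): f(x,y) >= 0 implies f(y,x) <= 0 on the grid.
pseudo_monotone = all(
    f(y, x) <= 0
    for x, y in itertools.product(grid, repeat=2)
    if f(x, y) >= 0
)
print(lipschitz_type, pseudo_monotone)
```

With \(c_1=c_2=\frac{1}{2}\), a step-size bound of the form \(\lambda _n<\min \{\frac{1}{2c_1},\frac{1}{2c_2}\}\) becomes \(\lambda _n<1\) for this bifunction.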

Remark 2.8

It is known from Moharami and Eskandani (2020) that if a bifunction f satisfies conditions \((B_1)\), \((B_2)\) and \((B_4)\) of Assumption 2.7, then EP(f, K) is closed and convex.

3 Main results

In this section, we prove \(\Delta \)-convergence theorems for finding a common element of the set of solutions to an equilibrium problem, a common zero of a finite family of monotone operators and the set of fixed points of nonexpansive mappings in Hadamard spaces. In order to prove our main results, the following two lemmas are needed.

Lemma 3.1

Let K be a nonempty closed convex subset of a Hadamard space X and let \(f:K\times K \rightarrow {\mathbb {R}}\) be a bifunction satisfying Assumption 2.7. Let \(A_1,A_2,\ldots ,A_N: X \rightrightarrows X^*\) be N multi-valued monotone operators that satisfy the range condition with \(D(A_N)\subset K\) and let \(T:K\rightarrow K\) be a nonexpansive mapping. Assume that \(\Theta = F(T) \cap EP(f,K) \cap \bigcap _{i=1}^N A_i^{-1}(0) \ne \emptyset \). Let \(x_1\in K\) and \(\{x_n\}\) be a sequence generated by

where \(\{\alpha _n\}, \{\beta _n\} \subset (0,1)\), \(0<\alpha \le \lambda _n\le \beta < \min \{\frac{1}{2c_1},\frac{1}{2c_2}\}\) and \(\{\gamma _n^i\} \subset (0,\infty )\) for all \(i=1,2,\ldots ,N\). If \(x^*\in \Theta \), then we have the following:

- (i) \(f(y_n,w_n)\le \frac{1}{2\lambda _n}[d(z_n,x^*)^2-d(z_n,w_n)^2-d(w_n,x^*)^2]\);

- (ii) \(\left( \frac{1}{2\lambda _n}-c_1\right) d(z_n,y_n)^2 +\left( \frac{1}{2\lambda _n}-c_2\right) d(y_n,w_n)^2-\frac{1}{2\lambda _n}d(z_n,w_n)^2\le f(y_n,w_n)\);

- (iii) \(d(w_n,x^*)^2\le d(z_n,x^*)^2-(1-2c_1\lambda _n)d(z_n,y_n)^2-(1-2c_2\lambda _n)d(y_n,w_n)^2\).

Proof

The proof is similar to that of (Khatibzadeh and Mohebbi 2019, Lemma 2.1). For the convenience of the reader, we include the details.

(i) Take \(x^*\in \Theta \). By letting \(y=tw_n\oplus (1-t)x^*\) such that \(t\in [0,1)\), we have

Since \(f(x^*,y_n)\ge 0\), pseudo-monotonicity of f implies that \(f(y_n,x^*)\le 0\). Thus, we can write the above inequality as

Now, if \(t\rightarrow 1^-\), we get

(ii) By letting \(y=ty_n\oplus (1-t)w_n\) such that \(t\in [0,1)\), we have

which implies that

Now, if \(t\rightarrow 1^-\), we get

Also, by \((B_3)\), f is Lipschitz-type continuous with constants \(c_1\) and \(c_2\), hence we have

It follows by (3.2) and (3.3) that

(iii) By (i) and (ii), we can conclude that

This completes the proof. \(\square \)

Lemma 3.2

Let K be a nonempty closed convex subset of a Hadamard space X and let \(f:K\times K \rightarrow {\mathbb {R}}\) be a bifunction satisfying Assumption 2.7. Let \(A_1,A_2,\ldots ,A_N: X \rightrightarrows X^*\) be N multi-valued monotone operators that satisfy the range condition with \(D(A_N)\subset K\) and let \(T:K\rightarrow K\) be a nonexpansive mapping. Assume that \(\Theta = F(T) \cap EP(f,K) \cap \bigcap _{i=1}^N A_i^{-1}(0) \ne \emptyset \). Let \(x_1\in K\) and \(\{x_n\}\) be a sequence generated by (3.1) where \(\{\alpha _n\}, \{\beta _n\} \subset (0,1)\), \(0<\alpha \le \lambda _n\le \beta < \min \{\frac{1}{2c_1},\frac{1}{2c_2}\}\) and \(\{\gamma _n^i\} \subset (0,\infty )\) for all \(i=1,2,\ldots ,N\). If \(x^*\in \Theta \), then we have \(d(w_n,x^*)\le d(z_n,x^*)\le d(x_n,x^*)\) and \(d(x_{n+1},x^*)\le d(x_n,x^*)\).

Proof

From the nonexpansivity of \(J_{\gamma _n^{i}}^{A_i}\) for all \(i=1,2,\ldots ,N\), we have

Using Lemma 3.1(iii), we have

This completes the proof. \(\square \)

We now state and prove our main result.

Theorem 3.3

Let K be a nonempty closed convex subset of a Hadamard space X and let \(f:K\times K \rightarrow {\mathbb {R}}\) be a bifunction satisfying Assumption 2.7. Let \(A_1,A_2,\ldots ,A_N: X \rightrightarrows X^*\) be N multi-valued monotone operators that satisfy the range condition with \(D(A_N)\subset K\) and let \(T:K\rightarrow K\) be a nonexpansive mapping. Assume that \(\Theta = F(T) \cap EP(f,K) \cap \bigcap _{i=1}^N A_i^{-1}(0) \ne \emptyset \). Let \(x_1\in K\) and \(\{x_n\}\) be a sequence generated by (3.1) where \(\{\alpha _n\}, \{\beta _n\} \subset (0,1)\) and \(\{\lambda _n\}, \{\gamma _n^i\} \subset (0,\infty )\) satisfy the following conditions:

- (C1) \(\liminf _{n\rightarrow \infty }\gamma _n^i >0\) for all \(i=1,2,\ldots ,N\),

- (C2) \(0<\alpha \le \lambda _n\le \beta < \min \{\frac{1}{2c_1},\frac{1}{2c_2}\}\),

- (C3) \(\lim _{n\rightarrow \infty }\alpha _n=0\) and \(\sum _{n=1}^{\infty }\alpha _n=\infty \),

- (C4) \(\liminf _{n\rightarrow \infty }\beta _n(1-\beta _n)>0\).

Then the sequence \(\{x_n\}\) \(\Delta \)-converges to a point of \(\Theta \).

Proof

Let \(x^*\in \Theta \). By Lemma 3.2, \(d(x_{n+1},x^*)\le d(x_n,x^*)\). Therefore \(\lim _{n\rightarrow \infty }d(x_n,x^*)\) exists for all \(x^*\in \Theta \). This shows that \(\{x_n\}\) is bounded.

Put \(S_n^i=J_{\gamma _n^{i}}^{A_i}\circ J_{\gamma _n^{i-1}}^{A_{i-1}}\circ \cdots \circ J_{\gamma _n^{1}}^{A_1}\), for \(i=1,2,\ldots ,N\) and \(n\in {\mathbb {N}}\). Then \(z_n=S_n^Nx_n\). We also assume that \(S_n^0=I\), where I is the identity operator. By the nonexpansivity of \(S_n^i\), we have \(d(S_n^ix_n,x^*)\le d(x_n,x^*)\). This implies that

Using (3.1) and Lemma 3.2, we can write

So

By the conditions (C3) and (C4), for \(i=1,2,\ldots ,N\), we have

Applying (2.1), we obtain

This implies by (3.7) that

and hence for any \(i=1,2,\ldots ,N\), we have

Then

Since \(\liminf _{n\rightarrow \infty }\gamma _n^i >0\), there exists \(\gamma _0\in {\mathbb {R}}\) such that \(\gamma _n^i\ge \gamma _0>0\) for all \(n\in {\mathbb {N}}\) and \(i=1,2,\ldots ,N\). By using Theorem 2.6, for all \(i=1,2,\ldots ,N\), we have

By (3.8), we get

Now for every \(i=1,2,\ldots ,N\), we have

This implies by (3.9) and (3.10) that

Using (3.1) and Lemma 3.2, we have

Hence

It implies by the conditions (C3) and (C4) that

Consider

Applying (3.9) and (3.12), we obtain

Let \(\{x_{n_k}\}\) be a subsequence of \(\{x_n\}\) such that \(\{x_{n_k}\}\) \(\Delta \)-converges to \(p\in K\). Using Lemma 2.5 and (3.13), we have \(p\in F(T)\). By Lemma 2.5 and (3.11), we get \(p\in A_i^{-1}(0)\) for any \(i=1,2,\ldots ,N\). So \(p\in \bigcap _{i=1}^NA_i^{-1}(0)\). To show \(p\in EP(f,K)\), we assume that \(z=\epsilon w_n\oplus (1-\epsilon )y\), where \(0<\epsilon <1\) and \(y\in K\). So we have

Therefore

Now, if \(\epsilon \rightarrow 1^-\), we obtain

Since

it implies by (3.14) that

Since \(\liminf _{n\rightarrow \infty }(1-2c_i\lambda _n)>0\) for \(i=1,2\), using Lemma 3.1(iii), we have

This implies that

Using Lemma 3.1(i), Lemma 3.1(ii), (3.16) and (3.17), we have

Since \(S_n^Nx_n=z_n\), it follows by (3.9) and (3.16) that \(\{y_{n_k}\}\) \(\Delta \)-converges to p. Now replacing n with \(n_k\) in (3.15), taking \(\limsup \) and using (3.17) and (3.18), we have

Therefore \(p\in EP(f,K)\) and so \(p\in \Theta \). This implies by Lemma 2.4 that the sequence \(\{x_n\}\) \(\Delta \)-converges to a point of \(\Theta \). \(\square \)

Taking \(T = I\), the identity mapping, in Theorem 3.3, we obtain the following convergence result.

Theorem 3.4

Let K be a nonempty closed convex subset of a Hadamard space X and let \(f:K\times K \rightarrow {\mathbb {R}}\) be a bifunction satisfying Assumption 2.7. Let \(A_1,A_2,\ldots ,A_N: X \rightrightarrows X^*\) be N multi-valued monotone operators that satisfy the range condition with \(D(A_N)\subset K\). Assume that \(\Theta = EP(f,K) \cap \bigcap _{i=1}^N A_i^{-1}(0) \ne \emptyset \). Let \(x_1\in K\) and \(\{x_n\}\) be a sequence generated by

where \(\{\alpha _n\}, \{\beta _n\} \subset (0,1)\) and \(\{\lambda _n\}, \{\gamma _n^i\} \subset (0,\infty )\) satisfy the following conditions:

- (C1) \(\liminf _{n\rightarrow \infty }\gamma _n^i >0\) for all \(i=1,2,\ldots ,N\),

- (C2) \(0<\alpha \le \lambda _n\le \beta < \min \{\frac{1}{2c_1},\frac{1}{2c_2}\}\),

- (C3) \(\lim _{n\rightarrow \infty }\alpha _n=0\) and \(\sum _{n=1}^{\infty }\alpha _n=\infty \),

- (C4) \(\liminf _{n\rightarrow \infty }\beta _n(1-\beta _n)>0\).

Then the sequence \(\{x_n\}\) \(\Delta \)-converges to a point of \(\Theta \).

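Let X be a Hadamard space with dual \(X^*\) and let \(g: X \rightarrow (-\infty , \infty ]\) be a proper function with effective domain \(D(g):= \{x: g(x) < \infty \}\). Then, the subdifferential of g is the multi-valued mapping \(\partial g: X \rightrightarrows X^*\) defined by:

$$\begin{aligned} \partial g(x)={\left\{ \begin{array}{ll} \{x^*\in X^*: g(z)-g(x)\ge \langle x^*,\overrightarrow{xz}\rangle \ \text {for all }z\in X\}, &{} \text {if }x\in D(g),\\ \emptyset , &{} \text {otherwise}. \end{array}\right. } \end{aligned}$$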

It has been proved in Kakavandi and Amini (2010) that the subdifferential \(\partial g\) of a proper, convex and lower semicontinuous function g satisfies the range condition. So, using Theorem 3.3, we obtain the following corollary.

Corollary 3.5

Let K be a nonempty closed convex subset of a Hadamard space X and let \(f:K\times K \rightarrow {\mathbb {R}}\) be a bifunction satisfying Assumption 2.7. Let \(g_1,g_2,\ldots ,g_N: K \rightarrow (-\infty , \infty ]\) be N proper convex and lower semicontinuous functions and let \(T:K\rightarrow K\) be a nonexpansive mapping. Assume that \(\Theta = F(T) \cap EP(f,K) \cap \bigcap _{i=1}^N \textrm{argmin}_{y\in K}g_i(y) \ne \emptyset \). Let \(x_1\in K\) and \(\{x_n\}\) be a sequence generated by

where \(\{\alpha _n\}, \{\beta _n\} \subset (0,1)\) and \(\{\lambda _n\}, \{\gamma _n^i\} \subset (0,\infty )\) satisfy the following conditions:

- (C1) \(\liminf _{n\rightarrow \infty }\gamma _n^i >0\) for all \(i=1,2,\ldots ,N\),

- (C2) \(0<\alpha \le \lambda _n\le \beta < \min \{\frac{1}{2c_1},\frac{1}{2c_2}\}\),

- (C3) \(\lim _{n\rightarrow \infty }\alpha _n=0\) and \(\sum _{n=1}^{\infty }\alpha _n=\infty \),

- (C4) \(\liminf _{n\rightarrow \infty }\beta _n(1-\beta _n)>0\).

Then the sequence \(\{x_n\}\) \(\Delta \)-converges to a point of \(\Theta \).

4 Numerical examples

In this section, we present two numerical experiments that illustrate the computational efficiency of our algorithms.

Example 4.1

Let \(X={\mathbb {R}}^2\) be endowed with a metric \(d_X: {\mathbb {R}}^2\times {\mathbb {R}}^2\rightarrow [0,\infty )\) defined by

for all \(x=(x_1,x_2),y=(y_1,y_2)\in {\mathbb {R}}^2\). Then, \(({\mathbb {R}}^2,d_X)\) is a Hadamard space (see Eskandani and Raeisi 2019) with the geodesic joining x to y given by

Let \(f_1, f_2: {\mathbb {R}}^2\rightarrow {\mathbb {R}}\) and \(T:{\mathbb {R}}^2\rightarrow {\mathbb {R}}^2\) be mappings defined by

It follows that \(f_1\) and \(f_2\) are convex and lower semicontinuous in \(({\mathbb {R}}^2,d_X)\) and T is nonexpansive. Let \(f: {\mathbb {R}}^2\times {\mathbb {R}}^2\rightarrow {\mathbb {R}}\) be a function defined by

It is obvious that f satisfies \((B_1)\), \((B_2)\), \((B_3)\) and \((B_4)\). Letting \(N=2\), \(A_1=\partial f_1\) and \(A_2=\partial f_2\), the sequence \(\{x_n\}\) generated by (3.1) takes the following form:

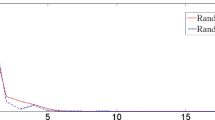

We choose \(\alpha _n=\frac{1}{2n+1}, \beta _n=\frac{9}{11},\gamma ^1_n=\gamma ^2_n=4n, \lambda _n=\frac{1}{n+5}+\frac{1}{5},\) for all \(n\in {\mathbb {N}}\). It can be observed that all the assumptions of Theorem 3.3 are satisfied and \(\Theta = F(T) \cap EP(f,{\mathbb {R}}^2) \cap \bigcap _{i=1}^N A_i^{-1}(0) = \{(0,0)\}\). Using the algorithm (4.1) with the initial point \(x_1=(5.4,2.9)\), we have the numerical results in Table 1, Figs. 1 and 2.
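The parameter choices above can be tabulated with a short Python sketch. It only inspects the sequences \(\alpha _n=\frac{1}{2n+1}\), \(\beta _n=\frac{9}{11}\), \(\gamma ^1_n=\gamma ^2_n=4n\) and \(\lambda _n=\frac{1}{n+5}+\frac{1}{5}\); the truncation at 2000 terms and the function names are assumptions made for illustration. The growing partial sum is numerical evidence for, not a proof of, the divergence required in (C3), and condition (C2) additionally depends on the Lipschitz-type constants \(c_1,c_2\) of f, which this sketch does not compute.

```python
from fractions import Fraction


def alpha(n):
    """alpha_n = 1 / (2n + 1), as a float."""
    return 1.0 / (2 * n + 1)


def beta(n):
    """beta_n = 9/11 (constant)."""
    return Fraction(9, 11)


def gamma(n):
    """gamma_n^1 = gamma_n^2 = 4n."""
    return 4 * n


def lam(n):
    """lambda_n = 1/(n + 5) + 1/5, kept exact."""
    return Fraction(1, n + 5) + Fraction(1, 5)


ns = range(1, 2001)
lam_min = min(lam(n) for n in ns)          # stays above 1/5
lam_max = max(lam(n) for n in ns)          # attained at n = 1
beta_term = beta(1) * (1 - beta(1))        # beta_n * (1 - beta_n)
gamma_min = min(gamma(n) for n in ns)      # smallest resolvent parameter
partial_sum = sum(alpha(n) for n in ns)    # partial sum of alpha_n
print(lam_min, lam_max, beta_term, gamma_min, partial_sum)
```

Since \(\lambda _n\) decreases from \(\frac{11}{30}\) towards \(\frac{1}{5}\), condition (C2) can hold with \(\alpha =\frac{1}{5}\) and \(\beta =\frac{11}{30}\) whenever \(\frac{11}{30}<\min \{\frac{1}{2c_1},\frac{1}{2c_2}\}\).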

Fig. 1 Plot of \(d_X(x_n,0)\) from Table 1

Fig. 2 Plot of \(d_X(x_n,x_{n-1})\) from Table 1

Moreover, we test the effect of different control sequences \(\{\beta _n\}\) on the convergence of the algorithm (4.1). Fig. 3 presents the behaviour of \(x_n\) for three control sequences, (a) \(\beta _n=\frac{1}{10}\), (b) \(\beta _n=\frac{9}{11}\) and (c) \(\beta _n=\frac{9}{11}-\frac{1}{5n}\), with the initial point \(x_1=(5.4,2.9)\). We see that the sequence \(\{x_n\}\) generated with the control sequence \(\beta _n=\frac{1}{10}\) converges to the solution \((0,0)\in \Theta \) faster than the others.

To compare the proposed algorithm (3.19) with the algorithm (1.2) in Moharami and Eskandani (2020), we consider the following example.

Example 4.2

Let X, \(d_X\), \(f_1\), \(f_2\), f, N, \(A_1\), \(A_2\), \(\{\alpha _n\}\), \(\{\beta _n\}\), \(\{\gamma ^1_n\}\), \(\{\gamma ^2_n\}\), \(\{\lambda _n\}\) be as in Example 4.1. We computed the iterates of (1.2) and (3.19) for the initial point \(x_1=(0.7,0.5)\) and \(u=(1,1)\). The numerical results of all iterations for approximating the point \((0,0)\in \Theta = EP(f,{\mathbb {R}}^2) \cap \bigcap _{i=1}^N A_i^{-1}(0)\) are given in Table 2 and Fig. 4.

From Table 2 and Fig. 4, we observe that the proposed algorithm (3.19) converges considerably faster than the algorithm (1.2) in Moharami and Eskandani (2020).

References

Alakoya TO, Mewomo OT (2022) Viscosity S-iteration method with inertial technique and self-adaptive step size for split variational inclusion, equilibrium and fixed point problems. Comput Appl Math 41:39

Alakoya TO, Mewomo OT, Shehu Y (2022a) Strong convergence results for quasimonotone variational inequalities. Math Methods Oper Res 95:249–279

Alakoya TO, Uzor VA, Mewomo OT, Yao JC (2022b) On a system of monotone variational inclusion problems with fixed-point constraint. J Inequal Appl 2022:47

Bačák M, Reich S (2014) The asymptotic behavior of a class of nonlinear semigroups in Hadamard spaces. J Fixed Point Theory Appl 16:189–202

Berg ID, Nikolaev IG (2008) Quasilinearization and curvature of Alexandrov spaces. Geom Dedicata 133:195–218

Blum E, Oettli W (1994) From optimization and variational inequalities to equilibrium problems. Math Stud 63:123–145

Bridson M, Haefliger A (1999) Metric spaces of non-positive curvature. Springer, Berlin

Bruck RE, Reich S (1977) Nonexpansive projections and resolvents of accretive operators in Banach spaces. Houston J Math 3:459–470

Chaipunya P, Kumam P (2017) On the proximal point method in Hadamard spaces. Optimization 66:1647–1665

Dhompongsa S, Kirk WA, Sims B (2006) Fixed points of uniformly Lipschitzian mappings. Nonlinear Anal 65:762–772

Dhompongsa S, Panyanak B (2008) On \(\Delta \)-convergence theorems in CAT(0) spaces. Comput Math Appl 56:2572–2579

Eskandani GZ, Raeisi M (2019) On the zero point problem of monotone operators in Hadamard spaces. Numer Algorithm 80:1155–1179

Goebel K, Reich S (1984) Uniform convexity, hyperbolic geometry, and nonexpansive mappings. Marcel Dekker, New York

Iusem AN, Mohebbi V (2020) Convergence analysis of the extragradient method for equilibrium problems in Hadamard spaces. Comput Appl Math 39:44

Kakavandi BA, Amini M (2010) Duality and subdifferential for convex functions on complete CAT(0) metric spaces. Nonlinear Anal 73:3450–3455

Kaewkhao A, Inthakon W, Kunwai K (2015) Attractive points and convergence theorems for normally generalized hybrid mappings in CAT(0) spaces. Fixed Point Theory Appl 2015:96

Khatibzadeh H, Mohebbi V (2019) Approximating solutions of equilibrium problems in Hadamard spaces. Miskolc Math Notes 20:281–297

Khatibzadeh H, Mohebbi V (2021) Monotone and pseudo-monotone equilibrium problems in Hadamard spaces. J Aust Math Soc 110:220–242

Khatibzadeh H, Ranjbar S (2017) Monotone operators and the proximal point algorithm in complete CAT(0) metric spaces. J Aust Math Soc 103:70–90

Kirk WA (2003) Geodesic geometry and fixed point theory. In: Seminar of Mathematical Analysis (Malaga/Seville, 2002/2003). Colecc. Abierta, vol 64. Univ. Sevilla Secr. Publ., Seville, pp 195–225

Kirk WA, Panyanak B (2008) A concept of convergence in geodesic spaces. Nonlinear Anal 68:3689–3696

Kohlenbach U (2015) Some logical metatheorems with applications in functional analysis. Trans Am Math Soc 357:89–128

Kumam P, Chaipunya P (2017) Equilibrium problems and proximal algorithms in Hadamard spaces. J Nonlinear Anal Optim 8:155–172

Li C, López G, Martín-Márquez V (2009) Monotone vector fields and the proximal point algorithm on Hadamard manifolds. J Lond Math Soc 79:663–683

Martinet B (1970) Régularisation d'inéquations variationnelles par approximations successives. Revue Fr Inform Rech Oper 4:154–159

Moharami R, Eskandani GZ (2020) An extragradient algorithm for solving equilibrium problem and zero point problem in Hadamard spaces. RACSAM 114:152

Nanjaras B, Panyanak B, Phuengrattana W (2010) Fixed point theorems and convergence theorems for Suzuki-generalized nonexpansive mappings in CAT(0) spaces. Nonlinear Anal Hybrid Syst 4:25–31

Ogwo GN, Alakoya TO, Mewomo OT (2021) Iterative algorithm with self-adaptive step size for approximating the common solution of variational inequality and fixed point problems. Optimization. https://doi.org/10.1080/02331934.2021.1981897

Phuengrattana W, Suantai S (2012) Fixed point theorems for a semigroup of generalized asymptotically nonexpansive mappings in CAT(0) spaces. Fixed Point Theory Appl 2012:230

Phuengrattana W, Suantai S (2013) Existence theorems for generalized asymptotically nonexpansive mappings in uniformly convex metric spaces. J Convex Anal 20(3):753–761

Quoc TD, Muu LD, Nguyen VH (2008) Extragradient methods extended to equilibrium problems. Optimization 57:749–776

Ranjbar S, Khatibzadeh H (2016) \(\Delta \)-convergence and W-convergence of the modified Mann iteration for a family of asymptotically nonexpansive mappings in complete CAT(0) spaces. Fixed Point Theory 17:151–158

Reich S, Salinas Z (2015) Infinite products of discontinuous operators in Banach and metric spaces. Linear Nonlinear Anal 1:169–200

Reich S, Salinas Z (2016) Weak convergence of infinite products of operators in Hadamard spaces. Rend Circolo Mat Palermo 65:55–71

Reich S, Salinas Z (2017) Metric convergence of infinite products of operators in Hadamard spaces. J Nonlinear Convex Anal 18:331–345

Reich S, Shafrir I (1990) Nonexpansive iterations in hyperbolic spaces. Nonlinear Anal 15:537–558

Rockafellar RT (1976) Monotone operators and the proximal point algorithm. SIAM J Control Optim 14:877–898

Sopha S, Phuengrattana W (2015) Convergence of the S-iteration process for a pair of single-valued and multi-valued generalized nonexpansive mappings in CAT(\(\kappa \)) spaces. Thai J Math 13(3):627–640

Tits J (1977) A theorem of Lie–Kolchin for trees. In: Contributions to algebra: a collection of papers dedicated to Ellis Kolchin. Academic Press, New York

Uzor VA, Alakoya TO, Mewomo OT (2022) Strong convergence of a self-adaptive inertial Tseng’s extragradient method for pseudomonotone variational inequalities and fixed point problems. Open Math 20:234–257

Acknowledgements

The authors thank the referees for their careful reading and for their useful comments and suggestions.

About this article

Cite this article

Juagwon, K., Phuengrattana, W. Iterative approaches for solving equilibrium problems, zero point problems and fixed point problems in Hadamard spaces. Comp. Appl. Math. 42, 75 (2023). https://doi.org/10.1007/s40314-023-02209-w

DOI: https://doi.org/10.1007/s40314-023-02209-w