Abstract

This paper investigates the stability and \(H_{\infty }\) performance of discrete-time neural networks with time-varying delay, with the aim of developing a less conservative delay-dependent stability criterion and an improved method for \(H_{\infty }\) performance analysis. Inequalities are an important tool for stability and \(H_{\infty }\) performance analysis. To reduce the conservatism of some existing inequalities, an improved reciprocally convex inequality is proved. This inequality is quadratic in the delay and encompasses some existing inequalities as special cases. Based on the proposed reciprocally convex inequality, a novel free-matrix-based summation inequality is derived. A delay-product-type Lyapunov–Krasovskii functional (LKF) term is introduced. By utilizing the constructed LKF, the information on the time delay, and the proposed reciprocally convex approach, two improved sufficient conditions, one for stability and one for \(H_{\infty }\) performance of discrete-time neural networks with time-varying delay, are derived in terms of linear matrix inequalities (LMIs). Finally, several numerical examples are provided to illustrate the effectiveness and benefits of the proposed approach.

1 Introduction

Neural networks have attracted considerable interest owing to their extensive and successful applications in areas such as signal and image processing, control, system identification, and telecommunications (Haykin 1998; Gabrijel and Dobnikar 2003; Liu 2002; Zeng et al. 2015; Shi et al. 2021). Since signal transduction and response between neurons cannot be carried out instantaneously in most practical systems, time delay is unavoidable in neural networks (Zhang et al. 2008); it is a non-negligible factor that can degrade performance and destabilize the system (Gu et al. 2003). Because discrete-time systems have a strong background in engineering applications (Zhang et al. 2016), the stability of discrete-time systems with time-varying delay has been studied extensively over the last several decades (Mathiyalagan et al. 2012; Meng et al. 2010; Qiu et al. 2019). The Lyapunov–Krasovskii functional (LKF) approach is an efficient way to derive sufficient conditions for the stability of delayed systems, but it introduces some conservatism. To find the maximal admissible delay upper bound, a great deal of effort has been devoted to two aspects: constructing appropriate LKFs, and seeking sharper summation inequalities that give a tighter upper bound on the forward difference of the constructed LKFs.

Previous studies (Banu and Balasubramaniam 2016; Banu et al. 2015; Chen et al. 2020) show that using more of the available system information helps to reduce the conservatism of stability criteria. A class of discrete recurrent neural networks with time-varying delays was investigated in Wu et al. (2010); an improved global exponential stability criterion was obtained by constructing augmented LKF terms containing the activation functions \(g_i(x_i(k))\). By adding triple summation terms to the LKF and fully utilizing the information on the time delay, some novel and less conservative sufficient conditions were established to guarantee that a class of discrete-time delayed dynamical networks is asymptotically stable (Wang et al. 2013). By employing a newly augmented LKF and a newly augmented vector including summation terms of states, a new delay-dependent stability criterion for discrete-time neural networks with time-varying delays was proposed in Kwon et al. (2013). How to construct an appropriate LKF that reduces conservatism effectively is one difficulty in the dynamic analysis of delayed discrete-time systems. By taking advantage of the information on the variation of the delay, the delay-variation-dependent stability of discrete-time systems with a time-varying delay was studied in Zhang et al. (2016). By constructing a delay-product-dependent term in the LKF, some significantly improved stability criteria have been derived (Zhang et al. 2016, 2017a; Nam and Luu 2020). Inspired by these works, we introduce a delay-product-type term in the construction of the LKF to enlarge the delay bounds.

In the dynamic analysis of delayed discrete-time systems, summation terms such as \(\sum ^{-1}_{s=-h}\varDelta x^{\mathrm{T}}(s)R\varDelta x(s)\) often arise in the forward difference of the constructed LKFs. How to bound these summation terms so as to derive less conservative criteria is another difficulty. Many summation inequalities have been proposed to narrow the bounding gap. The discrete Jensen inequality (Gu et al. 2003) and the Wirtinger-based summation inequality (Seuret et al. 2015) were widely used to estimate the single summation term in the forward difference of the LKF. Nam et al. (2015) presented an auxiliary function-based summation inequality, which extended the Wirtinger-based summation inequality. The free-matrix-based summation inequality was developed in Chen et al. (2016), which contained the discrete Wirtinger-based inequality as a special case. The general summation inequalities including the Jensen inequality, the Wirtinger-based inequality, and the auxiliary function-based summation inequalities as special cases were obtained in Chen et al. (2016). Based on an orthogonal group of sequences and the idea of approximation of a vector, a refined auxiliary function-based summation inequality was obtained in Liu et al. (2017). Although more general summation inequalities can be obtained via orthogonal polynomials of high order, polynomials of high degree may impose a heavy computational burden. Later, a general free-matrix-based summation inequality was proposed in Chen et al. (2019), which generalized the free-matrix-based ones proposed in Zhang et al. (2017b). Inspired by the aforementioned literature, this paper further investigates the summation inequality. Noting that the forward difference of an LKF may be dominated by a quadratic function of the time delay, we aim to derive a delay-quadratic-dependent inequality. To avoid the complexity of polynomials of high order, a novel free-matrix-based summation inequality will be established by following the main idea of Liu et al. (2017) and Zhang et al. (2017a).

It is well known that various external disturbances often exist. The \(H_{\infty }\) control aims to minimize the effects of the external disturbances. The objective of \(H_{\infty }\) performance analysis is to find the saddle point of objective functional calculus depending on the disturbance (Kwon et al. 2013). As an important dynamic performance for neural networks, \(H_{\infty }\) performance of systems with time-varying delay has also drawn the attention of many researchers (Lee et al. 2014; Huang et al. 2015; He et al. 2020; Tian and Wang 2021). The guaranteed \(H_{\infty }\) performance state estimation problem of static neural networks with time-varying delay was considered in Huang et al. (2013), in which better performance was achieved by the proposed double-integral inequality and the reciprocally convex combination technique. Using the augmented LKF and the Wirtinger-based integral inequality, Kwon et al. (2016) investigated \(H_{\infty }\) performance for linear systems with interval time-varying delays and obtained a smaller disturbance attenuation level \(\gamma \). For delayed Markovian jump neural networks, \(H_{\infty }\) performance analysis was conducted by proposing the third-order Bessel–Legendre integral inequality and the LKF with delay-product-type terms (Tan and Wang 2021). The non-integral quadratic terms and the integral terms were connected by employing the third-order Bessel–Legendre integral inequality rather than the Wirtinger-based integral inequality. Several less conservative sufficient conditions that guaranteed the \(H_{\infty }\) performance for delayed Markovian jump neural networks were obtained. Zhang et al. (2021) investigated the \(H_{\infty }\) performance of discrete-time networked systems subject to network-induced delays and malicious packet dropouts. A novel approach related to quartic polynomial inequalities was presented to deal with the \(H_{\infty }\) performance of discrete-time networked systems. Although various methods have been proposed to tackle the \(H_{\infty }\) performance analysis problem, \(H_{\infty }\) performance analysis for delayed discrete-time neural networks has not yet been fully studied, and there remains room for improvement.

Motivated by the above consideration, this paper aims to improve the reciprocally convex inequality and establish a novel free-matrix-based summation inequality. By employing an LKF with delay-product-term and the new free-matrix-based summation inequality, less conservative sufficient conditions of stability and \(H_{\infty }\) performance for delayed discrete-time neural networks are obtained. The major contributions and improvement of this paper are summarized as follows:

1. An improved reciprocally convex inequality with six free matrices is proved. To make the most of the newly proved reciprocally convex inequality, a novel free-matrix-based summation inequality is derived.

2. Two new zero equalities are introduced. These zero equalities are merged into the estimation of the forward difference of the constructed LKF to increase the freedom of the criteria.

3. By combining the LKF containing a delay-product term with the improved reciprocally convex combination inequality and the newly proposed summation inequalities, a new stability condition for delayed discrete-time neural networks is developed, and a corresponding \(H_{\infty }\) performance condition for the disturbance-affected delayed neural networks is established. Compared with the existing literature, the stability criterion and the \(H_{\infty }\) performance criterion for the considered system are less conservative. Their effectiveness is demonstrated by numerical examples.

Notations Throughout this paper, \({\mathfrak {R}}^n\) is the n-dimensional Euclidean vector space, and \({\mathfrak {R}}^{m\times n}\) denotes the set of all \(m\times n\) real matrices. The superscript \(^{\mathrm{T}}\) stands for the transpose of a matrix. \(P>0(\ge 0)\) means that P is a positive definite (positive semi-definite) matrix. \(I_n\) and \(0_{m\times n}\) represent the \(n\times n\) identity matrix and the \(m\times n\) zero matrix, respectively. The symmetric term in a symmetric matrix is denoted by the symbol ‘\(*\)’ and \(\text {sym}\{A\}=A+A^{\mathrm{T}}.\)

2 Preliminaries

Consider the following discrete-time neural network with time-varying delay:

where \(x(k)=[x_1(k),x_2(k),\ldots ,x_n(k)]^{\mathrm{T}}\in {\mathfrak {R}}^n\) denotes the neuron state vector, n is the number of neurons, \(f(x(k))=[f_1(x_1(k)),f_2(x_2(k)),\ldots ,f_n(x_n(k))]^{\mathrm{T}}\in {\mathfrak {R}}^n\) is the activation function, \(B,W_0,W_1\) are the state feedback matrix, the interconnection weight matrix, and the delayed interconnection weight matrix, respectively, d(k) denotes the state time-varying delay, \(d_m \le d(k) \le d_M\), \(\mu _m \le \varDelta d(k)=d(k+1)-d(k) \le \mu _M\), \(d_m \), \(d_M\), \(\mu _m \) and \(\mu _M\) are known integers.

The activation function \(f(\cdot )\) in system (1) is assumed to be continuous and bounded with \(f_j(0)=0\), and there exist constants \(l_{j}^-,l_{j}^+\), such that

Corresponding to neural network (1), the discrete-time system subject to external disturbance u(k) can be described as follows:

where \(u(k)\in {\mathfrak {R}}^n\) represents the exogenous disturbance, \(v(k)\in {\mathfrak {R}}^m\) is the output vector, and C, D are real matrices with compatible dimensions.

The problem of \(H_{\infty } \) performance analysis for delayed discrete-time neural networks is stated as follows. For a given scalar \(\gamma >0\), the neural network (3) is said to have \(H_{\infty } \) performance level \(\gamma \) if the following conditions are satisfied:

1. System (3) with \(u(k)=0\) is asymptotically stable;

2. For any positive integer h, under the zero-initial condition,

$$\begin{aligned} \langle v,v\rangle _h\le \gamma ^2 \langle u,u\rangle _h \end{aligned}$$

holds for all nonzero \(u(k)\in l_2\), where \(\langle u,u\rangle _h= \sum ^{h}_{k=0}u^{\mathrm{T}}(k)u(k).\)
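Condition 2 can be checked on recorded trajectories. The following is a minimal sketch, assuming the output and disturbance samples are stored row-wise in NumPy arrays; the function names `inner_sum` and `satisfies_hinf_level` and the toy data are illustrative and not part of the paper.

```python
import numpy as np

def inner_sum(w, h):
    """<w, w>_h = sum_{k=0}^{h} w(k)^T w(k); w has one sample per row."""
    return float(np.sum(w[: h + 1] ** 2))

def satisfies_hinf_level(v, u, gamma, tol=1e-12):
    """Check <v, v>_h <= gamma^2 <u, u>_h for every horizon h >= 1 in the data."""
    horizons = range(1, min(len(v), len(u)))
    return all(
        inner_sum(v, h) <= gamma**2 * inner_sum(u, h) + tol for h in horizons
    )

# Toy data (hypothetical): the output is a scaled copy of the disturbance.
u = np.array([[1.0, 0.0], [0.5, 0.5], [0.0, 0.25]])
v = 0.8 * u
print(satisfies_hinf_level(v, u, gamma=1.0))
print(satisfies_hinf_level(v, u, gamma=0.5))
```

A finite record can only falsify the bound for the disturbance that was applied; it does not prove the \(H_{\infty }\) level for all \(u(k)\in l_2\).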

To facilitate the subsequent research, we introduce the following lemmas.

Lemma 1

For positive definite matrices \(R_1,R_2 \in {\mathfrak {R}}^{n\times n},\) if there exist symmetric matrices \(X_i\in {\mathfrak {R}}^{n\times n},i=1,2,3,4\) and any matrices \(Y_1,Y_2\in {\mathfrak {R}}^{n\times n},\) such that

then the following inequality holds for any \(\alpha \in (0,1){:}\)

Proof

It is easy for us to get the following identical equation after simple calculation:

For any \(\alpha \in (0,1)\), if \(\begin{bmatrix} R_1-X_1&{}\quad -Y_1\\ * &{}\quad R_2\\ \end{bmatrix}\ge 0,\) \(\begin{bmatrix} R_1&{}\quad -Y_2\\ * &{}\quad R_2-X_2\\ \end{bmatrix}\ge 0\), and \(\begin{bmatrix} R_1-X_1-X_4&{}\quad -Y_1\\ * &{}\quad R_2-X_3\\ \end{bmatrix}\ge 0,\) then

For any \(\alpha \in (0,1)\), pre- and post-multiplying (6) by \(\begin{bmatrix} \sqrt{\frac{1-\alpha }{\alpha }}I&{}\quad 0\\ * &{}\quad \sqrt{\frac{\alpha }{1-\alpha }}I\\ \end{bmatrix}\) yields

This completes the proof. \(\square \)

Remark 1

The classical reciprocally convex inequality \( \begin{bmatrix} \frac{1}{\alpha }R&{}\quad 0\\ * &{}\quad \frac{1}{(1-\alpha )}R\\ \end{bmatrix} \ge \begin{bmatrix} R&{}\quad S\\ * &{}\quad R\\ \end{bmatrix} \) was proved (Park et al. 2011). It played an important role in dealing with non-convex terms occurring in the forward difference of an LKF. Seuret and Gouaisbaut (2016) extended the classical reciprocally convex inequality into the form \( \begin{bmatrix} \frac{1}{\alpha }R&{}\quad 0\\ * &{}\quad \frac{1}{(1-\alpha )}R\\ \end{bmatrix} \ge \begin{bmatrix} R+(1-\alpha )X_{1}&{}\quad \alpha Y_{1}+ (1-\alpha )Y_{2}\\ * &{}\quad R+\alpha X_{2} \\ \end{bmatrix}. \) By weakening the constraints (Park et al. 2011), an improved reciprocally convex inequality comprising three slack matrices was presented (Zhang and Han 2018). In stability and \(H_{\infty }\) performance analysis, a main difficulty is how to estimate the forward difference of the LKF V(k) and prove \(\triangle V(k)<0\). The forward difference of an LKF may be dominated by a quadratic function of time-delay. However, the right-hand sides of both reciprocally convex inequalities (Seuret and Gouaisbaut 2016; Zhang and Han 2018) are the linear functions of \(\alpha \). These reciprocally convex inequalities (Seuret and Gouaisbaut 2016; Zhang and Han 2018) cannot be directly used to estimate \(\triangle V(k)\) with the square of the time-delay. A generalized reciprocally convex inequality is proved in Lemma 1. This novel reciprocally convex inequality involves the square of \(\alpha \) and more slack matrices. Kim (2016) proved the quadratic function negative determination lemma, which could be used to handle the quadratic function of time-delay. 
Using the generalized reciprocally convex inequality derived in this paper, non-convex terms in the forward difference of the LKF can be merged into one expression of \(\alpha ^{2}\), whose sign can be determined via the quadratic function negative determination lemma.
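As a numerical illustration of the classical reciprocally convex inequality of Park et al. (2011) quoted in Remark 1, the sketch below evaluates the smallest eigenvalue of the gap between its two sides over a grid of \(\alpha \). The matrices `R` and `S` are arbitrary illustrative choices (with \(S=0.5R\), so that the block matrix on the right-hand side is positive semi-definite); they do not come from the paper, and the generalized inequality of Lemma 1 is not reproduced here.

```python
import numpy as np

def min_eig_gap(R, S, alpha):
    """Smallest eigenvalue of diag(R/alpha, R/(1-alpha)) - [[R, S], [S^T, R]]."""
    n = R.shape[0]
    zero = np.zeros((n, n))
    lhs = np.block([[R / alpha, zero], [zero, R / (1.0 - alpha)]])
    rhs = np.block([[R, S], [S.T, R]])
    return float(np.linalg.eigvalsh(lhs - rhs).min())

# Illustrative data (assumption): R > 0 and S = 0.5 R.
R = np.array([[2.0, 0.5], [0.5, 1.0]])
S = 0.5 * R

# Constraint of the classical lemma: [[R, S], [S^T, R]] >= 0.
constraint_min_eig = float(np.linalg.eigvalsh(np.block([[R, S], [S.T, R]])).min())

# Worst-case gap over a grid of alpha in (0, 1).
worst_gap = min(min_eig_gap(R, S, a) for a in np.linspace(0.01, 0.99, 99))
print(constraint_min_eig, worst_gap)
```

A non-negative `worst_gap` on the grid is consistent with the inequality for this particular pair of matrices; it is a sanity check, not a proof.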

Remark 2

If \(X_3=X_4=0\), the generalized reciprocally convex inequality in Lemma 1 reduces to the reciprocally convex inequality of Seuret and Gouaisbaut (2016). If \(X_3=X_4=0\) and \(Y_1=Y_2=Y\), it reduces to the improved reciprocally convex inequality in Zhang and Han (2018). If \(X_i=0,i=1,2,3,4\), and \(Y_1=Y_2=Y\), it becomes the classical reciprocally convex inequality (Park et al. 2011). If \(X_3>0, X_4>0\), the generalized reciprocally convex inequality in Lemma 1 is less conservative than the reciprocally convex inequality of Seuret and Gouaisbaut (2016).

Lemma 2

For a vector function \(y(s) : [-h,0]\rightarrow {\mathfrak {R}}^{n},\) a positive definite matrix \(R\in {\mathfrak {R}}^{n\times n},\) positive integer \(h\ge 1,\) any real matrix M and any vector \(\chi _0\) with appropriate dimensions, the following inequality holds :

where \(\chi _1:=\sum ^{-1}_{s=-h}y(s),\) \(\chi _2:=\frac{2}{h+1}\sum ^{-1}_{s=-h}\sum ^{-1}_{j=s}y(j)-\sum ^{-1}_{s=-h}y(s).\)

Proof

Let \(f(s)=\frac{2s+h+1}{h+1}\). Carrying out simple algebraic calculation yields: \(\sum ^{-1}_{s=-h}f(s)=0,\) \(\sum ^{-1}_{s=-h}f^{2}(s)=\frac{h(h-1)}{3(h+1)},\) \(\sum ^{-1}_{s=-h}f(s)y(s)=\chi _2.\)

For any vector \(\chi _0\) with appropriate dimension, let \(\delta (s)=\hbox {col}[\chi _0,f(s)\chi _0,y(s)]\) and \( \varPhi =\begin{bmatrix} LR^{-1}L^{\mathrm{T}}&{}\quad LR^{-1}M^{\mathrm{T}}&{}\quad L\\ * &{}\quad MR^{-1}M^{\mathrm{T}}&{}\quad M\\ *&{}\quad *&{}\quad R \end{bmatrix}\). By the Schur complement, \(\varPhi \ge 0\). Using the Jensen inequality gives

Direct computation yields

Combining (8) with (9), we can get

Since \(\frac{h(h-1)}{3(h+1)}\le \frac{h}{3}\), inequality (7) can be derived from inequality (10). This completes the proof. \(\square \)
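The three sums used at the start of the proof, and the bound \(\frac{h(h-1)}{3(h+1)}\le \frac{h}{3}\), can be confirmed numerically. The sketch below does so for a random sequence \(y(s)\); the helper name `check_identities` and the random test data are illustrative assumptions.

```python
import numpy as np

def f(s, h):
    """Weight f(s) = (2s + h + 1) / (h + 1) from the proof of Lemma 2."""
    return (2 * s + h + 1) / (h + 1)

def check_identities(h, n=3, seed=0):
    """Return sum f, sum f^2, chi_2 from its definition, and sum f(s) y(s)."""
    rng = np.random.default_rng(seed)
    s = np.arange(-h, 0)                  # s = -h, ..., -1
    y = rng.standard_normal((h, n))       # y[i] stores y(s[i])
    w = f(s, h)
    # Double sum: sum_{s=-h}^{-1} sum_{j=s}^{-1} y(j)
    double_sum = sum(y[i:].sum(axis=0) for i in range(h))
    chi2 = 2.0 / (h + 1) * double_sum - y.sum(axis=0)
    weighted = (w[:, None] * y).sum(axis=0)
    return float(w.sum()), float((w**2).sum()), chi2, weighted

for h in (1, 2, 5, 10):
    sum_f, sum_f2, chi2, weighted = check_identities(h)
    print(h, sum_f, sum_f2, h * (h - 1) / (3 * (h + 1)), np.allclose(chi2, weighted))
```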

Corollary 1

Let vector function \(x(s) : [-h,0]\rightarrow {\mathfrak {R}}^{n}\) and \(\varDelta x(s)=x(s+1)-x(s).\) For any positive definite matrix R, integer \(h\ge 1,\) the following inequality holds :

where \({\bar{\chi }}_1=x(0)-x(-h),\) \({\bar{\chi }}_2=x(0)+x(-h)-\frac{2}{h+1}\sum ^{0}_{s=-h}x(s).\)
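The displayed inequality of Corollary 1 is not reproduced in this text. The sketch below therefore assumes the form that follows from the proof of Lemma 2 and Remark 3, namely \(\sum ^{-1}_{s=-h}\varDelta x^{\mathrm{T}}(s)R\varDelta x(s)\ge \frac{1}{h}\left( {\bar{\chi }}_1^{\mathrm{T}}R{\bar{\chi }}_1+3{\bar{\chi }}_2^{\mathrm{T}}R{\bar{\chi }}_2\right) \), and checks it on random sequences. The function name `wirtinger_gap` and the test matrix `R` are illustrative.

```python
import numpy as np

def wirtinger_gap(x, R):
    """Left side minus right side of the assumed Wirtinger-based bound.

    x has shape (h + 1, n) with rows x(-h), ..., x(0); R is positive definite.
    """
    h = x.shape[0] - 1
    dx = np.diff(x, axis=0)                          # rows are Delta x(s), s = -h..-1
    lhs = float(np.einsum("si,ij,sj->", dx, R, dx))  # sum of Delta x^T R Delta x
    chi1 = x[-1] - x[0]                              # x(0) - x(-h)
    chi2 = x[-1] + x[0] - 2.0 / (h + 1) * x.sum(axis=0)
    rhs = (chi1 @ R @ chi1 + 3.0 * (chi2 @ R @ chi2)) / h
    return lhs - rhs

rng = np.random.default_rng(1)
R = np.array([[2.0, 0.3], [0.3, 1.0]])               # illustrative R > 0
gaps = [wirtinger_gap(rng.standard_normal((h + 1, 2)), R) for h in range(1, 9)]
print(min(gaps))
```

For \(h=1\) the gap is zero, because \({\bar{\chi }}_2\) vanishes and the bound reduces to the Jensen inequality with equality.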

Remark 3

If \(M=0\) in Lemma 2, then inequality (7) reduces to the Jensen summation inequality (Gu et al. 2003). By setting \(y(s)=\varDelta x(s)=x(s+1)-x(s)\), \(M= -\frac{3}{h}R\), \(\chi _0=\chi _2\) in (7), inequality (7) becomes the Wirtinger-based summation inequality (Seuret et al. 2015), which is presented in Corollary 1. The free-matrix-based summation inequality (Chen et al. 2016) was derived from the inequality \(\sum ^{-1}_{s=-h}\delta ^{\mathrm{T}}(s)\varPhi \delta (s)\ge 0\). In contrast to the method in Chen et al. (2016), inequality (7) is proved on the basis of the Jensen summation inequality, by utilizing \(\sum ^{-1}_{s=-h}\delta ^{\mathrm{T}}(s)\varPhi \delta (s)\ge \frac{1}{h}(\sum ^{-1}_{s=-h}\delta (s))^{\mathrm{T}}\varPhi (\sum ^{-1}_{s=-h}\delta (s))\). Since \(\frac{1}{h}(\sum ^{-1}_{s=-h}\delta (s))^{\mathrm{T}}\varPhi (\sum ^{-1}_{s=-h}\delta (s))\ge 0\), inequality (7) may be less conservative than the free-matrix-based summation inequality.

Based on Lemmas 1 and 2, it is easy for us to obtain the following lemma.

Lemma 3

For a positive definite matrix \(R\in {\mathfrak {R}}^{n\times n},\) \(\tau _1\le \tau _{k}\le \tau _2,\) any real matrices \(F_1,F_2\) with appropriate dimensions, and any vectors \(\eta _1,\eta _2,\) if there exist symmetric matrices \(X_i\in {\mathfrak {R}}^{n\times n},i=1,2,3,4\) and any matrices \(Y_1,Y_2\in {\mathfrak {R}}^{n\times n},\) such that

\( \begin{bmatrix} R-X_1&{}\quad -Y_1\\ * &{}\quad R\\ \end{bmatrix} \ge 0,\) \( \begin{bmatrix} R&{}\quad -Y_2\\ * &{}\quad R-X_2\\ \end{bmatrix} \ge 0,\) \(\begin{bmatrix} R-X_1-X_4&{}\quad -Y_1\\ * &{}\quad R-X_3\\ \end{bmatrix} \ge 0,\)

then the following inequality holds :

where \(\alpha _1(k)=x(k-\tau _1)-x(k-\tau _k),\) \(\alpha _2(k)=x(k-\tau _k)-x(k-\tau _2),\) \(\alpha _3(k)=x(k-\tau _1)+x(k-\tau _k)-2\omega _1(k),\) \(\alpha _4(k)=x(k-\tau _k)+x(k-\tau _2)-2\omega _2(k),\) \(\omega _1(k)=\sum _{s=k-\tau _k}^{k-\tau _1}\frac{x(s)}{\tau _k-\tau _1+1},\) \(\omega _2(k)=\sum _{s=k-\tau _2}^{k-\tau _k}\frac{x(s)}{\tau _2-\tau _k+1},\) \(\alpha =\frac{\tau _k-\tau _1}{\tau _2-\tau _1}.\)

Remark 4

Unlike existing summation inequalities, the free-matrix-based summation inequality given in Lemma 3 is related to the square of the delay. The vectors \(\eta _1\) and \(\eta _2\) can be chosen freely and independently. Since more free matrices are introduced, the free-matrix-based summation inequality in Lemma 3 provides more freedom.

Lemma 4

(Kim 2016) For a quadratic function \(f(x)=a_2x^2+a_1x+a_0,\) where \(a_0,a_1,a_2 \in {\mathfrak {R}},\) if \(({\mathrm{i}})\ f(h_1)<0,\quad ({\mathrm{ii}})\ f(h_2)<0, \quad ({\mathrm{iii}})\ -(h_2-h_1)^2a_2+f(h_1)<0,\) then \(f(x)<0, \forall x \in [h_1,h_2].\)
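Lemma 4 gives a sufficient (not necessary) test for the negativity of a quadratic on an interval. The sketch below compares conditions (i)–(iii) with a dense sampling of \(f\) on \([h_1,h_2]\) for randomly drawn coefficients; the function names and the random test setup are illustrative assumptions.

```python
import numpy as np

def kim_conditions(a2, a1, a0, h1, h2):
    """Conditions (i)-(iii) of Lemma 4 for f(x) = a2 x^2 + a1 x + a0."""
    f = lambda x: a2 * x**2 + a1 * x + a0
    return f(h1) < 0 and f(h2) < 0 and -((h2 - h1) ** 2) * a2 + f(h1) < 0

def negative_on_interval(a2, a1, a0, h1, h2, samples=2001):
    """Brute-force check of f(x) < 0 on a grid over [h1, h2]."""
    x = np.linspace(h1, h2, samples)
    return bool(np.all(a2 * x**2 + a1 * x + a0 < 0))

rng = np.random.default_rng(0)
h1, h2 = 1.0, 4.0
accepted = 0
violations = 0
for a2, a1, a0 in rng.uniform(-3.0, 3.0, size=(5000, 3)):
    if kim_conditions(a2, a1, a0, h1, h2):
        accepted += 1
        violations += not negative_on_interval(a2, a1, a0, h1, h2)
print(accepted, violations)
```

The case \(a_2=-1\), \(a_1=0\), \(a_0=-0.5\) on \([0,1]\) shows that the conditions are only sufficient: \(f\) is negative on the whole interval, yet condition (iii) fails.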

3 Main results

In this section, by resorting to the above new summation inequalities and improved reciprocally convex inequality, improved sufficient conditions for stability and \(H_{\infty }\) performance of delayed discrete-time neural networks are proposed. For simplifying the representation of subsequent parts, the related notations are given as follows:

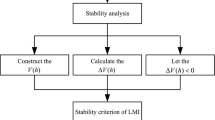

Theorem 1

For given integers \(d_{m},\) \(d_{M},\) \(\mu _{m},\) \(\mu _{M},\) system (1) is asymptotically stable if there exist positive definite matrices \(Q_i\in {\mathfrak {R}}^{n\times n}, R_i\in {\mathfrak {R}}^{n\times n}, i=1,2,\) \(S\in {\mathfrak {R}}^{2n\times 2n},\) positive definite diagonal matrices \(J_j\in {\mathfrak {R}}^{n\times n},j=1,2,3,\) symmetric matrices \(P_1\in {\mathfrak {R}}^{3n\times 3n},\) \(P_2\in {\mathfrak {R}}^{2n\times 2n},\) \(U_1,U_2\in {\mathfrak {R}}^{n\times n},\) \(X_k\in {\mathfrak {R}}^{n\times n},\) \({\bar{X}}_k\in {\mathfrak {R}}^{2n\times 2n},k=1,2,3,4,\) matrices \(M_l,\) \(N_l,\) \(H_l,Y_l,{\bar{Y}}_l,l=1,2\) with appropriate dimensions, such that the following LMIs hold:

Proof

Consider the following LKF:

with

First, we verify the positive definiteness of the LKF candidate in (16). \( V_{1}(k)\) can be equivalently written in the following form:

where \(P(d(k))=P_1+d(k) \begin{bmatrix} P_2&{}\quad 0\\ *&{}\quad 0\\ \end{bmatrix}\).

Since \(S>0\), \(Q_i>0\), and \(R_i>0, i=1,2\), the positive definiteness of V(k) can be guaranteed by condition (12).

Now, we calculate the forward differences \(\varDelta V(k)\) along the trajectory of system (1) and estimate the upper bound of \(\varDelta V(k)\).

Using Corollary 1, we obtain

For any matrices \(M_1,N_1\) with appropriate dimensions, applying Lemma 3 yields

where \({\tilde{\chi }}_1=\varXi _{31}^{\mathrm{T}}\zeta (k),{\tilde{\chi }}_2=\varXi _{32}^{\mathrm{T}}\zeta (k),\) \({\bar{\chi }}_1=\varXi _{33}^{\mathrm{T}}\zeta (k),{\bar{\chi }}_2=\varXi _{34}^{\mathrm{T}}\zeta (k),\) \({\tilde{\chi }}_0=\varXi _{35}^{\mathrm{T}}\zeta (k),\) \({\bar{\chi }}_0={\tilde{\varXi }}_{35}^{\mathrm{T}}\zeta (k),\) \(\alpha =(d_k-d_m)/d_1.\)

It follows from (19)–(21) that:

The calculation of \(\varDelta V_{4}(k)\) leads to

Similar to the method in Park et al. (2015), we introduce the following zero equations:

where \(U_1\) and \(U_2\) are any symmetric matrices with appropriate dimensions.

Let \(S_1=S+\begin{bmatrix} 0&{}\quad U_1\\ * &{}\quad U_1\\ \end{bmatrix}, S_2=S+\begin{bmatrix} 0&{}\quad U_2\\ * &{}\quad U_2\\ \end{bmatrix}.\) Combining (23) with (24) yields

For any matrix \(M_2\) with appropriate dimension, applying Lemma 2 yields

where \({\tilde{\kappa }}_1=\varOmega _{1}^{\mathrm{T}}\zeta (k),\) \({\tilde{\kappa }}_2=\varOmega _{3}^{\mathrm{T}}\zeta (k),\) \({\tilde{\kappa }}_0=\varOmega _{5}^{\mathrm{T}}\zeta (k),\) \(\alpha =(d_k-d_m)/d_1.\)

Similarly, for any matrix \(N_2\) with appropriate dimension, we have

where \({\bar{\kappa }}_1=\varOmega _{2}^{\mathrm{T}}\zeta (k),\) \({\bar{\kappa }}_2=\varOmega _{4}^{\mathrm{T}}\zeta (k),\) \({\bar{\kappa }}_0=\varOmega _{6}^{\mathrm{T}}\zeta (k).\)

Using Lemma 1 to deal with \(\alpha \)-dependent terms gives

Since the activation function \(f(\cdot )\) satisfies (2), then

where \(L_1=\text {diag}\{l_1^-,l_2^-,\ldots ,l_n^-\}, L_2=\text {diag}\{l_1^+,l_2^+,\ldots ,l_n^+\},\) \(l_i^-,l_i^+(i=1,2,\ldots ,n)\) are constants given in (2), and \(J_i (i=1,2,3)\) are any positive definite diagonal matrices with appropriate dimensions.

In addition, we have the following two zero equalities with any matrices \(H_1\), \(H_2\):

From (17) to (31), the upper bound of \(\varDelta V(k)\) can be described by

where \(\varPhi (d_k,\varDelta d_k)=\sum ^{6}_{i=1}\varPi _i+{\tilde{\varPi }}_3+{\tilde{\varPi }}_4.\)

Since \(\varPhi (d_k,\varDelta d_k )\) is quadratic with respect to \(d_k\) and linear with respect to \(\varDelta d_k\), by applying Lemma 4, \(\varPhi (d_k,\varDelta d_k )< 0\) is guaranteed by conditions (13)–(15), which means that system (1) is asymptotically stable. This completes the proof. \(\square \)

Remark 5

One possible way to reduce the conservatism of stability criteria is to introduce new zero equations. The two zero equations in (31) enlarge the feasible region of the stability criterion. However, they also bring in the two slack matrices \(H_{1}\) and \(H_{2}\), so the number of decision variables in Theorem 1 increases by \(26n^2\), which makes finding feasible solutions of the LMIs more time-consuming and moderately increases the computational complexity. When the LMIs are not too large, this computational burden is not a problem.

In what follows, the \(H_{\infty }\) performance for the neural network (3) will be discussed.

Theorem 2

For given integers \(d_{m},\) \(d_{M},\) \(\mu _{m},\) \(\mu _{M}\) and \(\gamma >0,\) the \(H_{\infty }\) performance analysis problem for system (3) is solvable, if there exist positive definite matrices \(Q_i\in {\mathfrak {R}}^{n\times n}, R_i\in {\mathfrak {R}}^{n\times n}, i=1,2,\) \(S\in {\mathfrak {R}}^{2n\times 2n},\) positive definite diagonal matrices \(J_j\in {\mathfrak {R}}^{n\times n},j=1,2,3,\) symmetric matrices \(P_1\in {\mathfrak {R}}^{3n\times 3n},\) \(P_2\in {\mathfrak {R}}^{2n\times 2n},\) \(U_1,U_2\in {\mathfrak {R}}^{n\times n},\) \(X_k\in {\mathfrak {R}}^{n\times n},\) \({\bar{X}}_k\in {\mathfrak {R}}^{2n\times 2n},k=1,2,3,4,\) matrices \(M_l,\) \(N_l,\) \(H_l,Y_l,{\bar{Y}}_l,l=1,2\) with appropriate dimensions, such that the following LMIs hold :

where

The other notations are the same as those in Theorem 1.

Proof

Consider the same LKF V(k) as in Theorem 1. Denote

It is easy to deduce that

\(\varDelta V(k)-J(k)< 0\) is guaranteed by conditions (34)–(36). Summing over k from 0 to h gives \(\sum ^{h}_{k=0}\varDelta V(k)-\sum ^{h}_{k=0}J(k) <0.\) Under zero-initial conditions, it follows that \(\sum ^{h}_{k=0}v^{\mathrm{T}}(k)v(k) \le \sum ^{h}_{k=0}\gamma ^2 u^{\mathrm{T}}(k)u(k) \). This completes the proof. \(\square \)

Remark 6

To reduce the conservatism of the stability criterion and the \(H_{\infty }\) performance analysis, more information on the system states, the time delay, and the activation functions should be considered. Therefore, many matrix variables are introduced to reflect the relationships between these factors, which results in many complex notations. In practical engineering applications, engineers only need to pay attention to the notations relating to the LMIs in these criteria. By exploiting symmetry, the MATLAB programming can be simplified. The LMIs in these criteria can be easily solved with the LMI toolbox in MATLAB.

Remark 7

Given a scalar \(\gamma >0\), the neural network (3) is said to have \(H_{\infty } \) performance level \(\gamma \) if (i) system (3) is asymptotically stable when the exogenous disturbance input \(u(k)=0\); and (ii) under the zero-initial condition, \(\sum ^{h}_{k=0}v^{\mathrm{T}}(k)v(k) \le \gamma ^2 \sum ^{h}_{k=0}u^{\mathrm{T}}(k)u(k) \) holds for all nonzero \(u(k)\in l_2\) and all integers \( h>0\). The neural network (3) is said to be passive if there exists a constant \(\gamma >0\), such that, for all nonzero inputs \(u(k)\in l_2\) and all integers \( h>0\), \( 2\sum _{k=0}^{h}v^{\mathrm{T}}(k)u(k)\ge -\gamma \sum _{k=0}^{h}u^{\mathrm{T}}(k)u(k)\) under the zero-initial condition. \(H_{\infty }\) performance and passivity are both related to the input, the output, and the index \(\gamma \), and both consider the relationship between input and output under the zero-initial condition. Unlike passivity, \(H_{\infty }\) performance requires that system (3) with the exogenous disturbance \(u(k)=0\) be asymptotically stable. The \(H_{\infty }\) performance index \(\gamma \) prescribes the level of noise attenuation. As a special case of dissipativity, passivity relates the input and output to the storage function.

Remark 8

How to construct an appropriate LKF is a main difficulty in effectively reducing the conservatism of stability criteria. To overcome this difficulty, a delay-product-type LKF term is introduced and three multiple summation LKF terms are constructed in this paper.

4 Numerical examples

Example 1

Consider the discrete-time system (1) with

The maximum admissible delay upper bounds (MADUBs) \(d_M\) for different \(d_m\), such that the neural network (1) is asymptotically stable, are listed in Tables 1 and 2, where \(\mu =-\mu _m=\mu _M\). From Tables 1 and 2, the MADUBs \(d_M\) obtained in this paper are greater than or equal to those in the existing literature, which shows that our approach is less conservative.

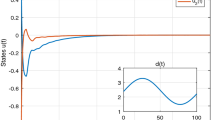

When \(d(k)=17.5+5.5\cos k\pi \), the initial values \(x(k)=\varphi (k)=[0.3,-0.4]^{{\mathrm{T}}}\), \(k\in [-23,0]\), the trajectories of the neural network in Example 1 are depicted in Fig. 1, which shows that the discrete-time system in this example is asymptotically stable. It verifies the effectiveness of the proposed criterion.

Fig. 1 The trajectories of the system in Example 1 with \(d(k)=17.5+5.5\cos k\pi \) and \(x(0)=[0.3,-0.4]^{{\mathrm{T}}}\)

Example 2

Consider the discrete-time system (1) with

The MADUBs \(d_M\) for different \(d_m\), such that the system in this example is asymptotically stable, are listed in Table 3, where \(\mu =-\mu _m=\mu _M\). Table 3 shows that the MADUBs \(d_M\) calculated by our method are larger than those in the existing literature, which shows that our approach is less conservative.

Example 3

Consider the discrete-time system (3) with

Let \(d_m=3\) and \(\mu =-\mu _m=\mu _M\). The optimal \(H_{\infty }\) performance levels \(\gamma \) for different \(d_M\) computed by Theorem 2 and by the methods of Feng and Zheng (2015) and Jin et al. (2018) are listed in Table 4. For \((d_m,d_M)=(3,9)\) and \((d_m,d_M)=(3,11)\), Tables 5 and 6 list the optimal \(H_{\infty }\) performance levels \(\gamma \) for different \(\mu \), respectively. Tables 4, 5 and 6 show that the \(H_{\infty }\) performance level is improved, which means that our results are less conservative.

Set the initial state \(x(k)=[2,-2]^{{\mathrm{T}}},k\in [-13,0]\), \(d(k)=\hbox {int}[8+5*\sin (\frac{k\pi }{4})]\) and \(J(k)=-v^{\mathrm{T}}(k)v(k)+\gamma ^2u^{\mathrm{T}}(k)u(k)\). Figure 2 displays the state response of the system in Example 3 with the exogenous disturbance \(u(k)=\text {col}[2{\mathrm{e}}^{-0.01k}\),\(3{\mathrm{e}}^{-0.02k}]\). The trajectory of \(\sum _{k=0}^{h}J(k)\) is depicted in Fig. 3, which testifies the validity of the results of Theorem 2.
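The delay sequence used in this simulation can be tabulated to confirm that it stays within \(d_m=3\) and \(d_M=13\), which also matches the initial-condition window \(k\in [-13,0]\). The sketch below assumes that \(\hbox {int}[\cdot ]\) denotes rounding down; the small offset guarding against floating-point round-off and the name `delay_example3` are likewise illustrative choices.

```python
import math

def delay_example3(k):
    """d(k) = int[8 + 5 sin(k pi / 4)], with int[.] read as floor (assumption).

    The 1e-9 offset keeps values such as 7.999999999999999, produced by
    round-off in sin(), from being floored to the wrong integer.
    """
    return math.floor(8 + 5 * math.sin(k * math.pi / 4) + 1e-9)

delays = [delay_example3(k) for k in range(200)]
print(min(delays), max(delays))
print(delays[:8])
```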

Fig. 2 The state trajectories of the system in Example 3 with \(d(k)=\hbox {int}[8+5*\sin (\frac{k\pi }{4})]\), \(x(0)=\hbox {col}[2,-2]\) and \(u(k)=\hbox {col}[2{\mathrm{e}}^{-0.01k}\), \(3{\mathrm{e}}^{-0.02k}]\)

Fig. 3 The trajectory of \(\sum _{k=0}^{h}J(k)\) in Example 3 with \(d_m=3\), \(d_M=13\), \(u(k)=[2{\mathrm{e}}^{-0.01k}\), \(3{\mathrm{e}}^{-0.02k}]^{{\mathrm{T}}}\) and \(\gamma =2.8633\)

Example 4

The repressilator model for Escherichia coli with three repressor protein concentrations and their corresponding mRNA concentrations was considered in Elowitz and Leibler (2000). The discrete repressilator model with the stochastic jumping was investigated in Xia et al. (2020). Removing the stochastic jumping factor in Xia et al. (2020), we can obtain the following deterministic discrete repressilator model:

where \(p(k)=[p_{1}(k), p_{2}(k), p_{3}(k)]^{{\mathrm{T}}}\) and \(m(k)=[m_{1}(k), m_{2}(k), m_{3}(k)]^{{\mathrm{T}}}\), \(p_{i}(k)\) and \(m_{i}(k)\) denote concentrations of protein and mRNA at time k, respectively; g(p(k)) is the feedback regulation of the protein on the transcription; the diagonal matrices \(B_1, B_2, B_3\) represent the decay rates of mRNA, the decay rates of protein, and the translation rates of mRNA, respectively; \(D_0,D_1\) are the coupling matrices; \(u_{1}(k)\) and \(u_{2}(k)\) are the external disturbances.

Let \(x(k)=\text {col}[m(k),p(k)]\), \(g(x(k))=\text {col}[g(m(k)),g(p(k))]\), \(B=\begin{bmatrix} B_1&{}\quad 0\\ B_3&{}\quad B_2\\ \end{bmatrix}\), \(W_0=\begin{bmatrix} 0&{}\quad D_0\\ 0&{}\quad 0\\ \end{bmatrix},\) \(W_1=\begin{bmatrix} 0&{}\quad D_1\\ 0&{}\quad 0\\ \end{bmatrix}\), \(u(k)=\begin{bmatrix} u_{1}(k)\\ u_{2}(k)\\ \end{bmatrix}\), \(u_{i}(k)=\begin{bmatrix} u_{i1}(k)\\ u_{i2}(k)\\ u_{i3}(k)\\ \end{bmatrix}\), \(i=1,2\).

Then, (37) can be rewritten as follows:

Set \(B_1=\begin{bmatrix} 0.2&{}\quad 0&{}\quad 0\\ 0&{}\quad 0.2&{}\quad 0\\ 0&{}\quad 0&{}\quad 0.2 \end{bmatrix}\), \(B_2=\begin{bmatrix} 0.1&{}\quad 0&{}\quad 0\\ 0&{}\quad 0.1&{}\quad 0\\ 0&{}\quad 0&{}\quad 0.1 \end{bmatrix}\), \(B_3=\begin{bmatrix} 0.09&{}\quad 0&{}\quad 0\\ 0&{}\quad 0.09&{}\quad 0\\ 0&{}\quad 0&{}\quad 0.09 \end{bmatrix}\), \(D_0=-0.5V\), \(D_1=-0.1V\), \(V=\begin{bmatrix} 0&{}\quad 0&{}\quad 1\\ 1&{}\quad 0&{}\quad 0\\ 0&{}\quad 1&{}\quad 0\\ \end{bmatrix}\), and let the regulation function be \(g(x)=\frac{x^2}{1+x^2}\). It can be verified that the activation function \(g(\cdot )\) satisfies (2) with \(l_i^-=0,l_i^+=0.65, i=1,2,\ldots ,6.\)
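The slope bound \(l_i^+=0.65\) can be checked numerically. The sketch below assumes that condition (2) is the usual sector condition on the difference quotient of \(g\), and it restricts attention to \(x\ge 0\), which is the natural range for concentrations. For negative arguments the derivative of \(g\) changes sign, so the lower bound would need separate treatment. The derivative \(g'(x)=2x/(1+x^2)^2\) attains its maximum \(9/(8\sqrt{3})\approx 0.6495\) at \(x=1/\sqrt{3}\), and by the mean value theorem every difference quotient on \(x\ge 0\) lies between the minimum and maximum of \(g'\) there.

```python
import math


def g(x):
    """Regulation function g(x) = x^2 / (1 + x^2) from Example 4."""
    return x * x / (1.0 + x * x)


def g_prime(x):
    """Derivative g'(x) = 2x / (1 + x^2)^2."""
    return 2.0 * x / (1.0 + x * x) ** 2


# Grid on [0, 20]; the range and step are illustrative choices.
grid = [i * 1e-3 for i in range(20001)]
slopes = [g_prime(x) for x in grid]
max_slope = max(slopes)
min_slope = min(slopes)

# Closed-form maximum of g', attained at x = 1/sqrt(3).
analytic_max = 9.0 / (8.0 * math.sqrt(3.0))

print("max slope on grid:", max_slope)
print("analytic maximum :", analytic_max)
print("within [0, 0.65] :", 0.0 <= min_slope and max_slope <= 0.65)
```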

Fig. 4 The state trajectories of the system in Example 4 with \(d(k)=\hbox {int}[49.5+0.5\cos (\frac{k\pi }{4})]\), \(x(0)=[2,1,-0.8,0.7,-0.9,-1.5]^{{\mathrm{T}}}\) and \(u_{ij}(k)=0.015\sin (0.02k),i=1,2,j=1,2,3\)

If \(d_m=1\), the MADUB \(d_M\) calculated by Theorem 1 of Zhang et al. (2017a) is 316, that is, the discrete repressilator system is shown to be asymptotically stable for \(1\le d(k)\le 316\). In contrast, the range of d(k) derived by Theorem 1 in this paper is \(1\le d(k)\le 1101\). Compared with Theorem 1 of Zhang et al. (2017a), our stability criterion therefore provides a less conservative result.

Set \(d_m=4,d_M=50\). For \(\mu \ge 1\), applying Theorem 2 in this paper gives the allowable minimum \(H_{\infty }\) performance level \(\gamma =1.8100\).

Let the delay be \(d(k)=\hbox {int}[49.5+0.5\cos (\frac{k\pi }{4})]\), the disturbance be \(u_{ij}(k)=0.015\sin (0.02k)\), \(i=1,2, j=1,2,3\), and the initial value be \(x(0)=[2,1,-0.8,0.7,-0.9,-1.5]^{{\mathrm{T}}}\). The state trajectories of the considered system are shown in Fig. 4, which indicates that the synthetic genetic regulatory network is asymptotically stable.
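A trajectory of this kind can be reproduced with a few lines of Python. The sketch below is illustrative only and rests on assumptions that are not confirmed by the text: the rewritten model is taken to have the form \(x(k+1)=Bx(k)+W_0g(x(k))+W_1g(x(k-d(k)))+u(k)\), the initial history is taken to be constant and equal to \(x(0)\) on \([-50,0]\), \(\hbox {int}[\cdot ]\) is read as the integer part, and all helper names (`block2x2`, `matvec`, `simulate`, and so on) are hypothetical. A single simulated trajectory illustrates, but does not prove, asymptotic stability.

```python
import math


def g(x):
    return x * x / (1.0 + x * x)


def diag3(value):
    return [[value if i == j else 0.0 for j in range(3)] for i in range(3)]


def scale(c, matrix):
    return [[c * entry for entry in row] for row in matrix]


def block2x2(a11, a12, a21, a22):
    """Assemble a 6x6 matrix from four 3x3 blocks."""
    top = [r1 + r2 for r1, r2 in zip(a11, a12)]
    bottom = [r1 + r2 for r1, r2 in zip(a21, a22)]
    return top + bottom


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


Z = diag3(0.0)
V = [[0, 0, 1], [1, 0, 0], [0, 1, 0]]
B = block2x2(diag3(0.2), Z, diag3(0.09), diag3(0.1))  # [[B1, 0], [B3, B2]]
W0 = block2x2(Z, scale(-0.5, V), Z, Z)                # [[0, D0], [0, 0]]
W1 = block2x2(Z, scale(-0.1, V), Z, Z)                # [[0, D1], [0, 0]]


def delay(k):
    # Small offset guards against rounding noise when cos(.) should equal 1.
    return int(math.floor(49.5 + 0.5 * math.cos(k * math.pi / 4) + 1e-9))


def simulate(steps=300):
    x0 = [2.0, 1.0, -0.8, 0.7, -0.9, -1.5]
    # Assumed constant initial history on [-50, 0].
    hist = {k: x0[:] for k in range(-50, 1)}
    for k in range(steps):
        x, xd = hist[k], hist[k - delay(k)]
        u = [0.015 * math.sin(0.02 * k)] * 6
        terms = (matvec(B, x),
                 matvec(W0, [g(e) for e in x]),
                 matvec(W1, [g(e) for e in xd]),
                 u)
        hist[k + 1] = [sum(parts) for parts in zip(*terms)]
    return [hist[k] for k in range(steps + 1)]


trajectory = simulate()
final_peak = max(abs(e) for e in trajectory[-1])
print("delays used:", sorted({delay(k) for k in range(300)}))
print("largest |x_i| at the final step:", final_peak)
```

With these settings the delay only takes values inside \([d_m,d_M]=[4,50]\), and the state settles into a small neighbourhood of the origin whose size is governed by the disturbance amplitude.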

5 Conclusions

In this paper, the stability and \(H_{\infty }\) performance of discrete-time neural networks with a time-varying delay have been investigated. A new free-matrix-based summation inequality has been proposed and applied to estimate the single summation terms. By constructing a suitable LKF with a delay-product-type term and bounding its forward difference with the proposed inequality and the improved reciprocally convex inequality, we have derived less conservative conditions for stability and \(H_{\infty }\) performance, respectively. Four numerical examples have been given to verify the validity of the proposed criteria.

Data availability statement

Data sharing is not applicable to this article as no datasets were generated or analysed during the current study.

References

Banu LJ, Balasubramaniam P (2016) Robust stability analysis for discrete-time neural networks with time-varying leakage delays and random parameter uncertainties. Neurocomputing 179:126–134

Banu LJ, Balasubramaniam P, Ratnavelu K (2015) Robust stability analysis for discrete-time uncertain neural networks with leakage time-varying delay. Neurocomputing 151:808–816

Chen J, Lu JW, Xu SY (2016) Summation inequality and its application to stability analysis for time-delay systems. IET Control Theory Appl 10(4):391–395

Chen J, Park JH, Xu SY (2019) Stability analysis of discrete-time neural networks with an interval-like time-varying delay. Neurocomputing 329:248–254

Chen J, Park JH, Xu SY (2020) Stability analysis for delayed neural networks with an improved general free-matrix-based integral inequality. IEEE Trans Neural Netw Learn Syst 31:675–684

Elowitz MB, Leibler S (2000) A synthetic oscillatory network of transcriptional regulators. Nature 403:335–338

Feng ZG, Zheng WX (2015) On extended dissipativity of discrete-time neural networks with time delay. IEEE Trans Neural Netw Learn Syst 26(12):3293–3300

Gabrijel I, Dobnikar A (2003) On-line identification and reconstruction of finite automata with generalized recurrent neural networks. Neural Netw 16:101–120

Gu K, Kharitonov VL, Chen J (2003) Stability of time-delay systems. Birkhäuser, Boston

Haykin S (1998) Neural networks: a comprehensive foundation, 3rd edn. Macmillan, London

He J, Liang Y, Yang F, Yang F (2020) New \(H_{\infty }\) state estimation criteria of delayed static neural networks via the Lyapunov–Krasovskii functional with negative definite terms. Neural Netw 123:236–247

Huang H, Huang T, Chen X (2013) Guaranteed \(H_{\infty }\) performance state estimation of delayed static neural networks. IEEE Trans Circuits Syst II 60(6):371–375

Huang H, Huang T, Chen X (2015) Further result on guaranteed \(H_{\infty }\) performance state estimation of delayed static neural networks. IEEE Trans Neural Netw Learn Syst 26:1335–1341

Jin L, He Y, Jiang L, Wu M (2018) Extended dissipativity analysis for discrete-time delayed neural networks based on an extended reciprocally convex matrix inequality. Inf Sci 462:357–366

Kim JH (2016) Further improvement of Jensen inequality and application to stability of time-delayed systems. Automatica 64:121–125

Kwon OM, Park MJ, Park JH, Lee SM, Cha EJ (2013) New criteria on delay-dependent stability for discrete-time neural networks with time-varying delays. Neurocomputing 121:185–194

Kwon OM, Park MJ, Park JH, Lee SM (2016) Improvement on the feasible region of \(H_{\infty }\) performance and stability for systems with interval time-varying delays via augmented Lyapunov–Krasovskii functional. J Frankl Inst 353:4979–5000

Lee WI, Lee SY, Park PG (2014) Improved criteria on robust stability and \(H_{\infty }\) performance for linear systems with interval time-varying delays via new triple integral functionals. Appl Math Comput 243:570–577

Liu GP (2002) Nonlinear identification and control: a neural network approach. Ind Robot 29(5):469–470

Liu XG, Wang FX, Tang ML (2017) Auxiliary function-based summation inequalities and their applications to discrete-time systems. Automatica 78:211–215

Mathiyalagan K, Sakthivel R, Anthoni SM (2012) Exponential stability result for discrete-time stochastic fuzzy uncertain neural networks. Phys Lett A 376(8–9):901–912

Meng X, Lam J, Du B, Gao HJ (2010) A delay-partitioning approach to the stability analysis of discrete-time systems. Automatica 46(3):610–614

Nam PT, Luu TH (2020) A new delay-variation-dependent stability criterion for delayed discrete-time systems. J Frankl Inst 357:6951–6967

Nam PT, Trinh H, Pathirana PN (2015) Discrete inequalities based on multiple auxiliary functions and their applications to stability analysis of time-delay systems. J Frankl Inst 352(12):5810–5831

Park PG, Ko JW, Jeong CK (2011) Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47:235–238

Park MJ, Kwon OM, Park JH, Lee SM, Cha EJ (2015) \(H_{\infty }\) state estimation for discrete-time neural networks with interval time-varying and probabilistic diverging disturbances. Neurocomputing 153:255–270

Qiu SB, Liu XG, Wang FX, Chen Q (2019) Stability and passivity analysis of discrete-time linear systems with time-varying delay. Syst Control Lett 134:104543

Seuret A, Gouaisbaut F (2016) Delay-dependent reciprocally convex combination lemma. Rapport LAAS no. 16006 hal-01257670

Seuret A, Gouaisbaut F, Fridman E (2015) Stability of discrete-time systems with time-varying delays via a novel summation inequality. IEEE Trans Autom Control 60(10):2740–2745

Shi C, Hoi K, Vong S (2021) Free-weighting-matrix inequality for exponential stability for neural networks with time-varying delay. Neurocomputing 466:221–228

Song QK, Wang ZD (2007) A delay-dependent LMI approach to dynamics analysis of discrete-time recurrent neural networks with time-varying delays. Phys Lett A 368(1–2):134–145

Song CW, Gao HJ, Zheng WX (2009) A new approach to stability analysis of discrete-time recurrent neural networks with time-varying delay. Neurocomputing 72:2563–2568

Tan GQ, Wang ZS (2021) \(H_{\infty }\) performance analysis for delayed Markovian jump neural networks via the Lyapunov–Krasovskii functional with delay-product-type terms. J Frankl Inst 358:8609–8624

Tian YF, Wang ZS (2021) A new result on \(H_{\infty }\) performance state estimation for static neural networks with time-varying delays. Appl Math Comput 388:125556

Wang T, Xue MX, Fei SM, Li T (2013) Triple Lyapunov functional technique on delay-dependent stability for discrete-time dynamical networks. Neurocomputing 122:221–228

Wu M, Liu F, Shi P, He Y, Yokoyama R (2008) Improved free-weighting matrix approach for stability analysis of discrete-time recurrent neural networks with time-varying delay. IEEE Trans Circuits Syst II Express Briefs 55(7):690–694

Wu ZG, Su HY, Chu J, Zhou WN (2010) Improved delay-dependent stability condition of discrete recurrent neural networks with time-varying delays. IEEE Trans Neural Netw 21(4):692–697

Xia WF, Xu SY, Lu JW, Zhang ZQ, Chu YM (2020) Reliable filter design for discrete-time neural networks with Markovian jumping parameters and time-varying delay. J Frankl Inst 357:2892–2915

Zeng HB, He Y, Wu M, She J (2015) Free-matrix-based integral inequality for stability analysis of systems with time-varying delay. IEEE Trans Autom Control 60(10):2768–2772

Zhang XM, Han QL (2018) State estimation for static neural networks with time-varying delays based on an improved reciprocally convex inequality. IEEE Trans Neural Netw Learn Syst 29:1376–1381

Zhang BY, Xu SY, Zou Y (2008) Improved delay-dependent exponential stability criteria for discrete-time recurrent neural networks with time-varying delays. Neurocomputing 72:321–330

Zhang CK, He Y, Jiang L, Wu M, Zeng HB (2016) Delay-variation-dependent stability of delayed discrete-time systems. IEEE Trans Autom Control 61(9):2663–2669

Zhang CK, He Y, Jiang L, Wang QG, Wu M (2017a) Stability analysis of discrete-time neural networks with time-varying delay via an extended reciprocally convex matrix inequality. IEEE Trans Cybern 47(10):3040–3049

Zhang CK, He Y, Jiang L, Wu M, Zeng HB (2017b) Summation inequalities to bounded real lemmas of discrete-time systems with time-varying delay. IEEE Trans Autom Control 62(5):2582–2588

Zhang XM, Han QL, Ge XH (2021) A novel approach to \(H_{\infty }\) performance analysis of discrete-time networked systems subject to network-induced delays and malicious packet dropouts. Automatica. https://doi.org/10.1016/j.automatica.2021.110010

Acknowledgements

The authors would like to thank the editor and anonymous reviewers for their valuable comments and suggestions.

Funding

This work is partly supported by the National Natural Science Foundation of China under Grant nos. 61773404 and 61271355, Fundamental Research Funds for the Central Universities of Central South University no. 2018zzts098, and Scientific Research Fund of Hunan Provincial Education Department no. 20C0349.

Contributions

All authors contributed equally and significantly in writing this paper. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest regarding this work.

Additional information

Communicated by Graçaliz Pereira Dimuro.

Cite this article

Chen, Q., Liu, X., Guo, P. et al. Improved results on stability and \(H_{\infty }\) performance analysis for discrete-time neural networks with time-varying delay. Comp. Appl. Math. 41, 206 (2022). https://doi.org/10.1007/s40314-022-01902-6

DOI: https://doi.org/10.1007/s40314-022-01902-6