Abstract

This paper presents a new computational method for the approximate solution of the space fractional advection–dispersion equation with spatial derivatives in the Caputo sense. In this method, the time derivative is discretized by a compact finite difference scheme, while fourth kind shifted Chebyshev polynomials are used to discretize the spatial derivatives. The unconditional stability and the convergence order of the method are established via the energy method. Three examples illustrate the effectiveness and accuracy of the new scheme in comparison with existing numerical methods reported in the literature.


1 Introduction

Fractional calculus (FC) can be viewed as the generalization of classical calculus to non-integer orders (Podlubny 1998; Oldham and Spanier 1974; Milici et al. 2018). In recent years, FC has gained considerable popularity and importance in various fields of science and engineering including economics, optimal control, materials, chemistry, physics, and social science (Ortigueira and Machado 2020; Tenreiro Machado and Lopes 2019; Rigi and Tajadodi 2019; Mahmoudi et al. 2019). In fact, due to the adequacy of fractional derivatives for capturing memory effects, many physical systems can be well described by means of fractional differential equations (Toubaei et al. 2019; Golbabai et al. 2019b, a; Nikan et al. 2020).

We consider the general advection–dispersion equation, which is commonly used to describe the transient transport of solutes, as

where \(D_{L}=D_{e}+\alpha _{L}\nu \), \(D_{L}>0\) and \(\nu > 0\). Table 1 lists the parameters and variables appearing in Eq. (1).

Fractional space derivatives are used to model anomalous diffusion or dispersion, in which a particle spreads at a rate inconsistent with the classical Brownian motion model. Model (1) is based on Fick's law and describes the transport of passive tracers carried by a fluid flow through a porous medium (Liu et al. 2004). The fractional advection–dispersion equation (FADE) is a fundamental equation of motion used to model water flow (Hu et al. 2016) and material transport and diffusion (Hernandez et al. 1995). For convenience and without loss of generality, we introduce the dimensionless space, time, and concentration variables by

respectively. Then the dimensionless advection–dispersion equation (ADE) can be rewritten as

where the constants \(\gamma \) and \(\mu \) are the dispersion coefficient and the average fluid velocity, respectively. Owing to the non-local nature of fractional derivatives, we consider a fractional-order counterpart of Eq. (2) for modeling transport phenomena in groundwater hydrology. The space fractional advection–dispersion equation (SFADE) is obtained from the classical equation by replacing the first-order and the second-order spatial derivatives with Caputo fractional derivatives of orders \(\beta \in (0, 1]\) and \(\alpha \in (1, 2]\), respectively. The SFADE reads

In addition, the advection–dispersion equation (ADE) of integer or fractional order is widely used in environmental engineering and aviation (Liu et al. 2016), as well as in marine (Farahani et al. 2015), chemical (Colla et al. 2015), and metallurgical (Zaib and Shafie 2014) applications. Therefore, developing efficient numerical schemes for the ADE is important from both theoretical and practical points of view.

Hereafter we outline some preliminary concepts of fractional derivatives that are useful in the subsequent discussion (Podlubny 1998; Oldham and Spanier 1974; Milici et al. 2018).

Definition 1

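The Caputo fractional derivative of order \(\beta >0\) with respect to x, where \(n-1<\beta <n\) and \(n\in {\mathbb {N}}\), can be defined as
\[{{\mathcal {D}}}_{x}^{\beta }u(x, t)=\frac{1}{\varGamma (n-\beta )}\int _{0}^{x}(x-s)^{n-\beta -1}\frac{\partial ^{n}u(s, t)}{\partial s^{n}}\,ds,\]
with \({{\mathcal {D}}}_{x}^{\beta }u(x, t)=\frac{\partial ^{n}u(x, t)}{\partial x^{n}}\) when \(\beta =n\).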

Remark 1

Some important properties of the Caputo derivative \({{\mathcal {D}}}_{x}^{\beta }\) are listed below:

1. \({{\mathcal {D}}}_{x}^{\beta }x^{\alpha }= \frac{\varGamma (1+\alpha )}{\varGamma (1+\alpha -\beta )}x^{\alpha -\beta }\) for \(\alpha > \lceil \beta \rceil -1\), while \({{\mathcal {D}}}_{x}^{\beta }x^{k}=0\) for \(k=0, 1, \ldots , \lceil \beta \rceil -1\);

2. \( {{\mathcal {D}}}_{x}^{\beta }(\gamma f(x, t)+ \eta u(x, t))=\gamma {{\mathcal {D}}}_{x}^{\beta }f(x, t)+\eta {{\mathcal {D}}}_{x}^{\beta }u(x, t)\) for constants \(\gamma \) and \(\eta \);

3. \( {{\mathcal {D}}}_{x}^{\beta }{{\mathcal {D}}}_{x}^{n}u(x, t)={{\mathcal {D}}}_{x}^{\beta +n}u(x, t)\ne {{\mathcal {D}}}_{x}^{n}{{\mathcal {D}}}_{x}^{\beta }u(x, t)\) in general.
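Property 1 can be checked numerically. The sketch below is only an illustration under stated assumptions: it handles \(0<\beta <1\) and a power \(p\ge 1\) (written p instead of \(\alpha \) to avoid a clash with the SFADE order), evaluates the Caputo integral by quadrature after a change of variables that removes the weak singularity, and compares the result with the Gamma-function formula. The function names are hypothetical.

```python
import math


def caputo_power_exact(p, beta, x):
    # Property 1 of Remark 1 (power rule), written with exponent p.
    return math.gamma(p + 1) / math.gamma(p + 1 - beta) * x ** (p - beta)


def caputo_power_numeric(p, beta, x, n_intervals=2000):
    """Caputo derivative of order beta in (0, 1) of f(s) = s**p (p >= 1) at x > 0.

    Evaluates (1 / Gamma(1 - beta)) * int_0^x (x - s)**(-beta) * f'(s) ds after
    the substitution w = (x - s)**(1 - beta), which removes the singularity at
    s = x, and applies the composite Simpson rule in w.
    """
    if not (0.0 < beta < 1.0 and p >= 1.0 and x > 0.0):
        raise ValueError("sketch assumes 0 < beta < 1, p >= 1, x > 0")
    if n_intervals % 2:
        raise ValueError("Simpson's rule needs an even number of intervals")
    expo = 1.0 / (1.0 - beta)
    upper = x ** (1.0 - beta)
    h = upper / n_intervals

    def integrand(w):
        s = max(x - w ** expo, 0.0)  # guard against tiny negative round-off
        return p * s ** (p - 1.0)    # f'(s) for f(s) = s**p

    total = integrand(0.0) + integrand(upper)
    for k in range(1, n_intervals):
        total += (4.0 if k % 2 else 2.0) * integrand(k * h)
    integral = total * h / 3.0 / (1.0 - beta)  # factor 1/(1 - beta) comes from dw
    return integral / math.gamma(1.0 - beta)
```

For example, `caputo_power_numeric(2, 0.5, 0.7)` and `caputo_power_exact(2, 0.5, 0.7)` agree to near machine precision, because the transformed integrand is then a quadratic polynomial in w.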

In this article, we propose a numerical approach for computing the approximate solution of the following SFADE:

with the initial condition

and boundary conditions

in which \(0< \beta \le 1,\quad 1 < \alpha \le 2 \).

Several numerical algorithms have been proposed for solving the SFADE. Ervin and Roop (2007) developed a variational formulation of the FADE on bounded domains. Su et al. (2010) used a weighted average finite difference method. Khader and Sweilam (2014) adopted a Legendre pseudo-spectral method. Saw and Kumar (2018, 2019) applied Chebyshev collocation methods to obtain approximate solutions of the SFADE. Safdari et al. (2020a) applied a fourth kind shifted Chebyshev collocation method to the space-time fractional advection–diffusion equation, and Safdari et al. (2020b) analyzed a compact finite difference scheme for the space fractional-order diffusion equation. Aghdam et al. (2020) formulated a spectral collocation method to approximate the SFADE.

The rest of this paper is organized as follows. Section 2 presents the operational matrices of the fourth kind Chebyshev polynomials (FKCP) for the fractional derivative. Section 3 describes the approximation of the fractional operator \({{\mathcal {D}}}_{x}^{\alpha }u(x, t)\) and implements the Chebyshev collocation approach to solve (4): the fourth kind shifted Chebyshev polynomials (FKSCP) and the compact finite difference are used to discretize the SFADE in the spatial and temporal variables, respectively. Section 4 analyzes the stability and convergence of the time-discrete scheme. Section 5 presents three numerical examples illustrating the effectiveness and accuracy of the new scheme. Finally, Sect. 6 presents the main conclusions.

2 Some properties of the FKSCP

The FKCP \({\mathcal {W}}_{i}(x)\), defined on \([-1, 1]\), are orthogonal polynomials of degree i given by

where \(P_{i}^{(r , s)}(x)\) is the Jacobi polynomial orthogonal with respect to the weight function \(\omega ^{(r , s)}(x)=(1-x)^{r}(1+x)^{s}\) over \([-1, 1]\), such that

\({\mathcal {W}}_{i}(x)\) can also be written explicitly as

where

The polynomials \({\mathcal {W}}_{i}(x)\) are orthogonal on \([-1, 1]\) with respect to the weight function \(\sqrt{\frac{1-x}{1+x}}\), with the inner product

On the interval [0, 1], the FKSCP \({\mathcal {W}}_{i}^{*}(x)={\mathcal {W}}_{i}(2x-1)\) are defined as follows:

These polynomials are orthogonal on [0, 1] with respect to the weight function \(\sqrt{\frac{1-x}{x}}\):
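A minimal sketch of the FKSCP follows, assuming the standard three-term recurrence \({\mathcal {W}}_{0}=1\), \({\mathcal {W}}_{1}(y)=2y+1\), \({\mathcal {W}}_{k}(y)=2y{\mathcal {W}}_{k-1}(y)-{\mathcal {W}}_{k-2}(y)\) with \(y=2x-1\). Since \({\mathcal {W}}_{i}(\cos \theta )=\sin ((i+\frac{1}{2})\theta )/\sin (\frac{\theta }{2})\), the substitution \(x=(1+\cos \theta )/2\) turns the weighted inner product on [0, 1] into \(\int _{0}^{\pi }\sin ((m+\frac{1}{2})\theta )\sin ((n+\frac{1}{2})\theta )\,d\theta =\frac{\pi }{2}\delta _{mn}\), which the code checks numerically. The same identity gives the roots \(x_{k}=\cos ^{2}(k\pi /(2n+1))\), the kind of points used later for collocation. All function names are hypothetical.

```python
import math


def shifted_w(i, x):
    """Fourth kind shifted Chebyshev polynomial W*_i(x) = W_i(2x - 1) on [0, 1]."""
    y = 2.0 * x - 1.0
    prev, curr = 1.0, 2.0 * y + 1.0  # W_0 and W_1
    if i == 0:
        return prev
    for _ in range(i - 1):
        prev, curr = curr, 2.0 * y * curr - prev
    return curr


def weighted_inner(m, n, n_theta=256):
    """<W*_m, W*_n> with weight sqrt((1 - x)/x) on [0, 1].

    With x = (1 + cos t)/2 the integrand becomes a trigonometric polynomial in
    t on (0, pi), which the midpoint rule integrates exactly for modest m, n.
    """
    h = math.pi / n_theta
    total = 0.0
    for k in range(n_theta):
        t = (k + 0.5) * h
        x = 0.5 * (1.0 + math.cos(t))
        weight = math.sqrt((1.0 - x) / x)
        jac = 0.5 * math.sin(t)  # |dx/dt|
        total += shifted_w(m, x) * shifted_w(n, x) * weight * jac
    return total * h


def shifted_w_roots(n):
    """Roots of W*_n: x_k = cos^2(k*pi/(2n + 1)), k = 1, ..., n, in increasing order."""
    return sorted(math.cos(k * math.pi / (2 * n + 1)) ** 2 for k in range(1, n + 1))
```

For instance, `weighted_inner(3, 3)` is close to \(\pi /2\), `weighted_inner(2, 5)` is close to zero, and `shifted_w_roots(1)` returns a value of approximately 0.25, the root of \({\mathcal {W}}_{1}^{*}(x)=4x-1\).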

Let g(x) be a square-integrable function on [0, 1]. Then g(x) may be expanded in terms of \( {\mathcal {W}}^{*}_{i}(x) \) as

where the coefficients \(c_{i}, i=0, 1, \ldots , N\), are defined by
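The expansion coefficients can be computed numerically. This sketch assumes they are the usual orthogonal-projection coefficients, \(c_{i}=\frac{2}{\pi }\int _{0}^{1}g(x){\mathcal {W}}_{i}^{*}(x)\sqrt{\frac{1-x}{x}}\,dx\), where \(2/\pi \) is the reciprocal of \(\Vert {\mathcal {W}}_{i}^{*}\Vert ^{2}=\pi /2\). The test function \(x^{2}-x^{3}\) is the spatial profile of Example 3; the helper names are hypothetical.

```python
import math


def shifted_w(i, x):
    # W*_i(x) = W_i(2x - 1) via W_0 = 1, W_1 = 2y + 1, W_k = 2y W_{k-1} - W_{k-2}.
    y = 2.0 * x - 1.0
    prev, curr = 1.0, 2.0 * y + 1.0
    if i == 0:
        return prev
    for _ in range(i - 1):
        prev, curr = curr, 2.0 * y * curr - prev
    return curr


def w_coefficients(g, n_terms, n_theta=256):
    """c_i = (2/pi) * int_0^1 g(x) W*_i(x) sqrt((1 - x)/x) dx for i = 0..n_terms-1.

    The integral is evaluated in the angle t, with x = (1 + cos t)/2, by the
    midpoint rule (exact when g is a low-degree polynomial).
    """
    h = math.pi / n_theta
    coeffs = []
    for i in range(n_terms):
        total = 0.0
        for k in range(n_theta):
            t = (k + 0.5) * h
            x = 0.5 * (1.0 + math.cos(t))
            weight_jac = math.sqrt((1.0 - x) / x) * 0.5 * math.sin(t)
            total += g(x) * shifted_w(i, x) * weight_jac
        coeffs.append(2.0 / math.pi * total * h)
    return coeffs


def w_series(coeffs, x):
    """Evaluate the truncated expansion sum_i c_i W*_i(x)."""
    return sum(c * shifted_w(i, x) for i, c in enumerate(coeffs))
```

Because \(x^{2}-x^{3}\) is a cubic, `w_coefficients(lambda x: x**2 - x**3, 6)` returns coefficients whose entries beyond index 3 vanish up to round-off, and the first four terms reproduce the function exactly.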

The fractional derivative of \({\mathcal {W}}_{i}^{*}(x)\) is formulated based on the linearity of the Caputo definition

where \(\lceil \omega \rceil \) denotes the ceiling of \(\omega \), that is, the smallest integer greater than or equal to \(\omega \). The closed form of \({\mathcal {D}}^{\omega }({\mathcal {W}}_{i}^{*}(x))\) can be written as

and \(N_{i, k, \xi }^{\omega ,\lceil \omega \rceil }\) is defined by

Using the properties listed in Remark 1 and combining Eqs. (7), (9) and (10), we have
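Formula (11) itself is not reproduced here, but the idea behind it can be sketched: expand \({\mathcal {W}}_{i}^{*}\) in powers of x and apply property 1 of Remark 1 term by term, discarding the powers \(k<\lceil \omega \rceil \) that the Caputo derivative annihilates. The sketch below follows this route under that assumption (it is an equivalent computation, not the paper's closed form); the names are hypothetical.

```python
import math


def shifted_w_monomial(i):
    """Integer power-basis coefficients a_k of W*_i(x) = sum_k a_k x^k, from
    W*_0 = 1, W*_1 = 4x - 1, W*_k = (4x - 2) W*_{k-1} - W*_{k-2}."""
    prev, curr = [1], [-1, 4]
    if i == 0:
        return prev
    for _ in range(i - 1):
        nxt = [0] * (len(curr) + 1)
        for k, a in enumerate(curr):
            nxt[k] -= 2 * a      # -2 * W*_{k-1}
            nxt[k + 1] += 4 * a  # 4x * W*_{k-1}
        for k, a in enumerate(prev):
            nxt[k] -= a          # -W*_{k-2}
        prev, curr = curr, nxt
    return curr


def caputo_shifted_w(i, omega, x):
    """Caputo derivative of order omega > 0 of W*_i at x > 0, term by term:
    D^omega x^k = Gamma(k+1)/Gamma(k+1-omega) x^(k-omega) for k >= ceil(omega),
    and 0 for k < ceil(omega)."""
    n = math.ceil(omega)
    total = 0.0
    for k, a in enumerate(shifted_w_monomial(i)):
        if k >= n:
            total += a * math.gamma(k + 1) / math.gamma(k + 1 - omega) * x ** (k - omega)
    return total
```

As a sanity check, \({\mathcal {W}}_{2}^{*}(x)=16x^{2}-12x+1\), so `caputo_shifted_w(2, 2.0, x)` returns 32 for any x > 0, and integer orders reproduce ordinary derivatives.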

3 Numerical scheme

To discretize (4) in time, we consider the nodes \(t_j = j \delta \tau ~ (j = 0, 1,\ldots , M)\) in the time domain [0, T], so that \(0 = t_0< t_1<\cdots < t_{M} = T\) with step size \(\delta \tau =T/M\) for some positive integer M, and we define the collocation points \(\lbrace x_{r-1}\rbrace _{r=1}^{N+1-\lceil \upsilon \rceil }\) as the roots of the FKSCP \({\mathcal {W}}^{*}_{N+1-\lceil \upsilon \rceil }(x)\). Based on the Taylor formula for \(u(x, t)\in {\mathbb {C}}^{3}(0, 1)\), we have

where \(P_{\delta \tau }u(x_{r}, t_{j})=\frac{u_{r}^{j}-u_{r}^{j-1}}{\delta \tau }\). Discretizing (4) at the grid points \((x_{r}, t_{j})\) and substituting (12) yields

with

and notice that

Substituting Eq. (14) into \(T_{j}\) and into Eq. (13), we obtain

Let \(u(x_{r}, t_{j})=U_{r}^{j}\) and \(q(x_{r}, t_{j})=q_{r}^{j}\). Then the semi-discrete scheme reads

where \({\mathcal {R}}^{j}(x)\) denotes the truncation term. To fully discretize (4), we need to approximate the Caputo derivatives \(\frac{{\partial }^{\alpha } U_{r}^{j}}{\partial x^{\alpha }}\) and \(\frac{{\partial }^{\beta } U_{r}^{j}}{\partial x^{\beta }}\) using Eq. (11). In the Chebyshev collocation scheme, the approximate solution u(x, t) is represented as

In view of relations (11), (16) and (17), we have

where \({\mathfrak {u}}_{i}^{j}\) denotes the coefficients at time level \(t_{j}\). Using the roots of the FKSCP, \(\{x_{r}\}_{r=1}^{N+1-\lceil \upsilon \rceil }\), we collocate Eq. (18) as follows:

Substituting the boundary conditions given in Eq. (6) into (17), we obtain the \(\lceil \upsilon \rceil \) equations

Equation (19), together with the \(\lceil \upsilon \rceil \) boundary equations (20), yields a system of \(N + 1\) algebraic equations from which the unknowns \({\mathfrak {u}}_{i}^{j}, i = 0, 1,\ldots , N\), can be determined.
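The assembly of Eqs. (19)–(20) and the time stepping can be sketched in code. This is not the authors' implementation, and it rests on several assumptions: problem (4) is taken as \(u_{t}=\gamma {{\mathcal {D}}}_{x}^{\alpha }u-\mu {{\mathcal {D}}}_{x}^{\beta }u+q\) on (0, 1) with \(u(0, t)=u(1, t)=0\) (the form suggested by Eq. (2) and the source term q, which are not shown here); the compact time discretization is replaced by a plain Crank–Nicolson average; \(\lceil \upsilon \rceil \) is taken to be \(\lceil \alpha \rceil =2\); and \(\gamma =\mu =1\) is chosen arbitrarily for the test. The manufactured solution is the one of Example 3, \(u=(x^{2}-x^{3})\cos t\). All function names are hypothetical.

```python
import math


def shifted_w(i, x):
    # W*_i(x) = W_i(2x - 1) via W_0 = 1, W_1 = 2y + 1, W_k = 2y W_{k-1} - W_{k-2}.
    y = 2.0 * x - 1.0
    prev, curr = 1.0, 2.0 * y + 1.0
    if i == 0:
        return prev
    for _ in range(i - 1):
        prev, curr = curr, 2.0 * y * curr - prev
    return curr


def monomial(i):
    # Power-basis coefficients of W*_i from W*_k = (4x - 2) W*_{k-1} - W*_{k-2}.
    prev, curr = [1], [-1, 4]
    if i == 0:
        return prev
    for _ in range(i - 1):
        nxt = [0] * (len(curr) + 1)
        for k, a in enumerate(curr):
            nxt[k] -= 2 * a
            nxt[k + 1] += 4 * a
        for k, a in enumerate(prev):
            nxt[k] -= a
        prev, curr = curr, nxt
    return curr


def caputo_w(i, omega, x):
    # Caputo derivative of W*_i at x > 0, using the power rule term by term.
    n = math.ceil(omega)
    return sum(a * math.gamma(k + 1) / math.gamma(k + 1 - omega) * x ** (k - omega)
               for k, a in enumerate(monomial(i)) if k >= n)


def solve_linear(a, b):
    # Dense Gaussian elimination with partial pivoting (adequate for tiny N).
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def solve_sfade(alpha, beta, gam, mu, q, u0, N, M, T):
    # Assumed model: u_t = gam*D^alpha u - mu*D^beta u + q, u(0,t) = u(1,t) = 0.
    assert 1 < alpha <= 2 and 0 < beta <= 1 and N >= 2
    dt, n_col = T / M, N - 1  # N + 1 - ceil(alpha) collocation points
    pts = [math.cos(k * math.pi / (2 * n_col + 1)) ** 2 for k in range(1, n_col + 1)]
    idx = range(N + 1)
    B = [[shifted_w(i, x) for i in idx] for x in pts]
    L = [[gam * caputo_w(i, alpha, x) - mu * caputo_w(i, beta, x) for i in idx] for x in pts]
    bc = [[shifted_w(i, 0.0) for i in idx], [shifted_w(i, 1.0) for i in idx]]
    coef = solve_linear(B + bc, [u0(x) for x in pts] + [0.0, 0.0])
    lhs = [[B[r][i] - 0.5 * dt * L[r][i] for i in idx] for r in range(n_col)] + bc
    for j in range(1, M + 1):
        # Crank-Nicolson: average the spatial operator and source over t_{j-1}, t_j.
        rhs = [sum((B[r][i] + 0.5 * dt * L[r][i]) * coef[i] for i in idx)
               + 0.5 * dt * (q(x, j * dt) + q(x, (j - 1) * dt)) for r, x in enumerate(pts)]
        coef = solve_linear(lhs, rhs + [0.0, 0.0])
    return lambda x: sum(c * shifted_w(i, x) for i, c in enumerate(coef))


def manufactured_error(alpha, beta, M, N=3, T=1.0):
    # Exact solution u = (x^2 - x^3) cos t (as in Example 3), gam = mu = 1 assumed.
    def dcap(w, x):  # Caputo derivative of x^2 - x^3 of order w <= 2
        return 2 / math.gamma(3 - w) * x ** (2 - w) - 6 / math.gamma(4 - w) * x ** (3 - w)

    def q(x, t):
        return -(x**2 - x**3) * math.sin(t) + math.cos(t) * (dcap(beta, x) - dcap(alpha, x))

    u = solve_sfade(alpha, beta, 1.0, 1.0, q, lambda x: x**2 - x**3, N, M, T)
    return max(abs(u(k / 20) - ((k / 20)**2 - (k / 20)**3) * math.cos(T)) for k in range(21))
```

With N = 3 the cubic exact solution lies in the trial space, so `manufactured_error` isolates the time-discretization error; halving \(\delta \tau \) (for example M = 20 to M = 40) should reduce it by roughly a factor of four, in line with second order in time. The hand-written solver is used only because the systems are tiny; for larger N a library routine such as `numpy.linalg.solve` would be the natural choice.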

4 Error analysis

This section examines the unconditional stability and convergence of the time-discrete scheme. Assume that \(\varOmega \) is a bounded open domain in \({\mathbb {R}}^{2}\). First, we introduce the functional spaces endowed with the standard norms and inner product

which induces the norm \(\Vert u(x)\Vert _{2}=\big \langle u(x), u(x)\big \rangle ^{\frac{1}{2}}\) and let us define

Relation (16) can now be rearranged as

We recall the following lemmas (Ervin et al. 2007).

Lemma 1

Assume that \(1< \alpha <2\). Then for any \(u, \nu \in H^{\frac{\alpha }{2}}(\varOmega )\) it holds that

Lemma 2

Let \(\alpha > 0\) be given. Then it follows that

Now, we need to prove the following lemma:

Lemma 3

For \(1<\alpha \le 2\) and the functions \( g(x), {}_{a}{{\mathcal {D}}}_{x}^{\alpha }g(x)\in H^{\alpha }(\varOmega )\), there exists a sufficiently small \(\delta \tau \) such that

Proof

By the properties of the inner product, we arrive at

From Lemma 2 and knowing that \(1<\alpha \le 2\), we obtain

thus, by choosing a small enough \(\delta \tau \) that guarantees

Finally, we obtain

which proves the lemma. \(\square \)

Lemma 4

If \(U^{k}\in H^{1}(\varOmega ), k=1, 2, \ldots , M,\) is the solution of the time-discretized scheme (21) and \(U^{0}\) is the initial condition, then

Proof

We prove the result by mathematical induction. First, for \(k=1\), we have

Taking the inner product of Eq. (23) with \(U^{1}\), we obtain

From Lemmas 1 and 2, it is clear that

By Lemma 3 and the Cauchy–Schwarz inequality, we have

This relation can be rewritten as

Now suppose that the result holds for all \(j=1, 2, \ldots , k-1\):

Taking the inner product of Eq. (23) with \(U^{k}\), we have

From the Cauchy–Schwarz inequality and \(U^{k}\in H^{1}(\varOmega )\), we obtain the following inequality:

Therefore, Lemma 4 is proven by induction on k. \(\square \)

The next theorem establishes the stability of scheme (16).

Theorem 1

The time semi-discretization (16) is unconditionally stable.

Proof

Let \({\widehat{U}}_{r}^{j}, j=1, 2, \ldots , M,\) be an approximate solution of (16) with the initial condition \({\widehat{U}}_{r}^{0}=u(x, 0)\). Then the error \(\varepsilon ^{j}=U_{r}^{j}-{\widehat{U}}_{r}^{j}\) satisfies

Using this equation and Lemma 4, it follows that

This shows that the scheme (16) is unconditionally stable. \(\square \)

Theorem 2

Let \(\varepsilon ^{j}=u(x, t_{j})-U^{j}, ~j=1, 2, \ldots , M,\) be the errors associated with Eq. (16). Then

where \(C_{x} >0\) is a constant independent of \(\delta \tau \).

Proof

First, from Eq. (16) we obtain the weak form

for \(j=1, 2, \ldots , M\).

Based on Lemmas 1–3 and the Cauchy–Schwarz inequality, we conclude that

Hence, we get

Summing over j from 1 to M, we obtain

From the above relation, we can conclude

Since \(\Vert \varepsilon ^{0}\Vert =0\) and \(\delta \tau =\frac{T}{M}\), we have

where \(C_{x}=CT\). This completes the proof of Theorem 2. \(\square \)
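The arithmetic behind \(C_{x}=CT\) can be spelled out. Assuming (since the intermediate inequalities are not shown here) that the summed bound has the form \(\Vert \varepsilon ^{M}\Vert \le \Vert \varepsilon ^{0}\Vert +\sum _{j=1}^{M}C\,\delta \tau ^{3}\), the last step of the proof amounts to:

```latex
\Vert \varepsilon ^{M}\Vert \le \Vert \varepsilon ^{0}\Vert + \sum _{j=1}^{M} C\,\delta \tau ^{3}
  = 0 + M\,C\,\delta \tau ^{3}
  = \frac{T}{\delta \tau }\,C\,\delta \tau ^{3}
  = C\,T\,\delta \tau ^{2}
  = C_{x}\,\delta \tau ^{2}.
```

That is, a local contribution of order \(\delta \tau ^{3}\) per step accumulates over \(M=T/\delta \tau \) steps into a global error of order \(\delta \tau ^{2}\).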

5 Numerical examples

In this section, we present numerical results of the proposed method for three test problems. We test its accuracy for different values of N and M at several final times T. In addition, the computational order (denoted by \(C_{\delta \tau } \)) is computed by the formula

where \(E_{1}\) and \(E_{2}\) are the errors corresponding to grids with time steps \({\delta \tau }_{1}\) and \({\delta \tau }_{2}\), respectively.
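Assuming the usual definition \(C_{\delta \tau }=\log (E_{1}/E_{2})/\log ({\delta \tau }_{1}/{\delta \tau }_{2})\), the computational order can be obtained from a sequence of runs as sketched below. The error values in the usage example are synthetic (chosen to behave like \(3\,\delta \tau ^{2}\)), not results from the paper, and the function names are hypothetical.

```python
import math


def computational_order(e1, e2, dt1, dt2):
    """Observed order log(E1/E2) / log(dt1/dt2) from two runs with steps dt1, dt2."""
    return math.log(e1 / e2) / math.log(dt1 / dt2)


def orders_from_sequence(steps, errors):
    """Observed orders between consecutive refinements (steps and errors aligned)."""
    return [computational_order(errors[k], errors[k + 1], steps[k], steps[k + 1])
            for k in range(len(errors) - 1)]


# Synthetic errors behaving like 3 * dt**2, so every observed order is about 2.
steps = [0.1, 0.05, 0.025]
errors = [3 * dt**2 for dt in steps]
print(orders_from_sequence(steps, errors))
```

Reporting the order between each pair of consecutive refinements, rather than a single fitted slope, makes it easy to see when the asymptotic regime has been reached.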

Example 1

Consider the following SFADE

with boundary and initial conditions

The analytical solution of this problem is \(u(x, t)=e^{-2t}(x^{\alpha }-x^{\beta })\).

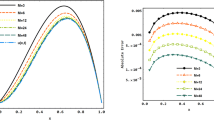

Tables 2–6 list the results for Example 1 for various values of M and N and different final times T. Tables 2 and 3 compare the obtained results with those of the techniques described in (Khader and Sweilam 2014; Saw and Kumar 2018, 2019) at \(T=1\) and \(T=2\); the proposed method is more accurate than these techniques. Table 4 lists the maximum norm error \(L_{\infty }\) of the proposed method for \(N=5\) and various values of M at \(T=1\). Table 5 shows that the computational order of the method in the time variable is approximately \({\mathcal {O}}( \delta \tau ^{2})\), in agreement with the theoretical results. Table 6 reports the computational order at the final times \( T \in \{2, 10\}\). Figure 1 shows the numerical solution and the maximum norm error \(L_{\infty }\) with \(N=7\) and \(M=400\) at \(T=1\). Figure 2 shows the maximum norm error \(L_{\infty }\) for \(N=3\) and \(N=7\) and various values of M at \(T=2\). Figure 3a shows the maximum norm error \(L_{\infty }\) and the \(L_{2}\)-norm error for \(M=400\) and various values of N at \(T=1\). Figure 3b plots the maximum norm error \(L_{\infty }\) for \(N=5\) and various values of M at \(T=1\). Figure 4 shows the maximum norm errors \(L_{\infty }\) for different values of \(\{\alpha ,\beta \}\) at \(T=1\).

Fig. 1 The approximate solution (left panel) and the maximum norm error \(L_\infty \) (right panel) with \(M=400\) and \(N=7\) at \(T=1\) for Example 1

Fig. 2 The maximum error norms \(L_\infty \) with \(\{\alpha =1.9, \beta =1 \}\) and different values of N and M at \(T=2\) for Example 1

The behavior of the approximate solutions for \(\alpha \in \{1.5,1.6,1.7,1.8,1.9\}\), \(\beta =1\) (left panel) and \(\beta \in \{0.5,0.6,0.7,0.8,0.9\}\), \(\alpha =1.5\) (right panel) for Example 1

Example 2

Consider the following SFADE

with boundary and initial conditions

such that the analytical solution is \(u(x, t)=xt(x-1)(t-1)\).

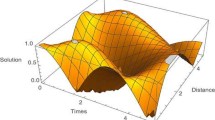

Table 7 compares the numerical and analytical solutions for \(T \in \{0.3, 0.6, 0.9\}\), showing that the method converges rapidly to the analytical solution. Figure 5 plots the maximum norm error for different values of \(\{\alpha ,\beta \}\) at \(T=10\).

The approximate solutions of Example 2 for \(\beta \in \{0.5,0.6,0.7,0.8,0.9\}\), \(\alpha =1\) (left panel) and \(\alpha \in \{1.1,1.2,1.3,1.4\}\), \(\beta =1\) (right panel) at final time \(T=10\)

Example 3

Consider the following SFADE

with boundary and initial conditions

whose analytical solution is \(u(x, t)=(x^{2}-x^{3})\cos (t)\).

Table 8 reports the computational order at the final times \( T \in \{1,2\}\), which agrees with the theoretical results.

6 Conclusion

This paper proposed a new method for solving the SFADE. The numerical algorithm involves two steps. First, a compact finite difference scheme is applied to discretize the time derivative. Second, the FKSCP are used to approximate the space fractional derivatives. The error analysis of the proposed method was carried out in the \(L^{2}\) space. Three illustrative examples were provided to demonstrate the applicability and validity of the new scheme, and the numerical results agree well with the theoretical analysis.

References

Aghdam YE, Mesgarani H, Javidi M, Nikan O (2020) A computational approach for the space-time fractional advection-diffusion equation arising in contaminant transport through porous media. Eng Comput. https://doi.org/10.1007/s00366-020-01021-y

Colla L, Fedele L, Buschmann M (2015) Laminar mixed convection of TiO2-water nanofluid in horizontal uniformly heated pipe flow. Int J Therm Sci 97:26–40

Ervin VJ, Roop JP (2007) Variational solution of fractional advection dispersion equations on bounded domains in 1d. Numer Methods Partial Differ Equ Int J 23(2):256–281

Ervin VJ, Heuer N, Roop JP (2007) Numerical approximation of a time dependent, nonlinear, space-fractional diffusion equation. SIAM J Numer Anal 45(2):572–591

Farahani A, Taghaddos H, Shekarchi M (2015) Prediction of long-term chloride diffusion in silica fume concrete in a marine environment. Cem Concr Compos 59:10–17

Golbabai A, Nikan O, Nikazad T (2019a) Numerical analysis of time fractional Black–Scholes European option pricing model arising in financial market. Comput Appl Math 38(4):173

Golbabai A, Nikan O, Nikazad T (2019b) Numerical investigation of the time fractional mobile-immobile advection-dispersion model arising from solute transport in porous media. Int J Appl Comput Math 5(3):50

Hernandez J, Crespo A, Duijm N (1995) Numerical modeling of turbulent jet diffusion flames in the atmospheric surface layer. Combust Flame 101(1–2):113–131

Hu G, Zhao L, Wu X, Li R, Wu T, Xie C, Qiao Y, Shi J, Li W, Cheng G (2016) New Fourier-series-based analytical solution to the conduction–convection equation to calculate soil temperature, determine soil thermal properties, or estimate water flux. Int J Heat Mass Transfer 95:815–823

Khader M, Sweilam N (2014) Approximate solutions for the fractional advection-dispersion equation using Legendre pseudo-spectral method. Comput Appl Math 33(3):739–750

Liu F, Anh V, Turner I (2004) Numerical solution of the space fractional Fokker–Planck equation. J Comput Appl Math 166(1):209–219

Liu L, Zheng L, Liu F, Zhang X (2016) Anomalous convection diffusion and wave coupling transport of cells on comb frame with fractional Cattaneo–Christov flux. Commun Nonlinear Sci Numer Simul 38:45–58

Mahmoudi M, Ghovatmand M, Jafari H (2019) An adaptive collocation method for solving delay fractional differential equations. Int J Appl Comput Math 5(6):157

Milici C, Drăgănescu G, Machado JT (2018) Introduction to fractional differential equations, vol 25. Springer, Berlin

Nikan O, Machado JT, Golbabai A (2020) Numerical solution of time-fractional fourth-order reaction-diffusion model arising in composite environments. Appl Math Model 89:819–836

Oldham KB, Spanier J (1974) The fractional calculus. Mathematics in Science and Engineering, vol 111. Academic Press, New York

Ortigueira MD, Machado JT (2020) On the properties of some operators under the perspective of fractional system theory. Commun Nonlinear Sci Numer Simul 82:105022

Podlubny I (1998) Fractional differential equations: an introduction to fractional derivatives, fractional differential equations, to methods of their solution and some of their applications, vol 198. Elsevier, Amsterdam

Rigi F, Tajadodi H (2019) Numerical approach of fractional Abel differential equation by Genocchi polynomials. Int J Appl Comput Math 5(5):134

Safdari H, Aghdam YE, Gómez-Aguilar J (2020a) Shifted Chebyshev collocation of the fourth kind with convergence analysis for the space-time fractional advection–diffusion equation. Eng Comput. https://doi.org/10.1007/s00366-020-01092-x

Safdari H, Mesgarani H, Javidi M, Aghdam YE (2020b) Convergence analysis of the space fractional-order diffusion equation based on the compact finite difference scheme. Comput Appl Math 39(2):1–15

Saw V, Kumar S (2018) Fourth kind shifted Chebyshev polynomials for solving space fractional order advection-dispersion equation based on collocation method and finite difference approximation. Int J Appl Comput Math 4(3):82

Saw V, Kumar S (2019) Second kind Chebyshev polynomials for solving space fractional advection-dispersion equation using collocation method. Iran J Sci Technol Trans A Sci 43(3):1027–1037

Su L, Wang W, Xu Q (2010) Finite difference methods for fractional dispersion equations. Appl Math Comput 216(11):3329–3334

Tenreiro Machado JA, Lopes AM (2019) Fractional-order kinematic analysis of biomechanical inspired manipulators. J Vibrat Control: 102–111

Toubaei S, Garshasbi M, Reihani P (2019) Boundary functions determination in an inverse time fractional heat conduction problem. Comput Appl Math 38(4):190

Zaib A, Shafie S (2014) Thermal diffusion and diffusion thermo effects on unsteady MHD free convection flow over a stretching surface considering Joule heating and viscous dissipation with thermal stratification, chemical reaction and Hall current. J Franklin Inst 351(3):1268–1287

Acknowledgements

The authors are thankful to the respected reviewers for their valuable comments and constructive suggestions towards the improvement of the original paper. The authors are also very grateful to the Associate Editor, Professor Vasily E. Tarasov for managing the review process.


Additional information

Communicated by Vasily E. Tarasov.


About this article

Cite this article

Mesgarani, H., Rashidinia, J., Aghdam, Y.E. et al. Numerical treatment of the space fractional advection–dispersion model arising in groundwater hydrology. Comp. Appl. Math. 40, 22 (2021). https://doi.org/10.1007/s40314-020-01410-5


DOI: https://doi.org/10.1007/s40314-020-01410-5

Keywords

- Space fractional advection–dispersion equation

- Compact finite difference

- Chebyshev collocation method

- Error analysis