Abstract

This paper presents a method for solving fuzzy linear systems whose coefficient matrix A is an \(n\times n\) real matrix, based on the block structure of the Core inverse; the Hartwig–Spindelböck decomposition is used to obtain the Core inverse of A. The aim of this paper is twofold. First, we obtain a strong fuzzy solution of fuzzy linear systems and, using the Core inverse of the coefficient matrix A, derive a necessary and sufficient condition for the existence of a strong fuzzy solution. Second, we derive general strong fuzzy solutions of fuzzy linear systems and establish an algorithm that obtains them by means of the Core inverse. Finally, some examples are given to illustrate the validity of the proposed method.

1 Introduction

Linear systems play an important role in various fields, such as information acquisition, optimization control, physics, statistics, engineering, economics, and even social science.

Therefore, it is meaningful to study general strong fuzzy solutions of fuzzy linear systems (FLS) and to support these disciplines with computational algorithms. Friedman et al. (1998) proposed, via an embedding method, a general model for solving an FLS whose coefficient matrix is crisp and whose right-hand side is an arbitrary fuzzy number vector. Building on this result, Allahviranloo (2004), Allahviranloo and Ghanbari (2012), and Lodwick and Dubois (2015) introduced various methods for solving the FLS. Abbasbandy et al. (2008) introduced an interesting approach to solving the FLS using generalized inverses. However, none of the aforementioned methods yields the general solution of the FLS. Mihailović et al. (2018a, b) proposed two different methods for obtaining all solutions of the FLS using the Moore–Penrose inverse and the group inverse, and brought to light the importance of the core inverse of the coefficient matrix in solving the FLS.

Friedman et al. (1998) presented a method for solving square FLS, in which a solution vector is called either a strong fuzzy solution or a weak fuzzy solution. However, some authors, for example Allahviranloo (2003) and Lodwick and Dubois (2015), do not regard weak fuzzy solutions as solutions of the FLS. In addition, it is known that the sufficient condition for the existence of a unique solution of a square FLS is not a necessary condition (Allahviranloo 2003; Friedman et al. 2003), and we try to clarify this point. Based on the method of Friedman et al. (1998), Mihailović et al. (2018a, b) used the unique block representations of the Moore–Penrose inverse and the group inverse of the coefficient matrix to obtain the general solution of the FLS, and established algorithms for obtaining the general solution by means of the Moore–Penrose inverse and a {1}-inverse, respectively. However, in [Th. 5, 12] and [Th. 6, 13], the authors assumed that the Moore–Penrose inverse and the {1}-inverse are non-negative in order to obtain a strong fuzzy solution. On the other hand, matrices of index one are called group matrices [P. 141, 7] or Core matrices [P. 47, 14]. Baksalary and Trenkler (2010) introduced the Core inverse and studied its properties together with a special partial order. Next, Wang (2016) gave the Core-EP decomposition for studying the Core-EP inverse, a generalization of the Core inverse, and its applications. It is well known that the Core inverse is unique and can be used to solve general linear equations; see Baksalary and Trenkler (2010) and Ben-Israel and Greville (2003). The authors in Mihailović et al. (2018a, b) proposed a necessary and sufficient condition for the existence of a solution of every FLS of Friedman type, and established the general solution of a square FLS in terms of an arbitrary {1}-inverse of its coefficient matrix. However, they also stated that the first important step is finding one strong fuzzy number vector, which serves as the starting vector for determining all strong fuzzy number vector solutions of the FLS; see [Th. 5, 12]. Therefore, our goal is to take a closer look at square FLS and to investigate the block structure of the unique Core inverse.

Inspired by the above discussion, in this paper we state a necessary and sufficient condition for the existence of \(S^{\{1\}}\ge 0\), where \(S^{\{1\}}\) denotes a {1}-inverse of the coefficient matrix S. Next, we obtain a necessary and sufficient condition for \(S^{{\textcircled {\tiny \#}}}\ge 0\), where \(S^{{\textcircled {\tiny \#}}}\) denotes the core inverse of the coefficient matrix S, which yields a strong fuzzy number solution of the FLS. Moreover, the core inverse of the coefficient matrix A is employed to obtain a necessary and sufficient condition for the existence of a solution of the FLS. Meanwhile, all strong fuzzy number solutions of the FLS are given by the core inverse of its coefficient matrix, and an algorithm for computing all strong fuzzy number solutions of the FLS is derived. Finally, some examples are given to illustrate the validity of the algorithm.

This paper is divided into five sections. In Sect. 2, we introduce some characteristics of generalized inverses and fuzzy numbers. In Sect. 3, a method for finding a strong fuzzy solution of the FLS based on computing the core inverse \(S^{{\textcircled {\tiny \#}}}\) is given, where S, the coefficient matrix of the embedded system, is a real \(2n\times 2n\) matrix. In Sect. 4, another method for finding the general solution of the FLS based on computing the core inverse \(A^{{\textcircled {\tiny \#}}}\) is given, where A, the coefficient matrix of the FLS, is a real matrix. Next, an algorithm for solving the FLS is derived, and we use some examples to explain the new algorithm. In Sect. 5, we give a summary of this work.

2 Preliminary

This section has two parts. First, we review the definitions of fuzzy sets and fuzzy numbers and the notation commonly used for the FLS. Second, we introduce generalized inverses and related notation.

2.1 The concept of the FLS

Definition 2.1

(Mihailović et al. 2018b, Definition 1) A fuzzy set \({\tilde{z}}\) with a membership function \({\tilde{z}}:{\mathbb {R}}\rightarrow [0,1]\) satisfying the following three conditions is called a fuzzy number.

- 1.

\({\tilde{z}}(x)=0\) outside some interval [a, b].

- 2.

\({\tilde{z}}\) is an upper semicontinuous function.

- 3.

There are real numbers c and d with \(a\le c\le d\le b\) such that:

- 3.1.

\({\tilde{z}}(x)\) is monotonically increasing on [a, c],

- 3.2.

\({\tilde{z}}(x)\) is monotonically decreasing on [d, b],

- 3.3.

\({\tilde{z}}(x)=1\), \(c\le x\le d\).

The set of all fuzzy numbers is denoted by \(\xi \). For each \(\alpha \in [0,1]\), the \(\alpha \)-cut of a fuzzy number \({\tilde{z}}\) is a crisp set, namely a bounded closed interval, denoted by \([{\tilde{z}}]_{\alpha }=[\underline{z}(\alpha ),{\bar{z}}(\alpha )]\), where \({\bar{z}}(\alpha )=\mathrm{sup}\{x\in {\mathbb {R}}:{\tilde{z}}(x)\ge \alpha \}\) and \( \underline{z}(\alpha )=\mathrm{inf}\{x\in {\mathbb {R}}:{\tilde{z}}(x)\ge \alpha \}\). Using the lower and upper branches \(\underline{z}\) and \({\bar{z}}\), a fuzzy number \({\tilde{z}}\) can be equivalently defined as a pair of functions \((\underline{z}, {\bar{z}})\), where \(\underline{z}:[0,1]\rightarrow {{\mathbb {R}}}\) is a bounded, non-decreasing, left-continuous function, \({\bar{z}}:[0,1] \rightarrow {\mathbb {R}}\) is a bounded, non-increasing, left-continuous function, and \(\underline{z}(\alpha )\le {\bar{z}}(\alpha )\) for each \(\alpha \in [0,1]\).
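To make the parametric form concrete, the following Python sketch evaluates both branches of a triangular fuzzy number with support [a, b] and peak c, i.e. the special case c = d of Definition 2.1. The triangular shape and the helper name `triangular_cut` are illustrative assumptions, not part of the definition.

```python
import numpy as np

def triangular_cut(a, c, b, alpha):
    """Return the alpha-cut (lower, upper) of the triangular fuzzy number (a, c, b).

    Requires a <= c <= b. The lower branch a + (c - a) * alpha is non-decreasing,
    the upper branch b - (b - c) * alpha is non-increasing, and both equal c
    when alpha = 1.
    """
    lower = a + (c - a) * alpha
    upper = b - (b - c) * alpha
    return lower, upper

alphas = np.linspace(0.0, 1.0, 11)
lo, up = triangular_cut(1.0, 2.0, 4.0, alphas)
assert np.all(np.diff(lo) >= 0)   # lower branch is non-decreasing
assert np.all(np.diff(up) <= 0)   # upper branch is non-increasing
assert np.all(lo <= up)           # every alpha-cut is a proper interval
print(triangular_cut(1.0, 2.0, 4.0, 0.5))  # (1.5, 3.0)
```

Both branches are affine in α here, so they are trivially bounded and left-continuous; other shapes only need to preserve the monotonicity and the ordering of the endpoints.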

Definition 2.2

(Mihailović et al. 2018b, Definition 3) For arbitrary fuzzy numbers \({\tilde{z}}=(\underline{z}(\alpha ),{\bar{z}}(\alpha ))\) and \({\tilde{u}} =(\underline{u}(\alpha ), {\bar{u}}(\alpha ))\), \(\alpha \in [0,1]\), and a real number k, the addition and scalar multiplication of fuzzy numbers are defined as follows.

- 1.

\([{\tilde{u}}+{\tilde{z}}]_\alpha =[\underline{z}(\alpha )+\underline{u}(\alpha ), {\bar{z}}(\alpha )+{\bar{u}}(\alpha )]\),

- 2.

\( [k{\tilde{z}}]_\alpha =\left\{ \begin{array}{rcl} {}[k\underline{z}(\alpha ),k{\bar{z}}(\alpha )], &{} &{} k\ge 0,\\ {}[k{\bar{z}}(\alpha ),k\underline{z}(\alpha )], &{} &{} k<0, \end{array} \right. \)

- 3.

\({\tilde{z}}={\tilde{u}}\Leftrightarrow \underline{z}(\alpha )=\underline{u}(\alpha )\) and \({\bar{z}}(\alpha )={\bar{u}}(\alpha )\) for all \(\alpha \in [0,1].\)
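Since Definition 2.2 acts on α-cuts, fuzzy arithmetic reduces to interval arithmetic at each fixed α. A minimal sketch, assuming an α-cut is stored as a `(lower, upper)` pair (a representation and function names chosen here only for illustration):

```python
def add_cuts(z, u):
    """[z + u]_alpha: add lower ends and upper ends (Definition 2.2, item 1)."""
    return (z[0] + u[0], z[1] + u[1])

def scale_cut(k, z):
    """[k z]_alpha: multiply both ends by k, swapping them when k < 0 (item 2)."""
    if k >= 0:
        return (k * z[0], k * z[1])
    return (k * z[1], k * z[0])

# alpha-cuts of two fuzzy numbers at one fixed alpha (illustrative values)
z = (1.5, 3.0)
u = (-1.0, 0.5)
print(add_cuts(z, u))       # (0.5, 3.5)
print(scale_cut(-2.0, z))   # (-6.0, -3.0)
```

The swap for k < 0 is what keeps the lower end below the upper end; without it, negative coefficients would produce improper intervals.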

Definition 2.3

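(Friedman et al. 1998) The fuzzy linear matrix system \(A{\tilde{X}}={\tilde{Y}}\) is as follows:

\[ \begin{array}{c} a_{11}{\tilde{x}}_1+a_{12}{\tilde{x}}_2+\cdots +a_{1n}{\tilde{x}}_n={\tilde{y}}_1,\\ a_{21}{\tilde{x}}_1+a_{22}{\tilde{x}}_2+\cdots +a_{2n}{\tilde{x}}_n={\tilde{y}}_2,\\ \vdots \\ a_{n1}{\tilde{x}}_1+a_{n2}{\tilde{x}}_2+\cdots +a_{nn}{\tilde{x}}_n={\tilde{y}}_n, \end{array} \tag{2.1} \]

where \(A=[a_{ij}]\) is a real \(n\times n\) matrix and \({\tilde{x}}_j, {\tilde{y}}_i\in \xi \), \(1\le i,j\le n\). A system of this form is called an FLS. Let \({\tilde{Z}}=({\tilde{z}}_1, {\tilde{z}}_2, \ldots ,{\tilde{z}}_n)^{*}\), with \([{\tilde{z}}_j]_\alpha =[\underline{z}_j(\alpha ), {\bar{z}}_j(\alpha )]\), \(\alpha \in [0,1]\), be a solution of the FLS (2.1), where \((\cdot )^{*}\) denotes transposition. We have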

Then,

where \(a^{+}_{ij}=a_{ij}\vee 0\) and \(a^{-}_{ij}=-a_{ij}\vee 0\). Then, we have

where \([{\tilde{X}}]_{\alpha }=([{\tilde{x}}_1]_{\alpha },[{\tilde{x}}_2]_{\alpha }, \ldots ,[{\tilde{x}}_n]_{\alpha })^{*}\) and \([{\tilde{Y}}]_{\alpha }=([{\tilde{y}}_1]_{\alpha },[{\tilde{y}}_2]_{\alpha }, \ldots ,[{\tilde{y}}_n]_{\alpha })^{*}\), \(\alpha \in [0,1]\). The matrix form of this family of interval linear systems is \(A[{\tilde{X}}]_{\alpha }=[{\tilde{Y}}]_{\alpha }\), and its solution is an interval-valued vector \([{\tilde{Z}}]_{\alpha }\) whose components are intervals \([{\tilde{z}}_j]_\alpha =[\underline{z}_j(\alpha ), {\bar{z}}_j(\alpha )]\) with \(\underline{z}_j(\alpha )\le {\bar{z}}_j(\alpha )\) for each \(\alpha \in [0,1]\), such that \((\underline{z}_j, {\bar{z}}_j)\) determines the parametric form of a fuzzy number, for \(1\le j\le n\).

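We have

\[ SX(\alpha )=Y(\alpha ), \tag{2.2} \]

where

\[ X(\alpha )=\left[ \begin{array}{c} \underline{X}(\alpha )\\ -{\bar{X}}(\alpha ) \end{array}\right] \]

and

\[ Y(\alpha )=\left[ \begin{array}{c} \underline{Y}(\alpha )\\ -{\bar{Y}}(\alpha ) \end{array}\right] . \]

The matrix S is as follows:

\[ S=\left[ \begin{array}{cc} D & E\\ E & D \end{array}\right] , \tag{2.3} \]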

where D and E are \(n\times n\) matrices, \(D=[a_{ij}^{+}]\) and \(E=[a_{ij}^{-}]\). According to Friedman et al. (1998), if S is nonsingular and \(S^{-1}\) is non-negative, then \(X^0=S^{-1}Y\) is the solution of (2.2) and \({\tilde{X}}^0\) is a strong fuzzy number solution of the FLS (2.1). Next, according to Allahviranloo (2003) and Friedman et al. (1998, 2003), sufficient conditions for the FLS (2.1) to have a strong fuzzy number solution can be obtained. However, a solution vector of (2.2) need not be the representative vector of any fuzzy number vector (Table 1). On the other hand, if there exists a solution vector of (2.2) that is the representative vector of a fuzzy number vector, then that fuzzy number vector is a solution of the FLS (2.1). If such a solution exists, we say that the FLS (2.1) is consistent. The matrix S will be called the matrix associated with the FLS (2.1).
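The embedding can be checked numerically. The NumPy sketch below builds S from A, evaluates one α-cut of the FLS (2.1) with interval arithmetic, and verifies that the representative vectors \([\underline{X};-{\bar{X}}]\) and \([\underline{Y};-{\bar{Y}}]\) satisfy \(SX(\alpha )=Y(\alpha )\). The function names and the sample matrix are illustrative assumptions.

```python
import numpy as np

def associated_matrix(A):
    """S = [[D, E], [E, D]] with D = A^+ = max(A, 0) and E = A^- = max(-A, 0)."""
    A = np.asarray(A, dtype=float)
    D, E = np.maximum(A, 0.0), np.maximum(-A, 0.0)
    return np.block([[D, E], [E, D]])

def interval_matvec(A, x_lo, x_up):
    """Endpoints of the interval vector A [x_lo, x_up] (entrywise x_lo <= x_up)."""
    D, E = np.maximum(A, 0.0), np.maximum(-A, 0.0)
    return D @ x_lo - E @ x_up, D @ x_up - E @ x_lo

# Illustrative data: one alpha-cut of a crisp-coefficient FLS.
A = np.array([[1.0, -1.0], [1.0, 3.0]])
x_lo, x_up = np.array([0.0, 1.0]), np.array([2.0, 1.5])
y_lo, y_up = interval_matvec(A, x_lo, x_up)   # y_lo = [-1.5, 3.0], y_up = [1.0, 6.5]

S = associated_matrix(A)
X = np.concatenate([x_lo, -x_up])             # representative vector [X_lower; -X_upper]
Y = np.concatenate([y_lo, -y_up])             # representative vector [Y_lower; -Y_upper]
assert np.allclose(S @ X, Y)                  # the embedded crisp system (2.2) holds
```

The positive part D acts on matching endpoints and the negative part E on opposite endpoints, which is exactly why the second block row of S repeats D and E in swapped positions.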

2.2 The block representation of the core inverse

In this subsection, we review the characteristics of the Core inverse and the Hartwig–Spindelböck decomposition. Many characteristics of generalized inverses can be found in Wang (2016), Wang (2011), and Wang and Liu (2016). Let \({\mathbb {R}}_{m\times n}\), rk(P) and \(I_{n}\) denote the set of \( m\times n\) real matrices, the rank of P, and the identity matrix of order n, respectively. If a singular matrix P satisfies \(rk(P^2)=rk(P)\), its index is one; if P is nonsingular, its index is zero. Denote

\[ {\mathbb {R}}_{n}^{CM}=\{P\in {\mathbb {R}}_{n\times n}: rk(P^2)=rk(P)\}. \]

According to Hartwig and Spindelböck (1983), every matrix \(A\in {\mathbb {R}}_{n\times n}\) of rank r has a decomposition of the following form (called the Hartwig–Spindelböck decomposition):

\[ A=U\left[ \begin{array}{cc} \Sigma K & \Sigma L\\ 0 & 0 \end{array}\right] U^{*}, \]

where \(U\in {\mathbb {R}}_{n\times n}\) is unitary, \(\Sigma =\mathrm{diag}(\alpha _{1},\alpha _{2},\ldots ,\alpha _{r})\) is the diagonal matrix of the nonzero singular values of A, \(\alpha _{1}\ge \alpha _{2}\ge \ldots \ge \alpha _{r}>0\), and \(K\in {\mathbb {R}}_{r\times r}\), \(L\in {\mathbb {R}}_{r\times (n-r)}\) satisfy (see Baksalary and Trenkler 2010)

\[ KK^{*}+LL^{*}=I_{r}. \]

The following matrix equations for a matrix \(P\in {\mathbb {R}}_{m\times n}\) will be used:

Definition 2.4

(Berman and Plemmons 1994; Wang 2016; Zhou et al. 2012) For any \(P\in {\mathbb {R}}_{m\times n}\), let \({\mathbb {T}}\{i,j,\ldots ,h\}\) denote the set of matrices \(X\in {\mathbb {R}}_{n\times m}\) that satisfy equations \((i),(j), \ldots , (h)\) among equations (1)–(5) and \((2)^\prime \). A matrix \(X\in {\mathbb {T}}\{i,j,\ldots ,h\}\) is called an \(\{i,j,\ldots ,h\}\)-inverse of P and is denoted by \(P^{\{i,j,\ldots ,h\}}\).

- (i):

If the matrix \(X\in {\mathbb {R}}_{n\times m}\) satisfies (1)–(4), then it is called the Moore–Penrose inverse of \(P\in {\mathbb {R}}_{m\times n}\) and is denoted by \(P^\dag \) or \(P^{\{1,2,3,4\}}\).

- (ii):

If the matrix \(X\in {\mathbb {R}}_{n\times n}\) satisfies (1), (2) and (5), then it is called the group inverse of \(P\in {\mathbb {R}}_{n}^{CM}\) and is denoted by \(P^\sharp \) or \(P^{\{1,2,5\}}\).

- (iii):

If the matrix \(X\in {\mathbb {R}}_{n\times n}\) satisfies (1), \((2)^\prime \) and (3), then it is called the core inverse of \(P\in {\mathbb {R}}_{n}^{CM}\) and is denoted by \(P^{{\textcircled {\tiny \#}}}\) or \(P^{\{1,2^\prime ,3\}}\).

For a given \(P=[p_{ij}]\in {\mathbb {R}}_{m\times n}\), we denote by |P| the matrix whose entries are the absolute values of the entries of P, i.e. \(|P|=[|p_{ij}|]\in {\mathbb {R}}_{m\times n}\). We say that P is a non-negative matrix if \(p_{ij}\ge 0\) for all i and j.

Lemma 2.1

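(Baksalary and Trenkler 2010; Hartwig and Spindelböck 1983) Let \(A\in {\mathbb {R}}_{n}^{CM}\) have the Hartwig–Spindelböck decomposition above. Then the core inverse \(A^{{\textcircled {\tiny \#}}}\) can be obtained as follows:

\[ A^{{\textcircled {\tiny \#}}}=U\left[ \begin{array}{cc} (\Sigma K)^{-1} & 0\\ 0 & 0 \end{array}\right] U^{*}. \]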
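The Hartwig–Spindelböck decomposition and Lemma 2.1 translate into a few lines of NumPy. The sketch below is one possible implementation, assuming real matrices (so \(U^{*}=U^{T}\)): it derives U, Σ, K and L from a singular value decomposition, as in the usual proof of the decomposition, and then applies the formula of Lemma 2.1. The function names and the tolerance `tol` are illustrative choices.

```python
import numpy as np

def hs_decomposition(A, tol=1e-10):
    """Hartwig-Spindelbock decomposition A = U [[Sigma K, Sigma L], [0, 0]] U^T.

    Built from the SVD A = U diag(s) V^T: with U = [U_r, U_0] and V_r the right
    singular vectors of the r nonzero singular values, K = V_r^T U_r and
    L = V_r^T U_0, so that K K^T + L L^T = I_r.
    """
    U, s, Vt = np.linalg.svd(A)
    r = int(np.sum(s > tol))          # numerical rank
    Sigma = np.diag(s[:r])
    K = Vt[:r, :] @ U[:, :r]
    L = Vt[:r, :] @ U[:, r:]
    return U, Sigma, K, L

def core_inverse(A, tol=1e-10):
    """Core inverse by Lemma 2.1: A^# = U [[(Sigma K)^{-1}, 0], [0, 0]] U^T."""
    A = np.asarray(A, dtype=float)
    if np.linalg.matrix_rank(A @ A) != np.linalg.matrix_rank(A):
        raise ValueError("ind(A) > 1: A is not a core matrix")
    U, Sigma, K, _ = hs_decomposition(A, tol)
    r = Sigma.shape[0]
    M = np.zeros_like(A)
    M[:r, :r] = np.linalg.inv(Sigma @ K)   # Sigma K is nonsingular when ind(A) <= 1
    return U @ M @ U.T

A = np.array([[2.0, -1.0], [0.0, 0.0]])
U, Sigma, K, L = hs_decomposition(A)
n, r = A.shape[0], Sigma.shape[0]
T = np.zeros((n, n))
T[:r, :r], T[:r, r:] = Sigma @ K, Sigma @ L
assert np.allclose(U @ T @ U.T, A)                 # decomposition reproduces A
assert np.allclose(K @ K.T + L @ L.T, np.eye(r))   # K K^* + L L^* = I_r

X = core_inverse(A)
assert np.allclose(A @ X @ A, A)         # A X A = A
assert np.allclose((A @ X).T, A @ X)     # (A X)^* = A X
assert np.allclose(X @ A @ A, A)         # X A^2 = A
```

For this singular A of index one the sketch returns \([[0.5, 0],[0, 0]]\); for a nonsingular matrix, L is empty and the formula reduces to the ordinary inverse.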

As is well known, different generalized inverses serve different purposes. Some properties and applications of the core inverse and the group inverse can be found in Ma and Li (2019), Wang and Zhang (2019), and Zhou et al. (2012).

3 Block structure of core inverse of the associated matrix S

In this section, we derive the block structure of the core inverse of S, which is crucial for our further considerations.

Lemma 3.1

(Ben-Israel and Greville 2003) A vector \(X(\alpha )\) is a solution of the consistent system (2.2) if and only if

Thus, the general solution is

where \(S^{\{1\}}\) is a \(\{1\}\)-inverse of S and O is an arbitrary vector.
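Lemma 3.1 can be checked numerically with any {1}-inverse. The sketch below (illustrative data) uses the Moore–Penrose inverse, which is in particular a {1}-inverse, on the singular matrix S associated with \(A=\left[ {{\begin{matrix} 1 & -1 \\ -1 & 1 \end{matrix}}} \right] \), and verifies that every vector of the stated form solves (2.2). The consistency test \(SS^{\{1\}}Y=Y\) used here is the standard one from Ben-Israel and Greville (2003); the helper name `general_solution` is hypothetical.

```python
import numpy as np

A = np.array([[1.0, -1.0], [-1.0, 1.0]])
D, E = np.maximum(A, 0.0), np.maximum(-A, 0.0)
S = np.block([[D, E], [E, D]])          # singular associated matrix (rank 2)
Y = np.array([-1.0, -1.0, -1.0, -1.0])  # [Y_lower; -Y_upper] at one alpha; Y lies in R(S)

G = np.linalg.pinv(S)                    # the Moore-Penrose inverse is one {1}-inverse
assert np.allclose(S @ G @ S, S)         # equation (1): S G S = S
assert np.allclose(S @ G @ Y, Y)         # consistency test S S^{(1)} Y = Y

def general_solution(O):
    """X = S^{(1)} Y + (I_{2n} - S^{(1)} S) O for an arbitrary vector O."""
    return G @ Y + (np.eye(S.shape[0]) - G @ S) @ O

rng = np.random.default_rng(0)
for _ in range(3):
    X = general_solution(rng.standard_normal(S.shape[0]))
    assert np.allclose(S @ X, Y)         # every such X solves S X = Y
```

Note that the identity in the general solution has order 2n, the size of S, and that an arbitrary X of this form need not represent a fuzzy number vector; that extra requirement is what the later sections address.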

Lemma 3.2

(Ma and Li 2019) Let \(S\in {\mathbb {R}}_{2n}^{CM}\) and \(X(\alpha )\in R(S)\), where R(S) denotes the range (column space) of S. Then \(X(\alpha )\) is the unique solution of the consistent system (2.2) if and only if

Thus, the unique solution is

Lemma 3.3

(Wang and Zhang 2019) Let \(S\in {\mathbb {R}}_{2n}^{CM}\) and \(X(\alpha )\in R(S)\). Then \(X(\alpha )\) is the unique least-squares solution of the inconsistent system (2.2) if and only if

Thus, the unique least squares solution is

By Lemma 3.1, the general solution of the consistent system (2.2) can be expressed as \(X(\alpha )=S^{\{1\}}Y(\alpha )+(I_{2n}-S^{\{1\}}S)O\). However, this paper studies the general solution of the FLS (2.1) in terms of the corresponding fuzzy number vectors. On the other hand, if the inconsistent system (2.2) satisfies \(X(\alpha )\in R(S)\), its unique least-squares solution can be expressed as \(X(\alpha )=S^{{\textcircled {\tiny \#}}}Y(\alpha )\). Next, the block structure of the core inverse of S is crucial for our further considerations.
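The least-squares statement can be checked numerically. For brevity, the sketch below computes the core inverse with the standard identities \(A^{{\textcircled {\tiny \#}}}=A^{\sharp }AA^{\dagger }\) and \(A^{\sharp }=A(A^{3})^{\dagger }A\) (both valid when ind(A) ≤ 1) instead of the Hartwig–Spindelböck decomposition; the matrix and the right-hand side are illustrative. It also contrasts \(S^{{\textcircled {\tiny \#}}}Y\) with the Moore–Penrose solution \(S^{\dagger }Y\), which solves the same least-squares problem but lies in \(R(S^{*})\) rather than in R(S).

```python
import numpy as np

def core_inverse(A):
    """Core inverse via A^# = A^sharp A A^dagger, assuming ind(A) <= 1."""
    pinv = np.linalg.pinv
    group = A @ pinv(A @ A @ A) @ A   # group inverse A^sharp = A (A^3)^dagger A
    return group @ A @ pinv(A)

A = np.array([[2.0, -1.0], [0.0, 0.0]])
D, E = np.maximum(A, 0.0), np.maximum(-A, 0.0)
S = np.block([[D, E], [E, D]])
Y = np.array([1.0, 2.0, -3.0, -4.0])      # [Y_lower; -Y_upper]; here Y is not in R(S)

X_core = core_inverse(S) @ Y              # least-squares solution lying in R(S)
X_mp = np.linalg.pinv(S) @ Y              # minimum-norm least-squares solution
P = S @ np.linalg.pinv(S)                 # orthogonal projector onto R(S)

assert not np.allclose(S @ X_core, Y)     # the system (2.2) is inconsistent here
assert np.allclose(S @ X_core, P @ Y)     # S X_core is the projection of Y onto R(S)
assert np.allclose(S @ X_mp, P @ Y)       # the same residual as the Moore-Penrose solution
assert np.allclose(P @ X_core, X_core)    # X_core lies in R(S)
```

The key fact is that \(SS^{{\textcircled {\tiny \#}}}\) is the orthogonal projector onto R(S), so \(S^{{\textcircled {\tiny \#}}}Y\) always attains the least-squares residual while staying in R(S).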

Theorem 3.4

Let \(S\in {\mathbb {R}}_{2n}^{CM}\) be the coefficient matrix of (2.2). The core inverse \(S^{{\textcircled {\tiny \#}}}\) of the associated singular matrix S is

if and only if

Proof

Let A be the coefficient matrix of the FLS (2.1) and let S be its associated matrix from (2.3). We have \(A=A^+-A^-=D-E\) and \(|A|=A^++A^-=D+E\).

Necessity: according to (1), we have

It gives

We have

According to (1), we have

Thus,

According to (2)\(^\prime \), we have

It gives

We obtain

According to (2)\(^\prime \), we have

We get

According to (3), we have

It gives

We have

According to (3), we have

It is easy to obtain

Then,

Sufficiency: using (3.2) and (3.3) we get

and

and

that is, \(H+Z\) and \(H-Z\) are the core inverses of |A| and A, respectively. We have

It is easy to know that

Then,

We have

Then,

We have

and

We get

Then,

\(\square \)
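Theorem 3.4 can be checked numerically. Writing \(S^{{\textcircled {\tiny \#}}}\) in the block form \(\left[ {{\begin{matrix} H & Z \\ Z & H \end{matrix}}} \right] \), the proof identifies \(H+Z\) and \(H-Z\) as the core inverses of |A| and A, so \(H=\frac{1}{2}(|A|^{{\textcircled {\tiny \#}}}+A^{{\textcircled {\tiny \#}}})\) and \(Z=\frac{1}{2}(|A|^{{\textcircled {\tiny \#}}}-A^{{\textcircled {\tiny \#}}})\). One way to see why: with the orthogonal matrix \(T=\frac{1}{\sqrt{2}}\left[ {{\begin{matrix} I & I \\ I & -I \end{matrix}}} \right] \) one has \(TST=\mathrm{diag}(|A|,A)\), and the core inverse is preserved under orthogonal similarity. The sketch below uses the same core-inverse identities as before and two illustrative matrices; the helper name `block_structure_holds` is hypothetical.

```python
import numpy as np

def core_inverse(A):
    """Core inverse via A^# = A^sharp A A^dagger, assuming ind(A) <= 1."""
    pinv = np.linalg.pinv
    group = A @ pinv(A @ A @ A) @ A   # group inverse A^sharp = A (A^3)^dagger A
    return group @ A @ pinv(A)

def block_structure_holds(A):
    """Compare S^# with [[H, Z], [Z, H]], H = (|A|^# + A^#)/2, Z = (|A|^# - A^#)/2."""
    D, E = np.maximum(A, 0.0), np.maximum(-A, 0.0)
    S = np.block([[D, E], [E, D]])
    core_abs, core_A = core_inverse(D + E), core_inverse(D - E)
    H = 0.5 * (core_abs + core_A)
    Z = 0.5 * (core_abs - core_A)
    return np.allclose(core_inverse(S), np.block([[H, Z], [Z, H]]))

# Two illustrative singular matrices for which A and |A| both have index one.
print(block_structure_holds(np.array([[2.0, -1.0], [0.0, 0.0]])))   # True
print(block_structure_holds(np.array([[1.0, -1.0], [-1.0, 1.0]])))  # True
```

For the second matrix Z is nonzero, so the off-diagonal blocks of \(S^{{\textcircled {\tiny \#}}}\) are genuinely exercised.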

Remark 3.1

From Theorem 3.4, we note that \(S\in {\mathbb {R}}_{2n}^{CM}\) requires \(A\in {\mathbb {R}}_{n}^{CM}\) and \(|A|\in {\mathbb {R}}_{n}^{CM}\). Meanwhile, it is well known that S is nonsingular if and only if A and |A| are both nonsingular; see Friedman et al. (1998). Furthermore, ind(S)=1 if and only if (ind(A), ind(|A|)) \(\in \) {(0, 1), (1, 0), (1, 1)}; see Mihailović et al. (2018b). Hence, if \(S\in {\mathbb {R}}_{2n}^{CM}\), each of A and |A| is either nonsingular or singular with index one.

Theorem 3.5

Let \(S\in {\mathbb {R}}_{2n}^{CM}\) be the singular coefficient matrix of the consistent system (2.2) with representative vector Y. If \(S^{{\textcircled {\tiny \#}}}\) is a non-negative matrix and admits (3.1), then \(X^0=S^{{\textcircled {\tiny \#}}}Y\) represents a solution vector of (2.2), and the corresponding fuzzy number vector \({\tilde{X}}^0\) is a solution of the FLS (2.1).

Proof

Let \(Y = \left[ {{\begin{array}{ll} \underline{Y} \\ -{\bar{Y}} \end{array} }} \right] \). According to \(X^0=S^{{\textcircled {\tiny \#}}}Y\), we have

It follows from (3.16) and (3.17) that

Then,

Since \({\bar{Y}}\ge \underline{Y}\) for each \(\alpha \in [0,1]\) and \(H+Z\) is non-negative, we have \({\bar{X}}^0\ge \underline{X}^0\). Since H and Z are non-negative, for all i the function \(\underline{x}^0_i(\alpha )\) (resp. \({\bar{x}}^0_i(\alpha )\)) is bounded, non-decreasing (resp. non-increasing) and left-continuous, being a non-negative linear combination of functions of the same type on the unit interval. Therefore, \({\tilde{X}}^0=({\tilde{x}}^0_1,\ldots ,{\tilde{x}}^0_n)^*\), where \({\tilde{x}}^0_i=(\underline{x}^0_i,~{\bar{x}}^0_i)\), \(i=1,\ldots ,n\), is a fuzzy number vector. The family of linear systems \(SX(\alpha )=Y(\alpha )\) is consistent; therefore \(X^0=S^{{\textcircled {\tiny \#}}}Y\) is one of its solutions, and by the construction of S, \({\tilde{X}}^0\) is a solution of the FLS (2.1). \(\square \)
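The steps of Theorem 3.5 can be traced numerically. In the sketch below (illustrative data; the core inverse is computed with the identities \(A^{{\textcircled {\tiny \#}}}=A^{\sharp }AA^{\dagger }\) and \(A^{\sharp }=A(A^{3})^{\dagger }A\)), \(A=\left[ {{\begin{matrix} 2 & -1 \\ 0 & 0 \end{matrix}}} \right] \), the first right-hand side is the triangular fuzzy number (1, 2, 3) and the second is the crisp number 0, so that (2.2) is consistent for every α. For this A, \(S^{{\textcircled {\tiny \#}}}\) turns out to be non-negative, and \(X^0=S^{{\textcircled {\tiny \#}}}Y\) yields proper α-cuts. The helper name `strong_solution` is hypothetical.

```python
import numpy as np

def core_inverse(A):
    """Core inverse via A^# = A^sharp A A^dagger, assuming ind(A) <= 1."""
    pinv = np.linalg.pinv
    group = A @ pinv(A @ A @ A) @ A   # group inverse A^sharp = A (A^3)^dagger A
    return group @ A @ pinv(A)

A = np.array([[2.0, -1.0], [0.0, 0.0]])
D, E = np.maximum(A, 0.0), np.maximum(-A, 0.0)
S = np.block([[D, E], [E, D]])
S_core = core_inverse(S)
assert np.all(S_core >= -1e-12)          # S^# is non-negative (up to rounding)
n = A.shape[0]

def strong_solution(alpha):
    """Endpoints of [X^0]_alpha, where X^0 = S^# Y(alpha)."""
    y_lo = np.array([1.0 + alpha, 0.0])  # y1 = triangular (1, 2, 3), y2 = crisp 0
    y_up = np.array([3.0 - alpha, 0.0])
    Y = np.concatenate([y_lo, -y_up])
    assert np.allclose(S @ S_core @ Y, Y), "(2.2) is inconsistent at this alpha"
    X0 = S_core @ Y                      # hence S X0 = Y
    return X0[:n], -X0[n:]

for alpha in np.linspace(0.0, 1.0, 5):
    x_lo, x_up = strong_solution(alpha)
    assert np.all(x_lo <= x_up + 1e-12)  # proper alpha-cuts
```

For these data the first component has α-cuts \([0.5+0.5\alpha , 1.5-0.5\alpha ]\), i.e. the triangular fuzzy number (0.5, 1, 1.5), and the second component is the crisp 0, which indeed satisfies \(2{\tilde{x}}_1-{\tilde{x}}_2={\tilde{y}}_1\).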

Corollary 3.6

Let \(S\in {\mathbb {R}}_{2n}^{CM}\) be the singular coefficient matrix of the consistent system (2.2) with \(X(\alpha )\in R(S)\) and representative vector Y. If \(S^{{\textcircled {\tiny \#}}}\) is a non-negative matrix and admits (3.1), then \(X^0=S^{{\textcircled {\tiny \#}}}Y\) represents the unique solution vector of the consistent system (2.2) in R(S), and the corresponding fuzzy number vector \({\tilde{X}}^0\) is a solution of the consistent FLS (2.1).

Corollary 3.7

Let \(S\in {\mathbb {R}}_{2n}^{CM}\) be the singular coefficient matrix of the inconsistent system (2.2) with \(X(\alpha )\in R(S)\) and representative vector Y. If \(S^{{\textcircled {\tiny \#}}}\) is a non-negative matrix and admits (3.1), then \(X^0=S^{{\textcircled {\tiny \#}}}Y\) represents the unique least-squares solution vector of the inconsistent system (2.2), and the corresponding fuzzy number vector \({\tilde{X}}^0\) is a least-squares solution of the inconsistent FLS (2.1).

Remark 3.2

From Theorem 3.5, we know that if \(S^{{\textcircled {\tiny \#}}}\) is non-negative and the linear system \(SX(\alpha )=Y(\alpha )\) is consistent, then we obtain a strong fuzzy solution \({\tilde{X}}^0\) of the FLS (2.1) through the representative solution vector \(X^0=S^{{\textcircled {\tiny \#}}}Y\) of (2.2). On the other hand, in [Th. 5, 12] and [Th. 6, 13], the authors assumed that the Moore–Penrose inverse and the {1}-inverse are non-negative in order to obtain a strong fuzzy solution. However, the coefficient matrix S admits a non-negative {1}-inverse if and only if S has a {2}-inverse of the form \(\left[ {{\begin{array}{ll} B_1D^*B_3 &{} B_1E^*B_4 \\ B_2E^*B_3 &{} B_2D^*B_4 \\ \end{array} }} \right] \), where \(B_1\), \(B_2\), \(B_3\), and \(B_4\) are non-negative diagonal matrices (see Zheng and Wang 2006). Therefore, we next give conditions for \(S^{{\textcircled {\tiny \#}}}\) and \(S^\sharp \) to be non-negative.

Theorem 3.8

\(S^{{\textcircled {\tiny \#}}}\ge 0\) if and only if

for some positive diagonal matrix B. Meanwhile, \((D+E)^{{\textcircled {\tiny \#}}}=B(D+E)^*\) and \((D-E)^{{\textcircled {\tiny \#}}} =B(D-E)^*\).

Proof

According to Berman and Plemmons (1994), it is easy to see that \(S^{{\textcircled {\tiny \#}}}\ge 0\) if and only if \(S^{{\textcircled {\tiny \#}}}=B^\bullet S^*\) for some positive diagonal matrix \(B^\bullet =\left[ {{\begin{array}{ll} B_1 &{} 0 \\ 0 &{} B_2 \\ \end{array} }} \right] \). We have

Therefore, \(B_1D^*=B_2D^*\) and \(B_1E^*=B_2E^*\).

Let \(B_1=diag(b_{11}, b_{12},\ldots ,b_{1n})\), \(B_2=diag(b_{21}, b_{22},\ldots ,b_{2n})\),

We have

From the structure of (2.3), at least one of \(d_{1i},\ldots ,d_{ni},~e_{1i},\ldots , e_{ni}\) \((i = 1,\ldots ,n)\) is nonzero. If, for instance, \(d_{ni}\ne 0\), then \(b_{1i}d_{ni}=b_{2i}d_{ni}\) gives \(b_{1i}=b_{2i}\); the other cases are analogous. Hence \(B_1=B_2=B\). Since

it is easy to obtain \((D+E)^{{\textcircled {\tiny \#}}}=B(D+E)^*\) and \((D-E)^{{\textcircled {\tiny \#}}}=B(D-E)^*\). \(\square \)

Theorem 3.9

\(S^\sharp \ge 0\) if and only if

for some positive diagonal matrix N. Meanwhile, \((D+E)^\sharp =N(D+E)^*\), \((D-E)^\sharp =N(D-E)^*\).

Proof

The proof goes in the same manner as the proof of Theorem 3.8. \(\square \)

We illustrate the preceding theorems and definitions with an example.

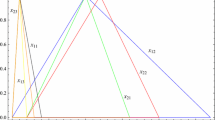

Example 3.1

Consider the following consistent \(2\times 2\) fuzzy linear system.

By (2.7), the matrices U, \(U^*\) and sub-matrices \(\Sigma ,~K\), and L are:

and

We obtain

According to (3.19), we have

for some positive diagonal matrix \(B=\left[ {{\begin{matrix} 0.04 &{} 0 \\ 0 &{} 0.04 \\ \end{matrix} }} \right] .\) According to the formula \(X^0=S^{{\textcircled {\tiny \#}}}Y\), we obtain a strong fuzzy solution \({\tilde{X}}^0=({\tilde{x}}^0_1,{\tilde{x}}^0_2)^*\),

4 A method for solving FLS

In this section, we present the general solution of the FLS (2.1). First, we determine one fuzzy number vector \({\tilde{X}}^\imath \), which serves as the starting point for the general solution set of the FLS (2.1). Let \(F\in {\mathbb {R}}_{n\times n}\), \(A\in {\mathbb {R}}_{n}^{CM}\), \(F=A^{{\textcircled {\tiny \#}}}\) and \(|F|=[|f_{ij}|]\). Let \(S_F\in {\mathbb {R}}_{2n\times 2n}\) be of the following form:

\[ S_F=\left[ \begin{array}{cc} F^{+} & F^{-}\\ F^{-} & F^{+} \end{array}\right] , \tag{4.1} \]

where \(F^+=[f_{ij}^+]\) and \(F^-=[f_{ij}^-]\). Let Y be an arbitrary representative vector and \(X^\imath =S_FY\). Since \(F^+\), \(F^-\) and \(S_F\) are non-negative, the same argument as in the proof of Theorem 3.5 shows that \({\tilde{X}}^\imath \) is a fuzzy number vector, even if the FLS (2.1) has no solution.
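A numerical check of this observation: the sketch below (illustrative data; the core inverse is computed with the identities \(A^{{\textcircled {\tiny \#}}}=A^{\sharp }AA^{\dagger }\) and \(A^{\sharp }=A(A^{3})^{\dagger }A\)) takes \(A=\left[ {{\begin{matrix} 1 & -1 \\ -1 & 1 \end{matrix}}} \right] \), whose core inverse has negative entries, builds \(S_F\), and confirms that \(S_FY\) produces a fuzzy number vector even though the chosen right-hand side makes the FLS inconsistent. The helper name `x_iota` is hypothetical.

```python
import numpy as np

def core_inverse(A):
    """Core inverse via A^# = A^sharp A A^dagger, assuming ind(A) <= 1."""
    pinv = np.linalg.pinv
    group = A @ pinv(A @ A @ A) @ A   # group inverse A^sharp
    return group @ A @ pinv(A)

A = np.array([[1.0, -1.0], [-1.0, 1.0]])
F = core_inverse(A)                                      # F = A^#, has negative entries
F_plus, F_minus = np.maximum(F, 0.0), np.maximum(-F, 0.0)
S_F = np.block([[F_plus, F_minus], [F_minus, F_plus]])   # form (4.1), non-negative
n = A.shape[0]

def x_iota(alpha):
    """Endpoints of [X^iota]_alpha = S_F Y(alpha) for two triangular right-hand sides."""
    y_lo = np.array([1.0 + alpha, -2.0 + alpha])         # y1 = (1, 2, 4), y2 = (-2, -1, 0)
    y_up = np.array([4.0 - 2.0 * alpha, -alpha])
    X = S_F @ np.concatenate([y_lo, -y_up])
    return X[:n], -X[n:]

alphas = np.linspace(0.0, 1.0, 6)
lows = np.array([x_iota(a)[0] for a in alphas])
ups = np.array([x_iota(a)[1] for a in alphas])
assert np.all(lows <= ups + 1e-12)                       # proper intervals
assert np.all(np.diff(lows, axis=0) >= -1e-12)           # lower branches non-decreasing
assert np.all(np.diff(ups, axis=0) <= 1e-12)             # upper branches non-increasing
```

The spread of each component equals \(|F|({\bar{Y}}-\underline{Y})\), which is non-negative because |F| is; that is the whole reason for splitting F into \(F^+\) and \(F^-\).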

Theorem 4.1

Let \(A\in {\mathbb {R}}_{n}^{CM}\) be the singular coefficient matrix of the consistent FLS (2.1) with fuzzy right-hand side \({\tilde{Y}}\). If \(F=A^{{\textcircled {\tiny \#}}}\) and \(S_F\) is of the form (4.1), then the following statements hold:

- (i)

\(A({\bar{X}}^\imath +\underline{X}^\imath )={\bar{Y}}+\underline{Y}\).

- (ii)

If |F| is the Core inverse of |A|, then \(|A|({\bar{X}}^\imath -\underline{X}^\imath )={\bar{Y}}-\underline{Y}\), and the fuzzy number vector \({\tilde{X}}^\imath \) is a solution of the FLS (2.1).

Proof

(i) We compute \(X^\imath =S_FY\); then

It follows from (4.2) and (4.3) that

Then,

Since the FLS (2.1) is consistent, \(A({\bar{X}}+\underline{X})={\bar{Y}}+\underline{Y}\) is a consistent family of classical linear systems (for \(\alpha \in [0,1]\)). Furthermore, F is the core inverse of A, so from (4.4) we obtain:

(ii) According to (4.2) and (4.3), we have

Since |F| is the Core inverse of |A|, we have \(|A|^{{\textcircled {\tiny \#}}}=|F|=F^{+}+F^{-}\); then

According to Theorem 3.4, we have \(H=F^+,~Z=F^-\). Then

and

Hence \(S^{{\textcircled {\tiny \#}}}=S_F\), and therefore \(X^\imath \) is a solution of (2.2). From (4.4), (4.5) and (4.6), we have

Every matrix \(A\in {\mathbb {R}}_{n\times n}\) satisfies \(A^+=\frac{1}{2}(|A|+A)\) and \(A^-=\frac{1}{2}(|A|-A)\). It follows from (4.5), (4.6) and (4.7) that

Therefore, the conclusion is proved. \(\square \)
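Both statements of Theorem 4.1 can be checked on a small consistent example. The sketch below (illustrative data; same core-inverse identities as in the earlier sketches) uses \(A=\left[ {{\begin{matrix} 2 & -1 \\ 0 & 0 \end{matrix}}} \right] \), for which |F| happens to coincide with the core inverse of |A|, so the hypothesis of (ii) is met as well. The helper name `theorem_4_1_checks` is hypothetical.

```python
import numpy as np

def core_inverse(A):
    """Core inverse via A^# = A^sharp A A^dagger, assuming ind(A) <= 1."""
    pinv = np.linalg.pinv
    group = A @ pinv(A @ A @ A) @ A   # group inverse A^sharp
    return group @ A @ pinv(A)

A = np.array([[2.0, -1.0], [0.0, 0.0]])
abs_A = np.abs(A)
F = core_inverse(A)                                      # F = A^#
F_plus, F_minus = np.maximum(F, 0.0), np.maximum(-F, 0.0)
S_F = np.block([[F_plus, F_minus], [F_minus, F_plus]])   # form (4.1)
n = A.shape[0]

def theorem_4_1_checks(alpha):
    """Return (statement (i) holds, statement (ii) holds) at one alpha."""
    y_lo = np.array([1.0 + alpha, 0.0])   # y1 = (1, 2, 3), y2 = crisp 0: consistent data
    y_up = np.array([3.0 - alpha, 0.0])
    X = S_F @ np.concatenate([y_lo, -y_up])
    x_lo, x_up = X[:n], -X[n:]
    holds_i = np.allclose(A @ (x_up + x_lo), y_up + y_lo)
    holds_ii = np.allclose(abs_A @ (x_up - x_lo), y_up - y_lo)
    return holds_i, holds_ii

# Hypothesis of (ii): |F| coincides with the core inverse of |A| for this A.
assert np.allclose(np.abs(F), core_inverse(abs_A))
assert all(all(theorem_4_1_checks(a)) for a in np.linspace(0.0, 1.0, 5))
```

Statement (i) only needs \(AF=AA^{{\textcircled {\tiny \#}}}\) to act as the identity on the consistent right-hand side; statement (ii) additionally needs the spreads to be reproduced by |A|, which is where the hypothesis on |F| enters.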

In the following theorem, we give the general solution to FLS (2.1).

Theorem 4.2

Let A be the coefficient matrix of the FLS (2.1) and let \({\tilde{Y}}=({\tilde{y}}_1,{\tilde{y}}_2,\ldots ,{\tilde{y}}_n)^*\) be an arbitrary fuzzy vector such that \(X^\imath =S_FY\) satisfies \(A({\bar{X}}^\imath +\underline{X}^\imath )={\bar{Y}}+\underline{Y}\). Let \(W=(w_1(\alpha ),w_2(\alpha ),\ldots ,w_n(\alpha ))^*=\underline{Y}-\left[ {{\begin{matrix} A^+&A^- \end{matrix} }} \right] X^\imath \), where \(\left[ {{\begin{matrix} A^+&A^- \end{matrix} }} \right] \) is an \(n\times 2n\) matrix. Define \(\Lambda =(\lambda _1(\alpha ), \lambda _2(\alpha ),\ldots ,\lambda _n(\alpha ))^*\) and \(\Theta =(\theta _1(\alpha ),\theta _2(\alpha ),\ldots ,\theta _n(\alpha ))^*\) as solutions of \(A\Lambda =0\) and \(|A|\Theta =W\), respectively. Then we have

Proof

Using the general solution in Lemma 3.1, the proof proceeds in the same manner as the proof of [Th. 8, 12]. \(\square \)

Next we will present an algorithm to solve the FLS (2.1). The coefficient matrix of FLS (2.1) is \(A=[a_{ij}]\). The matrix \(S_F\) is given by the formula (4.1).
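The ingredients of Theorem 4.2 can be assembled numerically. The sketch below assumes that the general solution combines them as \(\underline{X}=\underline{X}^\imath +\Lambda +\Theta \) and \({\bar{X}}={\bar{X}}^\imath +\Lambda -\Theta \); this combination is an assumption, chosen because it is consistent with the definitions of W, Λ and Θ, and the final assertions confirm that such a vector satisfies both blocks of (2.2) at one fixed α. The helper `null_basis`, the matrix A and the data are illustrative. Choosing Λ and Θ so that every interval is proper (as done by hand in Example 4.1) is not attempted here.

```python
import numpy as np

def core_inverse(A):
    """Core inverse via A^# = A^sharp A A^dagger, assuming ind(A) <= 1."""
    pinv = np.linalg.pinv
    group = A @ pinv(A @ A @ A) @ A   # group inverse A^sharp
    return group @ A @ pinv(A)

def null_basis(M, tol=1e-10):
    """Columns form an orthonormal basis of the null space N(M) (from the SVD)."""
    _, s, Vt = np.linalg.svd(M)
    r = int(np.sum(s > tol))
    return Vt[r:].T

A = np.array([[2.0, -1.0], [0.0, 0.0]])
A_plus, A_minus = np.maximum(A, 0.0), np.maximum(-A, 0.0)
abs_A = A_plus + A_minus
n = A.shape[0]

F = core_inverse(A)
F_plus, F_minus = np.maximum(F, 0.0), np.maximum(-F, 0.0)
S_F = np.block([[F_plus, F_minus], [F_minus, F_plus]])   # form (4.1)

alpha = 0.5
y_lo = np.array([1.0 + alpha, 0.0])   # y1 = (1, 2, 3), y2 = crisp 0
y_up = np.array([3.0 - alpha, 0.0])
X_i = S_F @ np.concatenate([y_lo, -y_up])                # X^iota = S_F Y
W = y_lo - np.hstack([A_plus, A_minus]) @ X_i            # W = Y_lower - [A^+ A^-] X^iota

Lam = null_basis(A) @ np.array([0.3])                    # some Lambda with A Lambda = 0
Theta = np.linalg.lstsq(abs_A, W, rcond=None)[0]         # particular solution of |A| Theta = W
Theta = Theta + null_basis(abs_A) @ np.array([-0.2])     # plus an element of N(|A|)

# Assumed combination (see the lead-in above).
x_lo = X_i[:n] + Lam + Theta
x_up = -X_i[n:] + Lam - Theta

# Both blocks of the alpha-cut system hold for this choice of Lambda and Theta.
assert np.allclose(A_plus @ x_lo - A_minus @ x_up, y_lo)
assert np.allclose(A_plus @ x_up - A_minus @ x_lo, y_up)
```

The first block holds because \(A\Lambda =0\) and \(|A|\Theta =W\) exactly compensate the residual W; the second block additionally uses \(A({\bar{X}}^\imath +\underline{X}^\imath )={\bar{Y}}+\underline{Y}\), the hypothesis of Theorem 4.2.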

We illustrate the preceding theorems and definitions, and the validity of the algorithm, with examples. Example 4.1 is a consistent \(2\times 2\) fuzzy linear system in which A and |A| are singular and \(S^{{\textcircled {\tiny \#}}}\) is non-negative. Hence a strong fuzzy solution of Example 4.1 can be obtained either through the solution vector \(X^0=S^{{\textcircled {\tiny \#}}}Y\) or through the solution vector \(X^\imath =S_FY\); the general solution of Example 4.1 then follows from the above algorithm. Example 4.2 is a consistent \(3\times 3\) fuzzy linear system in which A is singular and |A| is nonsingular. A direct calculation shows that \(S^{{\textcircled {\tiny \#}}}\) is not non-negative; to further verify the validity of the algorithm, we compute the general solution of Example 4.2 with it. Example 4.3 is an inconsistent \(2\times 2\) fuzzy linear system. Since it satisfies \(X(\alpha )\in R(S)\), we can obtain the unique least-squares solution of the inconsistent system (2.2), and hence a least-squares fuzzy solution of Example 4.3.

Example 4.1

Consider the following consistent \(2\times 2\) fuzzy linear system.

By (2.7), we have

where matrices U, \(U^*\), \(\Sigma \), and K are:

and

According to formula \(X^\imath =S_FY\), we obtain a general fuzzy solution \({\tilde{X}}^\imath =({\tilde{x}}^\imath _1,{\tilde{x}}^\imath _2)^*\)

The solutions of \(A\Lambda =0\) are \(\Lambda =(2f(\alpha ),4f(\alpha ))^*\), \(\alpha \in [0,1]\). Let \(f(\alpha )\in {\mathcal {F}}^\imath \), where \({\mathcal {F}}^\imath \) (which depends on \({\tilde{X}}^\imath \)) denotes the class of functions \(y=f(\alpha )\) on the unit interval such that the corresponding functions \(\underline{x}^{\imath \Lambda }(\alpha )\) (resp. \({\bar{x}}^{\imath \Lambda }(\alpha )\)) are bounded, non-decreasing (resp. non-increasing) and left-continuous. Hence, we have

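By the formula \(W=\underline{Y}-\underline{S}X^\imath \), where \(\underline{S}=\left[ {{\begin{matrix} A^+&A^- \end{matrix} }} \right] \) is the first block row of S, we have for each \(\Lambda \)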

By formula \(|A|\Theta =W\), we have

From the above formula, we can write \(\Theta =(h(\alpha ), -2h(\alpha ))\), where \(h(\alpha ),~ \alpha \in [0,1]\), is an arbitrary function on the unit interval. We need \(h(\alpha )\in {\mathcal {F}}^{\imath \Lambda }\) (\({\mathcal {F}}^{\imath \Lambda }\) depends on \({\tilde{X}}^{\imath \Lambda }\)) together with the following additional necessary constraints, to obtain proper intervals that represent \(\alpha \)-cuts of fuzzy numbers:

Since \(\theta _2(\alpha )=-2\theta _1(\alpha )\), we have \(\alpha -1\le \theta _1(\alpha )\le 1-\alpha \). Finally, we have \({\tilde{X}}=({\tilde{x}}_1,{\tilde{x}}_2)\), where \(f(\alpha )\in {\mathcal {F}}^{\imath \Lambda },~\theta _1 =h(\alpha )\in {\mathcal {F}}^{\imath \Lambda }\), and \(\alpha -1\le h(\alpha )\le 1-\alpha \), for all \(\alpha \in [0,1]:\)

For example, for \(h(\alpha )=-0.25+0.25\alpha \) and \(f(\alpha )=1.125+0.125\alpha \), we have

On the other hand, the matrices U, \(U^*\), K, and \(\Sigma \) are:

and

According to (2.7), we have

where the positive diagonal matrix \(B=\left[ {{\begin{matrix} 0.025 &{} 0 \\ 0 &{} 0.10 \\ \end{matrix} }} \right] \). Hence

Since \(S^{{\textcircled {\tiny \#}}}\) is a non-negative matrix, a fuzzy number vector solution of the FLS (2.1) is \({\tilde{X}}^0=({\tilde{x}}^0_1,{\tilde{x}}^0_2)^*\), given by

All other fuzzy linear vector solutions of FLS (2.1) can be determined by applying the algorithm with \({\tilde{X}}^0\) (we note that if  is non-negative, then \({\tilde{X}}^0={\tilde{X}}^\imath \) holds).
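For the particular choice \(h(\alpha )=-0.25+0.25\alpha \) used in Example 4.1, the admissibility condition \(\alpha -1\le h(\alpha )\le 1-\alpha \) can be checked directly:

\[
h(\alpha )=-0.25+0.25\alpha =-\tfrac{1}{4}(1-\alpha ),\qquad \alpha -1=-(1-\alpha )\le -\tfrac{1}{4}(1-\alpha )\le 1-\alpha ,
\]

because \(1-\alpha \ge 0\) for every \(\alpha \in [0,1]\). The corresponding second component is \(\theta _2(\alpha )=-2h(\alpha )=\tfrac{1}{2}(1-\alpha )\).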


Example 4.2

This is a consistent \(3\times 3\) fuzzy linear system.
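The examples depend on computing U, \(\Sigma \) and K, so a numerical sketch of that step may help. The sketch assumes that (2.4) is the standard Hartwig–Spindelböck form \(A=U\left[ \begin{matrix} \Sigma K & \Sigma L\\ 0 & 0\end{matrix}\right] U^*\) with \(KK^*+LL^*=I_r\). It also assumes the Core inverse is \(U\left[ \begin{matrix} (\Sigma K)^{-1} & 0\\ 0 & 0\end{matrix}\right] U^*\), which exists exactly when \(\Sigma K\) is nonsingular, i.e. when \(\text{ind}(A)\le 1\). The helper names `hartwig_spindelbock` and `core_inverse` are hypothetical. The test matrix is an arbitrary index-one matrix and is not the coefficient matrix of this example.

```python
import numpy as np


def hartwig_spindelbock(A, tol=1e-10):
    """Sketch of the Hartwig-Spindelboeck decomposition of a real square matrix.

    Returns U, Sigma, K, L and r = rank(A) with
        A = U @ [[Sigma @ K, Sigma @ L], [0, 0]] @ U.T,
    where U is orthogonal, Sigma = diag(s_1, ..., s_r) > 0 and K K^T + L L^T = I_r.
    """
    A = np.asarray(A, dtype=float)
    U, s, Vh = np.linalg.svd(A)                   # full SVD: A = U diag(s) Vh
    r = int(np.sum(s > tol * max(s[0], 1.0)))     # numerical rank
    Sigma = np.diag(s[:r])
    KL = Vh[:r, :] @ U                            # r x n block [K  L] = V_1^T U
    return U, Sigma, KL[:, :r], KL[:, r:], r


def core_inverse(A, tol=1e-10):
    """Core inverse U [[(Sigma K)^{-1}, 0], [0, 0]] U^T; requires ind(A) <= 1."""
    U, Sigma, K, _, r = hartwig_spindelbock(A, tol)
    SK = Sigma @ K
    if np.linalg.matrix_rank(SK, tol=tol) < r:    # Sigma K singular <=> ind(A) > 1
        raise ValueError("A has index greater than 1, so it has no Core inverse")
    U1 = U[:, :r]
    return U1 @ np.linalg.inv(SK) @ U1.T


# Hypothetical index-one test matrix (rank A = rank A^2 = 2); not from the paper.
A = np.array([[1.0, -2.0, 0.0],
              [0.0, 2.0, -3.0],
              [1.0, 0.0, -3.0]])
U, Sigma, K, L, r = hartwig_spindelbock(A)
X = core_inverse(A)
```

For a real A, the conjugate transposes \(U^*\) reduce to transposes, which is why the sketch uses `.T`. A complex version would use `.conj().T` instead. The returned X can be checked against the five defining equations \(AXA=A\), \(XAX=X\), \((AX)^*=AX\), \(XA^2=A\) and \(AX^2=X\).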

According to (2.4), the matrices U, \(U^*\), \(\Sigma \) and K are:

and

Hence

According to the formula \(X^\imath =S_FY\), we obtain the fuzzy number vector \({\tilde{X}}^\imath =({\tilde{x}}^\imath _1,{\tilde{x}}^\imath _2,{\tilde{x}}^\imath _3)^*\):

The solution vector of the equation \(A\Lambda =0\) is \(\Lambda =(6f(\alpha ),3f(\alpha ),2f(\alpha ))^*\), \(\alpha \in [0,1]\). Let \(f(\alpha )\in {\mathcal {F}}^\imath \), where \({\mathcal {F}}^\imath \) (which depends on \({\tilde{X}}^\imath \)) denotes the class of functions \(y=f(\alpha )\) on the unit interval such that the corresponding functions \(\underline{x}^{\imath \Lambda }(\alpha )\) (resp. \({\bar{x}}^{\imath \Lambda }(\alpha )\)) are bounded, non-decreasing (resp. non-increasing) and left-continuous. Hence, we have

By the formula \(W=\underline{Y}-\underline{S}X^\imath \) for each \(\Lambda \), we have

By the formula \(|A|\Theta =W\), we have

From the above equation, \(|A|\Theta =W\) has the unique solution \(\Theta =( 1.9998 - 1.9998\alpha , -0.6192 + 0.6192\alpha , 1.0477 - 1.0477\alpha )\), \(\alpha \in [0,1]\). Therefore, we obtain \({\tilde{X}}=({\tilde{x}}_1,{\tilde{x}}_2,{\tilde{x}}_3)\), where \(f(\alpha )\in {\mathcal {F}}^\imath \).
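Example 4.2 splits the solution into a particular part and a null-space term \(\Lambda \). A crisp analogue of this split can be written with the Core inverse. Let C be the Core inverse of an index-one matrix A. Then AC is the orthogonal projector onto R(A) and \(I-CA\) projects onto N(A) along R(A). So when b lies in R(A), every solution of \(Ax=b\) has the form \(x=Cb+(I-CA)z\).

The sketch below illustrates only this crisp identity and is not the paper's fuzzy algorithm. The helper names are hypothetical. The matrix is also hypothetical: it is chosen only so that N(A) is spanned by \((6,3,2)^*\), like \(\Lambda \) above, and it is not the coefficient matrix of Example 4.2.

```python
import numpy as np


def core_inverse(A, tol=1e-10):
    """Core inverse of a real square matrix of index at most one.

    U1 spans R(A); U1^T A U1 is the block Sigma K of the Hartwig-Spindelboeck
    form, and the Core inverse is U1 (Sigma K)^{-1} U1^T.
    """
    A = np.asarray(A, dtype=float)
    U, s, _ = np.linalg.svd(A)
    r = int(np.sum(s > tol * max(s[0], 1.0)))
    U1 = U[:, :r]
    return U1 @ np.linalg.inv(U1.T @ A @ U1) @ U1.T


def general_solution(A, b, z):
    """Return (x, lam) with lam = (I - C A) z and x = C b + lam, C the Core inverse.

    x solves A x = b for every z whenever b lies in R(A).
    """
    A = np.asarray(A, dtype=float)
    C = core_inverse(A)
    lam = (np.eye(A.shape[0]) - C @ A) @ z        # lies in N(A), since A C A = A
    return C @ b + lam, lam


# Hypothetical matrix whose null space is spanned by (6, 3, 2)^T; not the matrix of Example 4.2.
A = np.array([[1.0, -2.0, 0.0],
              [0.0, 2.0, -3.0],
              [1.0, 0.0, -3.0]])
b = A @ np.ones(3)                 # a consistent right-hand side, b in R(A)
x0, lam0 = general_solution(A, b, np.zeros(3))
x1, lam1 = general_solution(A, b, np.array([5.0, -1.0, 2.0]))
```

Here `lam1` works out to \(\tfrac{2}{7}(6,3,2)^*\), a multiple of the null vector, just as \(\Lambda \) is a multiple of \((6,3,2)^*\) in the example.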

Example 4.3

This is an inconsistent \(2\times 2\) fuzzy linear system with \(X(\alpha )\in R(S)\).
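For an inconsistent system, the Core inverse C of an index-one matrix A still gives a natural answer. One can check that Cb lies in R(A) and that \(A(Cb)=AA^\dagger b\) is the orthogonal projection of b onto R(A). Because \(R(A)\cap N(A)=\{0\}\), Cb is therefore the unique minimizer of \(\Vert Ax-b\Vert _2\) subject to \(x\in R(A)\). This side condition matches \(X(\alpha )\in R(S)\) above.

The crisp sketch below uses a hypothetical \(2\times 2\) matrix and the hypothetical helper `core_inverse`. It contrasts Cb with the Moore–Penrose solution \(A^\dagger b\): both attain the same least squares residual, but only Cb lies in R(A).

```python
import numpy as np


def core_inverse(A, tol=1e-10):
    """Core inverse U1 (U1^T A U1)^{-1} U1^T of a real square matrix with ind(A) <= 1."""
    A = np.asarray(A, dtype=float)
    U, s, _ = np.linalg.svd(A)
    r = int(np.sum(s > tol * max(s[0], 1.0)))
    U1 = U[:, :r]                     # orthonormal basis of R(A)
    return U1 @ np.linalg.inv(U1.T @ A @ U1) @ U1.T


# Hypothetical idempotent matrix (A @ A == A, so ind(A) = 1) with R(A) = span{e1}.
A = np.array([[1.0, 2.0],
              [0.0, 0.0]])
b = np.array([3.0, 4.0])              # b is not in R(A): the system is inconsistent

x_core = core_inverse(A) @ b          # least squares solution constrained to R(A)
x_mp = np.linalg.pinv(A) @ b          # minimum-norm least squares solution

res_core = np.linalg.norm(A @ x_core - b)
res_mp = np.linalg.norm(A @ x_mp - b)
```

In this toy case, `x_core` is \((3,0)^*\) and `x_mp` is \((0.6,1.2)^*\). Both give \(Ax=(3,0)^*\) and a residual of norm 4, but only `x_core` lies in \(R(A)=\text{span}\{e_1\}\).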

According to (2.4), the matrices U, \(U^*\), \(\Sigma \) and K are:

and

Hence

By the formula  , we obtain the unique least squares solution as follows:


Then, a least squares fuzzy solution \({\tilde{X}}^\imath =({\tilde{x}}^\imath _1,{\tilde{x}}^\imath _2)^*\) is given by:

5 Conclusion

In this paper, a new algorithm is proposed to solve the FLS whose coefficient matrix is a real matrix. We use the Hartwig–Spindelböck decomposition to obtain the Core inverse of the coefficient matrix A, and with this Core inverse we establish a numerical algorithm for finding an arbitrary solution of the FLS. The method is also connected to the original approach of Friedman et al. (1998). In future work, we will study the inconsistent case of FLS (2.1) further and discuss its general least squares solution sets.
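The connection with Friedman et al. (1998) goes through their embedding, which we sketch here as we recall it. The \(n\times n\) crisp matrix A is replaced by the \(2n\times 2n\) non-negative matrix \(S=\left[ \begin{matrix} B & C\\ C & B\end{matrix}\right] \). Here B keeps the non-negative entries of A and C holds the moduli of the negative ones. The unknown vector stacks the lower bounds \(\underline{x}_i(\alpha )\) on top of the negated upper bounds \(-{\bar{x}}_i(\alpha )\). In this notation \(A=B-C\) and \(|A|=B+C\), the matrix that appears in \(|A|\Theta =W\).

The following is a minimal sketch under these assumptions. The helper `friedman_embedding` and the test matrix are hypothetical.

```python
import numpy as np


def friedman_embedding(A):
    """Return S = [[B, C], [C, B]] together with B and C, for a real n x n matrix A.

    B keeps the non-negative entries of A and C the moduli of the negative ones,
    so that A = B - C and |A| = B + C.
    """
    A = np.asarray(A, dtype=float)
    B = np.where(A >= 0, A, 0.0)
    C = np.where(A < 0, -A, 0.0)
    return np.block([[B, C], [C, B]]), B, C


A = np.array([[1.0, -1.0],
              [1.0, 3.0]])            # hypothetical crisp coefficient matrix
S, B, C = friedman_embedding(A)

# For a crisp vector x (lower bound = upper bound = x) the embedded unknown is
# (x, -x), and S maps it to (A x, -A x).
x = np.array([2.0, -1.0])
SX = S @ np.concatenate([x, -x])
```

The crisp check at the end shows that the embedding reproduces \(Ax\) in the first block. The second block carries its negative, consistent with the stacking of lower bounds over negated upper bounds.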

References

Abbasbandy S, Otadi M, Mosleh M (2008) Minimal solution of general dual fuzzy linear systems. Chaos Solitons Fractals 37(4):1113–1124

Allahviranloo T (2003) A comment on fuzzy linear systems. Fuzzy Sets Syst 140(3):559–559

Allahviranloo T (2004) Numerical methods for fuzzy system of linear equations. Appl Math Comput 155(2):493–502

Allahviranloo T, Ghanbari M (2012) On the algebraic solution of fuzzy linear systems based on interval theory. Appl Math Model 36(11):5360–5379

Baksalary OM, Trenkler G (2010) Core inverse of matrices. Linear Multilinear Algebra 58(6):681–697

Ben-Israel A, Greville TNE (2003) Generalized inverses: theory and applications. Springer Science and Business Media, Berlin

Berman A, Plemmons RJ (1994) Nonnegative matrices in the mathematical sciences. Society for Industrial and Applied Mathematics, Philadelphia

Friedman M, Ma M, Kandel A (1998) Fuzzy linear systems. Fuzzy Sets Syst 96(2):201–209

Friedman M, Ma M, Kandel A (2003) Author’s reply: “A comment on: ‘fuzzy linear systems’” [Fuzzy Sets and Systems 140 (2003), no. 3, 559; MR2035088] by T. Allahviranloo. Fuzzy Sets Syst 140(3):561–561

Hartwig RE, Spindelböck K (1983) Matrices for which \(A^\ast \) and \(A^\dagger \) commute. Linear Multilinear Algebra 14(3):241–256

Lodwick WA, Dubois D (2015) Interval linear systems as a necessary step in fuzzy linear systems. Fuzzy Sets Syst 281:227–251

Ma H, Li T (2019) Characterizations and representations of the core inverse and its applications. Linear Multilinear Algebra. https://doi.org/10.1080/03081087.2019.1588847

Mihailović B, Jerković VM, Malešević B (2018a) Solving fuzzy linear systems using a block representation of generalized inverses: the Moore–Penrose inverse. Fuzzy Sets Syst 353:44–65

Mihailović B, Jerković VM, Malešević B (2018b) Solving fuzzy linear systems using a block representation of generalized inverses: the group inverse. Fuzzy Sets Syst 353:66–85

Wang H (2011) Rank characterizations of some matrix partial orderings. J East China Normal Univ (Natural Science) 2011(5):5–11

Wang H (2016) Core-EP decomposition and its applications. Linear Algebra Appl 508:289–300

Wang H, Liu X (2016) Partial orders based on core-nilpotent decomposition. Linear Algebra Appl 488:235–248

Wang H, Zhang X (2019) The core inverse and constrained matrix approximation problem. arXiv:1908.11077

Zheng B, Wang K (2006) General fuzzy linear systems. Appl Math Comput 181(2):1276–1286

Zhou J, Bu C, Wei Y (2012) Group inverse for block matrices and some related sign analysis. Linear Multilinear Algebra 60(6):669–681

Acknowledgements

The first author was supported partially by the Innovation Project of Guangxi Graduate Education [No. YCSW2019135], the New Century National Hundred, Thousand and Ten Thousand Talent Project of Guangxi [No. GUIZHENGFA210647HAO], the School-level Research Project in Guangxi University for Nationalities [No. 2018MDQN005], and the Special Fund for Bagui Scholars of Guangxi [No. 2016A17]. The second author was supported partially by the Guangxi Natural Science Foundation [No. 2018GXNSFAA138181], the Xiangsihu Young Scholars Innovative Research Team of Guangxi University for Nationalities [No. GUIKE AD19245148], and the Special Fund for Science and Technological Bases and Talents of Guangxi [No. 2019AC20060]. The third author was supported partially by the National Natural Science Foundation of China [No. 61772006], the Guangxi Natural Science Foundation [No. 2018GXNSFDA281023] and the Science and Technology Major Project of Guangxi [No. AA17204096].

Ethics declarations

Conflict of interest

No potential conflict of interest was reported by the authors.

Additional information

Communicated by Anibal Tavares de Azevedo.

About this article

Cite this article

Jiang, H., Wang, H. & Liu, X. Solving fuzzy linear systems by a block representation of generalized inverse: the core inverse. Comp. Appl. Math. 39, 133 (2020). https://doi.org/10.1007/s40314-020-01156-0

DOI: https://doi.org/10.1007/s40314-020-01156-0

Keywords

- Core inverse

- Fuzzy linear systems

- Block structure

- Hartwig–Spindelböck decomposition

- Strong fuzzy solution
