Abstract

The purpose of this research is to introduce a regularized algorithm based on the viscosity method for solving the proximal split feasibility problem and the fixed point problem in Hilbert spaces. A strong convergence result of our proposed algorithm for finding a common solution of the proximal split feasibility problem and the fixed point problem for nonexpansive mappings is established. We also apply our main result to the split feasibility problem, and the fixed point problem of nonexpansive semigroups, respectively. Finally, we give numerical examples for supporting our main result.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Throughout this article, let \(H_{1}\) and \(H_{2}\) be two real Hilbert spaces. Let \(f :H_{1}\rightarrow {\mathbb {R}} \cup \{+\infty \}\) and \(g:H_{2} \rightarrow {\mathbb {R}} \cup \{+\infty \}\) be two proper and lower semicontinuous convex functions and \(A : H_{1}\rightarrow H_{2}\) be a bounded linear operator.

In this paper, we focus our attention on the following proximal split feasibility problem (PSFP): find a minimizer \(x^{*}\) of f, such that \(Ax^{*}\) minimizes g, namely

where \(\mathop {\mathrm {argmin}}f:= \{{\bar{x}} \in H_{1}: f({\bar{x}}) \le f(x) \text { for all } x\in H_{1}\}\) and \(\mathop {\mathrm {argmin}}g := \{{\bar{y}} \in H_{2}: g({\bar{y}}) \le g(y) \text { for all } y\in H_{2}\}\). We assume that the problem (1.1) has a nonempty solution set \(\Gamma := \mathop {\mathrm {argmin}}f \cap A^{-1}(\mathop {\mathrm {argmin}}g)\).

Censor and Elfving (1994) introduced the split feasibility problem (in short, SFP). The split feasibility problem (SFP) has been used for many applications in various fields of science and technology, such as in signal processing and image reconstruction, and especially applied in medical fields such as intensity-modulated radiation therapy (IMRT) (for details, see Censor et al. (2006) and the references therein). Let C and Q be nonempty, closed, and convex subsets of \(H_{1}\) and \(H_{2}\), respectively, and then, the SFP is to find a point:

where \(A : H_{1}\rightarrow H_{2}\) is a bounded linear operator. For solving the problem (1.2), Byrne (2002) introduced a popular algorithm which is called the CQ algorithm as follows:

where \(P_{C}\) and \(P_{Q}\) denote the metric projection onto the closed convex subsets C and Q, respectively, and \(A^{*}\) is the adjoint operator of A and \(\mu _{n}\in (0,2/\Vert A\Vert ^{2})\). Many research papers have increasingly investigated split feasibility problem [see, for instance (Lopez et al. 2012; Chang et al. 2014; Qu and Xiu 2005), and the references therein]. If \(f=i_{C}\) [defined as \(i_{C}(x)=0\) if \(x\in C\) and \(i_{C}(x) = +\infty \) if \(x\notin C\)] and \(g=i_{Q}\) are indicator functions of nonempty, closed, and convex sets C and Q of \(H_{1}\) and \(H_2\), respectively. Then, the proximal split feasibility problem (1.1) becomes the split feasibility problem (1.2).

Moudafi and Thakur (2014) introduced the split proximal algorithm with a way of selecting the step-sizes, such that its implementation does not need any prior information about the operator norm. Given an initial point \(x_{1}\in H_{1}\), assume that \(x_n\) has been constructed and \(\Vert A^{*}(I-{\text {prox}}_{\lambda g })Ax_{n}\Vert ^{2}+\Vert (I-{\text {prox}}_{\lambda f})x_{n}\Vert ^{2} \ne 0\), and then compute \(x_{n+1}\) by the following iterative scheme:

where the stepsize \(\mu _{n}:=\rho _{n}\dfrac{h(x_{n})+l(x_{n})}{\theta ^{2}(x_{n})}\) with \(0<\rho _{n}<4\), \(h(x):=\frac{1}{2}\Vert (I-{\text {prox}}_{\lambda g}) Ax\Vert ^{2}\), \(l(x):=\frac{1}{2}\Vert (I-{\text {prox}}_{\lambda \mu _{n} f}) x\Vert ^{2}\) and \(\theta ^{2}(x):=\Vert A^{*}(I- {\text {prox}}_{\lambda g})Ax \Vert ^{2}+\Vert (I-{\text {prox}}_{\lambda \mu _{n} f}) x\Vert ^{2}.\) If \(\theta ^{2}(x_{n})=0\), then \(x_{n}\) is a solution of (1.1) and the iterative process stops; otherwise, we set \(n:=n+1\) and compute \(x_{n+1}\) using (1.3). They also proved the weak convergence of the sequence generated by Algorithm (1.3) to a solution of (1.1) under suitable conditions of parameter \(\rho _{n}\) where \(\varepsilon \le \rho _{n}\le \dfrac{4h(x_{n})}{h(x_{n})+l(x_{n})}-\varepsilon \) for some \(\varepsilon >0.\)

Yao et al. (2014) gave the regularized algorithm for solving the proximal split feasibility problem (1.1) and proposed a strong convergence theorem under suitable conditions:

where the stepsize \(\mu _{n}:=\rho _{n}\dfrac{h(x_{n})+l(x_{n})}{\theta ^{2}(x_{n})}\) with \(0<\rho _{n}<4\).

Shehu et al. (2015) introduced a viscosity-type algorithm for solving proximal split feasibility problems as follows:

where \(\psi :H_{1}\rightarrow H_{1}\) is a contraction mapping. They also proved a strong convergence of the sequences generated by iterative schemes (1.5) in Hilbert spaces.

Recently, Shehu and Iyiola (2015) introduced the following algorithm for solving split proximal algorithms and fixed point problems for k-strictly pseudocontractive mappings in Hilbert spaces:

where the stepsize \(\gamma _{n}:=\rho _{n}\dfrac{h(x_{n})+l(x_{n})}{\theta ^{2}(x_{n})}\) with \(0<\rho _{n}<4\). They also showed that, under certain assumptions imposed on the parameters, the sequence \(\{x_n\}\) generated by (1.6) converges strongly to \(x^{*}\in F(S)\cap \Gamma .\) Many researchers have proposed some methods to solve the proximal split feasibility problem [see, for instance (Shehu et al. 2015; Shehu and Iyiola 2017a, b, 2018; Abbas et al. 2018; Witthayarat et al. 2018), and the references therein].

We note that Algorithm (1.6) is the Halpern-type algorithm with \(u \equiv 0\) fixed. However, a viscosity-type algorithm is more general and desirable than a Halpern-type algorithm, because a contraction which is used in the viscosity-type algorithm influences the convergence behavior of the algorithm.

In this paper, inspired and motivated by these studies, we are interested to study the proximal split feasibility problem and the fixed point problem in Hilbert spaces. In Sect. 3, we introduce a regularized algorithm based on the viscosity method for finding a common solution of the proximal split feasibility problem and the fixed point problem of nonexpansive mappings, and prove a strong convergence theorem under some suitable conditions. In Sects. 4 and 5, we apply our main result to the split feasibility problem, and the fixed point problem of nonexpansive semigroups, respectively. In the last section, we first give a numerical result in Euclidean spaces to demonstrate the convergence of our algorithm. We also show the number of iterations of our algorithm by choosing different contractions \(\psi \). In this case, if we take \(\psi = 0\) in our algorithm, then we obtain Algorithm (1.6) (Shehu and Iyiola 2015, Algorithm 1). Moreover, we give an example in the infinite-dimensional spaces for supporting our main theorem.

2 Preliminaries

Throughout this article, let H be a real Hilbert space with inner product \( \langle \cdot , \cdot \rangle \) and norm \( \Vert \cdot \Vert \). Let C be a nonempty closed convex subset of H. Let \(T:C\rightarrow C \) be a nonlinear mapping. A point \(x\in C\) is called a fixed point of T if \(Tx=x\). The set of fixed points of T is the set \(F(T):= \{x\in C: Tx=x\}\).

Recall that A mapping T of C into itself is said to be

-

(i)

nonexpansive if

$$\begin{aligned} \left\| Tx-Ty\right\| \le \left\| x-y\right\| , \quad \forall x,y \in C. \end{aligned}$$ -

(ii)

contraction if there exists a constant \(\delta \in [0,1)\), such that

$$\begin{aligned} \Vert Tx-Ty\Vert \le \delta \Vert x-y\Vert , \quad \forall x,y\in C. \end{aligned}$$

Recall that the proximal operator \({\text {prox}}_{\lambda g}:H \rightarrow H\) is defined by:

Moreover, the proximity operator of f is firmly nonexpansive, namely:

for all \(x,y\in H\), which is equivalent to

for all \(x,y\in H\). For general information on proximal operator, see Combettes and Pesquet (2011a).

In a real Hilbert space H, it is well known that:

-

(i)

\(\left\| \alpha x + (1-\alpha )y\right\| ^{2} = \alpha \left\| x \right\| ^{2} + (1-\alpha ) \left\| y \right\| ^{2} -\alpha (1-\alpha ) \left\| x-y \right\| ^{2},\) for all \(x,y \in H\) and \(\alpha \in [0,1]\);

-

(ii)

\(\Vert x-y \Vert ^{2}=\Vert x \Vert ^{2}-2\langle x,y \rangle +\Vert y \Vert ^{2}\) for all \(x,y \in H\);

-

(iii)

\(\Vert x+y \Vert ^{2} \le \Vert x \Vert ^{2}+2\langle y,x+y \rangle \) for all \(x,y \in H\).

Recall that the (nearest-point) projection \(P_{C}\) from H onto C assigns to each \(x \in H\) the unique point \(P_{C}x \in C\) satisfying the property:

Lemma 2.1

(Takahashi 2000) Given \(x \in H\) and \(y \in C\). Then, \(P_{C}x=y\) if and only if there holds the inequality:

Lemma 2.2

(Xu 2003) Let \(\{s_{n}\}\) be a sequence of nonnegative real numbers satisfying:

where \(\{\alpha _{n}\}\) is a sequence in (0, 1) and \(\{\delta _{n}\}\) is a sequence, such that

-

1.

\(\displaystyle \sum _{n=1}^{\infty } \alpha _{n} = \infty \);

-

2.

\(\displaystyle \limsup _{n \rightarrow \infty } \frac{\delta _{n}}{\alpha _{n}} \le 0\) or \(\displaystyle \sum _{n=1}^{\infty } |\delta _{n} |< \infty \).

Then, \(\lim _{n \rightarrow \infty } s_{n} = 0\).

Definition 2.3

Let C be a nonempty closed convex subset of a real Hilbert space H. A mapping \(S: C \rightarrow C\) is called demi-closed at zero if for any sequence \(\{x_{n}\}\) which converges weakly to x, and if the sequence \(\{Tx_{n}\}\) converges strongly to 0, then \(Tx = 0\).

Lemma 2.4

(Browder 1976) Let C be a nonempty closed convex subset of a real Hilbert space H. If \(S : C \rightarrow C\) is a nonexpansive mapping, then \(I-\)S is demi-closed at zero.

Lemma 2.5

(Mainge 2008) Let \(\{\Gamma _n\}\) be a sequence of real numbers that does not decrease at infinity in the sense that there exists a subsequence \(\{\Gamma _{n_i}\}\) of \(\{\Gamma _n\}\) which satisfies \(\Gamma _{n_i}<\Gamma _{n_{i}+1}\) for all \(i\in {\mathbb {N}}\). Define the sequence \(\{\tau (n)\}_{n\ge n_0}\) of integers as follows:

where \(n_0\in {\mathbb {N}}\), such that \(\{k\le n_0:\Gamma _k<\Gamma _{k+1}\}\ne \emptyset \). Then, the following hold:

-

(i)

\(\tau ({n_0})\le \tau ({n_0+1})\le \cdots \) and \(\tau (n)\longrightarrow \infty \);

-

(ii)

\(\Gamma _{\tau _n}\le \Gamma _{\tau (n)+1}\) and \(\Gamma _n\le \Gamma _{\tau (n)+1}\), \(\forall n\ge n_0\).

3 Main results

In this section, we introduce an algorithm and prove a strong convergence for solving a common element of the set of fixed points of a nonexpansive mapping and the set of solutions of proximal split feasibility problems (1.1). Let \(H_{1}\) and \(H_{2}\) be two real Hilbert spaces. Let \(f :H_{1}\rightarrow {\mathbb {R}} \cup \{+\infty \}\) and \(g:H_{2} \rightarrow {\mathbb {R}} \cup \{+\infty \} \) be two proper and lower semicontinuous convex functions and \(A : H_{1}\rightarrow H_{2}\) be a bounded linear operator. Let \(S : H_{1}\rightarrow H_{1}\) be a nonexpansive mapping and Let \(\psi :H_{1}\rightarrow H_{1}\) be a contraction mapping with \(\delta \in (0,1)\).

We introduce the modified split proximal algorithm as follows:

Algorithm 3.1

Given an initial point \(x_{1}\in H_{1}\). Assume that \(x_n\) has been constructed and \(\Vert A^{*}(I-{\text {prox}}_{\lambda g })Ax_{n}\Vert ^{2}+\Vert (I-{\text {prox}}_{\lambda f})x_{n}\Vert ^{2}\ne 0\), then compute \(x_{n+1}\) by the following iterative scheme:

where the stepsize \(\mu _{n}:=\rho _{n}\dfrac{\left( \frac{1}{2}\Vert (I-{\text {prox}}_{\lambda g}) Ax_{n}\Vert ^{2} \right) +\left( \frac{1}{2}\Vert (I-{\text {prox}}_{\lambda f}) x_{n}\Vert ^{2} \right) }{\Vert A^{*}(I-{\text {prox}}_{\lambda g })Ax_{n}\Vert ^{2}+\Vert (I-{\text {prox}}_{\lambda f})x_{n}\Vert ^{2}}\) with \(0<\rho _{n}<4\) and \(\{\alpha _{n}\}\), \(\{\beta _{n}\} \subset (0,1)\).

We now prove our main theorem.

Theorem 3.2

Let \(H_{1}\) and \(H_{2}\) be two real Hilbert spaces. Let \(f :H_{1}\rightarrow {\mathbb {R}} \cup \{+\infty \}\) and \(g:H_{2} \rightarrow {\mathbb {R}} \cup \{+\infty \} \)be two proper and lower semicontinuous convex functions, and \(A : H_{1}\rightarrow H_{2}\) be a bounded linear operator. Let \(\psi :H_{1}\rightarrow H_{1}\) be a contraction mapping with \(\delta \in [0,1)\) and let \(S : H_{1}\rightarrow H_{1}\) be a nonexpansive mapping, such that \(\Omega := F(S)\cap \Gamma \ne 0\). If the control sequences \(\{\alpha _{n}\}\), \(\{\beta _{n}\}\) and \(\{\rho _{n}\}\) satisfy the following conditions:

-

(C1)

\(\displaystyle \lim _{n \rightarrow \infty } \alpha _{n}= 0\) and \(\displaystyle \sum _{n=1}^{\infty }\alpha _{n}=\infty \);

-

(C2)

\(\displaystyle 0< \liminf _{n\rightarrow \infty }\beta _{n}\le \limsup _{n\rightarrow \infty }\beta _{n}< 1\);

-

(C3)

\(\varepsilon \le \rho _{n}\le \dfrac{4(1-\alpha _{n})\left( \Vert (I-{\text {prox}}_{\lambda g}) Ax_{n}\Vert ^{2}\right) }{\left( \Vert (I-{\text {prox}}_{\lambda g}) Ax_{n}\Vert ^{2} \right) +\left( \Vert (I-{\text {prox}}_{\lambda f}) x_{n}\Vert ^{2} \right) }-\varepsilon \) for some \(\varepsilon >0.\)

Then, the sequence \(\{x_{n}\}\) defined by Algorithm 3.1 converges strongly to a point \(x^{*}\in \Omega \) which also solves the variational inequality:

Proof

Given any \(\lambda > 0\) and \(x \in H_{1}\), we define \(h(x):=\frac{1}{2}\Vert (I-{\text {prox}}_{\lambda g}) Ax\Vert ^{2}\), \( l(x):=\frac{1}{2}\Vert (I-{\text {prox}}_{\lambda f}) x\Vert ^{2}\), \(\theta ^{2}(x):=\Vert A^{*}(I-{\text {prox}}_{\lambda g })Ax\Vert ^{2}+\Vert (I-{\text {prox}}_{\lambda f})x\Vert ^{2}\), and hence, \(\mu _{n}=\rho _{n}\dfrac{h(x_{n})+l(x_{n})}{\theta ^{2}(x_{n})}\) where \(0<\rho _{n}<4\).

By Banach fixed point theorem, there exists \(x^{*} \in \Omega \) such that \(x^{*} = P_{\Omega }\psi (x^{*})\). Then, \(x^{*}={\text {prox}}_{\lambda \mu _{n} f}x^{*}\) and \(Ax^{*}={\text {prox}}_{\lambda g}Ax^{*}\). Since \({\text {prox}}_{\lambda g }\) is firmly nonexpansive, we have \(I-{\text {prox}}_{\lambda g }\) is also firmly nonexpansive. Hence

From the definition of \(y_{n} \) and the nonexpansivity of \({\text {prox}}_{\lambda \mu _{n} f}\), we have:

From (3.2), we have:

By the condition (C3), we have \(\dfrac{4h(x_{n})}{(h(x_{n})+l(x_{n}))}-\dfrac{\rho _{n}}{1-\alpha _{n}}\ge 0\) for all \(n\ge 1.\) From (3.3) and (3.4), we have:

Since S is nonexpansive, by (3.1) and (3.5), we obtain:

By mathematical induction, we have:

Hence, \(\{x_{n}\}\) is bounded and so are \(\{\psi (x_{n})\}\), \(\{Sy_{n}\}\).

From the definition of \(y_{n}\) and (3.4), we have:

From the definition of \(x_{n}\) and (3.6), we obtain:

It implies that

It follows from (3.6) that

which implies that

Now, we divide our proof into two cases.

Case 1 Suppose that there exists \(n_{0}\in {\mathbb {N}}\), such that \(\{\Vert x_{n}-x^{*}\Vert \}^{\infty }_{n=1}\) is nonincreasing. Then, \(\{\Vert x_{n}-x^{*}\Vert \}^{\infty }_{n=1}\) converges and \(\Vert x_{n}-x^{*}\Vert ^{2}-\Vert x_{n+1}-x^{*}\Vert ^{2} \rightarrow 0\) as \(n \rightarrow \infty \). From (3.7) and the condition (C1) and (C3), we obtain:

Hence, we have:

By the linearity and boundedness of A and the nonexpansivity of \({\text {prox}}_{\lambda g}\), we obtain that \(\{\theta ^{2}(x_{n})\}\) is bounded.

It follows that

which implies that

Next, we show that \(\limsup _{n \rightarrow \infty } \left\langle \psi (x^{*})-x^{*}, x_{n} - x^{*} \right\rangle \le 0\), where \(x^{*}=P_{\Omega }{\psi }(x^{*})\). Since \(\{x_{n}\}\) is bounded, there exists a subsequence \(\left\{ x_{n_j} \right\} \) of \(\left\{ x_{n}\right\} \) satisfying \(x_{n_{j}} \rightharpoonup \omega \) and

By the lower semicontinuity of h, we have:

Therefore, \(h(\omega )=\frac{1}{2}\Vert (I-{\text {prox}}_{\lambda g}) A\omega \Vert ^{2}=0\). Therefore, \(A\omega \) is a fixed point of the proximal mapping of g or equivalently, \(A\omega \) is a minimizer of g. Similarly, from the lower semicontinuity of l, we obtain:

Therefore, \(l(\omega )=\frac{1}{2}\Vert (I-{\text {prox}}_{\lambda \mu _{n} f}) \omega \Vert ^{2}=0\). That is \(\omega \in F({\text {prox}}_{\lambda \mu _{n} f})\). Then \(\omega \) is a minimizer of f. Thus, \(\omega \in \Gamma .\) We observe that

and hence, \(\mu _{n} \rightarrow 0 \text { as } n\rightarrow \infty \).

Next, we show that \(\omega \in F(S)\). From (3.8) and the condition (C1), (C2), we have:

For each \(n\ge 1\), let \(u_{n}:=\alpha _{n}\psi (x_{n})+(1-\alpha _{n})x_{n}\). Then

From the condition (C1), we have:

Observe that

From \(\lim _{n \rightarrow \infty }l(x_{n})=\lim _{n \rightarrow \infty }\frac{1}{2}\Vert (I-{\text {prox}}_{\lambda \mu _{n} f}) x_{n}\Vert ^{2}=0\) and (3.12), we have:

By the nonexpansiveness of \({\text {prox}}_{\lambda \mu _{n} f}\), we have:

From (3.13) and \(\mu _{n}\rightarrow 0\) as \(n \rightarrow \infty \), we have:

Since

from (3.13) and (3.14), we obtain:

From (3.12) and (3.15), we obtain

From

From the definition of \(x_{n}\), we have:

This implies from (3.16), and (3.17) that

Using \(x_{n_j}\rightharpoonup \omega \in H_{1}\) and (3.16), we obtain \(y_{n_j}\rightharpoonup \omega \in H_{1}\). Since \(y_{n_j}\rightharpoonup \omega \in H_{1}\), \(\Vert y_{n}-Sy_{n}\Vert \rightarrow 0\) as \(n\rightarrow \infty \), by Lemma 2.4, we have \(\omega \in F(S)\). Hence, \(\omega \in {\mathcal {F}}= F(S)\cap \Gamma .\) Since \(x_{n_j} \rightharpoonup \omega \) as \(j\rightarrow \infty \) and \(\omega \in {\mathcal {F}}\), by Lemma 2.1, we have:

Now, by the nonexpansiveness of S and \({\text {prox}}_{\lambda \mu _{n} f}\), and from (3.1) and (3.4), we have:

where \(\epsilon _{n}=\alpha _{n}(2-\alpha _{n}-2(1-\alpha _{n})\delta )\) and

Note that \(\mu _{n}\Vert A^{*}(I-{\text {prox}}_{\lambda g})Ax_{n} \Vert =\rho _{n}\dfrac{h(x_{n})+l(x_{n})}{\theta ^{2}(x_{n})}\Vert A^{*}(I-{\text {prox}}_{\lambda g})Ax_{n}\Vert \). Thus, \(\mu _{n}\Vert A^{*}(I-{\text {prox}}_{\lambda g})Ax_{n} \Vert \rightarrow 0\) as \(n\rightarrow \infty \). From the condition (C1), (3.19), (3.20) and Lemma 2.2, we can conclude that the sequence \(\{x_{n}\}\) converges strongly to \(x^{*}\).

Case 2 Assume that \(\{\Vert x_{n}-x^{*}\Vert \}\) is not monotonically decreasing sequence. Then, there exists a subsequence \({n_l}\) of n, such that \(\Vert x_{n_{l}}-x^{*}\Vert <\Vert x_{n_{l}+1}-x^{*}\Vert \) for all \(l\in {\mathbb {N}}.\) Now, we define a positive integer sequence \(\tau (n)\) by:

for all \(n \ge n_{0}\) (for some \(n_{0}\) large enough). By Lemma 2.5, we have \(\tau \) which is a non-decreasing sequence, such that \(\tau (n)\rightarrow \infty \) as \(n\rightarrow \infty \) and

By a similar argument as that of case 1, we can show that

Then, we have:

It follows that

which implies that

Moreover, we have

By the same computation as in Case 1, we have:

where \(\epsilon _{\tau (n)}=\alpha _{\tau (n)}(2-\alpha _{\tau (n)}-2(1-\alpha _{\tau (n)})\delta )\) and

Since \(\Vert x_{\tau (n)}-x^{*}\Vert ^{2} \le \Vert x_{{\tau (n)}+1}-x^{*}\Vert ^{2}\), then by (3.22), we have:

We note that \( \limsup _{n \rightarrow \infty } \xi _{\tau (n)}\le 0.\) Thus, it follows from above inequality that

From (3.22), we also have:

It follows from Lemma 2.5 that

as \(n \rightarrow \infty \). Therefore, \(\{x_{n}\}\) converges strongly to \(x^{*}\). This completes the proof.

\(\square \)

Taking \(\psi (x)\) = u in Algorithm 3.1, we have the following Halpern-type algorithm.

Algorithm 3.3

Given an initial point \(x_{1}\in H_{1}\). Assume that \(x_n\) has been constructed and \(\Vert A^{*}(I-{\text {prox}}_{\lambda g })Ax_{n}\Vert ^{2}+\Vert (I-{\text {prox}}_{\lambda f})x_{n}\Vert ^{2}\ne 0\), and then compute \(x_{n+1}\) by the following iterative scheme:

where the stepsize \(\mu _{n}:=\rho _{n}\dfrac{\left( \frac{1}{2}\Vert (I-{\text {prox}}_{\lambda g}) Ax_{n}\Vert ^{2} \right) +\left( \frac{1}{2}\Vert (I-{\text {prox}}_{\lambda f}) x_{n}\Vert ^{2} \right) }{\Vert A^{*}(I-{\text {prox}}_{\lambda g })Ax_{n}\Vert ^{2}+\Vert (I-{\text {prox}}_{\lambda f})x_{n}\Vert ^{2}}\) with \(0<\rho _{n}<4\) and \(\{\alpha _{n}\}\), \(\{\beta _{n}\} \subset [0,1]\).

The following result is obtained directly by Theorem 3.2.

Corollary 3.4

Let \(H_{1}\) and \(H_{2}\) be two real Hilbert spaces. Let \(f :H_{1}\rightarrow {\mathbb {R}} \cup \{+\infty \}\) and \(g:H_{2} \rightarrow {\mathbb {R}} \cup \{+\infty \} \)be two proper and lower semicontinuous convex functions and \(A : H_{1}\rightarrow H_{2}\) be a bounded linear operator. Let \(S : H_{1}\rightarrow H_{1}\) be a nonexpansive mapping, such that \(\Omega := F(S)\cap \Gamma \ne 0\). If the control sequences \(\{\alpha _{n}\}\), \(\{\beta _{n}\}\) and \(\{\rho _{n}\}\) satisfy the following conditions:

-

(C1)

\(\displaystyle \lim _{n \rightarrow \infty } \alpha _{n}= 0\) and \(\displaystyle \sum _{n=1}^{\infty }\alpha _{n}=\infty \);

-

(C2)

\(\displaystyle 0< \liminf _{n\rightarrow \infty }\beta _{n}\le \limsup _{n\rightarrow \infty }\beta _{n}< 1\);

-

(C3)

\(\varepsilon \le \rho _{n}\le \dfrac{4(1-\alpha _{n})\left( \Vert (I-{\text {prox}}_{\lambda g}) Ax_{n}\Vert ^{2}\right) }{\left( \Vert (I-{\text {prox}}_{\lambda g}) Ax_{n}\Vert ^{2} \right) +\left( \Vert (I-{\text {prox}}_{\lambda f}) x_{n}\Vert ^{2} \right) }-\varepsilon \) for some \(\varepsilon >0.\)

Then, the sequence \(\{x_{n}\}\) defined by Algorithm 3.3 converges strongly to \(z=P_{\Omega }u\).

4 Convergence theorem for split feasibility problems

In this section, we give an application of Theorem 3.2 to the split feasibility problem.

Algorithm 4.1

Given an initial point \(x_{1}\in H_{1}\). Assume that \(x_n\) has been constructed and \(\Vert A^{*}(I-P_{Q})Ax_{n}\Vert ^{2}+\Vert (I-P_{C})x_{n}\Vert ^{2}\ne 0\), and then compute \(x_{n+1}\) by the following iterative scheme:

where the stepsize \(\mu _{n}:=\rho _{n}\dfrac{\left( \frac{1}{2}\Vert (I-P_{Q}) Ax_{n}\Vert ^{2} \right) +\left( \frac{1}{2}\Vert (I-P_{C}) x_{n}\Vert ^{2} \right) }{\Vert A^{*}(I-P_{Q})Ax_{n}\Vert ^{2}+\Vert (I-P_{C})x_{n}\Vert ^{2}}\) with \(0<\rho _{n}<4\) and \(\{\alpha _{n}\}\), \(\{\beta _{n}\} \subset (0,1)\).

We now obtain a strong convergence theorem of Algorithm 4.1 for solving the split feasibility problem and the fixed point problem of nonexpansive mappings as follows:

Theorem 4.2

Let \(H_{1}\) and \(H_{2}\) be two real Hilbert spaces, and let C and Q be nonempty, closed and convex subsets of \(H_{1}\) and \(H_{2}\), respectively. Let \(A : H_{1}\rightarrow H_{2}\) be a bounded linear operator. Let \(\psi :H_{1}\rightarrow H_{1}\) be a contraction mapping with \(\delta \in [0,1)\) and let \(S : H_{1}\rightarrow H_{1}\) be a nonexpansive mapping. Assume that \(\Omega := F(S) \cap C \cap A^{-1}(Q) \ne \emptyset \). If the control sequences \(\{\alpha _{n}\}\), \(\{\beta _{n}\}\) and \(\{\rho _{n}\}\) satisfy the following conditions:

-

(C1)

\(\displaystyle \lim _{n \rightarrow \infty } \alpha _{n}= 0\) and \(\displaystyle \sum _{n=1}^{\infty }\alpha _{n}=\infty \),

-

(C2)

\(\displaystyle 0< \liminf _{n\rightarrow \infty }\beta _{n}\le \limsup _{n\rightarrow \infty }\beta _{n}< 1\);

-

(C3)

\(\varepsilon \le \rho _{n}\le \dfrac{4(1-\alpha _{n})\left( \Vert (I-P_{Q}) Ax_{n}\Vert ^{2}\right) }{\left( \Vert (I-P_{Q}) Ax_{n}\Vert ^{2} \right) +\left( \Vert (I-P_{C}) x_{n}\Vert ^{2} \right) }-\varepsilon \) for some \(\varepsilon >0.\)

Then, the sequence \(\{x_{n}\}\) generated by Algorithm 4.1 converges strongly to \(z=P_{\Omega }{\psi }(z)\).

Proof

Taking \(f=i_{C}\) and \(g=i_{Q}\) in Theorem 3.2 (\(i_{C}\) and \(i_{Q}\) are indicator functions of C and Q, respectively), we have \({\text {prox}}_{\lambda f}=P_{C}\) and \({\text {prox}}_{\lambda g}=P_{Q}\) for all \(\lambda \). We also have \(\mathop {\mathrm {argmin}}f = C\) and \(\mathop {\mathrm {argmin}}g = Q\). Therefore, from Theorem 3.2, we obtain the desired result. \(\square \)

5 Convergence theorem for nonexpansive semigroups

In this section, we prove a strong convergence theorem for finding a common solution of the proximal split feasibility problem and the fixed point problem of nonexpansive semigroups in Hilbert spaces.

Let C be a nonempty, closed, and convex subset of a real Banach space X. A one-parameter family \(S = {S(t) : t \ge 0} : C\rightarrow C\) is said to be a nonexpansive semigroup on C if it satisfies the following conditions:

-

(i)

\(S(0)x=x\) for all \(x\in C\);

-

(ii)

\(S(s+t)x=S(s)S(t)x\) for all \(t,s>0\) and \(x\in C\);

-

(iii)

for each \(x\in C\) the mapping \(t\longmapsto S(t)x\) is continuous;

-

(iv)

\(\Vert S(t)x-S(t)y \Vert \le \Vert x -y\Vert \) for all \(x,y \in C\) and \(t>0.\)

We use F(S) to denote the common fixed point set of the semigroup S, i.e., \(F(S) = \bigcap _{t>0} F(S(t))=\{x\in C: x=S(t)x\}\). It is well known that F(S) is closed and convex (see Browder 1956).

Definition 5.1

(Aleyner and Censor 2005) Let C be a nonempty, closed, and convex subset of a real Hilbert space H, \(S = {S(t) : t > 0}\) be a continuous operator semigroup on C. Then, S is said to be uniformly asymptotically regular (in short, u.a.r.) on C if for all \(h \ge 0\) and any bounded subset K of C, such that

Lemma 5.2

(Shimizu and Takahashi 1997) Let C be a nonempty, closed, and convex subset of a real Hilbert space H, and let K be a bounded, closed, and convex subset of C. If we denote \(S = {S(t) : t > 0}\) is a nonexpansive semigroup on C, such that \(F(S) = \bigcap _{t>0} F(S(t))\ne \emptyset \). For all \(h>0\), the set \(\sigma _{t}(x)=\frac{1}{t}\int _{0}^{t}S(s)x\mathrm{d}s\), then

Let \(H_{1}\) and \(H_{2}\) be two real Hilbert spaces. Let \(f :H_{1}\rightarrow {\mathbb {R}} \cup \{+\infty \}\) and \(g:H_{2} \rightarrow {\mathbb {R}} \cup \{+\infty \} \) be two proper and lower semicontinuous convex functions and \(A : H_{1}\rightarrow H_{2}\) be a bounded linear operator and let \(\psi :H_{1}\rightarrow H_{1}\) be a contraction mapping with \(\delta \in [0,1)\). Let \(S:=\{S(t):t>0\}\) be a u.a.r nonexpansive semigroup on \(H_{1}\).

Algorithm 5.3

Given an initial point \(x_{1}\in H_{1}\). Assume that \(x_n\) has been constructed and \(\Vert A^{*}(I-{\text {prox}}_{\lambda g })Ax_{n}\Vert ^{2}+\Vert (I-{\text {prox}}_{\lambda f})x_{n}\Vert ^{2}\ne 0\), and then compute \(x_{n+1}\) by the following iterative scheme:

where the stepsize \(\mu _{n}:=\rho _{n}\dfrac{\left( \frac{1}{2}\Vert (I-{\text {prox}}_{\lambda g}) Ax_{n}\Vert ^{2} \right) +\left( \frac{1}{2}\Vert (I-{\text {prox}}_{\lambda f}) x_{n}\Vert ^{2} \right) }{\Vert A^{*}(I-{\text {prox}}_{\lambda g })Ax_{n}\Vert ^{2}+\Vert (I-{\text {prox}}_{\lambda f})x_{n}\Vert ^{2}}\) with \(0<\rho _{n}<4\), \(\{\alpha _{n}\}\), \(\{\beta _{n}\} \subset (0,1)\) and \(\{t_{n}\}\) is a positive real divergent sequence.

We now prove a strong convergence result for the problem (1.1) and the fixed point problem of nonexpansive semigroups as follows:

Theorem 5.4

Suppose that \(\bigcap _{t>0} F(S(t))\cap \Gamma \ne 0\). If the control sequences \(\{\alpha _{n}\}\), \(\{\beta _{n}\}\) and \(\{\rho _{n}\}\) satisfy the following conditions:

-

(C1)

\(\displaystyle \lim _{n \rightarrow \infty } \alpha _{n}= 0\) and \(\displaystyle \sum _{n=1}^{\infty }\alpha _{n}=\infty \);

-

(C2)

\(\displaystyle 0< \liminf _{n\rightarrow \infty }\beta _{n}\le \limsup _{n\rightarrow \infty }\beta _{n}< 1\);

-

(C3)

\(\varepsilon \le \rho _{n}\le \dfrac{4(1-\alpha _{n})\left( \Vert (I-{\text {prox}}_{\lambda g}) Ax_{n}\Vert ^{2}\right) }{\left( \Vert (I-{\text {prox}}_{\lambda g}) Ax_{n}\Vert ^{2} \right) +\left( \Vert (I-{\text {prox}}_{\lambda f}) x_{n}\Vert ^{2} \right) }-\varepsilon \) for some \(\varepsilon >0.\)

Then, the sequence \(\{x_{n}\}\) generated by Algorithm 5.3 converges strongly to a point \(x^{*}\in \bigcap _{t>0} F(S(t))\cap \Gamma \).

Proof

By continuing in the same direction as in Theorem 3.2, we have that \(\lim _{n \rightarrow \infty }\Vert y_{n}-S(t_{n})y_{n}\Vert =0.\) Now, we only show that \(\lim _{n \rightarrow \infty }\Vert y_{n}-S(h)y_{n}\Vert =0\) for all \(h\ge 0.\) We observe that

Since \(\{S(t) : t \ge 0\}\) is a u.a.r. nonexpansive semigroup and \(t_{n}\rightarrow \infty \) for all \(h\ge 0\), we have:

for all \(h\ge 0\). This completes the proof. \(\square \)

6 Numerical examples

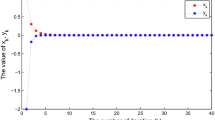

We first give a numerical example in Euclidean spaces to demonstrate the convergence of Algorithm (3.1).

Example 6.1

Let \(H_1 = {\mathbb {R}}^{2}\) and \(H_2 = {\mathbb {R}}^{3}\) with the usual norms. Define a mapping \(S : {\mathbb {R}}^{2} \rightarrow {\mathbb {R}}^{2}\) by:

One can show that S is nonexpansive. Define two functions \(f{:}\, {\mathbb {R}}^{2} \rightarrow (-\infty , \infty ]\) and \(g : {\mathbb {R}}^{3} \rightarrow (-\infty , \infty ]\) by \(f:= 0\), where 0 is a zero operator and

Then, the explicit forms of the proximity operators of f and g can be written by \( {\text {prox}}_{\lambda f} = I\) and \( {\text {prox}}_{1 g} = B^{-1}\), where \(B = \begin{pmatrix} 10 &{} -21 &{} 6 \\ -21 &{} 50 &{} -14 \\ 6 &{} -14 &{} 5 \\ \end{pmatrix} \) (see Combettes and Pesquet 2011b). Let \(A: {\mathbb {R}}^{2} \rightarrow {\mathbb {R}}^{3}\) be defined by:

and let \(\Omega := F(S) \cap \mathop {\mathrm {argmin}}f \cap A^{-1}(\mathop {\mathrm {argmin}}g)\). Now, we rewrite Algorithm (3.1) in the form:

where

Take \(\alpha _{n} = \frac{1}{n+1}\), \(\beta _{n} = \frac{1}{2}\), \(\rho _{n} = \frac{2n}{n+1}\). Consider a contraction \(\psi : {\mathbb {R}}^{2} \rightarrow {\mathbb {R}}^{2}\) defined by \(\psi (x) = \delta x\) for \(0 \le \delta < 1\). We first start with the initial point \(x_{1} = (3, -2)\) and the stopping criterion for our testing process is set as: \(E_{n} := \Vert x_{n} - x_{n-1} \Vert < 10^{-6}\), where \(x_{n} = (a_{n}, b_{n}).\) In Table 1, we show the convergence behavior of Algorithm (6.1) by choosing \(\delta = 0.1\). In Table 2, we also show the number of iterations of Algorithm (6.1) by choosing different constants \(\delta \). –

Remark 6.2

In Example 6.1, by testing the convergence behavior of Algorithm (6.1), we observe that

-

(i)

It converges to a solution, i.e., \(x_{n} \rightarrow (0, 0) \in \Omega \).

-

(ii)

The selection of a contraction \(\psi \) in our algorithm influences the number of iterations of the algorithm. We also note that if \(\psi \equiv 0\) is zero, then our algorithm becomes Algorithm (1.6) (Shehu and Iyiola 2015, Algorithm 1).

Next, we give an example in the infinite-dimensional space \(L^{2}\) as follows.

Example 6.3

Let \(H_1 = L^{2}([0, 1]) = H_{2}\). Let \(x \in L^{2}([0, 1])\). Define a bounded linear operator \(A : L^{2}([0, 1]) \rightarrow L^{2}([0, 1])\) by:

Define a mapping \(S : L^{2}([0, 1]) \rightarrow L^{2}([0, 1])\) by:

Then, S is nonexpansive. Let

where \(w \in L^{2}([0, 1])\), such that \(w(t) = 2t^{3}\), and let

Define two functions \(f, g :L^{2}([0, 1]) \rightarrow (-\infty , \infty ]\) by \(f := i_{C}\) and \(g := i_{Q}\), where \(i_{C}\) and \(i_{Q}\) are indicator functions of C and Q, respectively. We can write the explicit forms of the proximity operators of f and g in the following forms:

and \({\text {prox}}_{\lambda g}x = P_{Q}x = x_{+}\), where \(x_{+}(t) = \max \{x(t), 0\}\) (see Cegielski 2012). Therefore, Algorithm (3.1) can be rewritten in the form:

\(\mu _{n}=\rho _{n}\dfrac{\left( \frac{1}{2}\Vert (I-P_{Q}) Ax_{n}\Vert ^{2} \right) +\left( \frac{1}{2}\Vert (I-P_{C}) x_{n}\Vert ^{2} \right) }{\Vert A^{*}(I-P_{Q})Ax_{n}\Vert ^{2}+\Vert (I-P_{C})x_{n}\Vert ^{2}}\), for finding a common element in the set \(\Omega := F(S) \cap C \cap A^{-1}(Q)\). By choosing the control sequences \(\{\alpha _{n}\}\), \(\{\beta _{n}\}\) and \(\{\rho _{n}\}\) satisfying the conditions (C1)–(C3) in Theorem 3.2, it can guarantee that the sequence \(\{x_{n}\}\) generated by (6.2) converges strongly to \(x^{*} = 0 \in \Omega \).

References

Abbas M, AlShahrani M, Ansari QH, Iyiola OS, Shehu Y (2018) Iterative methods for solving proximal split minimization problems. Numer Algorithms 78:193–215

Aleyner A, Censor Y (2005) Best approximation to common fixed points of a semigroup of nonexpansive operator. J Nonlinear Convex Anal 6(1):137–151

Browder FE (1956) Nonexpansive nonlinear operators in a Banach space. Proc Natl Acad Sci USA 54:1041–1044

Browder FE (1976) Nonlinear operators and nonlinear equations of evolution in Banach spaces. Proc. Symp. Pure Math. 18:78–81

Byrne C (2002) Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl 18(2):441–453

Cegielski A (2012) Iterative methods for fixed point problems in Hilbert spaces. Lecture notes in mathematics, vol 2057. Springer, Heidelberg

Censor Y, Elfving T (1994) A multiprojection algorithm using Bregman projections in a product space. Numer Algorithms 8:221–239

Censor Y, Bortfeld T, Martin B, Trofimov A (2006) A unified approach for inversion problems in intensity-modulated radiation therapy. Phys Med Biol 51:2353–2365

Chang SS, Kim JK, Cho YJ, Sim J (2014) Weak and strong convergence theorems of solutions to split feasibility problem for nonspreading type mapping in Hilbert spaces. Fixed Point Theory Appl 2014:11

Combettes PL, Pesquet JC (2011a) Proximal splitting methods in signal processing. In: Fixed-point algorithms for inverse problems in science and engineering. Springer, New York, pp 185–212

Combettes PL, Pesquet JC (2011b) Proximal splitting methods in signal processing. Fixed Point Algorithms Inverse Probl Sci Eng 49:185–212

Lopez G, Martin-Marquez V, Wang F et al (2012) Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl 28:085004

Mainge PE (2008) Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set Valued Anal 16:899–912

Moudafi A, Thakur BS (2014) Solving proximal split feasibility problems without prior knowledge of operator norms. Optim Lett 8:2099–2110

Qu B, Xiu N (2005) A note on the CQ algorithm for the split feasibility problem. Inverse Probl 21(5):1655–1665

Shehu Y, Iyiola OS (2015) Convergence analysis for proximal split feasibility problems and fixed point problems. J Appl Math Comput 48:221–239

Shehu Y, Iyiola OS (2017a) Convergence analysis for the proximal split feasibility problem using an inertial extrapolation term method. J Fixed Point Theory Appl 19(4):2483–2510

Shehu Y, Iyiola OS (2017b) Strong convergence result for proximal split feasibility problem in Hilbert spaces. Optimization 66(12):2275–2290

Shehu Y, Iyiola OS (2018) Accelerated hybrid viscosity and steepest-descent method for proximal split feasibility problems. Optimization 67(4):475–492

Shehu Y, Cai G, Iyiola OS (2015) Iterative approximation of solutions for proximal split feasibility problems. Fixed Point Theory Appl 2015:123

Shimizu T, Takahashi W (1997) Strong convergence to common fixed points of families of nonexpansive mappings. J Math Anal Appl 211(1):71–83

Takahashi W (2000) Nonlinear functional analysis. Yokohama Publishers, Yokohama

Witthayarat U, Cho YJ, Cholamjiak P (2018) On solving proximal split feasibility problems and applications. Ann Funct Anal 9(1):111–122

Xu HK (2003) An iterative approach to quadric optimization. J Optim Theory Appl 116:659–678

Yao Z, Cho SY, Kang SM et al (2014) A regularized algorithm for the proximal split feasibility problem. Abstr Appl Anal 6:894272

Acknowledgements

The authors would like to thank the referees for valuable comments and suggestions for improving this work. W. Khuangsatung would like to thank Rajamangala University of Technology Thanyaburi and S. Suantai would like to thank Chiang Mai University for the financial support.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Ernesto G. Birgin.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Khuangsatung, W., Jailoka, P. & Suantai, S. An iterative method for solving proximal split feasibility problems and fixed point problems. Comp. Appl. Math. 38, 177 (2019). https://doi.org/10.1007/s40314-019-0956-8

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s40314-019-0956-8