Abstract

Based on subgradient methods and fixed point techniques, we develop a new iteration method for solving variational inequalities on the solution set of Ky Fan inequalities. Convergence of the proposed algorithm to the solution is guaranteed under certain assumptions in the Euclidean space \(\mathcal R^{n}\).

1 Introduction

Let C be a nonempty closed convex subset of the Euclidean space \(\mathcal R^{n}\) with inner product 〈⋅,⋅〉 and norm ∥⋅∥. The Ky Fan inequalities, shortly KF(f, C), are formulated as follows:

$$\text{Find } x^{*}\in C \text{ such that } f(x^{*}, y)\geq 0 \quad \forall y\in C,$$

where f is a bifunction from C × C to \(\mathcal R\) such that f(x, x) = 0 for all x ∈ C, and for each x ∈ C, the function f(x, y) is convex and subdifferentiable with respect to the second argument y on C. The solution set of Problem KF(f, C) is denoted by Sol(f, C). An important example of Ky Fan inequalities is the variational inequality problem VI(F, C). It corresponds to f(x, y) = 〈F(x), y − x〉 for every x, y ∈ C, where \(F: C\to \mathcal R^{n}\) is a continuous mapping, and can be expressed as follows:

$$\text{Find } x^{*}\in C \text{ such that } \langle F(x^{*}), y-x^{*}\rangle \geq 0 \quad \forall y\in C.$$

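In the present work, we are concerned with the variational inequalities on the solution set of Ky Fan inequalities, shortly VIKF(F, f, C), which consist in finding a point \(x^{*}\) such that

$$x^{*}\in \text{Sol}(f, C), \quad \langle F(x^{*}), x-x^{*}\rangle \geq 0 \quad \forall x\in \text{Sol}(f, C),$$
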
where the bifunction \(f: C\times C\to \mathcal R\) and the mapping \(F: C\to \mathcal R^{n}\) are given. Variational inequalities on the solution set of Ky Fan inequalities are special classes of quasivariational inequalities and of bilevel Ky Fan inequalities (see [1, 7, 9, 15, 16, 23]). Moreover, this class of problems includes, as special cases, lexicographic variational inequalities, Ky Fan inequalities, some classes of mathematical programs with equilibrium constraints (see [4, 8, 13, 18, 27]), bilevel minimization problems (see [2, 25]), variational inequalities (see [14]), minimum-norm problems over the solution set of variational inequalities (see [6, 30]), and bilevel convex programming models (see [26, 29]). These problems have recently attracted the interest of many researchers because they have extensive applications in areas such as image recovery, signal processing, and network resource allocation (see for instance [3, 9, 12, 19, 27]). The merit of this formulation is that it unifies all these particular problems in a convenient way.

A particularly interesting case occurs when F(x) = x for all x ∈ C: the problem then reduces to a minimum-norm problem over the solution set of the Ky Fan inequalities KF(f, C), namely

$$\text{Find } x^{*}=\text{Pr}_{\text{Sol}(f, C)}(0),$$

where \(\text{Pr}_{\text{Sol}(f, C)}(0)\) is the projection of 0 onto Sol(f, C). To solve Problem VIKF(F, f, C), that is, to find \(x^{*}\in \text{Sol}(f, C)\) such that

$$\langle F(x^{*}), x-x^{*}\rangle \geq 0 \quad \forall x\in \text{Sol}(f, C),$$

where F is Lipschitz continuous and strongly monotone from \(\mathcal R^{n}\) to \(\mathcal R^{n}\) and the bifunction f is defined through a monotone mapping \(G: \mathcal R^{n}\to \mathcal R^{n}\) and a lower semicontinuous convex function \(\varphi : \mathcal R^{n}\to (-\infty , +\infty ]\), Maingé [21] proposed a projected subgradient method and showed the convergence of the iteration sequences under appropriate conditions imposed on the parameters.

Anh et al. [7] introduced an extragradient algorithm for solving VIKF(F, f, C) with f(x, y) = 〈G(x), y − x〉 for every x, y ∈ C. Their algorithm consists of roughly two loops. At each iteration k of the outer loop, they apply the extragradient method to the lower variational inequality problem; then, starting from the iterate obtained in the outer loop, they compute an \(\epsilon_{k}\)-solution of Problem VI(G, C). The convergence of the algorithm crucially depends on the starting point \(x^{0}\) and on the parameters chosen in advance. Under the assumptions that F is strongly monotone and Lipschitz continuous, that G is pseudomonotone and Lipschitz continuous on C, and that the parameter sequences are chosen appropriately, they showed that the two iterative sequences \(\{x^{k}\}\) and \(\{z^{k}\}\) converge to the same point \(x^{*}\), which is a solution of the problem. However, at each iteration of the outer loop, the scheme requires computing an approximate solution of a variational inequality problem.

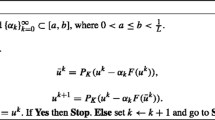

There exist other solution methods for lexicographic variational inequalities when the cost operator has some monotonicity property (see [13, 18, 23, 26]). All of these methods require solving auxiliary variational inequalities. In order to avoid this requirement, we combine the contractivity of the mapping T := I − μF from [16], used for solving variational inequalities VI(F, C) under suitable conditions on F and μ, with the extragradient method proposed by Korpelevich [20]. The strong convergence of the proposed sequences is then considered in a real Hilbert space \(\mathcal H\). Let Fix(T) be the fixed point set of a demicontractive mapping T: C → C. For solving VI(F, Sol(f, C) ∩ Fix(T)) in a real Hilbert space, Maingé and Moudafi [22], using the hybrid steepest descent method for fixed point problems introduced by Yamada and Ogura [28], proposed the following iterative process:

where I stands for the identity mapping on \(\mathcal H\), \(\lambda \in (0, 1)\), \(\{\alpha _{k}\}\subset [0, 1)\), and \(\{r_{k}\}\subset (0, \infty )\). Under certain conditions on the parameters, the sequence \(\{x^{k}\}\) converges strongly to a solution of Problem VI(F, Sol(f, C) ∩ Fix(T)). By replacing the computation of \(z^{k}\) in the Maingé–Moudafi algorithm with an extragradient iteration, Vuong et al. [24] recently proposed extragradient-viscosity methods for solving Problem VI(F, Sol(f, C) ∩ Fix(T)). The strong convergence of the iterates generated by these algorithms is obtained by combining a viscosity approximation method with an extragradient method, where the basic iteration comes directly from the extragradient method, under Lipschitz-type continuity and pseudomonotonicity assumptions on the equilibrium bifunction. In this paper, we are interested in finding a solution of the variational inequalities on the solution set of Ky Fan inequalities VIKF(F, f, C), where F and f satisfy the following standard conditions:

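(A1) For each x ∈ C, f(x, ⋅) is lower semicontinuous and convex on C. If \(\{x^{k}\}\subset C\) is bounded and \(\epsilon_{k}\searrow 0\) as \(k\to \infty \), then the sequence \(\{w^{k}\}\) with \(w^{k}\in \partial_{2}^{\epsilon_{k}}f(x^{k}, x^{k})\) is bounded, where \(\partial_{2}^{\epsilon_{k}}f(x^{k}, x^{k})\) stands for the \(\epsilon_{k}\)-subdifferential of the convex function \(f(x^{k}, \cdot)\) at \(x^{k}\):

$$\partial_{2}^{\epsilon_{k}}f(x^{k}, x^{k}):=\left\{w\in \mathcal R^{n} : f(x^{k}, y)-f(x^{k}, x^{k})\geq \langle w, y-x^{k}\rangle -\epsilon_{k}\quad \forall y\in C\right\};$$
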
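(A2) f is pseudomonotone on C with respect to every solution \(x^{*}\) of Problem VIKF(F, f, C), that is, \(f(x^{*}, x)\geq 0\) implies \(f(x, x^{*})\leq 0\) for all x ∈ C, and f satisfies the following condition, called the strict paramonotonicity property:

$$x\in C,\ f(x, x^{*})=0 \;\Longrightarrow\; x\in \text{Sol}(f, C);$$
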
(A3) For each x ∈ C, f(⋅, x) is upper semicontinuous on C;

(A4) The solution set Sol(f, C) of Problem KF(f, C) is nonempty;

(A5) F is L-Lipschitz continuous and β-strongly monotone.
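As a worked illustration of the 𝜖-subdifferential in (A1) (our own example, not an additional assumption), consider the variational-inequality bifunction f(x, y) = 〈F(x), y − x〉. Since f(x, x) = 0 and f(x, ⋅) is affine, for every 𝜖 ≥ 0 and every y ∈ C,

$$f(x, y)-f(x, x)=\langle F(x), y-x\rangle \geq \langle F(x), y-x\rangle -\epsilon ,$$

so \(F(x)\in \partial_{2}^{\epsilon}f(x, x)\). In the variational-inequality case, the vector \(F(x^{k})\) is therefore always an element of \(\partial_{2}^{\epsilon_{k}}f(x^{k}, x^{k})\).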

It is well known that if the cost mapping F of the variational inequality VI(F, C) is continuous and strongly monotone on the nonempty closed convex subset \(C\subset \mathcal H\), then Problem VI(F, C) has a unique solution (see [12, 14]). When f is pseudomonotone and Sol(f, C) ≠ ∅, Sol(f, C) is convex (see [10]). Thus, under Conditions (A2), (A4), and (A5), Problem VIKF(F, f, C) has a unique solution. The purpose of this paper is to propose an algorithm for directly solving variational inequalities on the solution set of Ky Fan inequalities by using the projected subgradient method and fixed point techniques.

The rest of this paper is divided into three sections. In Section 2, we recall some properties of monotone operators and of the metric projection onto a closed convex set, and introduce in detail a new algorithm for solving Problem VIKF(F, f, C). Section 3 is devoted to the convergence analysis of the algorithm. Finally, in Section 4, we consider a numerical example to illustrate the convergence of the algorithm.

2 Preliminaries

Let C be a nonempty closed convex subset of the Euclidean space \(\mathcal R^{n}\). For each \(x\in \mathcal R^{n}\), there exists a unique point in C, denoted by \(\text{Pr}_{C}(x)\), satisfying

$$\|x-\text{Pr}_{C}(x)\|\leq \|x-y\| \quad \forall y\in C.$$

Then, \(\text{Pr}_{C}\) is called the metric projection of \(\mathcal R^{n}\) onto C. It is well known that \(\text{Pr}_{C}\) satisfies the following properties:

(a) \(\|\text {Pr}_{C}(x)-\text {Pr}_{C}(y)\|\leq \|x-y\|~ \forall x,y\in \mathcal R^{n};\)

(b) \(\|\text {Pr}_{C}(x)-\text {Pr}_{C}(y)\|^{2}\leq \langle \text {Pr}_{C}(x)-\text {Pr}_{C}(y), x-y\rangle ~ \forall x,y\in \mathcal R^{n};\)

(c) \(\langle x-\text {Pr}_{C}(x), y-\text {Pr}_{C}(x)\rangle \leq 0~ \forall y\in C, x\in \mathcal R^{n};\)

(d) \(\|\text {Pr}_{C}(x)-y\|^{2}\leq \|x-y\|^{2}-\|\text {Pr}_{C}(x)-x\|^{2}~ \forall y\in C, x\in \mathcal R^{n};\)

(e) \(\|\text {Pr}_{C}(x)-\text {Pr}_{C}(y)\|^{2}\leq \|x-y\|^{2}-\|\text {Pr}_{C}(x)-x+y-\text {Pr}_{C}(y)\|^{2}~ \forall x,y\in \mathcal R^{n}.\)
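For a box \(C=\{z : l\leq z\leq u\}\), the metric projection is componentwise clipping, so properties (a)–(c) are easy to check numerically. The following Python/NumPy sketch is only an illustration: the box bounds, the random seed, and the helper name `project_box` are our own choices, not part of the paper.

```python
import numpy as np

def project_box(x, lower, upper):
    """Metric projection onto the box C = {z : lower <= z <= upper}: clip each coordinate."""
    return np.clip(x, lower, upper)

rng = np.random.default_rng(0)
lower, upper = -np.ones(5), np.ones(5)
tol = 1e-12  # slack for floating-point rounding

for _ in range(1000):
    x = 3.0 * rng.standard_normal(5)
    y = 3.0 * rng.standard_normal(5)
    px, py = project_box(x, lower, upper), project_box(y, lower, upper)
    # (a) nonexpansiveness
    assert np.linalg.norm(px - py) <= np.linalg.norm(x - y) + tol
    # (b) firm nonexpansiveness
    assert np.dot(px - py, px - py) <= np.dot(px - py, x - y) + tol
    # (c) variational characterization, tested at a random point z of C
    z = rng.uniform(lower, upper)
    assert np.dot(x - px, z - px) <= tol
```

For a general closed convex C, the projection has no closed form and is computed by solving a convex quadratic program, as is done in Section 4.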

Now, we list some well-known definitions which will be used in our analysis.

Definition 2.1

Let C be a nonempty subset in \(\mathcal R^{n}\). The operator \(\varphi : C \to \mathcal R^{n}\) is said to be

(i) γ-strongly monotone on C if for each x, y ∈ C,

$$\langle \varphi(x)-\varphi(y), x-y\rangle\geq \gamma \|x-y\|^{2};$$

(ii) pseudomonotone on C if for each x, y ∈ C,

$$\langle \varphi(y), x-y\rangle\geq 0 \Rightarrow \langle \varphi(x), x-y\rangle\geq 0;$$

(iii) Lipschitz continuous with constant L > 0 (shortly, L-Lipschitz continuous) on C if for each x, y ∈ C,

$$\|\varphi(x)-\varphi(y)\|\leq L\|x-y\|.$$

If φ: C → C and L = 1, then φ is called nonexpansive on C.

The bifunction \(f: C\times C \to \mathcal R\) is said to be

(iv) pseudomonotone on C × C if for each x, y ∈ C,

$$f(x, y)\geq 0\Rightarrow f(y, x)\leq 0.$$
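For an affine operator φ(x) = Mx + q, the type of operator used in Section 4, the constants in (i) and (iii) can be computed directly: since 〈φ(x) − φ(y), x − y〉 = (x − y)ᵀM(x − y) only involves the symmetric part of M, the best γ is the smallest eigenvalue of (M + Mᵀ)/2, and the best L is the spectral norm of M. The Python/NumPy sketch below uses an arbitrary 2 × 2 matrix and a hypothetical helper `affine_constants` to check both inequalities on random pairs.

```python
import numpy as np

def affine_constants(M):
    """Return (gamma, L) for x -> M x + q: strong-monotonicity modulus and Lipschitz constant."""
    gamma = np.linalg.eigvalsh(0.5 * (M + M.T)).min()  # smallest eigenvalue of the symmetric part
    L = np.linalg.norm(M, 2)                            # spectral norm = largest singular value
    return gamma, L

M = np.array([[2.0, 1.0], [-1.0, 3.0]])   # symmetric part is diag(2, 3), so gamma = 2
gamma, L = affine_constants(M)

rng = np.random.default_rng(1)
for _ in range(1000):
    d = rng.standard_normal(2)             # d = x - y; the constant vector q cancels out
    # (i) strong monotonicity with modulus gamma
    assert d @ (M @ d) >= gamma * (d @ d) - 1e-10
    # (iii) Lipschitz continuity with constant L
    assert np.linalg.norm(M @ d) <= L * np.linalg.norm(d) + 1e-10
```

The skew-symmetric part of M contributes nothing to γ but does increase L, which narrows the interval (0, 2β/L²) appearing in Lemma 2.2.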

In the case f(x, y) = 0 for all x, y ∈ C, we have Sol(f, C) = C, so Problem VIKF(F, f, C) is the variational inequality VI(F, C), and Algorithm 1 becomes the basic projection algorithm (see Algorithm 12.1.1 in [14]).
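For orientation, the classical basic projection method for VI(F, C) iterates \(x^{k+1}=\text{Pr}_{C}(x^{k}-\mu F(x^{k}))\). When F is β-strongly monotone and L-Lipschitz and \(0<\mu<2\beta/L^{2}\), the map \(x\mapsto \text{Pr}_{C}(x-\mu F(x))\) is a contraction (Lemma 2.2 with λ = 1 together with property (a)), so the iterates converge to the unique solution. The Python sketch below uses a constant step μ, which is our simplification rather than the parameter choice of Algorithm 1; the affine F, the box C = [0, 1]², and the helper name `basic_projection` are illustrative assumptions.

```python
import numpy as np

def basic_projection(F, project, x0, mu, tol=1e-12, max_iter=10_000):
    """Basic projection method x^{k+1} = Pr_C(x^k - mu * F(x^k)) with a constant step mu."""
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        x_next = project(x - mu * F(x))
        if np.linalg.norm(x_next - x) <= tol:   # (approximate) fixed point of the projection map
            return x_next, k + 1
        x = x_next
    return x, max_iter

# Illustrative data: F(x) = M x + q on C = [0, 1]^2.
M = np.array([[2.0, 1.0], [-1.0, 3.0]])
q = np.array([-4.0, 1.0])
F = lambda x: M @ x + q
project = lambda x: np.clip(x, 0.0, 1.0)

beta = np.linalg.eigvalsh(0.5 * (M + M.T)).min()   # strong-monotonicity modulus (= 2)
L = np.linalg.norm(M, 2)                           # Lipschitz constant
mu = beta / L**2                                   # a step inside (0, 2*beta/L**2)

x_star, iters = basic_projection(F, project, np.zeros(2), mu)
```

Here the iterates reach x* = (1, 0): at this point F(x*) = (−2, 0), so 〈F(x*), y − x*〉 = 2(1 − y₁) ≥ 0 for every y ∈ C, which confirms that x* solves VI(F, C).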

To investigate the convergence of this algorithm, we recall the following technical lemmas which will be used in the sequel.

Lemma 2.2 (See [16])

Let \(A: \mathcal R^{n}\to \mathcal R^{n}\) be β-strongly monotone and L-Lipschitz continuous, let λ ∈ (0, 1], and let \(\mu \in (0, \frac {2\beta }{L^{2}})\). Then, the mapping T(x) := x − λμA(x), \(x\in \mathcal R^{n}\), satisfies the inequality

$$\|T(x)-T(y)\|\leq (1-\lambda \tau )\|x-y\| \quad \forall x,y\in \mathcal R^{n},$$

where \(\tau =1-\sqrt {1-\mu (2\beta -\mu L^{2})}\in (0, 1]\).
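Lemma 2.2 can be checked numerically for a linear operator A(x) = Mx. The Python/NumPy sketch below computes τ and tests the contraction estimate for several values of λ; the matrix, the seed, and the choice μ = β/L² (one admissible value) are illustrative assumptions.

```python
import numpy as np

M = np.array([[2.0, 1.0], [-1.0, 3.0]])           # A(x) = M x, an illustrative linear operator
beta = np.linalg.eigvalsh(0.5 * (M + M.T)).min()  # strong-monotonicity modulus (= 2 here)
L = np.linalg.norm(M, 2)                          # Lipschitz constant
mu = beta / L**2                                  # one admissible value in (0, 2*beta/L**2)
tau = 1.0 - np.sqrt(1.0 - mu * (2.0 * beta - mu * L**2))

def T(x, lam):
    """The mapping T(x) = x - lam * mu * A(x) of Lemma 2.2."""
    return x - lam * mu * (M @ x)

rng = np.random.default_rng(2)
for lam in (1.0, 0.5, 0.1):
    for _ in range(500):
        x, y = rng.standard_normal(2), rng.standard_normal(2)
        lhs = np.linalg.norm(T(x, lam) - T(y, lam))
        assert lhs <= (1.0 - lam * tau) * np.linalg.norm(x - y) + 1e-12
```

With μ = β/L², the formula simplifies to \(\tau =1-\sqrt{1-\beta^{2}/L^{2}}\); smaller λ gives a contraction factor 1 − λτ closer to 1, which is why the factor \(\bar\xi_{k}=1-\tau \xi_{k}\) appears in Claim 1.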

Lemma 2.3 (See [30])

Let \(\{a_{k}\}\) and \(\{\delta_{k}\}\) be sequences of nonnegative real numbers such that

$$a_{k+1}\leq a_{k}+\delta _{k} \quad \forall k\geq 0,$$

where \(\{\delta_{k}\}\) satisfies \({\sum }_{k=0}^{\infty }\delta _{k}<\infty \). Then, the limit \(\lim _{k\to \infty }a_{k}\) exists.

3 Convergence Results

In this section, we state and prove the convergence of the sequences generated by Algorithm 1.

Theorem 3.1

Let C be a nonempty closed convex subset of \(\mathcal R^{n}\). Let the mapping \(F: C\to \mathcal R^{n}\) and the bifunction \(f: C\times C\to \mathcal R\) satisfy Assumptions (A1)–(A5). Then, the sequences \(\{x^{k}\}\) and \(\{y^{k}\}\) in Algorithm 1 converge to the same point, which is the unique solution of Problem VIKF(F, f, C).

Suppose that \(x^{*}\) is the unique solution of Problem VIKF(F, f, C). The proof of the theorem is divided into four claims.

Claim 1

where \(\tau =1-\sqrt {1-\mu (2\beta -\mu L^{2})}, \bar \xi _{k}:=1-\tau \xi _{k}, S_{k}=\frac {2\beta _{k}\epsilon _{k}\bar {\xi _{k}}}{\lambda }+{\beta _{k}^{2}}\bar {\xi _{k}}+\frac {\xi _{k}\tau \mu ^{2}}{\tau ^{2}}\|F(x^{*})\|^{2}\) and there exists the limit \(\lim _{k\to \infty }\|x^{k}-x^{*}\|^{2}=c\).

Proof

For each k = 0, 1, ⋯, set \(g_{k}(x):=x-\xi_{k}\mu F(x)\) for all x ∈ C. By Lemma 2.2 (with A = F and λ = ξ_k), we have

By using the nonexpansiveness of the projection and the triangle inequality, we get that

This implies that

Since \(y^{k}=\text{Pr}_{C}(x^{k}-\alpha_{k}w^{k})\) and \(x^{*}\in \text{Sol}(F, f, C)\subseteq C\), we have

Combining this and (3.2), we obtain (3.1).
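The two estimates combined here can be sketched as follows. This is a hedged reconstruction: since Algorithm 1 is only available as an image, we assume the updates \(y^{k}=\text{Pr}_{C}(x^{k}-\alpha_{k}w^{k})\) and \(x^{k+1}=\text{Pr}_{C}(g_{k}(y^{k}))\), the step size \(\alpha_{k}=\beta_{k}/\max\{\lambda_{k},\|w^{k}\|\}\) with \(\lambda_{k}\geq \lambda >0\), and \(w^{k}\in \partial_{2}^{\epsilon_{k}}f(x^{k}, x^{k})\). Under these assumptions, with \(t:=\tau \xi_{k}\in (0,1]\) and \(b:=\mu \|F(x^{*})\|/\tau\), property (a), Lemma 2.2, and the convexity of \(s\mapsto s^{2}\) give

$$\|x^{k+1}-x^{*}\|\leq \|g_{k}(y^{k})-g_{k}(x^{*})\|+\xi_{k}\mu \|F(x^{*})\|\leq (1-t)\|y^{k}-x^{*}\|+t\,b,$$

$$\|x^{k+1}-x^{*}\|^{2}\leq \bar\xi_{k}\|y^{k}-x^{*}\|^{2}+\frac{\xi_{k}\tau \mu^{2}}{\tau^{2}}\|F(x^{*})\|^{2},$$

while \(f(x^{k}, x^{*})\geq \langle w^{k}, x^{*}-x^{k}\rangle -\epsilon_{k}\), \(\alpha_{k}\|w^{k}\|\leq \beta_{k}\), and \(\alpha_{k}\leq \beta_{k}/\lambda\) give

$$\|y^{k}-x^{*}\|^{2}\leq \|x^{k}-x^{*}\|^{2}-2\alpha_{k}\langle w^{k}, x^{k}-x^{*}\rangle +\alpha_{k}^{2}\|w^{k}\|^{2}\leq \|x^{k}-x^{*}\|^{2}+2\alpha_{k}f(x^{k}, x^{*})+\frac{2\beta_{k}\epsilon_{k}}{\lambda}+\beta_{k}^{2}.$$

Multiplying the last inequality by \(\bar\xi_{k}\) and inserting it into the previous one produces exactly the three terms of \(S_{k}\).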

On the other hand, since \(x^{*}\in \text{Sol}(F, f, C)\subseteq \text{Sol}(f, C)\), i.e., \(f(x^{*}, x)\geq 0\) for all x ∈ C, and \(x^{k}\in C\), the pseudomonotonicity of f with respect to \(x^{*}\) gives \(f(x^{k}, x^{*})\leq 0\). Then, using \(\bar \xi _{k}\in (0, 1]\) and (3.1), we get

Then, we have

where \(a_{k}:=\|x^{k}-x^{*}\|^{2}\) and \(\delta_{k}:=S_{k}\). By assumptions (2.1), we have

and hence \({\sum }_{k=0}^{\infty }S_{k}<\infty \). By Lemma 2.3, the limit \(c:=\lim _{k\to \infty }\|x^{k}-x^{*}\|^{2}\) exists. □

Claim 2

\(\limsup \limits _{k\to \infty }f(x^{k}, x^{*})=0\) and \(\lim \limits _{k\to \infty }\|y^{k}-x^{k}\|=0\).

Proof

Since \(-f(x^{k}, x^{*})\geq 0\) and \(\bar \xi _{k}\in (0, 1]\) for every k, Claim 1 yields

Then, we have

and hence,

Then, since \({\sum }_{k=0}^{\infty }\beta _{k}=\infty \) and \(-f(x^{k}, x^{*})\geq 0\), we deduce that \(\limsup \limits _{k\to \infty }f(x^{k}, x^{*})=0\), as desired.

Moreover, by the definition of \(y^{k}\), the fact that \(x^{k}\in C\), and the nonexpansiveness of \(\text{Pr}_{C}\), we have

So, \(\lim _{k\to \infty }\|y^{k}-x^{k}\|=0\). □

Claim 3

Suppose that \(\{x^{k_{j}}\}\) is a subsequence of \(\{x^{k}\}\) such that

and \(\bar x\) is a limit point of \(\{x^{k_{j}}\}\). Then, \(\bar x\in \text {Sol}(f, C)\).

Proof

For simplicity of notation, we may assume without loss of generality that \(x^{k_{j}}\) converges to \(\bar x\) as \(j\to \infty \). Since \(f(\cdot, x^{*})\) is upper semicontinuous, Claim 2 and (3.3) give

On the other hand, since \(x^{*}\in \text{Sol}(f, C)\) and \(\bar x\in C\), we have \(f(x^{*}, \bar x)\geq 0\), and the pseudomonotonicity of f with respect to \(x^{*}\) yields \(f(\bar x, x^{*})\leq 0\). Thus, \(f(\bar x, x^{*})=0\). Then, by Assumption (A2), we deduce that \(\bar x\) is a solution of KF(f, C) as well. □

Claim 4

The sequences \(\{x^{k}\}\) and \(\{y^{k}\}\) converge to the unique solution \(x^{*}\in \text{Sol}(F, f, C)\).

Proof

By Claims 2 and 3, if the subsequence \(\{x^{k_{j}}\}\) converges to \(\bar x\), then \(\bar x\in \text {Sol}(f, C)\) and also \(y^{k_{j}}\rightharpoonup \bar x\). Arguing as in Claim 1, we also have

Combining this and \(\lim _{k\to \infty }\|x^{k}-x^{*}\|^{2}=c\), we obtain

From \(y^{k_{j}}\rightharpoonup \bar x\in \text{Sol}(f, C)\) as \(j\to \infty \), it follows that

Since F is β-strongly monotone on C, we get

Assume, to get a contradiction, that c > 0, and choose \(\epsilon =\frac {1}{2} \beta c\). It follows from (3.5) that there exists \(k_{0}\) such that the inequality

holds for all \(k\geq k_{0}\). Moreover,

where \(M:=\sup \{\mu ^{2}\|F(y^{k})\|^{2}\,:\, k=0,1,\dots \}<\infty \). Then, using (3.4), we get

After summation, we can write

Passing to the limit as \(k\to \infty \), we get \({\sum }_{j=k_{0}}^{\infty }\beta _{j} <\infty \), which contradicts the assumption \({\sum }_{j=k_{0}}^{\infty }\beta _{j} =\infty \) in (2.1). Consequently, c = 0, so \(x^{k}\to x^{*}\), and, since \(\|y^{k}-x^{k}\|\to 0\) by Claim 2, also \(y^{k}\to x^{*}\). □

Now, we use Algorithm 1 to solve the variational inequality VI(F, C) on the solution set of the variational inequality VI(G, C), called the lexicographic variational inequality problem and denoted shortly by LVI(F, G, C). In this case, f(x, y) = 〈G(x), y − x〉 for all x, y ∈ C and

Thus, Algorithm 1 and its convergence analysis lead to the following result.

When f(x, y) = 〈G(x), y − x〉 for all x, y ∈ C, we obtain the following application of Theorem 3.1. We suppose that the mappings F and G satisfy the following five conditions:

(B1) G is upper semicontinuous on C;

(B2) G is pseudomonotone on C with respect to every solution \(x^{*}\) of Problem LVI(F, G, C) and satisfies the following condition, called the strict paramonotonicity property:

$$x\in C,\ \langle G(x), x^{*}-x\rangle =0 \;\Longrightarrow\; x \text{ solves VI}(G, C);$$

(B3) For each x ∈ C, 〈G(⋅), x − ⋅〉 is upper semicontinuous on C;

(B4) F is L-Lipschitz continuous and β-strongly monotone;

(B5) The solution set of Problem LVI(F, G, C) is nonempty.

Theorem 3.2

Let C be a nonempty closed convex subset of \(\mathcal R^{n}\). Let the mappings \(F: C\to \mathcal R^{n}\) and \(G: C\to \mathcal R^{n}\) satisfy Assumptions (B1)–(B5). Then, the sequences \(\{x^{k}\}\) and \(\{y^{k}\}\) in Algorithm 2 converge to the same point \(x^{*}\), which is the unique solution of Problem LVI(F, G, C).

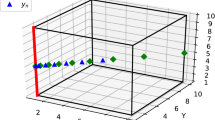
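Because the algorithm boxes are not reproduced in this text, the following Python sketch is only a hypothetical reconstruction of an Algorithm-1-type iteration for LVI(F, G, C); it should not be read as the authors' implementation. It assumes the subgradient choice \(w^{k}=G(x^{k})\), the step \(\alpha_{k}=\beta_{k}/\max\{\lambda_{k},\|w^{k}\|\}\), the update \(y^{k}=\text{Pr}_{C}(x^{k}-\alpha_{k}w^{k})\) (which appears in the proof of Claim 1), the update \(x^{k+1}=\text{Pr}_{C}(y^{k}-\xi_{k}\mu F(y^{k}))\) (suggested by the map \(g_{k}\) in that proof), and the parameter sequences of Example 4.1. The function name `lvi_sketch` and the toy data are our own.

```python
import numpy as np

def lvi_sketch(F, G, project, x0, mu, n_iter=5000):
    """Hypothetical Algorithm-1-type iteration for LVI(F, G, C) with f(x, y) = <G(x), y - x>.

    Assumed updates (not taken from the algorithm box):
        w^k     = G(x^k)
        alpha_k = beta_k / max(lambda_k, ||w^k||)
        y^k     = Pr_C(x^k - alpha_k * w^k)
        x^{k+1} = Pr_C(y^k - xi_k * mu * F(y^k))
    with lambda_k, beta_k, xi_k as listed in Example 4.1.
    """
    x = np.asarray(x0, dtype=float)
    y = x.copy()
    for k in range(n_iter):
        lam_k, beta_k, xi_k = 1.0 + 10.0 / (k + 2), 1.0 / (k + 1), 1.0 / (k**2 + 1)
        w = G(x)
        alpha_k = beta_k / max(lam_k, np.linalg.norm(w))
        y = project(x - alpha_k * w)          # subgradient step on the lower-level problem
        x = project(y - xi_k * mu * F(y))     # small step on the upper-level operator F
    return x, y

# Toy data: VI(G, C) on C = [0, 1]^2 with G(x) = x - a has the unique solution Pr_C(a) = (1, 0).
a = np.array([2.0, -1.0])
G = lambda x: x - a
F = lambda x: x                     # 1-strongly monotone and 1-Lipschitz, so mu = 1 is in (0, 2)
project = lambda x: np.clip(x, 0.0, 1.0)
x_last, y_last = lvi_sketch(F, G, project, x0=np.array([0.5, 0.5]), mu=1.0)
```

In this toy instance, VI(G, C) has a unique solution, so (1, 0) is also the solution of LVI(F, G, C) whatever F is, and both returned iterates end up very close to it. The example therefore only checks that the iteration runs and stays in C; it does not exercise the selection role of F.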

4 Numerical Illustration

In this section, we consider a numerical example to illustrate the convergence of Algorithm 1. The computations are performed in Matlab R2013a on a laptop with an Intel(R) Core(TM) i3-3110M CPU @ 2.40 GHz and 4 GB of RAM. The projections in Algorithm 1 are computed by solving strongly convex quadratic programs with the quadratic-programming solver of the Matlab Optimization Toolbox.

Example 4.1

Let us take \(\mathcal H:=\mathcal R^{5}\) and F(x) = Mx + q, with the matrix M generated as suggested in [17] and later used in [22]:

$$M=AA^{T}+B+D,$$

where A is a 5 × 5 matrix, B is a 5 × 5 skew-symmetric matrix, D is a 5 × 5 diagonal matrix, and q is a vector in \(\mathcal R^{5}\). The feasible set C and the equilibrium bifunction f are defined by

The pseudomonotone bifunction f(x, y) was suggested by Bnouhachem [11] and later used in [5]. Then, we have

and hence,

The initial data are as follows:

- Every entry of A, B, and q is randomly and uniformly generated from (−3, 3), and every diagonal entry of D is randomly generated from (0, 1);

- An iterate is called an 𝜖-solution if \(\max \{\|y^{k}-x^{k}\|, \|x^{k+1}-x^{k}\|\}\leq \epsilon \);

- The parameters are \(\mu =\frac {1}{\|M\|}\in \left (0, \frac {2\beta }{L^{2}}\right )=\left (0, \frac {2}{\|M\|}\right ), \lambda _{k}=1+\frac {10}{k+2}, \beta _{k}=\frac {1}{k+1}, \xi _{k}=\frac {1}{k^{2}+1}\) for all k ≥ 0;

- The starting point is \(x^{0}=(1,2,0,0,1)^{T}\) or \(x^{0}=(1,1,1,1,0)^{T}\).
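The data of Example 4.1 can be generated along the following lines. This Python/NumPy sketch assumes the construction M = AAᵀ + B + D with B built as a skew-symmetric matrix from uniform entries, reads ‖M‖ as the spectral norm, and uses an arbitrary random seed, since the paper's random data are not reported. The helper names `schedules` and `is_eps_solution` are hypothetical. The sketch computes β and L for F(x) = Mx + q, records in `mu_ok` whether μ = 1/‖M‖ lies in (0, 2β/L²) for the drawn matrix, and encodes the parameter sequences and the stopping test listed above.

```python
import numpy as np

rng = np.random.default_rng(0)   # arbitrary seed; the paper's random data are not reported
n = 5

# Assumed Harker-Pang-type construction M = A A^T + B + D.
A = rng.uniform(-3.0, 3.0, size=(n, n))
S = rng.uniform(-3.0, 3.0, size=(n, n))
B = np.triu(S, 1) - np.triu(S, 1).T             # skew-symmetric: B^T = -B
D = np.diag(rng.uniform(0.0, 1.0, size=n))      # positive diagonal entries
q = rng.uniform(-3.0, 3.0, size=n)
M = A @ A.T + B + D

beta = np.linalg.eigvalsh(0.5 * (M + M.T)).min()   # strong-monotonicity modulus of F(x) = Mx + q
L = np.linalg.norm(M, 2)                            # Lipschitz constant of F (spectral norm)
mu = 1.0 / L
mu_ok = bool(0.0 < mu < 2.0 * beta / L**2)          # step condition of Lemma 2.2 for this draw

def schedules(k):
    """Parameter sequences (lambda_k, beta_k, xi_k) of Example 4.1."""
    return 1.0 + 10.0 / (k + 2), 1.0 / (k + 1), 1.0 / (k**2 + 1)

def is_eps_solution(x_k, y_k, x_next, eps):
    """Stopping test max{||y^k - x^k||, ||x^{k+1} - x^k||} <= eps."""
    return max(np.linalg.norm(y_k - x_k), np.linalg.norm(x_next - x_k)) <= eps
```

Since \(x^{T}Bx=0\) for skew-symmetric B, we have \(x^{T}Mx=\|A^{T}x\|^{2}+x^{T}Dx>0\) for x ≠ 0, which is why the symmetric part of M is positive definite and β > 0.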

The matrix M is positive definite, so F is strongly monotone and Lipschitz continuous on C. The numerical results are shown in Tables 1 and 2.

From the preliminary numerical results reported in the tables, we observe that:

(a) As with other methods for equilibrium problems, such as the proximal point algorithm, the extragradient method, and the gap function method, the speed of Algorithm 1 depends strongly on the starting point \(x^{0}\);

(b) Algorithm 1 is quite sensitive to the choice of the parameters \(\lambda_{k}\), \(\beta_{k}\), and \(\xi_{k}\).

References

Anh, P.N.: Strong convergence theorems for nonexpansive mappings and Ky Fan inequalities. J. Optim. Theory Appl. 154, 303–320 (2012)

Anh, P.N.: A new extragradient iteration algorithm for bilevel variational inequalities. Acta Math. Vietnam. 37, 95–107 (2012)

Anh, P.N.: A hybrid extragradient method extended to fixed point problems and equilibrium problems. Optimization 62, 271–283 (2013)

Anh, P.N.: An interior proximal method for solving pseudomonotone nonlipschitzian multivalued variational inequalities. Nonl. Anal. Forum 14, 27–42 (2009)

Anh, P.N., Le Thi, H.A.: An Armijo-type method for pseudomonotone equilibrium problems and its applications. J. Glob. Optim. 57, 803–820 (2013)

Anh, P.N., Le Thi, H.A.: Outer-interior proximal projection methods for multivalued variational inequalities. Acta Math. Vietnam. 42, 61–79 (2017)

Anh, P.N., Kim, J.K., Muu, L.D.: An extragradient method for solving bilevel variational inequalities. J. Glob. Optim. 52, 627–639 (2012)

Anh, P.N., Muu, L.D., Strodiot, J.J.: Generalized projection method for non-Lipschitz multivalued monotone variational inequalities. Acta Math. Vietnam. 34, 67–79 (2009)

Baiocchi, C., Capelo, A.: Variational and Quasivariational Inequalities, Applications to Free Boundary Problems. Wiley, New York (1984)

Bianchi, M., Schaible, S.: Generalized monotone bifunctions and equilibrium problems. J. Optim. Theory Appl. 90, 31–43 (1996)

Bnouhachem, A.: An LQP method for pseudomonotone variational inequalities. J. Glob. Optim. 36, 351–363 (2006)

Daniele, P., Giannessi, F., Maugeri, A.: Equilibrium Problems and Variational Models. Kluwer, Boston (2003)

Dempe, S., Zemkoho, A.B.: The bilevel programming problem: reformulations, constraint qualifications and optimality conditions. Math. Progr. 138, 447–473 (2013)

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer-Verlag, New York (2003)

Giannessi, F., Maugeri, A., Pardalos, P. M.: Equilibrium Problems: Nonsmooth Optimization and Variational Inequality Models. Kluwer, Boston (2004)

Iiduka, H.: Strong convergence for an iterative method for the triple-hierarchical constrained optimization problem. Nonl. Anal. 71, 1292–1297 (2009)

Harker, P.T., Pang, J.S.: A damped-Newton method for the linear complementarity problem. Lect. Appl. Math. 26, 265–284 (1990)

Kalashnikov, V.V., Kalashnikova, N.I.: Solving two-level variational inequality. J. Glob. Optim. 8, 289–294 (1996)

Konnov, I.V.: Combined Relaxation Methods for Variational Inequalities. Springer-Verlag, Berlin (2000)

Korpelevich, G.M.: Extragradient method for finding saddle points and other problems. Ekonomika i Matematicheskie Metody 12, 747–756 (1976)

Maingé, P.E.: Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Val. Anal. 16, 899–912 (2008)

Maingé, P.E., Moudafi, A.: Coupling viscosity methods with the extragradient algorithm for solving equilibrium problems. J. Nonl. Conv. Anal. 9, 283–294 (2008)

Moudafi, A.: Proximal methods for a class of bilevel monotone equilibrium problems. J. Glob. Optim. 47, 287–292 (2010)

Vuong, P.T., Strodiot, J.J., Nguyen, V.H.: On extragradient-viscosity methods for solving equilibrium and fixed point problems in a Hilbert space. Optimization 64, 429–451 (2015)

Solodov, M.: An explicit descent method for bilevel convex optimization. J. Conv. Anal. 14, 227–237 (2007)

Trujillo-Cortez, R., Zlobec, S.: Bilevel convex programming models. Optimization 58, 1009–1028 (2009)

Xu, M.H., Li, M., Yang, C.C.: Neural networks for a class of bi-level variational inequalities. J. Glob. Optim. 44, 535–552 (2009)

Yamada, I., Ogura, N.: Hybrid steepest descent method for the variational inequality problem over the fixed point set of certain quasi-nonexpansive mappings. Numer. Funct. Anal. Optim. 25, 619–655 (2004)

Yao, Y., Marino, G., Muglia, L.: A modified Korpelevich's method convergent to the minimum-norm solution of a variational inequality. Optimization 63, 559–569 (2014)

Zeng, L.C., Yao, J.C.: Strong convergence theorem by an extragradient method for fixed point problems and variational inequality problems. Taiwanese J. Math. 10, 1293–1303 (2006)

Acknowledgements

We are very grateful to anonymous referees for their helpful and constructive comments that helped us very much in improving the paper.

This research is funded by the Vietnam National Foundation for Science and Technology Development (NAFOSTED) under grant number “101.02-2017.15.”

Cite this article

Anh, P.N., Anh, T.T.H. & Kuno, T. Convergence Theorems for Variational Inequalities on the Solution Set of Ky Fan Inequalities. Acta Math Vietnam 42, 761–773 (2017). https://doi.org/10.1007/s40306-017-0226-z
