Abstract

Motivated by the problem to approximate all feasible schedules by one schedule in a given scheduling environment, we introduce in this paper the concepts of strong simultaneous approximation ratio and weak simultaneous approximation ratio. Then we study the two variants under various scheduling environments, such as non-preemptive, preemptive or fractional scheduling on identical, related or unrelated machines.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the scheduling research, people always hope to find a schedule that achieves the balance of the loads of the machines well. To the end, some objective functions, such as minimizing makespan and maximizing machine cover, are designed to find a reasonable schedule. Representative publications can be found in Csirik et al. [1], Deuermeyer et al. [2], Graham [3], Graham [4] and McNaughton [5], among many others. But these objectives do not reveal the global fairness for the loads of all machines. Motivated by the problem to approximate all feasible schedules by one schedule in a given scheduling environment and thus realizing the global fairness, we introduce two new parameters: strong simultaneous approximation ratio (SAR) and weak simultaneous approximation ratio (WAR) for scheduling problems.

Our research is also enlightened from the research on global approximation of vector sets. Related work can be found in Bhargava et al. [6], Goel et al. [7], Goel et al. [8], Kleinberg et al. [9] and Kumar and Kleinberg [10]. Kleinberg et al. [9] proposed the notion of the coordinate-wise approximation for the fair vectors of allocations. Based on this notion, Kumar and Kleinberg [10] introduced the definitions of the global approximation ratio and the global approximation ratio under prefix sums.

For a given instance \({\mathcal {I}}\) of a minimization problem, we use \(V({\mathcal {I}})\) to denote the set of vectors induced by all feasible solutions of \({\mathcal {I}}\). For a vector \(X= (X_1, X_2, \cdots , X_m)\in V({\mathcal {I}})\), we use \(\overleftarrow{X}\) to denote the vector in which the coordinates (components) of X are sorted in non-increasing order, that is, \(\overleftarrow{X} =(X'_1, X'_2, \cdots , X'_m)\) is a resorting of \((X_1, X_2, \cdots , X_m)\) so that \(X'_1\geqslant X'_2\geqslant \cdots \geqslant X'_m\). For two vectors \(X,Y\in V({\mathcal {I}})\), we write \(X\preceq _{c}Y\) if \(X_i\leqslant Y_i\) for all i. The global approximation ratio of a vector \(X \in V({\mathcal {I}})\), denoted by c(X), is defined to be the infimum of \(\alpha \) such that \(\overleftarrow{X}\preceq _{c}\alpha \overleftarrow{Y}\) for all \(Y\in V({\mathcal {I}})\). Then the best global approximation ratio of instance \({\mathcal {I}}\) is defined to be \(c^*({\mathcal {I}})=\inf _{X\in V({\mathcal {I}})}c(X)\). For a vector \(X\in V({\mathcal {I}})\), we use \(\sigma (X)\) to denote the vector in which the ith coordinate is equal to the sum of the first i coordinates of X. We write \(X\preceq _{s}Y\) if \(\sigma (\overleftarrow{X})\preceq _{c}\sigma (\overleftarrow{Y})\). The global approximation ratio under prefix sums of a vector \(X \in V({\mathcal {I}})\), denoted by s(X), is defined to be the infimum of \(\alpha \) such that \(X\preceq _{s}\alpha Y\) for all \(Y\in V({\mathcal {I}})\). Then the best global approximation ratio under prefix sums of instance \({\mathcal {I}}\) is defined to be \(s^*({\mathcal {I}})=\inf _{X\in V({\mathcal {I}})}s(X)\).

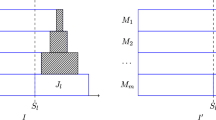

In terms of scheduling, the above concepts about the global approximation of vector sets can be naturally formulated as the simultaneous approximation of scheduling problems. Let \({\mathcal {I}}\) be an instance of a scheduling problem \(\mathcal{P}\) on m machines \(M_1, M_2, \cdots , M_m\), and let \(\mathcal{S}\) be the set of all feasible schedules of \({\mathcal {I}}\). For a feasible schedule \(S \in \mathcal{S}\), the load\(L^S_i\) of machine \(M_i\) is defined to be the time by which the machine finishes all the processing of the jobs and the parts of the jobs assigned to it. \(L(S)=(L^S_1, L^S_2, \cdots , L^S_m)\) is called the load vector of machines under S. Then \(V({\mathcal {I}})\) is defined to be the set of all load vectors of instance \({\mathcal {I}}\). We write \(c(S) = c(L(S))\) and \(s(S)= s(L(S))\) for each \(S \in \mathcal{S}\). Then \(c^*({\mathcal {I}})=\inf _{S\in \mathcal{S}} c(S)\) and \(s^*({\mathcal {I}})=\inf _{S\in \mathcal{S}} s(S)\). The strong simultaneous approximation ratio of problem \(\mathcal{P}\) is defined to be \(\mathrm{SAR}(\mathcal{P})=\sup _{{\mathcal {I}}}c^*({\mathcal {I}})\), and the weak simultaneous approximation ratio of problem \(\mathcal{P}\) is defined to be \(\mathrm{WAR}(\mathcal{P})=\sup _{{\mathcal {I}}}s^*({\mathcal {I}})\).

A scheduling problem is usually characterized by the machine type and the job processing mode. In this paper, the machine types under consideration are identical machines, related machines and unrelated machines, and the job processing modes under consideration are non-preemptive, preemptive and fractional. Let \({\mathcal {J}}=\{J_1,J_2,\cdots ,J_n\}\) and \({\mathcal {M}}=\{M_1,M_2,\cdots ,M_m\}\) be the set of jobs and the set of machines, respectively. The processing time of job \(J_j\) on machine \(M_i\) is given by \(p_{ij}\), which is assumed to be a nonnegative integer. If \(p_{ij}=p_{kj}\) for \(i\ne k\), the machine type is identical machines. In this case, \(p_j\) is used to denote the processing time of job \(J_j\) on all machines. If \(p_{ij}=\frac{p_j}{s_i}\) for all i, the machine type is related machines. In this case, \(p_j\) is called the standard processing time of job \(J_j\) and \(s_i>0\) is called the processing speed of machine \(M_i\). If there is no restriction for \(p_{ij}\), the machine type is unrelated machines. If each job must be non-preemptively processed on some machine, the processing mode is non-preemptive. If each job can be processed preemptively and can be processed on at most one machine at any time, the processing mode is preemptive. If each job can be partitioned into different parts which can be processed on different machines concurrently, the processing mode is fractional. We assume that each machine can process at most one job at any time under any processing mode.

Since we cannot avoid the worst schedule in which all jobs are processed on a common machine, it can be easily verified that, under each processing mode, \(\mathrm{SAR}(\mathcal{P})= m\) for identical machines, \(\mathrm{SAR}(\mathcal{P})= (s_1+s_2+\cdots +s_m)/s_1\) for related machines with speeds \(s_1\geqslant s_2\geqslant \cdots \geqslant s_m\) and \(\mathrm{SAR}(\mathcal{P})=+\infty \) for unrelated machines.

We then concentrate our research on the weak simultaneous approximation ratio \(\mathrm{WAR}(\mathcal{P})\) of the scheduling problems defined as above. For convenience, we use P, Q and R to represent identical machines, related machines and unrelated machines, respectively, and use NP, PP and FP to represent non-preemptive, preemptive and fractional processing, respectively. Then the notation \(Pm(\mathrm{NP})\) represents the scheduling problem on m identical machines under non-preemptive processing mode. Other notations for scheduling problems can be similarly understood. The main results are given in Table 1, where \(\rho \) is the value of \(\mathrm{WAR}\).

This paper is organizes as follows. In Sect. 2, we study the weak simultaneous approximation ratio for scheduling on identical machines. In Sect. 3, we study the weak simultaneous approximation ratio for scheduling on related machines. In Sect. 4, we study the weak simultaneous approximation ratio for scheduling on unrelated machines.

2 Identical Machines

For problem \(P2(\mathrm{NP})\), we have \(s(S)=1\) for every schedule S which minimizes the makespan. So \(\mathrm{WAR}(P2(\mathrm{NP})) =1\). For problem \(Pm(\mathrm{NP})\) with \(m\geqslant 3\), the following instance shows that \(\mathrm{WAR}(Pm(\mathrm{NP})) >1\).

In the instance, there are m jobs with processing time \(m-1\), \((m-1)(m-2)\) jobs with processing time m and a big job with processing time \((m-1)^2+r_m\), where

It can be verified that \(0<r_m<m-2\). Let S be the schedule in which the m jobs with processing time \(m-1\) are scheduled on one machine, the big job with processing time \((m-1)^2+r_m\) is scheduled on one machine, and the remaining \((m-1)(m-2)\) jobs with processing time m are scheduled on the remaining \(m-2\) machines averagely. Let T be the schedule in which the big job is scheduled on one machine together with a job of processing time \(m-1\), and each of the remaining machines has a job of processing time \(m-1\) and \(m-2\) jobs of processing time m. Then the makespan of schedule S is \(m(m-1)\), and the \((m-1)\)th prefix sum of \(\overleftarrow{L(T)}\) is \(m(m-1)^2-(m-2-r_m)\). Now we consider an arbitrary schedule R. If the big job is scheduled on one machine solely in R, then the \((m-1)\)th prefix sum of \(\overleftarrow{L(R)}\) is at least \(m(m-1)^2\). Thus, by considering the \((m-1)\)th prefix sums of \(\overleftarrow{L(T)}\) and \(\overleftarrow{L(R)}\), we have

If the big job is scheduled on one machine together with at least one other job, then the makespan of schedule R is at least \((m-1)+(m-1)^2+r_m\). Thus, by considering the makespans of S and R, we have \(s(R) \geqslant 1+\frac{r_m}{m(m-1)}\). It follows that

To establish the upper of \(\mathrm{WAR}(Pm(\mathrm{NP}))\), we first present a simple but useful lemma.

Lemma 2.1

Let X and Y be two vectors of n dimensions, and let \(X'\) and \(Y'\) be two vectors of two dimensions. If \(X\preceq _{s}Y\) and \(X'\preceq _{s}Y'\), then \((X, X')\preceq _{s}(Y,Y')\).

Proof

Suppose that \(X'=(x_1, x_2)\) and \(Y'=(y_1, y_2)\). Without loss of generality, we may further assume that \(x_1\geqslant x_2\) and \(y_1\geqslant y_2\). Then \(x_1\leqslant y_1\) and \(x_1+x_2 \leqslant y_1+y_2\). Let \(Z_x =(X, X')\) and \(Z_y=(Y, Y')\). For \(Z\in \{Z_x, Z_y\}\), we use \((\overleftarrow{Z})_k\) to denote the kth coordinate of \(\overleftarrow{Z}\) and use \(|\overleftarrow{Z}|_k\) to denote the sum of the first k coordinates of \(\overleftarrow{Z}\) for \(1\leqslant k \leqslant n+2\). Similar notations are also used for X and Y. Given an index k with \(1\leqslant k \leqslant n+2\), we use \(\delta (k, X')\) to denote the number of elements in \(\{x_1, x_2\}\) included in the first k coordinates of \(\overleftarrow{Z_x}\) and \(\delta (k, Y')\) the number of elements in \(\{y_1, y_2\}\) included in the first k coordinates of \(\overleftarrow{Z_y}\). Then \(0\leqslant \delta (k, X'), \delta (k, Y')\leqslant 2\).

If \(\delta (k, X')=\delta (k, Y')\), then we clearly have \(|\overleftarrow{Z_x}|_k \leqslant |\overleftarrow{Z_y}|_k\).

If \(\delta (k, X')=0\), then \(|\overleftarrow{Z_x}|_k = |\overleftarrow{X}|_k \leqslant |\overleftarrow{Y}|_k \leqslant |\overleftarrow{Z_y}|_k\).

If \(\delta (k, Y')=0\) and \(\delta (k, X') \geqslant 1\), we suppose that \(x_1\) is the ith coordinate of \(\overleftarrow{Z_x}\). Then, for each j with \(i\leqslant j\leqslant k\), \((\overleftarrow{Z_x})_j \leqslant x_1 \leqslant y_1 \leqslant (\overleftarrow{Z_y})_j\). Consequently, \(|\overleftarrow{Z_x}|_k = |\overleftarrow{X}|_{i-1} +\sum _{i\leqslant j\leqslant k} (\overleftarrow{Z_x})_j \leqslant |\overleftarrow{Y}|_{i-1} +\sum _{i\leqslant j\leqslant k} (\overleftarrow{Z_y})_j = |\overleftarrow{Z_y}|_k\).

If \(\delta (k, X')=2\) and \(\delta (k, Y')=1\), then \((\overleftarrow{Y})_{k-1} \geqslant y_2\). Thus, \(|\overleftarrow{Z_x}|_k = |\overleftarrow{X}|_{k-2} +x_1 +x_2 \leqslant |\overleftarrow{Y}|_{k-2} +y_1+y_2 \leqslant |\overleftarrow{Y}|_{k-1} +y_1 = |\overleftarrow{Z_y}|_k\).

If \(\delta (k, X')=1\) and \(\delta (k, Y')=2\), then \((\overleftarrow{Y})_{k-1} \leqslant y_2\). Thus, \(|\overleftarrow{Z_x}|_k = |\overleftarrow{X}|_{k-1} +x_1 \leqslant |\overleftarrow{Y}|_{k-1} +y_1 \leqslant |\overleftarrow{Y}|_{k-2} +y_1 + y_2 = |\overleftarrow{Z_y}|_k\).

The above discussion covers all possibilities. Then the lemma follows.

Theorem 2.1

\(\mathrm{WAR}(Pm(\mathrm{NP})) \leqslant \frac{3}{2}\) for \(m\geqslant 4\) and \(\mathrm{WAR}(P3(\mathrm{NP})) \leqslant \sqrt{5}-1\approx 1.236\).

Proof

Consider an instance of n jobs on \(m\geqslant 4\) identical machines with \(\mathcal{J}=\{J_1,J_2,\cdots ,J_n\}\) and \(\mathcal{M}=\{M_1,M_2,\cdots , M_m\}\). We assume that \(p_1\geqslant p_2\geqslant \cdots \geqslant p_n\). Let S be a schedule produced by LPT algorithm (which is the LS algorithm with the jobs being given in the LPT order) such that \(L^S_1\geqslant L^S_2\geqslant \cdots \geqslant L^S_m\). Then \(L(S)=\overleftarrow{L(S)}=(L^S_1, L^S_2, \cdots , L^S_m)\). If \(n\leqslant m\), it is easy to verify that \(s(S)=1\). Hence, we assume in the following that \(n\geqslant m+1\). Then some machine has at least two jobs in S.

Let \(i_0\) be the smallest index such that either \(M_{i_0+1}\) has at least three jobs in S, or \(M_{i_0+1}\) has exactly two jobs in S and the size of the shorter job on \(M_{i_0+1}\) is at most half of the size of the longer job on \(M_{i_0+1}\). If there is no such index, we set \(i_0=m\). Then \(i_0\geqslant 0\), and in the case \(i_0\geqslant 1\), each of \(M_1,M_2,\cdots ,M_{i_0}\) has at most two jobs in S. Let \(J_k\) be the shortest job scheduled on \(M_1,M_2,\cdots ,M_{i_0}\) and set \(\mathcal{J}_k=\{J_1,J_2,\cdots ,J_k\}\). Then \(\mathcal{J}_k\) contains the jobs scheduled on \(M_1,M_2,\cdots ,M_{i_0}\). We use \(M_{k'}\) to denote the machine occupied by \(J_k\) in S. Let T be the schedule derived from S by deleting \(J_{k+1},J_{k+2},\cdots ,J_n\). Then T is an LPT schedule for \(\mathcal{J}_k\) with \(L^T_i=L^S_i,~i=1,2,\cdots ,i_0\). We claim that \(s(T)=1\).

In the case \(i_0=0\), the claim holds trivially. Hence, we assume in the following that \(i_0\geqslant 1\).

If each of \(M_1,M_2,\cdots ,M_{i_0}\) has only one job in S, then \(i_0=k\leqslant m\) and it is easy to see that \(s(T)=1\).

Suppose in the following that at least one of \(M_1,M_2,\cdots ,M_{i_0}\) has exactly two jobs in S. Then \(m+1\leqslant k \leqslant 2m\) and the machine \(M_{k'}\) has exactly two jobs, say \(J_t\) and \(J_k\), in S. Note that there are at most two jobs on each machine in T. (Otherwise, some machine \(M_i\) with \(i\geqslant i_0+1\) has \(r\geqslant 3\) jobs, say \(J_{h_1},J_{h_2},\cdots ,J_{h_r}\), in T. By LPT algorithm, \(p_t\geqslant \sum ^{r-1}_{j=1}p_{h_j}\geqslant 2p_k\), contradicting the choice of \(i_0\).) From the LPT algorithm, we have \(t= {2m+1-k}\). By the choice of \(i_0\), we have \(p_k>\frac{1}{2}p_{2m+1-k}\).

Let R be an arbitrary schedule for \(\mathcal{J}_k\). If each machine has at most two jobs in R, we set \(R_1=R\). If some machine \(M_x\) has at least three jobs in R, by the pigeonhole principle, a certain machine \(M_y\) has either no job or exactly one job in \(\{J_{2m+1-k},J_{2m+2-k},\cdots ,J_k\}\). Let \(R'\) be the schedule obtained from R by moving the shortest job, say \(J_{x'}\), on \(M_x\) to \(M_y\). Then \(L^{R'}_x \geqslant 2p_k>p_{2m+1-k}\geqslant L^R_y\) and \(L^{R'}_y= L^{R}_y+ p_{x'}\geqslant L^R_y\). Note that \(L^{R}_x \geqslant L^{R'}_x , L^{R'}_y \geqslant L^{R}_y\) and \(L^{R}_x + L^{R}_y = L^{R'}_x +L^{R'}_y\). Then we have \(L(R')\preceq _{s}L(R)\) by Lemma 2.1. This procedure is repeated until we obtain a schedule \(R_1\) so that each machine has at most two jobs in \(R_1\). Then we have \(L(R_1)\preceq _{s}L(R)\).

If \(J_1, J_2, \cdots , J_m\) are processed on distinct machines, respectively, in \(R_1\), we set \(R_2= R_1\). If some machine \(M_x\) has two jobs \(J_{x'}, J_{x''}\in \{J_1, J_2, \cdots , J_m\}\) in \(R_1\), by the pigeonhole principle, a certain machine \(M_y\) is occupied by at most two jobs in \(\{J_m, J_{m+1}, \cdots , J_k\}\). Suppose that \(p_{x'}\geqslant p_{x''}\) and \(J_{y'}\) is the shorter job on \(M_y\). Let \(R'_1\) be the schedule obtained from \(R_1\) by shifting \(J_{x''}\) to \(M_y\) and shifting \(J_{y'}\) to \(M_x\). Then \(L^{R_1}_x \geqslant L^{R'_1}_x , L^{R'_1}_y \geqslant L^{R_1}_y\) and \(L^{R_1}_x + L^{R_1}_y = L^{R'_1}_x +L^{R'_1}_y\). Consequently, by Lemma 2.1, \(L(R'_1)\preceq _{s}L(R_1)\). This procedure is repeated until we obtain a schedule \(R_2\) so that \(J_1, J_2, \cdots , J_m\) are processed on distinct machines, respectively, in \(R_2\). Then we have \(L(R_2)\preceq _{s}L(R_1)\).

Without loss of generality, we assume that \(J_j\) is processed on \(M_j\) in \(R_2\), \(1\leqslant j\leqslant m\). Let \(t= k-m\). Then the t jobs \(J_{m+1}, J_{m+2}, \cdots , J_k\) are processed on t distinct machines in \(R_2\). For convenience, we add another \(m-t\) dummy jobs with sizes 0 in \(R_2\) so that each machine has exactly two jobs. We define a sequence of t schedules \(R_2^{(1)}, R_2^{(2)}, \cdots , R_2^{(t)}\) for \(\mathcal{J}_k\) by the following way.

Initially we set \(R_2^{(0)}=R_2\). For each i from 1 to t, the schedule \(R_2^{(i)}\) is obtained from \(R_2^{(i-1)}\) by exchanging the shorter job on \(M_{m-i+1}\) with job \(J_{m+i}\).

We only need to show that \(L(R_2^{(i)})\preceq _{s}L(R_2^{(i-1)})\) for each i with \(1\leqslant i\leqslant t\). Note that the jobs \(J_{m+1}, J_{m+2}, \cdots , J_{m+i-1}\) are processed on machines \(M_m, M_{m-1}, \cdots , M_{m-i+2}\), respectively, in \(R_2^{(i-1)}\). If \(J_{m+i}\) is processed on \(M_{m-i+1}\) in \(R_2^{(i-1)}\), we have \(R_2^{(i)} =R_2^{(i-1)}\), and so, \(L(R_2^{(i)})\preceq _{s}L(R_2^{(i-1)})\). Thus, we may assume that \(J_{m+i}\) is processed on a machine \(M_x\) with \(x\leqslant {m-i}\) in \(R_2^{(i-1)}\). Let \(J_{j}\) be the shorter job on \(M_{m-i+1}\) in \(R_2^{(i-1)}\). Then \(p_j \leqslant p_{m+i}\) and \(p_x \geqslant p_{m-i+1}\). It is easy to see that \((L^{R_2^{(i)}} _x, L^{R_2^{(i)}}_{m-i+1}) = (p_x + p_j, p_{m-i+1} +p_{m+i}) \preceq _{s} (p_x + p_{m+i}, p_{m-i+1} +p_j) = (L^{R_2^{(i-1)}} _x, L^{R_2^{(i-1)}}_{m-i+1})\). Consequently, by Lemma 2.1, \(L(R_2^{(i)})\preceq _{s}L(R_2^{(i-1)})\).

The above discussion means that \(L(R_2^{(t)}) \preceq _{s} L(R_2) \preceq _{s} L(R_1) \preceq _{s} L(R)\). Since \(R_2^{(t)}\) is essentially an LPT schedule, we have \(\overleftarrow{L(T)} = \overleftarrow{L(R_2^{(t)})}\), and so, \(L(T) \preceq _{s} L(R_2^{(t)})\). It follows that \(L(T) \preceq _{s} L(R)\). The claim follows.

Now let \({\bar{S}}\) be an arbitrary schedule for \(\mathcal{J}\), and let \({\bar{T}}\) be the schedule for \(\mathcal{J}_k\) derived from \({\bar{S}}\) by deleting jobs \(J_{k+1},J_{k+2},\cdots ,J_n\). Then \(L({\bar{T}})\preceq _{s} L({\bar{S}})\). Assume without loss of generality that \(L^{{\bar{S}}}_1\geqslant L^{{\bar{S}}}_2\geqslant \cdots \geqslant L^{{\bar{S}}}_m\) and \(L^{{\bar{T}}}_{\pi (1)}\geqslant L^{{\bar{T}}}_{\pi (2)}\geqslant \cdots \geqslant L^{{\bar{T}}}_{\pi (m)}\), where \(\pi \) is a permutation of \(\{1,2,\cdots ,m\}\). For each i with \(1\leqslant i\leqslant i_0\), the above claim implies that \(\sum ^i_{j=1}L^S_j=\sum ^i_{j=1}L^T_j\leqslant \sum ^i_{j=1}L^{{\bar{T}}}_{\pi (j)}\leqslant \sum ^i_{j=1}L^{{\bar{S}}}_j\).

Write \(P=\sum ^n_{j=1}p_j\), \(Q=\sum ^{i_0}_{i=1}L^S_i\) and \({\bar{Q}}=\sum ^{i_0}_{i=1}L^{{\bar{S}}}_i\). Then \(Q\leqslant {\bar{Q}}\). Note that, in the case \(i_0=0\), we have \(Q= {\bar{Q}} =0\). Let \(J_d\) be the last job scheduled on machine \(M_{i_0+1}\) in S. By the choice of \(i_0\), \(p_d\leqslant \frac{1}{2}(L^S_{i_0+1}-p_d)\). From the LPT algorithm, we have \(L^S_{i_0+1}-p_d\leqslant L^S_{j}\), \(j=i_0+1,i_0+2,\cdots ,m\). Hence,

Thus, for each i with \(i_0+1\leqslant i\leqslant m\), we have

and

From (2.1) and (2.2), we conclude that \(\sum ^i_{j=1}L^S_j\leqslant \frac{3}{2}\sum ^i_{j=1}L^{{\bar{S}}}_j\). Consequently, \(s(S)\leqslant \frac{3}{2}\). It follows that \(\mathrm{WAR}(Pm(\mathrm{NP})) \leqslant \frac{3}{2}\) for \(m\geqslant 4\).

Now let us consider problem \(P3(\mathrm{NP})\). Let \(\mathcal{I}\) be an instance. Denote by S the schedule which minimizes the makespan and by T the schedule which maximizes the machine cover. Without loss of generality, we may assume that

Then

Consequently,

Note that

Then

Set

Then \(\frac{2}{3}\leqslant t\leqslant 1\) and

If \(x\geqslant \frac{\sqrt{1+4t^2}-1}{2}\), then

If \(x\leqslant \frac{\sqrt{1+4t^2}-1}{2}\), then

Note that \(\frac{\sqrt{1+4t^2}-1}{t}\leqslant \sqrt{5}-1\) for all t with \(\frac{2}{3}\leqslant t\leqslant 1\). It follows that \(s^*(\mathcal{I})\leqslant \sqrt{5}-1\). The result follows.

For problem \(Pm(\mathrm{PP})\), [5] presented an optimal algorithm to generate a schedule which minimizes the makespan. A slight modification of the algorithm can generate a schedule S with \(s(S)=1\).

Algorithm 1 (with input \({\mathcal {M}}\) and \({\mathcal {J}}\) )

Step 1 Find the longest job \(J_h\) in \({\mathcal {J}}\). If \(p_h\leqslant \frac{\sum _{J_j\in {\mathcal {J}}}p_j}{|{\mathcal {M}}|}\), then apply McNaughton’s algorithm to assign all jobs in \({\mathcal {J}}\) to the machines in \({\mathcal {M}}\) evenly, and stop. Otherwise, assign \(J_h\) to an arbitrary machine \(M_i\in {\mathcal {M}}\).

Step 2 Reset \({\mathcal {M}}={\mathcal {M}}{\setminus }\{M_i\}\) and \({\mathcal {J}}={\mathcal {J}}{\setminus }\{J_h\}\). If \(|{\mathcal {J}}|\ne 0\), then go back to 1. Otherwise, stop.

Lemma 2.2

Assume \(p_1\geqslant p_2\geqslant \cdots \geqslant p_n\) and let S be a preemptive schedule with \(L^S_1\geqslant L^S_2\geqslant \cdots \geqslant L^S_m\). Then \(\sum ^k_{i=1}p_i\leqslant \sum ^k_{i=1}L^S_i\), \(k=1,2,\cdots ,m\).

Proof

Let \(\mathcal{J}_k=\{J_1,J_2,\cdots ,J_k\}\). Then at most k jobs in \(\mathcal{J}_k\) can be processed simultaneously in the time interval \([0,L^{S}_k]\) and at most \(k-i\) jobs of \(\mathcal{J}_k\) can be processed simultaneously in the time interval \([L^{S}_{k+1-i},L^{S}_{k-i}]\), \(i=1,2,\cdots ,k-1\). Therefore,

The lemma follows.

Theorem 2.2

\(\mathrm{WAR}(Pm(\mathrm{PP)})=1\).

Proof

Assume that \(p_1\geqslant p_2\geqslant \cdots \geqslant p_n\). Let \(i_0\) be the largest job index such that \(p_{i_0}>\frac{\sum _{j=i_0}^{n}p_j}{m-i_0+1}\). If there is no such index, we set \(i_0=0\). Let S be the preemptive schedule generated by Algorithm 1 with \(L^S_1\geqslant L^S_2\geqslant \cdots \geqslant L^S_m\). Then we have

and

Let T be a preemptive schedule with \(L^T_1\geqslant L^T_2\geqslant \cdots \geqslant L^T_m\). If \(1\leqslant k\leqslant i_0\), by Lemma 2.2 and (2.3), we have \(\sum ^k_{i=1}L^S_i=\sum ^k_{i=1}p_i\leqslant \sum ^k_{i=1}L^T_i\). If \(i_0+1\leqslant k\leqslant m\), by noting that \(\sum ^{i_0}_{i=1}L^S_i\leqslant \sum ^{i_0}_{i=1}L^T_i\), we have

Hence, \(\mathrm{WAR}(Pm(\mathrm{PP}))=1\). The result follows.

For problem \(Pm(\mathrm{FP})\), the schedule S averagely processing each job on all machines clearly has \(s(S)=1\). Then we have

Theorem 2.3

\(\mathrm{WAR}(Pm(\mathrm{FP}))=1\).

3 Related Machines

Assume that \(s_1\geqslant s_2\geqslant \cdots \geqslant s_m\). We first present the exact expression of \(\mathrm{WAR}(Qm(\mathrm{FP}))\) on the machine speeds \(s_1, s_2, \cdots , s_m\). Then we show that it is a lower bound for \(\mathrm{WAR}(Qm(\mathrm{PP}))\) and \(\mathrm{WAR}(Qm(\mathrm{NP}))\).

The fractional processing mode means that all jobs can be merged into a single job with processing time equal to the sum of processing times of all jobs. Thus, we may assume that \(\mathcal{I}\) is an instance of \(Qm(\mathrm{FP})\) with just one job \(J_\mathcal{I}\). Suppose without loss of generality that \(p_\mathcal{I}=1\). A schedule S of \(\mathcal{I}\) is called regular if \(L^{S}_1\geqslant L^{S}_2\geqslant \cdots \geqslant L^{S}_m\). Then \(\overleftarrow{L(S)}=L(S)\) if S is regular. The following lemma can be observed from the basic mathematical knowledge.

Lemma 3.1

Suppose that \(x_1\geqslant {x_2}\geqslant \cdots \geqslant {x_n}\geqslant 0\) and \(y_1\geqslant {y_2}\geqslant \cdots \geqslant {y_n}\geqslant 0\). Then \(\sum _{i=1}^{n}x_iy_{\pi (i)}\leqslant \sum _{i=1}^{n}x_iy_i\) for any permutation \(\pi \) of \(\{1,2,\cdots ,n\}\).

Lemma 3.2

For any schedule T of \({\mathcal {I}}\), there exists a regular schedule S such that \(L(S)\preceq _{c}\overleftarrow{L(T)}\).

Proof

Let T be a schedule of \({\mathcal {I}}\) and \(\pi \) a permutation of \(\{1,2,\cdots ,m\}\) such that \(L^T_{\pi (1)}\geqslant L^T_{\pi (2)}\geqslant \cdots \geqslant L^T_{\pi (m)}\). By Lemma 3.1, \(\sum ^m_{i=1}s_iL^{T}_{\pi (i)}\geqslant \sum ^m_{i=1}s_{\pi (i)}L^{T}_{\pi (i)}\geqslant 1\). Let \(i_0\) be the smallest machine index such that \(\sum ^{i_0}_{i=1}s_iL^{T}_{\pi (i)}\geqslant 1\). Let S be the schedule in which a part of processing time \(s_iL^{T}_{\pi (i)}\) is assigned to \(M_i\), \(i=1,2,\cdots ,i_0-1\), and the rest part of processing time \(1-\sum ^{i_0-1}_{i=1}s_iL^{T}_{\pi (i)}\) is assigned to \(M_{i_0}\). Then we have \(L^S_i=L^T_{\pi (i)}\), for \(i=1,2,\cdots ,i_0-1\),

and \(L^S_i=0\leqslant L^T_{\pi (i)}\) for \(i=i_0+1,i_0+2,\cdots ,m\). It can be observed that S is regular and \(L(S)\preceq _{c}\overleftarrow{L(T)}\). The lemma follows.

Let f(i) be the infimum of the sum of the first i coordinates of \(\overleftarrow{L(T)}\) in all feasible schedule T of \({\mathcal {I}}\), \(i=1,2,\cdots ,m\). By Lemma 3.2, we have \(f(i)=\inf \{\sum _{k=1}^{i}L^{S}_{k}: S \text{ is } \text{ regular }\},~i=1,2,\cdots ,m\). Then, for each schedule T of \({\mathcal {I}}\) with \(L^T_{\pi (1)}\geqslant L^T_{\pi (2)}\geqslant \cdots \geqslant L^T_{\pi (m)}\) for some permutation \(\pi \) of \(\{1,2,\cdots ,m\}\), we have

The following lemma gives the exact expression for each f(i).

Lemma 3.3

\(f(i)=\left\{ \begin{array}{ll} \frac{i}{\sum ^m_{k=1}s_k}, &{} \quad \text{ if } i\leqslant \frac{\sum ^m_{k=1}s_k}{s_1},\\ \frac{1}{s_1}, &{} \quad \text{ if } i>\frac{\sum ^m_{k=1}s_k}{s_1}. \end{array} \right. \)

Proof

Fix index i and let S be a regular schedule. Then we have

and

So we only need to find a regular schedule S meeting (3.2) and (3.3) such that \(\sum _{k=1}^{i}L^{S}_{k}\) reaches the minimum.

If \(i\leqslant \frac{\sum ^m_{k=1}s_k}{s_1}\), by (3.2) and (3.3), we have

The equality holds if and only if \(L^S_1=L^S_2=\cdots =L^S_m=\frac{1}{\sum ^m_{k=1}s_k}\). Then the regular schedule S can be defined by the way that a part of processing time \(\frac{s_k}{\sum ^m_{i=1}s_i}\) is assigned to \(M_k\), \(k=1,2,\cdots ,m\). Thus, \(f(i)=\frac{i}{\sum ^m_{k=1}s_k}\).

If \(i>\frac{\sum ^m_{k=1}s_k}{s_1}\), we can similarly deduce

The equality holds if and only if \(L^S_1=\frac{1}{s_1},~L^S_2=\cdots =L^S_m=0\). Then the regular schedule S can be defined by the way that \(J_{{\mathcal {I}}}\) is scheduled totally on \(M_1\) in S. Thus, \(f(i)=\frac{1}{s_1}\). The lemma follows.

By Lemma 3.2, \(s^*({\mathcal {I}})=\inf \{s(S): S \text{ is } \text{ regular }\}\). For each regular schedule S, by (3.1) and Lemma 3.3, we have \(\sum ^i_{k=1}L^{S}_k\leqslant {s(L(S))}{f(i)}\) for \(i=1,2,\cdots ,m\).

Let \(s_{m+1}=0\) and \(\frac{\sum ^m_{i=1}s_i}{s_1}=t+\Delta \), where t with \(1\leqslant t\leqslant m\) is a positive integer and \(0\leqslant \Delta <1\). By Lemma 3.3, we have

and

Hence, \(s(S)\geqslant \frac{\sum ^m_{i=1}s_i}{\sum ^t_{i=1}s_i+\left( \frac{\sum ^m_{i=1}s_i}{s_1}-t\right) s_{t+1}}=\frac{\sum ^m_{i=1}s_i}{\sum ^t_{i=1}s_i+\Delta s_{t+1}}\). Note that the equality holds if and only if \(L^S_1=L^S_2=\cdots =L^S_t=\frac{1}{\sum ^t_{i=1}s_i+\Delta s_{t+1}}\), \(L^S_{t+1}=\frac{\Delta }{\sum ^t_{i=1}s_i+\Delta s_{t+1}}\) and \(L^S_{t+2}=L^S_{t+3}=\cdots =L^S_m=0\). Then the corresponding regular schedule S can be defined by the way that a part of processing time \(\frac{s_i}{\sum ^t_{k=1}s_k+\Delta s_{t+1}}\) is assigned to \(M_i\), \(i=1,2,\cdots ,t\), and the rest part of processing time \(\frac{\Delta s_{t+1}}{\sum ^t_{i=1}s_i+\Delta s_{t+1}}\) is assigned to \(M_{t+1}\). Hence, \(s^*({{\mathcal {I}}})=\frac{\sum ^m_{i=1}s_i}{\sum ^t_{i=1}s_i+\Delta s_{t+1}}\). Consequently, \({ WAR}(Qm(FP)) = \frac{\sum ^m_{i=1}s_i}{\sum ^t_{i=1}s_i+\Delta s_{t+1}}\) if the machine speeds are fixed.

If machine speeds are parts of the input, by the fact that \(s_1\geqslant s_2\geqslant \cdots \geqslant s_m\), we have

Let \(\theta =\frac{\sum ^m_{i=2}s_i}{m-1}\) and \(\vartheta =\frac{s_1}{\theta }>1\). Then

Obviously, \(\frac{m}{\vartheta -1}+(\vartheta -1)\geqslant 2\sqrt{\frac{m}{\vartheta -1}(\vartheta -1)}=2\sqrt{m}\). By (3.6) and (3.7), we have

So we have \(s^*({\mathcal {I}})\leqslant \frac{\sqrt{m}+1}{2}\), and therefore, \(\mathrm{WAR}(Qm(\mathrm{FP})) \leqslant \frac{\sqrt{m}+1}{2}\).

To show that \({ WAR}(Qm(\mathrm{FP})) = \frac{\sqrt{m}+1}{2}\), we consider the following instance \({\mathcal {I}}\) with \(p_{\mathcal {I}} =1\), \(s_1=s=\sqrt{m}+1>1\) and \(s_2=s_3=\cdots =s_m=1\). Let S be a regular schedule and write \(x=sL^{S}_1\). Then \(\sum ^m_{t=2}L^{S}_t=1-x\). By Lemma 3.3 and (3.1), we have

where the inequality follows from the fact that \(\frac{x(s+m-1)}{s}\) is an increasing function in x, while \(x+s(1-x)\) is a decreasing function in x and they meet with \(\frac{s^2+sm-s}{s^2+m-1}\) when \(x=\frac{s^2}{s^2+m-1}\). Then \(s^*({\mathcal {I}})\geqslant \frac{\sqrt{m}+1}{2}\). Consequently, \(\mathrm{WAR}(Qm(\mathrm{FP})) = \frac{\sqrt{m}+1}{2}\).

The above discussion leads to the following conclusion.

Theorem 3.1

If the machine speeds \(s_1,s_2,\cdots ,s_m\) are fixed, then \(\mathrm{WAR}(Qm(\mathrm{FP})) = \frac{\sum ^m_{i=1}s_i}{\sum ^t_{i=1}s_i+\Delta s_{t+1}}\), where \(\frac{\sum ^m_{i=1}s_i}{s_1}=t+\Delta \), t with \(1\leqslant t\leqslant m\) is a positive integer and \(0\leqslant \Delta <1\). Alternatively, if the machine speeds \(s_1,s_2,\cdots ,s_m\) are parts of the input, then \({ WAR}(Qm(\mathrm{FP})) = \frac{\sqrt{m}+1}{2}\).

Lemma 3.4

If the machine speeds \(s_1,s_2,\cdots ,s_m\) are fixed, then \(\mathrm{WAR}(Qm(\mathrm{NP})) \geqslant \mathrm{WAR}(Qm(\mathrm{FP}))\) and \(\mathrm{WAR}(Qm(\mathrm{PP})) \geqslant \mathrm{WAR}(Qm(\mathrm{FP}))\).

Proof

We only consider the non-preemptive processing mode. For the preemptive processing mode, the result can be similarly proved. Given a schedule S, we denote by \(\pi ^{S}\) the permutation of \(\{1,2,\cdots ,m\}\) such that \(L^S_{\pi ^{S}(1)}\geqslant L^S_{\pi ^{S}(2)}\geqslant \cdots \geqslant L^S_{\pi ^{S}(m)}\).

Suppose without loss of generality that \(s_m=1\). Write \(\eta =\mathrm{WAR}(Qm(\mathrm{NP}))\). Let \({\mathcal {I}}\) be an instance of \(Q_m(\mathrm{FP})\) with only one job \(J_{{\mathcal {I}}}\) of processing time 1. For each i, set f(i) to be the infimum of \(\sum _{k=1}^{i}L^{S}_{\pi ^{S}(k)}\) of schedule S over all fractional schedules of \({\mathcal {I}}\). We only need to show that \(s^*({\mathcal {I}})\leqslant \eta \).

Assume to the contrary that \(s^*({\mathcal {I}})>\eta \). Let \(\varepsilon >0\) be a sufficiently small number such that \(\eta (f(i)+i\varepsilon )<s^*({\mathcal {I}})f(i)\), \(i=1,2,\cdots ,m\). Let \({\mathcal {H}}\) be an instance of \(Q_m(\mathrm{NP})\) such that the total processing time of jobs is equal to 1 and the processing time of each job is at most \(\varepsilon \). For each i, let g(i) be the infimum of \(\sum _{k=1}^{i}L^{S}_{\pi ^{S}(k)}\) of schedule S over all feasible schedules of \({\mathcal {H}}\). We assert that

To the end, let \(S_i\) be the regular schedule of \({\mathcal {I}}\) such that \(\sum ^{i}_{k=1}L^{S_i}_{k}=f(i)\), \(i=1,2,\cdots ,m\). Fix index i, we construct a non-preemptive schedule S of \({\mathcal {H}}\) such that \(\sum _{k=1}^{i}L^{S}_{\pi ^{S}(k)}\leqslant f(i)+i\varepsilon \). This leads to \(g(i)\leqslant \sum _{k=1}^{i}L^{S}_{\pi ^{S}(k)}\leqslant f(i)+i\varepsilon \) and therefore proves the assertion. The construction of S is stated as follows. First, we assign jobs to \(M_i\) one by one until \(L^S_1\geqslant L^{S_{i}}_1\). Then we assign the rest jobs to \(M_2\) one by one until \(L^S_2\geqslant L^{S_i}_2\). This procedure is repeated until all jobs are assigned. According to the construction of S, we have \(L^S_{k}\leqslant L^{S_{i}}_k+\frac{\varepsilon }{s_k}\leqslant L^{S_{i}}_k+\varepsilon \), \(k=1,2,\cdots ,m\). Note that \(L^{S_i}_1\geqslant L^{S_i}_2\geqslant \cdots \geqslant L^{S_i}_m\). Then \(\sum _{k=1}^{i}L^{S}_{\pi ^{S}(k)}\leqslant \sum _{k=1}^{i}(L^{S_i}_{\pi ^{S}(k)}+\varepsilon ) \leqslant \sum ^{i}_{k=1}L^{S_i}_{k}+i\varepsilon =f(i)+i\varepsilon \).

Let R be the schedule of \({\mathcal {H}}\) such that \(s(R)=s^*({\mathcal {H}})\). It can be observed that there exists a schedule T of \({\mathcal {I}}\) such that \(L(T)\preceq _{c}L(R)\). Hence, for each i with \(1\leqslant i\leqslant m\), we have

This contradicts the definition of \(s^*({\mathcal {I}})\). So \(s^*({\mathcal {I}})\leqslant \eta \). The result follows.

By Theorem 3.1 and Lemma 3.4, the following theorem holds.

Theorem 3.2

If the machine speeds \(s_1,s_2,\cdots ,s_m\) are fixed, then

where \(\frac{\sum ^m_{i=1}s_i}{s_1}=t+\Delta \), t is a positive integer with \(1\leqslant t\leqslant m\) and \(0\leqslant \Delta <1\). If the machine speeds \(s_1,s_2,\cdots ,s_m\) are parts of the input, then \(\mathrm{WAR}(\mathcal{P}) \geqslant \frac{\sqrt{m}+1}{2}\) for \(\mathcal{P} \in \{ Qm(\mathrm{NP}), Qm(\mathrm{PP})\}\).

4 Unrelated Machines

Since Qm is a special version of Rm, from the results in the previous section, the weak simultaneous approximation ratio is at least \(\frac{\sqrt{m}+1}{2}\) for each of \(Rm(\mathrm{NP})\), \(Rm(\mathrm{PP})\) and \(Rm(\mathrm{FP})\). The following lemma establishes an upper bound of the weak simultaneous approximation ratio for the three problems.

Lemma 4.1

\(\mathrm{WAR}(\mathcal{P}) \leqslant \sqrt{m}\) for \(\mathcal{P} \in \{ Rm(\mathrm{NP}), Rm(\mathrm{PP}), Rm(\mathrm{FP})\}\).

Proof

Let \(\mathcal{I}\) be an instance of \(R_m(\mathrm{NP})\), \(R_m(\mathrm{PP})\) or \(R_m(\mathrm{FP})\). Let S be a schedule which minimizes the makespan with \(L^S_1\geqslant L^S_2\geqslant \cdots \geqslant L^S_m\). Let \(p_{[j]}=\min _{1\leqslant i\leqslant m}\{p_{ij}\}\).

If \(L^S_1\leqslant \frac{\sum ^n_{j=1}p_{[j]}}{\sqrt{m}}\), let T be a feasible schedule with \(L^T_{\pi (1)}\geqslant L^T_{\pi (2)}\geqslant \cdots \geqslant L^T_{\pi (m)}\) for some permutation \(\pi \) of \(\{1,2,\cdots ,m\}\). For each i, we have

This means that \(s^{*}(\mathcal{I})\leqslant \sqrt{m}\).

If \(L^S_1>\frac{\sum ^n_{j=1}p_{[j]}}{\sqrt{m}}\), let R be the schedule, in which each job \(J_j\) is assigned to the machine \(M_i\) with \(p_{ij}= p_{[j]}\). Let O be an arbitrarily feasible schedule, and let \({\pi }_1\) and \({\pi }_2\) be two permutations of \(\{1,2,\cdots ,m\}\) such that \(L^R_{{\pi }_1(1)}\geqslant L^R_{{\pi }_1(2)}\geqslant \cdots \geqslant L^R_{{\pi }_1(m)}\) and \(L^O_{{\pi }_2(1)}\geqslant L^O_{{\pi }_2(2)}\geqslant \cdots \geqslant L^O_{{\pi }_2(m)}\). For each i, we have

This also means that \(s^{*}(\mathcal{I})\leqslant \sqrt{m}\). The lemma follows.

Combining with the results of the previous section, we have the following theorem.

Theorem 4.1

For each problem \(\mathcal{P} \in \{Qm(\mathrm{NP}), Qm(\mathrm{PP}), Qm(\mathrm{FP}), Rm(\mathrm{NP}), Rm(\mathrm{PP}), Rm(\mathrm{FP})\}\), we have \(\frac{\sqrt{m}+1}{2} \leqslant \mathrm{WAR}(\mathcal{P}) \leqslant \sqrt{m}\).

5 Conclusion

We introduced and studied the strong and weak simultaneous approximation ratios, denoted by \(\mathrm{SAR}(\mathcal{P})\) and \(\mathrm{WAR}(\mathcal{P})\), of various parallel machine scheduling problems \(\mathcal{P}\). Since determining \(\mathrm{SAR}(\mathcal{P})\) is trivial for most standard problems, we mainly presented research on the values \(\mathrm{WAR}(\mathcal{P})\). Our contributions are summarized in Table 1.

For further research, it is worth studying to determine the exact value of \(\mathrm{WAR}(\mathcal{P})\) or improve the bounds of \(\mathrm{WAR}(\mathcal{P})\) for

References

Csirik, J., Kellerer, H., Woeginger, G.: The exact LPT-bound of maximizing the minimum completion time. Oper. Res. Lett. 11, 281–287 (1992)

Deuermeyer, B.L., Friesen, D.K., Langston, A.M.: Scheduling to maximize the minimum processor finish time in a multiprocessor system. SIAM J. Discrete Math. 3, 190–196 (1982)

Graham, R.L.: Bounds for certain multiprocessing anomalies. Bell Syst. Tech. J. 45, 1563–1581 (1966)

Graham, R.L.: Bounds for multiprocessing timing anomalies. SIAM J. Appl. Math. 17, 416–429 (1969)

McNaughton, R.: Scheduling with deadlines and loss functions. Manag. Sci. 6, 1–12 (1959)

Bhargava, R., Goel, A., Meyerson, A.: Using approximate majorization to characterize protocol fairness. In: Proceedings of the 2001 ACM Special Interest Group for the computer systems performance evaluation community. International Conference on Measurement and Modeling of Computer Systems, pp. 330–331. ACM, New York (2001)

Goel, A., Meyerson, A., Plotkin, S.: Combining fairness with throughput: online routing with multiple objectives. J. Comput. Syst. Sci. 63, 62–79 (2001)

Goel, A., Meyerson, A., Plotkin, S.: Approximate majorization and fair online load balancing. ACM Trans. Algorithms 1, 338–349 (2005)

Kleinberg, J., Rabani, Y., Tardos, É.: Fairness in routing and load balancing. J. Comput. Syst. Sci. 63, 2–20 (2001)

Kumar, A., Kleinberg, J.: Fairness measures for resource allocation. SIAM J. Comput. 36, 657–680 (2006)

Acknowledgements

The authors would like to thank the associate editor and two anonymous referees for their constructive comments and helpful suggestions.

Author information

Authors and Affiliations

Corresponding author

Additional information

This paper is dedicated to Professor Ding-Zhu Du in celebration of his 70th birthday.

The first author was supported by the National Natural Science Foundation of China (Nos. 11601198 and 71761015). The second author was supported by the National Natural Science Foundation of China (No. 11671368).

Rights and permissions

About this article

Cite this article

Wan, L., Yuan, JJ. Simultaneous Approximation Ratios for Parallel Machine Scheduling Problems. J. Oper. Res. Soc. China 7, 485–500 (2019). https://doi.org/10.1007/s40305-019-00253-0

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40305-019-00253-0