Abstract

Marginal analysis evaluates changes in a regression function associated with a unit change in a relevant variable. The primary statistic of marginal analysis is the marginal effect (ME). The ME facilitates the examination of outcomes for defined patient profiles or individuals while measuring the change in original units (e.g., costs, probabilities). The ME has a long history in economics; however, it is not widely used in health services research despite its flexibility and ability to provide unique insights. This article, the second in a two-part series, discusses practical issues that arise in the estimation and interpretation of the ME for a variety of regression models often used in health services research. Part one provided an overview of prior studies discussing ME followed by derivation of ME formulas for various regression models relevant for health services research studies examining costs and utilization. The current article illustrates the calculation and interpretation of ME in practice and discusses practical issues that arise during the implementation, including: understanding differences between software packages in terms of functionality available for calculating the ME and its confidence interval, interpretation of average marginal effect versus marginal effect at the mean, and the difference between ME and relative effects (e.g., odds ratio). Programming code to calculate ME using SAS, STATA, LIMDEP, and MATLAB are also provided. The illustration, discussion, and application of ME in this two-part series support the conduct of future studies applying the concept of marginal analysis.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Incremental costs are widely reported when evaluating competing interventions and there is another statistic that is applicable to decision making focused on the consequences of a unit (i.e., marginal) change in a variable (or combination of variables). |

Despite its historical availability, flexibility, and ability to yield useful insights, the marginal effect (ME) is not widely reported in health services research studies investigating, for example, medication use, hospital stays, utilization of other health services, and survival. |

This two-part study reviews prior literature regarding the use and reporting of ME (Part I) and provides ME formulas (Part I), statistical code (Part II), and empirical examples (Part II) to support its use and interpretation across a variety of models commonly used in health services research. |

1 Introduction

Marginal effect (ME) is an inferential summary statistic that provides a measure of the impact of a unit change in an independent variable or covariate (of a regression model) on the dependent variable, where the change is measured in the natural units of the dependent variable [1]. The theoretical derivation of the ME involves the application of standard rules for differentiation, and, accordingly, is conceptually straightforward. However, the practical application of the concept raises new issues about which guidance is limited. These issues include: (1) the choice between and interpretation of the average ME (AME) or the ME at the mean (MEM); (2) the software options and coding for calculating AME or MEM; (3) the choice of estimator for calculating confidence intervals from the ME statistic.

This study investigated the practical issues related to the calculation and reporting of ME in health services research; this article represents Part two in a two-part series. Part one presented the theoretical concept and justification for use of the ME, derivation of ME for a number of statistical models, and estimators for standard error estimation of ME. We discuss the empirical issues mentioned above and include empirical examples designed to illustrate these points. The empirical examples do not focus on model specification and assume that the statistical adequacy of the regression model has already been established in order to focus specifically on the issues being examined.

Increased use of the ME will provide opportunities for gaining unique insights into cost and utilization patterns. These insights will be unique to ME because, unlike odds ratios and relative risks, the ME is measured in absolute, not relative terms, and is measured in natural units of the dependent variable (e.g., probability, cost, survival time), facilitating interpretation and translation for decision making.

2 Interpretation and Use of the Marginal Effect

A researcher faces an array of considerations regarding which measure of ME to report, which approach to take when estimating confidence intervals (and standard errors), and what statistical software to use, which can result in nuances in the reporting and interpretation of results using the ME. In discussing these considerations, we place stronger emphasis on discrete differences because regression models commonly applied in cost and utilization studies include categorical independent variables such as race or disease severity. The empirical examples include a continuous covariate, age, for which ME are reported as well. Part one of this two-part series provided derivations for ME for continuous covariates that enter both in a linear and nonlinear fashion. The topics discussed in this primer regarding the use of ME apply to both categorical and continuous independent variables.

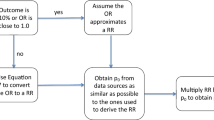

The discussion in this section focuses on calculating ME using SAS [2], STATA [3], and LIMDEP/NLOGIT [4]. Before presenting specifics about the software, it is important to understand the difference in interpretation between relative and absolute effects as well as the different types of ME that can be estimated. Studies investigating health services utilization and costs utilize models of categorical outcomes, time to event data, or rates. Effects are often represented in relative terms (i.e., ratios) as odds ratios (ORs), risk ratios (RRs), or hazard ratios (HRs). The origin of these measures is 1 while the origin of ME is 0 in the case of a linear difference. In addition, the ratios are always positive while the ME may span the entire real number line to include positive and negative values. The ratios quantify the relative differences between defined groups while the ME quantifies the incremental difference in outcomes between defined groups. The relative difference adjusts for baseline differences between patient groups. At the same time, the relative difference is unitless and does not convey a sense of magnitude.

The ME is an appropriate measure of association when the goal is to convey the size of a change in the outcome. An OR of 1.7 associated with an indicator for receipt of drug X in a model for the probability of a serious adverse event (SAE) indicates that the odds of an SAE in the treated group are 1.7 times higher than the odds of an SAE in the control group. This result confirms the direction of the association but does not convey the magnitude of the impact at the patient level. An ME of 0.13 indicates that there is a 13 % point increase in the probability of experiencing an SAE associated with receipt of drug X. Compared to changes in the odds of an SAE, changes in the probability of an SAE are easier to understand and explain when considering the effect of a policy that would provide access to drug X. Similarly, if the outcome of interest was the total 12-month expenditure among patients experiencing an SAE, a payer faced with a formulary decision regarding drug X would be more interested in a measure that conveyed the magnitude of the effect (e.g., a $2,500 increase in total expenditure associated with receipt of drug X) compared to a relative effect estimate (e.g., a cost ratio of 1.13 which depicts a 13 % increase in average costs comparing the treated group to the control group).

There are two types of ME that can be estimated: (i) MEM and (ii) AME. The MEM estimates are produced using the sample means of the data, while AME estimates are obtained by estimating the ME separately for each observation and then averaging over the individual ME [5]. The AME is also known as an average partial effect [5], predictive margin [6], and an ME estimated using the method of recycled predictions [7]. The difference in AME and MEM estimates will depend on the parameter estimates and variability in the data [8]. The MEM approach provides the ME for an individual with average characteristics or could be calculated at other specific values of the independent variables, providing ME estimates for different representative individuals. This approach may be of interest to obtain stratified results for the ME. The AME estimates what the ME will be on average for the individuals in the sample; that is, the quantity of interest here is \( E_{x} \left( {ME_{i} } \right) \) [5]. Another benefit of using the AME is the availability of ME for each individual in the sample.

In theory, the AME is preferred to the MEM because the mean values specified for the MEM may not always be realistic across all variables included in the regression model and there may be no data points that represent the specified mean values. For example, the Charlson comorbidity index (CCI) is often entered into regression models as a categorical variable. Whether based on the categorical version or the original index values of the CCI, the mean value is likely to be a noninteger. Because the variable can only take on integer values, there would be no individual represented at the specified mean value of the CCI. Conceptually, the calculation of the MEM value is not desirable with a binary independent variable because, as noted by Dowd et al. [9], “no one in the dataset will be 60 % female or 20 % pregnant”. As an alternative to calculating the ME at the mean of the independent categorical variables, researchers can set the categorical variable at its mode, or the value that occurs most often. The approaches for calculating AME and MEM are discussed in the software packages described below.

SAS: The SAS software package has two primary procedures that produce ME estimates. PROC QLIM can model and provide ME estimates for models of categorical dependent variables. The procedure provides a vector of individual ME that can be used to calculate AME for each covariate (by specifying the “marginal” option in the “OUTPUT” statement of the procedure), but standard errors for the AME must be calculated separately. PROC NLMIXED can model and provide AME estimates for user-specified nonlinear regression models. The user must explicitly code the formulas for the relevant ME of interest within the procedure and the procedure will provide standard error estimates for the AME using the delta method [5]. Procedures for obtaining AME estimates for the logistic regression model and more details concerning PROC QLIM and PROC NLMIXED can be found in the SAS Help Menu and on the SAS website [10]. A benefit of manually coding the ME is that it encourages an understanding of the ME concept before using it in analysis. On the other hand, the need to manually code the ME leaves the analysis open to errors due to mistakes in coding. This latter possibility is reduced with automated procedures in STATA.

STATA: To estimate the MEM or AME in STATA, a modeler can use the post-estimation command “margins”. This command provides both MEM and AME estimates for a wide range of regression models in STATA, including the models discussed in Parts one and two of this series. By default, the “margins” command estimates AME, but MEM estimates can be obtained by including “atmeans” in the options part of the command. Standard errors for ME are estimated using the delta method, but a linearization method is available that allows for heteroskedasticity, violation of distributional assumptions, and correlation among observations (e.g., from sampling). More information can be found in the STATA manual [11].

LIMDEP/NLOGIT: The LIMDEP/NLOGIT software package may be less familiar to health services researchers and is useful to discuss due to the functionality it brings to the analysis of categorical dependent variables. There are two methods available in LIMDEP/NLOGIT to obtain ME estimates [4]. The first method is to specify the option “marginal effects” or “partial effects” within the specified regression command. This will provide MEM estimates calculated using analytical derivatives for each covariate, along with standard errors estimated using the delta method. The second method is to use the post-estimation “partial effects” command. By default, this command will provide the AME estimates for each covariate, along with standard errors estimated using the delta method. MEM estimates can be obtained using the command by specifying the “Means” option.

The functions and estimation of interaction ME in all of the above software packages will have to be explicitly coded to be estimated. In addition, depending on the method employed, the calculation of the confidence interval may require manual coding. In the AME approach, the gradient will need to be calculated for each observation and then the sample average of the gradient used to calculate the standard error [5]. Other methods for standard error estimation include the bootstrap, delete-d jackknife, and the method of Krinsky and Robb [5]. It should be emphasized that when computing standard errors for AME, the standard error of interest is the standard error of the estimator for the AME; that is, \( E_{x} \left( {ME_{i} } \right) \), and not the standard deviation across the individual ME estimates used in computing the AME. This latter method is adopted by Li and Mahendra [7], and, as seen in the empirical example, leads to incorrect confidence intervals associated with an increased Type II error.

3 Empirical Examples

The following examples utilize regression models applicable to cost and utilization studies to illustrate the differences between AME and MEM, the comparison between software packages, the estimation of confidence intervals on the ME, and insights unique to the ME.

3.1 Example 1: Factors Impacting the Probability of Treatment with Metformin Using a Logistic Regression

The results from the logistic regression are traditionally presented in terms of ORs (or RRs under certain conditions). For the discrete choice regression models, the estimated ME will often range from −1 to 1, as the conditional mean is a conditional probability with range (0,1). If the estimated ME falls outside this range, the researcher may want to re-examine performance during model estimation, data, and estimation code. This example shows how to calculate the AME and MEM for a logistic regression. The interaction term is included and the results are presented and interpreted. This empirical application examines the factors that influence the likelihood of a type-2 diabetic patient being prescribed metformin instead of all other oral hypoglycemic agents (OHAs). The study population consisted of a Maryland population covered by a large private insurance group. Only adult patients (over 18 years of age) who were continuously enrolled between 1 January 2005 and 30 November 2007 with both medical and pharmacy benefits and at least one pharmacy claim for OHAs were included. Patients were excluded if they used insulin or any injectable (e.g., exenatide) or newer agents (e.g., sitagliptin alone or in combination). The model included demographic variables, type of insurance, and observable risk factors for myocardial infarction and heart failure measured within 12 months prior to the index date. The 95 % confidence intervals for AME and MEM were derived using the bootstrap technique for 1,000 resamples.

There were 6,697 patients with an average age of 56.6 ± 9.9 years; 53 % were male (Table 1). Based on the AME, we found that men were 9.4 % points (95 % CI −11.7 to −7.2 %) less likely than women to be prescribed metformin (Table 1). In addition, the likelihood of being prescribed metformin was 0.8 % points lower (95 % CI −0.94 to −0.64 %) for every 1-year increase in age. Results based on the MEM were similar. The ME estimates for the MEM and AME of each covariate and the interaction effects are close in value but not identical. These differences are caused by the differences in the parameter estimates and the variability in the data [8]. These differences will be more pronounced in small to medium-sized samples [5]. Using Li and Mahendra’s code for CI estimation resulted in confidence intervals for the MEs that were too narrow, which could lead a modeler to wrongly conclude that the MEs for some independent variables were statistically significant when they were not.

3.2 Example 2: Race Differences in Initial Costs Using a Generalized Linear Model

A generalized linear model was used to investigate initial costs among men experiencing skeletal complications following a diagnosis of prostate cancer (PCa), comparing non-Hispanic African American (AA) and white men. The skeletal-related events (SREs) of interest were pathological fracture (PF), spinal cord compression (SCC), or bone surgery (BS). Understanding initial cost patterns could be important for investigating cost accumulation after the development of SREs. The application utilizes linked cancer registry and Medicare claims data for men aged 66 years and older diagnosed with incident PCa between 2000 and 2007 and with associated claims from 1999 to 2009. Cases were limited to those diagnosed with stage III, stage IV, or unstaged PCa, as identified by the American Joint Committee on Cancer Tumor-Node-Metastasis stage, sixth edition [12]. Additional inclusion criteria were: (1) continuous enrollment in Medicare Parts A and B during the 12 months prior to and including the month of diagnosis; (2) survived 6 months post-diagnosis of PCa; (3) utilization associated with PF, SCC, or BS. Exclusion criteria were: (1) health maintenance organization enrollment during the 12 months prior to and including the month of diagnosis; (2) history of other cancers within 5 years prior to PCa diagnosis; and (3) diagnosis of PCa during autopsy. The ME were estimated using the AME approach and 95 % confidence intervals were derived using the bootstrap technique for 1,000 resamples.

Application of study inclusion and exclusion criteria resulted in a sample of 5,342 men with SREs. The sample was 89 % white non-Hispanic and 7 % AA with a mean age of 77 years. The median follow-up was 1,347 days and the median times to a PF, SCC, or BS were 721, 915, and 1,105 days, respectively. The relationship between AA race and total costs was investigated for the 6-month post-diagnosis period. The AME was manually coded in SAS and calculated using Li and Mehandra’s [7] code. The AME was calculated in STATA using the margins command. The CI on the AME was reported using the delta method (STATA) and the bootstrap method (SAS). Additional information on calculating the confidence intervals is available from published work [5, 9]. Codes for calculating the results reported in Table 2 are available in the Appendix.

The consistent result across all approaches was that the initial treatment costs for AA men were higher than the treatment costs for non-Hispanic white men, after controlling for demographic factors, clinical status, and prediagnosis costs from the 6 months prior to the cancer diagnosis (Table 2). Treatment costs were $9,678 higher among AA men than for white men (95 % CI $3,994–$15,553; p < 0.01). From Table 2, the CI associated with the AME was larger using the bootstrap method than for the delta method. The CI calculated based on the t-test was narrow compared with the CI obtained via either the bootstrap or delta method. As seen in Table 2, the CI based on the t-test provided in Li and Mehandra [7] is too narrow because it is based on variation in the individual-level ME estimates (used when calculating the AME) rather than on variation in the AME estimate itself. It is recommended that analysts utilize either the delta method or a data-based method such as the bootstrap to estimate CIs for the AME.

3.3 Example 3: Factors Impacting Post-Stroke Hospital Discharge Disposition Using a Multinomial Model

This application of the multinomial model illustrates the calculation of AME and interaction ME. The dependent variable was post-stroke hospital discharge disposition and explanatory variables included demographic and clinical factors. The analysis used discharge data from non-Federal short-stay hospitals in the state of Maryland. Study inclusion criteria were as follows: (1) hospital admissions with a discharge diagnosis of stroke as identified by the International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) codes 431.XX-434.XX and 436.XX-438.XX [13]; (2) patients discharged alive; and (3) at least 18 years old at admission. Discharges were excluded for the following reasons: nature of admission is listed as “Delivery”; in-hospital death; no information on patient disposition; invalid or missing identifier. The dataset consisted of 69,921 hospital admissions for stroke between 2000 and 2005. Categories of discharge disposition included home (N = 7,730), home healthcare (N = 525), rehabilitation (N = 9,997), nursing home (including intermediate care) (N = 7,323), discharges against medical advice (N = 4,755), and all other (N = 39,591).

A multinomial logistic regression model was estimated. The predictor or log odds function was assumed to be linear in the variables with the addition of three interaction terms: (i) age × race; (ii) insured status × race; and (iii) hemorrhagic stroke × race. The first and third interaction terms reflect the potential for variation across age groups in the racial disparity in discharge outcomes [14] and the potential of a differential impact on discharge outcomes associated with the increased risk of hemorrhagic stroke among AA patients relative to Caucasians [15, 16]. The second interaction term was included to account for any differential effect on discharge outcome due to lower rates of insurance coverage for non-Caucasians compared to Caucasians [17]. Standard errors were estimated using a delete-d jackknife estimator with d equal to 10 % of the data selected randomly without replacement over 5,000 pseudo-random samples. The size of the datasets may task computer memory during simulations, so the delete-d jackknife method was selected. Model and ME estimation were accomplished using SAS, LIMDEP, and MATLAB. Codes are available in the Appendix.

Estimation results for the ME are reported in Table 3. Many of the individual and interaction ME were significant at a 10 % level of significance. A category of particular interest was the discharge against medical advice (AMA). Individual ME indicated that the likelihood of a discharge AMA was 0.6, 0.3, and 1.8 % points higher among patients who were, respectively, a transfer admission, male, or uninsured. The example also provided estimation of interaction ME. Non-Caucasian patients who were older and who were uninsured were less likely to discharge AMA than Caucasian patients. Compared to Caucasians, the likelihood of being sent home following a stroke was 32.7 % points lower among non-Caucasians, while the likelihood of receiving nursing/intermediate care was 22.5 % points higher among non-Caucasians. According to the interaction ME, the results depended on the patients’ insurance status. Among uninsured non-Caucasians, the likelihood of being sent home was 17 % points higher, the likelihood of receiving nursing/intermediate care was 6.8 % points lower, and the likelihood of receiving home healthcare was 1.4 % points lower compared to insured non-Caucasians and Caucasians.

4 Conclusion

With regards to the use and reporting of ME in cost and utilization studies, we have illustrated that: (1) the choice between MEM and AME may not result in material differences in the point estimate or its confidence interval; (2) the choice of estimator for calculating confidence intervals for the ME statistic results in meaningful differences in the width of the interval, with the t-test approach underestimating variation in the ME; (3) the ME and interaction ME provide unique insights regarding heterogeneity in health services utilization; (4) software packages differ with respect to the functionality for calculating ME and its confidence interval. This two-part primer reviewed the theory and application of ME to support its use and interpretation across a variety of models used in health services research.

References

Karaca-Mandic P, Norton EC, Dowd B. Interaction terms in nonlinear models. Health Serv Res. 2012;47(1 Pt 1):255–74.

SAS. SAS Version 9.2, Copyright 2002–2008 ed: SAS Institute.

STATA. STATA Version 11.2, Copyright 1985–2009 ed: StataCorp LP.

LIMDEP / NLOGIT. LIMDEP Version 10/NLOGIT Version 5, Copyright 1986–2012. ed: Econometric Software, Inc.

Greene W. Econometric analysis. 7th ed. Upper Saddle River: Prentice Hall; 2012.

Graubard B, Edwards L, Korn E. Predictive margins with survey data. Biometrics. 1999;55(2):652–9.

Li Z, Mahendra G. Using “recycled predictions” for computing marginal effects. SAS Global Forum 2010, Statistics and Data Analysis, Paper 272-2010; 2010.

Verlinda JA. A comparison of two common approaches for estimating marginal effects in binary choice models. Appl Econ Lett. 2006;13:77–80.

Dowd BE, Greene WH, Norton EC. Computation of standard errors. Health Serv Res. 2014;49(2):731–50.

SAS. Usage note 22604: marginal effect estimation for predictors in logistic and probit models; 2014.

STATA. STATA Manual: chapter 13 margins.

AJCC. Manual for Staging of Cancer. 6th ed. Philadelphia: Lippincott; 2002.

Fang J, Alderman MH. Trend of stroke hospitalization, United States, 1988–1997. Stroke. 2001;32(10):2221–6.

Feng W, Nietert PJ, Adams RJ. Influence of age on racial disparities in stroke admission rates, hospital charges, and outcomes in South Carolina. Stroke. 2009;40(9):3096–101.

Qureshi AI, Giles WH, Croft JB. Racial differences in the incidence of intracerebral hemorrhage: effects of blood pressure and education. Neurology. 1999;52(8):1617–21.

Sturgeon JD, Folsom AR, Longstreth WT Jr, Shahar E, Rosamond WD, Cushman M. Risk factors for intracerebral hemorrhage in a pooled prospective study. Stroke. 2007;38(10):2718–25.

Hargraves JL, Hadley J. The contribution of insurance coverage and community resources to reducing racial/ethnic disparities in access to care. Health Serv Res. 2003;38(3):809–29.

Ai C, Norton EC. Interaction terms in logit and probit models. Econ Lett. 2003;80:123–9.

Efron B, Tibshirani R. An introduction to the bootstrap. New York: Chapman and Hall; 1993

Acknowledgments

The authors have no conflicts of interest to declare.

The review and interpretation of findings are the sole responsibility of the authors. Eberechukwu Onukwugha drafted manuscript sections, conducted statistical analyses, revised the manuscript with input from all co-authors and serves as the overall guarantor for the work. Jason Bergtold conducted statistical analyses, drafted manuscript sections and reviewed, commented on, and edited all drafts of the manuscript. Rahul Jain conducted statistical analyses, drafted manuscript sections, reviewed, commented on, and edited all drafts of the manuscript.

Funding

No funding was received for the preparation of this article.

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

About this article

Cite this article

Onukwugha, E., Bergtold, J. & Jain, R. A Primer on Marginal Effects—Part II: Health Services Research Applications. PharmacoEconomics 33, 97–103 (2015). https://doi.org/10.1007/s40273-014-0224-0

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40273-014-0224-0