Abstract

Phase transformations in materials systems can be tracked using atomic force microscopy (AFM), which enables the examination of surface properties and macroscale morphologies. In situ measurements of phase transformations generate large datasets of time-lapse image sequences. Interpreting these sequences, guided by domain knowledge, requires manual image processing with handcrafted masks. This approach is time-consuming and restricts the number of images that can be processed. In this study, we developed an automated image processing pipeline that integrates object detection and image segmentation methods. We examine five time-series AFM videos of various fluoroelastomer phase transformations, each containing between roughly one hundred and one thousand frames. The pipeline automatically classifies and analyzes images to enable batch processing, and it tracks the growth of each individual fluoroelastomer crystallite through time. We incorporated statistical analysis into the pipeline to investigate trends in phase transformations across different fluoroelastomer batches. Understanding these phase transformations is crucial, as it can provide valuable insights into manufacturing processes, improve product quality, and possibly lead to the development of more advanced fluoroelastomer formulations.

Introduction

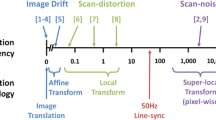

Atomic force microscopy (AFM) has revolutionized the way we visualize and understand the nano-world. It provides high-resolution, three-dimensional images of surfaces at the atomic level [1], making it an invaluable tool in materials science [2]. By offering scientists the ability to investigate surface topography, AFM has opened up new horizons in the study of surface science and nanotechnology. However, the rich information encapsulated in AFM images is not always straightforward to interpret and requires substantial domain knowledge and expertise [3].

AFM has been used to study fluoroelastomers [4, 5], high-performance polymers characterized by wear resistance, corrosion resistance, high-temperature resilience, and excellent air tightness [6]. The properties and performance of these materials are intrinsically tied to their nanoscale structure, in particular their crystallinity [7].

AFM image analysis poses several challenges. It is labor-intensive and demands substantial expertise, and object detection algorithms additionally require large amounts of labeled training data [8]. This dependence on manual work often leads to less accurate and inconsistent findings due to human error and bias, compromising the reliability of large-scale analyses [9]. Adding to this complexity, traditional image segmentation tools such as Gwyddion struggle with noise and irregular shapes and require manual intervention. They are time-consuming when many samples must be processed and often fall short in handling complex images and sample variations [10], which further limits their adaptability and efficiency in comprehensive analyses.

To address these challenges, we propose VizFCD (Visualization of Fluoroelastomer Crystallite Detection), an end-to-end image processing pipeline that focuses on analyzing the phase transformations captured in image sequences. We demonstrate it on fluoroelastomer AFM image sequences in the form of videos. VizFCD requires only a small number of annotated images and the raw AFM images as inputs. It combines object detection and segmentation algorithms and applies data augmentation to improve model performance, even with limited labels [11].

With the advent of computer vision technologies, the analysis of AFM images has seen significant improvements [12, 13]. Algorithms such as YOLO (You Only Look Once) for object detection [14] and DeepLab for image segmentation [15] can automate the detection and segmentation of fluoroelastomer grains, making the process more efficient, accurate, and consistent. These methods have proven to be successful in identifying complex patterns and structures in images, thereby overcoming some of the limitations of manual analysis. However, these methods still require a considerable amount of labeled training data, which is often challenging to obtain for scientific use.

VizFCD reduces the manual effort and time required for labeling and detection and provides automatic analysis of the results. By adopting data augmentation that integrates object detection and segmentation, it enables a few-shot learning-based detection [16] that can mitigate human biases in manual analysis. Moreover, it enhances the scalability of the analysis, enabling researchers to process and analyze larger datasets more efficiently.

VizFCD demonstrates how the combination of advanced imaging techniques with deep learning [17] can revolutionize the analysis of materials science problems. By providing an automated and streamlined approach to analyzing the crystallization behavior of fluoroelastomers, it offers important insights for the design and development of high-performance materials. This work exemplifies how deep learning and AI can be leveraged to enhance our understanding of materials at the nanoscale and help drive advancements in materials science.

Experimental and Analytical Details

FK-800 is a polymer composed of 75% chlorotrifluoroethylene (CTFE) and 25% vinylidene fluoride (VDF) [18,19,20]. When FK-800 is aged at temperatures ranging from 35 to 90\(^\circ \)C, it forms polychlorotrifluoroethylene (PCTFE) crystals [21].

This aging process is a crucial aspect to consider when analyzing fluoroelastomer phase transformation, as it significantly affects mechanical properties [22].

To prepare FK-800 for AFM analysis, the powdered sample was dissolved in ethyl acetate and spin-coated onto a silicon wafer. The aging process was induced by controlling the environmental temperature. Similarly, Kel-F, another fluoroelastomer, was prepared following the same method [23].

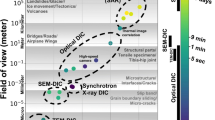

In our study, we used AFM to obtain height, phase, amplitude, and Z-sensor images for both Kel-F and FK-800 samples using a scanning density of 512 lines/frame, as shown in Fig. 1.

To analyze the AFM images and identify distinctive features, we first examined the images visually to identify distinct crystallization behaviors. We distinguished two types of crystallization behavior: spherulitic or isotropic growth [24], and lamellar or biaxial growth [25], as shown in Fig. 2. Having identified these two classes, we manually annotated AFM images to obtain training data for our classifiers. The trained classifiers were then used for fast feature detection, assigning the crystallites in new images to one of the two classes. This approach automates the identification of the different types of crystallites in new samples and significantly reduces the time and effort required for the analysis.

Data Ingestion and Pre-processing

Our pipeline starts with data ingestion, so that the raw datasets can be accessed and analyzed directly on our compute cluster. This step consists of data cleaning, data organization, and creating associations between the datasets and their respective metadata. These associations are established through a mapping that allows the data to be queried using CRADLE and HBase [26], ensuring efficient and fast retrieval. Centralizing our operations has the added benefit that we can fully script our workflow, apply encryption using REDCap [27] for added security, and easily write the outputs of our analyses or deep learning models back into the centralized environment to create new associations. We first identify the datasets that correspond to experiments. Raw files, metadata, and parameter files are ingested; macros, processed files in software-specific formats, and .txt files that do not pertain to the samples are not.

The AFM movies consist of processed AFM image sequences stitched into movies (.avi or .mov). The five movie files have a cumulative size of 900 MB, whereas, as shown in Table 1, the corresponding raw files total approximately 82 GB.

\(MDS^3-VizFCD\) Pipeline

Objective

The goal of the \(MDS^3\) (Materials Data Science for Stockpile Stewardship)-VizFCD Pipeline is to extract fluoroelastomer crystallites from raw AFM images, obtain their classification and location information, and automatically estimate their growth kinetics from the detection results. First, the pipeline applies an object detection algorithm, YOLO [14], to the raw images to obtain accurate information about the class and location of each fluoroelastomer crystallite. Second, the pipeline integrates an image segmentation algorithm, DeepLab [15], to measure crystallite areas accurately. The segmented images are then annotated and used as additional training data to improve the object detection model, enabling image retrieval functionality. Third, the pipeline analyzes the growth of the fluoroelastomer crystallites over time, using the position information obtained from object detection and the area information obtained from image segmentation.

Pipeline Framework

The \(MDS^3-VizFCD\) Pipeline accomplishes its objectives by incorporating and modularizing object detection, image segmentation, data augmentation, and statistical analysis. The pipeline is composed of four interconnected modules, as illustrated in Fig. 3. Users provide raw AFM images and a small set of annotated data to Module 1: Object Detection Module. The fluoroelastomer detection output of Module 1 serves as the input for Module 2: Image Segmentation Module. The segmentation output of Module 2 is then passed to Module 3: Data Augmentation Module, which produces augmented annotated images that are used as additional training data for Module 1 to improve detection accuracy. Furthermore, Modules 1 and 2 generate crystallite classification and area information, which serve as inputs for Module 4: Fluoroelastomer Crystallite Analysis Module. Finally, Module 4 outputs kinetics estimates that relate the area of each fluoroelastomer crystallite to time.
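The data flow between the four modules, including the feedback loop from Module 3 to Module 1, can be summarized as a small orchestration function. The sketch below is hypothetical: `run_vizfcd` and the `train`, `detect`, `segment`, `augment`, and `analyze` callables are placeholders standing in for the YOLO, DeepLab, augmentation, and kinetics steps, and the number of retraining rounds is an assumption.

```python
from typing import Callable, List, Sequence


def run_vizfcd(raw_images: Sequence, seed_annotations: List,
               train: Callable, detect: Callable, segment: Callable,
               augment: Callable, analyze: Callable, rounds: int = 1):
    """Hypothetical wiring of the four VizFCD modules (all callables are placeholders).

    `rounds` is how many times augmented annotations from Module 3 are fed back
    to retrain Module 1 before the final detections are passed to Module 4.
    """
    annotations = list(seed_annotations)
    for round_idx in range(rounds + 1):
        detector = train(annotations)                                   # Module 1: (re)train detector
        detections = [detect(detector, image) for image in raw_images]  # Module 1: classes and boxes
        masks = [segment(image, det) for image, det in zip(raw_images, detections)]  # Module 2
        if round_idx < rounds:
            # Module 3: segmentation masks become extra annotated training data for Module 1.
            annotations = list(seed_annotations) + list(augment(masks, detections))
    return analyze(detections, masks)                                   # Module 4: kinetics
```

Passing each module in as a callable keeps them swappable, for example exchanging YOLOv3, YOLOv4, or YOLOv7 behind `train` and `detect` without touching the rest of the flow.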

Modules

Module 1: Object Detection Module

In the Object Detection Module, we applied YOLO (You Only Look Once) [14], a real-time object detection algorithm that identifies both the category and the position of objects within an image in a single pass. The module takes as input the raw AFM data produced by the data ingestion step.

In contrast to typical region-based models, YOLO takes a completely different approach to object detection. It divides the input image into an \(S \times S\) grid, and each grid cell predicts a fixed number of bounding boxes along with class probabilities. Each bounding box is represented by five predictions: the \(x\) and \(y\) coordinates of its center, its width, its height, and a confidence score. The confidence score reflects both the likelihood that the box contains an object and how accurately the box fits that object.

Each grid cell also predicts a probability distribution over the possible classes. The final class-specific score of each bounding box is obtained by multiplying its confidence score by the class probability distribution of its cell, so that each bounding box is assigned to a specific class with a certain probability.
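The scoring step can be sketched in NumPy. This assumes the original YOLO (v1) output layout, in which each of the \(S \times S\) cells stores B boxes of (x, y, w, h, confidence) followed by C class probabilities shared by the cell; later versions such as YOLOv3 predict class scores per box instead, so this is illustrative only. The function name and the choice S = 7, B = 2, C = 2 (one class per growth behavior) are assumptions.

```python
import numpy as np


def class_specific_scores(pred, S, B, C):
    """Class-specific confidence for every box, shape (S, S, B, C).

    Assumes a YOLOv1-style output: each of the S*S cells holds B boxes of
    (x, y, w, h, confidence) followed by C class probabilities shared by the cell.
    """
    pred = np.asarray(pred, dtype=float).reshape(S, S, B * 5 + C)
    boxes = pred[..., : B * 5].reshape(S, S, B, 5)
    box_conf = boxes[..., 4]           # (S, S, B): confidence that the box holds an object
    class_prob = pred[..., B * 5:]     # (S, S, C): class probabilities given an object
    # Broadcast so every box in a cell is scored against that cell's class distribution.
    return box_conf[..., :, None] * class_prob[..., None, :]


# Hypothetical output with S = 7, B = 2, C = 2, i.e. 7 x 7 x 12 values.
pred = np.zeros((7, 7, 12))
pred[3, 4, 4] = 0.9            # confidence of box 0 in cell (3, 4)
pred[3, 4, 10:] = [0.2, 0.8]   # class probabilities of cell (3, 4)
scores = class_specific_scores(pred, S=7, B=2, C=2)
```

In a full detector, boxes whose best class score falls below a threshold would then be discarded and the remainder filtered with non-maximum suppression.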

This unique approach allows YOLO to predict multiple objects in different locations of the image in one forward pass, making it exceptionally efficient and suitable for real-time applications, with a substantial gain in speed compared to traditional region-based models [28].

We extended our analysis by incorporating YOLOv3 into our study [29]. This version of YOLO improved significantly on previous iterations by making predictions from feature maps at three different scales, which enhances the detection of objects of varying sizes. It uses a new backbone network, Darknet-53, which increases the depth and width of the network and thereby improves the model's performance. Although YOLOv3 offered substantial improvements in computational efficiency and speed, it left room for improvement in predictive accuracy.
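As a worked example of the three-scale design, the sketch below computes the output shape of each YOLOv3 detection head. It assumes the standard YOLOv3 configuration (strides of 32, 16, and 8 with three anchor boxes per scale), a 512 × 512 input chosen to match the 512-line AFM scans, and two classes for the two growth behaviors; the input size actually used for training is an assumption, and `yolov3_head_shapes` is a hypothetical helper.

```python
def yolov3_head_shapes(img_size, num_classes, anchors_per_scale=3, strides=(32, 16, 8)):
    """(grid, grid, channels) of each YOLOv3 detection head for a square input."""
    shapes = []
    for stride in strides:
        if img_size % stride:
            raise ValueError(f"input size {img_size} is not divisible by stride {stride}")
        grid = img_size // stride
        # Each anchor predicts 4 box offsets, 1 objectness score, and one score per class.
        channels = anchors_per_scale * (5 + num_classes)
        shapes.append((grid, grid, channels))
    return shapes


shapes = yolov3_head_shapes(512, num_classes=2)
total_boxes = sum(g * g for g, _, _ in shapes) * 3  # three anchors per grid cell
```

For a 512 × 512 input this gives 16 × 16, 32 × 32, and 64 × 64 grids with 3 × (5 + 2) = 21 channels each, or 3 × (256 + 1024 + 4096) = 16,128 candidate boxes per image. The finest grid is the one expected to help most with small, early-stage crystallites.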

By integrating YOLOv4 into this module, we achieved better object detection accuracy and speed than with previous versions of YOLO. YOLOv4 benefits from techniques such as CSPDarknet53, SPP, PAN, and the Mish activation function. CSPDarknet53 is a backbone architecture that improves feature reuse and reduces computation by introducing Cross-Stage Partial Network (CSP) [30] modules. This design connects different stages of the network, enabling features to be reused and reducing the overall number of computations required, which leads to faster processing and improved accuracy. Spatial Pyramid Pooling (SPP) [31] enables the network to capture features at multiple scales, which is particularly useful for object detection tasks in which objects vary greatly in size. Path Aggregation Network (PAN) [32] aggregates features across different levels of the network, improving detection accuracy. The Mish activation function [33] is a smooth, non-monotonic activation function reported to outperform traditional functions such as ReLU. Together, these techniques make YOLOv4 a powerful tool for object detection tasks that require both high accuracy and fast processing.
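The Mish activation mentioned above has a simple closed form, \(\text{mish}(x) = x \tanh(\ln(1 + e^{x}))\). The NumPy sketch below compares it with ReLU; it illustrates the formula only and is not the YOLOv4 implementation.

```python
import numpy as np


def mish(x):
    """Mish activation: x * tanh(softplus(x)), with softplus(x) = ln(1 + e^x)."""
    x = np.asarray(x, dtype=float)
    # np.logaddexp(0, x) = ln(e^0 + e^x) is a numerically stable softplus.
    return x * np.tanh(np.logaddexp(0.0, x))


def relu(x):
    """ReLU for comparison: negative inputs are set to zero."""
    return np.maximum(np.asarray(x, dtype=float), 0.0)


xs = np.linspace(-3, 3, 7)
comparison = np.stack([xs, mish(xs), relu(xs)], axis=1)
```

Unlike ReLU, which zeroes every negative input, Mish is smooth and lets small negative values through (its minimum is about -0.31), a property its authors associate with better gradient flow.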

We also investigated the cutting-edge YOLOv7 for object detection [34]. This latest addition to the YOLO family delivers a substantial leap in real-time object detection accuracy without increasing inference costs. YOLOv7 substantially reduces parameters by approximately 40% and computation by 50%, compared to other state-of-the-art real-time object detectors. It offers a faster, more potent network architecture, leading to higher detection accuracy and quicker inference speeds.

YOLOv7 introduces significant architectural changes, including the Extended Efficient Layer Aggregation Network (E-ELAN), model scaling for concatenation-based models, a trainable bag of freebies, planned re-parameterized convolution, and coarse-for-auxiliary and fine-for-lead loss. These enhancements provide more effective feature integration, more robust loss functions, and more efficient label assignment and model training. As a result, YOLOv7 requires significantly less computing hardware than other YOLO versions, and it can be trained more rapidly on small datasets without any pre-trained weights.

Overall, the incorporation of YOLOv3, YOLOv4, and YOLOv7 into our object detection module has not only allowed us to compare and contrast their performance, but also to harness their unique strengths for our specific needs. We integrated the YOLO family into a built-in library for the object detection module of our general framework. As a result, we optimized both the accuracy and speed of object detection, enabling more efficient processing of AFM datasets with limited labeled data.

Module 2: Image Segmentation Module

In this module, we performed image segmentation on AFM time-series images of fluoroelastomer thin films, using the results of Module 1 to segment crystallite areas accurately. The segmentation analysis also yields geometric features such as borders, distances, geometric centers, angles, and radii. These features are essential because they characterize the detected crystallite objects and support our downstream data-driven analysis.

DeepLab [15] is a popular image segmentation architecture that has achieved state-of-the-art results on several benchmark datasets. It is based on the Fully Convolutional Network (FCN) architecture [35] and uses atrous convolution [36], also called dilated convolution [37], to capture multi-scale contextual information effectively. DeepLab also introduces an Atrous Spatial Pyramid Pooling (ASPP) module, which captures features at multiple scales by applying convolutions with different dilation rates, so that objects of different sizes in the image can be segmented accurately. Another important feature of DeepLab is a global pooling layer, which aggregates information from the entire feature map into a fixed-size representation of the image. This lets the network capture global contextual information, which is particularly important for segmenting objects in complex scenes. Its use of atrous convolution and the ASPP module, together with its incorporation of global contextual information, makes DeepLab well suited to segmenting isolated fluoroelastomer crystallites in our module.
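To make atrous convolution concrete, the one-dimensional NumPy sketch below spaces the kernel taps `rate` samples apart, so a k-tap kernel covers k + (k - 1)(rate - 1) input samples without adding weights. ASPP, in essence, runs several such branches with different rates in parallel and concatenates their outputs. This is a didactic sketch rather than DeepLab's implementation, and `atrous_conv1d` is a hypothetical name.

```python
import numpy as np


def atrous_conv1d(signal, kernel, rate=1):
    """'Valid' 1-D atrous (dilated) convolution in the cross-correlation form used by CNNs.

    Kernel taps are `rate` samples apart, so a k-tap kernel spans
    k + (k - 1) * (rate - 1) input samples with the same number of weights.
    """
    signal = np.asarray(signal, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    k = len(kernel)
    span = k + (k - 1) * (rate - 1)
    out_len = max(len(signal) - span + 1, 0)
    return np.array([
        sum(kernel[j] * signal[i + j * rate] for j in range(k))
        for i in range(out_len)
    ])


x = np.arange(10)
narrow = atrous_conv1d(x, [1, 0, -1], rate=1)  # 8 outputs, each x[i] - x[i + 2]
wide = atrous_conv1d(x, [1, 0, -1], rate=2)    # 6 outputs, each x[i] - x[i + 4]
```

With the 3-tap difference kernel [1, 0, -1], rate 1 compares samples two apart and rate 2 compares samples four apart, so the receptive field grows while the parameter count stays at three.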

Module 3: Data Augmentation Module

The lack of annotated training examples is another challenge. In response, we perform data augmentation [11] to generate synthetic training images that mimic the distribution of the real ones, improving the generality and accuracy of our detection framework. In the Data Augmentation Module, we built on the output of Module 2's image segmentation and emulated the output format of image annotation tools, producing annotated data similar to those generated by such tools. The primary objective was to increase the number of images in our training set and thereby improve object detection accuracy.

We have selected LabelMe [38] as our preferred labeling tool. LabelMe is a graphical image annotation tool that can label images using polygons, rectangles, circles, polylines, line segments, and points. It generates training datasets in JSON format, which, after proper formatting, can be fed into our object detection algorithm as training data.

Module 3 took the segmented object output from Module 2 and processed it further to achieve data augmentation, as shown in Fig. 4. Using the pixels drawn by Module 2, Module 3 extracted a mask for each fluoroelastomer crystallite, isolating the crystallite and defining its boundary. The process involved the following steps:

1. For each fluoroelastomer crystallite in Module 2's segmentation result, Module 3 identified its boundary coordinates by analyzing changes in pixel intensity or color.

2. Once the boundary coordinates were determined, Module 3 connected them into a well-defined shape that accurately represents the original crystallite.

3. Module 3 then generated a label for the crystallite, containing information such as the crystallite type (defined by Module 1) and any additional attributes or properties.

4. A script then reproduced the annotation tool's JSON output format, combining the label and the boundary coordinates into annotated data for each crystallite.

5. Finally, Module 3 combined the annotated data for all crystallites in the image into a single JSON dataset, ready to be used as training data for the object detection algorithm.
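The steps above can be sketched as follows. The sketch makes two simplifying assumptions. First, boundary pixels are ordered by angle around the mask centroid, which is adequate for roughly star-shaped objects such as spherulites but not for arbitrary shapes (a contour-tracing routine such as OpenCV's `findContours` handles the general case). Second, the JSON fields are modeled on LabelMe's annotation files and should be checked against a file saved by the installed LabelMe version. The function names, label strings, and file name are hypothetical.

```python
import json

import numpy as np


def mask_to_polygon(mask):
    """Steps 1-2: ordered boundary points [[x, y], ...] of one binary crystallite mask."""
    m = np.pad(np.asarray(mask, dtype=bool), 1)  # pad with False so edge pixels count as boundary
    core = m[1:-1, 1:-1]
    interior = core & m[:-2, 1:-1] & m[2:, 1:-1] & m[1:-1, :-2] & m[1:-1, 2:]
    ys, xs = np.nonzero(core & ~interior)        # mask pixels with a 4-neighbour outside the mask
    if len(xs) == 0:
        raise ValueError("empty mask")
    cy, cx = ys.mean(), xs.mean()
    order = np.argsort(np.arctan2(ys - cy, xs - cx))  # walk around the centroid (star-shape assumption)
    return [[float(xs[i]), float(ys[i])] for i in order]


def to_labelme(masks, labels, image_path, height, width):
    """Steps 3-5: combine labels and polygons into one LabelMe-style annotation dict."""
    shapes = [
        {"label": label, "points": mask_to_polygon(mask), "group_id": None,
         "shape_type": "polygon", "flags": {}}
        for mask, label in zip(masks, labels)
    ]
    return {
        "version": "5.0.1",  # assumption: use the version string of your LabelMe install
        "flags": {},
        "shapes": shapes,
        "imagePath": image_path,
        "imageData": None,   # pixels are not embedded; the file references imagePath
        "imageHeight": height,
        "imageWidth": width,
    }


yy, xx = np.mgrid[:20, :20]
disk = (yy - 10) ** 2 + (xx - 10) ** 2 <= 25   # synthetic circular "spherulite"
annotation = to_labelme([disk], ["spherulitic"], "frame_0001.png", 20, 20)
text = json.dumps(annotation, indent=2)
```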

Through data augmentation, we increased both the diversity and the quantity of the training dataset, which are essential for reducing overfitting and improving the model's generalization. By focusing on the best-performing segmentation results for fluoroelastomer crystallites with spherulitic growth, we created an enriched, high-quality training dataset. This, in turn, improved the performance and robustness of our object detection model on new, unseen data.

Workflow for Module 3: Data Augmentation. The process begins with utilizing results from the Object Detection and Image Segmentation Modules. Each fluoroelastomer crystallite is then isolated using extracted masks, which precisely define their boundaries. This augmentation subsequently aids in retraining the Object Detection Module

Module 4: Fluoroelastomer Crystallite Analysis Module

In this module, we complete the end-to-end framework by linking the few-shot detection modules to the fluoroelastomer crystallite analysis. Because the AFM images under study form a time series, while object detection operates on one frame at a time, detection alone cannot track a crystallite through temporal changes. To study the behavior of individual fluoroelastomer crystallites over time, Module 4 therefore performs crystallite tracking.

The object detection algorithm of Module 1 provides the class and location of each fluoroelastomer crystallite as a bounding box, from which we calculate the geometric center of the box. We then pool the detections from all time points and use the DBSCAN algorithm to cluster the crystallites according to their geometric centers. Crystallites assigned to the same cluster are treated as the same physical crystallite observed at different times, which allows their behavior to be studied.

Density-Based Spatial Clustering of Applications with Noise (DBSCAN) [39, 40] is a density-based clustering algorithm that defines a cluster as a maximal set of density-connected samples: points with enough neighbors within a given radius form dense cores, clusters grow from these cores by density reachability, and isolated points are labeled as noise. This makes DBSCAN well suited to tracking specific fluoroelastomer crystallites over time, because repeated detections of the same crystallite form a dense group of nearby centers, and the number of crystallites does not need to be specified in advance.
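The tracking step can be sketched with a minimal NumPy DBSCAN applied to bounding-box centers pooled over all frames. A library implementation such as scikit-learn's `sklearn.cluster.DBSCAN` would normally be used in practice; the hand-written version below only makes the core-point and density-reachability logic visible. The function name, coordinates, `eps`, and `min_samples` values are hypothetical.

```python
import numpy as np


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN: one cluster label per point, -1 for noise."""
    pts = np.asarray(points, dtype=float)
    n = len(pts)
    dists = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    neighbors = [np.flatnonzero(dists[i] <= eps) for i in range(n)]  # includes the point itself
    is_core = np.array([len(nb) >= min_samples for nb in neighbors])
    labels = np.full(n, -1)
    cluster = 0
    for i in range(n):
        if labels[i] != -1 or not is_core[i]:
            continue
        labels[i] = cluster
        stack = [i]
        while stack:                      # expand the cluster by density reachability
            j = stack.pop()
            if not is_core[j]:
                continue                  # border points join a cluster but do not expand it
            for q in neighbors[j]:
                if labels[q] == -1:
                    labels[q] = cluster
                    stack.append(q)
        cluster += 1
    return labels


# Hypothetical bounding-box centers (in pixels) pooled from three frames.
centers = [(100, 100), (101, 99), (102, 101),
           (300, 250), (299, 251), (301, 252),
           (500, 20)]
track_ids = dbscan(centers, eps=5.0, min_samples=2)
```

Here the three detections near (100, 100) become crystallite 0, the three near (300, 250) become crystallite 1, and the isolated detection is labeled -1 (noise). `eps` must be large enough to absorb the drift of a growing crystallite's center between frames but smaller than the spacing between neighboring crystallites, otherwise they merge.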

After identifying the same fluoroelastomer crystallite across time points, we used its area obtained from Module 2 to estimate its growth kinetics over time. This is a regression problem: combining the location data from Module 1 with the area data from Module 2, we fit the Avrami equation to each crystallite and characterize its growth trend by the rate constant, k.

The Avrami equation [41] is a widely used model to describe the kinetics [42] of phase transformations. It expresses the fraction of transformed material as a function of time:

\[ X = 1 - \exp\left(-k t^{n}\right) \]

where X is the fraction of transformed material, k is the rate constant, t is time, and n is the Avrami exponent. The rate constant k is related to the rates of nucleation and growth of the new phase; a higher value of k indicates a faster transformation. The Avrami exponent n reflects the growth mechanism of the new phase: for instantaneous (site-saturated) nucleation, n = 1 corresponds to one-dimensional growth, n = 2 to two-dimensional growth, and n = 3 to three-dimensional growth.

We chose the Avrami equation in this study because it provides a suitable mathematical model of the growth of fluoroelastomer crystallites. Its ability to account for both nucleation and growth rates allowed us to analyze and predict the transformation process over time. In our case, we used n = 2 to represent two-dimensional growth: the crystallites are about 50 nm thick but microns across laterally, so most of their growth can be approximated as two-dimensional. By fitting the Avrami equation to our data, we estimated the rate constant, k, for each crystallite, which helps us better understand and predict the growth trends of fluoroelastomer crystallites.
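With n fixed at 2, k can be estimated from the linearized form \(\ln(-\ln(1 - X)) = \ln k + n \ln t\). The sketch below does this with NumPy least squares. It assumes that each crystallite's area has already been converted into a transformed fraction X strictly between 0 and 1 (how area is normalized into X is not specified in this section), and `fit_avrami_k` is a hypothetical name. A nonlinear fit, for example with `scipy.optimize.curve_fit`, weights the data differently and may give somewhat different k values on noisy data.

```python
import numpy as np


def fit_avrami_k(t, X, n=2.0):
    """Least-squares estimate of k in X(t) = 1 - exp(-k * t**n) with n held fixed.

    Uses ln(-ln(1 - X)) = ln k + n ln t, so only points with t > 0 and
    0 < X < 1 contribute.
    """
    t = np.asarray(t, dtype=float)
    X = np.asarray(X, dtype=float)
    ok = (t > 0) & (X > 0) & (X < 1)
    if not ok.any():
        raise ValueError("need at least one point with t > 0 and 0 < X < 1")
    residual = np.log(-np.log(1.0 - X[ok])) - n * np.log(t[ok])
    # With n fixed, the least-squares estimate of ln k is the mean of these residuals.
    return float(np.exp(residual.mean()))


t = np.arange(1, 11, dtype=float)   # hypothetical frame times
X = 1 - np.exp(-0.01 * t ** 2)      # synthetic data generated with k = 0.01, n = 2
k_hat = fit_avrami_k(t, X)          # recovers k = 0.01 up to floating-point rounding
```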

Evaluation Metrics

In our experiments, we used several metrics to evaluate the effect of the data augmentation module. mAP (mean average precision) is commonly used to measure the performance of object detection algorithms. It is obtained by computing the average precision (AP), the area under the precision-recall curve, for each class and then averaging the AP values over all classes [43].
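The AP and mAP computation can be sketched as follows. This version uses all-point interpolation of the precision-recall curve, as in PASCAL VOC from 2010 onward; other conventions (11-point interpolation, or COCO-style averaging over several IoU thresholds) give different numbers, and the convention behind the reported values is an assumption here. Matching detections to ground truth (deciding `is_tp`, typically at IoU ≥ 0.5) is assumed to have been done beforehand, and the function names are hypothetical.

```python
import numpy as np


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: whether each detection matched
    a ground-truth object; num_gt: number of ground-truth objects of this class.
    """
    order = np.argsort(-np.asarray(scores, dtype=float))  # rank detections by confidence
    tp = np.asarray(is_tp, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    recall = cum_tp / num_gt
    precision = cum_tp / (cum_tp + cum_fp)
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]  # precision envelope (non-increasing)
    idx = np.flatnonzero(mrec[1:] != mrec[:-1])      # points where recall changes
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def mean_average_precision(per_class):
    """per_class: iterable of (scores, is_tp, num_gt) tuples, one per class."""
    return float(np.mean([average_precision(*c) for c in per_class]))


ap_example = average_precision([0.9, 0.8, 0.7], [1, 0, 1], num_gt=2)
```

In this example the recall steps are 0.5 at precision 1 and another 0.5 at precision 2/3, so AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.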

We also used recall [44] to evaluate the effect of the data augmentation module. Recall is the ratio of true positives (TP) to the sum of true positives and false negatives (FN):

\[ \text{Recall} = \frac{TP}{TP + FN} \]

The Recall value ranges from 0 to 1, where a higher value indicates a better ability of the model to capture the positive instances accurately.

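The F1-score combines precision and recall into a single value, providing an overall measure of the model's performance. It is the harmonic mean of precision and recall and helps assess the balance between them. With precision defined as \(\text{Precision} = TP/(TP + FP)\), where FP is the number of false positives, the F1-score is given by:

\[ F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \]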

A higher F1-score indicates a better trade-off between precision and recall, reflecting a more accurate and comprehensive object detection performance.
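The recall and F1 definitions, together with precision = TP / (TP + FP), reduce to a few lines. The sketch below is a generic illustration from detection counts; returning 0 when a denominator is zero is a common convention that is assumed here, and the function name is hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1-score from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall; equivalent to 2TP / (2TP + FP + FN).
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=4)
```

For 8 true positives, 2 false positives, and 4 false negatives this gives precision 0.8, recall 2/3, and F1 = 16/22 ≈ 0.727.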

mIOU (mean Intersection over Union) is a widely used evaluation metric for image segmentation. It averages the Intersection over Union (IOU) [45] over all classes, providing an overall assessment of the Image Segmentation Module's accuracy. For each class, IOU measures the overlap between the predicted segmentation P and the ground-truth label G as the ratio of their intersection area to their union area, \(\text{IOU} = |P \cap G| / |P \cup G|\). IOU values range from 0 to 1, where a higher value indicates better alignment between the predicted and ground-truth segmentations.
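For label maps, IOU and mIOU can be computed as below. Classes absent from both prediction and ground truth are skipped rather than counted, which is one of several conventions in use; the function names and the toy label maps are hypothetical.

```python
import numpy as np


def iou(pred_mask, gt_mask):
    """Intersection over union of two boolean masks (1.0 if both are empty, by convention)."""
    pred_mask = np.asarray(pred_mask, dtype=bool)
    gt_mask = np.asarray(gt_mask, dtype=bool)
    union = np.logical_or(pred_mask, gt_mask).sum()
    if union == 0:
        return 1.0
    return float(np.logical_and(pred_mask, gt_mask).sum() / union)


def mean_iou(pred_labels, gt_labels, num_classes):
    """Mean of per-class IOU over classes present in the prediction or the ground truth."""
    pred_labels = np.asarray(pred_labels)
    gt_labels = np.asarray(gt_labels)
    ious = [iou(pred_labels == c, gt_labels == c)
            for c in range(num_classes)
            if (pred_labels == c).any() or (gt_labels == c).any()]
    return float(np.mean(ious))


gt = np.zeros((4, 4), dtype=int)
gt[:2, :] = 1      # ground-truth crystallite (class 1) covers 8 pixels
pred = np.zeros((4, 4), dtype=int)
pred[:3, :] = 1    # prediction over-segments it to 12 pixels
score = mean_iou(pred, gt, num_classes=2)
```

In this toy example the predicted crystallite overlaps the true one in 8 of 12 pixels (IOU 2/3), while the background scores 0.5, giving an mIOU of about 0.58.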

Note that in object detection tasks, mAP [14] is generally considered a more reliable performance indicator than single-threshold metrics, because it summarizes precision over the full range of recall values and thus reflects the model's ability to balance the two. In image segmentation tasks, mIOU serves as the corresponding performance indicator.

Dataset Splitting and Augmentation Strategy

To investigate the impact of the Data Augmentation Module on the Object Detection Module, we employed a systematic and comprehensive approach. Starting from an initial dataset of 60 annotated images of 512 × 512 pixels drawn from the five AFM movies, we assessed the model's performance under various levels of data augmentation while ensuring that the results remained comparable and reliable across experiments. To this end, a consistent split of 60

To examine the impact of data augmentation thoroughly, different levels of augmentation were applied, specifically adding 0, 30, 60, 100, and 150 augmented images from the Data Augmentation Module to the training set. Crucially, these augmented images were added only to the training data; the validation and test sets were left unaltered to preserve the integrity of the evaluation.

This strategy, ensuring uniformity in dataset partitioning and augmentation application, established a controlled experimental setting. It allowed for an unbiased comparison of the model’s adaptability, robustness, and performance under different degrees of data diversity introduced through augmentations. By maintaining the same images for validation and testing across all levels of augmentation, we were able to accurately isolate and assess the impact of data augmentation on the model’s learning efficacy and generalization capabilities.
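A minimal sketch of this design, assuming a seeded shuffle: one fixed split of the original images is reused for every augmentation level, and augmented images are appended to the training set only. The split sizes are explicit parameters; the 20/20/20 values in the example are placeholders, with only the 20 training images taken from the Results section. `build_experiments` and the image IDs are hypothetical.

```python
import random


def build_experiments(image_ids, augmented_ids, n_train, n_val,
                      levels=(0, 30, 60, 100, 150), seed=0):
    """One fixed train/val/test split reused for every augmentation level.

    Augmented images are appended to the training set only; validation and
    test sets are identical across levels.
    """
    if n_train + n_val > len(image_ids):
        raise ValueError("not enough images for the requested split")
    ids = sorted(image_ids)
    random.Random(seed).shuffle(ids)  # fixed seed, so the split is reproducible
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    experiments = {}
    for level in levels:
        if level > len(augmented_ids):
            raise ValueError(f"only {len(augmented_ids)} augmented images available")
        # Taking a prefix keeps the training sets nested across levels.
        experiments[level] = {"train": train + list(augmented_ids[:level]),
                              "val": val, "test": test}
    return experiments


originals = [f"img{i:02d}" for i in range(60)]
augmented = [f"aug{i:03d}" for i in range(150)]
exps = build_experiments(originals, augmented, n_train=20, n_val=20)
```

Taking the first `level` augmented images makes the training sets nested (the 60-image level contains the 30-image level), so differences between levels come from added data rather than from a different random draw.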

Results

Evaluation of Object Detection Module

Model Performance

In our experiments, we carried out a comparative analysis of the impact of data augmentation on Module 1: Object Detection. We evaluated three versions of YOLO (YOLOv3, YOLOv4, and YOLOv7) in combination with various levels of data augmentation. Initially, our model was trained on a relatively small dataset of 20 annotated images. To study the effect of data augmentation, we extended this dataset with 30, 60, 100, and 150 augmented images, respectively, and compared the resulting loss and mean average precision (mAP) values during the training phase.

Figures 5 and 6 present the mAP, F1-score, and training loss of each YOLO model at each level of data augmentation. These results show how detection performance depends on both the YOLO version and the amount of augmented data.

Comparison of performance metrics for different YOLO versions across varying numbers of data augmentation images on the test set. a Mean average precision (mAP), indicating the accuracy of object detection for each version. b F1-score, showing the balance between precision and recall, with YOLOv7 leading in most cases. The results consistently show the performance improvement of YOLOv7 over YOLOv3 and YOLOv4, especially as the number of augmentation images increases

Training loss across iterations for various models and data augmentation levels. The plot illustrates the evolution of the training loss over iterations for three object detection models: YOLOv3, YOLOv4, and YOLOv7, each subjected to different data augmentation levels (0, 30, 60, 100, and 150). Each line represents a unique combination of model type and data augmentation level, with 15 distinct color-coded lines in total

Case Study of Object Detection Module

Figure 7a–d shows object detection results of Module 1 on various AFM images after the augmented data from Module 3 were added to its training data. We drew bounding boxes in two colors to differentiate between crystallites with two distinct growth trends. The pink bounding box represents crystallization behavior in spherulitic or isotropic growth, while the green bounding box indicates crystallization behavior in lamellar or biaxial growth.

Results of YOLO detection for Kel-F and FK-800. The pink bounding box represents crystallization behaviors in spherulitic or isotropic growth, while the green bounding box indicates crystallization behaviors in lamellar or biaxial growth. YOLO object detection results for a FK-800 lot G-mixed, b FK-800 lot G, c FK-800 lot H, and d FK-800 lot Q

Evaluation of Image Segmentation Module

To ensure the accuracy and reliability of the segmentation results from Module 2, the performance of the DeepLab algorithm in segmenting crystallization behaviors exhibiting spherulitic or isotropic growth was assessed by specialists in this domain.

Training DeepLab produced a model weight file. We applied the trained model to the AFM images and used OpenCV [46] to draw red pixels over the segmented spherulites.

These red pixels correspond to the segments determined by DeepLab and were converted into physical distances and areas. Plotting these areas over time allowed us to observe and analyze the crystallization behavior, as shown in Fig. 8. We also compared the areas obtained by combining each version of the Object Detection Module with the Image Segmentation Module against ground-truth areas measured with Gwyddion, as shown in Fig. 9.
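The conversion from red pixels to physical area is simple arithmetic once the scan size is known. The sketch below assumes a square scan of 512 lines with square pixels, an overlay in OpenCV's BGR channel order drawn in pure red (0, 0, 255), and a hypothetical 5.12 µm scan size chosen so that one pixel is 10 nm wide; the actual scan sizes are not given in this section, and the function names are hypothetical.

```python
import math

import numpy as np


def red_pixel_mask(overlay_bgr):
    """Boolean mask of pure-red pixels in a BGR overlay (assumed drawing colour (0, 0, 255))."""
    return np.all(np.asarray(overlay_bgr) == (0, 0, 255), axis=-1)


def mask_area_um2(mask, scan_size_um, pixels_per_line=512):
    """Physical area of a segmented region, assuming square pixels over a square scan."""
    pixel_um = scan_size_um / pixels_per_line
    return float(np.count_nonzero(mask)) * pixel_um ** 2


def equivalent_radius_um(area_um2):
    """Radius of the disc with the same area, a simple size measure for a spherulite."""
    return math.sqrt(area_um2 / math.pi)


overlay = np.zeros((512, 512, 3), dtype=np.uint8)
overlay[100:110, 100:110] = (0, 0, 255)           # hypothetical 10 x 10 pixel segment
mask = red_pixel_mask(overlay)
area = mask_area_um2(mask, scan_size_um=5.12)    # hypothetical scan size
radius = equivalent_radius_um(area)
```

With 10 nm pixels, a 10 × 10 pixel region is 0.01 µm², equivalent to a disc of radius about 0.056 µm.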

Overall, our study demonstrates that the algorithm of Module 2: Image Segmentation Module is a feasible and effective tool for segmenting spherulitic structures, and that the segmented images can serve as input to Module 3: Data Augmentation Module.

Fig. 8. Subfigures a, b, and c show original fluoroelastomer images output by the Object Detection Module (Original) side by side with the corresponding results of the DeepLab Image Segmentation Module (DeepLab). The juxtaposition highlights the segmentation that the Image Segmentation Module produces on AFM images.

Fig. 9. Comparison of the image segmentation areas predicted by three YOLO + DeepLabV3 model combinations with the ground-truth area (Gwyddion). The table in the lower right corner lists each model's mean intersection over union (mIoU) and accuracy. YOLOv3 + DeepLabV3 has an mIoU of 0.75 and an accuracy of 0.92. YOLOv4 + DeepLabV3 achieves an mIoU of 0.82 and an accuracy of 0.94. YOLOv7 + DeepLabV3 outperforms the others with an mIoU of 0.88 and an accuracy of 0.96.
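For reference, mIoU and pixel accuracy are commonly computed from a pixel-level confusion matrix, as in the sketch below. The caption does not say how the reported values were averaged. This sketch therefore assumes two classes (background and crystallite) and averages IoU over the classes that are present.

```python
import numpy as np


def confusion_matrix(pred, target, num_classes):
    """Pixel-level confusion matrix: rows are ground-truth classes, columns are predictions."""
    idx = target.astype(np.int64).ravel() * num_classes + pred.astype(np.int64).ravel()
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def miou_and_accuracy(pred, target, num_classes=2):
    """Mean IoU over classes present in pred or target, plus overall pixel accuracy."""
    cm = confusion_matrix(pred, target, num_classes)
    tp = np.diag(cm).astype(float)
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp  # |pred OR gt| for each class
    present = union > 0                           # ignore classes absent from both maps
    miou = float(np.mean(tp[present] / union[present]))
    accuracy = float(tp.sum() / cm.sum())         # fraction of correctly labelled pixels
    return miou, accuracy


# Usage: 0 = background, 1 = crystallite.
target = np.array([[0, 0, 1, 1]])
pred = np.array([[0, 1, 1, 1]])
miou, acc = miou_and_accuracy(pred, target)  # IoUs 1/2 and 2/3 -> mIoU 7/12; accuracy 0.75
```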

Fluoroelastomer Crystallite Clustering

To track the same fluoroelastomer crystallites across AFM images over time, we used Fluoroelastomer Crystallite Clustering in Module 4 to cluster the geometric centers of the bounding boxes produced by YOLO. Based on the DBSCAN clustering results, we assigned an ID to each crystallite within an AFM batch. We applied this method to various AFM batches. To validate the resulting clusters, domain experts inspected them visually, drawing on their extensive experience with the appearance and characteristics of fluoroelastomer crystallites in AFM images. The experts judged the results feasible, as illustrated in Fig. 10a and b.
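The clustering idea can be illustrated with a minimal, dependency-free DBSCAN over bounding-box centers pooled from all frames. The centers of one crystallite stay close from frame to frame, so they chain into a single cluster whose label serves as the crystallite ID. Isolated detections are labeled as noise (`-1`). This is a sketch, not the authors' implementation. The helper names and the `eps` and `min_samples` values are hypothetical and would need tuning to the image scale and to how far a growing crystallite's center drifts between frames. In practice, `sklearn.cluster.DBSCAN(eps=..., min_samples=...).fit_predict(centers)` provides the same algorithm.

```python
import math
from collections import deque


def bbox_center(box):
    """Geometric center (cx, cy) of a box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN; returns one label per point, with -1 marking noise."""
    n = len(points)
    labels = [None] * n  # None means "not visited yet"

    def neighbors(i):
        # Brute-force eps-neighborhood; includes the point itself.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster_id = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        labels[i] = cluster_id  # i is a core point: start a new cluster
        queue = deque(seeds)
        while queue:
            j = queue.popleft()
            if labels[j] == -1:
                labels[j] = cluster_id  # former noise reachable from a core point: border point
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_seeds = neighbors(j)
            if len(j_seeds) >= min_samples:  # only core points expand the cluster
                queue.extend(j_seeds)
        cluster_id += 1
    return labels


def assign_crystallite_ids(frames, eps, min_samples):
    """frames: per-frame lists of YOLO boxes. Returns (frame_index, box, crystallite_id) tuples."""
    records = [(f, box) for f, boxes in enumerate(frames) for box in boxes]
    labels = dbscan([bbox_center(box) for _, box in records], eps, min_samples)
    return [(f, box, cid) for (f, box), cid in zip(records, labels)]


# Usage: two crystallites tracked over three frames, plus one isolated detection.
frames = [
    [(10, 10, 20, 20), (100, 100, 120, 120)],
    [(11, 10, 21, 21), (99, 101, 121, 122)],
    [(10, 11, 22, 22), (101, 100, 123, 121), (300, 300, 305, 305)],
]
tracked = assign_crystallite_ids(frames, eps=5.0, min_samples=2)
ids = [cid for _, _, cid in tracked]  # [0, 1, 0, 1, 0, 1, -1]
```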

Growth of Crystallite through Time Sequence AFM Images and Kinetics Analysis

Using the area of each fluoroelastomer crystallite derived from Module 3, we plotted crystallite area against the acquisition time, in seconds, taken from the metadata of each AFM image.

We then modeled the data by regression fitting of the Avrami equation [47]. Using the cluster outputs from Fluoroelastomer Crystallite Clustering, we assigned each crystallite a specific ID for tracking. Once all fluoroelastomer crystallites in a set of AFM images had IDs, we fit the model to find the relationship between time and crystallite area. Fig. 11 shows the kinetic parameters for each crystallite in AFM batch G, and Table 2 summarizes the kinetics results for each AFM video. Table 2 gives the rate kinetics of crystallite growth in the different fluoroelastomer batches. For each sample batch, it lists the number of frames analyzed, the number of crystallites detected, and several rate-constant statistics: the average rate constant (\( {\bar{k}} \)), harmonic mean rate constant (\( {\tilde{k}} \)), standard deviation of rate constants (\( \sigma _k \)), maximum rate constant (\( k_{\text {max}} \)), and minimum rate constant (\( k_{\text {min}} \)). These data offer insight into crystallite growth across the fluoroelastomer batches.
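The per-crystallite fit and the Table 2 summary can be sketched as follows. The sketch uses the Avrami equation in its usual form \( X(t) = 1 - \exp(-k t^{n}) \), with the normalized area playing the role of \( X \). It fits this equation through the linearized Avrami plot \( \ln[-\ln(1 - X)] = \ln k + n \ln t \). The section does not say whether the authors used this linearization or a nonlinear least-squares fit, or whether \( n \) was held fixed, so these choices are assumptions. The harmonic mean is taken as \( \tilde{k} = N / \sum_{i} k_i^{-1} \). Using the sample standard deviation for \( \sigma_k \) is likewise an assumption.

```python
import numpy as np


def fit_avrami(t, x):
    """Fit X(t) = 1 - exp(-k * t**n) and return (k, n).

    Uses the linearized form ln(-ln(1 - X)) = ln k + n ln t, which is only
    defined for t > 0 and 0 < X < 1, so other points are dropped.
    """
    t = np.asarray(t, dtype=float)
    x = np.asarray(x, dtype=float)
    ok = (t > 0) & (x > 0) & (x < 1)
    n, ln_k = np.polyfit(np.log(t[ok]), np.log(-np.log(1.0 - x[ok])), 1)
    return float(np.exp(ln_k)), float(n)


def summarize_rates(ks):
    """Summary statistics of per-crystallite rate constants, as listed for Table 2."""
    ks = np.asarray(ks, dtype=float)
    return {
        "mean": float(ks.mean()),
        "harmonic_mean": float(len(ks) / np.sum(1.0 / ks)),
        "std": float(ks.std(ddof=1)),  # sample standard deviation (assumption)
        "max": float(ks.max()),
        "min": float(ks.min()),
    }


# Usage: synthetic, noise-free data generated with k = 1e-4 and n = 2 (times in seconds).
t = np.linspace(10, 300, 30)
x = 1.0 - np.exp(-1e-4 * t ** 2)
k, n = fit_avrami(t, x)  # recovers k close to 1e-4 and n close to 2
stats = summarize_rates([1.0, 2.0, 4.0])
```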

Fig. 11. Growth of fluoroelastomer crystallite area over time (seconds) in FK-800 batch G. Each subplot (a–f) corresponds to a unique crystallite identified by its ID. Blue scatter points show the observed normalized areas, and the black curve shows the best fit of the Avrami equation to the data. The best-fit parameter k for each crystallite is displayed in red on each subplot.

Discussion

In this study, we designed a comprehensive end-to-end image processing pipeline, named \(MDS^3-VizFCD\), for analyzing time-series AFM images of fluoroelastomer. The pipeline identifies, classifies, segments, and tracks the crystallization behavior of fluoroelastomer through a series of modular components. It integrates state-of-the-art techniques, including image pre-processing, object detection, image segmentation, and data tracking, and provides estimates of crystallization kinetics for materials science research.

Modular End-to-End Image Processing Pipeline

Our pipeline’s modular design offers both flexibility and adaptability. Each module serves a specific purpose and can be modified or replaced independently, according to the specific requirements of the task. For instance, different deep learning algorithms can be employed in the object detection (Fig. 5) or image segmentation modules (Fig. 8), depending on the data characteristics or the specific goals of the analysis. This modularity allows our pipeline to be easily customized and extended to a wide range of materials science problems.
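The modular design can be sketched as an ordered list of named stages. Each stage is a plain callable that can be swapped without touching the others. The stage names and toy stage functions below are hypothetical placeholders, not the actual \(MDS^3-VizFCD\) interfaces. The "YOLOv4" and "YOLOv7" strings are labels only, and no model is run.

```python
from typing import Any, Callable, List, Tuple

Stage = Callable[[Any], Any]


class Pipeline:
    """Runs named stages in order; any stage can be replaced independently."""

    def __init__(self, stages: List[Tuple[str, Stage]]):
        self.stages = list(stages)

    def replace(self, name: str, stage: Stage) -> None:
        """Swap the stage called `name`, e.g. to try a different detector."""
        for i, (existing, _) in enumerate(self.stages):
            if existing == name:
                self.stages[i] = (name, stage)
                return
        raise KeyError(name)

    def run(self, data: Any) -> Any:
        for _, stage in self.stages:
            data = stage(data)
        return data


# Usage with toy stages standing in for pre-processing, detection, and segmentation.
pipeline = Pipeline([
    ("preprocess", lambda frames: [f.strip() for f in frames]),
    ("detect", lambda frames: [(f, "YOLOv4") for f in frames]),
    ("segment", lambda dets: [f"{f}|{d}|mask" for f, d in dets]),
])
before = pipeline.run([" frame0 ", "frame1 "])
pipeline.replace("detect", lambda frames: [(f, "YOLOv7") for f in frames])
after = pipeline.run([" frame0 ", "frame1 "])
```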

The \(MDS^3-VizFCD\) pipeline effectively identifies, classifies, segments, and tracks the crystallization behavior of fluoroelastomer in time-series AFM images. Domain experts evaluated our results and deemed them acceptable, which underscores the potential of this pipeline. More broadly, our results suggest that data-driven analysis is applicable to complex materials, with fluoroelastomer serving as one example. The proposed framework could also be extended to other material systems, such as metals, ceramics, or composites, providing a powerful tool for researchers in materials science.

Data Augmentation

Our framework achieves relatively high recognition and detection accuracy even with a limited amount of training data. We attribute this mainly to the data augmentation methods, which partly alleviate the problem of insufficient samples. Moreover, our method's ability to identify and segment two different crystallization behaviors in fluoroelastomer illustrates the advantage of data-driven methods when dealing with complex materials.
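The section does not list which augmentation transforms Module 3 applies. The sketch below therefore uses two common geometric ones [11], a horizontal flip and a 90° rotation, as an assumed example. For object detection, the key detail is that each transform must remap the bounding boxes along with the pixels. Boxes here are `(x1, y1, x2, y2)` with exclusive upper bounds, and the function names are hypothetical.

```python
import numpy as np


def hflip(image, boxes):
    """Mirror the image left-right and remap (x1, y1, x2, y2) boxes (x2, y2 exclusive)."""
    w = image.shape[1]
    new_boxes = [(w - x2, y1, w - x1, y2) for x1, y1, x2, y2 in boxes]
    return image[:, ::-1], new_boxes


def rot90(image, boxes):
    """Rotate 90 degrees counter-clockwise (np.rot90 convention) and remap boxes.

    np.rot90 sends the pixel at (row y, col x) to (row w - 1 - x, col y),
    so a box's x-range becomes its new y-range and vice versa.
    """
    w = image.shape[1]
    new_boxes = [(y1, w - x2, y2, w - x1) for x1, y1, x2, y2 in boxes]
    return np.rot90(image), new_boxes


# Usage: a 6 x 8 image with one labeled object occupying rows 1-2 and columns 2-4.
image = np.zeros((6, 8), dtype=np.uint8)
image[1:3, 2:5] = 1
boxes = [(2, 1, 5, 3)]
flipped, flipped_boxes = hflip(image, boxes)  # box becomes (3, 1, 6, 3)
rotated, rotated_boxes = rot90(image, boxes)  # box becomes (1, 3, 3, 6)
```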

Comparing mAP values before and after data augmentation shows improved performance for the various versions of the object detection model (Fig. 5). Data augmentation improves the F1 score only modestly, but the improvement is discernible.
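For reference, the sketch below computes two metrics. The first is per-class average precision using the all-point interpolated variant of VOC-style AP (the 11-point variant described in [43] can give slightly different values). The second is the F1 score, computed from true positive, false positive, and false negative counts. The mAP is then the mean AP over the two crystallite classes. The sketch assumes that detections have already been matched to ground-truth boxes at some IoU threshold, which the section does not state. The function names are illustrative.

```python
import numpy as np


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class.

    scores: confidence per detection; is_tp: 1 if that detection matched a
    ground-truth box, else 0; num_gt: number of ground-truth boxes.
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]
    tp_cum, fp_cum = np.cumsum(tp), np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)
    # Add sentinels, then make precision non-increasing from right to left.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas at the points where recall changes.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Usage: three detections of one class, two of which are correct, with two ground-truth boxes.
ap = average_precision([0.9, 0.8, 0.7], [1, 0, 1], num_gt=2)  # 0.5 * 1 + 0.5 * 2/3 = 5/6
f1 = f1_score(tp=8, fp=2, fn=2)  # precision = recall = 0.8
```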

As Fig. 6 shows, different YOLO versions and data augmentation levels affect the training loss differently. Notably, YOLOv7 exhibits the smallest training loss across the data augmentation levels tested, consistent with its superior performance in this context. The effect of data augmentation is also evident. As the level of data augmentation increases, the training loss of a given YOLO version tends to decrease, which underscores the utility of data augmentation in model training and its potential to help prevent overfitting. These results suggest an important interplay between model version and data augmentation in minimizing training loss. The online supplementary material lists the hyperparameters we chose for the different YOLO versions.

Notably, increasing the number of training iterations on the same training dataset from 5000 to 10,000 did not significantly improve the mAP or F1 score. This suggests that the model had likely converged before the 5000th iteration. We therefore used 5000 training iterations to reduce the risk of overfitting.

Fluoroelastomer Crystallite Kinetics Analysis

The kinetic parameters of crystal growth in the fluoroelastomer samples Kel-F and FK-800, shown in Table 2, reveal distinct differences. For the lots evaluated, FK-800 exhibits a markedly higher crystal growth rate, indicated by larger k values, which implies more rapid crystallite formation over time. FK-800 also shows a larger standard deviation of growth rates across its crystallites, which suggests less consistent crystal growth than the more uniform growth seen in Kel-F. Within FK-800, batch-to-batch variations are also observable, suggesting that processing methods may influence the kinetics of crystal growth. For instance, FK-800 lot H shows the highest average growth rate, while FK-800 lot G shows the lowest. Further insight, however, would require a more thorough understanding of the experimental setup, sample preparation, and data collection methods used in this study, together with relevant domain expertise.

Limitations and Future Work

Despite these contributions, some limitations remain and require further research. For fluoroelastomer crystallite kinetics estimation, the interpretation of rate constants depends largely on a 2D approximation of the AFM images. AFM images encode three-dimensional surface topography, so a 2D analysis may overlook important 3D characteristics of crystallization kinetics, such as height variations of the crystallites. This approximation could affect our understanding of the true kinetics and rate constants involved in fluoroelastomer crystallization. Future work could use 3D image analysis techniques to better capture the kinetics of fluoroelastomer crystallization.

The narrow field of view of our AFM dataset is another limitation, because crystallites not fully contained within it may reduce the accuracy of our findings. Although we tracked crystallites both within and growing out of the field of view, a larger field of view could provide a better understanding of the crystallization process. Future studies could acquire AFM image sequences with a larger field of view or use different imaging techniques to address this issue.
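One simple way to separate the two groups of tracked crystallites mentioned above is to flag any bounding box that touches the image border. Such a crystallite's measured area may be truncated by the field of view. The helpers below are hypothetical, and the `margin` tolerance (in pixels) is an assumed parameter.

```python
def touches_border(box, width, height, margin=0):
    """True if box = (x1, y1, x2, y2) reaches within `margin` pixels of the image edge.

    Coordinates use exclusive upper bounds, so x2 == width means the box
    extends to the last column.
    """
    x1, y1, x2, y2 = box
    return (x1 <= margin or y1 <= margin
            or x2 >= width - margin or y2 >= height - margin)


def split_by_border(tracks, width, height, margin=0):
    """Split {crystallite_id: [box per frame]} into fully contained and edge-touching IDs.

    A crystallite counts as edge-touching if any of its boxes touches the border.
    """
    inside, edge = [], []
    for cid, boxes in tracks.items():
        if any(touches_border(b, width, height, margin) for b in boxes):
            edge.append(cid)
        else:
            inside.append(cid)
    return inside, edge


# Usage: crystallite 1 grows into the right edge of a 100 x 100 frame.
tracks = {
    0: [(20, 20, 30, 30), (18, 18, 33, 33)],
    1: [(80, 40, 95, 55), (75, 35, 100, 60)],
}
inside, edge = split_by_border(tracks, width=100, height=100)
```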

Regarding the Fluoroelastomer Crystallite Kinetics Analysis Module, our framework produces estimates of fluoroelastomer crystallization behavior, but there is still room for improvement. For example, as shown in Fig. 8, the Image Segmentation Module may be influenced by the type and shape of the fluoroelastomer crystallites. To limit these influences, we analyzed and augmented data only for crystallites with spherulitic growth in this study. We also observed unexpected variability, particularly in the interval [190, 300] in Fig. 9, which could be attributed to the crystal growth phase of batch G during this period. This phase can make the bounding boxes produced by YOLO unstable, which leads to discrepancies in the segmented area. Investigating and mitigating this variability, along with its implications for segmentation accuracy and subsequent analysis, will be a focus of future work aimed at improving the reliability and robustness of the proposed module.

Conclusion

In this study, we designed a comprehensive framework, the \(MDS^3-VizFCD\) pipeline, for analyzing fluoroelastomer time-series atomic force microscopy (AFM) images. The framework effectively identified, segmented, and tracked the crystallization behavior of fluoroelastomer. Compared with traditional manual methods, our approach achieved significant improvements in analysis speed and accuracy while reducing human effort. The proposed framework can be extended to other material systems, providing researchers in materials science with a powerful analysis tool.

A key aspect of our framework that contributed to its success is the data augmentation module. Despite having a limited amount of training data, our framework demonstrated relatively high recognition and segmentation accuracy. The introduction of data augmentation methods played a crucial role in addressing the problem of insufficient samples and demonstrated the advantage of data-driven methods when dealing with complex materials, such as fluoroelastomer. The results of our study highlight the importance of data augmentation in improving the performance of the object detection model, as evidenced by the increase in mAP values after implementing data augmentation.

In conclusion, our research underscores the growing potential of data-driven approaches for AFM analysis. By further optimizing and improving our framework, we can more accurately process different crystallization behaviors and extend our method to the analysis of other material systems, revealing a broader range of crystallization behaviors and kinetic properties. Combining machine learning and deep learning techniques with other advanced imaging techniques can lead to more comprehensive material characterization and analysis, providing valuable insights for materials science researchers.

References

Nguyen-Tri P, Ghassemi P, Carriere P, Nanda S, Assadi AA, Nguyen DD (2020) Recent applications of advanced atomic force microscopy in polymer science: a review. Polymers 12(5):1142

Binnig G, Quate CF, Gerber C (1986) Atomic force microscope. Phys Rev Lett 56(9):930

Maiti M, Bhowmick AK (2006) New insights into rubber-clay nanocomposites by AFM imaging. Polymer 47(17):6156–6166

Ornaghi FG, Bianchi O, Ornaghi HL Jr, Jacobi MA (2019) Fluoroelastomers reinforced with carbon nanofibers: a survey on rheological, swelling, mechanical, morphological, and prediction of the thermal degradation kinetic behavior. Polym Eng Sci 59(6):1223–1232

Ameduri B, Boutevin B, Kostov G (2001) Fluoroelastomers: synthesis, properties and applications. Prog Polym Sci 26(1):105–187

Améduri B (2020) The promising future of fluoropolymers. Macromol Chem Phys 221(8):1900573. https://doi.org/10.1002/macp.201900573

Hobbs JK, Farrance OE, Kailas L (2009) How atomic force microscopy has contributed to our understanding of polymer crystallization. Polymer 50(18):4281–4292. https://doi.org/10.1016/j.polymer.2009.06.021

Wang Y, Yao Q, Kwok JT, Ni LM (2020) Generalizing from a few examples: a survey on few-shot learning. ACM Comput Surv CSUR 53(3):1–34

Gaponenko I, Tückmantel P, Ziegler B, Rapin G, Chhikara M, Paruch P (2017) Computer vision distortion correction of scanning probe microscopy images. Sci Rep 7(1):669

Wang Y, Lu T, Li X, Wang H (2018) Automated image segmentation-assisted flattening of atomic force microscopy images. Beilstein J Nanotechnol 9(1):975–985

Shorten C, Khoshgoftaar TM (2019) A survey on image data augmentation for deep learning. J Big Data 6(1):1–48

Giergiel M, Zapotoczny B, Czyzynska-Cichon I, Konior J, Szymonski M (2022) AFM image analysis of porous structures by means of neural networks. Biomed Signal Process Control 71:103097. https://doi.org/10.1016/j.bspc.2021.103097

Ge M, Su F, Zhao Z, Su D (2020) Deep learning analysis on microscopic imaging in materials science. Materials Today Nano 11:100087. https://doi.org/10.1016/j.mtnano.2020.100087

Redmon J, Divvala S, Girshick R, Farhadi A (2016) You only look once: unified, real-time object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 779–788

Chen L-C, Papandreou G, Kokkinos I, Murphy K, Yuille AL (2018) DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans Pattern Anal Mach Intell 40(4):834–848. https://doi.org/10.1109/TPAMI.2017.2699184

Wang Y, Yao Q (2019) Few-shot learning: a survey. CoRR arXiv:1904.05046

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444

Mandelkern L, Martin G, Quinn F Jr (1957) Poly-(vinylidene fluoride), and their copolymers. J Res Natl Bur Stand 58(3):137

Ameduri B (2009) From vinylidene fluoride (VDF) to the applications of VDF-containing polymers and copolymers: recent developments and future trends. Chem Rev 109(12):6632–6686

Kelly K, Brown G, Anthony S (2020) Quantifying CTFE content in FK-800 using ATR-FTIR and time to peak crystallization. Int J Polym Anal Charact 25(8):621–633. https://doi.org/10.1080/1023666X.2020.1827859

Willey TM, DePiero SC, Hoffman DM (2009) A comparison of new TATBs, FK-800 binder and LX-17-like PBXs to legacy materials. Technical report LLNL-CONF-412929, Lawrence Livermore National Lab. (LLNL), Livermore, CA (United States). https://www.osti.gov/biblio/966908 Accessed 2021-12-14

Orme CA (2018) Progress summary: developing experimental methods to quantify the degree of crystallinity in fluoropolymer binders. Tech Rep Lawrence Livermore Nat Lab. https://doi.org/10.2172/1476198

Cady W, Caley L (1977) Properties of Kel F-800 polymer. Technical report UCRL-52301, Lawrence Livermore National Lab. (LLNL), Livermore CA, USA. https://doi.org/10.2172/5305005. http://www.osti.gov/servlets/purl/5305005/ Accessed 2021-11-08

Crist B, Schultz JM (2016) Polymer spherulites: a critical review. Prog Polym Sci 56:1–63. https://doi.org/10.1016/j.progpolymsci.2015.11.006

Su Y, Liu G, Xie B, Fu D, Wang D (2014) Crystallization features of normal alkanes in confined geometry. Accounts Chem Res 47(1):192–201. https://doi.org/10.1021/ar400116c

George L (2011) HBase: the definitive guide. O’Reilly, Sebastopol, CA. http://shop.oreilly.com/product/0636920014348.do Accessed 2013-04-18

Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG (2009) Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 42(2):377–381. https://doi.org/10.1016/j.jbi.2008.08.010

Girshick R (2015) Fast R-CNN. In: 2015 IEEE International conference on computer vision (ICCV), pp 1440–1448. https://doi.org/10.1109/ICCV.2015.169

Redmon J, Farhadi A (2018) YOLOv3: an incremental improvement. CoRR arXiv:1804.02767

Wang CY, Liao HYM, Yeh IH, Wu YH, Chen PY, Hsieh JW (2020) CSPNet: a new backbone that can enhance learning capability of CNN. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW)

He K, Zhang X, Ren S, Sun J (2014) Spatial pyramid pooling in deep convolutional networks for visual recognition. In: Computer vision—ECCV 2014, pp 346–361. Springer, Berlin. https://doi.org/10.1007/978-3-319-10578-9_23

Liu S, Qi L, Qin H, Shi J, Jia J (2018) Path aggregation network for instance segmentation. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 8759–8768. Salt Lake City, UT. https://doi.org/10.1109/CVPR.2018.00913

Misra D (2020) Mish: A self regularized non-monotonic activation function

Wang CY, Bochkovskiy A, Liao HYM (2022) YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors

Long J, Shelhamer E, Darrell T (2015) Fully convolutional networks for semantic segmentation. In: 2015 IEEE Conference on computer vision and pattern recognition (CVPR), pp 3431–3440. https://doi.org/10.1109/CVPR.2015.7298965

Chen LC, Papandreou G, Schroff F, Adam H (2017) Rethinking Atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587

Yu F, Koltun V (2015) Multi-scale context aggregation by dilated convolutions. arXiv preprint arXiv:1511.07122

Russell BC, Torralba A, Murphy KP, Freeman WT (2008) Labelme: a database and web-based tool for image annotation. Int J Comput Vision 77(1):157–173

Ester M, Kriegel HP, Sander J, Xu X (1996) A density-based algorithm for discovering clusters in large spatial databases with noise. In: Proceedings of the second international conference on knowledge discovery and data mining. KDD’96, pp 226–231. AAAI Press, Pomona

Schubert E, Sander J, Ester M, Kriegel HP, Xu X (2017) DBSCAN revisited, revisited: why and how you should (still) use DBSCAN. ACM Trans Database Syst TODS 42(3):1–21. https://doi.org/10.1145/3068335

Avrami M (1939) Kinetics of phase change. I. General theory. J Chem Phys 7(12):1103–1112. https://doi.org/10.1063/1.1750380

Long Y, Shanks RA, Stachurski ZH (1995) Kinetics of polymer crystallisation. Prog Polym Sci 20(4):651–701. https://doi.org/10.1016/0079-6700(95)00002-W

Everingham M, Van Gool L, Williams CK, Winn J, Zisserman A (2010) The pascal visual object classes (VOC) challenge. Int J Comput Vis 88:303–338

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT Press, Cambridge. http://www.deeplearningbook.org

Rezatofighi H, Tsoi N, Gwak J, Sadeghian A, Reid I, Savarese S (2019) Generalized intersection over union: a metric and a loss for bounding box regression. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 658–666

Bradski G (2000) The OpenCV library. Dr Dobb’s J Softw Tools Prof Program 25(11):120–123

Cantor B (2020) The Avrami equation: phase transformations. In: Cantor B (ed) The equations of materials, pp 180–206. Oxford University Press, Oxford. https://doi.org/10.1093/oso/9780198851875.003.0009

Acknowledgements

This material is based upon research in the Materials Data Science for Stockpile Stewardship Center of Excellence (MDS³-COE) and is supported by the Department of Energy’s National Nuclear Security Administration under Award Number(s) DE-NA0004104. CO acknowledges useful discussions with En Ju Cho, Chami Swaminathan, Xiaojie Xu, and James Lewicki. Work performed by CO was under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. This work made use of the High Performance Computing Resource in the Core Facility for Advanced Research Computing at Case Western Reserve University.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Supplementary Information

The electronic supplementary material is available in the online version of this article.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lu, M., Venkat, S.N., Augustino, J. et al. Image Processing Pipeline for Fluoroelastomer Crystallite Detection in Atomic Force Microscopy Images. Integr Mater Manuf Innov 12, 371–385 (2023). https://doi.org/10.1007/s40192-023-00320-8
