Abstract

In this paper, we have proposed a new generalized robust estimators of population mean under different ranked set sampling. Robust estimators are recently defined by Zaman and Bulut (Commun Stat Theory Methods 48(8):2039–2048, 2019a) and Ali et al. (Commun Stat Theory Methods, 2019. https://doi.org/10.1080/03610926.2019.1645857) under simple random sampling. We have generalized robust-type estimators where Zaman and Bulut (2019a) and Ali et al. (2019) estimators are members of our generalized estimator. We have also extended our results to ranked set and median ranked set sampling designs. The simulation study showed that our proposed robust-type estimator performs better.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

When the data set does not follow a normal distribution or contains outliers, estimations of parameters are affected badly. To overcome this difficulty and get reliable conclusions about the contaminated data, robust estimators are defined in statistical analysis. This relies on finding proper estimates of the data location and scale ([9, 10, 13]).

Using information of auxiliary variable in the estimates also increases the efficiency. We can list some important studies as follows: Hanif and Shahzad [11] considered the issue of estimating the population variance utilizing trace of kernel matrix in absence of non-response under simple random sampling (SRS) scheme. Shahzad et al. [25] defined a new class of ratio-type estimators for the population mean. Shahzad et al. [26] proposed a new class of exponential-type estimators, based on the known median of study variable.

The authors also studied robust ratio-type estimators when the data contained outliers. Zaman and Bulut [30] have studied the robust estimators in simple random sampling and they also extended their studies to stratified simple random sampling [31]. Recently, Ali et al. [1] generalized Zaman and Bulut [30]’s estimators and they have studied sensitive data case. Subzar et al. [28] adapted the various robust regression techniques to the ratio estimators. Shahzad et al. [27] defined class of regression-type estimators utilizing robust regression tools.

Ranked set sampling (RSS) is an effective design introduced by [20]. The efficiency of RSS depends on the sampling allocation whether balanced or unbalanced. The balanced RSS features an equal allocation of the ranked order statistics. It has been shown theoretically and empirically the variance of the balanced RSS estimator is less than that of the estimator SRS estimator regardless of ranking errors ([3]). In literature, many authors showed that this design performs better compared to the SRS and proposed new RSS designs such as median ranked set (MRSS) by Muttlak [22], double ranked set by Al-Saleh and Al-Kadiri [7], pair ranked set by Muttlak [21], L ranked set by Al-Nasser [2], neoteric ranked set sampling by Zamanzade and Al-Omari [32] and so on.

We can also list some important papers to improve estimation under ranked set sampling designs as: Al-Omari [6] studied ratio estimators under MRSS. Koyuncu [17] studied regression-type estimators under different ranked set sampling. Koyuncu [18] has proposed regression-type estimators and more general class of estimators under MRSS. Koyuncu [16] has studied difference-cum-ratio and exponential type estimators under MRSS.

The aim of this study is to propose more general estimators of the population mean using robust statistics under RSS and MRSS.

The article is constructed as follows: In "Robust estimators in SRS" section, we have reviewed the recent robust literature in SRS. In "Proposed class of robust-regression-type-estimators under SRS, RSS and MRSS" section, the new generalized robust estimators under SRS, RSS and MRSS are presented. The mean square error (MSE) is derived up to the first-order of approximation. The theoretical efficiency comparison is given in "Efficiency comparison" section. In "Simulation study" section, a simulation study is conducted using a real data set and summarized our findings in "Conclusion" section.

Robust estimators in SRS

When the information about auxiliary variable is known and the using this in the estimator can results more efficient estimates. Also the normality assumption of data is not hold, we need to use robust statistics. Moving this direction Zaman and Bulut [30] suggested following robust estimators as follows:

where \(b_{i}\) is slope or regression coefficient, calculated from the robust regression methods such as least absolute deviations (LAD), least median of squares method (LMS), least trimmed squares method (LTS), Huber-M, Hample-M, Tukey-M, Huber-MM. When the data contain outliers, these observations cause problems because they may strongly influence the result. Classical methods can be affected by outliers. The aim of using robust statistics in estimators is to describe well the data majority and get reliable estimates. We can summarized these well known robust methods are as follows:

LAD is a method which minimizes the sum of absolute error and is described as

LMS method rather than minimize the sum of the least-squares function, this model minimizes the median of the squared residuals

LTS proceeds with OLS after eliminating the most extreme positive or negative residuals. LTS orders the squared residuals from smallest to largest: \((E^2)_{(1)}, (E^2)_{(2)},\ldots ,(E^2)_{(n)}\), and then, it calculates b that minimizes the sum of only the smaller half of the residuals

where \(m=[n/2]+1\); the square bracket indicates rounding down to the nearest integer.

Huber-M is based on minimizing another function of outliers instead of error squares. Objective function of M-estimator is given as

and is asymmetric function of outliers. Huber’s function \(\rho\) is designed as

The influence function is determined by taking the derivative

where sgn(.) is sign function and represented as

Hample-M Estimation function is defined as

where \(a=1.7\), \(b=3.4\) and \(c=8.5\).

Tukey M estimation function is given by

where \(k=5\) or \(k=6\).

Huber MM estimation method is described as follows:

-

A starting estimation with high breakdown point (0.5 if possible) is chosen.

-

Outliers are calculated as \(e_{i}(T_{0})=y_{i}-T_{0}x_{i}, \quad 1\le i \le n\)

where \(T_{0}\) is starting estimation. Under \(b/a=0.5\) constraints, b is calculated as below

where \(s_{n}\) is M scale estimation and it is calculated as \(s_{n}=s(e(T_{0}))\). For more information robust estimators kindly see [12, 24, 29])

Ali et al. [1] generalized Zaman and Bulut [30] estimators as

where i shows robust regression methods LAD, LMS, LTS, Huber-M, Hample-M, Tukey-M, Huber-MM, respectively.

\(F \ne 0\) and G are any constants, either (0,1) or known characteristics of the population such as, \(C_x\), the coefficients of variation, \(\beta _{2}(x)\), the coefficients of kurtosis from the population having N identifiable units. We can generate many new estimators using suitable variables for \(b_{i}, F\) and G.

The MSE of \({\bar{y}}_{z}\) is given as

where \(B_{i}\) robust regression coefficient of population, \(R_{FG}=\dfrac{F{\bar{Y}}}{F{\bar{X}}+G}\), \(S^{2}_{y}= \dfrac{\sum _{i=1} ^{N} (y_{i}-{\bar{Y}})^{2}}{N-1}\), \(S^{2}_{x}= \dfrac{\sum _{i=1} ^{N} (x_{i}-{\bar{X}})^{2}}{N-1}\) are the unbiased variances of Y and X, respectively, \(S_{xy}= \dfrac{\sum _{i=1} ^{N} (y_{i}-{\bar{Y}})(x_{i}-{\bar{X}})}{N-1}\) is the covariance between (X, Y). \({\bar{X}}\), \({\bar{Y}}\) are population means \({\bar{x}}\), \({\bar{y}}\) are the sample means of auxiliary and study variables, respectively.

Then, Ali et al. [1] have proposed classical regression-type estimators using robust b in the equation instead of least-square estimator. They found that their regression-type estimators are more efficient than Zaman and Bulut [30]. Ali et al. [1] estimator and MSE of estimator are given as

Proposed class of robust-regression-type-estimators under SRS, RSS and MRSS

Ranked-based sampling designs have found many applications in other fields including environmental monitoring [19], clinical trials and genetic quantitative trait loci mappings [8] and medicine ([33, 34]). In this section, RSS and MRSS designs are described and we have introduced a new generalized robust estimators under these designs.

RSS design

The RSS design introduced by McIntyre [20] can be described as follows:

-

1.

Select a simple random sample of size \(m^{2}\) units from the target finite population and divide them into m samples each of size m.

-

2.

Rank the units within each sample in increasing magnitude by using personal judgment, eye inspection or based on a concomitant variable.

-

3.

Select the ith ranked unit from the ith sample.

-

4.

Repeat steps 1 through 3, k times if needed to obtain a RSS of size \(n=mk\).

Let

be m independent bivariate random samples with pdf f(x, y), each of size m in the jth cycle, \((j=1,2,\ldots ,k)\). Let

be the order statistics of \(X_{i1j},X_{i2j},\) \(\ldots ,X_{imj}\) and the judgment order of \(Y_{i1j},Y_{i2j},\ldots ,Y_{imj}\) \((i=1,2,\ldots ,m)\), where round parentheses () and square brackets [] indicate that the ranking of X is perfect and ranking of Y has errors. Assume measured units using RSS are

Then, the RSS estimators of population mean for the study and auxiliary variables can be written as

MRSS design

The MRSS design can be described as in the following steps:

-

1.

Select m random samples each of size m bivariate units from the population of interest.

-

2.

The units within each sample are ranked by visual inspection or any other cost free method with respect to a variable of interest.

-

3.

If m is odd, select the \(((m+1)/2)\) th-smallest ranked unit X together with the associated Y from each set, i.e., the median of each set. If m is even, from the first m/2 sets select the (m/2)th ranked unit X together with the associated Y and from the other sets select the \(((m+2)/2)\) the ranked unit X together with the associated Y.

-

4.

The whole process can be repeated k times if needed to obtain a sample of size \(n=mk\) units.

For odd and even sample sizes, the units measured using MRSS are denoted by MRSSO and MRSSE, respectively. For odd sample size,

the sample mean estimators using MRSSO are given,

For even sample size,

the sample means of X and Y using MRSSE are given

and

Proposed class of robust-regression-type-estimators in SRS

In this section, we can generalized Zaman et al. [30] and Ali et al. [1] estimators under SRS as

To obtain the MSE of the generalized estimators, let us define the following expectations under SRS:

We can re-write the \({\bar{y}}_{N(\mathrm{SRS})}\) using e terms according to first order of approximation as follows:

The expectations of e terms are

Substituting these expectations in Eq. 3.8, we can get MSE as given by

Putting \(\alpha\)=0 in the \(\mathrm{MSE}({\bar{y}}_{N(\mathrm{SRS})})\), we can get Ali et al. [1] \(\mathrm{MSE}({\bar{y}}_{a})\) estimator. While for appropriate constants, we can also get MSEs of Zaman and Bulut [30] estimators.

Proposed class of robust-regression-type-estimators in RSS and MRSS

In RSS and MRSS to improve the efficiency of estimators, some authors used information of auxiliary variable in estimators. Koyuncu [18] has proposed regression-type estimators under MRSS. Al-Omari and Bouza [4] studied the ratio estimators of population mean with missing values using RSS. Al-Omari et al. [5] suggested ratio-type estimators of the mean using extreme RSS. Jemain et al. [15] suggested a modified ratio estimator for the population mean using double MRSS.

In this section, we generalized also our proposed estimators in the previous section to new classes based on RSS and MRSS designs as follows

where (j) represents the sampling design such as SRS, RSS and MRSS.

To obtain the bias and the MSE of suggested class of estimators in Eq. (3.10) under RSS, let us define following notations

To obtain the MSE of the generalized estimators, let us define the following expectations under SRS:

where \(\psi =\dfrac{F{\bar{X}}}{F{\bar{X}}+G}\). One can easily obtain the specific MSE from Eq. 3.11 putting expectation terms belong to design. For example if (j) represents the RSS design we can write following notations:

If (j) represents the MRSS design and the sample size n is odd we can write following notations:

Then, we can get the MSE as follows:

If (j) represents the MRSS design and the sample size n is even we can write following notations:

After defining these terms, the procedure is very easy. When we put these e terms in Eq. 3.11 we get the MSE is given by

Efficiency comparison

In this section, the efficiency comparison between the MSE equations is obtained as below:

(1) Comparison of Ali et al. [1] \({\bar{y}}_{z}\) estimator which is general form of Zaman and Bulut [30] with proposed estimators under SRS

\(\mathrm{MSE}({\bar{y}}_{N(\mathrm{SRS})})<\mathrm{MSE}({\bar{y}}_{z})\) if

From Eq. (4.1), one can easily see that when the \(\alpha =0\) we get same MSE.

(2) Comparison of Ali et al. [1] \({\bar{y}}_{a}\) estimator with proposed estimators under SRS

\(\mathrm{MSE}({\bar{y}}_{N(\mathrm{SRS})})< \mathrm{MSE}({\bar{y}}_{a})\) if

When the conditions Eqs. (4.1–4.2) are satisfied the proposed estimator is more efficient than existing estimators.

Simulation study

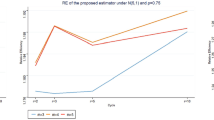

In this section, we used a real data set which is used by Jemain et al. [14] to illustrate the efficiency of proposed estimators over existing ones. These data consist of height and the diameter at breast height of 399 trees. We have used Height (H) in feet as study variable and Diameter (D) as auxiliary variable. The summary statistics of data is given in Table 1. The scatter plot of the data set is given in Fig. 1. From the scatter plot, we can say that the data set contains outliers. In the simulation study, we have selected 10000 samples with different sample sizes (\(n=3,4,5,7,8\)) under SRS, RSS and MRSS designs using R Software version 3.1.1 [23] We have calculated the MSE of Zaman and Bulut [30], Ali et al. [1] and the proposed estimators under SRS which is given by Table 2 based on linear, Huber-M, LMS, LTS, LAD, S and MM robust estimators. In this table, we can also see the efficiency of different robust betas in the same type estimator. The same procedure is also extended for the RSS and MRSS estimators as given Tables 3 and 4. We can summarized the simulation study as follows:

-

From Table 2, we can say that \({\bar{y}}_{N(\mathrm{SRS})_{2}}\) proposed estimator which used third quantile of auxiliary variable and LMS robust beta is the best for all sample sizes.

-

From Table 3, it can be seen that \({\bar{y}}_{N(\mathrm{RSS})_{4}}\) proposed estimator which used third quantile of auxiliary variable and LMS robust beta is the best for all sample sizes under RSS design.

-

From Table 4, under MRSS design \({\bar{y}}_{N(\mathrm{MRSS})_{4}}\) is the best using third quantile and LMS robust beta when the sample size \(n=3,4,5.\) When the sample size is increasing, proposed \({\bar{y}}_{N(\mathrm{MRSS})_{4}}\) with S robust beta is perform better.

-

PRE of estimators over different sampling designs is given in Tables 5 and 6. From these tables, we can see that ranked set sampling designs have better performance over SRS for all simulation cases.

-

When we compare the efficiency according to sample size, we can say that for all sample sizes the proposed estimator is more efficient than existing estimators. Especially for small sample sizes, efficiency over existing estimators is quite high than large sample sizes.

Conclusion

When the data contain outliers or not hold normality assumption to get more reliable results on estimates, we need to consider robust statistics. In this study, moving this direction we have considered robust techniques for estimation of population mean. First, we have examined newly proposed robust estimators in SRS and tried to define more general class of estimators in SRS which newly proposed estimators are member of our class. Then, we have extended our theoretical findings to different sampling designs such as RSS and MRSS. A general form of estimators of population mean and MSE formula are obtained. Theoretical MSEs of the robust family are also given for each designs. To see the performance of our estimators, we have conducted a simulation study using a real data set. We have compared the existing estimators with our proposed estimators and concluded that our proposed estimators perform better than recently proposed Zaman and Bulut [30] and Ali et al. [1] estimators. As a future work, these robust estimators can be introduced under new RSS designs and new robust methods also can be proposed.

References

Ali, N., Ahmad, I., Hanif, M., Shahzad, U.: Robust-regression-type estimators for improving mean estimation of sensitive variables by using auxiliary information. Commun. Stat. Theory Methods (2019). https://doi.org/10.1080/03610926.2019.1645857

Al-Nasser, A.D.: L ranked set sampling: a generalization procedure for robust visual sampling. Commun. Stat. Simul. Comput. 36(1), 33–43 (2007)

Al-Omari, A.I., Bouza, C.N.: Review of ranked set sampling: modifications and applications. Investigación Operacional 35(3), 215–235 (2014)

Al-Omari, A.I., Bouza, C.N.: Ratio estimators of population mean with missing values using ranked set sampling. Environmetrics 26(2), 67–76 (2015)

Al-Omari, A.I., Jaber, K., Ibrahim, A.: Modified ratio-type estimators of the mean using extreme ranked set sampling. J. Math. Stat. 4(3), 150 (2008)

Al-Omari, A.I.: Ratio estimation of the population mean using auxiliary information in simple random sampling and median ranked set sampling. Stat. Probab. Lett. 82, 1883–1890 (2012)

Al-Saleh, M.F., Al-Kadiri, M.A.: Double-ranked set sampling. Stat. Probab. Lett. 48(2), 205–212 (2000)

Chen, Z.: Ranked set sampling: its essence and some new applications. Environ. Ecol. Stat. 14(4), 355–363 (2007)

Collins, J.R.: Robust estimation of a location parameter in the presence of asymmetry. Ann. Stat. 4, 68–85 (1976)

Daszykowski, M., Kaczmarek, K., Vander Heyden, Y., Walczak, B.: Robust statistics in data analysis–a review: basic concepts. Chemometr. Intell. Lab. Syst. 85(2), 203–219 (2007)

Hanif, M., Shahzad, U.: Estimation of population variance using kernel matrix. J. Stat. Manag. Syst. 22(3), 563–586 (2019)

Huber, P.J.: Robust regression: asymptotics, conjectures and Monte Carlo. Ann. Stat. (1973). https://doi.org/10.1214/aos/1176342503

Huber, P.J.: Robust Estimation of a Location Parameter. Breakthroughs in Statistics, pp. 492–518. Springer, New York (1992)

Jemain, A.A., Al-Omari, A.I., Ibrahim, K.: Balanced groups ranked set sampling for estimating the population median. J. Appl. Stat. Sci. 17(1), 39–46 (2008a)

Jemain, A.A., Al-Omari, A.I., Ibrahim, K.: Modified ratio estimator for the population mean using double median ranked set sampling. Pak. J. Stat. 24(3), 217–226 (2008b)

Koyuncu, N.: New difference-cum-ratio and exponential type estimators in median ranked set sampling. Hacet. J. Math. Stat. 45(1), 207–225 (2016)

Koyuncu, N.: Regression estimators in ranked set, median ranked set and neoteric ranked set sampling. Pak. J. Stat. Oper. Res. 14(1), 89–94 (2018)

Koyuncu, N.: A class of estimators in median ranked set sampling. İstatistikçiler Dergisi: İstatistik ve Aktüerya 12(2), 58–71 (2019)

Kvam, P.H.: Ranked set sampling based on binary water quality data with covariates. J. Agric., Biol., Environ. Stat. 8(3), 271 (2003)

McIntyre, G.A.: A method for unbiased selective sampling using ranked sets. Aust. J. Agric. Res. 3, 385–390 (1952)

Muttlak, H.A.: Pair rank set sampling. Biom. J. 38, 879–885 (1996)

Muttlak, H.A.: Median ranked set sampling. J. Appl. Stat. Sci. 6, 245–255 (1997)

R Core Team: R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. URL https://www.R-project.org/ (2020)

Rousseeuw, P.J., Leroy, A.M.: Robust Regression and Outlier Detection. Wiley Series in Probability and Mathematical Statistics. Wiley, New York (1987)

Shahzad, U., Perri, P.F., Hanif, M.: A new class of ratio-type estimators for improving mean estimation of nonsensitive and sensitive variables by using supplementary information. Commun. Stat. Simul. Comput. 48(9), 2566–2585 (2019)

Shahzad, U., Al-Noor, N.H., Hanif, M., Sajjad, I.: An exponential family of median based estimators for mean estimation with simple random sampling scheme. Commun. Stat. Theory Methods 1–10 (2020)

Shahzad, U., Al-Noor, N.H., Hanif, M., Sajjad, I., Muhammad Anas, M.: Imputation based mean estimators in case of missing data utilizing robust regression and variance-covariance matrices. Commun. Stat. Simul. Comput. 1–20 (2020)

Subzar, M., Bouza, C.N., Al-Omari, A.I.: Utilization of different robust regression techniques for estimation of finite population mean in srswor in case of presence of outliers through ratio method of estimation. Investigación Operacional 40(5), 600–609 (2019)

Tukey, J.W.: Exploratory Data Analysis. Addison-Wesley, Boston (1977)

Zaman, T., Bulut, H.: Modified ratio estimators using robust regression methods. Commun. Stat. Theory Methods 48(8), 2039–2048 (2019a)

Zaman, T., Bulut, H.: Modified regression estimators using robust regression methods and covariance matrices in stratified random sampling. Commun. Stat. Theory Methods (2019b). https://doi.org/10.1080/03610926.2019.1588324

Zamanzade, E., Al-Omari, A.I.: New ranked set sampling for estimating the population mean and variance. Hacet. J. Math. Stat. 45(6), 1891–1905 (2016)

Zamanzade, E., Mahdizadeh, M.: A more efficient proportion estimator in ranked set sampling. Stat. Probab. Lett. 129, 28–33 (2017)

Zamanzade, E., Wang, X.: Estimation of population proportion for judgment post-stratification. Comput. Stat. Data Anal. 112, 257–269 (2017)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Koyuncu, N., Al-Omari, A.I. Generalized robust-regression-type estimators under different ranked set sampling. Math Sci 15, 29–40 (2021). https://doi.org/10.1007/s40096-020-00360-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40096-020-00360-7

Keywords

- Ratio-type estimators

- Regression-type estimators

- Robust regression methods

- Ranked set sampling

- Median ranked set sampling