Abstract

To solve a stochastic linear evolution equation numerically, finite dimensional approximations are commonly used. If one uses the well-known Galerkin scheme, one might end up with a sequence of ordinary stochastic linear equations of high order. To reduce the high dimension for practical computations, we consider balanced truncation as a model order reduction technique. This approach is well known from deterministic control theory and has been employed successfully in practice for decades. We generalize balanced truncation to controlled linear systems with Levy noise, discuss properties of the reduced order model, provide an error bound, and give some examples.


1 Introduction

Model order reduction is of major importance, for example, in system and control theory. A commonly used method is balanced truncation, which was first introduced by Moore [19] for linear deterministic systems. A comprehensive overview of this scheme is given in Antoulas [1]. Balanced truncation also works for deterministic bilinear equations (see Benner and Damm [5] and Zhang et al. [26]). Benner and Damm additionally pointed out the relation between balanced truncation for deterministic bilinear control systems and for linear stochastic systems with Wiener noise: in both cases, under certain conditions, the reachability and observability Gramians are solutions of generalized Lyapunov equations. We continue this work on balanced truncation for stochastic systems and generalize the results known for the Wiener case. The main idea is to allow the states to have jumps, while ensuring that the Gramians we define are still solutions of generalized Lyapunov equations. A convenient noise process for this purpose is a square integrable Levy process.

In Sect. 2, we provide the necessary background on semimartingales, square integrable Levy processes, and stochastic calculus in order to keep this paper as self-contained as possible. Detailed information on general Levy processes can be found in Bertoin [8] and Sato [24], and we refer to Applebaum [2] and Kuo [17] for a more extensive treatment of stochastic integration theory. In Sect. 3, we focus on a linear controlled state equation driven by uncorrelated Levy processes, which is asymptotically mean square stable and equipped with an output equation. We introduce the fundamental solution \({\varPhi }\) of the state equation and point out the differences compared to fundamental solutions of deterministic systems. Using \({\varPhi }\), we introduce reachability and observability Gramians in the same way as Benner and Damm [5]. We prove that the observable states and the corresponding energies are characterized by the observability Gramian and that the reachability Gramian provides partial information about the degree of reachability of a state. In Sect. 4, we describe the procedure of balanced truncation for the linear system with Levy noise, which is similar to the procedure known from the deterministic case (see Antoulas [1] and Obinata and Anderson [20]). We discuss properties of the resulting reduced order model (ROM): we show that it is mean square stable but not balanced, that the Hankel singular values (HSVs) of the ROM are not a subset of the HSVs of the original system, and that complete observability and reachability can be lost. Finally, we provide an error bound for balanced truncation of the Levy driven system. This error bound has the same structure as the \({\fancyscript{H}}_2\) error bound for linear deterministic systems. In Sect. 5, we deal with a linear controlled stochastic evolution equation with Levy noise (compare Da Prato and Zabczyk [10], Peszat and Zabczyk [21], Prévôt and Röckner [22]).
To solve such a problem numerically, finite dimensional approximations are commonly used. The scheme we use here is the well-known Galerkin method (see Grecksch and Kloeden [12]), which leads to a sequence of ordinary stochastic differential equations of the kind considered in Sect. 4. For a particular case, we apply balanced truncation to the Galerkin solution and compute the error bounds and the exact errors of the approximation.

2 Basics from stochastics

Let all stochastic processes appearing in this section be defined on a filtered probability space \(\left( {\varOmega }, \fancyscript{F}, (\fancyscript{F}_t)_{t\ge 0}, \mathbb {P}\right) \). We denote the set of all cadlag square integrable \(\mathbb {R}\)-valued martingales with respect to \((\fancyscript{F}_t)_{t\ge 0}\) by \(\fancyscript{M}^2(\mathbb {R})\).

2.1 Semimartingales and Ito’s formula

Below, we introduce the class of semimartingales.

Definition 2.1

(i) An \((\fancyscript{F}_t)_{t\ge 0}\)-adapted cadlag process X with values in \(\mathbb {R}\) is called a semimartingale if it has the representation \(X=X_0+M+A\). Here, \(X_0\) is an \(\fancyscript{F}_0\)-measurable random variable, \(M\in \fancyscript{M}^2(\mathbb {R})\), and A is a cadlag process of bounded variation.

(ii) An \(\mathbb {R}^d\)-valued process \(\mathbf {X}\) is called a semimartingale if all of its components are real-valued semimartingales.

The following is based on Proposition 17.2 in [18].

Proposition 2.2

Let \(M, N \in \fancyscript{M}^2(\mathbb {R})\), then there exists a unique predictable process \(\left\langle M, N\right\rangle \) of bounded variation such that \(MN-\left\langle M, N\right\rangle \) is a martingale with respect to \((\fancyscript{F}_t)_{t\ge 0}\).

Next, we consider a decomposition of square integrable martingales (see Theorem 4.18 in [15]).

Theorem 2.3

A process \(M\in \fancyscript{M}^2(\mathbb {R})\) has the following representation:

$$\begin{aligned} M(t)=M_0+M^c(t)+M^d(t),\quad t\ge 0, \end{aligned}$$

where \(M^c(0)=M^d (0)=0\), \(M_0\) is an \(\fancyscript{F}_0\)-measurable random variable, \(M^c\) is a continuous process in \(\fancyscript{M}^2(\mathbb {R})\) and \(M^d\in \fancyscript{M}^2(\mathbb {R})\).

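We need the quadratic covariation \([Z_1, Z_2]\) of two real-valued semimartingales \(Z_1\) and \(Z_2\), which can be introduced by

$$\begin{aligned} [Z_1, Z_2]_t:=Z_1(t)Z_2(t)-Z_1(0)Z_2(0)-\int _0^t Z_1(s-)\, dZ_2(s)-\int _0^t Z_2(s-)\, dZ_1(s) \end{aligned}\tag{1}$$

for \(t\ge 0\). By the linearity of the integrals in (1) we obtain the property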

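From Theorem 4.52 in [15], we know that \([Z_1, Z_2]\) is also given by

$$\begin{aligned} [Z_1, Z_2]_t=\left\langle M_1^c, M_2^c\right\rangle _t+\sum _{0< s\le t}{\varDelta } Z_1(s)\, {\varDelta } Z_2(s) \end{aligned}\tag{2}$$

for \(t\ge 0\), where \(M_1^c\) and \(M_2^c\) are the continuous martingale parts of \(Z_1\) and \(Z_2\). Furthermore, we set \({\varDelta } Z(s):=Z(s)-Z(s-)\) with \(Z(s-):=\lim _{t\uparrow s} Z(t)\) for a real-valued semimartingale Z. Rearranging Eq. (1), we obtain the Ito product formula

$$\begin{aligned} Z_1(t)Z_2(t)=Z_1(0)Z_2(0)+\int _0^t Z_1(s-)\, dZ_2(s)+\int _0^t Z_2(s-)\, dZ_1(s)+[Z_1, Z_2]_t \end{aligned}\tag{3}$$

for \(t\ge 0\), which we use for the following corollaries: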

Corollary 2.4

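Let Y and Z be two \(\mathbb {R}^d\)-valued semimartingales, then

$$\begin{aligned} Y^T(t)Z(t)=Y^T(0)Z(0)+\int _0^t Y^T(s-)\, dZ(s)+\int _0^t Z^T(s-)\, dY(s)+\sum _{i=1}^d \left[ e_i^T Y, e_i^T Z\right] _t \end{aligned}$$

for all \(t\ge 0\), where \(e_i\) is the i-th unit vector in \(\mathbb {R}^d\).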

Proof

We have

by applying the product formula in (3). \(\square \)

Corollary 2.5

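Let Y be an \(\mathbb {R}^d\)-valued and Z be an \(\mathbb {R}^n\)-valued semimartingale, then

$$\begin{aligned} Y(t)Z^T(t)=Y(0)Z^T(0)+\int _0^t dY(s)\, Z^T(s-)+\int _0^t Y(s-)\, dZ^T(s)+\left( \left[ e_i^T Y, e_j^T Z\right] _t\right) _{i=1, \ldots , d;\; j=1, \ldots , n} \end{aligned}$$

for all \(t\ge 0\).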

Proof

We consider the stochastic differential of the ij-th component of the matrix-valued process \(Y(t) Z^T(t), t\ge 0\), and obtain the following via the product formula in (3):

for all \(t\ge 0, i\in \left\{ 1, \ldots , d\right\} \), and \(j\in \left\{ 1, \ldots , n\right\} \), where \(e_i\) is the i-th unit vector in \(\mathbb {R}^d\) or in \(\mathbb {R}^n\), respectively. Hence, in compact form we have

for all \(t\ge 0\). \(\square \)
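The product formula (3) has an exact discrete-time counterpart that may help build intuition: on a time grid, the increment of a product splits into two left-point sums (the analogues of the integrals with \(Z(s-)\)) and the sum of products of increments (the analogue of \([Z_1, Z_2]\)). The following sketch checks this summation-by-parts identity on two simulated paths with jumps; the path model and the helper `product_formula_terms` are illustrative assumptions, not part of the paper.

```python
import numpy as np

def product_formula_terms(z1, z2):
    """Pieces of the discrete product formula for paths sampled on a common grid."""
    dz1 = np.diff(z1)
    dz2 = np.diff(z2)
    # Left-point (non-anticipating) sums: analogues of int Z_1(s-) dZ_2 and int Z_2(s-) dZ_1.
    int_z1_dz2 = np.sum(z1[:-1] * dz2)
    int_z2_dz1 = np.sum(z2[:-1] * dz1)
    # Sum of products of increments: analogue of the quadratic covariation [Z_1, Z_2]_t.
    covariation = np.sum(dz1 * dz2)
    return int_z1_dz2, int_z2_dz1, covariation

rng = np.random.default_rng(0)
n_steps, dt = 1000, 1e-3
# Gaussian increments plus rare jumps (a crude jump-diffusion path, chosen for illustration).
jumps = rng.poisson(2.0 * dt, size=(2, n_steps)) * rng.normal(1.0, 0.5, size=(2, n_steps))
incr = rng.normal(0.0, np.sqrt(dt), size=(2, n_steps)) + jumps
z1 = np.concatenate([[1.0], 1.0 + np.cumsum(incr[0])])
z2 = np.concatenate([[-0.5], -0.5 + np.cumsum(incr[1])])

int12, int21, cov = product_formula_terms(z1, z2)
lhs = z1[-1] * z2[-1] - z1[0] * z2[0]
print(abs(lhs - (int12 + int21 + cov)))  # zero up to rounding
```

Using left-point values is what makes the sums non-anticipating; with right-point values in one of the sums, the covariation term would be absorbed into that sum instead.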

2.2 Levy processes

Definition 2.6

Let \(L=\left( L\left( t\right) \right) _{t\ge 0}\) be a cadlag stochastic process with values in \(\mathbb {R}\) having independent and homogeneous increments. If, furthermore, \(L\left( 0\right) =0\) \(\mathbb {P}\)-almost surely, and L is continuous in probability, then L is called (real-valued) Levy process.

Below, we focus on square integrable Levy processes L. The following theorem is proven analogously to Theorem 4.44 in [21].

Theorem 2.7

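We set \(\tilde{m}=\mathbb {E} \left[ L(1)\right] \). For square integrable Levy processes L and \(t, s\ge 0\) it holds

$$\begin{aligned} \mathbb {E}\left[ L(t)\right] =\tilde{m}\, t\quad \text {and}\quad \mathbb {E}\left[ \left( L(t)-\tilde{m} t\right) \left( L(s)-\tilde{m} s\right) \right] =\min \left\{ t, s\right\} \mathbb {E}\left[ \left( L(1)-\tilde{m}\right) ^2\right] . \end{aligned}$$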

Proposition 2.8

Let L be a square integrable Levy process adapted to a filtration \((\fancyscript{F}_t)_{t\ge 0}\), such that the increments \(L(t+h)-L(t)\) are independent of \(\fancyscript{F}_t\) \((t, h\ge 0)\), then L is a martingale with respect to \((\fancyscript{F}_t)_{t\ge 0}\) if and only if L has mean zero.

Proof

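First, we assume that L has mean zero, then the conditional expectation \(\mathbb {E}\left\{ L(t) \vert \fancyscript{F}_s\right\} \) fulfills

$$\begin{aligned} \mathbb {E}\left\{ L(t) \vert \fancyscript{F}_s\right\} =\mathbb {E}\left\{ L(t)-L(s) \vert \fancyscript{F}_s\right\} +L(s)=\mathbb {E}\left[ L(t)-L(s)\right] +L(s)=L(s) \end{aligned}$$

for \(0\le s <t\), since the increment \(L(t)-L(s)\) is independent of \(\fancyscript{F}_s\). Conversely, if L is a martingale, then it has a constant mean function, since

$$\begin{aligned} \mathbb {E}\left[ L(t)\right] =\mathbb {E}\left[ \mathbb {E}\left\{ L(t) \vert \fancyscript{F}_s\right\} \right] =\mathbb {E}\left[ L(s)\right] \end{aligned}$$

for \(0\le s <t\). But by Theorem 2.7, the mean function is linear and vanishes at \(t=0\). Thus, \(\mathbb {E}\left[ L(t)\right] =0\) for all \(t\ge 0\). \(\square \)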

We set \(M(t):=L(t)-t \mathbb {E} [L(1)], t\ge 0\), where L is square integrable. By Proposition 2.8, M is a square integrable martingale with respect to \((\fancyscript{F}_t)_{t\ge 0}\) and a Levy process as well. So, we have the following representation for square integrable Levy processes L:

$$\begin{aligned} L(t)=M(t)+\mathbb {E} [L(1)]\, t,\quad t\ge 0. \end{aligned}$$

The compensator \(\left\langle M, M\right\rangle \) of M is deterministic and continuous and given by

$$\begin{aligned} \left\langle M, M\right\rangle _t=\mathbb {E}\left[ M^2(1)\right] t,\quad t\ge 0, \end{aligned}$$

because \(M^2(t)-\mathbb {E}\left[ M^2(1)\right] t\), \(t\ge 0\), is a martingale with respect to \((\fancyscript{F}_t)_{t\ge 0}\).
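To illustrate the representation \(L(t)=M(t)+\mathbb {E}[L(1)]\, t\) and the compensator \(\left\langle M, M\right\rangle _t=\mathbb {E}[M^2(1)]\, t\), the following Monte Carlo sketch uses a compound Poisson process with normally distributed jumps, an example chosen here only for convenience. For such a process with jump rate \(\lambda \) and generic jump size J, \(\mathbb {E}[L(1)]=\lambda \mathbb {E}[J]\) and \(\mathbb {E}[M^2(1)]=\lambda \mathbb {E}[J^2]\); since the compensated process M has no continuous martingale part, its quadratic variation \([M, M]_t\) is the sum of the squared jumps by (2). The parameter values are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
lam, mu_j, sigma_j = 3.0, 0.4, 0.8   # jump rate and N(mu_j, sigma_j^2) jump sizes (arbitrary)
t, n_paths = 2.0, 200_000

# Compound Poisson L(t): N(t) ~ Poisson(lam t) jumps, each of size J ~ N(mu_j, sigma_j^2).
counts = rng.poisson(lam * t, size=n_paths)
path_idx = np.repeat(np.arange(n_paths), counts)        # path index of every jump
jumps = rng.normal(mu_j, sigma_j, size=counts.sum())
L_t = np.bincount(path_idx, weights=jumps, minlength=n_paths)
qv_t = np.bincount(path_idx, weights=jumps**2, minlength=n_paths)  # [M, M]_t = sum of squared jumps

m_tilde = lam * mu_j                  # E[L(1)]
c = lam * (sigma_j**2 + mu_j**2)      # E[M(1)^2] = lam E[J^2]
M_t = L_t - t * m_tilde               # M(t) = L(t) - t E[L(1)]

print(M_t.mean())                            # close to 0 (Proposition 2.8)
print((M_t**2).mean(), qv_t.mean(), c * t)   # all close to <M, M>_t = E[M^2(1)] t
```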

2.3 Stochastic integration

We assume that \(M\in \fancyscript{M}^2(\mathbb {R})\). The definition of an integral with respect to M is similar to that with respect to a Wiener process W, which makes the construction convenient. A definition of an integral with respect to W can be found, for example, in Applebaum [2], Arnold [3], and Kloeden and Platen [16]. Furthermore, Applebaum [2] gives a definition of an integral with respect to so-called “martingale-valued measures”, which generalizes the integral introduced here. We take the definition of the integral with respect to M from Chap. 6.5 in the book of Kuo [17].

First of all, we characterize the class of simple processes.

Definition 2.9

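A process \({\varPsi }=\left( {\varPsi }(t)\right) _{t\in [0,T]}\) is called simple if it has the following representation:

$$\begin{aligned} {\varPsi }(t)=\sum _{i=0}^{m} {\varPsi }_i\, 1_{(t_i, t_{i+1}]}(t),\quad t\in [0, T], \end{aligned}$$

for \(0=t_0<t_1<\cdots <t_{m+1}=T\). Here, the random variables \({\varPsi }_i\) are \(\fancyscript{F}_{t_i}\)-measurable and bounded, \(i\in \left\{ 0, 1,\ldots , m\right\} \).

For simple processes \({\varPsi }\), we define

$$\begin{aligned} I^M_T({\varPsi }):=\int _0^T {\varPsi }(s)\, dM(s):=\sum _{i=0}^{m} {\varPsi }_i\left( M(t_{i+1})-M(t_i)\right) , \end{aligned}$$

and for \(0\le t_0\le t\le T\), we set

$$\begin{aligned} \int _{t_0}^t {\varPsi }(s)\, dM(s):=I^M_T\left( {\varPsi }\, 1_{(t_0, t]}\right) . \end{aligned}$$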

Definition 2.10

Consider all processes \(\left( F(t)\right) _{t\in [0,T]}\) that are adapted to the filtration \(\left( \fancyscript{F}_t\right) _{t\in [0,T]}\) and have left continuous trajectories. We define \(\fancyscript{P}_T\) as the smallest sub \(\sigma \)-algebra of \(\fancyscript{B}({[0,T]})\otimes \fancyscript{F}\) with respect to which all these processes, viewed as mappings \(F:{[0,T]}\times {\varOmega } \rightarrow \mathbb {R}\), are measurable. We call \(\fancyscript{P}_T\) the predictable \(\sigma \)-algebra.

Remark

\(\fancyscript{P}_T\) is generated as follows:

In Definition 2.10, we can replace the time interval [0, T] by \(\mathbb {R}_+\). The resulting predictable \(\sigma \)-algebra is denoted by \(\fancyscript{P}\). We call \(\fancyscript{P}_T\)- or \(\fancyscript{P}\)-measurable processes predictable.

To extend the class of processes that are integrable with respect to M beyond simple processes, we introduce \(\fancyscript{L}^2_T\) as the space of all predictable mappings \({\varPsi }\) on \([0,T]\times {\varOmega }\) with \(\left\| {\varPsi }\right\| _T<\infty \), where

$$\begin{aligned} \left\| {\varPsi }\right\| _T^2:=\mathbb {E}\int _0^T \left| {\varPsi }(s)\right| ^2 d\left\langle M, M\right\rangle _s, \end{aligned}\tag{6}$$

and \(\left\langle M, M\right\rangle \) is the compensator of M introduced in Proposition 2.2.

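By Chap. 6.5 in Kuo [17], we can choose a sequence \(\left( {\varPsi }_n\right) _{n\in \mathbb {N}}\subset \fancyscript{L}^2_T\) of simple processes, such that

$$\begin{aligned} \left\| {\varPsi }_n-{\varPsi }\right\| _T\rightarrow 0 \end{aligned}$$

for \({\varPsi }\in \fancyscript{L}^2_T\) and \(n\rightarrow \infty \). Since \(\mathbb {E}\left| I^M_T({\varPsi }_n)-I^M_T({\varPsi }_k)\right| ^2=\left\| {\varPsi }_n-{\varPsi }_k\right\| _T^2\) for simple processes, \(\left( I^M_T({\varPsi }_n)\right) _{n\in \mathbb {N}}\) is a Cauchy sequence in \(L^2\left( {\varOmega }, \fancyscript{F}, \mathbb {P}\right) \). Therefore, we can define

$$\begin{aligned} I^M_T({\varPsi }):=\int _0^T {\varPsi }(s)\, dM(s):=L^2-\lim _{n\rightarrow \infty } I^M_T({\varPsi }_n), \end{aligned}$$

and for \(0\le t_0\le t\le T\) we set

$$\begin{aligned} \int _{t_0}^t {\varPsi }(s)\, dM(s):=I^M_T\left( {\varPsi }\, 1_{(t_0, t]}\right) . \end{aligned}$$

Here, “\(L^2-\lim _{n\rightarrow \infty }\)” denotes the limit in \(L^2\left( {\varOmega }, \fancyscript{F}, \mathbb {P}\right) \).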

By Theorem 6.5.8 in Kuo [17], the integral with respect to M has the following properties:

Theorem 2.11

If \({\varPsi }\in \fancyscript{L}^2_T\) for \(T>0\), then

(i) the integral with respect to M has mean zero:

$$\begin{aligned} \mathbb {E}\left[ \int _0^T {\varPsi }(s) dM(s)\right] =0, \end{aligned}$$

(ii) the second moment of \(I^M_T({\varPsi })\) is given by

$$\begin{aligned} \mathbb {E}\left| \int _0^T {\varPsi }(s) dM(s)\right| ^2=\mathbb {E} \int _0^T\left| {\varPsi }(s)\right| ^2 d\left\langle M, M\right\rangle _s, \end{aligned}$$

(iii) and the process

$$\begin{aligned} \left( \int _0^t {\varPsi }(s) dM(s)\right) _{t\in [0, T]} \end{aligned}$$

is a martingale with respect to \(\left( \fancyscript{F}_t\right) _{t\in [0, T]}\).
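Theorem 2.11 can be checked numerically for a simple integrand in the sense of Definition 2.9. The sketch below assumes M is a compensated Poisson process \(M(t)=N(t)-\lambda t\), so that \(\left\langle M, M\right\rangle _t=\lambda t\), and uses the bounded, \(\fancyscript{F}_{t_i}\)-measurable values \({\varPsi }_i=\cos (M(t_i))\); both choices are made only for illustration. It estimates the mean of \(I^M_T({\varPsi })\), which should be close to zero by (i), and compares both sides of the isometry (ii).

```python
import numpy as np

rng = np.random.default_rng(2)
lam, T, n_sub, n_paths = 2.0, 1.0, 20, 100_000   # n_sub = m + 1 subintervals
grid = np.linspace(0.0, T, n_sub + 1)            # 0 = t_0 < ... < t_{m+1} = T
dt = np.diff(grid)

# Increments of the compensated Poisson process M(t) = N(t) - lam t; <M, M>_t = lam t.
dM = rng.poisson(lam * dt, size=(n_paths, n_sub)) - lam * dt
M_left = np.hstack([np.zeros((n_paths, 1)), np.cumsum(dM, axis=1)[:, :-1]])  # M(t_i)

# Simple integrand: Psi_i = cos(M(t_i)) is bounded and F_{t_i}-measurable.
psi = np.cos(M_left)
integral = np.sum(psi * dM, axis=1)        # sum_i Psi_i (M(t_{i+1}) - M(t_i)) per path

mean_integral = np.mean(integral)                   # Theorem 2.11 (i): close to 0
lhs = np.mean(integral**2)                          # E |int Psi dM|^2
rhs = np.mean(np.sum(psi**2 * lam * dt, axis=1))    # E int |Psi|^2 d<M, M>
print(mean_integral, lhs, rhs)
```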

2.4 Levy type integrals

Below, we determine the mean of the quadratic covariation of the following Levy type integrals:

$$\begin{aligned} \tilde{Z}_j(t)=\tilde{Z}_j(0)+\int _0^t A_j(s)\, ds+\sum _{i=1}^q\int _0^t B^i_j(s)\, dM^i(s),\quad t\ge 0,\ j=1, 2, \end{aligned}$$

where the processes \(M^i\) (\(i=1, \ldots , q\)) are uncorrelated scalar square integrable Levy processes with mean zero. In addition, the processes \(B^i_1, B^i_2\) are integrable with respect to \(M^i\) (\(i=1, \ldots , q\)), which by Sect. 2.3 means that they are predictable with

$$\begin{aligned} \mathbb {E}\int _0^T \left| B^i_j(s)\right| ^2 ds<\infty ,\quad T>0,\ j=1, 2, \end{aligned}$$

considering (6) with \(\left\langle M, M\right\rangle _t=\mathbb {E}\left[ M^2(1)\right] t\). Furthermore, \(A_1, A_2\) are \(\mathbb {P}\)-almost surely Lebesgue integrable and \((\fancyscript{F}_t)_{t\ge 0}\)-adapted.

We set \(b_1(t):=\sum _{i=1}^q\int _0^t B^i_1(s) dM^i(s)\) and \(b_2(t):=\sum _{i=1}^q\int _0^t B^i_2(s) dM^i(s)\) and obtain

for \(t\ge 0\) considering Eq. (2), because \(\tilde{Z}_i\) has the same jumps and the same martingale part as \(b_i\) (\(i=1, 2\)). We know that

for \(t\ge 0\). Using the definition in (1) yields

Thus,

Since \(M^i\) and \(M^j\) are uncorrelated processes for \(i\ne j\), we get

by applying Theorem 2.11 (ii), where \(c_i:=\mathbb {E}\left[ \left( M^i(1)\right) ^2\right] \). Hence,

Analogously, we can show that

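hold for \(t\ge 0\). Considering Eq. (7), we obtain

$$\begin{aligned} \mathbb {E}\left[ \tilde{Z}_1, \tilde{Z}_2\right] _t=\sum _{i=1}^q c_i\, \mathbb {E}\int _0^t B^i_1(s) B^i_2(s)\, ds,\quad t\ge 0. \end{aligned}\tag{8}$$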

At the end of this section, we refer to Sect. 4.4.3 in Applebaum [2]. There, one can find some remarks regarding the quadratic covariation of the Levy type integrals defined in that book.

3 Linear control with Levy noise

Before describing balanced truncation for the stochastic case, we define observability and reachability. We introduce observability and reachability Gramians for our Levy driven system as Benner and Damm [5] do in Sect. 2.2 of their paper. We additionally show that the observable states are characterized by the observability Gramian and that the reachability Gramian provides partial information about the reachable states. This is analogous to deterministic systems, where observability and reachability concepts are described in Sects. 4.2.1 and 4.2.2 in Antoulas [1]. This section extends [7] by providing more details and by considering a more general framework.

3.1 Reachability concept

Let \(M_1, \ldots , M_q\) be real-valued uncorrelated and square integrable Levy processes with mean zero defined on a filtered probability space \(\left( {\varOmega }, \fancyscript{F}, (\fancyscript{F}_t)_{t\ge 0}, \mathbb {P}\right) \). In addition, we assume \(M_k\) (\(k=1, \ldots , q\)) to be \((\fancyscript{F}_t)_{t\ge 0}\)-adapted and the increments \(M_k(t+h)-M_k(t)\) to be independent of \(\fancyscript{F}_t\) for \(t, h\ge 0\). We consider the following equations:

$$\begin{aligned} dX(t)&=\left[ A X(t)+B u(t)\right] dt+\sum _{k=1}^q {\varPsi }^k X(t-)\, dM_k(t),\quad t\ge 0,\\ X(0)&=x_0, \end{aligned}\tag{9}$$

where \(A, {\varPsi }^k \in \mathbb {R}^{n\times n}\) and \(B\in \mathbb {R}^{n\times m}\). We denote by \(L^2_T\) the space of all adapted stochastic processes v with values in \(\mathbb {R}^m\) that are square integrable with respect to \(\mathbb {P} \otimes dt\). We call the norm in \(L^2_T\) the energy norm. It is given by

$$\begin{aligned} \left\| v\right\| _{L^2_T}^2:=\mathbb {E}\int _0^T \left\| v(t)\right\| _2^2\, dt, \end{aligned}$$

where we regard processes \(v_1\) and \(v_2\) as equal in \(L^2_T\) if they coincide almost surely with respect to \(\mathbb {P} \otimes dt\). For \(T=\infty \), we denote the space by \(L^2\). Further, we assume that the controls satisfy \(u\in L^2_T\) for every \(T>0\). We start with the definition of a solution of (9).

Definition 3.1

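An \(\mathbb {R}^n\)-valued and \((\fancyscript{F}_t)_{t\ge 0}\)-adapted cadlag process \(\left( X(t)\right) _{t\ge 0}\) is called a solution of (9) if

$$\begin{aligned} X(t)=x_0+\int _0^t \left[ A X(s)+B u(s)\right] ds+\sum _{k=1}^q\int _0^t {\varPsi }^k X(s-)\, dM_k(s) \end{aligned}$$

holds \(\mathbb {P}\)-almost surely for all \(t\ge 0\).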

Below, the solution of (9) at time \(t\ge 0\) with initial condition \(x_0\in \mathbb {R}^n\) and given control u is always denoted by \(X(t, x_0, u)\). For the solution of (9) in the uncontrolled case (\(u\equiv 0\)), we briefly write \(Y_{x_0}(t):=X(t, x_0, 0)\) and call \(Y_{x_0}\) the homogeneous solution. Furthermore, \(\left\| \cdot \right\| _2\) denotes the Euclidean norm. We assume the homogeneous solution to be asymptotically mean square stable, which means that

$$\begin{aligned} \mathbb {E}\left\| Y_{y_0}(t)\right\| _2^2\rightarrow 0 \end{aligned}$$

for \(t\rightarrow \infty \) and \(y_0\in \mathbb {R}^n\). This concept of stability is also used in Benner and Damm [5] and is necessary for defining the (infinite) Gramians introduced later.

Proposition 3.2

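Let \(Y_{y_0}\) be the solution of (9) in the uncontrolled case with any initial value \(y_0\in \mathbb {R}^n\), then \(\mathbb {E} \left[ Y_{y_0} (t) Y_{y_0}^T(t)\right] \) is the solution of the matrix integral equation

$$\begin{aligned} \mathbb {E}\left[ Y_{y_0}(t)Y_{y_0}^T(t)\right] =y_0y_0^T+\int _0^t \Big( A\, \mathbb {E}\left[ Y_{y_0}(s)Y_{y_0}^T(s)\right] +\mathbb {E}\left[ Y_{y_0}(s)Y_{y_0}^T(s)\right] A^T+\sum _{k=1}^q {\varPsi }^k\, \mathbb {E}\left[ Y_{y_0}(s)Y_{y_0}^T(s)\right] \left( {\varPsi }^k\right) ^T \mathbb {E}\left[ M_k(1)^2\right] \Big) ds \end{aligned}$$

for \(t\ge 0\).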

Proof

We determine the stochastic differential of the matrix-valued process \(Y_{y_0} Y_{y_0}^T\) using the Ito formula in Corollary 2.5. This yields

where \(e_i\) is the i-th unit vector. We obtain

by inserting the stochastic differential of \(Y_{y_0}\). Thus, by taking the expectation, we obtain

applying Theorem 2.11 (i). Considering Eq. (8), we have

where \(c_k:=\mathbb {E} \left[ M_k(1)^2\right] \). In addition, we use that a cadlag process has at most countably many jumps on a finite time interval (see Theorem 2.7.1 in Applebaum [2]), so that the left limits in the ds-integrals can be replaced by the function values themselves. Thus,

\(\square \)
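In the scalar case \(n=q=1\) with \(A=a\), \({\varPsi }^1=\psi \) and \(M_1(t)=N(t)-\lambda t\) a compensated Poisson process (an assumption chosen because the solution is then explicit, with \(c_1=\lambda \)), Proposition 3.2 reduces to \(\frac{d}{dt}\mathbb {E}[Y^2(t)]=(2a+\psi ^2\lambda )\, \mathbb {E}[Y^2(t)]\). Between jumps the solution evolves with rate \(a-\psi \lambda \), and at each jump it is multiplied by \(1+\psi \), so \(Y(t)=y_0{\text {e}}^{(a-\psi \lambda )t}(1+\psi )^{N(t)}\). The following Monte Carlo sketch compares the sampled second moment with the exponential predicted by Proposition 3.2; the parameter values are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(3)
a, psi, lam = -1.0, 0.5, 1.0     # scalar A, Psi^1 and jump rate (illustrative values)
y0, t, n_paths = 1.0, 1.0, 200_000
c = lam                          # c_1 = E[M_1(1)^2] for M_1(t) = N(t) - lam t

# Exact pathwise solution of dY = a Y dt + psi Y(t-) dM_1(t):
# drift rate (a - psi lam) between jumps, multiplication by (1 + psi) at each jump.
N_t = rng.poisson(lam * t, size=n_paths)
Y_t = y0 * np.exp((a - psi * lam) * t) * (1.0 + psi) ** N_t

mc_second_moment = np.mean(Y_t**2)
# Scalar version of Proposition 3.2: d/dt E[Y^2] = (2a + psi^2 c) E[Y^2].
ode_second_moment = y0**2 * np.exp((2 * a + psi**2 * c) * t)
print(mc_second_moment, ode_second_moment)
```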

We introduce an additional concept of stability for the homogeneous system (\(u\equiv 0\)) corresponding to Eq. (9). We call \(Y_{y_0}\) exponentially mean square stable if there exist \(c, \beta >0\) such that

$$\begin{aligned} \mathbb {E}\left\| Y_{y_0}(t)\right\| _2^2\le c\left\| y_0\right\| _2^2\, {\text {e}}^{-\beta t} \end{aligned}$$

for \(t\ge 0\) and \(y_0\in \mathbb {R}^n\). As the next theorem states, this notion of stability is equivalent to asymptotic mean square stability.

Theorem 3.3

The following are equivalent:

(i) The uncontrolled Eq. (9) is asymptotically mean square stable.

(ii) The uncontrolled Eq. (9) is exponentially mean square stable.

(iii) The eigenvalues of \(\left( I_n\otimes A+A\otimes I_n+\sum _{k=1}^q{\varPsi }^k\otimes {\varPsi }^k \cdot \mathbb {E}\left[ M_k(1)^2\right] \right) \) have negative real parts.

Proof

Due to the similarity of the proofs we refer to Theorem 1.5.3 in Damm [11], where these results are proven for the Wiener case. \(\square \)
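Condition (iii) of Theorem 3.3 gives a finite-dimensional test for mean square stability. A minimal NumPy sketch follows; the function name `is_mean_square_stable` and the argument convention (a list of matrices \({\varPsi }^k\) and a list of constants \(c_k=\mathbb {E}[M_k(1)^2]\)) are our own choices.

```python
import numpy as np

def is_mean_square_stable(A, Psis, cs):
    """Criterion (iii) of Theorem 3.3.

    A: (n, n) array; Psis: list of (n, n) arrays Psi^k; cs: list of c_k = E[M_k(1)^2].
    Returns True if all eigenvalues of I_n (x) A + A (x) I_n + sum_k c_k Psi^k (x) Psi^k
    have negative real parts.
    """
    A = np.asarray(A, dtype=float)
    I = np.eye(A.shape[0])
    K = np.kron(I, A) + np.kron(A, I)
    for Psi, c in zip(Psis, cs):
        K += c * np.kron(Psi, Psi)
    return bool(np.all(np.linalg.eigvals(K).real < 0))

A = np.array([[-1.0]])
print(is_mean_square_stable(A, [np.array([[1.0]])], [1.0]))  # True:  -2 + 1 = -1
print(is_mean_square_stable(A, [np.array([[2.0]])], [1.0]))  # False: -2 + 4 = 2
```

The scalar example shows that sufficiently strong multiplicative noise can destroy mean square stability even when A itself is stable.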

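As in the deterministic case, there exists a fundamental solution, which we define by

$$\begin{aligned} {\varPhi }(t):=\left[ Y_{e_1}(t), Y_{e_2}(t), \ldots , Y_{e_n}(t)\right] \end{aligned}$$

for \(t\ge 0\), where \(e_i\) is the i-th unit vector (\(i=1, \ldots , n\)). Thus, \({\varPhi }\) fulfills the following integral equation:

$$\begin{aligned} {\varPhi }(t)=I_n+\int _0^t A{\varPhi }(s)\, ds+\sum _{k=1}^q\int _0^t {\varPsi }^k{\varPhi }(s-)\, dM_k(s),\quad t\ge 0. \end{aligned}$$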

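The columns of \({\varPhi }\) represent a minimal generating set such that we have \(Y_{y_0}(t)={\varPhi }(t) y_0\). With \(B=\left[ b_1, b_2, \ldots , b_m\right] \) one can see that

$$\begin{aligned} {\varPhi }(t)B=\left[ Y_{b_1}(t), Y_{b_2}(t), \ldots , Y_{b_m}(t)\right] . \end{aligned}$$

Hence, we have

$$\begin{aligned} \mathbb {E}\left[ {\varPhi }(t) B B^T {\varPhi }^T(t)\right] =\sum _{i=1}^m \mathbb {E}\left[ Y_{b_i}(t)Y_{b_i}^T(t)\right] , \end{aligned}$$

such that, by Proposition 3.2,

$$\begin{aligned} \mathbb {E}\left[ {\varPhi }(t) B B^T {\varPhi }^T(t)\right] =B B^T+\int _0^t \Big( A\, \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] +\mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] A^T+\sum _{k=1}^q {\varPsi }^k\, \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] \left( {\varPsi }^k\right) ^T \mathbb {E}\left[ M_k(1)^2\right] \Big) ds \end{aligned}\tag{13}$$

holds for every \(t\ge 0\). Due to the assumption that the homogeneous solution \(Y_{y_0}\) is asymptotically mean square stable for an arbitrary initial value \(y_0\), which yields \(\mathbb {E} \left[ Y_{y_0}^T(t) Y_{y_0}^{}(t)\right] \rightarrow 0\) for \(t\rightarrow \infty \), we obtain

$$\begin{aligned} -B B^T=A\int _0^\infty \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] ds+\int _0^\infty \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] ds\, A^T+\sum _{k=1}^q {\varPsi }^k \int _0^\infty \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] ds \left( {\varPsi }^k\right) ^T \mathbb {E}\left[ M_k(1)^2\right] \end{aligned}$$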

by taking the limit \(t\rightarrow \infty \) in Eq. (13). Therefore, we can conclude that \(P:=\int _0^\infty \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] ds\), which exists by the asymptotic mean square stability assumption (by Theorem 3.3, the integrand then decays exponentially), is the solution of the generalized Lyapunov equation

$$\begin{aligned} A P+P A^T+\sum _{k=1}^q {\varPsi }^k P \left( {\varPsi }^k\right) ^T \mathbb {E}\left[ M_k(1)^2\right] =-B B^T. \end{aligned}\tag{14}$$

P is the reachability Gramian of system (9). This definition of the Gramian is also used in Benner and Damm [5] for stochastic systems driven by Wiener noise; note that in the Wiener case \(\mathbb {E} \left[ M_k(1)^2\right] =1\).

Remark

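The solution of the matrix equation (14) is unique if and only if the solution of the vectorized equation

$$\begin{aligned} \left( I_n\otimes A+A\otimes I_n+\sum _{k=1}^q{\varPsi }^k\otimes {\varPsi }^k \cdot \mathbb {E}\left[ M_k(1)^2\right] \right) {\text {vec}}(P)=-{\text {vec}}(B B^T) \end{aligned}$$

is unique. By the assumption of asymptotic mean square stability and Theorem 3.3, the eigenvalues of the matrix \(I_n\otimes A+A\otimes I_n+\sum _{k=1}^q{\varPsi }^k\otimes {\varPsi }^k \cdot \mathbb {E}\left[ M_k(1)^2\right] \) have negative real parts and are, in particular, nonzero. Hence, the matrix Eq. (14) is uniquely solvable.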
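The vectorized form in the Remark suggests a direct way to compute P for small n. The sketch below assumes column-major vectorization, for which \({\text {vec}}(XYZ)=(Z^T\otimes X)\, {\text {vec}}(Y)\); it forms the \(n^2\times n^2\) matrix from Theorem 3.3 (iii) explicitly, so it costs \(O(n^6)\) operations and is not meant for large Galerkin systems. The function name `reachability_gramian` is hypothetical.

```python
import numpy as np

def reachability_gramian(A, B, Psis, cs):
    """Solve A P + P A^T + sum_k c_k Psi^k P (Psi^k)^T = -B B^T, i.e. Eq. (14).

    Column-major vec: vec(A P) = (I kron A) vec(P), vec(P A^T) = (A kron I) vec(P),
    vec(Psi P Psi^T) = (Psi kron Psi) vec(P). Dense O(n^6) solve, small n only.
    """
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    I = np.eye(n)
    K = np.kron(I, A) + np.kron(A, I)
    for Psi, c in zip(Psis, cs):
        K += c * np.kron(Psi, Psi)
    rhs = -(B @ B.T).flatten(order="F")
    P = np.linalg.solve(K, rhs).reshape((n, n), order="F")
    return 0.5 * (P + P.T)  # remove rounding asymmetry

# Scalar check: A = -1, Psi^1 = 1, c_1 = 1, B = 1 gives -2P + P = -1, so P = 1.
P = reachability_gramian(np.array([[-1.0]]), np.array([[1.0]]), [np.array([[1.0]])], [1.0])
print(P)  # [[1.]]
```

In the scalar example, \(\mathbb {E}[{\varPhi }^2(s)]={\text {e}}^{(2a+\psi ^2c_1)s}={\text {e}}^{-s}\), so the definition \(P=\int _0^\infty \mathbb {E}[{\varPhi }(s)BB^T{\varPhi }^T(s)]\, ds=1\) agrees with the solver.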

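More generally, we consider stochastic processes \(\left( {\varPhi }(t, \tau )\right) _{t\ge \tau }\) with starting time \(\tau \ge 0\) and initial condition \({\varPhi }(\tau , \tau )=I_n\) satisfying

$$\begin{aligned} {\varPhi }(t, \tau )=I_n+\int _\tau ^t A{\varPhi }(s, \tau )\, ds+\sum _{k=1}^q\int _\tau ^t {\varPsi }^k{\varPhi }(s-, \tau )\, dM_k(s) \end{aligned}\tag{15}$$

for \(t\ge \tau \ge 0\). Of course, we have \({\varPhi }(t, 0)={\varPhi }(t)\). Analogous to Eq. (13), we can show that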

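This yields that \(\mathbb {E} \left[ {\varPhi }(t, \tau ) B B^T {\varPhi }^T(t, \tau )\right] \) is the solution of the differential equation

$$\begin{aligned} \frac{d}{dt}\mathbb {Y}(t)=A\mathbb {Y}(t)+\mathbb {Y}(t)A^T+\sum _{k=1}^q {\varPsi }^k\mathbb {Y}(t)\left( {\varPsi }^k\right) ^T \mathbb {E}\left[ M_k(1)^2\right] \end{aligned}\tag{17}$$

for \(t\ge \tau \) with initial condition \(\mathbb {Y}(\tau )=B B^T\).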

Remark

For \(t\ge \tau \ge 0\), we have \({\varPhi }(t, \tau )={\varPhi }(t) {\varPhi }^{-1}(\tau )\), since \({\varPhi }(t) {\varPhi }^{-1}(\tau )\) fulfills Eq. (15).

In contrast to the deterministic case \(({\varPsi }^k=0)\), the fundamental solution does not have the semigroup property. In particular, \({\varPhi }(t, \tau )={\varPhi }(t-\tau )\) does not hold \(\mathbb {P}\)-almost surely in general, because the trajectories of the noise processes on \([0, t-\tau ]\) and \([\tau , t]\) are different. We can, however, conclude that \(\mathbb {E} \left[ {\varPhi }(t, \tau ) B B^T {\varPhi }^T(t, \tau )\right] =\mathbb {E} \left[ {\varPhi }(t-\tau ) B B^T {\varPhi }^T(t-\tau )\right] \), since both terms solve Eq. (17) with the same initial condition, as can be seen by employing (13).

Now, we derive the solution representation of the system (9) using the stochastic variation of constants method. For the Wiener case, this result is stated in Theorem 1.4.1 in Damm [11].

Proposition 3.4

\(\left( {\varPhi }(t)z(t)\right) _{t\ge 0}\) is a solution of Eq. (9), where z is given by

$$\begin{aligned} z(t)=x_0+\int _0^t {\varPhi }^{-1}(s) B u(s)\, ds,\quad t\ge 0. \end{aligned}$$

Proof

We want to determine the stochastic differential of \({\varPhi }(t)z(t),\, t\ge 0\), where its i-th component is given by \(e_i^T {\varPhi }(t) z(t)\). Applying the Ito product formula from Corollary 2.4 yields

Above, the quadratic covariation terms are zero, since z is a continuous semimartingale with a martingale part of zero (see Eq. (2)). Applying that \(s\mapsto {\varPhi }(\omega , s)\) and \(s\mapsto {\varPhi }(\omega , s-)\) coincide ds-almost everywhere for \(\mathbb {P}\)-almost all fixed \(\omega \in {\varOmega }\), we have

This yields

\(\square \)

Below, we set \(P_t:=\int _0^t \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] ds\) and call \(P_t\) the finite reachability Gramian at time \(t\ge 0\). Furthermore, we define the so-called finite deterministic Gramian \(P_{D, t}:=\int _0^t {\text {e}}^{A s} B B^T {\text {e}}^{A^T s} ds\). \(P_t\) and \(P_{D, t}, t\ge 0\), coincide in the case \({\varPsi }^k=0\). By X(T, 0, u) we denote the solution of the inhomogeneous system (9) at time T with initial condition zero for a given input u. From Proposition 3.4, we already know that

$$\begin{aligned} X(T, 0, u)={\varPhi }(T)\int _0^T {\varPhi }^{-1}(t) B u(t)\, dt=\int _0^T {\varPhi }(T, t) B u(t)\, dt. \end{aligned}$$

Our goal is now to steer the average state of the system (9) from zero to any given \(x\in \mathbb {R}^n\) via a control u with minimal energy. We first need the following definition, which is motivated by the remarks above Theorem 2.3 in [5].

Definition 3.5

A state \(x\in \mathbb {R}^n\) is called reachable on average (from zero) if there is a time \(T>0\) and a control function \(u\in L^2_T\), such that we have

$$\begin{aligned} \mathbb {E}\left[ X(T, 0, u)\right] =x. \end{aligned}$$

We say that the stochastic system is completely reachable if every average vector \(x\in \mathbb {R}^n\) is reachable. Next, we characterize the set of all reachable average states. First of all, we need the following proposition, where we define \(P:=\int _0^\infty \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] ds\) in analogy to the deterministic case.

Proposition 3.6

The finite reachability Gramians \(P_t, t> 0\), have the same image as the infinite reachability Gramian P, i.e.,

$$\begin{aligned} {\text {im}}P_t={\text {im}}P \end{aligned}$$

for all \(t> 0\).

Proof

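Since P and \(P_t\) are symmetric and positive semidefinite by definition, it is sufficient to show that their kernels are equal. First, we assume \(v\in {\text {ker}}P\). Thus,

$$\begin{aligned} 0\le v^T P_t v\le v^T P v=0, \end{aligned}$$

since \(t\mapsto v^T P_t v\) is increasing, such that \(v\in {\text {ker}}P_t\) follows. On the other hand, if \(v\in {\text {ker}}P_t\), we have

$$\begin{aligned} 0=v^T P_t v=\int _0^t v^T \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] v\, ds. \end{aligned}$$

Since the integrand is nonnegative, we can conclude that \(v^T \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] v=0\) for almost all \(s\in [0, t]\). Additionally, we know that \(t\mapsto \mathbb {E}\left[ {\varPhi }(t) B B^T {\varPhi }^T(t)\right] \) is the solution of the linear matrix differential equation

$$\begin{aligned} \frac{d}{dt}\mathbb {Y}(t)=A\mathbb {Y}(t)+\mathbb {Y}(t)A^T+\sum _{k=1}^q {\varPsi }^k\mathbb {Y}(t)\left( {\varPsi }^k\right) ^T \mathbb {E}\left[ M_k(1)^2\right] \end{aligned}$$

with initial condition \(\mathbb {Y}(0)=B B^T\) for \(t\ge 0\). The vectorized form \({\text {vec}}({\mathbb {Y}})\) satisfies

$$\begin{aligned} \frac{d}{dt}{\text {vec}}(\mathbb {Y}(t))=\left( I_n\otimes A+A\otimes I_n+\sum _{k=1}^q{\varPsi }^k\otimes {\varPsi }^k \cdot \mathbb {E}\left[ M_k(1)^2\right] \right) {\text {vec}}(\mathbb {Y}(t)). \end{aligned}$$

Thus, the entries of \(\mathbb {E}\left[ {\varPhi }(t) B B^T {\varPhi }^T(t)\right] \) are analytic functions. This implies that the function \(f(t):=v^T\mathbb {E}\left[ {\varPhi }(t) B B^T {\varPhi }^T(t)\right] v\) is analytic. Since f is continuous and vanishes almost everywhere on [0, t], it vanishes on [0, t], and by analyticity \(f\equiv 0\) on \([0, \infty )\). Thus,

$$\begin{aligned} v^T P v=\int _0^\infty f(s)\, ds=0, \end{aligned}$$

i.e., \(v\in {\text {ker}}P\).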

\(\square \)

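The next proposition shows that the reachable average states are characterized by the deterministic Gramian \(P_D:=\int _0^\infty {\text {e}}^{As} B B^T {\text {e}}^{A^T s} ds\). This Gramian exists because A is asymptotically stable; stability of A is a necessary condition for asymptotic mean square stability of system (9), since \(\mathbb {E}\left[ Y_{y_0}(t)\right] ={\text {e}}^{At}y_0\) and \(\left\| \mathbb {E}\left[ Y_{y_0}(t)\right] \right\| _2^2\le \mathbb {E}\left\| Y_{y_0}(t)\right\| _2^2\).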

Proposition 3.7

An average state \(x\in \mathbb {R}^n\) is reachable (from zero) if and only if \(x\in {\text {im}}P_D\), where \(P_D:=\int _0^\infty {\text {e}}^{As} B B^T {\text {e}}^{A^T s} ds\).

Proof

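Provided \(x\in {\text {im}}P_D\), we show that this average state can be reached with the following input function:

$$\begin{aligned} u(t)=B^T {\text {e}}^{A^T (T-t)} P^{\#}_{D, T} x,\quad t\in [0, T], \end{aligned}\tag{18}$$

where \(P^{\#}_{D, T}\) denotes the Moore-Penrose pseudoinverse of \(P_{D, T}\). Inserting the function u, we obtain

$$\begin{aligned} \mathbb {E}\left[ X(T, 0, u)\right] =\int _0^T \mathbb {E}\left[ {\varPhi }(T, t)\right] B B^T {\text {e}}^{A^T (T-t)} dt\, P^{\#}_{D, T} x. \end{aligned}$$

Applying the expectation to both sides of Eq. (15) and using Theorem 2.11 (i) yields

$$\begin{aligned} \mathbb {E}\left[ {\varPhi }(t, \tau )\right] ={\text {e}}^{A(t-\tau )},\quad t\ge \tau \ge 0. \end{aligned}$$

Using this fact, we obtain

$$\begin{aligned} \mathbb {E}\left[ X(T, 0, u)\right] =\int _0^T {\text {e}}^{A(T-t)} B B^T {\text {e}}^{A^T (T-t)} dt\, P^{\#}_{D, T} x. \end{aligned}$$

We substitute \(s=T-t\). Since \(x\in {\text {im}}P_{D, T}\) by Proposition 3.6 (applied with \({\varPsi }^k=0\)), we get

$$\begin{aligned} \mathbb {E}\left[ X(T, 0, u)\right] =P_{D, T} P^{\#}_{D, T} x=x. \end{aligned}$$

The energy of the input function \(u(t)=B^T {\text {e}}^{A^T (T-t)} P^{\#}_{D, T} x\) is

$$\begin{aligned} \left\| u\right\| _{L^2_T}^2=\int _0^T x^T P^{\#}_{D, T} {\text {e}}^{A (T-t)} B B^T {\text {e}}^{A^T (T-t)} P^{\#}_{D, T} x\, dt=x^T P^{\#}_{D, T} P_{D, T} P^{\#}_{D, T} x=x^T P^{\#}_{D, T} x. \end{aligned}$$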

On the other hand, if \(x\in \mathbb {R}^n\) is reachable, then there exists an input function u and a time \(t> 0\) such that

by definition. The last equation we get by applying the expectation to both sides of Eq. (9). We assume that \(v\in {\text {ker}}P_D\). Hence,

Employing the Cauchy-Schwarz inequality, we obtain

By the Hölder inequality, we have

Since \(t\mapsto v^T P_{D, t} v\) is increasing, we obtain

Thus, \(\left\langle x, v\right\rangle _2=0\), such that we can conclude that \(x\in {\text {im}}P_D\) due to \({\text {im}}P_D=\left( {\text {ker}}P_D\right) ^\bot \). \(\square \)

Below, we point out the relation between the reachable set and the Gramian \(P:=\int _0^\infty \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] ds\).

Proposition 3.8

If an average state \(x\in \mathbb {R}^n\) is reachable (from zero), then \(x\in {\text {im}}P\). Consequently, \({\text {im}}P_D\subseteq {\text {im}}P\) by Proposition 3.7.

Proof

By definition, there exists an input function u and a time \(t> 0\) such that

for reachable \(x\in \mathbb {R}^n\). We assume that \(v\in {\text {ker}}P\). So, we have

Employing the Cauchy-Schwarz inequality, we obtain

By the Hölder inequality, we have

With the remarks above Proposition 3.4, we obtain

such that

Since \(t\mapsto v^T P_t v\) is increasing, it follows

Thus, \(\left\langle x, v\right\rangle _2=0\), such that we can conclude that \(x\in {\text {im}}P\) due to \({\text {im}}P=\left( {\text {ker}}P\right) ^\bot \). \(\square \)

Now, we state the minimal energy to steer the system to a desired average state.

Proposition 3.9

Let \(x\in \mathbb {R}^n\) be reachable, then the input function given by (18) is the one with the minimal energy to reach x at any time \(T>0\). This minimal energy is given by \(x^T P^{\#}_{D, T} x\).

Proof

We use the following representation from the proof of Proposition 3.7:

Let u(t) be as in (18) and let \(\tilde{u}(t), t\in [0, T]\), be another control with which the average state x is reached at time T, then

such that

follows. Hence, we have

From the proof of Proposition 3.7, we know that the energy of u is given by \(x^T P^{\#}_{D, T} x\).

\(\square \)

The following result shows that the finite reachability Gramian \(P_T\) provides information about the degree of reachability of an average state as well.

Proposition 3.10

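Let \(x\in \mathbb {R}^n\) be reachable, then

$$\begin{aligned} x^T P^{\#}_{T} x\le x^T P^{\#}_{D, T} x \end{aligned}$$

for every time \(T>0\), where \(P^{\#}_{T}\) and \(P^{\#}_{D, T}\) denote the Moore-Penrose pseudoinverses of \(P_T\) and \(P_{D, T}\).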

Proof

Since x is reachable, \(x\in {\text {im}}P_T\) by Proposition 3.6 and Proposition 3.8. Hence, we can write \(x=P_T P^{\#}_T x\), where \(P^{\#}_T\) denotes the Moore-Penrose pseudoinverse of \(P_{T}\). From its definition, the finite reachability Gramian is represented by \( P_T= \mathbb {E}\left[ \int _0^T {\varPhi }(T-t) B B^T {\varPhi }^T(T-t) dt\right] \) and since

we have

Now, we choose the control \(u(t)=B^T {\text {e}}^{A^T(T-t)} P^{\#}_{D, T} x, t\in \left[ 0, T\right] \), of minimal energy to reach x, then

Setting \(v(t)=B^T {\varPhi }^T(T, t) P^{\#}_T x\) for \(t\in \left[ 0, T\right] \) yields

We obtain

\(\square \)

Consequently, the expression \(x^T P^{\#}_{T} x\) yields a lower bound for the energy needed to reach x, and it is the squared \(L^2_T\)-norm of the function \(v(t)=B^T {\varPhi }^T(T, t) P^{\#}_T x, t\in \left[ 0, T\right] \). With v, we would also be able to steer the system to x if it were a valid control. However, v depends on future information, i.e., it is not \(\left( \fancyscript{F}_t\right) _{t\in [0, T]}\)-adapted. Thus, one can interpret the energy \(x^T \left( P^{\#}_{D, T}-P^{\#}_{T}\right) x\) as the benefit of knowing the future up to time T.

By Proposition 3.9, the minimal energy that is needed to steer the system to x is given by \(\inf _{T>0} x^T P^{\#}_{D, T} x\). By definition, \(P_{D, T}\) is increasing in T and, by Proposition 3.6 applied with \({\varPsi }^k=0\), its image does not depend on T, such that the pseudoinverse \(P^{\#}_{D, T}\) is decreasing. Hence, the minimal energy is given by \(x^T P_D^{\#} x\), where \(P_D^{\#}\) is the pseudoinverse of the deterministic Gramian \(P_D\). The same argument applied to \(P_T\), combined with Proposition 3.10, provides a lower bound for the minimal energy to reach x:

$$\begin{aligned} x^T P^{\#} x\le x^T P_D^{\#} x, \end{aligned}\tag{19}$$

with \(P^{\#}\) being the pseudoinverse of the reachability Gramian P. Inequality (19) gives only partial information about the degree of reachability of an average state x in terms of \(P^{\#}\). It therefore remains an open question whether an alternative reachability concept would be more suitable to motivate the Gramian P.
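The following sketch compares the minimal energy \(x^T P_D^{\#} x\) with the lower bound \(x^T P^{\#} x\) from (19) for a small hypothetical example with \(q=1\), \({\varPsi }^1=\frac{1}{2}I_2\) and \(c_1=1\). Both Gramians are obtained by solving the vectorized Lyapunov equations, with \({\varPsi }^k=0\) for \(P_D\); the helper `gen_lyap` and all matrices are our own illustrative choices.

```python
import numpy as np

def gen_lyap(A, W, Psis, cs):
    """Solve A X + X A^T + sum_k c_k Psi^k X (Psi^k)^T = -W via column-major vectorization."""
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    I = np.eye(n)
    K = np.kron(I, A) + np.kron(A, I)
    for Psi, c in zip(Psis, cs):
        K += c * np.kron(Psi, Psi)
    X = np.linalg.solve(K, -W.flatten(order="F")).reshape((n, n), order="F")
    return 0.5 * (X + X.T)

A = np.array([[-2.0, 1.0], [0.0, -3.0]])
B = np.array([[0.0], [1.0]])
Psis, cs = [0.5 * np.eye(2)], [1.0]     # hypothetical Psi^1 and c_1 = E[M_1(1)^2]

P_D = gen_lyap(A, B @ B.T, [], [])      # deterministic Gramian (Psi^k = 0)
P = gen_lyap(A, B @ B.T, Psis, cs)      # stochastic reachability Gramian, Eq. (14)

x = np.array([1.0, -1.0])
min_energy = x @ np.linalg.pinv(P_D) @ x   # x^T P_D^# x: infimum over T of the energy of (18)
lower_bound = x @ np.linalg.pinv(P) @ x    # x^T P^# x: left-hand side of (19)
print(min_energy, lower_bound)
```

Since \(P-P_D\) solves a deterministic Lyapunov equation with right-hand side \(-\sum _k c_k{\varPsi }^k P({\varPsi }^k)^T\), one has \(P\ge P_D\) whenever A is stable, which is consistent with the inequality observed numerically.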

Similar results are obtained by Benner and Damm [5] in Theorem 2.3 for stochastic differential equations driven by Wiener processes. For the deterministic case we refer to Sect. 4.3.1 in Antoulas [1].

3.2 Observability concept

Below, we introduce the concept of observability for the output equation

$$\begin{aligned} {\fancyscript{Y}}(t)=C X(t),\quad t\ge 0, \end{aligned}\tag{20}$$

corresponding to the stochastic linear system (9), where \(C\in \mathbb {R}^{p\times n}\). To this end, we need the following proposition.

Proposition 3.11

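Let \(\hat{Q}\) be a symmetric positive semidefinite matrix and \(Y_a:=X(\cdot , a, 0)\), \(Y_b:=X(\cdot , b, 0)\) the homogeneous solutions to (9) with initial conditions \(a, b\in \mathbb {R}^n\), then

$$\begin{aligned} \mathbb {E}\left[ Y_a^T(t)\hat{Q} Y_b(t)\right] =a^T\hat{Q} b+\mathbb {E}\int _0^t Y_a^T(s)\Big( A^T\hat{Q}+\hat{Q}A+\sum _{k=1}^q \left( {\varPsi }^k\right) ^T\hat{Q}{\varPsi }^k\, \mathbb {E}\left[ M_k(1)^2\right] \Big) Y_b(s)\, ds \end{aligned}$$

for all \(t\ge 0\).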

Proof

By applying the Ito product formula from Corollary 2.4, we have

where \(e_i\) is the i-th unit vector (\(i=1,\ldots , n\)). We get

and

By Eq. (8), the mean of the quadratic covariations is given by

With Theorem 2.11 (i), we obtain

using that the trajectories of \(Y_a\) and \(Y_b\) only have jumps on Lebesgue zero sets. \(\square \)

If we set \(a=e_i\) and \(b=e_j\) in Proposition 3.11, we obtain

This yields

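Let Q be the solution of the generalized Lyapunov equation

$$\begin{aligned} A^T Q+Q A+\sum _{k=1}^q \left( {\varPsi }^k\right) ^T Q {\varPsi }^k\, \mathbb {E}\left[ M_k(1)^2\right] =-C^T C. \end{aligned}\tag{22}$$

Then,

$$\begin{aligned} \mathbb {E}\left[ {\varPhi }^T(t) Q {\varPhi }(t)\right] =Q-\mathbb {E}\int _0^t {\varPhi }^T(s) C^T C {\varPhi }(s)\, ds, \end{aligned}$$

and by taking the limit \(t\rightarrow \infty \), we have

$$\begin{aligned} Q=\mathbb {E}\int _0^\infty {\varPhi }^T(s) C^T C {\varPhi }(s)\, ds \end{aligned}\tag{23}$$

due to the asymptotic mean square stability of the homogeneous equation (\(u\equiv 0\)), which provides the existence of the integral in Eq. (23) as well.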

Remark

The matrix Eq. (22) is uniquely solvable, since

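has only nonzero eigenvalues (by the asymptotic mean square stability of the homogeneous equation, it is even Hurwitz, cf. Theorem 3.3), and hence the solution of \(L \cdot {\text {vec}}(Q)=-{\text {vec}}(C^T C)\) is unique.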
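The solvability argument can be turned into a small-scale numerical procedure: vectorize the generalized Lyapunov equation and solve the resulting linear system with the Kronecker matrix. The sketch below is an illustration under stated assumptions, not the paper's implementation. It takes Eq. (22) to have the form \(A^T Q+QA+\sum _k c_k ({\varPsi }^k)^T Q {\varPsi }^k=-C^TC\) with \(c_k=\mathbb {E}[M_k(1)^2]\), and the reachability equation to be its dual \(AP+PA^T+\sum _k c_k {\varPsi }^k P ({\varPsi }^k)^T=-BB^T\); the helper names and the random test data are hypothetical. Since the Kronecker matrix has \(n^2\times n^2\) entries, this approach is only practical for small n.

```python
import numpy as np

def gen_lyap(A, Psis, cs, RHS):
    """Solve A X + X A^T + sum_k c_k Psi_k X Psi_k^T = -RHS (dense, small n only)."""
    n = A.shape[0]
    I = np.eye(n)
    # Column-stacking vec: vec(A X) = (I kron A) vec X, vec(X A^T) = (A kron I) vec X,
    # vec(Psi X Psi^T) = (Psi kron Psi) vec X.
    L = np.kron(I, A) + np.kron(A, I)
    for Psi, c in zip(Psis, cs):
        L = L + c * np.kron(Psi, Psi)
    x = np.linalg.solve(L, -RHS.flatten(order="F"))
    X = x.reshape((n, n), order="F")
    return 0.5 * (X + X.T)  # remove rounding asymmetry

def reachability_gramian(A, Psis, cs, B):
    # A P + P A^T + sum_k c_k Psi_k P Psi_k^T = -B B^T
    return gen_lyap(A, Psis, cs, B @ B.T)

def observability_gramian(A, Psis, cs, C):
    # A^T Q + Q A + sum_k c_k Psi_k^T Q Psi_k = -C^T C
    return gen_lyap(A.T, [Psi.T for Psi in Psis], cs, C.T @ C)

# Small illustrative system (hypothetical random data, q = 1, c_1 = 1).
rng = np.random.default_rng(0)
n = 4
A = -3.0 * np.eye(n) + 0.2 * rng.standard_normal((n, n))
Psis, cs = [0.3 * rng.standard_normal((n, n))], [1.0]
B = rng.standard_normal((n, 2))
C = rng.standard_normal((1, n))
P = reachability_gramian(A, Psis, cs, B)
Q = observability_gramian(A, Psis, cs, C)
```

For large n one would instead use low-rank iterative solvers; the dense solve is only meant to make the uniqueness argument tangible.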

Next, we assume that system (9) is uncontrolled, that is, \(u\equiv 0\). From our knowledge of the homogeneous system, \(X(t, x_0, 0)={\varPhi }(t)x_0\), where \(x_0\in \mathbb {R}^n\) denotes the initial value of the system. Hence, \({\fancyscript{Y}}(t)=C {\varPhi }(t) x_0\).

We observe \({\fancyscript{Y}}\) on the time interval \([0, \infty )\); the problem is to recover \(x_0\) from these observations. The energy produced by the initial value \(x_0\) is

where we set \(Q:=\mathbb {E} \int _0^\infty {\varPhi }^T(s) C^T C {\varPhi }(s) ds\). As in Benner and Damm [5], Q plays the role of the observability Gramian of the stochastic system with output Eq. (20). We call a state \(x_0\) unobservable if it lies in the kernel of Q; otherwise, it is called observable. The system is called completely observable if the kernel of Q is trivial.
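To make these definitions concrete, the following toy example (our own, hypothetical) computes Q for a two-dimensional diagonal system in which the second state never enters the output. It reads off the unobservable states as the kernel of Q and evaluates the observation energy \(x_0^T Q x_0\), which is what \(\mathbb {E}\int _0^\infty \Vert C{\varPhi }(s)x_0\Vert ^2 ds\) reduces to by the definition of Q. It assumes that Eq. (22) has the form \(A^T Q+QA+\sum _k c_k ({\varPsi }^k)^T Q {\varPsi }^k=-C^TC\); the tolerance used for the numerical rank decision is a hypothetical choice.

```python
import numpy as np

def observability_gramian(A, Psis, cs, C):
    """Solve A^T Q + Q A + sum_k c_k Psi_k^T Q Psi_k = -C^T C via vectorization."""
    n = A.shape[0]
    I = np.eye(n)
    L = np.kron(I, A.T) + np.kron(A.T, I)
    for Psi, c in zip(Psis, cs):
        L = L + c * np.kron(Psi.T, Psi.T)  # vec(Psi^T Q Psi) = (Psi^T kron Psi^T) vec Q
    Q = np.linalg.solve(L, -(C.T @ C).flatten(order="F")).reshape((n, n), order="F")
    return 0.5 * (Q + Q.T)

def observation_energy(Q, x0):
    # Energy of the output generated by the initial value x0.
    return float(x0 @ Q @ x0)

def unobservable_subspace(Q, tol=1e-10):
    """Orthonormal basis of ker(Q), using a relative tolerance for the rank decision."""
    w, U = np.linalg.eigh(Q)
    return U[:, np.abs(w) <= tol * max(1.0, np.abs(w).max())]

A = np.diag([-1.0, -2.0])
Psis, cs = [0.5 * np.eye(2)], [1.0]
C = np.array([[1.0, 0.0]])        # the second state never reaches the output
Q = observability_gramian(A, Psis, cs, C)
K = unobservable_subspace(Q)      # spanned by the second unit vector
```

Here \(Q={\text {diag}}(4/7, 0)\): the (1,1) entry solves \(-2q+0.25q=-1\), so the system is not completely observable.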

4 Balanced truncation for stochastic systems

Balanced truncation is one of the most important methods for obtaining a reduced order model of a deterministic LTI system. For the procedure of balanced truncation in the deterministic case, see Antoulas [1], Benner et al. [4], and Obinata and Anderson [20]. In this section, we generalize this method to stochastic linear systems driven by Levy noise.

4.1 Procedure

We assume \(A, {\varPsi }^k \in \mathbb {R}^{n\times n}\) (\(k= 1, \ldots , q\)), \(B\in \mathbb {R}^{n\times m}\) and \(C\in \mathbb {R}^{p\times n}\), and consider the following stochastic system:

where the noise processes \(M_k\) (\(k= 1, \ldots , q\)) are uncorrelated real-valued and square integrable Levy processes with mean zero. We assume the homogeneous solution \(Y_{y_0}\), which fulfills

to be asymptotically mean square stable. In addition, we require system (25) to be completely reachable and observable, which is equivalent to \(P_D\) and Q being positive definite. Hence, by Proposition 3.8, the reachability Gramian P is also positive definite.

Let \(T\in \mathbb {R}^{n\times n}\) be a nonsingular matrix. If we transform the states using

we obtain the following system:

where \(\tilde{A}=T A {T}^{-1},\, \tilde{{\varPsi }}^k=T {\varPsi }^k {T}^{-1},\, \tilde{B}=T B\) and \(\tilde{C}=C {T}^{-1}\). For every fixed input, the transformed system (26) has the same output as system (25).

The reachability Gramian \(P:=\int _0^\infty \mathbb {E}\left[ {\varPhi }(s) B B^T {\varPhi }^T(s)\right] ds\) of system (25) fulfills

where \(c_k=\mathbb {E}\left[ M_k(1)^2\right] \). Multiplying this equation by T from the left and by \({T}^T\) from the right, we obtain

Hence, the reachability Gramian of the transformed system (26) is given by \(\tilde{P}=T P {T}^T\). For the observability Gramian of the transformed system it holds \(\tilde{Q}={T}^{-T} Q {T}^{-1}\), where \(Q:=\int _0^\infty \mathbb {E}\left[ {\varPhi }^T(s) C^T C {\varPhi }(s)\right] ds\) is the observability Gramian of the original system. Hence,

In addition, the generalized Hankel singular values \(\sigma _1\ge \cdots \ge \sigma _n>0\) of (25), which are the square roots of the eigenvalues of P Q, are equal to those of (26), since \(\tilde{P}\tilde{Q}=T P Q {T}^{-1}\) is similar to P Q.
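The transformation rules and the invariance of the Hankel singular values can be checked numerically. The sketch below (Python/NumPy, hypothetical helper names, random data) solves the generalized Lyapunov equations of an original and a transformed realization, assuming they have the forms \(AP+PA^T+\sum _k c_k{\varPsi }^kP({\varPsi }^k)^T=-BB^T\) and \(A^TQ+QA+\sum _k c_k({\varPsi }^k)^TQ{\varPsi }^k=-C^TC\). It then compares \(\tilde{P}\) with \(TPT^T\), \(\tilde{Q}\) with \(T^{-T}QT^{-1}\), and the Hankel singular values of both realizations.

```python
import numpy as np

def gen_lyap(A, Psis, cs, RHS):
    # Solve A X + X A^T + sum_k c_k Psi_k X Psi_k^T = -RHS (column-stacking vec, small n).
    n = A.shape[0]
    L = np.kron(np.eye(n), A) + np.kron(A, np.eye(n))
    for Psi, c in zip(Psis, cs):
        L = L + c * np.kron(Psi, Psi)
    X = np.linalg.solve(L, -RHS.flatten(order="F")).reshape((n, n), order="F")
    return 0.5 * (X + X.T)

def gramians(A, Psis, cs, B, C):
    P = gen_lyap(A, Psis, cs, B @ B.T)
    Q = gen_lyap(A.T, [Psi.T for Psi in Psis], cs, C.T @ C)
    return P, Q

def hankel_singular_values(P, Q):
    # Square roots of the eigenvalues of P Q (real, nonnegative for PSD P, Q), decreasing.
    ev = np.sort(np.linalg.eigvals(P @ Q).real)[::-1]
    return np.sqrt(np.clip(ev, 0.0, None))

def transform(T, A, Psis, B, C):
    # (A, Psi^k, B, C) -> (T A T^{-1}, T Psi^k T^{-1}, T B, C T^{-1})
    Ti = np.linalg.inv(T)
    return T @ A @ Ti, [T @ Psi @ Ti for Psi in Psis], T @ B, C @ Ti

rng = np.random.default_rng(1)
n = 4
A = -3.0 * np.eye(n) + 0.2 * rng.standard_normal((n, n))
Psis, cs = [0.3 * rng.standard_normal((n, n))], [1.0]
B, C = rng.standard_normal((n, 2)), rng.standard_normal((2, n))
T = np.eye(n) + 0.3 * rng.standard_normal((n, n))  # generically nonsingular

P, Q = gramians(A, Psis, cs, B, C)
At, Psist, Bt, Ct = transform(T, A, Psis, B, C)
Pt, Qt = gramians(At, Psist, cs, Bt, Ct)
```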

As in the deterministic case (see [1] and [20]), we choose T such that \(\tilde{Q}\) and \(\tilde{P}\) are equal and diagonal. A system with equal and diagonal Gramians is called balanced. The corresponding balancing transformation T is given by

where \({\varSigma }={\text {diag}}(\sigma _1, \ldots , \sigma _n)\), U comes from the Cholesky decomposition \(P=U U^T\), and K is the orthogonal matrix of the eigenvalue decomposition (equivalently, the singular value decomposition (SVD)) \(U^T Q U= K {\varSigma }^2 K^T\). We then obtain

Our aim is to truncate the average states that are difficult to observe and difficult to reach, that is, those producing the least observation energy and those requiring the most energy to reach, respectively. By Eq. (24), the states that are difficult to observe are contained in the space spanned by the eigenvectors corresponding to the small eigenvalues of Q. By (19), an average state x is particularly difficult to reach if \(x^T P^{-1} x\) is large. Such states are contained in the space spanned by the eigenvectors corresponding to the small eigenvalues of P (or, equivalently, to the large eigenvalues of \(P^{-1}\)). If the future were known completely, the eigenspaces corresponding to the small eigenvalues of P would contain all difficult-to-reach states; see the remarks below Proposition 3.10. In a balanced system, the dominant reachable and observable states coincide.
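The balancing transformation can be computed from the two decompositions named above. Because the displayed formula for T is not reproduced here, the sketch assumes the usual square-root form \(T={\varSigma }^{1/2}K^TU^{-1}\) with \(T^{-1}=UK{\varSigma }^{-1/2}\); one checks directly that then \(TPT^T={\varSigma }^{1/2}K^TK{\varSigma }^{1/2}={\varSigma }\) and \(T^{-T}QT^{-1}={\varSigma }^{-1/2}K^T(U^TQU)K{\varSigma }^{-1/2}={\varSigma }\). The function name and the random positive definite test Gramians are hypothetical.

```python
import numpy as np

def balancing_transformation(P, Q):
    """Balancing T, its inverse, and the Hankel singular values for positive definite P, Q.

    Assumes the square-root form T = Sigma^{1/2} K^T U^{-1}, where P = U U^T (Cholesky)
    and U^T Q U = K Sigma^2 K^T (symmetric eigendecomposition).
    """
    U = np.linalg.cholesky(P)                 # lower triangular, P = U U^T
    evals, K = np.linalg.eigh(U.T @ Q @ U)    # eigenvalues in ascending order
    evals, K = evals[::-1], K[:, ::-1]        # reorder so that sigma_1 >= ... >= sigma_n
    sigma = np.sqrt(evals)
    T = np.diag(np.sqrt(sigma)) @ K.T @ np.linalg.inv(U)
    T_inv = U @ K @ np.diag(1.0 / np.sqrt(sigma))
    return T, T_inv, sigma

rng = np.random.default_rng(2)
n = 5
X = rng.standard_normal((n, n)); P = X @ X.T + n * np.eye(n)  # hypothetical SPD Gramians
Y = rng.standard_normal((n, n)); Q = Y @ Y.T + n * np.eye(n)
T, T_inv, sigma = balancing_transformation(P, Q)
```

The squared Hankel singular values returned here are the eigenvalues of \(U^TQU\), which is similar to PQ.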

We consider the following partitions:

where \(W^T\in \mathbb {R}^{r\times n}, V\in \mathbb {R}^{n\times r}\) and \(\tilde{X}\) takes values in \(\mathbb {R}^r\) (\(r<n\)). Hence, we have

and

By truncating the system and neglecting the \(X_1\) terms, the approximating reduced order model is given by

We now show that the homogeneous solution \(\tilde{Y}_{y_0}\) of the reduced system (29), which fulfills

is mean square stable, which means that it is bounded in mean square.

Proposition 4.1

Let \(\tilde{Y}_{y_0}\) be the homogeneous solution satisfying Eq. (30) with initial condition \(y_0\in \mathbb {R}^r\), then

Proof

In Eq. (28), we block-wise set

In the corresponding output equation, we block-wise define

We know

where \({\varSigma }_1={\text {diag}}\left( \sigma _1, \ldots , \sigma _r\right) \), \({\varSigma }_2={\text {diag}}\left( \sigma _{r+1}, \ldots , \sigma _n\right) \) and \(c_k=\mathbb {E}[M_k(1)^2]\). Considering the upper left block, we obtain

From Eq. (21), we can conclude that

Thus,

Using \(\sigma _r v^T v\le v^T{\varSigma }_1 v\le \sigma _1 v^T v\), we obtain

\(\square \)
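In coordinates, the reduced order model (29) is obtained by projection. The sketch below assumes, consistently with the definitions \(A_{11}:=W^TAV\) and \({\varPsi }^k_{11}:=W^T{\varPsi }^kV\) used in Remark (ii), that \(W^T\) consists of the first r rows of the balancing transformation T and V of the first r columns of \(T^{-1}\), so that \(W^TV=I_r\). The formulas \(B_1=W^TB\) and \(C_1=CV\) are likewise assumptions, and the function name and data are hypothetical.

```python
import numpy as np

def truncate(T, T_inv, A, Psis, B, C, r):
    """Reduced coefficients (A11, Psi11^k, B1, C1) from the first r transformed coordinates.

    Assumes W^T = first r rows of T and V = first r columns of T^{-1}, so W^T V = I_r.
    """
    Wt = T[:r, :]
    V = T_inv[:, :r]
    A11 = Wt @ A @ V
    Psis11 = [Wt @ Psi @ V for Psi in Psis]
    B1 = Wt @ B
    C1 = C @ V
    return A11, Psis11, B1, C1

rng = np.random.default_rng(4)
n, r = 5, 2
T = np.eye(n) + 0.3 * rng.standard_normal((n, n))  # stands in for a balancing transformation
T_inv = np.linalg.inv(T)
A = -2.0 * np.eye(n) + 0.1 * rng.standard_normal((n, n))
Psis = [0.2 * rng.standard_normal((n, n))]
B, C = rng.standard_normal((n, 1)), rng.standard_normal((1, n))
A11, Psis11, B1, C1 = truncate(T, T_inv, A, Psis, B, C, r)
```

With r = n, the function simply returns the transformed realization \((TAT^{-1}, T{\varPsi }^kT^{-1}, TB, CT^{-1})\).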

Remark

(i) One persisting problem is to find an explicit structure of the Gramians of the reduced order model. As Example 4.2 below shows, in contrast to the deterministic case, the reduced order model is not balanced, that is, its Gramians are neither diagonal nor equal. In addition, its Hankel singular values differ from those of the original system.

(ii) From Proposition 4.1, we know that \(\tilde{Y}_{y_0}\) is bounded. This is equivalent to the Kronecker matrix

$$\begin{aligned} I_r\otimes A_{11}+A_{11}\otimes I_r+\sum _{k=1}^q{\varPsi }_{11}^k\otimes {\varPsi }_{11}^k \cdot \mathbb {E}\left[ M_k(1)^2\right] \end{aligned}$$

having only eigenvalues with nonpositive real parts, where \(A_{11}:=W^T A V\) and \({\varPsi }^k_{11}:=W^T {\varPsi }^k V\). To prove the asymptotic mean square stability of the uncontrolled reduced order model, it remains to show that this Kronecker matrix has no eigenvalues on the imaginary axis. This was shown in [6]. Hence, balanced truncation preserves asymptotic mean square stability also in the stochastic case.
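Remark (ii) reduces the stability question for the reduced model to the spectrum of a Kronecker matrix, and the same test applies to the full model, since asymptotic mean square stability holds if and only if this matrix is Hurwitz (cf. Theorem 3.3). A minimal NumPy sketch with hypothetical function names follows; applied to \((A_{11},{\varPsi }^k_{11})\), it checks the reduced model. The scalar case \(A=-1\), \({\varPsi }=a\) has the single eigenvalue \(-2+ca^2\), which serves as a sanity check.

```python
import numpy as np

def kronecker_matrix(A, Psis, cs):
    """I (x) A + A (x) I + sum_k c_k Psi_k (x) Psi_k, with c_k = E[M_k(1)^2]."""
    n = A.shape[0]
    I = np.eye(n)
    L = np.kron(I, A) + np.kron(A, I)
    for Psi, c in zip(Psis, cs):
        L = L + c * np.kron(Psi, Psi)
    return L

def spectral_abscissa(L):
    # Largest real part among the eigenvalues of L.
    return float(np.max(np.linalg.eigvals(L).real))

def is_ams_stable(A, Psis, cs):
    # Asymptotic mean square stability <=> the Kronecker matrix is Hurwitz.
    return spectral_abscissa(kronecker_matrix(A, Psis, cs)) < 0.0

A1 = np.array([[-1.0]])
for a in (1.0, 1.5):
    # Scalar case: the only eigenvalue is -2 + c a^2 with c = 1.
    print(a, spectral_abscissa(kronecker_matrix(A1, [np.array([[a]])], [1.0])))
```

Computing the full spectrum costs \(O(n^6)\) operations, so for large models one would estimate only the rightmost eigenvalue iteratively.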

Example 4.2

We consider the case where \(q=1\) and the noise process is a Wiener process W. Thus, the system under consideration is

The following matrices (up to the digits shown) provide a balanced and asymptotically mean square stable system:

The Gramians are given by

The reduced order model \((r=2)\) is asymptotically mean square stable and has the following Gramians:

The Hankel singular values of the reduced order model are 7.6633 and 2.7001.

At the end of this section, we provide a short example that shows that the reduced order model need not be completely observable and reachable even if the original system is completely observable and reachable:

Example 4.3

We consider Eqs. (32) with the matrices

and obtain a balanced, asymptotically mean square stable system that is completely reachable and observable. The Hankel singular values are 2 and 1. Truncation yields a system with coefficients

\((A_{11}, B_1, {\varPsi }_{11}, C_1)=(-0.25, 0, 0, 0)\) having Gramians \(P_R=Q_R=0\).

4.2 Error bound for balanced truncation

Let \(\left( A, {\varPsi }^k, B, C\right) \) (\(k=1, \ldots , q\)) be a realization of system (25). Furthermore, we assume the initial condition of the system to be zero. We introduce the following partitions:

where T is the balancing transformation defined in (27) and \(\left( A_{11}, {\varPsi }_{11}^k, B_1, C_1\right) \) are the coefficients of the reduced order model. The output of the reduced (truncated) system is given by

where \(\tilde{{\varPhi }}\) is the fundamental matrix of the truncated system. In addition, we use a result from [6], where it is proven that the homogeneous equation (\(u\equiv 0\)) of the reduced system is still asymptotically mean square stable. This is essential for the error bound below, since it ensures the existence of the Gramians of the reduced order model. Moreover, we know

Our goal is to steer the average state via the control u and, in order to obtain a reduced order model, to truncate the average states that are difficult to reach. Therefore, a meaningful criterion is the worst case mean error between \({\hat{\fancyscript{Y}}}(t)\) and \({\fancyscript{Y}}(t)\). Below, we give a bound for this kind of error:

and by the Cauchy-Schwarz inequality, it holds

Now,

Due to the remarks before Proposition 3.4, we have

for \(0\le s\le t\). It remains to analyze the term in (34), for which we need the following proposition:

Proposition 4.4

The \(\mathbb {R}^{n\times r}\)-valued function \(\mathbb {E} \left[ {\varPhi }(t) B B_1^T \tilde{{\varPhi }}^T(t)\right] \), \(t\ge 0\), is the solution of the following differential equation:

Proof

With \(B=\left[ b_1, \ldots , b_m\right] \) and \(B_1=\left[ \tilde{b}_1, \ldots , \tilde{b}_m\right] \), we obtain

By applying the Ito product formula from Corollary 2.5, we have

From (8), we know that

With Theorem 2.11 (i), we obtain

using that the trajectories of \({\varPhi }\) and \(\tilde{{\varPhi }}\) have jumps only on Lebesgue null sets. By Eq. (36), we have

which proves the result. \(\square \)

By Proposition 4.4, we can conclude that the function \(\mathbb {E} \left[ {\varPhi }(t-\tau ) B B_1^T \tilde{{\varPhi }}^T(t-\tau )\right] \), \(t\ge \tau \ge 0\), is the solution of the equation

for all \(t\ge \tau \ge 0\). Analogously to Proposition 4.4, one can show that \(\mathbb {E} \left[ {\varPhi }(t, \tau ) B B_1^T \tilde{{\varPhi }}^T(t, \tau )\right] \) is also a solution of Eq. (38), which yields

for all \(t\ge \tau \ge 0\). Using Eq. (39), we have

By substitution, we obtain

By [6], the homogeneous equation of the truncated system is still asymptotically mean square stable. Hence, the matrices \(P_R=\mathbb {E} \int _0^\infty \tilde{{\varPhi }}(\tau ) B_1 B_1^T \tilde{{\varPhi }}^T(\tau ) d\tau \in \mathbb {R}^{r\times r}\) and \(P_M=\mathbb {E} \int _0^\infty {\varPhi }(\tau ) B B_1^T \tilde{{\varPhi }}^T(\tau ) d\tau \in \mathbb {R}^{n\times r}\) exist, and we obtain

where \(P=\mathbb {E} \int _0^\infty {\varPhi }(\tau ) B B^T {\varPhi }^T(\tau ) d\tau \) is the reachability Gramian of the original system, \(P_R\) the reachability Gramian of the approximating system and \(P_M\) a matrix that fulfills the following equation:

which we get by taking the limit \(t\rightarrow \infty \) on both sides of Eq. (37). We summarize these results in the following theorem:

Theorem 4.5

Let \(\left( A, {\varPsi }^k, B, C\right) \) be a realization of system (25) and \(\left( A_{11}, {\varPsi }_{11}^k, B_1, C_1\right) \) the coefficients of the reduced order model defined in (33), then

for every \(T>0\), where \(\fancyscript{Y}\) and \(\hat{\fancyscript{Y}}\) are the outputs of the original and the reduced system, respectively. Here, P denotes the reachability Gramian of system (25), \(P_R\) denotes the reachability Gramian of the reduced system and \(P_M\) satisfies Eq. (40).

Remark

If \(u\in L^2\), we can replace \(\left\| \cdot \right\| _{L^2_T}\) by \(\left\| \cdot \right\| _{L^2}\) and [0, T] by \(\mathbb {R}_+\) in inequality (41).
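For small systems, the factor in Theorem 4.5 can be evaluated by solving three linear matrix equations. The sketch below assumes that P and \(P_R\) solve the generalized Lyapunov equations of the original and the reduced system, and that Eq. (40), the limit \(t\rightarrow \infty \) of the equation in Proposition 4.4, reads \(AP_M+P_MA_{11}^T+\sum _k c_k{\varPsi }^kP_M({\varPsi }^k_{11})^T=-BB_1^T\). It evaluates \(\left( {\text {tr}}(CPC^T)+{\text {tr}}(C_1P_RC_1^T)-2\,{\text {tr}}(CP_MC_1^T)\right) ^{1/2}\), the quantity tabulated in Sect. 5.2. For \({\varPsi }^k=0\) this is the deterministic \(\fancyscript{H}_2\) error, which the usage check exploits with a hypothetical (not balanced) one-state truncation. Function names are hypothetical.

```python
import numpy as np

def solve_gen_sylvester(A, A_r, Psis, Psis_r, cs, RHS):
    """Solve A X + X A_r^T + sum_k c_k Psi_k X Psi_r,k^T = -RHS for X of shape (n, r)."""
    n, r = A.shape[0], A_r.shape[0]
    # Column-stacking vec: vec(A X) = (I_r kron A) vec X, vec(X A_r^T) = (A_r kron I_n) vec X,
    # vec(Psi X Psi_r^T) = (Psi_r kron Psi) vec X.
    L = np.kron(np.eye(r), A) + np.kron(A_r, np.eye(n))
    for Psi, Psi_r, c in zip(Psis, Psis_r, cs):
        L = L + c * np.kron(Psi_r, Psi)
    x = np.linalg.solve(L, -RHS.flatten(order="F"))
    return x.reshape((n, r), order="F")

def bt_error_bound(A, Psis, B, C, A11, Psis11, B1, C1, cs):
    """(tr(C P C^T) + tr(C1 P_R C1^T) - 2 tr(C P_M C1^T))^(1/2)."""
    P = solve_gen_sylvester(A, A, Psis, Psis, cs, B @ B.T)              # n x n
    P_R = solve_gen_sylvester(A11, A11, Psis11, Psis11, cs, B1 @ B1.T)  # r x r
    P_M = solve_gen_sylvester(A, A11, Psis, Psis11, cs, B @ B1.T)       # n x r
    val = (np.trace(C @ P @ C.T) + np.trace(C1 @ P_R @ C1.T)
           - 2.0 * np.trace(C @ P_M @ C1.T))
    return float(np.sqrt(max(val, 0.0)))  # clip tiny negative rounding errors

# Deterministic sanity check (Psi = 0): the bound is the H2 norm of the error system.
A = np.diag([-1.0, -2.0]); Psis = [np.zeros((2, 2))]; cs = [1.0]
B = np.ones((2, 1)); C = np.ones((1, 2))
A11, Psis11, B1, C1 = A[:1, :1], [np.zeros((1, 1))], B[:1], C[:, :1]
eb = bt_error_bound(A, Psis, B, C, A11, Psis11, B1, C1, cs)
# The output error comes from the discarded state alone (impulse response e^{-2t}),
# so eb should equal (int_0^inf e^{-4t} dt)^(1/2) = 0.5.
```

By (41), this factor multiplies \(\Vert u\Vert _{L^2_T}\) in the worst case mean output error.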

Now, we specify the error bound from (41) in the following proposition.

Proposition 4.6

If the realization \((A, {\varPsi }^k, B, C)\) is balanced, then

where \(P_{M, 1}\) are the first r and \(P_{M, 2}\) the last \(n-r\) rows of \(P_M,\, c_k=\mathbb {E}\left[ M_k(1)^2\right] \) and \({\varSigma }_2={\text {diag}}(\sigma _{r+1}, \ldots , \sigma _n)\).

Proof

For simplicity of notation, we prove this result only for the case \(q=1\); the proof generalizes easily to arbitrary q. Here, we additionally set \({\varPsi }:={\varPsi }^1\) and \(c:=c_1\). Then, we have

Hence,

and

Furthermore,

such that one can conclude

and

From

we also know that

We define \(\fancyscript{E}:=\left( {\text {tr}}\left( C {\varSigma } C^T\right) +{\text {tr}}\left( C_1 P_R C_1^T\right) -2\;{\text {tr}}\left( C P_M C_1^T\right) \right) ^{\frac{1}{2}}\) and obtain

Using Eq. (44) yields

By Eq. (47), we obtain

Using Eq. (42), we have

and hence

Thus,

using the identity \({\text {tr}}(C_1 P_R C_1^T)={\text {tr}}(B_1^T Q_R B_1)\). Inserting Eq. (45) provides

So, it holds

From (43), it follows

Using (46) yields

such that

and hence,

By definition, the Gramians \(P_R\) and \(Q_R\) satisfy

and

Thus,

Finally, we have

\(\square \)

The error bound obtained in Proposition 4.6 has the same structure as the \(\fancyscript{H}_2\) error bound in the deterministic case, which can be found in Sect. 7.2.2 of Antoulas [1]. Moreover, this representation of the error bound shows in which cases balanced truncation yields a good approximation. The bound in Proposition 4.6 depends on \({\varSigma }_2\), which contains the \(n-r\) smallest Hankel singular values \(\sigma _{r+1}, \ldots , \sigma _n\) of the original system. If these values are small, the reduced order model computed by balanced truncation is of good quality.

5 Applications

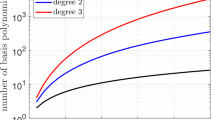

In order to demonstrate the use of the model reduction method introduced in Sect. 4, we apply it in the context of the numerical solution of linear controlled evolution equations with Levy noise. To this end, we apply the Galerkin scheme to the evolution equation and obtain a sequence of ordinary stochastic differential equations. Then, we use balanced truncation to reduce the dimension of the Galerkin solution. Finally, we compute the error bounds and exact errors for the examples considered here.

5.1 Finite dimensional approximations for stochastic evolution equations

In this section, we deal with an infinite dimensional system, where the noise process is denoted by M. We suppose that M is a Levy process with values in a separable Hilbert space U. Additionally, we assume that M is square integrable with zero mean. The most important properties of this process and the definition of an integral with respect to M can be found in the book of Peszat and Zabczyk [21].

Suppose \(A:D(A)\rightarrow H\) is a densely defined, self-adjoint, negative semidefinite linear operator such that there is an orthonormal basis \(\left( h_k\right) _{k\in \mathbb {N}}\) of H consisting of eigenvectors of A:

where \(0\le \lambda _1\le \lambda _2\le \cdots \) are the corresponding eigenvalues. Furthermore, the linear operator A generates a contraction \(C_0\)-semigroup \(\left( S(t)\right) _{t\ge 0}\) defined by

for \(x\in H\). This semigroup is exponentially stable if \(0<\lambda _1\). By \(\fancyscript{Q}\) we denote the covariance operator of M, which is a symmetric, positive definite trace class operator characterized by

for \(x, y\in U\) and \(s,t\ge 0\). We can choose an orthonormal basis of U consisting of eigenvectors \(\left( u_k\right) _{k\in \mathbb {N}}\) of \(\fancyscript{Q}\) (Footnote 6). We denote the corresponding eigenvalues by \(\left( \mu _k\right) _{k\in \mathbb {N}}\), so that

We then consider the following stochastic differential equation:

We make the following assumptions:

- \({\varPsi }\) is a linear mapping on H with values in the set of all linear operators from U to H such that \({\varPsi }(h)\fancyscript{Q}^{\frac{1}{2}}\) is a Hilbert-Schmidt operator for every \(h\in H\). In addition,

$$\begin{aligned} \left\| {\varPsi }(h)\fancyscript{Q}^{\frac{1}{2}}\right\| _{L_{HS}(U, H)}\le \tilde{M} \left\| h\right\| _H \end{aligned}\tag{49}$$

holds for some constant \(\tilde{M}>0\), where \(L_{HS}\) indicates the Hilbert-Schmidt norm.

- The process \(u:\mathbb {R}_+\times {\varOmega }\rightarrow \mathbb {R}^m\) is \((\fancyscript{F}_t)_{t\ge 0}\)-adapted with

$$\begin{aligned} \int _0^T \mathbb {E}\left\| u(s)\right\| ^2_2 ds<\infty \end{aligned}$$

for each \(T>0\).

- B is a linear and bounded operator on \(\mathbb {R}^m\) with values in H, and \(C\in L(H, \mathbb {R}^p)\).

Definition 5.1

An adapted cadlag process \(\left( X(t)\right) _{t\ge 0}\) with values in H is called a mild solution of (48) if, \(\mathbb {P}\)-almost surely,

holds for all \(t\ge 0\).

Remark

Since A generates a contraction semigroup, the stochastic convolution in Eq. (50) has a cadlag modification (Theorem 9.24 in [21]), which enables us to construct a cadlag mild solution of Eq. (48). By Theorem 9.29 in [21], this solution is unique for every fixed u.

We now approximate the mild solution of the infinite dimensional Eq. (48). For the finite dimensional approximation, we use the Galerkin method, which can be found, for example, in Grecksch and Kloeden [12], who treat strong solutions of stochastic evolution equations with scalar Wiener noise.

We construct a sequence \(\left( X_n\right) _{n\in \mathbb {N}}\) of finite dimensional cadlag processes with values in \(H_n={\text {span}}\left\{ h_1, \ldots , h_n\right\} \) given by

where

- \(M_n(t)=\sum _{k=1}^n \left\langle M(t), u_k\right\rangle _U u_k ,\, t\ge 0\), is a \({\text {span}}\left\{ u_1, \ldots , u_n\right\} \)-valued Levy process,
- \(A_n x=\sum _{k=1}^n \left\langle A x, h_k\right\rangle _H h_k \in H_n\) holds for all \(x\in D(A)\),
- \(B_n x=\sum _{k=1}^n \left\langle B x, h_k\right\rangle _H h_k \in H_n\) holds for all \(x\in \mathbb {R}^m\),
- \({\varPsi }_n(x) y=\sum _{k=1}^n \left\langle {\varPsi }(x) y, h_k\right\rangle _H h_k \in H_n\) holds for all \(y\in U\) and \(x\in H\),
- \(x_{0, n}=\sum _{k=1}^n \left\langle x_0, h_k\right\rangle _H h_k\in H_n\).

Since \(A_n\) is a bounded operator for every \(n\in \mathbb {N}\), it generates the \(C_0\)-semigroup \(S_n(t)={\text {e}}^{A_n t}, t\ge 0\), on H. For all \(x\in H_n\), it has the representation \(S_n(t) x=\sum _{k=1}^n {\text {e}}^{-\lambda _k t} \left\langle x, h_k\right\rangle _H h_k \), so that the mild solution of Eq. (51) is given by

for \(t\ge 0\). Furthermore, we consider the p-dimensional approximating output

Using arguments similar to those in the proof of Theorem 1 in Grecksch and Kloeden [12], one can prove Theorem 5.2 below. It shows that

holds for \(n\rightarrow \infty \) and \(t\ge 0\):

Theorem 5.2

It holds

for \(n\rightarrow \infty \) and \(t\ge 0\).

Remark

If \(U=\mathbb {R}^q\), one has to replace \(M_n\) by M in Eq. (51) and Theorem 5.2 holds for this case as well.

We now determine the components of \(Y_n\). They are given by

for \(l=1, \ldots , p\), where \(e_l\) is the l-th unit vector in \(\mathbb {R}^p\). We set

and obtain

The components of \(\fancyscript{X}\) fulfill the following equation:

Considering the representation \(S_n(t)x=\sum _{i=1}^n {\text {e}}^{-\lambda _i t}\left\langle x, h_i\right\rangle _H h_i\) (\(x\in H_n\)), we have

and

for \(k=1, \ldots , n\), where \(e_l\) is the l-th unit vector in \(\mathbb {R}^m\). Furthermore,

Hence, in compact form \(\fancyscript{X}\) is given by

where

- \(\fancyscript{A}={\text {diag}}(-\lambda _1, \ldots , -\lambda _n)\), \(\fancyscript{B}=\left( \left\langle B e_i , h_k\right\rangle _H\right) _{\begin{array}{c} k=1, \ldots , n \\ i=1, \ldots , m \end{array}},\, \fancyscript{N}^j =\left( \left\langle {\varPsi }(h_i)u_j, h_k\right\rangle _H\right) _{k, i=1, \ldots , n}\),
- \(\fancyscript{X}_0=\left( \left\langle x_0, h_1\right\rangle _H, \ldots , \left\langle x_0, h_n\right\rangle _H\right) ^T\) and \(M^j(s)=\left\langle M(s),u_j \right\rangle _U\).

The processes \(M^j\) are uncorrelated real-valued Levy processes with \(\mathbb {E} \left| M^j(t)\right| ^2=t \mu _j, t\ge 0\), and zero mean. Below, we show that the solution of Eq. (52) fulfills the strong solution equation as well. We set

and determine the stochastic differential of \({\text {e}}^{\fancyscript{A} t} f(t)\) via the Ito product formula in Corollary 2.4:

where \(e_i\) is the i-th unit vector of \(\mathbb {R}^n\), and the quadratic covariation terms vanish, since \(t\mapsto e_i^T {\text {e}}^{\fancyscript{A} t}\) is a continuous semimartingale with vanishing martingale part. Hence,

Example 5.3

We consider a bar of length \(\pi \), which is heated on \([0, \frac{\pi }{2}]\). The temperature of the bar is described by the following stochastic partial differential equation:

for \(t\ge 0\) and \(\zeta \in [0, \pi ]\). Here, we assume that M is a scalar square integrable Levy process with zero mean, \(H=L^2([0, \pi ]),\,U=\mathbb {R},\, m=1,\, A=\frac{\partial ^2}{\partial \zeta ^2}\). Furthermore, we set \(B=1_{[0, \frac{\pi }{2}]}(\cdot )\) and \({\varPsi }(x)=a x\) for \(x\in L^2([0, \pi ])\). Additionally, we assume \(\mathbb {E} \left[ M(1)^2 \right] a^2<2\), which is equivalent to the solution of the uncontrolled Eq. (53) satisfying

for \(c, \alpha >0\). This equivalence is a consequence of Theorem 3.1 in Ichikawa [14] and Theorem 5 in Haussmann [13]. For further information on the exponential mean square stability condition (54), see Sect. 5 in Curtain [9] (Footnote 7). It is well known that, here, the eigenvalues of the second derivative are \(-\lambda _k=-k^2\) and that the corresponding eigenvectors, which form an orthonormal basis, are \(h_k=\sqrt{\frac{2}{\pi }}\sin (k\cdot )\). We are interested in the average temperature of the bar on \([\frac{\pi }{2}, \pi ]\), so that the scalar output of the system is

where \(C x=\frac{2}{\pi }\int _{\frac{\pi }{2}}^{\pi } x(\zeta ) d\zeta \) for \(x\in L^2([0, \pi ])\). We approximate Y via

\(\fancyscript{C}^T=\left( C h_k\right) _{k=1, \ldots , n}=\left( \left( \frac{2}{\pi }\right) ^{\frac{3}{2}}\frac{1}{k}\left[ \cos (\frac{k\pi }{2})-\cos (k \pi )\right] \right) _{k=1, \ldots , n}\).

\(\fancyscript{X}\) is given by

where

- \(\fancyscript{A}={\text {diag}}\left( -1, -4, \ldots , -n^2\right) \),
- \(\fancyscript{N}=\left( \left\langle {\varPsi }(h_i), h_k\right\rangle _H\right) _{k, i=1, \ldots , n}=\left( \left\langle a h_i, h_k\right\rangle _H\right) _{k, i=1, \ldots , n}=a I_n\),
- \(\fancyscript{B}=\left( \left\langle B , h_k\right\rangle _H\right) _{k=1, \ldots , n}=\left( \left\langle 1_{[0, \frac{\pi }{2}]}(\cdot ), h_k\right\rangle _H\right) _{k=1, \ldots , n}=\left( \left( \frac{2}{ \pi }\right) ^{\frac{1}{2}}\frac{1}{k}\left[ 1-\cos (\frac{k \pi }{2})\right] \right) _{k=1, \ldots , n}\).

For simplicity, we choose \(x_0\equiv 0\), so that also \(\fancyscript{X}_0=0\).
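The Galerkin matrices of Example 5.3 are explicit, so they are easy to assemble. The sketch below builds them and cross-checks the closed-form entries of \(\fancyscript{B}\) and \(\fancyscript{C}\) against numerical quadrature with SciPy's `quad`. The function name is hypothetical, and the matrices are returned as (A, N, B, C) with B as an n×1 column and C as a 1×n row.

```python
import numpy as np
from scipy.integrate import quad

def heated_bar_galerkin(n, a=1.0):
    """Galerkin matrices of Example 5.3 for h_k = sqrt(2/pi) sin(k .), k = 1..n."""
    k = np.arange(1, n + 1, dtype=float)
    A = np.diag(-k ** 2)
    N = a * np.eye(n)
    B = (np.sqrt(2 / np.pi) / k * (1 - np.cos(k * np.pi / 2))).reshape(n, 1)
    C = ((2 / np.pi) ** 1.5 / k * (np.cos(k * np.pi / 2) - np.cos(k * np.pi))).reshape(1, n)
    return A, N, B, C

def h(k, z):
    # Orthonormal eigenfunctions of the second derivative on [0, pi].
    return np.sqrt(2 / np.pi) * np.sin(k * z)

A, N, B, C = heated_bar_galerkin(5)
# Cross-check the closed forms against numerical quadrature.
for k in range(1, 6):
    b_k = quad(lambda z: h(k, z), 0, np.pi / 2)[0]                 # <1_[0, pi/2], h_k>
    c_k = 2 / np.pi * quad(lambda z: h(k, z), np.pi / 2, np.pi)[0]  # C h_k
    assert np.isclose(B[k - 1, 0], b_k) and np.isclose(C[0, k - 1], c_k)
```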

Next, we consider a more complex example with a two dimensional spatial variable:

Example 5.4

We determine the Galerkin solution of the following controlled stochastic partial differential equation:

Again, M is a scalar square integrable Levy process with zero mean, \(H=L^2([0, \pi ]^2),\, U=\mathbb {R},\, m=1,\, A\) is the Laplace operator, \(B=1_{[\frac{\pi }{4}, \frac{3 \pi }{4}]^2}(\cdot )\), and \({\varPsi }(x)={\text {e}}^{-\left| \cdot -\frac{\pi }{2}\right| -\cdot } x\) for \(x\in L^2([0, \pi ]^2)\). The eigenvalues of the Laplacian on \([0, \pi ]^2\) are \(-\lambda _{i j}=-(i^2+j^2)\), and the corresponding eigenvectors, which form an orthonormal basis, are \(h_{i j}=\frac{f_{ij}}{\left\| f_{i j}\right\| _H}\), where \(f_{i j}=\cos (i\cdot ) \cos (j\cdot )\). For simplicity, we write \(-\lambda _k\) for the k-th largest eigenvalue and denote the corresponding eigenvector by \(h_k\). The scalar output of the system is

where \(C x=\frac{4}{3 \pi ^2}\int _{[0, \pi ]^2\setminus [\frac{\pi }{4}, \frac{3 \pi }{4}]^2} x(\zeta ) d\zeta \) for \(x\in L^2([0, \pi ]^2)\). The output of the Galerkin system is

with \(\fancyscript{C}^T=\left( C h_k\right) _{k=1, \ldots , n}\). The Galerkin solution \(\fancyscript{X}\) satisfies

where

5.2 Error bounds for the examples

We consider the system from Example 5.3. By Theorem 3.3, the uncontrolled Eq. (55) is asymptotically mean square stable if and only if the Kronecker matrix

is Hurwitz. From Sect. 2.6 in Steeb [25] we can conclude that the largest eigenvalue of the Kronecker matrix is \(-2+\mathbb {E}\left[ M(1)^2\right] a^2\). Thus, the solution of the uncontrolled system (55) is asymptotically mean square stable if and only if \(\mathbb {E} \left[ M(1)^2 \right] a^2<2\), which is fulfilled by (54).
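The eigenvalue claim can be checked directly: for \(\fancyscript{A}={\text {diag}}(-1,\ldots ,-n^2)\) and \(\fancyscript{N}=aI_n\), the Kronecker matrix is diagonal with entries \(-(\lambda _i+\lambda _j)+\mathbb {E}\left[ M(1)^2\right] a^2\), so its largest eigenvalue is attained at \(i=j=1\). The NumPy sketch below (hypothetical function name, small n) confirms this numerically for a few values of a with \(\mathbb {E}\left[ M(1)^2\right] =1\).

```python
import numpy as np

def example_53_kronecker(n, a, c):
    """Kronecker matrix I (x) A + A (x) I + c N (x) N for A = diag(-1, -4, ..., -n^2), N = a I."""
    A = np.diag(-np.arange(1, n + 1, dtype=float) ** 2)
    N = a * np.eye(n)
    I = np.eye(n)
    return np.kron(I, A) + np.kron(A, I) + c * np.kron(N, N)

c = 1.0
for a in (0.5, 1.0, 1.5):
    largest = np.max(np.linalg.eigvals(example_53_kronecker(6, a, c)).real)
    # The matrix is diagonal with entries -(i^2 + j^2) + c a^2, so the maximum is -2 + c a^2.
    print(f"a = {a}: largest eigenvalue {largest:.4f}, predicted {-2 + c * a ** 2:.4f}")
```

For a = 1.5 the predicted value is positive, matching the failure of the condition \(\mathbb {E}\left[ M(1)^2\right] a^2<2\).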

We want to obtain a reduced order model via balanced truncation. We choose \(a=\mathbb {E} \left[ M(1)^2 \right] =1\) and let \(n=1000\). It turns out that the system is neither completely observable nor completely reachable, since the Gramians do not have full rank. Hence, we need an alternative method to determine the reduced order model. We use a method for non-minimal systems that is known from the deterministic case and is described, for example, in Sect. 1.4.2 of Benner et al. [4]. This algorithm does not compute the full transformation matrix T; the matrices of the reduced order model are obtained by

Above, we set

where \(V_1\) and \(U_1\) are obtained from the SVD of \(S R^T\):

where \(Q=R^T R\) and \(P=S^T S\). Reducing the model yields the following error bounds:

| Dimension of the reduced order model | \(\left( {\text {tr}}\left( \fancyscript{C} P {\fancyscript{C}}^T\right) +{\text {tr}}\left( \tilde{\fancyscript{C}} P_R {\tilde{\fancyscript{C}}}^T\right) -2\;{\text {tr}}\left( \fancyscript{C} P_M {\tilde{\fancyscript{C}}}^T\right) \right) ^{\frac{1}{2}}\) |
|---|---|
| 8 | \(4.5514 \cdot 10^{-6}\) |
| 4 | \(2.3130\cdot 10^{-4}\) |
| 2 | \(1.7691\cdot 10^{-3}\) |
| 1 | 0.0879 |
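The reduction step for non-minimal systems described above can be sketched as follows. Since the displayed formulas are not reproduced here, the sketch assumes the common square-root variant. Factors with \(P=S^TS\) and \(Q=R^TR\) are computed from eigendecompositions, so that singular Gramians are allowed, and \(SR^T=U{\varSigma }V^T\) is an SVD. The projection matrices are taken as \(\hat{V}=S^TU_1{\varSigma }_1^{-1/2}\) and \(\hat{W}=R^TV_1{\varSigma }_1^{-1/2}\), which gives \(\hat{W}^T\hat{V}=I_r\); the reduced matrices are then \(\hat{W}^T\fancyscript{A}\hat{V}\), \(\hat{W}^T\fancyscript{N}\hat{V}\), \(\hat{W}^T\fancyscript{B}\) and \(\fancyscript{C}\hat{V}\). Function names, the tolerance, and the random rank-deficient test Gramians are hypothetical.

```python
import numpy as np

def psd_factor(X, rel_tol=1e-12):
    """Return F with X ~= F^T F, discarding numerically zero eigenvalues of the PSD matrix X."""
    w, U = np.linalg.eigh(0.5 * (X + X.T))
    keep = w > rel_tol * np.max(np.abs(w))
    return (U[:, keep] * np.sqrt(w[keep])).T

def square_root_projection(P, Q, r):
    """W^T (r x n) and V (n x r) with W^T V = I_r, plus the singular values of S R^T."""
    S = psd_factor(P)                            # P = S^T S
    R = psd_factor(Q)                            # Q = R^T R
    Uf, s, Vh = np.linalg.svd(S @ R.T)
    s_r = s[:r]
    V = (S.T @ Uf[:, :r]) / np.sqrt(s_r)         # n x r
    Wt = ((R.T @ Vh[:r, :].T) / np.sqrt(s_r)).T  # r x n
    return Wt, V, s

def reduce_system(Wt, V, A, Psis, B, C):
    return Wt @ A @ V, [Wt @ Psi @ V for Psi in Psis], Wt @ B, C @ V

rng = np.random.default_rng(3)
n = 6
X = rng.standard_normal((n, 3)); P = X @ X.T   # rank 3: not completely reachable
Y = rng.standard_normal((n, 4)); Q = Y @ Y.T   # rank 4: not completely observable
Wt, V, s = square_root_projection(P, Q, 2)
```

The squared singular values of \(SR^T\) are the nonzero eigenvalues of PQ, that is, the squared Hankel singular values.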

Below, we reduce the Galerkin solution of Example 5.4 with dimension \(n=1000\) and \(\mathbb {E} \left[ M(1)^2 \right] =1\). Here, the matrix \(\fancyscript{A}={\text {diag}}\left( 0, -1, -1, -2, \ldots \right) \) is not stable, so we need to stabilize system (57) before applying balanced truncation. Inserting the feedback control \(u(t)=-2 e_1^T \fancyscript{X}(t),\, t\ge 0\), where \(e_1\) is the first unit vector in \(\mathbb {R}^n\), yields an asymptotically mean square stable system, since the following sufficient condition holds (see Corollary 3.6.3 in [11] and Theorem 5 in [13]): \(\fancyscript{A}_S=\fancyscript{A}-2 \fancyscript{B} e_1^T\) is stable and

We repeat the procedure from above and obtain

| Dimension of the reduced order model | \(\left( {\text {tr}}\left( \fancyscript{C} P {\fancyscript{C}}^T\right) +{\text {tr}}\left( \tilde{\fancyscript{C}} P_R {\tilde{\fancyscript{C}}}^T\right) -2\;{\text {tr}}\left( \fancyscript{C} P_M {\tilde{\fancyscript{C}}}^T\right) \right) ^{\frac{1}{2}}\) |
|---|---|
| 8 | \(3.7545\cdot 10^{-6}\) |
| 4 | \(6.4323\cdot 10^{-4}\) |
| 2 | \(3.1416\cdot 10^{-3}\) |
| 1 | 0.0333 |

for the stabilized system (57), that is, with \(\fancyscript{A}\) replaced by \(\fancyscript{A}_S\).

5.3 Comparison between exact error and error bound

Since Eqs. (55) and (57) do not have explicit solutions in general, we need to discretize in time to estimate the exact error of the approximation. For simplicity, we assume that \(n=80\) and that M is a scalar Wiener process, and we use the Euler-Maruyama scheme (Footnote 8) to approximate the original system, yielding

and the reduced order model:

where we consider these equations on the time interval \([0, \pi ]\). Furthermore, we choose \(\fancyscript{X}_0=0, h=\frac{\pi }{10000}\) and \(t_k=k h\) for \(k=0, 1, \ldots , 10000, {\varDelta } M_k=M(t_{k+1})-M(t_{k})\).
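A Monte Carlo estimate of \(\fancyscript{D}\) can be sketched as follows. We assume the Euler-Maruyama update \(X_{k+1}=X_k+(\fancyscript{A}X_k+\fancyscript{B}u(t_k))h+\fancyscript{N}X_k{\varDelta }M_k\) for the original system (and its analogue for the reduced model), that both models are driven by the same noise increments, and that the expectation is replaced by an average over independent sample paths. The number of paths, the function names, and the two-state toy model in the usage lines are hypothetical and are not the settings used for the tables.

```python
import numpy as np

def em_outputs(A, N, B, C, U, dM, h):
    """Euler-Maruyama for dX = (A X + B u) dt + N X dM with X(0) = 0, vectorized over paths.

    U: inputs u(t_k), shape (steps, paths, m); dM: increments, shape (steps, paths).
    Returns the scalar outputs C X_k for k = 1, ..., steps, shape (steps, paths).
    """
    steps, paths = dM.shape
    X = np.zeros((paths, A.shape[0]))  # row p holds the state of sample path p
    Y = np.empty((steps, paths))
    for k in range(steps):
        X = X + (X @ A.T + U[k] @ B.T) * h + (X @ N.T) * dM[k][:, None]
        Y[k] = (X @ C.T)[:, 0]
    return Y

def estimate_D(full, reduced, U, dM, h):
    """Monte Carlo estimate of max_k E|C X_k - C~ X~_k|, both models driven by the same dM."""
    Y_full = em_outputs(*full, U, dM, h)
    Y_red = em_outputs(*reduced, U, dM, h)
    return float(np.max(np.mean(np.abs(Y_full - Y_red), axis=1)))

# Usage on a hypothetical two-state toy model with Wiener noise and input u_1.
rng = np.random.default_rng(5)
steps, paths = 1000, 200
h = np.pi / steps
dM = np.sqrt(h) * rng.standard_normal((steps, paths))              # Wiener increments
M = np.vstack([np.zeros((1, paths)), np.cumsum(dM, axis=0)[:-1]])  # M(t_k), k = 0..steps-1
U = (np.sqrt(2) / np.pi * M)[:, :, None]                           # u_1(t_k), m = 1
A = np.diag([-1.0, -4.0]); N = 0.5 * np.eye(2)
B = np.ones((2, 1)); C = np.ones((1, 2))
full = (A, N, B, C)
reduced = (A[:1, :1], N[:1, :1], B[:1], C[:, :1])  # toy truncation: keep the first state
D = estimate_D(full, reduced, U, dM, h)
```

Using common increments for both models removes most of the sampling noise from the difference of the outputs.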

For system (55) we insert the normalized control functions \(u_1(t)=\frac{\sqrt{2}}{\pi } M(t)\), \(u_2(t)=\sqrt{\frac{2}{\pi }}\cos (t)\), \(u_3(t)=\sqrt{\frac{2}{1-{\text {e}}^{-2\pi }}}{\text {e}}^{-t}\), \(t\in [0, \pi ]\) and obtain \(\fancyscript{D}:=\max _{k=1, \ldots , 10000} \mathbb {E}\left| \fancyscript{C} X_k-\tilde{\fancyscript{C}}{\tilde{X}}_k \right| \) for different dimensions of the reduced order model (ROM) and different inputs:

| Dimension of the ROM | \(\fancyscript{D}\; \text {with}\; u=u_1 \) | \(\fancyscript{D}\; \text {with}\; u=u_2\) | \(\fancyscript{D}\; \text {with}\; u=u_3\) | \(\fancyscript{{EB}}\) |
|---|---|---|---|---|
| 8 | \(9.0615\times 10^{-9}\) | \(7.8832\times 10^{-8}\) | \(1.3987\times 10^{-7}\) | \(1.4813\times 10^{-6}\) |
| 4 | \(3.8702\times 10^{-6}\) | \(6.4204\times 10^{-6}\) | \(1.1353\times 10^{-5}\) | \(2.2706\times 10^{-4}\) |
| 2 | \(6.8932\times 10^{-5}\) | \(1.1195\times 10^{-4}\) | \(1.9549\times 10^{-4}\) | \(1.7671\times 10^{-3}\) |
| 1 | 0.0141 | 0.0243 | 0.0354 | 0.0879 |

Here, \({\fancyscript{{EB}}}:=\left( {\text {tr}}\left( \fancyscript{C} P {\fancyscript{C}}^T\right) +{\text {tr}}\left( \tilde{\fancyscript{C}} P_R {\tilde{\fancyscript{C}}}^T\right) -2\;{\text {tr}}\left( \fancyscript{C} P_M {\tilde{\fancyscript{C}}}^T\right) \right) ^{\frac{1}{2}}\) is the balanced truncation error bound derived in Sect. 4.
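The normalization of the inputs can be verified directly: each of \(u_1\), \(u_2\), \(u_3\) has (expected) squared \(L^2\)-norm one on \([0,\pi ]\), so the input-norm factor in the bound (41) equals one and \(\fancyscript{D}\) can be compared with \(\fancyscript{EB}\) directly. For \(u_1\), the check uses \(\mathbb {E}[M(t)^2]=t\) for the scalar Wiener process. A short SciPy check:

```python
import numpy as np
from scipy.integrate import quad

T_end = np.pi
# u_2(t) = sqrt(2/pi) cos(t) and u_3(t) = sqrt(2/(1 - e^{-2 pi})) e^{-t}: squared L^2 norms on [0, pi].
norm2_u2 = quad(lambda t: (2 / np.pi) * np.cos(t) ** 2, 0, T_end)[0]
norm2_u3 = quad(lambda t: 2 / (1 - np.exp(-2 * np.pi)) * np.exp(-2 * t), 0, T_end)[0]
# u_1(t) = sqrt(2)/pi M(t) with a standard Wiener process M, so E[u_1(t)^2] = 2 t / pi^2.
mean_norm2_u1 = quad(lambda t: 2 * t / np.pi ** 2, 0, T_end)[0]
```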

For system (57) we use the inputs \(\tilde{u}_i(t)=-2 e_1^T \fancyscript{X}(t)+u_i(t),\, t\ge 0,\, i=1, 2, 3\) and obtain

| Dimension of the ROM | \(\fancyscript{D}\; \text {with}\; u=\tilde{u}_1\) | \(\fancyscript{D}\; \text {with}\; u=\tilde{u}_2\) | \(\fancyscript{D}\; \text {with}\; u=\tilde{u}_3\) | \({\fancyscript{{EB}}}\) |
|---|---|---|---|---|
| 8 | \(5.6162\times 10^{-7}\) | \(5.5374\times 10^{-7}\) | \(6.5699\times 10^{-7}\) | \(3.5376\times 10^{-6}\) |
| 4 | \(4.7245\times 10^{-5}\) | \(5.2722\times 10^{-5}\) | \(6.8758\times 10^{-5}\) | \(3.1487\times 10^{-4}\) |
| 2 | \(5.1270\times 10^{-4}\) | \(4.6627\times 10^{-4}\) | \(6.2103\times 10^{-4}\) | \(2.4164\times 10^{-3}\) |
| 1 | \(3.7520\times 10^{-3}\) | 0.0118 | \(9.9629\times 10^{-3}\) | 0.0327 |

These results show that the balanced truncation error bound, which is a worst case bound holding for all feasible input functions, also provides a good prediction of the true time domain error. In particular, it predicts the decrease of the true error with increasing dimension of the reduced order model quite well.

6 Conclusions

We generalized balanced truncation to stochastic systems with noise processes having jumps. In particular, we focused on a linear controlled state equation driven by uncorrelated Levy processes, which is asymptotically mean square stable and equipped with an output equation. We showed that the Gramians we defined are solutions of generalized Lyapunov equations. Furthermore, we proved that the observable states and the corresponding energy are characterized by the observability Gramian Q and that the reachability Gramian P provides partial information about the reachability of an average state. We showed that the reduced order model (ROM) is mean square stable and not balanced, that the Hankel singular values (HVs) of the ROM are not a subset of the HVs of the original system, and that one can lose complete observability and reachability. Furthermore, we provided an error bound for balanced truncation of the Levy driven system. Finally, we demonstrated the use of balanced truncation for stochastic systems by applying it in the context of the numerical solution of linear controlled evolution equations with Levy noise, and we computed the error bounds and exact errors for the examples considered here.

Notes

\((\fancyscript{F}_t)_{t\ge 0}\) shall be right continuous and complete.

Cadlag means that \(\mathbb {P}\)-almost all paths are right continuous and the left limits exist.

This means that \(\mathbb {P}\)-almost all paths are of bounded variation.

The process \(\left\langle M, N\right\rangle \) is measurable with respect to \(\fancyscript{P}\), which we characterize below Definition 2.10.

We assume that \(\left( \fancyscript{F}_t\right) _{t\ge 0}\) is right continuous and that \(\fancyscript{F}_0\) contains all \(\mathbb {P}\) null sets.

By Theorem VI.21 in Reed and Simon [23], \(\fancyscript{Q}\) is a compact operator, so this property follows from the spectral theorem.

Curtain, Ichikawa and Haussmann stated these conditions for exponential mean square stability in the Wiener case; they can easily be generalized to square integrable Levy processes with mean zero.

The theory regarding this method can be found in Kloeden and Platen [16].

References

Antoulas, A.C.: Approximation of Large-Scale Dynamical Systems. Advances in Design and Control, vol. 6. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2005)

Applebaum, D.: Lévy Processes and Stochastic Calculus. Cambridge Studies in Advanced Mathematics, vol. 116, 2nd edn. Cambridge University Press, Cambridge (2009)

Arnold, L.: Stochastische Differentialgleichungen. Theorie und Anwendungen. R. Oldenbourg Verlag, München-Wien (1973)

Benner, P., Mehrmann, V., Sorensen, D.C. (eds.): Dimension reduction of large-scale systems. In: Proceedings of a Workshop, Oberwolfach, Germany, October 19–25, 2003. Lecture Notes in Computational Science and Engineering, vol. 45. Springer, Berlin (2005)

Benner, P., Damm, T.: Lyapunov equations, energy functionals, and model order reduction of bilinear and stochastic systems. SIAM J. Control Optim. 49(2), 686–711 (2011)

Benner, P., Damm, T., Redmann, M., Cruz, Y.R.R.: Positive operators and stable truncation. Linear Algebra Appl. (2014)

Benner, P., Redmann, M.: Reachability and observability concepts for stochastic systems. Proc. Appl. Math. Mech. 13, 381–382 (2013)

Bertoin, J.: Lévy Processes. Cambridge Tracts in Mathematics, vol. 121. Cambridge University Press, Cambridge (1998)

Curtain, R.F.: Linear stochastic Ito equations in Hilbert space. Stochastic control theory and stochastic differential systems. In: Proceedings of the Workshop, Bad Honnef 1979. Lecture Notes in Control and Information Sciences, vol. 16, pp. 61–84 (1979)

Da Prato, G., Zabczyk, J.: Stochastic Equations in Infinite Dimensions. Encyclopedia of Mathematics and Its Applications, vol. 44. Cambridge University Press, Cambridge (1992)

Damm, T.: Rational Matrix Equations in Stochastic Control. Lecture Notes in Control and Information Sciences, vol. 297. Springer, Berlin (2004)

Grecksch, W., Kloeden, P.E.: Time-discretised Galerkin approximations of parabolic stochastic PDEs. Bull. Aust. Math. Soc. 54(1), 79–85 (1996)

Haussmann, U.G.: Asymptotic stability of the linear Ito equation in infinite dimensions. J. Math. Anal. Appl. 65, 219–235 (1978)

Ichikawa, A.: Dynamic programming approach to stochastic evolution equations. SIAM J. Control Optim. 17, 152–174 (1979)

Jacod, J., Shiryaev, A.N.: Limit Theorems for Stochastic Processes. Grundlehren der Mathematischen Wissenschaften, vol. 288, 2nd edn. Springer, Berlin (2003)

Kloeden, P.E., Platen, E.: Numerical Solution of Stochastic Differential Equations. Applications of Mathematics, vol. 23. Springer, Berlin (2010)

Kuo, H.-H.: Introduction to Stochastic Integration. Universitext. Springer, New York (2006)

Metivier, M.: Semimartingales: A Course on Stochastic Processes. De Gruyter Studies in Mathematics, vol. 2. de Gruyter, Berlin (1982)

Moore, B.C.: Principal component analysis in linear systems: controllability, observability, and model reduction. IEEE Trans. Autom. Control 26, 17–32 (1981)

Obinata, G., Anderson, B.D.O.: Model Reduction for Control System Design. Communications and Control Engineering Series. Springer, London (2001)

Peszat, S., Zabczyk, J.: Stochastic Partial Differential Equations with Lévy Noise. An Evolution Equation Approach. Encyclopedia of Mathematics and Its Applications, vol. 113. Cambridge University Press, Cambridge (2007)

Prévôt, C., Röckner, M.: A Concise Course on Stochastic Partial Differential Equations. Lecture Notes in Mathematics, vol. 1905. Springer, Berlin (2007)

Reed, M., Simon, B.: Methods of Modern Mathematical Physics. I: Functional Analysis. Academic Press, New York (1980)

Sato, K.-I.: Lévy Processes and Infinitely Divisible Distributions. Cambridge Studies in Advanced Mathematics, vol. 68. Cambridge University Press, Cambridge (1999)

Steeb, W.-H.: Matrix Calculus and the Kronecker Product with Applications and C++ Programs. With the Collaboration of Tan Kiat Shi. World Scientific, Singapore (1997)

Zhang, L., Lam, J., Huang, B., Yang, G.-H.: On gramians and balanced truncation of discrete-time bilinear systems. Int. J. Control 76(4), 414–427 (2003)

Acknowledgments

The authors would like to thank Tobias Damm for his comments and advice and Tobias Breiten for providing Example 4.3.

Cite this article

Benner, P., Redmann, M. Model reduction for stochastic systems. Stoch PDE: Anal Comp 3, 291–338 (2015). https://doi.org/10.1007/s40072-015-0050-1
