Abstract

Lensless imaging is an imaging modality that allows high-resolution and large field-of-view (FOV) imaging with cost-effective and portable devices. In lensless imaging, the objects’ complex amplitude information is computationally reconstructed from the diffracted intensity measured on a sensor plane. This holographic reconstruction has been traditionally implemented by iterative phase retrieval algorithms. However, due to the limited capability of the traditional algorithms, such as excessive processing time and high chance of failure in confluent specimens, lensless imaging has not been practically used in the relevant application areas. Here, we review the recent applications of deep learning (DL) algorithms in holographic image reconstruction that are proposed to achieve robust and fast holographic reconstruction in lensless imaging. These DL approaches include the supervised learning approach with paired training datasets and the unsupervised learning approach with unpaired training datasets or without any ground truth data. We also highlight some unique capabilities of the DL approaches, including lensless imaging with an extended depth-of-field (DOF) or virtual staining. Finally, we discuss new opportunities for exploiting domain adaptation techniques and physics-integrated approaches in lensless imaging.

1 Introduction

Optical microscopy is an indispensable tool in modern biological research as it allows visualizing biological samples at the micro- and nanoscale. However, conventional optical microscopes are composed of bulky and expensive lenses that require alignment with micron-scale precision. In the 2010s, an entirely new imaging modality, called lensless microscopy, was developed to address these issues. As its name implies, lensless microscopy enables microscopic imaging without any lens. Instead, computational algorithms take over the role of lenses to retrieve an optical image. Therefore, lensless microscopy requires only an illuminator and a camera, providing a cost-effective and portable solution for point-of-care biomedical applications [1].

In conventional optical microscopy, an object's image is directly recorded at the sensor plane, to which the object is optically conjugated by lenses (Fig. 1a). In lensless microscopy, by contrast, there is sufficient distance for diffraction between the sample and the sensor plane, so that the camera captures the diffracted light intensity from the sample without any lenses (Fig. 1b). The most distinctive feature of lensless microscopy is that the optical field information of the sample is computationally reconstructed from the diffraction pattern, called a hologram [2]. This computational process, called holographic reconstruction, is traditionally implemented by phase recovery and digital light propagation. As an optoelectronic camera can only record light intensity because of the high-frequency oscillation of an optical field, phase recovery (the process of recovering the missing phase information from the hologram intensity) is the most challenging and critical process in lensless imaging. Therefore, many iterative phase recovery algorithms [3,4,5,6] have been proposed to recover the lost phase information.

Comparison between conventional bright-field microscopy and lensless microscopy. a In conventional bright-field microscopy, the sample plane is optically conjugated to the sensor plane using lenses. Additional lenses are required for an even illumination of the sample. b In lensless microscopy, the diffracted light from the sample is recorded at the sensor plane. General lensless microscopy setup is composed of an illumination light source and a camera. z1: Source-to-sample distance, z2: Sample-to-sensor distance, Δs: Diameter of a light source

With the development of these algorithms, researchers have demonstrated numerous important advantages of lensless microscopes over conventional microscopes, aside from the benefits of small form factor and cost-effectiveness. First of all, the space-bandwidth product (SBP) of the imaging system is significantly improved in lensless microscopy. The SBP of conventional microscopes is typically limited to ~20 M pixels by objective lenses, which impose a trade-off between field-of-view (FOV) and resolution due to manufacturing difficulties. On the other hand, the resolution of lensless microscopy is generally determined by the sensor pixel size [7], and the FOV is equal to the entire active area of the camera sensor. Thanks to the tremendous advances in sensor technology, the SBP of lensless imaging has exceeded 100 M pixels, simultaneously achieving a resolution of ~700 nm and an FOV of ~100 mm². Furthermore, as a single hologram can encode the 3D volumetric information of the sample, 3D refocusing over the entire sample volume is available in lensless microscopy. Because of these advantages, lensless imaging is actively applied in blood smear inspection for malaria diagnosis [8], 3D motion tracking of biological specimens [9, 10], and air quality monitoring [11].
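As a rough consistency check on the numbers above, the SBP can be estimated by dividing the FOV by the area of one resolution element. This assumes, purely for illustration, that one resolvable element of size \(\delta\) corresponds to one effective pixel; the cited works may define the SBP differently.

\[
\mathrm{SBP} \approx \frac{\mathrm{FOV}}{\delta^{2}} = \frac{100\;\mathrm{mm}^{2}}{\left( {0.7\;\mu \mathrm{m}} \right)^{2}} = \frac{10^{8}\;\mu \mathrm{m}^{2}}{0.49\;\mu \mathrm{m}^{2}} \approx 2 \times 10^{8}
\]

Under this assumption the estimate is on the order of a few hundred megapixels, which is consistent with an SBP exceeding 100 M pixels.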

Over the past decade, with a significant enhancement in computing power, deep learning has been applied to a broad range of applications and has outperformed existing computational methods. Recently, deep learning (DL) approaches have become the mainstream methods for solving various inverse problems in imaging, such as super-resolution imaging [12,13,14], 3D tomographic imaging [15, 16], Fourier ptychographic microscopy [17], and imaging through scattering [18, 19]. Among those, lensless imaging is one of the first areas to which DL approaches have been applied with tremendous success [20, 21].

In this review, we aim to highlight some important advances in DL approaches for holographic reconstruction in lensless imaging. First, we introduce the hardware configuration and working principles of lensless imaging, including traditional iterative phase recovery algorithms. Then, we categorize and discuss two different DL approaches in lensless imaging: supervised and unsupervised learning approaches. Finally, we discuss the potential of DL approaches in lensless imaging.

2 Lensless imaging

2.1 Imaging principle

The lensless imaging hardware is simply composed of an illumination source and a camera sensor. The illumination source can be either a coherent laser or a partially coherent light-emitting diode (LED). In a typical lensless imaging setup, LEDs are used in conjunction with a spectral filter and a spatial filter (e.g., pinhole or multimode fiber) to enhance the temporal/spatial coherence of the source. The camera sensor is usually a complementary metal-oxide-semiconductor (CMOS) or charge-coupled device (CCD) image sensor. A sample is placed between the illumination source and the camera sensor (Fig. 1b). The source-to-sample distance (z1) and sample-to-sensor distance (z2) are typically set to z1 > 3 cm and z2 < 1 mm [7].

The resolution of such a lensless imaging system can be described from two perspectives: the degree of optical coherence and the discretization in digital sensing. First, as the lensless imaging system records a diffracted hologram intensity, the high-frequency features of the sample should be encoded in the hologram to achieve high resolution. Because the hologram is formed by interference between an incident plane wave and the scattered wave from the sample, the temporal and spatial coherence are important parameters. Temporal coherence determines whether the wave scattered at a large angle can interfere with the unscattered plane wave. The effect of temporal coherence can be controlled by the spectral width (Δλ) of the light source or by z2 (which affects the path length difference between the scattered and the unscattered waves). Spatial coherence affects the sharpness and contrast of the holographic intensity pattern, because it determines whether the incident wave originating from the illumination source can be considered a single plane wave or a superposition of multiple plane waves. The effect of the spatial coherence of the illumination source can be controlled by the diameter of the light-emitting area (Δs) and the ratio between z1 and z2 (which affects the translation of the interference pattern on the sensor plane for an oblique incident wave originating from an off-axis point within the illumination source). Second, according to the Nyquist–Shannon sampling theorem, the frequency components in the hologram that can be measured with the digital sensor are limited by the sensor’s pixel size. It is important to note that the fundamental resolution limit imposed by the coherence state of the optical wave can be smaller than the pixel size, which is typically several microns in modern digital cameras. To overcome the technical resolution limit imposed by the pixel size, the pixel super-resolution (SR) algorithm, which converts a stack of low-resolution shifted images into a single high-resolution image, has been widely used to achieve sub-micron resolution [22].
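The sampling limit mentioned above can be made concrete with a short worked example. For a sensor with pixel pitch \(\Delta x\), the highest spatial frequency that can be sampled without aliasing is the Nyquist frequency. The pixel pitch used below (\(\Delta x = 2\;\mu\)m) is a hypothetical value chosen only for illustration.

\[
f_{\max } = \frac{1}{2\Delta x} = \frac{1}{2 \times 2\;\mu \mathrm{m}} = 0.25\;\mu \mathrm{m}^{-1}
\]

The finest fringe period that such a sensor can record without aliasing is therefore \(2\Delta x = 4\;\mu\)m, even if the coherence of the illumination would allow finer fringes to form. This is the gap that pixel super-resolution is meant to close.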

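The computational process required for lensless imaging is the holographic reconstruction process, which reconstructs the original information of the sample from the measured hologram intensity. In principle, the hardware of lensless imaging is an in-line holography setup recording the interference between the light scattered by the sample (Us) and a uniform reference wave (Ur). At the sensor plane, the hologram intensity can be written as

\[
I\left( {x,y} \right) = \left| {U_{r} + U_{s} \left( {x,y;z_{2} } \right)} \right|^{2} = \left| {U_{r} } \right|^{2} + U_{r}^{*} \times \;U_{s} \left( {x,y;z_{2} } \right) + U_{r} \times \;U_{s}^{*} \left( {x,y;z_{2} } \right) + \left| {U_{s} \left( {x,y;z_{2} } \right)} \right|^{2} \tag{1}
\]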

where \(z_{2}\) is the spacing between the sample plane (\(z = 0\)) and the sensor plane (\(z = z_{2}\)). The complex amplitude of the scattered field (Us) at the sensor plane is encoded in the intensity pattern through the term \(U_{r}^{*} \times \;U_{s} \left( {x,y;z_{2} } \right)\). Therefore, the complex amplitude \(U_{s} \left( {x,y;0} \right)\) at the sample plane can be recovered by digitally propagating the measured hologram from the sensor plane to the sample plane, which is typically performed using the angular spectrum method [23]. Finally, the amplitude and phase information of the sample, which provide the optical absorbance and the phase delay of the sample, can be retrieved from the reconstructed complex amplitude at \(z = 0\).
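The digital propagation step described above can be sketched with a few lines of NumPy. This is a minimal illustration of the angular spectrum method, not the implementation used in the cited works: the function name `angular_spectrum_propagate`, its parameters, and the choice to discard evanescent components are assumptions made here.

```python
import numpy as np

def angular_spectrum_propagate(field, wavelength, pixel_size, distance):
    """Propagate a complex 2D field over `distance` (negative = back-propagation).

    Hypothetical helper: all lengths share one unit (e.g., metres).
    """
    ny, nx = field.shape
    # Spatial-frequency grids matching the FFT sample ordering.
    fx = np.fft.fftfreq(nx, d=pixel_size)
    fy = np.fft.fftfreq(ny, d=pixel_size)
    FX, FY = np.meshgrid(fx, fy)
    # Squared longitudinal frequency; non-positive values are evanescent.
    fz_sq = (1.0 / wavelength) ** 2 - FX ** 2 - FY ** 2
    propagating = fz_sq > 0
    kz = 2 * np.pi * np.sqrt(np.where(propagating, fz_sq, 0.0))
    # Free-space transfer function; evanescent components are set to zero.
    transfer = np.where(propagating, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)
```

With such a helper, a hologram could be simulated as `np.abs(angular_spectrum_propagate(sample_field, wl, dx, z2)) ** 2`, and a first estimate of the sample field obtained by propagating the square root of the hologram over `-z2`.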

However, the digital propagation of the measured intensity described by Eq. (1) propagates the third term \(U_{r} \times \;U_{s}^{*} \left( {x,y;z_{2} } \right)\) as well as the second term \(U_{r}^{*} \times \;U_{s} \left( {x,y;z_{2} } \right)\). It should be noted that the first and the last terms do not affect the holographic reconstruction process, as the first term is spatially uniform and the last term is negligible in many applications where the sample is weakly scattering. Due to the conjugate operation on \(U_{s}^{*} \left( {x,y;z_{2} } \right)\), the third term \(U_{r} \times \;U_{s}^{*} \left( {x,y;z_{2} } \right)\) propagates in the reverse direction, thus generating \(U_{s}^{*} \left( {x,y;2z_{2} } \right)\) on top of the reconstructed image \(U_{s} \left( {x,y;0} \right)\) from the second term. The noise from this additional term is called a twin-image artifact. The twin-image artifact significantly compromises the reconstructed sample image in lensless imaging. Many computational approaches have been proposed to address this problem based on traditional iterative phase retrieval algorithms and deep learning methods.
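The origin of the twin image can be written out explicitly. Let \(P_{z}\) denote free-space propagation over a distance \(z\) (notation introduced here for illustration), and assume a normalized reference wave \(U_{r} = 1\). Back-propagating the two interference terms by \(z_{2}\) then gives

\[
P_{ - z_{2} } \left[ {U_{s} \left( {x,y;z_{2} } \right)} \right] = U_{s} \left( {x,y;0} \right),\qquad P_{ - z_{2} } \left[ {U_{s}^{*} \left( {x,y;z_{2} } \right)} \right] = \left( {P_{z_{2} } \left[ {U_{s} \left( {x,y;z_{2} } \right)} \right]} \right)^{*} = U_{s}^{*} \left( {x,y;2z_{2} } \right)
\]

The second identity uses the fact that the free-space transfer function is symmetric in spatial frequency, so that back-propagating a conjugated field is equivalent to forward-propagating the field and conjugating the result. The reconstructed image is therefore the in-focus field superimposed with a conjugate copy defocused by \(2z_{2}\).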

2.2 Traditional iterative holographic reconstruction algorithms

The holographic reconstruction process in lensless imaging can be considered as the process of finding the missing phase information of the optical field \(U\left( {x,y;z_{0} } \right)\) from the measured intensity information \(\left| {U\left( {x,y;z_{0} } \right)} \right|^{2}\). Intensity measurement is the process where the complex field information consisting of amplitude and phase, \(U\left( {x,y;z_{0} } \right) = \left| {U\left( {x,y;z_{0} } \right)} \right|\exp \left[ {i\varphi \left( {x,y;z_{0} } \right)} \right]\), is converted into the intensity information, \(\left| {U\left( {x,y;z_{0} } \right)} \right|^{2}\), through the measurement operator \(H: R^{2N} \to R^{N}\), where N is the number of sensor pixels. Phase recovery is thus an ill-posed problem of finding an inverse operator \(H^{inv} : R^{N} \to R^{2N}\), which does not possess a unique solution because 2N unknowns must be determined from N measurements. Therefore, additional information such as object support [24] or multiple measurements at different sample-to-sensor distances [25, 26] is required to solve this underdetermined problem.

One of the fundamental phase recovery algorithms is the Gerchberg–Saxton (GS) algorithm [3, 5, 24]. In this algorithm, optical fields are digitally propagated back and forth between two planes, while imposing physical constraints or prior information at each plane (Fig. 2a). At the sensor plane, the physical constraint is the recorded intensity of the optical field, and at the sample plane, the prior information is the approximate object boundary, called the object support. After several iterations, the phase information converges to a solution that satisfies the physical constraints and prior information. It has been shown that the GS algorithm with object support can recover relatively small isolated objects with clear boundaries in lensless imaging [27].
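The loop described above can be sketched as follows. This is a simplified illustration under stated assumptions rather than the algorithm of the cited references: the helper names `propagate` and `gs_with_support` are hypothetical, the reference wave is assumed to be normalized so that the field outside the support equals 1, and the initial phase at the sensor is set to zero.

```python
import numpy as np

def propagate(field, wavelength, pixel_size, distance):
    """Angular spectrum propagation (hypothetical helper, consistent units)."""
    ny, nx = field.shape
    FX, FY = np.meshgrid(np.fft.fftfreq(nx, d=pixel_size),
                         np.fft.fftfreq(ny, d=pixel_size))
    fz_sq = (1.0 / wavelength) ** 2 - FX ** 2 - FY ** 2
    ok = fz_sq > 0  # propagating (non-evanescent) components
    kz = 2 * np.pi * np.sqrt(np.where(ok, fz_sq, 0.0))
    transfer = np.where(ok, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def gs_with_support(hologram, support, wavelength, pixel_size, z2, n_iter=50):
    """GS-style phase recovery with an object-support prior.

    hologram: measured intensity at the sensor plane.
    support:  boolean mask, True where the object may be located.
    """
    measured_amp = np.sqrt(hologram)
    sensor_field = measured_amp.astype(complex)  # assumed zero initial phase
    for _ in range(n_iter):
        # Sensor plane -> sample plane.
        sample_field = propagate(sensor_field, wavelength, pixel_size, -z2)
        # Prior: outside the support, assume the unperturbed background (= 1).
        sample_field = np.where(support, sample_field, 1.0)
        # Sample plane -> sensor plane.
        sensor_field = propagate(sample_field, wavelength, pixel_size, z2)
        # Constraint: keep the updated phase, enforce the measured amplitude.
        sensor_field = measured_amp * np.exp(1j * np.angle(sensor_field))
    return propagate(sensor_field, wavelength, pixel_size, -z2)
```

The returned field is the back-propagation of the final sensor-plane estimate, so its forward propagation reproduces the measured amplitude by construction. How well the twin image is suppressed depends on how tight the support mask is.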

Traditional iterative phase recovery algorithms. a A schematic diagram of Gerchberg–Saxton (GS) algorithm. The optical fields are propagated back and forth between a sample domain and a sensor domain. Physical constraints are imposed at each domain. u_0: amplitude of the optical fields at the sample domain, U_0: amplitude of the optical fields at the sensor domain, \(\varphi\): phase of the optical fields at the sample domain, and \(\phi\): phase of the optical fields at the sensor domain. b A schematic diagram of multi-height phase recovery algorithm. The multiple hologram intensities are measured at different sample-to-sensor distances. The measured amplitude replaces the amplitude of the propagated optical field at each position, while the updated phase of the propagated optical field is retained. The iterative process (propagation of optical fields and application of physical constraints) converges to a single phase solution at each plane. Finally, the image is reconstructed by back-propagating the complex field with the phase solution to the sample plane. c Lensless imaging results of USAF target based on multi-height phase recovery algorithm. (Left) the reconstructed intensity image of the USAF target on a full field-of-view. (Right) the measured hologram intensity maps and the reconstructed intensity maps at different sub-field of views

However, the GS algorithm frequently fails to recover the phase of dense or connected samples with no clear boundary. To address this limitation, the multi-height phase recovery algorithm has been proposed, which uses multiple intensity maps measured at different sample-to-sensor distances as more restrictive physical constraints, even without object support [25, 26]. In this scheme, the optical field is sequentially propagated to multiple measurement planes, while enforcing the measured intensity at each plane. More specifically, the amplitude of the propagated optical field is replaced by the square root of the measured intensity, while the phase is directly taken (i.e., updated) from the propagated optical field (Fig. 2b). After several iterations, the phase values at each plane converge in such a way that the propagated fields satisfy the physical constraints of measured intensity at every plane. Typically, the multi-height phase recovery algorithm requires 6–8 measurements to achieve high-quality reconstruction results [7].
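The amplitude-replacement scheme described above can be sketched as follows. This is an illustrative sketch only: the helper names `propagate` and `multi_height_phase_recovery`, the zero initial phase, and the up-and-down sweep order through the planes are assumptions made here, not details taken from the cited references.

```python
import numpy as np

def propagate(field, wavelength, pixel_size, distance):
    """Angular spectrum propagation (hypothetical helper, consistent units)."""
    ny, nx = field.shape
    FX, FY = np.meshgrid(np.fft.fftfreq(nx, d=pixel_size),
                         np.fft.fftfreq(ny, d=pixel_size))
    fz_sq = (1.0 / wavelength) ** 2 - FX ** 2 - FY ** 2
    ok = fz_sq > 0  # propagating (non-evanescent) components
    kz = 2 * np.pi * np.sqrt(np.where(ok, fz_sq, 0.0))
    transfer = np.where(ok, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def multi_height_phase_recovery(holograms, distances, wavelength, pixel_size,
                                n_iter=20):
    """Recover the sample-plane field from intensities at several heights.

    holograms: list of measured intensity maps.
    distances: matching list of sample-to-sensor distances.
    """
    amps = [np.sqrt(h) for h in holograms]
    field = amps[0].astype(complex)  # assumed zero initial phase at plane 0
    current_z = distances[0]
    order = list(range(len(distances)))
    for _ in range(n_iter):
        # Sweep from the first plane to the last one and back again.
        for k in order[1:] + order[-2::-1]:
            field = propagate(field, wavelength, pixel_size,
                              distances[k] - current_z)
            current_z = distances[k]
            # Replace the amplitude with the measurement, keep the phase.
            field = amps[k] * np.exp(1j * np.angle(field))
    # Back-propagate the final estimate to the sample plane (z = 0).
    return propagate(field, wavelength, pixel_size, -current_z)
```

Because each sweep ends at the first measurement plane, the returned field reproduces the first measured amplitude when propagated forward again. In practice the distances themselves usually need to be estimated, which this sketch does not address.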

Although the traditional multi-height phase recovery algorithm achieves high-quality holographic reconstruction of dense samples, it requires many images for a single reconstruction. When measuring the rapid dynamics of biological systems, the multiple measurements are often limited by the speed of image acquisition and of the mechanical translation between the sample and the sensor. In addition, the iterative algorithm takes a long time to converge. In this regard, DL approaches have recently been introduced to achieve rapid and robust holographic reconstruction using only a single intensity measurement.

3 Supervised learning approach in lensless imaging

Deep learning [28] is a class of machine learning methods that use deep neural networks (DNN) to learn representations of data. The success of DL in the computer vision area naturally led to its active use in computational imaging. Specifically, DL shows superior performance to traditional algorithms in solving various ill-posed inverse problems. This is mainly achieved by the deep, multi-layer architecture of DNN and its non-linear activation functions, which make it possible for DNN to learn very complex non-linear input–output relationships. One of the most general DNN architectures in computational imaging is the convolutional neural network (CNN) [28], inspired by the structure of the human visual cortex. A CNN typically consists of convolutional kernels, pooling layers, and non-linear activation functions.

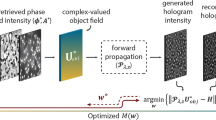

Recently, DL approaches have made significant advances in overcoming various challenges of the conventional reconstruction algorithms for lensless imaging. The most common DL approach in lensless imaging is supervised learning. In supervised learning, DNN finds the complex mapping function between input data (i.e., the dataset of diffraction intensity maps from various samples) and desired data (i.e., the dataset of the ground truth images of the corresponding samples). This approach requires large paired datasets of input data and their corresponding ground truth data. During the training process, DNN adjusts its weights W_1, W_2 (Fig. 3a) to minimize the loss function, which measures the statistical distance between the network output and the desired ground truth. The learning algorithm called error back-propagation (Fig. 3a) efficiently computes the gradients of the loss function with respect to each weight. The weights of each layer can then be modified in the negative direction of this gradient to reduce the loss (Fig. 3a). After training, the network can perform the desired task with a single forward propagation through the DNN (i.e., convolution operations and application of non-linear activation functions), which generally takes less than a second using a modern graphics processing unit (GPU). This single inference process of the trained network is one of the significant advantages of the DL approach compared to previous iterative algorithms that require many iterations for only one inference.
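The training procedure described above (forward pass, loss evaluation, error back-propagation, and a gradient step on the weights) can be illustrated with a toy two-layer network in NumPy. This sketch does not reproduce any network from the cited works: the data are random toy vectors rather than holograms, and the layer sizes, learning rate, ReLU activation, and mean-squared-error loss are assumptions chosen for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy paired dataset: inputs x and "ground truth" targets y (hypothetical).
x = rng.normal(size=(64, 4))
y = np.sin(x.sum(axis=1, keepdims=True))

# Weights of a two-layer network, named after W_1 and W_2 in the text.
W1 = rng.normal(scale=0.5, size=(4, 16))
W2 = rng.normal(scale=0.5, size=(16, 1))

learning_rate = 0.01
losses = []
for step in range(300):
    # Forward pass: linear layer, ReLU activation, linear layer.
    h_pre = x @ W1
    h = np.maximum(h_pre, 0.0)
    pred = h @ W2

    # Loss: mean squared error between network output and ground truth.
    err = pred - y
    losses.append(float(np.mean(err ** 2)))

    # Error back-propagation: chain rule from the loss back to each weight.
    grad_pred = 2.0 * err / len(x)
    grad_W2 = h.T @ grad_pred
    grad_h = grad_pred @ W2.T
    grad_W1 = x.T @ (grad_h * (h_pre > 0))

    # Gradient descent: move each weight against its gradient.
    W1 -= learning_rate * grad_W1
    W2 -= learning_rate * grad_W2
```

After training, inference is a single forward pass (`np.maximum(x_new @ W1, 0.0) @ W2`), which is the property that makes trained networks fast compared with iterative reconstruction.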

a A schematic diagram of supervised learning approach in holographic reconstruction. During training, DNN learns the mapping function between the input measured hologram intensity and the output phase image based on loss function and error back-propagation. b Holographic reconstruction results using a supervised learning approach. DNN successfully recovers the original phase image (V) from the measured hologram intensity (III). DNN was trained on Faces-LFW datasets and ImageNet datasets, respectively. Adapted from ref [20], Open Access Publishing Agreement

3.1 Supervised learning in holographic reconstruction

As discussed in Sect. 2.1, the twin-image artifact due to missing phase information is one of the most critical challenges in lensless imaging. Recently, it has been shown that DL can reconstruct artifact-free phase information from a single hologram [20, 21]. Based on the supervised approach, Sinha et al. [20] demonstrated that a CNN can recover phase images generated by a spatial light modulator (SLM) from their hologram intensities (Fig. 3b). Through the training process, DNN learns the mapping function from a hologram intensity to its corresponding phase image. Once trained, the network can transform a hologram intensity into the desired phase image with high accuracy (Fig. 3b).

Interestingly, it has been shown that, if the input hologram is preprocessed with a simple operation, DNN recovers the complex amplitude information even more effectively [21]. Instead of directly using the hologram intensity as the network input, the hologram intensity was digitally propagated back to the sample plane. This preprocessing step, which is identical to the first half of the first iteration cycle of the GS algorithm, reduces the burden on the DNN, as the complex amplitude in the sample plane (i.e., the preprocessed image with twin-image artifacts) is statistically closer to the final solution (i.e., ground truth) than the raw hologram intensity. In ref [21], DNN was shown to achieve robust holographic reconstruction of complex biological samples, which had been considered a challenging task even for DL approaches due to their complicated geometric features. Furthermore, DNN with preprocessing was shown to be very effective in recovering the phase information under low-photon conditions [29].
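The preprocessing step described above can be sketched as a single back-propagation followed by packing the complex result into real-valued channels. The helper names `propagate` and `preprocess_hologram` are hypothetical, and the two-channel (real, imaginary) layout is an assumption about how such a field might be fed to a network, not a detail confirmed by the text.

```python
import numpy as np

def propagate(field, wavelength, pixel_size, distance):
    """Angular spectrum propagation (hypothetical helper, consistent units)."""
    ny, nx = field.shape
    FX, FY = np.meshgrid(np.fft.fftfreq(nx, d=pixel_size),
                         np.fft.fftfreq(ny, d=pixel_size))
    fz_sq = (1.0 / wavelength) ** 2 - FX ** 2 - FY ** 2
    ok = fz_sq > 0  # propagating (non-evanescent) components
    kz = 2 * np.pi * np.sqrt(np.where(ok, fz_sq, 0.0))
    transfer = np.where(ok, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def preprocess_hologram(hologram, wavelength, pixel_size, z2):
    """Back-propagate a hologram and return a (2, H, W) real-valued array."""
    # Zero phase is assumed at the sensor, as in the first GS half-iteration.
    sensor_field = np.sqrt(hologram).astype(complex)
    sample_field = propagate(sensor_field, wavelength, pixel_size, -z2)
    # Channel 0: real part, channel 1: imaginary part (still twin-image corrupted).
    return np.stack([sample_field.real, sample_field.imag], axis=0)
```

The network then only has to learn to remove the twin-image and related artifacts from this estimate, rather than learning free-space propagation itself.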

3.2 Autofocusing and depth-of-field extension

In addition to the basic capability of holographic reconstruction, a recent work [30] further demonstrates that CNN can be used to perform autofocusing, which is the process where the sample-to-sensor distance is retrieved from the hologram or defocused image. In the traditional phase retrieval algorithms, the precise sample-to-sensor distance is the key prior information in that the optical fields need to be digitally propagated back and forth to the correct measurement and sample planes. In the DL-based autofocusing method, the network was trained using the pairs of the measured hologram and the corresponding propagation distance, which was directly extracted from linear actuators used in experiments. This approach demonstrated significantly reduced error and computation time compared to traditional autofocusing algorithms.

DL has also been used to perform autofocusing and phase recovery simultaneously [31], resulting in a significant extension of the depth-of-field (DOF). In this DL approach, called holographic imaging using deep learning for extended focus (HIDEF), DNN was trained using pairs of randomly propagated (i.e., defocused) holograms and the corresponding in-focus images. After training, DNN could recover the in-focus image of a three-dimensional sample from a single hologram in real time (Fig. 4a). In particular, HIDEF can be useful for lensless imaging of axially distributed samples (e.g., imaging of red blood cells dispersed in a volumetric blood sample), because refocusing and recovery of the missing phase can be performed simultaneously over the entire sample volume [32].

Some unique capabilities of the DL approaches. a DL approach significantly extends the DOF and increases the reconstruction speed of a 3D volumetric sample. After training, DNN reconstructs an in-focus image of a 3D sample over an extended DOF from a single hologram intensity. Modified from ref [31], Open Access Publishing Agreement. b In “bright-field holography” method, DNN transforms holographic images of a volumetric sample into bright-field microscopy images at desired planes. BP: back-propagation. Modified from ref [33], CC-BY-4.0. c In virtual phase staining method, DNN transforms a reconstructed holographic image into an equivalent bright-field microscope image with histological staining. Modified from ref [34], CC-BY-4.0

3.3 Cross-modality image transformation

The DL-based holographic reconstruction scheme has also been shown to be very efficient in performing cross-modality image transformation. In typical lensless imaging, the amplitude and phase information of a sample is retrieved. However, such intrinsic optical information often does not directly render sufficient contrast to identify the specific structures of interest. In this regard, cross-modality image transformation can be used to convert an image acquired with a lensless imaging scheme into an image of a different modality, such as bright-field or dark-field microscopy, that provides high contrast for specific structural features. For instance, in the “bright-field holography” framework [33], DNN has been used to learn the mapping function from the digitally back-propagated field of a single hologram to the corresponding bright-field microscopy image (Fig. 4b). Such a transformation is especially useful for a 3D volumetric sample where defocused objects create strong artifacts of concentric ring patterns. In addition, DNN also eliminates the speckle and background interference artifacts that are often caused by an illumination source with a long coherence length. In other words, the “bright-field holography” approach simultaneously achieves the 3D imaging capability of lensless imaging and the high image contrast of bright-field microscopy.

One of the significant benefits of lensless imaging is that it is label-free: important structures within an unlabeled biological specimen can be visualized without any sample preparation, based on the optical phase delay from the specimen. However, many traditional diagnostic methods and sample analysis procedures have been developed based on bright-field microscopic imaging with sample labeling/staining. In this regard, a virtual histology staining framework using DL has been suggested to close the gap between the traditional labeling-based imaging modalities and holographic lensless imaging [34]. In this framework, DNN is trained using pairs of reconstructed holographic images and corresponding bright-field microscopy images, which are acquired after staining. As a result, DNN learns to convert a label-free image to a bright-field microscope image with staining (Fig. 4c). In particular, considering that lensless imaging provides a larger FOV than a conventional bench-top microscope, this virtual histology staining framework can significantly reduce the cost and time of histological staining and associated applications, including diagnostic tissue pathology.

4 Unsupervised learning approach in lensless imaging

The supervised learning approaches have demonstrated superior image quality and short computation time in holographic reconstruction for lensless imaging. However, the success of the supervised approach relies heavily on datasets of paired images in two different domains (the ground truth domain and the hologram domain), as it depends solely on the statistical mapping between the two domains. This reliance on large datasets significantly limits the practicality of the supervised approach for lensless imaging, because acquiring large paired datasets is often impractical in an actual lensless imaging situation. Typically, the acquisition of holograms and ground truth images requires two independent experimental setups, so that it is practically impossible to acquire paired datasets for dynamic objects such as fluctuating cell membranes. Unsupervised learning approaches have been explored in the field of lensless imaging to address this critical challenge of the supervised approach.

4.1 Cycle-consistent GAN approach

Cycle-consistent GAN (CycleGAN) is the representative unsupervised framework that performs image-to-image transformation in the absence of paired training datasets [35]. Recently, the CycleGAN approach has been applied to holographic reconstruction [36]. In this approach, CycleGAN is trained using unpaired datasets composed of phase images displayed on an SLM and hologram intensities. There are two neural networks for image transformations: the inverse mapping from hologram intensity to phase image and the forward mapping from phase image to hologram intensity. Generally, unsupervised learning approaches lead to inferior image reconstruction accuracy compared to the supervised approaches. In a recent work [37], the near-field Fresnel propagator [38] was combined with the CycleGAN framework to reduce the computational burden on the neural networks. Here, the forward mapping from phase image to hologram intensity image was analytically described by the near-field Fresnel propagator H (Fig. 5a). In this manner, the neural network GD (Fig. 5a) in the forward mapping process does not need to learn the well-known physical process H (Fig. 5a); instead, it only needs to learn experimental artifacts in the measurements. This unsupervised framework, termed phaseGAN, has outperformed the CycleGAN framework and has yielded results comparable to the supervised approach in holographic reconstruction even in the absence of paired datasets. The phaseGAN has demonstrated the capability of phase reconstruction in time-resolved X-ray imaging experiments where paired ground truth images cannot be acquired due to the dynamic nature of the experimental scheme.
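The role of the propagator in the cycle can be summarized with a cycle-consistency term. The expression below is a sketch assembled from the description above, not the loss reported in ref [37]: \(G_{O}\) denotes the network mapping a hologram intensity \(I\) to a phase image, \(G_{D}\) the network refining the hologram generated by H from a phase image \(\varphi\), and the L1 norm is assumed here by analogy with the original CycleGAN formulation [35]. The adversarial terms and any weighting factors are omitted.

\[
{\mathcal{L}}_{{{\text{cyc}}}} = \left\| {G_{D} \left( {H\left( {G_{O} \left( I \right)} \right)} \right) - I} \right\|_{1} + \left\| {G_{O} \left( {G_{D} \left( {H\left( \varphi \right)} \right)} \right) - \varphi } \right\|_{1}
\]

Both terms vanish only if a hologram mapped to a phase image and back (or a phase image mapped to a hologram and back) returns to its starting point, which is what allows training without paired examples.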

Unsupervised learning approaches in holographic reconstruction. a Learning process diagram of phaseGAN. Propagator H was integrated into the original CycleGAN DNN structure. GO: DNN, which transforms the measured hologram intensity into a phase image, H: near-field Fresnel propagator, GD: DNN, which refines the generated hologram intensity, DO: DNN that classifies an input phase image as “real” or “fake”, and DD: DNN, that classifies an input hologram intensity as “real” or “fake”. The image-transformation networks GO and GD were trained in a way that they reduce the error between the measured hologram/phase image and the generated hologram/phase image through GO and GD based on the fact that they should be identical if the correct phase image/hologram is generated. DO and DD also improve the performance of the entire network as it helps improving the statistical resemblances between the measured images and the generated images in two separate domains. Adapted from ref [36], Open Access Publishing Agreement. b A schematic diagram of PhysenNet. DNN generates a phase image from a measured hologram. DNN was repeatedly optimized to reduce the error between the measured hologram intensity and the generated hologram intensity, which is created by numerically propagating the output from the neural network. The generated hologram intensity and phase image converge to a solution via the iterative process of adjusting the network weights. Adapted from ref [39], CC-BY-4.0

4.2 Deep image prior approach

Recent works further demonstrated the ability of a DNN to recover phase information even without any ground truth image [39]. This framework is inspired by deep image prior (DIP) [40], in which the neural network architecture itself is used as a handcrafted prior for solving various inverse problems. This approach is significantly different from the conventional compressive sensing algorithms that use sparsity priors in a known basis, such as a wavelet basis. In the DIP-based framework, an untrained neural network, called a physics-enhanced deep neural network (PhysenNet), was combined with a well-known physical model, the near-field Fresnel propagator. The PhysenNet network parameters are iteratively optimized through the interplay between the physical model and the neural network. As shown in Fig. 5b, the network’s output is numerically propagated to the sensor plane to simulate the diffraction pattern. Then, the error between the simulated and measured diffraction patterns is used to adjust the network parameters (Fig. 5b). After many iterative cycles, PhysenNet can generate a phase image whose simulated diffraction pattern satisfies the physical constraint given by the measured hologram. Compared with the previous supervised approaches, deep image prior approaches require a much longer time to reconstruct one image because the network must be optimized iteratively for each measurement. It should be noted that such DIP-based frameworks can be applied to various imaging modalities where the image formation model can be precisely described.
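The optimization described above can be written compactly as a search over network parameters. In the expression below, \(f_{\theta}\) denotes the untrained network with parameters \(\theta\), \(I\) the measured hologram intensity, and \(H\) the forward model (numerical propagation to the sensor plane followed by intensity detection). The notation and the squared L2 error are assumptions made here for illustration; the error metric used in ref [39] may differ.

\[
\theta^{*} = \mathop {\arg \min }\limits_{\theta } \left\| {H\left( {f_{\theta } \left( I \right)} \right) - I} \right\|_{2}^{2} ,\qquad \hat{\varphi } = f_{{\theta^{*} }} \left( I \right)
\]

No ground truth appears in this objective: the only data term is the measured hologram itself, and the network architecture acts as the prior that selects among the many phase images consistent with it.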

5 Conclusion

Lensless imaging has enabled high-resolution and large-FOV imaging of specimens in a much more cost-effective and portable manner than conventional microscopes. These advantages make lensless imaging particularly well suited for point-of-care diagnostic applications. However, one of the critical bottlenecks for its practical on-site use has been the limited capability of holographic reconstruction. The traditional reconstruction algorithms are time-consuming and need multiple measurements to deal reliably with complex structures. In contrast to these conventional algorithms, DL approaches have recently been shown to perform holographic reconstruction robustly from a single measured hologram. In addition, once the networks are trained, images can be reconstructed in nearly real time. In this review, we highlighted various DL approaches and their unique capabilities that cannot be demonstrated using the traditional algorithms. First, the supervised learning approaches have presented superior image reconstruction results and additional practical capabilities such as autofocusing, virtual staining, and bright-field holography. Nonetheless, the supervised approaches require large paired datasets, which are not readily available in many practical situations. In this regard, many recent efforts have been made to implement unsupervised learning approaches that can learn the transformation without paired datasets.

One of the major drawbacks of conventional DL approaches is that they yield poor reconstruction accuracy when the test data differ significantly from the training data. This situation is often encountered in practice, because training datasets cannot cover all possible variations in the measurements. To address this problem, domain adaptation techniques [41] have been actively studied. These techniques aim to improve the ability of machine learning algorithms trained in one domain, called the ‘source’ domain, to work well in a different domain, called the ‘target’ domain. This implies that a neural network trained in one lensless imaging configuration could be used in another. We expect that the domain adaptation approach will significantly enhance the practicality of lensless imaging.

Although conventional DL approaches, which only look for statistical patterns emerging from the images in different domains, have achieved significant advances in the field of lensless imaging, we expect that the full potential of DL approaches will be realized when physical insights are seamlessly integrated into the neural networks. Already, a few physics-integrated DL approaches have shown more robust reconstruction results in practical conditions where the hologram acquisition is photon-starved or the datasets are imperfect [29, 37]. In our view, current DL approaches and DL-based table-top demonstrations are still in their infancy, exploring various capabilities of DL in lensless imaging without rigorously considering the reliability of the DL approaches. We speculate that developments in DL approaches will progressively focus on achieving reliable neural networks to enable the practical use of DL in the real world, and that the physics-integrated approach will play an important role in this respect.

References

A. Ozcan, Mobile phones democratize and cultivate next-generation imaging, diagnostics and measurement tools. Lab Chip 14, 3187–3194 (2014)

A. Greenbaum et al., Imaging without lenses: achievements and remaining challenges of wide-field on-chip microscopy. Nat Methods 9, 889–895 (2012)

R.W. Gerchberg, A practical algorithm for the determination of phase from image and diffraction plane pictures. Optik (Stuttg) 35, 237–246 (1972)

T. Latychevskaia, H.-W. Fink, Solution to the twin image problem in holography. Phys Rev Lett 98, 233901 (2007)

J.R. Fienup, Phase retrieval algorithms: a comparison. Appl Opt 21, 2758–2769 (1982)

V. Elser, Phase retrieval by iterated projections. JOSA A 20, 40–55 (2003)

Y. Wu, A. Ozcan, Lensless digital holographic microscopy and its applications in biomedicine and environmental monitoring. Methods 136, 4–16 (2018)

W. Bishara et al., Holographic pixel super-resolution in portable lensless on-chip microscopy using a fiber-optic array. Lab Chip 11, 1276–1279 (2011)

T.W. Su, L. Xue, A. Ozcan, High-throughput lensfree 3D tracking of human sperms reveals rare statistics of helical trajectories. Proc Natl Acad Sci USA 109, 16018–16022 (2012)

T.W. Su, I. Choi, J. Feng, K. Huang, A. Ozcan, High-throughput analysis of horse sperms’ 3D swimming patterns using computational on-chip imaging. Anim Reprod Sci 169, 45–55 (2016)

Y.-C. Wu et al., Air quality monitoring using mobile microscopy and machine learning. Light Sci Appl 6, e17046 (2017)

C. Dong, C.C. Loy, K. He, X. Tang, Image super-resolution using deep convolutional networks. IEEE Trans Pattern Anal Mach Intell 38, 295–307 (2015)

C. Dong, C.C. Loy, K. He, X. Tang, Learning a deep convolutional network for image super-resolution. In European conference on computer vision. pp. 184–199. Springer (2014)

H. Wang et al., Deep learning enables cross-modality super-resolution in fluorescence microscopy. Nat Methods 16, 103–110 (2019)

S. Antholzer, M. Haltmeier, J. Schwab, Deep learning for photoacoustic tomography from sparse data. Inverse Probl Sci Eng 27, 987–1005 (2019)

N. Davoudi, X.L. Deán-Ben, D. Razansky, Deep learning optoacoustic tomography with sparse data. Nat Mach Intell 1, 453–460 (2019)

T. Nguyen, Y. Xue, Y. Li, L. Tian, G. Nehmetallah, Deep learning approach for Fourier ptychography microscopy. Opt Express 26, 26470–26484 (2018)

S. Li, M. Deng, J. Lee, A. Sinha, G. Barbastathis, Imaging through glass diffusers using densely connected convolutional networks. Optica 5, 803–813 (2018)

Y. Li, Y. Xue, L. Tian, Deep speckle correlation: a deep learning approach toward scalable imaging through scattering media. Optica 5, 1181–1190 (2018)

A. Sinha, J. Lee, S. Li, G. Barbastathis, Lensless computational imaging through deep learning. Optica 4, 1117–1125 (2017)

Y. Rivenson, Y. Zhang, H. Günaydın, D. Teng, A. Ozcan, Phase recovery and holographic image reconstruction using deep learning in neural networks. Light Sci Appl 7, 17141 (2018)

W. Bishara, T.-W. Su, A.F. Coskun, A. Ozcan, Lensfree on-chip microscopy over a wide field-of-view using pixel super-resolution. Opt Express 18, 11181 (2010)

J.W. Goodman, Introduction to Fourier optics (Roberts and Co. Publisher, Englewood, 2005)

G. Koren, F. Polack, D. Joyeux, Iterative algorithms for twin-image elimination in in-line holography using finite-support constraints. JOSA A 10, 423–433 (1993)

A. Greenbaum, A. Ozcan, Maskless imaging of dense samples using pixel super-resolution based multi-height lensfree on-chip microscopy. Opt Express 20, 3129–3143 (2012)

A. Greenbaum, U. Sikora, A. Ozcan, Field-portable wide-field microscopy of dense samples using multi-height pixel super-resolution based lensfree imaging. Lab Chip 12, 1242–1245 (2012)

O. Mudanyali et al., Compact, light-weight and cost-effective microscope based on lensless incoherent holography for telemedicine applications. Lab Chip 10, 1417–1428 (2010)

Y. LeCun, Y. Bengio, G. Hinton, Deep learning. Nature 521, 436–444 (2015)

A. Goy, K. Arthur, S. Li, G. Barbastathis, Low photon count phase retrieval using deep learning. Phys Rev Lett 121, 243902 (2018)

Z. Ren, Z. Xu, E.Y. Lam, Learning-based nonparametric autofocusing for digital holography. Optica 5, 337–344 (2018)

Y. Wu et al., Extended depth-of-field in holographic imaging using deep-learning-based autofocusing and phase recovery. Optica 5, 704–710 (2018)

Y. Wu et al., Label-free bioaerosol sensing using mobile microscopy and deep learning. ACS Photonics 5, 4617–4627 (2018)

Y. Wu et al., Bright-field holography: cross-modality deep learning enables snapshot 3D imaging with bright-field contrast using a single hologram. Light Sci Appl 8, 1–7 (2019)

Y. Rivenson et al., PhaseStain: the digital staining of label-free quantitative phase microscopy images using deep learning. Light Sci Appl 8, 1–11 (2019)

J.-Y. Zhu, T. Park, P. Isola, A.A. Efros, Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision. pp. 2223–2232 (2017)

D. Yin et al., Digital holographic reconstruction based on deep learning framework with unpaired data. IEEE Photonics J 12, 1–12 (2019)

Y. Zhang et al., PhaseGAN: a deep-learning phase-retrieval approach for unpaired datasets. Opt Express 29, 19593–19604 (2021)

M. Born, E. Wolf, Principles of optics: electromagnetic theory of propagation, interference and diffraction of light (Elsevier, 2013)

F. Wang et al., Phase imaging with an untrained neural network. Light Sci Appl 9, 1–7 (2020)

D. Ulyanov, A. Vedaldi, V. Lempitsky, Deep image prior. In Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 9446–9454 (2018)

S. Ben-David et al., A theory of learning from different domains. Mach Learn 79, 151–175 (2010)

Acknowledgements

This research was supported by the Technology Innovation Program (20012464) funded by the Ministry of Trade, Industry & Energy (MOTIE, Korea), by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. NRF-2021R1A5A1032937, NRF-2021R1C1C1011307), by the Korea Agency for Infrastructure Technology Advancement (KAIA) grant funded by the Ministry of Land, Infrastructure and Transport (Grant 21NPSS-C163379-01), by the 2021 End-Run Project funded by the Korea Advanced Institute of Science and Technology, and by the Chungnam National University Hospital Research Fund, 2021.

Cite this article

Kim, H., Song, G., You, Ji. et al. Deep learning for lensless imaging. J. Korean Phys. Soc. 81, 570–579 (2022). https://doi.org/10.1007/s40042-022-00412-2
