Abstract

The ever-increasing attention of process mining (PM) research to the logs of low structured processes and of non-process-aware systems (e.g., ERP, IoT systems) poses a number of challenges. Indeed, in such cases, the risk of obtaining low-quality results is rather high, and great effort is needed to carry out a PM project, most of which is usually spent in trying different ways to select and prepare the input data for PM tasks. Two general AI-based strategies are discussed in this paper, which can improve and ease the execution of PM tasks in such settings: (a) using explicit domain knowledge and (b) exploiting auxiliary AI tasks. After introducing some specific data quality issues that complicate the application of PM techniques in the above-mentioned settings, the paper illustrates these two strategies and the results of a systematic review of relevant literature on the topic. Finally, the paper presents a taxonomical scheme of the works reviewed and discusses some major trends, open issues and opportunities in this field of research.

1 Introduction

The young discipline of process mining (PM) has already produced a wide range of data analytics solutions for turning a log (i.e., a collection of process execution events) into novel and interesting process-oriented knowledge and insight and for offering operational support at run-time [89]. These solutions include both offline log data analytics methods (addressing process discovery, conformance checking and enhancement tasks) and operational-support methods (allowing for performing detection, prediction and recommendation tasks on ongoing process instances, at run-time).

Many success stories have shown these techniques to be quite effective and efficient in improving a business process, when (i) the available log data provide good-quality information and (ii) the process behavior is regular enough. Under these conditions, the full range of PM techniques can be exploited, possibly combined in a pipeline-like fashion (according to the “Extended \(L^*\) lifecycle” model [89]): from the induction and validation of a high-quality control-flow model to the enrichment of this model with stochastic modeling capabilities [76, 78], up to the exploitation of these predictive capabilities for run-time support.

By contrast, many existing PM techniques have problems in dealing with less structured processes and with fine-grained (and possibly imprecise/incomplete) logs, like those that typically arise in important non-process-aware application contexts (e.g., related to legacy transactional systems, as well as to ERP, CRM, service/message/event-based systems and, more recently, IoT systems [47]). These contexts have been attracting great attention from the PM community, an interest justified by the wealth of log data that characterizes them and by the need/opportunity of turning these data into valuable process-level knowledge.

In fact, most of the efforts spent in the development of PM projects are usually devoted to iterated data extraction/preparation steps, typically performed across many consecutive “prepare-mine-evaluate” sessions, and refined on the basis of the quality (in terms of interpretability, insight and actionability) of the results obtained at the end of each session. When dealing with heterogenous, incomplete and/or fine-grained logs, the number of PM iterations and the burden of both data extraction and data preparation steps tend to explode, since choosing the granularity and scope of the log data to be passed to PM tools becomes a critical task, which needs high levels of expertise in terms of both domain knowledge and data/process analysis skills. On the other hand, the effectiveness of each PM session is also determined by the ability of the analyst to tune effectively the parameters of the PM algorithms employed and to post-process their results.

In general, the exploitation of AI methods as a support to the internal decision making of PM algorithms can improve the effectiveness, robustness, usability and efficiency of PM projects in such challenging settings.

Two main families of AI-based strategies have been exploited to this end in the literature: (A) using domain knowledge to drive PM tasks and (B) addressing auxiliary AI tasks jointly with the target PM task. Both strategies can be regarded as a form of (AI-) augmented analytics [72] and have been shown to help reduce the efforts spent in each PM session (in terms of time and skills required of the analysts in the preparation of log data, the configuration and application of PM tools, and the evaluation of PM results), as well as the total number of PM sessions needed to eventually obtain satisfactory achievements.

Goal, scope, contribution and novelty The main contribution of this work lies in offering a critical study of the combination of PM and AI techniques according to the emerging vision of augmented analytics, by pursuing two main objectives: (i) analyzing what has been done so far in the literature in this direction and (ii) reflecting on emerging trends and potentialities that have not yet been explored in full and on the issues that are still open.

Both kinds of analyses are specifically focused on the empowering of classical PM tasks (i.e., discovery, conformance checking, enhancement, detection, prediction, recommendation) through the adoption of complementary knowledge representation, learning and inference capabilities, as a way to better deal with the above-mentioned challenges (i.e., the low-level, incomplete and/or heterogenous nature of the given log data).

The concrete result of our retrospective study is a systematic (and replicable) literature review and a taxonomical classification of the relevant works reviewed. In performing this study, we excluded the works in the literature that only proposed solutions to log data pre-processing problems (such as event data extraction, integration, correlation, selection, transformation, augmentation, enrichment), which aim at supporting the analyst in preparing a collection of log data for the application of standard PM algorithms, but with no kind of direct interaction/integration with the latter. In fact, a recent survey of approaches (including some that leverage AI methods) to the extraction and preparation of log data is already available in the literature [26].

Despite the theoretical and practical importance of the topic considered in this manuscript, to the best of our knowledge, there is no systematic study in the literature that covers it. The existing literature review that looks the closest to our work is indeed the above-mentioned survey of log data extraction and preparation methods [26], which does not cover at all the efforts made in the literature for injecting, in a stronger and more direct way, complementary AI capabilities into core PM tasks.

Organization The remainder of this manuscript is structured as follows:

- Preliminaries on PM techniques and PM projects are provided in Sect. 2.

- Section 3 illustrates three major categories of log quality issues (namely, heterogeneity, incompleteness and hidden activities) that often complicate the application of PM solutions to real contexts and tend to make PM projects more demanding in terms of required time and human expertise.

- The two general AI-based strategies A and B mentioned above (relying on explicit domain knowledge and on performing synergistically multiple learning/reasoning tasks, respectively) are illustrated in Sect. 4, framed in the wider perspective of augmented analytics.

- Section 5 illustrates in detail both the research questions underlying our literature review and the search protocol employed in it.

- A critical structured discussion of the results of our literature review is given in Sects. 6 and 7, with respect to the adoption of strategies A and B, respectively.

- Section 8 discusses in detail two interesting emerging trends (concerning the usage of ensemble learning and deep learning methods in PM tasks), which are both connected to Strategy B and are expected to find profitable applications in the future within the research field explored here.

- Section 9 summarizes the different classes of works reviewed with the help of an ad hoc taxonomical scheme, while providing the reader with a discussion of related work, and open opportunities and challenges.

2 Background

2.1 PM in Brief: General Aim and some Historical Notes

Following a widely accepted definition, process mining (PM) is a discipline at the intersection between data mining and machine learning, on one side, and business process modeling (BPM), on the other side [89]. The general goal of PM can be roughly stated as that of devising data analytics solutions/methods for extracting knowledge/insights from process logs, to eventually support the comprehension, modeling, monitoring, evaluation and improvement of business processes. In a sense, PM techniques exploit the evidence on the real behavior of a business process stored in log data to allow for better addressing the ultimate goal, shared with BPM approaches, of making the handling of a business process and of organizational resources more efficient, effective and aligned to business objectives and requirements (e.g., by inspiring actions for redesigning or reconfiguring the process or work-allocation policies).

In the last decade, PM has been a prominent and prolific subfield of research in the BPM area and has attracted much attention from the industry. This is witnessed by the high number of papers concerning PM-related topics that have been published in top-class journals and conferences, which has continued growing from year to year [19], as well as by the wide variety of PM techniques developed in academia (often as components of open-source frameworks like the popular ProM [94], currently featuring more than 600 PM plug-ins) and by many commercial tools [18] (e.g., Aris PPM, Celonis Discovery, Disco, Minit, Myivenio, Perceptive Process Mining, Process Gold, QPR ProcessAnalyzer, Signavio Process Intelligence and UpFlux, to name a few). Further evidence of the momentum gained by PM is given by: (i) the popularity of the PM Manifesto [88] promoted in 2011 by the IEEE Task Force on Process Mining, (ii) the establishment in 2019 of a specific conference on the topic (namely, the International Conference on Process Mining), and (iii) the many success stories in public and private organizations of diverse sectors [18]. It is worth noting that the attention of PM researchers and practitioners has recently started spreading beyond the traditional application fields of BPM to cover diverse relevant sectors, including, e.g., software engineering, healthcare, e-learning, IoT and cybersecurity.

2.2 Event Logs and Main Types of PM Techniques

Event logs Most PM techniques were (and still tend to be) conceived to work on a well-structured (“process-aware”) event log, which conceptually consists of multiple (process execution) traces. Each trace is a list of (temporally ordered) events that represent the history of a single process instance (a.k.a. case) mainly in terms of the activities that were performed during the unfolding of the process instance. Each event may also be associated with additional pieces of information, such as a temporal mark (i.e., a timestamp), properties of the resource involved in the execution of the activity, as well as other payload data (e.g., parameters/results of the performed activity, performance/cost measures, etc.).
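As a minimal sketch of the log structure just described, an event log can be represented in Python as follows. The class and field names are illustrative assumptions and are not taken from any PM tool or log standard.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any, Dict, List, Optional


@dataclass
class Event:
    """One logged step of a process instance (all field names are illustrative)."""
    activity: str                       # label of the executed activity
    timestamp: datetime                 # temporal mark of the event
    resource: Optional[str] = None      # who/what executed the activity
    payload: Dict[str, Any] = field(default_factory=dict)  # other data (costs, parameters, ...)


@dataclass
class Trace:
    """History of a single process instance (case)."""
    case_id: str
    events: List[Event] = field(default_factory=list)

    def activities(self) -> List[str]:
        # A trace is temporally ordered, so sort by timestamp before projecting.
        return [e.activity for e in sorted(self.events, key=lambda e: e.timestamp)]


# A log is simply a collection of traces.
EventLog = List[Trace]

# Hypothetical toy trace; the events are deliberately given out of order.
trace = Trace("case-1", [
    Event("I", datetime(2024, 1, 1, 9, 30), resource="clerk"),
    Event("R", datetime(2024, 1, 1, 9, 0)),
    Event("N", datetime(2024, 1, 1, 10, 0), payload={"outcome": "accepted"}),
])
log: EventLog = [trace]
```

Projecting a trace onto its activity labels, as `activities()` does, yields the simplified sequence view on which many control-flow oriented techniques operate.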

Four fundamental properties are usually required of a process log, in order to allow for meaningful and effective applications of traditional PM techniques [59]:

- (R1) each trace is explicitly associated with an identifier (process ID) of the process that produced it or, alternatively, the log only stores traces of one process;

- (R2) each event explicitly refers to an instance of the process (case ID);

- (R3) each event refers, or can be easily mapped, to an activity (activity label);

- (R4) the log as a whole provides a sufficiently correct, complete and precise picture of the possible behaviors of the process that is being analyzed.

As noticed in [26] (and discussed in more detail later on), obtaining an event log that meets such requirements is quite a complicated task in many real-world application scenarios and a major obstacle to the adoption of PM technology.

Main types of PM tasks (a.k.a. “use cases”) A standard categorization of PM tasks and techniques differentiates: (i) offline tasks, to be performed on the “postmortem” traces of fully executed process instances, and (ii) online tasks, to be performed on the “pre-mortem,” partial, traces of ongoing process instances [87].

Three foundational offline PM tasks considered in the literature are process discovery, conformance checking and enhancement [89]. Process discovery concerns the induction of a process model from a given log L. Conformance checking essentially relies on aligning the traces in a given log L with a model, in order to assess and measure the level of agreement between them and detect points of divergence. Enhancement techniques use the data stored in a log L to improve the quality/informativeness of an existing process model M, by suitably repairing or enriching M (e.g., with the addition of decision rules or time/performance annotations).
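To make the discovery and conformance checking tasks concrete, the following sketch induces a directly-follows graph (one of the simplest process model representations) from a log L and then measures, for a given trace, the fraction of its steps that the graph allows. Both the model type and the naive fitness measure are simplifying assumptions chosen for illustration; actual PM techniques typically rely on richer model languages and on alignment-based measures.

```python
from typing import Iterable, List, Set, Tuple

START, END = "<start>", "<end>"   # artificial boundary markers (an assumption of this sketch)
Edge = Tuple[str, str]


def steps(trace: List[str]) -> List[Edge]:
    """Pairs of consecutive activities, including the artificial start/end."""
    padded = [START] + list(trace) + [END]
    return list(zip(padded, padded[1:]))


def discover_dfg(log: Iterable[List[str]]) -> Set[Edge]:
    """Discovery: collect every directly-follows pair observed in the log."""
    model: Set[Edge] = set()
    for trace in log:
        model.update(steps(trace))
    return model


def naive_fitness(trace: List[str], model: Set[Edge]) -> float:
    """Conformance: share of the trace's steps allowed by the model (1.0 = fully conforming)."""
    trace_steps = steps(trace)
    allowed = sum(1 for s in trace_steps if s in model)
    return allowed / len(trace_steps)


def deviations(trace: List[str], model: Set[Edge]) -> List[Edge]:
    """Points of divergence: steps of the trace that the model does not allow."""
    return [s for s in steps(trace) if s not in model]


# Hypothetical log with two traces over activities a, b, c.
L = [["a", "b", "c"], ["a", "c"]]
M = discover_dfg(L)
```

For instance, the trace `["a", "b", "b", "c"]` deviates from `M` only in the repeated `b`, which `deviations` reports as the single disallowed step `("b", "b")`.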

To provide operational support to the execution of an ongoing process instance c (based on its associated pre-mortem trace), three main online PM tasks have been considered in the literature: (i) the detection of deviances between c and a given reference model M; (ii) the prediction of some properties of c (e.g., the remaining execution time of c, the outcome of c, the next activity/activities performed for c), based on predictive models previously extracted from historical log data; (iii) the recommendation of actions/choices concerning forthcoming steps of c, by possibly leveraging a predictive model. Aggregate-level prediction tasks have also been considered recently in the PM community, concerning the forecasting of measures/properties for groups of process instances, or for the process as a whole and/or its surrounding environment [14, 71].
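The prediction task can be illustrated with a deliberately simple next-activity predictor, learned from the post-mortem traces of a historical log and applied to the pre-mortem trace of an ongoing instance. This frequency-based model is an assumption made for the sake of the example and does not correspond to any specific method from the literature.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def fit_next_activity_model(history: List[List[str]]) -> Dict[str, Counter]:
    """Count, for each activity, which activities directly followed it in past traces."""
    successors: Dict[str, Counter] = defaultdict(Counter)
    for trace in history:
        for current, nxt in zip(trace, trace[1:]):
            successors[current][nxt] += 1
    return successors


def predict_next(prefix: List[str], model: Dict[str, Counter]) -> Optional[str]:
    """Predict the next activity of an ongoing instance from its last observed activity."""
    if not prefix or prefix[-1] not in model:
        return None  # nothing to base a prediction on
    return model[prefix[-1]].most_common(1)[0][0]


# Hypothetical historical log (fully executed instances).
history = [["a", "b", "c"], ["a", "b", "d"], ["a", "b", "c"]]
model = fit_next_activity_model(history)
```

A recommendation task could reuse the same counts, e.g., by ranking the candidate next activities instead of returning only the most frequent one.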

2.3 PM Projects: Life Cycle and Inherent Complexity

Before discussing the structure and main characteristics of PM projects, and the life cycle models proposed for them, let us introduce some basic notions pertaining to the related class of Data Mining (DM) projects.

CRISP-DM model for DM projects Many conceptual models have been proposed in the literature and used in practice to describe and handle the life cycle of a DM project. For the sake of concreteness, let us examine the one used in the CRISP-DM (CRoss-Industry Standard Process for Data Mining) methodology [10]. As a matter of fact, the structuring of the DM project life cycle into high-level phases that is adopted in CRISP-DM is quite similar to those of other DM methodologies [60], if abstracting from naming differences and slight mismatches in the level of granularity of some phases.

In general, after a specification of the project goals and of related business questions/constraints (Business Understanding), and a preliminary exploration of the available data sources (Data Understanding), the actual analysis of relevant data instances starts with a Data Preparation phase. The latter typically consists of actions (e.g., collect, explore, clean, select and transform) that are meant to improve the quality of the selected raw data, in terms of relevance, completeness, precision and reliability, as well as to put these data into a form that better suits DM analyses. In the subsequent Mining phase, the analyst is in charge of selecting, configuring/tuning and running specific DM models and algorithms. The quality of the results obtained in the previous phase is studied in the Evaluation phase, which usually amounts to interpreting the models/patterns discovered, computing quality metrics, analyzing the errors, and estimating the risks of applying a model/pattern.

As long as the quality of the discovered knowledge is not fully satisfying, a new iteration of the entire life cycle is performed. Usually, many interactive “try-and-evaluate” iterations are required, where the analyst often moves back and forth multiple times between phases in a non-sequential manner (e.g., the lessons learnt during a mining session may inspire new ways to prepare the data or even novel, more focused, business questions).

In cases where the discovered (validated) DM model features predictive/inference capabilities (as it happens, e.g., with data classification/forecasting/tagging models), a further Deployment phase can take place, where the model is suitably implemented and integrated into some operational system, in order to empower the latter with such “intelligent” data processing capabilities.

The \(PM^2\) model for PM projects In principle, the above high-level conceptualization of DM projects could be adapted to PM settings, taking into account the peculiarities of the data, algorithms (addressing process-aware tasks like conformance checking, discovery, enhancement, detection, prediction, etc.) and evaluation metrics (e.g., concerning fitness, precision and generalization criteria for the case of control-flow models) that characterize these settings.

In fact, such a customization of the DM project life cycle to the case of PM projects was proposed in [95] as part of a methodology, named \(PM^2\), which specifically consists of the following project phases: Planning, Extraction, Data Processing, Mining & Analysis, Evaluation, Process Improvement & Support. Some of these phases have a single counterpart in the CRISP-DM model. By contrast, the Process Improvement & Support phase (which may possibly involve model deployment actions) emphasizes the strong link that the results of PM analysis are required to have with the ultimate goal of improving and supporting the executions of business processes. Moreover, the Data Preparation phase of CRISP-DM is split into two phases in the \(PM^2\) model: Extraction and Data Processing. Indeed, a preliminary process log is assumed to be derived from the data sources at hand in the Extraction phase, which mainly amounts to locating, extracting and consolidating relevant event-related data (possibly stored in multiple information systems [26]), and putting them into the form of traces satisfying the requirements mentioned in Sect. 2.2. This entails suitably choosing the scope of representation, angle and granularity [89] in the derivation of such traces, and may require accomplishing tricky event correlation and event abstraction tasks, to map each extracted event record to a process instance and a process activity, respectively, whenever these entities are not referred to in the record itself. Prior to the application of PM techniques, these data may undergo an ad hoc Data Processing phase, which consists of data transformation operations like trace/log filtering, abstraction or enrichment. In a sense, each instantiation of the Data Processing phase is meant to yield a collection of traces that represents a particular “process-oriented view” of the original event data, which is expected by the analyst to allow PM algorithms to discover interesting knowledge/models.

The \(L^*\) life-cycle model for PM projects A specialized, five-stage, pipeline-like model for PM projects, named \(L^*\) life-cycle model, was defined in [89], which mainly hinges on the discovery, extension and use of control-flow oriented process models.

Specifically, the first two stages, named Plan & justify and Extract, basically correspond to the first two phases of the \(PM^2\) model, respectively. The third stage, named Create control-flow model and connect event log, is devoted to obtaining a control-flow model that explains the input log accurately, by using process discovery and conformance checking techniques. This control-flow model is then enriched (through extension methods) with additional perspectives and/or predictive capabilities [76, 78] in the fourth stage, named Create integrated process model. The final Operational Support stage consists in performing operational-support tasks on pre-mortem traces, based on a prediction-augmented process model.

The \(L^*\) model hence provides more structured guidelines for the design and implementation of a PM project. However, it can be applied in full only when the processes under analysis are lasagna processes, i.e., structured, regular, controllable and repetitive business processes. A popular rule-of-thumb for characterizing a lasagna process is that “with limited efforts it is possible to create an agreed-upon process model that has a fitness of at least 0.8” [89]. In application settings where the processes exhibit more flexible/chaotic behaviors, it is difficult even to build a control-flow model that is both sufficiently accurate and readable. This makes the application of classical model enhancement and operational-support methods prohibitive. Things become even more complicated when the traces extracted from log data are affected by noise, inconsistencies and other data quality issues, or when they are too heterogenous/fine-grained.

In general, preparing log data is a crucial and difficult task in PM projects (indeed, “finding, merging, and cleaning event data” was identified as an open challenge in the PM manifesto [88]), which can impact negatively on the success of PM initiatives, hence limiting the diffusion of PM techniques in real-life contexts. These issues are discussed in more detail in the following section.

3 Challenging Issues in Real-Life PM Projects

The success of a PM project, which typically requires many long applications of the life cycle phases described above, heavily depends on the expertise of PM analysts, in terms of both domain knowledge and process/data analytics skills, and on their ability (or good luck) in deriving, in a few prepare-mine-evaluate sessions, a log that allows for extracting valuable PM results (e.g., a meaningful/ interesting and conforming enough control-flow model). In particular, most of the efforts spent in a PM project are often devoted to data extraction and data preparation steps—this actually reflects a more general trend of data analytics projects, where such phases consume up to 80% and 50% of the total time and cost [99], respectively. Indeed, as the quality and relevance of the analyzed event log strongly impact on the quality (significance, interpretability, insight/actionability) of the results that can be obtained with PM tools, the construction of such a log is usually an iterative and interactive process in itself, which typically needs multiple data extraction, preparation and mining steps. In fact, data extraction/preparation steps are often refined several times, based on the quality of the results obtained by iteratively applying PM algorithms to different views (differing in scope, angle or granularity) of the same set of original log events.

Two main sources of complexity for PM projects can severely threaten the achievement of satisfactory results: (i) high-levels of variability characterizing the behavior of the process under analysis; (ii) data quality issues affecting the log data available for the analysis.

As to the latter point, different kinds of data quality issues may affect the process logs [5, 81, 88], which should be handled carefully in PM projects in order to avoid a “garbage in-garbage out” effect. For example, some major quality dimensions for log data identified in the PM manifesto [88] are (i) trustworthiness (i.e., an event is registered only if it actually happened), (ii) completeness (i.e., no events relevant for a particular scope are lost), (iii) semantics (i.e., any event should be interpretable in terms of process concepts). Based on these dimensions, five levels of maturity for the event logs were defined in [88]. The lowest level of maturity (*) is assigned to logs containing events (e.g., recorded manually) featuring many wrong or incomplete entries, whereas the highest level (*****) is assigned to logs (typically coming from process-aware information systems) that are both complete and accurate. In fact, the quality of most real-life logs lies between these two extremes [5, 82].

The inherent complexity of PM projects is further exacerbated when dealing with the logs of non-process-aware applications (such as CRM and ERP systems, or legacy transactional information systems), which pose problems concerning both the quality of the data and the variability of the processes that generated them. An extreme, but increasingly frequent and important application scenario for PM projects regards the analysis of log data that are not clearly associated with well-defined processes/activities/cases, so that different alternative process-oriented views and models could be extracted from them for the sake of PM-based analyses (consider, e.g., the emerging application of employing PM techniques for analyzing patient histories in healthcare or logs of IoT/event-based systems).

When dealing with event data gathered in the above scenarios, even extracting an informative log (meeting the requirements R1–R4 of Sect. 2.2) entails a delicate process-oriented interpretation task and suitable data selection and transformation steps.

The rest of this section provides more details on three main issues that tend to cause a gap between the goals and expectancies of PM project analysts and stakeholders, thus complicating the project unfolding and threatening its success:

- (I1) the log contains heterogenous traces, as a consequence of the fact that it was generated by multiple processes or by a single but unstructured and flexible process (Sect. 3.1);

- (I2) the log consists of low-level events, which fail to represent process executions at an abstraction level that suits the PM tasks to be performed (Sect. 3.2);

- (I3) the log is incomplete, in that it does not contain sufficient information (as concerns the PM tasks that are to be performed) on the behaviors that the process under analysis has generated and can generate in general (Sect. 3.3).

The last two points above correspond to two well-known types of data quality issues, which affect the semantics and completeness quality dimensions of [88], respectively.

We point out that log data may also suffer from other kinds of issues that have not been listed above (e.g., the presence of noisy or incorrect information and other problems undermining the trustworthiness of the data). Moreover, while we are assuming here that the log data under analysis already come in the form of (possibly low-level or incomplete) traces, in real-life scenarios the event records extracted from transactional data sources may lack information on which process instance generated them; therefore, some suitable “event correlation” tasks need to be performed, in order to recognize/define groups of event records that pertain to different process instances and turn each group into a distinct log trace. However, we believe that these additional log quality problems (different from the core issues I1, I2 and I3 above) are beyond the scope of this work, and we refer the reader to [26] for a recent comprehensive survey on these problems and existing solutions in the literature.

Note that all the above-described data quality issues tend to occur very frequently when trying to combine Business Process Management (BPM) and Internet of Things (IoT) solutions, as was already noticed in [47], which constitutes a manifesto for such a novel topical area of research. A notable work in this area that looks closely related to issues I1–I3 is [53], which addresses the problem of extracting good-quality process models from sensor logs, generated by humans moving in smart spaces (i.e., IoT-enabled environments equipped with presence sensors). Three challenges are, indeed, acknowledged in [53]: (i) the high variability of human behaviors, which makes the log data not structured enough to be effectively described through a high-quality (and readable) process model; (ii) the abstraction gap between fine-granular sensor data and the human activities that should be analyzed; (iii) the need to partition the log into traces (or, in the specific case of modeling human habits, to recognize what really is a habit). A simple and common way to perform such a partitioning consists in applying a fixed time window (e.g., of a day), and regarding each resulting segment as a distinct trace. Clearly enough, the first two challenges are closely linked to the above-defined general issues I1 and I2, respectively, while the last one might be related to event correlation issues (cf. [26]).
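The fixed-time-window partitioning mentioned above can be sketched as follows, using a window of one calendar day. The event format (a timestamp paired with a sensor label) and the sensor names are hypothetical and are not taken from [53].

```python
from collections import defaultdict
from datetime import date, datetime
from typing import Dict, List, Tuple

SensorEvent = Tuple[datetime, str]   # (timestamp, sensor label); hypothetical format


def split_by_day(events: List[SensorEvent]) -> Dict[date, List[str]]:
    """Partition a flat event stream into one trace per calendar day."""
    traces: Dict[date, List[str]] = defaultdict(list)
    for ts, label in sorted(events):          # tuples sort by timestamp first
        traces[ts.date()].append(label)
    return dict(traces)


# Hypothetical presence-sensor readings spanning two days.
stream = [
    (datetime(2024, 3, 2, 7, 5), "kitchen"),
    (datetime(2024, 3, 1, 23, 50), "bedroom"),
    (datetime(2024, 3, 1, 8, 0), "kitchen"),
    (datetime(2024, 3, 2, 0, 10), "bathroom"),
]
daily_traces = split_by_day(stream)
```

Note that the two readings taken around midnight end up in different traces even though they are only 20 minutes apart, which hints at why a fixed window is only a rough approximation of the real boundaries between habits.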

3.1 Issue I1: Heterogenous Logs

PM techniques have been shown to be very effective in settings where the given log data results from the execution of a single, well-structured process featuring a regular behavior. Indeed, in this case, it is possible to discover a good process model easily enough, and to apply enrichment and operational-support methods profitably (hence allowing for a complete application of the \(L^*\) life-cycle model described in Sect. 2.3).

However, there are many real-life applications where such an ideal situation does not hold, and the log to be analyzed describes rather heterogenous process instances. This can stem from two causes: (i) the log was generated by different processes, but this fact is not reflected by the presence of an explicit process identifier in the traces (allowing for separating them into different sub-logs); (ii) the log comes from just one process, but this process is handled in a flexible/unstructured manner, and its process instances follow rather diverse patterns of execution.

An illustrative example for the former situation is presented below.

Example 1

(Running example) Consider the case (inspired by a real-life application studied in [31]) of a phone company, where two business processes are carried out, one (\(W_1\)) for the activation of services and the other (\(W_2\)) for handling tickets, which both consist in performing a subset of the following activities: Receive a request (R), Get more information from the client (G), Retrieve client’s data (I), Send an alert to managers (A), Define a service package (P), Dispatch a contract proposal (D), Fix the issue (F), Notify the request outcome (N). The actual link between these activities and the processes \(W_1\) and \(W_2\) is shown in Fig. 1 via process activity edges (please disregard, for the moment, the event types reported in the bottom of the figure, the meaning of which is clarified in the next subsection). Notice that many activities are shared by the two processes. Assume moreover that the processes are executed in a low-structured way by using a non-workflow-based IT system, which does not maintain a process-aware log, but only a collection of traces without any identifier of the process (i.e., either \(W_1\) or \(W_2\), in this example) that triggered them. \(\square \)

Clearly, a situation like that described in the above example does not occur frequently in traditional BPM settings, but it may not be so rare when analyzing the logs of non-process-aware systems. Anyway, the difficulties that descend from the presence of heterogeneous traces in the input log are similar, from conceptual and technical viewpoints, to those arising in contexts where the traces only regard a single process, which, however, features different (often context-dependent) execution scenarios (a.k.a. process variants, or use cases). As an example of the latter situation, consider an e-commerce system where quite different procedures are used to handle the orders of gold customers, on the one hand, and those of the other customers, on the other.

When applying traditional process discovery methods to a log mixing different execution scenarios, there is a high risk that a useless “spaghetti-like” process model is obtained, featuring a great variety of execution paths across the process activities. Besides being difficult to read, such a model is very likely to be imprecise, as a consequence of the ambition of these methods to cover the behaviors of all/most of the traces in the log and of the limited expressivity of the model (owing to typical language biases). In other words, the capability of such a model to explain very heterogeneous behaviors usually comes at the cost of also modeling many extraneous execution patterns. In fact, an effective solution for better modeling heterogeneous traces is to take into account the existence of different processes/variants, and to try to recognize and model each of them separately [42], as discussed later on.
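To make the idea of recognizing variants before modeling them more concrete, the following minimal sketch groups traces by the similarity of their activity sets. It is only an illustration: the traces are hypothetical strings over the activity alphabet of Example 1, the greedy single-pass scheme and the Jaccard threshold are assumptions, and it is not the method of any work cited here.

```python
def jaccard(a, b):
    """Jaccard similarity between two sets of activity labels."""
    return len(a & b) / len(a | b) if a | b else 1.0

def cluster_traces(traces, threshold):
    """Greedy single-pass clustering: put each trace in the first cluster whose
    representative (its first trace) is similar enough, else open a new cluster."""
    clusters = []
    for trace in traces:
        profile = set(trace)
        for cluster in clusters:
            if jaccard(profile, set(cluster[0])) >= threshold:
                cluster.append(trace)
                break
        else:
            # No existing cluster is close enough: start a new one.
            clusters.append([trace])
    return clusters

# Hypothetical traces (one character per activity); no process identifier is stored.
log = ["RIPDN", "RGIPDN", "RGFN", "RFAN", "RIPN"]
clusters = cluster_traces(log, threshold=0.5)
for i, cluster in enumerate(clusters, start=1):
    print(f"cluster {i}: {cluster}")
```

Each resulting group could then be passed separately to a discovery algorithm, instead of mining a single model from the whole log.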

3.2 Issue I2: Low-Level Logs

Often, the logs of non-process-aware systems do not refer explicitly to meaningful process activities. Such a circumstance occurs when the tracing/enactment system records “low-level” operations, instead of the corresponding “high-level” activities that the users (analysts or process stakeholders) are used to reasoning about. This causes a mismatch between the alphabet of symbols describing the actions in the log and the alphabet of these high-level activities.

Fig. 1 Example of all possible mappings between activities and events in a low-level log (taken from [31])

Turning such a low-level log into traces referring to high-level process activities is a hard and time/expertise consuming task when the possible event-activity mappings are not one-to-one, at the levels of: (i) types (e.g., a low-level operation can be used as a shared functionality to perform different activities) and/or (ii) instances (e.g., there are complex/composite activities that can trigger multiple log events, as smaller “pieces of work”, when executed). Notably, the latter case leads to a gap of representation granularity between the log and the users’ vision, in addition to having mismatching events and activities alphabets.

A toy example of such a situation is shown in Fig. 1, and described in the example below.

Example 2

(... contd) Assume that all activities of the two processes in Example 1 are performed through the execution of generic operations such as email exchanges, phone calls and db accesses, and that the log traces produced by the IT system just represent the history of a process instance in terms of such operations, rather than in terms of high-level activities. Figure 1 reports the different low-level event types that can occur in these traces, denoted with Greek letters, as well as their mapping to the (high-level) process activities. There, a link between an activity and an event means that the activity may generate an instance of that event when executed (e.g., an instance of activity N can produce an instance of event \(\delta \) or \(\xi \)). Notably, the event-activity mapping is many to many: for example, activity G can produce an instance of either event \(\delta \) or \(\gamma \), while an instance of event \(\delta \) can be generated by the execution of either activity G or N. Thus, any execution of a process activity generates a (potentially different) instance of one of the events associated with the activity itself. For example, a process instance \(\varPhi \) featuring the activity sequence R I G P N might generate, for instance, one of the following two sequences of events: \(\alpha \) \(\beta \) \(\gamma \) \(\beta \) \(\xi \) or \(\alpha \) \(\beta \) \(\delta \) \(\beta \) \(\delta \). Finally, in order to extend this scenario with an example of granularity representation gap, let us now assume that any execution of activity N may trigger both events \(\delta \) and \(\xi \), in addition to generating just one of them. Clearly, under this hypothesis, further event sequences may be generated from \(\varPhi \), in addition to those above, such as: \(\alpha \) \(\beta \) \(\gamma \) \(\beta \) \(\xi \) \(\delta \) and \(\alpha \) \(\beta \) \(\delta \) \(\beta \) \(\delta \) \(\xi \). \(\square \)
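The many-to-many mapping of Example 2 can be explored with a short enumeration. This is a sketch under stated assumptions: the entries for G and N follow the example, whereas those for R, I and P are guesses read off the quoted event sequences (Fig. 1 is the authoritative source), and the function names are hypothetical.

```python
from itertools import product

# Assumed activity-to-event mapping. G and N follow Example 2; the entries for
# R, I and P are assumptions inferred from the event sequences quoted there.
ACTIVITY_TO_EVENTS = {
    "R": ["alpha"],
    "I": ["beta"],
    "G": ["gamma", "delta"],
    "P": ["beta"],
    "N": ["delta", "xi"],
}

def possible_event_sequences(activities, mapping):
    """Enumerate the event sequences obtained when each activity instance
    emits exactly one of its associated event types."""
    choices = [mapping[a] for a in activities]
    return [list(seq) for seq in product(*choices)]

def candidate_activities(event, mapping):
    """Invert the mapping: which activities may have generated this event?"""
    return sorted(a for a, events in mapping.items() if event in events)

sequences = possible_event_sequences("RIGPN", ACTIVITY_TO_EVENTS)
for seq in sequences:
    print(" ".join(seq))
print(candidate_activities("delta", ACTIVITY_TO_EVENTS))
```

Under the assumed mapping, the enumeration includes the two sequences quoted in the example, and inverting the mapping shows why a single low-level event (here \(\delta \)) cannot be traced back to one activity without further information.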

When directly applied to such logs, classic PM tools typically yield results of little use, such as incomprehensible/trivial process maps featuring no recognizable activities. Worse, some PM tasks like conformance checking cannot be performed at all.

The urgent need to extend PM approaches with the capability to deal with low-level logs is proven by many recent research proposals concerning the definition of (semi-)automated log abstraction methods [2, 4, 31, 36, 59], in two different settings: (1) no predefined activities are known, and the only way to lift the representation of the events is to use unsupervised clustering/filtering methods [4, 36]; (2) there is a conceptualization of process activities, in the stakeholders’ minds or process documentation, which enables a sort of “supervised” event log abstraction [2, 31, 59, 83].

3.3 Issue I3: Incomplete Logs

According to [98], an important context-oriented quality dimension for data is completeness, which generally pertains to the capability of the data to have sufficient breadth and scope for the analysis task at hand. Hereinafter, a log L that does not contain sufficient information for carrying out some chosen PM task T will be called an incomplete log (relative to T).

A direct application of this definition can also lead to classifying low-level logs as incomplete, seeing that the events in such logs mainly lack a reference to high-level process activities. On the other hand, heterogeneous logs, mixing traces of different processes/variants, could be effectively handled if those traces were associated with the respective process/variant identifiers (which are instead missing), given that the analyst could select more homogeneous subsets of traces by way of such identifiers; thus, in principle, heterogeneous logs are also related to some form of incompleteness. Finally, it is not so rare that a given log only gathers traces that were generated in special operating conditions (e.g., under particular resource allocation regimes, or for specific categories of cases), but this is not reflected explicitly in the form of context-oriented log/trace attributes.

Abstracting from the kinds of incompleteness mentioned above (which have been partly covered by previous subsections), we next focus on two other challenging situations that make a log incomplete: (a) the log fails to cover the execution flows of a process (in the case of control-flow discovery tasks), and (b) the log data lacks ground-truth labels necessary for performing a supervised PM task (e.g., trace/event classification).

Control-flow incompleteness This kind of incompleteness occurs when a given log L: (i) lacks relevant types of the lifecycle transitions that can characterize the execution of process activities (e.g., it contains completion events only, so that the activities look as if they were instantaneous), and/or (ii) represents activity sequences that do not satisfy the typical “trace completeness” assumptions [89] that underlie control-flow discovery algorithms.

As to the latter point, roughly speaking, approaches to control-flow discovery typically assume that, whenever an activity b depends on an activity a in a process, the log used to analyze the process should contain traces where a is (immediately) followed by b, as evidence for this dependence; by contrast, for any two concurrent activities x and y, there must be both traces where x (immediately) follows y and traces where the opposite happens. In practice, however, two concurrent activities might always appear in the same relative order in a log storing only completion events, owing to some kind of temporal bias (e.g., because one of the activities always finishes after the other, owing to the different durations of the two activities themselves or of some of their predecessor ones [42]).
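The effect of this assumption can be illustrated by deriving, from a log, relations in the style of the directly-follows footprint used by classic control-flow discovery algorithms. The sketch below is a simplified illustration with hypothetical toy logs, not the procedure of any specific tool.

```python
from itertools import combinations

def directly_follows(traces):
    """Collect all pairs (a, b) such that b immediately follows a in some trace."""
    pairs = set()
    for trace in traces:
        pairs.update(zip(trace, trace[1:]))
    return pairs

def footprint(traces):
    """Classify each activity pair as causal ('->' or '<-'), concurrent ('||')
    or unrelated ('#'), based only on the directly-follows evidence in the log."""
    df = directly_follows(traces)
    activities = sorted({a for t in traces for a in t})
    relations = {}
    for x, y in combinations(activities, 2):
        if (x, y) in df and (y, x) in df:
            relations[(x, y)] = "||"
        elif (x, y) in df:
            relations[(x, y)] = "->"
        elif (y, x) in df:
            relations[(x, y)] = "<-"
        else:
            relations[(x, y)] = "#"
    return relations

# x and y are concurrent in the (hypothetical) process behind both logs.
complete_log = [["a", "x", "y", "b"], ["a", "y", "x", "b"]]
biased_log = [["a", "x", "y", "b"], ["a", "x", "y", "b"]]  # y always completes last

print(footprint(complete_log)[("x", "y")])
print(footprint(biased_log)[("x", "y")])
```

On the complete log the pair (x, y) is recognized as concurrent, whereas on the temporally biased log the same pair is mistaken for a causal dependency, which is exactly the risk described above.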

On the other hand, log completeness might not hold just because the process under analysis features a high level of non-determinism, and an unreasonably large collection of traces would be required to cover the wide range of execution patterns that it could generate.

Finally, even when complete log data exist for a process, it can happen that the analysts only have restricted access to them, e.g., owing to privacy reasons, or to the difficulty of extracting and properly preparing these data (especially when the latter are stored in multiple heterogeneous legacy systems).

Lack of labeled examples Another kind of incompleteness descends from the scarcity of labeled data, which is likely to arise in certain outcome-oriented classification tasks (e.g., security breach detection [12]), where the classification model must be induced from example traces with the help of supervised learning methods, and the labels of these traces need to be assigned (or validated) manually by experts. Clearly, in such a setting, the number of labeled examples available for training could be insufficient to train an accurate classifier, especially when using data-hungry (e.g., deep learning) models, so that there is a high risk of overfitting the training set [34].

A different form of label scarcity can affect those log abstraction methods (e.g., [83]) that rely on supervised sequence-tagging schemes, where each example trace is equipped with per-event labels (representing the ground-truth activities that generated the event itself), and the goal consists in learning the hidden mapping from event sequences to activity sequences. Indeed, such per-event labels are difficult to obtain in practice, owing to the strong (and tedious) involvement of human operators/experts that is required to assign them.

4 Augmented Analytics and Two Strategies for Pushing more AI into PM Sessions

As discussed above, PM projects are highly interactive and iterative and very demanding in terms of time and expertise required, especially when dealing with hard application settings featuring low-quality logs and/or complex/flexible processes. In particular, when dealing with low-level and/or heterogeneous logs, two log-preparation operations tend to be performed multiple times by the analysts, in the attempt to derive an optimal (w.r.t. the PM analysis that is being carried out) log view: (i) data selection: a subset of events/traces is selected according to either a frequency-based criterion (e.g., retaining only frequent enough activities or paths) or to an application-dependent domain-oriented strategy (e.g., focusing on cases with specific values of their associated data properties); (ii) data abstraction: a (high-level) activity label is assigned to each low-level event by resorting to a predefined mapping/taxonomy.
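The two log-preparation operations just described can be sketched as follows. This is a minimal illustration under assumptions: the log, the support threshold and the event-to-activity mapping are hypothetical, and the frequency criterion shown (share of traces containing an event type) is only one of many possible choices.

```python
from collections import Counter

def select_frequent(traces, min_support):
    """Data selection: keep only event types occurring in at least a
    min_support fraction of the traces (assumes a non-empty log)."""
    counts = Counter(e for t in traces for e in set(t))
    keep = {e for e, c in counts.items() if c / len(traces) >= min_support}
    return [[e for e in t if e in keep] for t in traces]

def abstract(traces, event_to_activity):
    """Data abstraction: relabel each low-level event through a predefined
    mapping; events without a mapping keep their original label."""
    return [[event_to_activity.get(e, e) for e in t] for t in traces]

# Hypothetical low-level log and mapping, for illustration only.
log = [
    ["open", "mail", "call", "close"],
    ["open", "mail", "close"],
    ["open", "debug", "close"],
]
mapping = {"mail": "Contact", "call": "Contact"}

view = abstract(select_frequent(log, min_support=0.5), mapping)
print(view)
```

In an interactive session, the analyst would typically repeat such steps with different thresholds and mappings, re-mining the resulting log view each time.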

Many existing commercial tools (e.g., Disco, Celonis Disc., Minit, to name a few) provide the analyst with efficient functionalities that allow her/him to perform many tentative “prepare-mine-evaluate” sessions, in the ultimate attempt to understand both the log and the process that generated it. Most of these systems combine intuitive functionalities for visually and interactively selecting events/cases with rough process discovery algorithms that can quickly return an approximate (but simple) process map, as well as with other data visualization and reporting features borrowed from traditional Business Intelligence (BI) frameworks. Notably, the opportunity to provide the analyst with interactive process discovery algorithms has also been investigated in the research community, in order to support repeated process discovery sessions, while possibly trading lower model precision for higher speed and model readability. A notable example of such an algorithm is that underlying the popular ProM plug-in Inductive Visual Miner [51], which allows the analyst to produce expressive Petri net maps interactively, while letting her/him change the level of abstraction for the activities and execution paths represented.

A step ahead on the way of interactive PM analyses was made by the approach in [3] based on the concept of “Process Cubes,” which tries to support the analyst efficiently and intuitively in both data selection and abstraction transformations (through customized versions of the traditional SLICE/DICE and ROLL-UP operators of OLAP, respectively). However, developing such a framework requires skilled experts to carry out a preliminary careful design of the (case/event) attribute aggregations that could be useful for the analysis.

On the other hand, although all the above interactivity-bound solutions make PM sessions faster and easier, they still need a skilled user, who can exploit the available data preparation/mining facilities in a proper and smart way, so as to eventually distill informative log views and interesting PM patterns/models (possibly navigating through a predefined lattice of log views, in the case of OLAP-like frameworks).

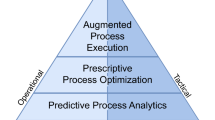

As a more advanced alternative, specifically targeted by our paper, one can think of extending PM methods with smart AI capabilities that help them work in a more effective, efficient and easier-to-use way, even in hard application scenarios. This is exactly the vision put forward by Augmented Analytics [72], which is the subject of the next subsection.

4.1 Augmented Analytics

The term augmented analytics (i.e., AI-powered analytics) refers to the attempt to instill smart assistance for the analyst and a higher level of automation into the entire life cycle of data analytics/mining processes by leveraging AI (and, in particular, Machine Learning) methods and technologies. Among the various kinds of improvements that augmented analytics efforts aim at providing, let us mention the following major ones:

- allowing different kinds of stakeholders to easily collaborate with each other and with smart analytics tools and models, throughout the entire data analytics process;

- empowering the core Data Mining phase with additional AI capabilities allowing for enhancing the internal decision making of ML algorithms or for automatically tuning ML algorithms/models (AutoML), or with “human-in-the-loop” ML schemes allowing for better controlling the quality of ML results;

- giving assistance/guidance in Data Extraction and Data Preparation phases (e.g., through smart services for data exploration/visualization and data quality assessment, or the suggestion of data transformation/blending/enrichment/augmentation operations);

- providing assistance/guidance in the Evaluation phase (e.g., by letting the analyst easily navigate across the errors of a predictive model, and perform simulations with the model);

- extending the cognitive capabilities of decision-makers.

As noticed in [72], this perspective looks particularly valuable in complex scenarios involving the analysis of big and/or low-quality data, as well as a promising solution to the shortage of (skilled) data scientists and to the need to enable a more direct interaction (possibly in the guise of “citizen data scientists” [72]) of domain experts and business users with the analyst, the analytics tools and the analysis results.

In accordance with the perspective of augmented analytics, the following subsections specifically focus on two general strategies for empowering data analytics solutions with additional AI capabilities, which can be exploited in the context of PM projects to make the application of PM techniques smarter:

- (A) using declarative background knowledge, provided by domain experts, and expressing requirements, preferences, or constraints that can guide PM algorithms and PM analysts toward more effective and quicker analyses;

- (B) performing a main PM task synergistically with other auxiliary data analytics tasks (e.g., the discovery of structures or process-related concepts in the given log data) that can somehow help the former task obtain better and/or more interpretable results in fewer prepare-mine-evaluate sessions.

The following subsections offer a brief general description of these strategies.

4.2 Strategy A: Using Background Knowledge (BK)

Extending PM approaches with the capability to exploit domain knowledge (expressing, e.g., known physical/environmental constraints, user preferences, analyst’s intuitions, requirements, norms) can be a valuable way to complement the information conveyed by the data available in the log, especially when this information is incomplete or of low quality. Indeed, such a source of high-level information may help both improve the quality of the models/patterns/knowledge derived from the log and better align their outcomes to the expectations of domain experts/business users. In particular, when this background knowledge takes the form of hard/mandatory constraints, the mining of patterns/models can be sped up, thanks to the fact that uninteresting portions of the search space can be pruned.
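The pruning effect of hard constraints can be illustrated with a toy search over activity sequences. The sketch below is an assumption-laden illustration: the constraint semantics (a pair (a, b) meaning that b may occur only after a), the activity set and the function names are hypothetical, and real pattern/model mining algorithms explore far richer search spaces.

```python
def violates(prefix, precedence):
    """True if some activity b appears in the prefix before its required
    predecessor a, for a constraint (a, b) in the precedence set."""
    seen = set()
    for act in prefix:
        if any(b == act and a not in seen for a, b in precedence):
            return True
        seen.add(act)
    return False

def enumerate_sequences(activities, precedence, length):
    """Depth-first enumeration of repetition-free activity sequences of the
    given length, abandoning any prefix that already violates a constraint."""
    results, explored = [], 0
    stack = [()]
    while stack:
        prefix = stack.pop()
        explored += 1
        if violates(prefix, precedence):
            continue  # prune: every extension of this prefix is also invalid
        if len(prefix) == length:
            results.append(prefix)
            continue
        for act in activities:
            if act not in prefix:
                stack.append(prefix + (act,))
    return results, explored

activities = ["R", "G", "N"]
constraints = {("R", "G"), ("G", "N")}  # assumed background knowledge

pruned, explored_pruned = enumerate_sequences(activities, constraints, 3)
unpruned, explored_all = enumerate_sequences(activities, set(), 3)
print(pruned, explored_pruned, explored_all)
```

Because a violated precedence constraint cannot be repaired by extending the prefix, whole subtrees of candidates are skipped, so fewer nodes are visited than in the unconstrained search.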

As a matter of fact, the attempt at having ML approaches and inference methods capable of using background knowledge has a long history in AI. In particular, logic-based representation and reasoning is natively embedded in Inductive Logic Programming (ILP) and Statistical Relational Learning (SRL) frameworks [41, 49] (consider, e.g., the use of First-Order-Logic clauses in Markov Logic Networks), while many constraint-based methods have been successfully applied to several Data Mining tasks, such as pattern mining and clustering [73].

A recent effort to frame different ways of incorporating background knowledge coherently into ML-based data analytics processes is presented in [97], under the umbrella term of Informed Machine Learning (IML). Basically, IML refers to any kind of ML task where two different (but equally important) sources of information are available: data and Background Knowledge (BK). The latter can be expressed in different forms, such as logical rules, constraints, mathematical equations/inequalities/invariants, probability distributions, similarity measures, knowledge graphs and ontologies, to cite a few.

As sketched in Fig. 2, in an IML process, background knowledge can be used in three different phases:

- in the preparation of the data (BK4Data), which can thus benefit from semantics-driven selection, enrichment, augmentation, and feature engineering;

- in the core learning/mining phase (BK4Learning), where the knowledge can be used to act either on the hypothesis space (e.g., by choosing a specific structure/architecture or specific hyper-parameters for deterministic ML models, or by assuming independence between certain variables in the case of probabilistic ML models), or on the training algorithm itself (e.g., by choosing specific loss functions in the search for model parameters, or specific priors in the case of probabilistic models);

- in the evaluation/analysis or the usage (e.g., for inference/prediction tasks) of the discovered patterns/models (BK4Patterns).

Fig. 2 Informed Machine Learning (IML) flow (based on an image from [97]). The dashed line between “Knowledge” and “Data” corresponds to the IML modality (namely, BK4Data) that has not been considered in our literature review

It is worth noting that the second, more advanced and intriguing form of IML above (i.e., BK4Learning) has been receiving renewed attention of late—and, in fact, it was the main target of the preliminary literature study conducted in [97]. In particular, several proposals for extending sub-symbolic ML methods with prior knowledge appeared recently [41], most of which rely on stating the learning task as an optimization problem where “fuzzy” constraints encoding both example-based supervision and prior knowledge must be satisfied, and/or on enforcing the prior constraints at testing time.

Strategy A in a PM scenario Different kinds of domain knowledge may be available in real-life PM projects, which can be used as valuable background knowledge for guiding PM tasks, especially when the quality of the log data at hand is not satisfactory. Two major forms of such knowledge are, for example:

- process-oriented ontologies/taxonomies [15] providing an articulated representation of business processes and/or of organizational resources;

- existing workflow models or declarative behavioral models (represented, e.g., via simple ordering constraints over process activities [31, 42] or expressive temporal-logics models [96]), possibly induced from historical data with one of the many process discovery techniques available in the literature [1], and suitably validated by experts.

Let us refer to such a (more or less structured) source of background information as knowledge base \({\mathbb {B}}\), and regard it as a complementary input for PM tasks, besides a log L, and possibly a reference model M (in the case of conformance/enhancement tasks).

As mentioned in Sect. 1, we decided not to consider the case where \({\mathbb {B}}\) is used for data preparation (BK4Data). The reason behind this choice is that our work is interested in “smart” AI-based solutions addressing some common PM task (such as process discovery, conformance checking, predictive process monitoring, etc.) with the help of IML methods that exhibit a more direct and tight form of integration between the background knowledge and the target PM task itself.

More specifically, we will focus on two ways of using the background knowledge in \({\mathbb {B}}\): (i) using \({\mathbb {B}}\) in discovery tasks entailing some kind of inductive learning process (BK4Learning), and (ii) using \({\mathbb {B}}\) along with (automatically discovered or manually defined) models or patterns when performing inference, classification or verification tasks (BK4Patterns).

4.3 Strategy B: Performing Multiple AI Tasks

An alternative/complementary way to tame a difficult data analytics task T consists in devising methods that can automatically extract and exploit “auxiliary” domain knowledge that may improve the expected quality of T’s results (in terms of readability and/or meaningfulness/validity). Such hidden knowledge can indeed act as a sort of surrogate for expert-given domain knowledge, which may be particularly useful when performing T on complex, low-level, or incomplete data.

In general data analytics settings, such knowledge could be captured (in a more or less interpretable form) by two different kinds of auxiliary (sub-)models: (a) fully fledged ML models, concerning correlated auxiliary tasks and trained in a joint and synergistic way with the primary task T (as in Multi-Task Learning and Learning-with-Auxiliary-Tasks works [8]); (b) “smart” data pre-processing/preparation or post-processing components having some level of integration with the core learning/inference/verification technique.

Such an approach is grounded on the expectation that the knowledge or inductive bias coming from correlated tasks provides additional information and intelligence skills to an agent that must perform a learning/decision task on the basis of insufficient/unreliable data, and can hence lead to better results in terms of generalization, stability and accuracy. Interestingly, the hidden knowledge extracted automatically via these auxiliary tasks can prove useful in performing T, even when it is left implicit (within the internal representation of an ML model) and is inherently uncertain and approximate.

Strategy B in a PM scenario In principle, different kinds of auxiliary tasks could be beneficial to pursue when approaching a “main/target” PM task that is difficult to solve effectively, e.g., owing to the fact that the log data at hand are insufficient, too finely granular or unreliable.

For example, performing trace clustering [16] and event abstraction [2, 31, 83] tasks is widely reckoned as an effective means for obtaining high-quality collections of log traces. Indeed, trace clustering can help identify homogeneous and regular groups of traces in a heterogeneous log, which are easier to model and analyze (in that they represent sorts of hidden process variants or usage scenarios); in fact, the application of classic PM discovery tools to such clusters, rather than to the log as a whole, was often shown empirically to yield both better process models [16] (in terms of conformance, generalization and readability) and more accurate forecasts in predictive monitoring settings [93]. Event abstraction tasks allow instead for bringing low-level traces to a higher level of abstraction that is more suitable for traditional PM analyses (e.g., by mapping the given log events to instances of well-recognized process activities that were not explicitly associated with the events, but are assumed/known to have generated them). A further kind of task that can prove useful in process discovery consists in automatically detecting and purging infrequent behaviors and/or anomalous traces (usually referred to in the literature as noise and outliers, respectively) [11].

However, these three kinds of auxiliary tasks have been prevalently employed so far in separate data pre-processing steps, in order to transform a given low-quality collection of process execution data into an event log that better suits the application of standard PM tools. As mentioned in Sect. 1, this way of exploiting clustering, event abstraction and noise/outlier filtering methods, as a data preparation tool, is out of the scope of our work, which ultimately aims at studying more synergistic forms of hybridizing standard PM tasks with AI-based auxiliary tasks.

5 Literature Search Protocol

We now illustrate the procedure adopted in our literature study, identifying relevant works linked to the attempt to empower PM methods with complementary AI capabilities, according to the strategies A and B described before.

In order to enable a scientific, replicable and rigorous analysis, we defined a structured literature search protocol, inspired by some major principles of Systematic Literature Reviews (SLR) [48]. Specifically, we first defined the research questions driving the search, and then performed some search queries on a specific data source. Next, we applied a number of inclusion and exclusion criteria for selecting a more focused set of truly relevant works, to be eventually examined in detail. The different components of our search protocol are described in the following, in separate subsections.

5.1 Research Questions

The general objective of our review study is to investigate whether and how standard PM tasks (namely, process discovery, conformance checking, model enhancement, detection, prediction, recommendation) have benefitted so far from the two AI-based strategies introduced in Sect. 4.1, in order to gain effectiveness and robustness in the presence of the challenging issues I1-I3 defined in Sect. 3. In line with this goal, we formulated the following research questions:

- (RQ1) What approaches to challenging PM problems are there in the literature that leverage Strategy A or Strategy B?

- (RQ2) What forms of background knowledge (e.g., taxonomies, declarative constraints etc.) have been employed, and according to which kind of IML modality, in the PM approaches using Strategy A?

- (RQ3) Which PM tasks have benefitted from using background knowledge, according to Strategy A?

- (RQ4) What kinds of auxiliary tasks have been considered in the PM approaches adopting Strategy B?

- (RQ5) Which PM tasks have benefitted from jointly pursuing auxiliary tasks, according to Strategy B?

Clearly, RQ1 is the fundamental research question driving our literature search, seeing as it is meant to identify a set of candidate examples for the two classes of AI-based PM approaches corresponding to Strategies A and B, respectively. The remaining questions are meant to structure these two broad classes by using some informative classification dimensions, namely: the target PM tasks (i.e., discovery, prediction, classification, etc.) addressed in works retrieved for either class (RQ3 and RQ5, respectively); the kind of background knowledge and IML modality (i.e., BK4Learning or BK4Patterns, cf. Sect. 4.2) employed in the approaches adhering to Strategy A (RQ2); the kind of auxiliary tasks (e.g., clustering, abstraction) exploited in the approaches of Strategy B (RQ4). All of these classification dimensions are meant to give us a basis for describing the retrieved literature in a structured way (in Sects. 6 and 7), and for eventually providing the reader with a taxonomical scheme (in Sect. 9).

5.2 Search Procedure

The search process was performed on the Scopus (Footnote 1) publication database, since, in our opinion, it ensures a better trade-off between the quantity and quality of indexed works, compared to Google Scholar (GS) and Web of Science (WoS). Indeed, as noticed in [62], while on the one hand, GS returns significantly more citations than both WoS and Scopus, about half of GS unique citations are not journal papers and include very many theses/dissertations, white papers, informal reports, and non-peer-reviewed papers of questionable value and impact from a scientific viewpoint. On the other hand, WoS is known to be far less inclusive than both GS and Scopus, which may lead to missing (too many) relevant publications.

In order to identify an initial, wide enough, range of potentially relevant works, we defined two distinct queries, one for Strategy A and the other for Strategy B, which are shown in Table 1, along with their associated strategy and the number of results returned. For the sake of presentation, we will refer hereinafter to each query by using the number associated with it in Table 1, i.e., either \(Query\#1\) or \(Query\#2\). Similarly, we will sometimes refer to the answer sets returned by these two queries by using the notation \(Group\#1\) and \(Group\#2\), respectively.

Basically, the two queries were devised to have the same structure: three subqueries joined through AND operators, each consisting of multiple alternative keywords (i.e., of a disjunction of multiple search terms). The first two subqueries define the specific research field (i.e., standard PM tasks) and the challenging scenario considered in our work (featuring incomplete, heterogeneous and/or low-level log data), respectively. As these two subqueries specify our reference “PM setting”, they are instantiated identically in both \(Query\#1\) and \(Query\#2\). The remaining parts of \(Query\#1\) and \(Query\#2\) differ instead from one another, since they aim at defining the specific boundaries of Strategies A and B, respectively (Footnote 2).

Precisely, the first shared subquery of \(Query\#1\) and \(Query\#2\) (i.e., the first conjunct in the context-oriented preamble of both queries) contains the keywords “process discovery”, “conformance checking”, “predictive process monitoring”, “predictive process model”, “compliance checking” and “run-time prediction”, which all correspond to well established PM tasks, as well as a few syntactical/semantical variants of the former that can help widen the set of relevant results retrieved—this is the case, e.g., of the term “classify(ing) (business) log traces”, capturing a possible specialized way to carry out certain conformance checking tasks. The second subquery instead contains terms related to the data-related PM issues I1-I3 discussed in Sect. 3 (namely “uncertainty”, “low-level”, “complex processes”, “event abstraction”, “incomplete”, “high-level”, “infrequent”, “noise”, “flexible processes”, and “spaghetti-like”), as well as some variations of these terms.

The rightmost conjuncts in \(Query\#1\) and \(Query\#2\) were chosen so as to capture key distinctive aspects of the respective AI-based strategies, based on our personal background and knowledge of the subject matter. In particular, the last subquery of \(Query\#1\) features terms that are likely used in works related to the adoption of Strategy A, namely: “background knowledge”, “a-priori knowledge”, “prior knowledge”, “domain knowledge”, “predefined constraints”, “possible mappings”, “potential mappings” and “activity mapping”. Actually, the last three terms may occur in research works where the only kind of a-priori knowledge driving a PM task pertains to the mapping between (low-level) log events and (high-level) process activities.

Devising a specialized set of terms for the works related to Strategy B was not an easy task. Indeed, we noticed that a very small fraction of such works explicitly claimed to synergistically/jointly pursue multiple AI/PM tasks—and very few of them actually contained terms like “auxiliary task,” “multiple tasks,” “joint learning/training/mining,” etc., which one would naturally expect to find instead. We thus had to leverage our knowledge of some major types of auxiliary tasks (e.g., trace clustering, event abstraction) that have been exploited in some popular works following Strategy B, and tried to extend this set of task types with a number of possible alternative ones. As a result, we eventually defined the third subquery of \(Query\#2\) as the disjunction of the following terms: “outliers,” “abstraction” and “clustering,” as well as some terms that might witness the execution of complementary clustering or event abstraction/filtering tasks (namely, “variants,” “groups” and “deviance-aware”) in the case where such tasks are not referred to explicitly through the terms “outliers,” “abstraction” and “clustering.”

The search queries \(Query\#1\) and \(Query\#2\) were run on Scopus on October 30th, 2020, over the titles, keywords and abstracts of all the publications stored in the database. For the sake of fairness and reproducibility, we performed the search in an anonymous mode (i.e., accessing the Scopus website without using any personal account and disabling all cookies), to prevent any possible bias coming from our past searches.
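As a rough illustration of the query structure described above, the following Python sketch assembles two search strings as a conjunction of three disjunctive subqueries. It only uses the base terms listed in the text: the term variants mentioned by the authors are omitted, the helper names are hypothetical, and wrapping the result in the Scopus `TITLE-ABS-KEY` field code is our assumption about how the title/keyword/abstract restriction could be expressed. The strings produced are therefore approximations, not the exact queries that were run.

```python
def disjunction(terms):
    """Join quoted search terms with OR, wrapped in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*subqueries):
    """AND together the subqueries, each given as a list of alternative terms."""
    body = " AND ".join(disjunction(s) for s in subqueries)
    return f"TITLE-ABS-KEY({body})"


# Shared "PM setting" subqueries (base terms only, variants omitted).
PM_TASKS = [
    "process discovery", "conformance checking",
    "predictive process monitoring", "predictive process model",
    "compliance checking", "run-time prediction",
]
DATA_ISSUES = [
    "uncertainty", "low-level", "complex processes", "event abstraction",
    "incomplete", "high-level", "infrequent", "noise",
    "flexible processes", "spaghetti-like",
]

# Strategy-specific third subqueries.
STRATEGY_A = [
    "background knowledge", "a-priori knowledge", "prior knowledge",
    "domain knowledge", "predefined constraints", "possible mappings",
    "potential mappings", "activity mapping",
]
STRATEGY_B = [
    "outliers", "abstraction", "clustering",
    "variants", "groups", "deviance-aware",
]

query_1 = build_query(PM_TASKS, DATA_ISSUES, STRATEGY_A)
query_2 = build_query(PM_TASKS, DATA_ISSUES, STRATEGY_B)
```

Keeping the two shared subqueries in common lists makes it explicit that the queries differ only in their last conjunct.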

The numbers of papers returned for these two queries were 48 and 82, respectively, as shown in Table 1.

5.3 Inclusion and Exclusion Criteria

According to [48], the next step in an SRL search protocol, after running the queries, consists in applying a number of well-specified inclusion and exclusion criteria, in order to obtain eventually a selection of really significant and relevant works, while ensuring that this selection is both replicable and objective. The general rule followed for including/excluding a study in/from our review can be summarized as follows: a work must satisfy every inclusion criterion (IC) in order to be retained, whereas it is discarded whenever it satisfies any exclusion criterion (EC).

The following inclusion criteria were applied to any work returned by our searches:

- (IC1) The work has already reached its final publication stage; no “in-press” works are allowed.

- (IC2) The work was published as a journal article, as a contribution to conference proceedings, or as a book chapter; neither letters nor reviews were considered.

- (IC3) The work is written in English.

In practice, all the above-described basic inclusion criteria were enforced directly through the Scopus query engine. This reduced the number of works in \(Group\#1\) from 48 to 45, whereas the size of \(Group\#2\) remained unchanged at 82 (cf. the values reported in Table 1).

After that, all remaining works underwent a somewhat more aggressive, semantic selection phase, devoted to filtering out works that might be insufficiently significant/mature or not relevant to our scope. To this end, we sequentially applied the following exclusion criteria to any retrieved work satisfying the inclusion criteria:

- (EC1) The work was published before 2020 and has received fewer than 1 citation per year on average, from the year of publication until October 30th, 2020 (see Footnote 3).

- (EC2) The work appeared in a workshop (since this kind of venue may accept proposals at an early stage of development).

- (EC3) The work is a survey or an empirical/benchmark study (which hence does not propose any new technical solution), or it is a position/research-in-progress paper (so that any proposed solution is likely not to be mature enough from a technical viewpoint).

- (EC4) The work is an abridged version of another work, by the same authors, published later (typically as a journal paper).

- (EC5) The work proposes a solution that has no connection with (at least) one of the strategies A and B defined in Sect. 4.1.
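The general inclusion/exclusion rule stated above (a work is retained only if it satisfies every IC and no EC, with the ECs applied sequentially) can be sketched as follows. This is only a minimal illustration: the record fields (`stage`, `doc_type`, `language`, `venue_type`, `is_survey`) are hypothetical, and only two of the five exclusion criteria are encoded, since EC1, EC4 and EC5 require citation data or manual assessment.

```python
from typing import Callable, Dict, List, Optional, Tuple

Work = Dict[str, object]
Criterion = Tuple[str, Callable[[Work], bool]]

# Inclusion criteria: every one of them must hold (field names are hypothetical).
INCLUSION: List[Criterion] = [
    ("IC1", lambda w: w["stage"] == "final"),
    ("IC2", lambda w: w["doc_type"] in {"article", "conference paper", "book chapter"}),
    ("IC3", lambda w: w["language"] == "English"),
]

# Exclusion criteria, checked in order: a single match discards the work.
EXCLUSION: List[Criterion] = [
    ("EC2", lambda w: w["venue_type"] == "workshop"),
    ("EC3", lambda w: bool(w["is_survey"])),
]


def first_exclusion(work: Work) -> Optional[str]:
    """Return the name of the first exclusion criterion met by the work, if any."""
    for name, holds in EXCLUSION:
        if holds(work):
            return name
    return None


def is_retained(work: Work) -> bool:
    """A work is kept iff it meets all ICs and triggers no EC."""
    return all(holds(work) for _, holds in INCLUSION) and first_exclusion(work) is None


journal_paper = {"stage": "final", "doc_type": "article", "language": "English",
                 "venue_type": "journal", "is_survey": False}
workshop_paper = dict(journal_paper, doc_type="conference paper", venue_type="workshop")
```

Recording the first criterion that fires, rather than all of them, mirrors the sequential application of the exclusion criteria.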

The application of EC1 cut the size of \(Group\#1\) from 45 to 35 works, and that of \(Group\#2\) from 82 to 42. The latter figure also accounts for the removal (from \(Group\#2\)) of a paper, published in 2020, whose fundamental metadata (namely, the author names and work title) are missing in Scopus.

By using EC2, we excluded no paper from \(Group\#1\) and 7 papers from \(Group\#2\), thus shrinking the size of both groups to 35 elements.

Criterion EC3 allowed us to further remove 4 works from \(Group\#1\) and 2 from \(Group\#2\). This way, the number of works reaching the next exclusion step was reduced to 31 for \(Group\#1\) and 33 for \(Group\#2\).

By way of EC4, we excluded 3 further works from \(Group\#1\) and 2 from \(Group\#2\), bringing their sizes to 28 and 31, respectively.

The last exclusion criterion, namely EC5, directly descends from the research question RQ1 and serves the purpose of marking a semantic boundary for our search. For the sake of greater objectivity and fairness, the assessment of EC5 was performed independently by both authors of this manuscript, after carefully reading (at least the abstract, introduction and conclusions of) each remaining work. Any disagreement between the two authors was resolved through discussion and deeper analyses. Enforcing this latter criterion caused the exclusion of a further 19 (resp. 13) works from \(Group\#1\) (resp. \(Group\#2\)).

The 9 works of \(Group\#1\) and the 18 works of \(Group\#2\) that survived the five exclusion stages above are shown in Tables 2 and 3 respectively, along with some major kinds of categorical information (e.g., the main PM task pursued) allowing for characterizing them in a finer way. These are the works that we considered for our literature review study.
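The figures reported for the successive selection stages can be cross-checked with a short computation. In the sketch below, the group sizes after the inclusion criteria and the per-criterion removals for EC2 to EC5 are taken from the text, while the removals for EC1 (10 and 40) are derived from the stated before/after sizes; the function name is ours.

```python
# Group sizes after the inclusion criteria (45 and 82, as stated in the text).
after_inclusion = {"Group#1": 45, "Group#2": 82}

# Works removed by each exclusion criterion, in order of application.
# EC1 removals are derived from the reported sizes (45 -> 35 and 82 -> 42).
removed = [
    ("EC1", {"Group#1": 10, "Group#2": 40}),
    ("EC2", {"Group#1": 0, "Group#2": 7}),
    ("EC3", {"Group#1": 4, "Group#2": 2}),
    ("EC4", {"Group#1": 3, "Group#2": 2}),
    ("EC5", {"Group#1": 19, "Group#2": 13}),
]


def selection_funnel(group):
    """Return the size of the group after each exclusion stage."""
    size = after_inclusion[group]
    sizes = []
    for _, counts in removed:
        size -= counts[group]
        sizes.append(size)
    return sizes


funnel_1 = selection_funnel("Group#1")  # sizes after EC1..EC5
funnel_2 = selection_funnel("Group#2")
```

The final entries of the two lists should match the 9 and 18 works listed in Tables 2 and 3.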

For the sake of traceability and replicability, the complete sets of works retrieved for Strategy A and Strategy B (after running their respective queries and applying the inclusion criteria) are reported, in a tabular form, in two separate CSV files (named StrategyA.csv and StrategyB.csv, respectively). The files indicating, for each excluded work, the first criterion that determined its exclusion, can be found in the online folder http://staff.icar.cnr.it/pontieri/papers/jods2021/.

The remaining collections of research works obtained for Strategy A and Strategy B are discussed in a structured form in the following two sections, respectively.

6 Review of PM Methods Using Strategy A

This section briefly discusses and categorizes all the relevant PM works that were reckoned to take advantage of explicit background knowledge, based on the results of our literature search. A summarized view of these approaches is offered in Table 2, where column BK Usage indicates, for each work, which of the two considered modes of exploiting the available Background Knowledge (BK)—i.e., either BK4Learning or BK4Patterns (cf. Sect. 4.2)—is adopted in the work, while column Reference Task reports the PM task addressed in the work.

6.1 Using BK for Process Discovery

To improve the quality of the process models (more precisely, control-flow models) discovered from incomplete/noisy logs, some of the works retrieved by our literature search propose to exploit a set of a-priori known activity constraints, providing a partial description of correct/forbidden process behaviors [27, 39, 42, 103].

In particular, in [42] the discovery task is stated as the search for a process model (specifically represented as a C-net [89]) for a given log L that satisfies a set of precedence constraints provided by the expert. Essentially, these constraints are meant to express requirements (in terms of the existence or non-existence of edges and of paths) on the topology of the model, regarded as a dependency graph (while abstracting from local split/join constructs in the C-net). This problem is shown to be equivalent to a constraint satisfaction problem (CSP) [73] where these precedence constraints are complemented with log-driven precedence constraints, derived automatically from the traces of L. The problem is also shown to be tractable in two cases: when the background constraints only concern the absence of paths, and when they include any kinds of constraints except forbidden paths. Two different graph-based algorithms are proposed in [42] for these two cases, respectively, as well as an extension of the former algorithm that solves the general (intractable) case heuristically.
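To make the four kinds of topological requirements more concrete, the following Python sketch checks whether a candidate dependency graph satisfies a set of edge/path constraints. It is only a verification routine written for illustration, not one of the discovery algorithms of [42]; the graph encoding (a dictionary mapping each activity to its set of direct successors) and the constraint labels are our assumptions.

```python
from collections import deque


def has_path(graph, src, dst):
    """Breadth-first search: is dst reachable from src via one or more edges?"""
    seen = set()
    queue = deque(graph.get(src, ()))
    while queue:
        node = queue.popleft()
        if node == dst:
            return True
        if node not in seen:
            seen.add(node)
            queue.extend(graph.get(node, ()))
    return False


def satisfies(graph, constraints):
    """Check (kind, a, b) constraints, with kind in
    {"edge", "no_edge", "path", "no_path"} (hypothetical labels)."""
    for kind, a, b in constraints:
        edge = b in graph.get(a, ())
        if kind == "edge":
            ok = edge
        elif kind == "no_edge":
            ok = not edge
        elif kind == "path":
            ok = has_path(graph, a, b)
        elif kind == "no_path":
            ok = not has_path(graph, a, b)
        else:
            raise ValueError(f"unknown constraint kind: {kind}")
        if not ok:
            return False
    return True


# Toy dependency graph: a -> b -> c
graph = {"a": {"b"}, "b": {"c"}, "c": set()}
expert = [("path", "a", "c"), ("no_edge", "a", "c"), ("no_path", "c", "a")]
```

Here `satisfies(graph, expert)` holds, whereas adding the constraint `("edge", "a", "c")` would make the check fail, since `c` is reached from `a` only indirectly.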

Fairly similar in spirit to [42] is the approach proposed in [103]. The background knowledge is here expressed by an expert in terms of pairwise activity constraints (precedence/causality, parallelism) and designed start/end activities. These constraints are used to define an ILP problem, which also takes into account activity dependency measures extracted from the input log, in particular a proximity score capturing both direct and indirect succession relationships. The user’s constraints are considered as strong constraints, which can correct log-driven relationships to some extent. For example, even though an activity A never follows activity B in the log, the discovered model is allowed to include an edge from A to B if the expert deems that B is directly caused by A, provided that the proximity score from A to B is high enough. Moreover, [103] presents a system that allows the user to revise the background knowledge in an incremental and interactive way: after discovering a model, the user can define novel constraints (allowing for obtaining a better process model), before running the discovery algorithm again.

Another example of the same category is the approach proposed in [39], where the discovery of a control-flow model from a log L is stated as a multi-relational classification problem, in which pairwise temporal constraints over the activities (including both local and non-local causal dependencies, and parallelism) are known a priori and used as background knowledge. The approach works in four steps: (i) a set of temporal constraints is extracted from L; (ii) L and all the temporal constraints (both those provided by the expert and those derived from the given traces) are used to generate negative examples (precisely, for each prefix of any trace, negative events are generated stating which activities are not allowed to be executed later on in that trace); (iii) using both the log traces (as positive examples) and the artificially generated negative events, a logic program is induced that predicts whether a given activity can occur in a given position of a given trace; (iv) the logic program is converted into a Petri net.
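The idea behind step (ii) can be conveyed by a deliberately simplified Python sketch. It replaces the constraint-based reasoning of [39] with a plain closed-world reading of the log: for each trace prefix, an activity is marked as negative if no trace sharing that prefix ever executes it afterwards. Expert-provided constraints are ignored here, and the function name and log encoding (a list of activity lists) are our assumptions.

```python
def negative_events(log):
    """Map each trace prefix to the activities never observed after it.

    Closed-world simplification: whatever the log does not show after a
    prefix is treated as not allowed after that prefix.
    """
    activities = {a for trace in log for a in trace}
    seen_later = {}
    for trace in log:
        for i in range(len(trace) + 1):
            prefix = tuple(trace[:i])
            # Accumulate every activity occurring after this prefix.
            seen_later.setdefault(prefix, set()).update(trace[i:])
    return {prefix: activities - later for prefix, later in seen_later.items()}


log = [["a", "b", "c"], ["a", "c", "b"], ["a", "d"]]
neg = negative_events(log)
```

For this toy log, the prefix `("a", "b")` is followed only by `c`, so `a`, `b` and `d` are generated as negative events for it. In a real setting such a closed-world reading is sensitive to log incompleteness, which is precisely where a-priori constraints can help.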

The problem of discovering a control-flow model from a noisy log is faced in [74] by inducing a probabilistic graphical model taking the form of an information control net (ICN), the nodes of which represent different process activities. The proposed method takes as further input background knowledge expressed in terms of degrees of belief, associated with elements of the ICN. This knowledge is exploited according to a Bayesian inductive learning scheme, and it is shown to help improve the quality of the discovered process model.

Quite a different, interactive, approach to injecting the user’s knowledge into a process discovery task is adopted in [29]. Here, the user is allowed to build a Petri net process model incrementally, by possibly combining her/his domain knowledge with statistical information extracted (by using PM techniques) from a given event log. This enables a human-in-the-loop scheme where the user and the process mining component can collaborate, while leaving total control to the former over the latter. More specifically, log-driven statistics concerning the ordering relationships between the process activities are derived from the log and presented to the user by visually projecting them onto the process model defined so far. This lets the user make informed decisions about where and how to accommodate a novel activity (i.e., an activity that appears in the log but not yet in the process model). To this end, only three predefined kinds of synthesis rules [21] can be used, which all ensure the soundness of the resulting model.

A similar incremental process discovery approach is proposed in [28], which describes a framework named Prodigy. Rather than just projecting log-driven statistics on the current process model, in this work the PM component is devised to recommend a number of alternative ways to refine the current version of the process model by inserting a novel activity (according to the same kinds of synthesis rules [21] as in [29]). These recommendations are ranked on the basis of how accurate the corresponding resultant process models are in describing the given log. The user can also leave the control to the system according to an “auto-discovery” operation mode (for a chosen number of refinement steps, or until the conformance scores of the model fall under some thresholds), which consists in automatically selecting and applying the top ranked recommendation.

It is worth noting that, differently from all the other works described in this subsection, the incremental PM schemes proposed in [28, 29] do not require explicit domain knowledge to be available at the beginning of the PM session. However, whenever such knowledge is owned by the user, it will guide her/him (together with new statistics extracted from the log) in each incremental refinement of the process model. On the other hand, the updates made by the user at a certain time impact on those that can be performed subsequently, hence allowing for transferring the user’s knowledge from one iteration of the approach to the following ones.

6.2 Using BK for Model Enhancement