Abstract

Peak load forecasting is a critical aspect of power system operations and planning. Accurate forecasting of peak loads significantly impacts the overall efficiency and reliability of a power system. Among the numerous load forecasting methods that are used, ensemble learning algorithms have emerged as a popular choice due to their high accuracy. In this research, the author proposes an innovative methodology that integrates the Differencing Operator with the Sliding Window procedure for training and predicting peak loads using commonly employed ensemble learning models such as GBDT, XGBoost, LightGBM, and CatBoost. The performance of the proposed approach was evaluated by analyzing the prediction error and execution time. The results obtained demonstrated improved accuracy in peak load forecasting, with no impact on execution time.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Electrical peak load forecasting plays a vital role in ensuring the reliability, safety, and economic efficiency of power system operations and planning. It provides valuable reference values and guidance for integrating renewable energy sources, such as wind and solar power, into the smart grid [1,2,3,4]. The research literature has proposed numerous models for electrical peak load forecasting, which can be categorized into two major groups: (1) classic stage, and (2) advanced stage. The classic stage category includes well-known forecasting methods such as Regression [5, 6], Stochastic time series [7, 8], and Exponential Smoothing [9, 10]. In the advanced stage group, researchers have reported the effectiveness of Fuzzy logic [11, 12], Artificial neural network [13,14,15], Support Vector Machines [16, 17], Hybrid Techniques [18,19,20], and Ensemble Learning [21, 22]. In this context, ensemble learning is a machine learning technique that combines predictions from two or more models to increase the accuracy and reliability of the final results. By leveraging the collective predictions of multiple models, ensemble learning can achieve higher accuracy and more reliable predictions compared to individual models. Recently, there has been extensive research on the application of ensemble learning using decision tree-based machine learning algorithms in load forecasting, resulting in remarkable outcomes. Notably, this paper will consider models such as GBDT, XGBoost, LightGBM, and CatBoost, which have demonstrated promising performance in this field [23,24,25,26,27,28,29,30].

Since peak load is a time series, it is common to utilize the Sliding Window procedure when applying ensemble algorithms. This procedure helps partition the data into input and target sets, enabling the training and forecasting processes for the load profile to be performed using ensemble algorithm models [28, 31, 32]. Another aspect examined in this study is the periodicity of peak load. For example, the load characteristics of a specific Monday may exhibit similarities to those of the previous Monday. When using only the Sliding Window procedure in data processing, the cyclic nature of the load data may inadvertently be overlooked. Therefore, in this study, the author recommends a novel approach that involves incorporating the input data Differencing Operator to account for the cyclical characteristics of the load data. More specifically, the analysis will focus on the series Zt = Yt–Yt-d as an alternative to using the original data Yt, where d represents the differencing order. The Differencing Operator, integrated with the Sliding Window procedure, will be employed in combination with ensemble learning algorithms. The proposed method's effectiveness will be assessed through the evaluation of forecast errors and program execution time. The GBDT, XGBoost, LightGBM, and CatBoost algorithms will be sequentially investigated. Each algorithm will consider a large number of hyperparameter combinations. Furthermore, this study will utilize peak load data from two Australian states, New South Wales and Queensland, enhancing the reliability of the research results.

This paper is organized as follows: In Sect. 2, a brief introduction to ensemble algorithms is presented; Sect. 3 proposes a new approach through the combination of the Sliding Window procedure with the Differencing Operator; Sect. 4 conducts empirical assessments on real datasets from two states of Australia; and finally, Sect. 5 presents the conclusions.

2 Review of Ensemble Algorithm

Ensemble learning is a technique that enhances predictive performance by combining multiple models, surpassing the performance of individual models used in isolation. There are three main classes of ensemble learning: bagging, stacking, and boosting [33]. Bagging is an ensemble learning algorithm that creates a diverse group of ensemble members by training models on different subsets of the training dataset. On the other hand, stacking involves training different types of models on the training data to generate predictions, which are then combined using another model. Boosting is an ensemble algorithm that leverages the mistakes made by previous predictors to improve future predictions. Boosting algorithms have gained significant attention in recent years and will also be employed in this paper. Notably, boosting algorithms come in various forms, including GBDT (Gradient Boosting Decision Trees), XGBoost (Extreme Gradient Boosting), LightGBM (Light Gradient Boosting Machine), and CatBoost (Categorical Boosting) [34,35,36].

2.1 Ensemble Algorithms

GBDT algorithm was first introduced by Friedman in 2001, presenting a novel approach that combines Gradient Boosting and Decision Trees in machine learning [37, 38]. In Gradient Boosting, multiple weak learners are connected sequentially, with each learner aiming to minimize the error of the previous learner. Gradient Boosting utilizes gradient descent to construct new weak learners along the direction of the current model's loss function. The Decision Tree plays a crucial role as the main component of GBDT and serves as a weak learner within the Gradient Boosting process. The integration of Gradient Boosting and Decision Trees in GBDT leads to enhanced effectiveness in learning and optimization.

XGBoost is a scalable, end-to-end tree boosting method developed by Chen and Guestrin in 2016 [39]. It is an improved algorithm based on the GBDT model, which uses second-order Taylor expansion on the loss function and incorporates regular terms into the objective function to achieve the optimal solution. This approach helps control the decline of the objective function and the complexity of a model, resulting in better convergence, prevention of overfitting, and ultimately providing higher forecasting accuracy. Additionally, XGBoost processes the data and stores the results before training, enabling their reuse in subsequent iterations to reduce computational complexity and facilitate parallel execution, thereby increasing efficiency.

LightGBM is a novel gradient boosting framework developed by Microsoft Research Asia in 2017 [40]. It is an enhanced version of GBDT that incorporates two key techniques: Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB). The core concept behind GOSS is that larger gradients contribute more to the information gain. The GOSS algorithm identifies samples with high gradients and randomly selects a subset from samples with small gradients. This approach effectively utilizes the samples during the training process, optimizing their impact on the model. On the other hand, EFB focuses on reducing the number of features by merging mutually exclusive ones. EFB consists of two algorithms: one for exclusive feature bundling, which combines related features, and another for merging feature bundles and assigning a value to the resulting bundle.

CatBoost is a new gradient descent algorithm that was presented by Prokhorenkova et al. in 2018 [41]. It is highly effective in predicting categorical features and is based on the utilization of binary decision trees as base predictors. This algorithm incorporates several techniques including permutation methods, one-hot-max-size encoding, greedy methods for new tree splits, and target-based statistics. These techniques are applied as follows: The dataset is randomly permuted into subsets, the labels are converted to integer numbers, and the categorical values are transformed into numerical representations. This combination of techniques enhances the effectiveness of CatBoost in handling categorical data and improves its predictive capabilities.

2.2 Hyperparameters

One concern when using hybrid learning techniques is the hyperparameters of the model, which affect the performance and accuracy of an ensemble model. Hyperparameters are parameters that are set prior to training a machine learning model, unlike model parameters which are learned from data during training. There are many hyperparameters for ensemble algorithms, which can be classified into many groups such as Accuracy, Speed, and Overfitting. In this research, each model of GBDT, XGBoost, LightGBM, and CatBoost will be performed and evaluated in combination with different values of typical hyperparameters to increase the reliability of the results [42, 43]. These key hyperparameters that will be considered in this paper include:

-

The learning rate (lr), which determines the step size at each iteration with respect to the loss gradient function.

-

The maximum depth (md), which is an integer that controls the maximum distance between the root node and a leaf node.

-

The number of estimators (ne), which is the number of trees used in the model.

3 Proposed Method

3.1 Sliding Window Procedure

Time series data refers to a collection of observations on the values that a variable takes at different points in time, following a uniform time–frequency. It can be represented by the equation:

where m ranges from 1 to M, representing the number of observation values.

To incorporate time series data into an ensemble algorithm, the Sliding Window procedure has been utilized to extract both time series data and production data features. The Sliding Window procedure is illustrated in Fig. 1, where a window size of 7 has been employed [32].

When working with a time series dataset of length M, the Sliding Window procedure is applied using a window size denoted as N. Subsequently, the dataset is divided into training and testing subsets, where the number of testing instances is denoted as H. The steps for constructing the dataset using the sliding window procedure are detailed in Table 1.

The training dataset is composed of an input sequence Xtrain = {y1, …, yN; y2, …., yN+1; ….; yM-H-N, …, yM-H-1} and an output sequence Ytrain = {yN+1, …yM-H}. On the other hand, the testing dataset includes an input sequence Xtest = {yM-H+1-N, …, yM-H; yM-H+2-N, …., yM-H+1; ….; YM-N, …, yM-1} and an output sequence Ytest = {yM-H+1, …yM}. Following the Sliding Window procedure mentioned earlier, the data is structured into input and output components. The training data is represented as (Xtrain, Ytrain), while the testing data is represented as (Xtest, Ytest). These training and testing datasets serve as the foundation for applying machine learning algorithm in time series forecasting.

3.2 Differencing Operator

The repetitive nature of time series refers to the regular or periodic patterns that occur in the data over time. These patterns can occur at regular intervals, such as hourly, daily, weekly, monthly, or yearly for electric load patterns. To address the repetitive nature and make time series data more amenable to analysis, the Differencing Operator is often applied. The Differencing Operator involves computing the difference between consecutive observations in the time series by subtracting the previous value from the current value. This captures the changes or fluctuations in the data, as shown by the equation below:

where d is the order of differencing.

The algorithm flowchart of the Differencing Operator is shown in Fig. 2. By applying differencing, the operator helps remove the trend and seasonality from the data, making it stationary. This process allows for better modeling and prediction of the time series.

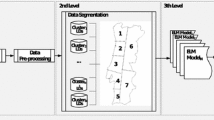

3.3 The Integration of Differencing Operator into the Sliding Window Procedures

Based on the presentation above, the Differencing Operator has the potential to significantly impact the forecast of time series data. Therefore, in this study, the author proposes the utilization of the Differencing Operator integrated into the Sliding Window Procedure for ensemble learning algorithms in the case of peak load forecasting, as illustrated in Fig. 3. Figure 3 depicts the training and testing process of the ensemble learning algorithm. First, ensemble algorithms are trained using {Xtrain, Ytrain} as input and output variables, which generates a regression model called \({{\varvec{m}}{\varvec{d}}{\varvec{l}}}_{{\varvec{i}}}^{(\boldsymbol{ }{\varvec{d}})}\). Secondly, this trained regression model, \({{\varvec{m}}{\varvec{d}}{\varvec{l}}}_{{\varvec{i}}}^{(\boldsymbol{ }{\varvec{d}})}\), produces the output variable \(\widehat{Y}\) corresponding to Xtest in the testing process. The error rate (such as MAPE) between the predict value \(\widehat{Y}\) and real value Ytest values is used to evaluate the effectiveness of the ensemble algorithms.

The pseudocode for the training process is shown in Fig. 4. The input data for this process is assigned to training data, The output of the training stage is a trained model, \({{\varvec{m}}{\varvec{d}}{\varvec{l}}}_{{\varvec{i}}}^{({\varvec{d}})}\), where subscript i represents one case of the combination of hyperparameters, and superscript (d) refers to the differencing order d. The training process is performed as follows:

-

The original data is differenced according to the order d, as defined in Eq. (2).

-

Transforming data into input-target pairs: The input Xtrain and output Ytrain for the training process are established using the Sliding Window procedure discussed earlier in Sect. 3.1.

-

Defining the ensemble model: The ensemble algorithms, namely GBDT, XGBoost, LightGBM, and CatBoost models, are defined within the Python environment for this research. The corresponding libraries used are sklearn, xgboost, lightgbm, and catboost.

-

The model training is conducted using the input-target data (Xtrain and Ytrain), which correspond to the defined model from the previous step.

The pseudocode for the testing process is presented in Fig. 5. During the testing process, the input consists of the testing data, the trained model\({{\varvec{m}}{\varvec{d}}{\varvec{l}}}_{{\varvec{i}}}^{({\varvec{d}})}\), and the training data used to invert the Differencing Operator. The testing process follows these main steps:

-

Obtaining rolling data and differencing offset: The training data is used to obtain the rolling data and determine the differencing offset.

-

Obtaining the input Xtest: The input Xtest is obtained from the rolling data, using the Sliding Window procedure.

-

Obtaining the first predicted value \({\widehat{\text{y}}}_{1}\): Using the model \({{\varvec{m}}{\varvec{d}}{\varvec{l}}}_{{\varvec{i}}}^{(\boldsymbol{ }{\varvec{d}})}\) and the Xtest, the initial predicted value \({\widehat{\text{y}}}_{1}\) is caculated. It is then adjusted by the differencing offset.

-

Updating the rolling data and repeating the process: The rolling data is updated with the actual observation, and the process is repeated for the remaining predicted values \({\widehat{\text{y}}}_{i}\), i = 2, …h.

The output of the testing process is the error rate, which is calculated based on the real values [yn-h+1, yn-h+2, …,yn] and the predicted values [\({\widehat{\text{y}}}_{1}, {\widehat{\text{y}}}_{2}, \dots , {\widehat{y}}_{n}\)]. In this paper, the mean absolute percentage error (MAPE) is calculated to evaluate forecasting accuracy. The MAPE error rate is expressed by the following formula [44, 45]:

Note:

To evaluate the effectiveness of the Differencing Operator based on the Sliding Window Procedure for ensemble algorithms, it is necessary to analyze the performance of these algorithms according to the differencing order d. This is the reason why there is a superscript (d) in the model \({\mathbf{m}\mathbf{d}\mathbf{l}}_{{\varvec{i}}}^{({\varvec{d}})}\), and the error rate \({\mathbf{M}\mathbf{A}\mathbf{P}\mathbf{E}}_{{\varvec{i}}}^{({\varvec{d}})}\) in Figs. 3, 4, and 5as presented above.

Additionally, to enhance the reliability of the results, it is suggested to combine different values of hyperparameters for each ensemble algorithm. That explains why there is the input Hi = {lra, mdb, nec} in the training process, as well as the subscript (i) in the variable \({\mathbf{m}\mathbf{d}\mathbf{l}}_{{\varvec{i}}}^{({\varvec{d}})}\) and the error rate \({\mathbf{M}\mathbf{A}\mathbf{P}\mathbf{E}}_{{\varvec{i}}}^{({\varvec{d}})}\).

Thus, based on the procedure outlined in Fig. 3 and the integrated pseudocode in Figs. 4 and 5, the error rate of each ensemble model can be determined by considering specific values of differencing order (d). This allows for the evaluation of the effectiveness of integrating Differencing Operator into the Sliding Window Procedures based on ensemble algorithms.

4 Experimental Study

4.1 Experimental setup

In this study, the author recommends utilizing the daily peak load data of New South Wales (NSW) and Queensland (QL), Australia, for both training and testing. Figure 6 depicts the peak load graph of these two states from March 4, 2012 to May 31, 2014, along with their corresponding characteristics listed in Table 2. The training phase utilizes data from March 4, 2012 to May 3, 2014, while the testing phase encompasses the period from May 4, 2014 to May 31, 2014, covering a duration of 28 days.

To enhance the reliability of the proposed method, it is crucial to explore multiple cases for each ensemble algorithm. In this study, the author suggests simultaneously investigating different combinations of significant common hyperparameters for the GBD, XGBoost, LightBoost, and CatBoost models. These hyperparameters include the learning rate (lr), maximum depth (md), and number of estimators (ne), as discussed in Sects. 2 and 3. The range and the number of survey participants for these hyperparameters are presented in Table 3 below. The total number of combinations for the lr, md, and ne hyperparameters is 2000 cases.

In the present work, the focus is on forecasting daily peak loads. For this purpose, several differencing values are proposed, including d = 0 (no differencing), d = 1 (first differencing), d = 7 (weekly seasonal differencing), and d = 28 (monthly seasonal differencing). Additionally, for the experimental application of the proposed algorithm to peak load data, three window sizes have been established:

-

Window size = 1, which uses the data taken from the previous day for forecasting.

-

Window size = 7, which uses the data taken from the previous week.

-

Window size = 28, which considers a typical month's data, specifically, from four preceding weeks.

After executing the program and analyzing the results from the mentioned window sizes, the obtained outcomes were quite similar across the board. However, the window size of 7 proved to be the most effective one. Notably, this finding is helpful for the paper focus that is devoted to clarifying the impact of the Differencing Operator. As a result, the window size of 7 was chosen for further study in this research.

The experiments were implemented using the Scikit-learn, math, Matplotlib, and other libraries, as well as the XGBoost, LightGBM, and CatBoost libraries in the Python environment on the Google Colab platform. The runtime type in Colab is TPU with high RAM.

4.2 Evaluation of Error Rates

Figure 7 displays a boxplot of the error rate (MAPE) between the predicted value (\(\widehat{Y}\)) and the actual value (Ytest) for different values of differencing order d (0, 1, 7, 28). The result corresponds to the GBDT, XGBoost, LightBoost, and CatBoost models for the New South Wales and Queensland data cases.

Table 4 presents statistics for each set of differencing order d (d = 0, 1, 7, 28) shown in Fig. 7. For each set, five statistical values are provided, including minimum, 25th percentile, 50th percentile, 75th percentile, and maximum. For example, in the upper-left subfigure of Fig. 7 (GBDT model, New South Wales data), a differencing order d = 0 yields statistical values of 5.84 (minimum), 6.54 (25th percentile), 6.68 (50th percentile), 6.79 (75th percentile), and 7.47 (maximum). Similarly, for the last subfigure on the bottom right (CatBoost model, Queensland data), a differencing order d = 28 gives statistic values of 3.21 (minimum), 3.51 (25th percentile), 3.59 (50th percentile), 3.68 (75th percentile), and 4.32 (maximum).

An in-depth analysis of Figs. 7 and Table 4 reveals that the application of the Differencing Operator to the input data (d = 1, 7, 28) leads to significantly better results, with a drastic reduction in prediction error compared to using the original data (d = 0). Specifically, when examining the GBDT model and New South Wales data, Fig. 7 and Table 4 show that using the original data yields excessively high forecast error values (minimum: 5.84, 25th percentile: 6.54, 50th percentile: 6.68, 75th percentile: 6.79, maximum: 7.47), whereas cases with d = 1 (minimum: 2.45, 25th percentile: 3.11, 50th percentile: 3.30, 75th percentile: 3.49, maximum: 4.01), d = 7 (minimum: 2.82, 25th percentile: 3.13, 50th percentile: 3.23, 75th percentile: 3.36, maximum: 4.11), and d = 28 (minimum: 4.47, 25th percentile: 4.92, 50th percentile: 5.23, 75th percentile: 5.43, maximum: 6.31) demonstrate significantly improved results. Similar trends are observed in all other cases. Moreover, a comparison of the error values across different Differencing Operator cases (d = 1, 7, 28) highlights that the most optimal results are achieved when d = 7.

To accurately evaluate the impact of the Differencing Operator, the next step focuses on calculating the ratio of the error rate between the differencing cases (d = 1, 7, 28) and the original data case (d = 0). Figure 8 displays a boxplot of the error rate ratio for the GBDT, XGBoost, LightGBM, and CatBoost models applied to the New South Wales and Queensland data. The statistical values for each column in Fig. 8 are summarized in Table 5.

The results in Figs. 8 and Table 5 clearly demonstrate the fluctuation range of the error rate ratio for both the New South Wales and Queensland data. For the New South Wales data, the ratio of the error rate ranges from 0.36 to 0.68 for the minimum statistic, 0.46 to 0.75 for the 25th percentile, 0.48 to 0.78 for the median (50th percentile), 0.50 to 0.81 for the 75th percentile, and 0.59 to 0.93 for the maximum statistic. Similarly, for the Queensland data, the ratio ranges from 0.51 to 0.73 for the minimum statistic, 0.58 to 0.85 for the 25th percentile, 0.60 to 0.88 for the median, 0.62 to 0.92 for the 75th percentile, and 0.80 to 1.16 for the maximum statistic.

For the New South Wales data, all error ratios of the Differencing Operator (d = 1, 7, 28) to the original data (d = 0) are less than 1. However, in the Queensland dataset, there are instances where the ratio 28/0 (d = 28/d = 0) exceeds 1, as detailed in Tables 6 below. Table 6 reveals 10 instances in the GBDT model, 12 instances in the XGBoost model, 5 instances in the LightGBM model, and 14 instances in the CatBoost model where the ratios are greater than 1. Considering the total of 2000 combinations of hyperparameters (lr, md, and ne) for each model, the number of cases where the ratio exceeds 1 is exceptionally small. This indicates that the utilization of the Differencing Operator (d = 1, 7, 28) can effectively enhance the precision of the forecasting process for ensemble algorithms. The data analysis also demonstrates that the differencing order of 7 may result in the smallest error ratio compared to the differencing orders of 1 or 28 for most values of the min, 25th, 50th, 75th, and max statistics.

In conclusion, the results confirm that the utilization of the Differencing Operator (d = 1, 7, 28) has a positive impact on reducing errors and improving the accuracy of the forecasting process for ensemble algorithms. The analysis also suggests that a differencing order of 7 tends to yield the smallest error ratio compared to orders of 1 or 28 for various statistical values.

4.3 Evaluation of Execution Time

Figure 9 illustrates a boxplot representing the execution time for different differencing orders (d = 0, 1, 7, 28) corresponding to the GBDT, XGBoost, LightGBM, and CatBoost models applied to the New South Wales and Queensland data cases. Table 7 presents the statistical values for each set of differencing orders (d = 0, 1, 7, 28) as shown in Fig. 9.

A detailed analysis of Fig. 9 and Table 7 reveals that the application of the Differencing Operator (d = 1, 7, 28) does not significantly increase the execution time of the program compared to the case where the original data (d = 0) is used. For example, let's consider the GBDT network with the New South Wales dataset. The results presented in Fig. 9 and Table 7 show that the execution time statistic values for the original data case (d = 0) are as follows: minimum: 0.12 s, 25th percentile: 0.55 s, 50th percentile: 0.95 s, 75th percentile: 1.57 s, and maximum: 3.14 s. These values remain largely unchanged when compared to the cases of d = 1 (minimum: 0.14, 25th percentile: 0.58, 50th percentile: 0.99, 75th percentile: 1.62, and maximum: 3.19), d = 7 (minimum: 0.14, 25th percentile: 0.57, 50th percentile: 0.98, 75th percentile: 1.59, and maximum: 3.15), and d = 28 (minimum: 0.13, 25th percentile: 0.55, 50th percentile: 0.94, 75th percentile: 1.53, and maximum: 3.13). And all other cases have similar results.

In addition, Fig. 10 presents a boxplot illustrating the execution time ratios between the Differencing Operator (d = 1, 7, 28) and the original data case (d = 0). The corresponding statistical values for each column in Fig. 10 are summarized in Table 8. For instance, in the 50th percentile statistical case (median values), the error ratio of the execution time with the Differencing Operator to that with the original data ranges from [0.99 to 1.20] for all New South Wales data cases. Similarly, for the Queensland data, the ratio fluctuates within the range of [0.99–1.18]. These findings indicate that there is no significant difference in the execution time when considering differencing orders of 1, 7, or 28 for the GBDT, XGBoost, LightGBM, and CatBoost models, respectively. Figures 10 and Table 8 consistently demonstrate that the execution time remains largely unchanged when the Differencing Operator is applied.

5 Conclusion

In this study, the author suggests the combination of the input data Differencing Operator with the Sliding Window procedure for ensemble learning algorithms. The objective was to assess the error rate and execution time for the GBDT, XGBoost, LightGBM, and CatBoost models in the forecasting process. Extensive exploration of hyperparameter combinations, such as learning rate, max depth, and number of estimations, was conducted to evaluate the effectiveness of the proposed approach. The results clearly demonstrated that implementing the input data difference approach (d = 1, 7, 28) led to a significant reduction in prediction error. Furthermore, it was observed that the execution time only experienced a slight increase when employing the data partitioning approach. In conclusion, the integration of the Differencing Operator into the Sliding Window Procedure for ensemble learning presents a promising solution to address technical challenges, particularly in the domain of peak load forecasting. This result lays the foundation for the author to further develop the proposed algorithm toward various machine learning models, particularly deep learning models. Additionally, it enables the extension of the algorithm’s application in diverse types of time series data, such as financial and weather data. Moreover, exploring its effectiveness in real-time data processing or under different operational conditions presents an important challenge.

References

Singh, AK.; Ibraheem, Khatoon S.; Muazzam, M.; Chaturvedi, DK.: Load forecasting techniques and methodologies: A review. ICPCES 2012 - 2012 2nd Int Conf Power, Control Embed Syst. 2012;(March 2015). https://doi.org/10.1109/ICPCES.2012.6508132.

Zhang, Y.; Wen, H.; Wu, Q.; Ai, Q.: Optimal adaptive prediction intervals for electricity load forecasting in distribution systems via reinforcement learning. IEEE Trans. Smart Grid. 14(4), 3259–3270 (2023). https://doi.org/10.1109/TSG.2022.3226423

Guo, W.; Che, L.; Shahidehpour, M.; Wan, X.: Machine-Learning based methods in short-term load forecasting. Electr. J. 34(1), 106884 (2021). https://doi.org/10.1016/j.tej.2020.106884

Wu, D.; Lin, W.: Efficient residential electric load forecasting via transfer learning and graph neural networks. IEEE Trans. Smart Grid. 14(3), 2423–2431 (2023). https://doi.org/10.1109/TSG.2022.3208211

Zawadali, M.; Nasmussakib Khan Shabbir, M.; Sifatulalam Chowdhury, M.; Ghosh, A;, Liang, X.: Regression models of critical parameters affecting peak load demand forecasting. Can Conf Electr Comput Eng. 2018;2018-May:31–34. https://doi.org/10.1109/CCECE.2018.8447786.

Hsu, C.C.; Chen, C.Y.: Regional load forecasting in Taiwan––applications of artificial neural networks. Energy Convers. Manag. 44(12), 1941–1949 (2003). https://doi.org/10.1016/S0196-8904(02)00225-X

Kazemzadeh, M.R.; Amjadian, A.; Amraee, T.: A hybrid data mining driven algorithm for long term electric peak load and energy demand forecasting. Energy 204, 117948 (2020). https://doi.org/10.1016/j.energy.2020.117948

Mado, I.; Soeprijanto, A.; Suhartono, S.: Applying of double seasonal ARIMA model for electrical power demand forecasting at PT PLN Gresik Indonesia. Int. J. Electr. Comput. Eng. (IJECE). 8(6), 4892 (2018)

Lim, P.Y.; Nayar, C.V.: Solar irradiance and load demand forecasting based on single exponential smoothing method. Int. J. Eng. Technol. 4(4), 451 (2012)

Ji, P.; Xiong, D.; Wang, P.; Chen, J.: A study on exponential smoothing model for load forecasting. Asia-Pacific Power Energy Eng. Conf., APPEEC. 1, 1–4 (2012)

Yue, LYL.; Zhang, YZY.; Xie, HXH.; Zhong, QZQ.: The fuzzy logic clustering neural network approach for middle and long term load forecasting. 2007 IEEE International Conference on Grey Systems and Intelligent Services. 963–7 (2007)

Nazarko, J.: The fuzzy regression approach to peak load estimation in power distribution systems. IEEE Trans. Power Syst. 14(3), 809–814 (1999). https://doi.org/10.1109/59.780890

Raza, M.Q.; Khosravi, A.: A review on artificial intelligence based load demand forecasting techniques for smart grid and buildings. Renew. Sustain. Energy Rev. 50, 1352–1372 (2015). https://doi.org/10.1016/j.rser.2015.04.065

Arvanitidis, A.I.; Bargiotas, D.; Daskalopulu, A.; Laitsos, V.M.; Tsoukalas, L.H.: Enhanced short-term load forecasting using artificial neural networks. Energies 14(22), 1–14 (2021)

Baliyan, A.; Gaurav, K.; Kumar Mishra, S.: A review of short term load forecasting using artificial neural network models. Procedia Comput. Sci. 48, 121–125 (2015). https://doi.org/10.1016/j.procs.2015.04.160

Arnob, S.S.; Arefin, A.I.M.S.; Saber, A.Y.; Mamun, K.A.: Energy demand forecasting and optimizing electric systems for developing countries. IEEE Access 11, 39751–39775 (2023)

Ceperic, E.; Ceperic, V.; Baric, A.: A strategy for short-term load forecasting by support vector regression machines. IEEE Trans. Power Syst. 4, 4356–4364 (2013). https://doi.org/10.1109/TPWRS.2013.2269803

Wu, L.; Shahidehpour, M.: A hybrid model for integrated day-ahead electricity price and load forecasting in smart grid. IET Gener. Transm. Distrib. 8(12), 1937–1950 (2014)

Laouafi, A.; Mordjaoui, M.; Laouafi, F.; Boukelia, T.E.: Daily peak electricity demand forecasting based on an adaptive hybrid two-stage methodology. Int. J. Electr. Power Energy Syst. 77, 136–144 (2016). https://doi.org/10.1016/j.ijepes.2015.11.046

Campbell PRJ. A hybrid modelling technique for load forecasting. 2007 IEEE Canada Electrical Power Conference, EPC 2007.;435–9 (2007)

Wang, L.; Mao, S.; Wilamowski, B.M.; Nelms, R.M.: Ensemble learning for load forecasting. IEEE Trans. Green Commun. Netw. 4(2), 616–628 (2020). https://doi.org/10.1109/TGCN.2020.2987304

Guo, H.; Tang, L.; Peng, Y.: Ensemble deep learning method for short-term load forecasting. Proc - 14th Int Conf Mob Ad-Hoc Sens Networks, MSN 2018.;(Dl):86–90. (2018) https://doi.org/10.1109/MSN.2018.00021.

Chen, B.; Lin, R.; Zou, H.: A short term load periodic prediction model based on GBDT. Int Conf Commun Technol Proceedings, ICCT. 2019-:1402–1406. (2019) https://doi.org/10.1109/ICCT.2018.8600009.

Liu, S.; Cui, Y.; Ma, Y.; Liu, P.: Short-term load forecasting based on gbdt combinatorial optimization. 2nd IEEE Conf Energy Internet Energy Syst Integr EI2 2018 - Proc. Published online (2018):1-5. https://doi.org/10.1109/EI2.2018.8582108

Liao, X.; Cao, N.; Li, M.; Kang, X.: Research on short-term load forecasting using XGBoost based on similar days. Proc - 2019 Int Conf Intell Transp Big Data Smart City, ICITBS 2019. Published online (2019):675-678. https://doi.org/10.1109/ICITBS.2019.00167

Tran, N.T.; Tran, T.T.G.; Nguyen, T.A.; Lam, M.B.: A new grid search algorithm based on XGBoost model for load forecasting. Bull Electr. Eng. Inf. 12(4), 1857–1866 (2023). https://doi.org/10.11591/eei.v12i4.5016

Hao, M.; Tian, Y.; Gao, J.; Wang, Y.; Tian, R.: Classification of short-term loads of enterprises using LightGBM. IOP Conf Ser Earth Environ Sci. 2020;526(1). https://doi.org/10.1088/1755-1315/526/1/012179.

Wang, Y.; Chen, J.; Chen, X., et al.: Short-Term Load Forecasting for Industrial Customers Based on TCN-LightGBM. IEEE Trans. Power Syst. 8950, 1–1 (2020). https://doi.org/10.1109/tpwrs.2020.3028133

Yang, X.; Chen, Z.: A hybrid short-term load forecasting model based on CatBoost and LSTM. 2021 IEEE 6th Int Conf Intell Comput Signal Process ICSP 2021. 2021;(Icsp):328–332. https://doi.org/10.1109/ICSP51882.2021.9408768.

Zhang, C.; Chen, Z.; Zhou, J.: Research on short-term load forecasting using K-means clustering and catboost integrating time series features. Chinese Control Conf CCC. 2020;2020-July(July 2016):6099–6104. https://doi.org/10.23919/CCC50068.2020.9188856.

Semmelmann, L.; Henni, S.; Weinhardt, C.: Load forecasting for energy communities: a novel LSTM-XGBoost hybrid model based on smart meter data. Energy Inf. 5, 1–21 (2022). https://doi.org/10.1186/s42162-022-00212-9

Park, S.; Jung, S.; Jung, S.; Rho, S.; Hwang, E.: Sliding window-based LightGBM model for electric load forecasting using anomaly repair. J. Supercomput. 0123456789, 27–30 (2021). https://doi.org/10.1007/s11227-021-03787-4

Khwaja, A.S.; Anpalagan, A.; Naeem, M.; Venkatesh, B.: Joint bagged-boosted artificial neural networks: Using ensemble machine learning to improve short-term electricity load forecasting. Electr. Power Syst. Res. 2020(179), 106080 (2019)

Zhao, X.; Xia, N.; Xu, Y.; Huang, X.; Li, M.: Mapping population distribution based on XGBoost using multisource data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 14, 11567–11580 (2021). https://doi.org/10.1109/JSTARS.2021.3125197

Liang, W.; Luo, S.; Zhao, G.; Wu, H.: Predicting hard rock pillar stability using GBDT, XGBoost, and LightGBM algorithms. Mathematics 8(5), 1–17 (2020). https://doi.org/10.3390/MATH8050765

Ben, J.S.; Mefteh-Wali, S.; Viviani, J.L.: Forecasting gold price with the XGBoost algorithm and SHAP interaction values. Ann. Oper. Res. (2022). https://doi.org/10.1007/s10479-021-04187-w

Friedman, J.H.: Greedy function approximation: a gradient boosting machine. Ann. Stat. 29(5), 1189–1232 (2001). https://doi.org/10.1214/aos/1013203451

Friedman, J.H.: Stochastic gradient boosting. Comput. Stat. Data Anal. 38(4), 367–378 (2002). https://doi.org/10.1016/S0167-9473(01)00065-2

Obiora, CN.; Ali. A.; Hasan AN.: Implementing extreme gradient boosting (xgboost) algorithm in predicting solar irradiance. 2021 IEEE PES/IAS PowerAfrica, PowerAfrica 2021. (2021);1–5

Machado, MR.; Karray, S.; De Sousa, IT.: LightGBM: An effective decision tree gradient boosting method to predict customer loyalty in the finance industry. 14th international conference on computer science and education, ICCSE 2019. (2019);(Iccse):1111–6.

Hancock, J.T.; Khoshgoftaar, T.M.: CatBoost for big data: an interdisciplinary review. J. Big Data. 7(1), 94 (2020)

Wang, L.; Wu, J.; Zhang, W.; Wang, L.; Cui, W.: Efficient seismic stability analysis of embankment slopes subjected to water level changes using gradient boosting algorithms. Front. Earth Sci. 9, 1–9 (2021)

Yin, L.; Ma, P.; Deng, Z.: Jlgbmloc—a novel high-precision indoor localization method based on lightgbm. Sensors (2021). https://doi.org/10.3390/s21082722

Yu, C.N.; Mirowski, P.; Ho, T.K.: A sparse coding approach to household electricity demand forecasting in smart grids. IEEE Trans. Smart Grid. 8(2), 738–748 (2017). https://doi.org/10.1109/TSG.2015.2513900

Hoverstad, B.A.; Tidemann, A.; Langseth, H.; Ozturk, P.: Short-term load forecasting with seasonal decomposition using evolution for parametershould be a spacetuning. IEEE Trans. Smart Grid (2015). https://doi.org/10.1109/TSG.2015.2395822

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tran, T.N. Research on the Impact of the Differencing Operator on Ensemble Learning Algorithms in the Case of Peak Load Forecasting. Arab J Sci Eng (2024). https://doi.org/10.1007/s13369-024-09460-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s13369-024-09460-1