Abstract

The main objective of blind image deblurring is to restore a high-quality sharp image from a blurry input by estimating the unknown blur kernel and the latent sharp image. This is an ill-posed problem, and existing methods rely on a range of additional heuristics to estimate the blur kernel accurately. A major drawback of these methods is that the estimated kernel does not yield the minimum of the cost function for the truly sharp image. In this paper, an improved blind image deblurring approach named Scharr Edge-based regularization (SEBR) is proposed. It combines the Scharr edge-based (SEB) gradient operator with a normalized regularization to estimate the blur kernel accurately and to give the minimum cost for sharp images. First, the SEB operator is applied to the blurry input to preserve the spatial relations between the initial pixel values of the image. The isotropic nature of the Scharr operator provides rotation symmetry while optimizing the localization of salient edges to produce a gradient image. Finally, after the normalized regularization is applied, the blur kernel is estimated with high precision and is then used to recover a sharp image. Experimental evaluations are performed on publicly available datasets of synthetic and real blur data. The proposed SEBR algorithm is fast and robust to parameter selection. The experimental results on synthetic data show that SEBR improves PSNR and SSIM values. SEBR also achieves favorable visual quality against state-of-the-art deblurring techniques on real blurred images with complex backgrounds, uniform and non-uniform blur, and low lighting conditions.

1 Introduction

In recent years, image deblurring has gained significant attention from researchers due to the massive usage of high-quality multimedia in an array of valuable applications such as medical imaging [1,2,3], security and surveillance [4, 5], remote sensing [6,7,8], and underground object detection [9]. Unfortunately, images captured with either personal hand-held or surveillance cameras are degraded by different types of contamination such as noise [10], blur [11,12,13,14,15,16,17,18,19,20], and fog [21]. The primary cause of this kind of degradation is blurring, which corrupts the visual quality of latent images by averaging the rapid changes in pixel intensities. Blurring is determined by the relative translation or rotation between the objects and the camera. It removes the high spatial frequencies, thereby obscuring or distorting the fine details of the image. Several methods have been proposed to enhance the visual quality of corrupted images; among them, image deblurring is the primary countermeasure, restoring the quality of degraded images through precise estimation of the convolution kernel that causes the blurriness. There are various kinds of blurring functions, such as Gaussian, motion, average, and defocus blur.

Based on prior knowledge of the convolved blur kernel and the original sharp image, image deblurring techniques are broadly classified into two major categories: Blind image deblurring (BID) and Non-Blind image deblurring (NBID) [22,23,24]. In BID the blur kernel is not known, whereas in NBID the convolved kernel causing the blurriness is known. The literature indicates that BID is a challenging problem because its solutions are unstable and non-unique [25,26,27,28,29]. Therefore, this work focuses on BID techniques for image restoration.

In blind image deblurring it is very important to estimate the unknown blur kernel accurately. It has been observed that well-detected edges of objects in degraded images are fundamental to the correct estimation of the blur kernel. Poorly detected edges can neither determine the blur kernel effectively nor remove ringing effects near strong edges [30,31,32]. Most image deblurring techniques do not consider weak edges and their rotation symmetry while estimating the blur kernel. Neglecting such minor structures results in poor estimation of the blur kernel and does not give the minimum cost for the sharp image. A range of additional image priors is required to accurately estimate a blur kernel that can restore a high-quality sharp image.

In this paper, we focus on accurately estimating the blur convolution kernel that gives a high-quality sharp image with minimum cost. An accurately estimated blur kernel is vital because it can then be combined with any known non-blind deblurring technique to recover the latent sharp image. The normalized form of regularization, which was used as a measure of sparsity in [33], is applied in our work to the gradient image and gives a low cost for sharp images. Blur always attenuates the high frequencies of a sharp image and hence decreases the value of the L1 norm, because the L1 norm is scale variant. The benefit of the normalized regularization is that blur decreases both the L1 and L2 norms, but the L2 norm decreases more; the L1/L2 ratio therefore increases with blur and attains its minimum for sharp images. The whole procedure is divided into three main phases: (i) edge enhancement using the Scharr Edge-based (SEB) operator, (ii) estimation of the blur kernel from the normalized regularization of the gradient image, and (iii) recovery of the latent sharp image. SEBR is simple because it does not need the complex schemes that other techniques apply to overcome the shortcomings of earlier deblurring models. The kernel estimated with SEBR is more accurate because it retains the salient edges and minor texture areas of objects in the degraded image, which results in a fine-quality sharp image with minimum cost. The key contributions of this research work are listed below.

- An improved kernel estimation approach based on the Scharr Edge-based (SEB) operator and normalized regularization is proposed. It optimizes salient edge extraction from blurred images and achieves rotation symmetry, which results in an accurate kernel with the lowest cost for sharp images.
- The proposed SEBR can detect outliers in the degraded image and minimize the ringing artifacts near strong edges while improving the contrast of the sharp image at a comparatively low computational cost.
- The blur convolution kernel estimated with SEBR is scale-invariant because of the normalized regularization, flexible to various types of blur with different centers and rotations, and applicable to an extensive range of deblurring problems irrespective of image characteristics.
- The proposed technique effectively processes real blurred images with low light, complex scenes, and uniform and non-uniform blur, and gives favorable results against other state-of-the-art deblurring approaches.

The rest of the paper is organized as follows: Sect. 2 critically reviews the techniques proposed for BID. Section 3 presents edge enhancement with the Scharr Edge-based detection operator, and Sect. 4 presents the proposed SEBR approach for blind image deblurring in detail. Experimental deblurring results and discussions are presented in Sect. 5. Lastly, Sect. 6 concludes the paper.

2 Related Work

In previous years, a wide variety of models have been proposed for blind deblurring of corrupted images; however, they were not able to give high-quality results because of the restrictions imposed on the kernel priors and the latent image. The techniques used for estimating the blur kernel fall into two main categories: (i) Variational Bayes-based (VB-based) techniques [16,17,18, 34], and (ii) Maximum a Posteriori-based (MAP-based) techniques [11, 13, 19, 32, 35,36,37,38]. VB-based techniques avoid trivial solutions but are not cost-effective compared with MAP-based approaches. In MAP-based approaches, the blur kernel is estimated in two ways: (1) methods that utilize the salient structures of objects in images [11, 13, 19, 32, 36, 37, 39,40,41], and (2) sparse regularization-based methods [10, 13, 15, 28, 35, 38, 42, 43].

In this paper, we explore MAP-based approaches because of their cost-effectiveness. The techniques proposed in [11, 19] for single image deblurring were based on the edges of objects in gradient images, evaluated through their L1 norm. They were not able to yield effective results because of low-quality blur kernel estimation, among other factors: the estimated point spread function (PSF) recovered the latent image with high quality only under specific conditions. The L1 norm was combined with a ringing effect suppression technique in [37] to enhance the visual quality of the recovered sharp image. That approach gives comparatively better deblurring results, but some conditions and parameters must be set to obtain good quality. For further improvement of the recovered image, a regularization method based on the ratio of the L1 norm to the L2 norm, applied to the high frequencies of degraded images, was proposed in [28]. This method performs better but lacks the capability to model both the image sparsity and the blurry gradients. Consequently, the resulting deblurred images had ringing effects near the edges and were over-smoothed in some cases.

Another technique, based on a random transform, was introduced in [10] for estimating the convolved blur kernel. It deblurred and denoised the image using singular value decomposition and recovered the sharp image. The random transformation of each image was estimated by applying several directional operators in various orientations. It performs better, but ringing was visible in the restored sharp image. A generalized sparse representation-based deblurring technique introduced in [38] removed the blur caused by both uniform and non-uniform motion. This technique mathematically proved the accuracy of the L0 norm for the sparse representation, and it converged in a few iterations because no extra filters were required for the optimization. However, it was not able to give good results on images containing text regions. An effective kernel estimation technique based on data fidelity terms was introduced in [14] to suppress the negative effect of outliers. This method effectively restored the blurred image but at the expense of greater computational cost. A generalized iteration-wise shrinkage thresholding method was proposed to preserve the smooth structure of objects in the restored image [32]. In this method, the generalized shrinkage thresholding (GST) algorithm was used to eliminate small details while sharpening the salient edges of objects in blurred images, but it was not able to estimate the kernel accurately. Dark channel-based image priors were employed in [15] under the assumption that the dark channel of a blurred image is less sparse, which achieves good results in different types of scenes. Another method proposed in [43] used Gaussian priors for the estimation of the blur kernel. However, it was incapable of retaining the salient structures of objects for two main reasons. First, it lacked a sparse representation, which led to a noisy solution with dense structures; second, the recovered kernel was not of fine quality.

A MAP-based framework for kernel estimation introduced in [42] used edge priors specifically related to the scene. It works well on some images but fails on a variety of scene images. Row-column sparsity was utilized for blind image deblurring in [44]; in this method, the sparsity optimization problem was solved by including the rows and columns in the kernel estimation. Later, a method [45] was proposed that combines the dark and bright channels and utilizes the extreme channel prior for deblurring corrupted images. These methods achieved better results, but they estimate the kernel poorly when the image has no evident bright and dark pixels. Inspired by the dark channel prior, a technique presented in [46] is based on sparse regularization using the local minimum pixels in the image, which greatly speeds up the deblurring process. Another blind deblurring technique [47], based on the local maximum gradient (LMG) prior, gives good performance on many different scene images. The method presented in [41] simplifies LMG and introduces the patch-based maximum gradient, which lowers the computational cost. The blind deblurring approach in [40] designs an image prior based on the ratio of extreme pixel values, which are affected by blur. The image is divided into patches for feature extraction, and the patches are finally overlapped to obtain detailed information. It gives good results, but its performance decreases when the image has severe noise, and its computational complexity and run time are high. An effective regularization method based on probability-weighted moments was proposed in [48]; it preserves the trivial structures of objects in an image and restores a sharp image of good quality, but it has a large computation time.

Because gradient- and intensity-based priors are mostly applied to single or adjacent pixels, they do not account for the relationships among pixels on larger scales. To better reflect these relationships, patch-based deblurring approaches have been developed. A method inspired by the statistical properties of natural images is presented in [49] that adopts two priors based on edges in patches. Another patch-based method, proposed in [50], combines similar characteristics in different patches of blurred images. In [51], a deblurring approach combining the selection of salient edges with low-rank constraints is presented. A deblurring method based on minimum patch constraint imposing zero is presented in [52]. These patch-based methods require frequent searching and therefore take much more time to execute.

The image deblurring techniques discussed above [19, 42, 46, 47, 51, 53] mostly follow MAP-based or gradient prior-based approaches. In MAP-based deblurring frameworks, blur kernel estimation is highly dependent on the extraction of sharp edges. At present, the main edge extraction methods rely on selecting a threshold value for retrieving strong edges. Deblurring methods based on explicitly selecting edges from corrupted images have several drawbacks. Some images do not contain any noticeable edges that can be retrieved, so the resulting deblurred images are mostly over-sharpened and the noise in them is amplified. These methods are also very sensitive to the selection of parameters across different images. The regularization-based techniques [37, 43, 48, 54] need the finer details of the images for kernel estimation, and they favor blurry images over sharp images. They also lack sparsity, which leads them to produce dense and noisy output images. Therefore, a prior is needed that is relevant to the gradient information of the degraded image but assigns a lower cost to sharp images than to blurry ones. This work presents a Scharr edge-based regularization (SEBR) prior that uses both the gradient information of the image and a normalized form of regularization to accurately estimate the blur kernel that gives the minimum cost for recovering the sharp image.

3 Edge Extraction with Scharr Edge-Based Gradient Operator

In deblurring tasks, edges, being the high-frequency components, play a significant role in enhancing the quality of the recovered image. Such information is also directly associated with visual cognition and human perception. Clean, clear images have sharp, fine-quality edges, whereas in blurred images abrupt changes in intensity are smoothed out by the averaging of neighboring pixel areas. The edge gradient is reduced under Gaussian blur, so edge information around the patches is weak. Likewise, edge information becomes dense and unstable in motion-blurred images because of the ghosting effect. Because this edge-based information is deficient, our proposed model focuses on these gradient regions to recover the sharp image.

Numerous operators are available for extracting edges from images, such as Sobel, Prewitt, Roberts, Canny, Laplacian, and Laplacian of Gaussian (LoG) [55]. These edge detectors smooth the image to remove noise while calculating the gradient. Convolving an image with the Sobel edge detector only approximates the vertical and horizontal pixel intensity differences and does not carry any information about rotational symmetry [56, 57]. Scharr et al. [58] improved the Sobel edge detection filter by minimizing the weighted angle difference in the frequency domain and proposed a new edge detection filter known as the Scharr filter. The Scharr filter is as fast as the Sobel filter but more accurate when calculating the derivatives of the image. Scharr edge-based gradient operators of different sizes can be used for detecting edges in a blurred image [59].

The horizontal and vertical masks of Scharr edge detector of size 3 × 3 are given below.
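For reference, the widely used 3 × 3 Scharr coefficients are reproduced here. The names xmask and ymask follow Sect. 4.1; the overall sign and the absence of a normalization factor are conventions assumed for this sketch and may differ from the authors' implementation.

```latex
\[
\text{xmask} =
\begin{bmatrix}
 -3 & 0 & 3 \\
-10 & 0 & 10 \\
 -3 & 0 & 3
\end{bmatrix},
\qquad
\text{ymask} =
\begin{bmatrix}
-3 & -10 & -3 \\
 0 &   0 &  0 \\
 3 &  10 &  3
\end{bmatrix}
\]
```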

We use the Scharr edge-based gradient operator to extract the salient edges from the captured degraded images. The motivation is twofold: (1) rotation symmetry, and (2) noise suppression by the edge masks. The SEB gradient operator is able to achieve rotation symmetry, which leads to the detection of edges along all orientations and optimizes edge extraction from blurry and noisy images. The SEB operator performs the same amount of smoothing in all directions. In general, edges in an image are not known in advance to be oriented in any specific direction; therefore, there is no prior reason to smooth more in one direction than in others. Rotational symmetry implies that the Scharr edge masks are not biased toward edge detection in any specific direction.

Scharr edge masks behave like a single-lobe filter and remove noise by replacing every image pixel value with a weighted average of the neighboring pixel values. The weight assigned to a neighboring pixel decreases monotonically with its distance from the center pixel, because an edge is a local feature of the image and giving more significance to far-away pixels would distort the features during filtering [58]. The Fourier spectrum of the SEB operator also shows a single hemisphere in the frequency domain. Captured images are mostly corrupted by unwanted frequency content such as Gaussian noise and other fine textures. Edges, being the most important features within images, have components at both high and low frequencies. The single lobe of the SEB operator in the Fourier spectrum gives an output image that is not corrupted by high-frequency components and retains most of the desirable details of the image. The Scharr edge filter simply calculates the magnitude of the gradients and efficiently detects both weak and strong edges, because it is equally sensitive to narrow and wide boundary lines, as shown in Fig. 1.
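The gradient-magnitude computation described in this section can be sketched in a few lines of plain Python. This is an illustrative sketch only: the function names `filter3x3` and `scharr_magnitude` are hypothetical, the masks use the standard Scharr coefficients with an assumed sign convention, and borders are simply dropped rather than padded.

```python
import math

# Standard 3x3 Scharr coefficients (sign convention assumed for this sketch).
XMASK = [[-3, 0, 3], [-10, 0, 10], [-3, 0, 3]]   # responds to horizontal intensity change
YMASK = [[-3, -10, -3], [0, 0, 0], [3, 10, 3]]   # responds to vertical intensity change


def filter3x3(img, mask):
    """Slide a 3x3 mask over img with stride 1, keeping only the valid interior.

    The mask is not flipped (correlation); for these antisymmetric masks this
    only changes the sign of the response, not the gradient magnitude.
    """
    h, w = len(img), len(img[0])
    return [[sum(mask[i][j] * img[r - 1 + i][c - 1 + j]
                 for i in range(3) for j in range(3))
             for c in range(1, w - 1)]
            for r in range(1, h - 1)]


def scharr_magnitude(img):
    """Combine the two directional responses into one edge-strength map."""
    gx = filter3x3(img, XMASK)
    gy = filter3x3(img, YMASK)
    return [[math.hypot(a, b) for a, b in zip(row_x, row_y)]
            for row_x, row_y in zip(gx, gy)]


# A vertical step edge: every interior pixel sees the same horizontal jump.
step = [[0, 0, 1, 1] for _ in range(4)]
edges = scharr_magnitude(step)
```

On a constant image the same call returns zeros everywhere, which is the expected behavior of a derivative filter.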

4 Proposed Methodology of SEBR

In this article, an improved method named Scharr Edge-based regularization (SEBR) is proposed for the blind deconvolution of images. SEBR is executed in three main steps: (1) edge enhancement using the Scharr Edge-based (SEB) operator, (2) estimation of the convolved blur kernel, and (3) recovery of the latent sharp image. Figure 2 presents the proposed approach. The observed blurred image (f (x, y)) is down-sampled to a certain size. The Scharr edge detection operator is then applied to the down-sampled blurry image to extract the edge-based information of objects at different levels. The resulting edge map (Edge-Map) consists of both weak and strong edges. Subsequently, a trilateral filter is applied to the edge map. The trilateral filter not only enhances the weak edges but also mitigates the effect of noise; it is a refined version of the bilateral filter that suppresses impulse noise effectively and sharpens the edge areas of objects in the blurred image. The kernel estimated with SEBR can therefore recover sharp images affected by either Gaussian or impulse noise. The kernel (k) is estimated as described in Sect. 4.1. Afterward, the initial image (f (x, y)) is updated using the fast Iterative Shrinkage-Thresholding Algorithm (ISTA). Finally, a high-quality deblurred image is obtained using a non-blind deblurring method.

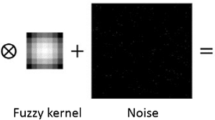

The following equation is used to model the captured blur image.
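The display equation did not survive in this copy of the text. Based on the symbol definitions that accompany it (B, I, k, ⊗ and N), the standard convolutional blur model it refers to can be reconstructed as:

```latex
\[
B = I \otimes k + N \tag{1}
\]
```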

In the above equation, B denotes the captured image corrupted by blur, I denotes the original sharp image that becomes blurred after convolution with the kernel k, and ⊗ denotes the convolution operator. N denotes the environmental noise that also degrades the image during capture. The main objective of our work is to develop an image deblurring technique that restores the original image I from the input blurred image B through the estimation of a high-quality blur convolution kernel k.

The process of blind image deblurring comprises two main steps: (1) estimating the unknown blur convolution kernel, and (2) recovering the original sharp image. The quality of the recovered image I can be greatly improved through precise estimation of the unknown blur convolution kernel k. The standard process used for blind image deblurring is given in the following algorithm.

4.1 Blind Estimation of Blur Convolution Kernel

In the proposed approach, the blur convolution kernel k, which is convolved with the captured image and blurs it, is estimated on the highest frequencies of the image, as in [28]. We take the blurred image B as input and apply the Scharr edge-based (SEB) filters to obtain a high-frequency image y. The horizontal and vertical edge-based information is extracted by convolving xmask and ymask, respectively, with the down-sampled image, as shown in Eqs. (2) and (3); Eq. (4) gives the combined strength of the horizontal and vertical edges.
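The three equations referred to here are missing from this copy. A reconstruction consistent with the description, assuming the usual Euclidean gradient magnitude for Eq. (4) and writing I for the down-sampled image, would be:

```latex
\begin{align}
G_{x} &= \text{xmask} \otimes I \tag{2} \\
G_{y} &= \text{ymask} \otimes I \tag{3} \\
G &= \sqrt{G_{x}^{2} + G_{y}^{2}} \tag{4}
\end{align}
```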

Here, \(G_{x}\) and \(G_{y}\) are the responses obtained by convolving the image (I) with xmask and ymask, respectively. \(G_{x}\) measures intensity change along the horizontal direction and therefore responds to vertical edges, while \(G_{y}\) measures change along the vertical direction and responds to horizontal edges. Note that a stride of 1 is used to preserve the continuity of edges. The model equation for the proposed SEBR-based blind image deblurring is given in Eq. (5).
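Eq. (5) itself is missing from this copy. The text describes it as a likelihood term, an L1/L2 regularizer on x, and an L1 regularizer on k, weighted by λ and \(\sigma\). Assuming it follows the formulation of [28] on which the method builds, a plausible reconstruction is given below; which parameter weights which term is an assumption.

```latex
\[
\min_{x,\,k} \; \lambda \left\| x \otimes k - y \right\|_{2}^{2}
 + \frac{\left\| x \right\|_{1}}{\left\| x \right\|_{2}}
 + \sigma \left\| k \right\|_{1}
 \quad \text{subject to} \quad k \ge 0, \;\; \sum_{i} k_{i} = 1 \tag{5}
\]
```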

Here k represents the unknown blur convolution kernel and x represents the original sharp image, which is also unknown. λ and \(\sigma\) are the parameters that control the optimization and stability of the kernel k and the image regularization, and the symbol ⊗ represents the 2D convolution operation. Considering the physical principles of blur formation, two constraints bound the blur kernel k: (1) k ≥ 0, and (2) \(\sum_{i} k_{i} = 1\).

Eq. (5) comprises three main parts. The first term is the likelihood of the deblurring model shown earlier in Eq. (1). The second term is the Scharr edge-based regularization, which is based on detecting salient edges at different levels and applying the L1/L2 regularization \(\left\| x \right\|_{1} / \left\| x \right\|_{2}\) to the image x. SEBR further enhances the salient edge detection for estimating the kernel k and encourages scale-invariant sparsity during the deblurring process. The third term is an L1-norm regularization applied to the kernel k to reduce the effect of noise in the blurry image and to give a sparse representation. To obtain a well-optimized solution, we start from an initialization of the latent image x and the blur convolution kernel k, and then update both in an alternating manner, following the method proposed in [28].
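The behavior of the L1/L2 term can be checked on a toy signal. The sketch below is illustrative only: the five-sample "gradient" vectors are made-up values, where `blurred` is what the box-like kernel [0.25, 0.5, 0.25] produces from `sharp`, and `l1_over_l2` is a hypothetical helper name.

```python
import math


def l1_over_l2(v):
    """Normalized sparsity measure ||v||_1 / ||v||_2 (v must be non-zero)."""
    l1 = sum(abs(a) for a in v)
    l2 = math.sqrt(sum(a * a for a in v))
    return l1 / l2


# Toy gradient of a sharp signal and the same gradient after blurring with
# the kernel [0.25, 0.5, 0.25] (values worked out by hand for this example).
sharp = [0.0, 1.0, -1.0, 0.0, 0.0]
blurred = [0.25, 0.25, -0.25, -0.25, 0.0]

ratio_sharp = l1_over_l2(sharp)        # L1 = 2.0, L2 = sqrt(2)
ratio_blurred = l1_over_l2(blurred)    # L1 = 1.0, L2 = 0.5

# Scaling the signal leaves the ratio unchanged (scale invariance).
ratio_scaled = l1_over_l2([3.0 * a for a in blurred])
```

Both norms drop after blurring, but the L2 norm drops by a larger factor, so the ratio is higher for the blurred signal than for the sharp one.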

For updating x and k, Eq. (5) is divided into two sub-problems, as given below:
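The two sub-equations are missing from this copy. Assuming Eq. (5) consists of the likelihood, L1/L2 and kernel L1 terms described in the text, and following the alternating scheme of [28], they would take the following form (a reconstruction, not the authors' typeset equations):

```latex
\begin{align}
\min_{x} \; & \lambda \left\| x \otimes k - y \right\|_{2}^{2}
 + \frac{\left\| x \right\|_{1}}{\left\| x \right\|_{2}} \tag{6} \\
\min_{k} \; & \lambda \left\| x \otimes k - y \right\|_{2}^{2}
 + \sigma \left\| k \right\|_{1}
 \quad \text{subject to} \quad k \ge 0, \;\; \sum_{i} k_{i} = 1 \tag{7}
\end{align}
```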

4.1.1 Updating of Intermediate Image x

Eq. (6) is highly non-convex because of the normalized regularization term \(\left\| x \right\|_{1} / \left\| x \right\|_{2}\). However, it becomes convex if the denominator is fixed to its value from the previous iteration, which makes the problem L1-regularized. To solve this L1-regularized problem we then use the fast iterative shrinkage-thresholding algorithm (ISTA) [60] because of its enhanced rate of convergence and restoration capability. The fast ISTA algorithm for solving the linear inverse problem is shown below in Algorithm 2.

In the above algorithm, S stands for the soft shrinkage operator applied to the input image vector x. This operator shrinks every component of the input vector towards zero, as shown in Eq. (8).
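The soft shrinkage operator has the standard closed form \(S_{\alpha}(x)_{i} = \max(\left| x_{i} \right| - \alpha, 0)\,\mathrm{sign}(x_{i})\). A minimal sketch follows; the function name `soft_shrink` and the threshold symbol α are choices made for this example.

```python
import math


def soft_shrink(x, alpha):
    """Shrink every component of x towards zero by alpha; small entries become 0."""
    out = []
    for v in x:
        mag = abs(v) - alpha
        out.append(math.copysign(mag, v) if mag > 0.0 else 0.0)
    return out


shrunk = soft_shrink([3.0, -0.5, 1.0, -2.0], 1.0)
```

Components whose magnitude is at most α are set exactly to zero, which is what produces sparse solutions in the x-update.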

This algorithm is fast and simple: it involves only multiplications of the matrix of the kernel k with the image vector x, followed by a component-wise shrinkage. ISTA is applied in the innermost iterations of the x-updating algorithm, and the outer loop re-estimates the weighting on the likelihood term by updating the denominator \(\left\| x \right\|_{2}\) used in Eq. (6). Algorithm 3 is used for updating the initialized image x.

In the above algorithm, \(k^{T}\) denotes the conjugate transpose of the matrix of the estimated kernel k, and \(\lambda_{\max}(k^{T}k)\) denotes the maximum eigenvalue of \(k^{T}k\). The resulting value xM is then used for estimating the blur kernel in the k-update sub-problem. Our proposed approach is used for the estimation of the blur kernel as elaborated in Algorithm 3. Estimation based on the gradient values of the image has been shown to give more precise results [16]. Because of the normalized form of regularization the problem is highly non-convex, so we use both outer and inner iterations to reduce the cost function of Eq. (6).
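The inner and outer loops of the x-update can be sketched on a 1-D signal. This is a simplified illustration under stated assumptions, not the authors' Algorithm 3: it uses plain (non-accelerated) ISTA, zero-padded 1-D convolution, and hypothetical function names; the step size relies on the fact that \(\lambda_{\max}(k^{T}k) \le 1\) when k ≥ 0 and its entries sum to 1.

```python
import math


def conv_same(x, k):
    """Zero-padded 'same' 1-D convolution of x with an odd-length kernel k."""
    r, n = len(k) // 2, len(x)
    return [sum(kj * x[i - j + r] for j, kj in enumerate(k) if 0 <= i - j + r < n)
            for i in range(n)]


def soft(v, t):
    """Component-wise soft shrinkage towards zero."""
    return [math.copysign(max(abs(a) - t, 0.0), a) for a in v]


def objective(x, y, k, lam):
    """lam * ||k (*) x - y||_2^2 + ||x||_1, the problem with the denominator fixed."""
    resid = [a - b for a, b in zip(conv_same(x, k), y)]
    return lam * sum(r * r for r in resid) + sum(abs(a) for a in x)


def ista(y, k, lam, x0, n_iter=50):
    """Inner loop: gradient step on the data term, then soft shrinkage."""
    t = 1.0 / (2.0 * lam)                 # step 1/L with L = 2 * lam * 1
    x = list(x0)
    for _ in range(n_iter):
        resid = [a - b for a, b in zip(conv_same(x, k), y)]
        grad = conv_same(resid, k[::-1])  # K^T (K x - y): convolve with flipped k
        x = soft([xi - t * 2.0 * lam * gi for xi, gi in zip(x, grad)], t)
    return x


def update_x(y, k, lam, x0, outer=2, inner=20):
    """Outer loop: re-fix the denominator ||x||_2, then run ISTA."""
    x = list(x0)
    for _ in range(outer):
        norm2 = math.sqrt(sum(a * a for a in x))
        if norm2 == 0.0:
            break
        x = ista(y, k, lam * norm2, x, inner)
    return x


kernel = [0.25, 0.5, 0.25]
y_obs = conv_same([0.0, 0.0, 1.0, 0.0, -1.0, 0.0, 0.0], kernel)
x_est = update_x(y_obs, kernel, 10.0, y_obs)
```

Multiplying the fixed-denominator problem through by \(\left\| x \right\|_{2}\) is what turns λ into the effective weight `lam * norm2` passed to the inner loop.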

4.1.2 Updating of the Kernel k

After updating the image x with Eq. (6), the kernel k is updated using Eq. (7). The iteratively re-weighted least squares (IRLS) technique [61] is applied to estimate the kernel k without enforcing any constraints. It uses the weights obtained from the previous update of k, with only one outer iteration. At the finest level, small values of the estimated kernel k are neglected or set to zero, which increases its robustness to noise. The kernel k is estimated in a multiscale process that follows a coarse-to-fine pyramid of image resolutions.
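One simple way to impose the constraints k ≥ 0 and \(\sum_{i} k_{i} = 1\) on an unconstrained estimate, and to zero out small entries, is sketched below. The function name `project_kernel` and the relative threshold of 5% of the peak value are assumptions made for this example; the source does not state the threshold it uses.

```python
def project_kernel(k, rel_thresh=0.05):
    """Zero out negative and very small entries, then renormalize to sum 1."""
    peak = max(max(row) for row in k)
    cut = rel_thresh * peak               # hypothetical cut-off, relative to the peak
    kept = [[v if (v > 0.0 and v >= cut) else 0.0 for v in row] for row in k]
    total = sum(sum(row) for row in kept)
    if total == 0.0:
        raise ValueError("kernel has no positive entries")
    return [[v / total for v in row] for row in kept]


raw = [[-0.1, 0.02, 0.0],
       [0.3, 1.0, 0.3],
       [0.0, 0.01, 0.2]]
clean = project_kernel(raw)
```

After the call, only the four dominant entries survive and the kernel again sums to one.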

Converging to a good-quality solution requires many updates of x and k when the kernel is very large. To alleviate this problem, we estimate the blur kernel in a multiscale manner using coarse-to-fine image levels, as in [16]. The images at successive scale levels differ in resolution by a ratio of √2 in each dimension. The size of the kernel k is 3 × 3 at the coarsest level. We down-sample the input blurred image and then apply the Scharr edge-based gradient (SEB) operator at each level to obtain the discrete gradient image y. At every scale level, x and k are updated alternately. Once the blur kernel k and the sharper image x are obtained at a level, they are up-sampled and used as the initial kernel and sharp image for the next level.
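The coarse-to-fine schedule can be illustrated by listing the kernel size used at each level. This is a sketch under assumptions: the function name `kernel_pyramid` is hypothetical, and rounding each size down to an odd number is a choice made here so the kernel always has a center pixel.

```python
import math


def kernel_pyramid(final_size, coarsest=3):
    """Kernel sizes from coarsest to finest, shrinking by sqrt(2) per level."""
    sizes = [final_size]
    while sizes[-1] > coarsest:
        v = sizes[-1] / math.sqrt(2.0)
        # Round down to the nearest odd size, never below the coarsest size.
        sizes.append(max(coarsest, 2 * int(v // 2) + 1))
    return sizes[::-1]


levels = kernel_pyramid(27)
```

For a 27 × 27 kernel this yields a short list of increasing odd sizes starting at 3; the image at each level would be resized by the same √2 ratio.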

4.1.3 Recovery of Sharp Image

After the blur convolution kernel k has been estimated, a large number of non-blind image deblurring techniques are available for recovering the original image I from the captured blurred image B [28, 61]. The simplest is the Richardson-Lucy (RL) technique; however, it is very sensitive to an incorrect kernel estimate and produces ringing artifacts in the restored image. To avoid high sensitivity to inaccurate kernel estimation, we use the hyper-Laplacian priors technique [62] to restore the image after estimating the blur kernel. This technique is faster than the alternatives and is also robust to minor errors in kernel estimation. The hyper-Laplacian-based method uses a continuation method to solve the cost function given in Eq. (9):
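Eq. (9) is missing from this copy. Assuming it follows the hyper-Laplacian formulation used in [28] and [62], with the Scharr operators \(G_{x}\), \(G_{y}\) as the derivative filters and c as the norm exponent, a plausible reconstruction is:

```latex
\[
\min_{I} \; \lambda \left\| I \otimes k - B \right\|_{2}^{2}
 + \left\| G_{x} \otimes I \right\|_{c}
 + \left\| G_{y} \otimes I \right\|_{c} \tag{9}
\]
```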

Here \(G_{x}\) and \(G_{y}\) represent the horizontal and vertical Scharr operators described in Sect. 4.1. The value of c is set to 0.81 and the value of λ to 3000, as in [28]. We use this Lp-based regularization instead of the L1/L2 norm for the restoration of the image because the non-blind deconvolution process is much less ill-posed than blind image deblurring.

4.2 Fast and Robust Nature of SEBR

In our approach, the blur kernel is estimated in a multiscale iterative manner using coarse-to-fine image levels, which requires only a few convolution operations between the updated intermediate image x and the blur kernel k and makes the method very fast. The cost function of our deblurring model, shown earlier in Eq. (5), is very simple. SEBR takes less time than the deblurring techniques of [25, 50]. The experimental analysis shows that the proposed SEBR is robust to the selection of parameters: we kept the same parameter values for all the deblurring outputs reported in the results section. This robustness is the major improvement over existing techniques, which need various parameters to be adjusted for different types of blur data.

5 Experimental Deblurring Results

To validate the effectiveness of the proposed SEBR technique, several experiments are performed on synthetically blurred images and real blur data. The results of the algorithm are evaluated with well-known performance metrics. This section is divided into three parts: (1) evaluation metrics, (2) results on synthetically blurred images and real blurred image data (the dataset is available at [63]), and (3) discussion of the obtained results.

5.1 Evaluation Metrics

The evaluation metrics used are the Peak Signal-to-Noise Ratio (PSNR), the Structural Similarity Index Measure (SSIM), the error ratio, and the computation time in seconds.

Equation (10) is used to calculate the PSNR value of the sharp image recovered with the proposed algorithm:

\[ \mathrm{PSNR} = 10\,\log_{10}\!\left( \frac{\mathrm{Max}^{2}}{\mathrm{MSE}} \right) \tag{10} \]

In the above equation, ‘Max’ denotes the maximum pixel value in the original sharp image, and ‘MSE’ denotes the mean squared error between the latent sharp image ‘O’ and the deblurred image ‘R’.

‘MSE’ is calculated using Eq. (11), where m and n denote the height and width of the original sharp image:

\[ \mathrm{MSE} = \frac{1}{mn} \sum_{i=1}^{m} \sum_{j=1}^{n} \left[ O(i,j) - R(i,j) \right]^{2} \tag{11} \]
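As a minimal sketch of the two definitions above, the following NumPy code computes the MSE and the PSNR between an original image O and a restored image R. It assumes the standard form PSNR = 10 log10(Max² / MSE), with Max taken from the original image as described in the text; the function names are illustrative and are not taken from the authors' implementation.

```python
import numpy as np


def mse(original, restored):
    """Mean squared error over all m x n pixels."""
    original = np.asarray(original, dtype=np.float64)
    restored = np.asarray(restored, dtype=np.float64)
    return float(np.mean((original - restored) ** 2))


def psnr(original, restored):
    """PSNR in dB, using the maximum pixel value of the original image."""
    error = mse(original, restored)
    if error == 0.0:
        return float("inf")  # identical images
    peak = float(np.max(original))
    return float(10.0 * np.log10(peak ** 2 / error))
```

For example, an image with peak value 1 and a uniform error of 0.1 per pixel has an MSE of 0.01 and therefore a PSNR of 20 dB.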

The PSNR is a very common performance measure for evaluating the quality of restored images, but it ignores the characteristics of the human visual system, as the eyes are more sensitive to brightness levels than to chromatic differences. Therefore, we also report the SSIM along with the PSNR to reflect the subjective perception of the human eye.

The SSIM of the restored image is computed as follows:

\[ \mathrm{SSIM}(O,R) = \frac{\left( 2\,\Omega_{O}\,\Omega_{R} + C_{1} \right)\left( 2\,\beta_{OR} + C_{2} \right)}{\left( \Omega_{O}^{2} + \Omega_{R}^{2} + C_{1} \right)\left( \beta_{O} + \beta_{R} + C_{2} \right)} \]

where \(\Omega_{O}\) and \(\Omega_{R}\) are the mean values, \(\beta_{O}\) and \(\beta_{R}\) are the variances, and \(\beta_{OR}\) is the covariance of the original and restored sharp images, while \(C_{1}\) and \(C_{2}\) are constants.
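The role of the means, variances, and covariance can be seen in a small NumPy sketch of the SSIM computed from global image statistics. Two assumptions are made that the text does not state: the statistics are taken over the whole image rather than over local windows, and the constants follow the common choice C1 = (0.01 L)² and C2 = (0.03 L)², where L is the dynamic range. The name `global_ssim` is illustrative.

```python
import numpy as np


def global_ssim(original, restored, dynamic_range=1.0):
    """Single-window SSIM from whole-image statistics (an assumption)."""
    o = np.asarray(original, dtype=np.float64)
    r = np.asarray(restored, dtype=np.float64)
    c1 = (0.01 * dynamic_range) ** 2  # assumed constant C1
    c2 = (0.03 * dynamic_range) ** 2  # assumed constant C2
    mean_o, mean_r = o.mean(), r.mean()
    var_o, var_r = o.var(), r.var()
    cov_or = np.mean((o - mean_o) * (r - mean_r))
    numerator = (2.0 * mean_o * mean_r + c1) * (2.0 * cov_or + c2)
    denominator = (mean_o ** 2 + mean_r ** 2 + c1) * (var_o + var_r + c2)
    return float(numerator / denominator)
```

Identical images give a value of 1, and the value falls as the structure of the restored image departs from that of the original. Windowed implementations average this quantity over local patches, so their values generally differ from this global version.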

The error ratio is used to compare the performance of the proposed algorithm by computing the standard deviation between the images recovered with the estimated blur kernel and with the true blur kernel. The error ratio is computed by the following equation:

where STD denotes the standard deviation, IR represents the real blur image, IEK is the sharp image recovered using the estimated kernel, and IRK is the image recovered with the true kernel.
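One possible reading of these definitions is the ratio STD(IR − IEK) / STD(IR − IRK), so that a value close to 1 means the estimated kernel performs about as well as the true kernel. This form is an assumption made for illustration, since the equation itself is not reproduced here, and `error_ratio` is a hypothetical helper name.

```python
import numpy as np


def error_ratio(reference, restored_estimated, restored_true):
    """Assumed form: STD(IR - IEK) / STD(IR - IRK)."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(restored_estimated, dtype=np.float64)
    tru = np.asarray(restored_true, dtype=np.float64)
    numerator = np.std(ref - est)
    denominator = np.std(ref - tru)
    if denominator == 0.0:
        raise ValueError("restoration with the true kernel matches the reference exactly")
    return float(numerator / denominator)
```

Under this reading, a restoration whose deviation from the reference is twice that obtained with the true kernel yields an error ratio of 2.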

5.2 Results of Synthetically Blurred Image Data

The SEBR method is evaluated on the standard benchmark dataset developed by Levin et al. [35] for synthetically blurred images. The images restored with the proposed technique are compared with the outputs of other up-to-date image deblurring techniques. This dataset contains a total of 32 blurred images, generated from 4 ground-truth images of size 255 × 255 and 8 ground-truth kernels whose sizes range from 13 × 13 to 27 × 27.

Table 1 shows the deblurring results of SEBR and other state-of-the-art deblurring techniques [11, 15, 19, 25, 28, 35, 46] on the images from the dataset of Levin et al. [35]. The output images are compared on the basis of the average PSNR, SSIM, error ratio, and computation time of the deblurring process. The proposed Scharr Edge-Based Regularization (SEBR) technique outperforms the existing blind image deblurring techniques in terms of PSNR and SSIM, except for the method in [15]. However, SEBR has a lower computational cost than the technique proposed in [15]. The ability of SEBR to preserve both narrow and wide boundary lines during kernel estimation helps in better recovery of edges from the blurred image, which results in a better-quality deblurred image.

In Fig. 3, the visual results obtained by the proposed SEBR technique are compared with several up-to-date image restoration techniques [11, 15, 19, 25]. The visual evaluation indicates that the SEBR method has not only deblurred the degraded image but also sharpened the weak edges of objects in the image. The deblurring time of SEBR is also lower than that of the other image recovery algorithms. The images in (b) and (c) are better, but they contain some regions with a high level of blurriness. The image recovered in (d) is still blurred, and significant information in the top portion of the restored image is distorted. The deblurred image in (e) is slightly improved, but it has over-sharpened regions and strong ringing artifacts. The image in (f) shows that the image restored by SEBR appears clearer, and its PSNR value is also higher than that of the other methods.

5.3 Results of Real Blur Data

The proposed SEBR technique is also evaluated on real blur data to compare its performance with other baseline techniques for deblurring real-world images. Real blur image datasets do not have ground-truth images; therefore, the results are evaluated only on the basis of the visual quality of the recovered images. The same parameters are set for all the algorithms for a fair comparison of results. The SEBR technique is applied to real-world images with uniform or non-uniform blur and to images taken in low lighting that contain saturated regions.

5.3.1 Deblurring Results on Uniformly Blur Images

In Fig. 4, the proposed SEBR framework is evaluated on an example image of a toy with uniform blur, and the deblurred outputs are compared with other state-of-the-art techniques [11, 15, 19, 25]. The images deblurred by the techniques in (b) and (e) are acceptable, but they have ringing artifacts near the strong edges. The deblurred image in (d) is still blurry. The image in (c) is heavily distorted, as the local textures of objects are over-smoothed. In contrast, the image deblurred with SEBR shows very little ringing and preserves the local texture and contrast of the image while the blur is removed.

In Fig. 5, the deblurring results of SEBR are compared with the results of [11, 15, 19, 46] for a uniformly blurred image. The image restored by the deblurring technique in (b) is better than those of the other compared methods, but it has minor fake texture and some blurred regions. The images in (c) and (e) are acceptable to some extent, but the local textures of the objects are over-smoothed, and the geometrical structure and edges of objects are not preserved properly. The image in (d) is over-smoothed and is not deblurred effectively. The image deblurred with SEBR has fewer ringing artifacts than the other deblurring methods. SEBR is also able to preserve the fine textures in images while enhancing the edges during the deblurring process.

In Fig. 6, the deblurring results of SEBR are compared with other image deblurring methods [11, 15, 19, 25] used for uniform blur removal. A visual comparison with the kernels estimated by the other deblurring techniques shows the usefulness of SEBR. The image deblurring technique of Pan et al. [15] produces a distorted output in which the content of the image is not clear, as indicated in (b). The deblurring results displayed in (c) and (d) have strong ringing artifacts. The deblurred image in (e) ignores the smaller texture areas during restoration, which results in an over-smoothed and slightly blurry image. The blur kernel estimated with SEBR has compact neighboring pixels and is comparatively less sparse, which helps to preserve the salient edges and boundary lines of objects and to recover a sharper image with very few ringing effects.

5.3.2 Deblurring Results on Images With Non-uniform Blur

To evaluate SEBR on images with non-uniform blur, the deblurred results are compared with other deblurring techniques for non-uniformly blurred images [28, 31, 53, 64], as shown in Fig. 7. The images in (b) and (c) have strong ringing effects. The image in (d) is still very blurry and not clear. The deblurred result of (d) is better, but its computation time is large, while the image recovered with SEBR is comparable to the deblurring result in (d), with rich textures and very sharp boundaries.

Another example of a real image with non-uniform blur is shown in Fig. 8. The proposed SEBR method is compared with other non-uniform deblurring techniques [28, 31, 53, 64]. The results show that the deblurred images in (b) and (c) are not clear. The deblurred result in (c) is slightly over-smoothed and lacks sharp edges. The deblurring result in (d) has blurred regions in the central parts. The image recovered with the proposed SEBR, shown in (e), has fine textures with better contrast and very few ringing artifacts, confined to a few areas of the output image.

Figure 9 shows an example of a real blurred RGB image with non-uniform blur. The deblurring results of SEBR are compared with other methods used for non-uniform blur removal from real blur images [28, 31, 53, 64]. The deblurring results in (b) and (c) show that the estimated kernels fail to recover the image. The result displayed in (d) is still very blurry. The deblurred result shown in (e) has ringing artifacts near the boundary lines, whereas the image recovered with the kernel estimated by SEBR is clearer and does not have any artifacts near the strong boundary lines.

5.3.3 Deblurring Real Blur Images with Saturated Regions

The proposed Scharr Edge-Based Regularization (SEBR) deblurring gives favorable results on real-world images with saturated blur areas. It is very hard to estimate the convolution kernel from blurred images that contain saturated areas. The existing state-of-the-art deblurring methods [11, 38, 65, 66] do not give quality results on real-world data with many saturated regions or on images taken in low illumination. In sharp images the saturated regions are sparse, but when the image becomes blurred these regions extend and look like blobs or streaks. Images taken in low lighting often have saturated regions.

Figure 10 shows a real blurred image captured in low lighting with many saturated regions. For validation, the deblurring results of the proposed SEBR technique are compared with the other state-of-the-art methods in [11, 38, 65, 66]. As the priors of these deblurring methods were developed to exploit the prominent edges in images for motion deblurring, these methods were not able to give good results. The technique in [38] was developed to handle a large amount of Gaussian noise in images, so it is not effective on images with saturated areas, as shown in (c). The results in (b) and (d) show that these methods have limitations in estimating the latent image and produce many ringing artifacts. The image restored with the SEBR-based technique is clear and sharp. We notice that the deblurring techniques in [65, 66] perform well because they were developed to detect the light streaks that appear in images captured in low lighting. The proposed method estimates the blur kernel well and gives favorable results without any additional preprocessing steps for the detection of light streaks.

Figure 11 shows another real image taken in low illumination with many saturated regions. The output of the SEBR deblurring technique is compared with other methods [11, 38, 65, 66] used for blur removal. As seen in (b) and (c), the saturated areas are very large, and the estimated kernel is not good enough to detect the light streaks properly. The deblurring results in (d) and (e) are good but still show a slight blur that dilates the white regions in the image, whereas the proposed SEBR method accurately estimates the kernel and gives a sharper and clearer deblurring result.

6 Conclusion

An effective and efficient blind image deblurring technique based on Scharr edge-based regularization is proposed for estimating the blur convolution kernel so that a high-quality sharp image can be restored with a minimum cost function. The Scharr edge-based (SEB) gradient operator is used in combination with normalized regularization, which improves the extraction of salient edges from blurry images because of its rotation symmetry and scale invariance, and results in an accurate estimate of the blur kernel. The results show that the kernel estimated by SEBR is precise and preserves the salient edges and local textures of objects with very few ringing artifacts compared with other state-of-the-art deblurring methods. The effectiveness of SEBR is demonstrated in terms of PSNR, SSIM, error ratio, and computation cost on synthetically blurred images. The comparison of experimental results demonstrates that SEBR is also robust and equally effective in terms of visual quality on real blur image data with uniform or non-uniform blur and on low-illumination images. The robustness of SEBR is the major improvement over existing techniques, as it does not need its parameters adjusted for different types of blur data. In our future research, both global and local textures of the blurred image will be considered for better estimation of the convolution kernel and restoration of the image.

References

Shen, D.; Wu, G.; Suk, H.-I.: Deep learning in medical image analysis. Annu. Review Biomed. Eng. 19, 221 (2017)

Mayberg, M., et al.: Anisotropic neural deblurring for MRI acceleration. Int. J. Comput. Assisted Radiol. Surg. 17(2), 315–327 (2022)

Lee, M.-H.; Yun, C.-S.; Kim, K.; Lee, Y.: Effect of denoising and deblurring 18F-fluorodeoxyglucose positron emission tomography images on a deep learning model’s classification performance for Alzheimer’s disease. Metabolites 12(3), 231 (2022)

Nimisha, T.; Rajagopalan, A.: Blind super-resolution of faces for surveillance. In: Deep Learning-Based Face Analytics. Springer, pp. 119–136 (2021)

Yang, H.; Liu, C.; Luo, T.: Application and research of image denoising for oil field security monitoring. In: 2022 7th International Conference on Intelligent Computing and Signal Processing (ICSP), pp. 1798–1802: IEEE (2022)

Su, H.; et al.: HQ-ISNet: High-quality instance segmentation for remote sensing imagery. Remote Sens. 12(6), 989 (2020)

Shen, H.; Du, L.; Zhang, L.; Gong, W.: A blind restoration method for remote sensing images. IEEE Geosci. Remote Sens. Lett. 9(6), 1137–1141 (2012)

Zhang, S.; He, G.; Chen, H.-B.; Jing, N.; Wang, Q.: Scale adaptive proposal network for object detection in remote sensing images. IEEE Geosci. Remote Sens. Lett. 16(6), 864–868 (2019)

Lei, W.; Luo, J.; Hou, F.; Xu, L.; Wang, R.; Jiang, X.: Underground cylindrical objects detection and diameter identification in GPR B-scans via the CNN-LSTM framework. Electronics 9(11), 1804 (2020)

Zhong, L.; Cho, S.; Metaxas, D.; Paris, S.; Wang, J.: Handling noise in single image deblurring using directional filters. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 612–619 (2013)

Cho, S.; Lee, S.: Fast motion deblurring. In: ACM SIGGRAPH Asia 2009 papers, pp. 1–8 (2009)

Chan, T.F.; Wong, C.-K.: Total variation blind deconvolution. IEEE Trans. Image Process. 7(3), 370–375 (1998)

Perrone, D.; Favaro, P.: Total variation blind deconvolution: The devil is in the details. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2909–2916 (2014)

Dong, J.; Pan, J.; Su, Z.; Yang, M.-H.: Blind image deblurring with outlier handling. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2478–2486 (2017)

Pan, J.; Sun, D.; Pfister, H.; Yang, M.-H.: Deblurring images via dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 40(10), 2315–2328 (2017)

Fergus, R.; Singh, B.; Hertzmann, A.; Roweis, S.T.; Freeman, W.T.: Removing camera shake from a single photograph. In: ACM SIGGRAPH 2006 Papers, pp. 787–794 (2006)

Levin, A.; Weiss, Y.; Durand, F.; Freeman, W.T.: Efficient marginal likelihood optimization in blind deconvolution. In: CVPR 2011, pp. 2657–2664: IEEE (2011)

Wipf, D.; Zhang, H.: Analysis of Bayesian blind deconvolution. In: International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition, pp. 40–53: Springer (2013)

Xu, L.; Jia, J.: Two-phase kernel estimation for robust motion deblurring. In: European Conference on Computer Vision, pp. 157–170: Springer (2010)

Bibi, N.; Majid, M.N.; Dawood, H.; Guo, P.: Automatic parking space detection system. In: 2017 2nd International Conference on Multimedia and Image Processing (ICMIP), pp. 11–15: IEEE (2017)

Singh, D.; Kumar, V.: Dehazing of remote sensing images using fourth-order partial differential equations based trilateral filter. IET Comput. Vision 12(2), 208–219 (2018)

Singh, R.; Bansal, S.J.: A comparative study of image deblurring techniques. J. Comput. Theor. Nanosci. 17(9–10), 4571–4579 (2020)

Zhang, K.; et al.: Deep image deblurring: a survey. Int. J. Comput. Vis. 130(9), 2103–2130 (2022)

Sankaraiah, Y.R.; Varadarajan, S.: Deblurring techniques—A comprehensive survey. In: 2017 IEEE International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI), pp. 2032–2035: IEEE (2017)

Yang, D.; Wu, X.; Yin, H.: Blind image deblurring via a novel sparse channel prior. Mathematics 10(8), 1238 (2022)

Bai, Y., et al.: Single-image blind deblurring using multi-scale latent structure prior. IEEE Trans. Circuits Syst. Video Technol. 30(7), 2033–2045 (2019)

Sun, S.; Xu, Z.; Zhang, J.: Spectral norm regularization for blind image deblurring. Symmetry 13(10), 1856 (2021)

Krishnan, D.; Tay, T.; Fergus, R.: Blind deconvolution using a normalized sparsity measure. In: CVPR 2011, pp. 233–240: IEEE (2011)

Fan, W.; Wang, H.; Wang, Y.; Su, Z.: Blind deconvolution with scale ambiguity. Appl. Sci. 10(3), 939 (2020)

Welk, M.; Theis, D.; Weickert, J.: Variational deblurring of images with uncertain and spatially variant blurs. In: Joint Pattern Recognition Symposium, pp. 485–492: Springer (2005)

Hirsch, M.; Schuler, C.J.; Harmeling, S.; Schölkopf, B.: Fast removal of non-uniform camera shake. In: 2011 International Conference on Computer Vision, pp. 463–470: IEEE (2011)

Zuo, W.; Ren, D.; Zhang, D.; Gu, S.; Zhang, L.: Learning iteration-wise generalized shrinkage–thresholding operators for blind deconvolution. IEEE Trans. Image Process. 25(4), 1751–1764 (2016)

Hurley, N.; Rickard, S.: Comparing measures of sparsity. IEEE Trans. Inf. Theory 55(10), 4723–4741 (2009)

Wipf, D.; Zhang, H.: Revisiting Bayesian blind deconvolution. J. Mach. Learn. Res. (JMLR) (2014)

Levin, A.; Weiss, Y.; Durand, F.; Freeman, W.T.: Understanding and evaluating blind deconvolution algorithms. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1964–1971: IEEE (2009)

Michaeli, T.; Irani, M.: Blind deblurring using internal patch recurrence. In: European Conference on Computer Vision, pp. 783–798: Springer (2014)

Shan, Q.; Jia, J.; Agarwala, A.: High-quality motion deblurring from a single image. ACM Trans. Graphics (TOG) 27(3), 1–10 (2008)

Xu, L.; Zheng, S.; Jia, J.: Unnatural l0 sparse representation for natural image deblurring. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1107–1114 (2013)

Lai, W.S.; Ding, J.J.; Lin, Y.Y.; Chuang, Y.Y.: Blur kernel estimation using normalized color-line prior. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 64–72 (2015)

Zhang, Z.; Zheng, L.; Xu, W.; Gao, T.; Wu, X.; Yang, B.: Blind remote sensing image deblurring based on overlapped patches’ non-linear prior. Sensors 22(20), 7858 (2022)

Xu, Y.; Zhu, Y.; Quan, Y.; Ji, H.: Attentive deep network for blind motion deblurring on dynamic scenes. Comput. Vis. Image Underst. 205, 103169 (2021)

Zhou, Y.; Komodakis, N.: A map-estimation framework for blind deblurring using high-level edge priors. In: European Conference on Computer Vision, pp. 142–157: Springer (2014)

Biyouki, S.A.; Hwangbo, H.: Blind Image Deblurring based on Kernel Mixture (2021)

Tofighi, M.; Li, Y.; Monga, V.: Blind image deblurring using row–column sparse representations. IEEE Signal Process. Lett. 25(2), 273–277 (2017)

Yan, Y.; Ren, W.; Guo, Y.; Wang, R.; Cao, X.: Image deblurring via extreme channels prior. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4003–4011 (2017)

Wen, F.; Ying, R.; Liu, Y.; Liu, P.; Truong, T.-K.: A simple local minimal intensity prior and an improved algorithm for blind image deblurring. IEEE Trans. Circuits Syst. Video Technol. 31(8), 2923–2937 (2020)

Chen, L.; Fang, F.; Wang, T.; Zhang, G.: Blind image deblurring with local maximum gradient prior. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 1742–1750 (2019)

Dawood, H., et al.: Probability weighted moments regularization based blind image De-blurring. Multimedia Tools Appl. 79(7), 4483–4498 (2020)

Sun, S.; Duan, L.; Xu, Z.; Zhang, J.: Blind deblurring based on sigmoid function. Sensors 21(10), 3484 (2021)

Ren, W.; Cao, X.; Pan, J.; Guo, X.; Zuo, W.; Yang, M.-H.: Image deblurring via enhanced low-rank prior. IEEE Trans. Image Process. 25(7), 3426–3437 (2016)

Dong, J.; Pan, J.; Su, Z.: Blur kernel estimation via salient edges and low rank prior for blind image deblurring. Signal Process. Image Commun. 58, 134–145 (2017)

Hsieh, P.-W.; Shao, P.-C.: Blind image deblurring based on the sparsity of patch minimum information. Pattern Recogn. 109, 107597 (2021)

Zhao, H.; Wu, D.; Su, H.; Zheng, S.; Chen, J.J.: Gradient-based conditional generative adversarial network for non-uniform blind deblurring via DenseResNet. J. Vis. Commun. 74, 102921 (2021)

Javaran, T.A.; Hassanpour, H.; Abolghasemi, V.: Non-blind image deconvolution using a regularization based on re-blurring process. Comput. Vis. Image Underst. 154, 16–34 (2017)

Öztürk, Ş.; Akdemir, B.: Comparison of edge detection algorithms for texture analysis on glass production. Procedia-Social Behav. Sci. 195, 2675–2682 (2015)

Kanopoulos, N.; Vasanthavada, N.; Baker, R.L.: Design of an image edge detection filter using the Sobel operator. IEEE J. Solid-state Circuits 23(2), 358–367 (1988)

Holder, R.P.; Tapamo, J.R.: Improved gradient local ternary patterns for facial expression recognition. EURASIP J. Image Video Process. 2017, 1–15 (2017)

Scharr, H.: Optimal operators in digital image processing (2000)

Levkine, G.: Prewitt, Sobel and Scharr gradient 5x5 convolution matrices. Second Draft (2012)

Beck, A.; Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imag. Sci. 2(1), 183–202 (2009)

Levin, A.; Fergus, R.; Durand, F.; Freeman, W.T.: Image and depth from a conventional camera with a coded aperture. ACM Trans. Graphics (TOG) 26(3), 70 (2007)

Jia, J.: Single image motion deblurring using transparency. In: 2007 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8: IEEE (2007)

http://www.wisdom.weizmann.ac.il/levina/papers/LevinEtalCVPR09Data.rar

Whyte, O.; Sivic, J.; Zisserman, A.; Ponce, J.: Non-uniform deblurring for shaken images. Int. J. Comput. Vision 98(2), 168–186 (2012)

Chen, L.; Zhang, J.; Lin, S.; Fang, F.; Ren, J.S.: Blind deblurring for saturated images. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 6308–6316 (2021)

Hu, Z.; Cho, S.; Wang, J.; Yang, M.-H.: Deblurring low-light images with light streaks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3382–3389 (2014)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

Cite this article

Bibi, N., Dawood, H. SEBR: Scharr Edge-Based Regularization Method for Blind Image Deblurring. Arab J Sci Eng 49, 3435–3451 (2024). https://doi.org/10.1007/s13369-023-07986-4

DOI: https://doi.org/10.1007/s13369-023-07986-4