Abstract

Heart diseases pose a serious threat. Cardiac problems arise when the arteries that supply oxygen and blood to the heart are narrowed or completely blocked. Cardiac disease has been a prominent cause of death, and the mortality rate has spiked in a short period. These heart-associated diseases are collectively referred to as cardiovascular diseases, and they are seen more in developing than in developed countries. Inaccurate diagnosis may cause fatalities; hence, precision and safety in diagnosing heart disease are prime factors in healthcare practice. In this study, a deep learning-based diagnosis system for heart disease prediction is proposed. The proposed classifier model achieves a sensitivity of 98.21%, a specificity of 97.85%, a precision of 98.41%, a recall of 97.43%, and an accuracy of 97.09%. The backpropagation neural network (BP-NN) with mRmR feature selection obtained a higher accuracy than the BP-NN classifier without a feature selection process. These results indicate that mRmR with the BP-NN algorithm performs better than the existing algorithms.


1 Introduction

The increase in the number of patients creates additional workload for medical professionals, longer patient queues, and additional expenses in time and money for patients and health institutions [1]. The ever-growing patient records are stored in large databases for future reference and analysis, and these data are typically used to consult the medical history of patients. Machine learning creates another possibility: the prognosis of ischemic heart disease using the large volume of stored features and clinical history data [2]. Data mining techniques have been used extensively in medical decision support systems to predict and analyze numerous diseases with good accuracy [3]. Although a large quantity of data is produced in the medical field, these records are not always properly used. The healthcare system is rich in data, but it lacks effective evaluation strategies for locating connections and patterns in healthcare data; for this reason, data mining techniques may be applied. According to the literature survey, existing data analysis approaches are limited in accuracy, speed, error rate, etc. [4, 5].

Today, specialists have developed numerous methods based on data mining and machine learning algorithms for detecting the prevalence of heart disease [6]. Data analytics and mining algorithms are applied to large datasets for the prediction of heart disease. Predicting heart disease in a large dataset is a difficult and complex task, and the results produced are often of low efficiency [7]. The heart disease dataset has 14 main features, and the efficiency of prediction can be improved by reducing the number of features in the dataset. Researchers have proposed many algorithms for finding the features that indicate heart disease [8]. This paper discusses feature selection algorithms for detecting the prevalence of heart disease.

E-health studies suggest that around 4.4 million patients visit healthcare centers every year. Elderly people, including heart disease patients, are among those who visit hospitals unnecessarily [9]. Heart disease can be diagnosed using a variety of strategies. However, physical examinations, laboratory tests, and heart imaging scans require the patient to be physically present at the medical center [10] (Fig. 1).

2 Related Work

Millions of people are affected by some kind of ischemic heart disease (IHD) every year, and it is the largest killer across the globe. The WHO reports that heart diseases kill 17.9 million people each year, accounting for 31% of global deaths. Every 20 s, heart disease kills one person globally. Clinical analysis plays a crucial role in controlling and treating heart disease [11]. Until now, complex procedures have had to be undertaken to analyze and treat patients accurately. To considerably reduce the expense of clinical examination, the relevant data should be examined and a decision support system should be employed. Data mining consists of computational strategies for locating patterns and regularities in sets of records. During the last two decades, there has been a great opportunity for computer systems to construct and classify different attributes [12]. The effectiveness of data mining in identifying the factors related to heart disease helps medical specialists to understand the reasons behind IHD. Statistical analysis has revealed that the major risk factors related to ischemic heart disease are age, blood pressure (BP), cholesterol, diabetes, hypertension, anxiety, genetic factors, obesity, lack of physical exercise, etc. [13].

Predicting and diagnosing heart disease has become a difficult task for doctors in India and abroad. To reduce the large number of deaths due to heart disease, a fast and efficient detection approach has to be found. Data mining techniques and machine learning (ML) algorithms play an essential role in this area [14]. Researchers have developed software based on ML algorithms that assists doctors in predicting and diagnosing heart disease [15]. The primary objective of these works is the prediction of heart disease through ML algorithms, and the performance of the various ML algorithms is compared through graphical representation of the outcomes [16, 17]. Various artificial neural network (ANN) frameworks have contributed to high decision accuracy on medical data. Several software tools and numerous systems have been proposed by researchers for building effective decision support frameworks [18].

Technologies such as IoT and machine-to-machine communication are used to improve the efficiency of the system [19]. Cloud computing is used for storage, and it provides services such as Platform as a Service, Infrastructure as a Service, and Software as a Service [20]. Penny et al. [21] described a real-time dataset used to detect abnormalities of ventricular function in patients. For this purpose, nearly 25 records with various issues were compared with the records of healthy patients, and it was determined that abnormalities in ventricular relaxation occur frequently after the Fontan operation. The Fontan operation is performed to improve the oxygen supply during circulation, and it reduces the workload of the heart.

Ultrasound imaging systems are specialized systems that are normally prescribed for the assessment of specific large arteries or veins, and they assess the vasculature within a limited field of view. They cannot easily assess bulk tissue hemodynamics and perfusion, which are important markers of tissue health [22]. Therefore, there is a demand for a bedside monitoring technology that is capable of offering wide-field ischemic heart disease assessment without the use of ionizing radiation or contrast agents.

Senhadji et al. [23] describe heartbeat classification using the wavelet transform and linear discriminant analysis to differentiate normal beats from premature ventricular contraction (PVC) and ischemic beats. The authors used principal component analysis (PCA) to investigate the ECG signal and assess its quality at each decomposition level. Linear discriminant analysis was used to identify linear combinations of features that separate the classes. Nitish et al. [24] proposed a noise removal technique in which an adaptive recurrent filter is used to obtain the impulse response and to predict different types of arrhythmia. Background noise introduced into the ECG signal during recording, such as power line interference, baseline wander, and various artifacts, is removed with the filters. The method modifies the bandpass filter design of the recurrent filter, and the resulting adaptive recurrent filter normalizes the signal so that the QRS complex can be identified for the detection of arrhythmia [25].

To provide better and more efficient results, techniques such as the Support Vector Machine (SVM) and Sobel edge detection have been used. This combination provides better output for ischemic heart disease detection [26]. To find the affected area, Sobel edge detection sharpens the boundaries of the regions by isolating the abnormal and normal regions in the ischemic heart cells, and the SVM identifies the various forms of abnormalities [27]. The image of the ischemic heart is processed, and the SVM classification approach categorizes the images into normal and abnormal classes. SVM is one of the best-known techniques in pattern classification and image classification [28]. The Discrete Wavelet Transform (DWT) and the Polynomial Sigmoid SVM (PS-SVM) kernel function also play an important role in image processing. The DWT extracts the meaningful content of the image [29]. The proposed PS-SVM kernel function combines the sigmoid and polynomial kernels to gain the benefits of both.

De Chazal et al. [30] distinguished normal heartbeats from PVC (premature ventricular contraction) beats with a feed-forward neural network classifier evaluated by cross-validation and achieved an accuracy of 89%. The attributes are extracted from the waveform and the heartbeat interval. Noise is removed to normalize the signal, and the extracted features are then presented to the classifier model for automatic prediction of the disease. A linear discriminant was used in this work; the algorithm is related to ANOVA, which analyzes the variance of the extracted features and identifies the best features for automatic classification.

3 Proposed Heart Disease Prediction Model

In disease prediction, feature selection plays a vital role in the classification of the input data. Feature selection is a form of dimensionality reduction in which the input data are reduced as far as possible so that only the most relevant data are used to classify and predict the disease [31]. To achieve better accuracy, the more relevant features must be selected and the redundant features omitted before the data are classified. In this work, the mRmR algorithm is used to select the best features in the given input data, and the selected features are passed to the BP-NN classifier as input for classification.

3.1 Data Pre-processing

Heart disease risk factors include family history, age, sex, smoking habits, high blood pressure, and high blood cholesterol. A data pre-processing technique is applied to remove unwanted data from the collected original medical dataset [32] (Fig. 2).
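The text does not specify what counts as unwanted data, so the following is only a minimal sketch under assumptions: records with missing values and exact duplicates are treated as unwanted. The function name `clean_records`, the field names, the `"?"` missing-value marker, and the sample values are all hypothetical and chosen for illustration.

```python
def clean_records(records, required_fields, missing_markers=("", "?", None)):
    """Keep complete, unique records and convert their fields to floats.

    Assumption: a record is 'unwanted' if any required field is missing
    or if it duplicates an earlier record exactly.
    """
    cleaned = []
    seen = set()
    for record in records:
        values = tuple(record.get(field) for field in required_fields)
        if any(value in missing_markers for value in values):
            continue  # incomplete record
        if values in seen:
            continue  # exact duplicate of an earlier record
        seen.add(values)
        cleaned.append(
            {field: float(value) for field, value in zip(required_fields, values)}
        )
    return cleaned


# Made-up raw records, for illustration only.
raw = [
    {"age": "63", "sex": "1", "chol": "233"},
    {"age": "63", "sex": "1", "chol": "233"},  # duplicate
    {"age": "41", "sex": "0", "chol": "?"},    # missing value marker
    {"age": "57", "sex": "1"},                 # missing field
    {"age": "56", "sex": "0", "chol": "294"},
]
cleaned = clean_records(raw, ["age", "sex", "chol"])
print(len(cleaned), "of", len(raw), "records kept")
```

Other pre-processing steps, such as normalization or outlier removal, could be added in the same loop if the dataset requires them.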

3.2 Feature Selection

The feature selection process is a basic step in well-known classification applications [33,34,35]. As described above, the more relevant features are selected and the redundant features are omitted before the data are classified. This process is widely used across application areas because it removes redundant data without losing relevant information, and it is therefore applied together with various algorithms. The feature selection technique is used for the following reasons:

- Reduction of training time.
- It makes the model easier to interpret.
- Elimination of unwanted data in high-dimensional space.
- Enhancement of the output by reducing the number of variables.

In the proposed method, feature selection is performed with the mRmR algorithm, which selects features that are highly correlated with the class variable while being weakly correlated with one another. mRmR uses the mutual information between a feature and the class as the relevance of that feature. It searches for the feature set FS that maximizes the relevance D,

\[\max D\left( {FS,c} \right),\quad D = \frac{1}{{\left| {FS} \right|}}\sum\limits_{{Z_{i} \in FS}} {I\left( {Z_{i} :c} \right)} \quad (1)\]

where \(I\left( {Z_{i} :c} \right)\) is the mutual information between the class c and the feature \(Z_{i}\). mRmR also uses the mutual information between features as the redundancy of each feature. The minimum redundancy condition is

\[\min R\left( {FS} \right),\quad R = \frac{1}{{\left| {FS} \right|^{2} }}\sum\limits_{{Z_{i} ,Z_{j} \in FS}} {I\left( {Z_{i} :Z_{j} } \right)} \quad (2)\]

where \(I\left( {Z_{i} :Z_{j} } \right)\) is the mutual information between the features \(Z_{i}\) and \(Z_{j}\).

Formulas (1) and (2) combined are named Maximum Relevance and Minimum Redundancy. mRmR optimizes D and R simultaneously with the following criterion,

\[\max \Phi \left( {D,R} \right),\quad \Phi = D - R\quad (3)\]

where D − R indicates the relevance of each feature minus its redundancy.
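The selection criterion can be illustrated with a small greedy search. This is a sketch under assumptions, not the authors' Matlab implementation: features are taken to be discrete, mutual information is estimated from raw counts, features are added one at a time by the difference between relevance and mean redundancy with the already selected features, and the names `mutual_information` and `mrmr_select` as well as the toy data are hypothetical.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Mutual information (in nats) of two equal-length discrete sequences."""
    n = len(xs)
    count_x = Counter(xs)
    count_y = Counter(ys)
    count_xy = Counter(zip(xs, ys))
    mi = 0.0
    for (x, y), n_xy in count_xy.items():
        # p(x, y) * log(p(x, y) / (p(x) * p(y))), written with raw counts
        mi += (n_xy / n) * math.log(n_xy * n / (count_x[x] * count_y[y]))
    return mi


def mrmr_select(features, labels, k):
    """Greedily pick k feature names by relevance minus mean redundancy."""
    relevance = {
        name: mutual_information(column, labels)
        for name, column in features.items()
    }
    selected = []
    remaining = set(features)

    def score(name):
        if not selected:
            return relevance[name]
        redundancy = sum(
            mutual_information(features[name], features[chosen])
            for chosen in selected
        ) / len(selected)
        return relevance[name] - redundancy

    while remaining and len(selected) < k:
        best = max(sorted(remaining), key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Made-up binary data: 'chol_high' duplicates 'bp_high', 'smoker' is independent.
features = {
    "bp_high":   [1, 1, 0, 0, 1, 1, 0, 0],
    "chol_high": [1, 1, 0, 0, 1, 1, 0, 0],
    "smoker":    [1, 0, 1, 0, 1, 0, 1, 0],
}
labels = [1, 1, 1, 0, 1, 1, 1, 0]  # positive if bp_high or smoker
selected = mrmr_select(features, labels, k=2)
print(selected)
```

In this toy example all three features are equally relevant to the label, but the duplicated feature is penalized by its redundancy, so the second pick is the independent one. Continuous clinical features would need to be discretized first or handled with a different mutual information estimator.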

4 Classification

In the proposed framework, feature selection is implemented with the mRmR algorithm, which identifies the best and most relevant features in the high-dimensional search space and eliminates the rest. The selected features are taken as input to the modified backpropagation neural network classifier (Fig. 3).

4.1 Deep Learning

Deep learning uses neural networks to classify data, and its architectures include the recurrent neural network, the convolutional neural network, and the feed-forward neural network. Each has its benefits depending on the dataset. In deep learning, the layers are connected so that information passes from one layer to the next, i.e., the output of one layer is the input of the following layer. In classical machine learning, feature extraction is handcrafted, i.e., the user must instruct the system which features to select, whereas a deep learning classifier works out the features and classifies the data automatically. The following points summarize the use of deep learning:

- Understand the problem and check whether deep learning suits it.
- Deep learning has a self-learning capacity, so it can select the features and classify the data automatically.
- Identify the relevant dataset and analyze the data.
- Identify the type of deep learning algorithm to use.
- Train the algorithm on a large amount of labeled data.
- Test the classification model on unlabeled data.

4.2 Backpropagation Neural Network (BP-NN) Classifier

The BP-NN algorithm is used to classify patients as with or without heart disease. BP-NN is a supervised network that estimates the error and uses gradient descent to adjust the weights of the network. BP is also called backpropagation of errors because the error is calculated at the output and sent back through the layers of the network. The weights of the neurons are adjusted along the gradient of the error function, so that the classifier finds the set of weights that gives the desired output for the given inputs. BP is used to train multilayer neural networks, which can then interpret a given input and provide the correct classification output. The algorithm has two main processes: propagation and weight updating. When input data are given to the network, they move in the forward direction through each layer until they reach the output layer, where an output value is computed for each neuron. The error function compares the computed output with the target, and the error value of each neuron is sent back through the network. Based on the gradient of the error function, the weights are updated to minimize the error. The input data are fed into the network repeatedly to train it, and the result obtained in each cycle is compared with the target data. The main aim of this algorithm is to reduce the error in as few iterations as possible; the weights are adjusted during each iteration so that the error rate is minimized.

The BP-NN computes the difference between the calculated output and the target output, and this error is backpropagated through the network. During this process, the weights of the network, including those of the hidden nodes, are adjusted to improve the classification accuracy. The network has three main kinds of layers: (1) the input layer, (2) the hidden layer, and (3) the output layer, and the number of hidden units depends on the complexity of the problem. The two important steps of BP-NN are (1) forward propagation and (2) backward propagation of error (Fig. 4).

- Input: The activity of the input units represents the raw data provided to the network.
- Hidden: The activity of the hidden units is determined by the activity of the input units and the weights on the links between the input and hidden units.
- Output: The behavior of the output units is determined by the behavior of the hidden units and the weights between the hidden and output units.

Step 1: Forward Propagation.

During this process, the output is calculated from the input data and the current weights. The values of the hidden units and the output units depend on:

- The values of the previous layer that are connected to the unit.
- The weights between the previous layer and the current layer.
- The threshold values of the current layer.

The output is calculated with an activation function; in this work, the sigmoid function is used,

\[f\left( x \right) = \frac{1}{{1 + e^{ - x} }}\]
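Forward propagation through one hidden layer can be sketched as follows. This is an illustration under assumptions rather than the authors' code: the threshold of each unit is subtracted from its weighted input sum before the sigmoid is applied, and the function names and the example weights are hypothetical.

```python
import math


def sigmoid(x):
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_output(inputs, weights, thresholds):
    """Output of one layer; weights[j][i] links input i to unit j."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) - theta)
        for row, theta in zip(weights, thresholds)
    ]


def forward(inputs, hidden_weights, hidden_thresholds,
            output_weights, output_thresholds):
    """Propagate the inputs through the hidden layer to the output layer."""
    hidden = layer_output(inputs, hidden_weights, hidden_thresholds)
    output = layer_output(hidden, output_weights, output_thresholds)
    return hidden, output


# Made-up weights for a network with 2 inputs, 2 hidden units, and 1 output.
hidden, output = forward(
    inputs=[1.0, 0.0],
    hidden_weights=[[0.4, -0.2], [0.1, 0.3]],
    hidden_thresholds=[0.0, 0.1],
    output_weights=[[0.5, -0.5]],
    output_thresholds=[0.0],
)
print(hidden, output)
```

Each hidden value depends only on the previous layer's values, the connecting weights, and the unit's threshold, which matches the three dependencies listed in the text.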

Step 2: Backward Propagation of Error.

The error value is calculated from the difference between the target value and the actual output value. The error is backpropagated from the output layer through the hidden layer, and at each layer the error is used to adjust the weights and thus reduce the error rate at each unit. The entire process is repeated until the expected output is obtained. Let the number of input nodes be n, the number of hidden nodes be h, and the number of output nodes be o, and let \(I_{px}\) denote the xth input value of instance p. The weight from the xth input node to hidden node h is represented by \(V_{hx}\), and the weight from hidden node h to the jth output node is represented by \(\omega_{jh}\). Treating each threshold as an additional connection weight, the output of hidden node h is the activation function applied to the weighted sum of the inputs \(I_{px}\) with weights \(V_{hx}\).

The output of the jth output node, \(y_{pj}\), is computed in the same way from the hidden-layer outputs with weights \(\omega_{jh}\).

Here the sigmoid function, \(f(x) = 1/(1 + e^{-x})\), is chosen as the incentive (activation) function.

The global error function is calculated by summing the errors of the individual samples,

where \(E_{p}\) is the error of sample p and \(t_{{{\text{ph}}}}\) is the ideal (target) output.
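The quantities described in this step can be written out in one common textbook form. This is a sketch under stated assumptions rather than the authors' exact notation: the hidden-node output symbol \(z_{ph}\), the hidden-node count H, the target symbol \(t_{pj}\) for output node j, the convention \(I_{p0} = z_{p0} = 1\) that folds each threshold into a weight with index 0, and the squared-error form of \(E_{p}\) are all assumptions.

\[z_{ph} = f\left( {\sum\limits_{x = 0}^{n} {V_{hx} I_{px} } } \right),\quad y_{pj} = f\left( {\sum\limits_{h = 0}^{H} {\omega_{jh} z_{ph} } } \right),\quad f\left( u \right) = \frac{1}{{1 + e^{ - u} }}\]

\[E = \sum\limits_{p} {E_{p} } ,\quad E_{p} = \frac{1}{2}\sum\limits_{j = 1}^{o} {\left( {t_{pj} - y_{pj} } \right)^{2} }\]

Under these assumptions, the derivative of the sigmoid is \(f^{\prime}(u) = f(u)\left( {1 - f(u)} \right)\), which is what makes the gradient of \(E\) with respect to each weight inexpensive to compute.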

The weights of the output-layer neurons are adjusted by gradient descent on the global error,

where η represents the learning rate, whose value ranges between 0.1 and 0.3.

The weights of the hidden-layer neurons are adjusted in the same way, using the error propagated back from the output layer.

In the forward propagation process, the input data are fed through one layer after another; each intermediate layer is processed in turn, and the actual output \(y_{pj}\) of every output unit is evaluated. In backpropagation, the process is reversed: if the output layer does not produce the expected output, the difference between the actual and expected output is computed recursively, layer by layer. The weight changes \(\Delta \omega_{jh}\) and \(\Delta \delta_{jh}\) are computed using the gradient descent method (Table 1).
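The two steps can be combined into a small training loop. The sketch below is an illustration under assumptions, not the authors' modified BP-NN: it uses one hidden layer, a single output unit, a squared-error loss, per-sample (online) gradient descent, thresholds folded in as bias weights, and a learning rate of 0.3 taken from the upper end of the range given in the text. The function names, the network size, and the toy OR data are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def train_bpnn(samples, n_hidden=3, eta=0.3, epochs=5000, seed=0):
    """Train a one-hidden-layer network; returns weights and error history."""
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    # The last weight of every unit acts on a constant 1.0 (the bias/threshold).
    V = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    W = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
    history = []
    for _ in range(epochs):
        total_error = 0.0
        for inputs, target in samples:
            # Forward propagation
            x = list(inputs) + [1.0]
            z = [sigmoid(sum(v * xi for v, xi in zip(row, x))) for row in V] + [1.0]
            y = sigmoid(sum(w * zh for w, zh in zip(W, z)))
            total_error += 0.5 * (target - y) ** 2
            # Backward propagation of the error
            delta_out = (target - y) * y * (1.0 - y)
            delta_hidden = [
                delta_out * W[h] * z[h] * (1.0 - z[h]) for h in range(n_hidden)
            ]
            # Gradient descent weight updates
            for h in range(n_hidden + 1):
                W[h] += eta * delta_out * z[h]
            for h in range(n_hidden):
                for i in range(n_in + 1):
                    V[h][i] += eta * delta_hidden[h] * x[i]
        history.append(total_error)
    return V, W, history


def predict(V, W, inputs):
    """Network output in (0, 1) for one input vector."""
    x = list(inputs) + [1.0]
    z = [sigmoid(sum(v * xi for v, xi in zip(row, x))) for row in V] + [1.0]
    return sigmoid(sum(w * zh for w, zh in zip(W, z)))


# Toy data (logical OR), for illustration only.
samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
V, W, history = train_bpnn(samples)
print("first epoch error:", history[0], "last epoch error:", history[-1])
```

The hidden-layer error terms are computed from the output weights before those weights are updated, so that both updates use the gradient evaluated at the same point.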

4.3 Validation Metrics

Leave-one-out cross-validation (LOOCV) and k-fold cross-validation are the two validation methods used in this paper to assess the performance of the proposed method.

4.3.1 Leave-One-Out Cross-Validation (LOOCV)

LOOCV is a cross-validation method that performs testing in rounds. In each round, the classifier is trained on a set of samples and then tested on the rest. Finally, the classification accuracy values of all rounds are averaged. Many validation methods introduced later also build on cross-validation.

LOOCV is one of the most commonly used methods for error estimation. A practical way to assess the efficacy of a feature selection method is to define a classification task and measure the accuracy of the classifier. LOOCV is applied to the heart disease dataset: the classifier is trained on n − 1 samples and tested on the remaining one. This process is repeated n times, once for each observation, and the mean square error is computed over all rounds.
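The LOOCV loop can be sketched independently of the classifier. In the sketch below the classifier is a deliberately trivial stand-in (a threshold halfway between the two class means of a single feature), not the BP-NN, and the loop reports accuracy rather than mean square error; the function names and the data are hypothetical.

```python
def loocv_accuracy(samples, labels, train_fn, predict_fn):
    """Hold out each sample once, train on the other n - 1, and average."""
    correct = 0
    for i in range(len(samples)):
        train_x = samples[:i] + samples[i + 1:]
        train_y = labels[:i] + labels[i + 1:]
        model = train_fn(train_x, train_y)
        if predict_fn(model, samples[i]) == labels[i]:
            correct += 1
    return correct / len(samples)


def train_midpoint(xs, ys):
    """Stand-in classifier: threshold halfway between the class means."""
    class0 = [x for x, y in zip(xs, ys) if y == 0]
    class1 = [x for x, y in zip(xs, ys) if y == 1]
    return (sum(class0) / len(class0) + sum(class1) / len(class1)) / 2.0


def predict_midpoint(threshold, x):
    return 1 if x > threshold else 0


# Made-up one-feature data, for illustration only.
xs = [1.0, 2.0, 3.0, 7.0, 8.0, 9.0]
ys = [0, 0, 0, 1, 1, 1]
accuracy = loocv_accuracy(xs, ys, train_midpoint, predict_midpoint)
print(accuracy)
```

Any classifier can be plugged in by passing its own training and prediction functions, at the cost of training it n times.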

4.3.2 K-Fold Validation

In the k-fold validation method, the dataset is divided randomly into k sets of samples, and the procedure iterates for k rounds. In each round, one of these sets is used for testing while the rest are used for training. When k is equal to the number of available samples, k-fold validation reduces to LOOCV: in each round one sample is held out for validation, while the rest are used for training.
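The random partition into k folds can be sketched with index lists. This is a minimal illustration; the function name `k_fold_indices` and the fixed seed are assumptions made so that the example is reproducible.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Return k (train_indices, test_indices) pairs over shuffled indices."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k nearly equal-sized folds
    splits = []
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test))
    return splits


splits = k_fold_indices(n_samples=10, k=5)
for train, test in splits:
    print("train:", sorted(train), "test:", sorted(test))
```

Every sample appears in exactly one test fold, and calling the function with k equal to the number of samples yields one held-out sample per round.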

4.3.3 Matlab Tool

Matlab is an important tool for data analysis. It has numerous toolboxes that assist in complex calculations, graphs can be plotted directly using built-in functions, and it offers many options that are useful for numerical data analysis. Matlab is a popular and well-suited software package that performs well at:

- Prototyping
- Engineering simulation
- Fast visualization of data

Hence, Matlab was used for the heart disease dataset in this research. Matlab is often used to tune and compare the algorithms that are tried out, and many researchers adopt it as a primary tool for their research work.

5 Result and Discussion

Heart diseases are a severe threat and typically occur when the arteries that provide oxygen and blood to the heart are narrowed or entirely blocked. Medical organizations produce a vast amount of data that is not appropriately utilized. Classification, i.e., assigning observations to disjoint groups, plays an important role in decision-making. The data from the various sensors are classified using the BP-NN algorithm, which provides better accuracy than the existing algorithms (Table 2).

6 Performance Evaluation

Performance is evaluated with the following metrics: accuracy, sensitivity, specificity, precision, and recall. The BP-NN classifier with mRmR is compared with the existing algorithm, and the result is shown in the preceding section. The experimental results show that the classification accuracy, specificity, sensitivity, precision, and recall are better than those of the standard algorithm (Table 3).

TP: True Positive, where an unhealthy person is correctly diagnosed as unhealthy.

TN: True Negative, where a healthy person is correctly diagnosed as healthy.

FP: False Positive, where a healthy person is wrongly identified as sick.

FN: False Negative, where an unhealthy person is wrongly diagnosed as healthy.
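The five metrics follow from these four counts by their standard definitions. The sketch below assumes that the positive class means "has heart disease"; the function name and the example counts are made up for illustration and are not results from this paper.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard metrics derived from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),  # true positive rate
        "specificity": tn / (tn + fp),  # true negative rate
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),       # same formula as sensitivity
    }


# Made-up counts, for illustration only.
metrics = classification_metrics(tp=45, tn=40, fp=5, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

In these standard definitions sensitivity and recall share one formula, so separate values for the two can only arise if they are computed on different classes or different data splits.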

The proposed method is compared with and without the feature selection algorithm in terms of sensitivity, specificity, precision, recall, and accuracy. The proposed classifier achieves a sensitivity of 98.21%, a specificity of 97.85%, a precision of 98.41%, a recall of 97.43%, and an accuracy of 97.09%, and the results show that the proposed work attains higher accuracy than the existing approach (Figs. 5, 6).

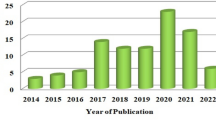

With the help of the MATLAB rule viewer shown above, the result can be tested easily. After testing on four patient databases, the proposed diagnosis system achieved an accuracy of 94.5%. The experimental results are compared with earlier research in Table 4 and show that the proposed system is more efficient and accurate than other existing diagnosis systems (Fig. 7).

7 Conclusion and Future Work

Heart diseases pose a serious threat. Cardiac problems arise when the arteries that supply oxygen and blood to the heart are narrowed or completely blocked. Although healthcare organizations produce a huge amount of data, the data are not appropriately utilized. In this study, a deep learning-based diagnosis system for heart disease prediction was developed. The proposed classifier achieves a sensitivity of 98.21%, a specificity of 97.85%, a precision of 98.41%, a recall of 97.43%, and an accuracy of 97.09%. The BP-NN with mRmR feature selection obtained a higher accuracy than the BP-NN classifier without a feature selection process. These results indicate that the proposed algorithm predicts heart disease more effectively than the other classifier algorithms.

Further work can apply automatic medical diagnostic techniques to the classification of other conditions, such as dementia, glaucoma, and lung conditions such as emphysema.

References

Rao, R.: Survey on prediction of heart morbidity using data mining techniques. Knowl. Manag. 1(3), 14–34 (2011)

Davari Dolatabadi, A.; Khadem, S.E.Z.; Asl, B.M.: Automated diagnosis of coronary artery disease (CAD) patients using optimized SVM, Comput. Method. Programs Biomed. 138, 117–126 (2017).

Bui, A.L.; Horwich, T.B.; Fonarow, G.C.: Epidemiology and risk profile of heart failure. Nat. Rev. Cardiol. 8(1), 30–41 (2011)

Heidenreich, P.A.; Trogdon, J.G.; Khavjou, O.A., et al.: Forecasting the future of cardiovascular disease in the United States: a policy statement from the American heart association. Circulation 123(8), 933–944 (2011)

Ghwanmeh, S.; Mohammad, A.; Al-Ibrahim, A.: Innovative artificial neural networks-based decision support system for heart diseases diagnosis. J. Intell. Learn. Syst. Appl. 5(3), 176–183 (2013)

Al-Shayea, Q.K.: Artificial neural networks in medical diagnosis. Int. J. Comput. Sci. Issue. 8(2), 150–154 (2011)

Vanisree, K.; Singaraju, J.: Decision support system for congenital heart disease diagnosis based on signs and symptoms using neural networks. Int. J. Comput. Appl. 19(6), 6–12 (2011)

Samuel, O.W.; Asogbon, G.M.; Sangaiah, A.K.; Fang, P.; Li, G.: An integrated decision support system based on ANN and Fuzzy_AHP for heart failure risk prediction. Expert Syst. Appl. 68, 163–172 (2017)

Gokulnath, C.B.; Shantharajah, S.P.: An optimized feature selection based on genetic approach and support vector machine for heart disease. Clust. Comput. 22(6), 14777–14787 (2019)

Olaniyi, E.O.; Oyedotun, O.K.: Heart diseases diagnosis using neural networks arbitration. Int. J. Intel. Syst. Appl. 7(12), 75–82 (2015)

Das, R.; Turkoglu, I.; Sengur, A.: Effective diagnosis of heart disease through neural networks ensembles. Expert Syst. Appl. 36(4), 7675–7680 (2009)

Jabbar, M.A.; Deekshatulu, B.L.; Chandra, P.: Classification of heart disease using artificial neural network and feature subset selection, Glob. J. Comput. Sci. Technol. Neural Artif. Intel. 13(11) (2013)

Peng, H.; Long, F.; Ding, C.: Feature selection based on mutual information criteria of max-dependency, maxrelevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intel. 27(8), 1226–1238 (2005)

Chen, H.-L.; Yang, B.; Liu, J.; Liu, D.Y.: A support vector machine classifier with rough set-based feature selection for breast cancer diagnosis. Expert Syst. Appl. 38(7), 9014–9022 (2011)

Babu, G.C.; Shantharajah, S.P.: Optimal body mass index cutoff point for cardiovascular disease and high blood pressure. Neural Comput. Appl. 31(5), 1585–1594 (2019)

Patil, S.B.; Kumaraswamy, Y.S.: Extraction of significant patterns from heart disease warehouses for heart attack prediction. IJCSNS Int. J. Comput. Sci. Netw. Secur. 9(2) (2009)

Abdul, S.; Bhagile, V.D.; Manza, R.R.; Ramteke, R.J.: Diagnosis and medical prescription of heart disease using support vector machine and feed forward back propagation technique, (IJCSE) Int. J. Comput. Sci. Eng. 02(06), 2150–2159 (2010)

Thuy Nguyen, T.T.; Davis, D.N.: A clustering algorithm for predicting cardio vascular risk. In: Proceedings of the World Congress on Engineering 2017 Vol I, WCE 2007, London, U.K (2017)

Hu, G.; Root, M.M.: Building prediction models for coronary heart disease by synthesizing multiple longitudinal research findings, Europ. Sci. Cardiol. (2015)

Kumar, P.M.; Lokesh, S.; Varatharajan, R.; Babu, G.C.; Parthasarathy, P.: Cloud and IoT based disease prediction and diagnosis system for healthcare using Fuzzy neural classifier. Futur. Gener. Comput. Syst. 86, 527–534 (2018)

Brahmi, B.; Shirvani, M.H.: Prediction and diagnosis of heart disease by data mining techniques. J. Multidiscip. Eng. Sci. Technol. 2(2), 164–168 (2015)

Ajam, N.: Heart disease diagnoses using artificial neural network. Int. Insit. Sci. Technol. Educ. 5(4), 7–11 (2015)

Mujawar, S.H.; Devale, P.R.: Prediction of heart disease using modified K-means and by using Naïve bayes. Int. J. Innov. Res. Comput. Commun. Eng. 3, 10265–10273 (2015)

Gaziano, T.A.; Bitton, A.; Anand, S.; Abrahams-Gessel, S.; Murphy, A.: Growing epidemic of coronary heart disease in low-and middle-income countries. Curr. Probl. Cardiol. 35(2), 72–115 (2010)

Kumar, P.M., Hong, C.S., Babu, G.C., Selvaraj, J., Gandhi, U.D.: Cloud-and IoT-based deep learning technique-incorporated secured health monitoring system for dead diseases. Soft Comput. 1–16 (2021)

Pouriyeh, S.; Vahid, S.; Sannino, G.; De Pietro, G.; Arabnia, H.; Gutierrez, J.A.: Comprehensive investigation and comparison of machine learning techniques in the domain of heart disease. In: 2017 IEEE Symposium on Computers and Communications (ISCC). IEEE. p. 204–207

Otoom, A.F.; Abdallah, E.E.; Kilani, Y.; Kefaye, A.; Ashour, M.: Effective diagnosis and monitoring of heart disease. Int. J. Softw. Eng. Appl. 9(1), 143–156 (2015)

Duff, F.L.; Munteanb, C.; Cuggiaa, M.; Mabob, P.: Predicting survival causes after out of hospital cardiac arrest using data mining method. Stud. Health Technol. Inform. 107(Pt. 2), 1256–1259 (2004)

Szymanski, B.; Han, L.; Embrechts, M.; Ross, A.; Sternickel, K.; Zhu, L.: Using efficient Supanova Kernel for heart disease diagnosis. In: Proceedings of ANNIE 2006, intelligent engineering systems through artificial neural networks 16, 305–310 (2006)

Noh, K.; Lee, H.G.; Shon, H.-S.; Lee, B.J.; Ryu, K.H.: Associative Classification Approach for Diagnosing Cardiovascular Disease, vol. 345, pp. 721–727. Springer (2006)

Dangare, C.S.; Apte, S.S.: Improved study of heart disease prediction system using data mining classification techniques. Int. J. Comput. Appl. 47(10), 44–48 (2012)

Fang, X.; Hodge, B.M.; Du, E.; Zhang, N.; Li, F.: Modelling wind power spatial-temporal correlation in multi-interval optimal power flow: a sparse correlation matrix approach. Appl. Energy 230, 531–539 (2018)

Gavhane, A.; Kokkula, G.; Pandya, I.; Devadkar, K.: Prediction of heart disease using machine learning. In: 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA), pp. 1275–1278. IEEE (2018)

Jenzi, I.; Priyanka, P.; Alli, P.: A reliable classifier model using data mining approach for heart disease prediction. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 3(3), 20–24 (2013)

Lopez, A.D.; Mathers, C.D.; Ezzati, M.; Jamison, D.T.; Murray, C.J.: Global and regional burden of disease and risk factors, 2001: systematic analysis of population health data. Lancet 367(9524), 1747–1757 (2016)

Acknowledgements

The authors extend their appreciation to the Deanship of Scientific Research at King Khalid University for funding this work through the General Research Project under grant number (R.G.P.1/200/41).


Vincent Paul, S.M., Balasubramaniam, S., Panchatcharam, P. et al. Intelligent Framework for Prediction of Heart Disease using Deep Learning. Arab J Sci Eng 47, 2159–2169 (2022). https://doi.org/10.1007/s13369-021-06058-9


DOI: https://doi.org/10.1007/s13369-021-06058-9