Abstract

The rate of inoculum decline was determined for Gaeumannomyces graminis var. tritici (Ggt), a fungus that causes take-all in cereals and survives saprophytically on crop debris between crops. Crop residues and Ggt inoculum were assessed in three experiments, in soil cores containing residues of six wheat cultivars from two fields, at intervals of up to 7 months after harvest. By then, for all cultivars, approximately three quarters of the original plant residue mass remained where the stems were on the soil surface. The quantity of Ggt DNA per plant declined on average by 95% after 7 months, with most of the remaining inoculum residing in the stems and crown. Viable inoculum remaining in the plant residues was sufficient to infect newly planted wheat seedlings. It appears that after a wheat crop with take-all is harvested, more than 7 months is required for inoculum in residues to decline sufficiently in soil to prevent reinfection of Ggt-susceptible cereal crops.

Introduction

Take-all, caused by the fungus Gaeumannomyces graminis var. tritici (Ggt), is the most important root disease of wheat (Triticum aestivum L.) worldwide (Hornby et al. 1998). It results in blackened roots, stunting and premature death of plants (Cook 2003). Between crops, Ggt survives saprophytically on crop residues, and its concentration declines as these residues decay (Hornby 1981). The amount of inoculum present in the soil when the crop is sown affects the extent of primary infection of a susceptible host cereal such as wheat (Bailey and Gilligan 1999). Pre-sowing soil Ggt concentrations of <6 pg and >80 pg Ggt DNA/g soil are associated with low and high risk, respectively, of take-all in second wheat crops under environmental conditions that favour disease development (Ophel-Keller et al. 2008; Bithell et al. 2012). Non-host break crops provide an important means of reducing Ggt concentrations in soil (Hornby 1981), and their inclusion in crop rotations is an effective method of take-all management (Yarham 1981). In New Zealand, Ggt concentrations in soil decreased in proportion to the length of non-host break crops (van Toor et al. 2013) and declined by 78% over the 4 months following wheat harvest (Bithell et al. 2009), but soil may still contain viable inoculum 7 months after harvest (van Toor et al. 2016a).
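The risk bands quoted above can be expressed as a simple lookup. The sketch below is illustrative only: the function name and the label for concentrations between the two thresholds are hypothetical, because the cited thresholds define only the low- and high-risk ends, and they apply to second wheat crops under conditions favourable to disease.

```python
# Thresholds (pg Ggt DNA per g soil) quoted in the text for second wheat crops.
LOW_RISK_BELOW_PG = 6.0
HIGH_RISK_ABOVE_PG = 80.0


def take_all_risk(pg_ggt_dna_per_g_soil: float) -> str:
    """Hypothetical helper: map pre-sowing soil Ggt DNA to a take-all risk band."""
    if pg_ggt_dna_per_g_soil < 0:
        raise ValueError("DNA concentration cannot be negative")
    if pg_ggt_dna_per_g_soil < LOW_RISK_BELOW_PG:
        return "low"
    if pg_ggt_dna_per_g_soil > HIGH_RISK_ABOVE_PG:
        return "high"
    # Values between the thresholds are not classified in the text; this label is an assumption.
    return "intermediate"


for value in (2.5, 40.0, 120.0):
    print(f"{value:6.1f} pg/g -> {take_all_risk(value)}")
```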

The rate of inoculum decline is related to the decomposition of cereal residues, which in turn is affected by air temperature, soil moisture, initial soil nitrogen (N) content and soil biological activity (Douglas and Richman 1992). Soil respiration is an indicator of biological activity: Cookson et al. (1998) found that the substrate-induced respiration rate after 28 days of decay accurately predicted residue mass loss over the first 90 days of decay. Earthworm activity (Hume et al. 2015), urea-N and Trichoderma (Sharma et al. 2012) also enhanced the decay of crop residues, with a consequent decline in Ggt inoculum. However, the rate of inoculum decline in crop residues after cereal harvest has not been established. Since cereal cultivars differ in their tolerance to Ggt (van Toor et al. 2016b), the rate of inoculum decline in residues may also depend on cultivar. The time needed after cereal harvest for crop residues to degrade to the point where Ggt is no longer viable is an important consideration when determining the length of a fallow or break crop before the next Ggt-susceptible crop is sown in that field.

In the present study, the rates of decomposition and Ggt inoculum decline were measured in residues of six wheat cultivars in soil cores removed from Ggt-infected wheat crops. The distribution of Ggt inoculum among the stem and root components of the crop residues at harvest, and its rate of decline within each component under natural soil conditions, were determined over the 7 months following harvest. Seven months was considered the longest time a cereal grower would leave a field fallow before sowing another crop susceptible to take-all. Earthworm density, N concentration and soil conditions were also recorded.

Materials and methods

Core sampling

Soil cores, 100 mm in diameter and 150 mm deep, were removed 2–3 weeks after harvest from the crop residues of two wheat fields in Canterbury, New Zealand, using a split-hinge soil corer (Kain and Young 1975). Each core was placed into a 1.5 L black plastic plant pot (tapered from 132 mm to 100 mm internal diameter, 140 mm tall). The same core and pot dimensions were used in both experiments.

Experiment 1

On 15 February 2011, 15 days after harvest, eight blocks of 15 cores were removed from patches of take-all-affected crop residues in a field of first-year ‘Excede’ wheat following maize at Lincoln (43°37′54″S, 172°27′35″E). The 15 cores within each block were randomly assigned to three treatments, each destructively assessed at five time points. The treatments were: ‘Buried crop residues’, to measure the rate of decay of crop residues under conditions approximating ploughing; ‘Undisturbed residues’, to measure the rate of decay of undisturbed plant material; and ‘Infectivity’, to detect the presence of pathogenic Ggt.
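The within-block allocation described above can be sketched as a plain randomisation of the 15 cores in a block to the 15 treatment × time combinations. This is a simplification: the spatial arrangement of the cores used a resolvable row-column design generated with CycDesign, which this sketch does not reproduce, and the core labels and random seed are hypothetical.

```python
import itertools
import random

TREATMENTS = ("Buried crop residues", "Undisturbed residues", "Infectivity")
MONTHS_AFTER_HARVEST = (0, 1, 2, 4, 7)


def randomise_block(core_ids, rng):
    """Randomly assign each core in one block to a unique treatment x time combination."""
    combos = list(itertools.product(TREATMENTS, MONTHS_AFTER_HARVEST))  # 3 x 5 = 15
    if len(core_ids) != len(combos):
        raise ValueError(f"expected {len(combos)} cores, got {len(core_ids)}")
    shuffled = list(core_ids)
    rng.shuffle(shuffled)
    return dict(zip(shuffled, combos))


rng = random.Random(2011)  # hypothetical seed, fixed so the allocation is reproducible
layout = {
    block: randomise_block([f"B{block}-C{i}" for i in range(1, 16)], rng)
    for block in range(1, 9)  # eight blocks
}
print(layout[1])
```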

The cores for Undisturbed residues and Infectivity remained intact. Stubble and roots from the Buried crop residue pots were removed from the top 100 mm portion of the core, washed, and the stems trimmed to approximately 30 mm from the crown. The soil from the top portion of the core was passed through a 2 mm sieve to remove any remaining root fragments and weeds. So that all material from the plant residues could be recovered at each assessment time, a synthetic 1-mm mesh was placed on the remaining 50 mm of core in the pot, and the washed plant residues laid on it. The sieved soil was then returned around the roots.

The assigned cores were transferred to a shade house at Plant & Food Research, Lincoln, Canterbury, and arranged 100 mm apart on a concrete surface according to a randomised block resolvable row-column design generated with CycDesign (CycSoftware 2009). The cores were kept there under natural environmental conditions until they were assessed on 15 February, 17 March, 18 April, 15 June and 17 August 2011, designated as 0, 1, 2, 4 and 7 months after harvest, respectively. Cores designated for Infectivity were sown with germinated wheat seed, the roots of which were later assessed for take-all and Ggt DNA. The wheat residues were removed from the cores designated for Buried crop residues and Undisturbed residues, separated into tissue components, weighed and assessed for Ggt DNA concentration.

Experiment 2

The second experiment was conducted on soil cores taken from a wheat cultivar trial in a field at Makikihi, Canterbury (44°37′02″S, 171°08′51″E) (van Toor et al. 2016b). At sowing on 9 May 2013, the plots had been inoculated with oat grains colonised by Ggt isolates known to be pathogenic to wheat (Chng et al. 2005). Consequently, the cores contained wheat residues infected with both natural and known pathogenic strains of Ggt. Four replicate plots of each of the cultivars ‘Consort’, ‘Starfire’, ‘Phoenix’, ‘Sage’ and ‘Wakanui’ were selected to supply the wheat residues. Wheat in the trial was harvested on 20 February 2014, and four cores were removed from each selected plot 13 days later.

In the laboratory, the gap between each core and its pot was filled with coarse sand. The pots of each cultivar were randomly assigned to the four assessment times. The stubble was left intact, as for Undisturbed residues in Experiment 1. The 20 cores allocated to Time 0 were retained for assessment. The remaining 60 pots were transferred to a grid of prepared holes in a pasture field trimmed to 5 mm at Plant & Food Research, Lincoln. Inserting the pots into the soil kept the cores at natural soil moisture and ambient soil temperature. The cores were arranged according to a randomised block resolvable row-column design generated with CycDesign. The cores were assessed on 5 March, 6 May, 23 June and 16 September 2014, designated as 0, 2, 4 and 7 months after harvest, respectively. At each assessment time, the cores were removed from the field and mineral N was first assessed in the laboratory. The cores were then sown with wheat seedlings and transferred to a greenhouse for 10 days to test for Ggt infectivity, after which the wheat residues were extracted and partitioned into their components for dry weight determination, and earthworms were counted. The concentration of Ggt DNA in each tissue component was also assessed for ‘Consort’, ‘Starfire’ and ‘Wakanui’.

Assessments

Mineral nitrogen

Mineral N was measured in cores immediately after they were removed from the field. At each assessment time, a 14 mm diameter by 100 mm deep soil sample was removed 2–3 cm from the centre of each core and the cores were stored at 4 °C in seal-lock plastic bags. The following day mineral N was extracted from a 5-g subsample from each core, by shaking with 25 mL of 2 M KCl solution for 1 h. Ammonium (NH4-N) and nitrate (NO3-N) in the KCl extracts were determined by standard colorimetric methods using a Lachat QuikChem 8500 Series 2 Flow Injection Analysis System (Lachat Instruments, Loveland, Colorado, USA) (Keeney and Nelson 1982; Harbridge 2007a; Harbridge 2007b).
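The conversion from extract concentration to mineral N per gram of soil follows from the extraction ratio (5 g soil in 25 mL KCl). The sketch below is a minimal illustration, not the laboratory's procedure: the extract concentrations and soil water content are hypothetical, and the correction for water held in the field-moist subsample is an assumption, since the text does not say whether results were corrected to a dry-soil basis.

```python
def mineral_n_ug_per_g_dry_soil(extract_mg_n_per_l, moist_soil_g=5.0,
                                kcl_ml=25.0, gravimetric_water=0.0):
    """Convert an NH4-N or NO3-N extract concentration to ug N per g dry soil.

    extract_mg_n_per_l: analyser result in mg N/L (numerically equal to ug N/mL).
    gravimetric_water: assumed g water per g dry soil in the field-moist
    subsample; 0 treats the subsample as dry soil.
    """
    dry_soil_g = moist_soil_g / (1.0 + gravimetric_water)
    soil_water_ml = moist_soil_g - dry_soil_g  # assumes 1 g water = 1 mL
    total_liquid_ml = kcl_ml + soil_water_ml
    return extract_mg_n_per_l * total_liquid_ml / dry_soil_g


# Hypothetical extract concentrations and 25% gravimetric water content
nh4_n = mineral_n_ug_per_g_dry_soil(0.4, gravimetric_water=0.25)
no3_n = mineral_n_ug_per_g_dry_soil(1.2, gravimetric_water=0.25)
print(f"mineral N = {nh4_n + no3_n:.1f} ug/g dry soil")  # -> mineral N = 10.4 ug/g dry soil
```

Mineral N is the sum of the NH4-N and NO3-N results, each converted separately.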

Ggt infectivity

The wheat seedling bioassay followed that described by Chng et al. (2004). Eight germinated seeds of the wheat ‘Conquest’ were sown 20 mm deep into each core, equally spaced on a circle 30 mm from the centre of the core. The cores were transferred to a glasshouse set at 22 °C and watered daily. After 14 days, the established seedlings were assessed for take-all severity (% of root area affected). All roots from the wheat seedlings were then air-dried at 40 °C for 3 days, frozen at −20 °C and assessed for Ggt DNA (see below).
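As a small illustration of the sowing layout, eight seeds spaced evenly on a 30 mm-radius circle sit 45° apart, which puts neighbouring seeds about 23 mm apart. The helper name below is hypothetical.

```python
import math


def seed_positions(n_seeds=8, radius_mm=30.0):
    """(x, y) positions in mm from the core centre for seeds spaced evenly on a circle."""
    step = 2 * math.pi / n_seeds
    return [(radius_mm * math.cos(i * step), radius_mm * math.sin(i * step))
            for i in range(n_seeds)]


positions = seed_positions()
# Straight-line (chord) spacing between neighbouring seeds: 2 r sin(pi / n)
spacing_mm = 2 * 30.0 * math.sin(math.pi / 8)
print(f"{len(positions)} seeds, {spacing_mm:.1f} mm apart")  # -> 8 seeds, 23.0 mm apart
```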

Residue dissection

After the wheat seedlings for the Ggt infectivity assessment had been removed from the cores, the crop residues from the same cores were separated from the soil and spray-washed over a series of sieves (Laboratory Test Sieves, Endecotts, London, UK) with mesh sizes of 4.0, 2.0, 1.0 and 0.5 mm in sequence. The number of plants contributing to the crop residues was counted. The crop residues were partitioned into stems, crown, thick roots (>1 mm diam.) and thin roots (<1 mm diam.), plus the >4, 2–4, 1–2 and 0.5–1 mm root fragments retained on each sieve. In Experiment 1, stems ranged from 30 to 75 mm in length, stem dry weights and Ggt concentrations were adjusted to 50 mm lengths, and the root fragments were analysed separately. In Experiment 2, all stems were trimmed to 50 mm from the crown, the >4 and 2–4 mm root fragments were combined with the thick roots, and the 1–2 and 0.5–1 mm root fragments were combined with the thin roots. The crop residue parts were air-dried at 40 °C for 72 h, weighed separately, and then frozen at −20 °C until analysis for Ggt DNA.
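For Experiment 1, one plausible way to adjust stems of 30–75 mm to a common 50 mm length is linear scaling. The sketch assumes, hypothetically, that dry mass and Ggt DNA are distributed uniformly along the stem; the text does not state how the adjustment was made, and the example values are invented.

```python
def standardise_stem(dry_weight_mg, length_mm, ggt_ng_per_mg, target_mm=50.0):
    """Rescale one stem segment to target_mm; return (dry weight mg, Ggt DNA ng).

    Hypothetical assumption: dry mass and Ggt DNA are spread uniformly along
    the stem, so both scale linearly with length and the concentration
    (ng per mg) is unchanged by the adjustment.
    """
    if length_mm <= 0:
        raise ValueError("stem length must be positive")
    weight_mg = dry_weight_mg * target_mm / length_mm
    return weight_mg, weight_mg * ggt_ng_per_mg


weight, dna = standardise_stem(dry_weight_mg=120.0, length_mm=75.0, ggt_ng_per_mg=0.5)
print(f"{weight:.1f} mg and {dna:.1f} ng Ggt DNA per 50 mm stem")  # -> 80.0 mg and 40.0 ng Ggt DNA per 50 mm stem
```

If Ggt were concentrated near the crown rather than spread evenly, linear scaling would underestimate the DNA in shortened stems and overestimate it in long ones.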

Ggt DNA quantification

Roots were prepared for quantitative polymerase chain reaction (qPCR) by grinding each dried sample in liquid nitrogen with a mortar and pestle. DNA was extracted from a ≈20-mg subsample of the ground roots using a DNeasy® Plant Mini Kit (Qiagen, New Zealand) following the manufacturer’s instructions. The extracted DNA was suspended in 200 or 400 μL of the manufacturer’s TE buffer (pH 8.0). Where necessary, samples were diluted 1:20 with TE buffer (pH 8.0) to reduce the effects of inhibitors.

The amount of Ggt DNA in each sample was quantified using the qPCR method described by Keenan et al. (2015). PCR amplifications were carried out in a StepOnePlus™ qPCR machine (Applied Biosystems) for Experiment 1 and in a CFX96™ Real-Time System (Bio-Rad) for Experiment 2. A SYBR Green-based qPCR with primers Pot25SF and Pot25SR, targeting the plant 25S ribosomal RNA gene sequence, identified samples containing inhibitors likely to affect detection of Ggt DNA. Inhibitors were found only in some samples of root fragments from Undisturbed residues in Experiment 1. These samples were treated as missing values, but at least one replicate of each fragment size remained in the Ggt DNA analysis. The probe GgtEFPR1 and primers GgtEFF1 and GgtEFR1, targeting the translation elongation factor 1-alpha (EF1-α) gene of Ggt, were used to measure the amount of Ggt DNA in the cereal roots. Quantities were estimated from a standard curve obtained by linear regression of the cycle threshold (Ct) values on the log10 of the initial concentration. An undetected Ct value was taken to mean that the sample contained no Ggt DNA.
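The standard-curve step can be sketched as an ordinary least-squares fit of Ct on log10 quantity, followed by inversion for unknown samples. The standards, sample Ct and function names below are hypothetical. Multiplying by the dilution factor for samples diluted 1:20 and treating an undetected Ct as zero follow the text, but the sketch does not reproduce the Keenan et al. (2015) protocol or any instrument software.

```python
def fit_standard_curve(log10_quantities, ct_values):
    """Ordinary least-squares fit of Ct = intercept + slope * log10(quantity)."""
    n = len(ct_values)
    mean_x = sum(log10_quantities) / n
    mean_y = sum(ct_values) / n
    sxx = sum((x - mean_x) ** 2 for x in log10_quantities)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(log10_quantities, ct_values))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def quantity_from_ct(ct, intercept, slope, dilution_factor=1.0):
    """Invert the standard curve; an undetected Ct (None) is taken as zero Ggt DNA."""
    if ct is None:
        return 0.0
    return dilution_factor * 10 ** ((ct - intercept) / slope)


# Hypothetical tenfold dilution series of Ggt DNA standards (log10 ng per reaction)
std_log10 = [1.0, 0.0, -1.0, -2.0, -3.0]
std_ct = [20.1, 23.4, 26.8, 30.1, 33.5]
intercept, slope = fit_standard_curve(std_log10, std_ct)
efficiency = 10 ** (-1 / slope) - 1  # amplification efficiency implied by the slope
print(f"slope {slope:.2f}, efficiency {efficiency:.1%}")
print(quantity_from_ct(28.0, intercept, slope, dilution_factor=20))  # a 1:20-diluted sample
```

A slope near −3.32 corresponds to roughly 100% amplification efficiency; the hypothetical standards here give a slope of −3.35.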

Earthworms

When the crop residues were extracted from each soil core at each assessment time in Experiment 2, earthworms were also removed and identified to species using identification guides, as in Schon (2016).

Soil conditions

For the period in which the soil cores were in the field, soil temperature, soil moisture and rainfall data were obtained from NIWA’s Broadfield weather station at Lincoln (National Institute of Water and Atmospheric Research (NIWA) 2016), within 1 km of the experiment site. For Experiment 2, soil temperature and volumetric moisture content were also measured in four additional cores, all containing the ‘Wakanui’ cultivar, which replicated the conditions of the pots in the main trial and were placed between blocks. A 5TM sensor inserted 50 mm below the top of each core was connected to a Decagon EM50™ data collection system (Decagon Devices) to record temperature and moisture at 30-min intervals.
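Monthly means, such as those reported for soil temperature and moisture, can be obtained by grouping the 30-min logger readings by calendar month. The sketch below uses an invented two-day record; the tuple layout of the readings is an assumption and does not reflect the EM50 export format.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def monthly_means(readings):
    """Average (timestamp, soil temperature C, volumetric moisture) readings by calendar month."""
    sums = defaultdict(lambda: [0.0, 0.0, 0])  # [temperature sum, moisture sum, count]
    for timestamp, temperature, moisture in readings:
        acc = sums[(timestamp.year, timestamp.month)]
        acc[0] += temperature
        acc[1] += moisture
        acc[2] += 1
    return {month: (t / n, m / n) for month, (t, m, n) in sorted(sums.items())}


# Hypothetical record: 96 half-hourly readings spanning 31 March and 1 April 2014
start = datetime(2014, 3, 31)
record = [(start + timedelta(minutes=30 * i), 15.0 if i < 48 else 13.0, 0.20)
          for i in range(96)]
for (year, month), (temp, moist) in monthly_means(record).items():
    print(f"{year}-{month:02d}: {temp:.1f} C, {100 * moist:.0f}% v/v")
```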

Statistical analysis

Crop residue dry matter data were divided by the number of plants counted per pot and then log-transformed. These data and mineral N were analysed by standard analysis of variance. All other data were initially analysed with a hierarchical generalized linear model approach (HGLM; Lee et al. 2006), with Treatment and Time as fixed effects, and replicate and other potentially important layout factors as random effects. The importance of each random effect was assessed with a χ2 test of the change in likelihood on dropping the effect, as implemented in GenStat’s HGRTEST procedure. Fixed effects were assessed similarly, using GenStat’s HGFTEST procedure. Only important random effects were retained in the final analyses. Percentage take-all severity data were analysed with a binomial-beta HGLM, with a binomial distribution for the fixed effects and a beta distribution for the random effects, both with a logit link. No random effects were found to be important, so none was included in the final analysis. However, the variation between samples increased with the treatment means, so a separate dispersion was estimated for each treatment using the joint GLM procedure (Lee et al. 2006). Numbers of earthworms and of plants per core were initially analysed with a Poisson-gamma HGLM with logarithmic links. However, none of the potential random effects was found to be important, so these were omitted from the final analysis, which therefore became a simple Poisson generalized linear model. For qPCR data, Ct values were first converted into quantities using the parameters of a standard linear regression of the Ct values of the standards on their log10 quantities. Where the qPCR result was undetermined, the quantity was fixed at zero. Since DNA quantities are essentially counts multiplied by a molecular weight, the individual data were analysed with a Poisson-gamma HGLM with log links, a method appropriate for the analysis of counts.
Fixed effects were Time, Crop residue part and their interaction. Random effects found to be important were Block (replicates), Pots, and Plant parts within Pots. The analysis included an offset (McCullagh and Nelder 1989) so that the results were expressed as DNA concentrations (ng Ggt DNA per mg tissue). The offset used was:

where EVol is the volume into which the extracted DNA was suspended, qPCRSampleWt is the weight of the sample used for DNA extraction, and WtDM is the dry weight of the plant part from which the extraction sample was taken. Predictions (means) were made for an offset of zero.
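To illustrate how an offset turns modelled quantities into concentrations, consider a Poisson model with a log link and one mean per group. With offset log(exposure), the maximum-likelihood estimate of each group mean is simply the group's total quantity divided by its total exposure, and a prediction at an offset of zero is a rate per unit exposure. This is a simplified sketch, not the analysis used here: it omits the gamma random effects of the Poisson-gamma HGLM and is not GenStat code, and the "exposure" values (mg of tissue represented in each reaction) and quantities are hypothetical.

```python
import math
from collections import defaultdict


def poisson_group_rates(groups, quantities, exposures):
    """MLE group means of a Poisson GLM with log link and offset log(exposure).

    Model: log E[y_i] = beta_g + log(exposure_i), one beta per group g.
    Setting the score to zero gives exp(beta_g) = sum(y) / sum(exposure),
    i.e. the prediction at an offset of zero is a rate per unit exposure.
    """
    total_y = defaultdict(float)
    total_e = defaultdict(float)
    for g, y, e in zip(groups, quantities, exposures):
        total_y[g] += y
        total_e[g] += e
    return {g: total_y[g] / total_e[g] for g in total_y}


def poisson_log_likelihood(rate, quantities, exposures):
    """Poisson log-likelihood, up to a constant, for a common rate."""
    return sum(y * math.log(rate * e) - rate * e for y, e in zip(quantities, exposures))


# Hypothetical data: ng Ggt DNA per reaction and mg tissue represented per reaction
parts = ["stem", "stem", "crown", "crown"]
ng_dna = [12.0, 30.0, 5.0, 9.0]
mg_tissue = [0.1, 0.2, 0.1, 0.1]
rates = poisson_group_rates(parts, ng_dna, mg_tissue)
print({part: round(r, 1) for part, r in rates.items()})  # -> {'stem': 140.0, 'crown': 70.0}
```

The Poisson likelihood also accepts non-integer quantities in practice (quasi-likelihood), which is why it is usable for DNA amounts that are only proportional to counts.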

All analyses were carried out with GenStat (GenStat Committee 2014). Experiment 2 included some treatments not discussed here, but which were included within the analyses. Means were obtained on the transformed (or link) scale along with 95% confidence limits. These were back-transformed for presentation.
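Back-transformation of a mean and its 95% limits from the log or logit scale can be sketched as below. The sketch assumes normal (Wald) limits with z = 1.96, which may differ from the limits GenStat reports, and the inputs are hypothetical. Because the limits are symmetric on the link scale, they become asymmetric after back-transformation, and a back-transformed log-scale mean is a geometric rather than an arithmetic mean.

```python
import math


def inverse_logit(v):
    """Map a logit-scale value back to a proportion."""
    return 1.0 / (1.0 + math.exp(-v))


def back_transform(mean_link, se_link, inverse, z=1.96):
    """Back-transform a link-scale mean and Wald 95% limits (mean +/- z * SE)."""
    return (inverse(mean_link),
            inverse(mean_link - z * se_link),
            inverse(mean_link + z * se_link))


# Hypothetical log-scale mineral N estimate: mean log(20) with SE 0.3
mean_n, low_n, high_n = back_transform(math.log(20.0), 0.3, math.exp)
print(f"{mean_n:.1f} ({low_n:.1f}-{high_n:.1f}) ug/g")
# Hypothetical logit-scale take-all severity estimate
sev, sev_low, sev_high = back_transform(-2.0, 0.4, inverse_logit)
print(f"{100 * sev:.1f}% ({100 * sev_low:.1f}-{100 * sev_high:.1f}%)")
```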

Results

Experiment 1

Buried crop residues

Numbers of plants in the crop residues per core varied from 1 to 6 (mean 2.6) and did not change over time (P = 0.539). The dry weight of each buried part declined similarly over time (P = 0.685; Fig. 1). Total crop residue weight declined by 50%, from 694 mg plant−1 at 0 months to 348 mg plant−1 after 7 months (P = 0.005). After 7 months, most of the dry weight was in the stems and crowns, which were nine times heavier than the thick roots and 29 times heavier than the thin roots (P < 0.001).

Experiment 1 – Buried crop residues: Mean dry matter weight of crop residue parts per plant (a) and concentration of Gaeumannomyces graminis var. tritici (Ggt) DNA (b), 0, 1, 2, 4 and 7 months after harvest in the wheat cultivar ‘Excede’. Error bars are 95% confidence intervals for selected means (lowest, highest and a mid-range mean in each panel)

The change in Ggt DNA quantities over time differed between the crop residue parts (P = 0.012) (Fig. 1). Most of the Ggt DNA was detected in the stem and crown at 0 and 1 month after harvest, and it remained at detectable amounts thereafter. At the 7-month assessment, the amounts of Ggt DNA in the thick and thin roots were negligible. Averaged over all parts, the concentration of Ggt DNA declined by 69%, from 167 ng g−1 tissue at 0 months to 52 ng g−1 tissue after 7 months. The plants contained a total of 84.4 μg Ggt DNA per plant at 0 months and 24.0 μg Ggt DNA per plant after 7 months, a reduction in inoculum of 71%.

Undisturbed residues

Numbers of plants in the crop residues varied from 1 to 6 (mean 2.6) per core and were consistent over time (P = 0.377). Crop residue dry weights totalled 2246 mg plant−1 at 0 months, declining to 1487 mg plant−1 after 7 months. The rate of change differed between the main plant parts (P < 0.001): weights of stem and crown were stable over the 7 months, whereas weights of thick and thin roots declined from harvest (Fig. 2). Weights of the total root fragments also varied with time (P < 0.001), being highest 1 month after harvest, at 814 mg per plant, and lowest after 7 months, at 529 mg per plant. The decrease in thin root weight from 0 to 2 months corresponded with an increase in the weight of 2–4 mm fragments over the same period.

Experiment 1 – Undisturbed residues: Mean dry matter weights per plant of crop residue parts (a) and root fragments (b), and Gaeumannomyces graminis var. tritici (Ggt) DNA concentrations in crop residue parts (c) and root fragments (d), in the wheat cultivar ‘Excede’. Error bars are 95% confidence intervals for selected means (lowest, highest and a mid-range mean in each panel)

Ggt DNA concentrations in the crop residue parts also changed over time (P = 0.012) (Fig. 2). In the stem, thick roots and thin roots, they increased after harvest, peaked after 2 to 4 months and then declined to negligible concentrations after 7 months, whereas in the crown the concentration of Ggt DNA was greatest at harvest and decreased steadily thereafter. In the larger root fragments, Ggt DNA concentration increased after harvest to peak 1 month later before declining to negligible concentrations after 7 months. In the smaller fragments, concentrations remained negligible for the duration of the experiment. Averaged over all categories, the concentration of Ggt DNA declined from 134 ng g−1 tissue at 0 months to 22 ng g−1 tissue after 7 months. Standardised to 50-mm stem lengths, the plants contained 221.0 μg Ggt DNA per plant at 0 months and 7.8 μg Ggt DNA per plant after 7 months, a reduction in inoculum of 96%.
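The percentage reductions reported in these results follow from (initial − final)/initial. The helper below, whose name is hypothetical, reproduces several of the Experiment 1 figures from the rounded means given above; because the inputs are rounded, a recalculated percentage can occasionally differ from a reported one in the last digit.

```python
def percent_reduction(initial, final):
    """Percentage decline from an initial to a final value."""
    if initial <= 0:
        raise ValueError("initial value must be positive")
    return 100.0 * (initial - final) / initial


# Means reported for Experiment 1
print(round(percent_reduction(694, 348)))    # buried residues, mg dry matter per plant -> 50
print(round(percent_reduction(167, 52)))     # buried residues, ng Ggt DNA per g tissue -> 69
print(round(percent_reduction(221.0, 7.8)))  # undisturbed residues, ug Ggt DNA per plant -> 96
```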

Ggt infectivity

Take-all severity and the concentration of Ggt DNA in roots of wheat seedlings planted into the soil cores changed over time (P < 0.001) (Table 1). Take-all severity increased from 5% at 0 months to 14% after 1 month and declined to 0.6–1.7% at subsequent assessments. Ggt DNA analysis of the seedling roots confirmed that the take-all symptoms were caused by Ggt. The quantity of Ggt DNA in the dried seedling roots was 21–27 ng mg−1 dry root at 0–2 months after harvest and 3 ng mg−1 dry root after 7 months.

Experiment 2

Undisturbed residues

The number of plants per core varied from 1 to 12 (mean 6.8). The weights of the crop residue parts per plant changed with time (P < 0.001) in the same way for all cultivars (P > 0.442; Fig. 3). Mean total crop residue weight decreased from 1690 mg per plant at harvest to 1172 mg per plant after 7 months, but differed between cultivars (P = 0.001). ‘Sage’ had the least dry matter in stem, crown and thick roots but the most in thin roots.

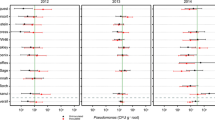

The Ggt DNA concentration in each plant part changed over time (P < 0.001) and, less strongly, differed between cultivars (P = 0.033) (Fig. 4). In stems, DNA quantities in ‘Consort’ and ‘Starfire’ increased from 0 months, peaked after 2 months and decreased thereafter, whereas in ‘Wakanui’ they declined throughout. In the buried plant parts, the Ggt DNA concentration tended to decline over the 7 months. At 7 months, most of the inoculum was in the stem, and the concentration averaged 0.2 ng Ggt DNA per mg tissue across all plant parts. Averaged over time, the Ggt DNA concentration was similar for the three cultivars in the four plant parts (P = 0.265) and in the whole plant (P = 0.397), and the Cultivar × Plant part × Time interaction was not significant (P = 0.644). The concentration, averaged over all cultivars, was 2.3 ng mg−1 at 0 months and 0.2 ng mg−1 after 7 months. The plants contained 973 ng Ggt DNA per plant at 0 months and 86 ng Ggt DNA per plant after 7 months, a reduction in inoculum of 91%.

Ggt infectivity

Take-all severity in roots was below 1% for three quarters of the samples (Table 1). The small number of high means contributed to a significant Time × Cultivar interaction (P < 0.001), but there were no consistent trends. Nevertheless, Ggt remained sufficiently viable to infect wheat seedling roots 7 months after harvest.

Nitrogen

The concentration of available mineral N in the soil cores differed over time (P < 0.001) and, for all cultivars, was greater at harvest than at subsequent assessments (Fig. 5). Cultivar effects were not significant (P > 0.2), although at harvest mineral N (μg g−1 dry soil; mean and 95% confidence limits) was greatest in cores containing ‘Consort’ (22.6 (12.5–41.1)) and least in those containing ‘Phoenix’ (5.4 (2.9–9.7)).

Earthworms

Earthworm densities in the soil cores at 0, 2, 4 and 7 months after harvest averaged 32, 119, 72 and 24 m−2, respectively (P < 0.001), with only minor differences between cultivars (P > 0.1). Aporrectodea caliginosa dominated the earthworm population, with A. trapezoides making up 4%.
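Densities per square metre follow from counts per core divided by the core's surface area. The sketch assumes the 100 mm core diameter given in the Methods; the text does not state which area was used, so this choice and the function names are assumptions. On this basis, one earthworm per core corresponds to about 127 m−2, and the peak mean of 119 m−2 to roughly 0.9 earthworms per core.

```python
import math

CORE_DIAMETER_M = 0.100  # assumed sampling area: the 100 mm soil core


def per_square_metre(count_per_core, diameter_m=CORE_DIAMETER_M):
    """Convert a (mean) earthworm count per core to a density per m^2."""
    area_m2 = math.pi * (diameter_m / 2) ** 2
    return count_per_core / area_m2


def per_core(density_per_m2, diameter_m=CORE_DIAMETER_M):
    """Inverse conversion: density per m^2 to the expected count per core."""
    return density_per_m2 * math.pi * (diameter_m / 2) ** 2


print(f"{per_square_metre(1):.0f} m-2 for one earthworm per core")  # -> 127 m-2 for one earthworm per core
print(f"{per_core(119):.2f} earthworms per core at 119 m-2")
```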

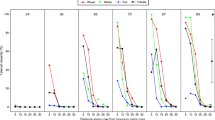

Cultivar and inoculum

The quantities of Ggt DNA per plant from the undisturbed residues in Experiments 1 and 2 for the four cultivars were compared (Fig. 6). The changes in the inoculum over time for each plant part were similar for all cultivars, although ‘Excede’ plants in Experiment 1 contained more inoculum than plants from the other cultivars in Experiment 2.

Experiments 1 and 2 compared – Quantity of Gaeumannomyces graminis var. tritici (Ggt) DNA (ng per plant) in 50 mm of the stem, crown, thick roots and thin roots of four wheat cultivars at 0, 2, 4 and 7 months after harvest. Data for ‘Excede’ from Experiment 1 were analysed separately from those for the other cultivars in Experiment 2, with root fragments >1 mm in diameter included with the thick roots and those <1 mm included with the thin roots. The quantity of inoculum was much higher in Experiment 1, but its change over time was similar in both experiments. Error bars are 95% confidence intervals for selected means (lowest, highest and a mid-range mean)

Soil conditions

Mean monthly soil temperature and moisture, measured near the experimental site or directly in the soil cores, are presented in Table 2 for the duration of both experiments. NIWA data were similar for both experiments. In Experiment 2, monthly mean soil temperatures from the data logger approximated those from the NIWA weather station, but monthly mean soil moisture was 13–18% drier.

Discussion

Results from both experiments indicate that soil would still be infective 7 months after harvest of a previous wheat crop, irrespective of cultivar. This finding concurs with that of Bithell et al. (2009), who observed that 22% of the Ggt concentration in soil following second wheat crops remained 4 months after harvest.

In Experiment 1, where Ggt infectivity was assessed on cores different from those used to assess crop residues, the severity of take-all in indicator wheat seedlings peaked 1 month after harvest. In contrast, in Experiment 2, where infectivity and crop residue assessments were made sequentially on the same cores, take-all severity fluctuated throughout the assessment period. The differences in methodology do not explain the differences observed between the two experiments. Time of year can be excluded as a contributing factor, since the soil cores from each experiment were assessed at similar times of the year. Temperature differences between infectivity assays can also be excluded, since all cores were placed in the same glasshouse set at 22 °C. Rather, the much higher quantity of inoculum in plants in Experiment 1 could account for the differences. The cores in Experiment 1 were sampled from patches of crop residues exhibiting severe take-all, whereas the cores in Experiment 2 were sampled randomly from plots of a previous trial containing natural and inoculated Ggt. Although the inoculated Ggt may have become less virulent in the 10 months between inoculation at sowing in 2013 and removal of the cores for Experiment 2, the Ggt in the residues was still pathogenic. In the trial from which the cores were derived, take-all severity in roots of the relevant wheat cultivars did not differ appreciably between plots with natural Ggt and plots with both natural and inoculated Ggt, and averaged 44% (van Toor et al. 2016b).

Indications of Ggt infectivity in the wheat seedling assay, as assessed by take-all severity, were inherently variable, particularly at the low Ggt concentrations in the residues in Experiment 2. However, quantification of Ggt in the wheat seedling roots by qPCR in Experiment 1 provided additional information on the pathogenicity of Ggt. The Ggt concentration in roots of the seedlings remained similar for the first three assessments but bore little relation to take-all severity at those times (Table 1). The results suggest ectotrophic growth of Ggt along the roots, with little infection, at 0 and 2 months after harvest. At 1 month after harvest, however, the increase in take-all severity implied that the hyphae were endotrophic and more virulent. After 4 months, both take-all severity and root Ggt concentration had decreased. Thus, the increase in take-all severity in the indicator wheat seedlings appeared to be due to an increase in the virulence of Ggt from the wheat residues rather than to an increase in inoculum concentration.

The DNA-based assay detected intact partial sequences of the Ggt EF1-α gene (Keenan et al. 2015) and would therefore have quantified DNA from live Ggt plus accumulated intact DNA from dead Ggt. Consequently, Ggt DNA concentrations could have overestimated the quantity of viable Ggt mycelia associated with roots. Herdina et al. (2004) showed that Ggt DNA from killed mycelia in wheat roots had not degraded after 16 days, whereas in soil it degraded rapidly to become almost undetectable within 4 days. Furthermore, 10% of the original Ggt DNA from live mycelia in soil was still present after 1 month. Applying these findings to the infectivity assays, the pathogen would be expected to be in an exponential growth phase in seedling wheat roots during the 14-day assessment period, so the Ggt DNA concentration would have reflected the quantity of mycelia in the roots. In the senescent wheat residues in the cores, however, Ggt concentrations measured 1 or 2 months apart would have reflected live mycelia plus a proportion of dead mycelia. Once the senescent roots had fully mineralised, live mycelia could remain viable in soil and cause infection at least a month later.

As indicated by DNA analysis, after 7 months most of the Ggt inoculum in crop residues was in the crowns and in the 50 mm of stem (straw) above them, having declined to negligible amounts in the roots. In Experiment 1, the Buried crop residues treatment approximated ploughing. Plants comprising the stem, crown and intact roots decayed to half of their original dry weight and contained approximately 24 μg Ggt DNA per plant 7 months after harvest. In contrast, the Undisturbed residues treatment approximated no-tillage. The stems, which remained on the soil surface, together with the buried crown and attached roots, lost a quarter of the original plant dry matter after 7 months and contained 9 μg Ggt DNA per plant. Nevertheless, in both treatments Ggt tended to accumulate within 2 months after harvest in the 50-mm stems, in the thick and thin roots attached to the crop residues, and in the 2–4 mm root fragments. In Experiment 2, the weight of stems on the soil surface declined by 13% and the weight of the buried parts of the crop residues declined by 49% over the 7 months, leaving 0.09 μg Ggt DNA per plant. The differences between experiments in inoculum quantities per plant after 7 months reflected the different initial inoculum quantities, which were 230 times higher in crop residues in Experiment 1 than in Experiment 2.

In both experiments, most of the decline in inoculum over the 7 months resulted from the reduction in Ggt concentration in the crop residues rather than from loss of residue mass. Dry matter in the residues with stems on the soil surface declined by 28–34%, compared with an 83% reduction in Ggt DNA concentration. This difference appeared to result from the particularly marked loss of Ggt from the thin roots, which also decayed much more quickly than the thicker residue parts during the 4–7 month period. Although cultivars differed in component dry matter and Ggt DNA concentrations, these differences appeared to have no effect on infection of wheat seedlings. The amounts of Ggt DNA in plant residues of every cultivar 7 months after harvest were clearly sufficient to cause infection in wheat seedlings.
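The dominance of concentration over mass loss can be made explicit by treating inoculum per plant as dry matter × Ggt DNA concentration: the fraction of inoculum remaining is then the product of the two fractions remaining and, on the log scale, the decline splits additively between them. The sketch uses illustrative round figures close to those above (30% mass loss, 83% concentration decline) and a hypothetical function name; it ignores that the reported concentrations are averaged over parts without weighting by mass, so it is not a reanalysis of the data.

```python
import math


def partition_decline(mass_remaining, conc_remaining):
    """Total fractional decline and the share attributable to concentration.

    Inoculum = dry matter x concentration, so the fraction of inoculum
    remaining is the product of the two fractions; taking logs splits the
    decline additively between mass loss and concentration loss.
    """
    if not (0 < mass_remaining <= 1 and 0 < conc_remaining <= 1):
        raise ValueError("fractions remaining must lie in (0, 1]")
    total_remaining = mass_remaining * conc_remaining
    if total_remaining == 1:
        raise ValueError("no decline to partition")
    share_from_conc = math.log(conc_remaining) / math.log(total_remaining)
    return 1.0 - total_remaining, share_from_conc


# Illustrative round figures: 30% dry-matter loss, 83% concentration decline
decline, share = partition_decline(mass_remaining=0.70, conc_remaining=0.17)
print(f"inoculum decline {decline:.0%}; share due to concentration {share:.0%}")  # -> inoculum decline 88%; share due to concentration 83%
```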

The rates of decomposition of crop residues over the 7 months in soil were similar to those reported elsewhere: 13% after 6 months for surface straw (Curtin et al. 2008) and 40% after 5.6 months for buried crop residues (Bauer et al. 2008). These normal decomposition rates occurred despite conditions in the cores being below optimum for residue mineralisation. Earthworms, which contribute to residue decay, were initially absent from the soil cores and eventually colonised them to only a third of the population of 600 m−2 typically found in mixed cropping soils in Canterbury (Fraser et al. 1996). However, even at this low density they may have contributed to inoculum decline: Hume et al. (2015) observed a take-all suppressive effect from populations of 200–300 earthworms m−2. The mean monthly moisture content of the cores at 50 mm depth, 17% (Table 2), was also below the optimum of 25%, estimated from the maximum recorded moisture of 30% (HE Brown, Plant & Food Research, pers. comm.). The mineral N concentration in the cores after 2 months (3.4 μg g−1 soil; Table 2) was also lower than expected in fallow cropping soil (Fraser et al. 2013), which may be explained by N leaching from the small cores.

These experiments indicate that the factors contributing to the infectivity of the crop residues were not affected by cultivar. Irrespective of cultivar, and given normal crop residue degradation rates, wheat sown 1–3 months after harvest of a Ggt-infected host crop is likely to be infected with take-all; sowing within this interval is normal practice (RA Craigie, Foundation for Arable Research, pers. comm.). Sowing wheat 1 month after harvest of a Ggt-infected crop, when Ggt may be most virulent, should be avoided. By 7 months after harvest, however, the distribution of inoculum in fallow soil would have changed. Most of the initial inoculum in the thin roots between the rows would have disappeared, leaving the remaining, probably viable, inoculum in the stem bases and crowns concentrated along the original rows.

References

Bailey DJ, Gilligan CA (1999) Dynamics of primary and secondary infection in take-all epidemics. Phytopathology 89:84–91

Bauer J, Kirschbaum MUF, Weihermueller L, Huisman JA, Herbst M, Vereecken H (2008) Temperature response of wheat decomposition is more complex than the common approaches of most multi-pool models. Soil Biol Biochem 40:2780–2786

Bithell SL, McLachlan ARG, Hide CCL, McKay A, Cromey MG (2009) Changes in post-harvest levels of Gaeumannomyces graminis var. tritici inoculum in wheat fields. Australas Plant Pathol 38:277–283

Bithell SL, McKay A, Butler RC, Herdina, Ophel-Keller K, Hartley D, Cromey MG (2012) Predicting take-all severity in second-year wheat using soil DNA concentrations of Gaeumannomyces graminis var. tritici determined with qPCR. Plant Dis 96:443–451

Chng SF, Cromey MG, Butler RC (2004) Mycoflora of rhizomes and roots of Elytrigia repens, a potential inoculum source for take-all in wheat. N Z Plant Prot 57:59–66

Chng SF, Cromey MG, Butler RC (2005) Evaluation of the susceptibility of various grass species to Gaeumannomyces graminis var. tritici. N Z Plant Prot 58:261–267

GenStat Committee (2014) The guide to the GenStat® command language (release 17). VSN International, Hemel Hempstead

Cook RJ (2003) Take-all of wheat. Physiol Mol Plant Pathol 62:73–86

Cookson WR, Beare MH, Wilson PE (1998) Effects of prior crop residue management on microbial properties and crop residue decomposition. Appl Soil Ecol 7:179–188

Curtin D, Francis GS, McCallum FM (2008) Decomposition rate of cereal straw as affected by soil placement. Aust J Soil Res 46:152–160

CycSoftware (2009) CycDesigN 4.0: a package for the computer generation of experimental designs. Version 4.0. CycSoftware Ltd, Hamilton

Douglas CL Jr, Richman RW (1992) Estimating crop residue decomposition from air temperature, initial nitrogen content, and residue placement. Soil Sci Soc Am J 56:272–278

Fraser PM, Williams PH, Haynes RJ (1996) Earthworm species, population size and biomass under different cropping systems across the Canterbury Plains, New Zealand. Appl Soil Ecol 3:49–57

Fraser PM, Curtin D, Harrison-Kirk T, Meenken ED, Beare MH, Tabley F, Gillespie RN, Francis GS (2013) Winter nitrate leaching under different tillage and winter cover crop management practices. Soil Sci Soc Am J 77:1391–1401

Harbridge J (2007a) Determination of ammonia (salicylate) in 2M KCl soil extracts by flow injection analysis (high throughput), Lachat QuikChem method 12-107-06-2-F. Lachat Instruments, Loveland

Harbridge J (2007b) Determination of nitrate in 2M KCl soil extracts by flow injection analysis, Lachat QuikChem method 12-107-04-1-J. Lachat Instruments, Loveland

Herdina, Neate S, Jabaji-Hare S, Ophel-Keller K (2004) Persistence of DNA of Gaeumannomyces graminis var. tritici in soil as measured by a DNA-based assay. FEMS Microbiol Ecol 47:143–152

Hornby D (1981) Inoculum. In: Asher MJC, Shipton PJ (eds) Biology and control of take-all. Academic Press, London, pp 271–293

Hornby D, Bateman GL, Gutteridge RJ, Ward E, Yarham D (1998) Take-all disease of cereals: a regional perspective. CAB International, Wallingford, p 384

Hume EA, Horrocks AJ, Fraser PM, Curtin D, Meenken ED, Chng S, Beare MH (2015) Alleviation of take-all in wheat by the earthworm Aporrectodea caliginosa (Savigny). Appl Soil Ecol 90:18–25

Kain WM, Young J (1975) I. A soil sampler suitable for sampling pastureland. N Z J Exp Agric 3:177–180

Keenan S, Cromey MG, Harrow SA, Bithell SL, Butler RC, Beard SS, Pitman AR (2015) Quantitative PCR to detect Gaeumannomyces graminis var. tritici in symptomatic and non-symptomatic wheat roots. Australas Plant Pathol 44:591–597

Keeney DR, Nelson DW (1982) Nitrogen - inorganic forms. In: Page AL, Miller RH, Keeney DR (eds) Methods of soil analysis, part 2. Chemical and microbiological properties. American Society of Agronomy and Soil Science Society of America, Madison, pp 643–709

Lee Y, Nelder JA, Pawitan Y (2006) Generalized linear models with random effects: unified analysis via H-likelihood. Monographs on statistics and applied probability 106. Chapman & Hall/CRC Press, London, p 416

McCullagh P, Nelder JA (1989) Generalized linear models. Chapman & Hall, London, p 511

National Institute of Water and Atmospheric Research (NIWA) (2016) The National Climate Database. http://cliflo.niwa.co.nz/pls/niwp/wgenf.genform1. Accessed 24 March 2016

Ophel-Keller K, McKay A, Hartley D, Herdina, Curran J (2008) Development of a routine DNA-based testing service for soilborne diseases in Australia. Australas Plant Pathol 37:243–253

Schon N (2016) The great kiwi earthworm. http://agpest.co.nz/wp-content/uploads/2016/02/EARTHWORM-ID-BROCHURE-Jan2016.pdf. Accessed 8 April 2016

Sharma BL, Singh SP, Sharma ML (2012) Bio-degradation of crop residues by Trichoderma species vis-a vis nutrient quality of the prepared compost. Sugar Tech 14:174–180

van Toor RF, Bithell SL, Chng SF, McKay A, Cromey MG (2013) Impact of cereal rotation strategies on soil inoculum concentrations and wheat take-all. N Z Plant Prot 66:204–213

van Toor RF, Butler RC, Braithwaite M, Bienkowski D, Qiu W, Chng SF, Cromey MG (2016a) Pathogenicity of Gaeumannomyces graminis var. tritici increased by nitrogen applied to soil to enhance the decomposition rate of wheat residues. N Z Plant Prot 69:111–119

van Toor RF, Chng S, Warren RM, Butler RC, Cromey MG, Craigie RA, McCloy BL (2016b) Wheat cultivar susceptibility to Gaeumannomyces graminis var. tritici and influence on Pseudomonas fluorescens numbers in the rhizosphere. Australas Plant Pathol 45:595–607

Yarham DJ (1981) Practical aspects of epidemiology and control. In: Asher MJC, Shipton PJ (eds) Biology and control of take-all. Academic Press, London, pp 353–384

Acknowledgements

We thank the cereal grower, Colin Hurst, for supplying land for soil sampling, and Mark O’Hara of New Zealand Arable and Rob Craigie of the Foundation for Arable Research for maintaining the original trial from which the soil for this study was taken. We thank Plant & Food Research staff Sandi Keenan for guidance in conducting qPCR diagnostics of Ggt DNA; Dr. Denis Curtin for interpreting N data; Dr. Trish Fraser for identifying earthworms; Dr. Hamish Brown and Joanna Sharp for interpreting crop residue decay rates; and Mike George for monitoring soil core conditions. Funding was provided by the New Zealand Ministry of Business, Innovation and Employment Project LINX 0804.

Cite this article

van Toor, R.F., Butler, R.C. & Cromey, M.G. Rate of decline of Gaeumannomyces graminis var. tritici inoculum and decomposition of residues from wheat cultivars. Australasian Plant Pathol. 47, 35–44 (2018). https://doi.org/10.1007/s13313-017-0526-8
