Abstract

In recent years, social media has grown exponentially as a means of fostering healthy relationships and communication among family, friends, and acquaintances, but it is not without flaws. The freedom social media affords can also create an unpleasant online environment: hate speech and offensive language spread frequently on these platforms and have various negative effects on society. Detecting hate speech and offensive language has therefore become one of the major research trends. Although Arabic occupies a distinct position among the languages used on social networks such as Twitter and Facebook, the ability to identify Arabic hate speech and offensive language is still developing because of the variety and complexity of Arabic dialects and forms. In this paper, we present an in-depth review of studies on Arabic offensive language and hate speech detection published between 2019 and September 2023. We highlight the most significant methods, Arabic datasets, a taxonomy analysis, and open challenges. This review provides a foundation of knowledge to help researchers design and implement more reliable and accurate solutions.


1 Introduction

Disclaimer: due to the nature of this kind of study, some examples of offensive or hate speech may be included in this survey. These examples are solely for the purpose of understanding this issue and do not represent the views or opinions of the survey creators or any of their affiliated organizations. We do not condone or support offensive or hate speech of any kind. This work is an attempt to help fight such speech.

Social media networks have revolutionized the way we communicate and interact with each other (Shannaq et al. 2022). Through these networks, people all over the world can connect and communicate instantly. Users may also feel emboldened to share and express their thoughts, views, and opinions freely (ElZayady et al. 2023; Mansur et al. 2023; Makram 2022), in ways they might not do on a personal level (Azzi and Zribi 2022). Unfortunately, offensive language and hate speech have become very common on social media platforms such as Facebook and Twitter.

In everyday discourse, the term hate speech is commonly used to describe offensive statements. More precisely, hate speech (Ruwandika and Weerasinghe 2018) can be defined as the use of language to disparage or incite hatred towards a person or group based on their religion, race, gender, or social standing. Excessive use of social media has contributed to the spread of such speech, which harms mental health and may lead to real-world consequences such as hate crimes, discrimination, and intimidation, affecting the well-being and social cohesion of individuals and communities (Shannaq et al. 2022; Althobaiti 2022). Finding solutions for detecting hate speech has therefore become crucial for countries, companies, and academic institutions (Elzayady et al. 2023). Numerous studies on hate speech detection have been published, most of them focusing on English, whereas research on detecting Arabic hate speech is still emerging (Abuzayed 2020; Elzayady et al. 2022). Owing to the growing interest in online hate speech, a body of recent work has proposed automated solutions for detecting Arabic hate speech on social media platforms using a range of approaches and methods.

Our survey concentrates on studies published in the last five years (2019–2023) on Arabic offensive language and hate speech detection. Nevertheless, investigations predating 2019 made substantial contributions to our understanding of the distinctive challenges, solutions, and trends of that period in Arabic offensive language and hate speech detection. For instance, Alakrot et al. (2018a, b) presented a comprehensive approach to detecting abusive language on Arabic social media: they trained a support vector machine (SVM) classifier on a large dataset of Arabic YouTube comments, exploring combinations of word-level features, N-gram features, and various pre-processing techniques, and reported superior results. Mubarak et al. (2017) presented another approach for detecting abusive language on Arabic social media, specifically in dialectal Arabic. It used two datasets: the first comprised 1100 manually labeled dialectal tweets, and the second included 32k comments flagged as inappropriate by moderators of prominent Arabic newswires. The authors introduced a statistical approach centered on a list of offensive words, which achieved better outcomes. The insights gleaned from this earlier research laid a foundation that continues to inform contemporary studies in this evolving field.

This review therefore focuses on the most recent studies on the detection of hate speech, offensive language, and abusive texts in Arabic. Our goal is to help researchers in the natural language processing (NLP) field understand the extent of the problem, evaluate the effectiveness of existing models, and develop customized solutions that mitigate the negative impacts of Arabic hate speech on social media. To this end, we present a comprehensive survey covering earlier studies, Arabic datasets, machine learning (ML) and deep learning (DL) models, hybrid solutions, and data preparation processes (Arabic preprocessing steps and feature extraction methods). We discuss the existing challenges related both to the methods and to the Arabic language, and we highlight challenges and future trends in this field.

1.1 Methodology

This section presents the procedures followed in this review, such as the search strategy, the keywords, inclusion and exclusion criteria, data extraction, and data synthesis.

The main objective of this study is to investigate state-of-the-art NLP techniques for automatically detecting Arabic hate speech and offensive language on different social media platforms. This survey addresses the following research questions:

- Q1 How are offensive language and hate speech understood in the context of the Arabic language?
- Q2 What are the most promising NLP techniques, common preprocessing steps, and feature extraction methods for Arabic hate speech detection, and how can these techniques be optimized for Arabic datasets?
- Q3 Which Arabic datasets are most widely available, and how are they annotated? Which social media platforms are used most frequently?
- Q4 Which linguistic and socio-cultural features of Arabic make offensive language and hate speech detection challenging for NLP techniques?
- Q5 What are the future directions for research in Arabic hate speech detection using NLP, and what are the key challenges and opportunities for advancing this field?

1.1.1 Search strategy

The primary objective of this review is to examine the scientific literature from 2019 to September 2023 on Arabic offensive language and hate speech detection on social media platforms, and to analyze and synthesize recent work in this area into a comprehensive summary of the advances made. To find the most relevant papers, we formulated a search query in two steps. First, we identified the most frequently used keywords: offensive language, hate speech, Arabic, Arabic offensive, Arabic hate, abusive language, classification, and detection. Second, we combined these terms in multiple ways using the Boolean operators AND and OR to form the search query.
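To make the query construction concrete, the following Python sketch shows one way the keywords above could be combined with AND and OR. The grouping of terms into topic, language, and task blocks and the helper names `or_block` and `build_query` are illustrative assumptions; the exact query strings submitted to each database are not reported, and each database has its own query syntax.

```python
# Illustrative only: grouping the review's keywords into OR-blocks is an
# assumption, and real databases each use their own query syntax.
TOPIC_TERMS = ["offensive language", "hate speech", "abusive language"]
LANGUAGE_TERMS = ["Arabic", "Arabic offensive", "Arabic hate"]
TASK_TERMS = ["classification", "detection"]


def or_block(terms):
    """Quote each phrase and join the phrases with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*blocks):
    """Join several OR-blocks with AND."""
    return " AND ".join(or_block(block) for block in blocks)


if __name__ == "__main__":
    print(build_query(TOPIC_TERMS, LANGUAGE_TERMS, TASK_TERMS))
```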

The databases searched were IEEE, Springer, ScienceDirect, ACL Anthology, ACM DL, IJECE Journal, IJACSA Journal, SCITEPRESS, Taylor & Francis, Emerald, Applied Sciences, Revue d’Intelligence Artificielle Journal, IOS Press, and Scopus. We selected these databases because they host many high-impact research papers or, at minimum, are indexed in Scopus, which together provide fair coverage of the reviewed literature.

1.1.2 Inclusion and exclusion criteria

We applied inclusion and exclusion criteria to identify the studies that fulfilled the goals of this review. The inclusion criteria covered papers published from 2019 to September 2023 that focus on offensive language and hate speech in Arabic and its associated challenges, whether experimental, comparative, review, or survey articles. The exclusion criteria removed all papers on detecting offensive language and hate speech in other languages, such as English, Turkish, and Indian languages, as well as any publications before 2019.

After a detailed analysis, we included 54 studies in this review, drawn from various databases and published in the last five years, as shown in Fig. 1 and Fig. 2, respectively.

This survey provides a background on Arabic offensive language and hate speech detection on social media by answering the research questions above. The rest of this paper is organized as follows. Section 2 presents the theoretical background. Section 3 presents preprocessing steps and feature extraction methods. Section 4 covers NLP, ML, and DL techniques for detecting offensive Arabic language and hate speech. Section 5 presents the datasets used in previous experiments. Section 6 reviews work related to Arabic offensive language and hate speech detection. Section 7 discusses challenges and future research directions. Finally, the last section concludes the paper.

2 Background

This section introduces the Arabic language and its significance, and defines Arabic hate speech and offensive language. Understanding the uniqueness of Arabic and its cultural nuances is crucial for effectively detecting and addressing offensive language and hate speech within Arabic-speaking communities. By recognizing the importance of detecting and combating such harmful speech, we aim to contribute to a safer and more inclusive online environment for Arabic speakers.

2.1 Arabic language

Arabic is a unique language. It is also the original language of the Quran and the Hadith (reports of statements or actions of the Prophet Muhammad, or of his tacit approval or criticism of something said or done in his presence). With its profound historical and cultural significance, Arabic has distinct characteristics that shape its linguistic landscape. It comprises 28 letters, is written from right to left, and uses gender-specific forms for various parts of speech (Rahma et al. 2023; Husain and Uzuner 2022a, b). For example, the word “Qaseera/قصيرة” refers to a short female, and the word “Qaseer/قصير” refers to a short male. The limited set of vowel letters (ا/alef, و/waaw, and ي/yaa) adds another layer of intricacy (Azzi and Zribi 2021). In addition, each letter can take a different shape depending on its position within a word. For instance, the letter “ق/qaf” appears as “قـ”, “ـقـ”, or “ـق” depending on whether it occurs at the beginning, in the middle, or at the end of a word (see Fig. 3). Diacritics, commonly referred to as Tashkil or Harakat, play a crucial role in conveying the precise meaning of an Arabic word: they disambiguate words with distinct meanings that share the same written form. For example, “shamal/شَمال” means the cardinal direction north, whereas “shimal/شِمال” refers to the left direction and also carries a connotation of something negative or offensive in Arabic (see Fig. 4). Similarly, singular, dual, and plural forms add to the language’s expressive depth.

Arabic also comprises many dialects (Alsafari et al. 2020a, b), such as Gulf Arabic, Egyptian Arabic, and Levantine Arabic. Because of these characteristics, namely its complex morphology and its dialects with rich cultural and historical roots, Arabic poses several challenges for natural language processing (NLP). At the same time, the use of Arabic has grown substantially across digital spaces, including but not limited to social networks, and it is the fourth most frequently used language on the web (Khezzar et al. 2023). Unfortunately, offensive language and hate speech on Arabic social media platforms have surged in recent years (Shannaq et al. 2022; Mohaouchane et al. 2019). In response, researchers have applied natural language processing, machine learning, and deep learning techniques in their studies. Their findings emphasize that Arabic hate speech and offensive language have become a pressing concern, underscoring the need for further investigation and for effective mitigation strategies (ElZayady et al. 2023; Althobaiti 2022).

2.2 Offensive language

Defining abusive or offensive language is a complex and debatable task (Husain and Uzuner 2021). On social media, offensive language refers to any language intended to harm, insult, degrade, or discriminate against an individual or group based on race, gender, sexual orientation, religion, nationality, or disability. It can take many forms (Alshalan and Al-Khalifa 2020), including hate speech, cyberbullying, trolling, and harassment. An example is an Arabic YouTube comment meaning “May God curse you; your voice is like a donkey’s voice”. Azzi and Zribi (2021) define offensive language as any content containing some form of abusive behavior, that is, actions intended to harm or hurt others or to make them angry. Azzi and Zribi (2022) also list several classes of offensive language: racism, sexism, xenophobia, violence, hate, pornography, religious hatred, and LGBTQ hate. What counts as offensive depends on people’s social and political backgrounds. Regarding types, Azzi and Zribi (2021) identify the main kinds of offensive language on social media: discriminative content, which includes any sort of prejudice against a person based on physical characteristics, belongings, or preferences; violent content, which uses terms that threaten or promote an intentional act of violence; and adult content, which includes pornography, texts illustrating sexual behavior and, most seriously, child sexual abuse. Children and young people are particularly vulnerable to the psychological harm of adult-oriented content on social media.

Detecting offensive language on social media is a complex task because of the sheer volume of data, the continuous emergence of new words (Mubarak and Darwish 2019), the use of slang and highly contextualized language, and the rapidly changing nature of language use on these platforms. Research on detecting offensive language on social media has applied natural language processing (NLP) techniques, including machine learning, deep learning, and sentiment analysis.

2.3 Hate speech

The definition of hate speech has long been debated (Boulouard et al. 2022a, b). According to several studies (ElZayady et al. 2023; Alhejaili et al. 2022; Awane et al. 2021; Husain and Uzuner 2021; AlKhamissi 2022), hate speech is any form of public expression that promotes, incites, or justifies hatred, discrimination, or hostility against a person or group of people based on their identity. An example is an Arabic tweet meaning “This is a poorly mannered family, and if I were you, I would slap him on his face”. Guellil et al. (2020) define hate speech as any communication that disparages or defames a person or group on the basis of a characteristic such as race, color, ethnicity, gender, sexual orientation, nationality, religion, or another characteristic, and classify it into four categories: gender-based, religious, racial, and disability hate speech. Faris et al. (2020) define hate speech as the use of offensive language to spread hatred and discrimination based on race, sex, religion, or disability.

Finally, hate speech is complex and ambiguous, and identifying it involves more than spotting particular words (Haddad 2020). It can occur in different linguistic styles and through different acts, such as insulting, abusing, provoking, and aggression (Omar et al. 2020).

2.4 Importance of offensive language and hate speech detection

Detecting Arabic offensive language and hate speech on social media is crucial for several reasons. First, with the increasing number of Arabic speakers on social media (Boulouard et al. 2022a, b), effective tools for detecting offensive language and hate speech in Arabic are essential. Second, the freedom of expression on social media has allowed these platforms to be used for hate speech and offensive language (Badri et al. 2022), and the spread of misinformation, propaganda, and biased narratives has led to social unrest and violence in some countries. By detecting and removing such content in Arabic, social media platforms can promote a safe and inclusive online environment. Third, automated detection systems that analyze large volumes of Arabic social media data in real time can help governments and authorities detect and prevent hate crimes, radicalization, and other forms of extremist behavior. In short, detecting Arabic offensive language and hate speech on social media is critical for promoting peace, harmony, and inclusivity in society. This review aims to deepen our understanding of the challenges of detecting and addressing such content and to support the development of effective algorithms and tools for mitigating it.

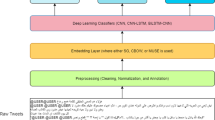

3 Preprocessing and feature extraction methods

Hate speech and abusive language are prevalent on social media platforms, and controlling such language is essential to promoting a safer and more inclusive online environment. In recent years, researchers have started to develop algorithms and models to detect hate speech and abusive language in Arabic and its dialects. Preprocessing steps and feature extraction methods play a critical role in the accuracy of these algorithms. Preprocessing steps usually involve segmentation, normalization, and cleaning techniques. Feature extraction methods used for Arabic language hate speech and abusive language detection include lexical, syntactic, and semantic features. This section aims to provide an overview of the preprocessing steps and feature extraction methods used for Arabic language and dialect hate speech and abusive language detection on social platforms.

3.1 Preprocessing steps

Researchers in the reviewed literature have employed various preprocessing steps to improve the accuracy of Arabic offensive language and hate speech detection on social media. The most commonly used steps include:

3.1.1 Stop words removal

Stop words are frequently occurring words that carry little meaning, and many studies remove them from the text before any analysis (Alshalan and Al-Khalifa 2020; Husain 2020; Alotaibi and Abul Hasanat 2020; AbdelHamid et al. 2022). However, Albadi et al. (2019) did not remove negation words, since these are usually informative in sentiment analysis tasks.
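A minimal Python sketch of stop-word removal that keeps negation particles, in the spirit of Albadi et al. (2019). Both word lists are hypothetical placeholders kept deliberately tiny; a real system would use a curated, possibly dialect-specific list.

```python
# Hypothetical, deliberately tiny lists; real systems use curated stop-word
# lists, possibly extended with dialectal function words.
ARABIC_STOP_WORDS = {"في", "من", "على", "إلى", "عن", "هذا", "هذه", "لا", "ما", "لم"}

# Negation particles are never removed, because negations are informative
# (the observation attributed to Albadi et al. 2019).
NEGATIONS = {"لا", "لم", "لن", "ما", "ليس"}


def remove_stop_words(tokens, stop_words=ARABIC_STOP_WORDS, keep=NEGATIONS):
    """Drop stop words from a token list, except those listed in `keep`."""
    return [t for t in tokens if t not in stop_words or t in keep]


# "This film is not worth watching": the demonstrative is dropped, "لا" stays.
print(remove_stop_words(["هذا", "الفيلم", "لا", "يستحق", "المشاهدة"]))
```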

3.1.2 Noise removal

Researchers remove various forms of noise, such as URLs, emojis, digits, punctuation marks, non-Arabic words, repeated characters, mentions, HTML tags (e.g., <div>), email addresses, dates, other symbols, and diacritics, that is, the short vowels and other marks written above and beneath letters, such as fatha, damma, and kasra (Shannaq et al. 2022; Elzayady et al. 2023a, b; Makram 2022; Azzi and Zribi 2022; Althobaiti 2022; Berrimi et al. 2020; Alshalan and Al-Khalifa 2020; Haddad 2020; Omar et al. 2020; Husain 2020; Mubarak 2020; Alakrot et al. 2021; AbdelHamid et al. 2022; Badri et al. 2022; Alsafari et al. 2020a, b; Mostafa 2022; Alzubi 2022; Boulouard et al. 2022a, b; Khezzar et al. 2023). Elzayady et al. (2022) also removed empty lines to obtain cleaner text.
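The noise-removal steps listed above can be sketched with regular expressions. The patterns below are simplified assumptions, not the exact rules of any cited study: only the basic Arabic letter range U+0621 to U+064A is kept, so digits, dates, Latin words, punctuation, and emojis are all dropped. Diacritics are deleted before the non-Arabic filter so that they do not split words.

```python
import re

# Simplified patterns (assumptions, not the exact rules of any cited study).
HTML_TAG_RE = re.compile(r"<[^>]+>")                  # e.g. <div>
URL_RE = re.compile(r"https?://\S+|www\.\S+")
EMAIL_RE = re.compile(r"\S+@\S+\.\S+")
MENTION_RE = re.compile(r"@\w+")
DIACRITICS_RE = re.compile(r"[\u064B-\u0652\u0670]")  # tanwin, fatha, damma, kasra, shadda, sukun, dagger alef
NON_ARABIC_RE = re.compile(r"[^\u0621-\u064A\s]")     # anything but Arabic letters or whitespace
REPEATED_CHAR_RE = re.compile(r"(.)\1{2,}")           # 3+ identical characters in a row


def clean_text(text):
    """Remove common social-media noise from an Arabic post."""
    text = HTML_TAG_RE.sub(" ", text)
    text = URL_RE.sub(" ", text)
    text = EMAIL_RE.sub(" ", text)       # before mentions, so "user@mail.com" goes whole
    text = MENTION_RE.sub(" ", text)
    text = DIACRITICS_RE.sub("", text)   # delete (not replace) so words stay intact
    text = NON_ARABIC_RE.sub(" ", text)  # digits, dates, Latin, punctuation, emojis
    text = REPEATED_CHAR_RE.sub(r"\1", text)  # "شكراااا" -> "شكرا"
    return " ".join(text.split())        # collapse whitespace and empty lines
```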

3.1.3 Tokenization

Tokenization splits the text into small units, such as words or phrases, to facilitate analysis; in other words, it segments a text document into word tokens (Badri et al. 2022).
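As a rough illustration, a regex-based word tokenizer might look as follows. It assumes the text has already been cleaned of diacritics (Python's `\w` does not match Arabic combining marks), and it does not split clitics such as the conjunction و or the article ال, which usually requires a morphological analyzer.

```python
import re

# Words are runs of word characters; each punctuation mark is its own token.
# Assumes diacritics were removed beforehand and leaves clitics attached.
TOKEN_RE = re.compile(r"\w+|[^\w\s]")


def tokenize(text):
    """Split cleaned Arabic text into word and punctuation tokens."""
    return TOKEN_RE.findall(text)
```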

3.1.4 Stemming and lemmatization

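Stemming and lemmatization reduce words to their base forms, which shrinks the number of unique words in the dataset (Elzayady et al. 2023a, b; Boulouard et al. 2022a, b). Stemming strips affixes heuristically to obtain a stem or root, whereas lemmatization maps each word to its dictionary form (lemma).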
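To show what stemming does to Arabic words, here is a toy light stemmer. The prefix and suffix lists and the three-letter minimum are assumptions chosen for illustration; this is not the stemmer used in the cited studies, and published light and root-based stemmers handle many more cases.

```python
# Toy light stemmer (an illustrative assumption, not a published algorithm):
# strip at most one known prefix and one known suffix, never leaving fewer
# than `min_len` letters. Longer affixes are listed first so they win.
PREFIXES = ("وال", "بال", "كال", "فال", "لل", "ال")
SUFFIXES = ("ات", "ون", "ين", "ان", "ها", "ة", "ه", "ي")


def light_stem(word, min_len=3):
    """Return a crude stem of an Arabic word."""
    for prefix in PREFIXES:
        if word.startswith(prefix) and len(word) - len(prefix) >= min_len:
            word = word[len(prefix):]
            break
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_len:
            word = word[: -len(suffix)]
            break
    return word
```

For example, `light_stem("الكتابات")` returns "كتاب" and `light_stem("والمعلمون")` returns "معلم". A real stemmer must also avoid over-stripping words whose first or last letters merely look like affixes.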

3.1.5 Emoji and emoticon conversion

Emojis and emoticons are replaced with Arabic textual labels that describe their content; for example, 😊 is replaced by (سعيد), which means ‘happy’ (Elzayady et al. 2023a, b; Husain and Uzuner 2022a, b; Alshalan and Al-Khalifa 2020; El-Alami et al. 2022; Alzubi 2022).
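A dictionary-based version of this conversion is sketched below. Only the 😊 to سعيد pair comes from the text; the other entries are hypothetical, and multi-code-point emojis (skin tones, variation selectors) would need extra handling.

```python
# Hypothetical emoji lexicon; only the 😊 -> سعيد ("happy") entry is from the text.
EMOJI_LABELS = {
    "😊": "سعيد",  # happy
    "😡": "غاضب",  # angry
    "😂": "ضحك",   # laughter
}


def convert_emojis(text, labels=EMOJI_LABELS):
    """Replace each known emoji with a space-padded Arabic label."""
    for emoji, label in labels.items():
        text = text.replace(emoji, f" {label} ")
    return " ".join(text.split())
```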

3.1.6 Normalization

Several studies (Shannaq et al. 2022; Husain and Uzuner 2022a, b; AlFarah et al. 2022) normalize Arabic characters, for example by changing the letters ( ) and ( ) to (ا), and ( ) to ( ). Al-Hassan and Al-Dossari (2021) also removed the Arabic dash (tatweel) used to elongate a word, for example changing ( ) to ( ), which means ‘learning’.
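The following sketch applies three widely used Arabic normalizations: mapping آ, أ, and إ to bare alef (ا), mapping alef maqsura (ى) to yaa (ي), and deleting the tatweel character (U+0640) that produces the elongating Arabic dash. These mappings are common choices assumed here; individual studies may normalize a different set of letters (some, for instance, map ي to ى instead).

```python
import re

# Assumed mappings; the cited studies may use different subsets.
ALEF_VARIANTS_RE = re.compile("[\u0622\u0623\u0625]")  # آ, أ, إ
ALEF = "\u0627"          # ا bare alef
ALEF_MAQSURA = "\u0649"  # ى
YAA = "\u064a"           # ي
TATWEEL = "\u0640"       # ـ the "Arabic dash" used to elongate words


def normalize_arabic(text):
    """Unify alef variants and alef maqsura, and drop elongation characters."""
    text = ALEF_VARIANTS_RE.sub(ALEF, text)
    text = text.replace(ALEF_MAQSURA, YAA)
    return text.replace(TATWEEL, "")
```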

3.2 Feature extraction methods

Feature extraction is the process of transforming raw data into features that can be used for model training. Different feature extraction methods have been used to identify the presence of offensive language and hate speech in Arabic social media texts. The most commonly used feature extraction methods include:

3.2.1 Bag of words (BOW)

This method involves counting the frequency of each word in the text and then treating the counts as features.
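A minimal bag-of-words sketch in plain Python, assuming the texts are already tokenized. Each document becomes a count vector over a vocabulary built from the training corpus; the helper names and toy documents are illustrative.

```python
from collections import Counter


def build_vocabulary(docs):
    """Assign a column index to every distinct token, in order of first appearance."""
    vocab = {}
    for tokens in docs:
        for token in tokens:
            vocab.setdefault(token, len(vocab))
    return vocab


def bag_of_words(tokens, vocab):
    """Count vector aligned with `vocab`; tokens outside the vocabulary are ignored."""
    vector = [0] * len(vocab)
    for token, count in Counter(tokens).items():
        if token in vocab:
            vector[vocab[token]] = count
    return vector
```

For example, with the toy documents `[["انت", "غبي", "غبي"], ["انت", "رائع"]]`, the vocabulary is {انت: 0, غبي: 1, رائع: 2} and the first document maps to `[1, 2, 0]`.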

3.2.2 TF-IDF

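This method weights each word by its frequency in a document (term frequency), discounted by the number of documents in the corpus that contain it (inverse document frequency), so that words common to all documents receive low weights.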
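One common form of the weight is tf-idf(t, d) = tf(t, d) × log(N / df(t)), where tf(t, d) is the count of term t in document d, N is the number of documents, and df(t) is the number of documents containing t. The sketch below implements exactly this variant; libraries often use smoothed or normalized versions, so absolute values differ between implementations.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {token: weight} dict per tokenized document.

    tf(t, d) = raw count of t in d
    idf(t)   = log(N / df(t)), with N documents and df(t) documents containing t
    """
    n_docs = len(docs)
    doc_freq = Counter()
    for tokens in docs:
        doc_freq.update(set(tokens))  # count each token once per document
    return [
        {t: count * math.log(n_docs / doc_freq[t]) for t, count in Counter(tokens).items()}
        for tokens in docs
    ]
```

For the toy documents `[["انت", "غبي", "غبي"], ["انت", "رائع"]]`, انت appears in both documents and gets weight 0, while غبي (twice in the first document only) gets 2 · ln 2 ≈ 1.386.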

3.2.3 N-grams

This technique extracts contiguous sequences of n words from the text and treats them as features, where n can be any positive integer.
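A short sketch of word n-gram extraction; the helper name and example tokens are illustrative. Bigrams such as “صوتك مثل” preserve some local word order that a unigram bag of words loses.

```python
def word_ngrams(tokens, n):
    """Return the contiguous word n-grams of a token list as strings."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
```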

3.2.4 Word embedding (WE)

This method represents words as vectors in a continuous space, such that words with similar meanings lie close together, thereby capturing the underlying semantic relationships between words.
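Closeness between word vectors is usually measured with cosine similarity. In the sketch below the three-dimensional vectors are invented for illustration (real embeddings such as word2vec or fastText vectors have hundreds of dimensions learned from large corpora), and averaging word vectors into a sentence vector is one simple baseline, assumed here.

```python
import math

# Invented 3-d vectors for illustration only: the two animals are placed
# near each other and far from "book".
TOY_EMBEDDINGS = {
    "كلب": [0.9, 0.1, 0.0],   # dog
    "حمار": [0.8, 0.2, 0.1],  # donkey
    "كتاب": [0.0, 0.1, 0.9],  # book
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def sentence_vector(tokens, embeddings=TOY_EMBEDDINGS):
    """Average the vectors of in-vocabulary tokens; None if none are known."""
    known = [embeddings[t] for t in tokens if t in embeddings]
    if not known:
        return None
    return [sum(column) / len(known) for column in zip(*known)]
```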

3.2.5 Linguistic-based features (part of speech tagging (POS))

This technique identifies the grammatical structure of the text and uses it to extract meaningful features: each word is labeled with its part of speech (noun, verb, adjective, etc.), and features are extracted based on the frequency of hate speech keywords in each part-of-speech category.
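The sketch below assumes the tokens have already been tagged by some Arabic POS tagger (the tag names follow the Universal Dependencies style) and counts hits from a hypothetical hate-keyword lexicon per POS category, producing a small fixed-length feature vector.

```python
from collections import Counter

# Hypothetical lexicon entries ("stupid", "despicable") and tag set.
HATE_KEYWORDS = {"غبي", "حقير"}
TAGSET = ("NOUN", "VERB", "ADJ")


def pos_keyword_features(tagged_tokens, keywords=HATE_KEYWORDS, tagset=TAGSET):
    """Count keyword hits per POS tag; input is a list of (word, tag) pairs."""
    hits = Counter(tag for word, tag in tagged_tokens if word in keywords)
    return [hits[tag] for tag in tagset]
```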

Finally, Table 1 summarizes the different feature extraction and word representation methods used in Arabic offensive language and hate speech detection.

4 Taxonomy: NLP, ML and DL models for Arabic offensive and hate speech detection

Natural language processing (NLP) is a computational field concerned with analyzing, understanding, and generating human language, including carrying out natural-language commands (Mansur et al. 2023). Over the past few years, NLP has gained significant attention from researchers and practitioners because of its promising applications in fields including, but not limited to, text classification, sentiment analysis, and speech recognition. Text classification can automatically identify offensive language by assigning labels to new, unseen texts (Husain and Uzuner 2021). One of the most pressing challenges NLP has recently faced is the rise of offensive language and hate speech on social media platforms, and Arabic, with its rich history and broad user base, has been heavily affected. In this section, we therefore provide a comprehensive taxonomy of the methods used in this domain, including machine learning, deep learning, transformer-based, and ensemble approaches.

Machine learning methods have been widely used in hate speech detection. They have shown promising results, but they may struggle to capture complex semantic relationships and dependencies in Arabic text. Table 2 summarizes the most common ML methods used in the selected studies.
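As an example of the classical ML family, here is a from-scratch multinomial Naive Bayes classifier with add-one smoothing operating on tokenized text. The class name, the training sentences, and the OFF/NOT labels are toy assumptions; in practice one would use a library implementation and a real annotated dataset.

```python
import math
from collections import Counter, defaultdict


class ToyMultinomialNB:
    """Minimal multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.classes = sorted(set(labels))
        self.vocab = {t for tokens in docs for t in tokens}
        self.log_prior = {}
        self.log_likelihood = defaultdict(dict)
        for c in self.classes:
            class_docs = [d for d, y in zip(docs, labels) if y == c]
            self.log_prior[c] = math.log(len(class_docs) / len(docs))
            counts = Counter(t for d in class_docs for t in d)
            denom = sum(counts.values()) + len(self.vocab)
            for t in self.vocab:
                self.log_likelihood[c][t] = math.log((counts[t] + 1) / denom)
        return self

    def predict(self, tokens):
        """Return the class with the highest log-posterior; unseen words are skipped."""
        scores = {
            c: self.log_prior[c]
            + sum(self.log_likelihood[c][t] for t in tokens if t in self.vocab)
            for c in self.classes
        }
        return max(scores, key=scores.get)


# Toy, invented training data (label names are hypothetical).
train_docs = [["انت", "غبي"], ["كلام", "غبي", "جدا"], ["انت", "رائع"], ["كلام", "جميل"]]
train_labels = ["OFF", "OFF", "NOT", "NOT"]
model = ToyMultinomialNB().fit(train_docs, train_labels)
```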

To overcome this limitation, deep learning techniques have gained popularity for their ability to capture intricate patterns in text data. The most common examples are convolutional neural networks (CNNs) and recurrent neural networks (RNNs). Table 3 summarizes the most common DL methods used in the selected studies.
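To make the CNN idea concrete, the sketch below computes the forward pass of a single text-CNN layer: each filter slides over windows of consecutive word vectors, a ReLU is applied, and max-over-time pooling keeps the strongest response per filter. The function name and the hand-set weights are illustrative assumptions; a real model learns the weights and adds multiple filter widths and a classification layer.

```python
def text_cnn_features(embeddings, filters, biases):
    """Forward pass of one text-CNN layer (weights given, not learned).

    embeddings: token vectors, shape [seq_len][dim]
    filters:    one weight matrix per filter, shape [width][dim]
    biases:     one bias per filter
    Returns one max-pooled ReLU activation per filter.
    """
    features = []
    for weights, bias in zip(filters, biases):
        width = len(weights)
        activations = []
        # Slide the filter over every window of `width` consecutive tokens.
        for start in range(len(embeddings) - width + 1):
            total = bias
            for k in range(width):
                total += sum(w * x for w, x in zip(weights[k], embeddings[start + k]))
            activations.append(max(0.0, total))  # ReLU
        # Max-over-time pooling; 0.0 if the text is shorter than the filter.
        features.append(max(activations) if activations else 0.0)
    return features
```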

Transformer-based methods, such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), have achieved remarkable success in various natural language processing tasks, including hate speech detection. Table 4 summarizes the most common transformer-based and transfer learning methods used in the selected studies.

Ensemble methods have also been proposed to combine the strengths of different models, offset their individual weaknesses, and further improve hate speech detection performance. Table 5 summarizes the most common ensemble models used in the selected studies.
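Two basic ways of combining models are hard (majority) voting over predicted labels and soft voting over predicted class probabilities. The sketch below implements both; the labels and probability values are invented, and the cited studies may use other schemes such as stacking.

```python
from collections import Counter


def majority_vote(labels):
    """Hard voting: most frequent label; ties go to the label seen first."""
    return Counter(labels).most_common(1)[0][0]


def soft_vote(prob_dicts):
    """Soft voting: average each class probability across models, take the max."""
    classes = prob_dicts[0].keys()
    avg = {c: sum(p[c] for p in prob_dicts) / len(prob_dicts) for c in classes}
    return max(avg, key=avg.get)


# Invented outputs of three models for one post.
probs = [
    {"OFF": 0.60, "NOT": 0.40},
    {"OFF": 0.30, "NOT": 0.70},
    {"OFF": 0.55, "NOT": 0.45},
]
hard = majority_vote([max(p, key=p.get) for p in probs])  # "OFF" (2 of 3 models)
soft = soft_vote(probs)                                   # "NOT" (avg 0.517 vs 0.483)
```

Here hard voting picks OFF while soft voting picks NOT, because the dissenting model is far more confident; the choice of scheme can therefore change the final label.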

Finally, Fig. 5 illustrates an extensive taxonomy of machine learning, deep learning, transformer-based, and ensemble methods for Arabic offensive language and hate speech detection.

5 Datasets

The datasets used in Arabic offensive language and hate speech detection play a crucial role in determining the effectiveness and accuracy of detection techniques, since the quality and quantity of the data directly affect their performance. It is therefore essential to use high-quality datasets that accurately represent the different types of offensive language. Building a dataset involves three stages (Omar et al. 2020): data collection, data filtering, and data annotation. Figure 6 depicts the dataset building process. To give a clear overview, Table 6 outlines the datasets employed, with details such as their names, sizes, sources, and characteristics. These datasets represent a diverse range of Arabic offensive language and hate speech instances, allowing a more thorough examination of the problem.

Furthermore, we examine the datasets from the perspective of public availability; Figure 7 shows the percentage of datasets that are available and unavailable. This survey also revealed that a large majority of Arabic hate speech datasets are imbalanced: they contain a disproportionate amount of data for certain types of hate speech, while other types are underrepresented. By analyzing the datasets used in this review, researchers can identify common features and patterns that could be leveraged to improve the accuracy and efficiency of hate speech detection algorithms. Moreover, by comparing the results of different studies and analyzing the underlying datasets, researchers can determine the most effective approaches and identify areas for future improvement. Finally, Table 7 lists the datasets most frequently used for Arabic offensive language and hate speech detection in recent studies.

6 Literature review

This section briefly summarizes earlier studies related to the domain of our survey and how they contribute to the existing body of knowledge on Arabic offensive language detection on social media. It should first be noted that Arabic is one of the most widely spoken languages globally, and social media platforms are widely used by Arabic-speaking communities (Azzi and Zribi 2022; Berrimi et al. 2020; Husain and Uzuner 2022a, b; Mohaouchane et al. 2019; Al-Hassan and Al-Dossari 2021). As noted in (Elzayady et al. 2022, 2023; Abuzayed 2020; Husain and Uzuner 2022a, b), and to the best of our knowledge, studies seeking an optimal solution for automatically detecting offensive language and hate speech in Arabic are still few compared with those for other languages. Recently, researchers have paid increasing attention to Arabic natural language processing (ANLP) and its challenges in developing automatic solutions for Arabic offensive language detection on social media.

Researchers have used a variety of approaches to detect and classify offensive Arabic language in these competitions. For instance, some authors examined ML methods such as NB, KNN, SVM, RF, XGBoost, DT, and LR (Shannaq et al. 2022; Elzayady et al. 2023a, b; Azzi and Zribi 2022; Makram 2022; Althobaiti 2022; Alhejaili et al. 2022). Others fine-tuned deep bidirectional transformers for Arabic, such as AraBERT and MARBERT (Althobaiti 2022; Elzayady et al. 2023; Husain and Uzuner 2022a, b). Still others (Elzayady et al. 2023a, b; Azzi and Zribi 2022; Mohaouchane et al. 2019; Al-Hassan and Al-Dossari 2021; Alsafari et al. 2020a, b; Duwairi et al. 2021) trained various deep neural network models.

As mentioned above, this review covers no more than fifty-four studies on Arabic offensive language and hate speech detection. Table 8 briefly summarizes these studies, their contributions, techniques, and best results.

Several attempts have been made in the literature to detect Arabic offensive language using a variety of datasets collected from different social media platforms. For instance, the authors in (Shannaq et al. 2022) proposed an intelligent prediction system to detect offensive language in Arabic tweets. They tested it on the Arabic Cyber Bullying Corpus (ArCybC), which contains 4505 tweets collected from different Twitter domains (gaming, sports, news, and celebrities), fine-tuning pre-trained word embedding models and combining them with seven ML classifiers, namely NB, KNN, SVM, RF, XGBoost, DT, and LR. Because XGBoost and SVM gave excellent results, they then hybridized these two classifiers with a genetic algorithm (GA), yielding GA-SVM and GA-XGBoost, to reduce the time and cost of optimizing their hyperparameters.

The SVM algorithm with the AraVec skip-gram word embedding model achieved the best results, with an accuracy of 88.2% and an F1-score of 87.8%.
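GA-based hyperparameter tuning of the kind described above can be sketched as a small evolutionary loop. This is not Shannaq et al.'s implementation: `toy_fitness` is a hypothetical stand-in for a cross-validated score (for example, the F1 of an SVM trained with a given C and gamma, searched on a log10 scale), and the population size, truncation selection, uniform crossover, and Gaussian mutation are all assumptions.

```python
import random

def toy_fitness(params):
    """Hypothetical stand-in for cross-validated F1 of an SVM with these
    hyperparameters; a smooth function peaking at log10(C)=1, log10(gamma)=-2."""
    log_c, log_gamma = params
    return -((log_c - 1.0) ** 2 + (log_gamma + 2.0) ** 2)

def genetic_search(fitness, bounds, pop_size=20, generations=30,
                   mutation_scale=0.3, seed=0):
    rng = random.Random(seed)
    # Initial population: random points inside the search bounds.
    population = [[rng.uniform(lo, hi) for lo, hi in bounds]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: the fitter half survives unchanged (elitism).
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover: each gene comes from one of the two parents.
            child = [rng.choice(pair) for pair in zip(a, b)]
            # Gaussian mutation, clipped back into the bounds.
            child = [min(hi, max(lo, g + rng.gauss(0.0, mutation_scale)))
                     for g, (lo, hi) in zip(child, bounds)]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)

# best = [log10(C), log10(gamma)] found by the search
best = genetic_search(toy_fitness, bounds=[(-3.0, 3.0), (-5.0, 1.0)])
print(best)
```

With a real fitness function, each evaluation means training and validating a classifier, so the population size and number of generations dominate the cost of the search.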

Similarly, the authors in (Shannag et al. 2022) presented the development and evaluation of ArCybC, a multi-dialect annotated Arabic cyberbullying corpus for detecting and analyzing cyberbullying in Arabic. They highlighted the lack of annotated Arabic cyberbullying data as a hindrance to the development of effective detection models. To address this, they built the corpus (the same one used in (Shannaq et al. 2022)) and evaluated machine learning models, including support vector machine (SVM), random forest (RF), XGBoost, decision tree (DT), and logistic regression (LR), using both TF-IDF and AraVec word embeddings. The SVM model with word embeddings performed best, achieving an accuracy of 86.3% and an F1-score of 85%.

In another cyberbullying study (AlFarah et al. 2022), the authors focused on detecting cyberbullying in Arabic using machine learning techniques. They identified the challenge of working with an imbalanced dataset, in which cyberbullying instances are far fewer than non-bullying instances, and proposed sampling techniques such as SMOTE to overcome this issue. The authors used a dataset of 24,560 Arabic tweets and comments collected from Twitter and YouTube and oversampled the minority class to balance the data. They also compared the performance of various machine learning algorithms and found that Naïve Bayes achieved the highest AUC, at 89%. The approach shows promise for detecting cyberbullying in Arabic tweets despite the imbalanced nature of the dataset.
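SMOTE (synthetic minority oversampling technique) creates new minority-class examples by interpolating between existing ones rather than copying them. The following minimal pure-Python sketch works over dense feature vectors (for example, averaged embeddings); it is not AlFarah et al.'s code, and in practice a library implementation such as the `SMOTE` class in imbalanced-learn would typically be used.

```python
import random

def smote(minority, n_new, k=3, seed=0):
    """Minimal SMOTE over dense feature vectors of one (minority) class.

    Each synthetic point lies on the segment between a randomly chosen
    minority sample and one of its k nearest minority-class neighbours."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)

    def dist2(a, b):
        # Squared Euclidean distance.
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: dist2(base, p))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic

minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote(minority, n_new=6)
```

Oversampling should be applied only to the training split; synthesizing points before the train/test split lets near-copies of training data leak into evaluation.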

Moreover, the surveys (Khairy et al. 2021; ALBayari et al. 2021) reviewed Arabic cyberbullying classification methods, grouping them into three categories: deep learning-based, machine learning-based, and hybrid. These reviews also highlighted the challenges that Arabic poses for natural language processing tasks, as well as the growing interest in developing machine learning and deep learning detection models. Contextual features, such as sentiment and user-profile information, were found to be more effective in capturing the nuances of Arabic. The reviews found that SVM and CNN are the most frequently used algorithms, but that the quality of datasets and features has a significant impact on performance.

In another study (Azzi and Zribi 2022), the authors investigated various state-of-the-art models for detecting abusive language in Arabic social media. Their experiments targeted eight specific subtasks of abusive language, namely racism, sexism, xenophobia, violence, hate, pornography, religious hatred, and LGBTQa hate, using CNN, BiLSTM, and BiGRU networks with pre-trained Arabic word embeddings (AraVec), as well as a BERT model, and compared the results with an ML baseline (SVM). They compiled a dataset of 6000 records from two popular platforms, Twitter and YouTube, and annotated it manually; 1914 of the 6000 records (31.9%) were labelled as normal, while the rest were labelled as abusive. CNN, BiLSTM, BiGRU, and BERT all outperformed the SVM baseline, and BERT achieved the best results, with a precision of 90% and a micro-averaged F1-score of 79%.
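Because some studies report micro-averaged F1 and others macro-averaged F1, their numbers are not directly comparable, especially on imbalanced data. The sketch below computes both for single-label predictions; the toy labels are hypothetical and only illustrate how a classifier that ignores a rare class can still score well on micro-F1.

```python
def f1_scores(y_true, y_pred):
    """Return (micro_f1, macro_f1) for single-label multi-class predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp = {c: 0 for c in labels}
    fp = {c: 0 for c in labels}
    fn = {c: 0 for c in labels}
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[t] += 1  # missed the true class t

    def f1(tp_, fp_, fn_):
        # F1 = 2TP / (2TP + FP + FN), the harmonic mean of precision and recall.
        denom = 2 * tp_ + fp_ + fn_
        return 2 * tp_ / denom if denom else 0.0

    # Micro: pool counts over all classes, so frequent classes dominate.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: unweighted mean of per-class F1, so rare classes count equally.
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    return micro, macro

y_true = ["normal"] * 8 + ["racism"] * 2
y_pred = ["normal"] * 10  # the rare class is never predicted
micro, macro = f1_scores(y_true, y_pred)  # micro = 0.8, macro ≈ 0.444
```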

In contrast, a more specific dataset for hate and offensive speech, containing 5340 records collected from Twitter, was presented in (Alsafari et al. 2020a, b). It is written in the most common varieties of Arabic: the Gulf Arabic dialect, spoken in the Arabian Peninsula countries, and Modern Standard Arabic, understood by all Arabic speakers. The corpus is labelled at three granularities: two-class, three-class, and six-class. The authors proposed single and ensemble artificial neural network (ANN) architectures based on CNN and BiLSTM, trained with different word embedding techniques: non-contextual (fastText skip-gram) and contextual (multilingual BERT (mBERT) and AraBERT). The most challenging setting was six-class classification, where the goal was to detect not only the presence of hate but also its type.

The experiments showed that, among single learners, CNN + AraBERT was the best classifier on every classification task, and that the two contextualized embeddings, AraBERT and mBERT, outperformed the non-contextualized fastText with both ANN models. The ensemble models performed better than the single models across all performance metrics. The average-based CNN ensemble improved performance on the two-class and three-class tasks, but on the six-class task, the average-based BiLSTM ensemble obtained a macro-F1 of 80.23%, outperforming the average-based CNN ensemble. As future work, the authors aim to try semi-supervised classification.
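An average-based ensemble can be read as soft voting: each member outputs class probabilities, the ensemble averages them, and the class with the highest mean wins. A minimal sketch, assuming every model returns a probability for every label in a `{label: probability}` dict (a hypothetical format); the exact combination rule of Alsafari et al. may differ.

```python
def average_ensemble(prob_lists):
    """Average per-class probabilities from several models and take the argmax.

    prob_lists: one list per model, each holding one {label: probability}
    dict per example (a hypothetical format chosen for readability)."""
    n_models = len(prob_lists)
    predictions = []
    for per_model in zip(*prob_lists):  # all models' outputs for one example
        labels = per_model[0].keys()
        mean = {c: sum(m[c] for m in per_model) / n_models for c in labels}
        predictions.append(max(mean, key=mean.get))
    return predictions

model_a = [{"hate": 0.6, "none": 0.4}, {"hate": 0.2, "none": 0.8}]
model_b = [{"hate": 0.3, "none": 0.7}, {"hate": 0.4, "none": 0.6}]
model_c = [{"hate": 0.7, "none": 0.3}, {"hate": 0.1, "none": 0.9}]
print(average_ensemble([model_a, model_b, model_c]))  # ['hate', 'none']
```

Note that on the first example the ensemble predicts "hate" even though one member disagrees, because the averaged confidence, not the vote count, decides.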

Berrimi et al. (2020) proposed a novel deep learning model based on the attention mechanism for accurate learning and classification, in order to filter out offensive and abusive Arabic content in social media posts and comments. They used four available Arabic datasets on inappropriate Arabic content from previous studies (Mubarak et al. 2017; Albadi et al. 2018; Mulki et al. 2019; Haddad et al. 2019), collected from different platforms. The first contains 32k comments deleted from Aljazira.com, annotated using CrowdFlower and labelled as obscene, offensive, or clean. The second, the AraHate dataset, consists of 6000 tweets labelled as “hate” or “not hate”. The third is the Subtask ‘A’ dataset of the 4th Workshop on Open-Source Arabic Corpora and Processing Tools (OSACT4), which contains 10,000 manually annotated tweets labelled as OFF or NOT OFF. Finally, they combined two datasets, L-HSAB (5846 tweets) and T-HSAB (6024 records collected from Facebook and YouTube), to obtain a larger dataset of abusive and hate speech in different Arabic dialects. The authors proposed a soft attention mechanism to detect different types of inappropriate speech and applied three models, namely EL LSTM, ESoA, and ELSoA, to each dataset. The ELSoA model achieved the highest accuracy, 97.47%.
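In its common form, soft attention gives each time step of an encoder (for example, each LSTM hidden state h_t) a score s_t, normalizes the scores with a softmax, α_t = exp(s_t) / Σ_j exp(s_j), and pools the sequence as the weighted sum Σ_t α_t h_t. The sketch below shows only this pooling step with a single dot-product scoring vector `w`; the scoring function and the toy vectors are assumptions, not the exact ELSoA formulation of Berrimi et al. (2020).

```python
import math

def soft_attention_pool(hidden_states, w):
    """Attention pooling over a sequence of hidden vectors.

    score_t = w . h_t, alpha = softmax(score), context = sum_t alpha_t * h_t.
    In a trained model `w` is learned; here it is supplied by hand."""
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in hidden_states]
    top = max(scores)  # subtract the max before exp for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    dim = len(hidden_states[0])
    context = [sum(a * h[d] for a, h in zip(alphas, hidden_states))
               for d in range(dim)]
    return alphas, context

hidden = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy hidden states for 3 tokens
alphas, context = soft_attention_pool(hidden, w=[5.0, 0.0])
```

With `w = [5.0, 0.0]`, the first and third tokens receive almost all the weight, so the pooled vector is dominated by them; in a real model this is how the network learns to focus on the offensive words in a post.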

Husain and Uzuner (2022a, b) used the same dialectal datasets (L-HSAB, T-HSAB, and OSACT4) as (Berrimi et al. 2020) but replaced the Aljazira.com dataset with the Egyptian Tweets dataset, which consists of 1100 records collected from Twitter and labelled as offensive or not offensive. They proposed a transfer learning approach across Arabic dialects for offensive language detection, fine-tuning the pre-trained AraBERT model on these dialectal datasets and evaluating it to measure the effect of dialect on offensive language detection. The Egyptian and Tunisian dialects yielded better performance than the Levantine dialect, with an accuracy of 0.86 and an F1-score of 0.85.

On the other hand, Mohaouchane et al. (2019) aimed to help fill the gap in Arabic offensive language detection on social media. The authors proposed four neural network models, namely a convolutional neural network (CNN), a bidirectional long short-term memory (Bi-LSTM) network, an attentional Bi-LSTM, and a combined CNN-LSTM model, for detecting offensive texts on social media platforms. They used an available dataset of 15,050 Arabic YouTube comments taken from popular, controversial YouTube videos about Arab celebrities, manually annotated as offensive or not offensive. Because the dataset was imbalanced, the authors used random oversampling to balance its classes. The combined CNN-LSTM network achieved the best recall, 83.46%, while the CNN model achieved the best accuracy and precision, 87.84% and 86.10%, respectively.
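Random oversampling, unlike SMOTE, simply re-samples existing minority-class examples with replacement. A minimal sketch follows; the function name and the choice to balance every class up to the majority-class count are assumptions, and the placeholder strings stand in for real comments.

```python
import random

def random_oversample(examples, labels, seed=0):
    """Duplicate randomly chosen examples of each smaller class until every
    class has as many examples as the largest class."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(examples, labels):
        by_class.setdefault(y, []).append(x)
    target = max(len(xs) for xs in by_class.values())
    out_x, out_y = [], []
    for y, xs in by_class.items():
        # Sample with replacement to make up the shortfall for this class.
        extra = [rng.choice(xs) for _ in range(target - len(xs))]
        for x in xs + extra:
            out_x.append(x)
            out_y.append(y)
    return out_x, out_y

texts = ["c1", "c2", "c3", "c4", "o1"]
labels = ["not", "not", "not", "not", "off"]
bal_x, bal_y = random_oversample(texts, labels)  # 4 "not" and 4 "off"
```

Because the added examples are exact copies, models can overfit to them; this is one reason interpolation-based methods such as SMOTE are sometimes preferred. As with any resampling, only the training split should be oversampled.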

Likewise, the authors in (Alhejaili et al. 2022) built a dataset during the COVID-19 pandemic, from January 31 to March 6, 2021, to provide an automatic way of detecting hate speech in Arabic tweets during the pandemic using a variety of machine learning classifiers. The dataset, collected from Twitter and preprocessed, consists of 5408 tweets annotated as hate or not hate. They used TF-IDF for feature extraction with three feature types: unigrams, bigrams, and trigrams. The authors used seven machine learning classifiers, namely support vector machine (SVM), random forest (RF), logistic regression (LR), decision tree (DT), AdaBoost, k-nearest neighbours (KNN), and Gaussian naïve Bayes (GNB), to classify the content as hate or not hate. All seven classifiers performed well: LR achieved the highest accuracy (90.8%) with unigrams, while AdaBoost achieved the highest precision (90.8%) with trigrams. In the future, they aim to apply deep learning models to Arabic hate speech detection during COVID-19 and compare the results with those of the machine learning models above.
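The unigram, bigram, and trigram TF-IDF representations described above can be sketched in a few lines. This sketch assumes whitespace tokenization and the textbook weighting (raw n-gram count times log(N/df)); library implementations such as scikit-learn's `TfidfVectorizer` apply idf smoothing and L2 normalization by default, so their values differ.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Contiguous word n-grams joined by spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def tfidf(docs, n=1):
    """One {ngram: weight} dict per document, with tf = raw count in the
    document and idf = log(N / df), df = number of documents containing it."""
    grams = [Counter(ngrams(doc.split(), n)) for doc in docs]
    df = Counter(g for doc_grams in grams for g in doc_grams)  # unique per doc
    n_docs = len(docs)
    return [{g: tf * math.log(n_docs / df[g]) for g, tf in doc_grams.items()}
            for doc_grams in grams]

docs = ["you are bad", "you are kind", "bad bad day"]
unigrams = tfidf(docs, n=1)
bigrams = tfidf(docs, n=2)
```

Higher-order n-grams capture short phrases (for example, an insult followed by its target), but they are sparser, so each one appears in fewer documents and gets a higher idf.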

Elzayady et al. (2022) proposed two models, one based on online supervised machine learning and one on deep neural networks, namely a passive-aggressive classifier (PAC) and a bidirectional gated recurrent unit with attention (Bi-GRU), to improve Arabic hate speech identification. The authors used the first multi-platform Arabic hate speech dataset, collected from four social networks: Twitter, YouTube, Facebook, and Instagram. The dataset is balanced and consists of 20,000 posts, tweets, and comments, of which 10,000 are hateful and 10,000 are non-hateful. Several preprocessing steps were applied for data preparation. They used both term frequency-inverse document frequency (TF-IDF) and pre-trained AraVec 2.0 word embeddings as feature extraction techniques for text representation. The experiments were run on Google Colab Pro using the NumPy, pandas, re, Alphabet Detector, scikit-learn, and Keras packages, and the results were assessed in terms of accuracy, precision, recall, and F1-score. The Bi-GRU model with an attention layer outperformed PAC, reaching an accuracy of 99.1% versus 98.4%.
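The passive-aggressive classifier is an online learner: it processes one example at a time, leaves the weights unchanged when an example is classified with sufficient margin (passive), and otherwise updates them just enough to correct it (aggressive). The sketch below implements the standard PA-I update over sparse feature dicts; it is illustrative only, and the toy features are hypothetical placeholders rather than the TF-IDF or AraVec features used by Elzayady et al. (2022).

```python
def pa_train(samples, C=1.0, epochs=5):
    """Online Passive-Aggressive (PA-I) training for labels y in {-1, +1}.

    Each x is a sparse {feature: value} dict. For every example the hinge loss
    l = max(0, 1 - y * w.x) is computed; if l > 0 the weights take the smallest
    step that brings the margin back to 1, capped at C
    (tau = min(C, l / ||x||^2)); otherwise they stay unchanged."""
    w = {}
    for _ in range(epochs):
        for x, y in samples:
            score = sum(w.get(f, 0.0) * v for f, v in x.items())
            loss = max(0.0, 1.0 - y * score)
            sq_norm = sum(v * v for v in x.values())
            if loss > 0.0 and sq_norm > 0.0:
                tau = min(C, loss / sq_norm)
                for f, v in x.items():
                    w[f] = w.get(f, 0.0) + tau * y * v
    return w

def pa_predict(w, x):
    score = sum(w.get(f, 0.0) * v for f, v in x.items())
    return 1 if score >= 0.0 else -1

# Hypothetical toy data: +1 = hateful, -1 = not hateful; tokens are placeholders.
samples = [({"insult": 1.0, "you": 1.0}, 1), ({"praise": 1.0, "you": 1.0}, -1),
           ({"slur": 1.0}, 1), ({"thanks": 1.0}, -1)]
w = pa_train(samples)
```

Because each update touches only the features present in the current example, the model can keep learning from a stream of new posts without retraining from scratch, which is the main appeal of online learners for moderation settings.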

Moreover, Duwairi et al. (2021) proposed a deep learning framework for the automatic detection of hate speech in Arabic tweets. The framework combines recurrent and convolutional neural networks in three models, namely CNN, LSTM-CNN, and BiLSTM-CNN, with word-level (skip-gram (SG) and CBOW) and sentence-level (pre-trained MUSE) embeddings to represent the text. The authors evaluated the framework on a large dataset of 23,678 Arabic tweets compiled from three datasets: the Arabic Hate Speech (ArHS) dataset, the Levantine Hate Speech and Abusive (L-HSAB) dataset, and the shared task dataset of the 4th Workshop on Open-Source Arabic Corpora and Processing Tools (OSACT4). They also compared its performance with other existing methods, demonstrating its effectiveness in detecting hate speech. The study highlights the potential of deep learning approaches for hate speech detection in languages other than English. SG-BiLSTM-CNN and SG-CNN were the best-performing models for multi-class classification on the ArHS dataset.

In addition, Makram (2022) introduced a hybrid of machine learning and transformer-based models for detecting offensive and hateful Arabic speech. The model consists of multiple classifiers, such as logistic regression and random forest, each specialized in detecting a specific type of offensive language. The author used the pre-trained Arabic BERT model MARBERT to extract features from the Arabic tweets in the OSACT2022 shared task dataset and trained the classifiers on these features. For the offensive tweet detection task, the best results were achieved by the logistic regression model, with accuracy, precision, recall, and F1-score of 80%, 78%, 78%, and 78%, respectively; for the hate speech detection task, the corresponding values were 89%, 72%, 80%, and 76%. The author also discussed the limitations of the model and future directions for improving its performance, including investigating other machine learning classifiers, such as SVM and naive Bayes, for the binary classification tasks with different representation models in the hope of achieving higher scores.

Al-Hassan and Al-Dossari (2021) presented a method for detecting hate speech in Arabic tweets using deep learning techniques. The authors collected a dataset of 11k Arabic tweets and manually annotated them into five classes: none, religious, racial, sexism, or general hate. They used an SVM with TF-IDF word representation as a baseline for several deep learning models, namely LSTM, CNN + LSTM, GRU, and CNN + GRU. The results showed that the proposed models performed well in detecting hate speech in Arabic tweets, suggesting that these techniques can be useful for identifying and addressing hate speech in Arabic-language online communities. Overall, the combined CNN + LSTM model performed best, with 72% precision, 75% recall, and a 73% F1-score.

Another study (Althobaiti 2022) proposed a BERT-based approach for hate speech and offensive language detection in Arabic tweets. The approach appends emojis and sentiment as additional features to the textual content of the tweets in order to improve detection accuracy. The author compared the model with two conventional machine learning classifiers, support vector machine (SVM) and logistic regression (LR). The experiments used the OSACT 2022 shared task dataset, the largest and most recently released dataset for Arabic offensive language and hate speech detection at the time, which contains 12,698 tweets. The dataset defines three tasks: offensive language, hate speech, and fine-grained hate speech, which identifies the specific type of hate speech. Several levels of preprocessing were applied: cleaning (CLN), appending sentiments (SA) as additional textual features, and replacing emojis with their corresponding textual descriptions (EmoTxt). This yields five versions of the dataset: original tweets, CLN, CLN + SA, CLN + EmoTxt, and CLN + SA + EmoTxt. SVM and LR were trained on these versions using word n-grams and TF-IDF, and five BERT models were built for each task: AraBERT, QARiB, mBERT, XLM-RoBERTa, and the proposed model with its suggested preprocessing levels. The proposed approach achieved F1-scores of 84.3%, 81.8%, and 45.1% on the offensive language, hate speech, and fine-grained hate speech tasks, respectively, with the fine-grained task proving considerably harder.
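The EmoTxt and SA preprocessing levels can be illustrated with two small string transformations. The emoji table, the bracketed sentiment marker, and the function names are hypothetical; Althobaiti (2022) does not prescribe this format, and the sentiment label would come from a separate sentiment analyser.

```python
# Hypothetical lookup table; a real system would use a full emoji-description
# resource (possibly with Arabic descriptions) instead of two entries.
EMOJI_TEXT = {
    "\U0001F602": "face with tears of joy",
    "\U0001F621": "pouting face",
}

def replace_emojis(text, table=EMOJI_TEXT):
    """EmoTxt-style step: swap each known single-code-point emoji for its
    textual description, then collapse repeated whitespace."""
    pieces = [f" {table[ch]} " if ch in table else ch for ch in text]
    return " ".join("".join(pieces).split())

def append_sentiment(text, sentiment):
    """SA-style step: append a sentiment label as extra textual context."""
    return f"{text} [{sentiment}]"

cleaned = replace_emojis("great game \U0001F602")
print(append_sentiment(cleaned, "positive"))
# great game face with tears of joy [positive]
```

A per-character lookup misses multi-code-point emoji (skin-tone modifiers, ZWJ sequences), so a production pipeline would need to match whole emoji sequences.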

Another work by Elzayady et al. (2023a, b) proposed a hybrid approach for hate speech detection in Arabic social media that combines machine learning algorithms with personality trait analysis. They used social media posts from two datasets (the Arapersonality dataset and the OSACT dataset) and extracted linguistic features using natural language processing (NLP) techniques. They evaluated machine learning models, namely RF, extra trees, DT, SVM, gradient boosting, XGBoost, and logistic regression (LR), and deep learning models, namely recurrent neural networks (LSTM, bidirectional LSTM (BiLSTM), and gated recurrent unit (GRU)) and hybrid CNN-RNN models (CNN-LSTM, CNN-BiLSTM, and CNN-GRU). They then analyzed the personality traits of the posts' authors and used them as additional features in their proposed AraBERT model, which achieved a macro-F1 score of 82.3%, comparing favourably with other state-of-the-art methods.

In a follow-up study (Elzayady et al. 2023a, b), the authors extended this work on the same datasets, proposing a novel method for enriching the MARBERT model with hybrid features that combine static word embeddings (AraVec 2.0) and personality trait features for Arabic hate speech detection. They fine-tuned MARBERT with these hybrid features and used a convolutional neural network (CNN) for classification. The proposed model achieved a macro-F1 score of 86.4%, outperforming the traditional MARBERT and, according to the authors, greatly surpassing previous studies. As future work, the authors plan to extend their methodology to multi-personality trait features rather than binary ones, to investigate sampling methods in greater depth to address imbalanced data, and to improve their model using multi-task learning approaches.

Another trend is semi-supervised learning, a hybrid of supervised and unsupervised learning that combines labelled and unlabelled data during training. It is of great interest in machine learning and data mining because it can exploit readily available unlabelled data to improve supervised learning tasks, and several studies have analysed the effectiveness of semi-supervised approaches. For instance, (Alsafari and Sadaoui 2021a, b) proposed a semi-supervised self-learning (SSSL) approach for Arabic hate speech detection. The authors used two datasets, a smaller labelled seed dataset and a larger unlabelled corpus, to train a model that detects hate speech in Arabic text. The SSSL experiments consisted of three main phases: training several pairs of deep learning classifiers with non-contextualized or contextualized word embedding models, artificially labelling the unlabelled dataset with the best classifier, and fine-tuning the baseline classifier. The CNN + SG (skip-gram) combination achieved the best performance, with an F1-score of 88.59%.
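The train, pseudo-label, and retrain cycle described above follows the general self-training pattern sketched below. The confidence threshold, the stopping rule, and the one-dimensional nearest-centroid classifier used for the demonstration are all assumptions; in Alsafari and Sadaoui's setting the classifier would be a deep model such as CNN + SG, and their exact procedure may differ.

```python
import math

def self_train(labelled, unlabelled, fit, predict_proba,
               threshold=0.9, max_rounds=5):
    """Generic self-training: fit(pairs) -> model, predict_proba(model, x) -> {label: p}.

    Each round, unlabelled examples whose top class probability reaches
    `threshold` move into the training set with their predicted (pseudo)
    label, and the model is retrained on the enlarged set."""
    train = list(labelled)
    pool = list(unlabelled)
    model = fit(train)
    for _ in range(max_rounds):
        confident, remaining = [], []
        for x in pool:
            probs = predict_proba(model, x)
            label = max(probs, key=probs.get)
            if probs[label] >= threshold:
                confident.append((x, label))
            else:
                remaining.append(x)
        if not confident:
            break  # nothing new to add: stop early
        train.extend(confident)
        pool = remaining
        model = fit(train)
    return model, train

# Toy classifier for the demo: 1-D nearest centroid with softmax confidences.
def fit_centroids(pairs):
    sums, counts = {}, {}
    for x, y in pairs:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def centroid_proba(centroids, x):
    scores = {y: -(x - c) ** 2 for y, c in centroids.items()}
    top = max(scores.values())
    exps = {y: math.exp(s - top) for y, s in scores.items()}
    total = sum(exps.values())
    return {y: e / total for y, e in exps.items()}

seed = [(0.0, "not_hate"), (10.0, "hate")]
model, train = self_train(seed, [1.0, 9.0, 5.0], fit_centroids, centroid_proba)
```

In the demo, 1.0 and 9.0 are pseudo-labelled in the first round, while 5.0 stays unlabelled because the model is never confident about it; the threshold is what keeps ambiguous examples from polluting the training set.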

Similarly, in (Alsafari and Sadaoui 2021a, b), the authors presented a semi-supervised self-training (SSST) framework for detecting hate and offensive speech on social media, using the same datasets as in their SSSL work. They conducted six groups of experiments to validate the SSST approach and selected the best classifier by assessing several text vectorization and machine learning algorithms. The self-training approach outperformed the baseline model, achieving higher accuracy, precision, and recall. The authors also found that ensemble-based selection of confident pseudo-labelled data achieved results comparable to classical self-training. Finally, CNN + W2VSG achieved an F1-score of 89.51%.
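One way to realize ensemble-based selection of confident pseudo-labels is to accept an unlabelled example only when several classifiers agree on its label and each is individually confident. The agreement rule, the threshold, and the toy models below are assumptions for illustration, not necessarily the selection criterion used in (Alsafari and Sadaoui 2021a, b).

```python
def ensemble_confident(pool, models, threshold=0.8):
    """Keep an unlabelled example only if every model predicts the same label
    with probability >= threshold. Each model is a function x -> {label: prob}."""
    selected = []
    for x in pool:
        votes = []
        for model in models:
            probs = model(x)
            label = max(probs, key=probs.get)
            if probs[label] < threshold:
                break  # one model is unsure: reject this example
            votes.append(label)
        else:  # runs only if no model broke out of the loop
            if len(set(votes)) == 1:
                selected.append((x, votes[0]))
    return selected

# Hypothetical toy models over a scalar input.
def model_1(x):
    return {"hate": 0.9, "not": 0.1} if x > 0 else {"hate": 0.2, "not": 0.8}

def model_2(x):
    return {"hate": 0.85, "not": 0.15} if x > 1 else {"hate": 0.5, "not": 0.5}

print(ensemble_confident([-1.0, 0.5, 2.0], [model_1, model_2]))  # [(2.0, 'hate')]
```

Requiring agreement usually admits fewer pseudo-labels than a single-model threshold, trading coverage for label quality.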

In further work (Alakrot et al. 2021), the authors introduced a novel approach to identifying offensive language in Arabic online communication using machine learning algorithms. Their dataset of 15,050 labelled YouTube comments serves as a unique resource for future research on anti-social behaviour in Arabic online communities. The authors applied a series of text preprocessing, feature extraction, and feature selection techniques to represent the data, including word n-grams, character n-grams, and part-of-speech tags. Various classifiers, such as naive Bayes, support vector machines, and random forest, were trained to detect offensive language and evaluated using precision, recall, and F1-score. The authors also examined different feature selection methods, including L1-regularized logistic regression and support vector machines, and feature ranking with recursive feature elimination (RFE). The best results were obtained with RFE ∪ LR-L1, the union of the features selected by RFE and by L1-regularized logistic regression, demonstrating the efficacy of the machine learning approach for detecting offensive language in Arabic online communication.

The issue of racism on social media platforms has become increasingly prevalent, bringing potential harm to individuals and society. To address this problem, the authors in (Alotaibi and Abul Hasanat 2020) proposed a model for detecting racism in Arabic tweets using deep learning and text mining techniques. This automated tool applies convolutional neural networks (CNN) to classify Arabic tweets as racist or non-racist, using a Twitter dataset that contains both types of tweets. Preprocessing involves cleaning and tokenizing the tweets and converting them to vectors, which are fed into the models for training and testing. The results demonstrate that deep learning and text mining techniques are effective in detecting racism on Twitter, surpassing statistical machine learning models. Such models can help mitigate the impact of harmful content on individuals and society, making this a significant contribution to social media analysis.
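The cleaning and tokenization step typically includes Arabic-specific normalization. The sketch below reflects what such a step commonly involves (removing URLs, mentions, diacritics, and tatweel, and unifying alef variants); these rules are assumptions, not the exact pipeline of Alotaibi and Abul Hasanat (2020).

```python
import re

DIACRITICS = re.compile(r"[\u064B-\u0652]")  # tanween, short vowels, shadda, sukun
TATWEEL = "\u0640"                             # kashida (elongation character)
URLS_MENTIONS = re.compile(r"https?://\S+|@\w+")
ALEF_VARIANTS = re.compile("[\u0623\u0625\u0622]")  # alef with hamza above/below, madda

def clean_tweet(text):
    """Normalize an Arabic tweet and split it into whitespace tokens."""
    text = URLS_MENTIONS.sub(" ", text)
    text = DIACRITICS.sub("", text).replace(TATWEEL, "")
    text = ALEF_VARIANTS.sub("\u0627", text)  # unify to bare alef
    return text.split()

tokens = clean_tweet("@user مرحبــا بِكُم https://t.co/x")  # ['مرحبا', 'بكم']
```

Normalization reduces the number of surface forms per word, which matters for sparse representations such as TF-IDF; the trade-off is that some distinctions carried by hamza or diacritics are lost.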

The Levantine Arabic dialect is considered relatively close to Modern Standard Arabic. AbdelHamid et al. (2022) addressed the detection and classification of hate speech in Arabic tweets from the Levant region. The authors highlighted the harmful effects of hate speech on individuals and society and argued for the need for automated and accurate hate speech detection methods. They used a variety of models, including deep learning and traditional machine learning techniques, adopting a hybrid approach that concatenated word embedding and TF-IDF features for the traditional classifiers and used BERT models for deep learning. The dataset was collected from Twitter using specific hate-speech-related keywords from the Levant region and was manually annotated into two classes. The results show that concatenating word embedding and TF-IDF features can improve classification performance and that the deep learning classifiers outperform the traditional ones. The best model, based on GigaBERT, achieved an area under the ROC curve (AUC-ROC) of 94.6% and a macro F1-score of 0.81, outperforming the other models.
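Concatenating word-embedding and TF-IDF features amounts to stacking a dense vector and a sparse one into a single input for a traditional classifier. A minimal sketch, assuming the document embedding is the mean of its known word vectors (a common choice, but an assumption here) and that TF-IDF weights were computed beforehand over a fixed vocabulary; the toy vectors are hypothetical.

```python
def mean_embedding(tokens, vectors, dim):
    """Average the word vectors of known tokens; zero vector if none are known."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return [0.0] * dim
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]

def combined_features(tokens, vectors, dim, tfidf_weights, vocabulary):
    """Concatenate a dense averaged embedding with a TF-IDF vector laid out
    over a fixed vocabulary order (missing terms get weight 0)."""
    dense = mean_embedding(tokens, vectors, dim)
    sparse = [tfidf_weights.get(term, 0.0) for term in vocabulary]
    return dense + sparse

vectors = {"a": [1.0, 0.0], "b": [0.0, 1.0]}  # hypothetical 2-d word vectors
features = combined_features(["a", "b", "zzz"], vectors, 2, {"a": 0.3}, ["a", "b", "c"])
# features == [0.5, 0.5, 0.3, 0.0, 0.0]
```

The two parts can have very different scales, so in practice each block is often normalized before concatenation.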

Awane et al. (2021) focused on detecting hate speech in the Arabic electronic press and social networks. The authors proposed an Arabic BERT-Large model pre-trained on various dialects. They combined three hate speech datasets into 38,654 entries of text in Classical Arabic and the Levantine and North African dialects. The model was evaluated using accuracy, precision, recall, and F1-score, reaching an accuracy of 83% and an F1-score of 89%.

Furthermore, (Badri et al. 2022) presented a multi-dialect approach for detecting inappropriate content, namely Arabic hate speech and abusive language. The authors built a large dataset called Tun-EL, which covers three Arabic dialects, and proposed a CNN-BiGRU model with fastText and AraVec word embeddings to classify the content. The deep learning model outperformed traditional machine learning models, achieving 88% classification accuracy for hateful content and 76% for abusive content. However, its performance varied with the dialect, so the authors suggested enlarging the dataset and fine-tuning the hyperparameters to improve accuracy.

Faris et al. (2020) studied hate speech detection in Arabic using word embeddings and deep learning techniques. They highlighted the challenges of detecting hate speech in Arabic and presented a novel approach that combines pre-trained word embeddings with deep neural networks, evaluated with several models, including convolutional neural networks (CNN), recurrent neural networks (RNN), and long short-term memory (LSTM) networks. The dataset was collected from Twitter and consisted of 3696 tweets manually annotated as hate, normal, or neutral. AraVec word embeddings combined with a recurrent convolutional network achieved an accuracy and F1-score of 71.688%, comparing favourably with existing methods.

In a recent review article (Azzi and Zribi 2021), the authors discussed the use of machine learning and deep learning techniques for detecting abusive messages in Arabic social media. They introduced the problem of detecting abusive messages and explained why it is important, then provided an overview of machine learning and deep learning methods and techniques, along with their taxonomy. They also discussed common datasets used for training and testing abusive message detection models, and concluded with a summary of the research and the future challenges in this area. The review suggested that deep learning models perform better than traditional machine learning models for detecting abusive messages in Arabic social media.

The survey by Husain and Uzuner (2021) provided a structured overview of previous approaches to offensive language detection in Arabic, including core techniques, tools, resources, methods, and main features, and discussed the limitations and gaps of previous studies. It concluded that more research is still needed and that several challenges remain, such as data scarcity, dialectal variation, and context dependence. Regarding the best methods and algorithms for Arabic offensive language detection, the survey mentioned several approaches, such as supervised learning, unsupervised learning, rule-based methods, and deep learning, but did not identify a single best method, since each approach has its own advantages and disadvantages depending on the use case.

In (Mubarak and Darwish 2019), the focus was on developing a classifier for offensive Arabic language in tweets, since offensive language has become a major concern on platforms such as Twitter, prompting the need for a reliable and robust classifier. The main objectives were to build a large list of offensive words and to create a classifier that outperforms word-list matching. The authors used a seed list of offensive words to tag a large number of tweets, which enabled them to discover other offensive words by contrasting those tweets with random ones. They employed word-list, fastText, and SVM classifiers and used an existing dataset of 1100 Arabic tweets with offensive language. The fastText classifier was trained on 36.6 million automatically tagged tweets and compared with an SVM classifier, with promising results: fastText achieved a precision, recall, and F1-score of 90%.
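The idea of discovering new offensive words by contrasting seed-tagged tweets with random tweets can be sketched with a smoothed log-ratio of word frequencies. The scoring statistic is an assumption chosen for illustration; Mubarak and Darwish (2019) may have used a different one. Placeholder tokens stand in for real Arabic words.

```python
import math
from collections import Counter

def candidate_offensive_words(tagged_docs, random_docs, seed_words,
                              top_k=10, alpha=1.0):
    """Rank non-seed words that are over-represented in seed-tagged tweets
    relative to random tweets, using an add-alpha smoothed log ratio of
    relative frequencies."""
    tagged = Counter(w for d in tagged_docs for w in d.split())
    background = Counter(w for d in random_docs for w in d.split())
    vocab = set(tagged) | set(background)
    n_t = sum(tagged.values()) + alpha * len(vocab)
    n_b = sum(background.values()) + alpha * len(vocab)
    scores = {
        w: math.log((tagged[w] + alpha) / n_t) - math.log((background[w] + alpha) / n_b)
        for w in vocab if w not in seed_words
    }
    # Sort by score, breaking ties alphabetically for a deterministic order.
    return sorted(scores, key=lambda w: (-scores[w], w))[:top_k]

tagged_docs = ["seedA you xbad", "seedA xbad team", "seedB xbad"]
random_docs = ["you team play", "good team", "you play"]
print(candidate_offensive_words(tagged_docs, random_docs, {"seedA", "seedB"}, top_k=1))
# ['xbad']
```

The top-ranked candidates would still need manual review before being added to the word list, since topical words that merely co-occur with insults also score highly.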

Omar et al. (2020) conducted a comprehensive comparison of traditional machine learning and deep learning algorithms for identifying Arabic hate speech on social media platforms. The authors collected a diverse dataset of 20,000 posts, tweets, and comments from multiple social networks and manually annotated them as hate or non-hate speech. They trained twelve machine learning algorithms and two deep learning classifiers, CNN and RNN, on the dataset to determine which approach yielded better results. The RNN achieved the highest accuracy, 98.70%, while Complement NB performed best among the machine learning algorithms, with an accuracy of 97.59%. The authors concluded that deep learning algorithms are more effective in detecting Arabic hate speech in online social networks and outperform traditional machine learning approaches.

In (Boulouard et al. 2022a, b), the authors addressed the issue of hate speech in Arabic social media using machine learning techniques. They highlighted the negative impact that hate speech can have on society and identified the need for effective tools to identify and prevent such speech online. They trained and evaluated several machine learning algorithms, including logistic regression, Naïve Bayes, random forests, support vector machines (SVM), and long short-term memory (LSTM) networks, on a dataset of 15,050 comments from YouTube channels known for publishing controversial videos. Using TF-IDF for feature extraction, they found that LSTM had the best performance in terms of F1-score, with SVM following closely behind. The authors concluded that machine learning algorithms show promise for detecting hate speech in Arabic social media, but suggested that the preprocessing step needs fine-tuning and that additional feature extraction may improve performance. Overall, this study illustrates the potential of machine learning for combating hate speech.
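
The TF-IDF feature extraction step used in this study can be illustrated with a short, dependency-free sketch. The smoothed IDF formula below, log((1 + N) / (1 + df)) + 1 followed by L2 normalization, mirrors scikit-learn's default `TfidfVectorizer` settings; whether the authors used exactly this variant is not stated, so treat it as an assumption.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {term: weight} dict per document: raw term frequency times
    smoothed IDF, log((1 + N) / (1 + df)) + 1, then L2 normalization."""
    tokenized = [doc.split() for doc in docs]
    n_docs = len(tokenized)
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))  # count each term once per document
    idf = {t: math.log((1 + n_docs) / (1 + df[t])) + 1 for t in df}
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vec = {t: tf[t] * idf[t] for t in tf}
        # L2-normalize so long comments do not dominate short ones.
        norm = math.sqrt(sum(v * v for v in vec.values())) or 1.0
        vectors.append({t: v / norm for t, v in vec.items()})
    return vectors


vectors = tfidf(["this video is bad", "this video is great", "bad bad bad"])
```

Terms that appear in every comment receive the minimum IDF of 1, so rarer, more discriminative words carry more weight in each vector.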

The study (Albadi et al. 2019) investigated the effectiveness of combining handcrafted features with gated recurrent unit (GRU) neural networks for detecting religious hatred on Arabic Twitter. The authors emphasized the importance of addressing hate speech, specifically religious hate speech. To evaluate the proposed approach, they used an existing automatically annotated dataset of 6,000 Arabic tweets related to religious hatred, collected from Twitter. They also created three public Arabic lexicons of religion-related terms with hate scores, generated using three well-known feature selection methods: pointwise mutual information (PMI), chi-square, and bi-normal separation (BNS). They employed three different approaches to detect religious hate speech: a lexicon-based approach, an N-gram-based approach, and GRU with word embeddings. The proposed hybrid approach, which combines GRU neural networks with handcrafted features, achieved superior results for detecting religious hatred in Arabic, with a recall of 0.84.

The authors in (El-Alami et al. 2022) proposed a multilingual offensive language detection method based on transfer learning, fine-tuning transformer models such as BERT, mBERT, and AraBERT to improve accuracy across different languages. They evaluated their model on a bilingual dataset from SOLID and compared the BERT models with various neural models such as CNN, RNN, and bidirectional RNN. They conducted three experiments, using joint-multilingual, joint-translated monolingual, and translation-based methods, to evaluate the performance of the different models. The results showed that BERT outperformed the other models in terms of accuracy and F1 score, with the translation-based method combined with Arabic BERT (AraBERT) achieving over 93% and 91% in F1 score and accuracy, respectively.
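
The PMI-based lexicon generation described for (Albadi et al. 2019) can be sketched as follows, computing PMI between each term and the hateful class from document frequencies. This is a minimal illustration under stated assumptions: binary labels (1 = religious hate), whitespace tokenization, and skipping terms that never occur in hateful tweets are choices made here, and the chi-square and BNS variants are not shown.

```python
import math


def pmi_hate_lexicon(tweets, labels):
    """Score terms by PMI with the hateful class using document frequencies:
    PMI(w, hate) = log2(P(w, hate) / (P(w) * P(hate))).
    Assumption: labels[i] is 1 for a hateful tweet and 0 otherwise."""
    n = len(tweets)
    p_hate = sum(labels) / n
    df, df_hate = {}, {}
    for text, label in zip(tweets, labels):
        for w in set(text.split()):  # count each term once per tweet
            df[w] = df.get(w, 0) + 1
            if label == 1:
                df_hate[w] = df_hate.get(w, 0) + 1
    lexicon = {}
    for w, count in df.items():
        joint = df_hate.get(w, 0)
        if joint == 0:
            continue  # PMI would be -infinity; skip terms never seen in hate
        lexicon[w] = math.log2((joint / n) / ((count / n) * p_hate))
    # Highest scores first: terms most associated with hateful tweets.
    return dict(sorted(lexicon.items(), key=lambda kv: kv[1], reverse=True))


lexicon = pmi_hate_lexicon(["x slur", "slur y", "x z", "y z"], [1, 1, 0, 0])
```

A positive score means the term co-occurs with hateful tweets more often than chance would predict; a score of 0 means no association.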

The study (Alsafari et al. 2020a, b) examined the detection of hate and offensive speech on Arabic social media platforms. The authors highlighted the need to detect such content because of its negative effects, noting that the complexity of the language and the lack of resources make this a distinct challenge. They used several algorithms and methods, including SVM, naive Bayes, logistic regression, deep neural networks, and various feature extraction methods. They created an Arabic hate/offensive corpus of 5,340 manually annotated tweets. The results showed that SVM outperformed the other traditional machine learning models, while the CNN + mBERT model performed best across all prediction tasks. Additionally, word embeddings were effective with deep learning models but less effective with traditional machine learning models.

Another study (Alzubi 2022) proposed an approach to detecting hate speech on social media platforms, a critical social issue with severe consequences. The approach is designed specifically for Arabic, which has a complex structure and relies heavily on context. The authors used a dataset of 12,698 annotated tweets and focused on offensive speech detection. The approach has three main steps: data augmentation, preprocessing, and passing the data through an ensemble. The ensemble includes AraBERTv0.2-Twitter-large, Mazajak pre-trained embeddings, character + word-level n-gram TF-IDF embeddings, MUSE, and an emoji score. The results showed that AraBERT outperformed all other models, with a macro-F1 of 0.85.
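
The character- and word-level n-gram features listed in this ensemble can be illustrated with a small extraction sketch; the resulting n-grams would then be weighted with TF-IDF. The n-gram ranges, the space padding, and the `w:`/`c:` prefixes are assumptions chosen for illustration, not settings reported by the study.

```python
def word_ngrams(text, n_values=(1, 2)):
    tokens = text.split()
    return [" ".join(tokens[i:i + n])
            for n in n_values
            for i in range(len(tokens) - n + 1)]


def char_ngrams(text, n_values=(2, 3)):
    grams = []
    for word in text.split():
        # Pad each word with spaces so that n-grams also mark word
        # boundaries (prefixes and suffixes), similar to scikit-learn's
        # "char_wb" analyzer.
        padded = f" {word} "
        for n in n_values:
            grams.extend(padded[i:i + n] for i in range(len(padded) - n + 1))
    return grams


def combined_features(text):
    # Prefix the two feature families so they never collide.
    return (["w:" + g for g in word_ngrams(text)]
            + ["c:" + g for g in char_ngrams(text)])
```

Character n-grams can match a word even when it carries a different prefix or suffix, which is one reason they are often paired with word n-grams for morphologically rich text.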

In (Mostafa 2022), the GOF (gradient over-fitting) team, participating in the Arabic hate speech detection shared task at the 5th Workshop on Open-Source Arabic Corpora and Processing Tools, aimed to improve the performance of detection models on imbalanced Arabic text. They used a dataset of 13,000 Arabic tweets, of which 35% are labelled offensive and 11% hate speech. The team experimented with five different loss functions, including weighted cross-entropy, focal loss, and Tversky loss, and used pre-trained models such as QARiB, MARBERT, and AraBERT. The team also proposed a deep learning ensemble approach that achieved superior results, with a macro-F1 score of 87.044.
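
Three of the named loss functions can be written out for binary (for example, offensive vs. not offensive) predictions as a plain-Python sketch. The default hyperparameters (`pos_weight`, `gamma`, `alpha`, `beta`, `smooth`) are common illustrative values, not the GOF team's settings, and sources differ on which Tversky weight applies to false positives.

```python
import math

EPS = 1e-12  # keeps log() finite for probabilities of exactly 0 or 1


def weighted_bce(p, y, pos_weight=1.0):
    """Binary cross-entropy for one example; pos_weight > 1 up-weights the
    positive (minority) class."""
    p = min(max(p, EPS), 1 - EPS)
    return -(pos_weight * y * math.log(p) + (1 - y) * math.log(1 - p))


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Focal loss for one example: the (1 - p_t) ** gamma factor shrinks
    the loss of easy, confidently correct examples."""
    p = min(max(p, EPS), 1 - EPS)
    p_t = p if y == 1 else 1 - p
    alpha_t = alpha if y == 1 else 1 - alpha
    return -alpha_t * (1 - p_t) ** gamma * math.log(p_t)


def tversky_loss(probs, targets, alpha=0.5, beta=0.5, smooth=1.0):
    """1 - Tversky index over a batch of soft predictions. Here alpha
    weights false positives and beta weights false negatives;
    alpha = beta = 0.5 recovers the Dice coefficient (up to smoothing)."""
    tp = sum(p * t for p, t in zip(probs, targets))
    fp = sum(p * (1 - t) for p, t in zip(probs, targets))
    fn = sum((1 - p) * t for p, t in zip(probs, targets))
    return 1 - (tp + smooth) / (tp + alpha * fp + beta * fn + smooth)
```

With `gamma = 0` and `alpha = 0.5`, the focal loss reduces to half the unweighted cross-entropy, which is a quick sanity check; raising `beta` above `alpha` in the Tversky loss penalizes missed offensive tweets more than false alarms.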

The authors in (De Paula 2022) described their approach to the Arabic Hate Speech 2022 Shared Task, which involves detecting offensive language and hate speech in Arabic social media comments. The team used transformer-based models such as AraBERT, AraELECTRA, Albert-Arabic, AraGPT2, mBERT, and XLM-RoBERTa, together with ensemble techniques such as majority vote and highest sum, to improve classification performance. They used the OSACT5 dataset, which contains around 13k tweets, of which only 35% are annotated as offensive and 11% as hate speech, while tweets marked as vulgar and violent account for only 1.5% and 0.7%, respectively. The team obtained strong results in both the offensive language and hate speech detection subtasks: AraBERT achieved the highest macro-F1 scores in Tasks A and C, while the highest-sum ensemble achieved the best results in Task B.
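
The two ensemble rules can be sketched as follows. This assumes that "highest sum" means summing each label's predicted probability across models and choosing the label with the largest total; the label names and probabilities in the example are invented for illustration.

```python
from collections import Counter


def majority_vote(labels):
    """labels: each model's predicted label for one example. On a tie,
    the label encountered first wins (Counter.most_common ordering)."""
    return Counter(labels).most_common(1)[0][0]


def highest_sum(prob_dists):
    """prob_dists: one {label: probability} dict per model for one example.
    Sums each label's probability across models and returns the largest."""
    totals = Counter()
    for dist in prob_dists:
        totals.update(dist)  # adds the probabilities label by label
    return max(totals, key=totals.get)


dists = [
    {"OFF": 0.40, "NOT_OFF": 0.60},
    {"OFF": 0.90, "NOT_OFF": 0.10},
    {"OFF": 0.45, "NOT_OFF": 0.55},
]
votes = [max(d, key=d.get) for d in dists]  # each model's top label
print(majority_vote(votes), highest_sum(dists))  # NOT_OFF OFF
```

In this example the two rules disagree: two of three models narrowly prefer NOT_OFF, but one very confident model pushes the summed score for OFF higher, which is the kind of signal the highest-sum rule preserves and majority voting discards.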

Similarly, (AlKhamissi 2022) presented the Meta AI team's approach to the 2022 Arabic hate speech detection (AHSD) shared task. The approach used multi-task learning (MTL) with a self-correction mechanism to improve the classification of hate speech in Arabic text. The dataset, OSACT5, consists of around 13k tweets, of which 35% are annotated as offensive and only 11% as hate speech. The proposed AraHS model outperformed the QARiB baseline models. Its core model is MARBERTv2, pretrained on 1B multi-dialectal Arabic (DA) tweets, whose output is passed to three task-specific classification heads. The final AraHS model is an ensemble of several trained models, each with different hyperparameters, and self-consistency correction is used to correct errors in one classification head. The results show that the self-consistency correction mechanism makes AraHS more effective at detecting hate speech and offensive language. The authors also conducted a detailed error analysis to identify the strengths and weaknesses of their approach and to provide insights for future improvements.
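
The paragraph does not spell out the self-consistency rules, so the following is only a hypothetical sketch of how three heads (offensive, hate, and hate type) might be reconciled. It assumes that hate speech is a subset of offensive language and that the binary hate head is trusted over the type head; the label names (`NOT_HS`, `HS1`, ...) and the 0.5 threshold are assumptions.

```python
def self_correct(p_off, p_hate, type_probs, threshold=0.5):
    """Reconcile three heads under hypothetical consistency rules.

    p_off, p_hate: probabilities from the binary offensive and hate heads.
    type_probs: {label: probability} from the hate-type head, including
    "NOT_HS" and at least one hate-type label.
    """
    offensive = p_off >= threshold
    hate = p_hate >= threshold
    hate_type = max(type_probs, key=type_probs.get)
    # Rule 1: hate speech is treated as a subset of offensive language.
    if hate:
        offensive = True
    # Rule 2: the binary hate head overrides the type head.
    if hate and hate_type == "NOT_HS":
        hate_type = max((t for t in type_probs if t != "NOT_HS"), key=type_probs.get)
    elif not hate:
        hate_type = "NOT_HS"
    return offensive, hate, hate_type


print(self_correct(0.3, 0.8, {"NOT_HS": 0.6, "HS1": 0.3, "HS2": 0.1}))  # (True, True, 'HS1')
```

A different design would trust whichever head is most confident; the fixed priority used here simply keeps the sketch short.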

The article (Mubarak 2021) analyzed the use of offensive language in Arabic tweets and evaluated how effectively machine learning models identify such language. The authors developed a method for constructing an unbiased dataset and produced the largest Arabic offensive language dataset at the time: 10,000 tweets manually annotated, with special tags for vulgarity and hate speech. They employed various state-of-the-art representations and classifiers, including static and contextualized embeddings, transformer-based and SVM classifiers, and other classification techniques such as AdaBoost and logistic regression. AraBERT was the most successful model, attaining an F1 score of 83.2.

The authors in (Haddad 2020) focused on identifying offensive language in Arabic text using deep neural networks with attention. They used the OffensEval2020 dataset, which contains Arabic tweets labelled as offensive or non-offensive, and applied different methods to balance the dataset and improve model performance. The proposed models, including CNN, Bi-GRU, CNN_ATT, and Bi-GRU_ATT, were tested alongside baseline machine learning classifiers. The attention-based models performed better, with Bi-GRU_ATT achieving the highest F1 scores: 0.859 for offensive language detection and 0.75 for hate speech detection.
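
The attention mechanism in models such as Bi-GRU_ATT can be illustrated by attention pooling over the recurrent outputs. This minimal sketch uses a simplified dot-product score between each hidden state and a context vector; many attention layers instead score with a small feed-forward layer, and the vectors here stand in for learned parameters, so this is not necessarily the architecture the authors used.

```python
import math


def attention_pool(hidden_states, context):
    """Pool a sequence of hidden states (e.g. Bi-GRU outputs, one vector per
    token) into one sentence vector, weighting each step by the softmax of
    its dot product with a context vector. Returns (pooled, weights)."""
    scores = [sum(h_i * u_i for h_i, u_i in zip(h, context)) for h in hidden_states]
    top = max(scores)  # subtract the max before exp for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(hidden_states[0])
    pooled = [sum(w * h[d] for w, h in zip(weights, hidden_states))
              for d in range(dim)]
    return pooled, weights
```

The weights show which tokens the classifier relied on, which is one reason attention models are popular for offensive language detection: a single insulting word can dominate the sentence representation.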

In (Husain 2020), the SalamNET deep learning model was developed to detect offensive language in Arabic text for SemEval-2020 Task 12. The authors tested various deep learning architectures, along with a baseline logistic regression (LR) model, using Python's scikit-learn and Keras libraries. The dataset was the Arabic OffensEval 2020 dataset, which consists of 10,000 tweets labelled as either offensive or not offensive. The dataset was highly imbalanced, with only 1,900 tweets labelled as offensive. The SalamNET model, based on a bidirectional gated recurrent unit (Bi-GRU), achieved a macro-F1 score of 0.83.
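
Because only 1,900 of the 10,000 tweets are offensive, macro-F1 is more informative than accuracy for this dataset. The sketch below computes it from scratch; the label strings are placeholders.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1, so the minority (offensive) class
    counts as much as the majority class."""
    labels = sorted(set(y_true) | set(y_pred))
    f1s = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1s.append(2 * prec * rec / (prec + rec) if prec + rec else 0.0)
    return sum(f1s) / len(f1s)


# 19% offensive, mirroring the 1,900 / 10,000 split; predict "not offensive" always.
y_true = ["OFF"] * 19 + ["NOT_OFF"] * 81
y_pred = ["NOT_OFF"] * 100
print(round(macro_f1(y_true, y_pred), 2))  # 0.45
```

Such a trivial classifier reaches 81% accuracy but a macro-F1 of only about 0.45, because its F1 on the offensive class is zero.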

The study (Husain and Uzuner 2022a, b) examined how six preprocessing techniques affect the automatic detection of offensive Arabic language. The techniques included different forms of normalization, conversion of selected words to their hypernyms, hashtag segmentation, and cleaning. The study used various traditional and ensemble machine learning classifiers as well as artificial neural network classifiers. It analyzed two manually annotated datasets: a highly imbalanced multi-dialectal dataset and a dataset focused on the Levantine dialect. The effect of preprocessing varied significantly across classifiers, with AraBERT achieving the best results.
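
Several of the preprocessing steps mentioned (normalization, hashtag segmentation, and cleaning) can be sketched with Python's `re` module. The specific rules below (removing diacritics and tatweel, unifying alef variants, mapping alef maqsura to yeh and teh marbuta to heh, replacing underscores in hashtags, and dropping URLs and mentions) are common choices assumed for illustration; they are not necessarily the six techniques the study evaluated, and hypernym conversion is not shown.

```python
import re

# Arabic diacritics (harakat, U+064B to U+0652) and tatweel (U+0640).
DIACRITICS_AND_TATWEEL = re.compile(r"[\u064B-\u0652\u0640]")


def normalize_arabic(text):
    text = DIACRITICS_AND_TATWEEL.sub("", text)
    text = re.sub(r"[\u0622\u0623\u0625]", "\u0627", text)  # آ أ إ -> ا
    text = text.replace("\u0649", "\u064A")  # alef maqsura ى -> yeh ي
    text = text.replace("\u0629", "\u0647")  # teh marbuta ة -> heh ه
    return text


def segment_hashtags(text):
    # "#word1_word2" -> "word1 word2". Hashtags without underscores would
    # need a dictionary-based segmenter, which is not shown here.
    return re.sub(r"#(\S+)", lambda m: m.group(1).replace("_", " "), text)


def clean(text):
    text = re.sub(r"https?://\S+|@\w+", " ", text)  # drop URLs and mentions
    return re.sub(r"\s+", " ", text).strip()


def preprocess(text):
    return normalize_arabic(segment_hashtags(clean(text)))
```

Because the study found that preprocessing effects vary by classifier, each of these functions is kept separate so that it can be switched on or off in experiments.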

The authors in (Alshalan and Al-Khalifa 2020) presented a novel approach for automatic hate speech detection in the Saudi Twittersphere using deep learning techniques. They discussed the negative impact of hate speech in the Arab world and the challenges of detecting it, given the complexity of the Arabic language and the lack of labelled datasets. They evaluated four deep learning models trained on a large Arabic Twitter dataset collected from Saudi Arabia. To test their models, they developed a dataset of 9,316 tweets, classified as hateful, abusive, or normal, that covers different types of hate speech. After several preprocessing steps, the models were evaluated on binary classification using different metrics. CNN outperformed the other models, with an F1-score of 0.79 and an AUROC of 0.89.
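
Alongside F1, this study reports AUROC. The following sketch shows what that metric measures for binary labels, computing it as the fraction of (positive, negative) pairs that a model ranks correctly; it is quadratic in the number of examples and meant only for illustration.

```python
def auroc(y_true, scores):
    """Probability that a randomly chosen positive (hateful) example is
    scored above a randomly chosen negative one; ties count as one half.
    Equivalent to the Mann-Whitney U statistic divided by n_pos * n_neg.
    The double loop is O(n_pos * n_neg), fine for illustration only."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(auroc([1, 0, 1, 0], [0.9, 0.8, 0.4, 0.1]))  # 0.75
```

Unlike F1, AUROC does not depend on a decision threshold, so it measures how well a model ranks hateful tweets above normal ones.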

Another study (Boulouard et al. 2022a, b) used transfer learning to detect hateful and offensive speech in Arabic social media. The authors emphasized the negative consequences of hate speech for individuals and communities and compared the performance of different BERT-based models trained on a dataset of Arabic social media comments collected from YouTube. After removing entries with missing values, the dataset contained 11,268 YouTube comments, 42% hateful and 58% non-hateful. They used a pre-trained language model (BERT) to extract features from the text, including features related to sentiment and emotion, and a binary classification model to determine whether a given message is hateful. The authors evaluated the BERT-based models using precision, recall, and F1 score, and found that BERT-EN achieved an accuracy of 98%.

Furthermore, the authors in (Anezi 2022) addressed hate speech on social media and the need for effective detection mechanisms. The study used a new dataset of 4,203 Arabic comments from various sources, manually labelled into different categories. The authors conducted experiments with a deep recurrent neural network model (DRNN-2), along with a second model (DRNN-1) for binary classification. The models were evaluated using different performance metrics and compared with traditional ML classifiers. The authors argue that their approach contributes to hate speech detection research and could be applied to combating hate speech on social media platforms.

In another study (Guellil et al. 2020), the authors developed a supervised learning approach for detecting hate speech against politicians in Arabic social media. Two datasets, one unbalanced and one balanced, were constructed from YouTube comments and manually annotated. The authors applied various preprocessing techniques and experimented with different feature extraction methods, including bag-of-words, word embeddings, and character n-grams. They evaluated classical algorithms (GNB, LR, RF, SGD Classifier, and LSVC) and deep learning models (CNN, MLP, LSTM, and Bi-LSTM). LSVC, Bi-LSTM, and MLP performed best, reaching an accuracy of up to 91% when combined with the Skip-Gram (SG) word embedding model.
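
Assuming that SG refers to the Skip-Gram variant of word2vec, the sketch below shows how Skip-Gram forms its training pairs, with each word used to predict its neighbours within a fixed window. The window size is an assumption, and a full trainer (embedding matrices, negative sampling) is omitted.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs: every token is paired with each
    neighbour at most `window` positions away, as in Skip-Gram training."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        pairs.extend((center, tokens[j]) for j in range(lo, hi) if j != i)
    return pairs


print(skipgram_pairs(["a", "b", "c"], window=1))
# [('a', 'b'), ('b', 'a'), ('b', 'c'), ('c', 'b')]
```

Training on such pairs places words that share contexts close together in the embedding space, which lets a classifier generalize across dialectal spelling variants it has seen used in similar contexts.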