Abstract

Ecological science and management often require animal population abundance estimates to determine population status, set harvest limits on exploited populations, assess biodiversity, and evaluate the effects of management actions. However, sampling can harm animal populations. Motivated by trawl sampling of an endangered fish, we present a sequential adaptive sampling design focused on making population-level inferences while limiting harm to the target population. The design incorporates stopping rules such that multiple samples are collected at a site until one or more individuals from the target population are captured, conditional on the number of samples falling within a predetermined range. With this application in mind, we pair the stopping rules sampling design with a density model from which to base abundance indices. We use theoretical analyses and simulations to evaluate inference of population parameters and reduction in catch under the stopping rule sampling design compared to fixed sampling designs. Density point estimates based on stopping rules could theoretically be biased high, but simulations indicated that the stopping rules did not induce noticeable bias in practice. Retrospective analysis of the case study indicated that the stopping rules reduced catch by 60% compared to a fixed sampling design with maximum possible effort.Supplementary materials accompanying this paper appear online.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Abundance estimates are important population metrics for ecological science, being integral for population ecology (Krebs 1999) and modeling (Newman et al. 2014), species status assessments (Smith et al. 2018), and conservation and management (Morris and Doak 2002). Abundance estimates, or indices of abundance, are often based on data collected through a statistical sampling procedure, including, for example, stratified random sampling of areas or volumes (Hankin et al. 2019), capture–recapture methods (McCrea and Morgan 2014), distance sampling (Buckland et al. 2015), and presence–absence sampling (MacKenzie et al. 2017). For a species that is at risk and increasingly rare, there can be pressure to increase monitoring in order to optimize management decisions, especially if the species has not been observed for some time (Chadès et al. 2008).

In ecology, it is recognized that many if not most species have spatial distributions leading to rarity at different scales (recently reviewed at the intersection of conservation, monitoring, and modeling by Jeliazkov et al. (2022)). This has led to sampling designs to address populations that are clustered in space, such as adaptive cluster sampling (Thompson 1990) which increases sampling in areas where the target species is encountered, and analysis using statistical models capable of addressing zero inflation (Cunningham and Lindenmayer 2005).

However, when the species is also at risk and ongoing monitoring further harms the individuals, referred to as “take” in the context of biological opinions (USFWS 2006), it is important to consider whether sampling may negatively impact the population (McGowan and Ryan 2009; Gezon et al. 2015; Hope et al. 2018). While statistical sampling theory generally indicates that more samples are better than fewer samples for population inference, when take must be considered or the cost of sampling is consequentially high in some other way, then a sampling design limiting overall effort may be of interest.

To address these challenges as they relate to threatened populations, we describe an adaptive sampling design with the objective of generating an index of population size, which necessarily involves frequent sampling with good spatial coverage due to rarity and patchiness, while limiting take. Achieving this objective leads to a tension between a need for multiple samples at each site to reduce negative bias by incorrectly concluding there are no individuals at a site (and which may also allow quantification of imperfect detection), and a desire to cap effort and minimize take. In the proposed design, referred to as a stopping rule sampling design, multiple samples are collected at a sampling site until one or more individuals from the target population are successfully sampled, conditional on the number of samples falling within a predetermined range. Because sampling at a site beyond the minimum stops after the species is found, the sampling design limits the handling of target species and reduces field time by minimizing the number of samples taken at a site once the species has been found. Bounding sample effort at the site level is important for achieving a sufficient number of sites across a species’ range for making robust population-level inference. At the same time, collecting multiple samples per site increases the amount of data available for modeling variability in observed counts, though in practice the effects of increasing total sample size in this way may be affected by pseudo-replication at the site level. This design is in contrast to more commonly used adaptive sampling designs in environmental and ecological sampling where samples are added in an area around a site after a nonzero detection (Thompson 2003).

The stopping rule sampling design described here was motivated by the need to monitor delta smelt (Hypomesus transpacificus), a finger-length fish that has experienced severe population declines and is susceptible to harm during sampling, but remains a high priority for active monitoring (Sect. 2). With this application in mind, we present a density model from which to base abundance indices (Sect. 3). Potential effects of the stopping rules on inference about density and comparisons of the performance of the stopping rule sampling design are examined in general using both theoretical analyses and simulation experiments (Sect. 4). We derive delta smelt abundance indices and use a simulation study to estimate the reduction in their take based on stopping rule sampling (Sect. 5). We close with a discussion that includes comparing our approach to other adaptive designs along with some limitations and future research needs (Sect. 6).

2 Background on the Motivating Case Study

Delta smelt are endemic to the San Francisco Estuary (SFE) in California, USA. Human activity has substantially altered the SFE (Nichols et al. 1986; Whipple et al. 2012), resulting in reduced habitat quality for native fishes. These changes have coincided with the long-term decline of delta smelt (Sommer et al. 2007; Moyle et al. 2016), which are included in both state (CFGC 2009) and federal (USFWS 1993) government endangered species lists. There is ongoing interest in contemporary determinants of the distribution and abundance of the delta smelt population, despite the species becoming increasingly rare.

In December 2016, the Enhanced Delta Smelt Monitoring (EDSM) program was initiated by the U.S. Fish and Wildlife Service to provide near real-time and year-round information on the population distribution and abundance of delta smelt through trawl sampling. However, collection and handling of delta smelt can result in harmful levels of stress and injury (Swanson et al. 1996), leading to a conflict between two goals, continued population monitoring and minimizing take. Furthermore, because of their small size compared to the volume of potential habitat, and varying density at multiple spatial scales (Polansky et al. 2018), there is an additional conflict between the need for (1) multiple samples per site while also (2) visiting enough sites to enable population-level inference. EDSM attempts to achieve both of these goals while minimizing take by employing stopping rules.

3 Methods

3.1 Sampling Design

Suppose sampling is to take place at a set of n sites and that at each site k = 1, \(\ldots \), n a set of \(Q_{k}\) independent samples is to be collected. We assume the number of individuals of the target species observed or captured in sample i, denoted \(Y_{k,i}\), is recorded along with a measure of sampling effort, denoted \(v_{k,i}\). This measure of effort is needed because the sample unit is not precisely definable. Under the stopping rule sampling design, the number of samples collected at a site is constrained to fall within a predetermined range denoted \(q_{\min }\) to \(q_{\max }\). Sampling stops after \(q_{\min }\) samples if any of the first \(q_{\min }\) samples results in a positive count of the target species. Otherwise, sampling proceeds until a positive count is observed, after which sampling at the site stops, or until \(q_{\max }\) samples have been taken.

The number of samples collected at a given site k, \(Q_{k}\), is a random variable taking on integer values from \(q_{\min }\) to \(q_{\max }\). Its value depends on which of the following three sets of conditions occur.

\(Q_{k}\)’s probability distribution is thus determined by \(q_{\min }\), \(q_{\max }\), and the probability of observing a zero count in a given sample, \(\text {P}(Y_{k,i}=0)\) (Supplemental Information (SI) Section S1.1). When sampling effort is constant, \(Q_{k}\) can be interpreted as following a doubly truncated non-homogeneous geometric distribution with lower truncation at \(q_{\min }\), upper truncation at \(q_{\max }\), and success defined as observing a positive count.

At each site, the expected total number of samples \(\text {E}(Q_{k})\) and the expected total count \(\text {E}(Y_{k,\cdot })\) are

respectively (SI Sections S1.2 and S1.3). These equations can be used in conjunction with preliminary estimates of parameters defining the distribution of \(Y_{k,i}\) to inform decisions about setting survey design parameters and anticipate take. For example, if \(r_1\) is the average travel time between sites and \(r_2\) is the average time required to collect a single sample, then values of \(q_{\min }\), \(q_{\max }\), and n can be found that constrain (1) total sample time, \(T=r_1 (n-1) + r_2 \sum _{k=1}^{n} Q_k\), and (2) total catch across sites, \(C=\sum _{k=1}^{n} Y_{k, \cdot }\). The corresponding expected values are \(\text {E}(T)=r_1 (n-1) + r_2 n \text {E}(Q_k)\) and \(\text {E}(C)=n \text {E}(Y_{k, \cdot })\).

A density model given stopping rule data is developed next in Sect. 3.2, but here we note that the stopping rules do not influence the probability that a given count will be observed during a given sample. The joint distribution for all random variables associated with site k can therefore be written as the product of the count probabilities (SI Section S1.4): \(\text {P}(Y_{k,1},\ldots ,Y_{k,Q_k},Q_k) = \displaystyle \prod _{i=1}^{Q_k} \text {P}(Y_{k,i})\).

Because stopping depends only on the observed counts and not the parameters to be estimated, the stopping rule is “non-informative” for likelihood inference (Roberts 1967) and the likelihood is the same with regard to model parameters whether the stopping rules are followed or broken (SI Section S1.5; see Roberts (1967) for examples of informative stopping rules). We also note that the non-informative design with regard to the likelihood does not mean that parameter estimates will be unbiased given data collected under a stopping rule sampling design (Sect. 4).

3.2 Density Model

A goal of the stopping rule sampling design is to provide data for fitting models to estimate population densities, abundance indices, or other similar population metrics. Here we present a generalized linear mixed model (GLMM) for estimating relative density using count data collected with the stopping rule sampling design and demonstrate how the fitted model can be used to calculate indices of abundance.

Suppose sampling takes place over \(t=1, \ldots , T\) time periods in \(h=1, \ldots , H\) spatial strata with \(k=1, \ldots , n_{t,h}\) sites in a given time period and stratum. We consider the general model

where \(y_{t,h,k,i}\) is the count from the \(i^{\text {th}}\) sample at site k in stratum h and time period t, and \(\text {D}\) is a probability distribution with expected count \(\mu _{t,h,k,i}\) and optional dispersion parameter \(\theta _{t,h,k,i}\). The expected count is a log-linear combination of (1) time- and stratum-specific intercepts \(\beta _{0,t,h}\), (2) a site- and sample-specific covariate matrix \({\varvec{x}}_{t,h,k,i}\) multiplied by coefficient vector \(\varvec{\beta }\), (3) a normally distributed site random effect (RE) \(\alpha _{t,h,k}\) with standard deviation \(\sigma _{\alpha }\), and (4) sampling effort offset, \(\text {log}\left( v_{t,h,k,i}\right) \). The intercepts reflect large-scale spatiotemporal changes in density (number of organisms per unit of sampling effort) while site random effects account for variability between sites as well as correlation between samples at a site. The covariates reflect finer-scale patterns in availability or detection or both. We assume that a new set of sites is sampled within each time period and stratum (e.g., subscripts t, h, and k are needed to denote a unique site), though the site effect could be simplified. For example, if the same set of sites was visited over time, the site effect could be time invariant, \(\alpha _{h,k} \sim \text {N} \left( 0, \sigma _{\alpha } \right) \).

We consider models where the distribution \(\text {D}\) is either Poisson, in which case \(\theta _{t,h,k,i}\) is not needed, or negative binomial with variance \(\text {Var}(Y_{t,h,k,i}) = \mu _{t,h,k,i} + \mu _{t,h,k,i}^2/\theta _{t,h,k,i}\). The dispersion parameter \(\theta _{t,h,k,i}\) controls the level of aggregation of organisms with smaller values corresponding to increased aggregation (Stoklosa et al. 2022). We treat the log of \(\theta _{t,h,k,i}\) as a linear combination of covariates \({\varvec{w}}_{t,h,k,i}\) with coefficients \(\varvec{\gamma }\) to allow the level of aggregation to change, for example with changes in habitat quality.

One alternative to the GLMM approach is N-mixture models, which separate out patterns in abundance and detection using replicate samples per site (Royle 2004). Such models require, among other things, that replicates are unambiguous re-samples of a closed population at the site, and it is generally recognized that many empirical data sets likely violate this closure assumption (Barker et al. 2018; Goldstein and de Valpine 2022). We expect our motivating case study, which involves sampling of a mobile organism with trawls in a highly dynamic habitat, to fall into this category. The exact boundaries of a site and the amount of overlap in habitat sampled between samples (tows of the trawl) at a site are not easily definable, and the degree to which the movement of fish and water may violate the closure assumption at a site is generally unknown. For this reason, we keep our focus on GLMMs, acknowledging that this class of models produces estimates of relative density or abundance rather than absolute abundance (Dénes et al. 2015; Barker et al. 2018).

Estimates of relative abundance can be calculated using parameter estimates from a fitted model if sampling effort \(v_{t,h,k,i}\) represents a measure of habitat quantity such as water volume sampled for aquatic species or area sampled for terrestrial species, and estimates of total habitat quantity, \(V_{t,h}\), are available. Let \({\hat{\delta }}_{t,h}=\exp ( {\hat{\beta }}_{0,t,h} + \bar{{\varvec{x}}}_{t,h}^T \hat{\varvec{\beta }})\) be the estimated density given average covariate values \(\bar{{\varvec{x}}}_{t,h}\). Then a relative abundance index \({\hat{I}}_{t,h}\) is given by \({\hat{I}}_{t,h}={\hat{\delta }}_{t,h} V_{t,h}\). Standard error estimates \(\widehat{\text {SE}}( {\hat{I}}_{t,h})\) can be calculated with model parameter standard errors using the delta method (Rao 1973). Assuming independence between strata, a total index and its variance for a given time period are given by the sum of stratum-specific estimates: \({\hat{I}}_{t}=\sum _{h=1}^{H} {\hat{I}}_{t,h}\) and \(\widehat{\text {Var}}({\hat{I}}_{t})=\sum _{h=1}^{H} \widehat{\text {Var}}({\hat{I}}_{t,h})\). Further assuming that the sampling distribution for the total estimate \({\hat{I}}_{t}\) is approximately lognormally distributed with log-scale mean \(\mu = \log ( {\hat{I}} / \sqrt{ 1 + ( \widehat{\text {Var}} ({\hat{I}}) / {\hat{I}}^2 ) }\) and log-scale standard deviation \(\sigma = \sqrt{ \log ( 1 + \widehat{\text {Var}} ({\hat{I}}) / {\hat{I}}^2 ) }\), a confidence interval (CI) for \({\hat{I}}\) can be constructed using the quantiles from this lognormal distribution.

3.3 Model Fitting

A range of tools are available for fitting GLMMs (Bolker et al. 2009). All analyses presented in this paper were carried out in R (R Core Team 2023), and models were fit within a frequentist framework using the glmmTMB package (Brooks et al. 2017), with covariates standardized to have mean zero and standard deviation one. The DHARMa package (Hartig 2022) was used for residual analyses in the case study (Sect. 5).

4 Investigation of Parameter Estimation Under the Stopping Rules

The use of stopping rules to collect count data may affect inferences based on the data. Brown and Manly (1998), for example, discuss bias in the context of adaptive cluster sampling with a different stopping rule than the one considered here. We used a combination of analytic and simulation approaches to investigate properties of model parameter estimates given data collected according to the stopping rule sampling design.

4.1 Investigation 1: Poisson Count Distribution

4.1.1 Methods

Here we consider a highly simplified scenario in which there is a single time period and stratum, sites share a constant mean density of organisms (\(\delta \)), sampling effort (v) is constant, and counts follow a Poisson distribution. Dropping non-varying subscripts, the model can be written as \(y_{k,i} \sim \text {Poisson}(\delta v)\) for all samples at all n sites. We analytically derived the maximum likelihood estimate (MLE) of density, \({\hat{\delta }}\), and an approximation of the theoretical bias, \(\text {Bias}\big ({\hat{\delta }}\big ) = \text {E}({\hat{\delta }}) - \delta \), based on a Taylor series expansion of \({\hat{\delta }}\) (SI Section S2.1). We investigated how bias in \({\hat{\delta }}\) changes as a function of \(q_{\min }\), \(q_{\max }\), and the number of sites n. For this, we used \(v=1\) and let true density \(\delta \) range from 0.001 to 4, allowing the probability of observing a zero to vary from 0.018 to 0.999. We set \(q_{\min }\) = 2, 4, 6, or 8, \(q_{\max }\) = 4, 6, 8, or 10, and n = 2 or 4. The ranges of values considered for \(q_{\min }\) and \(q_{\max }\) are generally based on the case study where \(q_{\min }\) has consistently been 2 and \(q_{\max }\) has ranged from 4 to 10 (Sect. 5); we allowed \(q_{\min }\) to be larger than 2 for investigative purposes. For each combination of inputs, we used the bias approximation to calculate percent relative bias as \(100 \times \text {Bias}\big ({\hat{\delta }}\big )/\delta \) and graphically summarized the results.

4.1.2 Results

An approximation of the theoretical bias in the density MLE is given by

The theoretical bias approximation is positive and decreases as total sample effort is increased by increasing n, increasing v, or increasing \(q_{\min }\) while \(q_{\max }\) is held constant (Fig. 1a). We note that with a fixed number of samples per site, i.e., with no stopping rules, the estimate \({\hat{\delta }}\) would be unbiased (SI Section S2.1).

The bias may be associated with the stopping rules that allow data collectors to “quit while ahead,” i.e., stop sampling after the first positive catch after sample \(q_{\min }\) and before sample \(q_{\max }\). This is supported by the observation that relative bias generally increases as density decreases (Fig. 1): In the lower density range, the stopping rules are more likely be invoked after sample \(q_{\min }\) while at larger densities the stopping rule sampling design appears more like a fixed sampling design with the number of samples fixed at \(q_{\min }\). Furthermore, the bias may depend on the difference between \(q_{\min }\) and \(q_{\max }\), with larger differences allowing the stopping rules to have more of an effect on bias and smaller differences again bringing the stopping rule sampling design closer to a fixed sampling design. This is supported by the finding that at lower densities, increasing the difference between \(q_{\min }\) and \(q_{\max }\) by holding \(q_{\min }=2\) constant and increasing \(q_{\max }\) could result in increased relative bias (Fig. 1b).

Relative bias ranged from 0 to 27.1% across the complete sets of inputs considered here. For an example of the magnitude of bias, when \(\delta \) = 0.5 with \(q_{\min }\) = 2, \(q_{\max }\) = 6, n = 2, the expected value of \({\hat{\delta }}\) is \(\text {E}({\hat{\delta }})\) = 0.575, a 15% positive bias. A bias-adjusted estimate of density could theoretically be calculated as \({\hat{\delta }}_{\text {adj}} = {\hat{\delta }} - \text {Bias}({\hat{\delta }})\). However, we note that the expression for \(\text {Bias}({\hat{\delta }})\) depends on the maximum possible number of samples, \(q_{\max }\), and all associated sample volumes. In practice, it is likely that not all \(q_{\max }\) samples will be realized and sample effort will vary, making application of the bias correction factor unrealistic.

4.2 Investigation 2: Negative Binomial Count Distribution

4.2.1 Methods

Here we consider a negative binomial model (Eq. 4) with site- and sample-specific covariates, random site effect, and constant dispersion parameter. We simulated data sets that applied the stopping rules as well as three alternative sampling designs and fit the model to these data sets. Our goals were twofold: to compare parameter estimates based on the different sampling designs, looking for bias in estimates based on the stopping rules, and to investigate trade-offs between the number of organisms caught and parameter estimate quality.

Percent relative bias in density estimates (\({\hat{\delta }}\)) based on stopping rule data as described in Investigation 1 in Sect. 4.1. Panel a reflects the effects of fixing the maximum number of samples (\(q_{\max }\)) and varying the number of sites (n) and the minimum number of samples (\(q_{\min }\)). Panel b reflects the effects of fixing \(q_{\min }\) and varying n and \(q_{\max }\). Quantities indicated in sub-panel titles are held constant at the indicated values

We selected \(T=10\) time periods, \(H=1\) stratum, \(n=40\) new sites per time period and set \(q_{\min }=2\) and \(q_{\max }=10\). The “true” parameter values used for data generation were \(\beta _{0,t}=\log (0.0002 \exp (-0.07(t-1))\), \(\beta _1=0.4\) for a site-specific covariate coefficient, \(\beta _2=0.2\) for a sample-specific covariate coefficient, \(\sigma _{\alpha }=0.8\), and \(\theta =0.4\). Values of sampling effort, \(v_{t,k,i}\), were drawn from a gamma distribution with shape parameters 11.86 and expected value 3100, and were similar to values from the case study (Sect. 5). Site- and sample-specific covariate values were generated from a Uniform (\(-\)1,1) distribution. Inputs were chosen so counts were relatively low with high variability as this is a scenario where the stopping rule sampling design is likely to be relevant. In time period \(t=1\), for example, the expected count in a sample was approximately 0.46 with coefficient of variation 2.16 and probability of observing a zero count equal to 0.74.

Data sets were generated according to the following process. We first generated a single data set according to Eq. 4 with 10 samples per site, designating this the “max” effort data set because sample size is fixed at the value \(q_{\max }\). We then created three additional versions of the data, starting with the max data set each time. First, a “min” effort data set was created by retaining only the first \(q_{\min }=2\) samples from each site. Next, a “stopping rule” data set was created by applying the stopping rules. Finally, a “randomized” data set was created by taking the numbers of samples that would be retained under the stopping rules, randomly reassigning those numbers across sites, and then retaining the assigned number of samples from each site. The max, min, and randomized data sets represent sampling designs where there is no potential effect of stopping rules on model inference and no effort is made to limit catch. The randomized data set keeps the total number of samples equal to that of the stopping rules data set so that potential differences in parameter estimates are less affected by differences in sample size. This complete data generation process was carried out 10,000 times.

We fit the model to each of the 40,000 data sets using restricted maximum likelihood (REML). Although the true log intercepts \(\beta _{0,t}\) were related in time through an exponential function for convenience, we note that this time dependence was not reflected in the model (Eq. 4). We checked to ensure that all models converged.

For each sample design, parameter estimates were summarized using the mean, 95th (2.5\(-\)97.5) quantile range, and mean squared error. Additionally, total catch in each data set (total number of organisms caught across time, sites, and samples) was summarized using the mean and 95th quantile range. To determine how well the proposed lognormal-based CI performed, we calculated abundance indices and CIs from the fitted models using habitat volume \(V_t=\)10,000 for all t, and determined the proportion of times the CI contained the value \(I_{t}=\exp (\beta _{0,t}) V_{t}\).

4.2.2 Results

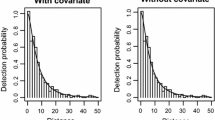

The average number of samples collected under the stopping rule design, across time periods, ranged from 2 to 10 with mean 4.2. Accuracy and precision of the parameter estimates generally improved as the total number of samples increased across sampling designs (Figs. 2a, b and 3; SI Section S2.2), consistent with expected patterns. The stopping rule sampling design fit within this pattern and did not show systematic bias in parameter estimates or unusually high uncertainty compared to the min and randomized designs. All four sampling designs generally estimated the coefficient parameters \(\beta _1\) and \(\beta _2\) comparably. The parameters \(\sigma _{\alpha }\) and \(\theta \) were more likely to be underestimated than overestimated regardless of sampling design, with the bias worst with \(q_{\min }\). Increasing the number of samples beyond \(q_{\min }\) through application of the stopping rules was particularly beneficial in estimating \(\sigma _{\alpha }\) and \(\theta \) and reducing bias in estimates of \(\beta _{0,t}\) compared to the min sampling design. Overall, the stopping rule and randomized designs performed equally, though the extra catch allowed under the randomized design resulted in a marginal decrease in uncertainty in estimates of \(\beta _{2}\) and \(\theta \).

The stopping rule sampling design reduced average total catch by 22.8% and 67.9% relative to the randomized and max sampling designs, respectively, and increased average total catch by 60.3% relative to the min sampling design (Fig. 2c). The lognormal-based CIs had coverage between 94.1% and 95.7%, consistent with the target coverage of 95% (SI Section S2.2).

Summary (mean and 95th quantile range) of parameter estimates (panels a and b) and total catch (panel c) under the four sampling designs from Investigation 2 in Sect. 4.2. Parameters are covariate effect coefficients \(\beta _1\) and \(\beta _2\), site random effect standard deviation \(\sigma _{\alpha }\), dispersion \(\theta \), and log densities \(\beta _{0,t}\), \(t=1, \ldots , 10\). Sampling design is indicated by order (min, stopping rule, randomized, and max) as well as color and point shape across all sub-panels. In panels a and b, thick horizontal black lines reflect true values

Parameter estimate mean squared errors from Investigation 2 in Sect. 4.2. Parameters are covariate effect coefficients \(\beta _1\) and \(\beta _2\), site random effect standard deviation \(\sigma _{\alpha }\), dispersion \(\theta \), and log densities \(\beta _{0,t}\), \(t=1, \ldots , 10\). Sampling design is indicated by order (min, stopping rule, randomized, and max) as well as color and point shape across all sub-panels

5 Case Study

5.1 Data Collection

The EDSM program uses trawls to sample delta smelt from the beginning of July, when individuals reach the juvenile life stage, through the subsequent March, by which time they have developed into mature adults and the majority of spawning is thought to have occurred. The habitat range has been stratified into between 4 and 10 strata throughout the life of the program (2016 to present, SI Figures S3.1, S3.2) to account for geographically varying habitat types and densities. Sampling occurs weekly in all strata. Within each stratum, sites are defined by a set of coordinates generated using a generalized random tessellation stratified design (Stevens and Olsen 2004). The target number of sites per stratum per week, \(n_{t,h}\), has varied over time but generally ranges from 2 to 6.

A tow of the trawl constitutes a single sample and a flow meter is used to estimate the volume (\(\text {m}^3\)) of water sampled in a tow as a measure of sampling effort. Delta smelt are identified and enumerated after each tow. The stopping rules are applied July–March, a period when individuals are typically large enough to be identified in the field. The value of \(q_{\min }\) has remained at 2 while \(q_{\max }\) has varied between 4 and 10 depending on the year and the availability of sampling resources. Auxiliary data on environmental conditions are also collected with each tow including Secchi depth (m; a measure of water clarity), specific conductance (\(\mu \)S/cm at 25 \(^{\circ }\)C, a measure of water electrical conductivity subsequently referred to as EC), water temperature (\(^{\circ }\)C), and tide stage (ft, see below). See SI Section S3.1 for program history and sampling details.

5.2 Model Fitting, Index Calculations, and Theoretical Take Calculations

We used data collected between December 2016 and March 2021 to fit negative binomial models with a weekly time step, geographic stratification, and site random effect. We included four sample-level environmental covariates (Secchi depth, EC, water temperature, tide) and a categorical variable \(s_t\) representing spawning season (winter, approximately December–March) or non-spawning season (summer/fall, approximately July–November). The full model can be written as

where the uth environmental covariate is represented with the notation \(x_{(u)}\). Catch densities of delta smelt have previously been associated with environmental conditions (Feyrer et al. 2007; Polansky et al. 2018; Hendrix et al. 2023), and we hypothesized that the nature of this association may change during the spawning season. We also hypothesized that the level of aggregation would be higher in winter when spawning occurs.

Because EDSM did not record tide data in the early months of the program, we used hourly mean tide level (ft) at Port Chicago, CA (station ID 9415144; NOAA (2024)) as the tide variable throughout the modeled time period. We joined the two data sets by rounding the start time for a tow to the nearest hour and assigning the corresponding mean tide level to that tow. We excluded weeks with no catch from the model data set. Following the assumption that delta smelt are distributed between 0.5 and 4.5 m depth, we adjusted tow volumes to account for the fraction of sampling carried out within this depth stratum (Polansky et al. 2019). See SI Section S3.2 for further details on data processing.

We relied primarily on an information-theoretic approach based on Akaike information criteria (AIC) to arrive at a final model structure (Mundry and Nunn 2009; Zuur et al. 2009). We first found an optimal random structure (site RE or no RE) given the full fixed effects structure using restricted maximum likelihood estimation (REML), then carried out a backward elimination procedure on the fixed effects using maximum likelihood estimation (ML). Results presented here are based on REML.

We examined relationships between environmental covariates and relative catch to verify that these relationships appeared biologically plausible. For each covariate retained in the selected model, we used parameter estimates \({\hat{\beta }}_{u,1}\) and \({\hat{\beta }}_{u,2}\) to calculate and plot the quantity \(\exp ({\hat{\beta }}_{u,1} \hspace{1pt} x_{(u)} + {\hat{\beta }}_{u,2} \hspace{1pt} x^2_{(u)})\) over the observed range of values of \(x_{(u)}\). This effectively fixes the intercept parameter, random effect, log sample volume, and remaining covariate values at zero to allow inspection of the general relationship between \(\mu \) and \(x_u\). We note that combinations of covariate values used in these calculations do not necessarily represent combinations that were observed in the data.

Using the selected model, we calculated \({\hat{I}}_{t,h}\) and \(\widehat{\text {SE}}({\hat{I}}_{t,h})\) using estimates of the volume of water between 0.5 and 4.5 m depth in each stratum (SI Section S3.3). To investigate the effects of the stopping rules on delta smelt catch, we used the selected model to generate catches in additional tows that would have been conducted if there were no stopping rules and sample size was fixed at \(q_{\max }\). For each additional, hypothetical tow \(i^*\), we used site-level covariate averages (standardized in accordance with the model) and site-level sample volume averages to calculate estimates \({\hat{\mu }}_{t,h,k,i^*}\) and \({\hat{\theta }}_{t,h,k,i^*}\), generated the additional counts from negative binomial distributions using these parameters, and added these catches to the realized total catch of delta smelt to estimate a hypothetical total catch across the study period. We carried out this procedure 10,000 times and summarized the distribution of the resulting total catches by calculating the mean and 95th quantile range.

5.3 Results

A total of 23,168 tows were collected from a total of 5,394 sites over the sampling period used in this analysis. At least one delta smelt was caught in 407 (1.76%) of the samples and at 354 (6.6%) of the sites. A total of 888 individual delta smelt were caught with 29% caught in the first sample at a site, 29.7% in the second sample, 16.2% in the third, 14.5% in the fourth, 6.9% in the fifth, 2.2% in the sixth, 0.5% in the seventh, and 1% in the eighth sample.

The selected model retained the site random effect as well as linear and quadratic terms for Secchi depth, EC, and temperature (Table 1; SI Sections S3.4, S3.5). One of the three residual tests did not pass, indicating possible deviation from the expected distribution (SI Section S3.6). However, all tests passed for the same model refit with ML.

Relative catch density decreased with increasing Secchi depth (increasing water clarity) and increasing EC (increasing salinity; Fig. 4), consistent with patterns found previously (Nobriga et al. 2008; Hendrix et al. 2023). The relative effect of temperature was generally highest between 15 and 20 \(^{\circ }\)C. Lack of support for a tide effect is in contrast to other studies (Feyrer et al. 2013; Bennett and Burau 2015; Polansky et al. 2018), but may be due in part to the lack of spatial resolution in the tide data. The estimated standard deviation for the site random effect was \({\hat{\sigma }}_{\alpha }=1.31\), and the estimated dispersion parameter was \({\hat{\theta }}=1.22\). Estimates of relative density, \(\exp ({\hat{\beta }}_{0,t,h})\), ranged from \(1.65 \times 10^{-6}\) to \(4.87 \times 10^{-2}\), and total indices ranged from 0 to 625,986 with high uncertainties (Fig. 5).

Under the hypothetical scenario in which \(q_{\max }\) samples were collected at every site (without stopping rules), the simulated total catches had mean 2220, 150% higher than the actual number caught, and 95th quantile range 1902 to 2618. Based on the mean, this corresponds to a 60% (1332/2220) decrease in catch under the stopping rule sampling design relative to a design with the maximum number of samples per site.

6 Discussion

Ecological field sampling involves balancing the objective of estimating one or more quantities of interest (to a desired level of accuracy and precision) with the cost of sampling. For rare species, this cost can include harm to individuals in the population of interest. We presented an adaptive sampling design developed to provide data for making population-level inferences while aiming to limit the handling or take of the target population, and illustrated how these data can be used to calculate abundance indices via a GLMM that is fit using readily available software. Through an analytic investigation, we found that maximum likelihood estimates of population density based on stopping rule data have increased positive bias as densities decline. Through a simulation-based investigation intended to more closely reflect real data collection processes, we found that the stopping rule sampling design can improve accuracy and precision of parameter estimates through increased data collection while simultaneously reducing catch without noticeable bias. In practice, what constitutes an acceptable trade-off between take-related costs of sampling and quality of estimates of population parameters will be situation specific.

Relationships between each environmental covariate (a Secchi depth, b EC, c water temperature) and relative catch of delta smelt based on the selected case study model (Sect. 5). In each panel, the indicated covariate is allowed to vary while other terms in the log expected count are fixed at zero. Covariate values shown on the horizontal axes are on their original scales (not standardized)

Delta smelt abundance index time series for 5 cohorts from July through March, the period over which a cohort of delta smelt develop through the juvenile to spawning adult life stages. Circles represent point estimates and vertical lines represent 95% confidence intervals. Point type reflects spatial coverage (complete=all strata were sampled; partial=at least one stratum was not sampled)

An important consideration of the stopping rule sampling design is the trade-off between the number of sites sampled and the number of samples collected per site. The stopping rules can help achieve good spatial balance and coverage by allowing a high number of sites, particularly when positive counts are observed and time saved at one site can be used to visit one or more additional sites. At the same time, collecting multiple samples per site may allow for modeling of imperfect detection when model assumptions are met (Royle 2004) or exploration of alternative density models.

There are both similarities and differences between the adaptive design described here and some others used in ecology. Sequential sampling aims to sample until some decision can be made with some amount of certainty (Krebs 1999). In mark–recapture studies, sequential analysis has been used to determine the number of recaptures needed to estimate population size with a given level of precision (Mukhopadhyay and Bhattacharjee 2018; Silva et al. 2023). Such methodology did not apply to our case study because there were no marked delta smelt in the SFE at the time (though see next paragraph) and the bound on sampling effort for recaptures would likely apply to total sites, not number of samples per site or necessarily with the aim of minimizing take. Removal design, whereby surveying stops at a site on first detection, has been developed within an occupancy modeling framework (Azuma et al. 1990; MacKenzie and Royle 2005) where the goals are to estimate species occurrence and detection probabilities, but not abundance. Adaptive cluster sampling does aim to acquire data for estimating abundance, but increases rather than decreases effort upon detection (Thompson 1990). Adaptive cluster sampling has perhaps received the most attention within ecology (see, e.g., the special issue in Environmental and Ecological Statistics edited by Thompson (2003)). Such research has included adding a stopping rule by fixing a maximum number of adaptive sample iterations (Su and Quinn 2003), different than the condition-based rule described here that aims to minimize positive counts.

To maximize generality and applicability, we used a common but relatively simple GLMM to analyze the data. This model could be extended by adding complexity to the fixed effect structure to include explicit spatial, temporal, or spatiotemporal terms. Extension to the probabilistic structure of the model allowing for zero inflation at the sample level (currently available in the glmmTMB package) or site level are some additional ways for building on the model considered here. For example, if no fish are within the water associated with a given site the density at that site may be considered a structural zero. As noted previously, explicitly modeling imperfect detection might be considered.

The delta smelt case study provides insight into the application of the stopping rule sampling design to a species that embodies the challenge of balancing monitoring and take. The stopping rules likely reduced overall take of delta smelt while providing indices of abundance on a weekly basis, whereas other monitoring programs deploying less effort have by in large ceased to detect any delta smelt at all. In recent years, cultured adult delta smelt marked with tags or fin clips have been released into the SFE as part of experimental efforts to supplement the decreasing wild population (USFWS 2020). In addition to potentially bolstering the population, this opens new avenues for mark–recapture modeling and additional efforts to quantify population size and vital rates. As the research community enters this new phase in delta smelt science and management, we expect the question of how to balance take considerations with monitoring objectives to remain relevant.

When species status assessments indicate a population is at risk, the importance of indices increases to uncover mechanisms driving the decline and evaluate the effectiveness of management actions. As a result, pressure to monitor may also increase, but this can impact the larger objective of protecting the population. Pressure for increased monitoring to assess conservation actions can become particularly intense when actions involve significant cost to humans, as is the case with delta smelt. As more species decline (IUCN 2022), it will become increasingly important to develop and apply methods of reducing the harmful impacts of sampling.

Data Availability Statement

The data that support the findings of the case study are available in the supplementary material of this article. R code supporting the article is also available in the supplementary material.

References

Azuma DL, Baldwin JA Noon BR (1990) Estimating the occupancy of spotted owl habitat areas by sampling and adjusting for bias. Technical Report PSW-124, United States Department of Agriculture, Berkeley

Barker RJ, Schofield MR, Link WA, Sauer JR (2018) On the reliability of N-mixture models for count data. Biometrics 74:369–377

Bennett WA, Burau JR (2015) Riders on the storm: selective tidal movements facilitate the spawning migration of threatened Delta Smelt in the San Francisco Estuary. Estuaries Coasts 38:826–835

Bolker BM, Brooks ME, Clark CJ, Geange SW, Poulsen JR, Stevens MHH, White JSS (2009) Generalized linear mixed models: a practical guide for ecology and evolution. Trends in Ecol Evol 24:127–135

Brooks ME, Kristensen K, van Benthem KJ, Magnusson A, Berg CW, Nielsen A, Skaug HJ, Mächler M, Bolker BM (2017) glmmTMB balances speed and flexibility among packages for zero-inflated generalized linear mixed modeling. The R Journal 9:378–400

Brown JA, Manly BJF (1998) Restricted adaptive cluster sampling. Environ Ecol Stat 5:49–63

Buckland ST, Rexstad EA, Marques TA, Oedekoven CS (2015) Distance sampling: methods and applications, vol 431. Springer

California Fish and Game Commission (CFGC) (2009) Final statement of reasons for regulatory action, Amend Title 14, CCR, Section 670.5, Re: uplisting the delta smelt to endangered species status. Technical report, California Fish and Game Commission, Sacramento

Chadès I, McDonald-Madden E, McCarthy MA, Wintle B, Linkie M, Possingham HP (2008) When to stop managing or surveying cryptic threatened species. Proc Natl Acad Sci 105:13936–13940

Cunningham RB, Lindenmayer DB (2005) Modeling count data of rare species: some statistical issues. Ecology 86:1135–1142

Dénes FV, Silveira LF, Beissinger SR (2015) Estimating abundance of unmarked animal populations: accounting for imperfect detection and other sources of zero inflation. Methods Ecol Evol 6:543–556

Feyrer F, Nobriga ML, Sommer TR (2007) Multidecadal trends for three declining fish species: habitat patterns and mechanisms in the San Francisco Estuary, California, USA. Can J Fish Aquat Sci 64:723–734

Feyrer F, Portz D, Odum D, Newman KB, Sommer T, Contreras D, Baxter R, Slater SB, Sereno D, Nieuwenhuyse EV (2013) SmeltCam: Underwater video codend for trawled nets with an application to the distribution of the imperiled Delta Smelt. PLoS One 8:e67829

Gezon ZJ, Wyman ES, Ascher JS, Inouye DW, Irwin RE (2015) The effect of repeated, lethal sampling on wild bee abundance and diversity. Methods Ecol Evol 6:1044–1054

Goldstein BR, de Valpine P (2022) Comparing N-mixture models and GLMMs for relative abundance estimation in a citizen science dataset. Sci Rep 12

Hankin DG, Mohr MS, Newman KB (2019) Sampling theory: for the ecological and natural resource sciences. Oxford University Press, New York

Hartig F (2022) DHARMa: residual Diagnostics for Hierarchical (multi-level/mixed) regression models. R package version 0.4.6

Hendrix AN, Fleishman E, Zillig MW, Jennings ED (2023) Relations between abiotic and biotic environmental variables and occupancy of Delta Smelt (Hypomesus transpacificus) in autumn. Estuaries Coasts 46

Hope AG, Sandercock BK, Malaney JL (2018) Collection of scientific specimens: benefits for biodiversity sciences and limited impacts on communities of small mammals. BioScience 68:35–42

IUCN (2022) The IUCN red list of threatened species. Version 2022-2. https://www.iucnredlist.org. Accessed 30 Jun 2023

Jeliazkov A, Gavish Y, Marsh CJ, Geschke J, Brummitt N, Rocchini D, Haase P, Kunin WE, Henle K (2022) Sampling and modelling rare species: conceptual guidelines for the neglected majority. Glob Chang Biol 28:3754–3777

Krebs CJ (1999) Ecological methodology, 2nd edn. Addison-Welsey Educational Publishers, Inc., Menlo Park

MacKenzie DI, Nichols JD, Royle JA, Pollock KH, Bailey LL, Hines JE (2017) Occupancy estimation and modeling: inferring patterns and dynamics of species occurrence, 2nd edn. Academic Press, London

MacKenzie DI, Royle JA (2005) Designing occupancy studies: general advice and allocating survey effort. J Appl Ecol 42:1105–1114

McCrea RS, Morgan BJ (2014) Analysis of capture-recapture data. CRC Press

McGowan CP, Ryan MR (2009) A quantitative framework to evaluate incidental take and endangered species population viability. Biol Conserv 142:3128–3136

Morris WF, Doak DF (2002) Quantitative conservation biology: theory and practice of population viability analysis. Sinauer Associates, Sunderland

Moyle PB, Brown LR, Durand JR, Hobbs JA (2016) Delta Smelt: life history and decline of a once-abundant species in the San Francisco Estuary. San Franc Estuary Watershed Sci 14

Mukhopadhyay N, Bhattacharjee D (2018) Sequentially estimating the required optimal observed number of tagged items with bounded risk in the recapture phase under inverse binomial sampling. Seq Anal 37:412–429

Mundry R, Nunn CL (2009) Stepwise model fitting and statistical inference: turning noise into signal pollution. Am Nat 173:119–123

Newman K, Buckland S, Morgan B, King R, Borchers D, Cole D, Besbeas P, Gimenez O, Thomas L (2014) Modelling population dynamics: model formulation, fitting and assessment using state-space methods. Springer, New York

Nichols FH, Cloern JE, Luoma SN, Peterson DH (1986) The modification of an estuary. Science 231:567–573

Oceanic and Atmospheric Administration (NOAA) (2024) Tides and currents. https://tidesandcurrents.noaa.gov/waterlevels.html?id=9415144. Accessed 19 Apr 2024

Nobriga ML, Sommer TR, Feyrer F, Fleming K (2008) Long-term trends in summertime habitat suitability for Delta Smelt, Hypomesus transpacificus. San Franc Estuary Watershed Sci 6

Polansky L, Mitchell L, Newman KB (2019) Using multistage design-based methods to construct abundance indices and uncertainty measures for Delta Smelt. Trans Am Fish Soc 148:710–724

Polansky L, Newman KB, Nobriga ML, Mitchell L (2018) Spatiotemporal models of an estuarine fish species to identify patterns and factors impacting their distribution and abundance. Estuaries Coasts 41:572–581

R Core Team (2023) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna

Rao CR (1973) Linear statistical inference and its applications. Wiley, New York

Roberts HV (1967) Informative stopping rules and inferences about population size. J Am Stat Assoc 62:763–775

Royle JA (2004) N-mixture models for estimating population size from spatially replicated counts. Biometrics 60:108–115

Silva IR, Bhattacharjee D, Zhuang Y (2023) Fixed-length interval estimation of population sizes: sequential adaptive Monte Carlo mark-recapture-mark sampling. Comput Appl Math 42

Smith DR, Allan NL, McGowan CP, Szymanski JA, Oetker SR, Bell HM (2018) Development of a species status assessment process for decisions under the U.S. Endangered Species Act. J Fish Wildl Manag 9:302–320

Sommer T, Armor C, Baxter R, Breuer R, Brown L, Chotkowski M, Culberson S, Feyrer F, Gingras M, Herbold B, Kimmerer W, Mueller-Solger A, Nobriga M, Souza K (2007) The collapse of pelagic fishes in the upper San Francisco Estuary. Fisheries 32:270–277

Stevens DL, Olsen AR (2004) Spatially balanced sampling of natural resources. J Am Stat Assoc 99:262–278

Stoklosa J, Blakey RV, Hui FKC (2022) An overview of modern applications of negative binomial modelling in ecology and biodiversity. Diversity 14:320

Su Z, Quinn TJ II (2003) Estimator bias and efficiency for adaptive cluster sampling with order statistics and a stopping rule. Environ Ecol Stat 10:17–41

Swanson C, Mager RC, Doroshov SI, Cech JJ Jr (1996) Use of salts, anesthetics, and polymers to minimize handling and transport mortality in delta smelt. Trans Am Fish Soc 125:326–329

Thompson SK (1990) Adaptive cluster sampling. J Am Stat Assoc 85:1050–1059

Thompson SK (2003) Editorial: special issue on adaptive sampling. Environ Ecol Stat 10:5–6

U.S. Fish and Wildlife Service (USFWS) (1993) Endangered and threatened wildlife and plants; determination of threatened status for the Delta Smelt. Fed Registrar 58:12854–12864

U.S. Fish and Wildlife Service (USFWS) (2006) Endangered Species Act of 1973. As Amended through the 108th congress. Department of the Interior, U.S. Fish and Wildlife Service, Washington

U.S. Fish and Wildlife Service (USFWS) (2020) Delta Smelt supplementation strategy (Interagency agreement no. R20PG00046). Technical report, U.S. Fish and Wildlife Service, San Francisco Bay-Delta Fish and Wildlife Office

Whipple A, Grossinger RM, Rankin D, Stanford B, Askevold RA (2012) Sacramento-San Joaquin Delta historical ecology investigation: exploring pattern and process. Technical Report 672, SFEI, Richmond

Zuur AF, Ieno EN, Walker NJ, Saveliev AA, Smith GM (2009) Mixed effects models and extensions in ecology with R. Springer

Acknowledgements

We thank those who have contributed to the EDSM program including field crew, data managers, local and regional management, and members of the larger scientific community. We also thank several reviewers who provided helpful feedback and suggestions on earlier stages of the manuscript. The California Department of Water Resources, the U.S. Bureau of Reclamation, the U.S. Fish and Wildlife Service, and the Interagency Ecological Program (IEP) for the San Francisco Estuary provided funding and U.S. Endangered Species Act take coverage for this work. Funding for EDSM was provided by the U.S. Bureau of Reclamation under Interagency Agreement R24PG00040. The findings and conclusions in this article are those of the authors and do not necessarily represent the view of the U.S. Department of the Interior, the U.S. Fish and Wildlife Service, or the member agencies of the IEP.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

We have no conflict of interest to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mitchell, L., Polansky, L. & Newman, K.B. Stopping Rule Sampling to Monitor and Protect Endangered Species. JABES (2024). https://doi.org/10.1007/s13253-024-00649-3

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s13253-024-00649-3