Abstract

Obstructive sleep apnea hypopnea syndrome (OSAHS) is a serious respiratory disorder. Snoring is the most intuitively characteristic symptom of OSAHS. Recently, many studies have attempted to develop snore analysis technology for diagnosing OSAHS. The preliminary and essential step in such diagnosis is to automatically segment snoring sounds from original sleep sounds. This study presents an automatic snoring detection algorithm that detects potential snoring episodes using an adaptive effective-value threshold method, linear and nonlinear feature extraction using maximum power ratio, sum of positive/negative amplitudes, 500 Hz power ratio, spectral entropy (SE) and sample entropy (SampEn), and automatic snore/nonsnore classification using a support vector machine. The results show that SampEn provides higher classification accuracy than SE. Furthermore, the proposed automatic detection method achieved over 94.0% accuracy when identifying snoring and nonsnoring sounds despite using small training sets. The sensitivity and accuracy of the results demonstrate that the proposed snoring detection method can effectively classify snoring and nonsnoring sounds, thus enabling the automatic detection of snoring.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Obstructive sleep apnea hypopnea syndrome (OSAHS) is a common sleep-related breathing disorder that may cause neurocognitive dysfunction, arterial hypertension, metabolic disorders, and cardiovascular and cerebrovascular diseases [1–3]. Polysomnography (PSG) is considered the gold standard for diagnosing OSAHS [4]. However, PSG is time-consuming, labor-intensive, and expensive; as a result, many OSAHS sufferers worldwide are not diagnosed in time [5]. It is therefore essential to develop a simple and affordable monitoring method for diagnosing OSAHS.

Snoring is caused by the vibration of the soft palate and the uvula [6]. In recent years, researches [7, 8] have shown the relationship between snoring and OSAHS. Although not all snorers have this condition, most OSAHS sufferers do snore [9]. OSAHS patients usually snore loudly and heavily in their sleep [10, 11]. Snoring is a characteristic symptom of OSAHS patients, and many studies have shown that OSAHS can be identified through an acoustical analysis of snoring [8, 12–15].

It is important to detect snoring episodes from a full-night recording of sleep sounds to evaluate the snoring severity of patients accurately. In many studies, potential snore episodes were segmented manually [16–19]. Only a few studies have realized automatic detection. The short-term energy (STE) and zero crossing rate (ZCR) methods were widely used to detect potential snore episodes [20, 21]. Azarbarzin [22] proposed the Vertical Box (V-Box) algorithm and Dafna et al. [23] explored an adaptive algorithm with energy threshold for the automatic detection of potential snore episodes. It is also important to classify potential snore episodes. Duckitt et al. [16] adopted speech signal methods such as Mel-frequency-cepstral coefficients (MFCCs) and hidden Markov model (HMM)-based classification framework for classifying snoring, breathing, and silence. The results showed 82–89% sensitivity for snoring detection. Dafna et al. [23] used a multifeature analysis method and Ada Boost classifier to classify potential snore episodes into snore and nonsnore. They selected 34 optimal features from a pool of 127 features, and their result showed sensitivity of 98.0% for snoring classification.

Snoring and breathing are the two main components in sleep sounds. Recently, several studies have proposed effective methods for automatically detecting snoring sounds from breathing and snoring sounds. Karunajeewa et al. [17] proposed a method using the mean and covariance of four features extracted from time and spectral domains and reported overall classification accuracy of 90.74% for classifying snoring, breathing, and silence. Yadollahi and Moussavi [18] used energy, ZCR, first formant frequency (F1), and Fisher linear discriminant (FLD) for classifying breath and snoring sound segments and reported more than 90% overall accuracy in tracheal recording experiments. Ankışhan and Yılmaz’s study [19] classified snoring, breathing, and silence using the largest Lyapunov exponent (LLE) and entropy with multiclass support vector machines (SVMs) and adaptive network fuzzy inference system (ANFIS); they reported 91.61 and 86.75% total accuracies for SVMs and ANFIS, respectively.

To reduce the complexity of snoring detection and improve the performance of snore/nonsnore classification, this study proposed an automatic and robust snoring detection algorithm based on the acoustical analysis of snoring. The snoring detection algorithm has three major steps: (1) potential snore episodes are detected by an adaptive effective-value threshold; (2) feature extraction of linear and nonlinear features, use of MPR to reflect jitter of sounds, sum of positive/negative amplitudes, 500 Hz power ratio, spectral entropy (SE) and sample entropy (SampEn) to describe the chaos of sounds; and (3) SVM-based snore and nonsnore classification. The novelty of the present work is that it automatically detects potential snore episodes from whole-night sleep sounds using a new adaptive threshold method. Comprehensive sets of features involving linear and nonlinear characteristics that better reflected the nature of snoring realized the expected results for snoring detection and provided an important foundation for non-contact OSAHS diagnosis.

Methods

Subjects

Whole-night sound recordings of six habitual snorers who were referred for a full-night PSG study were obtained from the First Affiliated Hospital of Guangzhou Medical University. The main outcome of a PSG test to assess the severity of OSAHS is the Apnea–Hypopnea Index (AHI), which is defined as the number of apnea/hypopnea events per hour of sleep. The severity of OSAHS was graded as no OSAHS (AHI <5), mild (AHI 5–15), moderate (AHI 15–30), and severe (AHI >30) [24]. The duration of each recording was over 7 h. Table 1 lists the age, gender, AHIs, and Body Mass Indices (BMI) of these individuals.

Recording of snoring sounds

A digital audio recorder (Roland, Edirol R-44, Japan) with 40–20,000 Hz ± 2.5 dB frequency response and a directional microphone (RØDE, NTG-3, NSW, Australia) placed 45 cm over the patient’s head were used for audio recording. The distance ultimately varied from 50 to 70 cm owing to patient movements. The acquired signal was digitized at a sampling rate of 44.1 kHz with 16-bit resolution.

Detection of potential snore episodes

In a manner similar to Hsu’s snoring detection algorithm [25], the proposed algorithm has three steps:

-

1.

The noise reduction processing of original signals is based on power spectral subtraction. This process relies on automatically tracking background noise segments to estimate their spectra and subtracting them from sleep sound signals [26, 27], and it sets adaptive noise reduction parameters depending on different SNRs to improve the SNR.

-

2.

The absolute value of spectral subtraction signals is calculated. The effective values of signals are calculated as follows: the profile of maximum values is found every 50 points, and the peaks are amplified by summing every 50 maximum values. The final profile is further smoothed by taking a 10-point moving average.

-

3.

The effective value threshold (adaptive threshold) eth is calculated using the snore profile:

$${{e}_{th}}=1.5\times arg~\underset{e}{\mathop{\max }}\,his{{t}_{effective~value}}\left( e \right)$$(1)

Figure 1 shows the detection process. Although this method realized the massive detection of snoring sound segments, some breath sounds and a few noises such as duvet noises, coughs, door shutting, and other environmental sounds remained in the detection result. Segmented sound episodes were identified by an ENT (ear–nose–throat) specialist as snoring/breathing/noise to create datasets for subsequent classification.

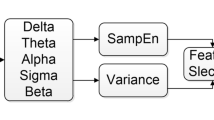

Feature extraction

Potential snore episodes are known to have nonstationary and complex behaviors [28, 29]. Multifeature analysis focuses on linear (sum of positive/negative amplitudes, 500 Hz power ratio, and MPR) and nonlinear features (SE and SampEn) to classify the data.

Sum of positive/negative amplitudes

Recently, Emoto [30] proposed a novel feature called positive/negative amplitude ratio (PNAR) to measure the shape of sound signals. The present study shows that the sum of positive/negative amplitudes provides better performance for classifying breathing and snoring sounds.

The sound signal x(n) is segmented x k (n) with 20-ms frame size and 50% overlap. The maximum positive amplitude in the kth segment x k (n) is calculated as

where K is the total number of segments. The maximum negative amplitude in the kth segment of the signal is also computed as

The sum of positive/negative amplitudes is defined as

where Var(.) is the variance of \({{P}_{k}}+{{N}_{k}}\).

500 Hz power ratio

Spectrum estimation usually uses fast Fourier transform (FFT). However, FFT suffers from several performance limitations such as frequency resolution and spectrum leakage [31, 32]. To overcome these limitations, Welch spectrum estimation is proposed to estimate the PSD (frame size: 20 ms with 50% overlap). This study defines the power ratio at 500 Hz as

where fc (= 8 kHz) is the cut-off frequency. \({{P}_{xx}}({{f}_{i}},k)\) is the PSD of the kth frame, and \({{P}_{x}}\left( {{f}_{i}} \right)\) is the average PSD value of every sound segment.

MPR, a novel feature to quantify sound jitter

This study proposes a novel feature called MPR. MPR reflects sound jitter and can be used to distinguish snoring sounds from breathing and other slight jitter sounds. MPR is given as

SE

SE is used to measure the flatness of PSD, and it is defined as

SampEn

Entropy estimation methods for acoustical snoring analysis usually use frequency domain analysis. However, entropy estimation of a sound segment represented by a time series does not. Richman [33] proposed a new method called SampEn to measure the time series complexity. It was similar to the approximate entropy (ApEn) but was more closely related to entropy than ApEn over a broad range of conditions [33]. Higher SampEn value indicates greater time series complexity. SampEn is calculated as follows:

-

1.

For a time series of N points, m dimension vectors \({{x}_{m}}(i)\) are defined as

-

2.

The distance between two such vectors is calculated as

-

3.

Let B i be the number of vectors \(\text{d}\left[ {{X}_{m}}\left( i \right),{{X}_{m}}(j) \right]\) within r and A i be the number of vectors \(\text{d}\left[ {{X}_{m+1}}\left( i \right),{{X}_{m+1}}(j) \right]\) within r. r is called the template match number. These functions are respectively defined as

-

4.

Finally, SampEn is estimated as

which is quantified by the statistic \(\text{SampEn}\left( \text{m},\text{r},\text{N} \right)=-ln\left[ {{A}^{m}}(r)/{{B}^{m}}(r) \right]\) where N is finite.

Classification

SVMs have been used in numerous fields [34–38]. Studies showed that SVM was a good classifier for snore recognition and could achieve high recognition rate [19, 39]. An SVM is a two-class theoretical statistical model, and its basic principle is to maximize the margin on the feature space. Let xi and yi \(\in\){+1, −1} be feature vectors of the ith subsequence and its class label; this optimization problem is then constructed as

where \(\text{ }\!\!\omega\!\!\text{ }\) is the system’s weight; b, the bias parameter; C, the penalty factor; and \({{\xi }_{i}}\) , the slack variable. By the Lagrange method, the optimal classification function is determined as

where \({{\alpha }_{i}}^{*}\) is an optimal solution; \(~\text{K}\left( {{x}_{i}},{{x}_{j}} \right)\), a kernel function that indicates the dot-product in high-dimensional Hilbert space; and \({{b}^{*}}\), the bias parameter determined by the optimal solution.

Receiver operating characteristic (ROC) analysis for evaluating classification accuracy

To evaluate the classification accuracy, ROC analysis is used to compare the snore and nonsnore classification performance by estimating the following parameters and area under curve (AUC). The sensitivity, specificity, positive predictive value (PPV), and total accuracy were calculated as

where TP, TN, FP, and FN are the numbers of true positive, true negative, false positive, and false negative classified sounds, respectively. AUC gives a quantitative evaluation of the classification accuracy, and it varies from 0.5 to 1.0. The classification accuracy is favorable when AUC approaches 1.

Training/testing data set

In this study, the training and testing materials were divided into files of ~1-min length for processing. Potential snoring episodes were detected by using the adaptive effective-value threshold method. Then, they were labeled as breathing, snoring, and noise segments by two research assistants (including authors, Can Wang and Lijuan Song). All assistants were guided by an ENT specialist (Xiaowen Zhang) in order to make sure that the definition of breathing, snoring, and noise was clear. Three different experiments were performed to evaluate the classification performance:

-

1.

Testing and training data were taken from the same subjects (Exp-1A). However, the recording sections in each dataset were different. Training and testing data were obtained from the first and second half of the recordings, respectively.

-

2.

Testing and training data were obtained from different subjects (Exp-1B). Training and testing materials were obtained from three and the remaining subjects’ recordings.

-

3.

We used k-fold cross-validation, where k was set to 10 (Exp-2). The method divided all data into k groups (each group usually had equal size). The ith group was used as a set of testing data, and the other data were assigned to training data. The cross-validation process was then repeated k times, with each group used exactly once as testing data. The k results from the folds can be averaged to estimate the classification performance [40].

Results

As a result, 80 min of recordings were acquired from each of the six subjects. The dataset contained 6346 potential snore episodes. Table 2 shows the compositions of the testing/training datasets in these experiments.

Classification results

Figure 2 shows the MPR feature distribution of the three sounds (snoring, breathing, and noises) from two subjects (subjects 3 and 6). This figure shows a case where >91% of the snoring events of these two subjects are above 100 and where >89% of breathing events are below 50. MPR for noise usually has a dispersed distribution.

Figure 3 shows a plot of MPR versus SampEn for snoring (asterisk), breathing (open circle), and noise (cross mark) parts of potential snoring episodes. These data are obtained from the testing set of Exp-1A. Snoring sound segments usually show larger MPR and SampEn levels than breath sounds. Although noise segments have a dispersed distribution, most noise can be distinguished from the snoring sound segments. These results suggest that the two features of snoring sounds are significantly different from those of breathing and some noise sounds.

The SVM algorithm was used for snore/nonsnore classification. A radial basis function was found to be the optimal kernel function for these classification results, and penalty factor C of 3.33 was used in the experiments. Table 3 shows the classification performances of Experiments 1 and 2 for snore and nonsnore segments; overall accuracy of 94.18–94.54% was achieved when selecting the abovementioned features. In simple validation experiments, EXP-1A, in which the training and testing datasets were obtained from the same subjects, had higher overall accuracy than EXP-1B. The sensitivity of the algorithm for the detection of snoring was 96.05% with EXP-1A and 95.85% with EXP-1B. The cross-validation results in EXP-2 indicate that the classification performance is similar to that of EXP-1. It should be noted that in all experiments, the sensitivities of the proposed method were higher than its specificities; the results suggest that various noise segments affect nonsnore classification. Additionally, we evaluated the performance of our system using three different feature sets. One contained all of the abovementioned features, another merely excluded SE (denoted as No SE), and the last one had all features except for SampEn (denoted as No SampEn). The results shown in Table 3 indicates that all feature sets give the best classification accuracy, and the No SE feature set has higher classification accuracy than the No SampEn one.

Figure 4 shows the ROC analysis for the abovementioned features; the AUC in the three experiments was calculated as 0.933, 0.926, and 0.935, respectively.

Discussion

This study proposed an automated detection method to identify snore segments from sleep sounds with acoustic feature analysis and an SVM algorithm. To investigate the effect of SNR on classification performance, Karunajeewa et al. [17] compared the classification results of the algorithm when three different noise reduction techniques [amplitude spectral subtraction (ASS), power spectral subtraction (PSS), and short time spectral amplitude (STSA) estimation] and no noise reduction were used; they found that noise reduction along with a proper choice of features could improve the classification accuracy. In the present study, power spectral subtraction techniques and an adaptive effective-value threshold method were adapted to detect potential snore episodes based on a previous study [25, 26]. This algorithm proved effective for detecting potential snore episodes. To fully explore effective features, features from linear and nonlinear domains were implemented. SampEn and MPR were first proposed to distinguish snore and nonsnore sounds. The results shown in Table 3 indicate that SampEn has higher classification performance than SE, and using all features described in the method can realize higher precision. The best system accuracy was achieved using an SVM with Gauss-based kernel functions.

Previous studies have reported snore classification results based on various experimental programs [16–19, 23]. Table 4 shows a comparison of the classification performance of recent methods and our method. Duckitt et al. [16] demonstrated a system based on the manual screening of potential snore episodes from six subjects to identify snoring and nonsnoring sounds such as breathing sounds, duvet noises, silence, and other noises with 82–89% snore sensitivity; however, the specificity of snore detection was poor. Similarly, Karunajeewa et al. [17] proposed a method to classify snoring, breathing, and silence and achieved overall classification accuracy of 90.74%. Yadollahi and Moussavi [18] reported an automatic breathing and snoring sound classification algorithm with 93.2% accuracy for ambient recording when three-dimensional features and FLD were used. Ankışhan et al. [19] used the LLE and entropy to classify potential snore episodes as snoring, breathing, and silence, and the overall classification accuracy was 91.61 and 86.75% for SVM and ANFIS, respectively. However, these studies manually obtained potential snore episodes from recordings and did not consider noise. Dafna et al. [23] recently provided a new algorithm for detecting potential snore episodes and proposed a snore/nonsnore classification method that exhibited high classification accuracy; however, it required the extraction of 34-dimensional feature vectors from 127-dimensional feature vectors by multiple acoustic analysis. The present method automatically detected potential snore episodes and extracted feature vectors with low dimensionalities. It detected snores with lower complexity, and the sensitivity and accuracy results for classifying snoring and nonsnoring sounds from tracheal recordings were superior to those of [16–19] (Table 4).

Noise in sleep sounds is unpredictable and variable, and this uncertainty causes the misclassification of snoring and nonsnoring sounds and affects the overall accuracy of the monitoring system. Future work should assess the characteristics of the main noises to achieve higher overall classification accuracy.

Conclusion

The present study proposes an automatic snoring detection algorithm to identify snore segments from sleep sounds based on acoustic feature analysis and SVM. The PSS technique and adaptive effective-value threshold method are used to detect potential snore episodes. The results show that SampEn can realize better classification accuracy than SE, and the proposed automatic detection method can identify snores and nonsnores with 94.2–94.5% accuracy despite the small size of the training set. We conclude that the proposed algorithm extracted relatively low-dimensional features for automatic detection of snoring has a potential to acquire snoring sounds from massive subjects. It shows promise for realizing an OSAHS diagnostic system. Further study should explore new features to recognize noises so as to improve the performance of the system and develop a potential screening tool for a home-based environment.

References

Adams N, Strauss M, Schluchter M, Redline S (2001) Relation of measures of sleep-disordered breathing to neuropsychological functioning. Am J Respir Crit Care Med 163(7):1626–1631

Nieto FJ, Young TB, Lind BK, Shahar E, Samet JM, Redline S et al (2000) Association of sleep-disordered breathing, sleep apnea, and hypertension in a large community-based study. J Am Med Assoc 283:1829–1836

Lloberes P, Duran-Cantolla J, Martinez-Garcia MA, Marin JM J, Ferrer A, Corral J, Masa JS, Parra O, Alvarez MLA, Santos JT (2011) Diagnosis and treatment of sleep apnea-hypopnea syndrome. Arch Bronconeumol 47:143–156

Abeyratne UR, Patabandi CKK, Puvanendran K (2001) Pitch-jitter analysis of snoring sounds for the diagnosis of sleep apnea. IEEE Engineering in Medicine and biology society. In: Proceedings of the annual international conference. Institute of Electrical and Electronics Engineers, vol 2, pp 2072–2075

Finkel KJ, Searleman AC et al (2009) Prevalence of undiagnosed obstructive sleep apnea among adult surgical patients in an academic medical center. Sleep Med 10(7):753–758

Bernatowska E (2000) The International classification of sleep disorders, revised: diagnostic and coding manual. Przeglad Pediatryczny 30(4):263–266

Cavusoglu M, Ciloglu T, Serinagaoglu Y, Kamasak M, Erogul O, Akcam T (2008) Investigation of sequential properties of snoring episodes for obstructive sleep apnoea identification. Physiol Meas 29:879–898

Michael H, Andreas S, Thomas B, Beatrice H, Werner H, Holger K (2008) Analysed snoring sounds correlate to obstructive sleep disordered breathing. Eur Arch Otorhinolaryngol 265:105–113

Polo OJ, Tafti M, Fraga J, Porkka KV, Déjean Y, Billiard M (1991) Why don’t all heavy snorers have obstructive sleep apnea? Am Rev Respir Dis 143(6):1288–1293

Roberty PD (1996) Clinical assessment in respiratory care. Respir Care 41:748

Lucas J, Golish J, Sleeper G, O’Ryan JA (1988) Home respiratory care chapter 6. Appleton & Lange, Englewood Cliffs, pp 132–136

Xu H, Huang W, Yu L, Chen L (2011) Spectral analysis of snoring sound and site of obstruction in obstructive sleep apnea/hypopnea syndrome. J Audiol Speech Pathol 19:28–32

Azarbarzin A, Moussavi Z (2013) Snoring sounds variability as a signature of obstructive sleep apnea. Med Eng Phys 35:479–485

Mousavi S, Hajipour V, Niaki S, Aalikar N (2013) A multi-product multi-period inventory control problem under inflation and discount: a parameter-tuned particle swarm optimization algorithm. Int J Adv Manuf Technol 70(9–12):1739–1756

Mousavi SM, Alikar N, Niaki STA (2016) An improved fruit fly optimization algorithm to solve the homogeneous fuzzy series—parallel redundancy allocation problem under discount strategies. Soft Comput 20(6):2281–2307

Duckitt WD, Tuomi SK, Niesler TR (2006) Automatic detection, segmentation and assessment of snoring from ambient acoustic data. Physiol Meas 27:1047

Karunajeewa AS, Abeyratne UR, Hukins C (2008) Silence–breathing–snore classification from snore-related sounds. Physiol Meas 29:227

Yadollahi A, Moussavi Z (2010) Automatic breath and snoring sounds classification from tracheal and ambient sounds recordings. Med Eng Phys 32:985–990

Ankışhan H, Yılmaz D (2013) Comparison of SVM and ANFIS for snore related sounds classification by using the largest Lyapunov exponent and entropy. Comput Math Methods Med 2013:238937

Fiz JA, Abad J, Jane R, Riera M, Mananas MA, Caminal P, Rodenstein D, Morera J (1996) Acoustic analysis of snoring sound in patients with simple snoring and obstructive sleep apnoea. Eur Respir J 9:2365–2370

Abeyratne UR, Wakwella AS, Hukins C (2005) Pitch jump probability measures for the analysis of snoring sounds in apnea. Physiol Meas 26:779

Azarbarzin A, Moussavi Z (2010) Unsupervised classification of respiratory sound signal into snore/no-snore classes. In: 2010 annual international conference of the IEEE engineering in medicine and biology, IEEE, pp 3666–3669

Dafna E, Tarasiuk A, Zigel Y (2013) Automatic detection of whole night snoring events using non-contact microphone. PLoS ONE 8:e84139

Maimon N, Hanly PJ (2010) Does snoring intensity correlate with the severity of obstructive sleep apnea? J Clin Sleep Med 6(5):475–478

Hsu YL, Chen MC, Cheng CM, Wu CH (2005) Development of a portable device for home monitoring of snoring. J Biomed Eng 17:176–180

Scalart P (1996) Speech enhancement based on a priori signal to noise estimation. In: Conference proceedings IEEE international conference on acoustics, speech, and signal processing, vol 2, pp 629–632

Mousavi SM, Sadeghi J, Niaki STA, Alikar N, Bahreininejad A, Metselaar HSC (2014) Two parameter-tuned meta-heuristics for a discounted inventory control problem in a fuzzy environment. Inf Sci 276:42–62

Beck R, Odeh M, Oliven A, Gavriely N (1996) The acoustic properties of snores. Eur Respir J 8:2120–2128

Pasandideh SHR, Niaki STA, Mousavi SM (2013) Two metaheuristics to solve a multi-item multiperiod inventory control problem under storage constraint and discounts. Int J Adv Manuf Technol 69(5):1671–1684

Emoto T, Kashihara M, Abeyratne UR, Kawata I, Jinnouchi O, Akutagawa M Konaka S, kinouchiet Y (2014) Signal shape feature for automatic snore and breathing sounds classification. Physiol Meas 35:2489–2500

Alkan A, Yilmaz AS (2007) Frequency domain analysis of power system transients using Welch and Yule–Walker AR methods. Energy Convers Manag 48:2129–2135

Mousavi SM, Niaki STA (2013) Capacitated location allocation problem with stochastic location and fuzzy demand: a hybrid algorithm. Appl Math Modell 37(7):5109–5119

Richman JS, Moorman JR (2000) Physiological time-series analysis using approximate entropy and sample entropy. Am J Physiol Heart Circ Physiol 278:H2039–H2049

Satone M, Kharate G (2014) Face recognition technique using PCA, wavelet and SVM. Int J Comput Sci Eng 6(1):58–62

Wang Y, Li Y, Wang Q, Lv Y, Wang S, Chen X et al (2014) Computational identification of human long intergenic non-coding RNAs using a GA-SVM algorithm. Gene 533(1):94–99

Selakov A, Cvijetinović D, Milović L, Mellon S, Bekut D (2014) Hybrid PSO–SVM method for short-term load forecasting during periods with significant temperature variations in city of burbank. Appl Soft Comput 16(3):80–88

Mousavi SM, Alikar N, Niaki STA, Bahreininejad A (2015) Optimizing a location allocation-inventory problem in a two-echelon supply chain network: a modified fruit fly optimization algorithm. Comput Ind Eng 87:543–560

Mousavi SM, Bahreininejad A, Musa N, Yusof F (2014) A modified particle swarm optimization for solving the integrated location and inventory control problems in a two-echelon supply chain network. J Intell Manuf 580(3):1–16

Mikami T, Kojima Y, Yonezawa K, Yamamoto M, Furukawa M (2013) Spectral classification of oral and nasal snoring sounds using a support vector machine. J Adv Comput Intell Intell Inform 17:611–621

McLachlan G, Do KA, Ambroise C (2004) Analyzing microarray gene expression data. Wiley, New York

Acknowledgements

This work was supported by Guangdong province science and technology plan (2013B060100005) and National Natural Science Foundation of China (81570904).

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Ethical approval

This study was approved by the Ethics Committee of Guangzhou Medical University and an informed consent was obtained from each participant.

Additional information

Can Wang and Lijuan Song have contributed equally to this work.

Rights and permissions

About this article

Cite this article

Wang, C., Peng, J., Song, L. et al. Automatic snoring sounds detection from sleep sounds via multi-features analysis. Australas Phys Eng Sci Med 40, 127–135 (2017). https://doi.org/10.1007/s13246-016-0507-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13246-016-0507-1