Abstract

Purpose

Wearable devices for connected home healthcare, integrated with artificial intelligence, have become an effective alternative to conventional medical devices. Despite the benefits of wearable electrocardiogram (ECG) devices, several deficiencies remain unsolved, such as the noise caused by user mobility. A noise-insensitive and robust classification model for cardiac arrhythmia detection is therefore needed.

Methods

A one-dimensional seven-layer convolutional neural network (CNN) classification model with a dedicated structure and parameter design is developed to perform automatic feature extraction and classification on a large volume of original noisy signals. A record-based ten-fold cross-validation scheme is used for evaluation to ensure the independence of the training set and the test set, and to further improve the robustness of our method.

Results

The model can effectively detect cardiac arrhythmias and reduces the computational workload to a certain extent. Our experimental results outperform most of the recent literature on cardiac arrhythmia classification, with a diagnostic accuracy of 0.9874, sensitivity of 0.9811, and specificity of 0.9905 for the original signals, and a diagnostic accuracy of 0.9876, sensitivity of 0.9813, and specificity of 0.9907 for the de-noised signals.

Conclusion

The evaluation indicates that our proposed approach, which performs well on both original signals and de-noised signals, fits well with wearable ECG monitoring and applications.

Introduction

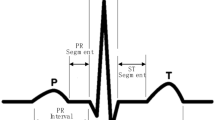

Cardiovascular disease (CVD), one of the foremost causes of human death, is responsible for an increasing number of deaths worldwide, especially in developing countries.[25] Cardiac arrhythmias, the principal form of CVD and broadly described as irregular heartbeat, can be detected by the electrocardiogram (ECG), which records the electrical activity of the myocardium and provides rich physiological information on the state of the user’s heart.[7] In ECG diagnosis, classifying heartbeats as normal or abnormal plays a vital role in both research and clinical practice.

In past years, many efforts were made to develop automatic cardiac arrhythmia classification based on machine learning (ML) technologies. Researchers utilized features such as higher order statistics of wavelet packet decomposition (WPD) coefficients, wavelet features and morphological features, combined with various types of classifiers such as k-nearest neighbor (KNN) and support vector machine (SVM), to recognize different classes of ECG signals.[12,15,16,20,28,29,31] Generally speaking, extracting appropriate features from ECG signals plays an important part in the result of cardiac arrhythmia classification and prediction. Nevertheless, manually extracting appropriate features is hard: it demands professional knowledge and costs enormous human labor.[8] The selected features may also not be suitable for different types of datasets.

Apart from the aforementioned traditional ML approach, deep learning (DL), including convolutional neural networks (CNN) and recurrent neural networks, offers further advantages in prediction tasks. In recent years, DL has become an important methodology that has been successfully adopted in computer vision, pattern recognition, bioinformatics, etc.[10] The benefits of DL include: (1) it does not require manual feature extraction but can acquire features in an effective way, and (2) it does not require choosing an appropriate classifier. Thus, DL removes much of the workload in model construction.

Recently, owing to their feasibility and portability, wearable devices have gradually been adopted for ECG signal collection.[6] Compared to hospital ECG equipment, a wearable ECG device is more user-friendly and more convenient for monitoring the heart status in real time. A large volume of dynamic ECG data can be collected in the user’s daily life, transmitted through the Internet of Things, and accessed by specialists for possible early diagnosis.[17]

However, conventional algorithms do not offer the flexibility to handle such huge volumes of wearable ECG data. For instance, user mobility introduces specific challenges, especially in terms of signal quality and real-time computing. Consequently, a noise-insensitive, robust and lightweight classification system is highly desired for cardiac arrhythmia detection based on ECG signals.

Generally, ECG classification models can be categorized into beat-based schemes and record-based schemes. In the beat-based scheme, the heartbeats of all patients are pooled and assigned to the training set or the test set without regard to which patient they came from. For cardiac arrhythmia classification, many researchers employ the beat-based scheme for the purpose of improving classification accuracy (e.g.,[16,28,29]). However, the training step is particularly prone to overfitting when the training and test samples come from the same patient. Overfitting makes the neural network model perform well on the training set but generalize poorly and perform badly on the test set. Record-based classification averts this issue, since all the beats in the test set come from patients unseen during training, which is much closer to the real scenario. Accordingly, in this article, a record-based classification model is proposed to match the practical application.

Previously, we proposed a conventional machine learning algorithm for cardiac arrhythmia classification.[30] In this study, our motivation is to develop an automatic method based on big ECG data to recognize cardiac arrhythmias with high accuracy and low computation. To reach our objective, a one-dimensional (1-D) seven-layer CNN model is designed to classify three types of ECG beats: normal beat (N), premature ventricular contraction beat (V), and right bundle branch block beat (R). Certain similarities exist between the three types of ECG beats; therefore, a detection system needs to be devised to distinguish them.

The main contributions of this work are summarized as follows: (1) We have designed a 1-D seven-layer CNN classification system that can automatically extract appropriate features and recognize three different types of beats (N, V, R) in wearable big ECG data for arrhythmia monitoring with superior performance. (2) A record-based ten-fold cross-validation scheme is employed, i.e., the training set and the test set are separated completely, thus ensuring the independence of the samples and verifying the robustness of our approach, which in turn brings the experimental scenario closer to the clinical application. (3) In order to study the generalization ability of the proposed method, we validate the classification model on both original signals and de-noised signals. Experimental results verify its insensitivity to noise in wearable ECG signal processing without adjusting the parameters.

Related work

Currently, in clinical practice, cardiac arrhythmias are classified by an expert cardiologist who examines the patient's ECG recordings. This process is expensive as well as time consuming. Thus, there is a strong desire for an automatic classification method for diagnosing cardiac arrhythmias during treatment. It is worth noting that many efforts have been made in recent years on automatic cardiac arrhythmia classification with good performance, especially through the joint investigation of big ECG data analytics and deep learning solutions.[1,2,5,13,14,18,19,23,24,27]

An eleven-layer deep CNN system was proposed by Acharya et al. to classify four types of arrhythmia, for which they ran two experiments.[1] The results achieved an accuracy of 0.925 for two seconds of ECG duration (experiment A), and an accuracy of 0.949 for five seconds of ECG duration (experiment B), both of which demonstrated high effectiveness in prediction. Sannino et al. designed a deep neural network (DNN) system for identifying abnormal beats from a large quantity of ECG signals.[23] The numerical results illustrated the effectiveness of the approach, with an accuracy of 0.9909. However, the signal denoising step increased the workload of this approach.

Mathews et al. studied the application of the deep belief networks (DBN) and Restricted Boltzmann Machine (RBM) based on deep learning methodology for detecting supraventricular and ventricular heartbeats.[18] Experimental results demonstrated that DBN and RBM can achieve high average classification accuracies of 0.9363 for ventricular ectopic beats (VEB), and 0.9557 for supraventricular ectopic beats (SVEB) with suitable parameters.

Tan et al. utilized an eight-layer stacked long short-term memory (LSTM) network with CNN to classify ECG signals automatically.[27] The proposed deep learning model was able to identify CVDs with a diagnostic sensitivity of 0.9576, specificity of 0.957, and accuracy of 0.9985. Kiranyaz et al. used an adaptive implementation of a one-dimensional (1-D) CNN for patient-specific ECG heartbeat classification.[14] Their classification experiments achieved a diagnostic sensitivity of 0.939, specificity of 0.989, and accuracy of 0.99 for VEB, and a sensitivity of 0.603, specificity of 0.992, and accuracy of 0.976 for SVEB.

Currently, there is still no single CNN model for arrhythmia recognition that achieves similarly good performance on both original signals and de-noised signals. Moreover, for wearable ECG data classification, if the noise removal step can be omitted, the overall workload will be greatly reduced, which benefits real-time processing. Accordingly, in this work, a seven-layer CNN with 1-D convolution and 1-D mean-pooling is proposed, which outperforms most of the current approaches by addressing the aforementioned problems. The model is insensitive to noise in ECG signals from independent individual records, whether the signal is clean or not.

Methodology

Arrhythmia classification can be described as a pattern recognition problem. In this section, a DL-based ECG pattern recognition system for classifying cardiac arrhythmias is described. The classification system contains four main modules: (1) Wearable ECG data acquisition, (2) Cloud platform, (3) Cardiac arrhythmias classification model, and (4) Remote treatment. Specifically, the innovations of this work focus on module 3, which has three consecutive steps: (i) Pre-processing, (ii) CNN pattern recognition model and (iii) Performance evaluation. With respect to the evaluation, our experiments involve two data sets denoted as data set A and data set B. Set A consists of raw ECG signals, while set B consists of filtered ECG signals. The feature extraction task and the classification task are both carried out automatically by the proposed CNN model.

Data Acquisition

The ECG data used are from the Massachusetts Institute of Technology-Beth Israel Hospital (MIT-BIH) arrhythmia database, which consists of 48 ECG records from 47 subjects.[21] Each record contains approximately half an hour of ECG signals, sampled at 360 Hz with 11-bit resolution over a 10 mV range. The database includes annotation files with record and beat class information, labeled by independent expert cardiologists. The three types of ECG heartbeats (R, V and N) from lead MLII of 45 records (records 102, 104 and 114 are not included) give a total of 87223 ECG beats. The dataset suffers from sample imbalance, with only 16.43% abnormal cases in the whole dataset. The division of the training set and the test set based on the ECG records is summarized in Table 1, and detailed numbers for each type of ECG beat are presented in Table 2.

Pre-processing

Pre-processing, which includes noise removal and heartbeat segmentation, is an important step in data analysis. In this work, the wavelet transform is used to remove both high-frequency noise and low-frequency noise, and a heartbeat segmentation algorithm is designed to obtain individual heartbeats. The specific details are presented below.

Noise Removal: The original ECG signals usually contain high-frequency noise caused by power line interference and low-frequency noise due to body movement. In this step, the wavelet transform (WT) is selected to analyze the ECG signals, since WT is suitable for non-linear and non-stationary signals. Moreover, WT de-noising preserves the useful signal because it can effectively distinguish high-frequency noise from high-frequency information.[4] Thus, in this work, WT is utilized to analyze the components of specific frequency sub-bands and to remove the noise.

First, the Daubechies-5 (db5) mother wavelet is used to decompose the ECG signals into nine high-frequency sub-bands and one low-frequency sub-band. We then remove the top three high-frequency sub-bands and the low-frequency sub-band, and the remaining detail coefficient sub-bands (the fourth through the ninth) are used to reconstruct the filtered signal by the inverse wavelet transform. This noise removal step is applied only to set B. Two criteria are used to evaluate the effect of de-noising, namely the mean square error (MSE), which should be minimal, and the signal-to-noise ratio (SNR).[26]
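As a rough illustration of what this sub-band selection keeps, the sketch below computes the nominal dyadic frequency band of each sub-band for a 360 Hz signal and nine decomposition levels, together with plain MSE and SNR helpers. The band edges are the usual textbook approximation (real db5 filters overlap), the SNR definition in dB is an assumed common form rather than the one taken from the cited reference, and all function names are hypothetical.

```python
import math

FS_HZ = 360.0   # MIT-BIH sampling rate stated in the text
LEVELS = 9      # decomposition depth stated in the text


def nominal_detail_band(level, fs=FS_HZ):
    """Approximate (low, high) band in Hz of detail sub-band `level` (1 = finest)."""
    return fs / 2 ** (level + 1), fs / 2 ** level


def nominal_approx_band(levels=LEVELS, fs=FS_HZ):
    """Approximate band in Hz of the final low-frequency (approximation) sub-band."""
    return 0.0, fs / 2 ** (levels + 1)


def mse(reference, estimate):
    """Mean squared error between two equal-length sequences."""
    return sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)


def snr_db(reference, estimate):
    """SNR in dB, treating (reference - estimate) as noise (ASSUMED definition)."""
    signal_power = sum(r ** 2 for r in reference)
    noise_power = sum((r - e) ** 2 for r, e in zip(reference, estimate))
    return 10.0 * math.log10(signal_power / noise_power)


# Sub-bands 4..9 are the ones kept for reconstruction in the text.
kept_bands = [nominal_detail_band(k) for k in range(4, LEVELS + 1)]
kept_high = kept_bands[0][1]    # upper edge of the finest kept band
kept_low = kept_bands[-1][0]    # lower edge of the coarsest kept band
```

Under this approximation the retained content lies nominally between about 0.35 Hz and 22.5 Hz; the discarded detail levels 1 to 3 cover roughly 22.5 to 180 Hz, and the discarded approximation band covers everything below about 0.35 Hz.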

Heartbeat Segmentation: In contrast to blind segmentation, we segment the input ECG data based on fiducial points. This ensures that each sample contains the essential information of the signal. According to the annotation file, ECG signals are segmented into individual heartbeats, each 300 points long and positioned with respect to the R-peak (the fiducial point). A beat is formed by the R-peak sample together with 99 samples forward and 200 samples backward, giving 300 points in total. Examples of the three different types of ECG beats used for set A and set B are displayed in Fig. 1.
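A minimal sketch of fiducial-point segmentation is given below. It assumes that 99 samples precede the R-peak and 200 follow it (the text does not make the direction explicit, so the two defaults may need to be swapped), and it assumes that beats too close to either end of a record are simply skipped. The function name and the toy record are hypothetical.

```python
def segment_beats(signal, r_peaks, before=99, after=200):
    """Cut fixed-length windows around annotated R-peak indices.

    ASSUMPTION: `before` samples precede the R-peak and `after` follow it;
    swap the two defaults if the opposite reading is intended.
    Beats whose window would run past either end of the record are skipped.
    """
    beats = []
    for r in r_peaks:
        start, stop = r - before, r + after + 1
        if start < 0 or stop > len(signal):
            continue
        beats.append(signal[start:stop])
    return beats


# Toy record: a ramp, with hypothetical R-peak positions.
record = list(range(1000))
beats = segment_beats(record, r_peaks=[50, 400, 950])
```

In this toy run only the peak at index 400 yields a beat, since the other two windows would extend beyond the record; the retained window has 99 + 1 + 200 = 300 samples.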

Convolutional Neural Network Model

A CNN is a computational model inspired by networks of biological neurons and is used to solve classification problems, prediction tasks, etc. As one of the most popular neural networks, a CNN consists of many parameters and several hidden layers.[9] Unlike traditional machine learning approaches, which require expert knowledge and are time consuming, a CNN does not need a manually extracted set of appropriate features. Instead, it extracts features and completes the classification task automatically, which reduces the burden of training and testing time.[3] The architecture of our proposed CNN model, as shown in Fig. 2, involves an input layer, hidden layers (convolution, non-linearity, mean-pooling) and an output layer (classification).

CNN architectures were originally built to take advantage of the two-dimensional (2-D) structure and pixel relations of images. ECG signals can be regarded as 1-D images; therefore, a 1-D convolutional layer is suitable for ECG signal feature extraction.

Consider an input signal sequence s(t), t=1, 2,..., n, and filter weights w(t), t=1, 2,..., m. The filter is convolved in turn with the features of the preceding layer. The convolution output is as follows:
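One standard form of this operation, written with the symbols s, w, n and m from the sentence above, is the valid-mode (no padding) discrete convolution sketched below. The output symbol x(t), the summation index k and the output range are assumptions made for illustration; the reversed indexing of s is chosen to agree with the reversed window \([p_{i+m-1}^h,...,p_{i}^h]^T\) used later in this section.

```latex
x(t) = \sum_{k=1}^{m} w(k)\, s(t + m - k), \qquad t = 1, 2, \ldots, n - m + 1
```

For t = 1 the sum covers s(m) down to s(1), and for t = n - m + 1 it covers s(n) down to s(n - m + 1), so every output uses exactly m consecutive input samples.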

An activation function f(x) is required in the convolutional layer for nonlinear feature mapping. The rectified linear unit (ReLU) is applied as the activation function in the convolution process. The definition of ReLU is as below:

\[ f(x) = \max(0, x) \]

Then the input of the neuron of i in layer h is defined as below:

where i=1, 2,..., n, \(b^i\) denotes the bias parameter, \(w^h\in R^m\) is an m-dimensional filter, \(p_{(i+m-1):i}^h=[p_{i+m-1}^h,...,p_{i}^h]^T\), and \(w^h\) is the same for all neurons in the same convolutional layer.

The pooling operation can be described as a sub-sampling process, which reduces the number of features and helps to avoid over-fitting, thereby improving robustness. Average (mean) pooling is adopted in this step.
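The three building blocks described in this section (1-D convolution, ReLU and mean-pooling) can be sketched in a few lines of plain Python. This is an illustrative sketch only: it assumes stride-1 convolution without padding and non-overlapping pooling that drops a trailing partial window, and the kernel weights are made-up values rather than learned ones.

```python
def conv1d_valid(signal, kernel, bias=0.0):
    """Valid-mode 1-D convolution.

    output[i] = bias + sum_k kernel[k] * signal[i + m - 1 - k],
    i.e. the kernel is applied to a reversed window of m samples.
    """
    m = len(kernel)
    return [
        bias + sum(kernel[k] * signal[i + m - 1 - k] for k in range(m))
        for i in range(len(signal) - m + 1)
    ]


def relu(values):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, v) for v in values]


def mean_pool(values, size=2):
    """Non-overlapping average pooling; a trailing partial window is dropped."""
    return [
        sum(values[i:i + size]) / size
        for i in range(0, len(values) - size + 1, size)
    ]


signal = [0.0, 1.0, 3.0, 2.0, -4.0, 1.0, 0.0]
kernel = [1.0, 0.0, -1.0]   # illustrative weights, not learned values
feature_map = mean_pool(relu(conv1d_valid(signal, kernel)))
```

With this kernel each convolution output is the difference between samples two positions apart, ReLU zeroes the negative differences, and pooling halves the length of what remains.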

The detailed parameters of the CNN structure are listed in Table 3. In our CNN structure, the input layer (layer 0) is convolved with a kernel of size 3 to obtain layer 1. Mean-pooling of size 2 is applied to each feature map (layer 2). The feature maps from layer 2 are then convolved with a kernel of size 4 to generate layer 3, and mean-pooling of size 2 is applied to each feature map (layer 4). The feature maps from layer 4 are convolved with a kernel of size 4 to obtain layer 5, and mean-pooling of size 2 is applied to each feature map (layer 6). Finally, the neurons of every map in layer 6 are fully connected to 3 neurons in layer 7. The ReLU activation function is applied in layers 1, 3 and 5. The last layer is a softmax layer whose number of outputs equals the number of classes, so that the ECG signals are classified into N, V and R. The learning rate is set to 0.002. The hyperparameters and architecture are set based on an empirical study of the performance. The CNN classification model is presented in Fig. 3. The back propagation (BP) algorithm with a batch size of 20 samples is adopted to update the weights and biases.[11] The weights and biases are updated as follows.

where l is the learning rate, v is the total number of training samples, g is the batch size and c is the cost function.
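To make the layer sequence concrete, the following sketch traces the length of a single feature map through the three convolution and three mean-pooling layers for a 300-point input beat. It assumes stride-1 convolution without padding and non-overlapping pooling, neither of which is stated explicitly in the text, so the numbers are indicative rather than a restatement of Table 3. The function name is hypothetical.

```python
def trace_lengths(input_len, kernel_sizes=(3, 4, 4), pool_size=2):
    """Return the feature-map length after each of the six conv/pool layers.

    ASSUMPTIONS: stride-1 convolution without padding, and non-overlapping
    pooling that drops a trailing partial window.
    """
    lengths = []
    n = input_len
    for k in kernel_sizes:
        n = n - k + 1          # convolution layer
        lengths.append(n)
        n = n // pool_size     # mean-pooling layer
        lengths.append(n)
    return lengths


layer_lengths = trace_lengths(300)
```

Under these assumptions a 300-point beat yields lengths 298, 149, 146, 73, 70 and 35 for layers 1 to 6, so each feature map entering the fully connected layer would hold 35 values.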

Performance Evaluation

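The performance of the proposed approach is evaluated in terms of accuracy, sensitivity and specificity, as defined below.

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \]

\[ \text{Sensitivity} = \frac{TP}{TP + FN} \]

\[ \text{Specificity} = \frac{TN}{TN + FP} \]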

where, TP is True Positive, FN represents False Negative, FP means False Positive, and TN stands for True Negative. Accuracy measures the overall performance of our approach and the other two metrics distinguish certain beat types from other beat types (e.g., distinguish V from non-V).[22]
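The one-vs-rest reading of these metrics (for example, V against non-V) can be sketched as follows. The label sequences are made-up toy data, and the function names are hypothetical.

```python
def one_vs_rest_counts(true_labels, predicted_labels, positive):
    """Count TP, FN, FP, TN when `positive` is treated as the target class."""
    tp = fn = fp = tn = 0
    for truth, pred in zip(true_labels, predicted_labels):
        if truth == positive and pred == positive:
            tp += 1
        elif truth == positive:
            fn += 1
        elif pred == positive:
            fp += 1
        else:
            tn += 1
    return tp, fn, fp, tn


def binary_metrics(tp, fn, fp, tn):
    """Accuracy, sensitivity and specificity from confusion counts."""
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


# Toy labels for illustration only.
y_true = ["N", "N", "V", "V", "R", "N", "V", "R"]
y_pred = ["N", "V", "V", "V", "R", "N", "N", "R"]
v_counts = one_vs_rest_counts(y_true, y_pred, positive="V")
v_metrics = binary_metrics(*v_counts)
```

In this toy example two of the three V beats are detected and one non-V beat is mislabelled as V, so sensitivity and specificity differ even though both stem from the same set of predictions.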

Record-based ten-fold cross validation is applied in the experiment by dividing the records of the data set into ten parts. Nine of them are taken as training data and the remaining part is used as test data. The division of records into training and test sets for the record-based ten-fold cross-validation scheme is presented in Table 1; it is chosen so that the training set and the test set in each fold contain all ECG beat types.
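The essential property of the record-based scheme is that no record contributes beats to both the training and the test set of a fold. A minimal sketch of such a split is shown below. It deals records into folds round-robin, which is an assumption made for illustration: the actual assignment is the one in Table 1, and a round-robin deal does not by itself guarantee that every fold contains all beat types. The record identifiers are placeholders.

```python
def record_based_folds(record_ids, n_folds=10):
    """Yield (train_records, test_records) pairs with disjoint record sets.

    ASSUMPTION: records are dealt round-robin into folds; this is not the
    assignment used in the paper's Table 1.
    """
    records = sorted(set(record_ids))
    folds = [records[i::n_folds] for i in range(n_folds)]
    for i, test in enumerate(folds):
        train = [r for j, fold in enumerate(folds) if j != i for r in fold]
        yield train, test


# Hypothetical record identifiers standing in for the 45 records used.
records = list(range(1, 46))
splits = list(record_based_folds(records))
```

Beats are then assigned to the training or test set according to the record they were segmented from, rather than being shuffled individually as in a beat-based scheme.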

Results and Discussion

The proposed CNN model was trained and tested in MATLAB 2014b on a PC workstation with a 3.70 GHz CPU and 16 GB of RAM. It takes about 1057 s to complete one epoch for set A and 1050 s to complete one epoch for set B. A total of twenty epochs of training and testing iterations are run for each of set A and set B.

Figure 4 displays the loss and accuracy over the training epochs for the networks trained on set A and set B (net A and net B), respectively. The training accuracies obtained for set A and set B are 0.9453 and 0.9482, respectively, which are almost the same. However, the SNR and MSE values obtained for the filtering approach in set B are 34.0172 and 0.1129, respectively, which demonstrates that significant noise is present in the raw signal. Thus, from the training accuracy we can conclude that the designed CNN model is insensitive to the noise in the original input ECG signal.

The classification performance (accuracy, sensitivity and specificity) for set A and set B is presented in Table 4. Notably, our proposed model obtains an accuracy of 0.9874, a sensitivity of 0.9811 and a specificity of 0.9905 for set A, and an accuracy of 0.9876, a sensitivity of 0.9813 and a specificity of 0.9907 for set B. The experimental results on both set A and set B show that our proposed approach performs well even without noise removal; accordingly, denoising is no longer necessary in our scenario. The designed CNN model can extract appropriate features and classify the ECG beats efficiently and automatically, which saves the considerable time otherwise spent looking for effective features. Additionally, ten-fold cross validation based on individual records is applied to further boost the robustness of our algorithm.

As explained in the “Methodology” section, the imbalanced dataset used in our experiments consists of 72896 normal beats, 7080 V beats and 7247 R beats. Such sample imbalance usually results in low sensitivity for a classification algorithm. However, to stay aligned with clinical needs, we do not artificially balance the data in order to improve the sensitivity of the results.
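The beat counts quoted here can be checked against the total and the abnormal share reported in the data acquisition description with a few lines of arithmetic. The variable names are illustrative.

```python
beat_counts = {"N": 72896, "V": 7080, "R": 7247}   # counts stated in the text

total_beats = sum(beat_counts.values())
abnormal_beats = beat_counts["V"] + beat_counts["R"]
abnormal_share = abnormal_beats / total_beats
```

The counts sum to 87223 beats, and the V and R beats together make up about 16.43% of them, matching the figures given for the dataset.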

Recent studies with good performance on the cardiac arrhythmia classification of ECG beats are summarized in Table 5. Compared with these state-of-the-art solutions, our proposed CNN model has the following advantages:

- (i) It can simplify the analysis of large wearable ECG data in arrhythmia detection applications and increase the classification accuracy.

- (ii) Record-based ten-fold cross validation is adopted, which guarantees the independence of the training and test sets, and further enhances the robustness of the proposed method.

- (iii) It performs well on both the original ECG signals and de-noised signals since the model can learn appropriate filters by itself. In addition, if the denoising step is removed, overall workload is also reduced.

Nevertheless, our CNN model also has some limitations. For instance, this research is based on three types of ECG beats only; the number of beat types therefore needs to be increased to satisfy clinical requirements.

Conclusion

In this article, a 1-D seven-layer CNN model is proposed for cardiac arrhythmia classification based on wearable big ECG data in arrhythmia monitoring applications. The model is able to extract appropriate features and distinguish three different types of ECG beats (R, V and N) automatically. It performs well on both the original ECG signals and de-noised signals; therefore, the computational workload can be reduced by removing the denoising step. Ten-fold cross validation under a record-based scheme (i.e., split by individual patients) is adopted for our model, which further enhances the robustness. The combination of wearable big data and artificial intelligence has narrowed the gap in daily healthcare. Our designed model and the corresponding algorithm provide opportunities for moving early cardiac arrhythmia detection from the clinic into daily life.

In the future, we plan to improve the current study mainly in two respects: (1) develop an ECG pattern recognition system that offers real-time services for smart home monitoring, and (2) investigate state-of-the-art unsupervised deep learning algorithms, for instance Generative Adversarial Networks, to identify unlabeled big ECG data.

References

Acharya, U. R., H. Fujita, O. S. Lih, Y. Hagiwara, J. H. Tan, and M. Adam. Automated detection of arrhythmias using different intervals of tachycardia ECG segments with convolutional neural network. Inform. Sci. 405:81–90, 2017.

Acharya, U. R., S. L. Oh, Y. Hagiwara, J. H. Tan, M. Adam, A. Gertych, and R. S. Tan. A deep convolutional neural network model to classify heartbeats. Comput. Biol. Med. 89:389–396, 2017.

Alsheikh, M. A., D. Niyato, S. Lin, H. P. Tan, and Z. Han. Mobile big data analytics using deep learning and apache spark. IEEE Netw. 30(3):22–29, 2016.

Bayram, I., and I. W. Selesnick. Frequency-domain design of overcomplete rational-dilation wavelet transforms. IEEE Trans. Signal Process. 57(8):2957–2972, 2009.

Bollepalli, S. C., S. S. Challa, and S. Jana. Robust heartbeat detection from multimodal data via cnn-based generalizable information fusion. IEEE Trans. Biomed. Eng. 99:1–1, 2018.

Dong, J., X. Miao, H. H. Zhu, and W. F. Lu. Wearable ECG recognition and monitor. In: IEEE Symposium on Computer-based Medical Systems, 2005.

Gallet, C., B. Chapuis, V. Oréa, A. Scridon, C. Barrès, P. Chevalier, and C. Julien. Automatic atrial arrhythmia detection based on rr interval analysis in conscious rats. Cardiovasc. Eng. Technol. 4(4):535–543, 2013.

Hagiwara, Y., H. Fujita, S. L. Oh, J. H. Tan, R. S. Tan, E. J. Ciaccio, and U. R. Acharya. Computer-aided diagnosis of atrial fibrillation based on ECG signals: a review. Inform. Sci. 467:99–114, 2018.

Hatipoglu, N. and G. Bilgin. Cell segmentation in histopathological images with deep learning algorithms by utilizing spatial relationships. Med. Biol. Eng. Comput. 55(10):1–20, 2017.

He, L., K. Ota, and M. Dong. Learning iot in edge: Deep learning for the internet of things with edge computing. IEEE Netw. 32(1):96–101, 2018.

Hsin, H. C., C. C. Li, M. Sun, and R. J. Sclabassi. An adaptive training algorithm for back-propagation neural networks. IEEE Trans. Syst. Man Cybernet. 25(3):512–514, 1992.

Huang, H., J. Liu, Q. Zhu, R. Wang, and G. Hu. A new hierarchical method for inter-patient heartbeat classification using random projections and rr intervals. BioMed. Eng. Online 13(1):90, 2014.

Isin, A., and S. Ozdalili. Cardiac arrhythmia detection using deep learning. Procedia Comput. Sci. 120:268–275, 2017.

Kiranyaz, S., T. Ince, and M. Gabbouj. Real-time patient-specific ECG classification by 1-d convolutional neural networks. IEEE Trans. Biomed. Eng. 63:664–675, 2016.

Kutlu, Y., and D. Kuntalp. Feature extraction for ECG heartbeats using higher order statistics of wpd coefficients. Comput. Methods Programs Biomed. 105(3):257–267, 2012.

Li, P., Y. Wang, J. He, L. Wang, Y. Tian, T. S. Zhou, T. Li, and J. S. Li. High performance personality heartbeat classification model for long-term ECG signal. IEEE Trans. Biomed. Eng. 1–1, 2016.

Luvisotto, M., Z. Pang, and D. Dzung. Ultra high performance wireless control for critical applications: challenges and directions. IEEE Trans. Indust. Inform. 99:1–1, 2016.

Mathews, S. M. A novel application of deep learning for single-lead ECG classification. Comput. Biol. Med. 99:53–62, 2018.

Pourbabaee, B., M. J. Roshtkhari, and K. Khorasani. Deep convolutional neural networks and learning ECG features for screening paroxysmal atrial fibrillation patients. IEEE Trans. Syst. Man Cybernet. Syst. 99:1–10, 2017.

Qin, Q., J. Li, Z. Li, Y. Yue, and C. Liu. Combining low-dimensional wavelet features and support vector machine for arrhythmia beat classification. Sci. Rep. 7(1):6067, 2017.

Qin, Q., J. Li, Y. Yue, and C. Liu. An adaptive and time-efficient ECG r-peak detection algorithm. J. Healthcare Eng. 2017(1): 1–14, 2017.

Reitsma, J. B., A. S. Glas, A. W. Rutjes, R. J. Scholten, P. M. Bossuyt, and A. H. Zwinderman. Bivariate analysis of sensitivity and specificity produces informative summary measures in diagnostic reviews. J. Clin. Epidemiol. 58(10):982–990, 2005.

Sannino, G., and G. D. Pietro. A deep learning approach for ECG-based heartbeat classification for arrhythmia detection. Future Generat. Comput. Syst. 86:446–455, 2018.

Schmidt, M., T. Hoang, and P. A. Iaizzo. The ability to reproducibly record cardiac action potentials from multiple anatomic locations: Endocardially and epicardially, in situ and in vitro. IEEE Trans. Biomed. Eng. 99:1–1, 2018.

Simopoulos, A. P. The importance of the omega-6/omega-3 fatty acid ratio in cardiovascular disease and other chronic diseases. Exp. Biol. Med. 233(6):674–688, 2008.

Suryaprakash, R. T., and R. Nadakuditi. Consistency and mse performance of music-based doa of a single source in white noise with randomly missing data. IEEE Trans. Signal Process. 63(18): 4756–4770, 2015.

Tan, J. H., Y. Hagiwara, W. Pang, I. Lim, S. L. Oh, M. Adam, R. S. Tan, M. Chen, and U. R. Acharya. Application of stacked convolutional and long short-term memory network for accurate identification of cad ECG signals. Comput. Biol. Med. 94:19–26, 2018.

Thomas, M., M. K. Das, and S. Ari. Automatic ECG arrhythmia classification using dual tree complex wavelet based features. AEU - Int. J. Electron. Commun. 69(4):715–721, 2015.

Zadeh, A. E., A. Khazaee, and V. Ranaee. Classification of the electrocardiogram signals using supervised classifiers and efficient features. Comput. Methods Programs Biomed. 99(2):179–194, 2010.

Zhang, Y., Y. Zhang, B. Lo, and W. Xu. Wearable ECG signal processing for automated cardiac arrhythmia classification using cfase-based feature selection. Expert Syst. 4: e12432, 2019.

Zhu, J., L. He, and Z. Gao. Feature extraction from a novel ECG model for arrhythmia diagnosis. Bio-med. Mater. Eng. 24(6):2883–91, 2014.

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China under Grant 62172340, in part by the Natural Science Foundation of Chongqing under Grant cstc2021jcyj-msxmX0041, in part by the Young and Middle-aged Senior Medical Talents Studio of Chongqing under grant ZQNYXGDRCGZS2021002, and in part by the Introduced Talent Program of Southwest University under Grant SWU020008.

Ethics declarations

Conflict of interest

None declared.

Ethical approval

Not required.

Additional information

Associate Editor Igor Efimov oversaw the review of this article.

Cite this article

Zhang, Y., Liu, S., He, Z. et al. A CNN Model for Cardiac Arrhythmias Classification Based on Individual ECG Signals. Cardiovasc Eng Tech 13, 548–557 (2022). https://doi.org/10.1007/s13239-021-00599-8

DOI: https://doi.org/10.1007/s13239-021-00599-8