Abstract

The outcomes of extensive-form games are the realisation of an exponential number of distinct strategies, which may or may not be Nash equilibria. The aim of this work is to determine whether an outcome of an extensive-form game can be the realisation of a Nash equilibrium, without recurring to the cumbersome notion of normal-form strategy. We focus on the minimal example of pure Nash equilibria in two-player extensive-form games with perfect information. We introduce a new representation of an extensive-form game as a graph of its outcomes and we provide a new lightweight algorithm to enumerate the realisations of Nash equilibria. It is the first of its kind not to use normal-form brute force. The algorithm can be easily modified to provide intermediate results, such as lower and upper bounds to the value of the utility of Nash equilibria. We compare this modified algorithm to the only existing method providing an upper bound to the utility of any outcome of a Nash equilibrium. The experiments show that our algorithm is faster by some orders of magnitude. We finally test the method to enumerate the Nash equilibria on a new instances library, that we introduce as benchmark for representing all structures and properties of two-player extensive-form games.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Extensive-form games represent players acting in sequence one after another (cf. [1]). These games are deployed to model situations in many domains, such as e.g., macroeconomics (cf. [2]), law (cf. [3]) and security (cf. [4]). However, from a computational standpoint, extensive-form games are not easy to deal with. Existing methods to compute solutions of a generic extensive-form game do not scale with the game size. In this work, we introduce a graph-based representation for finite extensive games with two players and perfect information. Such representation permits to resort to algorithms on graphs in the game resolution. Hence, building on what we denote graph form, we provide an efficient method to enumerate all the solutions of a game, i.e., to answer to the question whether or not a generic outcome of a game is a Nash equilibrium or not.

Computing Solutions. The standard solution of a game used in this work is the Nash equilibrium (cf. [5]), covering many foreseeable scenarios of game-theoretical models. The subgame perfect equilibrium (SPE) is the customary solution of extensive-form games (cf. [6]). A practical advantage of a SPE is that it can be computed with backward induction. On the other hand, the suitability of SPE and the possibility to employ different notions of equilibria beyond the SPE has been explored since its introduction (cf. [7]). For instance, the work [8] discusses the theoretical assumptions underlying the notion of SPE and, by restricting the horizon of acceptance of the backward induction principle acceptable by a player, it concludes that such solution does not provide complete insight into the possible equilibria. Later works, e.g., [9], show results of empirical tests on basic sample games proving that other Nash equilibria rather than the SPE are attained in practice. The discussion on refined equilibrium concepts is beyond the scope of this paper. Indeed, we are rather interested in analysing the concept of Nash equilibrium, whose fundamental role remains unquestioned. Extensive-form games, in particular, can have other non-SPE equilibria. In this work we analyse Nash equilibria independently from their properties and their corresponding refined equilibrium concepts.

To this respect, the algorithms provided in the present work offer insight into the whole set of Nash equilibria outcomes which can be ascribed to a given extensive-form game.

To the best of our knowledge, there is no efficient method to compute all the Nash equilibria of an extensive-form game. Identifying a Nash equilibrium for a generic game in normal form belongs to the class of PPAD-complete problems (cf. [10]) introduced by [11]. The most known algorithm to identify a Nash equilibrium is the Lemke-Howson algorithm (cf. [12]), that consists in identifying a completely labeled pair of vertices of two polytopes representing the game. Enumerating the Nash equilibria of a game corresponds to enumerating all the completely labeled pair of vertices of the two polytopes. Methods to solve such problem are inefficient and require a large amount of memory (cf. [13]). Recent algorithms have proven to be space efficient (cf. [14]), while others resort to parallel computing to overcome such issue (cf. [15]). In a generic extensive-form game, the number of strategies available to a player is typically exponential in the size of the game, defined as the number of its outcomes (cf. [16]). Transforming an extensive-form game in normal form is therefore highly inefficient, because it relies on the enumeration of an exponential number of strategies. The structure of extensive-form games can be exploited to introduce more efficient algorithms. A Mixed Integer Linear model introduced by [17] provides one Nash equilibrium and is linear in the size of the game. However, no information is provided on possible other Nash equilibria. This method proves to be highly efficient in zero-sum games (cf. [16]), but it might have exponential time complexity in a generic game. A variant of this method (cf. [18]) allows to find the extreme Nash equilibria, which are identified by all the vertices of the polytope corresponding to a Mixed Integer Linear model. Govindan and Wilson [19] describe how to adapt homotopy-based methods, conceived for nomal-form games, in order to determine an approximated Nash equilibrium for extensive-form games. However, how to control the set of equilibria returned by such procedures may not be immediate. To this respect, the graph-based enumeration proposed in this work represents, in its simplicity, a convenient algorithmic tool to provide pure Nash equilibria outcomes, even when the aim is to inspect those satisfying prescribed utility ranges. For a general introduction to homotopy-based methods, see [20].

Outcomes of Nash Equilibria. Since the number of strategy profiles is exponential in the size of the game, the number of Nash equilibria can also be potentially exponential. Throughout this work, the focus of the analysis is thus set on the outcomes of the Nash equilibria, rather than on the Nash equilibria themselves. This choice, which could be a trifling remark in a generic game, is relevant when dealing with extensive-form games for two reasons. First, the number of outcomes of Nash equilibria is proportional to the size of the game. Second, a large class of extensive-form games can be described in a compact way using their outcomes rather than enlisting the full game-three. We mention, among others, the important case of timing games, the case of pricing games and the case of scheduling games. In timing games (cf. [21]), players have to perform a single action within an interval of time, having the possibility of observing the actions of the opponents once they are taken. An outcome of a timing game is just a vector of time values, one for each player. Instead, the game-tree representation of a timing game, as well as that of the following games, requires to list all the possible combinations of choices that players can perform. In a pricing game (cf. [22]), companies have to adjust the prices of their goods in order to avoid churn towards competitors and maximise their profits. The outcomes are the vectors of prices chosen in discrete intervals of time. In the scheduling game (cf. [23]), ordered players choose in sequence a machine to perform one job, being aware of the decisions taken by their predecessors; the objective of any player is to minimise the congestion of the machine she chooses. The outcome of the game is a vector of assignments of players to the machines.

Contributions. In this paper, we provide solution methods for the enumeration of the outcomes of pure Nash equilibria in a two-player perfect-recall game. We chose this specific class of extensive-form games, because it can be described with the most convenient notation and it can be compared to the only other result known in the literature. Our main objective is to introduce a new algorithmic approach that focuses exclusively on the outcomes of extensive-form games. We believe that this method can be extended to a larger class of games, but such analysis is left to future works.

Our new representation of extensive-form games describes a game as a graph of its outcomes. Based on this, a method is provided to determine whether an outcome is the realisation of a Nash equilibrium of a two-player extensive-form game. Since the utility of every outcome is known, it is possible to determine upper (resp., lower) bounds to the utility of Nash equilibria for any player by inspecting them once ordered from the best to the worst (resp., from worst to best), rather than enumerating them all. Moreover, it is possible to enumerate the Nash equilibria whose realisations fit any given set of constraints on the utility by simply checking during our enumeration procedure the outcomes that meet such constraints. The method is more efficient when the games have specific structures. Indeed, it performs very well in games where outcomes can be compared without explicitly constructing the sequences of actions that lead to them. This is the case of games with a regular structure as described by examples later in the manuscript.

Summary. The paper is structured as follows. Section 2 presents extensive-form games with perfect information with a generic number of players. In Sect. 2.2, we describe a known method to bound the utility of the Nash equilibria of a two-player game. Section 3 introduces a new graph formulation of extensive-form games that allows a new characterisation of pure Nash equilibria in two-player games with perfect information. An equivalent formulation is discussed in Sect. 3.3 and used in Sect. 4 to achieve the following objectives: to identify a Nash equilibrium, to enumerate all Nash equilibria, to find an upper bound to their utility which is compared to the methods in literature (cf. [16]) and to identify the best and the worst Nash equilibrium for a player. Section 5 presents extended computational experiments of such methods on a given dataset of games. Section 6 ends the paper with some insights and possible research directions to extend the results to more generic extensive-form games. The reader can find examples of games in Appendix A to visualize the notions introduced in the article and detailed proofs of the theorems in Appendix B.

2 Extensive-Form Games

Notation. In this work we will use the following notation for vectors. A vector \(a = (a^1, \dots ,a^n)\) is an ordered sequence of elements \(a^k\) with \(k \in \{1,\dots ,n\}\). Given two vectors \(a = (a^1, \dots , a^n)\) and \(b = (b^1, \dots b^n)\), a concatenation of vectors \(a+b = (a^1, \dots , a^n, b^1, \dots , b^n)\) is represented by the operator \(+\). Given a concatenation of vectors \(a+b\), a is said to be a prefix of \(a+b\), and that \(a \le a+b\). A vector with no elements is called the empty vector \(\emptyset \) and \(\emptyset \le a\) for all a. Two vectors a and \(a^{\prime }\) might have a common prefix. We denote by \(c = a \cap a^{\prime }\) the longest common prefix of a and \(a^{\prime }\), i.e., the longest sequence such that \(c \le a\) and \(c \le a^{\prime }\).

2.1 Definitions

We consider discrete, finite extensive-form games with perfect information, whose feature is to have all information sets as singletons (cf. [1, 24]). We will describe this class of games with an operative definition which appears more suitable to our algorithmic framework than the standard one appearing in the literature. Note that, even if our definition is written specifically for finite extensive-form games with perfect information, it is indeed compliant with other ones appearing in the literature (cf. [25,26,27]). They are provided for a generic N-players extensive-form game, even though this manuscript tackles the 2-player case only.

Let \({\mathcal {I}} = \{1,\dots ,N\}\) be the set of players acting in a sequence of time instants. At each time instant, a player observes a history of actions \(h^{\prime }\) and picks an action \(a \in {\mathcal {A}}(h^{\prime })\). In particular, at the beginning, the first player to act observes no actions \(h^0 = \emptyset \) and thus can act within a set of M actions \({\mathcal {A}}(h^0) = \{a_1^0,\dots ,a_M^0\}\). Let us say that for instance she picks \(a^{0} = a^0_{m} \in {\mathcal {A}}(h^0)\); the second player observes \(h^1 = (a^0)\) and can thus pick an action \(a^1 \in {\mathcal {A}}(h^1)\). Iterating at each instant this procedure, we define the set of histories \(H^{\prime }\). Accordingly, there is a function \({\mathcal {A}}: h^{\prime } \in H^{\prime } \mapsto A\) that maps every history \(h^{\prime }\) to the set of actions A available to the player observing history \(h^{\prime }\). The game ends when there is no actions left. Formally, there is a subset \(H \subset H^{\prime }\) such that \({\mathcal {A}}(h) = \emptyset \) for all \(h \in H\). Such terminal histories are called outcomes. Every outcome \(h \in H\) is evaluated by a function \(u_{i}\) that maps it to the value \(u_i(h) \in {\mathbb {R}}\) assigned by player i to h.

Definition 1

(extensive-form game) An extensive-form game is a tuple \(\Gamma = \langle {\mathcal {I}}, {\mathcal {A}}, H^{\prime }, H, P, u \rangle \), where:

-

\({\mathcal {I}} = \{1,\dots ,N\}\) is the set of players;

-

\(H^{\prime }\) is the set of histories with \(\emptyset \in H^{\prime }\);

-

\({\mathcal {A}}: h^{\prime } \in H^{\prime } \rightarrow A\) is a function that provides for every history a set of actions, i.e., for all \(a \in A = {\mathcal {A}}(h^{\prime })\), we have \(h^{\prime } + (a) \in H^{\prime }\);

-

\(H = \{h \in H^{\prime } | {\mathcal {A}}(h) = \emptyset \} \subset H^{\prime }\) is the set of outcomes;

-

\(P: H^{\prime } \setminus H \rightarrow {\mathcal {I}}\) is a function that indicates which player \(P(h^{\prime }) \in {\mathcal {I}}\) acts after observing the history \(h^{\prime } \in H^{\prime } \setminus H\);

-

\(u = (u_i)_{i \in {\mathcal {I}}}\), with \(u_i: H \rightarrow {\mathbb {R}}\), is the utility function.

The size of a finite extensive form game is the number of outcomes. In the literature, the standard representation of the game is the tree of possible histories (cf. Appendix A.1). Hence, we alternatively call node a history observed by a player. The standard definition of a strategy identifies the single action chosen by a player after observing a history. In this paper, we consider pure strategies as we leave to future works the analysis and algorithmic solution of the games in mixed strategies.

Definition 2

(strategy) Given a game \(\Gamma = \langle {\mathcal {I}}, {\mathcal {A}}, H^{\prime }, H, P, u \rangle \) and a player \(i \in {\mathcal {I}}\), we pick all the histories at which the player acts: \(H_i = \{h^{\prime } \in H^{\prime } {\setminus } H | P(h^{\prime }) = i\}\). A strategy \(s_i\) is a function \(s_i: h^{\prime } \in H_i \mapsto a \in {\mathcal {A}}(h^{\prime })\) that maps every observed history \(h^{\prime } \in H_i\) to an action \(a \in {\mathcal {A}}(h^{\prime })\) available to the player i. Let \(S_i\) denote the set of all strategies of player i.

If every player picks a strategy, we have a tuple of strategies \(s = \langle s_1,s_2,\dots ,s_N\rangle \), that we call strategy profile. If we consider a strategy profile, for every history there will be an action to be played. Eventually, this sequence of actions makes an outcome. Such outcome is defined as the realisation of the strategy profile. In the following sections we write \(s \mapsto h\) to identify the unique realisation h of the strategy profile s. Moreover, with some abuse of notation, we write u(s), i.e., the utility of a strategy profile s, to indicate \(u(s) = u(h: s \mapsto h)\).

The solution of a game is an equilibrium, i.e., a situation in which players to pick strategies that they do not want to change. The Nash equilibrium is a combination of strategies for which the players find it convenient not to deviate unilaterally. More specifically, if the other players do not change their strategies \(s_{-i} = (s_j)_{j \in {\mathcal {I}} \setminus \{i\}}\), the player i has no interest in changing her own strategy \(s_i\) because she would not improve her utility. This concept of Nash equilibrium is hereafter defined.

Definition 3

(Nash equilibrium) Given a game \(\Gamma = \langle {\mathcal {I}}, H, u \rangle \), we say that a strategy profile \(\langle \overline{s}_i\rangle _{i \in {\mathcal {I}}}\) is a Nash equilibrium if for every \(i \in {\mathcal {I}}\) and for all \(s_i \in S_i\):

An extensive-form game with perfect information always admits at least one Nash equilibrium in pure strategies (cf. [6]).

2.2 Bounds for Nash Equilibria in Two-Player Games

The most efficient methods to provide an upper bound to the utility of Nash equilibria have been introduced for two-player extensive-form games. We suppose that the games are not zero-sum, since such subcategory of games has already been fully studied (cf. [16]).

Given a two-player extensive-form game \(\Gamma = \langle {\mathcal {I}} = \{1,2\},{\mathcal {A}}, H^{\prime }, P, u \rangle \), we consider the following optimization problem [ST]:

This bilevel optimization problem has linear complexity in the number of the strategies of the game. We note however that they might still be exponential in the game size, i.e., in number of outcomes (cf. [16]). Indeed, let us for instance consider a game represented by a complete binary tree, i.e., a game in which every node has two actions. Given \(|H^{\prime } {\setminus } H|\) the number of non-leaf nodes of the game tree, it is easy to show that there are \(2^{|H^{\prime } \setminus H|}\) strategy profiles and \(|H^{\prime } \setminus H|+1\) outcomes. However, a different formulation of [ST] introduced in (cf. [16]) can be written in the form of a bi-level linear program with a number of variables and a number of constraint inequalities that grow linearly with respect to the tree size. Such formulation, which considers mixed strategies, is discussed in details in Sect. 4.2 and it is adapted to solve games in pure strategies.

An optimal solution of [ST] provides an upper bound to the utility of the first player of all the Nash equilibria of the game (cf. [28]).

Theorem 1

Let us consider \(\overline{S}\) the set of feasible solutions \(\overline{s} = (\overline{s}_1,\overline{s}_2) \in S_1 \times S_2\) of [ST] and the optimum value \(\overline{U}_1 = \sup _{\overline{s} \in \overline{S}} u_1(\overline{s})\). Given a Nash equilibrium \(s^* \in S_1 \times S_2\) of the game \(\Gamma \), it holds:

Proof

It is sufficient to prove that any Nash equilibrium is a feasible solution of [ST]. Indeed, since \(s^*\) is a Nash Equilibrium, we have that for all \(s_2 \in S_2\):

Therefore it holds that \(s_2^* = \mathop {\mathrm {arg\,max}}\limits _{s_2 \in S_2} u_2(s^*_1,s_2)\). \(\square \)

3 Graph Form

3.1 A Representation of Extensive-Form Games as Graphs of Outcomes

In this section, we introduce a new representation of a two-player game in extensive form with perfect information as an undirected graph of its outcomes. The goal of this representation is to focus on the outcomes, in order to identify which of them are realisations of the Nash equilibria. We next put in relation the strategies of the players with the outcomes of the game.

If a player chooses a strategy, she limits the number of outcomes that are reachable by the other player. We formalise this observation by associating a strategy to a subset of outcomes that represent it. We recall that every strategy profile \((s_1,s_2) \in S_1 \times S_2\) can be mapped to its realisation \(h: (s_1,s_2)\mapsto h\). Given a strategy \(s_1 \in S_1\), we consider the set of possible outcomes \(H(s_1)\) which are realisations of strategy profiles \((s_1,s_2)\) which include \(s_1 \in S_1\) as a strategy of the first player and any \(s_2 \in S_2\) as a strategy of the second player.

Definition 4

(outcomes of a strategy) Given a two-player game \(\Gamma = \langle {\mathcal {I}} = \{1,2\}, H,u \rangle \) and a strategy \(s_1 \in S_1\), the set of outcomes \(H(s_1) \subset H\) of strategy \(s_1\) is:

In order to understand which elements belong to the set of outcomes of a strategy, we introduce a new property, called compatibility. This property enables to identify two outcomes that can be obtained by the same strategy chosen by a given player.

Definition 5

(compatibility) Given a two-player game \(\Gamma = \langle {\mathcal {I}} = \{1,2\}, H,u \rangle \), we say that two outcomes \(h,h^{\prime }\in H\) are compatible for player \(i \in {\mathcal {I}}\) if there is a strategy \(s_i \in S_i\) such that \(h \in H(s_i)\) and \(h^{\prime } \in H(s_i)\).

Remark

Since we discuss only two-player games, i.e., \({\mathcal {I}} = \{1,2\}\), we arbitrarily choose one player to be the first player (e.g., \(i_1 = 1\)) and one to be the second player (e.g. \(i_2 = 2\)). Later in this section it is shown that such choice can be arbitrary. If not specified, we refer to two outcomes as compatible if they are compatible for player 1. If two outcomes \(h,h^{\prime }\in H\) are compatible, there are a strategy \(s_1 \in S_1\) and two strategies \(s_2,s^{\prime }_2 \in S_2\) such that \((s_1,s_2) \mapsto h\) and \((s_1,s^{\prime }_2) \mapsto h^{\prime }\).

If two outcomes can be produced by the same strategy, the first player always takes the same decisions at every node. Lemma 2 proves that this condition is not only necessary but sufficient. Formally, given two outcomes \(h,h^{\prime } \in H\) it is necessary to observe at which node the history starts to be different; such node is identified by their longest common prefix \(h \cap h^{\prime }\).

Lemma 2

We consider a two-player game \(\Gamma = \langle {\mathcal {I}} = \{1,2\}, H,P,u \rangle \). Two outcomes \(h,h^{\prime } \in H\) are compatible if and only if \(P(h \cap h^{\prime }) = 2\).

Proof

(i) First we prove that \(P(h \cap h^{\prime }) = 2\) implies that h and \(h^{\prime }\) are compatible, then (ii) we prove that \(P(h \cap h^{\prime }) = 1\) implies that h and \(h^{\prime }\) are not compatible.

(i) Let us suppose that \(P(h \cap h^{\prime }) = 2\). We need to define \(s_1 \in S_1\) and \(s_2,s^{\prime }_2 \in S_2\) such that \((s_1,s_2) \mapsto h\) and \((s_1,s_2^{\prime }) \mapsto h^{\prime }\). We recall that a strategy of the first player is a function associating an action to each partial history \(h^{\prime \prime } \in H^{\prime }\) in the tree observed by the first player, i.e., such that \(P(h^{\prime \prime }) = 1\). For each \(h^{\prime \prime } \le h\) (\(h^{\prime \prime } \le h^{\prime }\)) if \(P(h^{\prime \prime }) = 1\) we define a strategy \(s_1 \in S_1\) such that \(h^{\prime \prime } + (s_1(h^{\prime \prime })) \le h\) (\(h^{\prime \prime } + (s_1(h^{\prime \prime })) \le h^{\prime }\)). Analogously, we define a strategy \(s_2 \in S_2\), such that for each \(h^{\prime \prime } \le h\) with \(P(h^{\prime \prime }) = 2\) we have \(h^{\prime \prime } + (s_2(h^{\prime \prime })) \le h\), and a strategy \(s^{\prime }_2 \in S_2\), such that for each \(h^{\prime \prime } \le h^{\prime }\) with \(P(h^{\prime \prime }) = 2\) we have \(h^{\prime \prime } + (s^{\prime }_2(h^{\prime \prime })) \le h^{\prime }\). For any other \(h^{\prime \prime } \nleq h\) and \(h^{\prime \prime } \nleq h^{\prime }\) we take an arbitrary decision for defining \(s_1\), \(s_2\), \(s^{\prime }_2\). By construction, we have \((s_1,s_2) \mapsto h\) and \((s_1,s_2^{\prime }) \mapsto h^{\prime }\).

(ii) Let us now suppose that \(P(h \cap h^{\prime }) = 1\). Let \((s_1,s_2)\) and \((s^{\prime }_1,s^{\prime }_2)\) be two strategy profiles such that \((s_1,s_2) \mapsto h\) and \((s^{\prime }_1,s^{\prime }_2) \mapsto h^{\prime }\). It is impossible to have \(s_1=s^{\prime }_1\) since \(P(h \cap h^{\prime }) = 1\) implies \(s_1(h \cap h^{\prime }) \ne s^{\prime }_1(h \cap h^{\prime })\), from which we can conclude that h and \(h^{\prime }\) are not compatible. \(\square \)

Based on the definition of compatibility, it is possible to build a graph of compatibilities among all the outcomes of a game \(\Gamma \), or the graph form for short.

Definition 6

(graph form) The graph of compatibility of a two-player game \(\Gamma = \langle {\mathcal {I}} = \{1,2\}, H,u \rangle \) is a tuple \(\Gamma = \langle H,E,u\rangle \), where H is the set of outcomes as nodes of the graph, \(E \subset H^2\) is the set of edges connecting any two compatible outcomes and \(u: H \rightarrow {\mathbb {R}}^2\) is the utility function that assigns a pair of weights to every node.

Remark

In the following of the article we sometimes omit the transformation of an extensive-form game \(\Gamma \) into its graph form \(\langle H,E,u \rangle \), which can be performed by application of Lemma 2, and therefore we directly introduce the game by representing it in its graph form \(\Gamma = \langle H,E,u \rangle \).

3.2 Characterisation of Nash Equilibria in the Graph Form of the Game

Given a game \(\Gamma \), let us characterise the outcomes \(H(s_1)\) of a strategy \(s_1 \in S_1\) on its graph form. By definition, such outcomes are all compatible with one another. Let us consider the nodes on the graph corresponding to the outcomes \(H(s_1)\); they induce a clique and, as we show next, such clique is maximal.

Lemma 3

Consider a two-player game \(\Gamma = \langle {\mathcal {I}} = \{1,2\}, H,u \rangle \) with its graph form \(\Gamma = \langle H,E,u\rangle \). For every strategy \(s_1 \in S_1\), the set \(H(s_1) \subset H\) forms a maximal clique of the graph \(\langle H,E,u\rangle \).

Proof

According to Definition 5, we have that \(H(s_1)\) induces a clique on the graph. Consider any outcome \(h \in H \setminus H(s_1)\). Since \(h \notin H(s_1)\) there is a partial history \(h^{\prime \prime } \in H^{\prime }\), with \(h^{\prime \prime } \le h\) and \(P(h^{\prime \prime }) = 1\), such that the subsequent action \(a_{k+1} \in {\mathcal {A}}(h^{\prime \prime })\) is not chosen by strategy \(s_1\), i.e., \(a_{k+1} \ne s_1(h^{\prime \prime })\). Consider now an outcome \(h^{\prime } \in H(s_1)\) such that \(h^{\prime \prime } + (s_1(h^{\prime \prime })) \le h^{\prime }\).

Since \(P(h\cap h^{\prime })=1\), from Lemma 2h and \(h^{\prime }\) are not compatible. Since this argument holds for every \(h \in H {\setminus } H(s_1)\), we have that \(H(s_1)\) forms a maximal clique. \(\square \)

We observe that to every maximal clique of the graph there is at least one strategy whose set of outcomes corresponds to it. We prove that it is always true in Lemma 4.

Lemma 4

Let us consider a two-player game \(\Gamma = \langle {\mathcal {I}} = \{1,2\}, H,u \rangle \) with its graph form \(\Gamma = \langle H,E,u\rangle \). For every set of vertices \({\mathcal {C}}\) that induces a maximal clique on the graph \(\langle H,E\rangle \), there is a strategy \(s_1 \in S_1\) such that \({\mathcal {C}} = H(s_1)\).

Proof

We first show that \({\mathcal {C}} \subset H(s_1)\) for a given \(s_1 \in S_1\). For this, we consider a strategy \(s_1 \in S_1\) such that, for all \(h \in {\mathcal {C}}\) and all \(h^{\prime \prime } \le h\) such that \(P(h^{\prime \prime }) = 1\), we have that \(h^{\prime \prime } + s_1(h^{\prime \prime }) \le h\). Such strategy exists and it is defined by applying a procedure similar to the one used in the proof of Lemma 2; we recall that \(P(h \cap h^{\prime }) = 2\) for each pair of compatible outcomes \(h,h^{\prime } \in {\mathcal {C}}\).

Therefore \({\mathcal {C}} \subseteq H(s_1)\). But, from Lemma 3, \(H(s_1)\) induces a maximal clique and thus \({\mathcal {C}} = H(s_1)\). \(\square \)

Lemmas 3 and 4 establish that there exists a bijection between a partition of the set of strategies of the game and the set of maximal cliques in the graph form. An illustration is given in Fig. 7b. A similar result is obtained for the set of strategies of the second player on the complementary graph.

Lemma 5

For every two-player game \(\Gamma = \langle {\mathcal {I}} = \{1,2\}, H,P,u \rangle \) with its graph form \(\Gamma = \langle H,E,u\rangle \), there is a bijection between a partition of the set of strategies of the second player \(S_2\) and the set of maximal cliques of the complementary graph \(\langle H,E^C\rangle \), where \(E^C = \{(h,h^{\prime }) \in H^2 | h \ne h^{\prime }, (h,h^{\prime }) \notin E\}\).

Proof

Given two outcomes \(h,h^{\prime } \in H\), we have that \(P(h \cap h^{\prime }) \in \{1,2\}\). Therefore \(P(h \cap h^{\prime }) = 2\) if and only if \(P(h \cap h^{\prime }) \ne 1\). Lemma 2 thus determines that the graph form of the second player is complementary to the one of the first player. The result follows from Lemmas 3 and 4. \(\square \)

Choosing a strategy for the first player implies picking a maximal clique in the graph \(\langle H,E\rangle \). Furthermore, one can observe that with an analogous method it is possible to build a graph for the strategies of the second player. However, thanks to Lemma 5 it is not necessary to perform further computations, because such graph is complementary to \(\langle H,E\rangle \).

Enabling Strategy. We observe that the minimal representation of strategies as sets of outcomes can be obtained by applying the concept of enabling strategy of [19] to games with perfect information. Given a player \(i \in {\mathcal {I}}\) and an outcome \(h \in H\), an enabling strategy \(S_i(h) = \{s_i \in S_i | h \in S_i\}\) is the set of strategies of player \(i \in {\mathcal {I}}\) leading to outcome \(h \in H\). Enabling strategies are the minimal representation of the set of strategies with respect to specific properties of the space of strategies. In games with perfect information and two outcomes \(h_1,h_2 \in H\), there is only one player \(i \in {\mathcal {I}}\) such that \(S_i(h_1) \ne S_i(h_2)\). The previous Lemmas can be obtained by combining this property with the known properties of enabling strategies (cf. [19]).

As anticipated in Sect. 2, we are interested in identifying Nash equilibria outcomes in pure strategies. We recall that a Nash equilibrium is a strategy profile in which none of the players is interested in changing her own strategy unilaterally.

Best responses and outcomes. The standard way to define a best response for an extensive-form game entails to refer to its equivalent strategic form. In this case, if a player picks a strategy, the other player will choose a best response, i.e., a strategy such that her utility is maximized. A Nash equilibrium can be identified as a mutual best response accordingly. We now provide a connection between this fundamental definition of equilibrium based on the strategic form and properties of the outcomes in the graph form of the game. In fact, every designated outcome h corresponds to one or more strategies \(s_1\) for the first player identified by a maximal clique \({\mathcal {C}}_1\) on the graph form which includes h. In turn, for every element \(h^{\prime } \in {\mathcal {C}}_1\), the second player can choose a strategy \(s_2\) whose corresponding set of outcomes \({\mathcal {C}}_2\) on the complementary graph includes \(h^{\prime } \in {\mathcal {C}}_2\). The second player has an incentive to pick the element within \({\mathcal {C}}_1\) that maximises her utility, a condition that thus h must fulfill in order to avoid deviations from the second player. A similar argument can be put forward by inverting the players. Note that a single outcome may correspond to multiple strategy pairs. Thus, in the graph form, determining if an outcome corresponds to a Nash equilibrium means to answer to the question if there exists at least a pair of maximal cliques respectively on the graph and on the complementary graph containing this outcome, and such that their intersection is a mutual best response in the corresponding strategic form.

The best response of the second player leads to the outcome which is most preferred by the second player within the maximal clique \({\mathcal {C}}_1\) chosen by the first player. If a vertex \(h \in H\) corresponds to the outcome of a best response of the second player, two conditions must hold. First, there must be a maximal clique \({\mathcal {C}}_1\) which includes it, i.e., \(h \in {\mathcal {C}}_1\), so that the second player can choose it. Second, such maximal clique \({\mathcal {C}}_1\) should not include all the nodes \(X^h\) which are preferred to h by the second player, so that the second player has an incentive to choose h and no other outcome. Whether such clique exists is the problem [MC] formalised hereafter.

Problem 6

(MC) Existence of a maximal clique including h and excluding \(X^h\).

INSTANCE: \(\langle H,E,h,X^h\rangle \) defining a graph \(\langle H,E\rangle \), a vertex \(h \in H\) and a subset of vertices \(X^h \subset H\) with \(h \notin X^h\).

QUESTION: Is there a vertex set \({\mathcal {C}} \subset H {\setminus } X^h\) with \(h \in {\mathcal {C}}\) that induces a maximal clique on \(\langle H,E\rangle \)?

We would like to know if an outcome h is the realisation of a Nash equilibrium. Hence, a maximal clique including the corresponding vertex h and excluding \(X^h = \{h^{\prime } \in H | u_2(h^{\prime }) > u_2(h)\}\) ensures that the first player has a strategy to induce the second player onto the desired outcome h.

Example

In the game of Fig. 6 the second player prefers \(X^h = \{h_2,h_3,h_4\}\) to \(h = h_6\). In the graph of Fig. 7a we observe that there is no maximal clique including \(h_6\) and excluding all the elements of \(X^h\). Therefore \(h_6\) cannot correspond to a best response of the second player.

Furthermore, by applying the same arguments on the complementary graph we conclude that it is possible to determine whether an outcome is the realisation of a best response also of the first player. Specifically, it is necessary to identify a maximal clique on the complementary graph such that the vertices corresponding to the outcomes preferred by the first player are excluded.

Example

In the graph of Fig. 7a the first player prefers \(X^h = \{h_2,h_3,h_5,h_6\}\) to \(h = h_1\). There is no maximal clique on the complementary graph of Fig. 7a, i.e., there is no independent set, that includes \(h_1\) and none of the elements in \(X_h\). Therefore \(h_1\) cannot be a best response of the first player.

Theorem 7 combines these findings and provides a characterisation of a Nash equilibrium in the graph form.

Theorem 7

Let us consider a two-player game in its graph form \(\Gamma = \langle H,E,u\rangle \) and an outcome \(h \in H\). We consider \(X_1^h = \{h^{\prime } \in H | u_1(h^{\prime }) > u_1(h)\}\) and \(X_2^h = \{h^{\prime } \in H | u_2(h^{\prime }) > u_2(h)\}\), the sets of outcomes preferred to h, respectively, by the first and the second player. The outcome \(h \in H\) is a realisation of a Nash equilibrium if and only if the problem [MC] has true as answer both providing as input \(\langle H,E,h,X_2^h\rangle \) and \(\langle H,E^C,h,X_1^h\rangle \).

The proof is provided in Appendix B.1. Such result allows us to develop methods that compute Nash equilibria without listing all the strategies of the players, which are often in exponential number with respect to the size of the game (cf. Section 2). Such methods will be discussed in the following sections.

3.3 Analysis of the Main Problem and its Complexity

The complexity of identifying a Nash equilibrium outcome on a game in its graph form depends on the complexity of solving two instances of problem [MC]: one with input \(\langle H,E^C,h,X_1^h\rangle \) and another with input \(\langle H,E,h,X_2^h\rangle \), as defined in the previous section. In this section, we evaluate the complexity of problem [MC] with input \(\langle H,E,h,X^h \rangle \) for a generic graph \(\langle H,E\rangle \). More specifically, we first define a variant [EC] of problem [MC] and then we show that the two problems are equivalent, i.e., that a solution of problem [EC] provides a solution of problem [MC] and conversely.

Let us consider a generic problem [MC] with input \(\langle H,E,h,X^h \rangle \). First, we argue that when solving [MC] the problem can be restricted to the neighbourhood of \(h \in H\), \(V^h = \{h^{\prime }, (h,h^{\prime }) \in E\}\). Indeed, let us suppose that there is a maximal clique induced by a vertex set \({\mathcal {C}} \subset H\) with \(h \in {\mathcal {C}}\) excluding \(X^h \subset H\). Since the clique is maximal, it must hold that, for every vertex \(h^{\prime } \in X^h\), there is at least one vertex \(h \in {\mathcal {C}}\) which is not connected to \(h^{\prime }\), i.e., such that \((h,h^{\prime }) \notin E\). Those vertices in \(X^h\) who are not in the neighborhood \(V^h\) always fulfill this property, as \(h \in {\mathcal {C}}\). Therefore, instead of considering all the vertices in \(X^h\), we can restrict the problem to \(X = X^h \cap V^h\). With this argument, we conclude that the vertex set \({\mathcal {C}}\) belongs to the neighborhood \(V^h\).

Example

Consider the graph of Fig. 1a, in which the set \({\mathcal {C}} = \{h,h_2,h_3,h_4\}\) induces a maximal clique. The vertices \(X^h \setminus V^h = \{h_5,h_{10}\}\) are not connected to h and the vertex \(H {\setminus } X^h {\setminus } V^h = \{h_9\}\) can never belong to the vertex set \({\mathcal {C}}\) that induces the maximal clique. Therefore we can restrict the problem from H to \(\{h_1,h_2,h_3,h_4,h_6,h_7,h_8\}\).

Equivalence of problems [MC] and [EC]. a Let us consider problem [MC] with \(H = \{h_1,h_2,h_3,h_4,h_5,h_6,h_7,h_8,h_9,h_{10}\}\) and \(X^h = \{h_5,h_6,h_7,h_8,h_{10}\}\). A maximal clique that solves [MC] is \({\mathcal {C}} = \{h,h_2,h_3,h_4\}\); b Let us consider problem [EC] with \(V = \{h_1,h_2,h_3,h_4\}\) and \(X = \{h_6,h_7,h_8\}\). A clique that solves [EC] is \({\mathcal {C}}^{\prime } = \{h_2,h_3\}\)

Let us thus consider a slightly different problem [EC].

Problem 8

(EC) Existence of an excluding clique.

INSTANCE: \(\langle V,X,E\rangle \) defining a graph \(\langle V \cup X,E\rangle \) with \(V \cap X = \emptyset \).

QUESTION: Is there a vertex set \({\mathcal {C}} \subset V\) that induces a clique on \(\langle V,E\rangle \) that is maximal in \(\langle {\mathcal {C}} \cup X,E\rangle \)?

Theorem 9 shows that the problem [MC] with input \(\langle H,E,h,X^h \rangle \) can be solved by means of problem [EC] with a different input derived by the input of problem [MC].

Example

Indeed let us consider the problem [MC] depicted in Fig. 1a and its restriction to the neighborhood of h of Fig. 1b. The problem [MC] requires to identify a maximal clique that has no elements in \(X^h\) and includes h, such as \(\{h,h_2,h_3,h_4\}\) or \(\{h,h_1,h_2\}\). The problem [EC] requires to identify a vertex set \({\mathcal {C}}^{\prime }\) on \(V = \{h_1,h_2,h_3,h_4\}\) that induces a clique such that for all elements in \(X = \{h_6,h_7,h_8\}\) there is at least one element in \({\mathcal {C}}^{\prime }\) not connected to it. Such cliques are \(\{h_1\}\), \(\{h_1,h_2\}\), \(\{h_2,h_3\}\), \(\{h_3,h_4\}\) and \(\{h_2,h_3,h_4\}\). For instance, let us consider \(\{h_2,h_3\}\): the vertex \(h_6\) is not connected to \(h_3\), while the vertices \(h_7\) and \(h_8\) are not connected to \(h_2\).

Proposition 9

Let us consider a graph \(\langle H,E\rangle \), a subset of vertices \(X^h \subset H\) and a vertex \(h \in H \setminus X^h\). Let us define \(V^h = \{h^{\prime } | (h,h^{\prime }) \in E\}\), \(X = X^h \cap V^h\), \(V = V^h {\setminus } X\) and \(E|_{V \cup X} = \{(h^{\prime },h^{\prime \prime }) \in E | h^{\prime } \in V, h^{\prime \prime } \in V \cap X\}\). The problem [MC] with input \(\langle H,E,h,X^h \rangle \) has true as answer if and only if the problem [EC] with input \(\langle V,X,E|_{V \cap X} \rangle \) has.

The proof is provided in Appendix B.2.

We observe that problem [EC] requires to prove the existence of a clique rather than identifying a maximal clique, as in problem [MC]. Moreover, the input of [EC] is a smaller graph induced by the neighborhood of one vertex, h, in graph of [MC]. Therefore, from now on, let us focus on the analysis of [EC]. In the following theorem, we prove that [EC] is NP-complete. Indeed, we reduce it to the problem of the existence of the dominating clique [DC], which is known to be NP-complete (cf. [29]).

Problem 10

(DC) Existence of a dominating clique

INSTANCE: A graph \(\langle H,E\rangle \).

QUESTION: Is there a vertex set \({\mathcal {C}} \subset H\) that induces a clique on the graph such that for every vertex \(h^{\prime \prime } \in H {\setminus } {\mathcal {C}}\) there is a vertex \(h^{\prime } \in {\mathcal {C}}\) such that \((h^{\prime },h^{\prime \prime }) \in E\)?

Theorem 11

In a generic graph the problem [EC] is NP-complete.

Proof

We present next a polynomial reduction from [DC] to [EC]. The argument of the proof is illustrated in Fig. 2. We consider the problem [DC] with input \(\langle H,E \rangle \) and define two vertex sets \(V = {\tilde{H}}\) and \(X = \tilde{{\tilde{H}}}\), where \({\tilde{H}}\) and \(\tilde{{\tilde{H}}}\) are copies of set H. Let us also define a set of edges \(E^{\prime } = \{(i,j)|i,j \in V,(i,j) \in E\} \cup \{(i,j)|i \in V, j \in X, (i,j) \notin E\}\). We consider the problem [EC] with input \(\langle V,X,E^{\prime } \rangle \). By construction, the input has size \(O(|H|^2)\), i.e., it is polynomial in the size of the input of problem [DC]. We prove now that [DC] admits answer true if and only if the same happens for [EC] with input \(\langle V,X,E^{\prime } \rangle \). If [DC] has answer true, there exists a vertex set \({\mathcal {C}} \subset H\) that: (a) induces a clique on \(\langle H,E \rangle \); (b) and such that, for each \(j \in H\setminus {\mathcal {C}}\), there is a \(i \in {\mathcal {C}}\) such that \((i,j) \in E\). From (a) and the definition of sets V and \(E^{\prime }\), there is a copy of \({\mathcal {C}} \subset V\) defining a clique in graph \(\langle V\cup X,E^{\prime } \rangle \). Also, from the definition of \(E^{\prime }\) and from (b), for each \(j \in X\) there is a \(i \in {\mathcal {C}}\) such that \((i,j) \notin E^{\prime }\). Therefore \({\mathcal {C}}\) provides also an answer true for [EC].

We now prove that an answer true for [EC] provides an answer true also to [DC]. A vertex set \({\mathcal {C}}\subset V\) that induces a clique on \(\langle V \cup X,E^{\prime } \rangle \), clearly defines a clique on \(\langle H,E\rangle \). It holds that for all \(j \in X\) there is \(i \in {\mathcal {C}}\) such that \((i,j) \notin E^{\prime }\). Since \(X = {\tilde{H}}\) and \(V = \tilde{{\tilde{H}}}\), with \({\tilde{H}}\) and \(\tilde{{\tilde{H}}}\) copies of H, we have that for all \(j \in H{\setminus } C\) there is a vertex \(i \in {\mathcal {C}}\) such that \((i,j) \in E\). Therefore \({\mathcal {C}}\) provides also a solution true for [DC]. \(\square \)

On the Graph of an Extensive-Form Game. We have just proved that problem [EC] is NP-complete for a generic graph. However, the graph generated by an extensive-form game is not a generic one. Indeed, it is possible to identify a graph that corresponds to no extensive-form games. The graph \(\langle H, E \rangle \) of Fig. 3 does not represent any extensive-form game (cf. Appendix A.5).

4 New Methods for the Identification of Nash Equilibria

In this section, the theoretical results introduced in Sect. 3 are applied to derive new methods for the computation of outcomes of Nash equilibria in two-player extensive-form games with perfect information. Theorem 7 provides a necessary and sufficient condition for an outcome to be a realisation of a Nash equilibrium. This result is the pillar for the development of computational methods to the following questions: (i) whether it is possible to enumerate the Nash equilibria and (ii) whether the realisation of Nash equilibria can achieve a value of utility with a given range of values.

In what follows, we exploit the results of Sect. 3.3 and introduce a linear system that enables to determine if an outcome is the realisation of a Nash equilibrium. Relying on this linear system, we introduce a method to enumerate all Nash equilibria outcomes in Sect. 4.1, a method to provide an upper bound to their utility in Sect. 4.2 and a method to provide the best or worst Nash equilibrium outcomes for any player in Sect. 4.3. The latter is compared to the one provided by the optimization problem by [16].

4.1 Nash Equilibrium

The condition to verify if an outcome is the realisation of a Nash equilibrium introduced in Theorem 7 requires to solve two instances of problem [EC] with input \(\langle V \cup X,E\rangle \) such that \(V \cap X = \emptyset \). We recall that the solution obtained by solving the problem allows to identify some possible outcomes \({\mathcal {C}} \subset V\) of a strategy within the set V such that we have the guarantee that the elements X preferred by the opponent are not included. We provide a formulation [CL] of problem [EC]:

Formulation [CL] models any feasible solution of problem [EC]: \(x_i = 1\) if and only if \(i \in {\mathcal {C}}\). Constraints \((CL-1)\) impose that \({\mathcal {C}}\) induces a clique, while constraints \((CL-2)\) guarantee that every vertex \(j \in X\) is not connected to at least one vertex \(i \in {\mathcal {C}}\). Any solution to the linear system [CL] provides a solution to problem [EC] with input \(\langle V \cup X,E\rangle \).

Theorem 7 imposes to solve two instances of the problem [MC] to determine if an outcome is the realisation of a Nash equilibrium. We thus exploit the fact that the problem [MC] can be modeled by formulation [EC] and define a unique linear system [NE] that allows to determine if an outcome is the realisation of a Nash equilibrium. Let us consider a two-player game in its graph form \(\Gamma = \langle H,E,u\rangle \) and an outcome \(h \in H\). Let us define the following sets:

-

\(X_1 = \{h^{\prime } \in H | (h^{\prime },h) \notin E, u_1(h^{\prime }) > u_1(h)\}\), the set of outcomes compatible with h in the complementary graph, that are preferred by the first player to h;

-

\(X_2 = \{h^{\prime \prime } \in H | (h^{\prime \prime },h) \in E, u_2(h^{\prime \prime }) > u_2(h)\}\), the set of outcomes compatible with h in the graph, that are preferred by the second player to h;

-

\(V_1 = \{h^{\prime } \in H \setminus X_2 | (h^{\prime },h) \in E\}\), the set of outcomes compatible with h in the graph, such that h is preferred to them for the second player;

-

\(V_2 = \{h^{\prime \prime } \in H \setminus X_1 | (h^{\prime \prime },h) \notin E\}\), the set of outcomes compatible with h in the complementary graph, such that h is preferred to them for the first player.

The outcome \(h \in H\) is a realisation of a Nash equilibrium if and only if the system [NE] provides a solution:

Given a game \(\Gamma = \langle H,E,u \rangle \), by applying [NE] to every \(h \in H\) it is possible to enumerate all the realisations of the Nash equilibria. In the following, we propose an enumeration algorithm [EA] that iterates over all the outcomes and then solves [NE] for every outcome.

4.2 Upper Bounds for Nash Equilibria

In this section, we compare two methods to compute upper bounds for the utility of the first player when a Nash equilibrium is played. The most known formulation for extensive-form games is the one introduced by [16], which provides a Nash equilibrium for zero-sum games. Recently, this formulation has been proven to provide an upper bound to the utility of the first player of any Nash equilibrium (cf. [28]). This method is based on the concept of sequence, which is a vector of actions played by a same player. Given a game \(\Gamma = \langle {\mathcal {I}}, {\mathcal {A}}, H^{\prime }, P,u \rangle \) and a history \(h^{\prime } \in H^{\prime }\), we denote by \(seq_i = (h_{ik})\) a sequence of actions played by player i according to \(h^{\prime }\). We write \(h^{\prime } = (seq_1,seq_2)\) to show that to every history \(h^{\prime }\) correspond two sequences \(seq_1\) and \(seq_2\). We consider \(\Lambda _1\) and \(\Lambda _2\), respectively, the set of all sequences of the first and second player. Let \(x \in \{0,1\}^{|\Lambda _1|}\) and \(y \in \{0,1\}^{|\Lambda _2|}\) be the vectors defining the probability for a sequence to be played. We define the matrix \(U^i: \Lambda _1 \times \Lambda _2 \rightarrow {\mathbb {R}}\) that maps each couple of sequences to the utility of player i: \(U^i_{seq_{1},seq_{2}} = u_i(h)\) for all \(h = (seq_{1},seq_{2}) \in H\); \(U^i_{seq_{1},seq_{2}} = 0\) if \(h^{\prime } = (seq_{1},seq_{2}) \notin H\). The utilities of the players can thus be written in the form \(x^T U^1 y \) and \(x^T U^2 y\). The formulation defining the set of possible sequences is constrained by the fact that if an action is taken at a node of the game, such decision must be considered also in the following ones.

All such causal constraints, written \(Ex=e\) and \(Fy=f\), respectively, for the first and the second player, will be built according to the same principle. Finally, the upper bound of any Nash equilibrium is given by the solution of the following bilevel problem denoted by [VS].

The optimization problem [VS] has size O(|H|) (cf. [16]). We now formulate [VS] as a linear optimization problem. Note that, as anticipated in Sect. 2, we are only interested in solutions corresponding to pure strategies. Therefore we can add the integral constraints:

We will also introduce the variable \(w_{jk} \in [0,1]\), which allows to linearise the formulation by rewriting \(x_j \cdot y_k = w_{jk}\) as:

In the second level of problem [VS] the optimal \(\overline{u}:= u_2(\overline{y}) \in {\mathbb {R}}\) is achieved for some \(k \in \Lambda _2\), i.e., we can write

Since only one sequence \(k \in \Lambda _2\) must be chosen, the constraint \(Fy=f\) is replaced by

The constraint \(Ex=e\) when written explicitly corresponds to:

We now reformulate the second level of [VS], using a set of linear constraints. Let us denote by \(\overline{u} \in {\mathbb {R}}\) the maximum utility for the second player. Also, we set a large value \(M > 0\) to use the following classical big-M constraints:

The bilevel problem [VS] is then denoted by \([VS-L]\), and written as follows:

Note that adding a constant value to the utility function does not change the solution, therefore we can assume \(U_{jk}^2 > 1\) for all \(j \in \Lambda _1\) and \(k \in \Lambda _2\). Under this assumption, we can add the following inequality:

which is valid for [VS], since \(y_k = 0 \iff \sum _{j \in \Lambda _1} U_{jk}^2 \cdot x_j = 0\) and \(y_k = 1 \iff \exists j \in \Lambda _1\) such that \(x_j = 1\).

Let us denote by \([VS-L2]\) the resulting formulation:

In what follows, we introduce a new algorithm, called [UBA] and described in Algorithm 2, that allows to compute an upper bound of the utility of the first player when a Nash equilibrium is played. We show that such upper bound is the same as the one provided by [VS]. The algorithm starts by ordering the outcomes in decreasing order of utility of the first player. Every outcome is then evaluated by solving problem [EC] with input \(\langle V_1,X_2,E \rangle \). If the existence of a clique is proven for [CL] the algorithm stops and an upper bound is found. As remarked previously, since the procedure tests a necessary and yet not sufficient condition, the outcome found is not necessarily a realisation of a Nash equilibrium. Both [VS] and [UBA] provide the best outcome for the first player that can be a best response of a strategy of the second player, a necessary condition for the outcome to be a realisation of a Nash equilibrium. Theorem 12 thus proves that the two methods provide the same upper bound.

Theorem 12

Consider a game \(\Gamma = \langle {\mathcal {I}}, {\mathcal {A}},H,P,u\rangle \) and its graph form \(\langle H,E,u\rangle \). Let \(u_1^{VS}\) and \(u_1^{UBA}\) be the optimal values obtained when [VS] and [UBA] are applied to game \(\Gamma \), then we have \(u_1^{VS} = u_1^{UBA}\).

Proof

We define the set of all the outcomes that can be a best response of the second player to a strategy of the first player, \(BR_2 = \{h \in H | \exists s_1 \in S_1, h = \mathop {\mathrm {arg\,max}}\limits _{h^{\prime } \in H(s_1)} u_2(h^{\prime })\}\). We show that \(u_1^{VS} = u_1^{UBA} = \max _{h \in BR_2} u_1(h)\). First, we observe that \(BR_2\) corresponds to the set of feasible solutions of [VS] and thus \(u_1^{VS} = \max _{h \in BR_2} u_1(h)\). Let us prove that \(u_1^{UBA} = \max _{h \in BR_2} u_1(h)\). Given \(h \in H\), \(X = \{h^{\prime } \in H | (h^{\prime },h) \in E, u_1(h^{\prime }) > u_1(h)\}\) and \(V = \{h^{\prime } \in H {\setminus } X | (h^{\prime },h) \in E\}\), let us consider \(H^{CL}\) the set of \(h \in H\) such that problem [CL] with input \(\langle V,H,E \rangle \) has answer true. Then we have \(u_1^{UBA} = \max _{h \in H^{CL}} u_1(h)\). Moreover, for all \(h \in H^{CL}\) let us consider a strategy \(s_1^h \in S_1\) such that \(V \subset H(s_1^{h})\) and \(H(s_1^{h}) \cap X = \emptyset \). By definition of \(H^{CL}\) for each \(h \in H^{CL}\) we have \(h = \mathop {\mathrm {arg\,max}}\limits _{h^{\prime } \in H(s_1^h)} u_1(h)\), which implies \(h \in BR_2\). Analogously, if \(h \notin H^{CL}\) there is no \(s_1 \in S_1\) such that \(h = \mathop {\mathrm {arg\,max}}\limits _{h^{\prime } \in H(s_1)} u_1(h)\) and thus \(h \notin BR_2\). Since \(H^{CL} = BR_2\), we have \(u_1^{UBA} = \max _{h \in BR_2} u_1(h)\). \(\square \)

4.3 Best and Worst Nash Equilibrium

Note that the algorithms [VS] and [UBA] introduced in Sect. 2.2 do not provide the tightest upper bound to the utility of the Nash equilibria (cf. Appendix A.6). In this section, we introduce an algorithm that provides a Nash equilibrium outcome whose utility is the highest for the first player. The algorithm [BNE] consists in ordering the outcomes from best to worst with respect to the utility function of the first player and then picking the first of them that is a realisation of a Nash equilibrium, i.e., for which [NE] admits a feasible solution. Analogously, it is possible to identify the Nash equilibrium with the lowest utility for the first player, by ordering in reverse order the outcomes. The algorithm [WNE] is presented together with [BNE] in Appendix B.3.

5 Numerical Results

To our knowledge, the only method for enumerating the pure Nash equilibria of extensive-form games is a brute-force transformation of the game in normal form. This approach requires an enumeration of the exponential number of strategy profiles of the game, which cannot be tested numerically, unless we limited the size of games to few (less than 20) outcomes.

For this reason, in this section, we assess the performance of the methods introduced in Sect. 4 through several series of experiments. To our knowledge, there is no standard library of extensive-form games in the literature. For this reason, we developed a new library presented in Sect. 5.1. The methods that provide bounds to the utility of Nash equilibria and those who enumerate them are analysed separately, respectively in Sect. 5.2 and in Sect. 5.3. The experimental study was conducted on a Intel Xeon CPU 2.20 GHz with 13 GB RAM. The algorithms were implemented in Python 3.8. The solver used to solve all Mixed-Integer Linear Programming problems is GLPK (cf. [30]).

5.1 Library of Extensive-Form Games

Extensive-form games with perfect recall and perfect information have never been categorised. We thus introduce a new classification of games based on three key features: the structure of the game-tree, the size of the game and the utility function. This allows to challenge our algorithms on a wide range of game instances and analyse their efficiency. The proposed classification will be used to create a new library of extensive-form games. More precisely, the structure of a game captures the properties of the shape of the game-tree. The size of the game (i.e., the number of outcomes) allows us to better assess the scalability of methods. Finally, once structure and size are fixed, the only parameter that varies in a game is the utility function, which we provide different families of functions for.

Each instance of the dataset is referred to with a specific name encoding the three key features of the game. Such encoding is shown in Table 1. The games’ structure is encoded as follows: Rn indicates that the number of actions at every child of a node \(h^{\prime } \in H\) is chosen uniformly at random \({\mathbb {U}}(\{0,\dots ,n \cdot |{\mathcal {A}}(h^{\prime })|\})\), given the constraint that they have on average n actions; Cn indicates that every node has the same number of actions, with n actions per node; and finally Un indicates that, at every node, all actions but one lead to an outcome, with n actions per node. The players’ utility is encoded as follows: R if the utility of an outcome is chosen uniformly at random \({\mathbb {U}}([0,1])\) in the interval [0, 1]; D if the utility is chosen randomly from a discrete set \({\mathbb {U}}(\{1,\dots ,10\})\), namely a natural integer between 1 and 10; Z if the game is zero-sum, i.e., at every node \(h \in H\) the winner is chosen at random \(i \in \{1,2\}\) and gets \(u_i(h) = 1\), while the loser gets 0; A if the game is zero-sum and the winner has a utility chosen randomly from a discrete set \({\mathbb {U}}(\{1,\dots ,10\})\); F if the utility is chosen randomly from \({\mathbb {U}}([0,1])\) at every outcome and it is the same for the two players; E if every utility of every outcome has constant value. The latest value of a label is the size of the game.

Example

An instance of game labeled C4E100 has 100 outcomes, every node has 4 actions (C) and utility is a constant function (E).

The library is publicly accessible (cf. [31]) and composed of three distinct datasets. Dataset 1 contains 21 extensive-form games which vary in their structure, within the range {R, C, U}, and in their size {100, 216, 324, 400, 512, 625, 729}, but not in their utility, which is always Random R. Dataset 1 has games of smaller size, i.e., small enough to manage methods already known in the literature, that provide bounds to the utility of Nash equilibria; Dataset 1 is used in Sect. 5.2. Dataset 2 has 72 extensive-form games which vary in their structure {R, C, U}, size {256, 729, 1296, 2401} and utility {R, D, Z, A, F, E}. Dataset 3 has 75 extensive-form games of size 729 which vary in structures {R, C, U} and utility {R, D, Z, A, F}. Dataset 2 and 3 have games of larger size, that are used to assess the method to enumerate the Nash equilibria outcomes. They are used in Sect. 5.3. Data0set 2 includes games with different characteristics; it enables us to assess the method on a large variety of games. Dataset 3 includes 75 games with size 729, gathered in 15 groups, each one with 5 games having the same encoding. The 15 groups are built alternating 3 different structures {\(R3*729\), \(C3*729\), \(U5*729\)} and 5 different utilities \('*' \in \{R,D,Z,A,F\}\). The utility E has been discarded, as we show next that it does not need further analysis. The analysis performed on Dataset 3 allows to understand the variability of the results obtained for Dataset 2.

5.2 Bounds to the Utility of Nash Equilibria

We first test the methods introduced in Sect. 4.2 and in Sect. 4.3 on Dataset 1. The reference methods known in literature, i.e., the branch and bound algorithms used to solve formulations \([VS-L]\) and \([VS-L2]\), are compared to algorithm [UBA]: they all provide the same upper bound to the utility of the first player in a Nash equilibrium. We recall that the proposed algorithms [BNE] and [WNE] provide the tightest (respectively, upper and lower) bounds to the utility of every Nash equilibrium. Table 2 reports the computation time for every instance of each algorithm as well as the number of iterations for the three iterative algorithms [UBA], [BNE] and [WNE].

Comparison of [UBA] vs \([VS-L]\) vs \([VS-L2]\). First and main result is that the algorithm [UBA] overcomes the methods relying on \([VS-L]\) and \([VS-L2]\) by some orders of magnitude. The method [UBA] computes the upper bound to the utility of Nash equilibria at least \(>40\) times faster than \([VS-L]\) and \([VS-L2]\). Second, we highlight the quality of the valid inequality added in \([VS-L2]\). Indeed, we observe that it improves the computation time with respect to \([VS-L]\) in 10 out of 21 instances and reduces of around \(30\%\) the computation time in instances like R6R512 and C2R512, which require more than 25 minutes for \([VS-L]\). Both \([VS-L]\) and \([VS-L2]\) perform \(17\%\) to more than \(99\%\) faster on structures C and U than those of type R; we ascribe this to the fact that these games present fewer nodes and thus require fewer constraints in the linear formulation.

Comparison of [UBA] vs [BNE] vs [WNE]. Algorithm [BNE] allows us to tighten the upper bound in 4 instances out of 21, thus showing that [UBA], \([VS-L]\) and \([VS-L2]\) do not always achieve the tightest bound. In Table 2, the few instances in which [BNE] tightens the bound are underlined, whereas in the other 17 out of 21 instances the number of iterations does not change. As predicted, [BNE] is slower than [UBA], but still performs more than 6 times faster than both \([VS-L]\) and \([VS-L2]\) in average on this dataset. Algorithm [WNE] requires a larger computation time than [BNE] in 19 out of 21 instances. In fact, it always has a larger number of iterations than [BNE]. Indeed, [WNE] first checks the outcomes with lowest utility for the first player, those are unlikely to correspond to the best response of the first player. It takes thus far more time to identify an outcome which is a best response for both players.

5.3 Enumeration of Realisations of Nash Equilibria

In the next experiment, we tested the methods introduced in Sect. 4.1 on Dataset 2 and on Dataset 3. We have measured the performance of Algorithm [EA] while enumerating the Nash equilibria outcomes of every instance.

Analysis on Dataset 2. For the space’s sake, in Table 3 we display only the results for all the games’ instances with size 729. More precisely, for each game, we show how many outcomes are the realisations of Nash equilibria NE, the average size \(X_{avg}\) of all sets \(X_1\) and \(X_2\), the total time required to run the algorithm \(t_{tot}\), the average time \(t_{avg}\) and the maximal time \(t_{max}\) for an outcome of the game. In addition, Fig. 4 displays the average time and the maximal time required to execute the algorithm on a game outcome, and the percentage of Nash equilibria identified among all the game’s outcomes.

Impact of the Size on Performance. As expected, the computation time increases with the size of the game (cf. Fig. 4). Algorithm [EA] iterates over the outcomes and requires to solve system [NE] for each outcome. When the size of the game increases, on average the size of system [NE] increases accordingly. However, if the size is fixed, the structure and the utility function have a fundamental impact on the computation time.

Impact of the Utility Function on Performance. In the degenerate case E, when outcomes all have same utility value, solving system [NE] gets trivial for each outcome and thus the computation time of each iteration is negligible (cf. Table 3). Indeed, the sets of outcomes to be excluded \(X_1\) and \(X_2\) are empty at every iteration. For such case building the graph is not even necessary. We observe that the computation time is lower for the case Z, as the sizes of \(X_1\) and \(X_2\) are smaller. We cannot infer significant correlations on the other cases (R, D, A, F) and we thus defer such analysis to Dataset 3.

Impact of the Structure on Performance. The structure of the game influences the average time necessary to verify if an outcome is the realisation of a Nash equilibrium. Indeed, we observe in Fig. 4 that in games with the same size those whose nodes have the same number of children (structure coding C) require more time on average to compute an equilibrium. This is due to the fact that the neighborhoods of an outcome \(V_1 \cup X_2\) and \(V_2 \cup X_1\) in the graph and its complementary have always the same size in both parts of system [NE]. On the other hand, in games with more asymmetrical structure (structure coding R and U) one of the two graphs is often smaller and thus the corresponding [NE] is much faster to be solved in practice.

Analysis of Dataset 3. Table 4 shows the average of performances on 5 sample games for each one of the 15 patterns. The outcomes are chosen with uniform random probability. Every curve of Fig. 5 shows the computation time distribution of [NE] for an outcome of a game. The 15 curves provide such result for the 15 games having the same utility coding. Namely, every line appearing in the plots shows for every game type \(\Gamma = \langle {\mathcal {I}},H,u \rangle \) the function \(f(t) = {\mathbb {P}}(t(h) \le t | h \in H)\), i.e., the probability that the algorithm solving system [NE] takes less than t seconds to determine if h is the realisation of a Nash Equilibrium.

Computation time distribution for [NE] on the outcomes of games belonging to Dataset 3. Every curve corresponds to the distribution for the outcomes of a single game. Each figure reports on the performance on the 15 games having the same encoding for the utility function, being respectively Random (R), Discrete (D), Zero-sum (Z), Asymmetric (A) and Indifferent (F). The distribution curves represent each a structure: Random (R) in blue, Complete (C) in red and Totally Unbalanced (U) in green (Color figure online)

Impact of the Structure on Performance. These numerical results show empirically that the structure is the main factor influencing the performance of the algorithm solving system [NE]. Indeed, on a game with totally unbalanced (U) structure it takes a negligible time for the vast majority (around \(99\%\)) of the outcomes of a game instance (cf. Fig. 5). On the other hand, on a game with complete (C) structure the algorithm such percentage decreases to \(20-50\%\). The algorithm performs in between the two extremes for a game with random (R) structure.

Impact of the Utility on Performance. We observe on Table 4 that the best performances are obtained for zero-sum games (coding utility Z) for the same structure. This is due to the fact that when the utility is equal to 1 the set of outcomes to be excluded X is empty (cf. Table 3). One of the two sides of the problem is always trivial. If the game switches from utility Z to A, i.e., if the player that wins gains a value between 1 and 10 instead of just 1, the property that makes one of the problems trivial is lost. Indeed, games with coding A have performances comparable to those with other utility codings (R, D, F). One might thus assume that increasing the granularity of the utility might make the algorithm less efficient. However, we do not observe any significant difference in the computation time while comparing (D) (\(u_i \sim {\mathbb {U}}(\{1,\dots ,10\})\)) to (R) (\(u_i \sim {\mathbb {U}}(0,1)\)).

5.4 Obtaining Insights on Nash Equilibria of Extensive-Form Games

To the best of our knowledge, Algorithm [EA] is the first one proposed to enumerate the Nash equilibria outcomes of an extensive-form game that does not resort to brute force. Besides the analysis of its complexity, we can also provide further insights of the numerosity of Nash equilibria in a game.

The number of possible realisations of a Nash equilibrium can vary from 1 to the number of outcomes of a game. The latter case implies that the utility function is constant (case E). However, in the generic case the number of Nash equilibria highly depends on the structure of the game-tree. We observe from Sect. 5.3 that games with a totally unbalanced structure (U) tend to host fewer Nash equilibria. This is due to the fact that if there is an outcome with great value to a player, she might choose to stick to it, and the opponent has few options to build a strategy that redirects her to an outcome that lies deeper in the tree. If an outcome with great value happens to lie at a very high level of the tree, the lower levels hardly host other realisations of Nash equilibria. The converse tends to occur as well, i.e., in a generic game with a complete structure (C) we typically observe many more Nash equilibria than in the unbalanced case. This follows the intuition that in a complete game structure a player can find more combination of strategies to convince the opponent to switch her best response to a different outcome.

6 Conclusions

In this paper, we introduce a new representation for a two-player extensive-form game with perfect information as a graph of its outcomes. We prove that identifying a Nash equilibrium outcome of an extensive-form game corresponds to identifying two cliques on such graph and its complementary. Thanks to this result, we introduce the first method of the literature to determine if an outcome of an extensive-form game is a realisation of a Nash equilibrium. Such method allows to define the first algorithm to enumerate the realisations of all Nash equilibria of an extensive-form game. The algorithm performs very well on a sample dataset of games of different structures and sizes. Moreover, it is possible to reframe the algorithm so that it provides any given bound to the utility of all Nash equilibria. Such algorithm performs significantly better than the most known method in literature, which provides a (not always the tightest) upper bound to the utility of Nash equilibria.

We foresee several extensions for this work. First, we do not fully exploit the properties of the graph form to improve the efficiency of the proposed algorithms. Second, it would be interesting to devise methods to parallelize the computation of the Nash equilibria outcomes in large instances. Finally, the categorisation of extensive-form games suggests that more efficient methods can be designed for some specific classes of games. For instance, customized algorithms can be written to compute the compatibility of two outcomes. A possible extension of this results would be eventually to extend the numerical results to larger games with mixed strategies, with more than two players and with imperfect information.

Data Availability

The dataset used in the numerical experiments is available online (cf. [31]).

References

Kuhn HW, Tucker AW (1953) Contributions to the theory of games. Princeton University Press, Princeton

Long NV (2011) Dynamic games in the economics of natural resources: a survey. Dyn Games Appl 1:115–148

Baird DG, Gertner RH, Picker RC (1998) Game theory and the law. Harvard University Press, Cambridge

Etesami SR, Başar T (2019) Dynamic games in cyber-physical security: an overview. Dyn Games Appl 9(4):884–913

Nash JF Jr (1950) Equilibrium points in n-person games. Proc Natl Acad Sci 36(1):48–49

Selten R (1965) Spieltheoretische behandlung eines oligopolmodells mit nachfrageträgheit: teil i: bestimmung des dynamischen preisgleichgewichts. Z Gesamte Staatswiss J Inst Theor Econ H 2:301–324

Selten R (1978) The chain store paradox. Theory Decis 9(2):127–159

Pettit P, Sugden R (1989) The backward induction paradox. J Philos 86(4):169–182

Binmore K, McCarthy J, Ponti G, Samuelson L, Shaked A (2002) A backward induction experiment. J Econ Theory 104(1):48–88

Daskalakis C, Goldberg PW, Papadimitriou CH (2009) The complexity of computing a Nash equilibrium. SIAM J Comput 39(1):195–259

Papadimitriou CH (1992) On inefficient proofs of existence and complexity classes. Ann Discret Math 51:245–250. https://doi.org/10.1016/S0167-5060(08)70637-X

Lemke CE, Howson JT Jr (1964) Equilibrium points of bimatrix games. J Soc Ind Appl Math 12(2):413–423

Avis D, Fukuda K (1992) A pivoting algorithm for convex hulls and vertex enumeration of arrangements and polyhedra. Discret Comput Geom 8(3):295–313

Avis D, Rosenberg GD, Savani R, Von Stengel B (2010) Enumeration of Nash equilibria for two-player games. Econ Theory 42(1):9–37

Widger J, Grosu D (2009) Parallel computation of Nash equilibria in n-player games. In: 2009 international conference on computational science and engineering, vol 1. IEEE, pp 209–215

Von Stengel B (1996) Efficient computation of behavior strategies. Games Econ Behav 14(2):220–246

Koller D, Megiddo N, Von Stengel B (1996) Efficient computation of equilibria for extensive two-person games. Games Econ Behav 14(2):247–259

Audet C, Belhaiza S, Hansen P (2009) A new sequence form approach for the enumeration and refinement of all extreme Nash equilibria for extensive form games. Int Game Theory Rev 11(04):437–451

Govindan S, Wilson R (2002) Structure theorems for game trees. Proc Natl Acad Sci 99(13):9077–9080

Herings PJ-J, Peeters R (2010) Homotopy methods to compute equilibria in game theory. Econ Theory 42(1):119–156

Argenziano R, Schmidt-Dengler P (2014) Clustering in-player preemption games. J Eur Econ Assoc 12(2):368–396

Selten R, Mitzkewitz M, Uhlich GR (1997) Duopoly strategies programmed by experienced players. Econom J Econom Soc 65:517–555

Hassin R, Yovel U (2015) Sequential scheduling on identical machines. Oper Res Lett 43(5):530–533

Fudenberg D, Tirole J (1991) Game theory. The MIT Press, Cambridge

Kreps DM, Wilson R (1982) Sequential equilibria. Econom J Econom Soc 23:863–894

Fudenberg D, Levine D (1983) Subgame-perfect equilibria of finite-and infinite-horizon games. J Econ Theory 31(2):251–268

Koller D, Megiddo N (1992) The complexity of two-person zero-sum games in extensive form. Games Econ Behav 4(4):528–552

Zappalà P, Benhamiche A, Chardy M, De Pellegrini F, Figueiredo R (2022) A timing game approach for the roll-out of new mobile technologies. In: 2022 20th international symposium on modeling and optimization in mobile, Ad hoc, and wireless networks (WiOpt), pp 217–224. https://doi.org/10.23919/WiOpt56218.2022.9930538

Kratsch D, Liedloff M (2007) An exact algorithm for the minimum dominating clique problem. Theor Comput Sci 385(1–3):226–240

Makhorin A (2008) GLPK (GNU linear programming kit). http://www.gnu.org/s/glpk/glpk.html

Zappalà P (2023) GT library 1.0. Dataset of extensive-form games. https://doi.org/10.5281/zenodo.7628367. https://github.com/paolozapp/gtlibrary

Funding

Not applicable.

Author information

Authors and Affiliations

Contributions

All the authors contributed equally to this work.

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Ethical Approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Examples

1.1 A.1 Extensive Form

We consider an example of extensive-form game, whose representation is the tree of Fig. 6. The game \(\Gamma = \langle {\mathcal {I}},H,u\rangle \) with two players \({\mathcal {I}} = \{1,2\}\) is represented by a tree, which allows to show all the possible actions. The first player observes no actions \(\emptyset \) and she picks an action in the set \({\mathcal {A}}(\emptyset ) = \{a_1,a_2\}\). If she picks action \(a_2\), the game arrives to the outcome \(h_1 = (a_2) \in H\). On the other hand, if she picks action \(a_1\), the second player observes it and picks an action from the set \({\mathcal {A}}(a_1) = \{b_1,b_2\}\). If the second player picks action \(b_1\), she gets to the outcome \(h_2 = (a_1,b_1) \in H\). Analogously it is possible to get all the other outcomes of the game: \(h_3 = (a_1,b_2,a_3,b_3)\), \(h_4 = (a_1,b_2,a_3,b_4)\), \(h_5 = (a_1,b_2,a_4,b_5)\), \(h_6 = (a_1,b_2,a_4,b_6)\). The set of outcomes is therefore \(H = \{h_1,h_2,h_3,h_4,h_5,h_6\}\). The set of histories is instead \(H^{\prime } = \{\emptyset ,h_1,(a_1),h_2,(a_1,b_2),(a_1,b_2,a_3),(a_1,b_2,a_4), h_3,h_4,h_5,h_6\}\). The function P maps respectively \(H^{\prime } {\setminus } H = \{\emptyset ,(a_1),(a_1,b_2),(a_1,b_2,a_3),(a_1,b_2,a_4)\}\) to the players acting at such nodes \(\{1,2,1,2,2\}\). The utility function \(u: H \rightarrow {\mathbb {R}}^2\) evaluates the outcomes. For instance, we can have \(u(h_1) = (2,0)\), where \(u_1(h_1) = 2\) is the evaluation given to \(h_1\) by the first player and \(u_2(h_1) = 0\) is the one given by the second player. For the game in Fig. 6 we assume that \(u(h_2) = (3,4)\), \(u(h_3) = (8,11)\), \(u(h_4) = (1,9)\), \(u(h_5) = (5,1)\), \(u(h_6) = (9,3)\). The value of the utility allows to understand which outcomes are preferred by the players. For instance, the second player prefers \(h_3\) to \(h_6\) because \(u_2(h_3) = 11 > 3 = u_2(h_6)\). We write alternatively \(h \succ _i h^{\prime }\) to show that \(u_i(h) > u_i(h^{\prime })\). In the caption of Fig. 6 all the preferences among the outcomes are given, based on the values assigned by the utility function.

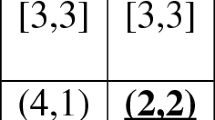

An extensive-form game in its representation as a tree. Preferences. The order of preference for the first player is: \(h_6 \succ _1 h_3 \succ _1 h_5 \succ _1 h_2 \succ _1 h_1 \succ _1 h_4\). The order of preference for the second player is: \(h_3 \succ _2 h_4 \succ _2 h_2 \succ _2 h_6 \succ _2 h_5 \succ _2 h_1\). Strategies. The two players choose respectively \(s_1\) and \(s_2\) as strategies. At nodes \(\emptyset \) and \((a_1,b_2)\) the first player chooses respectively \(s_1(\emptyset ) = a_1\) and \(s_1(a_1,b_2) = a_4\). At nodes \((a_1)\), \((a_1,b_2,a_3)\) and \((a_1,b_2,a_4)\) the second player chooses respectively \(s_2(a_1) = b_1\), \(s_2(a_1,b_2,a_3) = b_3\) and \(s_2(a_1,b_2,a_4) = b_6\)