Abstract

We consider a dynamic game where additional players (assumed identical, even if there will be a mild departure from that hypothesis) join the game randomly according to a Bernoulli process. The problem solved here is that of computing their expected payoff as a function of time and the number of players present when they arrive, if the strategies are given. We consider both a finite horizon game and an infinite horizon, discounted game. As illustrations, we discuss some examples relating to oligopoly theory (Cournot, Stackelberg, cartel).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In most strategic interactions analysis, the set of players is known and common knowledge. But there are some situations where neither the players, nor the designer or public authority, nor the theorist know how many players are in a game. As an illustration, consider the three situations below:

Example 1

Imagine the case of a company that commits a violation of competition rules (which identically affects consumers) in a country where consumers can take joint action in court to recover the amount of their losses. The legal system chosen by the country as part of a joint action works as follows: the first period begins when a consumer enters the court, in the second period the judge analyses the case. If he finds it justified, he evaluates the total harm done,Footnote 1 and imposes a reimbursement and a payments schedule for each month of a third period. But during that third period, more complainants may apply to join the action, given that the judge will not approve more than one per week. The weekly reimbursement is then shared equally among all complainants approved at that time. What is the first consumer–victim expected payoff according to the fact that he does not know how many victims will participate into the joint action as each victim can alternatively choose to never suit or suit individually the company?

Example 2

Consider the case of a city where the authorities issue a number of taxi driver licences. There are k individuals who hold such a licence. Then, thanks to a technological innovation, a company is able to transform every willing motorist driver into a transporter of people for fee. How much can the k licensed taxi drivers expect to loose, given that they do not know how many motorists will be turned into drivers?

Example 3

Suppose that a wealthy French entrepreneur decides to create the “René and Gisèle Foundation” to promote research in game theory. This foundation, thanks to the proceeds of a charitable donation, appoints a benevolent research director (free of conflicts of interest) to share each month equally a sum of 100.000 euros between all scientific projects in game theory which she has examined and fond valuable. As the entrepreneur and the research director want to provide a long term support, they decide to never abandon the funding of a project that she had already selected. After the beginning of the foundation, where \(k\ge 1\) projects could have been selected, each month, due to administrative procedures, only one new project, if found valuable, could be added to the foundation’s public list of selected projects (which mentions the date of arrival in the list of each selected project). How much the mth selected project can expect to have?

The goal of this paper is to provide a general mathematical framework for computing the payoff of players in a dynamic game with clone players randomly arriving at each step of time in a Bernoulli process and to show examples of applications in oligopoly theory. The set-up here only allows for a sequence of stepwise, static equilibria, and we assume that these static problems are solved, so that strategies and payoffs are known as a function of the number of players present. We leave a true inter-temporal equilibrium analysis for a later article.

The paper is organized as follows: in the next section, we expose the theoretical related literature and explain how we contribute to the question of games with an unknown number of players. In Sect. 3, we formulate the problem, propose a model and give the expected payoff of finite (Theorem 1) and infinite (Theorem 5) horizon game that is the expected payoff for each player. Then in Sect. 4, we provide some examples from oligopoly theory where this framework and results could be used to find expected payoff and static equilibria. Section 5 concludes by underlining the limits and questions of our framework which are left for future research.

2 Related Literature

It is well known that the usual textbook game theory toolbox is in the “fixed-n” paradigm and does not address problems where there is a degree of uncertainty on the number of players in a game. Indeed, in Bayesian games, the uncertainty for each player is on the types of other players, that is, on their private information about their characteristics represented by a random variable for each player. So there is no uncertainty on the number of players in the game. Moreover, to extend Bayesian games to games with unknown number of players raises conceptual and modelling issues that could not be overcome. However, to the best of our knowledge, there exists currently in game theory two ways to directly manage the question of uncertainty on the number of players.

The first strain of the literature, which is the oldest one, investigates games where the active competing players is not common knowledge. This issue has taken two forms (see [13]). The first form models the number of active players as a stochastic process on a set of potential players. It means that the active players are a subset of the set of potential players, which implies that each player has a private information (he is active or not). The timing of this kind of game is usually in four-step: (1) nature chooses which players will be active (and those who will be passive), (2) each player observes only his own situation, (3) the active players choose their strategies and (4) payoffs are received. The common knowledge information is the set of potential players and the probability distribution used by Nature to assign a role to each player.

The second form endogenizes the entry process. In this setting, at first, there is no player in the market. Then, it is assumed that each potential player (the total set of which is common knowledge) has a privately known and different cost to entry on the market, and each of them must decide to participate or not into the game.

These two kinds of models are useful in a great number of cases. For example, consider the situation of an appointment of Dean at a University; the applicants do not know how many persons will apply even if they perfectly know how many persons can apply (the set of Professors in the University). It is also the case, for example, in online auction sites where the number of registers is known and the number of bidders for each product is ex ante unknown.

Seminal articles in this line of the literature are [15, 16] which demonstrate that some results of auction theory could be sensitive to the assumption that there is a stochastic number of bidders, which means that the set of real bidders is not common knowledge, whereas the set of potential bidders is. They also allow the bidders to have different priors on how many bidders are present as long as these priors are Bayesian consistent. From the design policy point of view, the question arises whether the seller had an interest to reveal (or conceal) the number of actual bidders and their identities. In [27], the number of potential bidders is common knowledge, but some of them are experts (bidders with private information) and some are non-experts (bidders with no private information), and it is the number of experts which is uncertain for the seller and the other buyers. Münster [20] offers an investigation of rent-seeking contests with an unknown number of competing contestants where all players are risk neutral or have constant absolute risk aversion. Complementing to the pure risk approach, Salo and Weber [32] and Levin and Ozdenoren [13] propose models where bidders are averse to the ambiguity generated by the lack of information about the number of rivals in the auction. On the entry process endogenous side, Samuelson [33] finds that policies to limit the number of bidders may be welfare improving. When a first-price sealed bid auction is used and bidders can enter upon paying an entry cost, McAfee and McMillan [17] show that the seller should not impose a reserve price higher than his own valuation and that the optimal number of bidders enter. More recently, there have been investigations along these lines on environmental topics as in [31] or [2, 3].

The more recent other strain of the literature is games with population uncertainty, where the real number of players in the market is not common knowledge. But the number of players really in the game is supposed to be drawn from a random variable, whose probability distribution and mean are commonly known. Players have to choose their strategies before the player set is revealed. Among these games, a special attention has been paid to the subclass called Poisson games, where the number of players N is a random variable that follows a Poisson distribution with mean n (so \(N=k\) with the probability \(e^{-n}n^{k}/k!\)), and each player’s type is then drawn independently from some fixed probability distribution. More generally, in extended Poisson games the expected population sizes and players’ utility functions may depend on an unknown state of the world. In this case, there is a two-stage structure: first a random state variable is drawn from some given distribution, and then a Poisson game is played. In theses games, each player’s belief about the number of other players and their types is the same with the prior distribution of the total number of players and their types (property called by Myerson environmental equivalence). It has been shown that Poisson games modelling has very nice mathematical features, subsumes Bayesian games with consistent priors and opens a fertile field of research. To illustrate the nature of questions solved by it, De Sinopoli and Pimienta [7] introduces the following simple and clear story: “A player is sitting at home and faces two possible alternatives, either she goes out to some social event, or she stays home. She does not know how many players are facing this same disjunctive, but she knows that this number is a Poisson random variable with parameter n. If she goes out and meets somebody she receives a payoff equal to 1. If she meets nobody or decides to stay home, she gets a payoff equal to 0. Every player faces these same two options and has the same preferences”.

This type of modelling was introduced by Myerson [21–23] and Milchtaich [19]. See also [6] for a more recent account. It seems particularly well adapted to elections (where each voter does not know what is the real number of voters, see Myerson’s works) or some kind of auctions. Makris [14] proposes to model the coordination problem as a Poisson game and investigates the conditions under which a unique equilibrium is selected. In [28], it is shown that with population uncertainty, two competitors are not enough to eliminate all profits in equilibrium and have competition in a Bertrand game. Recently, Östling et al. [26] proposes an application of Poisson game to a LUPI game (lowest unique positive integer wins lottery), in order to study a lottery called Limbo introduced by the government-owned Swedish gambling monopoly Svenska Spel on January 29, 2007.

By contrast with these two lines of the literature, the spirit of our paper is a dynamic framework where the number of players involved varies with time, and to study the expected payoff of each player in situations where nobody knows ex ante the set of real players at each period after the beginning and at the end of the game (finite case), or at each period of the game (infinite case). The only information that are common knowledge before the game actually starts in our set-up is the number of incumbents, that there is a random entry at each period of time according to a Bernoulli process of known parameter, and the law linking the actual number of players present at one stage to the expected payoff at that stage (e.g. in a succession of Cournot equilibria, the inverse demand curve). Once the game is going, the actual number of players present at each stage is also common knowledge at that stage. So there is no asymmetric information in our model. We will consider a clone economy, i.e an economy where the incumbents and the new entrants are perfectly identical. As already stated, even though there is an equilibrium at each step, our aim at this stage will not be to define a dynamic equilibrium, but to compute the expected payoff to the players when their strategic behaviours are given. The full dynamic equilibrium will be dealt with in a forthcoming article.

In the rest of the literature on games, but with a fixed number of players, we may find some kinship of our theory with repeated games, see, for example, [18], since the folk theorem, for instance, involves an infinite sequence of plays with the same strategies, as we do. But there, it is the same set of players who play again and again. The fact that in the present theory, the payment matrices vary from step to step is reminiscent of stochastic games, see, for example, [24] or, more recently, the special issue [25]. However, we are not, at this stage, able to prove a result about a stationary dynamic equilibrium, as often found in that strain of the literature. Another strain of the literature with an infinite sequence of decisions is concerned with stopping problems by voting, such as [8]. But here again, the number of players is fixed and known. Games with an infinite number of players have been considered, either under the heading of “anonymous games” or that of Mean field games [4, 12] or Nash Certainty Equivalence Principle [10]. But while in the present article, the number of players is unbounded, it remains finite, and we have no averaging of the effects of the other players, as found in mean field games. Yet reference [11] provides some bridge between these two theories, see below.

A literature much closer to our theory is that of piecewise deterministic systems [5, 9], although this is not in a game theoretical framework. But we may consider the stochastic arrivals of players as stochastic jumps in the dynamic system, which is deterministic between two jumps. This same idea was used in [1], although in a very different context.

Finally, the only other article we are aware of with a stochastic entry of an a priori unknown number of players—that we learned of after this manuscript was first submitted for publication—is [11]. In that article, a major player has an infinite horizon while minor players enter randomly and leave after a given time T. Each has its own (linear) dynamics, but the (quadratic) payoffs are functions of the means of the minor players’ states—a kind of “mean field” approach which is then taken advantage of in an approximation for large numbers of minor players. The authors aim to find a dynamic Nash equilibrium—not our topic in the present article—in linear strategies and settle for an implicit solution via the concept of “consistent set of strategies”, i.e. a set of strategies that satisfy the fixed point property inherent in a Nash equilibrium. The algorithm proposed to solve for the Nash strategies is precisely a Picard iteration of a fixed point problem. A convergence proof is given in a restricted case.

3 Model

The family of problems we are investigating could have two forms. As in our examples 1 and 3, there are cases where the dynamic game has an end which corresponds to a finite horizon. There are also cases, as in our example 2, where the dynamic game has no finite end time or, more realistically, where the end time is unknown for the players, which corresponds to the infinite horizon case. We will study these two types of games successively.

We consider a discrete time, multistage game where time t is an integer varying from \(t_1\) to T (finite horizon), or from 0 to \(\infty \) (infinite horizon). We assume that additional players may arrive only one at each discrete instant of time, this with a probability p and independently from other arrivals (a Bernoulli process), and let \(t_m\) be the first stage where there are m players, i. e. the stage when player number m arrives. Once in the game, a player never leaves. (Thus, the number of players at each instant of time is governed by a binomial law.)

Usually, in a dynamic game there is a state \(x(t)\in \mathbb {R}^n\) whose dynamics are driven by the actions \(a_i(\cdot )\) of the players, according to some given law

Then the payoff of each player is

If the \(a_i\) are mixed strategies, then \(L_i\) is a mathematical expectation.

However, here the emphasis is not on the strategies but on computing an expected payoff when the strategies are assumed identical for all players, and known as a function of the number m of players present, the state and current time. In that case, a sufficient description of the state is the sequence \(\{t_n\}_{n\le m}\) of past arrival times, and current time t. As a consequence, we may dispense with the explicit dynamics, and write the payoff as a function of these variables. We also assume that the players are identical not only in their strategy choices, but also in their per stage payoff. (The latter justifies the former. We will, however, see a marginal deviation from that hypothesis in our examples.) Their payoffs will only differ because of their different arrival times.

Notation Let \(\tau _1=t_1\) and for \(m\ge 2\), \(\tau _m=(t_2,\ldots ,t_m)\in \mathbb {N}^{m-1}\) and therefore, \(\tau _{m+1}=(\tau _m,t_{m+1})\). Let \(L_m(\tau _m,t)\) be the per stage payoff of each player when m players are present as a function of the past arrival times sequence and current time. Let also m(t) be the number of players present at time t .

We introduce the further notation \(M_1(t)=L_1(t)\), and for \(m\ge 2\),

(in the infinite horizon case, we will use \(t_1=0\)). For example,

We may provide a graphical representation of what is going on. All possible entrance histories, for a finite horizon, can be represented on a tree as shown in Fig. 1. Time is shown on the bottom line. At each instant of time, and at each node of the tree for that instant, we draw a branch going up if a new player enters at that time instant, going down if nobody enters. We have chosen \(t_1=0\), shown four time steps, and labelled the nodes with the number of players present upon reaching this node (during the time step ending there). We have noted over each branch the per stage payoff of each player during the corresponding time step.

An history is represented by a path from the root of the tree (to the left) to a leaf. The payoff for that history is the (“horizontal”) sum of the per stage payoffs noted on each branch of its path (\(\tau _m\) is denoted with a list of time instants between curly braces). For each m and t, \(M_m(t)\) is the (“vertical”) sum of the \(L_m\) of stage t, i.e. the sum of the markings of the branches of the stage beginning at t reaching a node labelled m.

One can read on this graph that:

3.1 Finite horizon

To better take into account the different arrival times of the players, we introduce an explicit discount factor \(r \le 1\) and write the payoff of the nth player arrived as

Both m(t) and \(\tau _m\) are random variables. Hence, we let

We will prove the theorem:

Theorem 1

The expected payoff of the discrete time, finite horizon game for player 1, is

We observe that thus the dependence of the per stage payoff on the sequence of arrival times has been lumped into the sequence of \(M_m\). This is directly related to the fact that, for a Bernoulli process, at the number m of positive events given, all sequences of m arrival times share the same (conditional) probability. Accordingly, the dependence of the expected payoff on the per stage probability of arrival p is through the probabilities \(p^{m-1}(1-p)^{t-t_1-m+1}\) of having \(m-1\) arrivals between \(t_1+1\) and t.

It appears clearly that the limit expected payoff as p goes to zero is just

as the term \(m=1\) only remains in the sum over m, and if \(p=1\),

Proof of the theorem

The proof of the theorem is given in section “Proof of Theorems 1” of Appendix 1. It is based upon a rather classical backward induction argument and interchanges of orders of summations in the resulting formulas.

We may also write the equivalent formula for the payoff of the mth arriving player. We need to introduce extra notation. For \(1\le m<k\):

and

Given m and \(t_m\), the maximum possible number of players is \(m+T-t_m\). By a computation similar to the one leading to the theorem, we get:

Corollary 2

The payoff of the mth arriving player, given the sequence of arrival times \(\tau _m\), is

or, equivalently

3.2 Infinite Horizon

The same set-up is used to consider the game with an infinite horizon, i.e. a payoff

We need two new definitions:

Definition 3

The sequence of functions \(\{L_m\}\) is said to be

-

1.

uniformly bounded if it has a uniform bound, denoted L:

$$\begin{aligned} \exists L > 0 : \forall t\in \mathbb {R}_+,\quad \forall m\in \mathbb {N},\quad \forall \tau _m\in \mathscr {T}_m(t),\quad |L_m(\tau _m,t)| \le L, \end{aligned}$$ -

2.

exponentially bounded if each \(L_m\) is uniformly bounded, but that bound is allowed to vary exponentially with m:

$$\begin{aligned} \exists L > 0 : \forall t\in \mathbb {R}_+,\quad \forall m\in \mathbb {N},\quad \forall \tau _m\in \mathscr {T}_m(t),\quad |L_m(\tau _m,t)| \le L^m. \end{aligned}$$

Remark 4

-

1.

If the sequence \(\{L_m\}\) is uniformly bounded, it is also exponentially bounded. Indeed, let L be the uniform bound; the sequence is exponentially bounded by \(L^m\) if \(L>1\) and by \(1^m=1\) if \(L\le 1\).

-

2.

If the sequence \(\{L_m\}\) is exponentially bounded by \(L^m\) with \(L < 1\), it is also uniformly bounded by L. However, if \(L>1\), it may not be uniformly bounded.

We prove the following theorem:

Theorem 5

If the sequence \(\{L_m\}\) is exponentially bounded by \(L^m\) and \(r<1/L\), or if it is uniformly bounded, then the expected payoff of the infinite horizon game is given by

Proof

The proof, given in section “Proof of Theorems 5” of Appendix 1, is via taking the limit in formula 1, and carefully checking that the infinite series converge.

We derive the following consequences:

Corollary 6

-

1.

If the sequence \(\{L_m\}\) is exponentially bounded by \(L^m\) with \(1\le L < 1/r\), then

$$\begin{aligned} |\varPi ^e_1| \le \frac{L}{1-rL}, \end{aligned}$$ -

2.

if the sequence \(\{L_m\}\) is uniformly bounded by L, then

$$\begin{aligned} |\varPi ^e_1| \le \frac{L}{1-r}, \end{aligned}$$

We may similarly extend formula (2) to an infinite series, which converges under the same conditions:

Corollary 7

The infinite horizon payoff of the mth arriving player is, under the same conditions as in theorem 5:

4 Applications to Oligopoly Theory

We consider identical firms entering a market. The game will be played over an infinite horizon, with a discount factor r. We investigate four different market structures, differing in the type of equilibrium—cartel or competitive à la Cournot—hypothesized at each stage, and in the behaviour of the incumbents and of the new entrant each time one arrives. The four possible market structures will be called “cartel–cartel”, “cartel–Stackelberg”, “Cournot–Cournot” and “Cournot–Stackelberg”, and will be explained in more detail below.

The per stage profits for the various equilibria considered are computed in Appendix 2.

4.1 Cartel–Cartel, or Equally Sharing a Fixed Revenue Flux

In this simple case, the incumbents form a cartel, and each arriving new firm enters the cartel. If the optimum per stage profit feasible by a lone player on this market is c, we have \(L_m(\tau _m,t)=c/m\). We assert

Theorem 8

In the cartel–cartel market structure, the expected payoff to the first player is

We are therefore able to get a closed form formula. It exhibits the limit expected payoff \(\varPi ^e_1=c/(1-r)\) as p goes to zero, and \(\varPi ^e_1 \rightarrow \infty \) as \(r\rightarrow 1\) for fixed p. That it is decreasing with p can be seen in the fact that the function \(x\mapsto \ln (1+x)/x\) is decreasing for \(x \in [0,1]\).

Proof of theorem 8

The cardinal of the set \(\mathscr {T}_m(t)\) is

we obtain

and hence, applying formula (3),

It suffices to recognize the identity, for \(x<1\):

to conclude the proof. \(\square \)

It is possible to compute the expected payoff of the mth arriving player, but we see little hope of simplifying it beyond the sheer repetition of the formula:

or the slightly more appealing formula that exhibits the independence of \(\varPi ^e_m\) from \(\tau _m\):

Corollary 9

The payoff of the mth arriving player in the cartel–cartel market structure is

4.2 Cartel–Stackelberg

In this market structure, in the absence of a new entrant, firms form a cartel. However, whenever a new firm enters the market, the incumbents still play as a cartel, but act as leaders imposing their strategy on the new entrant who acts as a follower, in a Stackelberg scheme. The table in the appendix shows that we may equivalently assume that the incumbents do not take immediate notice of the new entrant, or do not take it seriously, and continue with the same production level as before.

After a first step in that configuration, the new entrant joins the cartel for the rest of the game. It is not its best interest. But it may be coerced to do so by the other players who threat to revert otherwise to an all Cournot–Nash equilibrium. A kind of “grim trigger” strategy. As soon as \(m> 6\), they incur a loss in doing so, but not as much as the new entrant. It is therefore a credible threat.

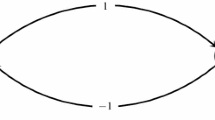

The difference with the cartel–cartel time structure is displayed by the simple time diagrams of Fig. 2, where we have assumed, for clarity, that \(t_{m-1} < t_m-1\), i.e. there was no new entrant at time \(t_m-1\).

According to the table in the appendix, we have, for some positive number c:

We state the following theorem:

Theorem 10

The expected payoff of the cartel–Stackelberg scheme for the first player is

Although slightly more complicated than in the cartel–cartel scheme, this formula shares with the former one most of its characters: a closed form formula, exhibiting even more clearly its decreasing character with p and converging to \(\varPi ^e=c/(1-r)\) as \(p\rightarrow 0\) and \(\varPi ^e\rightarrow \infty \) as \(r\rightarrow 1\).

Proof

The proof, given in section “Proof of Theorems 10” of Appendix 1 involves a careful analysis of the combinatorics of the problem, and application of the previous formulas.

Finally, the payoff to the mth arriving player at time \(t_m\) can be derived from the corresponding formula of the simple sharing problem, just correcting for the fact that at its first step, it earns c / 4 rather than c / m. \(\square \)

Corollary 11

The payoff of the mth arriving player in the cartel–Stackelberg market structure is

4.3 Cournot–Cournot

We now assume that at each time step, the firms compete in a Cournot fashion. With reference to the diagrams shown in Fig. 2, we now have that shown in Fig. 3

As a consequence, there is a positive number \(C\) such that

We also assume a discount factor r as in the above theory. A direct application of the general theory leads to

Theorem 12

The expected payoff of the first player in the Cournot–Cournot market structure is

This is not a very appealing formula. Yet, it is easy to program, even on a spreadsheet, to get a numerical approximation, computing the terms recursively. Write

and compute the u and \(v_n\) according to the recursions \(u(0)=1\), \(v_0(t)=1/4\), \(\forall n > t\), \(v_n(t)=0\), and for \(n \le t\), \(t \ge 1\):

Computing up to the 15th term, with \(r=.8\), we found, for \(p=1/4\), \(\varPi ^e_{1,C}=.822\,C\), for \(p=1/2\), \(\varPi ^e_{1,C}=.625\,C\), and for \(p=3/4\), \(\varPi ^e_{1,C}=.508\,C\). (To get the same precision, the computation requires the more terms that p is smaller. With 50 terms, for \(p=0\), we get \(\varPi ^e_{1,C}= 1.249986\) instead of the theoretical 1.25. These computations were performed on a Libre Office spreadsheet.)

4.4 Cournot–Stackelberg Scheme

We consider a scheme similar to that of the cartel–Stackelberg, but where firms compete à la Cournot–Nash instead of forming a cartel, both in the absence of a newcomer, and within the group of incumbents when a new firm enters the market. As in the cartel–Stackelberg structure, the behaviour of the incumbents may be explained as ignoring the newcomer at the first step. Then (as soon as \(m\ge 2\)), all players profit from a reversal to the all Cournot–Nash equilibrium.

The time diagram is now as shown in Fig. 4

We therefore have

The analysis is completely similar to that of the cartel–Stackelberg scheme, replacing the \(L_m\) as necessary, and leads to the following theorem:

Theorem 13

Let \(\varPi ^e_{1,C}\) be the Cournot expected payoff (5). The expected payoff of the Cournot–Stackelberg scheme for the first player is

We may notice that the relationship of this formula to the preceding one is the same as the corresponding one in the cartel case. It preserves the same properties as outlined above.

5 Conclusion

In this paper, we have analyzed a model where there is, for everyone, an a priori unknown number of players, the actual number, increasing with time, being common knowledge at decision time. We have assumed that all the players are clones (since they adopt the same strategy and have the same payoff at each period of time) and that the payoff law—the demand market in our examples—and entry device (Bernoulli process) are common knowledge. Players’ payoff differ from each other only due to the time during which they are in the market. In this setting we are able to calculate the payoff of each player in finite and infinite horizon games. Four simple examples in microeconomics show that the theory is indeed operative. The formulas obtained are not very simple, but they allow for efficient numerical implementations.

Yet, we may point out a set of weaknesses of the theory, each of which points to possible further investigations.

-

We are limited to a clone economy. Dealing with an unlimited number of players, the game has to be anonymous. But we might want to have several classes of players, such as done in many studies of population uncertainty and anonymous games.

-

To keep with the simple formulas of the Bernoulli process, we impose a constant entry probability p. It would be more realistic to have it depend on the number of players already on the market, as the benefit of entry decreases with that number. This is chiefly true in our examples where the size of the market is constant. We shall take up that issue in a forthcoming article.

-

In our four examples, the players’ per stage payoff depend on the number of players, and only very mildly on the sequence of past arrival times. (In the one-step Stackelberg games, where we need to distinguish the case \(t=t_m\) from the case \(t>t_m\).) A more complex dependence such as alluded to at the beginning of Sect. 3, say because a resource is consumed by the players, requires, to remain manageable by our theory, that it be explicit enough to let us compute the sums \(M_m\). A rather severe restriction on the models we are able to deal with.

-

We do not allow for players leaving the market. Yet, in many applications, this would be more realistic.

But as it is, the theory can probably be used as a Rubinstein “fable” ([29, 30]) to investigate some real life economic problems.

Notes

On the one hand, in a joint action, this country chooses to charge the total harm to the liable party. On the other hand, it allows each victim not to prosecute, prosecute individually or collectively. It thus follows that the liable party can be led to pay several times the damage which she caused: these are punitive damages.

We count powers as sequences of multiplications

References

Bernhard P, Hamelin F (2016) Sharing a resource with randomly arriving foragers. Math Biosci 273:91–101

Breton M, Keoula MY (2012) Farsightedness in a coalitional great fish war. Environ Resour Econ 51:297–315

Breton M, Sbragia L, Zaccour G (2010) A dynamic model for international environmental agreements. Environ Resour Econ 45:25–48

Caines PE (2014) Mean field games. In: Samad T, Ballieul J (eds) Encyclopedia of systems and control. Springer, New York

Davis MHA (1985) Control of piecewise-deterministic processes via discrete-time dynamic programming. In: Stochastic differential systems, Lecture Notes in control and information sciences 78:140–150. Springer

De Sinopoli F, Meroni C, Pimienta C (2014) Strategic stability in Poisson games. J Econ Theory 153:46–63

De Sinopoli F, Pimienta C (2009) Undominated (and) perfect equilibria in Poisson games. Games Econ Behav 66:775–784

Ferguson ATS (2005) Selection by committee. In: Nowak A, Szajowski K (eds) Advances in dynamic games, vol 7. Annals of the International Society of Dynamic Games. Birkhäuser, Boston, pp 203–209

Haurie A, Leizarowitz A, van Delft C (1994) Boundedly optimal control of piecewise deterministic systems. Eur J Oper Res 73:237–251

Huang MY, Malhamé RP, Caines PE (2006) Large population stochastic dynamic games: closed loop McKean–Vlasov systems and the Nash certainty equivalence principle. Commun Inf Syst 6:221–252

Kordonis I, Papavassilopoulos GP (2015) LQ Nash games with random entrance: an infinite horizon major player and minor players of finite horizon. IEEE Trans Automat Contr AC 60:1486–1500

Lasry JM, Lions PL (2007) Mean field games. Jpn J Math 2:229–260

Levin D, Ozdenoren E (2004) Auctions with uncertain number of bidders. J Econ Theory 118:229–251

Makris M (2008) Complementarities and macroeconomics: Poisson games. Games Econ Behav 62:180–189

Matthews S (1987) Comparing auctions for risk averse buyers: a buyer’s point of view. Econometrica 55:633–646

McAfee RP, McMillan J (1987) Auctions with a stochastic number of bidders. J Econ Theory 43:1–19

McAfee RP, McMillan J (1987) Auctions with entry. Econ Lett 23:343–347

Mertens JF, Sorin S, Zamir S (2015) Repeated games. No. 55 in econometric society monographs. Cambridge Uninersity Press, Cambridge

Milchtaich I (2004) Random-player games. Games Econ Behav 47:353–388

Münster J (2006) Contests with an unknown number of contestants. Public Choice 129:353–368

Myerson RB (1998) Extended Poisson games and the condorcet jury theorem. Games Econ Behav 25:111–131

Myerson RB (1998) Population uncertainty and Poisson games. Int J Game Theory 27:375–392

Myerson RB (2000) Large Poisson games. J Econ Theory 94:7–45

Neyman A, Sorin S (2003) Stochastic games. Kluwer Academic publishers, NATO ASI series

Nowak A, Sloan E, Sorin S (2013) Special issue on stochastic games. Dyn Games Appl 3

Östling R, Tao-Yi Wang J, Chou EY, Camerer CF (2011) Testing game theory in the field: Swedish LUPI lottery games. Am Econ J Microecon 3:1–33

Piccione M, Tan G (1996) A simple model of expert and non expert bidding in first price auctions. J Econ Theory 70:501–515

Ritzberger K (2009) Price competition with population uncertainty. Math Soc Sci 58:145–157

Rubinstein A (2006) Dilemmas of an economic theorist. Econometrica 74:865–883

Rubinstein A (2012) Economic fables. Open Books Publishers, Cambridge

Rubio JS, Ulph A (2007) An infinite-horizon model of dynamic membership of international environmental agreements. J Environ Econ Manag 54:296–310

Salo A, Weber M (2007) Ambiguity aversion in first-price sealed bid auctions. J Risk Uncertain 11:123–137

Samuelson WF (1985) Competitive bidding with entry costs. Econ Lett 17:53–57

Acknowledgments

We thank Céline Savard-Chambard, Saïd Souam, Nicolas Eber and Hervé Moulin for conversations and comments that improved the paper. We received very useful suggestions from Sylvain Béal. Comments by anonymous reviewers were helpful to improve the presentation of the model and complete the literature survey (mean field games and stopping by vote).

Author information

Authors and Affiliations

Corresponding author

Additional information

Marc Deschamps acknowledges the financial support of ANR Damage (programme ANR-12-JSH1-0001, 2012-2015). The usual disclaimer applies.

Appendices

Appendix 1: Proofs of the Theorems

1.1 Proof of Theorem 1

We remark the maximum number of players is \(T-t_1+1\), and can only be attained at time T, and only if a player has arrived at each instant of time. We then have

For \(m\le T-t_1\) and a compatible \(\tau _m\), we have

Now, \(t_{m+1}>T\) with a probability \((1-p)^{T-t_m}\), and the occurrence of a given \(t_{m+1}\le T\) has a probability \(p(1-p)^{t_{m+1}-t_m-1}\). Hence, writing \(t_+\) for \(t_{m+1}\):

Introduce the notation \(q=1-p\). Interchanging the order of summation,

Using classical formulas for the sum of a geometric series and regrouping terms, we obtain for \(m\le T-t_1\):

being agreed that \({\varPi ^e_{m+1}}(\tau _m,T+1)=0\). A more useful form of this formula for the sequel is as follows:

where the second term of the right-hand side is absent if \(t_m=T\).

Remark 14

It is a not-so-easy calculation to check that if \(L_m(\tau _m,t) \le L\) and \(\varPi ^e_{m+1}(\tau _m,t_{m+1}) \le (T-t_{m+1}+1)L\), then this formula implies, as it should, \(\varPi ^e_m(\tau _m) \le (T-t_m+1)L\).

A hint about how to make the above check is as follows: in the second term of the formula, write

and use the classic formula for the sum of the (finite) geometric series.

We use formula (6) recursively: write first \(\varPi ^e_1\) as a function of \(\varPi ^e_2\), and using again the same formula substitute for \(\varPi ^e_2\) as a function of \(\varPi ^e_3\), and again for \(\varPi ^e_3\) as a function of \(\varPi ^e_4\). Then interchange the order of the summations, placing them in the order \(t,t_2,t_3,t_4\). In the following formula, every time the lower bound of a sum is larger than the upper bound, the term is just absent. We obtain

Continuing in the same way up to \(m=T-t_1+1\), we obtain

The last term can be identified as the term \(m=T-t_1+1\) of the first sum, as the range of t in the embedded (second) sum is limited to \(t=T\), and we have seen that \(L_{T-t_1+1}(T)=M_{T-t_1+1}(T)\). It suffices now to shift m by one unit to obtain the formula

And interchanging a last time the order of the summations, formula (1). \(\square \)

Remark 15

As a consequence of formula (1), if for some fixed L, for all m, \(\tau _m\) and t, \(L_m(\tau _m,t)=L\), then \(\varPi ^e_1=[(1-r^{T-t_1+1})/(1-r)]L\) (whose limit as \(r\rightarrow 1\) is \((T-t_1+1)L\)), and if \(L_m(\tau _m,t)\le L\), then \(\varPi ^e_1\) is bounded above by that number.

1.2 Proof of Theorem 5

We start with formula (1) where we set \(t_1=0\), and recall by a superscript (T) that it is a formula for a finite horizon T, the horizon we want to let go to infinity:

The only task left to prove the theorem is to show that the series obtained as \(T\rightarrow \infty \) converges absolutely. To do this, we need an evaluation of \(M_m(t)\). Observe that the cardinal of the set \(\mathscr {T}_m(t)\) is simply the combinatorial coefficient

As a consequence, if \(|L_m| \le L^m\), we have

and

Therefore,

The series converges absolutely provided that

which is always true if \(L\le 1\), and ensured for all \(p\le 1\) if \(rL < 1\). This proves the theorem for the case exponentially bounded.

In the case uniformly bounded, with \(|L_m|\le L\), we obtain similarly

and

and the series is always absolutely convergent. \(\square \)

1.3 Proof of Theorem 10

We aim to apply formula (3). The term \(t=0\) requires a special treatment: the only term in the sum over m is \(m=1\) and \(M_1(0)=L_1(0)=c\). For \(t > 0\), we have three cases:

-

1.

For \(m=1\), there has not been any new entrant; therefore, \(L_1(t) = c\).

-

2.

For \(1< m < t+1\), we sum first over the \(\tau _m\) such that \(t_m < t\), i.e. \(\tau _m\in \mathscr {T}_m(t-1)\), then over the \(\tau _m\) such that \(t_m=t\); there the sum is over the values of \(\tau _{m-1}\in \mathscr {T}_{m-1}(t-1)\).

-

3.

For \(m=t+1\), there have been new entrants at each time step. Therefore, \(L_{t+1}(\tau _{t+1},t) = c / 2t\). We summarize this in the following calculation:

$$\begin{aligned} \text{ for }\quad m=1,\quad M_1(t)&= c = c\frac{(t-1)!}{(m-1)!(t-m+1)!}\frac{1}{m},\\ \text{ for }\quad 1<m<t+1,\quad M_m(t)&= \sum _{\tau _m\in \mathscr {T}_m(t-1)} \frac{c}{m} + \sum _{\tau _{m-1}\in \mathscr {T}_{m-1}(t-1)}\frac{c}{2(m-1)}\\&= c\frac{(t-1)!}{(m-1)!(t-m+1)!}\frac{1}{m}\\&\quad + c\frac{(t-1)!}{(m-2)!(t-m+1)!}\frac{1}{2(m-1)},\\ \text{ for }\; m=t+1,\; M_{t+1}(t)&= \frac{c}{2t} = c\frac{(t-1)!}{(m-2)!(t-m+1)!}\frac{1}{2(m-1)}. \end{aligned}$$

We therefore obtain, for \(t>0\):

Finally, summing over t as in formula (3), without forgetting the term \(t=0\),

It suffices to take out one power of rq from the sum over t, shift the summation index by one unit, recognize the expected payoff of the simple sharing scheme and replace it by formula (4) to prove the theorem.

Appendix 2: Static Equilibria

We consider n identical firms (a clone economy) sharing a market with a linear inverse demand function, linking the price P to the total production level Q as

Production costs have been lumped into b and so doing normalized at zero. We compute the optimal production level Q, and resulting price P and profit \(\varPi \) of each firm for various equilibria.

1.1 Cartel

In a pure cartel, firms behave as a single player, only sharing the optimal production level equally among them. Let Q be that level. The profit of the (fictitious) single player is

Hence, the optimal production level is \(Q=b/(2a)\), to be equally divided among the firms, as well as the profit \(\varPi = b^2/(4a)\). The price is then \(P= b/2\), and the individual production level q and profit \(\varPi _i\) are

1.2 Cartel–Stackelberg

We investigate the case where \(n-1\) firms form a cartel, behaving as a leader vis à vis one firm acting as a follower.

Let \(q_L\) be the quantity produced by each incumbent, \(q_F\) that of the follower. Hence, \(Q=(n-1)q_L+q_F\). The follower’s profit is

hence

Therefore, the optimal reaction curve \(q_F\) as a function of \(q_L\) is

With such a strategy, each incumbents’ profit is

Therefore, the optimal production level of each incumbent and their profit are

Placing this back into the optimal follower’s reaction curve, its production level and profit are

and the price of the commodity is \(P=b/4\). All these results will be summarized in a table in the last section.

1.3 Cournot–Nash

We have n identical firms competing à la Cournot. The Cournot–Nash equilibrium is obtained as follows. Let q be the individual production level, therefore \(Q=nq\), and P the resulting price:

The individual profit of player i is

It follows that the optimum \(q_i\) is

but we seek a symmetric equilibrium where \(q_i=q\), and therefore,

Placing this back into the law for P, we find

1.4 Cournot–Stackelberg

We finally consider \(n-1\) firms competing à la Cournot–Nash within their group, producing a quantity \(q_L\) each, but that group behaving as a leader vis à vis a single follower, producing a quantity \(q_F\). We therefore have

The calculations are similar to the previous ones. The follower’s profit is therefore

Hence,

With this strategy,

Consequently, for player i, one of the leaders,

It follows that

while \(P = b/(2n)\), and

1.5 Summary

We regroup the results of these calculations in the following table:

Appendix 3: Complexity

In this appendix, we undertake to count the number of arithmetic operations involved in computing \(\varPi ^e_1\) for a finite horizon by four different methods: (direct) path enumeration, backward dynamic programming, path enumeration and forward dynamic programming, and use of formula (1). To ease the calculations, we let \(t_1=1\), so that T is the number of time steps. We shall refer to the tree shown in Fig. 1.

If we assume no regularity in their definition, the data are made of the collection of all \(L_m(t)\), \(t=1,\ldots ,T\), that is, as many numbers as there are branches in the tree, i.e.

numbers. As all must be used, there is no way in which a general method could involve less than that number of arithmetic operations. Therefore, we expect a complexity of the order of \(2^T\) (of the order of \(10^6\) for \(T=20\) and \(10^{15}\) for \(T=50\), a completely unrealistic case!), and the difference between methods can only be in the coefficient multiplying that number.

1.1 Path Enumeration

The tree counts \(2^{T-1}\) paths from the root to a leaf. Let \(\nu \in [1,2^{T-1}]\) number them. We denote by \(\pi _\nu \) the path number \(\nu \), and let \(m_\nu \) be the number of player present at the end of path \(\pi _\nu \). Each path has a probability of being followed

Let \(L_\nu (t)\) denote the \(L_n(\tau _n,t)\) on path \(\pi _\nu \). Each path involves a payoff

And we have

A direct method of evaluating \(\varPi ^e\) is therefore as follows:

-

1.

Compute the \(\mathbb {P}(\pi (\nu ))\) for each \(\nu \). The computation of each involves \(T-2\) multiplications.Footnote 2 Therefore, that step involves \(2^{T-1}(T-2)\) arithmetic operations.

-

2.

Compute the \(\varPi (\pi _\nu )\). Each involves \(T-1\) additions; therefore, this step involves \(2^{T-1}(T-1)\) arithmetic operations.

-

3.

Compute \(\varPi ^e_1\) according to formula (10), involving \(2^{T-1}\) multiplications and as many additions (-1), that is, \(2^T\) operations.

Therefore, the total number of arithmetic operations is

that is of the order of \(T2^T\).

1.2 Dynamic Programming (DP)

Denote the nodes of the tree by the sequence \(\sigma (t)\) of t indices, 0 or 1, the 1 denoting the times when an arrival occurred, a branch sloping up in our figure. (All sequences \(\sigma (t)\) begin with a one.) The possible successors of a given \(\sigma (t)\) are \((\sigma (t),0)\) and \((\sigma (t),1)\) that we denote as

Denote by \(L(\sigma (t))\) the \(L_m\) of the branch reaching the node \(\sigma (t)\).

1.2.1 Backward DP

Let \(V(\sigma (t))\) be the expected future payoff when at node \(\sigma (t)\). It obeys the dynamic programming equation

and \(\varPi ^e_1 = V(\mathrm {root}) = V(1)+L_1(1)\).

There are thus four arithmetic operations to perform at each node of the tree (not counting the leaves), that is,

arithmetic operations, i.e. of the order of \(4\times 2^T\).

1.2.2 Path Enumeration and Forward DP

This is a variant of the path enumeration method (10) on two counts:

-

1.

Compute once each probability \(p^{m-1}(1-p)^{T-m}\) and store it. This costs \(T(T-2)\) arithmetic operations.

-

2.

Compute the \(\varPi (\pi _\nu )\) according to the forward dynamic programming method

$$\begin{aligned} \varPi (\sigma (t-1),i(t-1)) = \varPi (\sigma (t-1)) + L(\sigma (t-1),i(t-1)). \end{aligned}$$This is one addition at each node of the tree, i.2. \(2^T\) operations.

It remains to implement formula (10), using \(2^T\) arithmetic operations, for a total of \(2^{T+1} + T(T-2)\). This is of the order of \(2\times 2^T\).

1.3 Using the \(M_m(t)\)

We rewrite formula (1) as

1.3.1 Computing the \(M_m(t)\)

The first task is to compute the collection of \(M_m(t)\). For each t, there are \(2^{t-1}\) \(L_m(t)\) to combine in t terms, that is, \(2^{t-1}-t\) additions. There is no computation for the steps 1 and 2. The total number of additions there is therefore

1.3.2 Computing the \(p^{m-1}(1-p)^{t-m}\)

We set

We compute them according to the following method:

Counting the arithmetic operations, this leads to \(T-1\) multiplications to compute the \(u_1(t)\), and to

multiplications to compute the rest of the \(u_m(t)\). That is for this step

arithmetic operations.

1.3.3 Applying Formula (11)

Finally, there are \(T(T+1)/2\) terms in formula (11), each involving a multiplication and an addition, i.e. \(T(T+1)\) arithmetic operations (minus one addition)

Summing all steps, this is

i.e. of the order of \(2^T\) arithmetic operations, half as many as in the best DP method.

1.4 Simple Case

In the case where the \(L_m(\tau _m,t)\) are actually independent from \(\tau _m\), the computation of each \(M_m\) requires just a multiplication by a combinatorial coefficient, that is, an overall complexity for all \(M_m\) of \(T(T-1)/2\) or \(T(T-1)\) depending on whether the combinatorial coefficients are given or to be computed (via the Pascal Triangle algorithm). Then the complexity of our method drops to \(2T^2\) or \(2.5 T^2\). A huge simplification makes it possible to actually compute the result for large T.

1.5 Conclusion

Our theory gives the fastest algorithm for this general “unstructured” case, half as many algebraic operations as in the next best. But of course, its main advantage is elsewhere, in allowing one to take advantage of any regularity in the definitions of the \(L_m\), and also in allowing for closed formulas for the infinite horizon case. Formula (4) is a typical example.

A general remark is that going from the “direct” method, in \(T2^T\) arithmetic operations to one with a constant coefficient, in a typical computer science tradeoff, we trade computer time for storage space. However, if the \(L_m\) need to be stored as data (as opposed to being given by some explicit formula), then in all the faster methods, they are used only once so that their memory space can be reused for intermediate results.

Rights and permissions

About this article

Cite this article

Bernhard, P., Deschamps, M. On Dynamic Games with Randomly Arriving Players. Dyn Games Appl 7, 360–385 (2017). https://doi.org/10.1007/s13235-016-0197-z

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13235-016-0197-z