Abstract

In this paper, we study “robust” dominating sets of random graphs that retain the domination property even if a small deterministic set of edges are removed. We motivate our study by illustrating with examples from wireless networks in harsh environments. We then use the probabilistic method and martingale difference techniques to determine sufficient conditions for the asymptotic optimality of the robust domination number. We also discuss robust domination in sparse random graphs where the number of edges grows at most linearly in the number of vertices.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Domination of graphs is an important topic from both theoretical and application perspectives and has been extensively studied in the random graph context as well. Throughout, random graphs refer to the Bernoulli or Erdös-Rényi random graph \(G\) obtained by allowing each edge in the complete graph on \(n\) vertices to be present with a certain probability \(p,\) independent of the other edges (for formal definitions, please refer to Sect. 2). In [7], two point concentration for the domination number of \(G\) is obtained for the case when \(p\) is essentially a constant and this concentration phenomenon was extended for a wide range of \(p\) in [5]. Since then many other variants of domination have also been studied (see for e.g. [3] [6]).

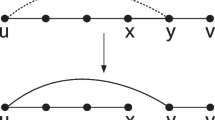

Dominating sets also occur naturally in the design of wireless networks. In [8], an early application of dominating sets is explored for routing in ad hoc wireless networks devoid of any central control. The nodes belonging to the dominating sets are interpreted as “gateways" through which any two nodes in the network can communicate with minimal delay. This was extended to higher dimensional wireless networks in [9] and for a survey on the usage of domination in communications, we refer to [4].

Ad hoc networks are especially fragile in terms of linkage in the sense that the sensors are continually moving around and so links may break or form randomly. Moreover, due to environmental constraints like shadowing and fading, it may happen that links between certain nodes are simply not feasible. In such a situation, it is of natural interest to know whether the domination property is still retained and this is the topic of study in this paper. We consider dominating sets in the Bernoulli random graph \(G\) and are interested in obtaining “robust" dominating sets that retain the domination property even if a small deterministic set of edges are removed. We obtain sufficient conditions for asymptotic optimality of the robust domination number in terms of the maximum vertex degree and the number of edges of the graph that has been removed from \(K_n.\)

The paper is organized as follows: In Sect. 2, we state and prove our main result regarding robust domination number in random graphs for the dense regime. We use the probabilistic method to establish sufficient conditions for asymptotic optimality. Next, in Sect. 3, we also discuss our results for the robust domination in the sparse regime.

2 Robust domination

Let \(K_n\) be the complete graph on \(n\) vertices and let \(\{Z(f)\}_{f \in K_n}\) be independent random variables indexed by the edge set of \(K_n\) and with distribution

where \(0< p < 1.\) Let \(G\) be the random graph formed by the union of all edges \(f\) satisfying \(Z(f) = 1\) and let \(H\) be any deterministic subgraph of \(K_n\) with \(m = m(n)\) edges and a maximum vertex degree of \(\Delta = \Delta (n).\)

A set \({{{\mathcal {S}}}} \subset V\) is said to be a dominating set of \(G {\setminus } H\) if each vertex in \(V {\setminus } {{{\mathcal {S}}}}\) is adjacent to at least one vertex in \({{{\mathcal {S}}}}\) in the graph \(G {\setminus } H.\) We also say that \({{{\mathcal {S}}}}\) is a \(H-\)robust dominating set or simply a robust dominating set. The \(H-\)robust domination number or simply the robust domination number is defined to be the minimum size of a dominating set in \(G {\setminus } H\) and is denoted by \(\gamma (G {\setminus } H).\)

We seek conditions on \(H\) so that the robust domination number \(\Gamma _n:= \gamma (G{\setminus } H)\) and the actual domination number \(\gamma (G) \le \Gamma _n\) are of the same order. Intuitively, if \(H\) is sparse, then we expect \(\Gamma _n\) and \(\gamma (G)\) to be close with high probability, i.e., with probability converging to one as \(n \rightarrow \infty .\) This is illustrated in our first result below that obtains bounds for \(\Gamma _n\) in terms of the maximum vertex degree \(\Delta \) of the graph \(H.\) For \(0< x,y < 1\) we define \(u_n(x,y):= \frac{\log (nx)}{|\log (1-y)|}\) and set \(u_n:= u_n(p,p).\) Moreover, we use the notation \(a_n = o(b_n)\) to denote that \(\frac{a_n}{b_n} \longrightarrow 0,\) as \(n \rightarrow \infty .\)

Lemma 1

The following properties hold:

- \((a)\):

-

Let \(\lambda _a:= np\) and \(\lambda _b:= n|\log (1-p)| > \lambda _a.\) For every \(\theta > 2,\) there exists a \(\lambda _0 = \lambda _0(\theta ) > 0\) such that if \(\frac{\lambda _0}{n} \le p \le 1-\frac{1}{n^3},\) then

$$\begin{aligned} {\mathbb {P}}\left( \Gamma _n \ge u_n \left( 1-\frac{\theta \log \log {\lambda _b}}{\log {\lambda _a}}\right) \right) \ge 1-\exp \left( -\frac{3n}{8} \cdot \frac{(\log {\lambda }_b)^{\theta }}{\lambda _a}\right) . \end{aligned}$$(2) - \((b)\):

-

Let \(\Delta \) be the maximum vertex degree of \(H\) and suppose \(np \longrightarrow \infty \) and \(p \le p_0\) for some constant \(0< p_0 <1.\) For every \(\epsilon > 0\) and all \(n\) large,

$$\begin{aligned} {\mathbb {P}}\left( \Gamma _n \le u_n(1+\epsilon ) + \Delta \right) \ge 1- \frac{1}{\log (np)}. \end{aligned}$$(3)

Consequently if \(\Delta = o(u_n)\) and \(p \le p_0\) is such that \(np \longrightarrow \infty ,\) then \(\frac{\Gamma _n}{u_n} \longrightarrow 1\) in probability as \(n \rightarrow \infty .\)

The condition \(np \longrightarrow \infty \) ensures that \(G\) is reasonably dense in terms of its vertex degrees. In particular if the edge probability \(p\) is a constant, then \(u_n\) is of the order of \(\log {n}\) and moreover both \(\lambda _a\) and \(\lambda _b\) are of the order of \(n.\) Setting \(\theta = 3\) in (2), we then get that \(\Gamma _n \ge u_n \left( 1- O\left( \frac{\log \log {n}}{\log {n}}\right) \right) \) with probability at leat \(1-e^{-C(\log {n})^3}\) for some constant \(C > 0.\) Similarly, the final statement (3) implies that if \(\Delta = o(\log {n}),\) then \(\Gamma _n \le u_n(1+2\epsilon )\) with high probability, for any arbitrary constant \(\epsilon > 0.\)

Combining the observations of the previous paragraph, we get that \(\Gamma _n \sim u_n\) with high probability, where we use the notation \(a_n \sim b_n\) to denote that

\(\frac{a_n}{b_n} \longrightarrow 1\) as \(n \rightarrow \infty .\) Thus the robust domination number satisfies

\(\Gamma _n \sim u_n \sim \gamma (G)\) and is therefore asymptotically equal to the “ideal" domination number \(\gamma (G),\) with high probability.

Proof of Lemma 1

\((a)\): Since \(\gamma (G {\setminus } H) \ge \gamma (G),\) it suffices to lower bound \(\gamma (G)\) and for completeness, we give a small proof using a union bound argument covering all possibilities, as in [7] [5]. Specifically, letting \(\lambda _a:= np, \lambda _b = n|\log (1-p)|\) and \(t_n:= \frac{\log {\lambda _a} - \theta \log \log {\lambda _b}}{|\log (1-p)|}\) vertices, we show that there exists a dominating set containing \(t_n\) vertices, with high probability.

We begin with upper and lower bounds for \(t_n.\) Using \(|\log (1-p)| > p,\) we see that \(t_n \le \frac{\log {\lambda _a}}{p} = n\frac{\log {\lambda _a}}{\lambda _a} < \frac{n}{4}\) if \(\lambda _a \ge \lambda _0,\) a sufficiently large absolute constant. Moreover, we have that \(\lambda _a > (\log {\lambda _b})^{2\theta }\) for all \(n \ge N_0 = N_0(\theta )\) large, provided \(np \ge \lambda _0 = \lambda _0(\theta )\) is large. Indeed if \(p \le \frac{1}{2},\) then using \(|\log (1-p)| < 2p,\) we get that \(\lambda _a - (\log {\lambda _b})^{2\theta } \ge np-(\log (2np))^{2\theta } >0\) if \(np \ge \lambda _0 = \lambda _0(\theta )\) is sufficiently large. On the other hand if \(\frac{1}{2} \le p \le 1-\frac{1}{n^3},\) then \(\lambda _a = np > \frac{n}{2}\) and

for all \(n \ge N_0 = N_0(\theta )\) large. Summarizing, we get that

for all \(n\) large.

Let \({{{\mathcal {S}}}}\) be any set containing \(t_n\) vertices. For a vertex \(v \in {{{\mathcal {S}}}}^c,\) the probability that \(v\) is not adjacent to any vertex of \({{{\mathcal {S}}}}\) is \((1-p)^{t_n} = \frac{(\log {\lambda _b})^{\theta }}{\lambda _a}.\) Thus the vertex \(v\) is adjacent to some vertex of \({{{\mathcal {S}}}}\) with probability \(1-\frac{(\log {\lambda _b})^{\theta }}{\lambda _a}\) and so if \(E_{dom}({{{\mathcal {S}}}})\) is the event that \({{{\mathcal {S}}}}\) is a dominating set, then using the fact that the complement set \({{{\mathcal {S}}}}^c\) has \(n-t_n \ge \frac{3n}{4}\) vertices (see (4)), we get that

Since there are \({n \atopwithdelims ()t_n} \le \left( \frac{ne}{t_n}\right) ^{t_n} = \exp \left( t_n \log \left( \frac{ne}{t_n}\right) \right) \) sets of size \(t_n,\) we use the union bound and the bounds in (4), to see that the probability that there exists a dominating set of size at most \(t_n\) is bounded above by

for all \(n \ge N_0\) not depending on \(\lambda _a\) or \(\lambda _b,\) where the second inequality in (5) is true provided \(np=\lambda _a \ge \lambda _0\) is large and the final inequality in (5) is true if we choose \(\theta > 2\) strictly. \(\square \)

Proof of Lemma 1

\((b)\): Let \({{{\mathcal {D}}}}\) be any set containing \((1+\epsilon )u_n + \Delta \) vertices. Each vertex \(v \notin {{{\mathcal {D}}}}\) is adjacent to at least \((1+\epsilon )u_n\) vertices of \({{{\mathcal {D}}}}\) in the graph \(K_n {\setminus } H\) and so the probability that \(v\) is not adjacent to any vertex of \({{{\mathcal {D}}}}\) in \(G {\setminus } H,\) is at most \((1-p)^{(1+\epsilon )u_n} = \frac{1}{(np)^{1+\epsilon }}.\) Thus if \({{{\mathcal {B}}}}\) is the set of all vertices not dominated by \({{{\mathcal {D}}}}\) in \(G {\setminus } H,\) then \({\mathbb {E}}\#{{{\mathcal {B}}}} \le \frac{n}{(np)^{1+\epsilon }}\) and so a direct application of Markov inequality gives that

By definition \({{{\mathcal {D}}}} \cup {{{\mathcal {B}}}}\) is a dominating set in \(G {\setminus } H\) and has size

with probability at least \(1-\frac{1}{\log {np}},\) by (6). Also since \(p \le p_0\) a constant, we have that \(|\log (1-p)| \le \sum _{k \ge 1}p^{k} \le \frac{p}{1-p} \le \frac{p}{1-p_0}\) and so

for all \(n\) large, since \(np \longrightarrow \infty .\) From (7), we then the upper deviation bound in (3). \(\square \)

From the discussion following Lemma \(1,\) we see that if the edge probability \(p\) is a constant, then \(\Delta = o(\log {n})\) is sufficient to ensure the asymptotic equivalence of the robust and the ideal domination numbers. However, in this case we also know that with high probability, the vertex degree in the random graph \(G\) in fact grows linearly with \(n,\) which is much larger than \(\log {n}.\) Therefore could we, perhaps under additional assumptions, establish asymptotic equivalence for conflict graphs \(H\) that are sparse in comparison to \(G\)? Addressing this issue, we have the following result for random graphs with a convergent edge probability sequence.

Theorem 1

Suppose \(np \longrightarrow \infty ,\;\;p \le 1-\frac{1}{n^3}\) and \(p = p(n) \longrightarrow p_0\) for some constant \(0\le p_0 \le 1.\) As before, let \(H = H(n)\) be any deterministic graph with maximum vertex degree \(\Delta = \Delta (n)\) and containing \(m = m(n)\) edges. If either

then \(\frac{\Gamma _n}{u_n} \longrightarrow 1\) in probability as \(n \rightarrow \infty .\)

Continuing with constant edge probability example, we see from Theorem 1 that if \(p\) is a constant, then either \(\Delta = o(n)\) or \(m = o(n\log {n})\) is sufficient for \(\Gamma _n\) and \(\gamma (G)\) to be asymptotically equal.

For the case \(p_0=0\) which is of interest in communication networks, we see that there are robust dominating sets that asymptotically have the same size as the ideal dominating sets even if the number of edges removed per vertex is much larger than the vertex degree itself. This is true because the expected degree of a vertex in \(G\) equals \((n-1)p \approx np\) and so by the standard deviation estimate (34), we can deduce that each vertex has degree at most of the order of \(np\) with high probability. Condition (8) ensures that the robust domination number is asymptotically optimal provided \(\Delta = o(n),\) even if \(\Delta \) is much larger than \(np.\)

To prove Theorem 1, we perform a case by case analysis of \(\Gamma _n\) based on the asymptotic edge probability \(p_0.\) Recalling the definition of \(u_n(x,y)\) and \(u_n = u_n(p,p)\) prior to Lemma 1, we have the following result.

Lemma 2

Suppose that \(\Delta \le r_0 n-1\) for some constant \(0< r_0 < 1.\)

- \((a)\):

-

For every \(\epsilon > 0\) there are positive constants \(\lambda _i = \lambda _i(\epsilon ,r_0),i=1,2\) such that if \(\frac{\lambda _1}{n} \le p \le \min \left( \frac{1}{2}, 1-\exp \left( -\frac{\epsilon ^2(1-r_0)}{16}\right) \right) ,\) then

$$\begin{aligned} {\mathbb {P}}\left( \Gamma _n \le \frac{(1+6\epsilon )u_n}{1-r_0}\right) \ge 1-z_n, \end{aligned}$$(9)where

$$\begin{aligned} z_n:= \min \left( \exp \left( -\frac{\lambda _2 n}{4(np)^{(1+4\epsilon )(1-r_0)^{-1}}}\right) , \frac{1}{(np)^{\epsilon /2}}\right) . \end{aligned}$$ - \((b)\):

-

For every \(\epsilon >0\) and every constant \(0< p < 1,\) we have that

$$\begin{aligned} {\mathbb {P}}\left( \Gamma _n \le (1+\epsilon )u_n(p,p(1-\epsilon )-r_0)\right) \ge 1-\exp \left( -\frac{\epsilon ^2np}{8}\right) . \end{aligned}$$(10) - \((c)\):

-

For every \(\epsilon >0,\) there is a constant \(C = C(\epsilon ) > 0\) such that if \(q_1:=\max \left( 1-p,\frac{\Delta }{n}\right) \le 2^{-2/\epsilon -2}\) then

$$\begin{aligned} {\mathbb {P}}\left( \Gamma _n \le (1+\epsilon )u_n(p,1-q_1)\right) \ge 1-\frac{C}{n^{\epsilon /2}}. \end{aligned}$$(11)

Parts \((a),(b)\) and \((c)\) of Lemma 2 essentially obtain deviation upper bounds for \(\Gamma _n\) for the cases \(p_0 = 0, 0< p_0 < 1\) and \(p_0 =1,\) respectively.

We use the probabilistic method to prove Lemma 2 and so we begin with a couple of common definitions. For integer \(t \ge 1\) let \({{{\mathcal {X}}}}:= (X_1,\ldots ,X_{t})\) be a random \(t-\)tuple chosen from \(V^{t},\) that is independent of the graph \(G.\) Also let \({\mathbb {P}}_X\) denote the probability distribution of \({{{\mathcal {X}}}}.\) In each of the three cases below, we choose the tuple \({{{\mathcal {X}}}}\) appropriately so that certain niceness properties are satisfied and exploit this to estimate the domination number.

Proof of Lemma 2

\((a)\): For a constant \(0< \zeta < 1\) to be determined later, let \(t = \frac{u_n}{1-\zeta }.\) Assuming that \(X_i, 1 \le i \le t\) are independent and chosen uniformly randomly from \(V,\) we estimate below the number of vertices “left out" by the set \({{{\mathcal {D}}}}:= \{X_1,\ldots ,X_t\}.\) For a vertex \(v,\) the \({\mathbb {P}}_X-\)probability that the random variable \(X_1\) is equal to \(v\) or adjacent to \(v\) in \(H\) is at most \(\frac{\Delta +1}{n} \le r_0.\) Therefore if \(Q(v)\) is the number of indices \(i, 1 \le i \le t\) such that \(X_i\) is not adjacent to \(v\) in the graph \(H,\) then \({\mathbb {E}}_{X}(Q(v)) \ge t(1-r_0)\) and using the standard deviation estimate (34) we get for \(\epsilon > 0\) that

In the Appendix we show that \(u_n(x,x) = \frac{\log (nx)}{|\log (1-x)|}\) is strictly decreasing for all \(x > \frac{\lambda _{low}}{n}\) where \(\lambda _{low} > 0\) is a sufficiently large absolute constant. Therefore if \(\dfrac{\lambda _{low}}{n} \le p \le \lambda _{up}:= 1-\exp \left( -\frac{\epsilon ^2(1-r_0)}{16}\right) ,\) then for all \(n \ge N_0(\epsilon ,r_0)\) large we have that

where the second inequality in (13) is true since \(\lambda _{up}\) is a constant and so \(\log (n\lambda _{up}) \ge \frac{\log {n}}{2}\) for all \(n\) large. Therefore setting

we get from the union bound, (12) and (13) that

We assume henceforth that \(E_{tot}\) occurs.

Next, we estimate the number of distinct entries in \({{{\mathcal {X}}}}.\) Since \(|\log (1-p)| > p,\) we get for any constant \(\zeta > 0\) that \(t = \frac{1}{1-\zeta }\frac{\log (np)}{|\log (1-p)|}< \frac{\log (np)}{p(1-\zeta )} < \frac{\epsilon n}{2}(1-r_0),\) provided \(np \ge \lambda _{low} = \lambda _{low}(\epsilon ,\zeta ,r_0)\) is large enough. Now, for \(i \ne j,\) the probability that \(X_i\) equals \(X_j\) is \(\frac{1}{n}\) and so the \({\mathbb {P}}_X-\)expected number of repeated entries is at most \(\frac{t^2}{n} \le \frac{\epsilon t}{2}(1-r_0).\) If \(E_{rep}\) is the event that the number of repeated entries is at most \(t\epsilon (1-r_0)\) then \({\mathbb {P}}_X(E^c_{rep}) \le \frac{1}{2},\) by the Markov inequality. Combining with the estimate for \({\mathbb {P}}_X(E_{tot})\) in the previous paragraph we then get from the union bound that \(E_{tot} \cap E_{rep}\) occurs with \({\mathbb {P}}_X-\)probability at least \(\frac{1}{2} - \frac{1}{n} > 0.\)

We assume henceforth that \(E_{tot} \cap E_{rep}\) occurs so that each vertex \(v\) is adjacent to least \(t(1-\epsilon )(1-r_0) - t\epsilon (1-r_0) \ge t(1-2\epsilon ) (1-r_0)\) and at most \(t = \frac{u_n}{1-\zeta }\) vertices of \({{{\mathcal {D}}}},\) in the graph \( K_n {\setminus } H.\) Setting \(1-\zeta := \frac{(1-2\epsilon )(1-r_0)}{1+\epsilon },\) we then get that the probability of the event \(J_v\) that \(v\) is not adjacent to any vertex of \({{{\mathcal {D}}}}\) in the graph \(G {\setminus } H,\) is at most \((1-p)^{(1+\epsilon )u_n} = \frac{1}{(np)^{1+\epsilon }}\) and at least \((1-p)^{t} = \frac{1}{(np)^{(1-\zeta )^{-1}}}.\) If \(L_{tot}:= \sum _{v \notin {{{\mathcal {D}}}}} 1{1}(J_v),\) then \({\mathbb {E}}L_{tot} \le \frac{n}{(np)^{1+\epsilon }}\) and a direct application of the Markov inequality gives us that

Similarly \({\mathbb {E}}L_{tot} \ge \frac{n}{(np)^{(1-\zeta })^{-1}}\) and so using the standard deviation estimate (34) we get that

since \((1-\zeta )^{-1} = \frac{1+\epsilon }{(1-2\epsilon )(1-r_0)} \le \frac{1+4\epsilon }{1-r_0}\) provided \(\epsilon > 0\) is a small enough constant. We fix such an \(\epsilon \) henceforth.

If \(L_{tot} \le \frac{n}{(np)^{1+\epsilon /2}},\) then \(\Gamma _n \le \#{{{\mathcal {D}}}} + L_{tot} \le \frac{u_n}{1-\zeta } + \frac{n}{(np)^{1+\epsilon /2}}\) and moreover, using \(|\log (1-p)| <2p\) for \(p < \frac{1}{2},\) we have for \(np \ge \lambda _{low}\) large enough that \(\frac{n}{(np)^{1+\epsilon /2}}< \epsilon \frac{\log (np)}{p} < 2\epsilon u_n.\) Thus

and together with (14) and (15), this obtains the desired upper bound in (9). \(\square \)

Proof of Lemma 2

\((b)\): We begin with a couple of preliminary calculations. If \(d_G(v)\) is the degree of \(v\) in \(G,\) then \({\mathbb {E}}d_G(v) = (n-1)p.\) Therefore from the deviation estimate (34), we get that \(d_G(v) \ge np(1-\epsilon )\) with probability at least \(1-\exp \left( -\frac{\epsilon ^2}{5}np\right) .\) Letting \(E_{deg}:= \bigcap _{v} \{d_G(v) \ge np(1-\epsilon )\},\) we get from the union bound that

for all \(n\) large.

We henceforth assume that \(E_{deg}\) occurs and let \(X_j, 1 \le j \le t\) be independently and uniformly chosen from the vertex set \(V\) also independent of the graph \(G.\) Let \({{{\mathcal {N}}}}(X_i)\) be the set of all neighbours of \(X_i\) in the graph \(G{\setminus } H\) and set \({{{\mathcal {N}}}}[X_i]:= \{X_i\} \cup {{{\mathcal {N}}}}(X_i)\) to be the closed neighbourhood of \(X_i.\) Setting \({{{\mathcal {B}}}}:= \bigcup _{1 \le j \le t} {{{\mathcal {N}}}}[X_j],\) we see that each vertex in \({{{\mathcal {B}}}}\) is adjacent to at least one vertex in \(\{X_j\}_{1 \le j \le t}\) and so \({{{\mathcal {D}}}}:= \bigcup \{X_j\}_{1 \le j \le t} \bigcup \left( V {\setminus } {{{\mathcal {B}}}}\right) \) is a dominating set for \(G {\setminus } H.\) Letting \({\mathbb {P}}_X\) be the distribution of \(\{X_j\}_{1 \le j \le t},\) we see that the \({\mathbb {P}}_X-\)expected size of \({{{\mathcal {D}}}}\) is

and in the rest of the proof below, we use telescoping to bound the expected size of \({{{\mathcal {B}}}}.\)

Formally, for \(1 \le j \le t\) we let \({{{\mathcal {B}}}}_j:= \bigcup _{1 \le i \le j} {{{\mathcal {N}}}}[X_i]\) and estimate the expected increment \({\mathbb {E}}_X\#{{{\mathcal {B}}}}_{j} - {\mathbb {E}}_X\#{{{\mathcal {B}}}}_{j-1}.\) Adding these increments would then give us the desired bound for \({{{\mathcal {B}}}} = {{{\mathcal {B}}}}_{t}.\) Specifically, we have by construction that \(\#{{{\mathcal {B}}}}_j = \#{{{\mathcal {B}}}}_{j-1} + \#\left( {{{\mathcal {N}}}}[X_j] {\setminus } {{{\mathcal {B}}}}_{j-1}\right) \) and for any set \({{{\mathcal {S}}}},\)

Thus \({\mathbb {E}}_X\#\left( {{{\mathcal {N}}}}[X_j] \cap {{{\mathcal {S}}}}\right) = \frac{1}{n} \sum _{y \in {{{\mathcal {S}}}}} (d(y)+1),\) where \(d(y)\) is the degree of vertex \(y\) in \(G {\setminus } H\) and setting \({{{\mathcal {S}}}} = V {\setminus } {{{\mathcal {B}}}}_{j-1} =: {{{\mathcal {B}}}}^c_{j-1},\) we therefore get that

We recall that \(E_{deg}\) occurs and also that the maximum vertex degree of \(H\) is \(\Delta \) and so \(\sum _{y \in {{{\mathcal {B}}}}^c_{j-1}} (d(y)+1) \ge \#{{{\mathcal {B}}}}^c_{j-1} (np(1-\epsilon )+1-\Delta ).\) Defining \(\beta _{j}:= \frac{{\mathbb {E}}\#{{{\mathcal {B}}}}_j}{n},\) we then get that \(\beta _{j} \ge \theta _1 + \theta _2 \beta _{j-1},\) where \(\theta _1 = 1-\theta _2:=p -p\epsilon -\frac{\Delta -1}{n}.\) Applying recursion and using the fact that \(\beta _1 > 0,\) we get that

Substituting (19) into (17) and using \(\frac{\#{{{\mathcal {B}}}}}{n} = \beta _{t},\) we finally get that \({\mathbb {E}}_X\#{{{\mathcal {D}}}} \le t+ n(1-\theta _1)^{t-1}.\)

Summarizing, if the event \(E_{deg}\) occurs, then there exists a dominating set of size at most \(t+ n(1-\theta _1)^{t-1}.\) Setting \(t -1= (1+\epsilon )\frac{\log {(np)}}{|\log (1-\theta _1)|}\) we get that \(n(1-\theta _1)^{t-1} =\frac{n}{(np)^{1+\epsilon }} = O\left( \frac{1}{n^{\epsilon }}\right) \) and so the above iteration procedure necessarily terminates after at most \(t\) steps to provide the desired dominating set \({{{\mathcal {D}}}}\) of size at most \(t+1.\) By Lemma statement

for all \(n\) large and so \({{{\mathcal {D}}}}\) has size at most \((1+\epsilon ) u_n(p,p(1-\epsilon )-r_0).\) From (16), we then get (10). \(\square \)

Proof of Lemma 2

\((c)\): For \(t \le 2\log {n},\) let \({{{\mathcal {X}}}} = (X_1,\ldots ,X_t)\) be a uniformly randomly chosen \(t-\)tuple from \(V^{t}\) with distinct entries. Say that a vertex \(v\) is bad if \(v\) is not adjacent to any vertex of \({{{\mathcal {X}}}}\) in \(G {\setminus } H\) and let \(q:= 1-p.\) The vertex \(v\) is not adjacent to \(X_i\) in \(G {\setminus } H\) if either \(v=X_i\) or \(v\) is adjacent to \(X_i\) in \(H\) or the edge \((v,X_i)\) is not present in \(G.\) Therefore if \(A_i\) denotes the event that \(v\) is not adjacent to \(X_i\) in \(G {\setminus } H,\) then

where \({{{\mathcal {Z}}}}_i\) is the event that either \(v = X_i\) or \(v\) is adjacent to \(X_i\) in \(H.\) Moreover, because the entries of \({{{\mathcal {X}}}}\) are distinct, the events \(A_i\) and \(A_j\) are mutually \({\mathbb {P}}-\)independent, given \({{{\mathcal {X}}}}.\)

Thus denoting \(A_{bad}(v)\) to be the event that \(v\) is bad, we see that

Given \(X_1,\ldots ,X_{t-1},\) the random variable \(X_t\) is equally likely to be any of the remaining \(n-t+1\) vertices from \(\{1,2,\ldots n\}\) and since the vertex \(v\) is adjacent to at most \(\Delta \) vertices in \(H,\) the event \({{{\mathcal {Z}}}}_i\) defined prior to (20) occurs with conditional probability

Plugging this into (20) we obtain

for all \(n\) large, where \(q_1:= \max \left( q, \frac{\Delta }{n}\right) .\) Continuing iteratively, we see from (21) that

provided we set \(t:= \left( 1+\frac{\epsilon }{2}\right) \frac{\log {(np)}}{|\log (2q_1)|}.\) Using \(p \le 1\) and \(q_1 \le 2^{-2/\epsilon -2}\) we see that the required condition \(t\le 2\log {n}\) is satisfied for all \(n\) large.

If \(N_{bad}:= \sum _{v} 1{1}(A_{bad}(v))\) is the total number of bad vertices, then from (22) we see that

and so there exists a choice of \({{{\mathcal {X}}}}\) such that \({\mathbb {E}}N_{bad} \le \frac{n}{(np)^{1+\epsilon /2}}.\) We fix such a \({{{\mathcal {X}}}}\) henceforth and get from the Markov inequality that

for some constant \(C > 0\) since \(p \ge 1-2^{-2/\epsilon -2}\) (see statement of the Lemma). In other words, with probability at least \(1-\frac{C}{n^{\epsilon /2}},\) the vertices in \({{{\mathcal {X}}}}\) form a dominating set of \(G\) and so

Again using \(q_1 \le 2^{-2/\epsilon -2}\) we have that \(\frac{1+\epsilon /2}{|\log (2q_1)|} \le \frac{1+\epsilon }{|\log {q_1}|} \) and so (23) implies that \(\Gamma _n \le (1+\epsilon )u_n(p,1-q_1)\) and this obtains the desired deviation upper bound in (10). \(\square \)

We now use Lemma 2 to prove Theorem 1 below.

Proof of Theorem 1

We consider three separate subcases depending on whether the asymptotic edge probability \(p_0 = 0,1\) or otherwise. For \(p_0 = 0\) and \(\Delta = o(n),\) we use the lower deviation bound in part \((a)\) of Lemma 1 and the upper deviation bound in part \((a)\) of Lemma 2 to get that \(\frac{\Gamma _n}{u_n} \longrightarrow 1\) in probability. Similarly, the cases \(0< p_0 < 1\) and \(p_0=1\) are obtained using parts \((b)\) and \((c),\) respectively, of Lemma 2.

For \(m = o(nu_n(1-p)),\) we include a small “preprocessing" step. First consider the case \(p_0 = 0.\) For \(\epsilon > 0\) let \({{{\mathcal {Q}}}}\) be the set of all vertices with degree at most \(\epsilon n.\) In the proof of Lemma 2\((a),\) we now choose \(X_i, 1 \le i \le t\) uniformly and independently from \({{{\mathcal {Q}}}}\) and estimate the number of vertices covered by the set \({{{\mathcal {D}}}}:= \{X_1,\ldots ,X_t\} \cup {{{\mathcal {Q}}}}^c.\) For \(\epsilon > 0\) and a vertex \(v,\) the \({\mathbb {P}}_X-\)probability that \(X_1\) is equal or adjacent to \(v\) in \(H\) is at most \(\frac{\Delta +1}{\#{{{\mathcal {Q}}}}} \le \frac{\epsilon }{1-\epsilon } < 2\epsilon \) since by definition, the set \({{{\mathcal {Q}}}}^c\) has size \(o(u_n)<\epsilon u_n < \epsilon n\) for all \(n\) large.

If \(L_{tot}\) is the number of vertices “left out" by \(\{X_1,\ldots ,X_t\},\) then arguing as in the proof of Lemma 2\((a)\) we get that both (14) and (15) holds and so

for some constants \(C_1,C_2>0.\) Since \(\epsilon > 0\) is arbitrary, we argue as in the first paragraph of this proof to then get that \({\mathbb {E}}\Gamma _n = u_n(1+o(1)).\) An analogous analysis holds for the cases \(0< p_0 <1\) and \(p_0 = 1\) as well.

3 The sparse regime

In this section, we discuss robust domination in the sparse regime when \(np \longrightarrow \lambda < \infty .\) We consider the cases \(\lambda = 0\) and \(0< \lambda < \infty \) separately and have the following result regarding the robust domination number.

Theorem 2

We have:

- \((a)\):

-

If \(np \longrightarrow 0,n^2p \longrightarrow \infty \) and either \(\Delta = o(n)\) or \(m = o(n^3p),\) then \(\frac{4\Gamma _n}{n^2p} \longrightarrow 1\) in probability as \(n \rightarrow \infty .\)

- \((b)\):

-

Suppose \(np \longrightarrow \lambda \) for some \(0< \lambda < \infty \) and either \(\Delta = o(n)\) or \(m = o(n^2).\) For every \(\epsilon > 0,\) we have

$$\begin{aligned} {\mathbb {P}}\left( a(\lambda )(1-\epsilon ) \le \frac{\gamma (G)}{n} \le \frac{\Gamma _n}{n} \le b(\lambda ) (1+\epsilon )\right) \longrightarrow 1 \end{aligned}$$(24)where

$$\begin{aligned}{} & {} a(\lambda ):= \left\{ \begin{array}{cc} \lambda e^{-2\lambda } &{}\;\;\;\lambda \le \lambda _0 \\ \;\;\\ \frac{\log {\lambda }-3\log \log {\lambda }}{\lambda }, &{}\;\;\;\lambda> \lambda _0 \end{array} \right. \;,\;\; b(\lambda ):= \left\{ \begin{array}{cc} \frac{\lambda }{4}, &{}\;\;\;\lambda \le 1 \\ \;\;\\ \frac{\log {\lambda }+1}{\lambda }, &{}\;\;\;\lambda > 1 \end{array} \right. \;,\;\; \end{aligned}$$and \(\lambda _0 > 0\) is an absolute constant not depending on the choice of \(\lambda \) or \(H.\)

Essentially, for \(\lambda = 0\) we see that \(\Gamma _n\) is of the order of \(n^2p\) while for the “intermediate" regime \(0< \lambda < \infty ,\) the robust domination number is of the order of \(n,\) with high probability.

Proof of Theorem 2

\((a)\): If \(Y_{tot}\) and \(Z_{tot}\) denote, respectively, the number of edges and the number of isolated edges of \(G {\setminus } H,\) then \(\frac{Y_{tot}}{2} \le \Gamma _n \le \frac{Z_{tot}}{2}\) and so it suffices to bound \(Y_{tot}\) and \(Z_{tot}.\) The expected number of edges in \(G\) is \({n \atopwithdelims ()2}p = \frac{n^2p}{2}(1+o(1))\) and so from the deviation estimate (34) in Appendix, we get that

This provides an upper bound for \(\Gamma _n.\)

We now obtain general lower bounds for \(\Gamma _n\) assuming that \(np \longrightarrow \lambda \) and \(\Delta \le r_0n-1\) for some finite constants \(0< r_0 < 1\) and \(0 \le \lambda < \infty .\) As discussed before, it suffices to obtain a deviation bound for \(Y_{tot}\) and we use the second moment method. For an edge \(e \in K_n {\setminus } H,\) let \(A_e\) be the event that \(e\) is isolated so that \(Y_{tot} = \sum _{e \in K_n {\setminus } H} 1{1}(A_e),\) where \(1{1}(.)\) is the indicator function. Since each vertex has degree at most \(n,\) we have that

and since \(\Delta \le r_0 n\) we have that the number of edges in \(H\) is \(m \le \frac{1}{2}\Delta n \le \frac{1}{2}r_0n^2.\) Therefore from (26), we get that

for all \(n\) large.

Next, the minimum vertex degree in \(K_n {\setminus } H\) is \(n-1-\Delta \ge n(1-r_0)\) and so for distinct edges \(e_1 \ne e_2\) in \(K_n {\setminus } H,\) we argue as in (26) to get that

Thus again using (26), we get that \({\mathbb {P}}\left( A_{e_1} \cap A_{e_2}\right) -{\mathbb {P}}(A_{e_1}){\mathbb {P}}(A_{e_2})\) is bounded above by

and therefore

Using (28), (27) and the Chebychev inequality, we get for \(\epsilon > 0\) that

where \(C = C(\lambda ,r_0,\epsilon ) > 0\) is a constant. Again using (27) and (29), we get that

If \(\Delta = o(n)\) and \(\lambda = 0,\) then we can set \(r_0\) arbitrarily small in the above analysis and get from (25) and (30) that \(\frac{4\Gamma _n}{n^2p} \longrightarrow 1\) in probability as \(n \rightarrow \infty .\) If \(m = o(n^3p),\) then the number of vertices with degree larger than \(\epsilon n\) for \(\epsilon > 0\) is \(o(n^2p).\) Performing the “pre-processing" steps as in the proof of Theorem 1, we again get that \(\frac{4\Gamma _n}{n^2p} \longrightarrow 1\) in probability. \(\square \)

Proof of Theorem 2

\((b)\): We show that there exists a constant \(C > 0\) such that for every \(\epsilon > 0,\)

From (31) and the Chebychev inequality, we then get (24). Also, we only consider the case \(\Delta = o(n)\) and the preprocessing arguments analogous to the proof of Theorem 2\((a)\) holds for the case \(m = o(n^2).\)

For convenience, we assume throughout that \(np = \lambda \) and begin with the lower bounds for \({\mathbb {E}}\Gamma _n.\) Set \(\theta = 3\) in Lemma 1\((a)\) and let \(\lambda _0:= \lambda _0(3).\) Since \(p = \frac{\lambda }{n},\) we have that

and so for \(\epsilon > 0\) we have that \(u_n \left( 1-\frac{3\log \log {\lambda _b}}{\log {\lambda _a}}\right) \ge a(\lambda )n(1-\epsilon )\) for all \(n\) large. Consequently, for \(\lambda > \lambda _0,\) we get from (2) in Lemma 1 that

and so \({\mathbb {E}}\Gamma _n \ge a(\lambda )n(1-2\epsilon )\) for all \(n\) large. For \(\lambda < \lambda _0,\) we use (27) and the fact that \(\Delta = o(n)\) to get that \({\mathbb {E}}\Gamma _n \ge \frac{n^2p}{4}e^{-2\lambda }(1-2\epsilon ) = a(\lambda )n(1-2\epsilon )\) for all \(n\) large.

Next, for the upper bound for \({\mathbb {E}}\Gamma _n\) for \(\lambda \le 1,\) we recall from the discussion prior to (27) that \({\mathbb {E}}\Gamma _n \le \frac{{\mathbb {E}}Z_{tot}}{2} \le \frac{n^2p}{4} = \frac{\lambda n}{4}.\) For \(\lambda > 1,\) we use the alteration method as in the proof of Lemma 1\((b).\) Let \({{{\mathcal {D}}}}\) be any set of \(u_n + \Delta \) vertices. Each vertex \(v \notin {{{\mathcal {D}}}}\) is adjacent to at least \(u_n\) vertices of \({{{\mathcal {D}}}}\) and so \(v\) is not adjacent to any vertex of \({{{\mathcal {D}}}}\) in \(G {\setminus } H\) with probability at most \((1-p)^{u_n} = \frac{1}{np}\) and if \({{{\mathcal {B}}}}\) is the set of all “bad" vertices in \({{{\mathcal {D}}}}^c\) not adjacent to any vertex of \({{{\mathcal {D}}}},\) then the expected size of \({{{\mathcal {B}}}}\) is at most \(\frac{1}{p} = \frac{n}{\lambda }.\) Moreover, the asymptotic relation \(|\log (1-p)| \sim p\) and \(\Delta = o(n)\) imply that \({{{\mathcal {D}}}}\) has size \(u_n + \Delta \le \left( \frac{\log {\lambda }}{\lambda } + o(1)\right) n.\) The set \({{{\mathcal {D}}}} \cup {{{\mathcal {B}}}}\) is a dominating set of \(G {\setminus } H\) and has an expected size of at most \(n\left( \frac{\log {\lambda }+1}{\lambda } + o(1)\right) \le b(\lambda )n(1+\epsilon )\) for all \(n\) large. This completes the proof of the expectation bounds in (31).

Next, to prove the variance bound in (31), we use the martingale difference method. For \(1 \le j \le n,\) let \({{{\mathcal {F}}}}_j = \sigma \left( \{Z(f): f = (u,v), 1 \le u < v \le j\}\right) \) denote the sigma field generated by the state of the edges in the complete subgraph \(K_j.\) Defining the martingale difference \(R_j:= {\mathbb {E}}(\Gamma _n \mid {{{\mathcal {F}}}}_j) - {\mathbb {E}}(\Gamma _n\mid {{{\mathcal {F}}}}_{j-1}),\) we get that \(\Gamma _n -{\mathbb {E}}\Gamma _n = \sum _{j=1}^{n} R_j.\) By the martingale property we then have

To evaluate \({\mathbb {E}}R_j^2,\) we introduce the graph \(G^{(j)}\) obtained by using independent copies for the states of all edges \((u,j), 1 \le u < j\) and retaining the same state as \(G\) for the rest of the edges. With this notation, we rewrite \(R_j = {\mathbb {E}}( \Gamma _n - \Gamma _n^{(j)} \mid {{{\mathcal {F}}}}_j),\) where \(\Gamma _n^{(j)}\) is the domination number of the graph \(G^{(j)} {\setminus } H\) and so squaring and taking expectations, we get \({\mathbb {E}}R_j^2 \le {\mathbb {E}}(\Gamma _n - \Gamma _n^{(j)})^2.\) To estimate the difference \(|\Gamma _n - \Gamma _n^{(j)}|,\) we let \({{{\mathcal {D}}}}\) be any minimum size dominating set of \(G {\setminus } H.\) Adding all vertices adjacent to the vertex \(j\) in the graph \(G^{(j)}\) to the set \({{{\mathcal {D}}}}\) gives us a dominating set of \(G^{(j)} {\setminus } H\) and so \(\Gamma _n \le \Gamma _n^{(j)} + l_j,\) where \(l_j\) is the total number of edges containing \(j\) as an endvertex either in \(G\) or \(G^{(j)}.\) By symmetry, we therefore get \(|\Gamma _n - \Gamma ^{(j)}_n| \le l_j\) and so \({\mathbb {E}}R_j^2 \le {\mathbb {E}}l^2_j.\)

The expected number of edges in \(G\) containing \(j\) as an endvertex is

\((n-1)p \le \lambda \) and so if \(E_{up}\) is the event that the vertex \(j\) is adjacent to at most \(C_1 \log {n}\) edges in \(G\) then using Chernoff bound we get for \(s > 0\) that

for some constant \(C_2= C_2(\lambda ,s) > 0.\) Setting \(s=1\) and choosing \(C_1\) large, we get that \({\mathbb {P}}(E^{c}_{up}) \le \frac{1}{n^{6}}.\) Defining an analogous event \(E_{up}^{(j)}\) for the graph \(G^{(j)},\) we get from the union bound that \(F_{up}:= E_{up} \cap E^{(j)}_{up}\) occurs with probability at least \(1-\frac{2}{n^{6}}.\) If \(F_{up}\) occurs, then \(l_j \le 2C_1 \log {n}\) and other wise, we use the bound \(l_j \le 2n.\) Combining this with the discussion in the previous paragraph, we get

for some constant \(C_3 > 0.\) Plugging this into (32) gives the variance bound in (31) and therefore completes the proof of Theorem 2\((b).\) \(\square \)

References

Alon, N. and Spencer, J. (2008). The Probabilistic Method. Wiley Interscience, 2008.

Bollobás, B. (2001). Random Graphs. Cambridge University Press.

Clark, L. and Johnson, D. (2011). The Independent Domination Number of a Random Graph. Discuss. Math. Graph Theory, 31, 129–142.

Du, H., Ding, L., Wu, W., Kim, D., Pardalos, P. M. and Willson, J. (2013). Connected Dominating Set in Wireless Networks. In: Pardalos, P., Du, DZ., Graham, R. (eds) Handbook of Combinatorial Optimization. Springer, New York.

Glebov , R., Liebenau, A. and Szabó, T. (2012). On the Concentration of the Domination Number of the Random Graph. SIAM Journal on Discrete Mathematics, 29, 1186–1206.

Wang, B. and Xiang, K. (2009). On \(k-\)tuple Domination of Random Graphs. Appl. Math. Lett., 22, 1513–1517.

Wieland, B. and Godbole, A. P. (2001). On the Domination Number of a Random Graph. Electronic Journal of Combinatorics, 8, #R37, 1–13.

Wu, J. and Li. H. (2000). Domination and its Applications in Ad Hoc Wireless Networks with Unidirectional Links. IEEE International Conference on Parallel Processing 2000, 1–9.

Zou, F., Li, X., Kim, D. and Wu, W. (2008). Construction of Minimum Connected Dominating Set in \(3-\)Dimensional Wireless Network. Wireless Algorithms, Systems and Applications, 5258, 134–140.

Acknowledgements

I thank Professors Rahul Roy, C. R. Subramanian and the referee for crucial comments that led to an improvement of the paper. I also thank IMSc and IISER Bhopal for my fellowships.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Data availibility

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Additional information

Communicated by B V Rajarama Bhat.

Appendix

Appendix

Standard Deviation Estimate: Let \(Z_i, 1 \le i \le t\) be independent Bernoulli random variables satisfying \({\mathbb {P}}(Z_i = 1) = p_i = 1-{\mathbb {P}}(Z_i = 0).\) If \(W_t = \sum _{i=1}^{t} Z_i\) and \(\mu _t = {\mathbb {E}}W_t,\) then for any \(0< \eta < \frac{1}{2}\) we have that

For a proof of (34), we refer to Corollary \(A.1.14,\) pp. \(312,\) Alon and Spencer (2008).

\( \underline{Montonicity of u_n(x)}\): The function \(u_n(x):= \frac{\log (nx)}{|\log (1-x)|}\) has a derivative

where

is the binary entropy function and logarithms are natural throughout. If \(x > \frac{1}{2},\) then \(H(x) - x\log {n} \le 1-\frac{\log {n}}{2} <0\) for all \(n \ge 4.\) The numerator \(H(x)-x\log {n}\) has derivative \(\log \left( \frac{1}{x}-1\right) - \log {n} < 0\) for all \(x > \frac{1}{n+1}.\) Thus for \(\frac{\lambda }{n}< x < \frac{1}{2}\) and \(\lambda > 1\) we use \((1-x)|\log (1-x)| <x\) to get that \(H(x)-x\log {n}\) is bounded above by

which is strictly less than zero if \(\lambda > e.\)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ganesan, G. Robust domination in random graphs. Indian J Pure Appl Math 55, 427–438 (2024). https://doi.org/10.1007/s13226-023-00374-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13226-023-00374-6