Abstract

Philosophical discussions on causal inference in medicine are stuck in dyadic camps, each defending one kind of evidence or method rather than another as best support for causal hypotheses. Whereas Evidence Based Medicine advocates the use of Randomised Controlled Trials and systematic reviews of RCTs as gold standard, philosophers of science emphasise the importance of mechanisms and their distinctive informational contribution to causal inference and assessment. Some have suggested the adoption of a pluralistic approach to causal inference, and an inductive rather than hypothetico-deductive inferential paradigm. However, these proposals deliver no clear guidelines about how such plurality of evidence sources should jointly justify hypotheses of causal associations. We here develop such guidelines by first giving a philosophical analysis of the underpinnings of Hill’s (1965) viewpoints on causality. We then put forward an evidence-amalgamation framework adopting a Bayesian net approach to model causal inference in pharmacology for the assessment of harms. Our framework accommodates a number of intuitions already expressed in the literature concerning the EBM vs. pluralist debate on causal inference, evidence hierarchies, causal holism, relevance (external validity), and reliability.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction: causal inference in pharmacology

Pharmacology blends science and technology in a very peculiar fashion. It works across levels of reality by directly intervening at the biochemical level only: whereas the direct domain of action of drug molecules is limited to protein receptors, the desired end-effects are clinically observable results. However, because the proteins with which the drug molecules interact are embedded in various, possibly interacting, biological pathways (metabolic, genetic, signal transduction), most end-effects are unpredictable.

Knowledge of these various interactions and the biological laws governing them, as well of the contingent initial conditions holding in any specific context is far from exhaustive and does not allow reliable prediction or causal inference. Hence, until recently, drug approval has mainly relied on a black-box methodology, grounded on hypothesis rejection. This paradigm, which has been mainly developed with the aim to minimise false positives in efficacy assessment (see Howick 2011; Teira2011, for an historical-philosophical overview), puts several constraints on the kind of evidence which is allowed to inform causal inference, and severely hampers the integration of heterogenous, possibly inconclusive pieces of evidence. Indeed, by giving a strong precedence to specific methods for inferring causes (such as Randomised Controlled Trials), the standard approach implicitly emphasises some indicators of causality, such as “difference making” in contrast to others, and has difficulties in incorporating evidence of different sources (e.g. spontaneous reports, case series, comparative studies of various kinds) and levels (e.g., molecular data, clinical evidence, epidemiological studies) which cannot be accommodated under this heading. Whereas this paradigm is reasonable for the purpose of avoiding fraud, by eliminating as much as possible any source of confounding and bias, it is not adequate for the purpose of minimising harms of health interventions (see Osimani and Mignini 2015).

The methodological landscape is rapidly changing though: Bayesian methods are gaining ground in statistical analysis of trials, because of their ability to optimise the use of available evidence by incorporating historical (heterogenous) knowledge in the prior, allowing diverse types of evidence to be integrated in the probability function, and by providing a probabilistic measure of the hypothesis under investigation, hence allowing decisions under uncertainty. Such statistical techniques are gaining ground especially in safety trials and trials for fast track or so called “orphan” drugs.

Systems pharmacology takes a “holistic” approach to study the effects of drugs in the organism by focusing on interrelations, rather than the components, of the mechanisms leading to intended and unintended outcomes. Computational modeling of perturbated cellular mechanisms for instance, aims to provide insights into the variability and complexity of pharmacological effects in the organ system (see for instance Britton et al. 2013). Also, data-intensive and knowledge discovery techniques put all available and drug-outcome relevant data together, in order to possibly predict the end-effect of a given drug, by reconstructing the possible routes of action from the molecular to the phenotypic level (see Abernethy and Bai 2013; Tilman and Eberhardt 2014; Xie et al. 2009). There is still considerable uncertainty as to the inferential and predictive value of these approaches, especially when they are taken on their own. However, their specific epistemic contribution could be made more valuable in combination with other sources of knowledge.

The main focus of the present paper is in fact to provide a framework for the amalgamation of diverse kinds of evidence for causal inference, which is formally and epistemologically grounded. For this, we will adopt Bovens’ and Hartmann’s (Bovens and Hartmann 2003) proposal to use Bayesian confirmation theory in order to account for (and mathematically explain) some phenomena related to scientific inference; such as the confirmatory power of the coherence of the body of evidence, the epistemic interaction of consistency of measurements and reliability of information sources, as well as the modular contribution of different “lines of evidence” related to diverse observable consequences of the investigated hypothesis. We will adapt this framework to causal inference and consequently specify a concrete structure for that purpose. We will then illustrate its epistemic and heuristic virtues as an instrument for evidence amalgamation, in the context of causal inference of drug-induced harm.

Section 2 explains in more detail why standards for efficacy and safety assessments should not be the same. Section 3 focuses on the role of causal inference in such assessments and elaborates on Bradford Hill viewpoints on causality in order to provide a list of philosophically grounded causal indicators. Section 4 presents Bayesian epistemology as a valid alternative to current standards of evidence; in particular it relies on Bovens’ and Hartmann’s mathematical representation of scientific inference and adapts it to the specific problem of inferring causality in pharmacology. Section 5 presents the details of our model and illustrates how it functions by presenting two model calculations. Section 6 spells out the main virtues of our inferential model with respect to the standard view and other proposals for synthesising evidence; furthermore it sets the stage for further developments and practical implementation.

2 Efficacy and safety assessments in pharmacology

2.1 Why standards ought not be the same

The European Parliament and the European Council have recently changed the regulation of pharmacovigilance practice (Directive 2010/84/EU; regulation (EU) No 1235/2010, entered into force in July 2012), putting a special emphasis on joint efforts for what can be considered an information-based (rather than power-based) approach to pharmaceutical risk assessment. The related guidelines encourage the integration of information coming from different sources of safety signals (spontaneous case reports, literature, data mining, pharmaco-epidemiological studies, post-marketing trials, drug utilisation studies, non-clinical studies, late-breaking information; see also Herxheimer 2012). Yet, the methodological bases for implementing such policy are still shaky in that the standards for causal assessment of adverse drug reactions (ADRs) is still parasitic on the (statistical) methods developed to test drug efficacy (see also Senn 2007; Price et al. 2014; Osimani and Mignini 2015). In Osimani and Mignini (2015), a series of reasons have been provided for adopting asymmetric standards for safety and efficacy assessments. These mainly deal with the following facts: 1) because of pragmatic as well as epistemic reasons, in the case of risk assessment there is higher concern for false negatives (failing to detect possible causes of observed effects), rather than for false positives (failing to distinguish between spurious and genuine causes). This is mainly due to the fact that the drug is developed and tested with reference to the intended effect, whereas the detection of side-effects – apart from most common ones, which can be observed also in medium-sized samples – is mainly left to the post-marketing phase; 2) also, evidence accumulating in time may strongly point to the hypothesis of causal association between drug and observed harm, without nevertheless being conclusive. Hence, instruments of probabilistic causal assessment are needed which do not demand a clear-cut rejection or acceptance of investigated hypotheses; 3) heterogeneous pieces of evidence might jointly support a given hypothesis without being individually able to allow any significant inference; hence instruments for evidence amalgamation are needed for this purpose.

2.2 The decision problem

Drug licensing bodies, such as the Food and Drug Agency in the USA or the EMA in Europe, as well as national agencies such as the Bundesinstitut für Arzneimittel und Medizinprodukte in Germany, or the Medicines and Healthcare products Regulatory Agency in the UK, regularly face the problem of whether to approve a drug for treatment or not and the problem of whether or not to let a drug further circulate in the market when its safety profile is updated through the discovery of additional risks. Indeed any given drug is always approved “with reservation” (Osimani 2007).Footnote 1 The actions taken by the drug licensing body may have wide influence on public health and public finance as well as economic success of the drug’s manufacturer and its competitors. Intuitively, the normatively right action to take is to leave the drug in the market, provided that – on the basis of the available evidence – the expected utility of not withdrawing it exceeds the utility of withdrawing it.

The precautionary principle has been introduced in the pharmaceutical domain in order to account for the uncertainty arising in cases where suspicion arises about a new harm, possibly associated with the drug, but evidence cannot conclusively point to a causal connection between them. In fact, before its introduction in the legal system (and through various international agreements related to environmental law, see Osimani 2013) no preventive measure was possible without a scientific proof of the causal connection between suspected source of damage and expected harm. This is because liability and safety regulations are grounded on a clear causal connection between the agent deemed responsible for the hazard and the hazard itself. The precautionary principle relaxes this requirement in view of the good at stakes (health and environment) and of the radical uncertainty related to the possible unintended outcomes of human interventions on nature. In such cases, a well-founded suspicion may suffice to take action (withdraw the drug or restrict its usage), and the principle of proportionality applies. This means that the probability associated with the hypothesis of causal association may be as low as the expected harm is high (with respect to the expected benefit, Osimani et al. 2011).

Hence, the decision, e.g., withdraw the drug or not, will depend on some threshold which reflects the nature of the medication, the pharmaceutical environment (i.e., the availability of alternative treatments for the same condition), policy and ethical dimensions, as well as the perceived acceptability of the risk.

In order to avoid commitment (for the moment) to any of the many notions of causation offered by the philosophical-methodological literatureFootnote 2 we use the formula: DⒸH in order to express the proposition: “D causes H”, sometimes abbreviated by Ⓒ when no ambiguities arise. Hence, by adopting the classical cost-effectiveness analysis formula, we can infer the probability threshold for causality p ∗, at which the expected utility of withdrawing equals the expected utility of keeping the drug in the market.

Let w stand for the act of withdrawing the drug D from the market while ¬w stands for not withdrawing D. The utility of (not) withdrawing given that Ⓒ or ¬Ⓒ holds is denoted by the two-place utility function U. At the probability threshold p ∗, the expected utility for withdrawing the drug equals the expected utility for not withdrawing it. p ∗ can hence be obtained by solving

for p ∗. Therefore, we can find p ∗ to be determined by the utilities

If the degree of belief in Ⓒ is strictly greater than p ∗, then the normatively correct decision is to withdraw the drug from the market. If the degree of belief is strictly less than p ∗, then the normatively correct decision is to keep the drug in the market. Hence, p ∗ partitions the continuum of degrees of belief between the two alternative hypotheses (Ⓒ and ¬Ⓒ) into two intervals, see Fig. 1.

There is a fact of the matter: either Ⓒ is true or the opposite holds. So, in order to make the best decision it is necessary and sufficient to adopt degrees of belief which fall in the interval between p ∗ and the truth value of Ⓒ – where a truth value of 1 stands for ‘true’ and a truth value of 0 stands for ‘false’. Therefore, p ∗ allows for a certain margin of error; however, the chances to fall into the right interval get higher and higher the more evidence one takes into account: the more one “samples” from reality, the closer one’s beliefs get to the truth (Edwards et al. 1963).Footnote 3

A straightforward consequence of this state of affairs is that there is a need of instruments which allow a probabilistic assessment of the suspected causal link between drug and side-effect, by taking into account all available evidence at the time of decision. In particular, four desiderata are essential for a framework of causal assessment of drug induced harm:

-

1.

It must allow for probabilistic hypothesis confirmation.

-

2.

It must be able to incorporate heterogeneous kinds of data.

-

3.

It must be able to integrate diverse types of inferential patterns, in order to optmise the epistemic import of available evidence.

-

4.

The framework should be particularly focused on causal assessment in pharmacology and therefore consider the specific issues which arise in this context.

In this paper, we focus exclusively on how to develop a framework of this kind. The problems of i) how to determine the expected utilities of these harms and ii) how to determine the expected utility of benefits are outside the scope of this paper.

3 A framework for the assessment of harms in pharmacology, part 1: relata

In the following, we present our approach to causal inference for drug harms based on a formal Bayesian model of scientific inference. Our approach 1) identifies possible indicators of causality (observable consequences of the causal hypothesis) on the basis of the methodological and philosophical literature on causality, evidence, and causal inference; 2) embeds them in a topological framework of probabilistic dependencies and independencies grounded in assumptions regarding their reciprocal epistemic interconnections; 3) weakly orders some of these probabilistic dependencies as a function of their inferential strength with respect to the confirmation of causal hypotheses. Furthermore, the developed model is used to illustrate possible epistemic dynamics related to the interactions of its various components.

3.1 Causal indicators

In a much quoted paper devoted to causal assessment of environmental hazards (Hill 1965), the epidemiologist Sir Austin Bradford Hill identifies nine “viewpoints” which help detecting possible causes of observed risks. Hill does not consider these “viewpoints” (neither individually nor jointly) to provide sufficient and necessary conditions for causality, but they should “help us to make up our minds on the fundamental question – is there any other way of explaining the set of facts before us, is there any other answer equally, or more, likely than cause and effect?”, see Hill (1965, p. 299). The nine “viewpoints” are the following: 1) strength of the association; 2) consistency; 3) specificity; 4) temporality; 5) biological gradient; 6) plausibility; 7) coherence; 8) experiment; 9) analogy.

-

1.

Strength of the association refers to the observed relationship between a candidate cause and the putative risk: how much the former contributes to the latter, measured for instance by the ratio of relevant outcomes between exposed and unexposed group, or by regression coefficients. Particularly, Hill emphasises that although this kind of information may be causally opaque because of possible confounders, still causation may be conceded whenever such possible confounders cannot be reasonably identified (on grounds that one can use this as a heuristic basis for excluding them).

-

2.

Consistency refers both to the convergence of the observed results in different study settings and across different methods, and to the stability of the association across different background conditions and circumstances. The former kind of consistency may be also referred to as methodological robustness, in that it is meant to provide a warrant that the observed results have not been produced by the studies themselves, and thereby exclude the possibility that they are a study artifact. The latter instead refers to the association holding in different contexts/ kinds of populations/background conditions, and is also known as “ontological robustness” (Wimsatt 1981, 2012).

-

3.

Specificity refers to ideally one-to-one relationships between specific sources of hazards and specific kinds of harms. Hill makes for instance the example of different kinds of cancer sites (lung or nose vs. scrotum cancer) which specific populations (nickel refiners vs. chimney sweepers) are more likely to contract, depending on the kinds of chemicals they are exposed to.

-

4.

Temporality. Excluding theoretical cases of backward causation, causes come before their effects. Hence, one would be inclined to infer causality when both a statistical association is present and the putative cause is observed to come prior to the effect. However, observational studies cannot guarantee perfect information on temporal order, since generally both the exposure to risk factors and the development of disease extend through time; therefore attention should be paid to possible confounding factors and reverse causation.

-

5.

Biological gradient refers to what is also called dose-response curve: an observed systematic relationship between the exposure and the strength of the observed effect: “For instance, the fact that the death rate from cancer of the lung rises linearly with the number of cigarettes smoked daily, adds a very great deal to the simpler evidence that cigarette smokers have a higher death rate than non-smokers”, see Hill (1965, p. 298).

-

6.

Biological plausibility refers to the fact that knowledge of molecular mechanisms should also be considered, in addition to statistical knowledge, when assessing causal associations. On the other hand, causal association should not be dismissed on the grounds that the hypothesised mechanisms are implausible, since implausibility is relative to the state of the art, and this might be overturned by strong evidence to the contrary: “What is biologically plausible depends upon the biological knowledge of the day”, see Hill (1965, p. 298).

-

7.

Coherence relates to how well the different pieces of evidence fit together. For instance, when evidence from animal studies and epidemiological studies point to the same hypothesis. Coherence also relates to how well the hypothesis fits with background knowledge: “the cause and effect interpretation of our data should not seriously conflict with the generally known facts of the natural history and biology of the disease”, see Hill (1965, p. 298). However, lack of coherence among the different kinds of studies, cannot totally nullify their evidential value.

-

8.

Experimental evidence, if available, allows the scientist to isolate the factor under investigation from other possible confounders, and thereby provides the strongest support for causal hypotheses according to Hill and the epidemiological canon in general.

-

9.

Reasoning by analogy is an additional source of potentially relevant information for the causal claim at hand. Hill only briefly mentions analogy as providing further support for the hypothesis under investigation.

Hill concedes that he is not providing a theoretical justification for his list of categories, but interestingly, his points of view on causality reflect many of the criteria discussed in the philosophical literature for several decades now. We shall go on to systematically gather philosophical support for Hill’s list in the next section.

3.2 Philosophical underpinnings of various indicators of causality

In the following, we discuss the rationales which epistemologically underpin Hill’s viewpoints on causality by appealing to the philosophical literature, and derive our list of indicators of causality for our formal framework. While there may be further indicators of causality (in pharmacology), we think that those presented below are the most pertinent ones discussed in the philosophical literature.Footnote 4 Figure 2 presents a mapping from Hill’s list onto our framework which will be discussed in detail below. Not every viewpoint is mapped onto an indicator of causality in our sense – e.g., we locate analogy among the set of inferential patterns. The remainder of Section 3.2 is aligned with Hill’s list, cf. Fig. 2.

3.2.1 Strength of association

Observed strength of association may mean different things. In biological systems, causes bring about putative effects, if specific combinations of background conditions hold and possibly hindering factors are absent. In contrast to causes which hold on a broad spectrum of background conditions, causes whose backgrounds conditions are rarely met – or whose hindering factors are common – will produce small effects in the treatment arm. Hence the effect-size reflects this kind of phenomenon, which philosophers have referred to as “stability” or “invariance”, or “insensitivity” or “non-contingency” (Pearl 2000; Woodward 2003). This means that the effect size may reflect the relative non-contingency of the causal effect (independence from “supporting factors”: Cartwright and Stegenga 2011; Stegenga 2015), rather than its causative force.

Woodward (2003, 2010) introduces stability as a feature of counterfactual dependence between putative cause and effect: the causal relationship remains intact under changes in the environment, and the severity of the changes “tests” the stability of the relationship – the more stable, the less sensitive to the specification of background parameters. Stability may indeed be considered as an indicator of causality in that it manifests the non-contingent relationship between the observed effect and the putative cause: in a certain sense it is an attenuated version of the necessity condition inherent to the pre-Humean conception of causality. Woodward as well as Pearl (Pearl 2000; Woodward 2003) consider stability as an essential quality of causal relations: the more unstable a relation is observed to be, the higher the likelihood that it is not genuinely causal at all in the end.

Strength of the association is also a function of how much the putative effect changes upon changes of the putative cause. This is measured for instance by the (regression) coefficient. In this respect, strength refers to the functional relationship itself, independently of its degree of universality. We will refer to a strong association in this sense as having a “high rate of growth”.

Related to the rate of growth is also the “dose-response relationship”. This states whether a systematic relation between changes in one variable and changes in the other holds in the first place. The existence of a dose-response model is an important indicator of causality especially because the detection of a clear dose-response curve (e.g., a logarithmic relation between treatment or exposure and observed effect values with small error terms) is best explained by positing a truly ontological influence structure along Reichenbach’s principle: The variables are either directly causally related or correlated due to the presence of a common cause.Footnote 5

Dose-response relationship and (high) rate of growth are epistemically related not only because the latter conceptually subsumes the former, but also because the higher the rate of growth, the more likely it is that both will be detected in observational or experimental studies. This is because, if the rate of growth is high, then a relationship between putative cause x and effect y (x and y are continuous variables) is more likely to be detected even in small samples/less favourable background conditions. In sum, the presence of a dose-response relationship d y/d x ≠ 0 (for most x in the domain) suggests that there is a systematic relationship between the putative cause and the effect; the rate of growth says how strong this relationship is, e.g, how much d y/d x departs from 0. Figure 3 contrasts two exemplary rates of growth (high in graph 1a and low in graph 1b) with concrete dose-response curves (graphs 2a, 2b, and 2c). The dose-response relationship can be linear or – as illustrated in the example – nonlinear (in which case it can be monotonic or non-monotonic).Footnote 6

The strength of association may also be measured by “probabilistic dependence”. In comparison to rate of growth and the dose-response gradient, this may be a less informative indicator, in that it need not provide any information as to the functional form of the relationship between cause and effect, but merely denotes the presence of an asymmetry in the conditional distribution of the two variables.

In our system we distinguish between “rate of growth”, “dose-response relationship” and “probabilistic dependence” as different indicators of causality corresponding to Hill’s “strength of association”.

We also introduce “difference making” as a perfect indicator of causality: this is to be inferred either through experiment or through “intervention” as intended in the causal graphs literature (Pearl 2000; Woodward 2003) (see Section 5.2).

The main distinction between the three former indicators and the latter one is that difference making represents an asymmetric relationship (it is about what makes a difference to what), whereas the various forms of strength of associations are all symmetric in principle, and therefore cannot provide straightforward information about the direction of the relationship. This also follows from the very same reason why dose-response relationship, rate of growth and probabilistic dependency are only imperfect indicators of causality, whereas difference making is a perfect one (more on this below in Section 3.2.6, Page 19, and Section 5.2).

3.2.2 Consistency

Hill’s second viewpoint “consistency”, also refers to stability in that it centers on the question: “Has [the association] been repeatedly observed by different persons, in different places, circumstances and times?” (emphasis added). However, by referring to repeated observations, consistency also relates to replication of studies with identical methods, or in (systematically) varied study settings.

Indeed, as for any other empirical science, clinical trials and epidemiological studies cannot test a given hypothesis but in highly contingent study settings, together with its theoretical/methodological assumptions, and ceteris paribus clauses. Hence, systematic variation of study design and setting serves to provide evidence that the result is not an artifact of the particular circumstances in which a given study has been carried out, or of the particular method, or theoretical model adopted, and related assumptions (see also Kuorikoski et al. 2010). Hence the role of consistency should be distinguished along the following lines (see also Table 1):

-

1.

Replication of (ideally) identical studies (same “background conditions” – same inclusion and exclusion criteria, mode of administration/exposure, etc. – and same design, e.g., cohort study, RCT, etc.): this is a means to increase accuracy of measurement.

-

2.

Replication of the observation through different methods, but analogous background conditions: this should test the results against the suspicion of being created by the specific study design/setting (“study artifacts”) and guarantee “methodological robustness”.

-

3.

Replication of the observation through similar methods, but in different background conditions: this should test the stability of the causal link itself in different populations/circumstances and show the extent to which it is “ontologically robust” (Wimsatt 2012; Open Science Collaboration 2015; Meehl 1990; Woodward 2006).

Meehl (1990) represents the logical structure of theory testing as follows:

Where T represents the theory to be tested, A t its ancillary assumption, C p denotes the ceteris paribus clauses, A i the methodological assumption of the specific study design used, and C n the specific and absolutely contingent conditions of a given individual experiment. The arrow denotes entailment and the horseshoe ⊃ between O 1 and O 2 material entailment. So, the left-hand conjunction is falsified, modus tollens, if you observe (O 1,¬O 2), instead, observing (O 1,O 2) provides inductive support to it.

Study replication of the first kind (1) serves the purpose of verifying whether the results, positive or negative, are due to the contingent settings of the individual study (C n ); systematic variation of experimental/observational settings (2) is meant to identify the influence of the methodological settings (A i ). Replication (3) allows one to test the possible violation of ceteris paribus clauses (C p ); theory-related ancillary assumptions (A t ), and any testable consequence of the theory itself (T). Hence, the confirmatory role of replication consists in enhancing the probability of the theory T by decreasing the probability that the evidence provided by the study for it is instead due to A t , C p , A i , or C n .

In the specific context of causal inference by means of clinical or epidemiolgical studies, the role of replication and systematic study variation can analogously be related to the various components of scientific inference. So, T may represent the hypothesis of the causal connection itself; A t the ancillary assumptions, C P , the “everything else being equal” clause in relation to the investigated drug, that is, the possible interacting factors such as age, sex, co-morbidity etc., A i represents the methodological assumptions, that is those aspects of the study design which make a difference to the result and its interpretation with respect to other kinds of designs (e.g., a cohort study with respect to a case-control study); and C n represents the contingent circumstances and conditions of any given study.

Consequently, from a confirmatory point of view, consistency across studies may mean very different things depending on whether such studies share the same design, the same kind of population or both.

In our framework, consideration of the confirmatory value of consistency is incorporated in the general structure of the network, where multiple reports for the same indicator may be related by the same reliability or relevance nodes if they share the same methodology or background conditions respectively.

3.2.3 Specificity: quantitative and qualitative versions

Specificity also refers to diverse phenomena. The traditional concept of specificity (following Hill) approximates the classic conception of a cause as a necessary and sufficient condition for its effect to occur, in contrast to the possibility of it being produced by other candidate causes. In particular, specificity is interesting when considered as an (ideally) bijective function holding between sets of cause classes and sets of effect classes; for instance when various kinds of a class of toxic agents are related to various kinds of cancers through biunivocal relationships.

Specificity as a property of causality has been discussed by Lewis (2000), Waters (2007), and Woodward (2010) as the sort of functional relationship which systematically holds between the values of a variable and the values of another variable, such that changing the value of the former in specific ways also changes the values of the latter in specific ways, ideally in a bijective fashion. Lewis uses for this kind of causal relationship the term “influence” and describes it as follows: “C will influence E to the extent that by varying the state of C [...] we can vary the state of E in a fine-grained way” (Lewis 2000, p. 305).Footnote 7 In itself, an ideal bi-conditional association with no residual instantiations of Cs not accompanied by Es, or vice versa, would not guarantee causation: specificity is not an exclusive property of causation (also conventional codes are specific in this sense, see Osimani 2014a; Kment 2010, p. 83). However, it is very unlikely that such bi-conditional relationships can occur by chance alone, therefore, specificity may be considered as an indicator of a causal association being present, in that it manifests an underlying robust relationship, which explains the systematic correspondence between the two relata.

Another, related notion of specificity refers to the “geometrical complementarity” on which many biological phenomena are based; such as the key-hole relationship between antigens and antibodies, or between target receptors and drug molecules (see Weber 2006). This kind of specificity is relevant to causal assessment and prediction in pharmacology for methods (such as so called “systems pharmacology”), that try to infer the possible effects of drugs by identifying the sets of possible families of receptors – and related proteins – which a certain drug molecule could bind to on grounds of its “affinity” with them. Indeed, affinity is a function of stereometric properties (structural and biological similarity); see for instance Xie et al. (2009). This kind of specificity pertains to mechanistic reasoning, hence it will be considered insofar as it is used to glean causal evidence from knowledge about mechanisms (see below, biological plausibility: evidence of mechanisms, Section 3.2.6).

Specificity and stability are independent concepts: a causal relationship may be at the same time highly specific and unstable (e.g. multifactorial genetic diseases), or it may be highly stable but show a low degree of specificity (e.g. intestinal inflammation caused by various kinds of antibiotics).

Neither stability nor specificity will be used in our framework to distinguish different types of causes, but rather as signs of the presence of a causal connection. In our framework, specificity is encoded as difference-making since those very studies aiming at detecting causal efficacy of a drug under investigation also yield information about the variance of the effect under different tests.Footnote 8

3.2.4 Temporal order, distance, and duration

In his question “which is the cart and which the horse?”, Hill addresses the aspect of temporal precedence, seen as one of the most important markers of causality. The importance of this criterion is mirrored by the fact that many theorists of causality consider it a necessary prerequisite, to postulate alignment of the causal and the temporal direction, or even explicitly incorporate it in their formal framework.Footnote 9 For instance, Suppes (1970) goes beyond Reichenbach’s common cause principle in explicitly building on the direction of time in his probabilistic definition of causality: an event genuinely causes a subsequent second event if it is identified as a “prima facie cause” – i.e., it precedes the effect and raises its probability – and guaranteed not to be an instance of “spurious causation”. Hence, although temporal precedence is a necessary condition for causality, it is not sufficient for it because of the possibility of confounding.

The methodological literature speaks about “reverse causation” in cases where precedence of time, together with statistical association, might give the false impression that the preceding phenomenon causes the succeeding one, whereas the contrary holds. In epidemiology, this is mainly due to issues related to duration and manifestation in time of causal phenomena.Footnote 10

For example, the recent debate around the causal association between paracetamol and asthma centers around the possibility that cohort studies showing a statistical association between the drug and the disease, may not warrant a causal conclusion, notwithstanding the temporal precedence of paracetamol consumption with respect to disease inception, because subjects affected by asthma in its subclinical phase (i.e., when it has not been diagnosed yet) have a higher than average tendency to develop colds, fever or rhynitis, and therefore to take more paracetamol than the unaffected population (Heintze and Petersen 2013; Weatherall et al. 2014). Figure 4, replicated from Heintze and Petersen (2013), shows the potential common cause structure explaining the association between the use of antibiotics or paracetamol and later wheezing or bronchial asthma.

Confounding by indication in the case of the association between antibiotics and asthma (cf. Heintze 2013, p. 1205, Figure 4). Where the upper structure contains a direct causal link, the second structure includes a third and earlier factor, respiratory infection, triggering both the prescription of drugs and what turns into wheezing or asthma at a later time

In our framework, temporality will be expressed straightforwardly by a temporality variable to sum up the above distinctive criteria.

3.2.5 Biological gradients and dose-response models

Related to specificity as influence is also the dose-response relationship, where a clear pattern of quantitative dependency manifests. Quite in line with Lewis’ idea of fine-grained relevance as a characteristic feature of causation, Hill sees it as a strong indicator of a causal relation if an investigation reveals a biological gradient, in that a clear dose-response curve admits a simple explanation.

In analogy to the strength of association indicator, also the biological gradients splits up into the three dimensions “rate of growth”, “dose-response relationship”, and “probabilistic dependence”.

3.2.6 Inferential patterns

3.2.7 Plausibility of the Biological Mechanism

The role of evidence about possible/plausible/actual mechanisms (Machamer et al. 2000; Darden 2006; Craver 2007) linking the putative cause to its phenotypic effect is strongly debated in philosophy, especially in relation to evidence standards. Philosophers closer to the Evidence Based Medicine approach, even in recognising some value to knowledge about mechanisms, still doubt that they can complement statistical black-box evidence because of the limited and fragmentary knowledge of the “causal web” in which they are embedded (see for instance Howick 2011). Other philosophers instead generally recognise that knowledge about mechanisms plays a plurality of roles both in combination with statistical information and in a stand-alone fashion:

-

1.

Following the philosophical analysis of causal explanation, the most traditional epistemic role assigned to knowledge about mechanisms is to provide the ontological rationale for observed regularities (Salmon 1984).

-

2.

Knowledge about mechanisms can also constitute a sort of double check for causality (Salmon 1997; Russo and Williamson 2007; Clarke et al. 2014).

-

3.

Mechanisms have a methodological relevance in that they are supposed to provide the basis for extrapolation (Cartwright 2007b; Luján et al. 2016), and are important for supporting the reliability of model assumptions as well as for interpreting experiment results (for instance a two-way curvilinear causal interaction cannot be detected or may be misinterpreted by linear regression models).

-

4.

Finally, mechanisms are held to have an epistemological/theoretical relevance, in that they can provide the hypothesis which puts together disparate data (through abductive inference). In this sense they provide the basis for the accumulation of knowledge and scientific progress (see also Craver 2007).

In the epidemiological literature, especially in narrative reviews, it is typical to combine evidence about mechanisms with statistical evidence at the population level, as an argumentative move in favour of causal hypotheses which are only suggested by statistical associations. For instance, going back to our debate about the possible causal association between paracetamol and asthma, a series of reviews (Shaheen et al. 2000, 2008; Katayoun 2005; Henderson and Shaheen 2013; Allmers et al. 2009; McBride 2011; Heintze 2013; Martinez-Gimeno and García-Marcos 2013) present both population-level studies as well as evidence of molecular and cellular mechanisms from bio-essay or animal studies showing plausible biological pathways leading from consumption of paracetamol to the development of hyper-responsive reactions and asthma, see Osimani (2014b). This latter evidence is meant to provide support for the “physical” connection, if the observed statistical association was due to a causal association.Footnote 11 In our framework this dimension will be mapped onto the indicator for mechanisms.

The plausibility part of the “plausibility of mechanisms” indicator refers to the general fit of the hypothesised mechanisms to available background knowledge and this leads us directly to the subsequent “viewpoint” on causality (as Hill would call it), namely: “coherence of evidence”. Neither coherence of evidence nor the subsequent viewpoints listed by Hill (“experiment” and “analogy”) are strictly speaking indicators of the presence of causal relationships themselves. Rather, they refer to the inferential/methodological process itself, and they appeal to particular methods (experimental), or kinds of reasoning (“analogy”), or theoretical/epistemological virtues (“coherence”) which may be adopted to “optimise causal inference”.

3.2.8 Coherent evidence

Coherence is a property of the body of evidence, rather than of the phenomenon under investigation (here, the causal link between drug and side effect). Hence, coherence may involve the concept of consistency seen above and therefore denote 1) a property of a set of measurements, related to the same investigated parameter, both in the sense that they all indicate a positive (or a negative) effect, and in the sense that the strength of the effect size does not exceed statistical variability across studies – this sort of coherence is generally referred to as consistency of results, and is established through replication; 2) a property of a set of various pieces of evidence related to the same testable consequence of the hypothesis, investigated through diverse kinds of methods: methodological robustness or “triangulation” (see Wimsatt 1981; Wimsatt et al. 2012); 3) a property of a set of pieces of evidence related to diverse testable consequences of the investigated hypothesis. This latter sense is the less investigated and accounted for in the methodological literature.

In the epidemiological literature, consistency and coherence are not explicitly distinguished and evaluation of the latter is left to informal/implicit judgment in narrative reviews. Instead, the philosophical literature has investigated coherence in several respects: 1) general epistemology offers “coherentism” as a response to skepticism in alternative to “foundationalism”: according to this view beliefs are justified by their fitting together in a system, and standing in a relation of mutual support (BonJour 2010), like the stones of an arc (simul stabunt, simul cadent); 2) formal epistemology has investigated the confirmatory value of coherence of beliefs, also by developing various measures of coherence, in the attempt both to formalise its content and to track its truth-conduciveness; see for example Crupi and Tentori (2014), Fitelson (2003), Bovens and Hartmann (2003), Dietrich and Moretti (2005), and Moretti (2007).

As a theoretical virtue, coherence is particularly relevant in a context, such as risk assessment, where evidence may come from different sources and relate to diverse levels/dimensions of the suspected causal association between drug and side-effects.

We adopt and adapt such accounts of coherence in order to develop a system of evidence amalgamation which naturally incorporates coherence as confirmatory, by virtue of the way the system lets heterogeneous pieces of evidence interact and thereby jointly contribute to the (dis-)confirmation of the investigated hypothesis.

3.2.9 Support by experiment

The scientific method strongly relies on systematic observation and experiment. In particular, carefully controlled experiments are considered a privileged way to inquire nature, in that they ideally allow the scientist to isolate the phenomenon under investigation from interfering and disturbance factors. More specifically, the systematic variation of experimental conditions recalls Mills method of eliminative induction (Mill 1884), in that it allows to see the behaviour of the studied phenomenon in combinatorial rearrangement of different circumstances, encoding both methodological and theoretical assumptions (different “possible worlds”).

However, traditional experiments in physics are very different from experiments in biology, pharmacology, and medicine (but also sociology and psychology). In physics, the experiment is meant to test a theory by comparing the observed value and the value predicted by the theory: statistical significance speaks directly for the theory in that it reflects a small discordance between the two values. In the latter sciences instead, the tendency has prevailed to test the null hypothesis, that is, the catch-all complement of the claim that the experiment is supposed to provide evidence for (see also Meehl 1990).

This has raised various criticisms both addressed against the methodology of hypothesis testing itself (see for instance Howson and Urbach 2006) as well as concerning the epistemic value of randomised controlled trials in medicine (Cartwright 2007a; Papineau 1993; Worrall 2007b, 2010; Teira 2011; La Caze et al. 2012). In the latter case, the charge involves the alleged epistemic virtues of randomisation:Footnote 12 the exclusive reliance on RCTs for the justification of causal claims is considered to be wrongheaded for various reasons; both related to the kind of information which they provide, and to the kind of information which they are not able to incorporate or account for.

Indeed, whereas classical experiments in physics allow the scientist to observe the behaviour of the investigated phenomenon in an array of different “possible worlds” (in different scenarios or under different initial or boundary conditions), and compare systematic differences among such situations, randomisation is blind to such specific settings. Its outcome is rather to neutralise their effects on the final result, by creating two populations, one for the treatment and one for the control group, where the same ”worlds” should be represented in the same proportion. This should guarantee that the different results possibly observed at the end of the trial are due to the treatment and only to it.

Hence randomisation, as a means to isolate the putative cause from other possible confounding factors, loses much information with regard to the specification of possibly relevant mediating and interacting causes. However, it provides a much higher guarantee with respect to confounding than observational studies do. Ceteris paribus, RCTs fare better in distinguishing spurious from genuine causes.

Since Rubin (1974), the standard conceptualisation of causal claims resulting from RCTs (and comparative studies) is counterfactual: the “causal effect” is the difference between what would have happened to the subject, had it been exposed to the treatment and what would have happened to it, had it been exposed to the control. Since the subject cannot undergo the same experimental conditions at the same time, the causal effect is calculated as the average difference of the effects observed in the group of exposed and the group of unexposed subjects.Footnote 13

Causality has indeed been analysed in terms of counterfactuals in several respects. Lewis (1973, 1986, 2000) proposes a possible-worlds semantics for the truth conditions of individual causal claims in terms of counterfactual dependence; Woodward (2003) identifies necessary and sufficient conditions for causality in terms of invariance under intervention, where his notion of intervention captures the counterfactual gist of causal graphs and structural equation modelling. Finally, Pearl (2000) is focused on counterfactuals related to potential effects of interventions, and relies on causal knowledge to predict the effect of such interventions (e.g., policy interventions). Hence, counterfactuals have different roles in analysing causal claims, defining causality or using causal knowledge for predicting the effect of interventions. However, they share the intuition that a cause must make some difference to whether the effects occur or not (holding other variables fixed).

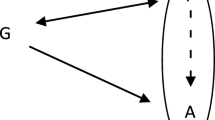

In our framework we identify RCTs as particularly reliable sources of evidence for the difference-making effect attributed to causes by the philosophical literature; and we understand difference-making as ideal controlled variance along the concept of intervention in manipulationist theories of causation. For example, Woodward (2003) carefully characterises intervention variables for the purpose of testing the hypothesised causal connection between two events X and Y. Figure 5 illustrates the idea: If the unknown underlying causal connections form a fork (first graph in Fig. 5), the introduction of a suitably chosen intervention variable I will lift X from the influence of X and Y’s common cause and refute the hypothesis that X is a cause of Y (second graph in Fig. 5). Nevertheless, if I is not chosen well it might indeed lift X from its parents’ influence but introduce a new dependency between X and Y, leading to the false conclusion that X causally influences Y (third graph in Fig. 5). In contrast to Woodward, Pearl (2000) expresses interventions as local surgeries in the causal model, subjecting X to ideal (external) control by directly setting its value (qua d o(x)), thereby abstracting from the possibility of finding a suitable intervention variable. X is then called a cause of Y if Y’s value can be varied by varying X (possibly upon controlling for additional variables in the given situation). We see our concept of difference-making as closely related to Pearl’s definition of what it means to be a cause, and accordingly, difference-making will be a perfect indicator of causation (also see below, Section 5.2).

Interventions can be used to test the underlying causal structure and learn more about the relation between event X and Y. Woodward’s interventions require an additional, suitably defined intervention variable I (overriding causal influence on X, marked by dotted edges), whereas Pearl’s interventions are expressed as local surgeries in the causal graph

3.2.10 Support by analogy

Hill briefly mentions reasoning by analogy as additionally contributing to the assessment of the causal claim: if a specific drug is on trial, available evidence of a similar second drug’s effects might be used for inference about the former. This touches upon central and notorious epistemological questions: What does it mean to be sufficiently similar in the case under consideration? In what way does the difference between the first and the second drug influence changes in expected outcome values? How specific are a drug’s properties? If they are highly specific – to what extent can this drug be used in an analogical argument, if at all? Although similarity seems to be a concept difficult to spell out in formal terms,Footnote 14 the applicability and fruitfulness of parallel reasoning is of great interest (see Bartha 2010 and Hesse 1959), and analogical arguments are employed across disciplines.

Physicists, e.g., transfer abstract structures of analogue reasoning to analogue simulations with which physical systems (presumedly similar in all relevant aspects) are tested under syntactic isomorphism (see, e.g., Unruh 2008; Dardashti et al. 2016; Hesse 1952 for discussions of analogue arguments in physics). Scientific discovery is oftentimes propelled by analogy, as, e.g., in the nineteenth century, when secured knowledge about acoustics was employed in the discovery of spectral lines. Guided by the image of a harmonic oscillator, physicists were able to focus their attention to groups of spectral lines with specific frequency patterns from the beginning (see Bartha’s in-depth overview of analogical arguments in Bartha 2013). When coupled with a suitable theory of confirmation, analogy can finally be used to support a scientific hypothesis where only evidence from an analogue system is obtained (see, e.g., Hesse 1964 or Poellinger and Beebe 2017). This is of special interest for our purposes in this paper.

In pharmacology and epidemiology, explanation and prediction by analogy rest both on sufficiently well-described background conditions and knowledge about the relevant biological mechanisms at work. Describing all relevant differences between two drugs might be the first step towards justifying assessment by analogy – the second step might then be inference in a unified model where all the relevant differences are integrated as parameters. Formal models relating different pieces of evidence can precisely be of help for this task. Once relevant influences are distinguished from irrelevant ones and the contribution of differences in the relevant factors are determined, analogy will justifiedly help in identifying causation.

Reasoning by analogy is also at the basis of inductive inferences from study to target population. Indeed, because of the context sensitivity of many causal associations in the biological realm, these can hold only in specific populations, and therefore evidence about causal effects related to one population may not license similar conclusions about another population, unless the two population are analogous.

Our framework can be operationalised for the assessment of support by analogy in two ways:

-

1.

The investigated causal hypothesis DⒸH (with all of its sub-structure) may be related to a second causal hypothesis D ′ⒸH ′ which has already been confirmed in specific previous studies. Now, if scientists have sufficient reason to propose an analogy relation between D and D ′ (e.g., a high degree of chemical or functional similarity), knowledge about this second causal hypothesis supports the first hypothesis DⒸH via analogy (“horizontally” on the same level of investigation).

-

2.

Since in general no piece of evidence comes from an experiment or a study conducted on the target population, the question of applicability of the study’s findings must be phrased in terms of the similarity between study and target populations: If study and target populations are sufficiently similar, researchers are licensed to reason about causal links in the target population by analogy with their test cases. The degree to which this kind of transfer is licensed is encoded in our framework as an attribute of available reports (see Section 3.3 below for a discussion of the relevance of evidence and how it “vertically” influences the assessment of the causal hypothesis).

Relativising the justification of analogue reasoning to a (comparative or numerical) distance measure (i.e., the similarity between drugs or populations) might of course be criticised due to the perspectival nature of similarity and relevance. Nevertheless, once this measure is agreed to be of sufficient strength, our framework can be used to express the confirmatory dynamics of support by analogy.

3.3 Relevance and reliability

In the literature on (the philosophy or even science of) evidence, we find the idea that studying the concept of “dimensions” of items of evidence can help address the notoriously tricky notion of “weight of evidence” (Weed 2005). Schum put forward a substance-blind classification of inferential power of evidence employing the dimensions: “credibility” and “relevance”, see Schum (2011). To score an item of evidence on these dimensions one needs to answer the following questions “Can one believe the reported evidence?” and “How does an item of evidence bear upon the proposition of interest?”.

In the same line, Roush argues that good evidence for the user has to be “credible” and “relevant” (see Roush 2005, Chapter 5).

In Cartwright (2008) and Cartwright and Stegenga (2011), Cartwright and Stegenga shift the focus to the user of evidence (policy or decision maker). With this move they make room for a more pragmatic notion of relevance which refers not only to the confirmatory force of the evidence with respect to the hypothesis under investigation, but also to whether the evidence acquired in the study may license the same conclusion in domains/populations which may differ from the study sample (external validity, extrapolation). We clearly demarcate these distinct notions of relevance by adopting a “relevance dimension”, which refers to external validity issues, as a notion separate from the standard Bayesian relevance (as paradigmatically measured by probabilistic dependence). Also we distinguish these from the reliability dimension.

Relevance Ideally, pharmacological studies would license the same inferences for the studied population and the target population of future drug users. In reality, studies are not conducted on the entire population of future drug users but on a much smaller number of patients, see Chan and Altman (2005, p. 1180), Button et al. (2013) for this problem in neuro science, Doll and Peto (1980) in cancer research and a philosophical discussion of this problem in Worrall (2007a, p. 992). Additionally, studied populations, in particular in RCTs, often fail to be representative for the target population due to strict patient inclusion criteria, see Revicki and Frank (1999) and Upshur (1995, p. 483). Therefore, there is a need to reason by analogy from the studied population to the population of interest (see Section 3.2.6).Footnote 15 The relevance pertaining to an item of evidence measures how well the observed results in a study population can be transferred to the target population of future drug users.

Reliability The “credibility” dimension of evidence relates to the source which originates it and the way it has been collected. Bovens & Hartmann, see (Bovens and Hartmann 2003, Chapter 4), elaborate on “reliability” as an instrument for Bayesian hypothesis confirmation where it is construed as i) a function of an instrument’s accuracy or ii) the credibility of testimony.

The sums at stake in drug licensing decision problems are enormous (revenues of successful drugs can reach billions of dollars within a few years) it is hence not surprising that vested interests may influence the flow of information. A point in case for vested interests to playing an inglorious role is the story of “Vioxx”, see Carné and Cruz (2005), Horton (2004), Jüni et al. (2004), Krumholz et al. (2007), and Holman and Bruner (2015). The abstract worry that financial conflicts of interests lead to a “bias in the synthesis and interpretation of scientific evidence” seems to be not merely abstract, see Bes-Rastrollo et al. (2013) and Dunn et al. (2014). A low reliability of an item of evidence may be due to a number of methodological flaws (confounding, biased studies, more broadly: poor design of a study, sub-optimal data recording) as well as not fully objective sources.

In our framework we shall use variables for every piece of evidence. To every such variable two further variables pertain, a variable for the relevance and a variable for the reliability.

4 Modeling scientific inference in Bayesian epistemology

4.1 The Bayesian paradigm

Bayesian epistemology is a current paradigm in the philosophy of science, see for example Howson and Urbach (2006) and Weisberg (2015). It has two virtues which are key for us. Firstly, it complies with Carnap’s principle of total evidence (Carnap 1947) which demands that all available evidence is taken into account when determining probabilities. This is achieved by updating prior probabilities by the evidence propositions yielding posterior probabilities.

Secondly, it allows for a nuanced assessment of hypotheses after incorporating all available evidence into the posterior. The degree to which the evidence boosts (or lowers) the probability of a hypothesis is the (dis-)confirmation the evidence provides to the hypothesis.Footnote 16 In Bayesian epistemology, the role of evidence in (dis-)confirming a hypothesis is thus grounded on a sound methodological bases.

A theoretical framework which allows us to distinguish the different epistemic levels of the problem at hand, as well as their interaction is presented in Bovens and Hartmann 2003, Chapter 4). This framework is a model for epistemic dynamics underpinning scientific inference and it provides a mathematical explanation for it by labeling nodes with epistemic categories. In Section 5, we will adapt their work for our purposes of causal inference in pharmacology.

4.2 Scientific hypothesis confirmation according to Bovens & Hartmann

The Bayesian network model of Bovens & Hartmann, presented in (Bovens and Hartmann 2003), (see (Darwiche 2009; Neapolitan 2003) for introductions to Bayesian networks) consists of the hypothesis, (some of) its observable consequences, reports on whether theses consequences were born out in experiments and the reliability of instruments used in these experiments. The graph structure of the Bayesian network represents conditional independencies between the variables. Conditional probabilities attach to every variable which specify the probability of a variable given its parents.

The following binary propositional variables are used: A variable HYP where the intended meaning for H y p is that “the hypothesis is true”, similarly for variables C o n i (“consequence i holds”), R e p i (“consequence i is reported”)Footnote 17 and R e l i (“report i is reliable”), cf. (Bovens and Hartmann 2003, p. 89). According to the Bayesian paradigm, a prior probability function P, defined over the algebra generated by these variables, is selected. Naturally, P is constrained to respect the conditional independencies encoded by the graph \(\mathcal G\). Updating the prior P, by conditionalising, then allows Bovens & Hartmann to calculate posterior probabilities given experimental results.

The set of meaningful conditional probabilistic independencies in prior P can be read off by means of the graphical d-separation criterion (Pearl 2000). The graph \(\mathcal G\) in Fig. 6 depicts the situation for one single consequence. The general case for more consequences is more involved and depicted in Fig. 7. These conditional independencies – denoted by  – are Footnote 18

– are Footnote 18

The choice of prior is further constrained by

Bovens & Hartmann take Eq. 5 to be their definition of what it means to be an observable consequence of a given hypothesis, see Bovens and Hartmann (2003, p. 90).

Equation 6 refers to the convention that when an instrument is unreliable, then the probability of receiving a report does not depend on whether the consequence holds. When the instrument is fully reliable, then the probability of receiving a report that the consequence has been observed equals one, if the consequence holds; see Eq. 7. Vice versa, a fully reliable instrument produces a positive report with probability zero, if the consequence does not hold, see Eq. 8.

They then determine the (posterior) probability of the hypothesis being true, given a report and its reliability (Bovens and Hartmann 2003, p. 92, Equation 4.5). This probability can be computed directly from the conditional probabilities specified at the nodes in the Bayesian network. The comparison of prior and posterior probability is at the heart of Bayesian confirmation theory.

The rationale for arranging the theoretical layer (HYP, CON) and the empirical/methodological layer (REP, REL) in the proposed way is summarised in the following:

-

1.

Testable consequences of the hypothesis are inserted as intermediate nodes between the HYP-node and the report nodes: H Y P⊥ ⊥R E P i | C O N i for all i. According to Bovens & Hartmann, this formally captures the fact that the hypothesis cannot be tested directly, only observable consequences of the hypothesis are testable (cf. Bovens and Hartmann 2003, p. 89).

-

2.

All consequence nodes (together with their respective descendants) are conditionally independent given HYP: R E P i ⊥ ⊥R E P j | H Y P for all i,j with i≠j. This models the situation where a range of consequences can be assessed by multiple independent tests (see the left portion of Fig. 7 and cf. Bovens and Hartmann 2003, p. 98). If, however, the dependence of studies or tests is to be marked in the structure, one REL node might also have more than one REP node as a child, thereby creating dependency between reports (see the right portion of Fig. 7).

-

3.

All Rel nodes are unconditionally independent of their non-descendants. That means: R E L i ⊥ ⊥n o n D e s c(R E L i ) for all i; i.e., in the case of all reports being independent we have R E L i ⊥ ⊥H Y P for all i (with i indicating a report for the i-th consequence). This independence formalises the assumption that from learning something about the reliability of a report we cannot infer anything about the truth (or falsity) of the hypothesis (cf. Bovens and Hartmann 2003, p. 58).

4.3 Virtues of the Bovens & Hartmann approach

Probabilistic networks have found wide-spread use in applications in a great number of domains including medicine (e.g., differential diagnosis). These networks implement knowledge from a very specific domain and nodes generally represent concrete phenomena or entities of interest.

Bovens & Hartmann draw on this formal apparatus to abstractly model scientific inference and to formally relate higher level epistemic categories. While the mathematics do not change, the graph provides an illustration of the epistemic dimensions at stake and thereby provides greater insight into some methodological issues by offering, as it were, a mathematical explanation of their dynamics. Indeed, this sort of representation allows us to single out in the mathematical formulae the specific role played by each epistemic dimension in the inferential dynamics; e.g., the role of reliability with respect to the propagation of confirmation in connection with replication of studies and with heterogenous sources of evidence (see Section 3.2.2).

5 A framework for the assessment of harms in pharmacology, part 2: structure

5.1 Graphical representation – variables and intended interpretations

For the purpose of developing our Bayes net model of pharmacological inference, our main interest is determining rational degrees of belief in the causal hypothesis “Drug D causes harm H in population U”. For ease and clarity of exposition, we here use a binary propositional variable. We hence introduce a variable Ⓒ with the intended meaning of Ⓒ = T R U E is that: “Drug D causes harm H in U”.

As outlined in Section 3, we take it that rational belief in the causal hypothesis is based on causal indicators: difference-making, probabilistic dependence, dose-response relationship, mechanisms etc. We use binary propositional indicator variables I N D i (i∈{1,2,3,4,...}) with I N D 1=Δ,I N D 2=PD,I N D 3 = D R,I N D 4 = R o G,I N D 5=M,I N D 6=T (difference-making, probabilistic dependence, dose-response relationship, rate of growth, mechanisms and temporality, respectively) and possibly further variables for causal indication I N D k . I n d i means that the i-th consequence of the causal hypothesis holds.

For every item of evidence r and every causal indicator i the item of evidence informs us about, we use a report variable \(RE{P_{r}^{i}}\).Footnote 19 Reports may come from various kinds of studies such as case reports,Footnote 20 case series, case-control studies and cohort studies; or from experiments at various levels: in vitro, in vivo and clinical studies. Relevant evidence may also come from knowledge discovery techniques as well as computational modeling. The meaning of \(RE{P_{r}^{i}}\) is that the item of evidence is consistent with the causal indicator for a population for which the studied population is representative.

We use two further variables for relevance and reliability for report variables in order to account for these two dimensions of the evidence and their role in (dis)confirming the investigated hypothesis (cf. Section 3.3).

5.2 Probabilistic independencies

By borrowing the quite general reconstruction of scientific inference from Bovens & Hartmann we import their direct and indirect probabilistic dependencies and conditional independencies (see our summary of the relevant independencies from Bovens and Hartmann (2003) in Section 4.2e). Beyond that, our choice of causal indicators and subsequently their formalisation as variables (i.e., nodes in the graph) requires us to make the theoretical, implicatory dependencies transparent by expressing them as links in the network. The following list motivates our modeling choices for the formalisation of the conceptually related indicators R o G, PD, DR, and Δ:

-

1.

We model the implication relations between the four indicators as edges on the second level of our network: the detection of a high rate of growth implies a dose-response relationship which in turn means that the variables under consideration are probabilistically dependent.

Note that the edges on the second level are not a superfluous addition: If probabilistic dependence is measured but the causal hypothesis is known to be false (i.e., Ⓒ is fixed to FALSE), the variables PD, DR, and R o G remain dependent since they overlap conceptually. Inserting direct edges on the second level precisely expresses this overlap. The categorical independence between the hypothesis level and the indicator level becomes apparent in this structure: PD, DR, and R o G are not dependent via the Ⓒ node, but directly linked to one another on the indicator level.

-

2.

No direct edge links R o G and PD since an observed high rate of growth implies an observed dose-response which implies an observed probabilistic dependence in turn and mediates the inference from R o G to PD (in other words, DR screens off PD from R o G).

-

3.

Our choice to not insert an edge between DR, R o G, PD and Δ reflects our intention to clearly demarcate the conceptual/methodological dividing line between observational/static and interventional/dynamic support for the causal hypothesis: Ⓒ screens off Δ from the observational/static indicators.Footnote 21 And formally:

. This principled distinction is already laid out in Hume’s famous twofold definition of causation which can be seen as a point of reference both for regularity/supervenience as well as for counterfactual/manipulationist theories of causation (cf. Hume 1748, Sec. VII). Finally, all indicators listed above are imperfect ones, except for difference-making. Nevertheless, we are not collapsing Δ and Ⓒ into a single node: Following the philosophical literature on causality, we consider that when a difference-making relationship between two events or variables holds, then this is a sufficient – although not necessary – condition for causality. This can be characterized in logical terms as an entailment relationship: Δ⊃Ⓒ. Hence, in our system, the probability of a causal relationship, given a genuine difference making relationship is 1: P(Ⓒ | Δ)=1. The inverse entailment, Ⓒ⊃Δ, does not hold however. Although Δ – representing the possibility of ideal controlled variance – implies Ⓒ in a definitional way, knowledge of Ⓒ does not necessitate the existence difference-making – e.g., in cases of “holistic causation”.Footnote 22

. This principled distinction is already laid out in Hume’s famous twofold definition of causation which can be seen as a point of reference both for regularity/supervenience as well as for counterfactual/manipulationist theories of causation (cf. Hume 1748, Sec. VII). Finally, all indicators listed above are imperfect ones, except for difference-making. Nevertheless, we are not collapsing Δ and Ⓒ into a single node: Following the philosophical literature on causality, we consider that when a difference-making relationship between two events or variables holds, then this is a sufficient – although not necessary – condition for causality. This can be characterized in logical terms as an entailment relationship: Δ⊃Ⓒ. Hence, in our system, the probability of a causal relationship, given a genuine difference making relationship is 1: P(Ⓒ | Δ)=1. The inverse entailment, Ⓒ⊃Δ, does not hold however. Although Δ – representing the possibility of ideal controlled variance – implies Ⓒ in a definitional way, knowledge of Ⓒ does not necessitate the existence difference-making – e.g., in cases of “holistic causation”.Footnote 22

Note also that we are purposely choosing to direct the edge between Ⓒ and M towards M: We understand the existence of a mechanism as a testable consequence of the causal hypothesis, i.e., as a constitutive element of Ⓒ rather than a pre-requisite (or even somehow causally prior) – in accordance with all other indicator nodes.Footnote 23

The Figs. 8–10 graphically represent aspects of the graph of our Bayesian network. Figure 8 displays epistemic dimensions at stake, Fig. 9 shows the causal indicators, their reciprocal relations, and the studies which inform us about single indicators. Figure 10 depicts a case in which studies are informative about more than one indicator.

Example of a Bayesian net for two studies which both inform us about two indicators. The study generating reports for difference making and probabilistic dependence may be an RCT, while the study generating the two reports on the right may be a case control study. For both studies, we use one reliability and one relevance variable each

5.3 Adopting a prior

Success of Bayesian reasoning hinges on the choice of a suitable prior. Incorporating domain knowledge plays a major role in the choice of a prior. Domain knowledge may be elicited from experts.Footnote 24

While the prior has to satisfy the conditional independences discussed above and incorporate prior domain knowledge, there are further properties in the problem specification that a sensible prior has to satisfy; which we shall now discuss.

In Eq. 16 all report, reliability and relevance variables pertain to the same indicator variable.

Equation 9 means that the conditioning on one causal indicator boosts the belief in the causal hypothesis being true, while Eq. 10 means that conditioning on the negation of an indicator lowers the belief in the causal hypothesis. Similarly, the same holds in the presence of another instantiated indicator, see Eqs. 11 and 12. Equations 13 and 14 express that probabilistic dependence is a weaker causal indicator than difference-making or high rate of growth. Consequently, conditioning on difference-making, dose-response relationship, or high rate of growth gives a greater boost to the belief in the causal hypothesis than probabilistic dependence. Vice versa, conditioning on the negation of probabilistic dependence reduces belief in the causal hypothesis more strongly than conditioning on the negation of a dose-response relationship or high rate of growth.

One reliable study, which is reliable and relevant for the target population, and finds that, say, there is probabilistic dependence between the drug an adverse drug reactions, significantly boosts the belief that there is probabilistic dependence in the target population; (15).

Equation 16 formalises the following thought: Belief in the inconsistency of a report and the respective indicator is boosted if the study is either irrelevant, unreliable, or both (¬(R e l & R l v) serves to explain the inconsistency).

5.4 Two model calculations

We now illustrate how to use our model by giving two example calculations. First, we show how to decide between withdrawing the drug or not. Secondly, we show how the posterior weight of an item of evidence that conflicts with a large consistent body of evidence decreases.

5.4.1 The threshold p ∗

With the prior in place, we can now investigate conditions under which we will recommend to withdraw the drug D by calculating the threshold p ∗.

We find for a pair of distinct indicator variables I N D j ,I N D k ≠Δ which are not linked by an arrow

Letting a,b∈[0,1]

we obtain

For fixed a, \(\frac {a}{a+b}\) is a strictly decreasing function in b; if a=0, then \(\frac {a}{a+b}=0\) (as long as b ≠ 0 in which case the fraction is ill-defined), if a=1, then \(\frac {a}{a+b}\) varies between 1 and 0.5. For fixed b>0, \(\frac {a}{a+b}\) increases strictly with increasing a. If b=0, then \(\frac {a}{a+b}=1\) (assuming a ≠ 0), if b=1, then \(\frac {a}{a+b}\) varies between 0 and 0.5.

For the threshold p ∗ given by the decision problem (p ∗ = P(Ⓒ|I n d j &¬I n d k )), we can compute the threshold value a ∗ for for fixed b (and vice versa) as follows (assuming we never divide by zero):