Abstract

The nonlinear matrix equation \(X^p=R+M^T(X^{-1}+B)^{-1}M\), where p is a positive integer, M is an arbitrary \(n\times n\) real matrix, R and B are symmetric positive semidefinite matrices, is considered. When \(p=1\), this matrix equation is the well-known discrete-time algebraic Riccati equation (DARE), we study the convergence rate of an iterative method which was proposed in Meng and Kim (J Comput Appl Math 322:139–147, 2017). For the generalized case \(p\ge 1\), a structured condition number based on the classic definition of condition number is defined and its explicit expression is obtained. Finally, we give some numerical examples to show the sharpness of the structured condition number.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

We consider the nonlinear matrix equation

where p is a positive integer, \(M \in {\mathbb {R}}^{n\times n}\), B and R are symmetric positive semidefinite matrices.

For the special case \(p=1\), Eq. (1) is exactly

which, under certain condition, is a simplified symmetric form of the well-known discrete-time algebraic Riccati equation (DARE)

where \(M\in {\mathbb {R}}^{n\times n}\), \(E\in {\mathbb {R}}^{n\times m}\), \(C\in {\mathbb {R}}^{q\times n}\) and \(G\in {\mathbb {R}}^{m\times m}\) is a symmetric positive definite matrix. It has been proved that if (M, E) in DARE (3) is a stabilizable pair and (C, M) is a detectable pair,Footnote 1 then DARE (3) has a unique symmetric positive definite solution, see [7, 12, 22]. Under this assumption, with \(B=EG^{-1}E^T\) and \(R=C^TC\), the DARE (3) can be rewritten in the symmetric form as Eq. (2).

The symmetric positive definite solution of Eq. (2) or, equivalently, the DARE (3) is of theoretical and practical importance in some control problems, see [1, 3, 8, 13, 15, 16, 23] and the references therein. For finding the unique symmetric positive definite solution, Komaroff [11] proposed a fixed-point iteration

and proved that the matrix sequence \(\{X_k\}\) converges to the unique positive definite solution when M is nonsingular, \(R>0\) and \(B>0\). Later, Dai and Bai [5] showed that the matrix sequence \(\{X_k\}\) still converges even if M is singular, and they modified the fixed-point iteration to a new iteration as

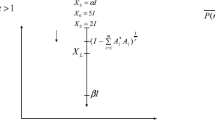

Comparing with the fixed-point iteration (4), even though the modified fixed-point iteration (5) avoids computing the inverse of \(X_k^{-1}+B\), it still involves the computation of \(X_k^{-1}\) at each step, which may lead to numerical instability problems due to the matrix inverse. In [18], under the condition that B is nonsingular, an inversion-free variant iteration which completely avoids the computation of \(X_k^{-1}\) is proposed as

In the process of iteration (6), only the inverse of matrix B is required, and since B is a given positive definite matrix, \(B^{-1}\) can be readily obtained.

It was proved in [5] that both the convergence rates of the basic fixed-point iteration (4) and the modified fixed-point iteration (5) remains linear. But, as far as we know, there is no work on the convergence rate of the inversion-free variant iteration (6). In this paper, we study the convergence behaviour of iteration (6).

For the general case where \(2\le p<\infty\), the existence of a unique positive definite solution of Eq. (1) is a direct consequence of the results by Jung et al. [10]. In [18], two iteration methods for computing the unique positive definite solution, as well as a lower and an upper bound of the solution, are given. Here, as a continuation of the previous results, we develop a structured condition number for the nonlinear matrix equation (1). It is validated by numerical examples that the newly proposed structured condition number successively measures the sensitivity of the solution.

The rest of this paper is organized as follows. In Sect. 2, we derive the convergence rate of the inversion-free variant iteration (6). In Sect. 3, a structured condition number is defined and the explicit expression is derived. In Sect. 4, we give a numerical example to show the sharpness of the proposed structured condition number.

We begin with the notation used throughout this paper. \({\mathbb {R}}^{n\times n}\) is the set of \(n\times n\) matrices with elements on field \({\mathbb {R}}\). \({\mathbb {S}}^{n\times n}\) and \(\mathbb {P}(n)\) are, respectively, the set of symmetric matrices and positive definite matrices. \(\Vert \cdot \Vert\) and \(\Vert \cdot \Vert _F\) are the spectral norm and the Frobenius norm, respectively. For a symmetric matrix H, \(\lambda _{\mathrm{max}}(H)\) (\(\lambda _{\mathrm{min}}(H)\) ) denotes the maximal (minimal) eigenvalue of H and \(\rho (H)\) is the spectral radius of H. For a matrix \(A=(a_1,a_2,\ldots , a_n)=(a_{ij})\in {\mathbb {R}}^{n\times n}\) and a matrix B, \(\mathrm{vec}(A)\) is a vector defined by \(\mathrm{vec}(A)=(a_1^T,\ldots , a_n^T)^T\); \(A\otimes B=(a_{ij}B)\) is a Kronecker product. For Hermitian matrices X and Y, \(X\ge Y (X>Y)\) means that \(X-Y\) is positive semidefinite (definite). I represents the identity matrix of size implied by context. \(\Vert \cdot \Vert\) will be the spectral norm for square matrices unless otherwise noted.

2 Convergence rate

In this section, we study the convergence rate of the inversion-free variant iteration (6). Since B and R are positive semidefinite matrices, there is a matrix \(E\in {\mathbb {R}}^{m\times q}\) and a matrix \(C\in {\mathbb {R}}^{\ell \times m}\) such that \(B=EE^T\) and \(R=C^TC\). Then Eq. (2) is equivalent to the discrete-time algebraic Riccati equation

Throughout the rest of this section, we assume that (M, E) is a stabilizable pair and (C, M) is a detectable pair. Then the DARE (7), or equivalently, Eq. (2), has a unique positive definite solution \(X_+\) and

If both R and B are positive definite matrices, let \(B=Z\varLambda Z^T\) (\(Z^TZ=I, \varLambda =diag(\lambda _i(B))\)) be a spectral decomposition, and let \(R=C^TC\) where C is a matrix with independent columns. It can be easily proved that (M, Z) is a stabilizable pair and (C, M) is a detectable pair. Then Eq. (2) has a unique positive definite solution \(X_+\) and \(\rho ((I+BX_+)^{-1}M)<1\).

Lemma 2.1

([14, p.21]) Let T be a (nonlinear) operator from a Banach space E into itself and \(x^*\in E\)be a solution of \(x=Tx\). If T is Fr\({\acute{e}}\)chet differentiable at \(x^*\)with \(\rho (T'_{x^*})<1\), then the iterates \(x_{k+1}=Tx_k\ (k=0,1,\ldots )\)converge to \(x^*\), provided that \(x_0\)is sufficiently close to \(x^*\). Moreover, for any \(\epsilon >0\),

with \(\Vert \cdot \Vert\)is the norm in E and \(c(x_0;\epsilon )\)is a constant independent of k.

Theorem 2.2

For the inversion-free variant iteration (6), we have

Proof

Define an operator T on \({\mathbb {R}}^{n\times n}\) by

where \(Y\in {\mathbb {R}}^{n\times n}\). Then for the inversion-free variant iteration (6), we have

and \((BX_+B+B)^{-1}\) is a fixed point of T. Setting \(Z=BRB+BM^TB^{-1}MB+B\) for convenience, we have \(T(Y)=2Y-YZY+YBM^TYMBY\), then the Fr\({\acute{e}}\)chet derivative \(T'_{Y}: {\mathbb {R}}^{n\times n}\rightarrow {\mathbb {R}}^{n\times n}\) is

Let \(Y=(BX_+B+B)^{-1}\), it follows

Since B is supposed to be nonsingular, by Woodbury identity, we obtain \((B+X_+^{-1})^{-1}=B^{-1}-(BX_+B+B)^{-1}\). Hence

where (9) is obtained by using the fact that \(X_+\) is a solution of Eq. (2). Similarly, it can be obtained that

It follows that

Denote the eigenvalues of \(S=(I+X_+B)^{-1}M^T\) by \(\lambda _i, i=1,\ldots n\), and let them be ordered such that their moduli are nonincreasing, i.e.,

Since the “vec” form of the Fr\({\acute{e}}\)chet derivative \(T'_{(BX_+B+B)^{-1}}\) is \(S\otimes S\), then the eigenvalues of \(T'_{(BX_+B+B)^{-1}}\) are \(\lambda _i\lambda _j\) for \(i,j=1,\ldots ,n\). Hence, we have \(\rho \big (T'_{(BX_+B+B)^{-1}}\big )=\lambda _1^2\), that is, \(\rho \big (T'_{(BX_+B+B)^{-1}}\big )= \big (\rho ((I+BX_+)^{-1}M)\big )^2\).

According to [18, Theorem 3.6], it can be proved that the sequence \(\{Y_k\}\) converges to \((BX_+B+B)^{-1}\) which is the fixed point of T. By Lemma 2.1, we have

Moreover,

which implies that

\(\square\)

It was proved in [5] that both the convergence rates of the basic fixed-point iteration (4) and the modified fixed-point iteration (5) satisfy

It can seen that if \(\rho ((I+BX_+)^{-1}M)\) is very close to 1, the convergence of the inversion-free variant iteration (6), the modified fixed-point iteration (5) and the fixed-point iteration (4) may be very slow. Here we say a few words about the cyclic reduction, which has a quadratic convergence rate and can be applied to Eq. (2).

Suppose B is a positive definite matrix, applying the Woodbury identity to Eq. (2) yields

from which we get

Let \(Y=B^{-1}+X\), \(Q=B^{-1}+R+M^TB^{-1}M\), \(A=B^{-1}M\), then (10) can be written as

If \(X_+\) is the unique positive definite solution of Eq. (2), then \(Y_+=B^{-1}+X_+\) solves Eq. (11). It has been proved in [6] that if Eq. (11) has a positive definite solution, then it has a maximal one and a minimal one. We show that \(Y_+\) is the maximal solution of Eq. (11).

Theorem 2.3

If \(X_+\)is the unique positive definite solution of Eq. (2), then \(Y_+=B^{-1}+X_+\)is the maximal positive definite solution of Eq. (11).

Proof

For all \(|\lambda |<1\), it holds

Since \(X_+\) is the unique positive definite solution of Eq. (2), it follows from (8) that \(\rho ((I+BX_+)^{-1}M)<1.\) Thus, \((I+\lambda (I+BX_+)^{-1}M)\) is invertible for all \(|\lambda |<1\). Hence, \(Y_++\lambda A\) is invertible for all \(|\lambda |<1\). According to [6, Theorem 3.4], we know that \(Y_+\) is the maximal positive definite solution of Eq. (11). \(\square\)

Meini [17] showed that the cyclic reduction algorithm can be applied to find the maximal and minimal positive definite solutions of Eq. (11). Since we have proved that \(Y_+=X_++B^{-1}\) is the maximal solution of Eq. (11) if \(X_+\) is the unique positive definite solution of Eq. (2), it seems that it is possible to apply cyclic reduction algorithm to Eq. (2). Indeed, if we transplant Meini’s result to Eq. (11), we get

Theorem 3.1 in [17] shows that the matrices \(\{Q_k\}\) and \(\{Y_k\}\) are positive definite and it holds that \(0<Q_{k+1}\le Q_{k}\) and \(0<Y_{k+1}\le Y_k\) for all k. Moreover, Theorem 3.2 in [17] shows that the first block entry \(\{Y_k\}\) quadratically converges to \(Y_+=X_++B^{-1}\) if \(\rho (Y_+^{-1}A)<1\).

Since \(Y_+=B^{-1}+X_+\), the matrix sequence \(\{Y_k\}\) converges to \(Y_+\), which implies that the sequence \(X_k=Y_k-B^{-1}\) converges to \(X_+\). If we replace \(Y_k\) by \(X_k+B^{-1}\) and after rearrangement, the iteration based on cyclic reduction for Eq. (2) can be stated as

Theorem 2.4

Suppose \(R>0\)and \(B>0\), then the matrix sequence \(\{X_k\}\)generated by iteration (12) converges to \(X_+\), where \(X_+\)is the unique positive definite solution of Eq. (2), and it holds that \(0<X_{k+1}\le X_k\)and for any \(\epsilon >0\), \(\Vert X_k-X_+\Vert =O((\sigma +\epsilon )^{2\cdot 2^k})\), where \(\sigma =\rho ((I+BX_+)^{-1}M)\).

Proof

It has been proved by Meini [17] that \(0<Y_{k+1}\le Y_k\), which implies \(0<X_{k+1}\le X_k\). Since both R and B are positive definite matrices, then Eq. (2) has a unique positive definite solution \(X_+\) and \(\rho ((I+BX_+)^{-1}M)<1\), which gives

it follows from [17, Theorem 3.2] that \(\Vert Y_k-Y_+\Vert =O((\sigma +\epsilon )^{2\cdot 2^k})\), thus \(\Vert X_k-X_+\Vert =\Vert Y_k-Y_+\Vert =O((\sigma +\epsilon )^{2\cdot 2^k})\) . \(\square\)

Remark 2.5

Suppose \(B>0,\) let \(B=Z\varLambda Z^T\) (\(Z^TZ=I, \varLambda =diag(\lambda _i(B))\)) be a spectral decomposition, and let \(R=C^TC\) where C is a matrix with possibly dependent columns. The condition \(R>0\) in Theorem 2.4 is not necessary if (M, Z) is a stabilizable pair and and (C, M) is a detectable pair.

3 A structured condition number

In this section, based on the classic definition of the condition number defined in Rice [20], we define a new structured condition number at \(X_+\) of the nonlinear matrix equation (1), where \(X_+\) is the unique positive definite solution to Eq. (1). The explicit expression of the structured condition number is obtained.

Let \(M(\tau )=M+\tau E\), \(B(\tau )=B+\tau G\), and \(R(\tau )=R+\tau H\), where \(\tau\) is a real parameter, \(E, G, H\in {\mathbb {R}}^{n\times n}\), and G and H are symmetric matrices. We consider the equation

Let \(F(X,\tau )=X^p-M(\tau )^T(X^{-1}+B(\tau ))^{-1}M(\tau )-R(\tau )\), and \(X_+\) is the unique symmetric positive definite solution of Eq. (1). Then

-

1.

\(F(X_+,0)=0\);

-

2.

\(F(X,\tau )\) is differentiable arbitrarily many times in the neighborhood of \((X_+,0)\) since \(X^p\) and \(X^{-1}\) are the polynomial or rational function of the elements of X.

-

3.

\(\frac{\partial F}{\partial X}\big |_{(X_+,0)}=\sum _{k=0}^{p-1}X_+^{p-1-k}\otimes X_+^{k}-(M^T\otimes M^T)\big ((I+X_+B)^{-1}\otimes (I+X_+B)^{-1}\big )\).

Remark 3.1

The introduction of \(\partial F /\partial X\) is similar to [21]. According to [21], we know that \(\frac{\partial AXB}{\partial X}=B^T\otimes A\), \(\frac{\partial X^{-1}}{\partial X}=-(X^{-1})^T\otimes X^{-1}\), it follows from the chain rule that

Since \(M(0)=M, B(0)=B\), we have

\(\frac{\partial F}{\partial X}\big |_{(X_+,0)}\) is invertible under certain conditions. For example, if \(p\ge 2\) and at least one of R and M is nonsingular, it follows from Theorem 2.3 in [18] that \(X_+\ge \alpha _2 I\), where \(\alpha _2\) is the unique positive root of function \(g_2(x)=x^p-\lambda _{\mathrm{min}}(M^TM)(\lambda _{\mathrm{min}}(B)+x^{-1})^{-1}-\lambda _{\mathrm{min}}(R)\). Suppose \(\Vert M\Vert ^2<p\alpha _2^{p-1}\), then by Theorem 3.3.16 in Horn and Johnson [9] we get

which implies that \(\mathrm{det}\Big (\frac{\partial F}{\partial X}\big |_{(X_+,0)}\Big )\ne 0\).

In what follows, we suppose that \(\frac{\partial F}{\partial X}\big |_{(X_+,0)}\) is invertible. Then, according to implicit function theory [19], there is \(\delta >0\) such that if \(\tau \in (-\delta , \delta )\), there is a unique \(X(\tau )\) satisfying

-

1.

\(F(X(\tau ), \tau )=0, X(0)=X_+\).

-

2.

\(X(\tau )\) is differentiable arbitrarily many times with respect to \(\tau\).

For

by taking derivative for both sides of (14) with respect to \(\tau\) at \(\tau =0\), we arrive at

Let

where \(\varPi \in {\mathbb {R}}^{n^2\times n^2}\) is the vec permutation satisfying \(\varPi \mathrm{vec}(E)=\mathrm{vec}(E^T)\). Then we have

Note that \(J_1-J_2\) is invertible since \(J_1-J_2=\frac{\partial F}{\partial X}\big |_{(X_+,0)}\) and \(\frac{\partial F}{\partial X}\big |_{(X_+,0)}\) is assumed to be invertible. Based on the classic definition of the condition number defined in Rice [20], we define a condition number at the unique positive definite solution \(X_+\) of Eq. (1):

To obtain the explicit expression of the newly defined structured condition number (15), we first define a linear operator \({\mathcal {W}}\) on a matrix \(Z\in {\mathbb {C}}^{n\times n}\) with a positive semidefinite matrix S and a positive integer p as

Define another linear operator \({\mathcal {L}}: {\mathbb {S}}^{n\times n}\rightarrow {\mathbb {S}}^{n\times n}\) as

with \(Z\in {\mathbb {S}}^{n\times n}\).

Since \(J_1-J_2\) is invertible, it implies that \({\mathcal {L}}\) is invertible. Moreover, we define the operator \({\mathcal {P}}: {\mathbb {R}}^{n\times n}\times {\mathbb {S}}^{n\times n}\times {\mathbb {S}}^{n\times n}\rightarrow {\mathbb {S}}^{n\times n}\) as

with \(E\in {\mathbb {R}}^{n\times n}\) and \(G, H\in {\mathbb {S}}^{n\times n}\).

Taking derivative for both sides of (14) with respect to \(\tau\), and then letting \(\tau =0\), we arrive at

This yields

Define the operator \({\mathcal {D}}: {\mathbb {R}}^{n\times n}\times {\mathbb {R}}^{n\times n}\times {\mathbb {R}}^{n\times n}\rightarrow {\mathbb {R}}^{n\times n}\) as

where \(W, P, Q\in {\mathbb {R}}^{n\times n}\) and \({\mathcal {T}}: {\mathbb {R}}^{n\times n}\rightarrow {\mathbb {R}}^{n\times n}\) is an invertible linear operator defined by

with \(N\in {\mathbb {R}}^{n\times n}\).

Theorem 3.2

For matrices \(W, P, Q\in {\mathbb {R}}^{n\times n}\), there exist real matrices \({\hat{W}}, {\hat{P}}, {\hat{Q}}\in {\mathbb {R}}^{n\times n}\)with \(({\hat{W}}, {\hat{P}}, {\hat{Q}})\ne 0\)such that

where \({\hat{P}}^T={\hat{P}}\)or \({\hat{P}}^T=-{\hat{P}}\)and \({\hat{Q}}^T={\hat{Q}}\)or \({\hat{Q}}=-{\hat{Q}}^T\).

Proof

Let (W, P, Q) be a singular “vector” of \({\mathcal {D}}\) corresponding to the largest singular value, then the maximum in (18) occurs since the operator \({\mathcal {D}}\) is linear. Note that

then \((W,P^T,Q^T)\) is also a singular “vector” of \({\mathcal {D}}\) corresponding to the largest singular value. If \(P=-P^T, Q=-Q^T\), then \({\hat{W}}=W, {\hat{P}}=P\) and \({\hat{Q}}=Q\) are the real matrices satisfying (18). If \(P=-P^T, Q\ne -Q^T\), then \({\hat{W}}=W, {\hat{P}}=P\) and \({\hat{Q}}=\frac{Q+Q^T}{2}\) are the kind of matrices that satisfy (18). If \(P\ne -P^T, Q=-Q^T\), then there exist \({\hat{W}}=W, {\hat{P}}=\frac{P+P^T}{2}\) and \({\hat{Q}}=Q\) satisfying (18). If \(P\ne -P^T\), \(Q\ne -Q^T\), then \({\hat{W}}=W\), \({\hat{P}}=\frac{P+P^T}{2}\) and \({\hat{Q}}=\frac{Q+Q^T}{2}\) are the matrices satisfying (18). \(\square\)

Lemma 3.3

[4] If two Hermitian matrices \(W_1, W_2\in {\mathbb {C}}^{n\times n}\)satisfy \(W_2\ge W_1\ge -W_2\), then \(\Vert W_1\Vert _F\le \Vert W_2\Vert _F\).

We say the matrices M and B are (D, p)-stable if \({\mathcal {W}}(Z,D,p)-M^T(I+D^TB)^{-1}Z(I+BD)^{-1}M \ge 0\) implies \(Z\ge 0\).

Theorem 3.4

Under the condition that \(J_1-J_2\)is invertible and matrices M and B are \((X_+, p)\)-stable. The structured condition number at \(X_+\)of Eq. (1), where \(X_+\)is the unique positive definite solution to Eq. (1), is

Proof

It follows from (17) that

Then, according to (15) and (16), it suffices to prove

It is clear that

What left to prove is

By Theorem 3.2, there exists \(({\hat{W}},{\hat{P}},{\hat{Q}})\ne 0\), where \({\hat{W}},{\hat{P}},{\hat{Q}} \in {\mathbb {R}}^{n\times n}\), \({\hat{P}}\) and \({\hat{Q}}\) are either symmetric or skew-symmetric matrices, such that

There are four cases according to the symmetry or anti-symmetry property of matrices \({\hat{P}}\) and \({\hat{Q}}\).

Case 1: \({\hat{P}}={\hat{P}}^T\) and \({\hat{Q}}={\hat{Q}}^T\). Let \({\hat{E}}={\hat{W}}\), \({\hat{G}}={\hat{P}}\) and \({\hat{H}}={\hat{Q}}\), it yields

Case 2: \({\hat{P}}^T=-{\hat{P}}\ne 0\) and \({\hat{Q}}^T={\hat{Q}}\). In this case, both \(({\hat{W}}, {\hat{P}},{\hat{Q}})\) and \(({\hat{W}}, -{\hat{P}},{\hat{Q}})\) satisfy (18). As a linear combination of them, \((0, {\hat{P}},0)\ne 0\) also satisfies (18).

It is clear that there is a real orthogonal matrix U and \(p_i>0 \ (i=1,2,\ldots , k)\) such that

Let \({\hat{E}}=0, {\hat{H}}=0\) and

we have

Let \({\hat{Z}}={\mathcal {D}}(0,{\hat{P}},0)\in {\mathbb {R}}^{n\times n}\) and \({\tilde{Z}}={\mathcal {P}}(0,{\hat{G}},0)\in {\mathbb {S}}^{n\times n}\), then

and

It follows that

and

Since M and B are \((X_+, p)\)-stable, we have from (26) and (27) that \({\tilde{Z}}+i{\hat{Z}}\ge 0\) and \({\tilde{Z}}-i{\hat{Z}}\ge 0\), which leads to

As a direct consequence of Lemma 3.3, we have \(\Vert i{\hat{Z}}\Vert _F\le \Vert {\tilde{Z}}\Vert _F.\)

Then,

Now a symmetric matrix \({\hat{G}}\in {\mathbb {S}}^{n\times n}\) is found and satisfies

Case 3: \({\hat{P}}^T={\hat{P}}\) and \({\hat{Q}}^T=-{\hat{Q}}\ne 0\). In this case, \((0,0,{\hat{Q}})\) satisfies (18). It is clear that there is a real orthogonal matrix V and \(q_i>0 \ (i=1,2,\ldots , \ell )\) such that

Let

then we have

Let \({\hat{Z}}={\mathcal {D}}(0,0,{\hat{Q}})\in {\mathbb {R}}^{n\times n}\) and \({\tilde{Z}}={\mathcal {P}}(0,0, {\hat{H}})\in {\mathbb {S}}^{n\times n}\), it yields

and

Since M and B are \((X_+, p)\)-stable, we have from (31) and (32) that \({\tilde{Z}}+i{\hat{Z}}\ge 0\) and \({\tilde{Z}}-i{\hat{Z}}\ge 0\), which leads to

Analogously to the last part in Case 2, we find a symmetric matrix \({\hat{H}}\in {\mathbb {S}}^{n\times n}\) such that

Case 4: \({\hat{P}}^T=-{\hat{P}}\ne 0\) and \({\hat{Q}}^T=-{\hat{Q}}\ne 0\). In this case, \((0, {\hat{P}},{\hat{Q}})\) satisfies (18). Obviously, \({\hat{P}}\) has a form as (24) and \({\hat{Q}}\) has a form as (29). Choose \({\hat{G}}\) and \({\hat{H}}\) to have the form (25) and (30), respectively.

Let \({\hat{Z}}={\mathcal {D}}(0,{\hat{P}},{\hat{Q}})\in {\mathbb {R}}^{n\times n}\) and \({\tilde{Z}}={\mathcal {P}}(0,{\hat{G}}, {\hat{H}})\in {\mathbb {S}}^{n\times n}\), it yields

and

Analogously to the last part in Case 2 again, we now find the symmetric matrices \({\hat{G}}, {\hat{H}}\in {\mathbb {S}}^{n\times n}\) such that

From (23), (28), (33) and (36), we can conclude that

which, together with (22), leads to (21). It follows from (15), (16), (20) and (21) that (19) holds. \(\square\)

The condition that \(J_1-J_2\) is invertible in Theorem 3.4 highly relies on \(X_+\). Since the value of \(X_+\) may not be trivial in most cases, a sufficient condition to determine whether \(J_1-J_2\) is invertible is necessary. As we have mentioned, for \(p\ge 2\), at least one of R and M is nonsingular and \(\Vert M\Vert ^2<p\alpha _2^{p-1}\), it can be proved analogously to (13) that \(J_1-J_2\) is invertible. We thus get the following corollary.

Corollary 3.5

Suppose \(p\ge 2\)is a positive integer, at least one of R and M is nonsingular, \(\Vert M\Vert ^2<p\alpha _2^{p-1}\)and matrices M and B are \((X_+, p)\)-stable. The structured condition number at the unique positive definite solution \(X_+\)of Eq. (1) is

For \(p=1\), if \(X_+\) is the unique positive definite solution of the DARE (2), then \(\rho ((I+BX_+)^{-1}M)<1\). It follows that

is invertible. Moreover, since \(\rho ((I+BX_+)^{-1}M)<1\), for a Hermitian matrix Z, \(Z-M^T(I+X_+B)^{-1}Z(I+BX_+)^{-1}M \ge 0\) implies \(Z\ge 0\). We have the following theorem.

Theorem 3.6

If \(p=1\), \(X_+\)is the unique positive definite solution of the DARE (2), the structured condition number at \(X_+\)is

where \(J=(M^T\otimes M^T)((I+BX_+)^{-T}\otimes (I+X_+B)^{-1})\).

4 Numerical examples

In this paper, we apply the iteration method proposed in [18] for finding the minimal nonnegative solution of Eq. (1) and of the perturbed equation. Our experiments were done in Matlab R2017b with machine precision around \(10^{-16}\) and the iterations terminate if the relative residual \(\rho _{res}(X_k)\) satisfies

Example 4.1

We consider the matrix equation (2) with the elements of matrix M being defined by the following scheme:

-

(I)

For a real \(0<\alpha <1\).

-

(II)

For \(i=1,\ldots , n\):

-

(a)

for \(j=i,\ldots ,n\), set \(m_{ij}=i^2+1/j\);

-

(b)

compute \(s_1=\sum _{j=1}^{i-1}m_{ij}\), \(s_2=\sum _{j=i}^nm_{ij}\);

-

(c)

for \(j=i,\ldots ,n\), set

$$\begin{aligned} m_{ij}=m_{ij}\frac{1-\alpha -s_1}{s_2},\quad m_{ji}=m_{ij}. \end{aligned}$$Let \(R=I_n\), \(B=\left( \begin{array}{ccccc} 3&{} -1&{} &{} &{}\\ -1&{} 3&{}-1&{} &{} \\ &{}\ddots &{}\ddots &{}\ddots &{}\\ &{} &{}-1&{}3&{}-1\\ &{} &{} &{}-1&{}3\\ \end{array}\right) _{n\times n}.\)

-

(a)

Since both R and B are positive definite matrices, the DARE (2) has a unique positive definite solution \(X_+\) and \(\rho ((I+BX_+)^{-1}M)<1\). This implies that iteration (12) converges quadratically.

For different values of the matrix size n and parameter \(\alpha\), we apply the four iterations to Eq. (2). Table 1 reports the number of iterations and the residual errors. From Table 1 we can see that the iteration based on cyclic reduction for Eq. (2) uses much less iterations for obtaining the unique positive definite solution.

Example 4.2

This is an example for the DARE (2) and is taken from [2]. The coefficient matrices are constructed as follows. Let \(M_0=diag(0, 1, 3)\), \(V=I-\frac{2}{3}vv^T\), \(v^T=[1, 1, 1]\). Then

where \(\epsilon\) is a real parameter.

Suppose the coefficients are perturbed, in MATLAB commands, as follows.

Set \(\varDelta M={\tilde{M}}-M\), \(\varDelta R={\tilde{R}}-R\) and \(\varDelta B={\tilde{B}}-B\). Using the structured condition number (19), we obtain a perturbation bound \({\mathcal {K}}_{X_+}\varDelta\), where \(\varDelta =\frac{\Vert (\varDelta M, \varDelta R, \varDelta B)\Vert _F}{\Vert (M, R,B)\Vert _F}\). We compute the relative error \(\frac{\Vert {\tilde{X}}_+-X_+\Vert _F}{\Vert X_+\Vert _F}\). Let \(\epsilon =2\), for \(j=2,4,6\) and \(p=1,3,5\), we compare the computed perturbation bound with the relative error. The results are shown in Table 2.

For this example, Table 2 shows that the estimated perturbation bound is very close to the exact relative perturbation errors, which implies that the structured condition number successfully indicated the sensitivity of the minimal positive definite solution \(X_+\) to the perturbations in coefficients.

5 Conclusion

In this paper, the convergence behaviour of an already existing iterative method is studied. For the general case \(p\ge 1\), we define a structured condition number at the unique positive definite solution \(X_+\) of Eq. (1), which is validated by numerical examples that the newly proposed structured condition number measures the sensitivity of the solution well.

Notes

(M, E) is a stabilizable pair if \(\omega ^TE=0\) and \(\omega ^TM=\lambda \omega ^T\) hold for some constant \(\lambda\) and vector \(\omega\), then \(|\lambda |<1\) or \(\omega =0\). (C, M) is a detectable pair if \((M^T,C^T)\) is a stabilizable pair.

References

Anderson, W.N., Kleindorfer, G.D., Kleindorfer, P.R., Woodroofe, M.B.: Consistent estimates of the parameters of a linear system. Ann. Math. Stat. 40, 2064–2075 (1969)

Benner, P., Laub, A.J., Mehrmann, V.: A collection of benchmark examples for the numerical solution of algebraic Riccati equations II: Discrete-time case, Tech. Report SPC 95-23, Fak. f. Mathematik, TU Chemnitz Zwickau, 09107 Chemnitz, FRG (1995)

Bougerol, Ph: Kalman filtering with random coefficients and contractions. SIAM J. Control Optim. 31, 942–959 (1993)

Byers, R., Nash, S.: On the singular vectors of the Lyapunov operator. SIAM J. Algebraic Discrete Methods 8, 59–66 (1987)

Dai, H., Bai, Z.-Z.: On eigenvalue bounds and iteration methods for discrete algebraic Riccati equations. J. Comput. Math. 29, 341–366 (2011)

Engwerda, J.C., Ran, A.C.M., Rijkeboer, A.L.: Necessary and sufficient conditions for the existence of a positive definite solution of the matrix equation \(X+A^*X^{-1}A=Q\). Linear Algebra Appl. 186, 255–275 (1993)

Gudmundsson, T., Kemmey, C., Laub, A.J.: Scaling of the discrete-time algebraic Riccati equation to enhance stability of the Schur solution method. IEEE Trans. Autom. Control 37, 513–518 (1992)

Guo, C.-H., Lancaster, P.: Iterative solution of two matrix equations. Math. Comput. 68, 1589–1603 (1999)

Horn, R.A., Johnson, C.R.: Topics in Matrix Analysis. Cambridge University Press, Cambridge (1991)

Jung, C., Kim, H.-M., Lim, Y.: On the solution of the nonlinear matrix equation \(X^n=f(X)\). Linear Algebra Appl. 430, 2042–2052 (2009)

Komaroff, N.: Iterative matrix bounds and computational solutions to the discrete algebraic Riccati equation. IEEE Trans. Autom. Control 39, 1676–1678 (1994)

Konstantinov, M., Petkov, P., Christov, N.: Perturbation analysis of the discrete Riccati equation. Kybernetika 29, 18–29 (1993)

Kouikoglou, V.S., Phillis, Y.A.: Trace bounds on the covariances of continuous-time systems with multiplicative noise. IEEE Trans. Autom. Control 38, 138–142 (1993)

Krasnosel’skii, M.A., Vainikko, G.M., Zabreiko, P.P., Ruticki, YaB, Stet’senko, V.Ya.: Approximate Solution of Operator Equations. Wolters-Noordhoff Publishing, Gronongen (1972)

Lancaster, P., Rodman, L.: Algebraic Riccati Equations. Oxford Science Publications, Oxford University Press, Oxford (1995)

Lee, C.-H.: Simple stabilizability criteria and memoryless state feedback control design for time-delay systems with time-varying perturbations. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 45, 1211–1215 (1998)

Meini, B.: Efficient computation of the extreme solutions of \(X+A^*X^{-1}A=Q\) and \(X-A^*X^{-1}A=Q\). Math. Comput. 71, 1189–1204 (2002)

Meng, J., Kim, H.-M.: The positive definite solution of the nonlinear matrix equation \(X^p=A+M(B+X^{-1})^{-1}M^*\). J. Comput. Appl. Math. 322, 139–147 (2017)

Ortega, J.M., Rheinboldt, W.C.: Iterative Solution of Nonlinear Equation in Several Variables. Academic Press, New York (1970)

Rice, J.R.: A theory of condition. SIAM J. Numer. Anal. 3, 287–310 (1996)

Sun, J.-G.: Eigenvalues and eigenvectors of a matrix dependent on several parameters. J. Comput. Math. 3, 351–364 (1985)

Wonham, W.M.: Linear Multivariable Control: A Geometric Approach, 2nd edn. Springer, New York (1979)

Wang, S.-S., Chen, B.-S., Lin, T.-P.: Robust stability of uncertain time-delay systems. Int. J. Control 46, 963–976 (1987)

Acknowledgements

This work was partially supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2017R1D1A3B04033516), the National Research Foundation of Korea (NRF) Grant funded by the Korean Government (MSIP) (NRF-2017R1A5A1015722) and the Basic Science Research Program through the National Research Foundation of Korea (NRF-2019R1I1A1A01062548) funded by the Ministry of Education, and the National Natural Science Foundation of China under grant No.11961048, NSF of Jiangxi Province with No.20181ACB20001. The authors thank the anonymous referees for providing very useful suggestions for improving this paper.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

No potential conflict of interest was reported by the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Meng, J., Chen, H., Kim, YJ. et al. A further study on a nonlinear matrix equation. Japan J. Indust. Appl. Math. 37, 831–849 (2020). https://doi.org/10.1007/s13160-020-00421-3

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13160-020-00421-3