Abstract

This paper is concerned with \(H_{\infty }\) filter design for delayed static neural networks with Markovian switching and randomly occurred nonlinearity. The random phenomenon is described by a Bernoulli stochastic variable. Based on the reciprocally convex approach, a lower bound lemma is employed to handle the double- and triple-integral terms in the time derivative of the Lyapunov functional. The optimal performance index is then obtained by solving linear matrix inequalities (LMIs). The result is not only less conservative, but also allows the time derivative of the time delay to be greater than one. Numerical examples with simulation results are provided to illustrate the effectiveness of the developed results.

1 Introduction

Owing to their distributed storage, parallel processing and self-learning ability, neural networks have been successfully applied to signal processing, static image processing, associative memories and other fields. However, practical factors such as time delay [1–3], which is inherent in many physical processes, may significantly degrade the performance of the underlying neural networks. Therefore, the stability of the neural network is a core problem that needs to be considered. When the external states of neurons are taken as the basic variables, neural networks can be transformed into static neural networks. Because of their extensive applications, such as recursive back-propagation neural networks and optimization neural networks, a number of papers have focused on static neural networks [4–10]. Guaranteed generalized \(H_2\) performance state estimation of delayed static neural networks is studied in [4], and an \(H_2\) filter is designed for a class of static neural networks in [5]. Furthermore, guaranteed \(H_{\infty }\) performance state estimation is addressed in [6]. State estimation of static neural networks with delay-dependent and delay-independent criteria is presented in [7]. In [8], by constructing a suitable augmented Lyapunov-Krasovskii functional, the \(H_\infty\) state estimation problem of static neural networks is further investigated. In addition, the stability analysis of static recurrent neural networks has been studied in [9, 10].

On the other hand, Markovian jump systems are well suited to modeling abrupt random changes, such as changes of the operating point, sudden environmental disturbances, and biomedical errors [11, 12]. Many results on stability analysis and filter design for neural networks with Markovian jumping parameters have been reported in [13–23]. However, more attention should be paid to disturbances such as uncertainty caused by randomness. Strictly speaking, any actual system contains random factors. It is worth noting that nonlinear disturbances may occur in a probabilistic way and may change randomly in type. Both time delay and random disturbance have a great influence on the stability of a system, so much research on them has been carried out in [24–30]. For example, an asynchronous \(l_2\)–\(l_\infty\) filter is designed in [24] for discrete-time stochastic systems with sensor nonlinearities. Randomly occurring parameter uncertainties governed by mutually uncorrelated Bernoulli distributed white noise sequences are introduced in [25]. \(H_\infty\) filtering for a class of discrete-time stochastic systems with randomly occurred sensor nonlinearity is studied in [26]. The effect of both the variation range and the distribution probability of the time delay is taken into account in [27]. Stochastic switched static neural networks with randomly occurring nonlinearities and stochastic delay are introduced in [28], where their mean square exponential stability is proved. The problem of mean square asymptotic stability of stochastic Markovian jump neural networks with randomly occurred nonlinearities is solved in [29]. Moreover, the asymptotic stability of stochastic static neural networks with time-varying delays is analyzed in [30].

In this paper, following the reciprocally convex approach [32, 33], which deals with a special type of function combination obtained by applying the inequality lemma to partitioned single integral terms, we quote the lower bound lemma in [5] instead of the Wirtinger inequality in [31] to handle such a linear combination arising from the Lyapunov functional with double- and triple-integral terms. Based on this lemma, we obtain a lower prescribed level of noise attenuation than in [31]. It should be noted that the time derivative of the time delay can be greater than one in this paper. Motivated by the above discussion, the randomly occurred nonlinearity is taken into account by means of a Bernoulli stochastic variable. An \(H_{\infty }\) filter is designed to ensure that the resulting error systems are globally stochastically stable, and the \(H_{\infty }\) performance indices are obtained by solving linear matrix inequalities (LMIs). Finally, numerical examples are given to demonstrate the validity and effectiveness of the proposed approach.

Throughout this paper, \(\mathbb {R}^n\) denotes the n-dimensional Euclidean space. I is the identity matrix. \(||\cdot ||\) denotes the Euclidean norm for vectors. \(A^T\) stands for the transpose of the matrix A. For symmetric matrices X and Y, the notation \(X>Y\) (respectively, \(X\ge Y\)) means that \(X-Y\) is positive definite (respectively, positive semi-definite). The symmetric terms in a symmetric matrix are denoted by \(*\), and \(diag\{Z_1,Z_2,\ldots ,Z_n\}\) denotes a block-diagonal matrix. \(\mathbf {E}\{x\}\) stands for the expectation of the stochastic variable x. \(L_{2}[0, \infty )\) is the space of square integrable vector functions over \([0, \infty )\).

2 Problem description

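Firstly, for \(t\ge 0\), let \(r_t\), taking values in a finite set \(\mathcal {N}=\{1,2,\ldots ,n\}\), be a right-continuous Markov chain defined on a complete probability space \((\Omega ,\mathcal {F}, \mathcal {P})\). Its transition probabilities between different modes are given by

\[\Pr \{r_{t+\Delta }=j\mid r_t=i\}=\left\{ \begin{array}{ll}\pi _{ij}\Delta +o(\Delta ), &{} i\ne j,\\ 1+\pi _{ii}\Delta +o(\Delta ), &{} i=j,\end{array}\right.\]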

where \(\Delta >0\), \(\lim _{\Delta \rightarrow 0}\frac{o(\Delta )}{\Delta }=0\); \(\pi _{ij}\ge 0\), \(\forall i\ne j\), and for \(i\in \mathcal {N}\), \(\pi _{ii}=-\sum _{j=1,j\ne i}^n\pi _{ij}\).
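To make the role of the rates \(\pi _{ij}\) concrete, the following Python sketch samples one path of a finite-state continuous-time Markov chain by drawing exponential holding times with rate \(-\pi _{ii}\) and then choosing the next mode j with probability \(\pi _{ij}/(-\pi _{ii})\). The rate matrix `PI_DEMO`, the function names and the 0-based mode labels are illustrative assumptions and are not taken from the paper.

```python
import random


def is_generator(pi, tol=1e-12):
    """Return True if pi has nonnegative off-diagonal rates and zero row sums."""
    for i, row in enumerate(pi):
        off_diag_ok = all(rate >= 0.0 for j, rate in enumerate(row) if j != i)
        if not off_diag_ok or abs(sum(row)) > tol:
            return False
    return True


def simulate_modes(pi, start, horizon, rng):
    """Sample one path on [0, horizon) as a list of (jump_time, mode) pairs."""
    t, mode = 0.0, start
    path = [(t, mode)]
    while True:
        exit_rate = -pi[mode][mode]
        if exit_rate <= 0.0:  # absorbing mode: no further jumps
            break
        t += rng.expovariate(exit_rate)  # exponential holding time
        if t >= horizon:
            break
        others = [j for j in range(len(pi)) if j != mode]
        weights = [pi[mode][j] for j in others]
        mode = rng.choices(others, weights=weights)[0]
        path.append((t, mode))
    return path


# Illustrative two-mode rate matrix (an assumption, not the paper's data).
PI_DEMO = [[-0.5, 0.5], [0.3, -0.3]]
demo_path = simulate_modes(PI_DEMO, start=0, horizon=50.0, rng=random.Random(0))
```

Each entry of `demo_path` records the time at which the chain enters a mode, so a piecewise-constant signal \(r_t\) can be rebuilt by holding the mode until the next jump time.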

Next, we consider the following static neural networks with Markovian switching and randomly occurred nonlinearity:

where \(x(t)=[x_1(t)~x_2(t)~\cdots ~x_n(t)]^T\in \mathbb {R}^{n}\) is the state vector of the neural network with n neurons, \(A(r_{t})=diag\{a_1(r_{t}),a_2(r_{t}),\ldots ,a_n(r_{t})\}\) is a constant diagonal matrix with \(a_m(r_{t})>0\), \(w(t)\in \mathbb {R}^p\) is the disturbance input belonging to \(L_{2}[0,\infty )\), \(y(t)\in \mathbb {R}^q\) is the measured output, z(t) is the signal to be estimated, and \(f(x(t))=[f_1(x_1(t)),f_2(x_2(t)),\ldots ,f_n(x_n(t))]\) denotes the neuron activation function. For \(r_t\in \mathcal {N}\), \(W(r_{t}),C(r_{t}),D(r_{t}),B_1(r_{t}),B_2(r_{t}),E(r_{t})\) are constant matrices with compatible dimensions, and \(J(r_{t})\) is an external input vector. \(\psi (x(t))=[\psi _1(x_1(t)),\psi _2(x_2(t)),\ldots ,\psi _n(x_n(t))]\) is an output nonlinear signal, and \(\phi (t)\) is a real-valued initial function. d(t) is a time-varying delay with an upper bound \(d>0\) and scalar \(\mu\), such that d(t) satisfies

To simplify the notation, in the sequel, for each \(r_t=i\in \mathcal {N}\), we denote the matrix \(A(r_t)\) by \(A_i\), and so on.

For the neural network (1)–(4), the state estimator is constructed as follows:

where \(\hat{x}(t)\in \mathbb {R}^{n}\) and \(\hat{z}(t)\in \mathbb {R}^q\), and \(K_i\) are the gain matrices to be designed, with compatible dimensions.

Define the error signals as \(\tilde{x}(t)=x(t)-\hat{x}(t)\) and \(\tilde{z}(t)=z(t)-\hat{z}(t)\). It follows from the above discussion that the estimation error systems are

where

The following remarks give a more detailed understanding of the problem.

Remark 1

Markovian switching systems are considered in this paper, but the state of the Markovian switching may differ from the state of the system. For example, many papers [1, 2, 11, 13, 18] have considered impulsive neural network models, which belong to a new category of dynamical systems that is neither purely continuous-time nor purely discrete-time.

Remark 2

According to the hypothesis in [25, 29], \(\alpha (t)\) is a Bernoulli distributed white sequence taking the values 1 and 0, indicating whether the output of the plant y(t) is linear or not, with \(Pr[\alpha (t)=1]=\alpha\), \(Pr[\alpha (t)=0]=1-\alpha\), where \(\alpha \in [0,1]\) is a known constant. For further calculation, we have \(\mathbf {E}\{\alpha (t)-\alpha \}=0\) and \(\mathbf {E}\{(\alpha (t)-\alpha )^2\}=\alpha (1-\alpha )\).

Remark 3

It follows from Remark 2 that \(\alpha (t)\) is not constant but time-varying, which means that the output nonlinear signal appears randomly in the measured output y(t). Therefore, the model is more flexible and can adapt to changes in the working conditions, even in some unexpected situations.
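As a quick numerical illustration of Remarks 2 and 3, the sketch below draws a Bernoulli sequence with \(Pr[\alpha (t)=1]=\alpha\) and compares its sample mean and its mean squared deviation from \(\alpha\) with the standard Bernoulli moments \(\alpha\) and \(\alpha (1-\alpha )\). The sample size, the seed and the helper names are assumptions chosen for illustration; \(\alpha =0.82\) is the value used in Example 2.

```python
import random


def sample_alpha(alpha, n_samples, rng):
    """Draw n_samples Bernoulli values: 1 with probability alpha, else 0."""
    return [1 if rng.random() < alpha else 0 for _ in range(n_samples)]


def empirical_moments(samples, alpha):
    """Return the sample mean and the mean squared deviation from alpha."""
    n = len(samples)
    mean = sum(samples) / n
    spread = sum((s - alpha) ** 2 for s in samples) / n
    return mean, spread


ALPHA = 0.82  # value used in Example 2
draws = sample_alpha(ALPHA, 100_000, random.Random(1))
mean, spread = empirical_moments(draws, ALPHA)
print(f"sample mean = {mean:.4f}, target = {ALPHA}")
print(f"mean squared deviation = {spread:.4f}, target = {ALPHA * (1 - ALPHA):.4f}")
```

With this many samples, both estimates should land close to their targets, which is the property the expectation step of the proof relies on.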

Assumption 1

The activation function f(t) in (1) and nonlinear function \(\psi (t)\) in (2) are both continuous and satisfy

where \(f(0)=0\), \(\psi (0)=0\), \(i=1, 2, \ldots , n\), \(u\ne v\), and \(l_i^-\), \(l_i^+\), \(m_i ^-\), \(m_i ^+\) are real scalars that may be positive, negative, or zero.

Remark 4

From Assumption 1, the bounds of the activation function f(t) can be positive or negative, so the assumption is more general than the usual Lipschitz condition in [7].
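Assuming the conditions in Assumption 1 are the usual sector bounds \(l_i^-\le \frac{f_i(u)-f_i(v)}{u-v}\le l_i^+\) for \(u\ne v\) (and likewise for \(\psi _i\) with \(m_i^-\), \(m_i^+\)), a candidate function can be screened numerically by evaluating difference quotients on a grid. The sketch below is only a necessary check on sampled points, not a proof; the grid and the test functions `math.tanh` and `math.sin` are illustrative choices, not the paper's activation functions.

```python
import math


def slopes(f, points):
    """Yield difference quotients (f(u) - f(v)) / (u - v) over all pairs u != v."""
    for u in points:
        for v in points:
            if u != v:
                yield (f(u) - f(v)) / (u - v)


def satisfies_sector(f, lower, upper, points, tol=1e-12):
    """Check lower <= slope <= upper on the sampled pairs (necessary test only)."""
    return all(lower - tol <= s <= upper + tol for s in slopes(f, points))


grid = [k * 0.25 for k in range(-12, 13)]  # sample points in [-3, 3]

# tanh has slopes in (0, 1], so the bounds 0 and 1 pass and 0 and 0.5 fail.
tanh_ok = satisfies_sector(math.tanh, 0.0, 1.0, grid)
tanh_too_tight = satisfies_sector(math.tanh, 0.0, 0.5, grid)

# sin is not monotone, so it needs a negative lower bound.
sin_nonnegative = satisfies_sector(math.sin, 0.0, 1.0, grid)
sin_signed = satisfies_sector(math.sin, -1.0, 1.0, grid)
```

The `math.sin` case illustrates the point of Remark 4: a non-monotone function only fits the sector description when the lower bound is allowed to be negative.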

Remark 5

Randomly occurring disturbances in neural networks have been studied extensively in the literature [24, 26, 28, 29], where the nonlinear function \(\psi (t)\) satisfies a sector-bounded condition. In this paper, to allow a comparison with [31], we adopt the same assumption on the activation function as in [31]. Here the nonlinear functions f(t) and \(\psi (t)\) satisfy conditions (15)–(16). The stochastic variable \(\alpha (t)\) then defines the occurrence probability of \(\psi (t)\).

The following lemmas will be used in deriving our main results.

Lemma 1

[34] If there exists a function \(v(t):[0,d]\rightarrow \mathbb {R}^n\) such that \(\int ^d_0v^T(s)Xv(s)ds\) and \(\int ^d_0v(s)ds\) are well defined, then the following inequality holds for any symmetric positive definite matrix \(X\in \mathbb {R}^{n\times n}\) and \(d>0\):
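Assuming Lemma 1 is the standard Jensen integral inequality \(d\int ^d_0v^T(s)Xv(s)ds\ge \left( \int ^d_0v(s)ds\right) ^TX\left( \int ^d_0v(s)ds\right)\), the sketch below evaluates the gap between the two sides with a midpoint rule. The matrix `X_DEMO`, the test signals and the helper names are illustrative assumptions; the check is numerical evidence for a particular v, not a proof.

```python
import math


def quad_form(x, mat, y):
    """Return x^T mat y for list-based vectors and matrices."""
    return sum(x[a] * mat[a][b] * y[b] for a in range(len(x)) for b in range(len(y)))


def jensen_gap(v, mat, d, steps=2000):
    """Midpoint-rule value of d*int(v^T X v) - (int v)^T X (int v) over [0, d]."""
    h = d / steps
    dim = len(mat)
    int_v = [0.0] * dim
    int_quad = 0.0
    for k in range(steps):
        vs = v((k + 0.5) * h)
        int_quad += quad_form(vs, mat, vs) * h
        for a in range(dim):
            int_v[a] += vs[a] * h
    return d * int_quad - quad_form(int_v, mat, int_v)


X_DEMO = [[2.0, 0.5], [0.5, 1.0]]  # symmetric positive definite (illustrative)
gap_varying = jensen_gap(lambda s: [math.sin(s), math.cos(2.0 * s)], X_DEMO, d=1.5)
gap_constant = jensen_gap(lambda s: [1.0, -2.0], X_DEMO, d=1.5)
```

The gap is strictly positive for the time-varying signal and vanishes (up to rounding) for the constant one, which is the equality case of the inequality.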

Lemma 2

[5] For a given scalar \(d > 0\), if real matrices S and G satisfy

then with \(e(t)=[x^T(t)\quad \tilde{x}^T(t)]^T\), \(\bar{w}(t)=[w^T(t) \quad w^T(t)]^T\), one has

where

The \(H_\infty\) filtering problem can be stated as follows: given a prescribed level of noise attenuation \(\rho > 0\), design the state estimator such that the following conditions hold.

3 Main results

Theorem 1

For given scalars \(d>0\) and \(\mu\), the resulting estimation error systems (9)–(10) are globally stochastically stable with \(H_{\infty }\) performance \(\rho\) if there exist positive definite matrices \(P_{i1}\), \(P_{i2}\), \(Q_{11i}\), \(Q_{13i}\), \(Q_{21i}\), \(Q_{23i}\), \(Q_{11}\), \(Q_{22}\), \(R_{11}\), \(R_{22}\), \(R_{i11}\), \(R_{i22}\), \(S_{11}\), \(S_{22}\), \(S_{i11}\), \(S_{i22}\), diagonal matrices \(T_i=diag\{t_{i1},t_{i2},\ldots ,t_{in}\}>0\), \(U_i=diag\{u_{i1},u_{i2},\ldots ,u_{in}\}>0\), and matrices \(X_i\), \(Q_{12}\), \(Q_{12i}\), \(Q_{22i}\), \(R_{12}\), \(R_{i12}\), \(S_{12}\), \(S_{i12}\), \(G_i=\left[ \begin{array}{cc}G_{i11} &{} G_{i12}\\ G_{i21} &{} G_{i22} \end{array}\right]\) with appropriate dimensions, such that the following LMIs hold for \(i\in \mathcal {N}\):

where

The gain matrices \(K_i\) can be designed as

Proof

Combining (1)–(4) and (9)–(10), with \(e(t)=[x^T(t)\quad \tilde{x}^T(t)]^T\) and \(\bar{z}(t)=[z^T(t) \quad \tilde{z}^T(t)]^T\), we get the following augmented system governing the estimation error dynamics:

where

We now need to show that the augmented error systems (28)–(29) are globally stochastically stable. To begin the proof, we choose the following Lyapunov functional:

where

Firstly, we define the weak infinitesimal operator \(\mathcal {L}\) as

where \(\mathbf {E}\) is defined as

Then for each \(i\in \mathcal {N}\), according to the weak infinitesimal operator \(\mathcal {L}\), we have the stochastic differential

where

Noting that \(\pi _{ij}\ge 0\), when \(j\ne i\), and \(\pi _{ii}\le 0\), one has

In view of (24), we obtain

From (25), we also have the following calculation:

By Lemmas 1 and 2, it is known that

For any \(T_i=diag\{t_{i1},t_{i2},\ldots ,t_{in}\}>0\), \(U_i=diag\{u_{i1},u_{i2},\ldots ,u_{in}\}>0\), considering the conditions in (15)–(16), similar to [27], we obtain

where

Considering (39)–(45) and noting (5), we take the mathematical expectation of \(\mathcal {L}V(t)\) under conditions (13)–(14) and finally get

Now, we define a function:

Taking (17) into consideration, it is not difficult to obtain

Letting \(\bar{S_i}=\frac{1}{d}S_i+\frac{1}{2}S\), we obtain

where

If \(\tilde{\Sigma }_{1i}<0\), by Schur complement, it follows from (47) that

Then pre- and post-multiplying (48) by \(diag\{I,I,I,I,I,I,I,P_i\bar{S_i}^{-1},P_i\bar{S_i}^{-1}\}\) and its transpose, we get

In view of the fact that \(-P_i\bar{S_i}^{-1}P_i\le -2P_i+\bar{S_i}\), and noting (27), (19)–(23) and (30)–(32), we see that \(\Sigma <0\) implies that (26) holds. Thus, since \(w(t)\ne 0\), we have \(\mathcal {J}<0\), that is, \(\Vert \tilde{z}(t)\Vert _{2}<\rho \Vert w(t)\Vert _{2}\).

When \(\bar{w}(t)=0\), the augmented system will be:

where

We choose the same Lyapunov functional (33) and calculate \(\mathcal {L}V(t)\). In this case, along the same lines as the derivation of (46), we get:

where

Following steps similar to (48)–(49), we get the following matrix inequality:

In view of the fact that \(-P_i\bar{S_i}^{-1}P_i\le -2P_i+\bar{S_i}\), and noting (27), (19)–(23) and (30)–(32), we see that (26) implies that (50) holds. Therefore, the estimation error systems with \(\bar{w}(t)=0\) are stochastically stable. This completes the proof. \(\square\)
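The bound \(-P_i\bar{S_i}^{-1}P_i\le -2P_i+\bar{S_i}\) used twice in the proof follows from a completion of squares. A short derivation, assuming \(P_i\) is symmetric and \(\bar{S_i}>0\) (as the positive definiteness requirements of Theorem 1 suggest), is

\[
0\le (P_i-\bar{S_i})\bar{S_i}^{-1}(P_i-\bar{S_i})=P_i\bar{S_i}^{-1}P_i-2P_i+\bar{S_i}
\quad \Longrightarrow \quad -P_i\bar{S_i}^{-1}P_i\le -2P_i+\bar{S_i}.
\]

The left-hand inequality holds because \(\bar{S_i}^{-1}>0\) and \(P_i-\bar{S_i}\) is symmetric. Replacing the nonlinear term \(-P_i\bar{S_i}^{-1}P_i\) by the affine upper bound \(-2P_i+\bar{S_i}\) yields a sufficient condition that is linear in the unknowns, at the price of some conservatism.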

Remark 6

The measurement model proposed in (2) provides a novel unified framework for the phenomenon of randomly occurred nonlinearities. The stochastic variable \(\alpha (t)\) characterizes the random nature of the nonlinearities: when \(\alpha (t)\ne 0\), it works normally; when \(\alpha (t)=0\), the static neural networks (1)–(4) reduce to the following form in [31]:

The state estimator is constructed as follows:

and we finally get the error systems:

Corollary 1

For given scalars \(d>0\) and \(\mu\) in (5), considering (51)–(54), the resulting error systems (55)–(56) are globally stochastically stable with \(H_{\infty }\) performance \(\rho\) if there exist real matrices \(P_i>0\), \(Q_{1i}>0\), \(Q_{2i}>0\), \(Q>0\), \(R>0\), \(R_i>0\), \(S>0\), \(S_i>0\), a diagonal matrix \(T_i=diag\{t_{i1},t_{i2},\ldots ,t_{in}\}>0\), and matrices \(G_i\) and \(X_i\) such that the following LMIs hold for \(i\in \mathcal {N}\):

where

Then the gain matrices \(K_i\) are designed as \(K_i=P_i^{-1}X_i\).

Remark 7

In [31], the integral terms in the time derivative of the Lyapunov functional are handled by the Wirtinger inequality, which reduces the number of variables at the expense of conservatism; the number of decision variables there is \(\frac{i}{2}(5n^2+9n)+\frac{3}{2}n(n+1)\), where i here denotes the number of modes. In Corollary 1, by employing Jensen's inequality and the reciprocally convex combination technique [32, 33], the lower bound inequality in Lemma 2 is quoted to reduce conservatism, with \(\frac{i}{2}(7n^2+9n)+\frac{3}{2}n(n+1)\) decision variables. The difference in the number of decision variables is \(\Delta N=in^2\), which is caused by the free matrices \(G_i\) introduced in (59) by the reciprocally convex approach. Thus, the reduced conservatism is achieved at the expense of \(\Delta N=in^2\) additional variables, which increases the computational burden.
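The two counts in Remark 7 are easy to evaluate for a given problem size. The sketch below codes both formulas directly, reading i as the number of modes and n as the number of neurons; the function names are hypothetical. For two modes and two neurons it returns 47 and 55, which agrees with the counts quoted in Example 1.

```python
def count_reference_31(modes, n):
    """Count quoted for [31]: (i/2)(5n^2 + 9n) + (3/2)n(n + 1)."""
    # Both products are always even, so integer division is exact.
    return modes * (5 * n * n + 9 * n) // 2 + 3 * n * (n + 1) // 2


def count_corollary_1(modes, n):
    """Count quoted for Corollary 1: (i/2)(7n^2 + 9n) + (3/2)n(n + 1)."""
    return modes * (7 * n * n + 9 * n) // 2 + 3 * n * (n + 1) // 2


for modes, n in [(2, 2), (2, 5), (3, 10)]:
    base = count_reference_31(modes, n)
    ours = count_corollary_1(modes, n)
    print(f"modes={modes}, n={n}: [31] -> {base}, Corollary 1 -> {ours}, extra -> {ours - base}")
```

The extra cost \(in^2\) grows quadratically with the number of neurons, so the overhead of the free matrices \(G_i\) becomes more noticeable for larger networks.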

Remark 8

Note that the filter design problem studied in [31] is a special case of the one considered in this paper, and a much less conservative result is obtained in Corollary 1. It should also be noted that the condition in [31] becomes invalid if the bound \(\mu\) on the delay derivative satisfies \(\mu \ge 1\), whereas in this paper the final condition holds for any \(\mu\) (see Table 3).

4 Numerical examples

Example 1

Consider the neural networks (51)–(54) with the parameters in [31]:

Mode 1:

Mode 2:

Suppose the transition probability matrix is given by

First, we let \(\mu =0.3\) and vary the upper bound d of the time delay with \(\Pi _1\); the results are listed in Table 1. Then, following [31], we take \(\mu =0.8\), \(d=0.9\) and different \(\pi _{22}\) with \(\pi _{11}=-\pi _{12}=-0.5\); the results are shown in Table 2. Finally, we let \(d=0.7\), \(\mu =1.2\) with \(\pi _{11}=-\pi _{12}=-0.5\) and different \(\pi _{22}\); the results are summarized in Table 3, where “–” means that the result is not applicable to the corresponding case. Corollary 1 uses the inequality in Lemma 2 of this paper, which is different from Lemma 2 of [31], so the conservatism of the results is reduced compared with the method in [31]. From these tables, we can see that the prescribed level of noise attenuation \(\rho\) is much lower and that the time derivative of the time-varying delay is no longer required to be smaller than one. The number of decision variables in [31] is 47, while that in Corollary 1 is 55 because of the introduced matrices \(G_1\) and \(G_2\).

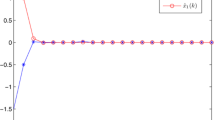

The activation functions are chosen as \(f(W_1x(t-d(t)))=0.25\sin (W_1t)+0.55\) and \(f(W_2x(t-d(t)))=0.2\sin (W_2t)+0.4\), and the noise disturbance is chosen as \(w(t)=e^{-2t}\sin (0.6t)\). When \(\mu =0.3\), \(d=1.2\), \(\rho =0.72\) and \(\Pi _1\) are taken with the initial conditions \(x(0)=[~-1~~2~]^T\), \(\hat{x}(0)=[~0.5~~1~]^T\), the simulation results are plotted in Figs. 1, 2. Note that the values of \(K_1\) and \(K_2\) in Tables 1, 2 are calculated from Corollary 1.
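Since the \(H_\infty\) bound compares \(\Vert \tilde{z}(t)\Vert _{2}\) with \(\rho \Vert w(t)\Vert _{2}\), it can be useful to know the energy of the disturbance itself. The sketch below approximates \(\Vert w\Vert _2^2=\int _0^\infty w^2(t)dt\) for \(w(t)=e^{-2t}\sin (0.6t)\) with a trapezoidal rule and compares it with the closed form \(\frac{1}{8}-\frac{2}{16+1.44}\), obtained from \(\sin ^2\theta =\frac{1-\cos 2\theta }{2}\) and \(\int _0^\infty e^{-at}\cos (bt)dt=\frac{a}{a^2+b^2}\). The truncation horizon, step count and helper names are assumptions made for illustration.

```python
import math


def w(t):
    """Disturbance used in the examples: w(t) = exp(-2t) * sin(0.6t)."""
    return math.exp(-2.0 * t) * math.sin(0.6 * t)


def l2_norm_squared(signal, t_end, steps):
    """Trapezoidal approximation of the integral of signal(t)^2 over [0, t_end]."""
    h = t_end / steps
    total = 0.5 * (signal(0.0) ** 2 + signal(t_end) ** 2)
    total += sum(signal(k * h) ** 2 for k in range(1, steps))
    return total * h


# The integrand decays like exp(-4t), so truncating at t = 10 loses a negligible tail.
energy_numeric = l2_norm_squared(w, t_end=10.0, steps=20_000)
energy_exact = 1.0 / 8.0 - 2.0 / (16.0 + 1.44)
print(f"numeric = {energy_numeric:.8f}, closed form = {energy_exact:.8f}")
```

The same `l2_norm_squared` helper could be applied to a sampled estimation error to form the empirical ratio \(\Vert \tilde{z}\Vert _2/\Vert w\Vert _2\) for comparison with \(\rho\), provided the error is available as a function of time.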

Example 2

Consider the delayed static neural networks (1)–(4) with the following parameters:

Mode 1:

Mode 2:

Suppose the transition probability matrix is given by

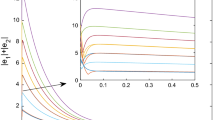

Let \(\alpha =0.82\). For \(\mu =0.4\) and \(\mu =1.1\), we vary the time delay bound d with \(\Pi _2\). The results are presented in Table 4. From Table 4, as the time delay d increases, the optimal \(H_\infty\) performance index \(\rho _{min}\) increases for both values of \(\mu\).

We choose the same activation functions \(f(W_1x(t-d(t)))\) and \(f(W_2x(t-d(t)))\) and noise disturbance w(t) as in Example 1, with initial conditions \(x(0)=[~-1~~2~]^T\), \(\hat{x}(0)=[~0.5~~1~]^T\). When \(\psi (C_1x(t))=0.1\sin (C_1t)+0.4\), \(\psi (C_2x(t))=0.1\sin (C_2t)+0.2\), \(\mu =1.1\), \(d=2.5\), \(\rho =1.7266\) with \(\Pi _2\), the simulation results are plotted in Figs. 3, 4.

Fig. 1 State trajectories, estimation and Markov chain \(r_t\) (Corollary 1)

Fig. 2 Estimation error (Corollary 1)

Fig. 3 State trajectories, estimation and Markov chain \(r_t\) (Theorem 1)

Fig. 4 Estimation error (Theorem 1)

5 Conclusions

This paper has addressed the problem of \(H_{\infty }\) filter design for delayed static neural networks with Markovian switching and randomly occurred nonlinearity. A Bernoulli stochastic variable and the double- and triple-integral terms of the Lyapunov functional are taken into account. In the derivation without the Bernoulli stochastic variable, the double-integral terms are easy to handle and we end up with a smaller prescribed level of noise attenuation. Two numerical examples have demonstrated the effectiveness of the proposed approach. Based on the analysis in this paper, further results can be extended to more complex systems. For example, it is possible to generalize the reciprocally convex approach to asymmetric static neural networks with Markovian jumping or to fuzzy neural networks with Markovian jumping, which would be interesting to investigate in future work.

References

1. Zhu Q, Cao J, Rakkiyappan R (2015) Exponential input-to-state stability of stochastic Cohen-Grossberg neural networks with mixed delays. Nonlinear Dyn 79(2):1085–1098

2. Zhu Q, Rakkiyappan R, Chandrasekar A (2014) Stochastic stability of Markovian jump BAM neural networks with leakage delays and impulse control. Neurocomputing 136(1):136–151

3. Ali MS, Saravanan S (2015) Robust finite-time \(H_\infty\) control for a class of uncertain switched neural networks of neutral-type with distributed time varying delays. Neurocomputing 177:454–468

4. Huang H, Feng G, Cao J (2011) Guaranteed performance state estimation of static neural networks with time-varying delay. Neurocomputing 74(4):606–616

5. Hu D, Huang H, Huang T (2014) Design of an Arcak-type generalized \(H_2\) filter for delayed static neural networks. Circ Syst Signal Pr 33(11):3635–3648

6. Huang H, Huang T, Chen X (2013) Guaranteed \(H_\infty\) performance state estimation of delayed static neural networks. IEEE Trans 60(6):371–375

7. Duan Q, Su H, Wu Z (2012) \(H_\infty\) state estimation of static neural networks with time-varying delay. Neurocomputing 97:16–21

8. Ali MS, Saravanakumar R, Arik S (2016) Novel \(H_\infty\) state estimation of static neural networks with interval time-varying delays via augmented Lyapunov-Krasovskii functional. Neurocomputing 171:949–954

9. Du B, Lam J (2009) Stability analysis of static recurrent neural networks using delay-partitioning and projection. Neural Netw 22(4):343–347

10. Sun J, Chen J (2013) Stability analysis of static recurrent neural networks with interval time-varying delay. Math Comput Model 221:111–120

11. Zhu Q, Cao J (2012) Stability of Markovian jump neural networks with impulse control and time varying delays. Nonlinear Anal Real World Appl 13(5):2259–2270

12. Syed Ali M, Marudaib M (2011) Stochastic stability of discrete-time uncertain recurrent neural networks with Markovian jumping and time-varying delays. Math Comput Model 54(9):1979–1988

13. Zhu Q, Cao J (2012) Stability analysis of Markovian jump stochastic BAM neural networks with impulse control and mixed time delays. IEEE Trans Neural Netw Learn Syst 23(3):467–479

14. Zhou Q, Chen B, Lin C, Li H (2010) Mean square exponential stability for uncertain delayed stochastic neural networks with Markovian jump parameters. Circ Syst Signal Pr 29(2):331–348

15. Wu Z, Shi P, Su H, Chu J (2012) Stability analysis for discrete-time Markovian jump neural networks with mixed time-delays. Expert Syst Appl 39(6):6174–6181

16. Zhu Q, Cao J (2011) Exponential stability of stochastic neural networks with both Markovian jump parameters and mixed time delays. IEEE Trans Cybern 41(2):341–353

17. Balasubramaniam P, Lakshmanan S, Manivannan A (2012) Robust stability analysis for Markovian jumping interval neural networks with discrete and distributed time-varying delays. Chaos Soliton Fract 45(4):483–495

18. Zhu Q (2014) pth moment exponential stability of impulsive stochastic functional differential equations with Markovian switching. J Frankl Inst 351(7):3965–3986

19. Ou Y, Shi P, Liu H (2012) A mode-dependent stability criterion for delayed discrete-time stochastic neural networks with Markovian jumping parameters. Neurocomputing 94:46–53

20. Tian J, Li Y, Zhao J, Zhong S (2012) Delay-dependent stochastic stability criteria for Markovian jumping neural networks with mode-dependent time-varying delays and partially known transition rates. Appl Math Comput 218(9):5769–5781

21. Liu Y, Wang Z, Liu X (2010) Stability analysis for a class of neutral-type neural networks with Markovian jumping parameters and mode-dependent mixed delays. Neurocomputing 73(7):1491–1500

22. Balasubramaniam P, Revathi VM (2014) \(H_\infty\) filtering for Markovian switching system with mode-dependent time-varying delays. Circ Syst Signal Pr 33(2):347–369

23. Ali MS, Arik S, Saravanakumar R (2015) Delay-dependent stability criteria of uncertain Markovian jump neural networks with discrete interval and distributed time-varying delays. Neurocomputing 158:167–173

24. Wu ZG, Shi P, Su H (2014) Asynchronous \(l_2-l_\infty\) filtering for discrete-time stochastic Markov jump systems with randomly occurred sensor nonlinearities. Automatica 50:180–186

25. Li F, Shen H (2015) Finite-time \(H_\infty\) synchronization control for semi-Markov jump delayed neural networks with randomly occurring uncertainties. Neurocomputing 166:447–454

26. Sun T, Su H, Wu Z, Duan Q (2012) \(H_\infty\) Filtering over networks for a class of discrete-time stochastic system with randomly occurred sensor nonlinearity. J Contr Sci Engine 2012

27. Bao H, Cao J (2011) Delay-distribution-dependent state estimation for discrete-time stochastic neural networks with random delay. Neural Netw 24(1):19–28

28. Hu M, Cao J, Hu A (2014) Mean square exponential stability for discrete-time stochastic switched static neural networks with randomly occurring nonlinearities and stochastic delay. Neurocomputing 129:476–481

29. Duan J, Hu M, Yang Y, Guo L (2014) A delay-partitioning projection approach to stability analysis of stochastic Markovian jump neural networks with randomly occurred nonlinearities. Neurocomputing 128:459–465

30. Tan H, Hua M, Chen J, Fei J (2015) Stability analysis of stochastic Markovian switching static neural networks with asynchronous mode-dependent delays. Neurocomputing 151:864–872

31. Shao L, Huang H, Zhao H, Huang T (2015) Filter design of delayed static neural networks with Markovian jumping parameters. Neurocomputing 153:126–132

32. Park P, Ko JW, Jeong C (2011) Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47:235–238

33. Ko JW, Park P (2012) Reciprocally convex approach for the stability of networked control systems. Intell Contr Innov Comput 110:1–9

34. Gu K, Kharitonov VL, Chen J (2003) Stability of time-delay systems. Birkhauser, Massachusetts

Acknowledgments

This work was supported by the National Natural Science Foundation of China under Grants 11301004, 61403002, 61503121, the Natural Science Foundation of Jiangsu Province under Grant BK20130239, the Research Fund for the Doctoral Program of Higher Education of China under Grant 20130094120015, and the Fundamental Research Funds for the Central Universities under Grant 2016B07314.

Cite this article

Cheng, Y., Hua, M., Cheng, P. et al. \(H_{\infty }\) filter design for delayed static neural networks with Markovian switching and randomly occurred nonlinearity. Int. J. Mach. Learn. & Cyber. 9, 903–915 (2018). https://doi.org/10.1007/s13042-016-0613-0
