Abstract

Instead of clustering data points to cluster center points in k-means, k-plane clustering (kPC) clusters data points to the center planes. However, kPC only concerns on within-cluster data points. In this paper, we propose a novel plane-based clustering, called k-proximal plane clustering (kPPC). In kPPC, each center plane is not only close to the objective data points but also far away from the others by solving several eigenvalue problems. The objective function of our kPPC comprises the information from between- and within-clusters data points. In addition, our kPPC is extended to nonlinear case by kernel trick. A determinative strategy using a Laplace graph to initialize data points is established in our kPPC. The experiments conducted on several artificial and benchmark datasets show that the performance of our kPPC is much better than both kPC and k-means.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Clustering [1] is the process of grouping data points into clusters, so that data points within a cluster have high similarity and data points are very dissimilar in the different clusters. Clustering has been applied in many areas, including data mining, statistics, biology, and information retrieval [2–4]. There are many methods for clustering, such as partitioning methods [5–7], hierarchical methods [8, 9], and density-based methods [10, 11]. Many clustering methods [5, 6, 10] aim at clustering data points to several clusters by center points, such as k-means [3, 12] and fuzzy C-means [13], which may perform better when data points distribute as spherical shapes. However, data points may not be clustered around a data point, e.g., a toy example shown in Fig. 1a [14], where each cluster’s data points distribute along a straight line.

k-plane clustering (kPC) [15, 16] is proposed to handle the case that data points fall into clusters around planes. To obtain the final center planes, kPC alternates between center plane updates and cluster assignments. Therefore, in Figure 1, kPC performs better than k-means. However, kPC only consider the within-cluster compactness may leads difficulties. A toy example is given by Fig. 2, in which kPC constructs two overlapped lines that misclassify the data points of cluster 2 into the cluster 1. Hence, kPC fails to find reasonable clusters and ends up estimating the number of clusters to 2. In addition, selecting the initial data points randomly in both k-means and kPC will affect the cluster results in a great extent [17–19]. A set of poor quality initial seeds may lead to a poor quality clustering result.

Recent studies [20–22] show that the between-cluster data points could be used to improve the cluster performance. Therefore, we introduce the between-cluster data points in kPC and propose a novel plane-based clustering, called k-proximal plane clustering (kPPC). Similar to kPC, kPPC also updates the center plane and cluster assignments alternately. The difference is, in the updating process, each center plane is not only close to its data points but also far away from the others, leading to solve several eigenvalue problems. In addition, we extend the above procedure to nonlinear case by kernel trick [14, 23–27]. Compared with kPC, the main advantages of our kPPC are: (1) the center plane in kPPC not only close to its data points but also far away from the others; (2) instead of the random initialization in kPC, kPPC constructs a Laplace graph to give the efficient initial clusters; (3) a nonlinear model of kPPC is also proposed by the kernel trick;

The remainder of this paper is divided into the following parts. Section 2 gives a brief review of the related work. Section 3 presents the details of our kPPC. In Sect. 4, we report the experimental results on both artificial and benchmark datasets. Finally, Sect. 5 concludes the paper.

2 Related works

In this paper, we consider a set of m unlabeled data points in the n-dimensional real space represented by the matrix A in \(R^{m\times n}\). For the clustering problem, the purpose is to cluster A into k clusters with the labels \(y_i\in {N}, i=1,2,\ldots ,m\) represented by the vector \({\mathbf{y}} \in N^{m}\). Next, we briefly review k-means and kPC.

2.1 k-means

k-means [28, 29] wishes to cluster m data points into k clusters so that data points of each cluster lie near the cluster center data point.

k-means keeps each data point from the same cluster being the closest to its cluster center data point, which uses the sum of the squared error to represent the dissimilarity measure by solving the following problem

where \(\mathbf x\) is the data point in space representing a given data point, \({{\mathbf {A}}}_i\) is the cluster center points of the ith cluster, \({{\mathbf {A}}}_i\) contains the rows of \({{\mathbf {A}}}\) that belong to the ith cluster, and \(||\cdot ||_2\) means the \(L_2\) norm.

By using the Lagrangian multiplier method [30, 31], cluster centers points can be updated according to

where \(m_i\) is the number of data points corresponding to \({{\mathbf {A}}}_i\).

After getting the cluster center points, a data point \(\mathbf x\) is labeled by its nearest center as follow

Given a set of m data points, k-means randomly choose k data points as the initial cluster centers. In turn, every data point is assigned to its cluster center by (3). Then, k cluster centers points are updated by (2). The final k clusters are obtained if no redistribution of data points or cluster’s centroid in any cluster.

2.2 kPC

The kPC [15] wishes to cluster \({{\mathbf {A}}}\) into k clusters such that the data points of each cluster lie close to a cluster center plane defined as follows

where \({{\mathbf {w}}}_i \in R^{n}\) is the weighted vector and \(b_i\in R\) is the bias.

kPC keeps each data point from the same cluster being the closest to the same cluster center plane, which leads to solve the following programming problems with \(i=1,2,...,k\).

where \({\mathbf {A}}_i\) contains the rows of \({\mathbf {A}}\) that belong to the ith cluster, \({\mathbf {e}}_i\) is a vector of ones of appropriate dimension. The geometric interpretation is clear, i.e., given the ith cluster, the objective of (5) minimizes the sum of the distances between the data points of the ith cluster and the ith center plane, and the constraint normalizes the normal vector of the center plane. The solution of the problem (5) can be obtained by solving k eigenvalue problems [15].

Once we obtained the k cluster center planes, a data point \(\mathbf {x}\) is assigned to the ith cluster by

Given the original data points \({\mathbf {A}}\), kPC starts from randomly initializing \(({\mathbf {w}}_i,b_i)\), where \(||{\mathbf {w}}_i||_2^2=1\). In turn, every data point is assigned a label by (6). Then, k center planes are updated by solving (5). The final k cluster center planes are obtained if a repeated overall assignment of data points to cluster center plane or a non-decrease in the overall objective function occur.

3 kPPC formulation

3.1 Linear kPPC

For each cluster, kPPC not only requests the objective data points close to the corresponding center plane but also requests the other data points far away from this cluster center plane, which leads to solve the following programming problems with \(i=1,2,...,k\)

where \({\mathbf {A}}_i\) contains the rows of \({\mathbf {A}}\) that belong to the ith cluster and \({\mathbf {B}}_i\) is the data points not belonging to the ith cluster; \(c>0\) is a parameter to weight the proximal term and the aloof term; \({\mathbf {e}}_i\) and \(\bar{{\mathbf {e}}}_{\mathbf {i}}\) are vectors of ones with an appropriate dimensions. Once we obtained the k cluster center planes, the data points can be assigned the same as kPC by (6).

Now, we give the details to solve (7). For \(i=1,2,\ldots ,k\), the problem (7) can be solved by the Lagrangian multiplier method [23, 30, 31] below. The Lagrangian function of (7) is

where \(\lambda\) is the Lagrangian multiplier. Set the partial derivatives of L with respect to \(({\mathbf {w}}_i,b_i)\) to zero, we get the following two equalities:

When \({\mathbf {e}}_i^\top {\mathbf {e}}_i-c{\bar{{\mathbf {e}}}}_i^\top {\bar{{\mathbf {e}}}}_i=0\), \(b_i\) will disappear, and thus there are two cases of the solution of (7):

Case (1). \({{\mathbf {e}}}_i^\top {{\mathbf {e}}}_i-c{\bar{{\mathbf {e}}}}_i^\top {\bar{{\mathbf {e}}}}_i\ne 0\):

where \({\mathbf{Q}} ={\mathbf {A}}_i^\top {{\mathbf {A}}}_i-c{\mathbf {B}}_i^\top {\mathbf {B}}_i +\dfrac{({\mathbf {e}}_i^\top {\mathbf {A}}_i-c{\bar{{{\mathbf {e}}}}}_i^\top {{\mathbf {B}}}_i)^\top ({\mathbf {e}}_i^\top {\mathbf {A}}_i-c{\bar{{\mathbf {e}}}}_i^\top {\mathbf {B}}_i)}{-{\mathbf {e}}_i^\top {\mathbf {e}}_i+c{\bar{{\mathbf {e}}}}_i^\top {\bar{{\mathbf {e}}}}_i}\).

Case (2). \({\mathbf {e}}_i^\top {\mathbf {e}}_i-c{\bar{{\mathbf {e}}}}_i^\top {\bar{{\mathbf {e}}}}_i=0\):

where \(m_i\) is the number of data points corresponding to \({\mathbf {A}}_i\). It is easy to conclude that \({\mathbf {w}}_i\) is the eigenvector of the smallest eigenvalue of the matrix \({\mathbf{Q}}\) in (Eqs. 11, 12) (or \({\mathbf {A}}_i^\top {\mathbf {A}}_i-c{\mathbf {B}}_i^\top {\mathbf {B}}_i\) of (Eqs. 13, 14, 15].

Now, we turn to the initialization of the proposed kPPC. As we know, the initial centers selection is very important in both k-means and kPC [18, 32–35]. Therefore, we propose an effective way to give the initial centers. Specifically, we introduce a similarity graph to initialize the centers. The following steps are adopted:

-

1.

Calculate the P nearest neighbors undirected graph G [36] for \({\mathbf {A}}\) by

$$\begin{aligned} G_{ij}=\left\{ \begin{array}{ll} 1&\quad\text{if }\ {\mathbf {x}}_i\in N(\mathbf {x}_j) ~ \text{ or }\ ~ \mathbf {x}_j\in N(\mathbf {x}_i)\\ 0 &\quad \text{otherwise } \end{array}\right. \end{aligned}$$(15)where \(N(\mathbf {x}_i)=\{\mathbf {x}_i^{(1)},\mathbf {x}_i^{(2)},...\mathbf {x}_i^{(P)}\}\) is the set of its P nearest neighbors. The data points are labeled into \(\bar{c}\) connected components by tagging them the same label if they are connected in G, and different labels if they are not connected.

-

2.

Suppose k is the number of clusters one wanted. If \(\bar{c}<k\), repeatedly find two neighbors with the largest distance and break their neighborhood to divide a connected component into two, until the number of the current connected components is k; if \(\bar{c}>k\), find two connected components of data points with the closest distance and merge them into one repeatedly, until the number of the current connected components is k.

-

3.

Export the current cluster labels as the initial cluster.

According the above procedure, we obtain the following algorithm 1.

We consider the algorithm converges if a repeated overall assignment of data points to cluster center plane or a non-decrease in the overall objective function occur.

3.2 Nonlinear kPPC

When the data points distribution in the nonlinear manifold (such as Figs. 5 and 6), the linear methods may not doing well. In this section, we extend our linear kPPC to manifold clustering by kernel trick. The thought of kernel trick [31, 37, 38] is to map the input into a higher-dimensional feature space with some non-linear transformation. The trick is that this mapping is never given explicitly, but implicitly applied in this newly-mapped data space. The nonlinear kPPC formulation also follows this process.

Instead of the aforementioned linear plane in input space, we consider the following kernel-generated surface [39–41]

where K is an appropriately chosen kernel.

Nonlinear kPPC is to minimize the following programming problems with \(i=1,2,...,k\).

where \({\mathbf {A}}_i\) is the data points of the ith cluster and \({\mathbf {B}}_i\) is the data points not in the ith cluster, \(c>0\) is a parameter to weight the proximal term and the aloof term, \({\mathbf {e}}_i\) and \({\bar{{\mathbf {e}}}}_i\) are vectors of ones of appropriate dimensions. Once we obtained k clustering surfaces, a data point \(\mathbf {x}\) is assigned to the ith cluster by

The above problems (17, 18) also can be solved by the Lagrangian multiplier method below

where \(\lambda\) is the Lagrangian multiplier. Set the partial derivatives of L with respect to \(({\mathbf {w}}_i,b_i)\) Ù:

Case (i). \({\mathbf {e}}_i^\top {\mathbf {e}}_i-c{\bar{{\mathbf {e}}}}_i^\top {\bar{{\mathbf {e}}}}_i\ne 0\):

where \({\mathbf{Q}} =(K({\mathbf {A}}_i,{\mathbf {A}}^\top )^\top K({\mathbf {A}}_i,{\mathbf {A}}^\top )-cK({\mathbf {B}}_i,{\mathbf {A}}^\top )^\top K({\mathbf {B}}_i,{\mathbf {A}}^\top )+ \dfrac{({\mathbf {e}}_i^\top K({\mathbf {A}}_i,{\mathbf {A}}^\top )-c{\bar{{\mathbf {e}}}}_i^\top K({\mathbf {B}}_i,{\mathbf {A}}^\top ))^\top ({\mathbf {e}}_i^\top K({\mathbf {A}}_i,{\mathbf {A}}^\top )-c{\bar{{\mathbf {e}}}}_i^\top K({\mathbf {B}}_i,{\mathbf {A}}^\top ))}{-{{e}}_i^\top {{e}}_i+c{\bar{{{e}}}}_i^\top {\bar{{{e}}}}_i})\).

Case (ii). \({\mathbf {e}}_i^\top {\mathbf {e}}_i-c{\bar{{\mathbf {e}}}}_i^\top {\bar{{\mathbf {e}}}}_i=0\):

where \(m_i\) is the number of data points corresponding to \({\mathbf {A}}_i\).

We also use a similarity graph to construct the initial cluster center planes instead of the randomly initialization for nonlinear kPPC. The process contains the following steps: (1) we choose an appropriately kernel function \(K(\cdot ,\cdot )\) to map the data points into a higher-dimensional feature space; (2) the same strategy as linear kPPC is used to construct the initial center planes. Then, we obtain the algorithm 2.

From algorithm 1 and 2, we can see that the main computational cost in our kPPC is to compute one P nearest neighbors undirected graph G and some eigenvalue decomposition problems. The computational complexity of each is at most O(\(n^3\)). Thus, the complexity of our kPPC is O(\(t*n^3\)), where t is the number of iterations.

The convergence criterion of algorithm 2 is the same as algorithm 1.

4 Experimental results

In this section, to validate our kPPC, experiments are carried out on several artificial datasets and benchmark datasets [42]. We compare our kPPC with other clustering methods, including kmeans [28, 29], kPC [15], and updated versions of DBSCAN [43, 44]. All these methods are implemented by Matlab (2008a) [45] on a PC with an Intel P4 processor (2.5 GHz) with 2 GB RAM. Clustering accuracy and F-measure [46] are used as the performance evaluation criterions, which are defined as follows. Consider \(\widetilde{C}\) = \((\widetilde{c_{1}},...,\widetilde{c_{k}})\) a prediction partition of a clustering method and \(\widetilde{H}\) = \((\widetilde{h_{1}},...,\widetilde{h_{k}})\) is a artificial defined partition of \({\mathbf {A}}\). We refer to a pair of data points \((\mathbf {x}_i,\mathbf {x}_j)\) from \({\mathbf {A}}\) using the following terms:

- SS::

-

if both data points belong to the same cluster of the clustering structure \(\widetilde{C}\) and to the same group of \(\widetilde{H}\).

- SD::

-

if data points belong to the same cluster of \(\widetilde{C}\) and to different group of \(\widetilde{H}\).

- DS::

-

if data points belong to different cluster of \(\widetilde{C}\) and to the same group of \(\widetilde{H}\).

- DD::

-

if data points belong to different cluster of \(\widetilde{C}\) and to different group of \(\widetilde{H}\).

Assuming now that \(f_{11}\), \(f_{10}\), \(f_{01}\) and \(f_{00}\) are the number of SS, SD, DS and DD pairs respectively. Now we can define the following indices to measure the degree of similarity between \(\widetilde{C}\) and \(\widetilde{H}\):

In this paper, the clustering accuracy is computed by the Rand statistic [46] as above.

F-measure [46] is computed as follow:

where \(\widetilde{P}\) is the precision, \(\widetilde{R}\) is the recall.

The parameter c in both linear and nonlinear kPPC is selected from \(\{2^i|i=-8,-7,\ldots ,8\}\). For the nonlinear cluster methods, we choose the RBF kernel and select the kernel parameter p in \(\{2^i|i=-8,-7,\ldots ,8\}\). The parameter P nearest neighbors undirected graph based initialization is selected from \(\{P=1,3,5,7,9\}\).

4.1 Artificial datasets

In this subsection, four artificial datasets are generated. The first two datasets (see Figs. 3a and 4a are composed of three clusters and rest two datasets (see Figs. 5a and 6a are composed of two clusters. The number of data points are 160, 160, 126, and 150, respectively. Figs. 3, 4, 5 and 6b–e show the results of the different cluster methods performed on these datasets. Tables 1 and 2 show the clustering accuracies and the F-measures of these methods, respectively.

The first two datasets are cross-plane type datasets, and we use the linear cluster methods to cluster these datasets. From Figs. 3 and 4 , we observe that both kPC and our linear kPPC are better than k-means and DBSCAN on these two datasets, and our kPPC get the best performance, while kPC performs not as good as our kPPC. This shows that our linear kPPC is more reasonable than the others when the dataset has the linear structure. Table 1 shows that our linear kPPC gives the best performance and both the clustering accuracies and F-measure are highest.

The rest two datasets have nonlinear structure, and we use the nonlinear cluster methods to cluster these datasets. From Figs. 5 and 6, we observe that DBSCAN get the best performance, and our nonlinear kPPC clusters two clusters almost the same as the original datasets, while the other two methods do not perform well in the nonlinear case. This shows DBSCAN and our nonlinear kPPC can catch the distribution of the manifold datasets more precisely. However, DBSCAN achieves better clustering performance than the kPPC in Table 2. As we know, DBSCAN is a density-based spatial clustering technique and can merge the overlapping datasets into one cluster. Therefore, we observe that DBSCAN get the worse performance in Table 1. While we have two non-overlapping datasets and the spatial clustering density of the datasets is uniform in Table 2. Therefore, the clustering effect of DBSCAN is a little better.

4.2 Benchmark datasets

In this subsection, we compare our kPPC with the others in their ability to recover cluster labels [15] on several benchmark datasets listed in Table 3. We use accuracy and F-measure as cluster evaluation criteria in the following tables. Both the random initialization and graph-based initialization k-means, kPC, and kPPC are experimented. For random initialization, all these methods are run ten times, and we record the average accuracy, the standard deviation and the one-run CPU time in Tables 4 and 9. The better results are marked by bold. The p value was calculated by performing a paired t test [47–49] that comparing our kPPC to the other methods under the assumption of the null hypothesis that there is no difference between the dataset accuracy distributions.

From Table 4, it is seen that our kPPC has better ability to recover cluster labels than the other methods, especially the linear kPPC gets the six highest accuracies on these eight datasets, and the accuracy of our kPPC is almost higher than kPC on both random and graph-based initialization. This indicates that our kPPC is better than kPC, which means that the introduction of the between-cluster information can improve the clustering ability of kPC. Furthermore, graph-based clusters are more stable because their standard deviation are always zero, while the bias of random clusters are ranging from 0.02 to 2.17%. Therefore, our graph-based initialized kPPC are more reasonable and stable than randomly initialized kPPC. From Table 5, it is seen that kmeans is more stable than other methods with random initialization, which implies the F-measures of these plane-based methods, includes kPC and kPPC, strongly depend on the initialization. our linear kPPC also gets the five highest F-measures on these eight datasets. From Tables 4 and 5, we can seen that our kPPC owns the highest cluster results compared with the others. One shortcoming of kPPC is that the time consuming is larger than other methods from Table 6.

For the nonlinear case, Tables 7 and 9 have the similar results with the above linear case. Therefore confirm our conclusions further.

4.3 Parameter influence

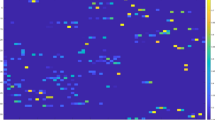

In this subsection, we show the influence of parameters c and p in our kPPC of the above benchmark datasets. Figs. 7 and 8 show the relations between the parameters and the accuracies of our kPPC on these datasets, where Fig. 7 corresponds to the linear kPPC and Fig. 8 corresponds to the nonlinear kPPC, respectively. In Fig. 8, we conduct experiments on four selected benchmark datasets listed in Table 3.

From Fig. 7, we obtain three interesting observations: (1) we know that kPPC with commonly setting parameter \(P=1\) performs better than other values of P, and kPPC often works not well if one increase P from 5, 7, and 9. Therefore, we suggest one to set P smaller to get a better performance. (2) the parameter c has a great influence for the clustering accuracy, and with the increase of c, the clustering accuracy tends to be stable. (3) kPPC performs well when c is relatively small but larger than zero and it can be seen that when \(c=0\), the clustering accuracies are mostly lower. The reason lies in that when \(c=0\), our kPPC degrades to kPC. Therefore, we conclude that the parameter c can be set more flexible, such as from 0 to 64.

There are three parameters c, p, and P in the nonlinear kPPC. From Fig. 8, we can see that (1) the parameter \(P=3\) can makes nonlinear kPPC perform better than other values of P. (2) both c and p have great influence for the clustering accuracy. Moreover, \(c \in [1,64]\) may be a good option to obtain a well performance. (3) the best accuracy of the different datasets is obtained with different p, and \(p \in [0.02,32]\) often makes it perform well.

5 Conclusions

In this paper, we have proposed an improved version of kPC, called kPPC. In kPPC, each cluster center plane is not only close to the objective data points but also far away from the other data points. Our kPPC has been extended to nonlinear clustering. In addition, the initial labels of the data points in kPPC are computed efficiently by a Laplacian graph. Preliminary experiments confirm the merits of our kPPC. Our kPPC Matlab codes can be downloaded from: http://www.optimal-group.org/Resource/kPPC.html. For the future work, it seems worth to find the way to explore the localized representations of the plane such as [50, 51], and use other methods to construct the graph such as [52]. Also, extending kPPC by using other plane-based classifiers such as [53, 54] and by considering fuzzy clustering algorithm such as fuzzy linear c-means [55], fuzzy c-lines [56], and fuzzy c-varieties [57] are also interesting.

References

Han J, Kamber M (2006) Data mining concepts and techniques. Morgan Kaufmann, San Francisco

Wang Z, Shao Y, Bai L et al (2015) Twin support vector machine for clustering. IEEE Trans Neural Netw Learn Sys 26(10):2583–2588

Anderberg M (1973) Cluster analysis for applications. Academic Press, New York

Aldenderfer M, Blashfield R (1985) Cluster analysis. Sage Publications, Los Angeles

Andrews H (1972) Introduction to mathematical techniques in pattern recognition. Wiley, New York

Celeux G, Govaert G (1995) Gaussian parsimonious clustering models. Pattern Recognit 28(5):781–793

Jain A, Dubes R (1988) Algorithms for clustering data. Englewood Cliffs, NJ

Fisher D (1987) Knowledge acquisition via incremental conceptual clustering. Mach Learn 2(2):139–172

Hassoun M (1995) Fundamentals of artificial neural networks. MIT, Cambridge

Bradley P, Mangasarian O, Street W (1997) Clustering via concave minimization. Adv Neural Inf Process Syst 9:368–374

Rao M (1987) Cluster analysis and mathematical programming. Am Stat Assoc 66(335):622–626

Selim S, Ismail M (1984) K-means-type algorithms: a generalized convergence theorem and characterization of local optimality. IEEE Trans Pattern Anal Mach Intell PAMI 6(1):81–87

Bezdek JC, Hathaway RJ, Sabin MJ, Tucker WT (1987) Convergence theory for fuzzy c-means: counterexamples and repairs. Syst Man Cybern IEEE Trans 17(5):873–877

Mangasarian O, Wild E (2006) Multisurface proximal support vector classification via generalize eigenvalues. IEEE Trans Pattern Anal Mach Intell 28(1):69–74

Bradley P, Mangasarian O (2000) k-Plane clustering. J Glob Optim 16(1):23–32

Tseng P (2000) Nearest q-flat to m points. J Optim Theory Appl 105(1):249–252

Amaldi E, Coniglio S (2013) A distance-based point-reassignment heuristic for the k-hyperplane clustering problem. Eur J Oper Res 227(1):22–29

Rahman MA, Islam MZ, Bossomaier T (2014) Denclust: a density based seed selection approach for k-means. Artif Intell Soft Comput 8468:784–795

Bezdek JC, Pal NR (1998) Some new indexes of cluster validity. Syst Man Cybernet Part B Cybern IEEE Trans 28(3):301–315

Li C, Kuo B, Chin T (2011) Lda-based clustering algorithm and its application to an unsupervised feature extraction. Fuzzy Syst IEEE Trans 19(1):152–163

Pang Y, Wang S, Yuan Y (2014) Learning regularized lda by clustering. Neural Netw Learn Syst IEEE Trans 25(12):2191–2201

Yang ZM, Guo YR, Li CN, Shao YH (2015) Local k-proximal plane clustering. Neural Comput Appl 26(1):199–211

Shao Y, Deng N, Chen W, Wang Z (2013) Improved generalized eigenvalue proximal support vector machine. IEEE Signal Process Lett 20(3):213–216

Shao Y, Zhang C, Wang X, Deng N (2011) Improvements on twin support vector machines. IEEE Trans Neural Netw 22(6):962–968

Shao Y, Chen W, Deng N (2014) Nonparallel hyperplane support vector machine for binary classification problems. Inf Sci 263:22–35

Qi Z, Tian Y, Shi Y (2012) Twin support vector machine with universum data. Neural Netw 36:112–119

Qi Z, Tian Y, Shi Y (2012) Robust twin support vector machine for pattern classification. Pattern Recognit 46(1):305–316

Han J, Kamber M (2006) Data mining: concepts and techniques, 2nd edn. China Machine Press, Beijing

Ding C, He X (2004) K-means clustering via principal component analysis, in: Proceedings of the twenty-first international conference on machine learning, ACM, p 29

Scarborough J (1958) Numerical mathematical analysis, 4th edn. Johns Hopkins Press, New York

Deng N, Tian Y, Zhang C (2013) Support vector machines: optimization based theory, algorithms, and extensions. CRC Press, Boca Raton

Naldi M, Campello R (2014) Evolutionary k-means for distributed datasets. Neurocomputing 127(3):30–42

Bradley P, Fayyad U (1998) Refining initial points for k-means clustering, in: Proceedings of the 15th international conference on machine learning (ICML98), pp. 91–99

Fayyad U, Reina C, Bradley B (1998) Initialization of iterative refinement clustering algorithms In: Proc 14th Intl Conf on machine learning (ICML), pp. 194–198

Shao Y-H, Bai L, Wang Z, Hua X-Y, Deng N-Y (2013) Proximal plane clustering via eigenvalues. Proc Comput Sci 17:41–47

Luxburg U (2007) A tutorial on spectral clustering. Stat Comput 17(4):395–416

Hathaway RJ, Bezdek JC, Huband JM (2005) Kernelized non-euclidean relational c-means algorithms. Neural Parallel Sci Comput 13(3):305–326

Scholköpf B, Smola A (2002) Learning with kernels. MIT Press, Cambridge, MA

Shao Y, Deng N (2012) A coordinate descent margin based-twin support vector machine for classification. Neural Netw 25:114–121

Qi Z, Tian Y, Shi Y (2012) Laplacian twin support vector machine for semi-supervised classification. Neural Netw 35:46–53

Shao Y-H, Wang Z, Chen W-J, Deng N-Y (2013) A regularization for the projection twin support vector machine. Knowl Based Syst 37:203–210

Blake CL, Merz CJ (199 8) UCI repository for machine learning databases. http://www.ics.uci.edu/mlearn/MLRepository.html. Accessed Jan 2015

Derya B, Alp K (2007) ST-DBSCAN: an algorithm for clustering spatial–temporal data. Data Knowl Eng 60(1):208–221

Zhou A, Zhou S, Cao J, Fan Y, Hu Y (2000) Approaches for scaling DBSCAN algorithm to large spatial databases. J Comput Sci Technol 15(6):509–526

The MathWorks Inc (1994–2001) Matlab, User’s guide. http://www.mathworks.com

Halkidi M, Batistakis Y, Vazirgiannis M (2001) On clustering validation techniques. Intell Inf Syst J 17:107–145

Demsar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mac Learn Res 7(1):1–30

Hodges J Jr, Lehmann EL (1956) The efficiency of some nonparametric competitors of the t-test. Ann Math Stat 27(2):324–335

Hollander M, Wolfe D, Chicken E (1973) Nonparametric statistical methods, 2nd edn. Wiley, New York

Wang Y, Jiang Y, Wu Y, Zhou Z (2011) Localized k-flats. In: Proceedings of the twenty-fifth AAAI conference on artificial intelligence, pp. 525–530

Huang P, Zhang D (2010) Locality sensitive c-means clustering algorithms. Neurocomputing 73:2935–2943

Yang B, Chen S (2010) Sample-dependent graph construction with application to dimensionality reduction. Neurocomputing 74:301–314

Tian Y, Shi Y, Liu X (2012) Recent advances on support vector machines research. Technol Econ Dev Econ 18(1):5–33

Shao Y-H, Deng N-Y, Chen W-J (2013) A proximal classifier with consistency. Knowl Based Syst 49:171–178

Bezdek JC, Gunderson R, Ehrlich R, Meloy T (1978) On the extension of fuzzy k-means algorithms for detection of linear clusters. Decision and control including the 17th symposium on adaptive processes 17(1):1438–1443

Bezdek JC, Coray C, Gunderson R, Watson J (1981) Detection and characterization of cluster substructure i. linear structure: fuzzy c-lines. SIAM J Appl Math 40(2):339–357

Bezdek JC, Coray C, Gunderson R, Watson J (1981) Detection and characterization of cluster substructure ii. fuzzy c-varieties and complex combinations thereof. SIAM J Appl Math 40(2):358–372

Acknowledgments

This work is supported by the National Natural Science Foundation of China (Nos. 11201426, 11371365, and 11501310), the Zhejiang Provincial Natural Science Foundation of China (Nos. LY15F030013, LQ14G010004, and LY16A010020), the National Statistical Science Research Project of China (No. 2013LZ13), and the Natural Science Foundation of Inner Mongolia Autonomous Region of China (No. 2015BS0606).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Liu, LM., Guo, YR., Wang, Z. et al. k-Proximal plane clustering. Int. J. Mach. Learn. & Cyber. 8, 1537–1554 (2017). https://doi.org/10.1007/s13042-016-0526-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13042-016-0526-y