Abstract

Computer-assisted retinal vascular segmentation is crucial because it aids in the diagnosis of disorders such as diabetic retinopathy. Retinal image segmentation faces several problems, including the appearance of pseudo vascularization, the difficulty of detecting thin vessels, and the enhancement of low-resolution images. This study proposes a new unsupervised method for retinal vascular segmentation that achieves high detection accuracy compared with previous studies. The proposed method performs well without prior training or tuning. It is based on an efficient hybrid combination of several well-known intensity transformations and filters, followed by an adaptive thresholding algorithm. First, contrast limited adaptive histogram equalization (CLAHE) and bottom-hat (BTH) filtering are applied to increase the contrast between the vessels and the fundus background. To bring out the vessel tree structure against a non-uniform image background, Jerman filtering is performed. Then, reconstruction processes, bowler-hat (BLH) filtering, and the generated field of view (FoV) mask are applied to preserve image details and remove noise. Finally, an adaptive threshold is used to classify vessel and non-vessel pixels. The proposed segmentation model has been evaluated on the open-access STARE and DRIVE databases, reaching accuracies of 0.9618 and 0.9586 and specificities of 0.9810 and 0.9874, respectively. The suggested segmentation method proved more accurate and more efficient than some other current methods.


1 Introduction

Ophthalmologists worldwide rely on fundus images to diagnose and treat various eye diseases. It has therefore become essential to apply digital image processing to retinal images in order to analyze the features of the retina, optic disc, blood vessels, and macula. Computer-based image analysis has greatly contributed to detecting retinal abnormalities such as bleeding, aneurysms, secretions, and disc disorders. In addition, this analysis helps diagnose and predict certain serious conditions, such as diabetes, glaucoma, blindness, and macular edema (Mookiah et al. 2021; Abdulsahib et al. 2021). The segmentation of vessel structures from fundus images is often performed manually, which is tedious, time-consuming, and error-prone, especially for large population screening. The class of most pixels is apparent to an ophthalmologist. However, some pixels, such as those at vessel borders, those of vessels near pathologies, and those of small vessels, are difficult to classify. Therefore, methods that reduce the amount of manual supervision while increasing speed and accuracy are needed.

In recent years, many research efforts have been made to address the problem of retinal image segmentation. In the literature, segmentation methods are categorized into supervised or unsupervised approaches.

Supervised techniques use a set of samples, called the training set, to train a classifier that distinguishes between vessel and non-vessel pixels and produces an optimal predictive model. These techniques have branched into machine learning and deep learning algorithms. For example, random forest, support vector machine (SVM), k-nearest neighbors (KNN), and artificial neural networks (ANN) are some of the classifiers used in the medical field. Deep learning is considered the most effective technique because it overcomes limitations of conventional classification algorithms: it can exploit raw data through an automatic feature extractor, without handcrafted features (Balasubramanian and Ananthamoorthy 2021; Saravanan et al. 2022). However, a high-performance deep learning model depends on many training samples with corresponding annotations, which are not consistently available, particularly for retinal vessel segmentation.

On the other hand, unsupervised segmentation methods operate without prior knowledge of the image to be segmented. The most common methods of unsupervised segmentation of retinal vessels can be classified as vessel tracking, matched filtering, multi-scale, model-based morphology and machine learning. Many recent research papers have discussed these methods in detail (Mookiah et al. 2021; Qureshi et al. 2019). Unsupervised methods offer higher speed and lower computational complexity than supervised methods. Although the accuracy of unsupervised segmentation methods has improved substantially in previous works, these methods still need further accuracy gains and face fundamental problems such as computational complexity, hyperparameter tuning, large memory requirements, and specialized hardware (Qureshi et al. 2019). In addition, the appearance of pseudo vascularization and the enhancement of low-resolution images are common problems for both unsupervised and supervised methods. They are attributed to issues that may arise during image acquisition, such as the type of camera, noise, blur, and the patient’s movement.

These issues can be addressed with high performance through a hybrid combination of several intensity transformations and filters. This paper proposes an unsupervised approach for retinal blood vessel segmentation that extracts good vessel segmentation images without prior training or tuning. The following research questions indicate the focus of the research and clarify the contribution of our study. First, what are the main contributions of the suggested method? Second, how does the proposed method improve low and varying contrast and eliminate noise? Finally, how do we prevent background pixels from overlapping in segmentation and reduce the mathematical operations? Our contributions and the strong points of this paper are as follows:

- An unsupervised method for retinal blood vessel segmentation that provides high detection accuracy is developed. It is based on a new hybrid combination of several well-known tools.
- A new method to create the mask is proposed to reduce the mathematical operations and increase the segmentation’s effectiveness. In addition, it enables us to bypass the masks supplied with the databases, which are usually not properly prepared.
- Contrast enhancement of the retina image and detail preservation are performed using CLAHE and BTH filtering.
- Extraction of the vessel tree structure is performed with Jerman filtering. This hybridization of filters overcomes the difficulty, found in other methods (Wang et al. 2019), of choosing suitable filters to eliminate poor contrast in retina images.
- Denoising of the improved retinal image and enhancement of the appearance of delicate vessels are achieved using the reconstruction operations and BLH filtering.
- To prevent background pixels from overlapping in segmentation, the generated mask is applied as a final enhancement step on the retina image.
- Adaptive thresholding is carried out to binarize the enhanced images obtained through the different phases. Therefore, the proposed method is more effective than other methods in terms of segmentation accuracy.

The remainder of this paper is organized as follows. Section 2 reviews related work in retinal blood vessel segmentation. Section 3 explains basic concepts that help understand the proposed methodology. Section 4 provides a detailed explanation of the steps followed in the proposed method. Section 5 presents the experiment and evaluation parts, and Section 6 presents the results and discussions of the paper. Finally, Section 7 concludes the paper.

2 Related works

Segmentation and analysis of blood vessels by image processing are crucial in various fields of medicine. Several researchers have provided efficient algorithms for extracting and analyzing retinal vessels in fundus images, which fall into two general categories: supervised and unsupervised. This section briefly discusses retinal vessel segmentation using unsupervised methods to give an overview of the various approaches.

In the literature, (Azzopardi et al. 2015) proposed a filter that selectively responds to vessel structures. It is based on a combination of shifted filter responses (COSFIRE) to detect the vessel tree. The validation of this method on open-access data sets showed its efficiency in reducing computational complexity. In addition, (Lázár and Hajdu 2015) suggested a segmentation method that combines a region growing procedure with a hysteresis thresholding scheme, which depends on the response of a similarity vector of adjacent pixels. Furthermore, (Soomro et al. 2018) used principal component analysis (PCA) prior to gray-scale conversion to achieve a considerable improvement in sensitivity. Varying scales of these components were employed for normalization, followed by anisotropic diffusion to target narrow vessels. However, the main criticism of the use of denoisers for restoring the fundus image is their inability to address both the additive and multiplicative noise cases simultaneously. Alternatively, a suitable approach may be to identify and address the dominant noise case only. In this regard, it can be argued that speckle, owing to its multiplicative nature and dense concentration, affects the structural details more than the systematic additive noise does. Hence, prioritizing the removal of speckle patterns from the retinal fundus image can significantly improve its quality. A recent study (Khawaja et al. 2019) demonstrated such an approach, whereby a state-of-the-art speckle denoiser, namely the probabilistic patch based (PPB) denoiser (Deledalle et al. 2009), was used to improve the performance of an unsupervised retinal vessel segmentation framework. This scheme detected small and large vessels separately, though the PPB denoiser was essentially used to improve the detection of large vessels.

Furthermore, some research has focused on improving retinal image contrast (Da Rocha et al. 2020). For example, (Wang et al. 2019) suggested an unsupervised segmentation method, based on corrected morphological and fractal dimensions, to improve the texture between retinal vessels and the background in a fundus image. First, the morphological factor combined with linear structural elements removes lesions and light reflections. Then, the blood vessels are extracted using multi-threshold and fractal dimensions. The method has been tested on the STARE, DRIVE, and HRF public databases. (Neto et al. 2017) suggested improving the contrast of the inverted green layer of the raw image and applying a local threshold based on the cumulative distribution; the results are then refined with morphological processes that reduce noise and residual harshness in the image. Another way to improve the fundus image of the retina (Wang et al. 2021) is to simultaneously correct the lighting, improve details, and suppress noise. This method divides the raw image into three layers: uneven lighting is corrected in the base layer, and weighted fusion improves details and suppresses noise and artifacts. Among the most recent articles, (Mahtab and Hossein 2022) developed an enhanced active contour method that extracts thin vessels and ridges very well using a discrete wavelet transform for energy reduction. Using the minimization formula, segmentation is divided into two regions: foreground and background. They found that foreground pixels matter more than background pixels and therefore altered the minimization formulation to give the foreground greater importance. The second part of the innovation is an optimization procedure that replaces reinitialization. Because the iterated evolution might sometimes become unstable, they employed an optimization formula to retain the contour on the image’s edges. The efficacy of the method was assessed on the DRIVE database. The obtained results show the limits of the method in providing high detection accuracy.

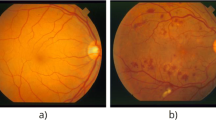

3 Preliminary

As mentioned above, the proposed method for retinal vascular segmentation is a hybrid combination of several well-known tools. In this section, we present the mathematical tools and techniques used in our segmentation method to enable the reader to assess our results. The image of the retina consists of three channels: red, green, and blue (Fig. 1). The red channel is oversaturated, and the blue one is too poorly lit to reveal the blood vessels. The green channel, on the other hand, is considered the best suited for vessel detection because of the large contrast between the blood vessels and their background. Thus, the image analysis is conducted on the green channel (Azzopardi et al. 2015).

3.1 Contrast limited adaptive histogram equalization (CLAHE)

CLAHE is a method widely used in the processing of biomedical images and signals. Its main interest lies in making the relevant portions of an image more visible. CLAHE is an improved type of adaptive histogram equalization (AHE) (Pizer et al. 1987) developed by Zuiderveld (Zuiderveld 1994). CLAHE divides an image into small contextual regions called tiles and applies histogram equalization over each tile; limiting the contrast by clipping each tile's histogram reduces the noise amplification problem of AHE. Figure 2 illustrates contrast enhancement with CLAHE: the top image is shown before enhancement, and the bottom image after enhancement, where lighting correction has increased the luminance and brightness of the image.
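The clipping idea can be sketched on a single tile. The function below is a minimal illustration, not the implementation used in the experiments: the name `clipped_equalize` and the convention that `clip_limit` is an absolute pixel count per histogram bin are assumptions, and the interpolation that full CLAHE performs between neighboring tiles is omitted.

```python
def clipped_equalize(tile, clip_limit, levels=256):
    """Equalize one tile using a clipped histogram (core CLAHE step only)."""
    # Histogram of the integer gray levels in the tile.
    hist = [0] * levels
    for row in tile:
        for p in row:
            hist[p] += 1

    # Clip every bin at clip_limit and spread the excess uniformly,
    # which bounds the slope of the mapping and so limits noise amplification.
    excess = sum(max(h - clip_limit, 0) for h in hist)
    hist = [min(h, clip_limit) + excess / levels for h in hist]

    # The cumulative distribution becomes the gray-level lookup table.
    total = sum(hist)
    lut, running = [], 0.0
    for h in hist:
        running += h
        lut.append(round((levels - 1) * running / total))
    return [[lut[p] for p in row] for row in tile]
```

Applied to a low-contrast tile such as `[[100, 101], [102, 103]]`, the mapping spreads the four gray levels over a much wider range while keeping their order.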

3.2 Morphological operation

Morphological image processing is a mathematical tool that modifies or extracts information about the shape and structure of objects inside the image (I). A small matrix known as the structuring element (S) is used to accomplish this processing. In general, the structuring element (S) is a matrix that contains only (0) and (1) and can have any size and shape. There are many morphological operations, the basic ones being dilation \((\oplus )\) and erosion \((\ominus )\). Other morphological operations are built on combinations of these two, for instance, morphological opening \((\circ )\) and closing \((\cdot )\) (Koschan and Abidi 2008), which are defined as:

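Dilation

\[(I \oplus S)(x, y) = \max _{(i, j) \in S} I(x - i, y - j)\]

Erosion

\[(I \ominus S)(x, y) = \min _{(i, j) \in S} I(x + i, y + j)\]

Opening

\[I \circ S = (I \ominus S) \oplus S\]

Closing

\[I \cdot S = (I \oplus S) \ominus S\]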

where the opening operation \((\circ )\) preserves dark patterns and features while suppressing bright ones, and the closing operation \((\cdot )\) preserves bright features while suppressing dark ones.
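The four operations can be sketched for a grayscale image stored as a list of rows. This is an illustrative sketch under simplifying assumptions: the structuring element is a flat square of side `size`, pixels outside the image are ignored, and the function names are chosen here for readability.

```python
def _window_filter(img, size, pick):
    """Apply pick (min or max) over a size x size window around each pixel."""
    h, w, r = len(img), len(img[0]), size // 2
    return [[pick(img[j][i]
                  for j in range(max(y - r, 0), min(y + r + 1, h))
                  for i in range(max(x - r, 0), min(x + r + 1, w)))
             for x in range(w)] for y in range(h)]


def dilate(img, size=3):
    return _window_filter(img, size, max)


def erode(img, size=3):
    return _window_filter(img, size, min)


def opening(img, size=3):
    # Erosion followed by dilation: removes small bright details.
    return dilate(erode(img, size), size)


def closing(img, size=3):
    # Dilation followed by erosion: fills small dark details.
    return erode(dilate(img, size), size)
```

On a dark image with one isolated bright pixel, `opening` removes the pixel; on a bright image with one isolated dark pixel, `closing` fills it while `opening` leaves it in place.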

3.2.1 Bottom-hat (BTH) filtering

In digital image processing and mathematical morphology, bottom-hat (BTH) filtering is used for tasks such as background equalization, image enhancement, and feature extraction. It is used here because it effectively enhances the retinal vessels, which appear dark against a lighter background. BTH filtering subtracts the input image (I) from the result \(\phi _{S}(I)\) of a morphological closing operation \((\cdot )\) on the input image (I). The closing performs a dilation \((\oplus )\) followed by an erosion \((\ominus )\), which fills holes and connects nearby objects. The equation of the BTH filtering is given as follows:

\[BTH(I) = \phi _{S}(I) - I = (I \cdot S) - I\]

In the suggested method, the BTH filter is used immediately after the extraction of the green channel. Its role is to prepare and enhance the appearance of the image components, mainly the vessels. The filter settings were chosen after many experiments; in the end, a square structuring element of size 11 was selected because it gives satisfactory results.
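As a minimal sketch of the bottom-hat idea, the closing of the image minus the image itself can be written as follows. The flat square structuring element matches the shape described above; the border handling (out-of-image pixels ignored) and the function names are assumptions made for this illustration.

```python
def _window_filter(img, size, pick):
    """Apply pick (min or max) over a size x size window around each pixel."""
    h, w, r = len(img), len(img[0]), size // 2
    return [[pick(img[j][i]
                  for j in range(max(y - r, 0), min(y + r + 1, h))
                  for i in range(max(x - r, 0), min(x + r + 1, w)))
             for x in range(w)] for y in range(h)]


def bottom_hat(img, size=11):
    """Closing (dilation then erosion) minus the input image."""
    closed = _window_filter(_window_filter(img, size, max), size, min)
    return [[c - p for c, p in zip(closed_row, img_row)]
            for closed_row, img_row in zip(closed, img)]
```

A dark one-pixel-wide line on a bright background is filled by the closing, so the difference is large on the line and zero elsewhere, which is why dark vessels come out bright after this step.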

3.2.2 Bowler-hat (BLH) filtering

The BLH filter combines the outputs of morphological opening operations executed on the image (I) in two parallel tracks that use different structuring elements (Sazak et al. 2019). The first track uses disk-shaped structuring elements \((S_{d})\) of varying diameter (d) and is obtained as:

where \((S_{d})\) is the disk-shaped structuring element of diameter \(d \in \left[ 1, d_{\max }\right]\), and \(d_{\max }\) is the expected maximum vessel size, a parameter specified by the user. The second track uses line-shaped structuring elements \((S_{d, \theta })\) of varying length (d) and rotation \((\theta )\), and it can be written as:

where \((S_{d, \theta })\) is the line-shaped structuring element; each line has length \(d \in \left[ 1, d_{\max }\right]\), a width of 1 pixel, and orientation \(\theta \in [0,180)\).

Then, the results of the second track are subtracted from those of the first track, and the two tracks are combined as follows:

To test the effectiveness and the capacity of this filter in terms of promoting the appearance of the vessels, the same experiments were repeated, in the same way as with the previous filter, in order to prepare the image for the segmentation step.
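The two-track construction can be sketched as below. This is an illustration under stated assumptions rather than the authors' implementation: the line track takes the maximum over orientations, the final response takes the maximum over scales of the absolute difference between the tracks (our reading of the bowler-hat transform), only `n_angles` evenly spaced orientations are used, and pixels outside the image are ignored.

```python
import math


def _morph(img, offsets, pick):
    """Min (erosion) or max (dilation) over the offsets of a structuring element."""
    h, w = len(img), len(img[0])
    return [[pick(img[y + dy][x + dx] for dy, dx in offsets
                  if 0 <= y + dy < h and 0 <= x + dx < w)
             for x in range(w)] for y in range(h)]


def _opening(img, offsets):
    return _morph(_morph(img, offsets, min), offsets, max)


def disk(d):
    """Offsets of a disk of diameter d."""
    r = d / 2
    k = int(r)
    return [(dy, dx) for dy in range(-k, k + 1) for dx in range(-k, k + 1)
            if dy * dy + dx * dx <= r * r]


def line(d, theta_deg):
    """Offsets of a one-pixel-wide centered line of length about d."""
    t = math.radians(theta_deg)
    half = d // 2
    return sorted({(round(s * math.sin(t)), round(s * math.cos(t)))
                   for s in range(-half, half + 1)})


def bowler_hat(img, d_max, n_angles=4):
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for d in range(1, d_max + 1):
        by_disk = _opening(img, disk(d))
        by_lines = [_opening(img, line(d, 180 * k / n_angles))
                    for k in range(n_angles)]
        for y in range(h):
            for x in range(w):
                by_line = max(opened[y][x] for opened in by_lines)
                out[y][x] = max(out[y][x], abs(by_line - by_disk[y][x]))
    return out
```

A thin bright line survives the line openings along its own direction but not the disk openings, so it receives a strong response; the interior of a large bright blob survives both tracks and its response stays at zero.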

3.3 Jerman filtering

Jerman filtering (Jerman et al. 2016) builds on earlier enhancement filters (Frangi et al. 1998; Sato et al. 2000; Li et al. 2003; Erdt et al. 2008; Zhou et al. 2007). This filter differentiates between local structures using the second-order intensity derivatives that form the Hessian matrix at each point of the image I(x, y). To enhance local structures of different sizes, the analysis is usually carried out on a Gaussian scale space (Voorn et al. 2013) of the image I(x, y).

The Hessian matrix of (2D) image I(x, y) at a scale (s) is defined as:

The eigenvectors of a Hessian matrix explain the main local directions of curvature with eigenvalues \((\lambda )\) corresponding to their magnitude. Selective improvement and enhancement of local image structures, based on background brightness versus shape and foreground, can be produced by studying and analyzing the magnitudes and the signs of Hessian eigenvalues. Consequently, the latter can distinguish between tube-like, blob-like, and plane-like features in a data set by decomposing the eigenvalue of the Hessian matrix. The contrast is maximized by harmonizing the enhancement function with eigenvalues, and it is performed by multiscale filtering on the extracted vessels of different scales (s). The scaling factor (s) values are determined according to the expected maximum and minimum size of the structures of interest. The modified Frangi enhancement function for 2D images is given by:

In the Frangi filtering (Frangi et al. 1998), the improvement function is proportional to the magnitude of the squared eigenvalues. A modified form of Jerman filter for (2D) vascularity enhancement is given by:

where \(\lambda _{\rho }(s)\) is defined as:

where \((\tau )\) is tuned to obtain the enhancement independently of the relative brightness of the structures. A value of \((\tau )\) between zero and one is usually chosen. A decrease in \((\tau )\) generally leads to an increase in the enhancement function, since there is a higher probability that \((\lambda _{2}>\lambda _{\rho }/2)\), for which the enhancement function is set to (1). The result is, in general, a more consistent response to the bright structures for \((\tau < 1)\) (Meleppat et al. 2019). In our approach, Jerman filtering is used to increase the clarity and contrast of the blood vessels in the retinal image. Table 1 lists all the symbols used in the equations with their meanings.
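The per-pixel computation can be sketched in two parts: the eigenvalues of a 2x2 Hessian and a Jerman-style response. The response formula below follows our reading of Jerman et al. (2016) and should be treated as an assumption, as should the function names and the convention that `lam2` has already been sign-adjusted so that vessels give positive values. `lam2_max` stands for the maximum of `lam2` over the image at the current scale.

```python
import math


def hessian_eigenvalues(hxx, hxy, hyy):
    """Eigenvalues of [[hxx, hxy], [hxy, hyy]], smallest magnitude first."""
    mean = (hxx + hyy) / 2
    root = math.sqrt(((hxx - hyy) / 2) ** 2 + hxy ** 2)
    return tuple(sorted((mean - root, mean + root), key=abs))


def regularized_eigenvalue(lam2, lam2_max, tau):
    """Raise low-magnitude positive eigenvalues to the floor tau * lam2_max."""
    cutoff = tau * lam2_max
    if lam2 > cutoff:
        return lam2
    if lam2 > 0:
        return cutoff
    return 0.0


def jerman_response(lam2, lam_rho):
    """Vesselness in [0, 1]; saturates at 1 once lam2 >= lam_rho / 2."""
    if lam2 <= 0 or lam_rho <= 0:
        return 0.0
    if lam2 >= lam_rho / 2:
        return 1.0
    return lam2 ** 2 * (lam_rho - lam2) * (3 / (lam2 + lam_rho)) ** 3
```

For a low-contrast point with `lam2 = 0.1` and `lam2_max = 1.0`, the response is well below 1 when `tau = 1.0` and saturates at 1 when `tau = 0.1`, which illustrates why lowering the parameter gives a more uniform response across vessels of different contrast.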

3.4 Reconstruction

Morphological reconstruction is useful for building an image from small components or for removing features from an image without changing the shape of its objects. It is also used to segment images by counting the number of objects present and to remove shadows. Morphological reconstruction is usually applied to binary or grayscale images. The process involves two images: the mask (I) and the marker (h). Reconstruction is carried out on the marker (h) image, which is generally created by applying dilation or erosion to the mask image. The marker (h) image can also be taken from existing images, as in our case. To perform this operation, the marker (h) image must have the same dimensions as the mask (I) image (Lei et al. 2018).
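Reconstruction by dilation can be sketched as repeated dilation of the marker, clipped by the mask after every step, until nothing changes. This is a simple illustrative version with a flat square neighborhood (an assumption); practical implementations use faster queue-based algorithms.

```python
def reconstruct(marker, mask, size=3):
    """Grow marker under mask by geodesic dilation until it is stable."""
    h, w, r = len(mask), len(mask[0]), size // 2
    # The marker must never exceed the mask.
    current = [[min(m, k) for m, k in zip(marker_row, mask_row)]
               for marker_row, mask_row in zip(marker, mask)]
    while True:
        grown = [[min(mask[y][x],
                      max(current[j][i]
                          for j in range(max(y - r, 0), min(y + r + 1, h))
                          for i in range(max(x - r, 0), min(x + r + 1, w))))
                  for x in range(w)] for y in range(h)]
        if grown == current:
            return current
        current = grown
```

If the mask contains two separate bright objects and the marker touches only one of them, that object is recovered with its exact shape and the other one is removed.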

3.5 Adaptive thresholding

Thresholding is the process of converting a grayscale image into a binary image. The two main kinds of thresholding techniques are global and local adaptive thresholding. In many cases, an image cannot be segmented with a single global threshold because of variable contrast, imaging artifacts, and illumination changes. Local adaptive thresholding was developed to overcome these limitations by using a different threshold at each pixel location in the image. The local threshold (T) can be based on several statistics, such as the mean, as follows:

where, (T) is the threshold of the local neighborhood of \((W \times W)\) pixels surrounding each pixel. The choice of W is critical. It must be large enough to cover enough background and foreground pixels at each point. However, it should not be so large that it affects the threshold. (C) sets the threshold above the general image noise variance in uniform pixel areas (Solomon et al. 2011).
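A mean-based local threshold can be sketched as follows. The sign convention is an assumption made for this illustration: structures of interest are taken to be brighter than their surroundings, so a pixel is labeled 1 when it exceeds the mean of its \((W \times W)\) neighborhood by more than `c`; for dark structures the comparison would be reversed.

```python
def adaptive_threshold(img, window, c):
    """Label a pixel 1 when it exceeds its local mean by more than c."""
    h, w, r = len(img), len(img[0]), window // 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            block = [img[j][i]
                     for j in range(max(y - r, 0), min(y + r + 1, h))
                     for i in range(max(x - r, 0), min(x + r + 1, w))]
            t = sum(block) / len(block) + c  # local threshold T
            row.append(1 if img[y][x] > t else 0)
        out.append(row)
    return out
```

With an illumination gradient such as the row `[0, 10, 20, 60, 40, 50, 60]`, where the fourth pixel is a bright structure on a background that rises from left to right, no single global threshold separates that pixel from the bright end of the background, whereas the local rule with `window=3` and `c=5` selects only that pixel.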

Several attempts to improve the performance of the traditional thresholding techniques have been proposed in the literature (Chow and Kaneko 1972; Bradley and Roth 2007). In this context, Bardozzo et al. (2021) proposed an interesting local adaptive thresholding method based on the Bradley algorithm. The authors presented an optimization methodology that uses fuzzy integrals (Dimuro et al. 2020), specifically Sugeno integrals. Generally speaking, fuzzy-based adaptive thresholding algorithms can achieve higher accuracy, but they are drastically slower. To remedy this problem, the authors calculated fuzzy integral images with algorithms based on the summed-area table. Several experiments have been conducted to show the efficiency of that method. Its main disadvantage is that it requires two steps to process the image: computing the fuzzy integral image \(F_{A_i}\), followed by an adaptive binarization using \(F_{A_i}\). These two steps are time-consuming compared with the traditional method. In addition, this method has some parameters that must be tuned to optimize the segmentation result.

Furthermore, after several experiments conducted on retinal images, we opted for traditional adaptive thresholding, presented above, due to its simplicity and effectiveness in segmenting thick and thin blood vessels in retinal images, especially after thorough preprocessing.

3.6 Conversion to L*a*b color space

The original image is taken in the RGB color space. Images are frequently converted from one color space to another to facilitate image processing and analysis. In certain color spaces, the properties or characteristics of the objects of interest stand out better from the rest of the image. Therefore, in this paper, we convert the RGB image to the L*a*b color space (Zhang and Wandell 1997) in order to reduce the color dimension, which allows us to achieve better separation between intensity groups and to build a mask for the original image (Demirkaya et al. 2008). The L*a*b color space is derived from the CIE XYZ tristimulus values and is specified by three components: a luminosity channel L* and two chromaticity channels a* and b*. Channel a* represents the green-red axis and channel b* the blue-yellow axis. All color information is included in channels a* and b* (Koschan and Abidi 2008).

3.6.1 Mask generation

In the field of medical image segmentation, and especially in the processing of fundus images, researchers exclude the dark background of the image and confine the segmentation to the structures of interest. The mask image is generally a binary image that contains two values: zero (0) for the background and one (1) for the structures of interest. The image to be segmented is multiplied by the mask image to generate an image without background pixels, which reduces the mathematical operations and increases the segmentation’s effectiveness. The field-of-view (FoV) mask employed in the proposed method is designed by converting the RGB fundus image to the L*a*b color space. Then, the b* chromaticity channel is selected and converted into a binary image. After that, a morphological erosion is applied with a disk-shaped structuring element of size 6. Figure 3 shows an example of the FoV mask created according to the suggested method of mask generation.
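The first part of the mask generation can be sketched as below. Several elements are assumptions made for illustration: the input is taken to be 8-bit sRGB with a D65 white point, the binarization uses a hypothetical fixed cutoff `b_min` (the text does not specify how the b* channel is binarized), and the final erosion with the disk-shaped structuring element is omitted.

```python
def lab_b(r, g, b):
    """b* chromaticity of an 8-bit sRGB pixel (D65 white point assumed)."""
    def linear(c):
        c /= 255
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    rl, gl, bl = linear(r), linear(g), linear(b)
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = (0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl) / 1.08883
    return 200 * (f(y) - f(z))


def fov_mask(rgb_image, b_min=5.0):
    """1 inside the field of view, 0 on the dark background (b_min is hypothetical)."""
    return [[1 if lab_b(*px) > b_min else 0 for px in row] for row in rgb_image]


def apply_mask(gray, mask):
    """Multiply the image by the mask to zero out background pixels."""
    return [[p * m for p, m in zip(gray_row, mask_row)]
            for gray_row, mask_row in zip(gray, mask)]
```

An orange fundus pixel such as `(200, 90, 30)` has a strongly positive b* value and falls inside the mask, while a black background pixel has b* equal to zero and is excluded.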

4 Proposed method

One major challenge in retinal image analysis is low and varying contrast, especially for accurate vessel segmentation. To address this issue, we need to improve the quality of the retinal image to preserve detail, eliminate poor contrast, and denoise. For this, the suggested method contains a succession of many well-known intensity transformations and filters in order to prepare the retinal image for the segmentation step. The main steps of the proposed method are given in Table 2.

In the first step, the FoV mask is created by converting the color image to the L*a*b color space (explained in detail in Section 3.6.1 and shown in Fig. 3). The resulting mask is used in the penultimate stage, before the segmentation and extraction of the vessels. This step aims to reduce the calculation complexity on the one hand and to increase the segmentation accuracy on the other hand. It should be emphasized that, in our experiments, the accuracy is low in the absence of the FoV mask. Then, owing to its higher contrast, the green channel of the RGB retina image is extracted in the second step, and the analysis is performed on this channel, as shown in Fig. 5c. Despite this contrast, our analyses indicate that the green channel alone is insufficient for successful segmentation.

Accordingly, to make the vessels stand out from the background of the image and improve the segmentation performance, some intensity transformations and enhancement filters are applied to the retina image. The intensity transformation applied in the third step is CLAHE (Fig. 5d), which is commonly used as a primary enhancement because it improves image contrast while preserving image components.

In the fourth step, BTH and Jerman filtering are applied. The BTH filter highlights the blood vessels in the retina and makes them more visible, as it contributes to the non-protrusion of the lens of the eye (Fig. 5e). Then, Jerman filtering is used to make thin and large blood vessels stand out and to define edges and curves well (Fig. 5f).

After that, the image is reconstructed in the fifth step (from Fig. 5d and Fig. 5f) to preserve its objects (Fig. 5g). BLH filtering is used as the final tool before the segmentation (Fig. 5h), as this filtering eliminates noise significantly. In the last step, adaptive thresholding is applied for pixel classification and retinal vessel extraction, as illustrated in Fig. 5i.

The flow chart of the proposed method for retinal vessel segmentation is illustrated in Fig. 4, and Table 3 contains the parameter values used in the proposed method. In addition, a visual examination of the processing phases of the proposed method on an image from STARE (Hoover et al. 2000) is depicted in Fig. 5.

5 Experiments

This section is devoted to the experiments carried out to validate the proposed method. It is organized into two main subsections: The first presents the databases used to evaluate the performance of the proposed method. The second subsection presents the adopted validation methodology. The latter compares the images segmented by our method with those segmented manually by different experts, where this comparison is evaluated using specific ratios to measure the performance and accuracy of the segmentation method.

5.1 Database

The availability of publicly accessible image databases is an essential factor underpinning the evolution of feature extraction approaches. This necessity has pushed several research groups to make their retinal image databases publicly available. For instance, STARE (Hoover et al. 2000) and DRIVE (Staal et al. 2004) are open to researchers. These two databases are among the most popular ones used to develop and test retinal segmentation methods, which allows us to perform quantitative comparisons with state-of-the-art methods. In addition, one of the key reasons we chose these databases is that they provide the corresponding ground truth images of the blood vessels. Table 4 displays some of the characteristics of these databases, on which the performance of the proposed method is evaluated.

Note that the images in the STARE and DRIVE databases are independent and identically distributed. In addition, both databases contain a small number of images (20 and 40 images, respectively), which are carefully chosen to avoid biased data.

5.2 Validation

The quality and efficiency of any proposed vascular segmentation methodology depend on its ability to correctly distinguish between vessel and background pixels. Therefore, the performance is assessed by comparing the achieved segmentation results with the manual segmentations, using four parameters:

- TP: Number of pixels in the structures of interest (vessels) detected correctly.
- TN: Number of background pixels detected correctly.
- FP: Number of background pixels detected as pixels of the structures of interest (vessels).
- FN: Number of pixels in the structures of interest (vessels) detected as background pixels.

Fig. 6 A comparison between the ground truth and the image obtained through the proposed method in the STARE and DRIVE databases. a, d ground truth in the STARE and DRIVE databases, respectively; b, e segmentation results of the proposed method; c, f images of congruence and difference between the ground truth and the segmentation results

These parameters are illustrated in Fig. 6 by comparing sample images from the STARE and DRIVE databases. The images in Fig. 6c, f use a color code: white (TP) marks the retinal blood vessels that have been segmented correctly, whereas purple (FP) and green (FN) mark the errors of the proposed method that should be addressed. To determine the performance of the proposed method and compare it with some other current methods, the following ratios are used:

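Sensitivity

\[Sn = \frac{TP}{TP + FN}\]

Specificity

\[Sp = \frac{TN}{TN + FP}\]

Accuracy

\[Acc = \frac{TP + TN}{TP + TN + FP + FN}\]

Matthews correlation coefficient

\[MCC = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}\]

Precision

\[Pr = \frac{TP}{TP + FP}\]

\(F_1\) score

\[F_1 = \frac{2 \times Pr \times Sn}{Pr + Sn}\]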
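These ratios can be computed directly from the four pixel counts. The sketch below uses the standard definitions of the six measures; the function name and the example counts are purely illustrative and are not taken from the experiments.

```python
import math


def segmentation_metrics(tp, tn, fp, fn):
    """Standard pixel-wise metrics from confusion-matrix counts."""
    sn = tp / (tp + fn)                    # sensitivity
    sp = tn / (tn + fp)                    # specificity
    acc = (tp + tn) / (tp + tn + fp + fn)  # accuracy
    pr = tp / (tp + fp)                    # precision
    f1 = 2 * pr * sn / (pr + sn)           # F1 score
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {"Sn": sn, "Sp": sp, "Acc": acc, "Pr": pr, "F1": f1, "MCC": mcc}


# Illustrative counts only: 1000 pixels, 90 of them vessel pixels.
metrics = segmentation_metrics(tp=70, tn=900, fp=10, fn=20)
```

Because background pixels greatly outnumber vessel pixels in fundus images, accuracy and specificity can be high even when sensitivity is moderate, which is why the MCC and the \(F_1\) score are reported alongside them.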

6 Results and discussion

This section presents the results obtained from the experimental evaluations. Besides, an in-depth analysis of the results achieved is also carried out. Noting that the experiments have been conducted using MATLAB.

In order to further illustrate the effectiveness of the suggested method of retinal vascular segmentation, experimentation has been carried out on two well-known open-access databases, STARE and DRIVE. Based on these databases, the results achieved are compared with those obtained by some of the unsupervised methods from the literature. The vascular segmentation is assessed using sensitivity (Sn), specificity (Sp), accuracy (Acc). Indeed, the higher values of these coefficients can be interpreted as better performance. The Table 5 compares the performance of different methods for retinal vessel segmentation on the STARE database. Sixteen works retained in this study (Fraz et al. 2012; Fathi and Naghsh-Nilchi 2013; Azzopardi et al. 2015; Zhao et al. 2015; Oliveira et al. 2016; Zhang et al. 2016; Neto et al. 2017; Zhao et al. 2017; Khomri et al. 2018; Soomro et al. 2018; Khawaja et al. 2019; Wang et al. 2019; Shah et al. 2019; Roy et al. 2019; Da Rocha et al. 2020; Mahtab and Hossein 2022). It can be observed that the specificity and the accuracy indicators are better, reaching values of \((Sp = 0.9810)\) and \((Acc = 0.9618)\), respectively. It can also be noticed that (Shah et al. 2019) obtained such a high sensitivity \((Sn= 0.8949)\) at the same time the cost of the accuracy reaching the value of \((Acc =0.9354)\). Therefore, it is pertinent to note that the sensitivity index achieved by the our method \((Sn =0.7253)\) is much closer to the literature values.

The same remarks can be made for the DRIVE database. Table 6 compares the performance of the proposed method with state-of-the-art methods. The specificity \((Sp = 0.9874)\) and accuracy \((Acc = 0.9586)\) are the best among the works retained. In contrast, the sensitivity achieved by our method is \((Sn = 0.6595)\).

To better assess the effectiveness of the proposed method, we extended the evaluation with the MCC, Pr, and \(F_1\) score metrics, as reported in Table 7. For the MCC, our method reaches \((MCC = 0.7211)\) on STARE and \((MCC = 0.7193)\) on DRIVE, which is satisfactory given that the MCC ranges between \(-1\) and 1. The precision \((Pr = 0.7625\) on STARE, \(Pr = 0.8381\) on DRIVE\()\) and the \(F_1\) score \((F_1 = 0.7389\) on STARE, \(F_1 = 0.7315\) on DRIVE\()\) are within the usual range for medical image segmentation.
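As a quick consistency check on the figures quoted in the text, the \(F_1\) score can be recomputed as the harmonic mean of the reported mean precision and mean sensitivity. This gives about 0.743 on STARE and 0.738 on DRIVE, slightly above the reported 0.7389 and 0.7315. A plausible explanation, stated here as an assumption and not confirmed by the source, is that the metrics are averaged per image: since the harmonic mean is concave, the mean of per-image \(F_1\) scores cannot exceed the \(F_1\) of the mean precision and sensitivity. The per-image values in the sketch below are hypothetical and serve only to illustrate this effect.

```python
def f1_from(pr, sn):
    """Harmonic mean of precision and sensitivity."""
    return 2.0 * pr * sn / (pr + sn)


# Mean values quoted in the text for each database.
reported = {
    "STARE": {"Pr": 0.7625, "Sn": 0.7253, "F1": 0.7389},
    "DRIVE": {"Pr": 0.8381, "Sn": 0.6595, "F1": 0.7315},
}

for name, r in reported.items():
    recomputed = f1_from(r["Pr"], r["Sn"])
    print(f"{name}: F1 from mean Pr and Sn = {recomputed:.4f}, "
          f"reported F1 = {r['F1']:.4f}")

# Hypothetical per-image (Pr, Sn) pairs, NOT taken from the paper.
per_image = [(0.90, 0.50), (0.60, 0.90)]
mean_of_f1 = sum(f1_from(p, s) for p, s in per_image) / len(per_image)
mean_pr = sum(p for p, _ in per_image) / len(per_image)
mean_sn = sum(s for _, s in per_image) / len(per_image)
f1_of_means = f1_from(mean_pr, mean_sn)
print(f"mean of per-image F1 = {mean_of_f1:.4f}, "
      f"F1 of mean Pr and Sn = {f1_of_means:.4f}")
```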

Overall, the proposed method achieves good accuracy and specificity on both databases, which supports its reliability.

7 Conclusion

In this paper, we proposed a new method in which hybrid filters precede the vessel segmentation step, in order to enhance low and varying contrast on the one hand, and to reduce acquisition noise on the other. To this end, CLAHE and morphological filters were used for low-frequency noise reduction and vessel enhancement. In addition, Jerman filtering was used to highlight both wide and thin vessels. Furthermore, the generated mask was employed to reduce the number of operations and increase the effectiveness of the segmentation. Finally, adaptive thresholding was used to classify image pixels as vessel or non-vessel. Together, these steps considerably improve vessel detection. The proposed method was tested on the established STARE and DRIVE databases, where it outperforms the recent methods retained for comparison, particularly in terms of accuracy.
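To make the final classification step more concrete, the following Python sketch shows one common form of adaptive thresholding, a local-mean rule computed with an integral image in the spirit of Bradley and Roth (2007). It is not necessarily the rule used in this paper: the comparison direction (bright vessels on a darker enhanced background), the function names, and the parameters `window` and `sensitivity` are assumptions made for illustration.

```python
def integral_image(img):
    """Summed-area table with one extra zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def adaptive_threshold(img, window=15, sensitivity=0.1, fov=None):
    """Label a pixel as vessel (1) when it exceeds its local mean.

    img         : enhanced grayscale image (list of rows), vessels bright
    window      : side of the square neighbourhood (assumed value)
    sensitivity : required relative margin above the local mean (assumed)
    fov         : optional field-of-view mask; pixels outside stay 0
    """
    h, w = len(img), len(img[0])
    ii = integral_image(img)
    r = window // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        y0, y1 = max(0, y - r), min(h, y + r + 1)
        for x in range(w):
            if fov is not None and not fov[y][x]:
                continue
            x0, x1 = max(0, x - r), min(w, x + r + 1)
            area = (y1 - y0) * (x1 - x0)
            # Sum over the window in constant time from the integral image.
            local_sum = ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]
            if img[y][x] * area > local_sum * (1.0 + sensitivity):
                out[y][x] = 1
    return out


# Toy example: a bright vertical line on a flat background.
toy = [[100 if x == 3 else 10 for x in range(7)] for _ in range(7)]
toy_mask = adaptive_threshold(toy, window=5, sensitivity=0.1)
```

Because the threshold follows the local mean, such a rule tolerates the non-uniform background illumination typical of fundus images better than a single global threshold.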

As future work, it would be interesting to extend the results of this paper to the prediction of retinal diseases such as glaucoma, diabetic retinopathy, and age-related macular degeneration. For this purpose, a promising avenue of research is to record the data in context histories, so that the segmentation of retinal blood vessels can be used for disease prediction (Bala and Chana 2015; Rosa et al. 2015; da Rosa et al. 2016; Dupont et al. 2020; Filippetto et al. 2021).

References

Abdulsahib AA, Mahmoud MA, Mohammed MA, Rasheed HH, Mostafa SA, Maashi MS (2021) Comprehensive review of retinal blood vessel segmentation and classification techniques: intelligent solutions for green computing in medical images, current challenges, open issues, and knowledge gaps in fundus medical images. Netw Model Anal Health Inform Bioinform 10(1):1–32

Azzopardi G, Strisciuglio N, Vento M, Petkov N (2015) Trainable COSFIRE filters for vessel delineation with application to retinal images. Med Image Anal 19(1):46–57

Bala A, Chana I (2015) Intelligent failure prediction models for scientific workflows. Expert Syst Appl 42(3):980–989

Balasubramanian K, Ananthamoorthy N (2021) Robust retinal blood vessel segmentation using convolutional neural network and support vector machine. J Ambient Intell Humaniz Comput 12(3):3559–3569

Bardozzo F, De La Osa B, Horanská L, Fumanal-Idocin J, delli Priscoli M, Troiano L, Tagliaferri R, Fernandez J, Bustince H (2021) Sugeno integral generalization applied to improve adaptive image binarization. Inf Fusion 68:37–45

Bradley D, Roth G (2007) Adaptive thresholding using the integral image. J Graph Tools 12(2):13–21

Chow C, Kaneko T (1972) Automatic boundary detection of the left ventricle from cineangiograms. Comput Biomed Res 5(4):388–410

Da Rocha DA, Barbosa ABL, Guimarães DS, Gregório LM, Gomes LHN, da Silva Amorim L, Peixoto ZMA (2020) An unsupervised approach to improve contrast and segmentation of blood vessels in retinal images using CLAHE, 2D Gabor wavelet, and morphological operations. Res Biomed Eng 36(1):67–75

da Rosa JH, Barbosa JL, Ribeiro GD (2016) Oracon: an adaptive model for context prediction. Expert Syst Appl 45:56–70

Deledalle CA, Denis L, Tupin F (2009) Iterative weighted maximum likelihood denoising with probabilistic patch-based weights. IEEE Trans Image Process 18(12):2661–2672

Demirkaya O, Asyali MH, Sahoo PK (2008) Image processing with MATLAB: applications in medicine and biology. CRC Press, Boca Raton

Dimuro GP, Fernández J, Bedregal B, Mesiar R, Sanz JA, Lucca G, Bustince H (2020) The state-of-art of the generalizations of the Choquet integral: from aggregation and pre-aggregation to ordered directionally monotone functions. Inf Fusion 57:27–43

Dupont D, Barbosa JLV, Alves BM (2020) Chspam: a multi-domain model for sequential pattern discovery and monitoring in contexts histories. Pattern Anal Appl 23(2):725–734

Erdt M, Raspe M, Suehling M (2008) Automatic hepatic vessel segmentation using graphics hardware. In: International workshop on medical imaging and virtual reality. Springer, pp 403–412

Fathi A, Naghsh-Nilchi AR (2013) Automatic wavelet-based retinal blood vessels segmentation and vessel diameter estimation. Biomed Signal Process Control 8(1):71–80

Filippetto AS, Lima R, Barbosa JLV (2021) A risk prediction model for software project management based on similarity analysis of context histories. Inf Softw Technol 131:106497

Frangi AF, Niessen WJ, Vincken KL, Viergever MA (1998) Multiscale vessel enhancement filtering. In: International conference on medical image computing and computer-assisted intervention. Springer, pp 130–137

Fraz MM, Barman SA, Remagnino P, Hoppe A, Basit A, Uyyanonvara B, Rudnicka AR, Owen CG (2012) An approach to localize the retinal blood vessels using bit planes and centerline detection. Comput Methods Programs Biomed 108(2):600–616

Hoover AD, Kouznetsova V, Goldbaum M (2000) Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans Med Imaging 19(3):203–210

Jerman T, Pernuš F, Likar B, Špiclin Ž (2016) Enhancement of vascular structures in 3D and 2D angiographic images. IEEE Trans Med Imaging 35(9):2107–2118

Khawaja A, Khan TM, Naveed K, Naqvi SS, Rehman NU, Nawaz SJ (2019) An improved retinal vessel segmentation framework using Frangi filter coupled with the probabilistic patch based denoiser. IEEE Access 7:164344–164361

Khomri B, Christodoulidis A, Djerou L, Babahenini MC, Cheriet F (2018) Retinal blood vessel segmentation using the elite-guided multi-objective artificial bee colony algorithm. IET Image Process 12(12):2163–2171

Koschan A, Abidi MA (2008) Digital color image processing. Wiley-Interscience, New York

Lázár I, Hajdu A (2015) Segmentation of retinal vessels by means of directional response vector similarity and region growing. Comput Biol Med 66:209–221

Lei T, Jia X, Zhang Y, He L, Meng H, Nandi AK (2018) Significantly fast and robust fuzzy c-means clustering algorithm based on morphological reconstruction and membership filtering. IEEE Trans Fuzzy Syst 26(5):3027–3041

Li Q, Sone S, Doi K (2003) Selective enhancement filters for nodules, vessels, and airway walls in two-and three-dimensional CT scans. Med Phys 30(8):2040–2051

Mahtab S, Hossein P (2022) An active contour model using matched filter and hessian matrix for retinal vessels segmentation. Turk J Electr Eng Comput Sci 30(1):295–311

Meleppat RK, Miller EB, Manna SK, Zhang P, Pugh EN Jr, Zawadzki RJ (2019) Multiscale Hessian filtering for enhancement of OCT angiography images. In: Ophthalmic technologies XXIX, vol 10858. International Society for Optics and Photonics, p 108581K

Mookiah MRK, Hogg S, MacGillivray TJ, Prathiba V, Pradeepa R, Mohan V, Anjana RM, Doney AS, Palmer CN, Trucco E (2021) A review of machine learning methods for retinal blood vessel segmentation and artery/vein classification. Med Image Anal 68:101905

Neto LC, Ramalho GL, Neto JFR, Veras RM, Medeiros FN (2017) An unsupervised coarse-to-fine algorithm for blood vessel segmentation in fundus images. Expert Syst Appl 78:182–192

Oliveira WS, Teixeira JV, Ren TI, Cavalcanti GD, Sijbers J (2016) Unsupervised retinal vessel segmentation using combined filters. PLoS One 11(2):e0149943

Pizer SM, Amburn EP, Austin JD, Cromartie R, Geselowitz A, Greer T, ter Haar Romeny B, Zimmerman JB, Zuiderveld K (1987) Adaptive histogram equalization and its variations. Comput Vis Graph Image Process 39(3):355–368

Qureshi I, Ma J, Abbas Q (2019) Recent development on detection methods for the diagnosis of diabetic retinopathy. Symmetry 11(6):749

Rosa JH, Barbosa JL, Kich M, Brito L (2015) A multi-temporal context-aware system for competences management. Int J Artif Intell Educ 25(4):455–492

Roy S, Mitra A, Roy S, Setua SK (2019) Blood vessel segmentation of retinal image using Clifford matched filter and Clifford convolution. Multimed Tools Appl 78(24):34839–34865

Saravanan V, Samuel R, Krishnamoorthy S, Manickam A (2022) Deep learning assisted convolutional auto-encoders framework for glaucoma detection and anterior visual pathway recognition from retinal fundus images. J Ambient Intell Humanized Comput 1868–5145:1–11

Sato Y, Westin CF, Bhalerao A, Nakajima S, Shiraga N, Tamura S, Kikinis R (2000) Tissue classification based on 3D local intensity structures for volume rendering. IEEE Trans Vis Comput Graph 6(2):160–180

Sazak Ç, Nelson CJ, Obara B (2019) The multiscale bowler-hat transform for blood vessel enhancement in retinal images. Pattern Recogn 88:739–750

Shah SAA, Shahzad A, Khan MA, Lu CK, Tang TB (2019) Unsupervised method for retinal vessel segmentation based on Gabor wavelet and multiscale line detector. IEEE Access 7:167221–167228

Solomon C, Breckon T (2011) Fundamentals of digital image processing: a practical approach with examples in Matlab. Wiley, New York

Soomro TA, Gao J, Lihong Z, Afifi AJ, Soomro S, Paul M (2018) Retinal blood vessels extraction of challenging images. In: Australasian conference on data mining. Springer, pp 347–359

Staal J, Abràmoff MD, Niemeijer M, Viergever MA, Van Ginneken B (2004) Ridge-based vessel segmentation in color images of the retina. IEEE Trans Med Imaging 23(4):501–509

Voorn M, Exner U, Rath A (2013) Multiscale hessian fracture filtering for the enhancement and segmentation of narrow fractures in 3D image data. Comput Geosci 57:44–53

Wang W, Wang W, Hu Z (2019) Retinal vessel segmentation approach based on corrected morphological transformation and fractal dimension. IET Image Process 13(13):2538–2547

Wang J, Li YJ, Yang KF (2021) Retinal fundus image enhancement with image decomposition and visual adaptation. Comput Biol Med 128:104116

Zhang X, Wandell BA (1997) A spatial extension of CIELAB for digital color-image reproduction. J Soc Inform Display 5(1):61–63

Zhang J, Dashtbozorg B, Bekkers E, Pluim JP, Duits R, ter Haar Romeny BM (2016) Robust retinal vessel segmentation via locally adaptive derivative frames in orientation scores. IEEE Trans Med Imaging 35(12):2631–2644

Zhao Y, Rada L, Chen K, Harding SP, Zheng Y (2015) Automated vessel segmentation using infinite perimeter active contour model with hybrid region information with application to retinal images. IEEE Trans Med Imaging 34(9):1797–1807

Zhao Y, Zhao J, Yang J, Liu Y, Zhao Y, Zheng Y, Xia L, Wang Y (2017) Saliency driven vasculature segmentation with infinite perimeter active contour model. Neurocomputing 259:201–209 (multimodal media data understanding and analytics)

Zhou C, Chan HP, Sahiner B, Hadjiiski LM, Chughtai A, Patel S, Wei J, Ge J, Cascade PN, Kazerooni EA (2007) Automatic multiscale enhancement and segmentation of pulmonary vessels in CT pulmonary angiography images for CAD applications. Med Phys 34(12):4567–4577

Zuiderveld K (1994) Contrast limited adaptive histogram equalization. Academic Press Professional Inc, New York, pp 474–485

Funding

There is no funding for this study

Author information

Contributions

All the authors have participated in writing the manuscript and have revised the final version. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors

Informed consent

Informed consent was obtained from all individual participants included in the original study.

Cite this article

Mehidi, I., Belkhiat, D.E.C. & Jabri, D. A high accuracy segmentation method for retinal blood vessel detection based on hybrid filters and an adaptive thresholding. J Ambient Intell Human Comput 15, 323–335 (2024). https://doi.org/10.1007/s12652-022-03893-y
