Abstract

Accidental falls are among the most prevalent causes of loss of autonomy, death, and injury among elderly people. With the advancement of technology, fall detection and rescue systems help reduce the loss of lives and injuries, as well as healthcare costs, by providing immediate emergency services to the victims of accidental falls. The aim of this paper is to perform a systematic review of existing sensor-based fall detection and rescue systems and to facilitate further research in this field. The systems are reviewed based on their architecture, the sensors used, performance metrics, limitations, etc. This review also provides a taxonomy for classifying fall detection systems. The systems are divided into two main categories: single sensor-based fall detection systems and multiple sensor-based fall detection systems. Although single sensor-based systems are very accurate in detecting falls, multiple sensor-based systems are more efficient. The low power consumption of most single sensor-based systems, especially accelerometer-based ones, makes them well suited to wearable solutions, while most multiple sensor-based systems are better suited to indoor monitoring.

1 Introduction

Accidental falls are one of the predominant causes of injury and death in the general population, particularly among the elderly. Elderly people make up a significant part of the world population. In 2017, 13% of the world population (962 million people) were aged 60 or above, according to a United Nations projection (Sugawara and Nikaido 2014). This number is expected to roughly double to 2.1 billion by 2050 and roughly triple to 3.1 billion by 2100 (Sugawara and Nikaido 2014). According to the World Health Organization (WHO) (Verma et al. 2016), accidental falls are the second leading cause of premature death from injury. Every year, an estimated 646,000 falls are fatal worldwide and 37.3 million falls require immediate medical attention (Verma et al. 2016). Adults over 60 years of age have the highest fall-related death rates, and adults over 65 years of age suffer the highest number of fatal falls (Verma et al. 2016). These fall events result in a loss of 17 million disability-adjusted life years, which denote the potential years of “healthy” life lost due to premature death or disability caused by unfortunate events. Falls also cause significant monetary losses for both the individual and the state. For people over 65 years of age, the average health system cost per fall injury is US$ 1049 in Australia and US$ 3611 in the Republic of Finland (Verma et al. 2016).

In general, most falls occur at home because of an abundance of potential fall hazards (Hamm et al. 2016). Common hazards include, but are not limited to, slippery floors, obstructed walkways, clutter, pets, unstable furniture, and poor lighting (Lord et al. 2006). The general elderly population is less prone to falls than older people with severe neurological diseases, e.g., dementia and epilepsy (Homann et al. 2013), (Wang et al. 2016), (Rawashdeh et al. 2012), (Rahaman et al. 2019). Solitary living arrangements also increase the risk of falls (Bergen et al. 2016), (O’Loughlin et al. 1993). In most cases, however, falls do not result in loss of life. Life-threatening complications arise when the affected person does not receive the necessary treatment in time and remains on the floor for a prolonged period without anyone noticing (Vallabh and Malekian 2018), (Sterling et al. 2001), (Islam et al. 2020), (Parkkari et al. 1999), (Jager et al. 2000), (Florence et al. 2018).

Making the entire home environment fall-proof is not feasible (Pynoos et al. 2010), (Pynoos et al. 2012). However, advances in fall detection technology enable automated systems to detect falls in an environment and to minimize both the damage and the response time by notifying emergency services and caregivers of the fall event (Doulamis 2010), (Mukhopadhyay 2015), (Delahoz and Labrador 2014), (Chaudhuri et al. 2014), (Noury et al. 2007), (Igual et al. 2013), (Mubashir et al. 2013).

Modern fall detection systems involve the following stages: a data collection stage, a feature extraction stage, and a detection or learning stage (Noury et al. 2007), (Igual et al. 2013), (Mubashir et al. 2013), (Nooruddin et al. 2020). In the data collection stage, relevant motion data of users, covering both falls and Activities of Daily Living (ADL), are collected via sensors; many types of sensors can be used for this purpose. In the feature extraction stage, meaningful features are extracted from the raw sensor data. Systems that use machine learning algorithms to classify the motion data use the extracted features to train a model, which is then deployed to classify future motion data. Threshold-based systems instead compare the extracted features with predetermined values to classify the motion data (Rahman et al. 2020), (Islam et al. 2019), (Buke et al. 2015), (Bagalà et al. 2012).
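The three stages above can be illustrated with a minimal Python sketch of a threshold-based detector. It assumes tri-axial accelerometer samples in units of g; the window length, step, features, and both thresholds (`peak_threshold`, `trough_threshold`) are hypothetical values chosen for illustration, not parameters of any cited system.

```python
import math

def collect_window(samples, start, size):
    """Data collection stage: one fixed-length window of (ax, ay, az) samples in g."""
    return samples[start:start + size]

def extract_features(window):
    """Feature extraction stage: peak and minimum acceleration magnitude in the window."""
    magnitudes = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in window]
    return {"peak": max(magnitudes), "trough": min(magnitudes)}

def classify(features, peak_threshold=2.5, trough_threshold=0.6):
    """Detection stage (threshold-based): a free-fall dip and an impact peak in one window."""
    return features["trough"] < trough_threshold and features["peak"] > peak_threshold

def detect_falls(samples, window_size=50, step=25):
    """Slide a window over the stream and return the start index of every flagged window."""
    flagged = []
    for start in range(0, len(samples) - window_size + 1, step):
        if classify(extract_features(collect_window(samples, start, window_size))):
            flagged.append(start)
    return flagged

# Synthetic stream: standing (1 g), ~0.2 g free fall, a 3 g impact, then lying still.
stream = ([(0.0, 0.0, 1.0)] * 100 + [(0.0, 0.0, 0.2)] * 10
          + [(0.0, 0.0, 3.0)] + [(1.0, 0.0, 0.0)] * 89)
print(detect_falls(stream))  # [75, 100]
```

A machine learning-based system would keep `collect_window` and `extract_features` but replace `classify` with a model trained on labeled fall and ADL windows.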

Many monitoring and fall detection systems have been reviewed in (Mukhopadhyay 2015), (Delahoz and Labrador 2014), (Chaudhuri et al. 2014), (Noury et al. 2007), (Igual et al. 2013), (Mubashir et al. 2013), (Bet et al. 2019). Mubashir et al. (2013) classified fall detection systems into three types: wearable, vision-based, and ambient/fusion. Typically, the sensors used to develop wearable fall detection systems comprise accelerometers, gyroscopes, depth sensors, infrared sensors, acoustic sensors, and vibration sensors. Video surveillance and Doppler radars are used in real-time monitoring-based fall detection systems (Mubashir et al. 2013), (Islam et al. 2019). Ambient/fusion-based systems use a combination of monitoring systems and wearable sensors.

Mukhopadhyay (2015) reviewed the wearable solutions used in fall detection and rescue systems, covering the types of sensors, the wireless protocols used in the applications, the monitored activities, the design challenges of the solutions, the energy consumption of the sensors, and the current market situation and future trends. Delahoz and Labrador (2014) reviewed machine learning-based fall detection and fall prevention systems. They presented the general structure of fall detection systems as a collection of three modules: a data collection module, a feature extraction module, and a learning module. Design issues such as occlusion, multiple people, obtrusiveness, privacy, aging, computational cost, energy consumption, noise, and the difficulty of choosing thresholds were considered. The fall detection systems were reviewed based on the position of the sensors, which were divided into external sensors and wearable sensors; the external sensors were further divided into camera-based sensors and ambient sensors. The authors also discussed the various environmental, psychological, and physical factors of falls. Chaudhuri et al. (2014) reviewed fall detection systems systematically and provided quality scores based on a condensed version of the Statement on Reporting of Evaluation Studies in Health Informatics (STARE-HI). The reviewed systems were divided into two main categories, wearable and non-wearable, based on the position of the detecting sensor: if the sensors were worn by the monitored person, the system was categorized as wearable; if they were mounted on a stationary platform, it was categorized as non-wearable. The sensors used were grouped into general types such as motion sensors, floor sensors, and cameras. Systems that combined multiple systems for more accurate fall detection were also reviewed.

Noury et al. (2007) reviewed fall detection systems and grouped them into two main categories: analytical methods and machine learning methods. Analytical methods mainly detect the lying position using various sensors, such as horizontal inclination sensors and floor sensors, whereas machine learning methods use large datasets and machine learning classifiers to detect falls from sensor data. Igual et al. (2013) categorized the reviewed fall detection systems into two main types: context-aware systems and wearable devices. In context-aware systems, the sensors are deployed in the environment; they are stationary and overlook a fixed area. In wearable systems, on the other hand, the sensors are placed at different positions on the monitored person, e.g., the chest, waist, or wrist. The methods of context-aware systems can be broadly divided into three main stages: data collection and processing, feature extraction, and inference. Smartphone-based fall detection systems were also reviewed, and the current and future trends of computer vision-based detectors and machine learning-based approaches were discussed, along with design challenges such as performance, usability, acceptance, and the social stigma associated with such devices. Issues related to fall detection technology, such as smartphone limitations, privacy concerns, the availability of public datasets, and real-life falls, were also considered. Bet et al. (2019) reviewed wearable sensor-based fall detection systems and explored the commonly used sensor types, their sampling rates, the signal acquisition and data processing methods, the functional tests performed on the systems, and the types of application. Four main types of sensors, namely accelerometers, gyroscopes, magnetometers, and barometers, were mostly used in the reviewed systems. The reviews in (Safi et al. 2015), (Hu et al. 2018), and (Buke et al. 2015) also covered inertial wearable sensors. Some computer vision-based fall detection systems were reviewed in (Zhang et al. 2015) and (Erden et al. 2016). Various public fall detection datasets and the performance of various systems on them were discussed in (Khan and Hoey 2017), (Igual et al. 2015), and (Casilari et al. 2017).

Existing reviews show that the current literature divides fall detection systems into two broad categories, wearable and non-wearable systems, from a “wearability” perspective. The division into context-aware and wearable systems follows a similar perspective. The division into analytical methods and machine learning methods follows a “classification methodology” perspective, while the division into wearable, vision-based, and ambient/fusion systems follows a “used sensor type” perspective, in which the non-wearable systems are further divided into vision-based and ambient-based categories. Many other reviews focus on a specific sub-category of fall detection systems, such as inertial sensor-based wearable systems or computer vision-based systems. Most reviews to date have categorized fall detection systems by whether or not they are wearable. However, the number and type of sensors used are major specifications of any fall detection system. While some systems employ only a single sensor for data collection, others use multiple sensors, and both kinds of systems have achieved state-of-the-art results.

The purpose of this review is the systematic assessment of recent fall detection systems. We provide a taxonomy that categorizes the developed fall detection systems by the number of sensors used. We reviewed the systems considering the following issues: system type (single/multiple sensors), system technologies, working principles, and the merits and demerits of each system. The search was limited to peer-reviewed articles written in English and published between 2014 and early 2019 in scientific journals or magazines, or presented at conferences. It was restricted to the following sources: Google Scholar, PubMed, EMBASE, CINAHL, and NCBI. Additionally, to acquire fall statistics, a manual search was carried out on books and publications by organizations that focus on statistics of accidental falls and their consequences, such as the World Health Organization (WHO). The keywords used for searching the databases and for manual web searches were: “fall detection”, “fall detection and rescue systems”, “fall statistics”, “fall prevention”, “sensor-based fall detection”, and “fall monitoring”. The titles and abstracts of the search results were analyzed to eliminate duplicates and publications beyond the scope of this review. After thoroughly reading and evaluating the remaining publications, specific topics of interest in the reviewed articles were identified and quantified. Our main focus was on fall detection systems that employ sensors for data acquisition and detection.

The remainder of the paper is organized as follows: Sect. 2 describes fall detection systems in two categories, single sensor-based and multiple sensor-based systems. The results and a detailed discussion of the review are presented in Sect. 3. Section 4 concludes the review.

2 Literature review on fall detection systems

Many organizations have long been working to build cost-effective and well-designed fall detection systems for the elderly. The work in this field is reviewed below.

All the systems reviewed in this paper are categorized into two groups: single sensor-based systems and multiple sensor-based systems. The categorization is based on the number of sensors a system uses to capture the real-world scenario. Single sensor-based systems use data from only one sensor for feature extraction and classification, whereas multiple sensor-based systems use data from multiple sensors. Modules that serve only communication purposes, such as Wi-Fi or Bluetooth modules, and are not used for feature extraction or classification are not counted. The taxonomy of the reviewed fall detection systems is depicted in Fig. 1.

2.1 Single sensor-based fall detection systems

Single sensor-based systems rely on a single sensor or module, e.g., an accelerometer, a gyroscope, or a depth camera, for data collection. The collected data are then processed and passed to a detection technique, e.g., a threshold-based algorithm (Chen et al. 2019), (Mehmood et al. 2019) or a machine learning or statistical model (Sanchez and Muñoz 2019), (Yhdego et al. 2019), (Yacchirema et al. 2019). Systems that employ a threshold-based algorithm compare the collected data against preset threshold values. Systems that employ machine learning or statistical models pass the collected data to a model pre-trained on a similar dataset. Various open-access datasets are available for single sensor-based ADL and fall detection (Igual et al. 2015), (Casilari et al. 2017), (Khan and Hoey 2017).

2.1.1 Fall detection using accelerometer

An accelerometer is a device that measures proper acceleration, i.e., the rate of change of velocity of a body in its own instantaneous rest frame. Single-axis and multi-axis accelerometers are available to detect the magnitude and direction of the proper acceleration as a vector quantity. Popular applications of accelerometers include sensing orientation, vibration, shock, and falling in a resistive medium (Rand et al. 2009), (Ward et al. 2005). Almost all modern portable devices contain microelectromechanical systems (MEMS) accelerometers, which are used for detecting screen orientation, position, etc. They are, however, also capable of detecting fall events (Lee and Tseng 2019), (Santos et al. 2019), (Thanh et al. 2019), (Ranakoti et al. 2019). Data from accelerometers can be used in machine learning or statistical models (Santos et al. 2019) or in threshold-based algorithms (Lee and Tseng 2019) for fall detection.

Chen et al. (2019) proposed a wrist-worn accelerometer-based fall detection system that combines ensemble stacked auto-encoders (ESAEs) with one-class classification based on the convex hull (OCCCH). The ESAEs performed unsupervised feature extraction and were used to overcome the disadvantages of conventional ANNs, while the OCCCH performed the pattern recognition task. Strategies such as majority voting and adaptive weight adjustment were used to improve the overall performance of the system. Two experiments were performed to validate the system. Experiment I involved fall and ADL data from 6 volunteers, and Experiment II involved fall and ADL data from 11 volunteers from a different group. All fall data were recorded while falling on a yoga mat, whereas all ADL data were collected in real-world conditions. In Experiment I, the system achieved an accuracy, sensitivity, and specificity of 97.45%, 96.09%, and 98.92%, respectively; in Experiment II, it achieved 97.82%, 99.30%, and 96.36%, respectively. Mehmood et al. (2019) proposed a tri-axial accelerometer-based fall detection system that uses the Mahalanobis distance to detect falls in real-time data. The Mahalanobis distance measures how far a point lies from a distribution, scaling each direction by the distribution's covariance so that correlated and differently scaled variables are taken into account. ADLs including walking, standing, getting up, and sitting on a chair were recorded, and four volunteers recorded fall motions in a laboratory environment. The calculated Mahalanobis distance was compared against a threshold value to determine whether a fall had occurred. However, the prototype used Bluetooth to communicate with the main computer that processes the data, which constrains the versatility of the device. The system achieved 96% accuracy in the experiment.
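The following Python sketch illustrates Mahalanobis distance-based detection in the spirit of Mehmood et al. (2019): a reference mean and covariance are estimated from ADL feature vectors, and a new vector whose distance exceeds a threshold is flagged as a fall. The two-dimensional features, the sample values, and the threshold of 3.0 are hypothetical; the cited work's actual features and threshold are not reproduced here.

```python
import math

def mean_vector(rows):
    """Component-wise mean of a list of equal-length feature vectors."""
    n = len(rows)
    return [sum(r[j] for r in rows) / n for j in range(len(rows[0]))]

def covariance(rows, mu):
    """Sample covariance matrix (n - 1 denominator)."""
    n, d = len(rows), len(mu)
    return [[sum((r[i] - mu[i]) * (r[j] - mu[j]) for r in rows) / (n - 1)
             for j in range(d)] for i in range(d)]

def invert(m):
    """Invert a square matrix with Gauss-Jordan elimination and partial pivoting."""
    d = len(m)
    a = [list(row) + [1.0 if i == j else 0.0 for j in range(d)] for i, row in enumerate(m)]
    for col in range(d):
        pivot = max(range(col, d), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [v / p for v in a[col]]
        for r in range(d):
            if r != col:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [row[d:] for row in a]

def mahalanobis(x, mu, cov_inv):
    """sqrt((x - mu)^T S^-1 (x - mu)) for a precomputed inverse covariance S^-1."""
    diff = [xi - mi for xi, mi in zip(x, mu)]
    d = len(diff)
    return math.sqrt(sum(diff[i] * cov_inv[i][j] * diff[j] for i in range(d) for j in range(d)))

def is_fall(x, mu, cov_inv, threshold=3.0):
    """Flag a feature vector that lies far from the ADL distribution (hypothetical threshold)."""
    return mahalanobis(x, mu, cov_inv) > threshold

# Hypothetical ADL feature vectors: (peak magnitude in g, magnitude variance).
adl = [(1.0, 0.10), (1.2, 0.20), (0.9, 0.15), (1.1, 0.05), (1.05, 0.12)]
mu = mean_vector(adl)
cov_inv = invert(covariance(adl, mu))
print(is_fall((1.05, 0.13), mu, cov_inv))  # False: close to the ADL cluster
print(is_fall((3.0, 1.50), mu, cov_inv))   # True: far from it
```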

Yhdego et al. (2019) developed an accelerometer-based fall detection system that used a continuous wavelet transform and transfer learning with AlexNet. The public URFD dataset was the main data source. In the data processing stage, a continuous wavelet transform was applied to the data, and AlexNet, pre-trained with ImageNet weights, was then trained on the result via transfer learning. The deep convolutional neural network-based system achieved 96.43% accuracy, 95.83% sensitivity, and 96.875% specificity. Yacchirema et al. (2019) proposed a waist-mounted tri-axial accelerometer-based wearable fall detection system that uses a random forest algorithm to distinguish between ADL and fall events. The system also has a rescue component for monitoring users. The random forest algorithm outperformed logistic regression and convolutional neural network-based models, achieving 98.72% accuracy, 94.60% specificity, and 96.22% sensitivity.
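A continuous wavelet transform turns a one-dimensional acceleration signal into a two-dimensional time-scale map (a scalogram) that an image CNN such as AlexNet can take as input. The pure-Python sketch below assumes a Ricker (Mexican hat) mother wavelet, a hypothetical choice since the wavelet used by Yhdego et al. (2019) is not stated here, and only produces the grey-level image; resizing it to the network's input size and the transfer learning step are omitted.

```python
import math

def ricker(t, a):
    """Ricker (Mexican hat) wavelet shape at offset t for scale a (unnormalized)."""
    x = t / a
    return (1.0 - x * x) * math.exp(-x * x / 2.0)

def cwt(signal, scales):
    """Direct CWT: one row of coefficients per scale, one column per time sample."""
    n = len(signal)
    rows = []
    for a in scales:
        half = int(5 * a)            # truncate the wavelet at +/- 5 scale units
        norm = 1.0 / math.sqrt(a)
        row = []
        for b in range(n):
            lo, hi = max(0, b - half), min(n, b + half + 1)
            row.append(norm * sum(signal[k] * ricker(k - b, a) for k in range(lo, hi)))
        rows.append(row)
    return rows

def to_grey_image(coeffs):
    """Scale |coefficients| to 0-255 grey levels so the scalogram can be fed to a CNN."""
    peak = max(abs(c) for row in coeffs for c in row) or 1.0
    return [[int(255 * abs(c) / peak) for c in row] for row in coeffs]

signal = [0.0] * 64
signal[32] = 1.0                      # hypothetical impact spike
image = to_grey_image(cwt(signal, scales=[1, 2, 4, 8]))
print(len(image), len(image[0]))      # 4 64
```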

Cao et al. (2016) proposed a fall detection system that collects acceleration data from the human chest using a wearable device containing a triaxial accelerometer. The collected data are used for fall detection with a Hidden Markov Model (HMM): Feature Sequences (FSs) extracted from the accelerometer signal are used to train the HMM. The framework achieved an accuracy, sensitivity, and specificity of 97.2%, 91.7%, and 100%, respectively. The HMM parameters in this work might not be optimal, as the training samples were collected from simulated falls rather than real-world falls. Aguiar et al. (2014) developed an unobtrusive smartphone-based fall detection system that continuously monitors the accelerometer data of a smartphone placed in the user’s belt or pocket. Fourteen signal components are computed from the acceleration vectors and passed through a Butterworth digital filter; these components comprise the x, y, and z projections, angles, and magnitude values along the three axes of the phone. A decision tree is used to select the most significant features and calculate their thresholds, which are then used in a state machine to classify fall events. The system also incorporates a rescue component: in a fall scenario, it sends the patient’s location to emergency services and caregivers, ensuring immediate medical assistance. The system was tested in two positions, belt and pocket, and its specificity and sensitivity were close to 99% and 97%, respectively, for both. Power consumption is a major concern, as the system continuously monitors the device’s accelerometer.
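HMM-based detection as in Cao et al. (2016) is commonly realized by training one model per class and labeling a new feature sequence with the class whose model assigns it the higher likelihood. The sketch below shows only the scoring step, the scaled forward algorithm for discrete observations. The three-symbol quantization (0 = low magnitude, 1 = about 1 g, 2 = impact) and all model parameters are hypothetical stand-ins for parameters that would normally be learned from training data (e.g., with Baum-Welch).

```python
import math

def forward_log_likelihood(obs, start, trans, emit):
    """log P(obs | HMM) via the forward algorithm, rescaled each step to avoid underflow."""
    n = len(start)
    alpha = [start[i] * emit[i][obs[0]] for i in range(n)]
    log_lik = 0.0
    for t in range(len(obs)):
        if t > 0:
            alpha = [sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][obs[t]]
                     for j in range(n)]
        scale = sum(alpha)
        log_lik += math.log(scale)
        alpha = [a / scale for a in alpha]
    return log_lik

# Hypothetical left-to-right fall model: upright -> falling -> impact/lying.
FALL = dict(start=[0.9, 0.1, 0.0],
            trans=[[0.8, 0.2, 0.0], [0.0, 0.6, 0.4], [0.0, 0.0, 1.0]],
            emit=[[0.05, 0.9, 0.05], [0.8, 0.15, 0.05], [0.05, 0.45, 0.5]])
# Hypothetical ADL model: steady posture <-> active movement.
ADL = dict(start=[0.7, 0.3],
           trans=[[0.9, 0.1], [0.2, 0.8]],
           emit=[[0.02, 0.95, 0.03], [0.1, 0.7, 0.2]])

def classify(obs):
    """Pick the class whose HMM explains the symbol sequence best."""
    fall = forward_log_likelihood(obs, **FALL)
    adl = forward_log_likelihood(obs, **ADL)
    return "fall" if fall > adl else "adl"

print(classify([1, 1, 1, 0, 0, 0, 2, 1, 1, 1]))  # fall
print(classify([1] * 10))                          # adl
```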

Lim et al. (2014) proposed a highly efficient activity monitoring system. To filter possible fall events, the system applies simple thresholds to fall-feature parameters calculated from a single triaxial accelerometer. An HMM is then applied to the possible fall events to distinguish actual falls from fall-like events. The system thus saves computational cost and resources by using the HMM only on candidate fall events. The sensor is chest-mounted, as the chest is closest to the body’s center of gravity. The best results were obtained with the threshold parameters set to A_SVM = 2.5 g and θ = 55°, for which the system achieved 99.5% accuracy, 99.69% specificity, and 99.17% sensitivity.
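The two-stage idea of Lim et al. (2014), where cheap thresholds screen candidates and the HMM runs only on them, can be sketched as follows. Assumptions: A_SVM is taken to be the peak acceleration magnitude in the window; θ is taken to be the angle between the sensor's z axis (assumed to point along the upright trunk) and measured gravity in a post-event sample; and `hmm_confirms` is a hypothetical callable standing in for the second-stage HMM.

```python
import math

A_SVM_THRESHOLD_G = 2.5      # threshold value reported by Lim et al. (2014)
THETA_THRESHOLD_DEG = 55.0   # threshold value reported by Lim et al. (2014)

def magnitude_g(sample):
    """Acceleration magnitude sqrt(ax^2 + ay^2 + az^2) in g."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)

def tilt_deg(sample):
    """Angle between the sensor z axis (assumed vertical when upright) and gravity."""
    cos_theta = sample[2] / magnitude_g(sample)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))

def is_candidate(window, posture_sample):
    """Stage 1: cheap thresholds on peak magnitude and post-event tilt."""
    peak = max(magnitude_g(s) for s in window)
    return peak > A_SVM_THRESHOLD_G and tilt_deg(posture_sample) > THETA_THRESHOLD_DEG

def detect(window, posture_sample, hmm_confirms):
    """Stage 2 (hmm_confirms) runs only on stage-1 candidates, saving computation."""
    return is_candidate(window, posture_sample) and hmm_confirms(window)

# Synthetic window: upright, a ~2.96 g impact, then lying roughly horizontal.
impact = [(0.0, 0.0, 1.0)] * 20 + [(0.5, 0.3, 2.9)] + [(1.0, 0.0, 0.1)] * 20
print(detect(impact, impact[-1], hmm_confirms=lambda w: True))  # True
```

The `and` short-circuits, so the expensive second stage is never called for windows that fail the thresholds, which mirrors the resource saving described above.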

2.1.2 Fall detection using depth camera

Depth cameras produce a 2D image representing the distance from a specific point to the points in a scene. The pixel values of the resulting image correspond to the depth, or distance, of those points. Images generated by RGB cameras do not contain depth information; their pixels correspond to the intensities of the corresponding points. Depth cameras and their depth images can therefore be used to determine the position of an object or a person in an environment (Xu et al. 2019) and to detect fall events (Xu et al. 2019), (Kong et al. 2019). Depth camera-based systems almost exclusively employ machine learning models to detect and classify fall and ADL events.
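How a depth image yields a person's position can be shown with the standard pinhole camera model: a pixel (u, v) with depth Z maps to camera-frame coordinates X = (u - c_x)Z/f_x and Y = (v - c_y)Z/f_y. In the Python sketch below, the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) are hypothetical values, since real systems read them from the camera's calibration, and the list of body pixels is assumed to come from a segmentation step that is not shown.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Map pixel (u, v) with depth Z (metres) to camera-frame coordinates (X, Y, Z)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

def body_centroid(pixels, depth, fx, fy, cx, cy):
    """Average 3D position of the pixels labelled as the person; depth[v][u] in metres."""
    points = [backproject(u, v, depth[v][u], fx, fy, cx, cy) for u, v in pixels]
    n = len(points)
    return tuple(sum(p[k] for p in points) / n for k in range(3))

# Hypothetical intrinsics for a 640 x 480 depth sensor.
FX = FY = 525.0
CX, CY = 319.5, 239.5
print(backproject(844.5, 239.5, 2.0, FX, FY, CX, CY))  # (2.0, 0.0, 2.0)
```

Tracking how such a centroid moves over time, for example a rapid drop in height, is one possible cue a depth-based detector can use.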

Ding et al. (2017) introduced a fall detection algorithm based on wavelet moments of depth images collected from a Kinect sensor. First, the algorithm normalizes the depth image by expressing each pixel relative to its distance from the centroid in polar coordinates. Feature vectors are then extracted after applying a Fast Fourier Transform (FFT) to the image, with a wavelet transform used in the extraction process. Finally, Support Vector Machine (SVM) classification and a minimum-distance method are used to detect falls. The algorithm was tested on 100 images (58 non-fall and 42 fall images), achieving an accuracy of about 88% on fall images and about 90% on non-fall images. The algorithm currently monitors only one user; its effectiveness with multiple users remains to be tested. Kong et al. (2017) proposed a fall detection algorithm that relies on an RGB-D camera placed 2 m above the ground. The binary images obtained from the depth camera are passed through a Canny edge detector to obtain the outline of the body. The tangent vector angles at all white pixels of the outline image are then calculated and grouped into 15° bins; when the tangent angles are (in most cases) below 45°, the event is considered a fall. The system achieved an accuracy, sensitivity, and specificity of 97.1%, 94.9%, and 100%, respectively. Because it uses an RGB-D camera, the system works even in dark conditions, and it also works in environments where more than one person is present. Tran et al. (2014) proposed a novel approach that defines and computes three distinct features (angle, distance, and velocity) of 8 upper-body joints (Head, Shoulder_Right, Shoulder_Center, Shoulder_Left, Spine, Hip_Right, Hip_center, Hip_Left).
Activities are represented by these three values (angle, distance, and velocity) for several joints of the human skeleton, which form the feature vectors of the prototype. These feature vectors are used as inputs for training and testing SVM classifiers. Combining two joints (head and spine) with two features (distance and velocity) provided the best results. In future work, the transition time should also be taken into account to reduce the number of false positives.
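The outline-based rule of Kong et al. (2017) can be approximated with the Python sketch below. It assumes the edge detection step has already produced an ordered list of outline points, approximates each tangent by the direction between consecutive points, folds angles into 0° (horizontal) to 90° (vertical), groups them into 15° bins, and flags a fall when more than a hypothetical `majority` share of the angles lies below 45°. The exact tangent computation and decision rule of the original work may differ.

```python
import math

def tangent_angles(outline):
    """Angle of each outline step from the horizontal, folded into [0, 90] degrees."""
    angles = []
    for (x0, y0), (x1, y1) in zip(outline, outline[1:]):
        if (x0, y0) != (x1, y1):
            angles.append(math.degrees(math.atan2(abs(y1 - y0), abs(x1 - x0))))
    return angles

def histogram_15deg(angles):
    """Counts in the six 15-degree bins [0,15), [15,30), ..., [75,90]."""
    bins = [0] * 6
    for a in angles:
        bins[min(int(a // 15), 5)] += 1
    return bins

def is_fall(outline, majority=0.5):
    """Fall if most tangent angles are below 45 degrees (outline mostly horizontal)."""
    bins = histogram_15deg(tangent_angles(outline))
    total = sum(bins)
    return total > 0 and sum(bins[:3]) / total > majority

def rectangle_outline(width, height):
    """Hypothetical stand-in for a traced silhouette outline."""
    return ([(x, 0) for x in range(width)] + [(width, y) for y in range(height)]
            + [(x, height) for x in range(width, 0, -1)]
            + [(0, y) for y in range(height, -1, -1)])

print(is_fall(rectangle_outline(10, 40)), is_fall(rectangle_outline(40, 10)))  # False True
```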

2.1.3 Fall detection using infrared sensor

An infrared (IR) sensor is an electronic sensor that measures the infrared light radiated by objects in its field of view. IR sensors can detect general movement but cannot provide information about the moving subject itself. Because humans emit infrared radiation, IR sensors can be used to monitor human movement (Martínez-Villaseñor et al. 2019), (Moulik and Majumdar 2019). Infrared-based systems are also mostly surveillance oriented. IR sensor data are normally used to create 3D images or blocks representing the infrared radiation of the environment (Mastorakis and Makris 2014). After feature extraction, various machine learning or statistical models are used to detect fall and ADL events (Martínez-Villaseñor et al. 2019), (Moulik and Majumdar 2019), (Mastorakis and Makris 2014).

Chen and Ma (2015) adopted an infrared sensor array composed of a 16 × 4 thermopile array with a corresponding 60° × 16.4° field of view. Two sensors, attached at different places on the wall, capture three-dimensional image information. The temperature difference is used to subtract a background model from the image to determine the foreground, i.e., the human body. The Angle of Arrival (AOA) at each sensor is obtained from the foreground temperature, an AOA-based positioning algorithm estimates the person's location, and a regression model then reduces the positioning error. As both sensors capture any action simultaneously, the fall detection algorithm extracts features from the sensor with the larger foreground region. The extracted features are fed to a k-Nearest Neighbor (k-NN) classifier, which classifies them into fall and non-fall events. The system distinguished fall events with 93% overall accuracy, 95.25% sensitivity, and 90.75% specificity. Jankowski et al. (2015) proposed a system based on IR depth sensor measurements. A feature selection block based on Gram-Schmidt orthogonalization and a Nonlinear Principal Component Analysis (NPCA) block are used to improve the effectiveness of discriminative statistical classifiers (multilayer perceptrons). The feature selection block ranks the features, and the NPCA block maps the raw data onto a nonlinear manifold, reducing its dimensionality to two. The system obtained an accuracy of 93% and a sensitivity of 92%. The deep learning classifier used 5 hidden neurons, whereas the neural networks used 15 hidden neurons.
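The background subtraction and k-NN steps of Chen and Ma (2015) can be sketched in Python as follows. The 1 °C foreground margin, the choice of features (foreground area, bounding-box height, and bounding-box width in pixels), the training examples, the 4-row by 16-column frame layout, and k = 3 are all hypothetical; the AOA positioning and regression steps are omitted.

```python
import math
from collections import Counter

def foreground(frame, background, delta=1.0):
    """1 where a pixel is warmer than the background model by more than delta (deg C)."""
    return [[1 if t - b > delta else 0 for t, b in zip(row, brow)]
            for row, brow in zip(frame, background)]

def blob_features(mask):
    """(area, bounding-box height, bounding-box width) of the foreground pixels."""
    rows = [i for i, row in enumerate(mask) if any(row)]
    cols = [j for j in range(len(mask[0])) if any(row[j] for row in mask)]
    if not rows:
        return (0, 0, 0)
    area = sum(map(sum, mask))
    return (area, rows[-1] - rows[0] + 1, cols[-1] - cols[0] + 1)

def knn_predict(train, query, k=3):
    """Majority label among the k training vectors nearest to query (Euclidean)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Hypothetical training set of (features, label) pairs.
TRAIN = [((4, 4, 1), "non-fall"), ((4, 3, 2), "non-fall"),
         ((5, 1, 5), "fall"), ((6, 2, 6), "fall")]

background = [[20.0] * 16 for _ in range(4)]   # 4 rows x 16 columns at 20 deg C
frame = [row[:] for row in background]
for j in range(5, 11):                          # a warm, wide, low blob
    frame[3][j] = 30.0
print(knn_predict(TRAIN, blob_features(foreground(frame, background))))  # fall
```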

2.1.4 Fall detection using radar

Radars track objects by using radio waves to determine their position, size, and velocity. A radar system normally consists of a transmitter that generates electromagnetic waves in the radio or microwave spectrum, a transmitting antenna, a receiving antenna, a receiver, and a processor that determines the characteristics of the objects. The transmitted waves reflect off objects, and the objects’ location and speed can be calculated from the reflected waves (Rana et al. 2019). Doppler radars, which exploit the Doppler effect, have been used extensively in fall detection systems (Yoshino et al. 2019), (Su et al. 2015). They emit microwave signals and analyze how moving objects alter the frequency of the returned signal. Various signal processing techniques are generally used to detect falls from radar data (Yoshino et al. 2019), (Sadreazami et al. 2019), (Sadreazami et al. 2020), (Ding et al. 2019), (Erol and Amin 2019).
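For a monostatic radar with carrier frequency f_0, a target moving radially at speed v shifts the returned frequency by f_d = 2 v f_0 / c, which is why the fast torso motion of a fall appears as a distinct burst in the Doppler signature. The short Python sketch below evaluates this relation; the 24 GHz carrier and the 2 m/s speed are illustrative assumptions, not values from the cited systems.

```python
C = 299_792_458.0  # speed of light in m/s

def doppler_shift_hz(radial_speed_mps, carrier_hz):
    """Two-way Doppler shift for a monostatic radar (valid for speeds much lower than c)."""
    return 2.0 * radial_speed_mps * carrier_hz / C

def radial_speed_mps(shift_hz, carrier_hz):
    """Invert the relation to recover radial speed from a measured shift."""
    return shift_hz * C / (2.0 * carrier_hz)

print(round(doppler_shift_hz(2.0, 24e9), 1))  # 320.2 (Hz)
```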

Jokanovic et al. (2016) used a monostatic continuous-wave (CW) radar for fall detection. Radar returns corresponding to human motion are nonstationary, so time-frequency (TF) analysis can be used to reveal the constant and higher-order velocity components of the various moving parts of the human body. The system uses a TF-based deep learning approach: stacked auto-encoders automatically capture the properties of the TF signatures, and the learned features are fed to a softmax regression classifier. The system achieved an 87% success rate in detecting fall events. Su et al. (2015) developed a detection system based on a ceiling-mounted Doppler range control radar, which senses falls and non-falls through the Doppler effect. The wavelet transform (WT) is used to distinguish among activity events: the system first uses the wavelet decomposition coefficients at a given scale to identify the time locations of possible fall events, and then extracts the time-frequency content from the wavelet coefficients at many scales to form a feature vector for classification. Of the wavelet functions tested, the highest detection accuracy was reached with “bior2.2”, “db3”, “rbio1.3”, “rbio3.3”, and “sym3” for the prescreening stage and “bior2.6”, “coif4”, “db10”, “db11”, and “rbio3.3” for the classification stage. Because it does not compromise the user’s privacy, the system can be used in any indoor setting, including bathrooms. With the WT prescreener and classifier, the system achieved 93% accuracy, 97.1% sensitivity, and 92.2% specificity.
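Time-frequency analysis of a radar return, as used by Jokanovic et al. (2016), is typically based on a spectrogram, i.e., the magnitude of a short-time Fourier transform (STFT). The pure-Python sketch below computes one with a periodic Hann window and a direct DFT. The window size and hop are hypothetical, and the input is assumed to be a real-valued signal; a complex I/Q radar return would need the full spectrum to separate approaching from receding motion.

```python
import cmath
import math

def stft_magnitude(signal, window_size=32, hop=8):
    """Rows are time frames; columns are DFT bins 0..window_size // 2."""
    hann = [0.5 - 0.5 * math.cos(2 * math.pi * n / window_size) for n in range(window_size)]
    frames = []
    for start in range(0, len(signal) - window_size + 1, hop):
        seg = [signal[start + n] * hann[n] for n in range(window_size)]
        frames.append([abs(sum(seg[n] * cmath.exp(-2j * math.pi * k * n / window_size)
                               for n in range(window_size)))
                       for k in range(window_size // 2 + 1)])
    return frames

# A test tone at exactly 4 cycles per 32-sample window should peak in bin 4.
tone = [math.cos(2 * math.pi * 4 * n / 32) for n in range(128)]
spec = stft_magnitude(tone)
print(len(spec), max(range(17), key=lambda k: spec[0][k]))  # 13 4
```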

2.1.5 Fall detection using 802.11n NIC

The wireless medium carries electromagnetic signals in the radio or microwave spectrum that encode binary data. Because the human body affects how these signals propagate, the resulting channel data can be used in machine learning or statistical models for fall detection (Fung et al. 2019), (Wang et al. 2017).

Wang et al. (2017) developed a system named WiFall. Because human activities affect the wireless medium, WiFall uses the temporal variability and spatial diversity of Channel State Information (CSI) as an indicator of human activity. CSI estimates the channel properties of a communication link, and human motion alters the wireless propagation space, creating distinct patterns in the received signal. As CSI is available in almost all current wireless infrastructure, WiFall requires no hardware modification, wearable devices, or environmental modifications; it was run on laptops equipped with commercial 802.11n NICs. A one-class SVM was used to detect falls based on features extracted from the anomalous patterns. WiFall achieved an 87% detection rate and an 18% false detection rate in laboratory experiments.

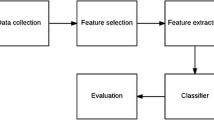

The reviewed single sensor-based works show three distinct stages in single sensor-based fall detection systems. The first is the data collection stage, in which a single sensing modality, e.g., a tri-axial accelerometer, depth camera, infrared sensor, radar, or 802.11n NIC, collects raw data from the patients. The second is the feature extraction stage, in which meaningful information and features are extracted from the collected data; most of the reviewed works used methods such as ESAEs, FFT, wavelet transforms, and stacked auto-encoders. The third is the classification stage, in which the extracted features are classified using threshold-based or artificial intelligence-based methods. Based on these three stages, a general architecture of single sensor-based fall detection systems derived from the reviewed works is presented in Fig. 2.

Table 1 summarizes the above-described single sensor-based systems with respect to sensor type, sensor location, portability, indoor/outdoor use, user privacy, accuracy, sensitivity, and specificity.

2.2 Multiple sensor-based fall detection systems

Multiple sensor-based systems depend on multiple sensors to capture the real-world scenario. They normally rely on a fusion of sensors, such as a gyroscope, an accelerometer, and a depth camera, for data collection. The data from each sensor are processed separately and then used in threshold-based algorithms (De Cillis et al. 2015) or machine learning models (Boutellaa et al. 2019), (Wu et al. 2019) for detection.

2.2.1 Fall detection using accelerometer and camera

Accelerometers measure the acceleration of a body along several axes. Cameras provide information about the movement of the patient as well as the environment around the patient. Both still images and video sequences can be used for fall detection. Still images are normally multidimensional matrices in which each entry corresponds to a pixel and represents the light intensity at that location. Videos are sequences of still images. Various image processing techniques based on shape information and segmentation can be applied to video sequences for fall detection (Ozcan and Velipasalar 2016), (Zerrouki et al. 2016).

Zerrouki et al. (2016) applied the Exponentially Weighted Moving Average (EWMA) monitoring scheme to the accelerometric data to detect potential falls. Only the features corresponding to detected falls were then classified by an SVM into true falls and fall-like events. A background subtraction technique was used to extract the body silhouette from the input image sequence: using the background image as a reference, unchanged pixels in the frame sequence were eliminated. This EWMA-SVM classification system outperformed Neural Network (AUC = 0.94), k-NN (AUC = 0.93), and Naive Bayes (AUC = 0.95) classifiers, reaching an AUC of 0.97, and achieved an overall accuracy of 96.77%. However, the system still has some shortcomings. The RGB camera it uses cannot extract the human silhouette in dark conditions. The system was tested on the University of Rzeszow Fall Detection dataset (URFD) (Kwolek and Kepski 2014), which contains falls and ADLs performed by volunteers who are generally over the age of 26 and therefore do not represent the elderly population.
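The EWMA monitoring step can be sketched as a standard EWMA control chart. This is a minimal illustration, assuming a single monitored accelerometer statistic (for example, the acceleration magnitude) whose in-control mean `mu0` and standard deviation `sigma` were estimated beforehand from normal activity; the smoothing weight `lam=0.2` and the limit width `L=3.0` are common textbook defaults, not values reported by Zerrouki et al. (2016). Samples that push the EWMA statistic outside its time-varying control limits are flagged as candidate falls, which in the reviewed system would then be passed to the SVM.

```python
import math


def ewma_alarms(x, mu0, sigma, lam=0.2, L=3.0):
    """Return indices where the EWMA statistic leaves its control limits.

    z_t = lam * x_t + (1 - lam) * z_{t-1}, starting from z_0 = mu0.
    Limits: mu0 +/- L * sigma * sqrt(lam / (2 - lam) * (1 - (1 - lam)^(2t))).
    """
    z = mu0
    alarms = []
    for t, xt in enumerate(x, start=1):
        z = lam * xt + (1 - lam) * z
        half_width = L * sigma * math.sqrt(lam / (2 - lam) * (1 - (1 - lam) ** (2 * t)))
        if abs(z - mu0) > half_width:
            alarms.append(t - 1)  # report 0-based sample index
    return alarms


# Acceleration magnitude in g: quiet standing, then a single impact spike.
print(ewma_alarms([1.0, 1.0, 1.0, 3.0, 1.0], mu0=1.0, sigma=0.1))
```

Because the EWMA statistic decays slowly, the alarm persists for a few samples after the spike; a practical system would group consecutive alarms into one candidate event.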

Ozcan and Velipasalar (2016) proposed a system that employs the camera and accelerometer of smartphones to assist the elderly. The system uses histograms of edge orientations together with gradient local binary patterns as its main features. For the accelerometer-based part of fall detection, the system checks whether the magnitude of the 3-axis vector exceeds an empirically determined threshold. This 3-axis vector is the linear acceleration, obtained by removing the gravity component from each axis. The system performed better than other systems that used Histograms of Oriented Gradients (HOG) and its variants for feature extraction. It achieved 96.36% sensitivity and 92.45% specificity in detecting falls from standing, and 90.91% sensitivity and 66.04% specificity in detecting falls from sitting.
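The accelerometer check described above (remove gravity, then compare the linear-acceleration magnitude against a threshold) can be sketched as follows. The gravity estimate here uses a first-order low-pass filter, a common smartphone technique that is an assumption on our part rather than the method confirmed by Ozcan and Velipasalar (2016); the smoothing factor `alpha` and the threshold value are hypothetical. Readings are in m/s², and the filter is initialized with the first sample, which assumes the phone starts at rest.

```python
import math


def linear_acceleration(samples, alpha=0.8):
    """Remove gravity from (x, y, z) readings with a first-order low-pass filter.

    The low-pass output tracks the slowly varying gravity vector; subtracting it
    leaves the linear acceleration on each axis.
    """
    gravity = list(samples[0])  # assume the device starts at rest
    linear = []
    for sample in samples:
        for axis in range(3):
            gravity[axis] = alpha * gravity[axis] + (1 - alpha) * sample[axis]
        linear.append(tuple(sample[axis] - gravity[axis] for axis in range(3)))
    return linear


def linear_magnitude_exceeds(samples, threshold):
    """True if any linear-acceleration magnitude exceeds the (hypothetical) threshold."""
    return any(
        math.sqrt(x * x + y * y + z * z) > threshold
        for x, y, z in linear_acceleration(samples)
    )


still = [(0.0, 0.0, 9.81)] * 10
impact = still[:5] + [(15.0, 0.0, 9.81)]
print(linear_magnitude_exceeds(still, 5.0), linear_magnitude_exceeds(impact, 5.0))
```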

2.2.2 Fall detection using accelerometer and gyroscope

While accelerometers capture the overall motion of a body along several axes, gyroscopes provide information about its orientation and angular velocity. Accelerometers and gyroscopes can be combined to create highly accurate fall detection systems (Bet et al. 2019), (Kwon et al. 2019).

Wu et al. (2019) proposed a multiple sensor-based fall detection system that uses a novel threshold derived from a multivariate control chart to detect falls in motion data. Two types of sensors, namely accelerometers and gyroscopes, are fixed on the waist, arm, and thigh of the monitored person. In the data processing stage, the Autoregressive Integrated Moving Average (ARIMA) technique is used to remove autocorrelation, and PCA is used to reduce the dimensionality of the multidimensional data. In the classification stage, falls and ADLs are differentiated using a multivariate statistical process control chart. The system is person-specific, as the threshold value is calculated from the individual's historical data. It achieved 95.2% specificity and 94.8% sensitivity.
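A person-specific multivariate control chart can be illustrated with Hotelling's T² statistic, a standard multivariate chart; whether it matches the exact chart and limit used by Wu et al. (2019) is an assumption. The sketch assumes the ARIMA and PCA steps have already reduced each observation to two principal-component scores, fits a mean and covariance to one person's historical ADL data, and flags a new observation whose T² exceeds a chi-square limit. For two degrees of freedom the upper chi-square quantile is exactly −2 ln(α); for small histories an F-distribution-based limit would be more precise.

```python
import math
from statistics import mean


def fit_person_model(history):
    """Mean vector and inverse covariance of one person's 2-D feature history."""
    n = len(history)
    m1 = mean(p[0] for p in history)
    m2 = mean(p[1] for p in history)
    s11 = sum((p[0] - m1) ** 2 for p in history) / (n - 1)
    s22 = sum((p[1] - m2) ** 2 for p in history) / (n - 1)
    s12 = sum((p[0] - m1) * (p[1] - m2) for p in history) / (n - 1)
    det = s11 * s22 - s12 * s12
    # Closed-form inverse of the 2x2 covariance matrix [[s11, s12], [s12, s22]]
    inv = (s22 / det, -s12 / det, s11 / det)
    return (m1, m2), inv


def hotelling_t2(point, model):
    """Hotelling T^2 distance of a new observation from the person's baseline."""
    (m1, m2), (a, b, c) = model
    d1, d2 = point[0] - m1, point[1] - m2
    return a * d1 * d1 + 2 * b * d1 * d2 + c * d2 * d2


def is_out_of_control(point, model, alpha=0.01):
    """Flag a potential fall when T^2 exceeds the chi-square(2) upper limit."""
    limit = -2 * math.log(alpha)  # about 9.21 for alpha = 0.01
    return hotelling_t2(point, model) > limit


history = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (0.5, 0.5)]
model = fit_person_model(history)
print(is_out_of_control((1.0, 1.0), model), is_out_of_control((3.0, 3.0), model))
```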

De Cillis et al. (2015) introduced a smartphone-based fall detection solution that used an accelerometer and a gyroscope to collect data in real time (from inertial measurement units and/or smartphones). The system combined the user's heading with the instantaneous acceleration magnitude in a Threshold Based Algorithm (TBA). The best performance was obtained when the threshold-based algorithm combined gyroscope and accelerometer information, which reached 100% accuracy in discriminating between falls and ADLs.

Huynh et al. (2015) developed an approach that combines accelerometer and gyroscope sensors for robust fall detection. The system optimized its thresholds using the Receiver Operating Characteristic (ROC) curve and an iterative analysis of sensitivity and specificity, and determined the critical lower fall threshold for acceleration (LFTacc), upper fall threshold for acceleration (UFTacc), and upper fall threshold for angular velocity (UFTgyro) to be 0.30–0.35 g, 2.4 g, and 240°/s, respectively. Using the accelerometer, the gyroscope, and this ROC optimization strategy, the system achieved 96.3% sensitivity and 96.2% specificity. For testing, the sensors were worn on the chest. While the system is very effective at detecting falls, it might be less effective in near-fall scenarios.
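The three thresholds reported by Huynh et al. (2015) can be combined into a simple detector, sketched below. The threshold values come from the text (the upper end of the 0.30–0.35 g LFT range is used), but the decision sequence is an assumption for illustration: a free-fall dip below LFTacc must be followed, within a hypothetical window of 10 samples, by an impact above UFTacc that coincides with angular velocity above UFTgyro. Inputs are per-sample magnitudes of acceleration (g) and angular velocity (°/s).

```python
LFT_ACC_G = 0.35       # lower fall threshold, acceleration (g), from the reported range
UFT_ACC_G = 2.4        # upper fall threshold, acceleration (g)
UFT_GYRO_DPS = 240.0   # upper fall threshold, angular velocity (deg/s)


def huynh_style_fall(acc_mag_g, gyro_mag_dps, window=10):
    """Flag a fall when a free-fall dip is followed by an impact with high rotation.

    The ordering of the checks and `window` are illustrative assumptions,
    not necessarily the logic of the original algorithm.
    """
    for i, acc in enumerate(acc_mag_g):
        if acc < LFT_ACC_G:  # free-fall phase
            end = min(i + 1 + window, len(acc_mag_g))
            for j in range(i + 1, end):
                if acc_mag_g[j] > UFT_ACC_G and gyro_mag_dps[j] > UFT_GYRO_DPS:
                    return True  # impact with rapid rotation
    return False


fall = huynh_style_fall([1.0, 0.3, 0.8, 2.6, 1.0], [5, 50, 150, 260, 10])
jump = huynh_style_fall([1.0, 1.2, 2.6, 1.0], [5, 10, 100, 5])
print(fall, jump)
```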

2.2.3 Fall detection using accelerometer, gyroscope and depth camera

Still images generated from RGB cameras do not contain any depth information about the subject or the environment. Depth cameras generate images that contain the depth information of the environment. This depth information can be used to accurately track a subject’s location in the environment (Xu et al. 2019), (Kong et al. 2019), (Kwolek and Kepski 2014).

Kwolek and Kepski (2014) used a tri-axial accelerometer and a gyroscope to monitor the user's motion and detect potential falls. If the measured acceleration exceeds a set threshold, the person is extracted from the depth images captured by the Kinect, features are calculated, and an SVM classifier classifies the action and, if a fall is recognized, triggers the fall alarm. Using depth images and accelerometer data with the SVM, the system achieved an accuracy, sensitivity, and specificity of 98.33%, 100%, and 96.67%, respectively. The dataset developed and used in this work, the University of Rzeszow Fall Detection dataset (URFD) (Kwolek and Kepski 2014), is publicly available. However, because the system relies heavily on depth images from the Kinect sensor, it is most suitable for indoor use: outdoors, sunlight interferes with the Kinect's depth estimation.
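The two-stage design (a cheap accelerometer trigger that gates a more expensive depth-image classifier) can be sketched as follows. Everything concrete here is hypothetical: the trigger threshold, the two depth features (bounding-box height-to-width ratio and the person's centroid height above the floor in metres), and the hand-picked linear decision function, which merely stands in for the trained SVM used by Kwolek and Kepski (2014).

```python
import math

ACC_TRIGGER_G = 2.5  # hypothetical accelerometer trigger threshold (g)


def depth_features(bbox_height_px, bbox_width_px, centroid_height_m):
    """Two illustrative features of the person segmented from the depth map."""
    return (bbox_height_px / bbox_width_px, centroid_height_m)


def depth_says_lying(features, weights=(-1.0, -2.0), bias=1.5):
    """Hypothetical linear decision function standing in for a trained SVM."""
    ratio, height = features
    return weights[0] * ratio + weights[1] * height + bias > 0


def fall_alarm(acc_xyz, bbox_height_px, bbox_width_px, centroid_height_m):
    x, y, z = acc_xyz
    if math.sqrt(x * x + y * y + z * z) <= ACC_TRIGGER_G:
        return False  # no trigger: the depth stage is never run
    return depth_says_lying(depth_features(bbox_height_px, bbox_width_px, centroid_height_m))


print(fall_alarm((2.0, 1.5, 2.0), 40, 160, 0.2))  # impact and lying on the floor
print(fall_alarm((2.0, 1.5, 2.0), 180, 50, 1.0))  # impact but still upright
```

Gating the depth stage on the accelerometer keeps the computationally heavy image processing idle most of the time.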

2.2.4 Fall detection using accelerometer, cardiotachometer and smart sensors

A cardiotachometer is a device used for prolonged graphical recording of the heartbeat. Fall events can cause an accelerated heart rate, so cardiotachometer readings are useful in detecting falls (Gia et al. 2018).

Wang et al. (2014) proposed a multi-functional data acquisition board incorporating temperature and humidity sensors, a low-power 3-axis accelerometer, a GPS module, a cardiotachometer, and a wireless communication module. The threshold-based algorithm used in this system relies on three features for accurate fall detection: impact magnitude, trunk angle, and post-event heart rate. The system achieved 97.5% total accuracy, 96.8% sensitivity, and 98.1% specificity.
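A threshold rule over the three features named above might look like the sketch below. All threshold values are hypothetical, and two modeling choices are assumptions rather than details confirmed by Wang et al. (2014): the trunk angle is computed as the angle between the sensor's z axis (assumed to point along the trunk when upright) and the gravity vector from a static post-event reading, and the heart-rate feature is expressed as the rise from the pre-event rate.

```python
import math

IMPACT_G = 2.5          # hypothetical impact magnitude threshold (g)
TRUNK_ANGLE_DEG = 60.0  # hypothetical trunk inclination threshold (degrees)
HR_RISE_BPM = 15.0      # hypothetical post-event heart-rate rise (beats/min)


def trunk_angle_deg(ax, ay, az):
    """Angle between the sensor z axis and gravity, from a static reading."""
    mag = math.sqrt(ax * ax + ay * ay + az * az)
    cos_angle = max(-1.0, min(1.0, az / mag))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle))


def is_fall(impact_g, posture_acc, hr_before_bpm, hr_after_bpm):
    """All three conditions must hold: strong impact, inclined trunk, raised heart rate."""
    return (
        impact_g > IMPACT_G
        and trunk_angle_deg(*posture_acc) > TRUNK_ANGLE_DEG
        and hr_after_bpm - hr_before_bpm > HR_RISE_BPM
    )


print(is_fall(3.0, (1.0, 0.0, 0.05), 75, 100))  # lying after impact, heart rate up
print(is_fall(3.0, (0.0, 0.0, 1.0), 75, 100))   # impact but trunk still upright
```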

2.2.5 Fall detection using accelerometer, gyroscope and UWB location tags

UWB location tags provide continuous location information to the receiver. This location information can be used to determine the real-time location of a patient in a closed environment. Several other sensors can be combined with the location information to detect fall events (Gjoreski et al. 2014).

Gjoreski et al. (2014) proposed a system named CoFDILS that uses body-worn inertial and location sensors. Three context components, namely the user's activity, body accelerations, and location, are used to determine whether a fall has occurred. The system uses a context-based reasoning scheme in which each of the three components uses the information from the other two as context to determine the user's situation. Each component is assigned a context variable that holds the component's value at each point in time. A total of six inertial sensors were placed on the user's body: on the chest, waist, left thigh, left ankle, right thigh, and right ankle. Only the sensors on the user's legs and waist were studied. A total of four location tags were placed on the chest, waist, and left and right ankles, and their UWB (Ultra-Wide Band) radio signals were tracked by sensors fixed in the corners of the room. After the acquired data were processed, various machine learning methods (decision trees, k-NN, Naive Bayes, Random Forest, and SVM) were used for classification. A single sensor enclosure containing one inertial sensor and one location sensor placed on the chest achieved 96.6% success in fall detection and 93.3% success in activity recognition. Random Forest provided the best classification results.
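The intuition behind context-based reasoning (one component's output is interpreted in light of the others) can be shown with a deliberately simplified rule set. This is a hypothetical illustration, not the CoFDILS reasoning scheme, which derives each component from machine-learned classifiers: here an impact only counts as a fall if the activity component reports lying and the location component places the user somewhere lying is unexpected. The impact threshold and the set of "expected lying" locations are assumptions.

```python
from dataclasses import dataclass

IMPACT_G = 2.0                        # hypothetical impact threshold (g)
LYING_ALLOWED = frozenset({"bed", "sofa"})  # hypothetical places where lying is normal


@dataclass
class Context:
    activity: str      # output of the activity component, e.g. "lying", "standing"
    peak_acc_g: float  # output of the acceleration component
    location: str      # output of the location component, e.g. "floor", "bed"


def is_fall(ctx: Context) -> bool:
    """Each component is read in the context of the other two."""
    impact = ctx.peak_acc_g > IMPACT_G
    lying_where_unexpected = ctx.activity == "lying" and ctx.location not in LYING_ALLOWED
    return impact and lying_where_unexpected


print(is_fall(Context("lying", 3.0, "floor")))  # impact, then lying on the floor
print(is_fall(Context("lying", 3.0, "bed")))    # flopping onto the bed
```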

2.2.6 Fall detection using accelerometer, gyroscope and magnetometer

Sanchez and Muñoz (2019) introduced a multiple sensor-based wrist-worn fall detection system that uses an artificial neural network (ANN) to differentiate between falls and ADLs. Three types of sensors, namely an accelerometer, a gyroscope, and a magnetometer, were used in the prototype. The neural network was very simple, with a single hidden layer of eight neurons. The system achieved 98.10% in each of accuracy, sensitivity, and specificity. However, all tests were performed under laboratory conditions, and the prototype was bulky.

Boutellaa et al. (2019) proposed a multiple wearable sensor-based fall detection system that uses the covariance matrix to fuse signals from the sensors and a nearest neighbor classifier to differentiate between falls and ADLs. In the data collection stage, three sensors are used: an accelerometer, a gyroscope, and a magnetometer. In the feature extraction stage, the covariance matrix fuses the multiple signals. In the detection stage, Riemannian metrics and k-NN are used to classify activities into three types: falls, risk-falls, and ADLs. Two publicly available datasets served as the main data sources; no prototype was built. The system achieved 92.5% accuracy.
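Covariance-based fusion followed by nearest-neighbor classification can be sketched as below. Each window of synchronized sensor channels is summarized by its sample covariance matrix, and a query window is labeled with the class of the closest training matrix. For simplicity this sketch uses the Frobenius (Euclidean) distance between matrices as a plain stand-in for the Riemannian metrics used by Boutellaa et al. (2019), and only two hypothetical classes ("adl" and "fall") with made-up toy signals are shown.

```python
from statistics import mean


def covariance_matrix(channels):
    """Fuse C synchronized channels (each a list of T samples) into a C x C covariance matrix."""
    t = len(channels[0])
    means = [mean(ch) for ch in channels]
    return [
        [sum((a - mi) * (b - mj) for a, b in zip(ci, cj)) / (t - 1)
         for cj, mj in zip(channels, means)]
        for ci, mi in zip(channels, means)
    ]


def frobenius(a, b):
    """Frobenius distance: a simple stand-in for a Riemannian distance on SPD matrices."""
    return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)) ** 0.5


def nearest_neighbor(query, training):
    """1-NN: `training` is a list of (covariance_matrix, label) pairs."""
    return min(training, key=lambda item: frobenius(query, item[0]))[1]


# Toy two-channel windows (e.g. acceleration magnitude and angular rate).
training = [
    (covariance_matrix([[1.0, 1.1, 0.9, 1.0], [0.0, 5.0, -5.0, 0.0]]), "adl"),
    (covariance_matrix([[1.0, 3.0, 0.2, 1.0], [0.0, 250.0, -200.0, 10.0]]), "fall"),
]
query = covariance_matrix([[1.0, 2.8, 0.3, 1.0], [0.0, 240.0, -190.0, 5.0]])
print(nearest_neighbor(query, training))
```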

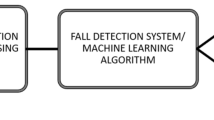

The same pattern observed for single sensor-based systems holds for the reviewed multiple sensor-based works, which also involve three distinct stages. The first is the data collection stage, in which a combination of sensing modalities is used to collect raw data from patients. The reviewed works used various combinations, such as tri-axial accelerometer, gyroscope, magnetometer, UWB location tag, etc. The second is the feature extraction stage, in which various methods are used to extract meaningful information from the raw sensor data. The majority of the reviewed multiple sensor-based works used methods such as EWMA, context-based reasoning, histogram of oriented gradients, etc. The third is the classification stage, in which the extracted features are assigned to classes. The reviewed multiple sensor-based fall detection systems used threshold-based and artificial intelligence-based methods for classification. From these observations, the general architecture illustrated in Fig. 3 is derived for multiple sensor-based fall detection systems.

Table 2 summarizes the above-described multiple sensor-based systems considering the following issues: sensor type, location of the used sensor, portability of the system, indoor-outdoor use, user privacy, accuracy, sensitivity, and specificity.

3 Results and discussion

Several performance metrics are used to compare the effectiveness of systems; accuracy, specificity, and sensitivity are the ones used here. In the context of fall detection, True Positives (TP) are fall instances correctly classified as falls, and False Positives (FP) are non-fall instances incorrectly classified as falls. True Negatives (TN) are non-fall instances correctly classified as non-falls, and False Negatives (FN) are fall instances incorrectly classified as non-falls. A reliable system should strive for low false positive and false negative rates.

Accuracy is the proportion of correctly classified instances among all instances. It is calculated as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Sensitivity, also termed Recall or True Positive Rate (TPR), is the proportion of actual positives that are correctly classified as positives. It is calculated as follows:

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

Specificity, also termed Selectivity or True Negative Rate (TNR), is the proportion of actual negatives that are correctly classified as negatives. It is calculated as follows:

$$\text{Specificity} = \frac{TN}{TN + FP}$$
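The three metrics translate directly into code. The confusion-matrix counts in the example are hypothetical and do not correspond to any reviewed system.

```python
def accuracy(tp, tn, fp, fn):
    """Proportion of all instances that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def sensitivity(tp, fn):
    """True positive rate: share of actual falls that were detected."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True negative rate: share of actual non-falls correctly rejected."""
    return tn / (tn + fp)


# Hypothetical evaluation: 50 falls (48 detected) and 100 ADLs (95 rejected).
tp, fn, tn, fp = 48, 2, 95, 5
print(f"accuracy={accuracy(tp, tn, fp, fn):.4f}, "
      f"sensitivity={sensitivity(tp, fn):.2f}, specificity={specificity(tn, fp):.2f}")
```

Reporting all three values matters because fall datasets are often imbalanced: a system that labels every instance as a non-fall can still reach high accuracy on an ADL-heavy dataset while its sensitivity is zero.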

The accuracy of all the reviewed systems is presented in Fig. 4, where single sensor-based and multiple sensor-based systems are shown in two different colors. Among the single sensor-based systems, the accelerometer-based system proposed in (Lim et al. 2014) achieved the highest accuracy of 99.50%. The waist-mounted accelerometer-based system proposed in (Yacchirema et al. 2019) achieved 98.72% accuracy. The accelerometer-based system introduced in (Cao et al. 2016) and the RGB-D camera-based system introduced in (Kong et al. 2017) achieved accuracies of 97.20% and 97.10%, respectively. The monostatic CW radar-based system developed in (Jokanovic et al. 2016) and the 802.11n NIC-based system developed in (Wang et al. 2017) both achieved 87.0% accuracy, the lowest among all the reviewed systems. Among the multiple sensor-based systems, the accelerometer and gyroscope-based system in (De Cillis et al. 2015) achieved the highest accuracy of 100.0%, followed by the accelerometer, gyroscope, and depth camera-based system in (Kwolek and Kepski 2014) at 98.33%. Across all the reviewed systems, the multiple sensor-based system in (De Cillis et al. 2015) therefore achieved the highest accuracy (100.0%), the single sensor-based system in (Lim et al. 2014) the second highest (99.50%), and the single sensor-based systems in (Jokanovic et al. 2016) and (Wang et al. 2017) the lowest (87.0%). The systems developed in (Aguiar et al. 2014), (Tran et al. 2014), (Ozcan and Velipasalar 2016), and (Huynh et al. 2015) did not report accuracy, which makes them harder to compare with the other systems.

The sensitivity of all the reviewed systems is presented in Fig. 5, again with single sensor-based and multiple sensor-based systems shown in two different colors. Among the single sensor-based systems, the accelerometer-based system proposed in (Chen et al. 2019) achieved the highest sensitivity of 99.30%. The accelerometer-based system designed in (Lim et al. 2014) achieved 99.17% sensitivity. The Doppler radar-based system introduced in (Su et al. 2015) and the accelerometer-based system introduced in (Aguiar et al. 2014) achieved sensitivities of 97.1% and 97.0%, respectively. Among the multiple sensor-based systems, the accelerometer, gyroscope, and depth camera-based system developed in (Kwolek and Kepski 2014) achieved the highest sensitivity of 100.0%. The accelerometer, gyroscope, and magnetometer-based system proposed by (Sanchez and Muñoz 2019) achieved 98.10% sensitivity. The accelerometer and camera-based system proposed in (Ozcan and Velipasalar 2016), the accelerometer and gyroscope-based system described in (Huynh et al. 2015), and the accelerometer, cardiotachometer, and smart sensor-based system introduced in (Jin Wang et al. 2014) achieved sensitivities of 96.36%, 96.30%, and 96.80%, respectively. Across all the reviewed systems, the multiple sensor-based system proposed in (Kwolek and Kepski 2014) achieved the highest sensitivity (100.0%), and the system introduced in (Chen et al. 2019) the second highest (99.30%). The systems proposed in (Ding et al. 2017), (Tran et al. 2014), (Jokanovic et al. 2016), (Wang et al. 2017), (Zerrouki et al. 2016), (De Cillis et al. 2015), and (Gjoreski et al. 2014) did not report sensitivity.

The specificity of all the reviewed systems is presented in Fig. 6, with two colors differentiating single sensor-based and multiple sensor-based systems. Among the single sensor-based systems, the accelerometer-based system proposed in (Cao et al. 2016) and the RGB-D camera-based system introduced in (Kong et al. 2017) achieved the highest specificity (100%). The accelerometer-based systems proposed in (Aguiar et al. 2014) and (Lim et al. 2014) achieved similar specificities of 99.0% and 99.69%, respectively. Among the multiple sensor-based systems, the accelerometer, cardiotachometer, and smart sensor-based system proposed in (Jin Wang et al. 2014) and the accelerometer, gyroscope, and magnetometer-based system proposed in (Sanchez and Muñoz 2019) achieved the highest specificity of 98.1%. The accelerometer and gyroscope-based system introduced in (Huynh et al. 2015) and the accelerometer, gyroscope, and depth camera-based system introduced in (Kwolek and Kepski 2014) achieved specificities of 96.2% and 96.67%, respectively. Across all the reviewed systems, the single sensor-based systems proposed in (Cao et al. 2016) and (Kong et al. 2017) achieved the highest specificity, and the system described in (Lim et al. 2014) the second highest (99.69%). The systems proposed in (Ding et al. 2017), (Tran et al. 2014), (Jankowski et al. 2015), (Jokanovic et al. 2016), (Wang et al. 2017), (Zerrouki et al. 2016), (De Cillis et al. 2015), and (Gjoreski et al. 2014) did not report specificity.

In most of the developed systems, the researchers reported only one or two of the three performance metrics. Among single sensor-based systems, only those proposed in (Chen et al. 2019), (Yhdego et al. 2019), and (Lim et al. 2014) scored above 95% in all three metrics; of these, the system developed in (Lim et al. 2014) achieved the highest accuracy, sensitivity, and specificity, at 99.5%, 99.17%, and 99.69%, respectively. Among multiple sensor-based systems, only those developed in (Kwolek and Kepski 2014), (Jin Wang et al. 2014), and (Sanchez and Muñoz 2019) scored above 95% in all three metrics.

Both single sensor-based and multiple sensor-based fall detection systems achieve high accuracy, sensitivity, and specificity. Using multiple sensors does increase the overall accuracy, sensitivity, and specificity, but only modestly. Accelerometers are the most frequently used sensors among the reviewed systems, with gyroscopes a close second.

We evaluated the systems on three criteria: portability, indoor/outdoor use, and user privacy. The portability score represents how easily the system can be carried by the monitored person and is given on a scale of 0–2: a score of 0 means the system is not portable, 1 means it is somewhat portable, and 2 means it is highly portable.

The indoor/outdoor use attribute represents the environments in which the system can be used. It takes one of three values: "I" means the system can only be used indoors, "O" means it can only be used outdoors, and "B" means it can be used both indoors and outdoors.

The privacy score represents the degree to which the system protects the monitored person's privacy. While all data leaks are harmful in one way or another, leaks of raw data from one type of sensor might be less harmful than leaks from another, depending on how much a third party can infer from the data. The privacy score is given on a scale of 0–2: a score of 0 means the system does not protect the user's privacy, i.e., it poses a high risk to it; 1 means it moderately protects the user's privacy; and 2 means it protects the user's privacy.

Among the reviewed systems, single or multiple sensor-based wrist-mounted solutions such as smartwatches are the most versatile, as they can be used in both indoor and outdoor environments. However, power efficiency and network connectivity are major issues for such devices, and limited connectivity makes it difficult to integrate fall rescue services into them. Waist-, thigh-, or chest-mounted solutions might be uncomfortable for users, but combining these mounting positions increases the overall effectiveness of the fall detection system.

Smartphone-based fall detection and rescue systems are good alternatives to embedded-system-based solutions. The availability of all common motion sensors, easy-to-use APIs, network connectivity, good battery life, and the widespread availability and affordability of smartphones make them a very good choice for developing fall detection systems. Most multiple sensor-based wearable fall detection systems are chest- or waist-mounted. Multiple sensor-based solutions that combine wearable and stationary sensors can only be used indoors, but they might be well suited to mass monitoring in nursing homes, hospitals, care centers, etc. Fall detection systems employing floor sensors, Doppler radars, various types of cameras, etc. can likewise only be used indoors and suit the same settings. None of the reviewed systems was restricted to outdoor use only.

User privacy is also a major concern for fall detection systems. Systems employing various cameras do not protect users' privacy, whereas accelerometer, gyroscope, magnetometer, and cardiotachometer-based solutions protect it relatively better. Portability is another important consideration. Fall detection systems that use cameras, IR sensors, floor sensors, or radars are not portable at all, whereas body-mounted systems employing motion sensors such as accelerometers, gyroscopes, barometers, and magnetometers are highly portable and adaptable.

4 Conclusion

This paper reviews automatic fall detection systems that use various types of sensors to capture the real-world environment. The reviewed systems use either single or multiple sensors to acquire fall-related data. The number of sensors does not, in general, dictate a system's accuracy; accuracy depends more on the features used for classification. Accelerometer-based single sensor systems are the best choice for wearable solutions. Kinect sensor-based fall detection systems cannot be used for outdoor monitoring, as sunlight degrades the accuracy of the depth estimation. Most monitoring systems do not protect users' privacy, whereas depth camera, Wi-Fi Channel State Information (CSI), Doppler radar, and infrared sensor array-based monitoring systems are well suited to preserving it and can even be deployed in bathrooms. However, most of these systems are limited in range and need multiple sensors deployed throughout the environment to provide accurate fall monitoring.

Most network-reliant systems are also vulnerable to attackers, so the overall security of patients should be considered when developing fall detection systems. Because most fall detection systems are tested on custom datasets, it is difficult to compare them based on their performance metrics. Fall detection systems should therefore be tested on common open-access datasets, and similar open-access datasets can be merged to create larger benchmark datasets. Computer vision or surveillance-based fall detection systems need substantial processing power and stop working during a power failure; hence, fail-safe systems should be developed that keep working when the primary system fails. Most fall detection systems have difficulty distinguishing lying down from falling, and depth image and radar-based systems face problems when furniture is present in the environment and the user falls behind it. The most important aspects in designing fall detection systems are computational cost, classifiers, energy consumption, environment, the presence of multiple people, privacy, threshold values, etc. We hope that this review will help researchers design better sensor-based fall detection systems.

References

Aguiar B, Rocha T, Silva J, Sousa I (2014) Accelerometer-based fall detection for smartphones. In: 2014 IEEE International Symposium on Medical Measurements and Applications (MeMeA). IEEE, pp 1–6

Bagalà F, Becker C, Cappello A et al (2012) Evaluation of accelerometer-based fall detection algorithms on real-world falls. PLoS ONE 7:e37062. https://doi.org/10.1371/journal.pone.0037062

Bergen G, Stevens MR, Burns ER (2016) Falls and fall injuries among adults aged ≥65 years—United States, 2014. MMWR Morb Mortal Wkly Rep 65:993–998. https://doi.org/10.15585/mmwr.mm6537a2

Bet P, Castro PC, Ponti MA (2019) Fall detection and fall risk assessment in older person using wearable sensors: a systematic review. Int J Med Inform 130:103946. https://doi.org/10.1016/j.ijmedinf.2019.08.006

Bin KS, Park J-H, Kwon C et al (2019) An energy-efficient algorithm for classification of fall types using a wearable sensor. IEEE Access 7:31321–31329. https://doi.org/10.1109/ACCESS.2019.2902718

Boutellaa E, Kerdjidj O, Ghanem K (2019) Covariance matrix based fall detection from multiple wearable sensors. J Biomed Inform 94:103189. https://doi.org/10.1016/j.jbi.2019.103189

Buke A, Gaoli F, Yongcai W et al (2015) Healthcare algorithms by wearable inertial sensors: a survey. China Commun 12:1–12. https://doi.org/10.1109/CC.2015.7114054

Cao H, Wu S, Zhou Z et al (2016) A fall detection method based on acceleration data and hidden Markov model. In: 2016 IEEE International Conference on Signal and Image Processing (ICSIP). IEEE, pp 684–689

Casilari E, Santoyo-Ramón J-A, Cano-García J-M (2017) Analysis of public datasets for wearable fall detection systems. Sensors 17:1513. https://doi.org/10.3390/s17071513

Chaudhuri S, Thompson H, Demiris G (2014) Fall detection devices and their use with older adults. J Geriatr Phys Ther 37:178–196. https://doi.org/10.1519/JPT.0b013e3182abe779

Chen WH, Ma HP (2015) A fall detection system based on infrared array sensors with tracking capability for the elderly at home. In: 2015 17th International Conference on E-health Networking, Application & Services (HealthCom). IEEE, pp 428–434

Chen L, Li R, Zhang H et al (2019) Intelligent fall detection method based on accelerometer data from a wrist-worn smart watch. Measurement 140:215–226. https://doi.org/10.1016/j.measurement.2019.03.079

De Cillis F, De Simio F, Guido F et al (2015) Fall-detection solution for mobile platforms using accelerometer and gyroscope data. In: 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE, pp 3727–3730

Debes C, Merentitis A, Sukhanov S et al (2016) Monitoring activities of daily living in smart homes: understanding human behavior. IEEE Signal Process Mag 33:81–94. https://doi.org/10.1109/MSP.2015.2503881

Delahoz Y, Labrador M (2014) Survey on fall detection and fall prevention using wearable and external sensors. Sensors 14:19806–19842. https://doi.org/10.3390/s141019806

Ding Y, Li H, Li C et al (2017) Fall detection based on depth images via wavelet moment. In: 2017 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI). IEEE, pp 1–5

Ding C, Zou Y, Sun L et al (2019) Fall detection with multi-domain features by a portable FMCW radar. In: 2019 IEEE MTT-S International Wireless Symposium (IWS). IEEE, pp 1–3

Doulamis N (2010) Viusal fall alert service in low computational power device to assist persons’ with dementia. In: 2010 3rd International Symposium on Applied Sciences in Biomedical and Communication Technologies (ISABEL 2010). IEEE, pp 1–5

Erden F, Velipasalar S, Alkar AZ, Cetin AE (2016) Sensors in assisted living: a survey of signal and image processing methods. IEEE Signal Process Mag 33:36–44. https://doi.org/10.1109/MSP.2015.2489978

Erol B, Amin MG (2019) Radar data cube processing for human activity recognition using multisubspace learning. IEEE Trans Aerosp Electron Syst 55:3617–3628. https://doi.org/10.1109/TAES.2019.2910980

Florence CS, Bergen G, Atherly A et al (2018) Medical costs of fatal and nonfatal falls in older adults. J Am Geriatr Soc 66:693–698. https://doi.org/10.1111/jgs.15304

Fung NM, Wong Sing Ann J, Tung YH et al (2019) Elderly fall detection and location tracking system using heterogeneous wireless networks. In: 2019 IEEE 9th Symposium on Computer Applications & Industrial Electronics (ISCAIE). IEEE, pp 44–49

Gjoreski H, Gams M, Luštrek M (2014) Context-based fall detection and activity recognition using inertial and location sensors. J Ambient Intell Smart Environ 6:419–433. https://doi.org/10.3233/AIS-140268

Hamm J, Money AG, Atwal A, Paraskevopoulos I (2016) Fall prevention intervention technologies: a conceptual framework and survey of the state of the art. J Biomed Inform 59:319–345. https://doi.org/10.1016/j.jbi.2015.12.013

Homann B, Plaschg A, Grundner M et al (2013) The impact of neurological disorders on the risk for falls in the community dwelling elderly: a case-controlled study. BMJ Open 3:e003367. https://doi.org/10.1136/bmjopen-2013-003367

Hu L, Wang S, Chen Y et al (2018) Fall detection algorithms based on wearable device: a review. zjujournals.com

Huynh QT, Nguyen UD, Irazabal LB et al (2015) Optimization of an accelerometer and gyroscope-based fall detection algorithm. J Sensors 2015:1–8. https://doi.org/10.1155/2015/452078

Igual R, Medrano C, Plaza I (2013) Challenges, issues and trends in fall detection systems. Biomed Eng Online 12:66. https://doi.org/10.1186/1475-925X-12-66

Igual R, Medrano C, Plaza I (2015) A comparison of public datasets for acceleration-based fall detection. Med Eng Phys 37:870–878. https://doi.org/10.1016/j.medengphy.2015.06.009

Islam MM, Neom NH, Imtiaz MS et al (2019) A review on fall detection systems using data from smartphone sensors. Ing des Syst d’Inform 24:569–576. https://doi.org/10.18280/isi.240602

Islam MM, Rahaman A, Islam MR (2020) Development of smart healthcare monitoring system in IoT environment. SN Comput Sci 1:185. https://doi.org/10.1007/s42979-020-00195-y

Jager TE, Weiss HB, Coben JH, Pepe PE (2000) Traumatic brain injuries evaluated in U.S. emergency departments, 1992–1994. Acad Emerg Med 7:134–140. https://doi.org/10.1111/j.1553-2712.2000.tb00515.x

Jankowski S, Szymanski Z, Dziomin U et al (2015) Deep learning classifier for fall detection based on IR distance sensor data. In: 2015 IEEE 8th International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS). IEEE, pp 723–727

Jokanovic B, Amin M, Ahmad F (2016) Radar fall motion detection using deep learning. In: 2016 IEEE Radar Conference (RadarConf). IEEE, pp 1–6

Khan SS, Hoey J (2017) Review of fall detection techniques: a data availability perspective. Med Eng Phys 39:12–22. https://doi.org/10.1016/j.medengphy.2016.10.014

Kong X, Meng L, Tomiyama H (2017) Fall detection for elderly persons using a depth camera. In: 2017 International Conference on Advanced Mechatronic Systems (ICAMechS). IEEE, pp 269–273

Kong X, Meng Z, Meng L, Tomiyama H (2019) Three-states-transition method for fall detection algorithm using depth image. J Robot Mechatronics 31:88–94. https://doi.org/10.20965/jrm.2019.p0088

Kwolek B, Kepski M (2014) Human fall detection on embedded platform using depth maps and wireless accelerometer. Comput Methods Programs Biomed 117:489–501. https://doi.org/10.1016/j.cmpb.2014.09.005

Lee J-S, Tseng H-H (2019) Development of an enhanced threshold-based fall detection system using smartphones with built-in accelerometers. IEEE Sens J 19:8293–8302. https://doi.org/10.1109/JSEN.2019.2918690

Lim D, Park C, Kim NH et al (2014) Fall-detection algorithm using 3-axis acceleration: combination with simple threshold and hidden Markov model. J Appl Math 2014:1–8. https://doi.org/10.1155/2014/896030

Lord SR, Menz HB, Sherrington C (2006) Home environment risk factors for falls in older people and the efficacy of home modifications. Age Ageing 35:ii55–ii59. https://doi.org/10.1093/ageing/afl088

Martínez-Villaseñor L, Ponce H, Brieva J et al (2019) UP-fall detection dataset: a multimodal approach. Sensors 19:1988. https://doi.org/10.3390/s19091988

Mastorakis G, Makris D (2014) Fall detection system using Kinect’s infrared sensor. J Real-Time Image Process 9:635–646. https://doi.org/10.1007/s11554-012-0246-9

Mehmood A, Nadeem A, Ashraf M et al (2019) A novel fall detection algorithm for elderly using SHIMMER wearable sensors. Health Technol (Berl) 9:631–646. https://doi.org/10.1007/s12553-019-00298-4

Moulik S, Majumdar S (2019) FallSense: an automatic fall detection and alarm generation system in IoT-enabled environment. IEEE Sens J 19:8452–8459. https://doi.org/10.1109/JSEN.2018.2880739

Mubashir M, Shao L, Seed L (2013) A survey on fall detection: principles and approaches. Neurocomputing 100:144–152. https://doi.org/10.1016/j.neucom.2011.09.037

Mukhopadhyay SC (2015) Wearable sensors for human activity monitoring: a review. IEEE Sens J 15:1321–1330. https://doi.org/10.1109/JSEN.2014.2370945

Nguyen Gia T, Sarker VK, Tcarenko I et al (2018) Energy efficient wearable sensor node for IoT-based fall detection systems. Microprocess Microsyst 56:34–46. https://doi.org/10.1016/j.micpro.2017.10.014

Nooruddin S, Islam M, Sharna FA (2020) An IoT based device-type invariant fall detection system. Internet Things 9:100130. https://doi.org/10.1016/j.iot.2019.100130

Noury N, Fleury A, Rumeau P et al (2007) Fall detection—principles and methods. In: 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE, pp 1663–1666

O’Loughlin JL, Robitaille Y, Boivin J-F, Suissa S (1993) Incidence of and risk factors for falls and injurious falls among the community-dwelling elderly. Am J Epidemiol 137:342–354. https://doi.org/10.1093/oxfordjournals.aje.a116681

Ozcan K, Velipasalar S (2016) Wearable camera- and accelerometer-based fall detection on portable devices. IEEE Embed Syst Lett 8:6–9. https://doi.org/10.1109/LES.2015.2487241

Parkkari J, Kannus P, Palvanen M et al (1999) Majority of hip fractures occur as a result of a fall and impact on the greater trochanter of the femur: a prospective controlled hip fracture study with 206 consecutive patients. Calcif Tissue Int 65:183–187. https://doi.org/10.1007/s002239900679

Pynoos J, Steinman BA, Nguyen AQD (2010) Environmental assessment and modification as fall-prevention strategies for older adults. Clin Geriatr Med 26:633–644. https://doi.org/10.1016/j.cger.2010.07.001

Pynoos J, Steinman BA, Do Nguyen AQ, Bressette M (2012) Assessing and adapting the home environment to reduce falls and meet the changing capacity of older adults. J Hous Elderly 26:137–155. https://doi.org/10.1080/02763893.2012.673382

Rahaman A, Islam M, Islam M et al (2019) Developing IoT based smart health monitoring systems: a review. Rev d’Intell Artif 33:435–440. https://doi.org/10.18280/ria.330605

Rahman MM, Islam MM, Ahmmed S, Khan SA (2020) Obstacle and fall detection to guide the visually impaired people with real time monitoring. SN Comput Sci 1:231. https://doi.org/10.1007/s42979-020-00231-x

Rana S, Dey M, Ghavami M, Dudley S (2019) Signature inspired home environments monitoring system using IR-UWB technology. Sensors 19:385. https://doi.org/10.3390/s19020385

Ranakoti S, Arora S, Chaudhary S et al (2019) Human fall detection system over IMU sensors using triaxial accelerometer. In: Advances in Intelligent Systems and Computing. pp 495–507

Rand D, Eng JJ, Tang P-F et al (2009) How active are people with stroke? Stroke 40:163–168. https://doi.org/10.1161/STROKEAHA.108.523621

Rawashdeh O, Sa’deh W, Rawashdeh M et al (2012) Development of a low-cost fall intervention system for hospitalized dementia patients. In: 2012 IEEE International Conference on Electro/Information Technology. IEEE, pp 1–7

Sadreazami H, Bolic M, Rajan S (2019) CapsFall: fall detection using ultra-wideband radar and capsule network. IEEE Access 7:55336–55343. https://doi.org/10.1109/ACCESS.2019.2907925

Sadreazami H, Bolic M, Rajan S (2020) Fall detection using standoff radar-based sensing and deep convolutional neural network. IEEE Trans Circuits Syst II Express Briefs 67:197–201. https://doi.org/10.1109/TCSII.2019.2904498

Safi K, Attal F, Mohammed S et al (2015) Physical activity recognition using inertial wearable sensors—a review of supervised classification algorithms. In: 2015 International Conference on Advances in Biomedical Engineering, ICABME 2015

Sanchez JAU, Muñoz DM (2019) Fall detection using accelerometer on the user’s wrist and artificial neural networks. In: IFMBE Proceedings. pp 641–647

Santos GL, Endo PT, Monteiro KHC et al (2019) Accelerometer-based human fall detection using convolutional neural networks. Sensors 19:1644. https://doi.org/10.3390/s19071644

Sterling DA, O’Connor JA, Bonadies J (2001) Geriatric falls: injury severity is high and disproportionate to mechanism. J Trauma Inj Infect Crit Care 50:116–119. https://doi.org/10.1097/00005373-200101000-00021

Su BY, Ho KC, Rantz MJ, Skubic M (2015) Doppler radar fall activity detection using the wavelet transform. IEEE Trans Biomed Eng 62:865–875. https://doi.org/10.1109/TBME.2014.2367038

Sugawara E, Nikaido H (2014) Properties of AdeABC and AdeIJK efflux systems of Acinetobacter baumannii compared with those of the AcrAB-TolC system of Escherichia coli. Antimicrob Agents Chemother 58:7250–7257. https://doi.org/10.1128/AAC.03728-14

Tran T-T-H, Le T-L, Morel J (2014) An analysis on human fall detection using skeleton from Microsoft Kinect. In: 2014 IEEE Fifth International Conference on Communications and Electronics (ICCE). IEEE, pp 484–489

Vallabh P, Malekian R (2018) Fall detection monitoring systems: a comprehensive review. J Ambient Intell Humaniz Comput 9:1809–1833. https://doi.org/10.1007/s12652-017-0592-3

Van Thanh P, Tran D-T, Nguyen D-C et al (2019) Development of a real-time, simple and high-accuracy fall detection system for elderly using 3-DOF accelerometers. Arab J Sci Eng 44:3329–3342. https://doi.org/10.1007/s13369-018-3496-4

Verma SK, Willetts JL, Corns HL et al (2016) Falls and fall-related injuries among community-dwelling adults in the United States. PLoS ONE 11:e0150939. https://doi.org/10.1371/journal.pone.0150939

Wang J, Zhang Z, Li B et al (2014) An enhanced fall detection system for elderly person monitoring using consumer home networks. IEEE Trans Consum Electron 60:23–29. https://doi.org/10.1109/TCE.2014.6780921

Wang P, Chen C-S, Chuan C-C (2016) Location-aware fall detection system for dementia care on nursing service in evergreen inn of Jianan Hospital. In: 2016 IEEE 16th International Conference on Bioinformatics and Bioengineering (BIBE). IEEE, pp 309–315

Wang Y, Wu K, Ni LM (2017) WiFall: device-free fall detection by wireless networks. IEEE Trans Mob Comput 16:581–594. https://doi.org/10.1109/TMC.2016.2557792

Ward DS, Everson KR, Vaughn A, Rodgers AB, Troiano RP (2005) Accelerometer use in physical activity: best practices and research recommendations. Med Sci Sports Exerc 37:S582–S588. https://doi.org/10.1249/01.mss.0000185292.71933.91

Wu Y, Su Y, Hu Y et al (2019) A multi-sensor fall detection system based on multivariate statistical process analysis. J Med Biol Eng 39:336–351. https://doi.org/10.1007/s40846-018-0404-z

Xu Y, Chen J, Yang Q, Guo Q (2019) Human posture recognition and fall detection using Kinect V2 camera. In: 2019 Chinese Control Conference (CCC). IEEE, pp 8488–8493

Yacchirema D, de Puga JS, Palau C, Esteve M (2019) Fall detection system for elderly people using IoT and ensemble machine learning algorithm. Pers Ubiquitous Comput 23:801–817. https://doi.org/10.1007/s00779-018-01196-8

Yhdego H, Li J, Morrison S et al (2019) Towards musculoskeletal simulation-aware fall injury mitigation: transfer learning with deep CNN for fall detection. In: 2019 Spring Simulation Conference (SpringSim). IEEE, pp 1–12

Yoshino H, Moshnyaga VG, Hashimoto K (2019) Fall detection on a single doppler radar sensor by using convolutional neural networks. In: 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC). IEEE, pp 2889–2892

Yu X (2008) Approaches and principles of fall detection for elderly and patient. In: 2008 10th IEEE Intl. Conf. on e-Health Networking, Applications and Service, HEALTHCOM 2008

Zerrouki N, Harrou F, Sun Y, Houacine A (2016) Accelerometer and camera-based strategy for improved human fall detection. J Med Syst 40:284. https://doi.org/10.1007/s10916-016-0639-6

Zhang Z, Conly C, Athitsos V (2015) A survey on vision-based fall detection. In: Proceedings of the 8th ACM International Conference on PErvasive Technologies Related to Assistive Environments—PETRA’15. ACM Press, New York, pp 1–7

Acknowledgements

This research is supported by Universiti Malaysia Pahang (UMP) through University Research Grant RDU192206.

About this article

Cite this article

Nooruddin, S., Islam, M.M., Sharna, F.A. et al. Sensor-based fall detection systems: a review. J Ambient Intell Human Comput 13, 2735–2751 (2022). https://doi.org/10.1007/s12652-021-03248-z

DOI: https://doi.org/10.1007/s12652-021-03248-z