Abstract

Purpose

Breast cancer is a tumour affecting the breast tissues and is commonly detected in women. Computer-aided diagnosis (CAD) supports mass screening and early detection of breast cancer, and advances in deep learning are driving the growth of automated breast cancer diagnosis. Various breast imaging modalities, such as histopathology, mammography, thermography, and ultrasound, are used for detecting breast cancer. This paper reviews current studies on breast cancer classification using deep learning techniques.

Methods

The review highlights the imaging modalities, publicly available datasets, augmentation techniques, preprocessing techniques, transfer learning approaches, and deep learning techniques used by researchers for early breast cancer detection. It also presents a comprehensive review of the performance of existing deep learning algorithms, the open challenges, and future research directions.

Results

The methods proposed so far are compared using performance metrics including accuracy, sensitivity, specificity, AUC, and F-measure. Many studies have reported accuracies above 90% for breast cancer classification from histopathological images.

Conclusion

This review identifies several limitations that must be addressed to identify breast cancer accurately from imaging modalities. Future work should analyse incorrect data labelling caused by inter-observer variation in image segmentation datasets, as well as computation time and memory overhead, in order to build improved CAD systems.

1 Introduction

Cancer is one of the main public health concerns. According to projections by the International Agency for Research on Cancer (IARC) of the World Health Organization (WHO) and the Global Burden of Disease (GBD) Cancer Collaboration, cancer cases rose by 28 percent between 2006 and 2016, and 2.7 million new cancer cases are expected in 2030 [1]. Cancer can start almost anywhere in the human body. Among the different forms of cancer, breast cancer is one of the most prevalent and deadly in women (1.7 million incident cases, 535,000 deaths, and 14.9 million disability-adjusted life-years) [2]. Breast cancer is a disease affecting breast cells and is a major cause of death among women [3]. More than 250,000 cases of breast cancer in US women were reported in 2017 [4]. Around 15% of all cancer deaths among women are due to breast cancer [5], and rates are rising. Accurate diagnosis of breast cancer has therefore become highly relevant.

Masses or lumps and microcalcifications that feel different from the surrounding tissue are the main symptoms of breast cancer, although not all masses are cancerous. Masses are categorized into two types: i) benign and ii) malignant. Benign masses are harmless, non-cancerous abnormal growths that do not spread outside the breast. Malignant masses are cancerous and may be non-invasive (carcinoma in situ) or invasive. Non-invasive carcinoma remains confined to the duct or lobule in which it arises, whereas invasive carcinoma grows through the duct wall into the surrounding breast tissue. Invasive carcinoma is the most common form [6]. The death rate from breast cancer can be reduced to a great extent if the disease is detected at an early stage [7,8,9,10]. However, manual detection is very time-consuming, and its results may not be accurate [11]. The medical terms and their definitions are presented in Table 1.

Breast cancer is a disease with diverse biological, histological, and clinical characteristics. It arises from the multiplication of abnormal breast cells and can spread to nearby healthy tissue. Screening and diagnosis use radiological images such as digital mammography (DMG, breast X-ray), ultrasound imaging (ULS), and magnetic resonance imaging (MRI), as well as biopsy (histological images) and computed tomography (CT). In mammography images, automatic detection of lesions and estimation of their volume and shape provide notable indicators; shape is particularly useful for distinguishing the irregular edge of a malignant tumor from the smooth edge of a benign one.

However, these non-invasive imaging methods may not identify malignant regions accurately, so biopsy is commonly used to examine malignancy in breast tissue in greater depth [14]. Furthermore, comprehensive monitoring of biochemical indicators and imaging modalities is required for the initial detection of breast cancer. CAD systems can serve as a second opinion for breast cancer multi-class classification. They offer a low-cost, readily accessible, fast, and consistent aid for early detection, and by helping radiologists diagnose breast anomalies they can lower the death rate by 30 to 70% [15].

The biopsy procedure involves gathering tissue samples, mounting them on microscope glass slides, and staining the slides for better visualization of nuclei and cytoplasm [16]. Pathologists then examine these slides under the microscope to confirm the diagnosis of breast cancer [17]. However, manual examination of complex histopathology images is time-consuming, tedious, and error-prone. Furthermore, the morphological criteria used to classify these images are somewhat subjective, resulting in a diagnostic concordance of only about 75% among pathologists. Computer-assisted diagnosis therefore plays an important role in helping pathologists analyse histopathology images.

In particular, it can improve diagnostic accuracy by reducing inter-pathologist differences in diagnostic decisions. Breast cancer histopathology images may exhibit high intra-class variation and inter-class similarity, which traditional computerized diagnostic methods, such as rule-based systems and machine learning techniques, may not handle satisfactorily [18]. Most of these methods rely on handcrafted feature extraction techniques such as the scale-invariant feature transform (SIFT), speeded-up robust features (SURF), and local binary patterns (LBP), which depend on supervised data and can produce biased results when used to classify breast cancer histopathology images. Deep learning [19], a family of computational models built from several layers of nonlinear processing units, has emerged in response to the need for rapid diagnosis.

Several machine learning (ML), artificial intelligence (AI), and neural network technologies have recently been investigated for image processing. CAD systems built on them can reduce experimental errors and differentiate benign from malignant lesions with increased accuracy. These systems can also improve image quality for human judgement and automate image reading for perception and interpretation. Recently, a number of papers applying machine learning and artificial intelligence algorithms to breast cancer detection, segmentation, and classification have been published [15, 20, 21]. Deep learning models [17, 19, 22] have made significant progress in computer vision, particularly in biomedical image processing, owing to their ability to learn complex, high-level features from images automatically. This has prompted many researchers to use these models to classify breast cancer histopathology images [17]. Convolutional neural networks (CNNs) [23] are commonly used in image-related tasks because they share parameters efficiently: each convolution kernel is reused at every position of its input, which keeps the number of trainable weights small.
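To make the parameter-sharing argument concrete, the sketch below counts trainable parameters (weights plus biases) for a 3 × 3 convolution and for a fully connected layer applied to the same input. The 50 × 50 RGB patch and the 16 output channels/units are illustrative assumptions, not settings from any study reviewed here.

```python
def conv2d_params(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Weights plus biases of a 2D convolution layer with a square kernel."""
    # One k*k*C_in kernel and one bias per output channel; the kernel is
    # shared across every spatial position, so image size does not matter.
    return (kernel_size * kernel_size * in_channels + 1) * out_channels


def dense_params(height: int, width: int, channels: int, units: int) -> int:
    """Weights plus biases of a fully connected layer on a flattened image."""
    # Every input pixel/channel value gets its own weight for every unit.
    return (height * width * channels + 1) * units


# Hypothetical 50 x 50 RGB patch mapped to 16 feature channels / units.
conv = conv2d_params(3, 3, 16)
dense = dense_params(50, 50, 3, 16)
print(conv, dense)  # 448 120016
```

The convolution's count is independent of image size, which is why CNNs scale to large histopathology patches far better than dense layers.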

Studies have found that the shapes of abnormal breast tissue vary greatly, which makes screening difficult. Microcalcification morphology, based on the distance between individual microcalcifications, is an important factor in determining the region of interest (ROI). Two kinds of approaches exist: fixed-scale and scale-invariant. The former uses the distance between individual calcifications to define a microcalcification cluster, whereas the latter is a pixel-level approach that presents morphological aspects such as cluster shape, density, and size to the radiologist. Histogram-based methods with optimal threshold selection can be used to segment and classify masses, yet none of the studies in the literature reviewed here has implemented this approach.
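The text does not name a specific optimal-threshold rule. As an assumption, the sketch below uses Otsu's method, a well-known histogram-based choice that picks the grey level maximizing the between-class variance of an 8-bit image; the synthetic ROI is stand-in data, not a real mammogram.

```python
import numpy as np


def otsu_threshold(image: np.ndarray) -> int:
    """Grey level t maximizing between-class variance of an 8-bit image (Otsu's method).

    Pixels <= t form class 0 (background); pixels > t form class 1 (candidate lesion).
    """
    hist = np.bincount(image.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                    # P(class 0) for each threshold
    mu = np.cumsum(prob * np.arange(256))      # cumulative mean up to each threshold
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    # Thresholds that leave one class empty give 0/0; treat them as worst.
    sigma_b = np.nan_to_num(sigma_b, nan=0.0, posinf=0.0)
    return int(np.argmax(sigma_b))


# Synthetic ROI (stand-in data): grey background 50 with a bright 32 x 32 "mass" of 200.
roi = np.full((64, 64), 50, dtype=np.uint8)
roi[16:48, 16:48] = 200
t = otsu_threshold(roi)
mask = roi > t
print(t, int(mask.sum()))
```

A single global threshold works only when lesion and background intensities are well separated; for dense breasts a local or multi-level variant would likely be needed.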

A novel CAD system based on this approach needs to be developed to classify calcifications and masses. Content-based image retrieval (CBIR) is a newer approach based on mammogram indexing and classification of ROI patches. In the literature, no study has used indexing of ROI patches to classify calcifications and masses in mammograms; such an indexing- and ROI-classification-based CAD system should be developed with the help of expert radiologists to obtain precise results. Furthermore, DL algorithms for breast cancer diagnosis face particular challenges with ultrasound images because of their low signal-to-noise ratio (SNR) compared with other modalities. The echogram, a newer ULS imaging technology, is much cheaper for breast screening, so developing new DL algorithms for echogram image analysis is an important task. CT and MRI produce 3D spatial data that are very large and require greater computational resources.

1.1 Motivation

The prime objective of this review is to support researchers in exploring current CAD tools in order to develop efficient and computationally inexpensive methods for breast cancer classification. This review summarizes the imaging modalities used for breast cancer classification and provides researchers with a detailed analysis of the publicly available datasets. It highlights the transfer learning and deep learning techniques used for breast cancer classification and the performance parameters used to evaluate the models. In addition, unlike other breast cancer detection reviews, it summarizes the data augmentation and image preprocessing techniques in detail.

1.2 Paper structure

The remainder of this paper is structured as follows. Section 2 describes breast cancer imaging modalities. Deep learning techniques for breast cancer diagnosis are discussed in Sect. 3. Breast cancer datasets are discussed in Sect. 4. Pre-processing techniques used to enhance the images are discussed in Sect. 5. Section 6 discusses data augmentation techniques used to increase the size of the dataset. Section 7 covers transfer learning techniques and Sect. 8 covers convolutional neural network techniques used for breast cancer analysis. Performance metrics are discussed in Sect. 9. Section 10 discusses issues and challenges in breast cancer image analysis, and Sect. 11 concludes the paper.

2 Materials, methodology, and contributions

The main goal of this study is to use AI methods and multiple imaging modalities to study breast cancer classification. The following questions were taken into account when creating this comprehensive study:

- Types of imaging modalities that have recently been used to diagnose breast cancer.
- The types of datasets (public and private) used to develop AI models.
- Different types of DL classifiers that have recently been employed to classify breast cancer.
- The difficulties that classifiers encounter in accurately detecting masses.
- The parameters used to assess breast cancer classifiers.

Breast cancer images are analyzed to determine whether tissue is benign or malignant. The imaging modalities used by authors include histopathological images, mammograms, and ultrasound images.

2.1 Breast cancer detection using computer-aided diagnosis

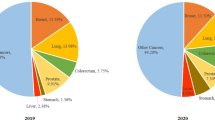

Computer-aided detection systems help detect lesions that could indicate the presence of breast cancer and support radiologists' decisions. Figure 1(a) shows the distribution of breast cancer research areas, and Fig. 1(b) shows the year-wise contributions of various researchers.

The main goal of a CAD system is to detect suspicious areas of the breast and mark regions of interest that could be lesions. According to Zemmal et al. [24] and Saraswathi et al. [25], CAD detection systems have improved radiologists' accuracy in detecting breast cancer. This section outlines methods for using CAD to screen for breast cancer. Screening plays a major role in the detection of breast cancer. Although the primary causes of breast cancer are unknown, its risk is known to vary with gender, age, and genetic history [26]. Because a breast tumor is small when discovered early, early detection improves the chance of cure and enables better patient surveillance. The American Cancer Society's recommendations for breast cancer screening are detailed in Table 2.

2.2 Medical imaging modalities

Breast screening uses multiple medical imaging modalities to detect breast abnormalities so that cancer can be diagnosed early and prevented from spreading [25]. A range of imaging modalities are used to detect breast cancer; a few contemporary modalities employed for breast cancer prediction via CAD systems are shown in Fig. 2.

2.2.1 Screen-film mammography (SFM)

Screen-film mammography (SFM) [27] is a powerful tool for detecting suspicious lesions. Standard SFM uses a fluorescent screen and film, and it fails to detect a large percentage of cancers, especially soft-tissue lesions within dense glandular tissue. The film serves simultaneously as the image receptor, display medium, and long-term storage medium. Because one medium must serve all three roles, image contrast can be lost, especially when exposure or film-processing conditions produce lower optical densities in lesion-containing tissue.

2.2.2 Histopathological images

Histology is the study of the microscopic anatomy of the cells and tissues of organisms, in which a thin slice (section) of tissue is examined under a light (optical) or electron microscope. For cancer diagnosis, histopathologists analyse histology images by visually examining cell shapes and tissue distributions to determine whether tissue regions are cancerous and to assess the level of malignancy. Histopathological analysis has been used extensively for breast cancer detection and grading [28]. The primary diagnostic method is manual analysis, which requires an experienced histopathologist and is vulnerable to errors owing to the ambiguity and variety of histopathological images [29]. Such analysis is very time-consuming, and there may be inter-observer variation: the experience of the pathologist performing the analysis can influence the result. Computer-assisted analysis of histopathological images is therefore very important.

Whole-slide digital scanners can digitize histology slides and store them in digital form [30]. Combining computational data analysis with traditional diagnostic methods reduces the burden on pathologists. However, automated histology image analysis is generally difficult because of the specific features of histology imaging, including variability in image preparation methods and clinical interpretation procedures, complex structures, and the very large size of the images.

Designing tools for histopathological analysis is not an easy task. Histopathological images of breast cancer are fine-grained, high-resolution images that show rich geometric structures and complex textures. High variability within a class and similarity between classes, especially when dealing with multiple classes, can make classification extremely difficult. Figure 2a shows several examples of histology images.

Instead of binary classification, Nahid et al. [31] classified breast cancer into multiple categories, using WSI color images to produce numerous ROI images for training the model. Shibusawa et al. [32] generated ROI patches from histopathological (HP) images to categorize invasive and non-invasive breast cancer. Compared with mammography, ultrasound, and MRI, automatic classification of breast tumors from histopathological images offers a number of advantages.
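Generating ROI patches from a large image can be sketched as grid tiling followed by discarding background tiles. This is a minimal sketch, assuming the region is already loaded as an H × W × 3 uint8 array (in practice a WSI reader such as OpenSlide would supply regions); the patch size, stride, and the near-white background rule in `keep_tissue` are hypothetical choices, not those of the cited studies.

```python
import numpy as np


def extract_patches(image: np.ndarray, patch: int, stride: int) -> np.ndarray:
    """Cut an H x W x C image into patch x patch tiles on a regular grid."""
    h, w = image.shape[:2]
    tiles = [
        image[y:y + patch, x:x + patch]
        for y in range(0, h - patch + 1, stride)
        for x in range(0, w - patch + 1, stride)
    ]
    return np.stack(tiles)


def keep_tissue(patches: np.ndarray, max_mean: float = 220.0) -> np.ndarray:
    """Drop tiles whose mean intensity is near white (assumed to be glass background)."""
    means = patches.reshape(len(patches), -1).mean(axis=1)
    return patches[means < max_mean]


# Stand-in "slide region": white background with a 256 x 256 block of darker tissue.
region = np.full((512, 512, 3), 255, dtype=np.uint8)
region[:256, :256] = 150
patches = extract_patches(region, patch=128, stride=128)
tissue = keep_tissue(patches)
print(patches.shape, tissue.shape)
```

A stride smaller than the patch size yields overlapping patches and more training samples, at the cost of correlated examples.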

2.2.3 Mammogram images

The digital mammogram has been the most common and effective screening technique for early detection of breast cancer [28, 29, 33]. A mammogram is an X-ray image of the breast. Screen-film mammography produces images on photographic film, while digital mammography produces digital images. During the examination, the breast is placed between two clear plates that compress it, flattening the breast and preventing the image from blurring. The machine takes images of the breast from two angles, and a doctor then examines the mammogram for irregularities that may be symptoms of cancer. Figure 2b shows several examples of mammogram images.

Mammography is a low-dose X-ray imaging modality whose images CAD systems use to classify minor differences in breast tissue. The X-ray beam passes through the dense fibroglandular tissue of the breast, allowing the study of lumps and calcifications, which are common indicators of breast cancer. The contrast between masses and calcifications is nearly non-existent, and both are anatomically heterogeneous and highly irregular, which makes clinical diagnosis challenging. Mammography screening uses two standard views, craniocaudal (CC) and mediolateral oblique (MLO). The entire breast, including all glandular structures, is examined in the craniocaudal view. In the image, the black strip depicts the fatty tissue, while the shape depicts the nipple.

2.2.4 Ultrasound images

Breast ultrasound uses sound waves to create images of the internal structures of the breast. It is usually used alongside a physical examination, mammogram, or breast MRI to help evaluate breast lumps or other irregularities the doctor may have noticed. Ultrasound is safe and non-invasive and uses no ionizing radiation [34, 35]. Ultrasound can capture breast images from any orientation. Figure 3 shows ultrasound breast images with a cyst, a fibroadenoma lesion, and invasive ductal carcinoma.

These imaging modalities are successful in detecting abnormal masses and microcalcifications in breast tissue. However, overlapping breast tissue in these images can obscure breast information and hide suspicious cells from view.

2.2.5 MRI images

MRI stands for magnetic resonance imaging. It uses a strong magnetic field and radio waves to create images of the breast by altering the alignment of hydrogen atoms in the body; a computer then reconstructs the images. Compared with mammography, MRI has a higher sensitivity for detecting breast cancer and is not affected by breast density [36]. MRI is effective in detecting invasive ductal carcinoma.

2.2.6 Infrared thermography (IRT) images

IRT uses an infrared camera to detect breast cancer by measuring temperature variations on the surface of the breasts. As cancer cells multiply, blood flow to them increases, raising the temperature near malignant tissue. Table 3 shows the imaging modalities used by various authors. The range of imaging modalities used to detect breast cancer via CAD systems is shown in Fig. 4, and a comparison of the pros and cons of the different modalities is given in Table 4.

3 Deep learning techniques for breast cancer diagnosis

With advances in technology, computer-aided diagnosis (CAD) methods have been proposed for detecting cancer with increased accuracy. Initially, machine learning approaches were used; machine learning is an application of artificial intelligence that gives machines the ability to learn without being explicitly programmed. Supervised machine learning uses labeled datasets, whereas unsupervised learning analyzes data that is neither classified nor labeled [64]. The steps involved in machine learning are shown in Fig. 5. Various machine learning algorithms have been proposed. Abbas et al. [65] proposed BCD-WERT, which performs feature selection and classification using the whale optimization algorithm and an extremely randomized tree classifier, and obtained better results than many existing machine learning methods, with an accuracy of 99.30%. Safdar et al. [66] proposed a method for binary image classification using support vector machine (SVM), logistic regression (LR), and K-nearest neighbor (KNN) classifiers, obtaining an accuracy of 97.7%.
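For readers new to the classical pipeline, the sketch below implements one of the classifiers named above, k-nearest neighbours, from scratch for binary benign (0) / malignant (1) labels. It is a toy illustration on synthetic two-feature data, not a reimplementation of Safdar et al. [66]; the feature values and k = 3 are assumptions.

```python
import numpy as np


def knn_predict(train_x: np.ndarray, train_y: np.ndarray,
                test_x: np.ndarray, k: int = 3) -> np.ndarray:
    """Predict 0/1 labels by majority vote of the k nearest training samples."""
    # Squared Euclidean distances, shape (n_test, n_train).
    d2 = ((test_x[:, None, :] - train_x[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    votes = train_y[nearest]                      # labels of the k neighbours
    # With binary labels and odd k, the majority is "more than half are 1".
    return (votes.mean(axis=1) > 0.5).astype(int)


# Synthetic two-feature data (stand-in for features extracted from images).
rng = np.random.default_rng(0)
benign = rng.normal(0.0, 0.5, size=(20, 2))
malignant = rng.normal(5.0, 0.5, size=(20, 2))
x_train = np.vstack([benign, malignant])
y_train = np.array([0] * 20 + [1] * 20)
pred = knn_predict(x_train, y_train, np.array([[0.2, 0.1], [5.1, 4.9]]), k=3)
print(pred)
```

KNN has no training phase, but it depends entirely on hand-crafted features being discriminative, which is the limitation deep learning addresses.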

More recent advances come from deep learning techniques, in which multiple layers are used to extract high-level features from the input [67]. Deep learning has outperformed conventional machine learning in fields such as speech recognition, natural language processing, and computer vision [68]. The relationship between deep learning, machine learning, and artificial intelligence is shown in Fig. 6.

Deep learning based on convolutional neural networks (CNNs) is now widely used for disease diagnosis, detection, and classification, because CNNs can learn features directly from images [69,70,71,72]. Deep learning loosely mimics the working of neurons in the brain, using multiple layers to learn from data. The number of layers determines the depth of the model: the input layer receives the data, the output layer produces the final result, and any number of hidden layers can be placed between them. The process flow of deep learning is shown in Fig. 7.
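To illustrate how a convolutional layer learns features from images, the sketch below runs one forward step by hand: a 3 × 3 kernel slides over a single-channel image, a ReLU nonlinearity is applied, and 2 × 2 max pooling downsamples the result. The vertical-edge kernel is hand-picked for illustration; in a trained CNN the kernel weights are learned from data.

```python
import numpy as np


def conv2d(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2D cross-correlation of one channel (what CNN conv layers compute)."""
    kh, kw = kernel.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def max_pool(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; rows/cols that do not fill a window are dropped."""
    h, w = x.shape[0] // size * size, x.shape[1] // size * size
    x = x[:h, :w]
    return x.reshape(h // size, size, w // size, size).max(axis=(1, 3))


img = np.zeros((6, 6))
img[:, 3:] = 1.0                               # vertical edge between columns 2 and 3
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)      # hand-picked vertical-edge detector
fmap = relu(conv2d(img, kernel))
pooled = max_pool(fmap, 2)
print(fmap)
print(pooled)
```

The feature map responds only where the edge is, and pooling keeps that response while halving the resolution; stacking such layers is what gives a CNN its depth.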

The process of applying deep learning to detect breast cancer involves the following steps.

- Image acquisition
- Data augmentation
- Deep learning method
- Problem application

To detect whether an image is cancerous, the model learns by itself from a large set of images. Images can be obtained from public or private databases. The acquired images are pre-processed to improve image quality, and the number of images in the dataset is increased using data augmentation techniques such as rotation and flipping. The training phase uses either a convolutional neural network or a transfer learning model. In the problem application step, the trained model predicts which class an image belongs to, i.e., cancerous or not.
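The rotation and flipping augmentations mentioned above can be sketched with NumPy alone. Restricting rotations to multiples of 90° avoids interpolation artifacts; treating all eight variants as label-preserving is an assumption that suits histology patches (which have no canonical orientation) better than mammograms.

```python
import numpy as np


def dihedral_augment(image: np.ndarray) -> list:
    """Return the 8 rotations/flips of an H x W (x C) image: 4 rotations x {original, mirrored}."""
    variants = []
    for base in (image, np.fliplr(image)):        # original and horizontal mirror
        for k in range(4):
            variants.append(np.rot90(base, k))    # rotate by k * 90 degrees over axes (0, 1)
    return variants


patch = np.arange(9).reshape(3, 3)   # asymmetric toy "image"
augmented = dihedral_augment(patch)
print(len(augmented), len({v.tobytes() for v in augmented}))
```

Each training image thus yields up to eight samples; random crops, colour jitter, or elastic deformations could be added in the same way.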

4 Breast cancer image data sets

Various techniques, including mammography, breast ultrasound, biopsy, magnetic resonance imaging (MRI), and histology images, are used to diagnose breast cancer [10]. Mammography is a commonly available method that detects malignant cells by acquiring X-ray images of the breast. In ultrasound, breast images are captured using sound waves to distinguish between benign and malignant tumours. MRI is performed as a follow-up to mammography and ultrasound; it creates images of the interior of the breast using a magnet and radio waves, and multiple images are taken and combined to help doctors detect tumours. Because the underlying tissue architecture is preserved during preparation, histopathology images provide a more in-depth view of breast cancer and how it affects tissue. Many histopathological databases have been created, a few of which are publicly available.

Ayana, Gelan, et al. [61] used the publicly available Mendeley dataset of 250 breast ultrasound images, of which 150 are malignant and the rest benign. Zhang et al. [43] used the mini-MIAS dataset of 322 mammogram images, of which 209 are benign and 113 are malignant; all images are 124 × 124 pixels. Monika et al. [73] used the Wisconsin breast cancer dataset with 569 samples. Tsai, Kuen-Jang, et al. [45] used a digital mammogram dataset provided by E-Da Hospital, Taiwan, with 5733 mammograms of 1490 patients, comprising 1434 left craniocaudal (LCC), 1436 right craniocaudal (RCC), 1433 left mediolateral oblique (LMLO), and 1430 right mediolateral oblique (RMLO) views.

Alruwaili et al. [46] used the MIAS (Mammographic Image Analysis Society) dataset with 51 benign and 48 malignant masses. Phan, Nam Nhut, et al. [59] used two datasets: the TCGA-BRCA dataset with 388 WSIs and an in-house dataset of 233 WSIs. Thapa, Ashu, et al. [60] used the ICIAR 2018 challenge datasets and performed tests on various datasets. Suh et al. [44] used 1501 digital mammogram images, and the dataset consists of 200 images, of which 98 are benign and 102 are malignant. Zheng, Jing, et al. [63] used the dataset available at https://wiki.cancerimagingarchive.net/.

Celik, Yusuf, et al. [57] used 162 WSIs and extracted multiple image samples from each patient's images. Expert pathologists identified cancerous areas, and the images were segmented into 277,524 patches, of which 78,786 were IDC positive and 198,738 were IDC negative. The BreaKHis dataset [74] is a publicly available dataset of 7909 histopathological images of benign and malignant tumors acquired at four different magnification factors. The benign and malignant classes each include four subclasses. Sample images from the BreaKHis dataset are shown in Fig. 8.
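The patch counts reported for Celik et al. [57] (78,786 IDC-positive vs 198,738 IDC-negative) are imbalanced by roughly 1:2.5. One common remedy, shown here as an illustration rather than as what the authors did, is to weight the training loss by inverse class frequency, w_c = N / (K · n_c), the same "balanced" heuristic scikit-learn uses for `class_weight="balanced"`.

```python
def inverse_frequency_weights(counts: dict) -> dict:
    """Weight each class by N / (K * n_c) so rarer classes count more in the loss."""
    total = sum(counts.values())
    k = len(counts)
    return {label: total / (k * n) for label, n in counts.items()}


weights = inverse_frequency_weights({"IDC+": 78_786, "IDC-": 198_738})
print({label: round(w, 3) for label, w in weights.items()})  # {'IDC+': 1.761, 'IDC-': 0.698}
```

With these weights each class contributes the same total weight (N / K = 138,762) to the loss; oversampling the minority class is an alternative with a similar effect.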

Gour et al. [33], Bardou et al. [51], and Fabio A. Spanhol et al. [53, 54] used the BreaKHis dataset for detecting breast cancer at early stages. Zerouaoui et al. [58] used the BreakHis and FNAC datasets. FNAC consists of images from 56 women, of which 36 are benign and 24 are malignant. Zhu, Chuang, et al. [28] used two datasets, BreaKHis [74] and BACH [67]. BACH includes two subsets: a microscopy dataset and a whole-slide image dataset. The BACH microscopy dataset consists of 400 hematoxylin and eosin (H&E) stained breast histology images of identical size (2048 × 1536 pixels), each labeled with one of four classes: normal tissue, benign lesion, in situ carcinoma, or invasive carcinoma. The WSI subset consists of 20 very large annotated whole-slide images that may contain several regions of normal tissue, benign lesions, in situ carcinoma, and invasive carcinoma. The whole-slide images were annotated by medical experts.

Li et al. [30] used digitized hematoxylin and eosin (H&E) stained breast cancer histology images. Li, Xingyu, et al. [47] used a breast cancer dataset published by the Israel Institute of Technology [75] with 361 histology images, of which 119 are normal and the rest are malignant (102 carcinoma in situ and 140 invasive carcinoma). Nadia Brancati et al. [48] used the Invasive Ductal Carcinoma (IDC) dataset of 162 whole-slide images. Each image is partitioned into patches, of which 46,633 represent invasive ductal carcinoma and 124,011 represent non-IDC tissue. Baris Gecer et al. [49] analysed breast cancer using 240 breast histopathology images. Ahmad et al. [50] used a publicly available H&E stained dataset of 260 labelled images, of which 240 were used for training and 20 for testing.

Alexander Rakhlin et al. [13] used 400 breast histology microscopy images. Araújo et al. [52] used 269 H&E stained labeled breast histology images, of which 249 were used for training and the rest for testing. The Wisconsin Breast Cancer dataset with 683 benign and malignant samples was used by Ahmed M. Abdel-Zaher et al. [55]. Dan C. Cireşan et al. [56] used the public MITOS dataset of 50 images. SanaUllah Khan et al. [12] analysed two breast cancer microscopy image datasets, one a standard dataset and the other from a hospital in Pakistan. Wang et al. [37] used 400 mammogram images (200 malignant and 200 benign) acquired with a Senographe 2000D all-digital mammography system for breast cancer diagnosis. Perre et al. [38] used 736 mammogram images from the Breast Cancer Digital Repository. Ragab et al. [39] used two public datasets. Yap et al. [40] used two different ultrasound (US) datasets: dataset A included 306 images, of which 60 were malignant and the rest benign, and the second dataset included 163 images, of which 53 were cancerous and 110 benign. Wenqing Sun et al. [41] analysed 1874 pairs of mammogram images, of which 1434 were malignant and 1724 were benign.

Ahmed et al. [42] used two publicly available datasets: the Mammographic Image Analysis Society (MIAS) digital mammogram dataset and the Curated Breast Imaging Subset of the Digital Database for Screening Mammography (CBIS-DDSM). The datasets used by different authors are listed in Table 5. Several of the datasets analysed are publicly available [28, 33, 39, 40, 50]. BreakHis [28, 33] is a publicly available dataset with 7909 histopathological images, and it is the most commonly used dataset among the papers reviewed in this study.

5 Pre-processing the images

Histopathological images are high-resolution, large images with complex structures. This complexity, together with disturbing factors such as noise, can slow down the analysis process. Pre-processing is performed to remove the different types of noise in tissue images and to improve quantitative analysis. Mammograms likewise have to be pre-processed to improve image quality for further analysis.

Ayana, Gelan, et al. [61] pre-processed and segmented images using OpenCV and scikit-image [83]. The acquired color images were converted to grayscale and then to binary images using adaptive thresholding. Noise was removed using a dilation function, yielding a segmented image containing only the cell body.
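Ayana et al. used OpenCV and scikit-image; to keep the sketch dependency-free, the same three steps (grayscale conversion, adaptive mean thresholding, binary dilation) are written here in plain NumPy. The BT.601 grey weights, window size 15, offset c = 10, and the assumption that stained cells are darker than their surroundings are illustrative choices, not the authors' settings.

```python
import numpy as np


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """RGB -> grayscale with ITU-R BT.601 luma weights."""
    return rgb[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])


def local_mean(img: np.ndarray, size: int) -> np.ndarray:
    """Mean over a size x size window (size odd, edges replicated), via an integral image."""
    pad = size // 2
    s = np.pad(np.pad(img, pad, mode="edge").cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    h, w = img.shape
    total = (s[size:size + h, size:size + w] - s[:h, size:size + w]
             - s[size:size + h, :w] + s[:h, :w])
    return total / (size * size)


def adaptive_threshold_dark(gray: np.ndarray, size: int = 15, c: float = 10.0) -> np.ndarray:
    """True where a pixel is darker than its local mean by more than c (e.g. stained cells)."""
    return gray < local_mean(gray, size) - c


def dilate(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Binary dilation with a 3 x 3 square structuring element."""
    out = mask.astype(bool)
    h, w = out.shape
    for _ in range(iterations):
        p = np.pad(out, 1, mode="constant")
        grown = np.zeros_like(out)
        for dy in range(3):
            for dx in range(3):
                grown |= p[dy:dy + h, dx:dx + w]
        out = grown
    return out


# Stand-in image: light background (200) with a dark 10 x 10 "cell" (60) in all channels.
rgb = np.full((40, 40, 3), 200, dtype=np.uint8)
rgb[15:25, 15:25] = 60
mask = adaptive_threshold_dark(to_gray(rgb))
seg = dilate(mask)
print(int(mask.sum()), int(seg.sum()))
```

Dilation grows the mask by one pixel per iteration and fills small gaps inside it; removing isolated foreground specks would instead call for erosion or a morphological opening.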

Alruwaili et al. [46] performed adaptive contrast enhancement by redistributing the lightness values of the input image, which improves small details, low-contrast regions, and textures, and thereby the visibility of curves and edges throughout the image. Image normalization is performed to reduce noise and to ensure that pixel values lie within a specified range.
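The exact adaptive scheme of Alruwaili et al. is not specified here, so the sketch below substitutes plain global histogram equalization, the simplest way of redistributing intensity values through the image's cumulative histogram, followed by min-max normalization to [0, 1]. Adaptive variants such as CLAHE apply the same idea within local tiles.

```python
import numpy as np


def equalize_histogram(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit image via its cumulative histogram."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]                  # CDF at the darkest occupied level
    denom = max(cdf[-1] - cdf_min, 1)          # avoid division by zero for constant images
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255), 0, 255).astype(np.uint8)
    return lut[img]                            # map every pixel through the lookup table


def min_max_normalize(img: np.ndarray) -> np.ndarray:
    """Rescale intensities linearly to [0, 1]."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)


img = np.repeat(np.arange(100, 104, dtype=np.uint8), 4).reshape(4, 4)  # low-contrast stand-in
eq = equalize_histogram(img)
norm = min_max_normalize(eq)
print(np.unique(eq), norm.min(), norm.max())
```

The four nearly identical grey levels are spread across the full 0 to 255 range, which is what makes faint edges and textures visible.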

Phan, Nam Nhut, et al. [59] generated high-resolution patches from each WSI and used the Macenko method [84] to normalize the hematoxylin and eosin staining of the patches. Blurry images were removed with a customized script that computes the variance of the Laplacian and applies a threshold. Thapa, Ashu, et al. [60] performed color normalization to reduce color variation in hematoxylin and eosin stained images, and enhanced the images by filtering with contrast limited adaptive histogram equalization (CLAHE), which also reduced edge shadowing. Zhang et al. [43] pre-processed the original images to obtain the breast region of interest. The original mammogram is passed through seven steps to obtain the pre-processed image: (1) AN reduction; (2) MN reduction; (3) CLAHE; (4) BG removal; (5) PEM removal; (6) SSBC; and (7) downsampling. Suh et al. [44] resized all images to 1000 × 1300 pixels. To minimize contrast differences between images, they applied the CLAHE algorithm [85], which transforms the brightness of each pixel by applying histogram equalization locally [86] within neighboring pixel regions to improve local contrast.
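The Laplacian-variance blur filter described for Phan et al. can be sketched as follows: a sharp patch has strong second-derivative responses and therefore a high variance, while a blurry patch has a low one. The 4-neighbour Laplacian kernel is a standard choice; the threshold value is a hypothetical placeholder, since the authors' script and cut-off are not given.

```python
import numpy as np

# 4-neighbour discrete Laplacian kernel.
LAPLACIAN = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=np.float64)


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 3 x 3 Laplacian response ('valid' region); low values suggest blur."""
    g = gray.astype(np.float64)
    h, w = g.shape
    resp = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            resp += LAPLACIAN[dy, dx] * g[dy:dy + h - 2, dx:dx + w - 2]
    return float(resp.var())


def drop_blurry(patches: list, threshold: float) -> list:
    """Keep grayscale patches whose Laplacian variance is at least `threshold` (hypothetical value)."""
    return [p for p in patches if laplacian_variance(p) >= threshold]


checker = (np.indices((8, 8)).sum(axis=0) % 2) * 255.0   # sharp texture
ramp = np.tile(np.arange(8.0), (8, 1))                     # smooth gradient, no edges
kept = drop_blurry([checker, ramp], threshold=100.0)
print(laplacian_variance(checker), laplacian_variance(ramp), len(kept))
```

In practice the threshold would be tuned on a few manually inspected patches, since the variance also depends on stain intensity and tissue content.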

Yurttakal et al. [62] cropped the tumorous regions from the MRI slices, resized each image to 50 × 50 pixels and normalized the pixel values to [0, 1]. The images were denoised using an image-denoising deep neural network [87]. Zhu et al. [28] performed color correction [88] to compensate for the color variations that occur during staining of breast histology images. A simple statistical analysis is used to impose the color characteristics of one image on another. Li et al. [30] performed stain normalization [88]. The RGB images are translated into a decorrelated color space and the mean and standard deviation of each channel are measured separately. A set of linear transformations is then applied to match the source color distribution to that of the target image, and the image is converted back to RGB.

Xingyu Li et al. [47] normalized the images using an illuminant normalization method [77]. The normalized image is converted to grayscale and rescaled to [0, 1], which reduces the effect of color variations. Baris Gecer et al. [49] pre-processed the images by subtracting the mean RGB value from each pixel. Alexander Rakhlin et al. [13] normalized the amount of H&E stain on the tissues using stain normalization [89]. The RGB color is decomposed into the H&E color space of the tissue. The H&E quantities are then altered by multiplying the H&E magnitudes of each pixel by two uniform random variables drawn from the range [0.7, 1.3].
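The statistical color correction described above matches the well-known Reinhard color transfer, which is assumed here to be the method of [88]. The sketch below assumes float RGB images in (0, 1] and uses that method's RGB→LMS and log-LMS→lαβ matrices. It matches the per-channel mean and standard deviation of the source to those of the target, then converts back. The function names are illustrative.

```python
import numpy as np

# RGB -> LMS and log-LMS -> l-alpha-beta matrices from Reinhard et al.'s color transfer.
RGB2LMS = np.array([[0.3811, 0.5783, 0.0402],
                    [0.1967, 0.7244, 0.0782],
                    [0.0241, 0.1288, 0.8444]])
LMS2LAB = np.diag([1 / np.sqrt(3), 1 / np.sqrt(6), 1 / np.sqrt(2)]) @ np.array(
    [[1.0, 1.0, 1.0], [1.0, 1.0, -2.0], [1.0, -1.0, 0.0]])
EPS = 1e-6


def rgb_to_lab(rgb):
    """Float RGB image -> (n_pixels, 3) array in the decorrelated l-alpha-beta space."""
    lms = rgb.reshape(-1, 3) @ RGB2LMS.T
    return np.log10(np.maximum(lms, EPS)) @ LMS2LAB.T


def lab_to_rgb(lab, shape):
    """Inverse of rgb_to_lab, reshaped back to the image shape."""
    lms = 10.0 ** (lab @ np.linalg.inv(LMS2LAB).T)
    return (lms @ np.linalg.inv(RGB2LMS).T).reshape(shape)


def reinhard_transfer(source, target):
    """Give `source` the per-channel mean and std of `target` in l-alpha-beta space."""
    src = rgb_to_lab(np.asarray(source, dtype=float))
    tgt = rgb_to_lab(np.asarray(target, dtype=float))
    matched = (src - src.mean(axis=0)) / (src.std(axis=0) + EPS) * tgt.std(axis=0) + tgt.mean(axis=0)
    return lab_to_rgb(matched, np.shape(source))
```

The result is not clipped, so values slightly outside [0, 1] are possible. Clip before display or saving.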

Ahmad et al. [50] applied two image normalization techniques [84, 88] to support better quantitative analysis. A logarithmic transformation converts the color images to optical density (OD). The 2D projection of the OD tuples is then determined, the original images are passed through this projection, and histogram equalization is applied. Araújo et al. [52] normalized the images using the staining technique proposed in [84], in which the image colors are transformed into optical density and singular value decomposition is applied. The resulting color-space transformation is applied to the original image, and the histogram is stretched so that the dynamic range covers the lower 90% of the data. Spanhol et al. [53] reduced the image size from 700 × 460 to 350 × 230 pixels, which gave the best results. Patches were extracted from the images and the model was trained on the extracted patches.
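The optical-density and SVD steps of the Macenko method [84] can be sketched as follows. This minimal NumPy version only estimates the two stain vectors. It uses the eigenvectors of the OD covariance, which give the same directions as the SVD of the centred OD matrix. It also uses the commonly cited defaults Io = 240, β = 0.15 and α = 1 percentile, which are assumptions and not values reported in the reviewed papers. The final step of re-expressing stain concentrations against reference vectors is omitted.

```python
import numpy as np


def macenko_stain_vectors(rgb, io=240.0, beta=0.15, alpha=1.0):
    """Estimate the H and E stain vectors (3 x 2, unit columns) of an RGB image."""
    # 1. Optical density (OD); +1 avoids log(0) for black pixels.
    od = -np.log((np.asarray(rgb, dtype=float).reshape(-1, 3) + 1.0) / io)
    # 2. Drop nearly transparent pixels (OD below beta in any channel).
    od_hat = od[np.all(od > beta, axis=1)]
    # 3. Plane of the two largest eigenvectors of the OD covariance.
    _, eigvecs = np.linalg.eigh(np.cov(od_hat.T))
    plane = eigvecs[:, [2, 1]]
    if (od_hat @ plane[:, 0]).mean() < 0:   # orient the plane so the angles do not wrap
        plane[:, 0] = -plane[:, 0]
    proj = od_hat @ plane
    # 4. Robust extreme angles of the projected OD points.
    phi = np.arctan2(proj[:, 1], proj[:, 0])
    lo, hi = np.percentile(phi, [alpha, 100.0 - alpha])
    v1 = plane @ np.array([np.cos(lo), np.sin(lo)])
    v2 = plane @ np.array([np.cos(hi), np.sin(hi)])
    # 5. Hematoxylin absorbs more red light, so its vector has the larger first component.
    he = np.stack([v1, v2], axis=1) if v1[0] > v2[0] else np.stack([v2, v1], axis=1)
    return he / np.linalg.norm(he, axis=0)
```

Using percentiles rather than the exact minimum and maximum angles keeps a few outlier pixels from dictating the stain directions.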

Sana Ullah Khan et al. [12] performed stain normalization using the method proposed by Macenko et al. [84]. Wang et al. [37] removed noise using an adaptive mean filter algorithm [90]. A sliding window is moved over the image, and the spatial correlation, variance and mean within the window are computed to detect the presence of noise. If noise is detected, the pixel values in the window are replaced by the mean. A contrast enhancement algorithm [91] was used to enhance the contrast between masses and the surrounding tissue. Perre et al. [38] pre-processed the images by cropping a region of interest using the bounding box of the segmented region. The aspect ratio is preserved even when the region of interest is smaller than the lesion's dimensions. When the lesion is close to the image border, the square crop is translated by changing the image coordinates so that the surrounding breast patterns are included.
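The square-crop rule described for Perre et al. can be sketched as below. The details are assumptions. The square side is the longer side of the bounding box plus an optional margin, capped at the image size. A square that would cross the image border is shifted inward rather than padded, so that surrounding breast tissue is included.

```python
import numpy as np


def square_crop(image, bbox, margin=0):
    """Square crop around bbox = (row0, col0, row1, col1), with row1/col1 exclusive.

    The square is shifted inward instead of padded when it would cross the border.
    """
    h, w = image.shape[:2]
    r0, c0, r1, c1 = bbox
    side = min(max(r1 - r0, c1 - c0) + 2 * margin, h, w)
    centre_r, centre_c = (r0 + r1) // 2, (c0 + c1) // 2
    top = min(max(centre_r - side // 2, 0), h - side)
    left = min(max(centre_c - side // 2, 0), w - side)
    return image[top:top + side, left:left + side]
```

Because the crop is square, a later resize to the network input size does not distort the lesion's aspect ratio.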

Ragab et al. [39] applied contrast limited adaptive histogram equalization (CLAHE) to improve image contrast. Wenqing Sun et al. [41] masked the ground-truth files of most of the data to simulate unlabelled data. Ahmed et al. [42] proposed a pre-processing algorithm with two modules: (a) artifact and noise removal and (b) muscle removal. The various pre-processing methods used are shown in Table 6. The pre-processing stage yields enhanced or noise-free images that are later used for training.
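The "contrast limited" part of CLAHE can be illustrated on a single tile. The histogram is clipped at a limit, the clipped excess is redistributed over all bins, and the cumulative histogram becomes the gray-level mapping. This sketch omits the tiling and the bilinear interpolation between tiles that full CLAHE performs, and it redistributes the excess only once. Expressing `clip_limit` as a fraction of the pixel count is an assumption of the sketch. OpenCV provides the complete algorithm as `cv2.createCLAHE`.

```python
import numpy as np


def clipped_equalize(gray, clip_limit=0.01, n_bins=256):
    """Contrast-limited histogram equalization of one uint8 tile.

    clip_limit is the maximum fraction of pixels allowed in one histogram bin.
    """
    hist = np.bincount(gray.ravel(), minlength=n_bins).astype(float)
    limit = max(clip_limit * gray.size, 1.0)
    excess = np.maximum(hist - limit, 0.0).sum()
    # Clip tall bins and spread the clipped mass uniformly over all bins.
    hist = np.minimum(hist, limit) + excess / n_bins
    cdf = hist.cumsum()
    lut = np.round((n_bins - 1) * cdf / cdf[-1]).astype(np.uint8)
    return lut[gray]
```

With `clip_limit=1.0` nothing is clipped and the function reduces to plain histogram equalization. Smaller limits stretch contrast less aggressively, which is what keeps CLAHE from amplifying noise in flat regions.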

6 Data augmentation

Deep learning networks perform better when more data is available. Since the datasets used in several papers are small, data augmentation is applied. The size of the dataset is increased artificially by creating modified versions of the images. Augmented data can be obtained from the original images by applying geometric transformations such as translation, rotation, scaling and shearing [96]. Alruwaili et al. [46] applied rotation, reflection, shifting and scaling to generate augmented images for each image in the dataset. Thapa et al. [60] applied rotations of 90°, 180° and 270° together with flipping, which yields seven times more images than the original database contained.
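Rotating an image by 0°, 90°, 180° and 270° and mirroring each rotation gives the eight symmetries of a square. This produces the original plus seven new images, consistent with the sevenfold increase reported by Thapa et al. The following is a minimal NumPy sketch; `np.rot90` rotates the first two axes, so it also works for color images.

```python
import numpy as np


def dihedral_augment(image):
    """Return the 8 rotation/flip variants of an image (the original plus 7 new ones)."""
    variants = []
    for k in range(4):                       # 0, 90, 180 and 270 degrees
        rotated = np.rot90(image, k)
        variants.append(rotated)
        variants.append(np.fliplr(rotated))  # mirror each rotation
    return variants
```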

Suh et al. [44] augmented only the non-malignant class, since it contained only one fifth as many images as the malignant class. This compensated for the class imbalance. Yurttakal et al. [62] applied horizontal and vertical translations and rotations of up to 20 degrees. Gour et al. [33] used data augmentation methods including affine transformation, an image patch generation algorithm and stain normalization, which increased the dataset size by 11%. Zhu et al. [28] used flipping, random rotation and shearing transformations to avoid overfitting, generating eight images for each training sample. Images were resized to 1120 × 672 after augmentation.
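Oversampling the minority class by augmentation, as Suh et al. did for the non-malignant images, can be sketched as follows. The transform set (flips and 90° rotations) and the round-robin choice of source images are hypothetical choices for illustration; the specific transforms used are not detailed here.

```python
import numpy as np

# Hypothetical transform set: flips and 90-degree rotations.
TRANSFORMS = (
    np.fliplr,
    np.flipud,
    lambda img: np.rot90(img, 1),
    lambda img: np.rot90(img, 2),
    lambda img: np.rot90(img, 3),
)


def balance_by_augmentation(minority, n_target, rng, transforms=TRANSFORMS):
    """Grow the minority class to n_target images by adding augmented copies."""
    augmented = list(minority)
    i = 0
    while len(augmented) < n_target:
        source = minority[i % len(minority)]            # cycle through the originals
        transform = transforms[rng.integers(len(transforms))]
        augmented.append(transform(source))
        i += 1
    return augmented
```

For a 1:5 imbalance such as 200 non-malignant and 1000 malignant images, `balance_by_augmentation(non_malignant, 1000, rng)` would add 800 augmented copies to the non-malignant class.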

Ahmad et al. [50] used an augmented patch dataset to reduce overfitting and to increase the size of the dataset. Augmentation generated 72,800 images from the original 260 images. Araújo et al. [52] created an augmented patch dataset using mirroring and rotation to generate 70,000 patches. Bardou et al. [51] used two augmentation techniques: rotation by 90°, 180° and 270°, and horizontal flipping. Rakhlin et al. [13] performed 50 random color augmentations for each image. Sana Ullah Khan et al. [12] increased the size of the dataset by scaling, rotation, translation and color modelling. This generated a total of 8000 images, of which 6000 were used for training and the rest for testing. Perre et al. [38] performed a combination of flipping and rotations of 90°, 180° and 270°. Dina A. Ragab et al. [39] rotated each image by 0°, 90°, 180° and 270°, producing four images from each original image. Most of the works apply data augmentation to enlarge the dataset and reduce overfitting, and the most commonly used techniques are translation and rotation. Models generally show improved accuracy on an augmented dataset. For instance, the accuracy of the model proposed by Gour et al. [33] improved by around 8 percentage points (from 84.34% to 92.52%) when an augmented dataset was used.
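The H&E color augmentation attributed to Rakhlin et al. can be sketched with color deconvolution. RGB is first converted to optical density and projected onto the hematoxylin, eosin and DAB stain vectors. The H and E concentrations are then scaled by two factors drawn from U[0.7, 1.3], and the image is reconstructed. The stain matrix is the Ruifrok-Johnston one that scikit-image uses in `skimage.color.rgb2hed`. Using natural-log optical density and float RGB in (0, 1] are assumptions of this sketch.

```python
import numpy as np

# Ruifrok-Johnston stain vectors (rows: hematoxylin, eosin, DAB) in optical-density space.
RGB_FROM_HED = np.array([[0.65, 0.70, 0.29],
                         [0.07, 0.99, 0.11],
                         [0.27, 0.57, 0.78]])
HED_FROM_RGB = np.linalg.inv(RGB_FROM_HED)


def he_jitter(rgb, rng, low=0.7, high=1.3):
    """Randomly rescale the H and E stain amounts of a float RGB image in (0, 1]."""
    od = -np.log(np.clip(rgb, 1e-6, 1.0))               # optical density per channel
    stains = od.reshape(-1, 3) @ HED_FROM_RGB            # H, E, DAB concentrations per pixel
    scale = np.array([rng.uniform(low, high), rng.uniform(low, high), 1.0])
    od_new = (stains * scale) @ RGB_FROM_HED             # back to optical density
    return np.clip(np.exp(-od_new), 0.0, 1.0).reshape(rgb.shape)
```

Calling `he_jitter` 50 times with one random generator would give 50 color variants of an image, mirroring the count reported by Rakhlin et al.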

Shen et al. [93] applied random transformations: horizontal and vertical flips, rotation in [−25, 25] degrees, zoom in the ratio range [0.8, 1.2] and an intensity shift in [−20, 20] pixel values. Yiwen Xu et al. [95] augmented the data using image flipping, translation, rotation and deformation. Viridiana Romero Martinez et al. [97] applied random rotation, random resized cropping and random horizontal flipping. Eleftherios Trivizakis et al. [98] performed augmentation using rotation, flipping, elastic deformation and mirroring, which reinforce model properties such as translation, rotation and scale invariance. The augmentation techniques used by various authors are shown in Table 7.
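Shen et al.'s random transformations can be sketched for a single-channel image as below. Rotation and zoom are implemented as one inverse affine mapping about the image centre, with nearest-neighbour sampling and zero fill. This is a simplification; libraries such as `scipy.ndimage` offer interpolated versions. The ranges are those quoted above, and the 0.5 flip probability is an assumption.

```python
import numpy as np


def affine_nn(image, angle_deg, zoom):
    """Rotate by angle_deg and zoom about the centre of a 2-D image.

    Uses inverse mapping with nearest-neighbour sampling; output pixels whose
    source falls outside the image are set to 0.
    """
    h, w = image.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w].astype(float)
    t = np.deg2rad(angle_deg)
    y0, x0 = yy - cy, xx - cx
    # Source coordinates of every output pixel (inverse rotation and scaling).
    src_y = (np.cos(t) * y0 + np.sin(t) * x0) / zoom + cy
    src_x = (-np.sin(t) * y0 + np.cos(t) * x0) / zoom + cx
    iy, ix = np.rint(src_y).astype(int), np.rint(src_x).astype(int)
    valid = (iy >= 0) & (iy < h) & (ix >= 0) & (ix < w)
    out = np.zeros_like(image)
    out[valid] = image[iy[valid], ix[valid]]
    return out


def random_transform(image, rng):
    """Random flips, rotation in [-25, 25] deg, zoom in [0.8, 1.2], intensity shift in [-20, 20]."""
    out = np.asarray(image, dtype=float)
    if rng.random() < 0.5:
        out = out[:, ::-1]                     # horizontal flip
    if rng.random() < 0.5:
        out = out[::-1, :]                     # vertical flip
    out = affine_nn(out, rng.uniform(-25, 25), rng.uniform(0.8, 1.2))
    return out + rng.uniform(-20, 20)          # global intensity shift
```

Inverse mapping (looking up a source pixel for every output pixel) avoids the holes that forward-mapping pixels would leave after rotation.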

7 Transfer learning methodologies

In transfer learning, knowledge gained from one problem is used to solve another problem [29, 100, 101]. Transfer learning is used when training data is costly or difficult to obtain [102]. A network trained for a particular task is transferred to the target task and trained on the target dataset [103]. Zerouaoui et al. [58] evaluated more than twenty hybrid architectures for binary classification of breast histopathological images. These combined seven deep learning feature extractors (DenseNet 201, Inception V3, Inception ResNet V2, MobileNet V2, ResNet 50, VGG16 and VGG19) with four classifiers (MLP, SVM, DT and KNN), and the best results were obtained with DenseNet 201. An accuracy of 99% was obtained on the FNAC dataset. On the BreakHis dataset, accuracies of 92.61%, 92%, 93.93% and 91.73% were obtained at the four magnifications of 40X, 100X, 200X and 400X.

EfficientNetB2, InceptionV3 and ResNet50 were used in the multistage transfer learning (MSTL) algorithm proposed by Ayana et al. [61] and optimized using three optimizers: Adam, Adagrad and stochastic gradient descent (SGD). ResNet50 with the Adagrad optimizer achieved a test accuracy of 99 ± 0.612% on the Mendeley dataset and 98.7 ± 1.1% on the MT-Small-Dataset. Alruwaili et al. [46] performed binary classification of breast images into benign and malignant using NASNet-Mobile and an advanced version of ResNet 50 named MOD-RES…. It can be seen that oversampling increases the overall accuracy of the model.

Phan et al. [59] proposed dual-step transfer learning using pretrained models such as ResNet101, VGG16, ResNet50 and Xception for feature extraction. An in-house breast cancer dataset is used in the first step and the TCGA-BRCA dataset in the second step. Compared with one-step transfer learning, the two-step process improved model performance and reduced training time. Four-class classification into the subtypes basal-like, HER2-enriched, luminal A and luminal B was performed. Finally, gradient-weighted class activation mapping was used to visualize the model predictions.

Gour et al. [33] proposed ResHist, a 152-layer CNN based on residual learning, for the binary classification of histopathological images into benign and malignant. ResHist achieved an accuracy of 84.34% and an F1-score of 90.49% on the original dataset, and an accuracy of 92.52% and an F1-score of 93.45% on the augmented dataset.

Celik et al. [57] performed image classification using residual networks (ResNet) and densely connected convolutional networks (DenseNet). An accuracy of 91.57% was obtained with the DenseNet-161 model and an F-score of 94.11% with the ResNet-50 model. Li et al. [30] classified breast histopathology images into four classes that differ in cell density, variability and cell morphological organization. To preserve important information, two kinds of patches of distinct sizes, containing tissue-level and cell-level features, were extracted. Discriminative patches were selected, features were extracted from them, and classification was performed. ResNet50 [104] extracts features from the patches, P-norm pooling [105] produces the final image features, and a support vector machine (SVM) performs the final image-wise classification with an accuracy of 95%. Xingyu Li et al. [47] used a fully convolutional autoencoder, i.e. an autoencoder built from a fully convolutional network, which has no fully connected layers [26]. Dominant structural patterns are learned from the normal images, and patches that lack these patterns are detected as abnormal by the neural network and a support vector machine. Due to the small dataset, this scheme achieved an accuracy of 76%.
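P-norm pooling, which Li et al. [30] use to aggregate patch features into a single image descriptor, computes \((\frac{1}{N}\sum_{i} |x_{i}|^{p})^{1/p}\) for each feature over the N patches. With p = 1 it averages the magnitudes, and as p grows it approaches max pooling. The following is a minimal sketch; the default p = 3 is a hypothetical choice, not the value used in [30].

```python
import numpy as np


def p_norm_pool(patch_features, p=3.0):
    """Pool (n_patches, n_features) -> (n_features,) as (mean |x|^p)^(1/p)."""
    x = np.abs(np.asarray(patch_features, dtype=float))
    return np.mean(x ** p, axis=0) ** (1.0 / p)
```

Intermediate values of p let a few strongly responding patches dominate the image feature without discarding the rest entirely.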

Nadia Brancati et al. [48] proposed a technique for detecting invasive ductal carcinoma (IDC) of the breast. The whole-slide images are divided into patches labelled as either IDC or non-IDC. Features were first learned by training an unsupervised model to reconstruct the input images. Backpropagation was implemented with stochastic gradient descent, and overfitting was prevented by imposing sparsity constraints on the hidden units. A convolutional autoencoder named supervised encoder FusionNet (SEF) then performed supervised classification. FusionNet is a residual network with skip connections, and this method obtained an accuracy of 97.67%.

Baris Gecer et al. [49] used four fully convolutional neural networks, which can handle images of different resolutions, to localize the regions of interest by removing unwanted information. Another convolutional neural network then classified the images into four classes, and the method obtained an accuracy of 55%. Ahmad et al. [50] used three pretrained convolutional neural networks, namely AlexNet, ResNet and GoogLeNet, for breast histopathological image classification and obtained an accuracy of 85%. Bardou et al. [51] proposed a convolutional neural network with five convolutional layers and two fully connected layers, using the rectified linear unit (ReLU) activation function and implemented with BVLC Caffe [106]. They compared the convolutional neural network with feature-based classification for both binary and multi-class tasks. Binary classification achieved higher accuracy than multi-class classification, in the range of 96.15% to 98.33%.

Rakhlin et al. [13] used a deep convolutional feature representation [107] with unsupervised feature extraction, based on deep convolutional neural networks trained on ImageNet [108]. VGG-16, Inception V3 and ResNet-50 were used for feature extraction. The fully connected layers are removed from each model so that images of arbitrary size can be processed. The outputs of the last convolutional layers of ResNet-50 and VGG-16 are converted to one-dimensional feature vectors, and the outputs of four internal blocks of VGG-16 are concatenated into a single vector. Sparse, low-dimensional descriptors are obtained and then classified with LightGBM, an implementation of gradient boosted trees [109]. Two-class classification (carcinoma vs non-carcinoma) and four-class classification (invasive carcinoma, in situ carcinoma, benign and normal) achieved accuracies of 93.8 ± 2.3% and 87.2 ± 2.6%, respectively.

Fabio A. Spanhol et al. [53] used features extracted from the images as the input to classifiers. The output of a previously trained convolutional neural network is fed into classifiers that are trained on problem-specific data. The CaffeNet model pre-trained on ImageNet was used. Accuracies of 86.3% and 84.6% were obtained for two-class and four-class classification, respectively. Sana Ullah Khan et al. [12] analyzed the cancer detection process with GoogLeNet [110], VGGNet [111] and ResNet [104], each extracting low-level features separately. These features are combined using a fully connected layer. The method achieved an accuracy of 97.525% without training from scratch, which improved classification efficiency. Ana C. Perre et al. [38] used three pre-trained models: CNN-F, CNN-M [112] and Caffe [106].

Yap et al. [40] investigated three methods for breast ultrasound lesion detection: a patch-based LeNet [113], U-Net [114] and a method based on AlexNet [115]. A disadvantage of the method is that it required both training images and negative images in the experiment. Ahmed et al. [42] used the DeepLab V3 framework and Mask R-CNN, with Xception-65 as the backbone of DeepLab V3 and ResNet-101 as the backbone of Mask R-CNN. The surveyed literature shows that the most commonly used transfer learning models are VGG-16, VGG-19 and ResNet. Xie et al. [116] analyzed binary and multi-class classification of histopathological images using Inception_V3 and Inception_ResNet_V2, and found the Inception_ResNet_V2 network more suitable than the Inception_V3 architecture [116].

8 Convolution neural network models

A convolutional neural network (CNN) is a deep learning algorithm for image recognition and image classification that consists of an input layer, an output layer and hidden layers [117]. The hidden layers comprise convolution, pooling and fully connected layers, and the convolution operation extracts features from the images [118]. Zhang et al. [43] used an eight-layer CNN with batch normalization and dropout, in which max pooling is replaced by rank-based stochastic pooling. CNNs are used to learn image-level features, and graph neural networks learn relation-aware representation features. The proposed method is termed Net-5. It achieved high accuracy but cannot deal with heterogeneous data. In addition, the dataset used is small, so optimization on a large dataset is necessary before clinical use.
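Rank-based stochastic pooling, which replaces max pooling in the network of Zhang et al., picks one activation per window at random. The probability depends only on the activation's rank: \(p_{r} = \alpha(1-\alpha)^{r-1}\) for the r-th largest value. The sketch below renormalizes these probabilities to sum to one and uses 2 × 2 windows and α = 0.5; both are assumptions. It covers training-time behaviour only. With α = 1 it reduces to max pooling.

```python
import numpy as np


def rank_stochastic_pool(fmap, rng, alpha=0.5, size=2):
    """Training-time rank-based stochastic pooling of a 2-D feature map.

    In every non-overlapping size x size window the activations are ranked in
    descending order and the r-th largest is chosen with probability
    proportional to alpha * (1 - alpha) ** (r - 1).
    """
    h, w = fmap.shape
    hh, ww = h // size, w // size
    windows = (fmap[:hh * size, :ww * size]
               .reshape(hh, size, ww, size)
               .transpose(0, 2, 1, 3)
               .reshape(hh, ww, size * size))
    n = size * size
    probs = alpha * (1.0 - alpha) ** np.arange(n)     # rank 1 (largest) first
    probs = probs / probs.sum()                        # renormalize (assumption)
    ranks = rng.choice(n, size=(hh, ww), p=probs)      # sampled rank per window (0-based)
    order = np.argsort(-windows, axis=-1)              # positions sorted by descending activation
    chosen = np.take_along_axis(order, ranks[..., None], axis=-1)
    return np.take_along_axis(windows, chosen, axis=-1)[..., 0]
```

Because the probabilities depend on ranks rather than raw activation values, the pooling behaves the same regardless of activation scale, and it still acts as a regularizer that occasionally passes on non-maximal responses.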

Tsai et al. [45] proposed a deep neural network (DNN)-based model for classifying mammograms into BI-RADS categories 0, 1, 2, 3, 4A, 4B, 4C and 5. The mammograms were segmented into block-based image segments, which are provided as inputs to the model, and the BI-RADS category is predicted as the output.

Thapa et al. [60] proposed a deep convolutional neural network (DCNN) with a patch-based classifier (PBC). The network uses a tangent-based activation function together with convolution, max pooling, dropout and softmax layers. The activation is modified by combining the arctan function and ReLU to reduce processing time and increase accuracy.

Suh et al. [44] used two convolutional neural networks, DenseNet-169 and EfficientNet-B5. Yurttakal et al. [62] proposed a convolutional neural network architecture with six groups of convolution, batch normalization and ReLU layers and five max-pooling layers, followed by a dropout layer, a fully connected layer and a softmax layer. The Adam optimizer was used. Zheng et al. [63] used a Deep Learning Assisted Efficient AdaBoost Algorithm (DLA-EABA) for breast cancer detection. Deep CNNs with multiple convolutional layers, LSTM, max-pooling layers, a fully connected layer and a softmax layer are employed for tumour classification.

Zhu et al. [28] performed histopathological image classification using multiple compact convolutional neural networks. They designed a hybrid convolutional neural network with a local model branch and a global model branch. Patches are generated from a histopathological image using a patch sampling strategy and passed to the local branch, which yields predictions for all the patches. The local branch output is obtained by voting over all patch predictions. The global branch takes the downsampled whole image as input and produces its own prediction. The local and global predictions are weighted together to obtain better representation ability. Squeeze-Excitation-Pruning (SEP) [119] reduced overfitting and achieved higher accuracy. Multiple models with different compositions and data partitions are built to improve the generalization ability of the model. The multi-model assembling scheme achieved 87.5% patient-level and 84.4% image-level accuracy.
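The local/global fusion of Zhu et al. can be sketched as follows. Patch predictions are turned into vote fractions, which are combined with the global branch's class probabilities by a weighted sum. Using vote fractions as the local score and the default weight of 0.6 are assumptions for illustration.

```python
import numpy as np


def local_vote(patch_labels, n_classes):
    """Fraction of patches voting for each class (local-branch output)."""
    counts = np.bincount(np.asarray(patch_labels), minlength=n_classes)
    return counts / counts.sum()


def fuse_local_global(patch_labels, global_probs, weight=0.6):
    """Weighted sum of local vote fractions and global-branch probabilities."""
    global_probs = np.asarray(global_probs, dtype=float)
    combined = weight * local_vote(patch_labels, len(global_probs)) + (1 - weight) * global_probs
    return int(np.argmax(combined)), combined
```

Setting `weight=1` uses only the patch votes and `weight=0` only the global branch. Intermediate values let fine cell-level evidence and whole-image context correct each other.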

Araújo et al. [52] used convolutional neural networks for four-class classification of histological images into normal tissue, benign lesion, invasive carcinoma and in situ carcinoma, and for two-class classification into non-carcinoma and carcinoma. Accuracies of 77.8% and 83.3% were obtained for the four-class and two-class classification, respectively. Spanhol et al. [54] extracted non-overlapping grid patches either with a sliding window or randomly. Supervised training was performed with stochastic gradient descent, and fusion rules such as sum, product and max [120] were used to combine the results of the image patches. A patient-level accuracy of 88.6 ± 5.6% and an image-level accuracy of 89.6 ± 6.5% were obtained.
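The three patch-fusion rules (sum, product and max) [120] can be sketched as below. Each rule takes per-patch class probabilities and returns the image-level class. The product rule is computed as a sum of logarithms to avoid numerical underflow with many patches, which is an implementation choice of this sketch.

```python
import numpy as np


def fuse_patches(patch_probs, rule="sum"):
    """Combine (n_patches, n_classes) probabilities into one image-level class."""
    p = np.asarray(patch_probs, dtype=float)
    if rule == "sum":
        scores = p.sum(axis=0)
    elif rule == "product":
        scores = np.log(np.clip(p, 1e-12, None)).sum(axis=0)   # log of the product
    elif rule == "max":
        scores = p.max(axis=0)
    else:
        raise ValueError(f"unknown fusion rule: {rule}")
    return int(np.argmax(scores))
```

For three patches at (0.7, 0.3) and one at (0.1, 0.9), the sum and product rules select class 0, while the max rule selects class 1. This shows how a single confident patch can dominate the max rule.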

Abdel-Zaher [55] proposed a deep belief network path for breast cancer detection and obtained an accuracy of 99.68%. Cireşan et al. [56] proposed a method in which the central coordinate of each mitosis is located and used for training. A deep neural network with successive convolution and max-pooling layers obtained an F-score of 0.782.

Wang et al. [37] extracted the region of interest using a mass detection method. Classifiers were trained on the features extracted from the regions of interest, together with their labels, for all the images in the dataset. Both objective and subjective features were combined. An extreme learning machine (ELM) classifier was used because it performs well in multidimensional classification. A 7-layer convolutional neural network was used, comprising three convolution layers, three max-pooling layers and one fully connected layer. The fully connected layer has 48 neurons, whose outputs serve as features for further analysis.
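An extreme learning machine trains only its output layer. The hidden weights are random and fixed, and the output weights are the least-squares solution obtained with the Moore-Penrose pseudoinverse. The minimal NumPy sketch below could, for instance, take the 48 fully connected features described above as input. The tanh activation and the hidden-layer size are assumptions.

```python
import numpy as np


class ExtremeLearningMachine:
    """Single-hidden-layer network with random hidden weights and least-squares output weights."""

    def __init__(self, n_hidden=50, seed=0):
        self.n_hidden = n_hidden
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X):
        return np.tanh(X @ self.W + self.b)

    def fit(self, X, y):
        n_classes = int(y.max()) + 1
        self.W = self.rng.normal(size=(X.shape[1], self.n_hidden))   # random, never trained
        self.b = self.rng.normal(size=self.n_hidden)
        targets = np.eye(n_classes)[y]                                # one-hot targets
        self.beta = np.linalg.pinv(self._hidden(X)) @ targets         # least-squares output weights
        return self

    def predict(self, X):
        return np.argmax(self._hidden(X) @ self.beta, axis=1)
```

Training reduces to a single pseudoinverse, with no iterative backpropagation, which is the main reason ELMs are fast to fit.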

Ragab et al. [39] used two segmentation approaches. The region of interest was first determined manually, and threshold-based and region-based segmentation were then performed. Deep convolutional neural networks were used for feature extraction, with an SVM replacing the last layer to classify the images of the DDSM and CBIS-DDSM datasets as benign or malignant. AlexNet [115] achieved an accuracy of 79% with manual segmentation and an efficiency of 94% with the automated ROI procedure. Only a small portion of the data used by Wenqing Sun et al. [41] was labelled, and the majority was unlabelled. Three convolutional layers were used, with sub-patches extracted from the ROIs as input to the network. The method is useful when labelled data is difficult to obtain, and the accuracy obtained was 82.43%.

Shen et al. [93] proposed a deep learning model for mammogram classification that requires an ROI-annotated dataset only in the initial stages and can then proceed without ROI annotations. Monika Tiwari et al. [73] applied deep learning and machine learning techniques, including regression, support vector machine (SVM), K-nearest neighbour (KNN), multi-layer perceptron and artificial neural network (ANN) classifiers, to a dataset taken from Kaggle. The SVM and random forest classifiers achieved an accuracy of 96.5%, while CNN and ANN achieved accuracies of 99.3% and 97.3%, respectively. Umar Albalawi et al. [121] used a CNN classifier to diagnose breast cancer on the MIAS (Mammographic Image Analysis Society) dataset. A Wiener filter was used to remove the noise and background of the images, and K-means clustering was used for segmentation. The authors concluded that the CNN model achieved 0.5–4% higher test accuracy and 3–13% higher specificity than other models.

Mi et al. [80] proposed a two-stage architecture combining deep learning and machine learning methods for the multi-class classification (normal tissue, benign lesion, ductal carcinoma in situ and invasive carcinoma) of digital breast pathology images. The model achieved accuracies of 85.19% for multi-class classification and 96.30% for binary classification (non-malignant vs malignant). The methods, convolutional neural networks and image modalities used by various authors are shown in Table 8.

9 Performance metrics

The efficacy of the models is evaluated using performance metrics such as accuracy, sensitivity, specificity, AUC and F1-score. The resulting metric values are detailed in this section.

9.1 Accuracy

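Accuracy is the fraction of predictions that the model gets right: the number of predictions whose predicted value matches the actual value, divided by the total number of predictions. It is given in Eq. (1), where TP, TN, FP and FN denote the numbers of true positives, true negatives, false positives and false negatives.

\[\mathrm{Accuracy}=\frac{TP+TN}{TP+TN+FP+FN} \tag{1}\]

Accuracies obtained by various models are shown in Table 9.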

9.2 Sensitivity, specificity & AUC

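Sensitivity (recall) is the fraction of actual positive cases that the model correctly predicts as positive, and specificity is the fraction of actual negative cases that it correctly predicts as negative. They are given in Eq. (2) and Eq. (3), respectively.

\[\mathrm{Sensitivity}=\frac{TP}{TP+FN} \tag{2}\]

\[\mathrm{Specificity}=\frac{TN}{TN+FP} \tag{3}\]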

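The area under the curve (AUC) is the area under the receiver operating characteristic (ROC) curve, which plots sensitivity against 1 − specificity. It is calculated with the trapezoidal rule over the n points of the ROC curve, taken in order of increasing abscissa, using Eq. (4).

\[\mathrm{AUC}=\sum_{\mathrm{i}=1}^{n-1}\left({\mathrm{x}}_{\mathrm{i}+1}-{\mathrm{x}}_{\mathrm{i}}\right)\frac{{\mathrm{y}}_{\mathrm{i}}+{\mathrm{y}}_{\mathrm{i}+1}}{2} \tag{4}\]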

where \({\mathrm{x}}_{\mathrm{i}}\) is the abscissa of the \({\mathrm{i}}^{\mathrm{th}}\) point in the ROC curve and \({\mathrm{y}}_{\mathrm{i}}\) is the ordinate of the \({\mathrm{i}}^{\mathrm{th}}\) point in the ROC curve. Table 10 shows the Sensitivity, Specificity & AUC obtained for breast cancer classification.
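Eq. (4) is the trapezoidal rule applied to the ROC points. The sketch below builds the points by sweeping a decision threshold over the classifier scores and then applies the rule. The function names are illustrative.

```python
import numpy as np


def roc_points(y_true, scores):
    """(FPR, TPR) pairs for every distinct threshold, from (0, 0) to (1, 1)."""
    y = np.asarray(y_true)
    s = np.asarray(scores, dtype=float)
    thresholds = np.concatenate(([np.inf], np.unique(s)[::-1]))   # descending thresholds
    fpr = np.array([(s[y == 0] >= t).mean() for t in thresholds])  # x: 1 - specificity
    tpr = np.array([(s[y == 1] >= t).mean() for t in thresholds])  # y: sensitivity
    return fpr, tpr


def trapezoid_auc(x, y):
    """Area under the curve through (x_i, y_i), points taken in increasing x."""
    order = np.argsort(x, kind="stable")
    x, y = np.asarray(x)[order], np.asarray(y)[order]
    return float(np.sum((x[1:] - x[:-1]) * (y[1:] + y[:-1]) / 2))
```

For labels (0, 0, 1, 1) and scores (0.1, 0.4, 0.35, 0.8), the ROC points are (0, 0), (0, 0.5), (0.5, 0.5), (0.5, 1) and (1, 1), and the trapezoidal area is 0.75.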

9.3 F-measure

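The F-measure is the harmonic mean of precision and recall (sensitivity). With both weighted equally it is the F1-score, given in Eq. (5).

\[\text{F-measure}=\frac{2\times\mathrm{Precision}\times\mathrm{Recall}}{\mathrm{Precision}+\mathrm{Recall}},\qquad \mathrm{Precision}=\frac{TP}{TP+FP},\quad \mathrm{Recall}=\frac{TP}{TP+FN} \tag{5}\]

F-measures obtained for breast cancer classification of histopathological images are shown in Table 11.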
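The confusion-matrix metrics of Eqs. (1)–(3) and (5) can be computed directly from binary labels, as in this plain-Python sketch. Treating label 1 as the positive (malignant) class is an assumption, and zero denominators return 0.0 by convention.

```python
def _safe_div(num, den):
    """Division that returns 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity, specificity, precision and F1 from 0/1 labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    sensitivity = _safe_div(tp, tp + fn)                  # recall, Eq. (2)
    precision = _safe_div(tp, tp + fp)
    return {
        "accuracy": _safe_div(tp + tn, tp + tn + fp + fn),  # Eq. (1)
        "sensitivity": sensitivity,
        "specificity": _safe_div(tn, tn + fp),              # Eq. (3)
        "precision": precision,
        "f1": _safe_div(2 * precision * sensitivity, precision + sensitivity),  # Eq. (5)
    }
```

For true labels (1, 1, 1, 0, 0, 0, 0, 1) and predictions (1, 0, 0, 0, 0, 1, 0, 1), the result is TP = 2, TN = 3, FP = 1 and FN = 2. This gives an accuracy of 0.625, a sensitivity of 0.5, a specificity of 0.75 and an F1-score of 4/7 ≈ 0.571. It also shows how accuracy alone can hide a low sensitivity.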

10 Future trends and challenges in breast cancer image analysis

This section outlines future research directions for breast cancer classification and detection, where significant effort is still needed to improve classification efficiency. This review highlighted current trends in breast cancer detection and classification using deep learning approaches. Despite the good outcomes reported in the literature, some limitations and difficulties remain. These are presented below:

- Lack of large publicly available datasets: deep learning requires large datasets for efficient detection.
- Most studies used private databases obtained from clinics or cancer research organizations, which makes it difficult to evaluate and compare the developed models.
- Incorrect labelling of data due to observer variation in image segmentation datasets.
- Computation time and memory overhead are often not reported.
- Benchmark datasets are largely unavailable.

Future work includes:

- A reliable CAD system with explainable AI is essential for doctors and radiologists to diagnose breast cancer at an early stage.
- Images of different modalities (histopathology, MRI, CT, thermal, mammogram) should be gathered from affected individuals, and labelled multi-class cancer datasets should be included to improve detection performance.
- Development of automatic annotators that can segment cancer images without the help of radiologists or clinicians.
- Appropriate selection of pre-processing algorithms to enhance image quality, which can improve model accuracy.
- Unsupervised methods and saliency maps can be used to deal with unlabelled datasets.
- Multi-hybrid deep learning models for analyzing multiple image modalities can be investigated.
- A major challenge for computer-aided diagnosis is the availability of large datasets; few-shot learning can be explored to overcome the limitations of small datasets.
- Implementation of deep learning models for multi-class classification along the lines of BI-RADS (Breast Imaging-Reporting and Data System) and TNM (Tumour, Node, Metastasis).

11 Conclusion

In this paper, we presented a survey of methods for detecting breast cancer using various image modalities. The traditional method of manually analysing breast images is time-consuming, tedious and error-prone [125]. With the advent of computer-aided diagnosis methods, the images can be analysed automatically. Deep learning techniques, which extract features automatically, can be used efficiently for breast cancer detection. Convolutional neural networks (CNNs) have been widely used for analysing breast images, and transfer learning is used very frequently. This paper discusses the datasets, image pre-processing methods, data augmentation techniques and deep learning models adopted by various authors. The performance metrics used to evaluate the models include accuracy, specificity, sensitivity and AUC. Although many effective methods have been proposed for detecting breast cancer, the disease still claims thousands of lives. A major pitfall of most deep learning techniques is the need for a large dataset and the difficulty of obtaining an appropriate one. Computational power and time should also be considered when analysing breast images, since very high computing power is often required. Hence, more efficient methods are needed that can detect breast cancer at an earlier stage with reduced cost and time.

Availability of data and material

The data that support the findings of this study are available from the first author upon reasonable request.

Code availability

The code is available from the first author upon reasonable request.

References

Boyle P, Levin B. World Cancer report 2008: IARC Press. International Agency for Research on Cancer IARC. 2018.

Moraga-Serrano PE. Global, regional, and national cancer incidence, mortality, years of life lost, years lived with disability, and disability-adjusted life-years for 29 cancer groups, 1990 to 2016: a systematic analysis for the global burden of disease study. JAMA Oncol. 2018;4:1553–68. https://doi.org/10.1001/jamaoncol.2018.2706.

DeSantis CE, Ma J, Gaudet MM, Newman LA, Miller KD, Goding Sauer A, Jemal A, Siegel RL. Breast cancer statistics, 2019. CA A Cancer J Clin. 2019;69:438–51. https://doi.org/10.3322/caac.21583.

Von Minckwitz G, Huang CS, Mano MS, Loibl S, Mamounas EP, Untch M, Wolmark N, Rastogi P, Schneeweiss A, Redondo A, Fischer HH. Trastuzumab emtansine for residual invasive HER2-positive breast cancer. N Engl J Med. 2019;380(7):617-28.

https://www.who.int/cancer/prevention/diagnosis-screening/breast-cancer/en/.

Sharma GN, Dave R, Sanadya J, Sharma P, Sharma K. Various types and management of breast cancer: an overview. J Adv Pharm Technol. 2010;1(2):109.

Chu KC, Smart CR, Tarone RE. Analysis of breast cancer mortality and stage distribution by age for the Health Insurance Plan clinical trial. JNCI: J Natl Cancer Inst. 1988;80(14):1125-32. https://doi.org/10.1093/jnci/80.14.1125.

Akram M, Iqbal M, Daniyal M, Khan AU. Awareness and current knowledge of breast cancer. Biol Res. 2017;50(1):1-23. https://doi.org/10.1186/s40659-017-0140-9.

Kim DH, Kim ST, Ro YM. Latent feature representation with 3-D multi-view deep convolutional neural network for bilateral analysis in digital breast tomosynthesis. In 2016 IEEE international conference on acoustics, speech and signal processing (ICASSP) 2016 (pp. 927-931). IEEE. https://doi.org/10.1109/ICASSP.2016.7471811.

Guo R, Lu G, Qin B, Fei B. Ultrasound imaging technologies for breast cancer detection and management: a review. Ultrasound Med Biol. 2018;44(1):37-70. https://doi.org/10.1016/j.ultrasmedbio.2017.09.012.

He L, Long LR, Antani S, Thoma GR. Histology image analysis for carcinoma detection and grading. Comput Methods Programs Biomed. 2012;107(3):538-56. https://doi.org/10.1016/j.cmpb.2011.12.007.

Khan S, Islam N, Jan Z, Din IU, Rodrigues JJ. A novel deep learning based framework for the detection and classification of breast cancer using transfer learning. Pattern Recognit Lett. 2019;125:1-6.

Rakhlin A, Shvets A, Iglovikov V, Kalinin AA. Deep convolutional neural networks for breast cancer histology image analysis. In International Conference Image Analysis and Recognition 2018 Jun 27 (pp. 737-744). Springer, Cham.

Mahmood T, Li J, Pei Y, Akhtar F, Imran A, Rehman KU. A brief survey on breast cancer diagnostic with deep learning schemes using multi-image modalities. IEEE Access. 2020;8:165779-165809.

Dankwa-Mullan I, Rivo M, Sepulveda M, Park Y, Snowdon J, Rhee K. Transforming diabetes care through artificial intelligence: The future is here. Popul Health Manag. 2019;22(3):229–242.

Veta M, Pluim JPW, van Diest PJ, Viergever MA. Breast cancer histopathology image analysis: a review. IEEE Trans Biomed Eng. 2014;61:1400–1411.

Dimitriou N, Arandjelović O, Caie PD. Deep learning for whole slide image analysis: an overview. Front Med. 2019 Nov 22;6:264.

Robertson S, Azizpour H, Smith K, Hartman J. Digital image analysis in breast pathology—from image processing techniques to artificial intelligence. Transl Res. 2018;194:19-35.

LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444.

Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, van der Laak JAWM, van Ginneken B, Sánchez CI. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42(9):60–88.

Duraisamy S, Emperumal S. Computer-aided mammogram diagnosis system using deep learning convolutional fully complex-valued relaxation neural network classifier. IET Comput Vis. 2017;11(8):656–62.

Bour A, Castillo-Olea C, Garcia-Zapirain B, Zahia S. Automatic colon polyp classification using convolutional neural network: a case study at Basque country. 2019 IEEE Int Symp Signal Process Inf Technol (ISSPIT). 2019;1-5. IEEE.

LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86(11):2278-324.

Zemmal N, Azizi N, Sellami M. CAD system for classification of mammographic abnormalities using transductive semi supervised learning algorithm and heterogeneous features. In: 2015 12th International Symposium on Programming and Systems (ISPS). 2015:1-9. IEEE.

Saraswathi D, Srinivasan E. A CAD system to analyse mammogram images using fully complex-valued relaxation neural network ensembled classifier. J Med Eng Technol. 2014;38(7):359–66.

Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2015:3431-3440.

Lewin JM, D'Orsi CJ, Hendrick RE, Moss LJ, Isaacs PK, Karellas A, Cutter GR. Clinical comparison of full-field digital mammography and screen-film mammography for detection of breast cancer. Am J Roentgenol. 2002;179(3):671-677.

Zhu C, Song F, Wang Y, Dong H, Guo Y, Liu J. Breast cancer histopathology image classification through assembling multiple compact CNNs. BMC Med Inform Decis Mak. 2019;19(1):1-7.

Elmore JG, Longton GM, Pepe MS, Carney PA, Nelson HD, Allison KH, Geller BM, Onega T, Tosteson ANA, Mercan E, Shapiro LG, Brunye TT, Morgan TR, Weaver DL. A randomized study comparing digital imaging to traditional glass slide microscopy for breast biopsy and cancer diagnosis. J Pathol Inform. 2017;8(1):1–12.

Li Y, Wu J, Wu Q. Classification of Breast Cancer Histology Images Using Multi-Size and Discriminative Patches Based on Deep Learning. IEEE Access. 2019;7:21400–8. https://doi.org/10.1109/ACCESS.2019.2898044.

Nahid AA, Ali FB, Kong Y. Histopathological breast-image classification with image enhancement by convolutional neural network. In 2017 20th Int Conf Comput Inf Technol (ICCIT). 2017 Dec 22 (pp. 1-6). IEEE.

Shibusawa M, Nakayama R, Okanami Y, Kashikura Y, Imai N, Nakamura T, Kimura H, Yamashita M, Hanamura N, Ogawa T. The usefulness of a computer-aided diagnosis scheme for improving the performance of clinicians to diagnose non-mass lesions on breast ultrasonographic images. J Med Ultrason. 2016;43(3):387–94.

Gour M, Jain S, Sunil Kumar T. Residual learning based CNN for breast cancer histopathological image classification. Int J Imaging Syst Technol. 2020;30(3):621-35.

Drukker K, Giger ML, Horsch K, Kupinski MA, Vyborny CJ, Mendelson EB. Computerized lesion detection on breast ultrasound. Med Phys. 2002;29(7):1438–46.

Huang YL, Chen DR, Liu YK. Breast cancer diagnosis using image retrieval for different ultrasonic systems. Int Conf Image Process. 2004;5:2598–2960.

Morrow M, Waters J, Morris E. MRI for breast cancer screening, diagnosis, and treatment. The Lancet. 2011;378(9805):1804–11.

Wang Z, et al. Breast cancer detection using extreme learning machine based on feature fusion with CNN deep features. IEEE Access. 2019;7:105146–58.

Perre AC, Alexandre LA, Freire LC. Lesion classification in mammograms using convolutional neural networks and transfer learning. Comput Methods Biomech Biomed Eng Imaging Vis. 2018. https://doi.org/10.1080/21681163.2018.1498392.

Ragab DA, Sharkas M, Marshall S, Ren J. Breast cancer detection using deep convolutional neural networks and support vector machines. PeerJ. 2019;7: e6201. https://doi.org/10.7717/peerj.6201.

Yap MH, et al. Automated breast ultrasound lesions detection using convolutional neural networks. IEEE J Biomed Health Inform. 2018;22(4):1218–26. https://doi.org/10.1109/JBHI.2017.2731873.

Sun W, Tseng TL, Zhang J, Qian W. Enhancing deep convolutional neural network scheme for breast cancer diagnosis with unlabeled data. Comput Med Imaging Graph. 2017;57:4-9.

Ahmed L, Iqbal MM, Aldabbas H, et al. Images data practices for semantic segmentation of breast cancer using deep neural network. J Ambient Intell Human Comput. 2020. https://doi.org/10.1007/s12652-020-01680-1.

Zhang YD, Satapathy SC, Guttery DS, Górriz JM, Wang SH. Improved breast cancer classification through combining graph convolutional network and convolutional neural network. Inf Process Manag. 2021;58(2):102439.

Suh YJ, Jung J, Cho BJ. Automated breast cancer detection in digital mammograms of various densities via deep learning. J Pers Med. 2020;10(4):211.

Tsai KJ, et al. A high-performance deep neural network model for BI-RADS classification of screening mammography. Sensors. 2022;22(3):1160.

Alruwaili M, Gouda W. Automated Breast Cancer Detection Models Based on Transfer Learning. Sensors. 2022;22(3):876.

Li X, Radulovic M, Kanjer K, Plataniotis KN. Discriminative pattern mining for breast cancer histopathology image classification via fully convolutional autoencoder. IEEE Access. 2019;7:36433–36445.

Brancati N, De Pietro G, Frucci M, Riccio D. A deep learning approach for breast invasive ductal carcinoma detection and lymphoma multi-classification in histological images. IEEE Access. 2019;7:44709–20. https://doi.org/10.1109/ACCESS.2019.2908724.

Gecer B, Aksoy S, Mercan E, Shapiro LG, Weaver DL, Elmore JG. Detection and classification of cancer in whole slide breast histopathology images using deep convolutional networks. Pattern Recognit. 2018;84:345-56.

Ahmad HM, Ghuffar S, Khurshid K. Classification of breast cancer histology images using transfer learning. 2019. https://doi.org/10.1109/IBCAST.2019.8667221.

Bardou D, Zhang K, Ahmad SM. Classification of breast cancer based on histology images using convolutional neural networks. IEEE Access. 2018;6:24680-93. https://doi.org/10.1109/ACCESS.2018.2831280.

Araújo T, et al. Classification of breast cancer histology images using convolutional neural networks. PLoS One. 2017;12(6).

Spanhol FA, Oliveira LS, Cavalin PR, Petitjean C, Heutte L. Deep features for breast cancer histopathological image classification. In: 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC). 2017 Oct 5 (pp. 1868-1873). IEEE. https://doi.org/10.1109/SMC.2017.8122889.

Spanhol FA, Oliveira LS, Petitjean C, Heutte L. Breast cancer histopathological image classification using convolutional neural networks. In: 2016 International Joint Conference on Neural Networks (IJCNN). 2016 Jul 24 (pp. 2560-2567). IEEE.

Abdel-Zaher AM, Eldeib AM. Breast cancer classification using deep belief networks. Expert Syst Appl. 2016;46:139–44.

Cireşan DC, Giusti A, Gambardella LM, Schmidhuber J. Mitosis detection in breast cancer histology images with deep neural networks. In International conference on medical image computing and computer-assisted intervention 2013: 411-418. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-40763-5_51.

Celik Y, Talo M, Yildirim O, Karabatak M, Acharya UR. Automated invasive ductal carcinoma detection based using deep transfer learning with whole-slide images. Pattern Recogn Lett. 2020;133:232–9.

Zerouaoui H, Idri A. Deep hybrid architectures for binary classification of medical breast cancer images. Biomed Signal Process Control. 2022;71: 103226.

Phan NN, et al. Predicting breast cancer gene expression signature by applying deep convolutional neural networks from unannotated pathological images. Front Oncol. 2021;11:769447.

Thapa A, et al. Deep learning for breast cancer classification: Enhanced tangent function. Comput Intell. 2021.

Ayana G, Park J, Jeong JW, Choe SW. A novel multistage transfer learning for ultrasound breast cancer image classification. Diagnostics. 2022;12(1):135.

Yurttakal AH, Erbay H, İkizceli T, Karaçavuş S. Detection of breast cancer via deep convolution neural networks using MRI images. Multimed Tools Appl. 2020;79(21):15555-73.

Zheng J, Lin D, Gao Z, Wang S, He M, Fan J. Deep learning assisted efficient AdaBoost algorithm for breast cancer detection and early diagnosis. IEEE Access. 2020;8:96946–54.

Sahran S, Qasem A, Omar K, Albashih D, Adam A, Abdullah SN, Abdullah A, Hussain RI, Ismail F, Abdullah N, Pauzi SH. Machine learning methods for breast cancer diagnostic. In: Bulut N, editor. Breast Cancer Surg. 2018. https://doi.org/10.5772/intechopen.79446.

Abbas S, Jalil Z, Javed AR, Batool I, Khan MZ, Noorwali A, Gadekallu TR, Akbar A. BCD-WERT: a novel approach for breast cancer detection using whale optimization based efficient features and extremely randomized tree algorithm. PeerJ Comput Sci. 2021;7:e390. https://doi.org/10.7717/peerj-cs.390.

Safdar S, Rizwan M, Gadekallu TR, Javed AR, Rahmani MK, Jawad K, Bhatia S. Bio-imaging-based machine learning algorithm for breast cancer detection. Diagnostics. 2022;12(5):1134. https://doi.org/10.3390/diagnostics12051134.

Zaharchuk G, et al. Deep Learning in Neuroradiology. AJNR Am J Neuroradiol. 2018;39(10):1776–84. https://doi.org/10.3174/ajnr.A5543.

Jimenez-del-Toro O, Otálora S, Andersson M, Eurén K, Hedlund M, Rousson M, Müller H, Atzori M. Analysis of histopathology images: from traditional machine learning to deep learning. In: Biomedical Texture Analysis. Chapter 10. Elsevier; 2017.

Ciresan D, Giusti A, Gambardella L, Schmidhuber J. Deep neural networks segment neuronal membranes in electron microscopy images. In: Bartlett PL, Pereira FCN, Burges CJC, Bottou L, Weinberger KQ, editors. Proceedings of the 26th Annual Conference on Neural Information Processing Systems (NIPS). 2012:2852–2860.

Xing F, Xie Y, Yang L. An automatic learning-based framework for robust nucleus segmentation. IEEE Trans Med Imaging. 2016;35(2):550-66.

Prasoon A, Petersen K, Igel C, Lauze F, Dam E, Nielsen M. Deep feature learning for knee cartilage segmentation using a triplanar convolutional neural network. In: International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). 2013:246-253. Springer, Berlin, Heidelberg.

Cruz-Roa AA, Arevalo Ovalle JE, Madabhushi A, González Osorio FA. A deep learning architecture for image representation, visual interpretability and automated basal-cell carcinoma cancer detection. In: International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI). 2013:403-410. Springer, Berlin, Heidelberg.

Tiwari M, Bharuka R, Shah P, Lokare R. Breast cancer prediction using deep learning and machine learning techniques. 2020. Available at SSRN: https://ssrn.com/abstract=3558786 or https://doi.org/10.2139/ssrn.3558786.

Spanhol F, Oliveira LS, Petitjean C, Heutte L. A dataset for breast cancer histopathological image classification. IEEE Trans Biomed Eng. 2016;63(7):1455–62.

Breast cancer imageset. [Online]. Available: ftp://ftp.cs.technion.ac.il/pub/projects/medicimage/breastcancerdata/.

Pêgo A, Aguiar P. Bioimaging. [Online]. 2015. Available: http://www.bioimaging2015.ineb.up.pt/dataset.html.

Li X, Plataniotis KN. A complete color normalization approach to histo-pathology images using color cues computed from saturation weighted statistics. IEEE Trans Biomed Eng. 2015;62(7):1862–73.

Elmore JG, Longton GM, Carney PA, Geller BM, Onega T, Tosteson ANA, Nelson HD, Pepe MS, Allison KH, Schnitt SJ, O’Malley FP, Weaver DL. Diagnostic concordance among pathologists interpreting breast biopsy specimens. JAMA. 2015;313(11):1122–32.

Oster NV, Carney PA, Allison KH, Weaver DL, Reisch LM, Longton G, Onega T, Pepe M, Geller BM, Nelson HD, Ross TR. Development of a diagnostic test set to assess agreement in breast pathology: practical application of the Guidelines for Reporting Reliability and Agreement Studies (GRRAS). BMC Women's Health. 2013;13(1):1-8.

Mi W, Li J, Guo Y, Ren X, Liang Z, Zhang T, Zou H. Deep learning-based multi-class classification of breast digital pathology images. Cancer Manag Res. 2021;13:4605–17. https://doi.org/10.2147/CMAR.S312608. PMID: 34140807; PMCID: PMC8203273.

Vaka AR, Soni B, Reddy S. Breast cancer detection by leveraging machine learning. ICT Express. 2020;6(4):320-4. https://doi.org/10.1016/j.icte.2020.04.009.

Frazer HM, Qin AK, Pan H, Brotchie P. Evaluation of deep learning‐based artificial intelligence techniques for breast cancer detection on mammograms: Results from a retrospective study using a BreastScreen Victoria dataset. J Med Imaging Radiation Oncol. 2021;65(5):529-37.

Van der Walt S, Schönberger JL, Nunez-Iglesias J, Boulogne F, Warner JD, Yager N, Gouillart E, Yu T. scikit-image: image processing in Python. PeerJ. 2014;2:e453.

Macenko M, Niethammer M, Marron JS, Borland D, Woosley JT, Guan X, et al. A method for normalizing histology slides for quantitative analysis. In: Proceedings of the 2009 IEEE International Symposium on Biomedical Imaging: From Nano to Macro (ISBI 2009). Boston, Massachusetts. 2009:1107–1110.

Pizer SM, Johnston RE, Ericksen JP, Yankaskas BC, Muller KE. Contrast-limited adaptive histogram equalization: speed and effectiveness. In: Proceedings of the First Conference on Visualization in Biomedical Computing. Atlanta, Georgia. 1990. p. 337.

Pizer SM, Amburn EP, Austin JD, Cromartie R, Geselowitz A, Greer T, ter Haar Romeny B, Zimmerman JB, Zuiderveld K. Adaptive histogram equalization and its variations. Comput Vis Graph Image Process. 1987;39:355–68.

Gurcan MN, Boucheron LE, Can A, Madabhushi A, Rajpoot NM, Yener B. Histopathological image analysis: A Review. IEEE Rev Biomed Eng. 2009;2:147–71. https://doi.org/10.1109/RBME.2009.2034865.

Reinhard E, Ashikhmin M, Gooch B, Shirley P. Color transfer between images. IEEE Comput Graph Appl. 2001;21(5):34–41.

Ruifrok AC, Johnston DA. Quantification of histochemical staining by color deconvolution. Anal Quant Cytol Histol. 2001;23(4):291–9.