Abstract

Brain-computer interface (BCI) technologies have emerged as a game changer, altering how humans interact with computers and opening up new avenues for understanding and utilizing the power of the human brain. The goal of this study is to assess recent breakthroughs in BCI technologies and their future prospects. The paper starts with an outline of the fundamental concepts and principles that underpin BCI technologies. It examines the main forms of BCIs, including invasive, partially invasive, and non-invasive interfaces, emphasizing their advantages and disadvantages. The progress of BCI hardware and signal processing techniques is investigated, with a focus on the shift from bulky and invasive systems to more portable and user-friendly options. Following that, the article delves into the important advances in BCI applications across several fields. It investigates the use of BCIs in healthcare, particularly in neurorehabilitation, assistive technology, and cognitive enhancement. The potential of BCIs for boosting human capacities such as communication, motor control, and sensory perception is also examined. Furthermore, the article investigates developing BCI applications in gaming, entertainment, and virtual reality, demonstrating how BCI technologies are growing beyond medical and therapeutic settings. The study also sheds light on the problems and limits that prevent BCIs from being widely adopted. Ethical concerns about privacy, data security, and informed consent are addressed, highlighting the importance of strong legislative frameworks to enable responsible and ethical usage of BCI technologies. Furthermore, the study delves into technological issues such as increasing signal resolution and precision, increasing system reliability, and enabling smooth integration with existing technology. Finally, this paper gives an in-depth examination of the advances and future possibilities of BCI technologies. It emphasizes the transformative influence of BCIs on human-computer interaction and their potential to alter healthcare, gaming, and other industries. This research intends to stimulate further innovation and progress in the field of brain-computer interfaces by addressing problems and imagining future possibilities.

Introduction

Communicating is a vital component of daily life, and it is achieved through a variety of methods such as conversing, writing, and using computer interfaces, which are becoming an increasingly important means of interacting with others through channels such as e-mail and text messaging.

However, conditions such as stroke, amyotrophic lateral sclerosis (ALS), and other injuries or neurologic disorders can cause paralysis by damaging the neural pathways that connect the brain to the rest of the body, frequently limiting a person’s ability to relate to and communicate with other people.

Brain-computer interfaces (BCIs), by translating cerebral activity into control signals for assistive communication devices, have the potential to restore communication for people with tetraplegia and anarthria.

Humans can now use electrical signals generated by brain activity to interact with, influence, or change their surroundings. Individuals who are unable to speak or use their limbs may be able to communicate or operate assistive devices for walking and manipulating objects thanks to the emerging field of BCI technology. The public is very interested in brain-computer interface research. News articles in the lay media demonstrate intense curiosity and interest in a field that, hopefully, will one day soon dramatically improve the lives of many disabled people affected by a variety of disease processes (Jerry et al. 2012).

With the rapid advancement of technology, the gap between humans and machines has begun to close. Our fantastic science fiction stories about “mind control” have gradually become a reality thanks to machines. These new approaches’ frontiers include BCIs and artificial intelligence (AI). Typically, experimental paradigms for BCIs and AI were developed and deployed independently of one another. However, scientists now prefer to combine BCIs and AI, which makes it possible to efficiently use the brain’s electric signals to maneuver external devices (Bell et al. 2008).

AI, which can advance the analysis and decoding of neural activity, has turbocharged the field of BCIs. Over the past decade, a wide range of BCI applications with AI assistance have emerged (Xiayin et al. 2020).

BCIs, which represent technologies designed to communicate with the central nervous system and neural sensory organs, can provide a muscle-independent communication channel for people with neurodegenerative diseases, such as amyotrophic lateral sclerosis, or acquired brain injuries (Kübler et al. 1999).

Fortunately, recent advances in AI methodologies have made great strides, verifying that AI outperforms humans in decoding and encoding neural signals (Xiayin et al. 2020). This gives AI a huge possibility to be an excellent assistant in analyzing signals from the brain before they reach the prosthesis (Patel et al. 2009). When AI works within BCIs, internal parameters are provided to the algorithms constantly, such as pulse durations and amplitudes, stimulation frequencies, energy consumption by the device, stimulation or recording densities, and electrical properties of the neural tissues (Silva 2018).

If the combination of brain-computer interfaces and artificial intelligence succeeds, it will be of great benefit to people with neurological disorders and a major advance for the health sector. There are, however, certain challenges that may be encountered in achieving this feat. Some of these challenges are patients’ inability to use the technology effectively and, in some cases, its invasiveness. This publication addresses some key advantages that BCIs hold as well as challenges that may impede their progress.

Also, even though BCIs are evolving to address these neurological conditions, they still have a long way to go before they can reliably help the patients who use them.

Methods

Literature search

We conducted a systematic literature search using multiple electronic databases, namely PubMed, IEEE Xplore, ScienceDirect, and Scopus, to identify studies related to BCI technologies. The search was carried out in June 2022 and included all studies published since the inception of BCI technology to present time. We used a combination of keywords and Boolean operators such as “brain-computer interface,” “BCI,” “brain-machine interface,” “neural prosthetics,” “brain signal processing,” “electroencephalography,” and “brain imaging.” The search was limited to English-language studies published in peer-reviewed journals and conference proceedings.
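As an illustration of how the listed keywords could be combined into a single Boolean search string, the sketch below joins them with `OR`. The paper does not state which operators were applied between which terms, so the `OR` default, the quoting of every term, and the helper name `build_query` are assumptions made only for this example.

```python
# Keywords exactly as listed in the search description above.
KEYWORDS = [
    "brain-computer interface",
    "BCI",
    "brain-machine interface",
    "neural prosthetics",
    "brain signal processing",
    "electroencephalography",
    "brain imaging",
]


def build_query(keywords, operator="OR"):
    """Quote each keyword and join them with one Boolean operator (assumed: OR)."""
    quoted = ['"{}"'.format(keyword) for keyword in keywords]
    separator = " {} ".format(operator)
    return "(" + separator.join(quoted) + ")"


query = build_query(KEYWORDS)
```

Each database has its own field tags and syntax, so a string like this would normally be adapted per database rather than reused verbatim.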

Selection criteria

We included studies that investigated the advancements and future prospects of BCI technologies in various applications, as well as those reporting on the development of new algorithms, novel techniques, or improved hardware for BCI. We excluded studies that focused only on basic neuroscience research or animal studies that were not related to BCI applications. Review articles, editorials, and other non-empirical studies were also excluded.

Data extraction and analysis

Two authors independently screened the titles, abstracts, and full texts of the identified studies to determine their eligibility for inclusion in this review. Any discrepancies were resolved through consensus discussion. We used a standardized data extraction form to collect information on the study design, participants, sample size, BCI technology used, application area, and main findings.

Data were analyzed using a narrative synthesis approach, where we summarized, compared, and contrasted the findings of the included studies. We identified key themes and sub-themes from the studies and organized them in a coherent and meaningful way. We also provided a critical evaluation of the quality of the included studies and the limitations of the BCI technologies reviewed.

Results

Brain-computer interface

Communication has always been the most important tool for interacting with our environment and the people in it. The pursuit of interaction between a computer and a person has long piqued the interest of researchers and scientists. BCIs address the problem of communication between the human brain and its surrounding environment. These intelligent systems can decipher brain signals using five consecutive stages: signal acquisition, pre-processing, feature extraction, classification, and control interface, as shown in Fig. 1 (Nicolas-Alonso and Gomez-Gil 2012). According to Mridha et al. (2021), a BCI system uses the user’s brain activity signals as a medium for communication between the person and the computer, translating them into the required output, and it enables users to operate, via brain activity, external devices that are not controlled by peripheral nerves or muscles. Furthermore, brain-machine interfaces (BMIs) can be classified as motor, sensory, or sensorimotor, depending on which part of the brain they tap into, or as invasive or non-invasive, depending on whether and where they are implanted (Salahuddin and Gao 2021). Today, an average computer processor can perform up to 1.8 billion calculations per second (cps) (Moravec 1998), while the human brain, at an estimated 1000 trillion cps (Mead and Kurzweil 2006), exceeds this number by far. Salahuddin and Gao (2021) note that the interfaces currently used to connect to the digital world, such as typing or voice commands, have very low bandwidth and throughput, which hinders the market disruption of commercial BMI products. Brain-computer interfaces have recently become a fascinating topic of scientific investigation and a viable means of establishing a direct link between the brain and technology. This notion has been utilized in several research and development projects, and it has also become one of the fastest growing sectors of scientific inquiry.
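The five stages named above can be sketched as a chain of small functions. This is a minimal toy illustration, not the method of any cited study: the synthetic single-channel signal, the 128 Hz sampling rate, the 10 Hz and 20 Hz target frequencies, the class labels, and the command names are all assumptions chosen so that the example stays short and uses only the Python standard library.

```python
import math

FS = 128  # assumed sampling rate in Hz


def acquire(freq_hz, seconds=1.0, offset=5.0):
    """Stage 1, signal acquisition: a synthetic one-channel trace with a DC offset."""
    n = int(FS * seconds)
    return [offset + math.sin(2 * math.pi * freq_hz * t / FS) for t in range(n)]


def preprocess(signal):
    """Stage 2, pre-processing: remove the DC offset by subtracting the mean."""
    mean = sum(signal) / len(signal)
    return [x - mean for x in signal]


def band_power(signal, freq_hz):
    """Power at a single frequency, computed as one bin of a discrete Fourier transform."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq_hz * t / FS) for t, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * t / FS) for t, x in enumerate(signal))
    return (re * re + im * im) / (n * n)


def extract_features(signal):
    """Stage 3, feature extraction: power at the two assumed target frequencies."""
    return {"p10": band_power(signal, 10), "p20": band_power(signal, 20)}


def classify(features):
    """Stage 4, classification: choose the class whose frequency carries more power."""
    return "class_a" if features["p10"] > features["p20"] else "class_b"


# Hypothetical mapping from class labels to device commands.
COMMANDS = {"class_a": "move_left", "class_b": "move_right"}


def control_interface(label):
    """Stage 5, control interface: translate the label into a device command."""
    return COMMANDS[label]


def run_pipeline(freq_hz):
    """Run all five stages in order on one synthetic trial."""
    raw = acquire(freq_hz)
    clean = preprocess(raw)
    features = extract_features(clean)
    return control_interface(classify(features))
```

Calling `run_pipeline(10)` sends a 10 Hz trial through all five stages and yields the command mapped to `class_a`. A real system would replace each stage with far more elaborate processing, but the data flow between stages has the same shape.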

Brain-computer interface in use

Stroke rehabilitation is one of the most important applications of BCI. According to Orban et al. (2022), stroke has become one of the main causes of death. According to global disease research records, more than 10 million patients worldwide suffer from stroke and up to 116 million people are left with disabilities. This disability affects the daily lives of patients and their families (Lozada-Martínez et al. 2021). Stroke damages the central nervous system, and loss of limb movement is one of its most frequent consequences. Rehabilitation training is therefore essential for stroke survivors. The survival rate of stroke patients has noticeably increased in recent years. BCI rehabilitation devices are becoming more widely available, but there is still a significant need for cutting-edge rehabilitation techniques to shorten the recovery time and enhance motor recovery in post-stroke patients. Brain-computer interfaces have been favored over the conventional neuromuscular pathways because they enable stroke patients to communicate with the surrounding environment using their brain signals, overcoming the movement disability of the limbs (He et al. 2020). This advantage has attracted growing interest in the field of rehabilitation. Additionally, the ability to decode the intentions of patients diagnosed with motor disability has guided the use of external rehabilitative or assistive devices, and it has demonstrated the ability of BCI systems to exploit neural plasticity through neurofeedback (Mrachacz-Kersting et al. 2016).

Evolution of brain-computer interfaces: milestones

BCIs are technological systems that facilitate direct communication between the human brain and external devices. BCIs have evolved significantly over the years, with advancements in technology and our understanding of the brain (Kawala-Sterniuk et al. 2021). This section will provide an overview of the evolution of BCIs, including their history, major milestones, and current state of research.

History of BCIs

It is important to look at the relatively recent history and initial development of BCIs to better understand the need for research and development in this area. The development of BCIs did not gain momentum until the twentieth century. In the 1920s, German neuroscientist Hans Berger invented the electroencephalogram (EEG), which allowed for the recording of electrical activity in the brain (Tudor et al. 2005). This laid the foundation for the development of BCIs that could interpret brain signals. In 1956, Lilly implanted a multielectrode array in a monkey’s cortical area for electrical stimulation (Salahuddin and Gao 2021). The term “brain-computer interface” was coined by Jacques Vidal in 1973, and he successfully converted brain signals into computer control signals. In 1976, Wyrwicka and Sterman recorded brain signals in cats, which they then translated into sensory feedback for the same animals to increase the generation of those brain signals (sensorimotor rhythms). In the 1990s, Nicolelis and Chapin achieved one-dimensional neural control of robotic limbs using laboratory rats (Chapin et al. 1999).

The same team later worked on robotic arm control and created BMIs for bimanual movement and locomotion patterns. More recently, Donoghue et al. (2007) implanted invasive multielectrode arrays in humans to demonstrate BMI control of a computer cursor (Hochberg et al. 2006) and a robotic manipulator (Hochberg et al. 2012).

Major milestones in BCI evolution

The evolution of BCIs can be divided into several key milestones that have shaped the field:

Early BCI developments

In the 1960s and 1970s, researchers made progress in developing rudimentary BCIs that could detect brain signals and control simple tasks, such as moving a cursor on a computer screen (Chengyu and Weijie 2020). These early BCIs relied on invasive techniques, such as implanted electrodes, and were limited in their capabilities.

Non-invasive BCIs

In the 1980s and 1990s, researchers began to explore non-invasive methods for BCI development, such as using EEG to detect brain signals from the scalp without the need for invasive implants (Kawala-Sterniuk et al. 2021). This opened up the possibility of developing BCIs that were more user-friendly and had broader applications.

Motor restoration

In the 2000s, significant progress was made in using BCIs to restore motor function in individuals with paralysis or limb loss. For example, researchers developed BCIs that allowed paralyzed patients to control robotic arms and perform basic tasks, such as grasping objects (Meng et al. 2016). This represented a major breakthrough in BCI research and demonstrated the potential of BCIs for enhancing human capabilities.

Cognitive enhancement

In recent years, there has been increasing interest in using BCIs for cognitive enhancement, such as improving memory or cognitive processing speed. Researchers have developed BCIs that can stimulate specific areas of the brain to enhance cognitive function, although these technologies are still in the early stages of development (Daly and Huggins 2015).

Summary of major milestones

| Years | Milestone in BCI |
|---|---|
| 1960s and 1970s | Development of invasive methods for BCIs that could detect brain signals and control simple tasks, e.g., moving a mouse cursor. |
| 1980s and 1990s | Development of non-invasive techniques for BCI such as EEG. |
| 2000s | Development of BCI used for restoring motor function of paralyzed or physically challenged individuals, e.g., using BCIs to control robotic arms. |
| Recent years | Development of BCIs for cognitive enhancement such as improving memory or cognitive processing speed. |

Current state of BCI research

Today, BCIs are a rapidly growing field of research with diverse applications, ranging from medical and clinical uses to consumer applications. Researchers continue to make advancements in BCI technology, with a focus on improving their usability, accuracy, and safety. Some of the current areas of research in BCI development include the following:

Neural implants: Researchers are exploring new materials and designs for neural implants to improve their long-term stability and biocompatibility. Advances in nanotechnology and materials science are enabling the development of smaller and more reliable implants that can interface with the brain for extended periods of time (Pampaloni et al. 2018).

Non-invasive BCIs: Non-invasive BCIs, such as those that use EEG, continue to be a popular area of research. Efforts are being made to improve the accuracy and reliability of non-invasive BCIs, as well as develop new signal processing techniques to extract more information from brain signals (Salahuddin and Gao 2021).

Machine learning and artificial intelligence: Machine learning and artificial intelligence techniques are being integrated into BCIs to improve their performance and enable more complex tasks (Zhang et al. 2020). For example, researchers are using machine learning algorithms to decode brain signals and translate them into handwritten text. The next section of this article focuses on the implementation of artificial intelligence in BCIs.

Artificial intelligence in brain-computer interfaces

Introduction

The goal of AI is to replicate human cognitive functions. It is ushering in a paradigm shift in healthcare, fueled by the increased availability of healthcare data and the rapid advancement of analytics tools. BCIs and AI are at the forefront of these emerging techniques. The use of AI in BCIs has gained significant attention in recent years due to its potential to improve the accuracy and reliability of BCI systems. Typically, experimental paradigms for BCIs and AI were conceived and implemented independently of one another. According to Bell et al. (2008), scientists now prefer to combine BCIs and AI, which makes it possible to efficiently use the brain’s electric signals to maneuver external devices. For severely disabled people, the development of BCIs could be the most important technological breakthrough in decades (Lee et al. 2019). BCIs, which represent technologies designed to communicate with the central nervous system and neural sensory organs, can provide a muscle-independent communication channel for people with neurodegenerative diseases, such as amyotrophic lateral sclerosis, or acquired brain injuries (Kübler et al. 1999). The history of BCIs is intimately related to the effort of developing new electrophysiological techniques to record extracellular electrical activity, which is generated by differences in electric potential carried by ions across the membranes of each neuron (Birbaumer et al. 2006). Fortunately, recent advances in AI methodologies have made great strides, verifying that AI outperforms humans in decoding and encoding neural signals (Li and Yan 2014). This gives AI a fantastic possibility to be an ideal assistant in processing brain signals before they reach the prosthesis.

According to Patel et al. (2009), AI is a set of general approaches that use a computer to model intelligent behavior with minimal human intervention, eventually matching and even surpassing human performance in task-specific applications. When AI works within BCIs, internal parameters are provided to the algorithms constantly, such as pulse durations and amplitudes, stimulation frequencies, energy consumption by the device, stimulation or recording densities, and electrical properties of the neural tissue (Silva 2018). After receiving the information, AI algorithms can identify useful parts and logic in the data and then simultaneously produce the desired functional outcomes (Li et al. 2015).

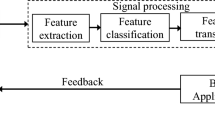

According to Zhang et al. (2020), at the dawn of technological transformation, a tendency to combine BCIs and AI has also attracted attention. Here, we review current applications with a focus on the state of BCIs, the role that AI plays, and future directions of BCIs based on AI (Fig. 2).

Fig. 2 Schematic description of BCIs based on AI. The circuit can be described as follows. First, micro-electrodes detect signals from the human cerebral cortex and send them to the AI. Second, the AI takes charge of signal processing, which includes feature extraction and classification. Third, the processed signals are output to achieve the abovementioned functions. Finally, feedback is sent to the human cortex to adjust the function (Zhang et al. 2020).

History of AI in neurology (brain network)

The history of AI dates back to the 1950s with the introduction of the perceptron model (Minsky et al. 2017); however, it was not until the 1990s that machine-learning techniques became more widely utilized (Crevier 1993). The development of machine-learning tools, including support vector machines and recurrent neural networks (Sarle 1994; Cortes and Vapnik 1995; Kohavi 1995), allowed scientists to leverage the computational power available in this era to build statistical models robust to data variation, and to make new inferences about real-world problems (Obermeyer and Emanuel 2016). However, arguably, the biggest advances in AI to date have come in the last decade, as massive-scale data and hardware suitable to process these data have become available, and sophisticated deep-learning methods (which aim to imitate the working of the human brain in processing data) became computationally feasible (Ngiam et al. 2011; LeCun et al. 2015). Deep learning is now widely regarded as the foundation of contemporary AI (Sejnowski 2020).

There are preliminary examples of the value of AI in neurology, for example in detecting structural brain lesions on MRI (Brosch et al. 2014; Korfiatis et al. 2016). A common limitation of clinical AI studies is the amount of available data with high-quality clinical outcome labels, rather than the availability of robust AI algorithms and computational resources.

Applications of AI in BCIs

The implementation of AI in BCIs has numerous applications, including medical, communication, and control systems. In medical applications, AI-BCI systems have been used for the treatment of neurological disorders such as epilepsy, Parkinson’s disease, and stroke. For instance, AI-based BCI systems have been developed for detecting epileptic seizures and providing timely intervention to prevent or reduce the severity of the seizure (Akkus et al. 2017). Additionally, AI-based BCI systems have been developed for the diagnosis and treatment of Parkinson’s disease, which affects the nervous system and causes tremors and difficulty in movement. Such systems use AI algorithms to analyze brain signals and provide feedback to the patient (Zhao et al. 2017).

In communication applications, AI-BCI systems have been developed for individuals with speech and motor impairments, such as those with amyotrophic lateral sclerosis (ALS) or locked-in syndrome. Such systems use AI algorithms to decode the user’s brain signals and translate them into text or speech output.

In control systems, AI-BCI systems have been developed for controlling external devices such as prosthetic limbs or robots. For instance, a BCI system developed by researchers at the University of Pittsburgh enabled an individual with tetraplegia to control a robotic arm using brain signals (Collinger et al. 2013). Similarly, AI-based BCI systems have been developed for controlling prosthetic limbs in amputees, enabling them to perform complex movements such as grasping objects or writing (Li et al. 2017).

Advantages of AI in BCIs

The implementation of AI in BCIs offers several advantages over traditional BCI systems. One of the main advantages is increased accuracy and reliability. AI algorithms can analyze large amounts of brain data and identify patterns that may not be visible to the human eye. This enables AI-BCI systems to detect and interpret brain signals more accurately, resulting in higher performance and fewer errors.

Another advantage of AI in BCIs is adaptability. Traditional BCI systems require calibration before each use, which can be time-consuming and inconvenient for the user. AI algorithms, on the other hand, can adapt to changes in the user’s brain signals over time, reducing the need for frequent calibration. This makes AI-BCI systems more user-friendly and practical for long-term use.
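The idea of a decoder that follows slow changes in the user’s signals, instead of being recalibrated before each session, can be illustrated with a toy example. The sketch below is not taken from any cited study: it assumes a single scalar feature per trial, two hypothetical classes (`rest` and `move`), a linear drift of the feature over time, and a nearest-centroid classifier whose winning centroid is nudged toward each new sample by an exponential moving average. Setting `rate=0.0` turns the same class into a static, non-adapting decoder for comparison.

```python
class AdaptiveCentroidClassifier:
    """Nearest-centroid decoder whose centroids track slow signal drift."""

    def __init__(self, centroids, rate=0.2):
        self.centroids = dict(centroids)  # label -> current feature mean
        self.rate = rate                  # 0.0 disables adaptation

    def predict(self, x):
        """Return the label of the centroid closest to feature value x."""
        return min(self.centroids, key=lambda label: abs(x - self.centroids[label]))

    def update(self, x):
        """Predict, then move the winning centroid toward x (no true labels needed)."""
        label = self.predict(x)
        centroid = self.centroids[label]
        self.centroids[label] = centroid + self.rate * (x - centroid)
        return label


def accuracy_under_drift(classifier, trials=100, drift_per_trial=0.02):
    """Fraction of correct labels while both class features drift upward."""
    base = {"rest": 0.0, "move": 1.0}  # assumed initial feature value per class
    correct = 0
    for k in range(trials):
        truth = "rest" if k % 2 == 0 else "move"
        x = base[truth] + drift_per_trial * k
        if classifier.update(x) == truth:
            correct += 1
    return correct / trials


start = {"rest": 0.0, "move": 1.0}
static_acc = accuracy_under_drift(AdaptiveCentroidClassifier(start, rate=0.0))
adaptive_acc = accuracy_under_drift(AdaptiveCentroidClassifier(start, rate=0.2))
```

In this simulation the static decoder starts mislabeling `rest` trials once the drift carries them past its fixed decision boundary, while the adaptive decoder keeps up. One caveat of this unsupervised scheme is that a wrong prediction also moves the wrong centroid, so drift that is fast relative to `rate` can destabilize it.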

Furthermore, AI in BCIs can improve the speed of information processing. Traditional BCI systems may require several seconds or even minutes to process and interpret brain signals, which can be too slow for real-time applications. AI algorithms, however, can process and analyze brain signals in real-time, enabling faster and more efficient communication between the brain and external devices.

Challenges of AI in BCIs

Despite its potential benefits, the implementation of AI in BCIs also presents several challenges. One of the main challenges is the need for large amounts of training data. AI algorithms require large datasets to train and improve their performance. In the case of BCIs, this means collecting large amounts of brain data from human subjects, which can be difficult and time-consuming. Additionally, the quality and consistency of the data can affect the performance of the AI algorithm, as inaccurate or inconsistent data can lead to incorrect predictions and unreliable results.

Another challenge of AI in BCIs is the issue of interpretability. AI algorithms can be difficult to interpret, as they often rely on complex mathematical models that can be difficult to understand or explain. This can be a problem in applications such as medical diagnosis or decision-making, where it is important to understand how the AI algorithm arrived at its conclusions. Researchers are working on developing methods to improve the interpretability of AI algorithms, such as visualizing the decision-making process or using explainable AI models (Rudin 2019).

Another challenge is the issue of privacy and security. BCIs involve the collection of sensitive personal information, such as brain activity data, which can be vulnerable to data breaches or unauthorized access. Additionally, the use of AI algorithms can introduce new security risks, such as adversarial attacks or data poisoning, where an attacker can manipulate the data to produce incorrect predictions or results (Hung et al. 2020). Researchers are developing methods to improve the security of AI-BCI systems, such as using encryption or authentication methods to protect the data.
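As one concrete instance of the authentication methods mentioned above, a recorded data packet can carry a keyed message authentication code so that tampering in transit or storage is detectable. The sketch below uses HMAC-SHA256 from the Python standard library; the JSON packet layout, the function names, and the demo key are assumptions for illustration, and real key management is out of scope. An HMAC provides integrity and authenticity only, so confidentiality would still require encryption.

```python
import hashlib
import hmac
import json


def sign_packet(samples, key):
    """Serialize the samples and compute an HMAC-SHA256 tag over the bytes."""
    payload = json.dumps({"samples": samples}, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return payload, tag


def verify_packet(payload, tag, key):
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


demo_key = b"demo-key-for-illustration-only"  # hypothetical shared secret
payload, tag = sign_packet([0.1, 0.2, 0.3], demo_key)
is_valid = verify_packet(payload, tag, demo_key)
```

If a single byte of `payload` is altered after signing, or if a different key is used, `verify_packet` returns `False`, which lets the receiving side discard manipulated recordings before they reach the decoding algorithm.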

Conclusion

In conclusion, the implementation of AI in BCIs has numerous applications and potential benefits, including increased accuracy and reliability, adaptability, and improved speed of information processing. However, the implementation of AI in BCIs also presents several challenges, such as the need for large amounts of training data, interpretability, and privacy and security concerns. Researchers are working to address these challenges and improve the performance and usability of AI-BCI systems, paving the way for future advancements in this field. Interdisciplinary cooperation between neuroscientists, engineers, computer scientists, and medical professionals is required to overcome these hurdles and advance the field of BCIs. Collaborations of this type can spur innovation, improve knowledge of brain processes, and hasten the translation of BCI technology from the lab to real-world applications.

Finally, the developments and future prospects of BCI technology offer significant chances to improve the quality of life for people with impairments while also opening up new avenues for human-computer connection. BCIs have the potential to revolutionize various aspects of our life and reshape the future of technology with further research and development.

Data availability

On reasonable request, the corresponding author will provide a complete database of the selected studies, including those retrieved during the search and selection process.

References

Akkus Z, Galimzianova A, Hoogi A, Rubin DL, Erickson BJ (2017) Deep learning for brain MRI segmentation: state of the art and future directions. J Digit Imaging 30(4):449–459. https://doi.org/10.1007/s10278-017-9983-4

Bell CJ, Shenoy P, Chalodhorn R et al (2008) Control of a humanoid robot by a noninvasive brain-computer interface in humans. J Neural Eng 5(2):214–220. https://doi.org/10.1088/1741-2560/5/2/012

Birbaumer N, Weber C, Neuper C (2006) Physiological regulation of thinking: brain-computer interface (BCI) research. Prog Brain Res 159:369–391. https://doi.org/10.1016/S0079-6123(06)59024-7

Brosch T, Yoo Y, Li DKB, Traboulsee A, Tam R (2014) Modeling the variability in brain morphology and lesion distribution in multiple sclerosis by deep learning. Med Image Comput Comput Assist Interv 17(Pt 2):462–469. https://doi.org/10.1007/978-3-319-10470-6_58

Chapin JK, Moxon KA, Markowitz RS, Nicolelis MAL (1999) Real-time control of a robot arm using simultaneously recorded neurons in the motor cortex. Nat Neurosci 2:664–670. https://doi.org/10.1038/10223

Chengyu L, Weijie Z (2020) Progress in the brain-computer interface: an interview with Bin He. Natl Sci Rev 7(2):480–483. https://doi.org/10.1093/nsr/nwz152

Collinger JL, Wodlinger B, Downey JE, Wang W, Tyler-Kabara EC, Weber DJ, McMorland AJ, Velliste M, Boninger ML, Schwartz AB (2013) High-performance neuroprosthetic control by an individual with tetraplegia. Lancet 381(9866):557–564. https://doi.org/10.1016/S0140-6736(12)61816-9

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20:273–297. https://www.semanticscholar.org/paper/Support-Vector-Networks-Cortes-Vapnik/52b7bf3ba59b31f362aa07f957f1543a29a4279e

Crevier DA (1993) The tumultuous history of the search for artificial intelligence, 1st edn. Basic Books, New York (https://www.researchgate.net/publication/233820788_AI_The_Tumultuous_History_of_the_Search_for_Artificial_Intelligence)

Daly JJ, Huggins JE (2015) Brain-computer interface: current and emerging rehabilitation applications. Arch Phys Med Rehabil. https://doi.org/10.1016/j.apmr.2015.01.007

Donoghue JP, Nurmikko A, Black M, Hochberg LR (2007) Assistive technology and robotic control using motor cortex ensemble-based neural interface systems in humans with tetraplegia. J Physiol 579(Pt 3):603–611. https://doi.org/10.1113/jphysiol.2006.127209

He B, Yuan H, Meng J, Gao S (2020) Brain–computer interfaces. In: Neural engineering. Springer, Berlin/Heidelberg, Germany, pp 131–183. https://doi.org/10.1007/978-1-4614-5227-0_2

Hochberg LR, Bacher D, Jarosiewicz B, Masse NY, Simeral JD, Vogel J (2012) Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature 485(7398):372–375. https://doi.org/10.1038/nature11076

Hochberg LR, Serruya MD, Friehs GM, Mukand JA, Saleh M, Caplan AH (2006) Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature 442(7099):164–171. https://doi.org/10.1038/nature04970

Hung HC, Wang YC, Wang YC (2020) Applications of artificial intelligence in orthodontics. Taiwan J Orthod 32. https://doi.org/10.38209/2708-2636.1005

Jerry JS, Dean JK, Jonathan RW (2012) Brain-computer interfaces in medicine. Mayo Clin Proc. https://doi.org/10.1016/j.mayocp.2011.12.008

Kawala-Sterniuk A, Browarska N, Al-Bakri A, Pelc M, Zygarlicki J, Sidikova M, Martinek R, Gorzelanczyk EJ (2021) Summary of over fifty years with brain-computer interfaces – a review. Brain Sci 11(1):43. https://doi.org/10.3390/brainsci11010043

Kohavi R (1995) A study of cross-validation and bootstrap for accuracy estimation and model selection. In: Proceedings of the 14th international joint conference on artificial intelligence, vol 2. Morgan Kaufmann Publishers Inc., Montreal, Quebec, Canada, pp 1137–1143

Korfiatis P, Kline TL, Erickson BJ (2016) Automated segmentation of hyperintense regions in FLAIR MRI using deep learning. Tomography 2(4):334–340. https://doi.org/10.18383/j.tom.2016.00166

Kübler A, Kotchoubey B, Hinterberger T et al (1999) The thought translation device: a neurophysiological approach to communication in total motor paralysis. Exp Brain Res 124(2):223–232. https://doi.org/10.1007/s002210050617

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444. https://doi.org/10.1038/nature14539

Lee MB, Kramer DR, Peng T (2019) Brain-computer interfaces in quadriplegic patients. Neurosurg Clin N Am 30(2):275–281. https://doi.org/10.1016/j.nec.2018.12.009

Li JH, Yan YZ (2014) Improvement and simulation of artificial intelligence algorithm in special movements. Ann Transl Med 8(11):712. https://doi.org/10.21037/atm.2019.11.109

Li M, Yan C, Hao D (2015) An adaptive feature extraction method in BCI-based rehabilitation. J Intell Fuzzy Syst Appl Eng Technol 28(2):525–535. https://doi.org/10.5555/2729770.2729774

Li W, Jin J, Duan F (2017) Cognitive-based EEG BCIs and human brain-robot interactions. Comput Intell Neurosci 2017:9471841. https://doi.org/10.1155/2017/9471841

Lozada-Martínez I, Maiguel-Lapeira J, Torres-Llinás D, Moscote-Salazar L, Rahman MM, Pacheco-Hernández A (2021) Letter: need and impact of the development of robotic neurosurgery in Latin America. Neurosurgery 88(6):E580–E581. https://doi.org/10.1093/neuros/nyab088

Mead WR, Kurzweil R (2006) The singularity is near: when humans transcend biology. Technol Forecast Soc Change 73(2):104–112. https://doi.org/10.1016/j.techfore.2005.12.004

Meng J, Zhang S, Bekyo A, Olsoe J, Baxter B, He B (2016) Noninvasive electroencephalogram based control of a robotic arm for reach and grasp tasks. Sci Rep 6:38565. https://doi.org/10.1038/srep38565 (erratum in: Sci Rep 2020;10(1):6627)

Minsky M, Papert SA, Bottou L (2017) Perceptrons, Reissue edn. MIT Press, Cambridge, MA. https://doi.org/10.7551/mitpress/11301.001.0001

Moravec H (1998) When will computer hardware match the human brain? J ETechnol 1:10. https://scholar.google.com/scholar_lookup?title

Mrachacz-Kersting N, Jiang N, Stevenson AJ, Niazi IK, Kostic V, Pavlovic A, Radovanovic S, Djuric-Jovicic M, Agosta F, Dremstrup K (2016) Efficient neuroplasticity induction in chronic stroke patients by an associative brain-computer interface. Brain Res 1674:91–100. https://doi.org/10.1016/j.brainres.2017.08.025

Mridha MF, Das SC, Kabir MM, Lima AA, Islam R, Watanobe Y (2021) Brain-computer interface: advancement and challenges. Sensors (Basel) 21(17):5746. https://doi.org/10.3390/s21175746

Ngiam J, Khosla A, Kim M, Nam J, Lee H, Ng AY (2011) Multimodal deep learning. https://www.semanticscholar.org/paper/Multimodal-Deep-Learning-Ngiam-Khosla/80e9e3fc3670482c1fee16b2542061b779f47c4f

Nicolas-Alonso LF, Gomez-Gil J (2012) Brain computer interfaces, a review. Sensors (Basel) 12(2):1211–1279. https://doi.org/10.3390/s120201211

Obermeyer Z, Emanuel EJ (2016) Predicting the future—big data, machine learning, and clinical medicine. N Engl J Med 375(13):1216–1219. https://doi.org/10.1056/NEJMp1606181

Orban M, Elsamanty M, Guo K, Zhang S, Yang H (2022) A review of brain activity and EEG-based brain–computer interfaces for rehabilitation application. Bioengineering (Basel) 9(12):768. https://doi.org/10.3390/bioengineering9120768

Pampaloni NP, Giugliano M, Scaini D, Ballerini L, Rauti R (2018) Advances in nano neuroscience: from nanomaterials to nanotools. Front Neurosci 12:953. https://doi.org/10.3389/fnins.2018.00953

Patel VL, Shortliffe EH, Stefanelli M (2009) Position paper: the coming of age of artificial intelligence in medicine. Artif Intell Med 46(1):5–17. https://doi.org/10.1016/j.artmed.2008.07.017

Rudin C (2019) Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat Mach Intell 1(5):206–215. https://doi.org/10.1038/s42256-019-0048-x

Salahuddin U, Gao PX (2021) Signal generation, acquisition, and processing in brain machine interfaces: a unified review. Front Neurosci 15:728178. https://doi.org/10.3389/fnins.2021.728178

Sarle WS (1994) Neural networks and statistical models. In: Proceedings of the Nineteenth Annual SAS Users Group International Conference. SAS Institute, Cary, NC, pp 1538–1550. https://doi.org/10.1016/j.eswa.2007.10.005

Sejnowski TJ (2020) The unreasonable effectiveness of deep learning in artificial intelligence. Proc Natl Acad Sci U S A 117(48):30033–30038. https://doi.org/10.1073/pnas.1907373117

Silva GA (2018) A new frontier: the convergence of nanotechnology, brain machine interfaces, and artificial intelligence. Front Neurosci 12:843. https://doi.org/10.3389/fnins.2018.00843

Tudor M, Tudor L, Tudor KI (2005) Hans Berger (1873–1941) – the history of electroencephalography. Acta medica Croatica: casopis Hrvatske akademije medicinskih znanosti 59(4):307–313 (in Croatian)

Xiayin Z, Ziyue M, Huaijin Z, Tongkeng L, Kexin C, Xun W, Chenting L, Linxi X, Xiaohang W, Duoru L, Haotian L (2020) The combination of brain-computer interfaces and artificial intelligence: applications and challenges, 2019. Ann Transl Med 8(11):712. https://doi.org/10.21037/atm.2019.11.109

Zhang X, Ma Z, Zheng H, Li T, Chen K, Wang X, Liu C, Xu L, Wu X, Lin D, Lin H (2020) The combination of brain-computer interfaces and artificial intelligence: applications and challenges. Ann Transl Med 8(11):712. https://doi.org/10.21037/atm.2019.11.109

Zhao C, Liu B, Piao S, Wang X, Lobell DB, Huang Y, Huang M, Yao Y, Bassu S, Ciais P, Durand JL, Elliott J, Ewert F, Janssens IA, Li T, Lin E, Liu Q, Martre P, Müller C et al (2017) Temperature increase reduces global yields of major crops in four independent estimates. Proc Natl Acad Sci 114(35):9326–9331. https://doi.org/10.1073/pnas.1701762114

Code availability

No software application or custom code is available.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by Evelyn Karikari and Koshechkin Konstantin. The first draft of the manuscript was written by Evelyn Karikari and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Karikari, E., Koshechkin, K.A. Review on brain-computer interface technologies in healthcare. Biophys Rev 15, 1351–1358 (2023). https://doi.org/10.1007/s12551-023-01138-6

DOI: https://doi.org/10.1007/s12551-023-01138-6