Abstract

Parallel Enumeration and Branch-and-Bound Library (PEBBL) is a C++ class library implementing the underlying operations needed to support a wide variety of branch-and-bound algorithms on MPI-based message-passing distributed-memory parallel computing environments. PEBBL can be customized to support application-specific operations, while managing the generic aspects of branch and bound, such as maintaining the active subproblem pool across multiple processors, load balancing, and termination detection. PEBBL is designed to provide highly scalable performance on large numbers of processor cores. We describe the basics of PEBBL’s architecture, with emphasis on the features most critical to is high scalability, including its flexible two-level load balancing architecture and its support for a synchronously parallel ramp-up phase. We also present an example application: the maximum monomial agreement problem arising from certain machine learning applications. For sufficiently difficult problem instances, we show essentially linear speedup on over 6000 processor cores, demonstrating a new state of the art in scalability for branch-and-bound implementations. We also show how processor cache effects can lead to reproducibly superlinear speedups.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Branch and bound is a fundamental method of numerical optimization with numerous applications in both discrete optimization and continuous nonconvex global optimization. See for example [9] for a general tutorial on branch-and-bound algorithms. Branch and bound is potentially well-suited to parallel computing, since exploring a branch-and-bound tree often generates a large number of weakly coupled tasks. Despite this suitability, branch-and-bound algorithms are not “embarrassingly parallel” in the sense that efficient parallel implementation is immediate and straightforward.

It is therefore useful to have parallel branch-and-bound shells, frameworks, or libraries: software tools that provide parallel implementations of the basic, generic functionality common to a broad range of branch-and-bound applications. Those seeking to create new parallel branch-and-bound applications can avoid “reinventing the wheel” by using these tools to handle the generic aspects of search management, while concentrating their programming effort primarily on tasks unique to their applications. The need for branch-and-bound frameworks is greater in parallel than in serial, because managing the pool of active search nodes is relatively straightforward in serial. By contrast, parallel branch-and-bound frameworks may require more complex logic for pool management, distributed termination and load balancing.

This paper describes the Parallel Enumeration and Branch-and-Bound Library (PEBBL) software framework. The goal of PEBBL, influenced by the needs of Sandia National Laboratories, is to provide extreme scalability in implementing branch-and-bound methods on distributed-memory computing systems.

For efficiency and portability, we implemented PEBBL in C++, using the MPI message-passing API [49] to communicate between processors. PEBBL may also be used on shared-memory platforms by configuring standard MPI implementations such as MPICH [27] and Open MPI [26] to emulate message passing through shared memory. Shared-memory systems can often operate efficiently when programmed in this way, since the programming environment naturally tends to limit memory contention.

The most distinctive features of PEBBL are:

-

An extremely flexible work distribution and load balancing scheme that achieves unmatched scalability and has only two strata in its processor hierarchy. That is, there are just two possibly overlapping kinds of processors: “workers” and “hubs”. When scaling to large numbers of processors, the hubs interact in a peer-to-peer manner and do not require extra levels of controller processors such as “masters of masters” or “masters of masters of masters”.

-

Support for application-specific non-tree parallelism during the search ramp-up phase, followed by a “crossover” to using tree-based parallelism.

-

Support for enumeration of multiple optimal and near-optimal solutions meeting a variety of configurable criteria.

The rest of this paper is organized as follows: Sect. 2 summarizes PEBBL’s history, discusses some more of its innovative contributions, and presents a brief literature review relating it to other work on branch-and-bound computational frameworks. Section 3 then presents key aspects of PEBBL’s design. Section 4 then presents a computational study based on the maximum monomial agreement (MMA) problem described in [13, 18, 25], showing excellent scaling to over 6000 processor cores. Section 5 then presents some computational observervations showing how processor cache behavior can lead to superlinear speedups for some branch-and-bound applications. Our main conclusions from our computational experiments are:

-

PEBBL definitively demonstrates scalability of parallel branch and bound over a significantly wider range of processor counts than previously published work.

-

When enumerating multiple optimal or near-optimal solutions, PEBBL’s scalability is nearly as good as when seeking a single solution.

-

Despite its apparent complexity, PEBBL’s parallelization strategy adds relatively little overhead to underlying serial branch-and-bound algorithms.

PEBBL is a large, complex software package, so this paper necessarily omits many details. For a more detailed description, the reader should refer to the technical report [22], which is an expanded version of this article, and [23], the current PEBBL user guide. PEBBL is part of A Common Repository for Optimizers (ACRO), a collection of optimization-related software projects maintained by Sandia National Laboratories. PEBBL may be downloaded from http://software.sandia.gov/acro (under the BSD license). The PEBBL source files include the maximum monomial agreement (MMA) test problem instances and algorithm implementation presented in this paper. At the time of writing, the current PEBBL release is version 1.4.1.

2 Literature review and history

PEBBL began its existence as the “core” layer of the parallel mixed integer programming (MIP) solver Parallel Integer and Combinatorial Optimizer (PICO). However, we separated PICO into two distinct packages to facilitate using its core layer for applications with bounding procedures not involving linear programming—for example, the application described in Sect. 4 below. The core layer, which supported generic parallel branch-and-bound algorithms in an application-independent way, became PEBBL. The remainder of PICO is specific to problems formulated explicitly as MIPs. An early description of PICO [21] includes a description of the PICO core, which evolved into PEBBL. However, PEBBL’s design has evolved from this description, and its scalability has improved significantly. Similar but more recent material is included as part of [2], and a somewhat more recent but very condensed description of the internals of PEBBL and PICO is also embedded in [19]. Neither [2] nor [19] contain computational results or describe PEBBL’s enumeration capabilities.

Some elements of PEBBL’s design can be traced back to ABACUS [24, 30, 31], a serial C++ framework supporting serial LP-based branch-and-bound methods involving dynamic constraint and variable generation. Some aspects of PEBBL’s task distribution and load balancing schemes are based on CMMIP [14–17], a parallel branch-and-bound solver specific both to mixed integer programming and to the Thinking Machines CM-5 parallel computing system of the early 1990’s.

PEBBL is neither the first nor the only parallel branch-and-bound implementation framework. Early C-language general parallel branch-and-bound libraries include PUBB [47, 48], BoB [3], and PPBB-Lib [50]. MINTO [40] is an earlier, C-language framework specifically addressing MIP problems. SYMPHONY and BCP, both described in [36, 41], are two related frameworks that support parallelism, respectively written in C and C++. However, they do not support large-scale parallelism, and for applications requiring extensive scalability have been superseded by tools based on CHiPPS/ALPS [42]. Many aspects of CHiPPS/ALPS [42] were influenced by the early development of PICO and PEBBL.

To our knowledge, the most recently reported scalability studies for general-purpose parallel branch-and-bound engines are for BOBPP, a successor to Bob, and ALPS. Manouer et al. [39] use the general BOBPP framework to parallelize Google’s OR-Tools constraint solver. Compared to a serial run, they report a speedup of 38.14 on 48 cores (79 % relative efficiency). Xu et al. report computational experience using ALPS for knapsack problems [54]. Based on average total wall-clock time over 26 difficult knapsack problems on a BlueGene system, they report efficiency of 77 % on 2048 processors relative to the same problems running on 64 processors. The largest numbers of processors reported for branch-and-bound-based runs are specific to integer linear programming: Koch et al. [35] solved a single difficult integer program with the SCIP integer programming solver (using CPLEX for linear programming solutions) and MPI on an HLRN-II SGI Altix ICE 8200 Plus supercomputer. They report 79 % efficiency on 7168 cores relative to a base of 4096 cores. Using such a large base number of processors may not give an accurate picture of true efficiency relative to a single processor. In a recent, less formal setting, Shinano [45] reported efficiencies of 76 % solving a particular integer program using paraSCIP [46] with 4095 processors compared to a base of 239 processors on an HLRN-II. But for another integer programming problem, the efficiency for the same processor count and base was only 38 %. The same source also reports attempts to run on 35,000 processors of a Titan Cray XK7 and reported a run time for almost 10,000 processors, but with no scalability results.

In other recent work, the FTH-B&B [4] package focuses on fault-tolerant branch-and-bound mechanisms for grid environments. It includes fault detection through heartbeat communication between parents and children in a multi-level control hierarchy, as well as checkpointing and recovery mechanisms. Experiments in [4] focus on measuring fault-tolerance-related overhead, rather than considering scalability and search efficiency.

3 Software architecture

We now describe PEBBL’s software design. Included in this description are a number of original contributions not mentioned in Sect. 1, namely:

-

Division of the software package into serial and parallel layers

-

Subproblems that store a state, and the notion of describing a branch-and-bound implementation as a collection of operators transforming these states; this feature allows one to easily change search “protocols”—see Sect. 3.2

-

Variable amounts of subproblem exchange between “hub” and “worker” processors

-

Use of “threads” (more properly referred to as coroutines) and a nonpreemptive scheduler module to manage tasks on each individual processor.

Regarding the first item above, the PEBBL software consists of two “layers,” the serial layer and the parallel layer. The serial layer provides an object-oriented means of describing branch-and-bound algorithms, with essentially no reference to parallel implementation. The parallel layer contains the core code necessary to create parallel versions of serial applications. Creation of a parallel application can thus proceed in two steps:

-

1.

Create a serial application by defining new classes derived from the serial layer base classes. Development may take place in an environment without parallel processing capabilities or an MPI library.

-

2.

“Parallelize” the application by defining new classes derived from both the serial application and the parallel layer. These classes can inherit most of their methods from their parents—only a few additional methods are required, principally to tell PEBBL how to pack application-specific problem and subproblem data into MPI message buffers, and later unpack them.

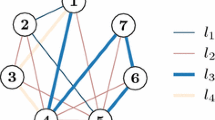

Figure 1 shows the conceptual inheritance pattern used by PEBBL. Any parallel PEBBL application constructed through this multiple-inheritance scheme has the full capabilities of the parallel layer, including a highly configurable spectrum of parallel work distribution and load balancing strategies.

To define a serial branch-and-bound algorithm, a PEBBL user extends two principal classes in the serial layer, branching and branchSub. The branching class stores global information about a problem instance, and it contains methods that implement various kinds of serial branch-and-bound algorithms, as described below. The branchSub class stores data about each subproblem in the branch-and-bound tree, and it contains methods that perform generic operations on subproblems. This basic organization is borrowed from ABACUS [24, 30, 31], but PEBBL’s design is more general, since there is no assumption that linear programming or cutting planes are involved.

The remainder of this section refers to numerous parameters controlling the details of the branch-and-bound search. All such parameters have default values so users can begin using PEBBL without having to explicitly specify their values. The user may then tune specific parameters based on the runtime behavior of their application.

3.1 Subproblem states, subproblem transition methods, and solution generation

Every PEBBL subproblem stores a state indicator that progresses through some subset of six states called boundable, beingBounded, bounded, beingSeparated, separated, and dead.

The branchSub class has three abstract virtual methods which are responsible for implementing subproblem state transitions: boundComputation, splitComputation, and makeChild. A serial PEBBL application is primarily defined by the instantiations of these three methods in the application subproblem class.

Figure 2 illustrates the subproblem state operator methods and the possible subproblem state transitions. The boundComputation method advances subproblems from the initial boundable state through to the bounded state, while the split Computation method advances subproblems from the bounded state to the separated state. Each application of the makeChild method extracts a child subproblem.

Once all of a subproblem’s children have been created, it becomes dead. Any subproblem operator can also set a subproblem’s state to dead if it determines that the subproblem represents no relevant solutions. The beingBounded state is included to allow for incremental calculations that might compute progressively tighter bounds for a subproblem, or for lengthy bound calculations that one might want to temporarily suspend. The beingSeparated state is provided for similar reasons.

Each subproblem also has a bound data member which may be updated at any time by any of the three state-transition methods, although it is typically set by bound Computation. Furthermore, the branchSub class has an incumbent Heuristic method that is called once a subproblem becomes bounded. This method is intended to generate feasible solutions, perhaps using information generated during bounding. However, any of the subproblem operators is free to generate possible new incumbent solutions at any point in the life of a subproblem. The branchSub class also contains methods for identifying “terminal” subproblems, and extracting solutions from them. Terminal subproblems are those for which the computed bound is exact and a matching feasible solution is readily available: in integer programming, for example, a subproblem is terminal if solving its linear programming relaxation returns an integral solution. A terminal subproblem does not require further exploration and becomes dead once PEBBL extracts a solution matching its bound value (unless PEBBL is enumerating multiple solutions—see Sect. 3.6 below).

PEBBL also provides a number of classes for representing problem solutions. If a specialized solution representation is needed, the user may derive one from the PEBBL-provided base class solution.

3.2 Pools, handlers, and the search framework

PEBBL’s serial layer orchestrates serial branch-and-bound search through a module called the “search framework,” which acts as an attachment point for two user-specifiable objects, the “pool” and the “handler”. The combination of pool and handler determines the variant of branch-and-bound being applied. Essentially, the framework executes a loop in which it extracts a subproblem S from the pool and passes it to the handler, which may create children of S and insert them into the pool. If subproblem S is not dead after processing by the handler, the framework returns it to the pool. Figure 3 illustrates the relationship between the search framework, pool object, and handler object.

The pool object dictates how the currently active subproblems are stored and accessed, which effectively determines the branch-and-bound search order. Currently, there are three kinds of pools:

-

A heap sorted by subproblem bound, which results in a best-first search order. This pool also has a “diving” option to give priority to an application-defined “integrality measure” (in general, some measure of closeness to being a terminal node) until an incumbent solution is found, and then revert to standard best-bound ordering.

-

A FIFO queue, which results in a breadth-first search order.

-

A stack, which results in a depth-first search order.

For particular applications, users may implement customized pools that specify other search orders.

In general, subproblems in the pool may in be in any mix of states. The handler, the other component “plugged into” the search framework, implements a “search protocol” specifying how subproblems are advanced through the state diagram, thus controlling the mix of states present in the pool. Three handlers are currently available: “eager”, “lazy”, and “hybrid”.

The lazy handler implements lazy search, as defined in [10]. In lazy search, the handler removes a subproblem from the pool and computes its bound. If the subproblem cannot be fathomed, the handler extracts its children and places them back in the pool without computing their bounds. This variant of branch and bound is typical of MIP solvers. The eager handler instead implements an eager search protocol [10]. In eager search, the handler picks a subproblem from the pool, immediately separates it, and then extracts all its children and calculates their bounds. Children whose bounds do not cause them to be fathomed are returned to the pool. This type of search is more typically used in situations in which the bound may be calculated quickly.

PEBBL also contains a third handler, called the hybrid handler. This handler implements a strategy that is somewhere between eager and lazy search, and is perhaps the most simple and natural given PEBBL’s concept of subproblem states. Upon removing a subproblem from the pool, the hybrid handler performs one application of the appropriate method to advance it in the state diagram. It then places the subproblem back in the pool if it is not dead, along with any children generated in the process. With the hybrid handler, the pool contains subproblems in an arbitrary mix of states, whereas the lazy and eager handler keep the entire pool in the boundable or bounded state, respectively, unless the application uses the beingBounded or beingSeparated states to suspend bounding or separation operations.

3.3 Parallelism: tokens and work distribution within a processor cluster

PEBBL’s parallel layer organizes processors similarly to the later versions of CMMIP [14, 16]. Processors are organized into “clusters”, each consisting of one “hub” processor that controls one one or more “worker” processors. Through run-time parameters, the user may control the number of processors per cluster, and whether hub processors are “pure” hubs or simultaneously function as hubs and workers. It is possible for a cluster, or even all clusters, to consist of only a single processor.

Although there is a limit to the number of processors that may function efficiently within a centrally controlled cluster, PEBBL’s philosophy is to make this limit as large as possible. To this end, its design used two basic principles: first, a hub should be able to “guide” rather than “micromanage” its workers, without having to interpose itself into every worker subproblem-processing decision. Second, the communication and memory resources of the hub should not be wasted by storing and transmitting subproblem information irrelevant to the hub’s main purpose of scheduling and distributing work.

To allow for “guidance” rather than “micromanagement,” workers in a PEBBL cluster are generally not pure “slaves.” Each worker maintains its own pool of active subproblems. Depending on various run-time parameters, the pool might be small (e.g. a single subproblem). Workers use essentially the same search handler objects present in the serial layer, but in parallel these handlers also have the ability to “release” subproblems from the worker. The decision whether to release a subproblem is usually taken when it is created, except when performing eager search, in which case the decision is taken immediately after the subproblem is bounded. Released subproblems do not return to the local pool: instead, the worker cedes control over these subproblems to the hub. Eventually, the hub will send control of the subproblem either back to the worker or to another worker.

When a processor is both a hub and a worker, it maintains two distinct pools of subproblems, one under control of the hub thread and one under control of the worker thread. The two coresident threads communicate in much the same way as a worker and hub located on different processors, but through local memory operations rather than MPI messages.

For simplicity throughout the remainder of this subsection, we describe the work distribution scheme as if a single cluster spanned all the available processors. In the next subsection, we will amend the description for the case of more than one cluster.

A worker’s decision to release a subproblem is randomized, with the probability of release controlled by run-time parameters and the current distribution of work among processors. If PEBBL is configured so that the release probability is always 100 %, then control of every subproblem returns to the hub at some state in its lifecycle. In this case, the hub and its workers function like a classic “master-slave” system. When the release probability is lower, the hub and its workers are less tightly coupled. The release probability can vary between parameter-determined bounds depending on the fraction of the estimated total work controlled by the worker processor. To promote even work distribution early in a run, the release probability is temporarily set to 100 % for the first s subproblems each worker encounters after the initial synchronous ramp-up phase (see Sect. 3.7 below), where s is a run-time parameter.

As mentioned above, the second major principle in PEBBL’s intracluster architecture is avoiding passing unnecessary information through the hub: when a subproblem is released, only a small portion of its data, called a “token” [15, 43], is actually sent to the hub. A token consists of the information needed to identify a subproblem, locate it in memory, and schedule it for execution. On a 64-bit processor, a token occupies 80 bytes of storage, which is much less than typically required to store the full data for a subproblem in most applications. Since the hub receives only tokens from its workers, these space savings translate into reduced storage requirements and communication load at the hub. In certain cases, PEBBL can gain further efficiency by using a single token to refer to several sibling subproblems.

The hub processor maintains a pool of subproblem tokens that it has received from workers. Each time it learns of a change in workload status from one of its workers, the hub reevaluates the work distribution in the cluster and dispatches subproblem tokens to workers it deems “deserving”. The notion of “deserving” can take into account both subproblem quantity and quality (as measured by the distance between the subproblem bound and the incumbent value).

When the hub dispatches a subproblem token t to a worker \(w^*\), the resulting message might not go directly to \(w^*\). Instead, it goes to the worker w that originally released t. If \(w \ne w^*\), then when w receives the token, it forwards the necessary subproblem information to \(w^*\), much as in [14–16, 43]. To save communication resources, the hub may pack multiple dispatch operations into the same MPI message.

The hub periodically broadcasts overall workload information to its workers so they know the approximate relation of their own workloads to those of other workers. This information allows each worker to adjust its probability of releasing subproblems. PEBBL also has a second mechanism, called “rebalancing”, to help maintain the user’s desired balance between hub and worker control of subproblems (especially near the end of a run). During rebalancing, workers can send blocks of subproblem tokens to the hub outside of the usual handler-release cycle.

The subproblem release probabilities and rebalancing operations at the workers, along with the calculation of when workers are “deserving”, are calibrated to (1) keep the fraction of subproblems in the cluster controlled by the hub close to the run-time parameter hubLoadFac and (2) keep the remaining subproblems relatively evenly distributed among the workers. In this calculation, an adaptively computed “discount” is applied to a worker process colocated on the hub processor. Specifically, if the hub processor appears to be spending a fraction h of its time on its hub functions but is also a worker, then the target number of subproblems for its worker process is a factor \(1-h\) lower than for other worker processors.

Depending on the settings of the parameters controlling subproblem release and dispatch, PEBBL’s intracluster work distribution system can range from a classic “master-slave” configuration to one in which the workers “own” the vast majority of the subproblems, and the hub controls only a small “working set” that it tries to use to correct imbalances as they occur. A spectrum of settings between these two extremes is also possible. For example, there is a configuration in which the hub controls the majority of work within the cluster, but each worker has a small “buffer” of subproblems to prevent idleness while waiting for the hub to make work-scheduling decisions. The best configuration along this spectrum depends on both the application and the relative communication/computation speed of the system hardware. In practice, some run-time experimentation may be necessary to find the best settings for a given combination of computing enviroment and problem class.

3.4 Work distribution between clusters

For any given combination of computing environment, application, and problem instance, there will be a limit to the number of processors that can operate efficiently as a single cluster. Even if PEBBL is configured to maximize the conservation of hub resources, the hub may simply not be able to keep up with all the messages from its workers, or it may develop excessively long queues of incoming messages. At a certain point, adding more processors to a single cluster will not improve performance. To take advantage of additional processors, PEBBL therefore provides the ability to partition the overall processor set into multiple clusters.

PEBBL’s method for distributing work between clusters resembles CMMIP’s [14, 16]: there are two mechanisms for transferring work between clusters, “scattering” and “load balancing”. Scattering occurs when workers release subproblems. If there are multiple clusters and a worker has decided to release a subproblem, then the worker makes a random decision, controlled by some additional parameters and workload-state information, as to whether the subproblems should be released to the worker’s own hub or to the hub of a randomly chosen cluster. At the beginning of a run, PEBBL promotes even work distribution by forcing release of a worker’s first s subproblems (using the same notation as in the previous subsection) to random clusters.

To supplement scattering, PEBBL also uses a form of “rendezvous” load balancing similar to CMMIP [14, 16]. Earlier related work [32, 37] describes synchronous application of the same basic idea to individual processors instead of clusters. This procedure also has the important side effect of gathering and distributing global information on the amount of work in the system, which in turn facilitates control of the scattering process. This information is also critical to termination detection in the multi-hub case.

The load balancing mechanism defines its estimated “workload” at a cluster c at time t to be

Here, C(c, t) denotes the set of subproblems that c’s hub knows are controlled by the cluster at time t, \(\overline{z}(c,t)\) represents the fathoming value known to cluster c’s hub at time t, and z(P, c, t) is the best bound on the objective value of subproblem P known to cluster c’s hub at time t. The fathoming value is the objective value that allows a subproblem to be fathomed; this is typically the incumbent value, but it may be different if PEBBL’s enumeration feature is active (see Sect. 3.6). The exponent u is either 0, 1, or 2, at the discretion of the user. If \(u=0\), only the number of subproblems in the cluster matters. Values of \(u=1\) or \(u=2\) give progressively higher “weight” to subproblems farther from the incumbent. The default value of u is 1. Using a technique based on binomial coefficients, PEBBL is able to recalculate the estimate (1) in constant time whenever the fathoming value \(\overline{z}(c,t)\) changes, individual subproblems are added to or deleted from C(c, t), or individual z(P, c, t) values change.

The rendezvous load balancing mechanism organizes the hub processors into a balanced tree. This tree provides a mechanism for bounding the communication load on each individual hub processor; it is not a hierarchy of control, because all the hubs in the system are essentially peers. Periodically, messages “sweep” semi-synchronously through this entire tree, from the leaves to the root, and then back down to the leaves. These messages repeat a pattern of a “survey sweep” followed by a “balance sweep”. The survey sweep gathers and distributes system-wide workload information. This sweep provides all hubs with an overall system workload estimate, essentially the sum of the L(c, t) over all clusters c. However, if the sweep detects that these values were based on inconsistent fathoming values, then it immediately repeats itself.

After each successful survey sweep, every hub determines whether its cluster should be a potential donor of work, a potential receiver of work, or (typically) neither. Donors are clusters whose workloads are above the average workload by some parameter-specified factor, while receivers must have loads below average by another parameter-specified factor. The balance sweep operation then counts the total number of donors d and receivers r. It assigns to each donor a unique number in the range \(0,\ldots ,d-1\), and to each receiver a unique number in the range \(0,\ldots ,r-1\). The balance sweep is a form of parallel prefix operation [5], involving a single round of messages passing up the tree, followed by a single pass down. At the end of the balance sweep, all hub processors also know the values of d and r. After the balance sweep, the first \(y=\min \{d,r\}\) donors and receivers then “pair up” via a rendezvous procedure involving 3y point-to-point messages. Specifically, donor i and receiver i each send a message to the hub for cluster i, for \(i= 0,\ldots ,y-1\). Hub i then sends a message to donor i, telling it the processor number and load information for receiver i. See [28, Sect. 6.3] or [14, 16] for a more detailed description of this process. Within each pair, the donor sends a single message containing subproblem tokens to the receiver. Thus, the sweep messages are followed by up to 4y additional point-to-point messages, with at most 6 messages being sent or received by any single processor—this worst case occurs when a hub is both a donor and a rendezvous point. Both the survey and balancing sweeps involve at most \(2(b+1)\) messages being sent or received at any given hub processor, where b is the branching factor of the load-balancing tree. During a load-balancing cycle, from the start of a successful survey sweep through any corresponding work exchanges between hubs, the number of load-balancing-related messages passing through any given hub processor is bounded above by the constant \(2(b+1) + 2(b+1) + 6 = 4b + 10\), and the cumulative message latency associated with propagating this information is \({{\mathrm{O}}}(\log _b H) = {{\mathrm{O}}}(\log H)\), where H is the number of clusters. This design ensures the scalability of PEBBL’s load balancing mechanism.

The frequency of survey and balance sweeps is controlled by a timer, with the minimum spacing between survey sweeps being set by a run-time parameter. If the total workload on the system appears to be zero, then this minimum spacing is not observed and sweeps are performed as rapidly as possible, to facilitate rapid termination detection. Under certain conditions, including at least once at the end of every run, a “termination check” sweep is substituted for the balance sweep; see Sect. 3.8 for a discussion of the termination check procedure.

Finally, we note that interprocessor load balancing mechanisms are sometimes classified as either “work stealing” initiated by the receiver or “work sharing” initiated by the donor. PEBBL’s rendezvous method is neither. Instead, donors and receivers efficiently locate one another on an equal, peer-to-peer basis.

3.5 Thread and scheduler architecture

The parallel layer requires each processor to perform a certain degree of multitasking. To manage such multitasking in as portable a manner as possible, PEBBL uses its own system of nonpreemptive “threads”, more precisely described as coroutines. These threads are called by a scheduler module and are not interruptible at the application level. They simply return to the scheduler when they wish to relinquish control. On each processor, PEBBL creates some subset of the following threads:

-

Two “compute threads” that handle the fundamental computations: a “worker thread” for processing subproblems, and an optional “incumbent search thread” dedicated to running incumbent heuristics.

-

Two threads associated with work distribution and load balancing on hub processors: a “hub thread” that handles the main hub functions and a “load balancing/termination thread” for handling intercluster load balancing (see Sect. 3.4 above) and termination detection (see Sect. 3.8 below).

-

Three worker-based threads to handle communication between workers and from hubs to workers

-

An “incumbent broadcast thread” for distributing information about new incumbents, an optional “early output thread” to output provisional solution information before the end of long runs, and two optional threads to handle enumeration of multiple solutions (see Sect. 3.6 below).

Furthermore, applications derived from PEBBL have the ability to incorporate their own additional, application-specific threads into PEBBL’s multitasking framework. For example, the PICO MIP solver creates an additional thread to manage communication of “pseudocost” branching quality information between processors.

Except for the compute threads, all of PEBBL’s threads are “message triggered”: they only run when specific kinds of messages are received. The compute threads run whenever they have work available and no arriving messages need to be serviced. When both kinds of compute threads are active, PEBBL manages compute-time allocations between them through a variant of stride scheduling [34, 51], allowing for an intelligent allocation of CPU resources to each thread; see [20, 21] for details. The split of compute resources between the two threads is determined by a combination or run-time parameters and the current gap between the value of the incumbment solution and the best currently known global bound on the optimal solution value.

The incumbent broadcast thread manages asynchronous tree-based broadcasts of new incumbent values, with special features to handle “collisions” between trees of potential new incumbent messages spreading from different initiating processors. Essentially, the broadcast tree will automatically “wither” and cease propagating messages wherever it encounters a processor with a better incumbent value, or the same incumbent value but a lower-numbered initiating processor. This scheme ensures that all processors eventually agree on the incumbent value and on which processor the incumbent resides.

3.6 Solution enumeration

The extent of PEBBL’s search process is controlled by two nonnegative parameters, relTolerance and absTolerance. Normally, if a subproblem’s bound is within either an absolute distance absTolerance or relative distance relTolerance of the current incumbent, PEBBL fathoms and deletes it. Thus the final incumbent is guaranteed to be either within absTolerance objective function units or a multiplicative factor relTolerance of the true optimum.

PEBBL can also be configured to enumerate multiple solutions. Such enumeration is controlled by four further parameters, as follows:

-

enumAbsTol = a: retain solutions within a units of optimal.

-

enumRelTol = r: retain solutions within a multiplicative factor r of optimal.

-

enumCutoff = c: retain solutions whose objective value is better than c.

-

enumCount = n: retain the best n solutions. If the \(n^{\text {th}}\)-best solution is not uniquely determined, PEBBL breaks objective-value ties arbitrarily.

If more than one of these parameters is set, then PEBBL retains only solutions jointly meeting all the specified criteria. If any of these parameters are set, then enumeration is considered active, and PEBBL stores not just the usual single incumbent solution, but also a “repository” of multiple solutions. PEBBL adds solutions to the repository as they are found, and removes them whenever it deduces they cannot meet the enumeration criteria.

In serial, the effect of the enumeration parameters is to alter the criteria PEBBL uses to fathom subproblems and the behavior of the internal method that signals a potentially new feasible solution. When using just the enumAbsTol or enumRelTol parameters, fathoming only occurs when a subproblem bound is worse than the incumbent by at least the amount specified by one of the active criteria. For enumCount, subproblems are compared not to the incumbent, but to the \(\mathtt{enumCount }^{\text {th}}\)-best solution in the repository. When the repository already contains enumCount solutions and a new solution enters, one of the repository’s worst solutions is deleted.

For enumeration to work properly, the application classes need two capabilities: “branch-through” and solution duplicate detection. Branch-through refers to branching on terminal subproblem. Without enumeration, PEBBL will never apply the splitComputation method to a terminal subproblem: by definition, one has identified an optimal solution in the subproblem’s region of the search space, so ordinarily there would be no need to explore this region further. This may not be the case when enumerating, so the application must be prepared to split terminal subproblems. In some applications, splitting terminal subproblems may require a completely different branching procedure: in integer programming, for example, branching ordinarily uses variables with fractional values in the linear programming relaxation solution, but a terminal subproblem will have no such variables.

The second capability normally needed to support enumeration is duplicate solution detection, which is necessary to prevent applications that generate solutions heuristically from accumulating multiple identical solutions in the repository. To support PEBBL’s detection of duplicate solutions, any solution representation used by a PEBBL application must implement a solution comparision method and a solution hash value function. Equivalent solutions must have the same hash value. The PEBBL-supplied solution classes already have these capabilities and PEBBL also supplies tools to simplify the construction of such methods for customized solution representation classes.

PEBBL currently lacks any gradated notion of solution distance or “diversity.” It has only a boolean sense of solutions being “the same” or “not the same”. For applications that can generate large numbers of symmetric or nearly identical solutions, it may be desirable to monitor and control the diversity of the repository in a non-boolean sense. For an example of this kind of technique for MIP problems, see [11]. Unlike PEBBL’s enumeration scheme, however, the technique described in [11] does not guarantee complete enumeration of specific sets of solutions; such full solution enumeration appears to be a unique feature of PEBBL.

When running in parallel, PEBBL promotes scalability by partitioning the repository approximately equally among all processors through a mapping based on the solution hash value—every solution s has a unique “owning” processor based on its hash value. New feasible solutions are sent to their owning processor, where they are checked for duplication before entering the repository.

If only the enumRelTol, enumAbsTol, or enumCutoff criteria are set, this storage logic is essentially all that is needed to enumerate solutions in parallel. However, if enumCount is set, then the implementation becomes more complicated. For proper pruning, one would like to have an estimate of the \(\mathtt{enumCount }\)th-best solution in the union of the repository segments of all processors, which we call the “cutoff solution”. In order for each processor to maintain a valid estimate of the cutoff solution, PEBBL periodically passes messages up and down a balanced tree similar to that used for load balancing, but consisting of all processors, rather than just hubs. These messages contain sorted lists of solution identifiers (objective values, owning processor and serial number), that are merged as messages converge up the tree. The root forms an estimate of the cutoff solution that is then broadcast down the tree.

3.7 Synchronous ramp-up support

PEBBL provides support for specialized, synchronous ramp-up procedures at the beginning of the search process. PEBBL’s main approach to parallelism is to evaluate multiple nodes of the search tree simultaneously. Early in the search process, however, the pool of search nodes is still small, and particular applications may have different opportunities for parallelism that provide more concurrency. Therefore, PEBBL provides support for a synchronous ramp-up phase with an application-defined crossover point. During the ramp-up phase, every processor redundantly develops an exactly identical branch-and-bound tree. As they synchronously process each subproblem, the processors are free to exploit any parallelism they wish in executing boundComputation, splitComputation, makeChild, or the incumbent heuristic. Because different processors might find different incumbents—for example by using randomized procedures with different random-number seeds—PEBBL provides special methods to sychronize the incumbent value during ramp-up. Without such synchronization, there are various mechanisms by which the ramp-up phase may deadlock.

A virtual method controls the termination of the ramp-up phase: Once this method signals the end of ramp-up, the worker processors partition the leaf nodes of the current search tree as equally as possible on both the intercluster and intracluster level. This partition operation can be performed without any communication, since all processors have copies of the same leaf nodes in local memory; it is simply a matter of each processor deleting the subproblems it does not “own”. After partitioning the active subproblems, PEBBL enters its normal asychronous search mode.

PEBBL’s default implementation of the crossover trigger method senses whether the tree has at least \(\alpha W\) nodes, where \(\alpha \) is a run-time parameter defaulting to 1, and W is the number of worker processors. Ideally, however, it is best to also consider whether the parallelism available in the active nodes of the search tree appears to exceed any alternative source of parallelism available to the application. Such a test is necessarily application-dependent and must be implemented by the user overriding the default crossover-triggering method.

3.8 Startup and termination

In addition to the ramp-phase and the main asynchronous search phase, a PEBBL run has two additional phases: an initial problem read-in operation at the very beginning, and a solution and statistics output phase at the end.

The read-in stage is straightforward: processor 0 reads the problem instance data and broadcasts it to all other processors. The solution output phase is also relatively straightforward: in the absence of enumeration, the processor \(p^*\) holding the final incumbent solution simply outputs it directly or through processor 0. When enumeration is being used, this phase is more complicated, but consists essentially of a synchronous parallel sort-merge operation to output the entire repository in objective-value order. Finally, some synchronous MPI reduction operations gather performance statistics for output by the processor 0.

A critical aspect of the implementation is detecting the end of the asynchronous search phase, so that the solution output phase can commence. Detecting termination is simple for parallel programs that are organized on a strictly “master-slave” or hierarchical principle. PEBBL has a more complicated messaging pattern with multiple asychronous processes, introducing subtleties into the detection of termination.

Essentially, PEBBL terminates when it has detected and confirmed “quiescence”, the situation in which all worker subproblem and hub token pools are empty, and all sent messages have been received. To confirm quiescence, PEBBL uses a method derived from [38], but adapted and specialized to its particular processor organization and messaging pattern. All PEBBL processors keep count of the total number of messages they have sent and received (other than load-balancing sweep and termination detection messages). These counts are aggregated at the hub processors, and (if there is more than one hub) through the message sweeps of the load-balancing tree. Thus, it is straightforward for processor 0, the hub of the cluster at the root of the load-balancing tree, to detect the situation in which the subproblem workload in the system sums to zero, and the total counts of sent and received messages are in balance. We call this situation “pseudoquiescence”. Pseudoquiescense is necessary for search termination, but it is not sufficient because the measurements making up the various sums involved are typically not taken at exactly the same time. For efficiency reasons relating to PEBBL’s specific messaging pattern, especially if enumeration is active, detection of pseudoquiescence is a two-stage process. The first detection level involves only messages relating to work distribution, but not incumbent or repository management, and it is easily triggered at processor 0 as part of the ordinary process of load balancing. If the first level of pseudoquiescence is detected, an additional “quiescence check” pattern of messages confirms whether the total count of all messages sent and received appears to balance. If this check confirms that pseudoquiescence has indeed occurred, PEBBL proceeds to a second check, the “termination check”.

Suppose that pseudoquiescence has occurred, and the total number of messages sent and received is m. It is shown in [38] that to confirm that true quiescence has occurred, it is sufficient to perform one additional measurement of the total number of messages sent at every processor and verify that its sum is still m. PEBBL uses an additional message sweep to confirm whether this is the case.

3.9 Checkpointing

PEBBL has a checkpointing feature that allows partial runs of branch-and-bound search to be resumed at a later time. This feature can be useful when a system failure or expiration of allotted time stops a PEBBL run before its natural completion. Note that some MPI implementations, for example Open MPI [26], now provide their own transparent checkpointing features, which could be used instead. However, PEBBL’s application checkpointing feature has some capabilities that transparent checkpointing does not, such as the ability to restart a run with different parameter settings or even a different number of processors.

PEBBL’s checkpointing feature is integrated into its termination-detection mechanism. A run-time parameter can be used to specify that PEBBL writes a checkpoint approximately every t minutes after the synchronous ramp-up phase. Once PEBBL detects that a checkpoint is due to be written, it signals all processors through the same process used to signal termination. Upon receiving the checkpoint signal, workers stop processing subproblems, and PEBBL waits until it has confirmed that there are no messages in transit, using the same procedure it employs for termination detection. Once this condition has been confirmed, each processor writes a binary checkpoint file. This file typically consists of the subproblems and tokens in the processor’s memory. Writing the file reuses the methods for constructing work-distribution messages, so no additional customization of PEBBL is needed to support checkpointing. However, PEBBL also provides virtual methods that can be customized to include additional application-specific internal state information in checkpoints.

PEBBL provides two methods, “restart” and “reconfigure”, that support restarting a run from a collection of checkpoint files. For a restart, the number of processors and the processor cluster configuration must exactly match the run that wrote the checkpoint. In this case, each processor reads a single checkpoint file, which is a potentially parallel operation. For a reconfigure, the number of processors and the clustering arrangement may be different from the one that wrote the checkpoint. In this case, processor 0 simply reads the checkpoint files one-by-one, and distributes subproblems as evenly as possible to the worker processors using a round-robin message pattern. The reconfigure mechanism is more flexible than the restart mechanism, but it is potentially much slower because it requires a serial pass through all the checkpoint data.

4 Application to maximum monomial agreement

4.1 The MMA problem and algorithm

To illustrate PEBBL’s performance and capabilities, we now describe its application to the maximum monomial agreement (MMA) problem. Eckstein and Goldberg [18] describe this problem and an efficient serial branch-and-bound method for solving it. An earlier, less efficient algorithm is described in [25], and a slightly more general formulation of the same problem class may be found in [13]. The algorithm described in [18] uses a combinatorial bound not based on linear programming, and it significantly outperforms applying a standard professional-quality MIP solver. This property makes MMA a practical example of applying branch-and-bound in a non-MIP setting. Conveniently, the algorithm in [18] had already been coded using the PEBBL serial layer, so it was only necessary to extend the implementation to incorporate the parallel layer. We now give a condensed description of the MMA problem and solution algorithm; further details may be found in [18].

The goal of MMA is to find a logical pattern that “best fits” a set of weighted, binary-encoded observations divided into two classes, in the sense that it maximizes the difference between the weight of matching observations from one class and the weight of matching observations from the other class. This problem arises as a natural subproblem in various machine learning applications. Each MMA instance consists of a set of M binary N-vectors in the form of a matrix \(A\in \{0,1\}^{M\times N}\), along with a partition of its rows into “positive” observations \(\varOmega ^+\subset \{1,\ldots ,M\}\) and “negative” observations \(\varOmega ^- = \{1,\ldots ,M\}{\setminus } \varOmega ^+\). Row i of A, denoted by \(A_i\), indicates which of N binary features are possessed by observation i. In a medical machine learning application, for example, each feature could represent the presence of a particular gene variant or the detection of a particular antibody, while \(\varOmega ^+\) could represent a set of patients with a given disease and \(\varOmega ^-\) a set of patients without the disease. Each MMA instance also includes a vector of weights \(w_i \ge 0\) on the observations \(i=1,\ldots ,M\).

A “monomial” on \({\{0,1\}}^N\) is a logical conjunction of features and their complements, that is, a function \(m_{J,C}:{\{0,1\}}^N \rightarrow \{0,1\}\) of the form

where J and C are (usually disjoint) subsets of \(\{1,\ldots ,N\}\). The monomial \(m_{J,C}\) is said to “cover” a vector \(x\in {\{0,1\}}^N\) if \(m_{J,C}(x)=1\), that is, if x has all the features in J and does not have any of the features in C. We define the “coverage” of a monomial \(m_{J,C}\) as

Define the weight of a set of observations \(S\subseteq \{1,\ldots ,M\}\) to be \(w(S)=\sum _{i\in S}w_i\). The MMA problem is to find a monomial whose coverage “best fits” the dataset \((A,\varOmega ^+)\) with weights w, in the sense of matching a large net weight of observations in \(\varOmega ^+\) less those matched in \(\varOmega ^-\), or vice versa. Formally, we wish to find subsets \(J,C\subseteq \{1,\ldots ,N\}\) solving

When the problem dimension N is part of the input, [18] proves that this problem formulation is \({\mathscr {NP}}\)-hard, using techniques derived from [33].

The main ingredients in any branch-and-bound algorithm are a subproblem description, a bounding function, and a branching rule. For MMA, each possible subproblem is described by some partition (J, C, E, F) of \(\{1,\ldots ,N\}\). Here, J and C respectively indicate the features which must be in the monomial, or whose complements must be in the monomial. E indicates a set of “excluded” features: neither they nor their complements may appear in the monomial. \(F=\{1,\ldots ,N\}\backslash (J \cup C \cup E)\) is the set of “free”, undetermined features. The root of the branch-and-bound tree is the subproblem \((J,C,E,F) = (\emptyset , \emptyset , \emptyset , \{1,\ldots ,N\})\). Subproblems with \(F=\emptyset \) correspond to only one possible monomial and cannot be subdivided.

The details of the bound b(J, C, E, F) may be found in [18] and are not necessary for the discussion here. It involves equivalence classes of observations induced by the set E and has complexity \({{\mathrm{O}}}(MN)\).

The final ingredient required to describe the branch-and-bound method is the branching procedure. Here we only use the most efficient of the branching schemes tested in [18], a ternary lexical strong branching rule. Given a subproblem (J, C, E, F), this method evaluates \(\left| F\right| \) possible branches, one for each element j of F. Given some \(j\in F\), there are three possibilities: either j will be in the monomial, its complement will be in the monomial, or j will not be used in the monomial. These possibilities respectively correspond to the three subproblems, \((J\cup \{j\},C,E,F\backslash \{j\})\), \((J,C\cup \{j\},E,F\backslash \{j\})\), and \((J,C,E\cup \{j\},F\backslash \{j\})\). We use this three-way branching of (J, C, E, F), selecting j through a lexicographic strong branching procedure. Specifically, for each member of j, we compute the three prospective child bounds \(b(J\cup \{j\},C,E,F\backslash \{j\})\), \(b(J,C\cup \{j\},E,F\backslash \{j\})\), and \(b(J,C,E\cup \{j\},F\backslash \{j\})\), round them to 5 decimal digits of accuracy, place them in a triple sorted in descending order, and then select the j leading to the lexicographically smallest triple. A byproduct of this procedure is the following potentially tighter “lookahead” bound on the current subproblem objective:

A fourth possible component of a branch-and-bound scheme is an incumbent heuristic. For the MMA problem, there is no difficulty in identifying feasible solutions; we use the straightforward strategy of [18], which is simply to use (J, C) as a trial feasible solution whenever processing a subproblem (J, C, E, F).

4.2 Parallel implementation in PEBBL

We extended the serial PEBBL implementation tested in [18] to use the PEBBL parallel layer. The fundamental part of this effort was the creation of pack and unpack routines to allow problem instance and subproblem data to be respectively written to and read from MPI buffers. Beyond this basic task, there are three additional, optional implemention steps which may be taken to improve parallel performance when converting a serial PEBBL application to a parallel one:

-

1.

Implementing a synchronous ramp-up procedure, if applicable.

-

2.

Creating an enhanced incumbent heuristic that can run semi-independently from the search process as part of the incumbent heuristic thread.

-

3.

Implementing interprocessor communication for any application-specific state information beyond what can be included in the initial problem instance broadcast or stored within individual subproblems.

Steps 2 and 3 are not applicable to the MMA algorithm described above, because of its simple procedure for generating incumbent solutions and its lack of any “extra” data structures relevant to step 3. However, because of the strong branching procedure, the MMA algorithm does have a secondary source of parallelism that lends itself naturally to PEBBL’s synchronous ramp-up phase.

The time to process each subproblem (J, C, E, F), especially near the root of the branch-and-bound tree, tends to be dominated by the strong branching procedure’s calculations of \(b(J\cup \{j\},C,E,F\backslash \{j\})\), \(b(J,C\cup \{j\},E,F\backslash \{j\})\), and \(b(J,C,E\cup \{j\},F\backslash \{j\})\), one such triple for each \(j\in F\). These calculations are independent and readily parallelizable. While all other calculations are performed redundantly, the ramp-up-phase version of the separation procedure divides the \(\left| F\right| \) undecided features as evenly as possible between the available processors. Then each processor computes the triple of potential child bounds for each feature j that it has been allocated. Each processor rounds the elements of these triples, sorts the elements within each triple in descending order, and determines the lexicographically smallest triple. Next, using MPI’s Allreduce reduction function with a customized MPI datatype and reduction operator, we determine the \(j\in F\), across all processors, leading to the lexicographically minimum triple. This j becomes the branching variable. Another MPI Allreduce operation computes the tightened “lookahead” bound (4).

Overriding PEBBL’s default implementation of the method that senses the end of ramp-up, we terminate the synchronous ramp-up phase when the size of the subproblem pool becomes comparable to the number of features, in the sense that \(\left| {\mathscr {P}}\right| > \rho N\), where \({\mathscr {P}}\) is the set of active search nodes and \(\rho \) is a run-time parameter. In some brief experimentation, we found that \(\rho = 1\) yielded good performance, so we used that value in most of our experimental tests. Below, we refer to \(\rho \) as the “ramp-up factor”.

4.3 Computational testing and scalability results

To demonstrate PEBBL’s scalability, we tested our parallel MMA solver on “Red Sky”, a parallel computing system at Sandia National Laboratories that consists of 2816 compute nodes, each with two quad-core Intel Xeon X5570 processors sharing 48 GB of RAM. Red Sky’s compute nodes are connected by an Infiniband interconnect arranged as a torroidal three-dimensional mesh, with a 10 GB/s link data rate and end-to-end message latencies on the order of one microsecond. Each Red Sky compute node runs a version of the Red Hat 5 Linux operating system. We compiled PEBBL with the Intel 11.1 C++ compiler with -O2 optimization, using the Open MPI 1.4.3 implementation of the MPI library.

The simplest way to use MPI on Red Sky is to launch 8 independent MPI processes on each processing node, one for each of the 8 cores. Thus, a job allocated \(\nu \) compute nodes behaves as an MPI job with \(8\nu \) parallel “processors”. By using shared memory areas rather than the Infiniband interconnect, MPI processes on the same node can communicate faster than processes on different nodes. Our only attempt to exploit this property in configuring PEBBL was to choose cluster sizes that were multiples of 8.

For runs on fewer than 8 “processors”, we simply allocated a single compute node, but launched fewer than 8 MPI processes on it. Otherwise, we always used a multiple of 8 processes. Essentially, we tested all configurations with either \(p = 2^k\) or \(p = 3 \cdot 2^k\) processor cores in the range \(1 \le p \le 8192\), with the exception of \(p = 12\), since 12 is neither less than 8 nor a multiple of 8.

For our tests, we used a collection of nine difficult MMA test instances derived from two data sets in the UC Irvine machine learning repository [1], each converted to an all-binary format using a procedure described in [6], and dropping observations with missing fields. Four of the problems are derived from the Hungarian heart disease dataset, the most challenging one tested in [18], and have 294 observations and 72 features. The remaining five instances were derived from the larger “spambase” dataset of spam and legitimate e-mails, and have 4601 observations described by 75 binary features.

The only difference between different MMA instances derived from the same dataset is in the weights \(w_i\), which strongly influence the difficulty of the instance. To generate realistic weights, we embedded our MMA solver within the LP-Boost column-generation method for creating weighted voting classifiers [12]. This procedure starts by applying its “weak learner”, in this case the MMA solver, with uniform weights. As it proceeds, it uses a linear program to construct the best possible dataset classification thresholding function that is a linear combination of the weak learner solutions obtained so far. The dual variables of this linear program provide new weights from which the weak learner creates a new term to add to the classification rule, and then the process repeats. As observed in [18], the MMA instances generated in this manner tend to become progressively more difficult to solve as the algorithm proceeds. The problems derived from the Hungarian heart disease dataset, which we denote hung23, hung46, hung110, and hung253, respectively use the weights from iterations 23, 46, 110, and 253 of the LP-Boost procedure. The problems derived from iterations before the 23rd were too easy to test in a large-scale parallel setting. The spam-derived instances are spam, spam5, spam6, spam12, and spam26, and respectively use the weights from iterations 1, 5, 6, 12, and 26 of LP-Boost.

In our testing, we left most of PEBBL’s parameters in their default configuration, except those concerned with sizing processor clusters. Processing an MMA subproblem tends to be fairly time-consuming due to the effort involved in computing the equivalence classes used in the bound calculation, which is multiplied by the requirements of the strong branching procedure. Because subproblems take relatively long to process—on the order of 0.01–0.02 s for the heart disease problems, and 0.7–0.8 s for the problems derived from the much larger spam dataset—a single hub can handle a large number of workers without becoming overloaded. Consequently, we set the cluster size to 128 processor cores. In clusters below 64 processor cores, we configured the hub to double as a worker.

Our first set of tests did not use enumeration. To demonstrate the usefulness of PEBBL’s synchronous ramp-up procedure, we ran each problem instance in two different modes, one with the default value of \(\rho =1\), and one with \(\rho =0\), which essentially disables the synchronous ramp-up procedure and starts the asynchronous search from the root subproblem. Because PEBBL’s run-time behavior is not strictly deterministic, we ran each combination of problem instance and \(\rho \) value at least 5 times, with the exception of runs on a single processor core. Such single-core runs used only PEBBL’s serial layer, which has deterministic behavior.

Tables 1, 2, 3, 4, 5, 6, 7, 8, and 9 show the results, one table per problem instance. The range of processor cores shown varies by problem instance: four of the spambase problems are too time-consuming to run on small processor configurations, and so their smallest number of processors is 8. For all the problem instances, we stopped increasing the number of processor cores after the relative speedup level had departed significantly from linear, or when we reached 8192 processor cores. All CPU times are in seconds, and the “Spdp.” and “Eff.” columns display relative speedup and relative efficiency, respectively. For problems too difficult to solve on a single processor core, these values are computed by linearly extrapolating the average time of the runs with the fewest cores to estimate the single-core running time. The “Tree growth” columns display the size of the search tree relative to the run(s) with the fewest cores.

Figures 4 and 5 graphically display the run time information for two of the tables. Figure 4 depicts the hung110 problem, which is of modest difficulty, and Fig. 5 shows spam26, the most difficult problem. Each graph uses a log-log scale, with processor cores on the horizontal access and run time (in s) on the vertical axis. On such a graph, perfectly linear speedup is represented by a straight line. On each graph, the dashed straight line shows an extrapolation of perfect linear relative speedup from the smallest procssor configuration tested. The “+” marks depict the runs without the synchronous ramp-up phase (\(\rho =0\)), and the “\(\times \)” marks represent the runs with a full synchronous ramp-up phase (\(\rho =1\)). For each combination of problem instance, number of processors, and \(\rho \) value, we computed the arithmetic mean of the sampled run times. The solid lines in each figure trace these means for \(\rho =1\), and the dotted lines show them for \(\rho =0\).

The following observations are apparent from the tables and charts:

-

Using a synchronous ramp-up phase improves scalability for all problem instances. Its effect is limited for relatively small numbers of processors, but as one increases the processor count it eventually significantly increases efficiency and reduces tree growth.

-

Speedups are close to linear over a wide range of processor configurations for all the problem instances, with the point of departure from linear speedup depending on the difficulty of the subproblems and the size of its search tree. For the problem with the smallest search tree, spam, behavior departs signficantly from linear at about 48 processor cores for \(\rho =1\) and 16–24 processor cores for \(\rho =0\). For the instance with the largest search tree, spam26, relative speedup remains essentially linear, with an efficiency of 94 %, even at 6144 processor cores. For 8192 cores, efficiency is still 89 %.

-

By applying a sufficient number of processors, the solution time for each instance can be reduced to the range of approximately 1–3 min. For example, spam26 can be solved in less than 3 min on 6144 processor cores, whereas solving it on 8 processor cores takes over 27 h, from which it may be extrapolated that solution on one processor core would require over 9 days.

-

Since there is no significant departure from ideal linear speedup when moving from 1 processor core to 2 processor cores, it may be inferred that the overhead imposed by moving from PEBBL’s serial to parallel layer is essentially negligible for the MMA class of problems.

-

There is no noticeable loss of efficiency in moving from a single processor cluster (in the runs with 128 or fewer cores) to multiple processor clusters. For example, the spam26 instance shows linear relative speedup between 128 and 4096 processor cores, even though the 128-processor configuration has a single cluster and the 4096-processor configuration requires the coordination of 32 such clusters.

-

Tree growth is the primary reason that speedups begin to depart from linear as more processors are applied. At the beginning of the asynchronous search phase, a large number of processors may need to share a relatively small pool of subproblems, with the result that some processors will evaluate subproblems that would have eventually been pruned prior to evaluation in a run with fewer processors. These subproblems may be subdivided multiple times and persist in the work pool until the final incumbent value is found. This form of inefficiency results from a combination of (1) the application not having a strong incumbent-generating heuristic and (2) it being increasingly difficult to approximate a best-first search order as the number of processors increases. In applications where heuristics can generate high-quality initial incumbents early in the solution process (e.g. the quadratic assignment problem), the main impediments to scalability would instead be processor idleness caused by a lack of available subproblems and inefficiencies in moving subproblems between processors.

-

Modestly superlinear speedups, corresponding to efficiencies slightly higher than 1.00, are sometimes observed. We believe that the main reason for this phenomenon is that more cache memory becomes available to the computation as the number of processor cores increases. PEBBL’s parallelization has sufficiently low overhead that such speedup effects are observable. In Sect. 5 below, we investigate a more dramatic version of this cache-related speedup effect using a simple knapsack algorithm.

Our second set of tests used PEBBL’s enumeration feature. One principle application of the MMA is in a column-generation setting, and column generation algorithms typically try to add several dozen columns to the master problem at a time. Hence, enumeration is a realistic application for MMA.

We used the same set of problems as above, but with \(\mathtt{enumCount } = 25\), instructing PEBBL to find a best possible set of 25 solutions. The enumCount enumeration mode places the largest additional communication burden on the PEBBL parallel layer. Generally, enumerating multiple solutions means exploring a larger search tree. We observed that the base tree size (for the smallest number of cores tested) changed little for the heart disease problems, but increased by roughly 25 % for the spam problems.

Tables 10, 11, 12, 13, 14, 15, 16, 17, and 18 show results of the experiments using enumeration. We tested only \(\rho =1\), the more efficient ramp-up setting from the single-solution tests. Overall, the results are similar to those without enumeration. In general, scalability is slightly worse in the heart disease problems, since the base search trees are of similar size but the implementation has more communication overhead (especially during the synchronous ramp-up phase, because synchronizing the repository requires significant communication). The results for the spam-detection problems are quite similar to those without enumeration; in some cases scalability improves slightly due to the larger base search tree. Enumeration’s extra communication overhead is of less concern for the spam problems, because a similar amount of overhead is “amortized” over more time-consuming subproblems.

Number of level-3 cache misses, summed across all processors, for the runs shown in Fig. 6

5 Cache-related superlinear speedups

Some of the data tables contain a curious phenomenon: numerous relative efficiencies are greater than 1.00, indicating that speedups are slightly better than linear. One mechanism through which such a phenomenon can occur, depending on the subproblem pool ordering and the means of generating incumbent solutions, is that using more processors may allow an incumbent solution to be found at a relatively earlier point in the search process, which shrinks the size of the search tree. However, that is not the case here, because tree sizes gradually increase with the number of processors.

Instead, the slightly superlinear results are due to processor memory cache behavior. We demonstrate this by studying an instance of a different problem class in which the effect is far more pronounced. Figure 6 shows the speedup behavior on Red Sky of a PEBBL knapsack application on a 3000-object binary knapsack problem in which the object weights and values are strongly correlated. This knapsack application is distributed with PEBBL, but we did not use this application for our main numerical tests because it does not implement a competitive method for solving knapsack problems: its algorithm is equivalent to using the linear programming relaxation of the problem without any cutting planes. However, it does serve as a simple demonstration application of PEBBL that has rather different properties from the MMA problem. In particular, subproblems tend to evaluate extremely quickly for this problem class: the runs shown in Fig. 6 all evaluated approximately 200 million subproblems. To avoid congestion at the hubs, we used a much smaller cluster size than in MMA; we selected a cluster size of 8, which matches the number of cores on each Red Sky node, and configured the hubs to be “pure” (i.e. hubs do not also function as workers). Otherwise, we used PEBBL’s default configuration settings (see Sect. 4.3). We tried all multiples of 8 processor cores from 8 to 128, solving the problem instance three times for each configuration.

As can be seen in the figure, significant and reproducible superlinear speedup occurs. To explain this phenomenon, we first checked the size of the search tree explored, but it was virtually constant. We then instrumented the executable code using Open|SpeedShop [44], and observed that the total number of machine instructions executed across all processors grew slightly between 8 and 16 processor cores, and then remained essentially flat. Thus, the only possible explanation for the superlinear speedup is that the average instruction execution time fell as we added more processors. In turn, the only reasonable explanation of this reduction in instruction time is the use of cache memory. Figure 7 shows the total number of level-3 cache misses across all processors, using information also obtained from Open|SpeedShop. Clearly, the larger processor configurations are able to keep a larger fraction of their working data within cache. Even with the higher relative communication overhead arising from the knapsack problem’s easier subproblems (as compared to MMA), the communication overhead of PEBBL is small enough that the cache effects dominate and speedups are reproducibly superlinear over a broad range of processor counts.

Although true superlinear speedups are sometimes considered theoretically impossible, it is important to note that the classical definitions of speedup and efficiency do not consider memory speed. In practice, as the number of processors increases, the memory resources available to an application often increases as well, including the total amount of each level of cache memory. If the total memory needed to store the active search pool is of a similar magnitude to the total amount of cache memory across all processors, adding cache may increase efficiency more than interprocessor communication overhead decreases it. Despite the apparent complexity of PEBBL’s communication, its overhead is low enough that this situation can indeed occur.

For various kinds of algorithms, superlinear speedups attributed to cache effects have been observed since at least the 1990s [7, 53]. More recent examples of such effects are remarked upon in [52], which studies matrix multiplication methods, in [8], which describes matrix factorization methods specifically designed to elicit such effects for particular cache architectures, and in [29], which describes a robot motion planning system. We are not aware of any previous claims of superlinear speedup for branch-and-bound algorithms.

6 Concluding remarks

A clear conclusion from our results is that branch-and-bound algorithms can be highly scalable for applications with large search trees. For the hardest problem instance we considered, spam26, we obtained 99 % relative efficiency on 4096 processor cores, 94 % on 6144 cores, and 89 % on 8192 cores. As opposed to some earlier published massively parallel results, we calculated our relative efficiencies relative to base runs with minimal numbers of processors, only 8 cores in the case of difficult instances like spam26, and only a single core for the easier ones. We thus demonstrate good scaling more definitively and over a wider range of processor counts than in prior published branch-and-bound work. Despite the complexity of PEBBL’s parallelization strategy, it is apparent from our results that it does not add appreciable overhead to the search process for the MMA and knapsack applications. For harder problem instances, furthermore, PEBBL can maintain nearly the same scalability when enumerating multiple MMA solutions (for easier problem instances, enumeration takes a greater toll on scalability, but it remains good).