Abstract

Alzheimer’s disease (AD) is a neurological syndrome of progressive memory loss that eventually leads to an inability to perform everyday tasks, and to death. Since no known cure for this disease exists, it is crucial to detect it early, before symptoms appear. This study used Florbetapir PET (specifically AV-45 PET scans) as a neuroimaging biomarker to develop a 3-Dimensional Ensemble Net for multi-class Alzheimer’s categorization. Our research is the first to examine in depth the outcomes of three different feature extraction methods: the 3D Subject, 3D Slice, and 3D Patch extraction approaches. Within the 3D slice approach, Alzheimer’s detection from AV-45 PET scans was further performed using three distinct slicing algorithms: the Subset Slice Algorithm (SSA), the Uniform Slice Algorithm (USA), and the Interpolation Zoom Algorithm (IZA). We also tested the classification accuracy of the 3D patch-based technique with patch dimensions ranging from small to medium to large. Amyloid Positron Emission Tomography (AV45-PET) scans from the ADNI repository were used to build the 3-Dimensional Ensemble Net model. The raw AV45-PET scans were pre-processed by averaging, registration to a standard template, and skull removal, and were further augmented by rotation. For the 3D subject-based technique, two separate 3D-ConvNets were ensembled. For the 3D patch-based approach, non-overlapping patches of size 32, 40, 48, 56, 64, 72, 80, and 88 were extracted and given to the Ensemble Net. For the 3D slice-based technique, three algorithms were applied to extract slices from an AV45-PET scan and stack them back into a 3D volume.
Our results showed that (1) the three-class classification accuracy of our Ensemble Net model using AV-45 PET images was 92.13%, the highest accuracy attained so far to our knowledge. (2) The 3-Dimensional patch extraction approach was the most accurate for Alzheimer’s categorization using Florbetapir PET images, followed by the subject approach and then the slice approach, with accuracies of 92.13%, 91.01%, and 90.44%, respectively. (3) With the 3D patch approach, the Ensemble Net achieved its highest accuracy with larger patches (dimensions 72, 80, 88), followed by moderate patches (48, 56, 64) and finally smaller patches (32, 40): larger patches were classified correctly 92.13% of the time, medium patches 80.89% of the time, and small patches 74.63% of the time. (4) With the 3D slice approach, uniform slice extraction and the interpolation zoom technique, with 90.44% and 88.2% accuracy, outperformed subset slice selection, with 81.46% accuracy. In summary, using the Ensemble Net model with a 3D patch-based feature extraction approach, we labeled Alzheimer’s disease in a three-class categorization from amyloid PET scans with an accuracy of 92.13%. Evaluating the proposed model under several neuroimaging feature computation approaches shows that the 3D-patch based Ensemble Net outperforms both the 3D-subject based Ensemble Net and the 3D-slice level model in accuracy. In addition, a series of experiments with patch dimensions ranging from small to moderate to large was conducted for the 3D-patch approach to investigate the impact of patch size on Alzheimer’s classification accuracy. For the 3D-slice approach, the slice-level technique was evaluated with three distinct algorithms to determine the optimal strategy, revealing that the uniform slice and interpolation zoom methods outperform the subset slice method.


1 Introduction

Alzheimer’s disease (AD) is a degenerative brain condition that leads to progressive memory loss. It is the most common cause of dementia, accounting for 60–80% of cases. It cannot be cured or reversed; only the progression of brain abnormalities can be delayed. People with Alzheimer’s disease may find that routine tasks become more difficult to complete than before. Symptoms include memory problems, difficulty finding the right words for things, confusion about time or place, difficulty managing money or paying bills, changes in mood, personality, or judgement, and misplacing or being unable to find items. As a result, Alzheimer’s patients require considerable attention, time, and money. Around 55 million people worldwide have Alzheimer’s disease, a number expected to rise to approximately 139 million by 2050, with the cost of caring for these patients rising to 2.8 trillion dollars (Alzheimer’s Disease International 2022).

Senile plaques and neurofibrillary tangles are the two forms of brain lesions that cause Alzheimer’s disease. In the human brain, an imbalance in amyloid-beta protein causes it to clump into unwanted fibrils, resulting in senile plaques. These plaques accumulate in more and more regions of the brain over time, affecting learning, memory, and other functions. The aberrant buildup of tau filaments inside cells leads to the formation of neurofibrillary tangles, which eventually cause neuronal death. These lesions can cause reading difficulties, a poor sense of direction, inattentiveness, short-term memory loss, poor object recognition, poor judgement, and impulsivity, among other symptoms. Many clinical trials aimed at reducing senile plaques have failed, and shrinking the plaques alone is not enough to eliminate the condition. Which of the two lesions appears first in the brain is still being researched.

A number of biomarkers have been identified for Alzheimer’s identification, including brain imaging, blood and urine tests, biospecimens, and genetic data (Khan 2016a, b). Neuroimaging biomarkers are classified into three groups according to whether they capture the brain’s structure, function, or molecular composition (Márquez and Yassa 2019). Functional and molecular imaging can identify increased amyloid deposition, which contributes to Alzheimer’s disease (Perani 2014; Shirbandi et al. 2021). Florbetapir PET scans are one of the indicators that can assist in the early diagnosis of Alzheimer’s disease progression (Morris et al. 2016), helping to track the transition from healthy control to mild cognitive impairment and then to Alzheimer’s disease.

Deep learning models, which can identify even subtle variations, offer considerable potential here (Goenka and Sharma 2020; Yadav and Goenka 2021). Multiple studies have used deep learning for early Alzheimer’s diagnosis (Reith et al. 2020). Convolutional neural networks are increasingly employed in medical image analysis for disease detection, displacing manual feature extraction (Sharma et al. 2022; Goenka and Tiwari 2021a). Diagnosing AD with conventional hand-crafted feature extraction from several biomarkers is harder and also requires subject expertise (Camus et al. 2012; Johnson et al. 2013).

We designed a 3-Dimensional Ensemble Net model for Alzheimer’s three-class categorization using Amyloid PET scans (AV45-PET) from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) (ADNI Dataset 2022). This research makes four contributions:

i. To start, the 3D Ensemble Net framework was created by extracting patches from volumetric Amyloid PET scans to categorize AD, NC (Normal Control), and MCI (Mild Cognitive Impairment), achieving an accuracy of 92.13%, the highest to our knowledge.
ii. Next, on the same dataset, we tried several neuroimaging feature extraction approaches (3-Dimensional Subject, Patch, and Slice based) to find the best one for Alzheimer’s classification.
iii. Furthermore, we used the patch feature extraction technique to investigate the impact of using smaller, intermediate, and larger patches.
iv. Lastly, the slice-based algorithms USA, SSA, and IZA were employed to identify which is best for Alzheimer’s categorization.

The article is organized as follows. Section 2 discusses the frameworks adopted by previous researchers for Alzheimer’s identification using amyloid PET. Section 3 introduces the Ensemble Net architecture, which applies 3D-ConvNets to Florbetapir PET scans for three-class (NC vs. MCI vs. AD) Alzheimer’s categorization, together with the dataset and the pre-processing and augmentation procedures applied. Section 4 presents the experimental findings for Alzheimer’s multi-class and binary categorization using each neuroanatomical computational approach. Section 5 reviews the model’s methodology and presents future scope and conclusions.

2 Related work

This section reviews how Amyloid PET scans have been used in multiple studies for Alzheimer’s detection, and the feature extraction methods researchers have used for Alzheimer’s categorization.

Alzheimer’s identification began with complex, time-consuming manual feature extraction procedures using a variety of tools such as the SPM toolbox (SPM 2022), FreeSurfer (FreeSurfer 2022), DARTEL (DARTEL Toolbox 2022), FLIRT registration (FLIRT 2022), FSL (FSL 2022), ANTs (ANTs 2022), and others, followed by classification as AD or NC. Artificial intelligence algorithms such as neural networks have since surpassed this manual approach, given its complexity, the domain expertise it requires, and the availability of greater processing capability (Kruthika et al. 2019; Haleem et al. 2019). Owing to their ability to model non-linear relationships, deep learning algorithms are now taking the lead over conventional machine learning algorithms (Qin and Tian 2018; Goenka et al. 2021).

To identify Alzheimer’s disease, several studies have used Deep Boltzmann Machines (Suk et al. 2014), Convolutional Autoencoders (Hosseini-Asl et al. 2018), Gated Recurrent Units (Lee et al. 2019), Bi-LSTM (Bi-directional Long Short-Term Memory) (El-sappagh et al. 2020), Convolutional Neural Networks (Wen et al. 2020), Attention Models (Zheng et al. 2022; Xiao et al. 2022), Generative Adversarial Networks (GAN) (Kang et al. 2021), and several other networks (Liu et al. 2015; Qiu et al. 2018). In our research, however, we concentrated on 3-Dimensional Convolutional Neural Networks (Li et al. 2020; Huang et al. 2019) rather than 2-Dimensional ones (Janghel and Rathore 2020; Wang et al. 2018). The primary difference between 2-Dimensional and 3-Dimensional ConvNets is that the three-dimensional network retains the volumetric (inter-slice) context of the scans (Goenka and Tiwari 2021b). The 3D-EnsembleNet combines two 3D-ConvNets to convolve over the voxels of a PET scan while preserving spatial features.

Yuan et al. (2018) used a 3-Dimensional CNN to discriminate between amyloid-positive and amyloid-negative scans and to estimate the SUVr (Standard Uptake Value ratio), using 1072 Florbetapir PET images from the ADNI database. The 178 AD, 525 MCI, and 369 NC scans were spatially normalized to MNI (Montreal Neurological Institute) template space and fed into a deep learning architecture, achieving 95% accuracy, 92% sensitivity, and 98% specificity.

Sahumbaiev et al. (2018) designed a deep neural network using 732 PET scans from the ADNI repository. The 237 AD, 87 MCI, and 408 CN scans were pre-processed with different normalization techniques, spatially co-registered using the AAL (Automated Anatomical Labeling) atlas, and then passed to a deep neural network trained with successive forward and backward propagation stages. With a softmax activation function in the output layer, the network achieved 90% sensitivity and 87% specificity.

Choi and Jin (2018) concatenated FDG and AV-45 PET images from the ADNI database after applying several pre-processing techniques such as co-registration, voxel standardization, smoothing, and rescaling. This feature volume was fed into a 3-Dimensional CNN trained on binary (AD versus NC) classification data, which correctly predicted MCI-to-AD conversion 84.2% of the time.

Ozsahin et al. (2019) used 500 AV-45 PET images to evaluate four distinct binary classifications by extracting several patches from the scans and feeding them into a back-propagation neural network. One hundred PET scans each of AD, LMCI, EMCI, SMC, and NC subjects were run through a pre-processing pipeline of co-registration, averaging, standardization, and smoothing to a uniform resolution before being fed into the network. The accuracy was 87.9% for AD vs. NC, 66.4% for NC vs. LMCI, 60.0% for NC vs. EMCI, and 52.9% for NC vs. SMC.

Punjabi et al. (2019) created single-modality and multi-modality frameworks using T1w-MRI (T1-weighted Magnetic Resonance Imaging) and AV-45 PET scans. The 585 AV-45 PET images were pre-processed by registration to the MNI152 template, averaging of multiple scans from the same patient, and skull stripping, and were then fed as complete volumes into a 3-Dimensional convolutional neural network. Their voxel-based neuroanatomical method combined with the 3D CNN achieved 85.15% accuracy for AD versus NC.

The work by Huang et al. (2019) focuses on hippocampal ROI feature extraction using 2145 T1w-MRI and FDG-PET images from 1211 subjects. The scans were pre-processed before being passed to a 3D-CNN based on VGG-Net. On the two datasets, namely the segmented and paired datasets, the model achieved 90.10% accuracy for AD versus NC when both modalities were used, compared with 89.11% for PET scans alone and 81.19% for MRI scans alone.

Using MRI and PET scans from ADNI, Zhang and Shi (2020) proposed a four-class categorization (NC vs. pMCI vs. sMCI vs. AD) with 86.15% classification accuracy. The pre-processed scans of 500 participants were fed into a deep multimodal fusion network that uses a 2-Dimensional CNN and an attention model to capture low- and high-level information; accuracy was 89.79% for sMCI vs. pMCI and 95.21% for AD vs. NC.

Peng et al. (2021) distinguished amyloid-positive from amyloid-negative scans with 100% accuracy, training MCDNet-2 (a Monte Carlo de-noising network combined with a Generative Adversarial Network, GAN) on Amyloid PET scans from 25 subjects (Table 1).

Although these studies used deep neural networks, little research has examined the different neuroanatomical computational approaches to brain structure. Models built with 3-Dimensional subject-, patch-, and slice-based techniques differ in classification accuracy, yet each prior study employed only one feature extraction approach. Much more research is needed to assess the suitability of these methodologies for a given type of model. Furthermore, binary and three-class classification accuracy must be improved.

This work is distinctive in these respects. First, three-class classification accuracy has substantially improved; as far as we can tell, it is the highest accuracy achieved so far. Second, the relevance of several neuroanatomical computing algorithms was demonstrated on the same dataset. Third, multiple patch sizes were evaluated to determine which gives the best accuracy. Finally, three slice selection algorithms were compared to determine the best one for the slice-based approach.

3 Materials and methods

3.1 Methodology

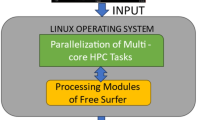

In this section, we present the framework and algorithm for Alzheimer’s detection, which consists of the following main components: 1. Pre-processing pipeline, 2. Data augmentation, 3. Voxel-based (subject-based) method with 3D-EnsembleNet, 4. Patch-based method with 3D-EnsembleNet, 5. Slice-based method with 3D-EnsembleNet. Figure 1 presents the framework for Alzheimer’s categorization using the different neuroanatomical computational methodologies.

3.1.1 Pre-processing technique

The objective of the pre-processing pipeline is to reduce the various kinds of noise that may arise during the acquisition of Amyloid PET scans, so that the neural network no longer needs to learn to correct or ignore these biases. The EnsembleNet can then focus on the patterns that distinguish healthy, mildly impaired, and Alzheimer’s scans. Pre-processing in the proposed framework consists of averaging multiple PET images, registration with FLIRT, and volumetric skull stripping with the BET tool.

3.1.1.1 Averaging PET scans

Amyloid AV-45 PET scans were acquired 50 min after injection of 370 MBq of 18F-florbetapir, in dynamic list mode for 20 min. The mean_img function of the nilearn library was used to average the multiple PET scans collected for each individual. Each subject’s single averaged PET scan was then further pre-processed and used in our 3D Ensemble Net architecture.
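The averaging step amounts to a voxel-wise mean over a subject’s frames. The minimal NumPy sketch below assumes the frames are already loaded as arrays on the same voxel grid; for NIfTI files, nilearn’s `nilearn.image.mean_img` performs the equivalent average. The helper name `average_frames` and the toy data are hypothetical.

```python
import numpy as np

def average_frames(frames):
    """Voxel-wise mean of several co-registered 3D PET frames of one subject.

    Hypothetical helper: assumes every frame has the same shape and voxel grid.
    """
    stack = np.stack(frames, axis=0)  # shape: (n_frames, X, Y, Z)
    return stack.mean(axis=0)         # shape: (X, Y, Z)

# Usage: four toy frames of a 4x4x4 volume with constant values 0, 1, 2, 3
frames = [np.full((4, 4, 4), float(k)) for k in range(4)]
mean_scan = average_frames(frames)
print(mean_scan.shape, mean_scan[0, 0, 0])  # (4, 4, 4) 1.5
```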

3.1.1.2 Rigid registration

FMRIB’s Linear Image Registration Tool (FLIRT) (FLIRT 2022) is employed in our study to reduce spatial differences arising during scanning. FLIRT registered the Amyloid PET images to the MNI152 T1 template (Fonov et al. 2011) with 2 mm isotropic spacing by applying small rotations and translations. In our pre-processing pipeline, we used 6 degrees of freedom (dof), sinc interpolation, and a correlation-ratio search cost. Equation 1 gives the six-degree-of-freedom transformation (Muschelli 2022b).

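\[ P = \begin{bmatrix} R({rp}_{1}, {rp}_{2}, {rp}_{3}) & f^{\top} \\ \mathbf{0}^{\top} & 1 \end{bmatrix} \qquad (1) \]

where P is the 4 × 4 rigid-body transformation matrix and \(R({rp}_{1}, {rp}_{2}, {rp}_{3})\) is the 3 × 3 rotation matrix defined by the three rotation parameters \({rp}_{1}, {rp}_{2}, {rp}_{3}\).

The translation vector \(f=({f}_{x}, {f}_{y}, {f}_{z})\) along the x, y, and z axes accounts for the other three degrees of freedom.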
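For illustration, the six-degree-of-freedom rigid transform can be assembled as a 4 × 4 homogeneous matrix from the three rotation parameters and the translation vector \(f\). This is a sketch under our own convention (angles in radians, rotations composed as \(R_x R_y R_z\)); FLIRT’s internal parameterisation may differ, and `rigid_matrix` is a hypothetical helper.

```python
import numpy as np

def rigid_matrix(rp1, rp2, rp3, f):
    """4x4 homogeneous rigid-body (6 dof) transform.

    Assumption: rotations (radians) about x, y, z composed as R = Rx @ Ry @ Rz.
    """
    cx, sx = np.cos(rp1), np.sin(rp1)
    cy, sy = np.cos(rp2), np.sin(rp2)
    cz, sz = np.cos(rp3), np.sin(rp3)
    rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    P = np.eye(4)
    P[:3, :3] = rx @ ry @ rz   # three rotation degrees of freedom
    P[:3, 3] = f               # three translation degrees of freedom
    return P

# Rotate a point 90 degrees about z, then shift it 10 mm along x
P = rigid_matrix(0.0, 0.0, np.pi / 2, (10.0, 0.0, 0.0))
point = np.array([1.0, 0.0, 0.0, 1.0])   # homogeneous coordinates
print(np.round(P @ point, 6))            # approximately [10, 1, 0, 1]
```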

3.1.1.3 Skull stripping

The Brain Extraction Tool (BET) (Smith 2002) of the FMRIB (Functional Magnetic Resonance Imaging of the Brain) Software Library (FSL) removes non-brain structures such as the neck, bone, and eyes from the whole AV45 PET images. BET separates the voxels of amyloid scans into brain and non-brain tissue using the surface-based procedure outlined in Algorithm 1. We used a fractional intensity threshold of 0.285 for this separation, which resulted in minimal loss of characteristic features (Goenka and Tiwari 2022).

Algorithm 1

The following algorithm (Muschelli 2022a) is used to extract the brain from the PET scan:

1. Calculate the 2nd and 98th intensity percentiles.
2. Compute the threshold value as 2nd percentile + 0.10 × (98th percentile − 2nd percentile).
3. Clear the background using this threshold value.
4. Calculate the centre of gravity using the non-thresholded data.
5. Compute the brain radius and the median intensity.
6. Compute the brain surface by region growing and iteration.
7. Smooth the surface.
8. Finally, shrink the surface using the median intensity.
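Steps 1 to 5 of Algorithm 1 can be sketched in NumPy as below. This is not FSL’s BET code: our reading of step 4 (an intensity-weighted centre of gravity of the voxels that survive thresholding, with intensities capped at the 98th percentile), the spherical radius estimate in step 5, and isotropic voxels are assumptions, and the surface evolution of steps 6 to 8 is omitted. `initial_brain_estimates` is a hypothetical name.

```python
import numpy as np

def initial_brain_estimates(volume, frac=0.10):
    """Sketch of steps 1-5 of Algorithm 1 (not FSL's BET implementation).

    Returns the background threshold, centre of gravity (voxel units),
    estimated brain radius (voxels) and median intensity inside that radius.
    """
    p2, p98 = np.percentile(volume, [2, 98])            # step 1
    t = p2 + frac * (p98 - p2)                          # step 2
    mask = volume > t                                   # step 3: clear background
    # Step 4 (our reading): intensity-weighted centre of gravity of the voxels
    # that survive thresholding, intensities capped at the 98th percentile.
    coords = np.argwhere(mask).astype(float)
    weights = np.minimum(volume[mask], p98)
    cog = (coords * weights[:, None]).sum(axis=0) / weights.sum()
    # Step 5 (assumption): radius of a sphere with the same volume as the mask,
    # then the median intensity of masked voxels inside that sphere.
    radius = (3.0 * mask.sum() / (4.0 * np.pi)) ** (1.0 / 3.0)
    grid = np.indices(volume.shape).reshape(volume.ndim, -1).T
    dist = np.linalg.norm(grid - cog, axis=1).reshape(volume.shape)
    median_intensity = float(np.median(volume[(dist <= radius) & mask]))
    return t, cog, radius, median_intensity

# Toy volume: a bright sphere (radius 5 voxels) on a dim background
idx = np.indices((21, 21, 21))
sphere = ((idx - 10) ** 2).sum(axis=0) <= 25
vol = np.where(sphere, 100.0, 1.0)
t, cog, radius, med = initial_brain_estimates(vol)
print(round(t, 2), cog, round(radius, 2), med)
```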

Figure 2 shows the complete pre-processing workflow for Amyloid PET scans, from acquisition to skull stripping. The three feature extraction methods are described in Sect. 3.1.3.

3.1.2 Augmentation technique

A common obstacle for deep learning algorithms is a lack of data: models generalize better when large amounts of training data are available, because the chance of overfitting is lower. Techniques such as flipping, rotation, rescaling, shifting by various coordinates, and color correction can therefore be employed to augment the dataset. In our work, SciPy’s ndimage module was used to rotate each original scan by −5° and +5°. Together with the original scans, this triples the dataset size, resulting in a considerable improvement in predictive performance.
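A minimal sketch of the rotation augmentation with `scipy.ndimage.rotate` follows. The rotation plane (`axes=(0, 1)`), linear interpolation, and zero fill are assumptions, since the text specifies only the ±5° angles; `augment_by_rotation` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage

def augment_by_rotation(volume, angles=(-5.0, 5.0), axes=(0, 1)):
    """Return the original scan plus one rotated copy per angle (degrees).

    reshape=False keeps every copy at the original shape so the copies can be
    fed to the same network; voxels rotated in from outside are filled with 0.
    """
    rotated = [ndimage.rotate(volume, angle, axes=axes, reshape=False,
                              order=1, mode="constant", cval=0.0)
               for angle in angles]
    return [volume] + rotated

scan = np.random.default_rng(0).random((32, 32, 16))
augmented = augment_by_rotation(scan)
print(len(augmented), augmented[1].shape)  # 3 (32, 32, 16)
```

With two angles per scan, the augmented set is three times the size of the original, matching the tripling described above.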

3.1.3 Classification models

We investigated three neuroanatomical feature extraction approaches for binary and multi-class Alzheimer’s categorization into AD, CN, and MCI:
(a) 3-Dimensional Subject Based EnsembleNet
(b) 3-Dimensional Patch Based EnsembleNet
(c) 3-Dimensional Slice Based EnsembleNet

EnsembleNet, as used in our research, is a hybrid of two 3-Dimensional Convolutional Networks, each composed of multiple convolution, batch normalization, pooling, dropout, and dense layers. The 3-Dimensional convolutional layer takes 3-D Amyloid PET scans and convolves them with a variety of filters to produce output feature volumes. Mathematically, \({W}_{nm}^{layer}\) is the 3-Dimensional kernel of size I × J × K in the layer-th layer (Qu et al. 2020); it connects the mth input feature volume of the previous layer (layer − 1), \({F}_{m}^{layer-1}\), to the nth output feature volume \({F}_{n}^{layer}\), as shown in Eq. 2.

As shown in Eq. 3, the non-linear activation function \(\sigma (.)\) yields the output feature volume \({F}_{n}^{layer}\).

As shown in Eq. 4, the activation function \({ActFn}^{[layer]}\) operates on the input feature volume \({V}^{[layer]}\) and produces the output feature volume \({IZ}^{[layer]}\).

As depicted in Eq. 5, the pooling function \({PoolFn}^{\left[layer\right]}\) is applied to the output of \({ActFn}^{\left[layer\right]}\), resulting in the output feature volume \({OutFv}^{\left[layer\right]}\).
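The convolution, activation, and pooling steps described above can be illustrated with a naive NumPy forward pass. It is a sketch under our own assumptions ("valid" padding, ReLU as \(\sigma(.)\), non-overlapping 2 × 2 × 2 max pooling) rather than the EnsembleNet configuration, which Keras implements far more efficiently with its `Conv3D` and `MaxPooling3D` layers.

```python
import numpy as np

def conv3d_valid(inputs, kernels, bias):
    """Naive 3D convolution ('valid' padding, cross-correlation as in Keras).

    inputs:  (M, X, Y, Z)     M input feature volumes F_m^{layer-1}
    kernels: (N, M, I, J, K)  kernels W_nm^{layer}
    bias:    (N,)
    returns: (N, X-I+1, Y-J+1, Z-K+1) pre-activation volumes
    """
    _, X, Y, Z = inputs.shape
    N, _, I, J, K = kernels.shape
    out = np.zeros((N, X - I + 1, Y - J + 1, Z - K + 1))
    for x in range(out.shape[1]):
        for y in range(out.shape[2]):
            for z in range(out.shape[3]):
                window = inputs[:, x:x + I, y:y + J, z:z + K]  # (M, I, J, K)
                # sum over m, i, j, k of W_nm * F_m, for every output channel n
                out[:, x, y, z] = np.tensordot(kernels, window, axes=4) + bias
    return out

def relu(v):
    return np.maximum(v, 0.0)   # sigma(.), assumed to be ReLU here

def max_pool3d(v, s=2):
    """Non-overlapping s x s x s max pooling per channel (trailing voxels dropped)."""
    N, X, Y, Z = v.shape
    v = v[:, :X - X % s, :Y - Y % s, :Z - Z % s]
    return v.reshape(N, X // s, s, Y // s, s, Z // s, s).max(axis=(2, 4, 6))

# One input volume, two 2x2x2 kernels (one all-positive, one all-negative)
x = np.ones((1, 4, 4, 4))
w = np.stack([np.ones((1, 2, 2, 2)), -np.ones((1, 2, 2, 2))])
pooled = max_pool3d(relu(conv3d_valid(x, w, np.zeros(2))))
print(pooled.shape, pooled.ravel())
```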

The batch normalization layer standardizes the inputs to a layer, whether these are direct network inputs or activations from preceding layers. The Global Average Pooling layer (Lin et al. 2014) reduces the number of model parameters on the way to Alzheimer’s three-class categorization by averaging over the spatial dimensions, converting an H × W × D input to 1 × 1 × D, that is, a single vector. The fully connected layer at the end of the network forms non-linear combinations of the learned features, allowing the data to be categorized. The dropout layers regularize the proposed workflow by randomly dropping visible and hidden units during training, so that no single unit is responsible for predicting Alzheimer’s disease.
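Global average pooling and dropout are simple enough to write out directly. The sketch below assumes a channels-first (C, X, Y, Z) layout and inverted dropout (the variant used by the Keras `Dropout` layer); the function names are hypothetical.

```python
import numpy as np

def global_average_pool3d(features):
    """(C, X, Y, Z) feature volumes -> (C,) vector: one mean per feature map."""
    return features.mean(axis=(1, 2, 3))

def inverted_dropout(x, rate, rng, training=True):
    """Zero each unit with probability `rate` during training and scale the
    survivors by 1 / (1 - rate); at inference the input passes through unchanged."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return np.where(keep, x / (1.0 - rate), 0.0)

features = np.stack([np.full((6, 6, 3), float(c)) for c in range(4)])
vec = global_average_pool3d(features)          # one entry per feature map
rng = np.random.default_rng(42)
train_out = inverted_dropout(vec, 0.5, rng)    # some entries zeroed, rest doubled
test_out = inverted_dropout(vec, 0.5, rng, training=False)
print(vec, train_out, test_out)
```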

The subsections below describe the proposed framework for each feature extraction approach.

3.1.3.1 3-Dimensional subject based Ensemble Net

The 3D-Subject based Ensemble Net identifies Alzheimer’s disease by merging features from two 3-Dimensional convolutional networks that operate on complete Florbetapir AV-45 PET images. Before being sent to the Ensemble Net, Amyloid PET images are resized to 128 × 128 × 64. Each ConvNet has 13 layers, including multiple convolutional layers, batch normalization, pooling layers (max and global average), and dense layers, as shown in Fig. 3. The features of the two ConvNets are merged in a maximum layer, whose output is passed to the dense layer. In three-class categorization, the final fully connected layer uses a softmax activation function to classify scans as AD, MCI, or NC, whereas in binary categorization a sigmoid activation function distinguishes MCI vs. AD, AD vs. NC, and NC vs. MCI.
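The fusion of the two branches can be sketched as follows, assuming each ConvNet already outputs a feature vector of the same length. For brevity, the sketch collapses the dense layers after the merge into a single output layer, and random weights stand in for trained ones; the element-wise maximum mirrors what a Keras `Maximum` layer computes. `fuse_and_classify` is a hypothetical name.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())      # subtract the max for numerical stability
    return e / e.sum()

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fuse_and_classify(feat_a, feat_b, weights, bias):
    """Element-wise maximum of the two branch feature vectors, followed by one
    dense output layer: softmax if it has several units, sigmoid if it has one."""
    merged = np.maximum(feat_a, feat_b)
    logits = merged @ weights + bias
    return softmax(logits) if logits.shape[-1] > 1 else sigmoid(logits)

rng = np.random.default_rng(0)
feat_a, feat_b = rng.normal(size=8), rng.normal(size=8)   # stand-in branch outputs
three_class = fuse_and_classify(feat_a, feat_b, rng.normal(size=(8, 3)), np.zeros(3))
binary = fuse_and_classify(feat_a, feat_b, rng.normal(size=(8, 1)), np.zeros(1))
print(three_class, binary)
```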

3.1.3.2 3-Dimensional patch based Ensemble Net

The 3D-patch based Ensemble Net has a similar design to the 3D-Subject based Ensemble Net, but its input size differs: in this feature extraction approach, the patch size determines the input dimensions. Figure 4 shows the architecture for patch dimension 72, which uses PET scan patches of size 72 × 72 × 72. PyTorch’s unfold function was used to extract non-overlapping patches from the complete scans, and padding was applied so that the scan dimensions are divisible by the patch size (Goenka et al. 2022). After the blocks are extracted with unfold, the reshape function is used to build 3-Dimensional patches, which are then passed to the EnsembleNet framework.

In addition, we investigated the impact of patch size on accuracy using patch dimensions ranging from small to medium to large: 32, 40, 48, 56, 64, 72, 80, and 88.
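The non-overlapping patch extraction can be sketched in NumPy with padding, a reshape, and a transpose; chaining PyTorch’s `Tensor.unfold(dimension, size, step)` with `size = step = p` over the three axes and then reshaping yields the same patch order. Zero-padding at the end of each axis, and the 128 × 128 × 64 input used in the usage loop, are assumptions rather than details confirmed by the text. `extract_patches` is a hypothetical name.

```python
import numpy as np

def extract_patches(volume, p):
    """Split a 3D volume into non-overlapping p x p x p patches.

    Each axis is zero-padded at its end up to a multiple of p (placement of the
    padding is an assumption). Returns an array of shape (n_patches, p, p, p).
    """
    pad = [(0, (-s) % p) for s in volume.shape]
    v = np.pad(volume, pad, mode="constant")
    nx, ny, nz = (s // p for s in v.shape)
    # split each axis into (block index, offset in block), then group offsets last
    blocks = v.reshape(nx, p, ny, p, nz, p).transpose(0, 2, 4, 1, 3, 5)
    return blocks.reshape(-1, p, p, p)

# Patch counts for an assumed 128 x 128 x 64 input at each patch size studied
scan = np.zeros((128, 128, 64), dtype=np.float32)
for p in (32, 40, 48, 56, 64, 72, 80, 88):
    print(p, extract_patches(scan, p).shape[0])
```

Larger patches mean fewer, more context-rich inputs per scan, which is one plausible reason the patch size affects accuracy.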

3.1.3.3 3-Dimensional slice based Ensemble Net

In the 3D-Slice based EnsembleNet, slices are extracted using three different approaches and stacked into a 3D volume that is passed to the framework. The three slice selection methods used in our study are the interpolated zoom algorithm (IZA) (Zunair et al. 2020), the uniform slice algorithm (USA), and the subset slice algorithm (SSA) (Zunair et al. 2019). The 3-Dimensional Slice based EnsembleNet (Fig. 5) differs from the 3-Dimensional Subject based EnsembleNet in that its input is a volume of concatenated slices retrieved by one of these techniques rather than the whole scan. Moreover, whereas the 3D-Patch based approach covers the whole scan across separate patches, the 3D-Slice based EnsembleNet works from a selected or resampled set of slices and therefore may not capture all of the information in the scan.

Subset slice algorithm (SSA): In this method, slices are extracted from the beginning, middle, and end of the Amyloid PET scan. Because scan depth varies, the middle slices are located by computing half of the scan depth, which keeps the selection consistent across scans. The subsets are then stacked depth-wise to obtain the desired input volume.
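One possible reading of the subset slice algorithm is sketched below: three equal, contiguous groups of slices are taken from the start, the middle (centred on half the depth), and the end of the depth axis. The group size `target_depth // 3` and the centring rule are assumptions; the exact subset boundaries used by Zunair et al. (2019) may differ. `subset_slices` is a hypothetical name.

```python
import numpy as np

def subset_slices(volume, target_depth):
    """Stack equal-sized contiguous slice groups from the start, middle and end
    of the depth (last) axis.

    Assumes target_depth is divisible by 3 and the volume depth >= target_depth.
    """
    depth = volume.shape[-1]
    k = target_depth // 3
    mid = depth // 2                                   # half of the scan depth
    start = volume[..., :k]
    middle = volume[..., mid - k // 2: mid - k // 2 + k]
    end = volume[..., depth - k:]
    return np.concatenate([start, middle, end], axis=-1)

vol = np.broadcast_to(np.arange(30), (2, 2, 30))   # slice k holds the value k
out = subset_slices(vol, 9)                         # keeps slices 0-2, 14-16, 27-29
print(out.shape, out[0, 0])
```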

Uniform slice algorithm (USA): Given the target depth DD and the PET scan input depth PD, the uniform slice method computes a spacing factor \(SpaceFact = PD/DD\). Slices are then selected at intervals of SpaceFact throughout the scan.
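The uniform slice algorithm reduces to indexing the depth axis at multiples of SpaceFact. A minimal sketch, assuming depth is the last axis and fractional positions are rounded down (`uniform_slices` is a hypothetical name):

```python
import numpy as np

def uniform_slices(volume, target_depth):
    """Keep target_depth slices spaced SpaceFact = PD / DD apart along the
    depth (last) axis, where PD is the input depth and DD the target depth."""
    in_depth = volume.shape[-1]                       # PD
    space_fact = in_depth / target_depth              # SpaceFact
    idx = np.floor(np.arange(target_depth) * space_fact).astype(int)
    return volume[..., idx]

vol = np.broadcast_to(np.arange(96), (4, 4, 96))     # slice k holds the value k
out = uniform_slices(vol, 48)
print(out.shape, out[0, 0, :5])                       # (4, 4, 48) [0 2 4 6 8]
```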

Interpolated zoom algorithm (IZA): Each PET volume is rescaled along the z-axis by a factor of \(GD/PD\), where PD is the PET input depth and GD is the goal depth, using spline interpolation of order three. The PET volume is thus stretched or compressed along the depth axis to the required size. Because spline interpolation resamples the whole depth range rather than discarding slices, this method retains more of the characteristics of the input PET scan than the alternatives above.
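The interpolated zoom can be sketched with `scipy.ndimage.zoom`, rescaling only the depth axis by \(GD/PD\) with a cubic spline (`order=3`). Keeping the in-plane axes fixed and using SciPy’s default boundary mode are assumptions; `interpolation_zoom` is a hypothetical helper.

```python
import numpy as np
from scipy import ndimage

def interpolation_zoom(volume, target_depth):
    """Rescale only the depth (last) axis by GD / PD using cubic spline
    interpolation; the other axes keep a zoom factor of 1."""
    in_depth = volume.shape[-1]                                   # PD
    factors = (1.0,) * (volume.ndim - 1) + (target_depth / in_depth,)
    return ndimage.zoom(volume, factors, order=3)

vol = np.random.default_rng(0).random((8, 8, 100))
out = interpolation_zoom(vol, 48)
print(out.shape)   # (8, 8, 48)
```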

3.2 Metrics

Several performance measures are used to evaluate the three Ensemble Nets built in this work for Alzheimer’s detection with the different neuroanatomical computing methodologies. These include accuracy and loss on the training, validation, and testing datasets, as well as the confusion matrix, ROC curves with AUC, precision, sensitivity, and F1-score. Because the proposed framework is intended for the medical domain, it is vital to examine the incorrect predictions as well.

The confusion matrix (Fig. 6) is built from True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN) counts.

Recall (sensitivity) is the classifier’s ability to find all relevant cases within a dataset: \(Recall = TP/(TP + FN)\).

Precision is the number of true positive cases divided by the number of true positive and false positive cases: \(Precision = TP/(TP + FP)\).

Specificity is the number of true negative cases divided by the number of true negative and false positive cases: \(Specificity = TN/(TN + FP)\).
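The metrics above, together with the F1-score (the harmonic mean of precision and recall), can be computed one-vs-rest from a multi-class confusion matrix. The sketch assumes rows are true classes and columns predicted classes; the 3 × 3 matrix in the usage lines is made up for illustration and is not one of the paper’s results.

```python
import numpy as np

def per_class_metrics(cm):
    """One-vs-rest metrics from a square confusion matrix
    (rows = true class, columns = predicted class)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp      # predicted as the class, but belong elsewhere
    fn = cm.sum(axis=1) - tp      # belong to the class, but predicted elsewhere
    tn = cm.sum() - tp - fp - fn
    recall = tp / (tp + fn)                   # sensitivity
    precision = tp / (tp + fp)
    specificity = tn / (tn + fp)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()
    return accuracy, recall, precision, specificity, f1

# Hypothetical NC / MCI / AD confusion matrix (illustrative counts only)
cm = [[8, 1, 1],
      [2, 6, 2],
      [0, 1, 9]]
acc, rec, prec, spec, f1 = per_class_metrics(cm)
print(round(acc, 4), rec, prec, spec, f1)
```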

3.3 Implementation details

An NVIDIA Volta GPU with 32 GB of memory, 5120 CUDA cores, and 640 Tensor cores was used to compile and execute the EnsembleNet framework for Alzheimer’s detection. Keras (Chollet 2015) served as the front end and TensorFlow (Abadi et al. 2016) as the back end. Python was the programming language, with the Anaconda Navigator platform as the IDE (Integrated Development Environment). The model was compiled with the adaptive moment estimation (Adam) optimizer (Kingma and Ba 2015), a learning rate of 0.0001, and a cross-entropy loss function. As given in Eqs. 9 and 10, Adam maintains exponentially decaying averages of past gradients \({\mathfrak{m}}_{t}\) and past squared gradients \({\upnu }_{t}\), where \({g}_{t}\) is the gradient at step t. The decay rates \({\beta }_{1}\) and \({\beta }_{2}\) are set to 0.9 and 0.999, and epsilon ε, a very small constant added to prevent division by zero, is set to \(10^{-8}\).

\[{\mathfrak{m}}_{t} = {\beta }_{1}{\mathfrak{m}}_{t-1} + (1-{\beta }_{1}){g}_{t} \qquad (9)\]

\[{\upnu }_{t} = {\beta }_{2}{\upnu }_{t-1} + (1-{\beta }_{2}){g}_{t}^{2} \qquad (10)\]

These moment estimates are then bias-corrected as indicated in Eqs. 11 and 12, giving \({\widehat{\mathfrak{m}}}_{t}\) and \({\widehat{\upnu }}_{t}\).

\[{\widehat{\mathfrak{m}}}_{t} = \frac{{\mathfrak{m}}_{t}}{1-{\beta }_{1}^{t}} \qquad (11)\]

\[{\widehat{\upnu }}_{t} = \frac{{\upnu }_{t}}{1-{\beta }_{2}^{t}} \qquad (12)\]

Finally, as shown in Eq. 13, the weights are updated from \({\theta }_{t}\) to \({\theta }_{t+1}\) with step size \(\eta\).

\[{\theta }_{t+1} = {\theta }_{t} - \frac{\eta }{\sqrt{{\widehat{\upnu }}_{t}} + \epsilon }{\widehat{\mathfrak{m}}}_{t} \qquad (13)\]
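A single Adam update, written out in NumPy with the hyper-parameters quoted above, makes the moment estimates and bias correction concrete. This is an illustrative sketch, not the Keras optimizer implementation; the quadratic loss in the usage lines is arbitrary.

```python
import numpy as np

def adam_step(theta, grad, m, v, t, eta=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update (Kingma and Ba 2015); t is the 1-based step counter."""
    m = beta1 * m + (1 - beta1) * grad                  # first moment (Eq. 9)
    v = beta2 * v + (1 - beta2) * grad ** 2             # second moment (Eq. 10)
    m_hat = m / (1 - beta1 ** t)                        # bias correction (Eq. 11)
    v_hat = v / (1 - beta2 ** t)                        # bias correction (Eq. 12)
    theta = theta - eta * m_hat / (np.sqrt(v_hat) + eps)   # weight update (Eq. 13)
    return theta, m, v

# One step on the loss theta**2, starting from theta = 1
theta, m, v = np.array([1.0]), np.zeros(1), np.zeros(1)
grad = 2 * theta
theta, m, v = adam_step(theta, grad, m, v, t=1)
print(theta, m, v)
```

On the first step the bias-corrected ratio is close to 1, so the parameter moves by almost exactly the learning rate, which is why Adam’s step size is roughly bounded by \(\eta\).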

Early stopping based on validation accuracy was also used to minimize overfitting, with the maximum number of epochs set to 100.
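Patience-based early stopping on validation accuracy can be sketched as below. The text does not state a patience value, so `patience` here is an assumption; in Keras the analogous mechanism is the `EarlyStopping` callback with `monitor="val_accuracy"`. `early_stopping_epoch` is a hypothetical name.

```python
def early_stopping_epoch(val_acc, patience=10, max_epochs=100):
    """Return (stop_epoch, best_epoch) for a patience-based early-stopping rule
    on validation accuracy. Epochs are 1-based."""
    best, best_epoch, wait = float("-inf"), 0, 0
    for epoch, acc in enumerate(val_acc[:max_epochs], start=1):
        if acc > best:                       # improvement: reset the counter
            best, best_epoch, wait = acc, epoch, 0
        else:
            wait += 1
            if wait >= patience:             # no improvement for `patience` epochs
                return epoch, best_epoch
    return min(len(val_acc), max_epochs), best_epoch

history = [0.50, 0.60, 0.70, 0.69, 0.68, 0.70, 0.65]
print(early_stopping_epoch(history, patience=3))   # (6, 3)
```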

3.4 Materials (dataset)

The data for this research were taken from the ADNI repository. ADNI was launched in 2004 as a public database under the guidance of Dr. Michael W. Weiner. Its main purpose was to compile biomarkers for the early identification of Alzheimer’s disease in a single repository. For this study, Amyloid PET (AV-45) scans were obtained from ADNI-3, which was released in 2016.

The data for this investigation comprised 593 scans from 381 people (33 AD, 438 CN, and 122 MCI scans), divided into three classes as shown in Table 2: AD, CN, and MCI.

AD individuals were diagnosed with Alzheimer’s disease at the beginning of the trial and retained this diagnosis until the data were extracted.

CN individuals were healthy from the start of the study and stayed that way throughout.

MCI individuals had begun to show Alzheimer’s symptoms but had not yet fully converted to AD, and were not as cognitively normal as CN individuals.

4 Experiments and results

This section lists the results of the different feature extraction approaches for Alzheimer’s categorization (three-class and two-class). In each experiment, the pre-processed data were passed through the corresponding feature extraction algorithm, saved, and augmented separately, so that each experiment ran as an independent pipeline.

4.1 3-dimensional subject based EnsembleNet

The 3-Dimensional Subject based Ensemble Net for Alzheimer’s categorization was built with 1311 healthy, 99 Alzheimer’s, and 366 mild cognitive impairment scans, divided into 1420 training, 178 testing, and 178 validation scans. As shown in Fig. 7, the three-class testing accuracy was 91.01%, with a training accuracy of 93.87% and a validation accuracy of 89.32%. Binary classification accuracy was 100% for NC vs. AD, 95.23% for MCI vs. NC, and 97.87% for MCI vs. AD.

The confusion matrix shows 129 correctly classified healthy, 24 MCI, and 9 Alzheimer’s scans. Table 3 shows the recall, precision, and F1-scores for the three classes, together with the number of support scans. Figure 8 depicts the ROC curves for NC, MCI, and AD, with micro-average and macro-average AUC values of 0.93 and 0.86, respectively.

4.2 3-dimensional patch based EnsembleNet

The 3-Dimensional Patch based EnsembleNet framework for CN vs. AD vs. MCI classification was built using 1311 normal, 99 Alzheimer’s, and 366 mildly impaired scans, divided into 1420 training, 178 testing, and 178 validation scans. As shown in Fig. 9, the three-class Alzheimer’s categorization was 92.13% accurate, with training and validation accuracies of 96.26% and 93.82%. For two-class categorization, NC vs. AD was 97.87% accurate, MCI vs. NC 95.23%, and MCI vs. AD 100%.

The confusion matrix in Fig. 10 shows 131 correctly predicted healthy controls, 29 MCI, and 4 Alzheimer’s scans. The performance metrics for the three classes are shown in Table 4, along with the support (number of scans) for each class. Figure 10 also shows the ROC curves for the three classes, with micro- and macro-average AUC values of 0.94 and 0.82, respectively.

Table 5 lists the patch size, dataset samples, training, testing, and validation accuracy, and other performance metrics for three-class categorization with each patch size. As these results show, small patches such as 32 and 40 give poor categorization accuracy. Large patches (72, 80, and 88) achieved the highest testing accuracy, and medium patches (48, 56, and 64) achieved higher accuracy than small patches (32 and 40).

For binary classification, small and medium patches performed fairly well, with testing accuracy of around 92%, while large patches achieved the highest accuracy, as shown in Table 6. AV-45 PET patches of size 72 × 72 × 72 distinguished normal controls from Alzheimer’s disease with 97.87% accuracy, mild cognitive impairment from normal controls with 95.23% accuracy, and Alzheimer’s from mildly impaired scans with 100% accuracy.

4.3 3-dimensional slice based EnsembleNet

The 3-dimensional slice-based EnsembleNet for CN vs. MCI vs. AD classification was built from 366 mild cognitive impairment scans, 1311 normal scans, and 99 Alzheimer’s scans. The USA, SSA, and IZA methods were used to extract slices and recombine them into a 3D volume, and slice depths of 8, 16, 24, 32, 40, 48, 56, 64, 72, and 88 were evaluated. The highest accuracy was obtained with the USA method at a depth of 48 slices: 90.45% on the test set, 95.14% on the training set, and 88.20% on the validation set. For binary classification, accuracy was 92.90% for CN vs. AD, 77.97% for MCI vs. CN, and 87.23% for MCI vs. AD. Figures 11 and 12 show the accuracy and loss curves for three-class Alzheimer’s classification.
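The uniform and subset slicing ideas can be sketched as index-selection rules along the slice axis. This is one common interpretation, in the spirit of the uniformizing techniques of Zunair et al. (2020), and not the authors’ exact USA/SSA definitions, which are not given in this section: USA is assumed to keep evenly spaced slices spanning the whole volume, and SSA a contiguous block around the center. The last axis is assumed to be the slice axis, and the function names and scan shape are hypothetical.

```python
import numpy as np


def uniform_slice_indices(depth: int, n_slices: int) -> np.ndarray:
    """Evenly spaced slice indices covering the full depth (first and last kept)."""
    if not 1 <= n_slices <= depth:
        raise ValueError("n_slices must lie in [1, depth]")
    return np.linspace(0, depth - 1, n_slices).round().astype(int)


def subset_slice_indices(depth: int, n_slices: int) -> np.ndarray:
    """A contiguous block of n_slices slices centered on the middle of the volume."""
    if not 1 <= n_slices <= depth:
        raise ValueError("n_slices must lie in [1, depth]")
    start = (depth - n_slices) // 2
    return np.arange(start, start + n_slices)


def restack(volume: np.ndarray, indices: np.ndarray, axis: int = 2) -> np.ndarray:
    """Gather the selected slices back into a 3D volume along `axis`."""
    return np.take(volume, indices, axis=axis)


# Hypothetical scan with 121 slices along the last axis.
scan = np.random.default_rng(0).random((121, 145, 121)).astype(np.float32)
usa_volume = restack(scan, uniform_slice_indices(scan.shape[2], 48))
ssa_volume = restack(scan, subset_slice_indices(scan.shape[2], 48))
print(usa_volume.shape, ssa_volume.shape)  # (121, 145, 48) (121, 145, 48)
```

Uniform selection keeps information from the whole brain at reduced resolution, while a central subset keeps full resolution but only part of the brain, which is one way to think about why the two behave differently.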

Figure 13 shows the confusion matrix, covering 131 CN, 37 MCI, and 10 AD test scans.
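The per-class test counts can be cross-checked against the dataset composition (1311 CN, 366 MCI, 99 AD). Assuming, since this section does not say so, that the 178-scan test split was stratified by diagnosis, a largest-remainder proportional allocation gives 131 CN, 37 MCI, and 10 AD scans. `proportional_allocation` is a hypothetical helper used only for this check.

```python
def proportional_allocation(class_counts: dict, n_split: int) -> dict:
    """Split n_split scans across classes in proportion to class size
    (largest-remainder rounding, so the counts sum exactly to n_split)."""
    total = sum(class_counts.values())
    exact = {c: n_split * k / total for c, k in class_counts.items()}
    alloc = {c: int(v) for c, v in exact.items()}  # floor of each share
    leftover = n_split - sum(alloc.values())
    # Hand the remaining scans to the classes with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda c: exact[c] - alloc[c], reverse=True)
    for c in by_remainder[:leftover]:
        alloc[c] += 1
    return alloc


dataset = {"CN": 1311, "MCI": 366, "AD": 99}
print(proportional_allocation(dataset, 178))  # {'CN': 131, 'MCI': 37, 'AD': 10}
```

With only about 10 AD scans in the test set, each misclassified AD scan shifts AD recall by roughly ten percentage points, so per-class AD figures should be read with that granularity in mind.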

Tables 7, 8, and 9 report the accuracies and performance metrics for three-class classification across slice depths for the Uniform Slice Algorithm (USA), Interpolation Zoom Algorithm (IZA), and Subset Slice Algorithm (SSA). As the results show, accuracy with small slice depths (8, 16, 24, and 32) is relatively poor. Larger depths (40, 48, 56, 64, and 72) gave acceptable classification accuracy, with 48 slices being optimal. Among the three techniques, uniform slicing outperforms interpolation zoom and subset slicing (Fig. 14).

4.4 Performance comparison with existing state of art approaches

Table 10 shows that, compared with the other two approaches and with previously published work, our 3D patch-based feature extraction approach achieved the highest accuracy. Yuan et al. (2018) used a voxel-based approach to discriminate amyloid-positive from amyloid-negative scans, feeding Florbetapir PET images from ADNI into a deep learning architecture and obtaining 95% accuracy, 92% sensitivity, and 98% specificity. Using 732 PET scans from the ADNI repository, Sahumbaiev et al. (2018) designed a deep neural network with successive backward and forward propagation stages, yielding 90% sensitivity and 87% specificity. Choi and Jin (2018) also used a voxel-based approach and, by concatenating pre-processed FDG and AV-45 PET images from the ADNI database, correctly predicted MCI-to-AD conversion 84.2% of the time.

Ozsahin et al. (2019) took a patch-based approach, extracting several patches from 500 AV-45 PET scans and feeding them into a back-propagation neural network. The authors evaluated four binary classification tasks, achieving 87.9% accuracy for AD vs. NC, 66.4% for NC vs. LMCI, 60.0% for NC vs. EMCI, and 52.9% for NC vs. SMC. Punjabi et al. (2019) built single-modality and multi-modality frameworks using T1w-MRI and AV-45 PET scans; their voxel-based neuroanatomical method combined with a 3D CNN achieved 92.34% accuracy for AD vs. NC. Huang et al. (2019) extracted hippocampal ROI features from 2145 T1w-MRI and FDG-PET images of 1211 subjects and passed them to a 3D CNN (VGG-Net), achieving 90.10% accuracy for AD vs. NC with both modalities, compared with 89.11% using PET scans alone and 81.19% using MRI scans alone. Using MRI and PET scans from ADNI, Zhang and Shi (2020) reported 86.15% accuracy for four-class NC vs. pMCI vs. sMCI vs. AD classification, 89.79% for sMCI vs. pMCI, and 95.21% for AD vs. NC. Peng et al. (2021) distinguished amyloid-positive from amyloid-negative scans with 100% accuracy.

With a patch size of 72 × 72 × 72, the proposed patch-based EnsembleNet achieved 92.13% three-class classification accuracy on AV45-PET scans. The slice-based approach, using the uniform slicing algorithm with a depth of 48 slices, achieved 90.45%. The subject-based approach, in which entire PET scans were fed to the 3-dimensional EnsembleNet, achieved 91.01%.

5 Discussion

The purpose of this work was to find the most promising method for distinguishing Alzheimer’s disease, mildly impaired, and healthy subjects without relying on handcrafted feature extraction. Maximizing AD vs. MCI vs. NC classification accuracy in a way that can support clinicians was challenging because of limited data and the complexity and high dimensionality of the scans. We therefore built a deep ensemble network with several regularization techniques to reduce overfitting and improve accuracy. Our method takes complete volumetric PET scans as input and, to our knowledge, achieves the highest three-class classification accuracy reported so far. The most notable result of this research is an extensive comparison of feature extraction strategies, which shows that the 3-dimensional patch-based approach (Fig. 15) gives higher accuracy than the subject-based and slice-based approaches.
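The abstract describes the subject-based model as an ensemble of two separate 3D ConvNets, but this section does not say how their outputs are combined. The sketch below assumes soft voting, i.e. averaging the members’ softmax probabilities and taking the arg-max; the logits are made-up values standing in for two trained networks, and `soft_vote` is a hypothetical name.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def soft_vote(*member_logits: np.ndarray) -> np.ndarray:
    """Average the class probabilities of several ensemble members."""
    probs = np.stack([softmax(l) for l in member_logits])
    return probs.mean(axis=0)


CLASSES = ("NC", "MCI", "AD")
# Hypothetical logits from two 3D ConvNets for the same two scans.
net_a = np.array([[2.0, 1.0, 0.1], [0.2, 0.4, 1.5]])
net_b = np.array([[1.5, 1.8, 0.0], [0.1, 1.2, 1.0]])

avg = soft_vote(net_a, net_b)
pred = [CLASSES[i] for i in avg.argmax(axis=1)]
print(pred)  # ['NC', 'AD']
```

In the first scan the two members disagree (NC vs. MCI), and averaging lets the more confident member decide; hard majority voting would need a tie-breaking rule for a two-member ensemble.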

In the experiments with non-overlapping patches ranging from small to medium to large sizes, large patches gave the highest accuracy, followed by medium and then small patches. Additionally, as Fig. 16 shows, classification accuracy does not improve as patch size increases beyond a certain point (72 × 72 × 72 in our case) but instead remains stable.

To determine which slice-based algorithm is best suited to multi-class Alzheimer’s classification, we compared several methods. Slices were extracted and concatenated using interpolation zoom, uniform slicing, and subset selection to build a 3-dimensional volume that could be fed to the proposed ensemble network. Figure 17 compares these methods; the uniform slicing strategy outperforms the other two.
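The interpolation zoom idea can be illustrated as resampling the slice axis to a fixed depth. This sketch assumes linear interpolation between neighboring slices in plain NumPy; the authors’ IZA may use a different interpolation order (for example spline-based zooming), and `interpolate_depth` is a hypothetical name.

```python
import numpy as np


def interpolate_depth(volume: np.ndarray, n_slices: int, axis: int = 2) -> np.ndarray:
    """Resample one axis of a 3D volume to n_slices by linear interpolation."""
    vol = np.moveaxis(volume, axis, -1).astype(np.float64)
    depth = vol.shape[-1]
    # Positions of the output slices, expressed in input-slice coordinates.
    pos = np.linspace(0.0, depth - 1, n_slices)
    lo = np.floor(pos).astype(int)
    hi = np.minimum(lo + 1, depth - 1)
    w = pos - lo  # weight given to the upper neighbor
    out = vol[..., lo] * (1.0 - w) + vol[..., hi] * w
    return np.moveaxis(out, -1, axis)


# Hypothetical scan with 121 slices, resampled to 48 slices.
scan = np.random.default_rng(0).random((121, 145, 121))
print(interpolate_depth(scan, 48).shape)  # (121, 145, 48)
```

Unlike index selection, every output slice here blends information from its two nearest input slices, so no part of the brain is skipped entirely, at the cost of some smoothing.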

Although the results are adequate for three-class Alzheimer’s classification, the framework could be extended to four-class classification, AD vs. pMCI vs. sMCI vs. CN (pMCI and sMCI denoting progressive and stable MCI, respectively). A multi-modality extension could also combine AV-45 PET scans with other modalities such as T1w-MRI, T2w-MRI, Tau-PET, DTI, and PIB-PET. Other biomarkers, such as biospecimen, genetic, and clinical profiles, could be used in combination to further improve accuracy, and cognitive scores such as the CSD, NPD, and ASD should be incorporated to assess their effect on classification accuracy. Finally, our study examines only three feature extraction approaches (3-dimensional subject-, patch-, and slice-based); other techniques, such as 3-dimensional ROI-based or 2-dimensional slice-based approaches, could be evaluated to determine their suitability for detecting Alzheimer’s disease.

Visualization techniques that automatically highlight abnormal regions are another important research direction, as they would give medical practitioners more confidence in computer-aided diagnosis. Many visualization approaches, such as occlusion, Grad-CAM, Taylor decomposition, and locally linear embedding, can be used to emphasize anomalous areas.
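Of the approaches listed, occlusion is the simplest to sketch: mask one region of the scan at a time and record how much the model’s probability for the target class drops. In the sketch below `predict_prob` stands for any trained classifier returning a single class probability; the toy model, cube size, and zero fill value are assumptions chosen for illustration.

```python
from typing import Callable

import numpy as np


def occlusion_map(
    volume: np.ndarray,
    predict_prob: Callable[[np.ndarray], float],
    patch: int,
    fill: float = 0.0,
) -> np.ndarray:
    """Drop in target-class probability when each non-overlapping cube is masked."""
    base = predict_prob(volume)
    nx, ny, nz = (s // patch for s in volume.shape)
    heat = np.zeros((nx, ny, nz))
    for i in range(nx):
        for j in range(ny):
            for k in range(nz):
                occluded = volume.copy()
                occluded[i * patch:(i + 1) * patch,
                         j * patch:(j + 1) * patch,
                         k * patch:(k + 1) * patch] = fill
                # Large positive values mark regions the model relies on.
                heat[i, j, k] = base - predict_prob(occluded)
    return heat


# Toy "model" (hypothetical): only looks at one corner region of the scan.
def toy_predict(vol: np.ndarray) -> float:
    return float(vol[8:16, 8:16, 8:16].mean())


heat = occlusion_map(np.ones((16, 16, 16)), toy_predict, patch=8)
print(np.argwhere(heat > 0))  # [[1 1 1]]
```

The cost is one forward pass per masked cube, so for real PET volumes the cube size trades spatial detail against runtime.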

6 Conclusion and future work

In this section we present our conclusions and outline directions for further research.

7 Conclusion

Using a volumetric EnsembleNet with a 3D patch-based technique, we classified Alzheimer’s, mildly impaired, and healthy PET images with 92.13% accuracy. Testing the proposed model with several neuroanatomical computation approaches showed that the 3D patch-based EnsembleNet outperforms the 3D subject-based EnsembleNet and the 3D slice-based approach in accuracy. A series of experiments with patch sizes ranging from small (32, 40) through medium (48, 56, 64) to large (72, 80, 88) showed the influence of patch size on model accuracy: large patches of 72 × 72 × 72 achieved 92.13% accuracy, medium patches 80.89%, and small patches 74.63%. The uniform slicing algorithm outperformed the subset slicing and interpolation zoom algorithms, reaching 90.45% three-class accuracy.

8 Present and future research

Although these findings are a step forward in computer-based identification of Alzheimer’s disease, they may also be applicable in other domains. Combining other modalities with AV-45 PET and extending the work to a four-class classification scheme should yield further results. Because this study examines only the 3-dimensional subject-, patch-, and slice-based feature extraction approaches, other techniques, such as 3-dimensional ROI-based or 2-dimensional slice-based approaches, should also be evaluated for detecting Alzheimer’s disease. Finally, visualization strategies that automatically highlight abnormal regions in PET images should be developed; such tools would be highly beneficial and would increase doctors’ confidence in computer-aided diagnosis.

Data availability statement

The data for this study were obtained from the ADNI database, a public repository.

References

Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, Devin M, Ghemawat S, Irving G, Isard M, Kudlur M, Levenberg J, Monga R, Moore S, Murray DG, Steiner B, Tucker P, Vasudevan V, Warden P et al (2016) TensorFlow: a system for large-scale machine learning. In: 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI 16)

ADNI Dataset (2022) http://adni.loni.usc.edu/

Alzheimer’s Disease International (2022) https://www.alzint.org/about/dementia-facts-figures/dementia-statistics/

ANTs (2022) http://stnava.github.io/ANTs/

Camus V, Payoux P, Barré L, Desgranges B, Voisin T, Tauber C, La Joie R, Tafani M, Hommet C, Chételat G, Mondon K, De La Sayette V, Cottier JP, Beaufils E, Ribeiro MJ, Gissot V, Vierron E, Vercouillie J, Vellas B et al (2012) Using PET with 18F-AV-45 (florbetapir) to quantify brain amyloid load in a clinical environment. Eur J Nucl Med Mol Imaging 39(4):621–631. https://doi.org/10.1007/s00259-011-2021-8

Choi H, Jin KH (2018) Predicting cognitive decline with deep learning of brain metabolism and amyloid imaging. Behav Brain Res 344:103–109

Chollet F (2015) Keras

DARTEL toolbox (2022) https://neurometrika.org/node/34

El-sappagh S, Abuhmed T, Islam SMR, Sup K (2020) Multimodal multitask deep learning model for Alzheimer’s disease progression detection based on time series data. Neurocomputing 412:197–215

FLIRT (2022) https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/FLIRT

Fonov V, Evans AC, Botteron K, Almli CR, Mckinstry RC, Collins DL (2011) Unbiased average age-appropriate atlases for pediatric studies. Neuroimage 54(1):313–327

FreeSurfer (2022) https://surfer.nmr.mgh.harvard.edu/

FSL (2022) https://fsl.fmrib.ox.ac.uk/fsl/fslwiki

Goenka N, Sharma DK (2020) Carebot: a cognitive behavioural therapy agent using deep learning for COVID-19. 7(19):6100–6108

Goenka N, Tiwari S (2021a) Deep learning for Alzheimer prediction using brain biomarkers. Artif Intell Rev 54(7):4827–4871

Goenka N, Tiwari S (2021b) Volumetric convolutional neural network for alzheimer detection. ICOEI 1500–1505

Goenka N, Tiwari S (2022) AlzVNet: a volumetric convolutional neural network for multiclass classification of Alzheimer’s disease through multiple neuroimaging computational approaches. Biomed Signal Process Control 74:103500. https://doi.org/10.1016/j.bspc.2022.103500

Goenka N, Tiwari S, Yadav D (2021) No-reference image blur detection scheme using fuzzy inference. Adv Math Sci J 10(3):1175–1182

Goenka N, Goenka A, Tiwari S (2022) Patch-based classification for Alzheimer disease using sMRI. Int Conf Emerg Smart Comput Inform (ESCI) 2022:1–5. https://doi.org/10.1109/ESCI53509.2022.9758317

Haleem A, Javaid M, Khan IH (2019) Current status and applications of Artificial Intelligence (AI) in medical field: an overview. CMRP. https://doi.org/10.1016/j.cmrp.2019.11.005

Hao X, Bao Y, Guo Y, Ming Y, Zhang D, Risacher S, Saykin A, Yao X, Shen L (2019) Multi-modal neuroimaging feature selection with consistent metric constraint for diagnosis of Alzheimer’s disease. Med Image Anal 60:101625. https://doi.org/10.1016/j.media.2019.101625

Hosseini-Asl E, Ghazal M, Mahmoud A, Aslantas A, Shalaby A, Barnes G, Gimel G, Keynton R, Baz AE (2018) Alzheimer’s disease diagnostics by a 3D deeply supervised adaptable convolutional network. Front Biosci 23(5):584–596

Huang Y, Xu J, Zhou Y, Tong T, Zhuang X (2019) Diagnosis of Alzheimer’s disease via multi-modality 3D convolutional neural network. Front Neurosci 13:509

Janghel RR, Rathore YK (2020) Deep convolution neural network based system for early diagnosis of Alzheimer’s disease. IRBM 1:1–10

Johnson KA, Sperling RA, Gidicsin CM, Carmasin JS, Maye JE, Coleman RE, Reiman EM, Sabbagh MN, Sadowsky CH, Fleisher AS, Murali Doraiswamy P, Carpenter AP, Clark CM, Joshi AD, Lu M, Grundman M, Mintun MA, Pontecorvo MJ, Skovronsky DM (2013) Florbetapir (F18-AV-45) PET to assess amyloid burden in Alzheimer’s disease dementia, mild cognitive impairment, and normal aging. Alzheimer’s Dementia. https://doi.org/10.1016/j.jalz.2012.10.007

Kang SK, Choi H, Lee JS (2021) Translating amyloid PET of different radiotracers by a deep generative model for interchangeability. Neuroimage 232:117890. https://doi.org/10.1016/j.neuroimage.2021.117890

Khan T (2016a) Alzheimer’s disease cerebrospinal fluid (CSF) biomarkers. In: Biomarkers in Alzheimer’s disease, pp 139–180

Khan T (2016b) Genetic biomarkers in Alzheimer’s disease. In: Khan TK (ed) Biomarkers in Alzheimer’s disease, vol 1. Academic Press, pp 103–135

Kingma DP, Ba JL (2015) Adam: a method for stochastic optimization. In: 3rd international conference on learning representations, ICLR 2015—conference track proceedings, pp 1–15.

Kruthika KR, Rajeswari, Mahesappa HD (2019) Multistage classifier-based approach for Alzheimer’s disease prediction and retrieval. Inform Med Unlocked 14:34–42

Lee G, Nho K, Kang B, Sohn K, Kim D (2019) Predicting Alzheimer’s disease progression using multi-modal deep learning approach. Sci Rep 9(1):1–12

Li W, Lin X, Chen X (2020) Detecting Alzheimer’s disease based on 4D fMRI: an exploration under deep learning framework. Neurocomputing 388:280–287

Lin M, Chen Q, Yan S (2014) Network in network. ArXiv, pp 1–10

Liu M, Cheng D, Wang K, Wang Y, Alzheimer’s Disease Neuroimaging Initiative (2018) Multi-modality cascaded convolutional neural networks for Alzheimer’s disease diagnosis. Neuroinform 16(3–4):295–308. https://doi.org/10.1007/s12021-018-9370-4

Liu S, Liu S, Cai W, Che H, Pujol S, Kikinis R, Feng D, Fulham MJ (2015) Multimodal neuroimaging feature learning for multiclass diagnosis of Alzheimer’s disease. IEEE Trans Biomed Eng 62(4):1132–1140

Márquez F, Yassa MA (2019) Neuroimaging biomarkers for Alzheimer’s disease. Mol Neurodegener 5:1–14

Morris E, Chalkidou A, Hammers A, Peacock J, Summers J, Keevil S (2016) Diagnostic accuracy of 18F amyloid PET tracers for the diagnosis of Alzheimer’s disease: a systematic review and meta-analysis. Eur J Nucl Med Mol Imaging 43:374–385. https://doi.org/10.1007/s00259-015-3228-x

Muschelli J (2022a) Brain Extraction/Segmentation

Muschelli J (2022b) Image Registration. https://doi.org/10.1007/978-3-642-41714-6_90345

Ozsahin I, Sekeroglu B, Mok GSP (2019) The use of back propagation neural networks and 18F-Florbetapir PET for early detection of Alzheimer’s disease using Alzheimer’s Disease Neuroimaging Initiative database. PLoS ONE 14(12):1–13. https://doi.org/10.1371/journal.pone.0226577

Peng Z, Ni M, Shan H, Lu Y, Li Y, Zhang Y, Pei X, Chen Z, Xie Q, Wang S, Xu XG (2021) Feasibility evaluation of PET scan-time reduction for diagnosing amyloid-β levels in Alzheimer’s disease patients using a deep-learning-based denoising algorithm. Comput Biol Med 138:104919. https://doi.org/10.1016/j.compbiomed.2021.104919

Perani D (2014) FDG-PET and amyloid-PET imaging: the diverging paths. Curr Opin Neurol. https://doi.org/10.1097/WCO.0000000000000109

Punjabi A, Martersteck A, Wang Y, Parrish TB, Katsaggelos AK (2019) Neuroimaging modality fusion in Alzheimer’s classification using convolutional neural networks. PLoS ONE. https://doi.org/10.1371/journal.pone.0225759

Qin Y, Tian C (2018) Weighted feature space representation with Kernel for image classification. Arab J Sci Eng 43(12):7113–7125. https://doi.org/10.1007/s13369-017-2952-x

Qiu S, Chang GH, Panagia M, Gopal DM, Au R (2018) Fusion of deep learning models of MRI scans, Mini-Mental State Examination, and logical memory test enhances diagnosis of mild cognitive impairment. Alzheimer’s Dementia Diagn Assess Dis Monitor 10:737–749

Qu L, Wu C, Zou L (2020) 3D Dense separated convolution module for volumetric medical image analysis. Appl Sci 10(2):485

Reith F, Koran ME, Davidzon G, Zaharchuk G (2020) Application of deep learning to predict standardized uptake value ratio and amyloid status on 18F-florbetapir. Am J Neuroradiol 1–7

Sahumbaiev I, Popov A, Ivanushkina N, Ramírez J, Górriz JM (2018) Florbetapir image analysis for Alzheimer’s disease diagnosis. In: 2018 IEEE 38th International Conference on Electronics and Nanotechnology (ELNANO), pp 277–280

Sharma AK, Tiwari S, Aggarwal G, Goenka N, Kumar A, Chakrabarti P, Chakrabarti T, Gono R, Leonowicz Z, Jasinski M (2022) Dermatologist-level classification of skin cancer using cascaded ensembling of convolutional neural network and handcrafted features based deep neural network. IEEE Access. https://doi.org/10.1109/access.2022.3149824

Shirbandi K, Khalafi M, Mirza-Aghazadeh-Attari M, Tahmasbi M, Kiani Shahvandi H, Javanmardi P, Rahim F (2021) Accuracy of deep learning model-assisted amyloid positron emission tomography scan in predicting Alzheimer’s disease: a systematic review and meta-analysis. Inform Med Unlocked 25:100710. https://doi.org/10.1016/j.imu.2021.100710

Smith SM (2002) Fast robust automated brain extraction. Hum Brain Mapp 17(3):143–155

SPM (2022) https://www.fil.ion.ucl.ac.uk/spm/

Suk H, Lee S, Shen D, Alzheimer’s Disease Neuroimaging Initiative (2014) Hierarchical feature representation and multimodal fusion with deep learning for AD/MCI diagnosis. Neuroimage. https://doi.org/10.1016/j.neuroimage.2014.06.077

Wang Y, Yang Y, Guo X, Ye C, Gao N, Fang Y, Ma HT (2018) A novel multimodal MRI analysis for Alzheimer’s disease based on convolutional neural network. EMBC 754–757

Wen J, Thibeau-Sutre E, Diaz-Melo M (2020) Convolutional neural networks for classification of Alzheimer’s disease: overview and reproducible evaluation. Med Image Anal 63:101694

Xiao J, Xu J, Tian C, Han P, You L, Zhang S (2022) A Serial attention frame for multi-label waste bottle classification. Appl Sci 12(3):1742. https://doi.org/10.3390/app12031742

Yadav D, Goenka N (2021) Comparative analysis of newton raphson and particle swarm optimization techniques for harmonic minimization in CMLI. Adv Math Sci J 10(3):1311–1317. https://doi.org/10.37418/amsj.10.3.18

Yuan Y, Wang Z, Lee W, VanGilder P, Chen Y, Reiman EM, Chen K (2018) Quantification of amyloid burden from florbetapir pet without using target and reference regions: preliminary findings based on the deep learning 3D convolutional neural network approach. Alzheimer’s Dementia 14:P315–P316. https://doi.org/10.1016/j.jalz.2018.06.121

Zhang T, Shi M (2020) Multi-modal neuroimaging feature fusion for diagnosis of Alzheimer’s disease. J Neurosci Methods 341:108795

Zheng M, Xu J, Shen Y, Tian C, Li J (2022) Attention-based CNNs for image classification: a survey. J Phys Conf Ser 2171(1):012068. https://doi.org/10.1088/1742-6596/2171/1/012068

Zunair H, Rahman A, Mohammed N (2019) Estimating severity from CT scans of tuberculosis patients using 3D convolutional nets and slice selection. CLEF 9–12

Zunair H, Rahman A, Mohammed N, Cohen JP (2020) Uniformizing techniques to process CT scans with 3D CNNs for tuberculosis prediction. ArXiv pp 1–12

Acknowledgements

The data for this study were obtained from the ADNI database. ADNI was launched in 2003 by Michael W. Weiner, its Principal Investigator. ADNI investigators contributed to the design and implementation of ADNI and provided data, but did not participate in the analysis or writing of this report. I am grateful to the University of Petroleum and Energy Studies’ Machine Intelligence Research Centre (MiRC) for providing the GPU computing infrastructure used in this research.

Funding

No funding received.

Ethics declarations

Conflict of interests

No conflicts of interest declared.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Goenka, N., Tiwari, S. Multi-class classification of Alzheimer’s disease through distinct neuroimaging computational approaches using Florbetapir PET scans. Evolving Systems 14, 801–824 (2023). https://doi.org/10.1007/s12530-022-09467-9
