Abstract

Engagement is critical in learning, including computer-supported collaborative learning (CSCL). Previous studies have mainly measured engagement using students’ self-reports, which usually do not capture the learning process or the interactions between group members. Therefore, researchers have advocated developing new and innovative engagement measurements that address these issues by employing learning analytics and educational data mining (e.g., Azevedo in Educ Psychol 50(1):84–94, 2015; Henrie in Comput Educ 90:36–53, 2015). This study responded to this call by developing learning analytics to study the multifaceted aspects of engagement (i.e., group behavioral, social, cognitive, and metacognitive engagement) and their impact on collaborative learning. The results show that group behavioral engagement and group cognitive engagement have a significantly positive effect on group problem-solving performance; group social engagement has a significantly negative effect; the impact of group metacognitive engagement is not significant. Furthermore, group problem-solving performance has a significant positive effect on individual cognitive understanding, and it partially mediates the impact of group behavioral engagement and fully mediates the impact of group social engagement on individual cognitive understanding. The findings have important implications for developing domain-specific learning analytics to measure students’ sub-constructs of engagement in CSCL.


Introduction

Higher education has increasingly centered on cultivating students’ competencies in collaboration and preparing them for a technology-rich society, mindful that the job market will require students to work in interdisciplinary and cross-functional teams and technology-rich environments to achieve cognitively challenging tasks (Griffin et al., 2012). The effectiveness of Computer-Supported Collaborative Learning (CSCL) is well documented in the literature (e.g., Popov et al., 2018; Chen et al., 2018). In CSCL, small groups of learners usually collaborate via the computer to solve problems or co-construct knowledge; this entails articulating and sharing ideas for the collaborating partners to build upon (Kollar et al., 2007; Weinberger et al., 2005). According to the perspectives of collaborative learning (King, 1997) and socio-constructivist learning theories (Duit & Treagust, 1998), knowledge is shared and co-created through a collaborative search for problem solutions. The engagement of all group members in socio-cognitive activities directed toward a common goal is a prerequisite for higher-quality learning (Csanadi et al., 2018; Dillenbourg, 1999). Given the increasing use of CSCL in higher education, it is imperative to understand engagement in this environment, which can provide considerable insights into the effects of CSCL tasks on learning and teaching practices and into how to better support students’ engagement in CSCL.

The multifaceted and dynamic nature of students’ engagement in CSCL settings remains a black box due to measurement challenges and a relatively limited understanding of mutable collaborative learning practices. This study aimed to open this black box by applying novel data-driven assessment methods that automatically identify multifaceted forms of engagement, and then examining their impacts on group performance and individual learning gains. Engagement is relatively ill-defined in the literature and has been used to describe a wide range of phenomena, such as students’ enactment of social, cognitive, metacognitive, motivational, and affective processes in the academic context (Azevedo, 2015). Nevertheless, a literature review on students’ engagement in technology-mediated learning suggests that behavioral, cognitive, and emotional engagement are the sub-constructs most commonly included in engagement surveys (Henrie et al., 2015). Self-reported surveys are the most common way to measure engagement, and qualitative methods such as interviews, observations, and discourse analysis are also often used (e.g., Fredricks et al., 2004; Henrie et al., 2015). However, these methods have limitations, such as an inability to capture engagement dynamics during the learning process, high time costs, and difficulty scaling up. Therefore, researchers (e.g., Azevedo, 2015; Henrie et al., 2015) have advocated augmenting these traditional measurement methods with recent approaches such as learning analytics to advance the definition and operational measurement of engagement. Learning analytics offers new promise for studying multifaceted forms of engagement in collaborative learning. In particular, learning analytics enables the analysis of low-level trace data on students’ interactions recorded in different learning platforms (Long & Siemens, 2011). From such low-level structured data, it is possible to infer higher-level learning constructs, such as self-regulated learning and dropout risk (Zheng et al., 2020; Monllaó Olivé et al., 2020; Ye & Pennisi, 2022). Automatic methods based on learning analytics can potentially analyze large amounts of data and provide timely feedback and scaffolding to users (Pardo et al., 2019).

In this study, we combined insights from the learning sciences regarding the multifaceted nature of student engagement in a collaborative task with computational models that automatically capture the meaning of what occurs in a learning episode. Students’ log files and chat messages enabled us to analyze their group behavioral, social, cognitive, and metacognitive engagement; emotional engagement was not included because of data constraints. We used learning analytics to automatically identify multifaceted forms of engagement and then examined their impacts on group problem-solving performance and individual cognitive understanding. This study involved 99 students in 2-year colleges who synchronously solved four online series-circuit problems related to Ohm’s Law. Two questions guide this work:

1. How can a learning analytics model be built to automatically detect multifaceted engagement in collaborative learning?

2. How do multifaceted forms of engagement influence group problem-solving performance and individual cognitive understanding?

Literature review

Collaborative problem solving

Collaborative problem solving, meaning making, and the sharing and construction of knowledge among students typically involve technological mediation, which characterizes CSCL (Gijlers & de Jong, 2009; Stegmann et al., 2007). CSCL is a pedagogical approach under the umbrella of social constructivism, which is strongly influenced by Vygotsky’s (1978) work, especially the concept of the zone of proximal development (ZPD). ZPD is “the distance between the actual developmental level as determined by independent problem solving and the level of potential development as determined through problem solving under adult guidance, or in collaboration with more capable peers” (Vygotsky, 1978, p. 86). Vygotsky (1978) suggested that, within the ZPD, learning moves toward a higher intellectual level through social interactions, which provide scaffolding opportunities. In CSCL, group members with different levels of knowledge can serve as scaffolding agents and provide learners with external guidance and support, for example, in the form of clues, modelling, and explanations (Pifarre & Cobos, 2010).

In CSCL, small learner groups may search for information, form hypotheses, experiment, interpret, articulate, and share ideas (Stahl et al., 2006). Also, this field of research centers around high-level cognitive processes associated with peer interaction, for example, co-elaboration and negotiation of meaning, building on each other’s reasoning, argumentation, and knowledge sharing (King, 2002). Some studies have demonstrated that CSCL promotes students’ knowledge gains, skill acquisition, group task performance, interactions, and student attitude toward online collaboration (Chen et al., 2018; Jeong et al., 2016; Richardson et al., 2017; Vogel et al., 2017). However, the degree to which students learn and develop knowledge in collaborative problem-solving largely depends on the quality of peer interaction (Hmelo-Silver, 2003).

Studies on CSCL over the past two decades highlight the importance of teamwork and task work processes (Graesser et al., 2018). Teamwork processes require students to coordinate procedural issues such as communication, planning, and task division to ensure the consistency of the joint work product (Xing et al., 2015b; Erkens et al., 2005). Task work processes involve students taking appropriate actions to solve the problem by establishing and maintaining a shared cognitive understanding (Kirschner et al., 2008). These teamwork and task work processes correspond to group behavioral, social, cognitive, and metacognitive engagement, such as managing time, building group relationships, contributing cognitive knowledge to solve problems, and monitoring problem solving. Efficient and effective collaborative problem solving requires the engagement of all group members at these two levels over time. The multifaceted nature of engagement in the CSCL context is described below.

Multifaceted engagement

Although there are some inconsistencies concerning the operationalization of engagement (Sinatra et al., 2015), there is an increasing tendency to understand engagement as a multifaceted, dynamic, and contextualized construct (Sinha et al., 2015). Building on constructivist and problem-based learning approaches, Kearsley and Shneiderman (1999) developed Engagement Theory, in which they stressed the value of technology in fostering learning engagement. This theory provides a framework for understanding the factors that promote students’ engagement in learning groups by highlighting three components: relating, creating, and donating. Relating refers to students verbalizing and sharing their ideas and explaining the reasoning behind those ideas to themselves and others in group learning activities. Creating means that students participate in a project or problem-based learning activity, which typically involves problem orientation, problem solution, and solution evaluation (Ploetzner et al., 1999). Donating is about making a valuable contribution while learning and working on an authentic task. The three components guided the design of the group tasks that the participants worked on and highlighted the importance of mining students’ chat messages and log data during their collaborative problem-solving process.

In line with Engagement Theory, we characterize engagement as one’s concentration, engagement, and persistence in completing academic tasks, including social interactions with a teacher and/or peers (Fredricks et al., 2004; Linnenbrink-Garcia et al., 2011). Within this construct, Fredricks et al. (2004) summarized the research literature on student engagement and defined three sub-constructs of engagement: behavioral, cognitive, and emotional engagement, all of which support student learning. Behavioral engagement consists of the sustained on-task or off-task behaviors that learners perform while participating in academic activities (Fredricks et al., 2004). Cognitive engagement concerns students’ application of domain-specific knowledge and disciplinary practices, and their investment of cognitive effort to comprehend ideas and information and to master the skills involved in completing the task (Gresalfi & Barab, 2010; Gresalfi et al., 2009). Emotional engagement describes students’ positive, strong reactions to their learning experiences, which influence their willingness to complete the task (Fredricks et al., 2004).

To cover the social interactions between students in collaborative learning, Sinha et al.’s (2015) definition of engagement includes behavioral, social, cognitive, and conceptual-to-consequential forms. Pekrun and Linnenbrink-Garcia (2012) proposed a five-component engagement model, including behavioral, social-behavioral, cognitive, motivational, and cognitive-behavioral aspects. Social engagement refers to the quality of group interactions to complete a task (Sinha et al., 2015). Conceptual-to-consequential engagement (Sinha et al., 2015) and cognitive-behavioral engagement (Pekrun & Linnenbrink-Garcia, 2012) concern the metacognitive aspect of engagement, which involves the planning, monitoring, and evaluating processes used to accomplish tasks (Zimmerman, 1990). The current study synthesized these definitions and explored how analytic models can be developed to automatically analyze different sub-constructs of engagement using log files and chat messages. Although we consider emotional engagement a sub-construct of engagement, the current study did not examine it (it is ideally captured through facial expressions, physiological sensors, and self-report questionnaires) because of the constraints of our data. Therefore, this study focused on students’ group behavioral, social, cognitive, and metacognitive engagement. In the following paragraphs, we briefly review the literature on these forms of engagement, especially studies conducted in collaborative learning contexts.

Behavioral engagement is usually measured by students’ observable actions, such as participation, attendance, asking questions, and contributing to discussions (Fredricks et al., 2004; Henrie et al., 2015). Cocea and Weibelzahl (2011) employed behavioral indicators such as the time spent reading and the number of pages read to measure engagement. Given the challenges of defining and operationalizing engagement, Gobert et al. (2015) analyzed students’ disengagement instead. They used features such as overall statistics for the clip, features related to pauses while running the simulation, and time elapsed during experimentation to build disengagement detectors (Gobert et al., 2015). During collaborative learning, some behaviors may lead to disengagement, which may in turn result in poor learning performance and poor group problem solving. For instance, ignoring a group member, an individual’s withdrawal from group discussion, and limited responses to an individual’s contributed ideas may cause whole-group disengagement or lost opportunities for collaboration, thereby undermining learning (Rogat & Linnenbrink-Garcia, 2011; Van den Bossche et al., 2006).

Students’ social engagement plays an important role in influencing their collaborative problem solving and knowledge construction. Kwon et al. (2014) compared the social-emotional interactions between good collaborators and poor collaborators in an undergraduate clinical ethics online course. They found that the good collaborators engaged in intensive interactions among group members in the early collaboration phases and showed positive socio-emotional interactions continuously. Sinha et al. (2015) found that low-engagement groups used words that indicate a focus on individual thinking and activity, such as “I think”, “I am going to”, and “my turn”, while high-engagement groups used words that refer to the collective (e.g., we). Rogat and Adams-Wiggins (2015) developed a positive and negative socio-emotional interaction coding scheme. The positive interactions include active listening and respect, inclusion and encouraging participation of a group member or the whole group, group cohesion, discouraging marginalization, appealing to disciplinary norms, and mistakes as informational gaps (Rogat & Adams-Wiggins, 2015). In contrast, the negative interactions consist of disrespect, actively discouraging a group member’s participation and contributions to the shared task, low group cohesion, treating mistakes as incompetence, targeted ignoring/rejection, and social comparison (Rogat & Adams-Wiggins, 2015).

Students need to direct their attention to meaningfully process the information pertinent for task completion and apply domain-specific knowledge related to the tasks, which lays the basis for their cognitive engagement. Cognitive engagement is usually measured using think-aloud, log files, self-report questionnaires, classroom discourse, and tests (Azevedo, 2015). In the CSCL environment, individual domain-specific knowledge can be externalized and shared in groups via oral or written messages and manifested in their use of formulas and operations of simulations. The group members can elaborate on their domain-specific knowledge and build on each other’s ideas and reasoning to help the community better understand knowledge related to the problems they seek to solve. Furthermore, valuing student-contributed ideas and knowledge may motivate them to engage in a learning activity (Mullins et al., 2011).

In collaborative learning, metacognitive engagement is critical to ensure that group members are aware of their group goals; collaboratively develop and adjust plans to move towards those goals; monitor the execution of the plans, the development of understanding, and progress towards the goals; and eventually evaluate and reflect on their understanding and task performance (Sinha et al., 2015). Sinha et al. (2015) found that the low-engagement group showed ineffective initial planning, a decision to work on the task individually, members’ disengagement over time, and task monitoring that focused on the spelling of components rather than the content. In contrast, the high-engagement group shared on-task activity and exhibited high-quality planning and task monitoring.

A few studies have explored the relationships between multifaceted forms of engagement and other related factors in collaborative learning. For instance, Jung and Lee (2018) examined the structural relationships between behavioral, emotional, and cognitive forms of learning engagement, learning persistence, academic self-efficacy, teaching presence, perceived usefulness, and perceived ease of use among 306 MOOC learners. Arguedas et al. (2016) used questionnaires to measure 12 high school students’ motivation, engagement, self-regulation, and learning outcomes, as well as teachers’ affective feedback. They found a significant positive correlation between emotional awareness and students’ motivation, engagement, self-regulation, and learning outcomes. Sinha et al. (2015) developed a rating scheme describing low, moderate, or high engagement in behavioral, social, cognitive, and conceptual-to-consequential dimensions. Correlation analysis between the different forms of engagement suggested that behavioral and social engagement are highly correlated and that behavioral, social, and cognitive engagement all impact conceptual-to-consequential engagement.

Engagement and learning analytics

Studies have examined engagement from various aspects in technology-mediated learning environments. A comprehensive review of engagement studies in technology-mediated learning (Henrie et al., 2015) suggests that about 21% of the 113 reviewed articles included behavioral, cognitive, and emotional indicators when measuring engagement. However, very few studies have labeled indicators for each of the sub-constructs (Henrie et al., 2015). Furthermore, most of these studies adopted quantitative self-reports, quantitative observational measures, qualitative measures, or even physiological sensors to measure engagement. Measuring engagement with such traditional methods is not only time-consuming but also delayed: the measurement usually takes place after the learning task is finished, which makes it difficult to provide timely feedback and support to students.

The emerging scholarship of learning analytics provides new ways to measure students’ engagement while they are on task. Some studies have used log files to automatically gauge students’ engagement in massive open online courses (MOOCs). For example, Khalil and Ebner (2017) clustered MOOC learners based on their level of engagement, measured by reading frequency, writing frequency, videos watched, and quiz attempts. Similarly, Phan et al. (2016) used learning analytics to examine students’ engagement patterns in a MOOC and further explored the relationship between those patterns and course performance. Guo et al. (2014) studied how video production affects students’ engagement in MOOCs. A few studies have also investigated students’ engagement in a collaborative learning context using learning analytics. For example, Lu et al. (2017) applied learning analytics to monitor students’ engagement in order to improve their learning outcomes in a collaborative programming environment. We (Xing et al., 2015a, b) used learning analytics to measure students’ behavioral participation in order to predict the achievement of groups and of individual group members in a team-based math learning environment. Zhang et al. (2019) examined how mutual trust, social influence, and reward valence influence teamwork engagement in a collaborative learning environment supported by Slack. However, they measured engagement using scales and suggested leveraging data mining to detect engagement levels from Slack (Zhang et al., 2019).

To summarize, while learning analytics has been used in various ways to measure students’ engagement, most studies have focused on only one aspect of engagement. To the best of our knowledge, no study has yet attempted to detect multifaceted engagement using learning analytics in a collaborative learning situation with both quantitative (i.e., behavioral logs) and qualitative (i.e., chat messages) data.

To address this research gap, we integrated qualitative and quantitative methods by first manually coding students’ group behavioral, social, cognitive, and metacognitive engagement from their behavioral log data and chat messages, and then developing machine learning models to automatically detect these forms of engagement. This study contributes to ongoing work on employing machine learning and data mining techniques (Baker & Siemens, 2014) to measure and predict engagement from trace/process data such as log files.

Research model

Solving tasks in the CSCL context requires groups to engage in multifaceted ways, and group members may improve their domain-specific knowledge by taking part in collaborative problem-solving processes. To solve a group task, all group members need to communicate with each other to understand the task and plan how to solve it. In this process, domain-specific knowledge related to the task is crucial. For example, to figure out the resistance of different resistors in a series circuit, the group members need to understand Ohm’s Law. They may need to articulate their plans for solving the task and elaborate on the relationships between different variables (e.g., resistance and voltage), or they may simply ask the other group members to follow their instructions on how to conduct an experiment or complete a sub-task. In the process, the group members need to monitor their progress and evaluate how close they are to solving the group task. The group task may be solved, or the group members may need to adjust their plan until they solve the task or run out of time. In the problem-solving process, individuals may improve their domain-specific knowledge related to the task, especially when they engage in group articulation and elaboration of the relationships between variables and the mechanisms of how things work. All forms of engagement may work together to help groups solve the task.

Therefore, as shown in Fig. 1, we hypothesize that multifaceted forms of engagement are positively correlated with problem-solving performance and that problem-solving performance may mediate their effect on individual cognitive understanding of domain-specific knowledge. Our detailed hypotheses are as follows:

H1

Group multifaceted forms of engagement in teamwork are positively correlated with problem-solving performance.

H1a

Group behavioral engagement in teamwork is positively correlated with group problem-solving performance.

H1b

Group social engagement in teamwork is positively correlated with group problem-solving performance.

H1c

Group cognitive engagement in teamwork is positively correlated with group problem-solving performance.

H1d

Group metacognitive engagement in teamwork is positively correlated with group problem-solving performance.

H2

Group multifaceted forms of engagement (especially cognitive engagement) in teamwork are positively correlated with individuals’ cognitive understanding of domain-specific knowledge, and this relationship is mediated by group problem-solving performance.

Methods

Participants

The participants in this study were 144 students (23 females) attending five 2-year colleges in the northeastern United States. Given the complexity of working with students and teachers across multiple colleges, a convenience sampling method was used to recruit participants from local colleges. Among all the participants, 31 were Hispanic, 45 were eligible for free lunch, and 64 had mothers with a college degree or above. On average, participants had completed 13.2 years of schooling. Participants who did not engage in solving the tasks or did not complete the post-survey were excluded from the analysis. The final sample comprised 99 students in 38 groups; some groups were represented by only one or two students because the other members did not complete the post-survey.

Research context

The students worked in their original classes and were instructed by teachers from their respective institutions. Students were first seated one per computer and then randomly assigned to groups of three. Each student was given a class code so that students from the same class could enter the same online space in the teaching teamwork platform (Zhu et al., 2019). The students engaged in a 90-min learning session in which they became familiar with the platform, attempted to solve four Ohm’s Law tasks of increasing complexity, and completed a post-survey on their cognitive understanding of Ohm’s Law. This topic was chosen because it matched both the students’ learning objectives and our curriculum and platform.

To record their collaborative problem solving as completely as possible, group members could communicate only via the chat box on the platform. Each student was assigned a pseudonym on the online platform to prevent group members in the same classroom from discussing their problem solving orally. As shown in Fig. 2, in a series circuit, the voltage across the resistor controlled by the student Tiger is influenced by the voltages across the resistors controlled by the other two members of Group Animals. To achieve the goal of making the voltage 1.9 V and calculating the unknown E (i.e., the electromotive force), Tiger must chat with the other two members to figure out their respective goals and the resistances of their resistors. Only when all group members report their resistances at the same time and measure the current can Tiger or the other group members calculate E using Ohm’s Law, E = I*(R0 + R1 + R2 + R3). Only once E is known can Tiger and the other group members negotiate how to adjust their resistances to reach their respective goal voltages, using the formulas R1 = V1/[(E − V1 − V2 − V3)/R0], R2 = V2/[(E − V1 − V2 − V3)/R0], and R3 = V3/[(E − V1 − V2 − V3)/R0], where the bracketed term is the circuit current implied by the goal voltages. Therefore, an individual group member cannot solve the task alone, even with a good understanding of Ohm’s Law.
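To make the arithmetic of this task concrete, the Python sketch below works through the two formulas above. The resistor and current values are hypothetical, chosen only so that the numbers come out round; R0 is treated simply as the fourth series resistance appearing in the formulas, and the function names are illustrative, not part of the teaching teamwork platform.

```python
def emf_from_current(current, r0, r1, r2, r3):
    """Electromotive force of the series loop: E = I * (R0 + R1 + R2 + R3)."""
    return current * (r0 + r1 + r2 + r3)


def target_resistances(emf, r0, v1, v2, v3):
    """Resistances R1, R2, R3 that give each member their goal voltage."""
    # The voltage left over for R0 fixes the loop current:
    # I = (E - V1 - V2 - V3) / R0
    current = (emf - v1 - v2 - v3) / r0
    if current <= 0:
        raise ValueError("goal voltages must sum to less than E")
    # Ohm's law applied to each member's resistor: R_i = V_i / I
    return v1 / current, v2 / current, v3 / current


# Hypothetical measurement: all resistances (ohms) reported while the current (A) is read.
emf = emf_from_current(current=0.01, r0=100, r1=200, r2=300, r3=400)
# Hypothetical goals: Tiger wants 1.9 V; the other two members want 2.5 V and 3.6 V.
r1, r2, r3 = target_resistances(emf, r0=100, v1=1.9, v2=2.5, v3=3.6)
print(f"E = {emf:.2f} V; R1 = {r1:.1f}, R2 = {r2:.1f}, R3 = {r3:.1f} ohms")
```

With these values E is 10 V and the loop current implied by the goals is 0.02 A, so R1, R2, and R3 come out at about 95, 125, and 180 ohms. Each function needs values held by all three members, which is why no member can finish the calculation alone.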

Instruments and data collections

The data sources for this study mainly include: (1) all behavior data from each member of each group, such as changing circuits, adjusting resistance, using the digital multimeter, and performing calculations; (2) chat messages among group members; (3) timestamps of the behaviors and chat messages, recorded by the teaching teamwork platform; (4) group problem-solving performance for each task; and (5) a post-survey on students’ cognitive understanding of Ohm’s Law.

The teaching teamwork platform measured group problem-solving performance for each task based on whether the task was solved, that is, whether the correct resistances (and, for level 3 and level 4 tasks, the supply voltage) were figured out and submitted. A solved task was coded “1”; otherwise, it was coded “0”. The individual cognitive understanding in a group was measured using the mean of group members’ performance on the six-item post-survey on Ohm’s Law. Cronbach’s alpha for the survey items was 0.72, which is acceptable (van Zyl et al., 2000). There were five radio-button questions and one multiple-choice question. Scoring was straightforward: students received one point for selecting the correct answer to each radio-button question and two points for selecting both correct answers to the multiple-choice question. Examples are as follows:

A circuit contains two resistors, R1 and R2, in series. The resistance of R1 is greater than the resistance of R2. Check all of the following true statements.

Use the schematic below to answer this question. Select the formula that gives the total resistance of this circuit.
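The scoring rule and the reliability statistic reported above can be sketched as follows. This is an illustration rather than the study’s scoring script: the answer keys and responses are hypothetical, and we assume the multiple-choice item is scored all-or-nothing (two points only when exactly the two correct options are selected), since the text does not say how partially correct selections were treated. Cronbach’s alpha uses the standard formula alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores).

```python
from statistics import pvariance


def score_student(radio_answers, radio_key, mc_selected, mc_key):
    """Score one post-survey: 1 point per correct radio-button item,
    2 points if the multiple-choice selection matches both correct options."""
    radio_points = sum(ans == key for ans, key in zip(radio_answers, radio_key))
    mc_points = 2 if set(mc_selected) == set(mc_key) else 0  # assumed all-or-nothing
    return radio_points + mc_points


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of per-student lists of item scores."""
    k = len(item_scores[0])                   # number of items
    items = list(zip(*item_scores))           # transpose into per-item columns
    item_var = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - item_var / total_var)


radio_key = ["b", "a", "c", "a", "d"]         # hypothetical answer key
print(score_student(["b", "a", "c", "d", "d"], radio_key, {"A", "C"}, {"A", "C"}))  # → 6
print(cronbach_alpha([[1, 1, 1], [0, 0, 0], [1, 1, 0], [1, 0, 0]]))  # → 0.75
```

Population variance is used throughout; switching consistently to sample variance would give the same alpha, because the n/(n − 1) correction cancels in the ratio.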

Learning analytics for multifaceted engagement detection

To identify multifaceted engagement across a large number of groups and provide timely feedback to students, we employed learning analytics to automate the engagement detection process, which involved four steps. First, based on the definitions of the various forms of engagement described in the “Multifaceted engagement” section, features corresponding to each facet of engagement were calculated from the log data. Table 1 shows the detailed indicators used to detect multifaceted engagement in the log data. Group behavioral engagement mainly concerns on-task behaviors (Fredricks et al., 2004). Accordingly, we extracted relevant on-task indicators such as the number of chat messages, the number of calculations performed, and the number of times a group member adjusted the resistance. Group social engagement was measured based on the level and quality of interaction between group members (Sinha et al., 2015), using the time delay between messages and the number of reciprocal interactions in a group as indicators. Group cognitive engagement concerns students’ use of domain-specific knowledge to solve a group task (Gresalfi & Barab, 2010; Gresalfi et al., 2009). Therefore, students’ elaboration on the relationships between variables in chat messages and the formulas entered in calculators were used as indicators of group cognitive engagement. We extracted the formulas used in the log data and transformed related chat messages into formulas. We then identified the variables and values in the formulas by matching them with the column titles (representing variables) and the values in certain columns. The formulas were then compared with various forms of Ohm’s Law to assess their scientific accuracy. Group metacognitive engagement refers to students’ planning, monitoring, and evaluating processes when accomplishing tasks (Zimmerman, 1990). Therefore, whether groups discussed the goal, developed a plan, elaborated on the plan, and reported and monitored their status in the chat were used as indicators of group metacognitive engagement.
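A minimal sketch of the first step for the behavioral and social indicators is shown below. The event schema (a timestamp, the member’s pseudonym, and an event type) and the event-type names are assumptions for illustration, not the platform’s actual log format. Likewise, counting a chat message as a reciprocal interaction when its sender differs from the sender of the previous message is our simplifying assumption, not necessarily the operationalization in Table 1. Cognitive and metacognitive indicators, which require interpreting formulas and chat content, are not covered.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class Event:
    time: float   # seconds since task start (assumed field)
    member: str   # platform pseudonym, e.g. "Tiger"
    kind: str     # assumed types: "chat", "calculation", "resistance_change"


def engagement_features(events):
    """Illustrative behavioral and social indicators for one group task."""
    events = sorted(events, key=lambda e: e.time)
    chats = [e for e in events if e.kind == "chat"]

    # Behavioral indicators: simple on-task counts.
    features = {
        "n_chat": len(chats),
        "n_calculation": sum(e.kind == "calculation" for e in events),
        "n_resistance_change": sum(e.kind == "resistance_change" for e in events),
    }

    # Social indicators (assumed definition): a reply is a chat message whose
    # sender differs from the sender of the preceding chat message.
    reply_delays = [cur.time - prev.time
                    for prev, cur in zip(chats, chats[1:])
                    if cur.member != prev.member]
    features["n_reciprocal"] = len(reply_delays)
    features["mean_reply_delay"] = fmean(reply_delays) if reply_delays else None
    return features


log = [Event(0, "Tiger", "chat"), Event(10, "Lion", "chat"),   # "Lion" is hypothetical
       Event(12, "Lion", "chat"), Event(30, "Tiger", "chat"),
       Event(35, "Tiger", "calculation"), Event(40, "Lion", "resistance_change")]
print(engagement_features(log))
```

In this toy log, Lion’s two consecutive messages count as a single reply, so the group has two reciprocal exchanges with a mean reply delay of 14 seconds.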

Second, two researchers with an extensive background in collaborative learning and were familiar with the log data developed a rating scheme, as shown in Table 2. In the rating scheme, all the indicators of the four forms of engagement were categorized into the low, medium, and high levels; Table 2 shows the detailed criteria used to classify each indicator. We randomly selected the log data of 31 tasks out of 100 tasks. Then based on the indicators of each form of engagement described in Table 1, two researchers independently rated the selected tasks into low, moderate, or high levels of each form of engagement using the rating scheme. The inter-reliability of the rating between the two researchers was substantial (Kappa = 0.74, Landis & Koch, 1977). All the disagreements between the two researchers were discussed and resolved. This manual coding along with the constructed measures in the first step served as the training data. Third, using the training data as input, a variety of supervised machine learning algorithms, including Decision Tree (DT), Random Forest (RF), K-Nearest Neighbors (KNN), and Support Vector Machines (SVM) were used to build automatic detection models. All these machine learning algorithms have been widely used in learning analytics for prediction and have yielded promising results (Hasan et al., 2020; Wiyono et al., 2020). RF was selected because of its ensemble feature that crowdsources intelligence from numerous decision trees, the mechanism of which can effectively boost its predictive performance (Kovanović et al., 2016). While RF is usually hypothesized to have superior performance, conflicting results have been identified (e.g., Murugan et al., 2019; Shah et al., 2020). Therefore, we also selected three other algorithms commonly used in education to benchmark with RF to fully understand the affordances of these algorithms. Details of these models can be found in Table 3. 
Specifically, an independent supervised machine learning model was built for each form of engagement. Fourth, after the automatic engagement detection models were built and their performance was validated, we applied them to the rest of the dataset so that every task received a low, medium, or high rating for each form of engagement.
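Steps two to four above (checking agreement between coders, training a classifier on the hand-coded tasks, and applying it to the remaining tasks) can be illustrated with a minimal Python sketch that uses only the standard library. It uses k-nearest neighbors because it is one of the four algorithms named above and is short enough to write from scratch. It is not the study's implementation. The feature vectors are hypothetical placeholders (for example, per-task counts of actions and messages), and the validation the study performed before labeling is omitted.

```python
import math
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Step 2: chance-corrected agreement between two coders' ratings of the same tasks."""
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each coder's marginal label frequencies.
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1:  # both coders used one identical label throughout
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


def fit_scaler(rows):
    """Return a z-score function whose means/SDs come from the hand-coded tasks only."""
    cols = list(zip(*rows))
    means = [sum(c) / len(c) for c in cols]
    # A constant feature gets SD 1.0 to avoid dividing by zero.
    sds = [math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0 for c, m in zip(cols, means)]
    return lambda row: [(x - m) / s for x, m, s in zip(row, means, sds)]


def knn_predict(train_x, train_y, query, k=3):
    """Step 3: majority level among the k nearest hand-coded tasks (Euclidean distance)."""
    nearest = sorted(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def label_remaining(coded_x, coded_y, uncoded_x, k=3):
    """Step 4: give every task that was not hand-coded a low/medium/high level."""
    scale = fit_scaler(coded_x)
    train_x = [scale(row) for row in coded_x]
    return [knn_predict(train_x, coded_y, scale(row), k) for row in uncoded_x]
```

For example, two coders rating six hypothetical tasks as `["low", "medium", "high", "high", "medium", "low"]` and `["low", "medium", "high", "medium", "medium", "low"]` agree on five of them. `cohens_kappa` evaluates to 0.75 (up to floating-point rounding) for these ratings, because chance agreement here is 1/3. The features are z-scored with training-set statistics because k-NN distances would otherwise be dominated by whichever feature has the largest scale.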

Path modeling and mediation analysis

Path analysis is a multivariate statistical modeling method used to investigate the relationships among a set of independent and dependent variables (Alwin & Hauser, 1975). It is a straightforward extension of multiple regression (Garson, 2013). Path models were proposed as a way of decomposing correlations into component parts that can be interpreted as effects. This study uses path analysis, a special case of structural equation modeling in which each variable in the analysis has only one indicator. As a result, the path model has a structure to test but does not require a measurement model. Path modeling generally yields two types of effects: direct effects and indirect (mediating) effects. Path analysis can therefore represent the hypothesized causal ordering among the variables. As shown in Fig. 1, the engagement indicators and problem-solving performance are computed at the group level, whereas cognitive understanding is calculated for each individual.
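The decomposition into direct and indirect effects can be made concrete with a short sketch. A recursive model with only observed variables (and, as usually assumed, uncorrelated disturbances) can be estimated one equation at a time by ordinary least squares on standardized variables, and an indirect effect is the product of the path coefficients along its route. The standard-library Python sketch below illustrates these mechanics. It is not the software used in the study, it reports no significance tests, and it ignores the nesting of students within groups.

```python
import math
import statistics


def zscores(values):
    """Standardize a variable using its mean and population standard deviation."""
    mean, sd = statistics.fmean(values), statistics.pstdev(values)
    return [(v - mean) / sd for v in values]


def solve(a, b):
    """Solve the square linear system a @ x = b (Gauss-Jordan, partial pivoting)."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def path_equation(predictors, outcome):
    """Standardized path coefficients (betas) and R^2 for one endogenous variable.

    predictors: list of variables (each a list of observations); outcome: one variable.
    """
    xs = [zscores(p) for p in predictors]
    y = zscores(outcome)
    n = len(y)
    # With z-scored data the normal equations become R_xx @ beta = r_xy (correlations).
    r_xx = [[sum(a * b for a, b in zip(xi, xj)) / n for xj in xs] for xi in xs]
    r_xy = [sum(a * b for a, b in zip(xi, y)) / n for xi in xs]
    betas = solve(r_xx, r_xy)
    r_squared = sum(beta * r for beta, r in zip(betas, r_xy))
    return betas, r_squared


def indirect_effect(*path_coefficients):
    """Path-tracing rule: an indirect effect is the product of the paths along the route."""
    return math.prod(path_coefficients)
```

For the structure implied by hypotheses H1a to H1d and H2, one would call `path_equation([behavioral, social, cognitive, metacognitive], performance)` and `path_equation([performance], understanding)`. The indirect effect of behavioral engagement on understanding would then be `indirect_effect(beta_behavioral, beta_performance)`. These variable names are hypothetical.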

To further examine the processes underlying the proposed structure, we applied Baron and Kenny's (1986) three-step method to test the mediating effects. For a mediating effect to exist, the independent variable must first significantly influence both the mediating variable and the dependent variable. Then, the independent and mediating variables are entered together to predict the dependent variable. If both significantly affect the dependent variable, the mediating variable partially mediates the influence of the independent variable on the dependent variable. If the effect of the mediating variable is significant but the effect of the independent variable is not, the mediating variable fully mediates that influence.
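The decision rules of the three-step method can be written as a small Python function. It takes the significance outcome of each regression path rather than raw data, so the regressions themselves would be fit separately. The path labels a, b, c, and c′ follow the usual mediation notation and are not taken from the paper.

```python
def baron_kenny(c_sig, a_sig, b_sig, c_prime_sig):
    """Classify mediation of X -> Y through M with Baron and Kenny's (1986) rules.

    c_sig:       X significantly predicts Y on its own (total effect, path c)
    a_sig:       X significantly predicts the mediator M (path a)
    b_sig:       M significantly predicts Y with X in the same model (path b)
    c_prime_sig: X still significantly predicts Y with M in the model (path c')
    """
    # Preconditions: X must relate to both Y and M.
    if not (c_sig and a_sig):
        return "no mediation"
    # With X and M entered together, M must still predict Y.
    if not b_sig:
        return "no mediation"
    # A remaining significant direct effect means partial, otherwise full, mediation.
    return "partial" if c_prime_sig else "full"
```

The results below report two significance patterns. For group behavioral engagement all four paths are significant, so `baron_kenny(True, True, True, True)` returns `"partial"`. For group social engagement the direct path is not significant once performance is added, so `baron_kenny(True, True, True, False)` returns `"full"`. The function encodes only the classification rule. It does not estimate or test the size of the indirect effect.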

Results

Learning analytics results for RQ1

The features and the manually coded categories were input into various supervised machine learning algorithms to optimize engagement detection performance. Table 4 presents the performance of each model for each facet of engagement. One learning analytics model was constructed to automatically detect each of the four kinds of engagement. Overall, the DT and SVM models performed better than the other models for this detection task. Specifically, SVM performed best in detecting group behavioral engagement, reaching 76.7% precision and 83.3% recall. The DT model outperformed the others in detecting group cognitive engagement (precision 78.3%, recall 69.0%), group metacognitive engagement (precision 83.3%, recall 93.3%), and group social engagement (precision 86.7%, recall 66.7%). The general performance range for supervised machine learning models in the learning analytics literature is between 60 and 85% (Dalal & Zaveri, 2011). We therefore considered the automatic detection models of multifaceted engagement to perform well. We then applied the models to the rest of the data to identify the groups' multifaceted engagement. Table 5 presents the results, along with group-level performance and individual cognitive understanding. The groups showed relatively high group behavioral and cognitive engagement when working on the tasks, but relatively low group social and metacognitive engagement.
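Table 4 reports precision and recall for each model. The Python sketch below shows how these metrics are computed for a three-level label. This section does not state whether the reported figures are per level or averaged across levels. The macro average computed here, an unweighted mean over low, medium, and high, is therefore an assumption.

```python
def precision_recall(y_true, y_pred, labels=("low", "medium", "high")):
    """Per-level precision and recall, plus their unweighted (macro) averages."""
    per_level = {}
    for level in labels:
        true_pos = sum(t == level and p == level for t, p in zip(y_true, y_pred))
        predicted = sum(p == level for p in y_pred)  # tasks the model put in this level
        actual = sum(t == level for t in y_true)     # tasks the coders put in this level
        # A level that is never predicted (or never present) is scored 0.0 by convention.
        precision = true_pos / predicted if predicted else 0.0
        recall = true_pos / actual if actual else 0.0
        per_level[level] = (precision, recall)
    macro_precision = sum(p for p, _ in per_level.values()) / len(labels)
    macro_recall = sum(r for _, r in per_level.values()) / len(labels)
    return per_level, macro_precision, macro_recall
```

Precision answers "of the tasks the model labeled high, how many did the coders also label high?" Recall answers "of the tasks the coders labeled high, how many did the model find?" A model can score well on one and poorly on the other, as the DT results for group social engagement (86.7% precision, 66.7% recall) illustrate.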

Path modeling and mediation analysis results for RQ2

The path modeling results are presented in Fig. 3 and Table 6. Figure 3 shows the graphical representation with path coefficients, and Table 6 presents the hypotheses, path coefficients (β), and p values. As Table 6 shows, H1a, H1c, and H2 are supported, while H1b and H1d are not. H1a posits that the group behavioral engagement of group members positively influences their group problem-solving performance. Students' group behavioral engagement has a statistically significant positive effect on group problem-solving performance (β = 0.34, p < 0.01); therefore, H1a is supported. H1b is rejected because group social engagement negatively affects group problem-solving performance (β = −0.49, p < 0.001), contrary to the hypothesized positive effect. H1c is supported because group cognitive engagement positively affects group problem-solving performance (β = 0.33, p < 0.05). H1d is not supported: group metacognitive engagement has no statistically significant influence on group problem-solving performance. H2 is supported: group problem-solving performance has a statistically significant positive effect on individual students' cognitive understanding.

Overall, group behavioral engagement has a slightly larger positive effect on group problem-solving performance than group cognitive engagement. Group social engagement has the largest effect in magnitude, although its influence on group problem-solving performance is negative. Group problem-solving performance influences individual cognitive understanding, although the effect is relatively small. The path modeling results showed that multifaceted engagement alone explains 17.5% of the variance in group problem-solving performance and 10.7% of the variance in individual cognitive understanding.

We further investigated the mediating effects in the path model using Baron and Kenny's (1986) three-step method; the results appear in Table 7. The direct link between group behavioral engagement and individual cognitive understanding is significant (0.08, p < 0.01), satisfying the first criterion. Group behavioral engagement is also significantly related to the mediating variable, group problem-solving performance (0.25, p < 0.05), meeting the second criterion. When group behavioral engagement and group problem-solving performance are entered in the same model, both significantly influence individual cognitive understanding (0.06, p < 0.05; 0.08, p < 0.01). Therefore, group problem-solving performance partially mediates the influence of group behavioral engagement on individual cognitive understanding.

The direct link between group social engagement and individual cognitive understanding is significant (−0.08, p < 0.05), satisfying the first criterion. Group social engagement is also significantly related to the mediating variable, group problem-solving performance (−0.34, p < 0.01), meeting the second criterion. When group social engagement and group problem-solving performance are entered in the same model, group problem-solving performance has a statistically significant influence on individual cognitive understanding (0.09, p < 0.05), whereas group social engagement does not. Therefore, group problem-solving performance fully mediates the impact of group social engagement on individual cognitive understanding. Because group cognitive engagement is not significantly related to group problem-solving performance and individual understanding in this analysis, group problem-solving performance does not mediate the effect of group cognitive engagement on individual understanding.

Discussions

In the present research, we examined the multifaceted engagement, group problem-solving performance, and individual cognitive understanding of 99 college students working in a CSCL environment. We first built learning analytics models that use supervised machine learning to analyze log data and chat messages and detect the multifaceted forms of engagement. Second, we performed path modeling and mediation analysis to reveal the intertwined relationships among the various aspects of engagement, group problem-solving performance, and individual cognitive understanding.

The results support the hypothesis that group behavioral and cognitive engagement positively affect group problem-solving performance. To solve the tasks, the students needed to apply knowledge related to Ohm's law to propose possible solutions, communicate with peers, make plans, experiment, monitor their experimentation, and refine their plans if necessary. These processes involved group behavioral and cognitive engagement. Behavioral engagement, or on-task participation, is necessary for high-quality collaborative engagement (Sinha et al., 2015) because the withdrawal of an individual's participation usually results in lost collaborative opportunities and whole-group disengagement (Van den Bossche et al., 2006). However, behavioral engagement alone is not sufficient for high-quality collaboration, because students who are not cognitively engaged are unlikely to solve problems or learn (Engle & Conant, 2002). Similarly, Barron (2003) found that how students responded to ideas differentiated successful from less successful groups. Successful groups accepted or further discussed proposals and documented them, whereas less successful groups responded to ideas with silence or with rejections that lacked a rationale. In this study, using formulas to calculate, using Ohm's law for reasoning, and strategically adjusting the resistance represent students' efforts to apply their knowledge of Ohm's law to solve the tasks. Among these cognitive practices, some participants worked strategically. They measured the voltage, increased the resistance if the measured voltage across the resistor was lower than the goal voltage, and lowered the resistance if it was higher than the goal voltage. This behavior indicates that they understood the relationship between a resistor's resistance and the voltage across it, perhaps in a simpler form. This phenomenon also helps explain why group behavioral and cognitive engagement positively affect group problem-solving performance.
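The strategic adjustment described above works because, in a series circuit, the voltage across one resistor, V = E·R / (R + R_other), rises monotonically with its resistance R. Repeatedly moving R toward the goal therefore converges, and a bisection search is the tidiest version of that feedback loop. The Python sketch below makes several assumptions. It uses an ideal source of EMF `emf` and lumps the group's other resistances (and any internal resistance) into a fixed positive `r_other`. The values (9 V, 300 Ω, 3 V goal) are hypothetical. The sketch abstracts the students' trial and error, which need not halve the interval at every step.

```python
def voltage_across(r, r_other, emf):
    """Voltage across resistor r in a series loop whose other resistance totals r_other (> 0)."""
    return emf * r / (r + r_other)


def tune_resistor(goal_v, r_other, emf, r_lo=0.0, r_hi=10_000.0, tol=1e-3, max_steps=100):
    """Adjust one resistor until the measured voltage is within tol of goal_v.

    Follows the strategy in the text: measure, raise the resistance when the voltage is
    below the goal, lower it when the voltage is above. Halving the search interval each
    time (bisection) converges because the voltage increases monotonically with r.
    """
    if not voltage_across(r_lo, r_other, emf) < goal_v < voltage_across(r_hi, r_other, emf):
        raise ValueError("goal voltage is not reachable within [r_lo, r_hi]")
    for step in range(1, max_steps + 1):
        r = (r_lo + r_hi) / 2
        v = voltage_across(r, r_other, emf)  # the "measurement"
        if abs(v - goal_v) <= tol:
            break
        if v < goal_v:
            r_lo = r  # voltage too low: increase the resistance
        else:
            r_hi = r  # voltage too high: decrease the resistance
    return r, v, step
```

For example, `tune_resistor(3.0, 300.0, 9.0)` settles near 150 Ω, where 9 × 150 / (150 + 300) = 3 V. It reaches the goal well within the step limit.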

Surprisingly, the results showed that group social engagement negatively affected group problem-solving performance. One possible reason is how we measured group social engagement: the time delay between messages, the difference in the number of messages sent by each group member, and the number of reciprocal messages between group members. We did not, however, analyze the content of the messages, such as whether the students were on task, discussed how to solve the problems, or simply chatted for fun. Off-topic discussion and other forms of social interaction do not significantly affect problem-solving results (Kapur, 2008). Even if students frequently reported the voltage across their resistors to each other, which indicates high group social engagement, they might still fail to solve the tasks if they did not discuss strategies or use content knowledge. Our previous studies on the same dataset (Popov et al., 2018; Zhu et al., 2019) showed that more than 60% of group chat messages were about understanding the tasks and monitoring group progress. This suggests that group social engagement may not necessarily contribute to knowledge construction or problem solving. From the perspective of Vygotsky's (1978) zone of proximal development (ZPD), there may not have been “more capable peers” (p. 86) in each group. Alternatively, there may not have been an adequate social space for such peers to contribute cognitive knowledge that scaffolds the potential development of other group members within their ZPD. Further research is needed to create ideal social and cognitive environments for optimal collaborative problem solving.
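A sketch of content-blind social engagement features shows why such measures cannot tell task talk from off-topic chat. The Python sketch below computes three features of the kind described: delay between messages, differences in members' message counts, and reciprocal messages. The exact operationalizations used in the study are defined in the Methods and may differ. The definitions here (mean gap between consecutive messages, most-minus-least active member's count, and changes of sender between consecutive messages) are assumptions.

```python
from statistics import fmean


def social_features(chat, members):
    """Compute three illustrative, content-blind social engagement features for one group.

    chat: list of (timestamp_seconds, sender) tuples sorted by time.
    members: every group member, so that silent members count as zero messages
             (a sender missing from members raises KeyError).
    """
    times = [t for t, _ in chat]
    senders = [s for _, s in chat]
    gaps = [b - a for a, b in zip(times, times[1:])]
    counts = {m: 0 for m in members}
    for s in senders:
        counts[s] += 1
    return {
        # average delay between consecutive messages (lower = more responsive)
        "mean_delay": fmean(gaps) if gaps else None,
        # spread in message counts between the most and least active members
        "count_gap": max(counts.values()) - min(counts.values()),
        # consecutive messages from different members, a proxy for reciprocity
        "reciprocal": sum(a != b for a, b in zip(senders, senders[1:])),
    }
```

A joke and a message reporting a measured voltage contribute identically to all three features. This is the limitation discussed above.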

Another surprising result was that the effect of group metacognitive engagement on group problem-solving performance was not significant. This result may relate to the low proportion of messages reflecting group metacognitive engagement in our data, as suggested by the relatively low mean value of group metacognitive engagement. Another possible explanation is that the group members did not co-construct plans. Instead, one group member proposed a plan and monitored the problem-solving process, while the other group members did not understand the plan and therefore could not execute it appropriately to solve the task as a group. Alternatively, as Popov et al. (2017) suggested, students might reason based on misconceptions and false beliefs. In either case, group metacognitive engagement would not necessarily lead to successful problem solving.

The results show that group problem-solving performance positively influences individual cognitive understanding, suggesting that whether a group solves the tasks successfully affects its members' cognitive understanding. One reason may be that successful problem solving requires every group member to collaborate in adjusting their resistors. To persuade the other group members to execute a plan, a student might elaborate on his or her proposal using content knowledge. In this way, the other group members had opportunities to learn domain-specific knowledge in an applied context, which may have enhanced their cognitive understanding. The effect of group problem-solving performance on individual cognitive understanding may be relatively small because students did not frequently elaborate on proposals in their groups (Xing et al., 2019). In addition, students could sometimes solve tasks successfully by following other group members' proposals without understanding the reasons behind them. Furthermore, students' prior cognitive understanding, which was not measured in this study, might play a more important role in determining individual cognitive understanding after the tasks.

The impact of group social engagement on individual cognitive understanding is fully mediated by group problem-solving performance, whereas the impact of group behavioral engagement on individual cognitive understanding is only partially mediated. Full mediation of the social engagement effect suggests that communication between students benefited individual cognitive understanding only when it concerned solving the tasks and the group solved them successfully. In contrast, the literature on productive failure demonstrates that complex and divergent group discussions can still foster individual cognitive understanding and the transfer of problem-solving skills, even when they end in failure (Kapur, 2008). In that work, student groups engaged in ill-structured rather than well-structured problem-solving tasks. Group problem-solving performance may only partially mediate the impact of group behavioral engagement for the reason discussed above: by adjusting the resistors and measuring the voltage across them, students might achieve a better understanding of the relationship between resistance and voltage in a series circuit. In this way, students might improve their understanding even if they did not solve the group tasks. Therefore, the group problem-solving process provided one way for the students to improve their cognitive understanding, and the students could also improve their understanding by experimenting with the platform individually.

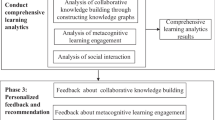

This study has theoretical, methodological, and instructional implications for measuring and fostering multifaceted engagement using learning analytics. Theoretically, this research employed both behavioral logs and chat messages collected during the collaborative learning process. It deconstructed engagement into sub-constructs (group behavioral, social, cognitive, and metacognitive engagement) and detected engagement at a much finer granularity using learning analytics. In doing so, it contributes to the literature on measuring students' engagement in collaborative learning. Methodologically, most previous studies measured engagement with traditional social science methods, such as self-report questionnaires, interviews, and reflections, often in a post hoc manner (Fredricks et al., 2004; Henrie et al., 2015). That is, they examined students' engagement after the students had finished the learning tasks (Guertin et al., 2007; Sun & Rueda, 2012). In this study, we showed that it is possible to detect students' engagement automatically using log data and chat messages. Such automatic detection lays the foundation for monitoring students' engagement in real time and more comprehensively, rather than measuring only one aspect of engagement. From an instructional and practical perspective, educators and researchers must understand that implementing CSCL requires considerable preparation. Simply sending students off to work in CSCL groups does not guarantee productive engagement and successful collaboration. As automated support for teachers, the detection results can be incorporated into an automatic agent and serve as timely feedback to support potential interventions. The interventions can be delivered either through instructors or through learning platforms embedded with the automatic agent. 
For instance, prompts can remind students to engage in their tasks behaviorally and cognitively if group social engagement is high while behavioral and cognitive engagement are not. The detection results may also inform instructors on how to adjust their classroom instruction and activities. For example, if most groups show relatively low levels of group cognitive engagement, the cause may be a lack of relevant domain-specific knowledge and disciplinary practices. It may also be a failure to build a safe environment in which group members can elaborate on their ideas. Strategies should be developed to address these issues.
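The feedback idea above can be sketched as a simple rule-based layer on top of the detection models' output. In this Python sketch, the facet keys, the rules, and the prompt wording are hypothetical illustrations, not rules derived from the study. An actual agent would need its rules evaluated with students and teachers.

```python
LEVEL_SCORE = {"low": 0, "medium": 1, "high": 2}


def suggest_prompts(levels):
    """Map one group's detected engagement levels to hypothetical feedback prompts.

    levels: dict with keys "behavioral", "social", "cognitive", "metacognitive",
    each mapped to "low", "medium", or "high" (the detection models' output).
    """
    score = {facet: LEVEL_SCORE[level] for facet, level in levels.items()}
    prompts = []
    # High social engagement without matching task work: nudge toward behavioral and cognitive engagement.
    if score["social"] == 2 and max(score["behavioral"], score["cognitive"]) < 2:
        prompts.append("Try your ideas in the circuit and explain them with Ohm's law.")
    # Low cognitive engagement: ask for domain reasoning.
    if score["cognitive"] == 0:
        prompts.append("How does changing your resistor affect the voltage across it?")
    # Low metacognitive engagement: ask for goals, plans, and monitoring.
    if score["metacognitive"] == 0:
        prompts.append("Agree on a goal and a plan, then check your progress together.")
    return prompts
```

Keeping the rules separate from the detection models means instructors could revise the prompts without retraining anything.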

This study has several limitations. First, the tasks that the groups worked on were not open-ended problems but fixed tasks with correct answers. Although the tasks required students to communicate and collaborate, a group could solve a task if one group member knew how to do so and the others followed this student's instructions. This may have weakened the participants' need to engage behaviorally, socially, cognitively, and metacognitively in the group problem-solving process. Second, students' prior knowledge of Ohm's law was not tested or controlled in this study. This limitation may matter less in a study that focused on the group problem-solving process and randomly assigned students to small groups, but future research should address it. Third, this exploratory study represents an ambitious effort to model students' multifaceted engagement using log and chat data and to uncover the relationships among different types of group engagement, group problem solving, and individual cognitive understanding. Accordingly, many concepts and analyses (e.g., multifaceted engagement, group problem solving, individual cognitive understanding, learning analytics, and path modeling) were included in the study. Future research can extend the current study by collecting and labeling more data to improve the accuracy of the multifaceted engagement detection models. Such work can also verify the relationships among the multifaceted forms of engagement, group problem-solving processes, and individual cognitive understanding. Future studies can also replicate this study in other content areas and collaborative learning environments to examine the generalizability of the findings. Finally, the learning analytics models we developed suggest the possibility of building a learning analytics agent that detects students' multifaceted engagement in real time and provides students with timely feedback. 
It is worth examining how to work with such an agent to promote student learning. A starting point might be to study how to improve detection accuracy and how to present feedback to students and teachers for optimal collaborative experiences and learning outcomes.

Conclusions

This study explores the use of learning analytics to understand the role of multifaceted engagement in collaborative learning. We found that group behavioral and cognitive engagement have significantly positive effects on group problem-solving performance, while group social engagement has a significantly negative effect. Group problem-solving performance in turn has a significant positive effect on individual cognitive understanding. It partially mediates the impact of group behavioral engagement and fully mediates the impact of group social engagement on individual cognitive understanding. This study bridges CSCL and learning analytics and addresses a priority of CSCL research: the use of computational approaches (Wise & Schwarz, 2017). It contributes methodologically to the engagement research and learning analytics communities. It also generates new understanding of the interplay between the different aspects of engagement and collaborative learning processes.

References

Akçapınar, G., Altun, A., & Aşkar, P. (2019). Using learning analytics to develop early-warning system for at-risk students. International Journal of Educational Technology in Higher Education, 16(1), 1–20.

Alwin, D. F., & Hauser, R. M. (1975). The decomposition of effects in path analysis. American Sociological Review. https://doi.org/10.2307/2094445

Arguedas, M., Daradoumis, T., & Xhafa, F. (2016). Analyzing how emotion awareness influences students’ motivation, engagement, self-regulation and learning outcome. Educational Technology & Society, 19(2), 87–103.

Azevedo, R. (2015). Defining and measuring engagement and learning in science: Conceptual, theoretical, methodological, and analytical issues. Educational Psychologist, 50(1), 84–94. https://doi.org/10.1080/00461520.2015.1004069

Baker, R., & Siemens, G. (2014). Educational data mining and learning analytics. In K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (2nd ed., pp. 253–274). Cambridge University Press.

Baron, R. M., & Kenny, D. A. (1986). The moderator–mediator variable distinction in social psychological research: Conceptual, strategic, and statistical considerations. Journal of Personality and Social Psychology, 51(6), 1173–1182. https://doi.org/10.1037//0022-3514.51.6.1173

Barron, B. (2003). When smart groups fail. The Journal of the Learning Sciences, 12(3), 307–359. https://doi.org/10.1207/S15327809JLS1203_1

Chen, J., Wang, M., Kirschner, P. A., & Tsai, C.-C. (2018). The role of collaboration, computer use, learning environments, and supporting strategies in CSCL: A meta-analysis. Review of Educational Research, 88(6), 799–843. https://doi.org/10.3102/0034654318791584

Cocea, M., & Weibelzahl, S. (2011). Disengagement detection in online learning: Validation studies and perspectives. IEEE Transactions on Learning Technologies, 4, 114–124. https://doi.org/10.1109/TLT.2010.14

Csanadi, A., Eagan, B., Kollar, I., Shaffer, D. W., & Fischer, F. (2018). When coding-and-counting is not enough: Using epistemic network analysis (ENA) to analyze verbal data in CSCL research. International Journal of Computer-Supported Collaborative Learning, 13(4), 419–438. https://doi.org/10.1007/s11412-018-9292-z

Dalal, M. K., & Zaveri, M. A. (2011). Automatic text classification: A technical review. International Journal of Computer Applications, 28(2), 37–40. https://doi.org/10.5120/3358-4633

Dillenbourg, P. (1999). What do you mean by collaborative learning? In P. Dillenbourg (Ed.), Collaborative learning: Cognitive and computational approaches (pp. 1–19). Pergamon.

Duit, R., & Treagust, D. (1998). Learning in science: From behaviorism towards social constructivism and beyond. In B. J. Fraser & K. G. Tobin (Eds.), International handbook of science education. Dordrecht: Kluwer Academic Publishers.

Engle, R. A., & Conant, F. R. (2002). Guiding principles for fostering productive disciplinary engagement: Explaining an emergent argument in a community of learners classroom. Cognition and Instruction, 20, 399–483. https://doi.org/10.1207/S1532690XCI2004_1

Erkens, G., Jaspers, J., Prangsma, M., & Kanselaar, G. (2005). Coordination processes in computer supported collaborative writing. Computers in Human Behavior, 21(3), 463–486. https://doi.org/10.1016/j.chb.2004.10.038

Fredricks, J. A., Blumenfeld, P. C., & Paris, A. H. (2004). School engagement: Potential of the concept, state of the evidence. Review of Educational Research, 74(1), 59–109. https://doi.org/10.3102/00346543074001059

Garson, G. D. (2013). Path analysis. Statistical Associates Publishing.

Gijlers, H., & de Jong, T. (2009). Sharing and confronting propositions in collaborative learning. Cognition and Instruction, 27(3), 239–268. https://doi.org/10.1080/07370000903014352

Gobert, J. D., Baker, R. S., & Wixon, M. B. (2015). Operationalizing and detecting disengagement within online science microworlds. Educational Psychologist, 50(1), 43–57. https://doi.org/10.1080/00461520.2014.999919

Graesser, A. C., Fiore, S. M., Greiff, S., Andrews-Todd, J., Foltz, P. W., & Hesse, F. W. (2018). Advancing the science of collaborative problem solving. Psychological Science in the Public Interest, 19(2), 59–92. https://doi.org/10.1177/1529100618808244

Gresalfi, M., & Barab, S. (2010). Learning for a reason: Supporting forms of engagement by designing tasks and orchestrating environments. Theory into Practice, 50, 300–310. https://doi.org/10.1080/00405841.2011.607391

Gresalfi, M., Barab, S., Siyahhan, S., & Christensen, T. (2009). Virtual worlds, conceptual understanding, and me: Designing for consequential engagement. On the Horizon, 17, 21–34. https://doi.org/10.1108/10748120910936126

Griffin, P., McGaw, B., & Care, E. (2012). Assessment and teaching of 21st century skills. Melbourne, Australia: Springer. https://doi.org/10.1007/978-94-007-2324-5

Guertin, L. A., Bodek, M. J., Zappe, S. E., & Kim, H. (2007). Questioning the student use of and desire for lecture podcasts. MERLOT Journal of Online Learning and Teaching, 3(2), 133–141.

Guo, P. J., Kim, J., & Rubin, R. (2014). How video production affects student engagement: An empirical study of MOOC videos. In Proceedings of the First ACM Conference on Learning @ Scale (pp. 41–50). ACM. https://doi.org/10.1145/2556325.2566239

Hasan, R., Palaniappan, S., Mahmood, S., Abbas, A., Sarker, K. U., & Sattar, M. U. (2020). Predicting student performance in higher educational institutions using video learning analytics and data mining techniques. Applied Sciences, 10(11), 3894.

Henrie, C. R., Halverson, L. R., & Graham, C. R. (2015). Measuring student engagement in technology-mediated learning: A review. Computers & Education, 90, 36–53. https://doi.org/10.1016/j.compedu.2015.09.005

Hmelo-Silver, C. E. (2003). Analyzing collaborative knowledge construction: Multiple methods for integrated understanding. Computers & Education, 41(4), 397–420. https://doi.org/10.1016/j.compedu.2003.07.001

Ifenthaler, D., & Widanapathirana, C. (2014). Development and validation of a learning analytics framework: Two case studies using support vector machines. Technology, Knowledge and Learning, 19(1–2), 221–240.

Jeong, H., Hmelo-Silver, C. E., Jo, K., & Shin, M. (2016). CSCL in STEM education: Preliminary findings from a meta-analysis. In 2016 49th Hawaii international conference on system sciences (HICSS) (pp. 11–20). IEEE. https://doi.org/10.1109/HICSS.2016.11

Jung, Y., & Lee, J. (2018). Learning engagement and persistence in massive open online courses (MOOCS). Computers & Education, 122, 9–22. https://doi.org/10.1016/j.compedu.2018.02.013

Kapur, M. (2008). Productive failure. Cognition and Instruction, 26(3), 379–424. https://doi.org/10.1080/07370000802212669

Kearsley, G., & Shneiderman, B. (1999). Engagement theory: A framework for technology-based learning and teaching. Educational Technology, 38(5), 20–23.

Khalil, M., & Ebner, M. (2017). Clustering patterns of engagement in massive open online courses (MOOCs): The use of learning analytics to reveal student categories. Journal of Computing in Higher Education, 29(1), 114–132. https://doi.org/10.1007/s12528-016-9126-9

King, A. (1997). ASK to THINK-TELL WHY: A model of transactive peer tutoring for scaffolding higher level complex learning. Educational Psychologist, 32(4), 221–235. https://doi.org/10.1207/s15326985ep3204_3

King, A. (2002). Structuring peer interaction to promote high-level cognitive processing. Theory into Practice, 41(1), 33–39. https://doi.org/10.1207/s15430421tip4101_6

Kirschner, P. A., Beers, P. J., Boshuizen, H. P., & Gijselaers, W. H. (2008). Coercing shared knowledge in collaborative learning environments. Computers in Human Behavior, 24(2), 403–420. https://doi.org/10.1016/j.chb.2007.01.028

Kollar, I., Fischer, F., & Slotta, J. D. (2007). Internal and external scripts in computer-supported collaborative inquiry learning. Learning & Instruction, 17(6), 708–721. https://doi.org/10.1016/j.learninstruc.2007.09.021

Kovanović, V., Joksimović, S., Waters, Z., Gašević, D., Kitto, K., Hatala, M., & Siemens, G. (2016). Towards automated content analysis of discussion transcripts: A cognitive presence case. In Proceedings of the sixth international conference on learning analytics & knowledge (pp. 15–24).

Kwon, K., Liu, Y. H., & Johnson, L. P. (2014). Group regulation and social-emotional interactions observed in computer supported collaborative learning: Comparison between good vs. poor collaborators. Computers & Education, 78, 185–200. https://doi.org/10.1016/j.compedu.2014.06.004

Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33, 159–174. https://doi.org/10.2307/2529310

Linnenbrink-Garcia, L., Rogat, T. K., & Koskey, K. L. (2011). Affect and engagement during small group instruction. Contemporary Educational Psychology, 36(1), 13–24. https://doi.org/10.1016/j.cedpsych.2010.09.001

Long, P., & Siemens, G. (2011). Penetrating the fog: Analytics in learning and education. EDUCAUSE Review, 46(5), 30–40. https://doi.org/10.17471/2499-4324/195

Lu, O. H., Huang, J. C., Huang, A. Y., & Yang, S. J. (2017). Applying learning analytics for improving students engagement and learning outcomes in an MOOCs enabled collaborative programming course. Interactive Learning Environments, 25(2), 220–234. https://doi.org/10.1080/10494820.2016.1278391

Monllaó Olivé, D., Huynh, D. Q., Reynolds, M., Dougiamas, M., & Wiese, D. (2020). A supervised learning framework: Using assessment to identify students at risk of dropping out of a MOOC. Journal of Computing in Higher Education, 32(1), 9–26. https://doi.org/10.1007/s12528-019-09230-1

Mullins, D., Rummel, N., & Spada, H. (2011). Are two heads always better than one? Differential effects of collaboration on students’ computer-supported learning in mathematics. International Journal of Computer-Supported Collaborative Learning, 6(3), 421–443. https://doi.org/10.1007/s11412-011-9122-z

Murugan, A., Nair, S. A. H., & Kumar, K. S. (2019). Detection of skin cancer using SVM, random forest and kNN classifiers. Journal of Medical Systems, 43(8), 1–9.

Pardo, A., Jovanovic, J., Dawson, S., Gašević, D., & Mirriahi, N. (2019). Using learning analytics to scale the provision of personalized feedback. British Journal of Educational Technology, 50(1), 128–138. https://doi.org/10.1111/bjet.12592

Pekrun, R., & Linnenbrink-Garcia, L. (2012). Academic emotions and student engagement. In S. L. Christenson, A. L. Reschly, & C. Wylie (Eds.), Handbook of research on student engagement (pp. 259–282). Springer. https://doi.org/10.1007/978-1-4614-2018-7_12

Phan, T., McNeil, S. G., & Robin, B. R. (2016). Students’ patterns of engagement and course performance in a massive open online course. Computers & Education, 95, 36–44. https://doi.org/10.1016/j.compedu.2015.11.015

Pifarre, M., & Cobos, R. (2010). Promoting metacognitive skills through peer scaffolding in a CSCL environment. International Journal of Computer-Supported Collaborative Learning, 5(2), 237–253. https://doi.org/10.1007/s11412-010-9084-6

Ploetzner, R., Dillenbourg, P., Preier, M., & Traum, D. (1999). Learning by explaining to oneself and to others. In P. Dillenbourg (Ed.), Collaborative learning: Cognitive and computational approaches (pp. 103–121). Elsevier.

Popov, V., van Leeuwen, A., & Buis, S. C. A. (2017). Are you with me or not? Temporal synchronicity and transactivity during CSCL. Journal of Computer Assisted Learning, 33(5), 424–442. https://doi.org/10.1111/jcal.12185

Popov, V., Xing, W., Zhu, G., Horwitz, P., & McIntyre, C. (2018, June). The influence of students’ transformative and non-transformative contributions on their problem solving in collaborative inquiry learning. In Proceedings of the 13th international conference of the learning sciences (pp. 855–862). International Society of the Learning Sciences.

Richardson, J. C., Maeda, Y., Lv, J., & Caskurlu, S. (2017). Social presence in relation to students’ satisfaction and learning in the online environment: A meta-analysis. Computers in Human Behavior, 71, 402–417. https://doi.org/10.1016/j.chb.2017.02.001

Rizvi, S., Rienties, B., & Khoja, S. A. (2019). The role of demographics in online learning; A decision tree based approach. Computers & Education, 137, 32–47.

Rogat, T. K., & Adams-Wiggins, K. R. (2015). Interrelation between regulatory and socioemotional processes within collaborative groups characterized by facilitative and directive other-regulation. Computers in Human Behavior, 52, 589–600. https://doi.org/10.1016/j.chb.2015.01.026

Rogat, T. K., & Linnenbrink-Garcia, L. (2011). Socially shared regulation in collaborative groups: An analysis of the interplay between quality of social regulation and group processes. Cognition and Instruction, 29(4), 375–415. https://doi.org/10.1080/07370008.2011.607930

Shah, K., Patel, H., Sanghvi, D., & Shah, M. (2020). A comparative analysis of logistic regression, random forest and KNN models for the text classification. Augmented Human Research, 5(1), 1–16.

Sinatra, G. M., Heddy, B. C., & Lombardi, D. (2015). The challenges of defining and measuring student engagement in science. Educational Psychologist, 50(1), 1–13. https://doi.org/10.1080/00461520.2014.1002924

Sinha, S., Rogat, T. K., Adams-Wiggins, K. R., & Hmelo-Silver, C. E. (2015). Collaborative group engagement in a computer-supported learning environment. International Journal of Computer-Supported Collaborative Learning, 10(3), 273–307. https://doi.org/10.1007/s11412-015-9218-y

Stahl, G., Koschmann, T., & Suthers, D. (2006). Computer-supported collaborative learning: An historical perspective. In R. K. Sawyer (Ed.), Cambridge handbook of the learning sciences (pp. 409–426). Cambridge University Press.

Stegmann, K., Kollar, I., Zottmann, J., Gijlers, H., De Jong, T., Dillenbourg, P., & Fischer, F. (2007). Towards the convergence of CSCL and learning: Scripting collaborative learning. In Proceedings of the 8th international conference on Computer-supported collaborative learning (pp. 831–832). International Society of the Learning Sciences.

Sun, J. C. Y., & Rueda, R. (2012). Situational interest, computer self-efficacy and self-regulation: Their impact on student engagement in distance education. British Journal of Educational Technology, 43(2), 191–204. https://doi.org/10.1111/j.1467-8535.2010.01157.x

Van den Bossche, P., Gijselaers, W. H., Segers, M., & Kirschner, P. A. (2006). Social and cognitive factors driving teamwork in collaborative learning environments: Team learning beliefs and behaviors. Small Group Research, 37, 490–521. https://doi.org/10.1177/1046496406292938

van Zyl, J. M., Neudecker, H., & Nel, D. G. (2000). On the distribution of the maximum likelihood estimator of Cronbach’s alpha. Psychometrika, 65(3), 271–280. https://doi.org/10.1007/BF02296146

Vogel, F., Wecker, C., Kollar, I., & Fischer, F. (2017). Socio-cognitive scaffolding with computer-supported collaboration scripts: A meta-analysis. Educational Psychology Review, 29(3), 477–511. https://doi.org/10.1007/s10648-016-9361-7

Vygotsky, L. S. (1978). Mind in society. Harvard University Press.

Weinberger, A., Ertl, B., Fischer, F., & Mandl, H. (2005). Epistemic and social scripts in computer-supported collaborative learning. Instructional Science, 33(1), 1–30. https://doi.org/10.1007/s11251-004-2322-4

Wise, A. F., & Schwarz, B. B. (2017). Visions of CSCL: Eight provocations for the future of the field. International Journal of Computer-Supported Collaborative Learning, 12(4), 423–467. https://doi.org/10.1007/s11412-017-9267-5

Wiyono, S., Wibowo, D. S., Hidayatullah, M. F., & Dairoh, D. (2020). Comparative study of KNN, SVM and decision tree algorithm for student’s performance prediction. International Journal of Computing Science and Applied Mathematics, 6(2), 50–53.

Xing, W., Guo, R., Petakovic, E., & Goggins, S. (2015a). Participation-based student final performance prediction model through interpretable Genetic Programming: Integrating learning analytics, educational data mining and theory. Computers in Human Behavior, 47, 168–181. https://doi.org/10.1016/j.chb.2014.09.034

Xing, W., Popov, V., Zhu, G., Horwitz, P., & McIntyre, C. (2019). The effects of transformative and non-transformative discourse on individual performance in collaborative-inquiry learning. Computers in Human Behavior, 98, 267–276.

Xing, W., Wadholm, R., Petakovic, E., & Goggins, S. (2015b). Group learning assessment: Developing a theory-informed analytics. Journal of Educational Technology & Society, 18(2), 110–128. https://www.jstor.org/stable/jeductechsoci.18.2.110

Ye, D., & Pennisi, S. (2022). Using trace data to enhance students’ self-regulation: A learning analytics perspective. The Internet and Higher Education, 54, 100855. https://doi.org/10.1016/j.iheduc.2022.100855

Zhang, X., Meng, Y., de Pablos, P. O., & Sun, Y. (2019). Learning analytics in collaborative learning supported by Slack: From the perspective of engagement. Computers in Human Behavior, 92, 625–633. https://doi.org/10.1016/j.chb.2017.08.012

Zheng, J., Xing, W., Zhu, G., Chen, G., Zhao, H., & Xie, C. (2020). Profiling self-regulation behaviors in STEM learning of engineering design. Computers & Education, 143, 103669. https://doi.org/10.1016/j.compedu.2019.103669

Zhu, G., Xing, W., & Popov, V. (2019). Uncovering the sequential patterns in transformative and non-transformative discourse during collaborative inquiry learning. The Internet and Higher Education, 41, 51–61. https://doi.org/10.1016/j.iheduc.2019.02.001

Zimmerman, B. J. (1990). Self-regulated learning and academic achievement: An overview. Educational Psychologist, 25(1), 3–17. https://doi.org/10.1207/s15326985ep2501_2

Ethics declarations

Conflict of interest

None.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xing, W., Zhu, G., Arslan, O. et al. Using learning analytics to explore the multifaceted engagement in collaborative learning. J Comput High Educ 35, 633–662 (2023). https://doi.org/10.1007/s12528-022-09343-0

DOI: https://doi.org/10.1007/s12528-022-09343-0