Abstract

Crop mapping and acreage estimation are among the simplest yet most critical tasks in agriculture, and remote sensing technology has been used extensively for them over the past few decades. The objective of this study is to map sugarcane fields at a catchment level and segregate the plant and ratoon fields using the freely available Sentinel-1 and Sentinel-2 data. The study was carried out at the Kisan Sahkari Chini Mill catchment in the Saharanpur district of Uttar Pradesh. The objective was achieved by a two-step process. First, the sugarcane fields were identified using Random Forest and SVM classifiers over temporal optical and microwave images. The most accurate result was then used as a crop mask to separate the plant and ratoon fields, for which both a phenology-based classification and a spectral-based classification were attempted. The results revealed that temporal Sentinel-2 data are highly competent in classifying sugarcane at farm level and segregating the plant and ratoon fields. The sugarcane crop mask was created with a kappa coefficient of 0.95 using the SVM classifier, and the plant and ratoon fields were discriminated using the Random Forest classifier with a kappa coefficient of 0.81. The sugarcane crop area was estimated to be approximately 535 acres of plant crop and 560 acres of ratoon crop, while the mill estimates were 520 acres and 540 acres, respectively. The results showed that Sentinel-2 has promising capabilities and is a convenient asset in delineating small-sized farms and classifying sugarcane and its crop types.

Introduction

Agriculture is the chief source of social well-being in most parts of the world. In India, the importance of agriculture can hardly be overstated, as it plays an enormous role in the economy. The necessity and significance of timely and reliable estimation of crop acreage and production for decision-makers, including but not limited to producers, processors, managers and the government, is widely acknowledged (Nihar et al., 2019). Misleading production estimates give way to flawed policies regarding imports, exports and stockholding of agriculture-based commodities, and the resulting market distortion creates problems for farmers, traders and consumers. The risks and drawbacks of unreliable crop area and production estimates are therefore considerable for India, a country that caters to more than 1.3 billion people.

Sugarcane (Saccharum officinarum), a perennial cash crop of the grass family Poaceae, is one of the widely grown crops that contribute significantly to the national exchequer, and it employs over a million people in India. The crop is one of India's top priority commercial crops, and yet the official estimates frequently vary widely from those of the trade and industry. While sugarcane acreage information at a regional scale is vital for trade decisions, insurance and policy-making, it is also crucial at a field level to the crushing mills for budgeting, crop planning, commercial strategy, harvest scheduling, and field expansion or renewal.

Manual crop acreage estimation is cumbersome because of the vast areas involved, a limitation that satellite imagery readily overcomes. Remote Sensing (RS) is a powerful and compelling technology for crop inventory mapping, acreage estimation, monitoring, phenology extraction and production estimation of crops with reasonable accuracy, depending on sensor characteristics (Patel et al., 2006). Applications of RS technology for the agricultural industry have attracted research from scholars worldwide, and RS is now routinely used to answer many spatial queries regarding agriculture. Classification of crops to estimate their area and extent is among the most common applications of satellite-based RS. Crop inventory mapping is the basis for other studies such as yield estimation, parameter retrieval and the crop's response to the biotic and abiotic factors in its environment. With an appropriate selection of spatial and spectral resolution and processing techniques, RS technology can deliver satisfactory results for the sugarcane crop (Abdel-Rahman & Ahmed, 2008).

Several approaches have been developed to map crops accurately. A plethora of studies have evaluated different types of data, such as optical, microwave and hyperspectral data, to map sugarcane. While optical data provide information about pigments, cellular content and structure, microwave data are more responsive to the crop's geometry and dielectric properties. Pandey et al. (2019) estimated the sugarcane crop acreage using Landsat and IRS ResourceSat datasets with the help of extensive field sampling and illustrated the importance of the sensor's spatial resolution in mapping sugarcane at mill catchment level. Singla et al. (2018) proposed that the temporal profile of the Normalized Difference Vegetation Index (NDVI) can be efficiently used to discriminate the sugarcane crop types at any given scale. High-resolution LISS-IV data are often explored for sugarcane mapping in India (Singh et al., 2020; Verma et al., 2017). The synergistic use of optical and microwave datasets to map sugarcane has also been explored recently (Jiang et al., 2019; Wang et al., 2020).

Several studies have reported satisfactory results for the application of satellite imagery in mapping sugarcane. However, little research has addressed mapping the types of sugarcane, namely the plant and the ratoon crops. Ratooning is the practice of regrowing sugarcane from the existing roots of the crop after harvesting. While the planted crop grows over twelve months, the ratoon crop generally takes only nine to ten months. The ratoon crops grow faster as they already have a well-established root system. The plant crops provide higher sugar recovery rates and have a higher number of tillers. In this study, sugarcane and these crop types are classified using two major algorithms, Support Vector Machine (SVM) and Random Forest (RF).

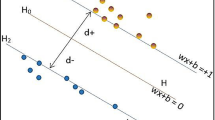

Recently, the advanced classifiers SVM and RF have drawn much attention from the scientific community due to their high accuracy (Mather & Tso, 2009). SVM remains robust and consistent regardless of high data dimensionality, data imbalance and limited training data (Sheykhmousa et al., 2019; Ustuner et al., 2016), while RF is more common due to its easy implementation, speed and insensitivity to overfitting (Belgiu & Drăguţ, 2016; Mountrakis et al., 2011). It is also reported that RF performs consistently better than SVM on lower resolution images, whereas SVM outperforms RF when classifying data containing a higher number of features (Sheykhmousa et al., 2020).

With the help of the two competent classifiers, mapping of sugarcane crop types at a farm level is performed over the freely available high-resolution Sentinel-1 and Sentinel-2 data. This study is an attempt to comparatively assess the viability and efficiency of the two characteristically different datasets to map sugarcane at field level and discriminate the crop type in a study area with an average field size of around 0.4 acres.

Materials and Methods

Study Area

The Kisan Sahkari Chini Mill (KSCM) catchment, located in the town of Nanauta in the Saharanpur district of Uttar Pradesh, was chosen as the area of interest for this study. Saharanpur is bordered by the Dehradun and Haridwar districts of Uttarakhand to the north and east, respectively, by the Karnal and Ambala districts of Haryana to the west, and by the Muzaffarnagar district of Uttar Pradesh to the south. The catchment area extends between 29.62 °N and 29.83 °N latitude, and between 77.20 °E and 77.54 °E longitude. The mill catchment contains sugarcane fields from around 165 villages covering a total area of over 460 square kilometres, with an average sugarcane field size of 0.4 acres. The mill currently has a cane crushing capacity of 5000 tons per day, with an average sugarcane yield of 833 quintals/hectare and an average sugar return of 8.45%.

The heart of the economy of this district is agriculture with sugarcane as the principal commercial crop. Rice, wheat, mustard, potato and tomato are among the other crops grown in the area. Mango orchards and Poplar plantations are also commonly found here. The Saharanpur district experiences a humid subtropical climate with annual precipitation ranging from 750 to 1200 mm and temperature ranging from 4 °C in winters up to 40 °C in summers. Figure 1 shows a false colour composite image of the KSCM catchment.

Data Used

The C-band radar imaging datasets of the European Union's Sentinel-1A and the optical Earth observation datasets of the twin satellites Sentinel-2A and Sentinel-2B were used in this study for mapping sugarcane in the catchment area. The Sentinel-2 mission provides optical images at a revisit interval of 5 days and a spatial resolution of up to 10 m, supporting an extensive range of crop monitoring applications. The bottom of atmosphere reflectance (Level 2A) products (available globally since 2018) were collected for the period January 2019 to January 2020 (77 images) to monitor and extract the field parcels' phenology in the study area. The study area lies on the junction of two image tiles, and hence all the images had to be mosaicked. Out of this dataset, one cloud-free image each from April, May, October and November was chosen for the spectral classification. Table 1 shows a list of all the satellite imagery used in this study.

Four Sentinel-1A SAR images from April, June, August and October were collected for this study to map sugarcane field parcels. These images were downloaded as Level-1 Ground Range Detected (GRD) products acquired in Interferometric Wide (IW) swath mode. The four images were pre-processed to obtain the VV and VH polarized backscatter images, which were then stacked.

Around 250 Ground Truth (GT) Points were collected in the last week of September 2019. The sugarcane crop type and approximate dates of sowing and harvest were also collected along with other land-use information. These GT points were used to train and validate the classifiers in an 80:20 split, respectively.
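The 80:20 partition of the GT points can be sketched as follows. This is only an illustration under stated assumptions: the paper reports the ratio but not the procedure, so the per-class stratification, the fixed random seed, the function name `stratified_split` and the synthetic class counts are all hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.8, seed=42):
    """Return (train_idx, test_idx), splitting each class separately.

    Stratifying by class is an assumption of this sketch; the source
    only reports an overall 80:20 split.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        cut = round(len(indices) * train_fraction)
        train_idx.extend(indices[:cut])
        test_idx.extend(indices[cut:])
    return sorted(train_idx), sorted(test_idx)


# Hypothetical GT labels: 250 points across three illustrative classes.
gt_labels = ["plant"] * 100 + ["ratoon"] * 100 + ["other"] * 50
train_idx, test_idx = stratified_split(gt_labels)
print(len(train_idx), len(test_idx))
```

Splitting within each class keeps rare classes represented in the evaluation set, which matters when only a few hundred points are available.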

Methodology

This study aims to map the sugarcane field parcels in the KSCM catchment and classify the sugarcane plant and ratoon crops using machine learning methods and estimate their area. This is achieved by using a two-step process where the sugarcane crop field parcels are separated from the other land-use classes first, and the fields are then segregated as plant or ratoon. Temporal microwave and optical data were used for this purpose. Both object-based and pixel-based approaches were performed to map the field parcels. The overall flow-chart and steps involved in discrimination of sugarcane crop types in the mill catchment area and for one specific village (Gudamb) are presented in Fig. 2.

Sugarcane Field Mapping

Mapping of the sugarcane crop fields was performed by object-based and pixel-based classifications using the SVM and RF classifiers. Image segmentation was done on a cloud-free optical image of October 2019, resulting in several objects or segments. The image objects layer was used to support the object-based classification for both optical and SAR images. Temporal image composites were prepared from the four SAR images and four optical images using the data listed in Table 1. The classification was carried out to separate the major classes such as settlement, water-body, sugarcane, paddy, vegetables, mango and poplar plantations. The different types of classification carried out were: (1) object-based classification on a single-date optical image, (2) object-based classification on the temporal optical image, (3) object-based classification on the temporal SAR image and (4) pixel-based classification over the temporal optical image. The points from the GT campaign were used as training points for the classifiers, split into 80% for training and 20% for evaluation. The sugarcane crop mask was obtained from the best performing classifier and dataset combination after eliminating the other land-use classes.

Sugarcane Plant Versus Ratoon Discrimination

After separation of the sugarcane field parcels from the other pixels, they were categorized into plant and ratoon crops using the SVM and RF classifiers. The plant versus ratoon segregation was performed using (1) the temporal optical image and (2) an image stack of vegetation phenology parameters. Vegetation phenology refers to seasonal changes or life events in a crop's growing period. Phenology metrics for the sugarcane crop were derived with the TIMESAT software using temporal NDVI data computed from Sentinel-2 images for the period January 2019 to January 2020. TIMESAT provides an interface to fit a time series of vegetation index data to nonlinear mathematical functions (Eklundh & Jönsson, 2017) and generates smooth functions from which phenological parameters are extracted. The curve fitting is based on least-squares fits in which local functions are merged into global functions that describe NDVI over the full seasonal cycle. For this study, the Asymmetric Gaussian model was fitted to a time series of NDVI images. The temporal resolution was coarsened from 5 to 15 days by taking a maximum NDVI pixel composite to avoid cloud interference in the optical data. The phenological metrics, namely Start of Season (SOS), End of Season (EOS), Length of the growing period or season (LOS), maximum NDVI value during the growing period, time of occurrence of the maximum value, value of NDVI at SOS and at EOS, and amplitude, were extracted from the NDVI data. The eight parameters extracted from TIMESAT were written into images, and a composite image was made for the sugarcane crop mask created earlier. The SOS and EOS extracted from the software differed by up to two weeks from the GT. The phenology metrics images and the optical temporal image used earlier were classified to label the fields as plant or ratoon using the GT points. The GT points were again split into 80% and 20% for training and testing, respectively.

Ultimately, the sugarcane fields were mapped and classified into plant or ratoon crops, and their acreage was estimated. The acreage was compared with the sugarcane crop area dataset available from the mill.
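The NDVI compositing and metric extraction described above can be illustrated with a simplified sketch. It does not reproduce TIMESAT or its Asymmetric Gaussian fit: the 20% amplitude threshold for SOS and EOS, the use of the series minimum as the base level, the function names and the synthetic NDVI values are all assumptions made for illustration.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel."""
    return (nir - red) / (nir + red)


def max_value_composite(series, window=3):
    """Keep the maximum NDVI in each block of `window` observations.

    With 5-day data, window=3 yields 15-day steps. Clouds lower NDVI,
    so the maximum favours the clearest observation in each block.
    """
    return [max(series[i:i + window]) for i in range(0, len(series), window)]


def phenology_metrics(composite, step_days=15, threshold=0.2):
    """Simplified season metrics from one unsmoothed NDVI profile.

    SOS and EOS are the first and last composites at or above
    base + threshold * amplitude (threshold and base are assumptions).
    """
    base, peak = min(composite), max(composite)
    amplitude = peak - base
    level = base + threshold * amplitude
    above = [i for i, value in enumerate(composite) if value >= level]
    sos, eos = above[0], above[-1]
    return {
        "sos_day": sos * step_days,
        "eos_day": eos * step_days,
        "los_days": (eos - sos) * step_days,
        "max_ndvi": peak,
        "time_of_max_day": composite.index(peak) * step_days,
        "amplitude": amplitude,
    }


# Synthetic 5-day NDVI profile with cloud-induced dips (hypothetical values).
five_day = [0.10, 0.12, 0.05, 0.20, 0.02, 0.30, 0.55, 0.60, 0.15,
            0.70, 0.72, 0.68, 0.50, 0.10, 0.45, 0.20, 0.18, 0.12]
composite = max_value_composite(five_day)
metrics = phenology_metrics(composite)
print(composite)
print(metrics)
```

In this toy profile the cloud-affected values (0.05, 0.02, 0.15, 0.10) never reach the composite, which is the effect the 15-day maximum compositing is meant to achieve.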

Results and Discussion

The present study was undertaken to classify sugarcane crop fields in the KSCM catchment area in Uttar Pradesh. The discrimination of the plant and ratoon crops using satellite data was achieved in a two-step process: first, the sugarcane pixels were identified, and second, they were classified as ratoon or plant. Table 2 compares the accuracies of the different classifiers over the different datasets, with the best outcome highlighted in bold. The optical datasets offered better results than the SAR data regardless of the classifier used. The SVM classifier on the optical multi-temporal dataset gave the highest accuracy of 95.9% and a kappa coefficient of 0.95. Figure 3 shows the results for the extraction of the sugarcane crop mask using the SVM classifier over temporal optical data.
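For readers unfamiliar with the two accuracy measures reported here, the sketch below computes overall accuracy and Cohen's kappa from a confusion matrix. The matrix values are hypothetical and are not taken from Table 2; the function name is likewise an illustrative choice.

```python
def overall_accuracy_and_kappa(matrix):
    """Overall accuracy and Cohen's kappa from a square confusion matrix
    (rows = reference classes, columns = predicted classes)."""
    total = sum(sum(row) for row in matrix)
    observed = sum(matrix[i][i] for i in range(len(matrix))) / total
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical 2-class matrix, not taken from the paper's tables.
confusion = [[45, 5],
             [5, 45]]
accuracy, kappa = overall_accuracy_and_kappa(confusion)
print(f"accuracy={accuracy:.2f}, kappa={kappa:.2f}")  # accuracy=0.90, kappa=0.80
```

Kappa discounts the agreement expected by chance, which is why it is lower than the overall accuracy in this example.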

Contrary to many other studies that claim object-based classification is better than pixel-based classification (Makinde et al., 2016; Whiteside et al., 2011), the pixel-based classification performed better in classifying the small sugarcane fields. This is because of the scale of the objects being mapped and the resolution of the data used. Since the field parcels in this region are relatively small and the marginal pixels are influenced by the neighbouring land-use classes, only a few pure pixels in the farm's interior represent the actual spectral characteristics of the sugarcane crop. While the pixel-based classification relies solely on each individual pixel's spectral characteristics, the object-based classification takes the average spectral response of a group of pixels considered an object based on visual qualities such as shape, size and colour. As explained by Cai and Liu (2013), the most meaningful objects might have fewer pixels. Thus the accuracy of the classifiers based on objects is compromised. The Sentinel-2 dataset still stands competent in pixel-based classification for mapping small farms. The results are also comparable to the sugarcane mapping using Sentinel-2 data carried out by Wang et al. (2019). With higher resolution datasets, the object-based classification may work better to delineate the small farms. The sugarcane crop mask was extracted from the SVM classifier results, and the mask was used to further classify the fields as plant or ratoon.
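A rough calculation shows how few pure pixels an average field contains. The sketch assumes an idealised square field aligned with the pixel grid and treats every pixel on the field boundary as mixed; both are simplifications introduced here, and real parcels are irregular.

```python
import math

ACRE_M2 = 4046.8564224  # square metres per international acre


def pixel_budget(field_acres, pixel_size_m):
    """Approximate pixel counts for an idealised square field.

    Boundary pixels are counted as 'edge' (assumed mixed with neighbours);
    the remaining pixels are counted as 'interior' (assumed pure).
    """
    side_m = math.sqrt(field_acres * ACRE_M2)
    side_px = round(side_m / pixel_size_m)
    total = side_px ** 2
    interior = max(side_px - 2, 0) ** 2
    return {"side_px": side_px, "total": total,
            "interior": interior, "edge": total - interior}


# Average field size from the study area at Sentinel-2's 10 m resolution.
print(pixel_budget(0.4, 10))
# {'side_px': 4, 'total': 16, 'interior': 4, 'edge': 12}
```

Under these assumptions a 0.4-acre field spans about 16 pixels, of which only 4 are interior, so an object-level average is dominated by boundary pixels.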

Table 3 shows that the RF classifier's performance over the temporal optical images gave the best results (highlighted in bold) for segregation of plant and ratoon fields. It was noticed that the phenology-based classification did not provide expected results in terms of classifying plant and ratoon crops.

Figure 4 shows that the Random Forest classifier over the optical dataset produced a very satisfactory classification with significantly fewer noisy pixels. The field boundaries are also prominently visible. The lower accuracy of the phenology-based classification stems from the staggered SOS and EOS dates in the catchment area. Phenology is calculated from images spanning the entire year, providing a wide range of SOS and EOS dates. This extensive range of SOS and EOS could overlap for the plant and ratoon crops, thus confusing the classifier. Meanwhile, the ratoon crops, having a much more voluminous canopy and better vigour, are spectrally distinguishable from the plant crops in the optical satellite images. Another factor that plays an important role is the inevitability of clouds in optical data. Unlike in spectral image-based classification, clouds cannot be eliminated entirely from the time series of optical images while extracting phenology. The spectral image-based classification thus shows higher accuracy. The SVM and RF classifiers demonstrated outstanding performance in classifying the sugarcane crop types, as discussed by Everingham et al. (2007). Figure 5 compares the two classifiers over the Gudamb village in the mill catchment area against the GPS-based field survey data.

From the final classified image using the optical images, the plant and ratoon fields' area in the catchment area were computed. According to the data collected from the KSC mill's GPS survey, the catchment area had around 520 acres and 540 acres of plant and ratoon crops, respectively. The classification results showed a slightly higher estimation of approximately 535 acres of sugarcane plant crop and 560 acres of the ratoon crop.
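The area comparison can be reproduced with a short sketch. The acreages are those reported in the text; the pixel count passed to `pixels_to_acres`, the 10 m pixel size default and both function names are illustrative assumptions, since the paper does not describe how the area was tallied.

```python
ACRE_M2 = 4046.8564224  # square metres per international acre


def pixels_to_acres(n_pixels, pixel_size_m=10.0):
    """Convert a count of classified pixels to an area in acres."""
    return n_pixels * pixel_size_m ** 2 / ACRE_M2


def percent_difference(estimated, reference):
    """Relative difference of an estimate from a reference, in percent."""
    return (estimated - reference) / reference * 100


# Hypothetical pixel count that corresponds to roughly 535 acres.
print(f"{pixels_to_acres(21651):.1f} acres")

# Acreages reported in the text: (classification, mill GPS survey).
areas = {"plant": (535, 520), "ratoon": (560, 540)}
for crop, (mapped, mill) in areas.items():
    print(f"{crop}: {percent_difference(mapped, mill):+.1f}%")
```

With the reported figures, the classification exceeds the mill survey by about 2.9% for the plant crop and 3.7% for the ratoon crop.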

Conclusions

Sentinel-2 images showed promising capabilities for field-level classification as well as discrimination between sugarcane plant and ratoon crops in the mill catchment with the help of ground truth points. Pixel-based classification is preferable for delineating the small field parcels using Sentinel data. The sugarcane fields were isolated from other land cover classes with an overall accuracy of 95.91% and a kappa coefficient of 0.95 using the SVM classifier. The plant and ratoon fields were separated with an accuracy of 90.75% and a kappa coefficient of 0.81 using the Random Forest classifier. The sugarcane plant and ratoon crops were estimated to cover around 535 acres and 560 acres, respectively, of the catchment area, against mill estimates of 520 acres and 540 acres. Sentinel-2 data delivered convincing results with SVM and RF classifiers to delineate small-sized farms and map the different crop types. Outcomes of this study suggest that the Sentinel-2 datasets can be exploited conveniently in crop mapping and monitoring at the farm scale.

References

Abdel-Rahman, E. M., & Ahmed, F. B. (2008). The application of remote sensing techniques to sugarcane (Saccharum spp. hybrid) production: A review of the literature. International Journal of Remote Sensing, 29(13), 3753–3767. https://doi.org/10.1080/01431160701874603

Belgiu, M., & Drăguţ, L. (2016). Random forest in remote sensing: A review of applications and future directions. ISPRS Journal of Photogrammetry and Remote Sensing, 114, 24–31. https://doi.org/10.1016/j.isprsjprs.2016.01.011

Cai, S., & Liu, D. (2013). A comparison of object-based and contextual pixel-based classifications using high and medium spatial resolution images. Remote Sensing Letters, 4(10), 998–1007. https://doi.org/10.1080/2150704X.2013.828180

Eklundh, L., & Jönsson, P. (2017). TIMESAT 3.3 with seasonal trend decomposition and parallel processing Software Manual. Lund and Malmo University, Sweden, 1–92. Retrieved July 29, 2020, from http://web.nateko.lu.se/timesat/timesat.asp.

Everingham, Y. L., Lowe, K. H., Donald, D. A., Coomans, D. H., & Markley, J. (2007). Advanced satellite imagery to classify sugarcane crop characteristics. Agronomy for Sustainable Development, 27(2), 111–117.

Jiang, H., Li, D., Jing, W., Xu, J., Huang, J., Yang, J., & Chen, S. (2019). Early season mapping of sugarcane by applying machine learning algorithms to sentinel-1A/2 time series data: A case study in Zhanjiang City, China. Remote Sensing. https://doi.org/10.3390/RS11070861

Makinde, E. O., Salami, A. T., Olaleye, J. B., & Okewusi, O. C. (2016). Object based and pixel based classification using rapideye satellite imagery of ETI-OSA, Lagos, Nigeria. Geoinformatics FCE CTU, 15(2), 59–70. https://doi.org/10.14311/gi.15.2.5

Mather, P., & Tso, B. (2009). Classification methods for remotely sensed data (2nd ed.). CRC Press. https://doi.org/10.1201/9781420090741

Mountrakis, G., Im, J., & Ogole, C. (2011). Support vector machines in remote sensing: A review. ISPRS Journal of Photogrammetry and Remote Sensing, 66(3), 247–259. https://doi.org/10.1016/j.isprsjprs.2010.11.001

Nihar, A., Mohammed Ahamed, J., Pazhanivelan, S., Kumaraperumal, R., & Ganesha Raj, K. (2019). Estimation of cotton and maize crop area in Perambalur district of Tamil Nadu using multi-date sentinel-1A SAR data. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences - ISPRS Archives, 42(3/W6), 67–71. https://doi.org/10.5194/isprs-archives-XLII-3-W6-67-2019

Pandey, S., Patel, N. R., Danodia, A., & Singh, R. (2019). Discrimination of sugarcane crop and cane yield estimation using Landsat and IRS resourcesat satellite data. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences - ISPRS Archives, 42(3/W6), 229–233. https://doi.org/10.5194/isprs-archives-XLII-3-W6-229-2019

Patel, N. R., Bhattacharjee, B., Mohammed, A. J., Tanupriya, B., & Saha, S. K. (2006). Remote sensing of regional yield assessment of wheat in Haryana, India. International Journal of Remote Sensing, 27(19), 4071–4090. https://doi.org/10.1080/01431160500377188

Sheykhmousa, M., Kerle, N., Kuffer, M., & Ghaffarian, S. (2019). Post-disaster recovery assessment with machine learning-derived land cover and land use information. Remote Sensing. https://doi.org/10.3390/rs11101174

Sheykhmousa, M., Mahdianpari, M., Ghanbari, H., Mohammadimanesh, F., Ghamisi, P., & Homayouni, S. (2020). Support Vector Machine Versus Random Forest for Remote Sensing Image Classification: A Meta-Analysis and Systematic Review. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 13(October), 6308–6325. https://doi.org/10.1109/JSTARS.2020.3026724

Singh, R., Patel, N. R., & Danodia, A. (2020). Mapping of sugarcane crop types from multi-date IRS-Resourcesat satellite data by various classification methods and field-level GPS survey. Remote Sensing Applications: Society and Environment, 19, 100340.

Singla, S. K., Garg, R. D., & Dubey, O. P. (2018). Sugarcane ratoon discrimination using LANDSAT NDVI temporal data. Spatial Information Research, 26(4), 415–425. https://doi.org/10.1007/s41324-018-0184-0

Ustuner, M., Sanli, F. B., & Abdikan, S. (2016). Balanced vs imbalanced training data: Classifying rapideye data with support vector machines. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences - ISPRS Archives, 41(July), 379–384. https://doi.org/10.5194/isprsarchives-XLI-B7-379-2016

Verma, A. K., Garg, P. K., & Hari Prasad, K. S. (2017). Sugarcane crop identification from LISS IV data using ISODATA, MLC, and indices based decision tree approach. Arabian Journal of Geosciences. https://doi.org/10.1007/s12517-016-2815-x

Wang, J., Xiao, X., Liu, L., Wu, X., Qin, Y., Steiner, J. L., & Dong, J. (2020). Mapping sugarcane plantation dynamics in Guangxi, China, by time series Sentinel-1, Sentinel-2 and Landsat images. Remote Sensing of Environment, 247(December 2019), 111951. https://doi.org/10.1016/j.rse.2020.111951

Wang, M., Liu, Z., Ali Baig, M. H., Wang, Y., Li, Y., & Chen, Y. (2019). Mapping sugarcane in complex landscapes by integrating multi-temporal Sentinel-2 images and machine learning algorithms. Land Use Policy, 88(July), 104190. https://doi.org/10.1016/j.landusepol.2019.104190

Whiteside, T. G., Boggs, G. S., & Maier, S. W. (2011). Comparing object-based and pixel-based classifications for mapping savannas. International Journal of Applied Earth Observation and Geoinformation, 13(6), 884–893. https://doi.org/10.1016/j.jag.2011.06.008

Acknowledgements

Authors sincerely express their gratitude to General Manager, Kisan Sahkari Chini Mills Ltd (KSCM) and Mr. Varshney, IT cell, KSCM for providing necessary information and GPS based field survey of sugarcane crop besides other logistic support. We are also thankful to Director, IIRS for providing necessary facilities and encouragement to carry out research for farmer-centric applications.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

About this article

Cite this article

Nihar, A., Patel, N.R., Pokhariyal, S. et al. Sugarcane Crop Type Discrimination and Area Mapping at Field Scale Using Sentinel Images and Machine Learning Methods. J Indian Soc Remote Sens 50, 217–225 (2022). https://doi.org/10.1007/s12524-021-01444-0

DOI: https://doi.org/10.1007/s12524-021-01444-0