Abstract

Uniaxial compressive strength (UCS) of rock material is a very important parameter in rock engineering applications that depend on the engineering behaviour of rock, such as rock mass classification, numerical modelling, bearing capacity, mechanical excavation, slope stability and rock support. UCS can be measured directly or predicted by various methods, including existing tables and diagrams, regression analysis, Bayesian approaches and soft computing methods. The main purpose of this study is to examine the applicability and capability of the Extreme Learning Machine (ELM) and Minimax Probability Machine Regression (MPMR) for predicting the UCS of volcanic rocks, and to compare their performance with that of the Least Squares Support Vector Machine (LS-SVM). The samples tested were taken from volcanic rock masses exposed in the eastern Pontides (NE Turkey). Porosity and slake durability index were used as the input parameters of the soft computing models that estimate the UCS of the samples. The root mean square error (RMSE), variance accounted for (VAF), coefficient of determination (R2), adjusted coefficient of determination (Adj. R2), performance index (PI), regression error characteristic (REC) curve and Taylor diagram were used to evaluate the accuracy of the ELM, MPMR and LS-SVM models.

Introduction

Uniaxial compressive strength (UCS) of rock material is a very important parameter in geotechnical engineering applications that depend on the engineering behaviour of rock, such as rock mass classification, numerical modelling, bearing capacity, mechanical excavation, slope stability and rock support (Haramy and De Marco 1985; Ceryan 2014; Wang and Aladejare 2015). UCS can be measured directly or predicted by various methods, including existing tables and diagrams, regression analysis, Bayesian approaches and soft computing methods.

In addition to the intrinsic properties of rock materials, sample geometry and loading conditions also directly affect the results of laboratory tests on rock samples. For this reason, and so that results can be compared, the test is carried out according to international standards set by the American Society for Testing and Materials (ASTM) or the International Society for Rock Mechanics (ISRM). However, direct measurement of UCS is expensive and time-consuming, and preparing standard core specimens from weak, highly fractured or thinly bedded rocks is a major challenge (Gokceoglu 2002; Gokceoglu and Zorlu 2004).

To assess the strength of rock materials easily and quickly during fieldwork, tables and diagrams based on “simple means” tests were developed. Examples of this first approach to estimating UCS are the methods recommended by the British Standard (BS 5930 1981) and the International Society for Rock Mechanics (ISRM 2007). In addition, Pollak and co-workers (Pollak et al. 2017) suggested a method for estimating the UCS of carbonate rock materials without conducting index tests. This method is based on observing four basic elements, lithology, fabric, defects and porosity, hence its name, the LFDP method. The LFDP method is simple, efficient, inexpensive and versatile. Existing tables and diagrams and the LFDP method are useful for rock mass classification, but UCS values estimated in this way are not appropriate for use in engineering calculations.

Basic mechanical tests, including the Shore scleroscope hardness test (Deere and Miller 1966; Koncagul and Santi 1999; Yasar and Erdogan 2004), Schmidt hammer (Shorey et al. 1984; Yagiz 2009; Fattahi 2017; Demirdag et al. 2018; Ghasemi et al. 2018), block punch test (Ulusay and Gokceoglu 1997; Mishra and Basu 2012; Sulukcu and Ulusay 2001), Brazilian test (Nazir et al. 2013), core strangle index (Yilmaz 2009), nail penetration test (Chaudhary 2004; Yamaguchi et al. 2005; Maruto Corporation 2006; Ngan-Tillard et al. 2009), Equotip hardness tests (Verwaal and Mulder 1993; Alvarez-Grima and Babuska 1999; Yilmaz 2013), Hybrid Dynamic Hardness (Yilmaz 2013) and Edge Load Strength (Palassi and Mojtaba Pirpanahi 2013), are used with empirical equations to obtain UCS. The indentation test (Cheshomi et al. 2017; Haftani et al. 2013; Mateus et al. 2007; Szwedzicki 1998; Yuen 2010), loading reconstructed cores test (Mazidi et al. 2012), modified point load test (Sheshde and Cheshomi 2015) and single particle loading test (Cheshomi et al. 2012; Cheshomi and Ahmadi-Sheshde 2013; Cheshomi et al. 2015; Ashtari et al. 2019) have also been suggested for the indirect determination of UCS. Although these testing methods have serious shortcomings, limitations and problems (Yilmaz 2009; Kayabali and Selcuk 2010; Nefeslioglu 2013), their results are also used as input parameters in predictive models such as statistical and soft computing models (Ceryan and Korkmaz Can 2018).

Most investigations determine the individual correlation between an index and UCS (i.e., simple regression analysis) (e.g. Rzhevsky and Novick 1971; Koncagul and Santi 1999; Fener et al. 2005; Chang et al. 2006; Mishra and Basu 2013; Fereidooni 2016; Aboutaleb et al. 2018; Heidari et al. 2018; Jamshidi et al. 2018). Some studies have used more than one index to predict UCS (i.e., multiple regression analysis) (e.g. Moos et al. 2003; Moradian and Behnia 2009; Ali et al. 2014; Torabi-Kaveh et al. 2014; Ceryan 2014; Heidari et al. 2018; Aboutaleb et al. 2018; Cengiz et al. 2018). There are difficulties in implementing and generalizing these statistical models (Sridevi 2000; Fener et al. 2005; Sonmez et al. 2006; Maji and Sitharam 2008; Yuen 2010; Wang and Aladejare 2015; Ng et al. 2014). Equations obtained from regression analysis for different rock types do not agree with one another (Fener et al. 2005; Sonmez et al. 2006). Feng (2015) indicated that most empirical correlations are obtained using regression methods that do not quantify the uncertainty of predictions, and that it is not always possible to modify them to incorporate project-specific data. According to Maji and Sitharam (2008), when trend-fitting curves are developed by statistical regression, the data are constrained to the particular two-dimensional geometry of the statistical model used. To overcome these difficulties with conventional methods, many researchers have employed soft computing methods to estimate the UCS of rock materials (Table 1).

Artificial neural networks (ANNs) have been used extensively to predict UCS (Table 1). However, they may suffer from disadvantages such as converging to local rather than global minima, overfitting if training continues for too long, and non-reproducible results, partly because of the random initialization of the networks and variation in the stopping criteria during optimization (Sattarib et al. 2014). An alternative kernel-based technique, the support vector machine (SVM) (Vapnik 1995), was derived from statistical learning theory. An SVM with a sigmoid kernel function is equivalent to a two-layer perceptron neural network. Using a kernel function, SVMs provide an alternative way of training polynomial, radial basis function and multilayer perceptron classifiers, in which the network weights are found by solving a quadratic programming problem with linear constraints rather than a non-convex, unconstrained minimization problem as in standard ANN training (Huang et al. 2010). Despite these advantages, the standard SVM has some shortcomings: (i) it uses basis functions superfluously, because the number of required support vectors increases with the size of the training data, and (ii) choosing its control parameters is uncertain. Thus, calibrating the three parameters of SVM can be time-consuming and tedious (Gedik 2018). For this reason, Suykens and Vandewalle (1999) modified the traditional SVM algorithm so that a model is found by solving a set of linear equations instead of a quadratic programming problem, and named the result the least squares support vector machine (LS-SVM). LS-SVM retains the main advantages of the traditional SVM but is computationally faster. The LS-SVM method has been used by several researchers to predict UCS (Table 1).

Recently, the extreme learning machine (ELM) has been proposed for training single-hidden-layer feedforward neural networks (SLFNs); it chooses the hidden nodes randomly and determines the output weights of the SLFN analytically (Huang et al. 2006; Zong et al. 2013). In ELM, the only free parameters that need to be learned are the connections (weights) between the hidden layer and the output layer (Huang et al. 2006; Zausa and Civolani 2001; Huang et al. 2015). This mechanism differs from conventional SLFN learning. ELM is formulated as a linear-in-the-parameters model, so training reduces to solving a linear system; it can be used as an estimator in regression problems or as a classifier in classification tasks (Huang et al. 2006; Liu et al. 2015; Huang et al. 2015). In theory, the algorithm tends to provide good generalization performance at extremely fast learning speed and yields a highly accurate learning solution (Liu et al. 2015). Even with randomly generated hidden nodes, ELM retains the universal approximation capability of SLFNs (Huang et al. 2015). According to Liu et al. (2015), the ELM approach can perform much better than the RBF neural network (RBF-NN) and the back-propagation neural network (BP-NN) in modelling rock parameter problems. ELM also performs equivalently to the generalized regression neural network (GRNN) and SVM in estimating the UCS of rocks, while taking much less time than GRNN. The authors indicated that ELM can easily be applied to problems in rock mechanics and engineering in which uncertainty is substantial and expert opinion plays an important role.

Another method recently used to solve engineering problems is the minimax probability machine (MPM). MPM, introduced by Lanckriet and co-workers (2002a, b), is a classification algorithm based on prior knowledge and has been successfully applied to classification and regression problems (Yang et al. 2019). Constructing a regression model can be posed as maximizing the minimum probability that future predictions lie within some bound of the true regression function (Strohmann and Grudic 2002); this approach constitutes the main framework of MPM (Strohmann and Grudic 2002). According to Yang et al. (2019), the method has the following advantages over other machine learning methods: (i) MPM is a moment-based constraint algorithm (also called a nonparametric algorithm) that uses the information contained in the sample means and variances to find a minimax probabilistic decision hyperplane separating the two classes in binary classification; (ii) making no assumption about the data distribution, MPM can directly estimate a probabilistic accuracy bound by minimizing the maximum probability of misclassification; and (iii) the MPM problem can be reformulated as a second-order cone program (SOCP).

The main purpose of this study is to examine the applicability and capability of the Extreme Learning Machine (ELM) and Minimax Probability Machine Regression (MPMR) approaches for predicting the UCS of volcanic rocks, and to compare their performance with that of the Least Squares Support Vector Machine (LS-SVM). The samples tested were taken from rock slopes along the Giresun-Gumushane highway and from the İyidere-Rize quarry (NE Turkey). The combined use of porosity and slake durability index for estimating the UCS of weathered volcanic rocks was also investigated. The degree of weathering of these volcanic rock materials was defined using the Schmidt hammer rebound number (SHV). RMSE, VAF, R2, Adj. R2, PI, the REC curve and the Taylor diagram were used to evaluate the performance of the proposed models.

Materials and testing procedures

The study area is located in the Eastern Pontides of NE Turkey (Fig. 1). The eastern Pontides comprise Late Cretaceous and Middle Eocene to Miocene volcanic and volcaniclastic rocks in the north, whereas pre-Late Cretaceous rocks are widely exposed in the south. The area is characterized by three magmatic cycles that developed during Liassic, Late Cretaceous and Eocene times (Fig. 1). The samples used in this study comprise Late Cretaceous volcanic rocks and interbedded sedimentary rocks. The volcanic rocks are andesitic, dacitic and rhyolitic in composition.

The geological maps of the study area (Acarlioglu et al. 2013)

In this study, 47 groups of block samples, each measuring approximately 30 × 30 × 30 cm, were collected in the field for petrographic analyses and index and mechanical tests. These analyses and tests were performed at the Rock Mechanics Laboratory of the Engineering Faculty of Karadeniz Technical University, Trabzon, NE Turkey.

The dacitic and andesitic rock samples investigated are from the excavated slopes along the Gumushane-Giresun road in NE Turkey (Figs. 1, 2 and 3). The andesite is grayish green to dark green, and augite, hornblende, biotite and plagioclase can be identified macroscopically. Its groundmass is composed of plagioclase, augite, hornblende, biotite, chlorite and opaque minerals. Calcite, sericite and chlorite occur as alteration products and sometimes as crack fillings in these rocks.

Dacitic and andesitic rocks exposed along the Gumushane-Giresun road (a, b); basalt and tuffs in the Iyidere-Ikizdere quarry, Rize, NE Turkey (c, d; Usturbelli 2008)

Dacite has a microgranular texture and contains abundant quartz. In the dacite, quartz occurs as euhedral to subhedral phenocrysts and as micro- to cryptocrystalline grains in the groundmass, while plagioclase occurs as subhedral phenocrysts and as small anhedral crystals in the groundmass. Plagioclase mostly shows sericitization, calcification and argillization. Biotite is abundant as euhedral and subhedral crystals and generally occurs as small chloritized flakes in the dacites.

The tuff and basalt samples investigated were obtained from the Iyidere-Ikizdere quarry, Rize, NE Turkey (Figs. 1, 2 and 3). The basalt has a microlitic-porphyritic texture with plagioclase, clinopyroxene and hornblende phenocrysts. Its groundmass has an intergranular texture and contains plagioclase, clinopyroxene, hornblende, Fe-Ti oxide and volcanic glass. The tuffs are lithic-crystal tuffs. Their crystal fragments are coarse and small grains of plagioclase, augite and hornblende, biotite that is opacified and chloritized along the cleavages, and opaque minerals. The rock fragments in the tuffs are andesitic.

The Schmidt hammer rebound hardness test on the block samples and the porosity, slake durability and uniaxial compressive strength (UCS) tests were performed according to the International Society for Rock Mechanics (ISRM 2007) (Table 2). An N-type Schmidt hammer was used. Sixty readings were taken at different points on the block surface, and the rebound number was calculated as the average of the highest 50% of these readings. The Schmidt hammer rebound number (SHV) of each sample, together with the SHV obtained on unweathered block samples, was used to define the weathering degree of the samples collected from the field (Eq. 1, Table 2)

where Wc is the decomposition index, Rf is the SHV value obtained for unweathered rock samples and Rw is the SHV value obtained for the investigated samples. The weathering degree of the investigated samples was defined according to the classification of Gokceoglu (1997) (Eq. 1): Wc is less than 1 in unweathered samples, between 1.1 and 1.5 in slightly weathered samples, between 1.5 and 2 in moderately weathered samples and greater than 2 in highly weathered samples.
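
Eq. 1 itself is not reproduced in this copy. The sketch below assumes the ratio form Wc = Rf / Rw, which is consistent with the definitions above (Wc grows as the sample's rebound value Rw falls below the fresh-rock value Rf), and applies the rebound averaging rule and the classification thresholds quoted in the text. The text does not say how values between 1 and 1.1 are classified, or which class a value of exactly 1.5 or 2 belongs to; the choices in `weathering_grade` are assumptions, and all function names are hypothetical.

```python
import statistics


def rebound_number(readings):
    """Average of the highest 50% of Schmidt hammer readings (60 readings in the text)."""
    if len(readings) < 2:
        raise ValueError("need at least two readings")
    highest_half = sorted(readings, reverse=True)[: len(readings) // 2]
    return statistics.mean(highest_half)


def decomposition_index(r_fresh, r_sample):
    """Assumed form of Eq. 1: Wc = Rf / Rw."""
    if r_sample <= 0:
        raise ValueError("rebound number must be positive")
    return r_fresh / r_sample


def weathering_grade(wc):
    """Thresholds as quoted from Gokceoglu (1997) in the text.

    Assumption: the unassigned 1.0-1.1 interval is treated as unweathered,
    and the boundary values 1.5 and 2 go to the less weathered class.
    """
    if wc < 1.1:
        return "unweathered"
    if wc <= 1.5:
        return "slightly weathered"
    if wc <= 2.0:
        return "moderately weathered"
    return "highly weathered"


# Hypothetical values: fresh-rock SHV of 60 and a sample SHV of 45.
wc = decomposition_index(60.0, 45.0)
print(round(wc, 2), weathering_grade(wc))  # 1.33 slightly weathered
```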

The slake durability test was performed using the standard method recommended by the International Society for Rock Mechanics (ISRM 1981). Each test used 10 specimens and four cycles. The slake durability index for each cycle was calculated as the percentage ratio of the final to the initial dry weight of the rock in the drum after the wetting and drying cycle. The test was repeated three times for each block sample.
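
A minimal sketch of the cycle-by-cycle index described above. It follows the usual ISRM convention of subtracting the drum mass from the weighed masses; the function name and the example masses are hypothetical.

```python
def slake_durability_indices(initial_mass, masses_after_cycles, drum_mass=0.0):
    """Slake durability index (%) after each wetting-drying cycle.

    initial_mass: oven-dry mass of drum + sample before the first cycle
    masses_after_cycles: oven-dry mass of drum + retained sample after cycles 1, 2, ...
    drum_mass: mass of the empty drum (0 if the masses are already net of the drum)
    """
    net_initial = initial_mass - drum_mass
    if net_initial <= 0:
        raise ValueError("initial mass must exceed the drum mass")
    return [100.0 * (m - drum_mass) / net_initial for m in masses_after_cycles]


# Hypothetical four-cycle test on 500 g of rock in a 1500 g drum.
ids = slake_durability_indices(2000.0, [1990.0, 1982.0, 1975.0, 1970.0], drum_mass=1500.0)
print([round(v, 1) for v in ids])  # [98.0, 96.4, 95.0, 94.0]
```

Averaging the three repeat tests per block sample is then a plain mean of the indices for the same cycle.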

Core samples 50 mm in diameter were prepared from the rock blocks by core drilling (Fig. 3), and their ends were cut parallel and smooth. Porosity and uniaxial compressive strength tests were then performed on these core samples.

The porosity (n) of the rock was estimated using the following equations:

where ρd is the dry density and ρs is the grain density.
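
The porosity equations are not reproduced in this copy. A common form consistent with the two symbols defined above is n = (1 − ρd/ρs) × 100; the sketch assumes that form, and also assumes that ρd is obtained from the oven-dry mass and the dimensions of the cylindrical core. Both helpers are illustrative and hypothetical, not the authors' procedure.

```python
import math


def dry_density(dry_mass_g, diameter_mm, height_mm):
    """Dry density (g/cm^3) of a cylindrical core from its oven-dry mass and dimensions."""
    radius_cm = diameter_mm / 20.0          # mm -> cm, then diameter -> radius
    volume_cm3 = math.pi * radius_cm ** 2 * (height_mm / 10.0)
    return dry_mass_g / volume_cm3


def porosity_percent(rho_d, rho_s):
    """Assumed form: n = (1 - rho_d / rho_s) * 100."""
    if not 0 < rho_d <= rho_s:
        raise ValueError("expected 0 < dry density <= grain density")
    return (1.0 - rho_d / rho_s) * 100.0


print(round(porosity_percent(2.60, 2.70), 2))  # 3.7
```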

For each group, the UCS test was performed on 10 specimens under dry conditions. The specimens were loaded so that failure occurred within 10 to 15 min. The axial stress was calculated as the compressive force divided by the cross-sectional area of the specimen at the beginning of the test, and UCS as the maximum applied force divided by this area (Table 2).
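
The strength calculation described above reduces to dividing the peak load by the initial cross-sectional area. A minimal sketch for the 50 mm cores, assuming the load is recorded in kN; the function name and the example loads are hypothetical.

```python
import math
import statistics


def ucs_mpa(peak_load_kn, diameter_mm=50.0):
    """UCS (MPa) = peak load / initial cross-sectional area, using N / mm^2 = MPa."""
    area_mm2 = math.pi * diameter_mm ** 2 / 4.0
    return peak_load_kn * 1000.0 / area_mm2


# Hypothetical peak loads (kN) for specimens of one sample group.
loads = [300.0, 285.0, 310.0]
values = [ucs_mpa(f) for f in loads]
print(round(values[0], 1))                # 152.8
print(round(statistics.mean(values), 1))  # group mean UCS
```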

Modeling techniques and their application

The input parameters and data normalization

The intrinsic properties affecting the UCS of rock material can be divided into two groups: pore characteristics, and microstructural variables consisting of mineralogical composition and rock texture (Ceryan 2014). Pore characteristics are known to exert first-order control on physical attributes of rocks such as strength, deformability and hydraulic conductivity (Tugrul 2004; Bubeck et al. 2017; Griffiths et al. 2017). Porosity is one of the most important and widely used parameters for describing the pore characteristics of rock materials. For this reason, porosity is often used in models developed to estimate UCS (Baud et al. 2014; Bubeck et al. 2017; Ceryan and Korkmaz Can 2018). These studies of the effect of porosity on UCS showed a negative linear or curvilinear relationship between UCS and the porosity of rock materials.

Mineralogical and petrophysical properties, including density, cation packing index and the content of specific minerals such as quartz and clay, are widely used to characterize the microstructural variables and weathering grades of rock materials (e.g. Zorlu et al. 2008; Ceryan 2012; Ceryan 2008; Manouchehrian et al. 2014; Ceryan 2014; Ceryan 2015). Slake durability is an important property of a rock and is closely related to its mineralogical composition. It expresses the rock's resistance to degradation (weakening and disintegration) and is measured using standard drying and wetting cycles (Sharma and Singh 2008). The slake durability test is cheap, easy to carry out and requires very little sample preparation. For this reason, several researchers have investigated the relationship between UCS and the slake durability index (Id) to develop equations for estimating UCS (e.g. Cargill and Shakoor 1990; Koncagul and Santi 1999; Gokceoglu et al. 2000; Dincer et al. 2008; Yagiz 2011; Kahraman et al. 2016).

In this study, porosity, representing pore characteristics, and the slake durability index, representing mineralogical and petrophysical properties, were used as input parameters for the LS-SVM, MPMR and ELM models.

As is common practice, the input and output data of the models were normalized to prevent the models from being dominated by variables with large values. All data were normalized using Eq. 24:

where zi is the scaled value, xi is the original data, xmin and xmax are, respectively, the minimum and maximum values of the original data.
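
The definitions above describe min-max scaling; the sketch assumes the common [0, 1] form z = (x − x_min)/(x_max − x_min) and adds the inverse transform needed to turn normalized predictions back into MPa. Fitting x_min and x_max on one data set and reusing them for others is an assumption, since the text does not say which subset defined them. `MinMaxScaler1D` is a hypothetical helper, not scikit-learn's class; the three UCS values are the grade means reported in the results, used here only as sample numbers.

```python
import numpy as np


class MinMaxScaler1D:
    """Scale values to [0, 1] with z = (x - x_min) / (x_max - x_min)."""

    def fit(self, x):
        x = np.asarray(x, dtype=float)
        self.x_min, self.x_max = float(x.min()), float(x.max())
        if self.x_max == self.x_min:
            raise ValueError("constant data cannot be min-max scaled")
        return self

    def transform(self, x):
        return (np.asarray(x, dtype=float) - self.x_min) / (self.x_max - self.x_min)

    def inverse_transform(self, z):
        return np.asarray(z, dtype=float) * (self.x_max - self.x_min) + self.x_min


ucs_values = [84.8, 136.8, 194.7]
scaler = MinMaxScaler1D().fit(ucs_values)
print(scaler.transform([84.8, 194.7]))  # [0. 1.]
```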

Regression analysis

Regression analysis is a statistical tool for examining the relationships between variables. In this technique, the relationship between the independent (predictor) variables and the dependent (output) variable is determined systematically in the form of a function (Jahed Armaghani et al. 2016a). The two main regression methods in statistics are simple and multivariable analysis. Simple regression analysis summarizes the relationship between two variables (Yagiz et al. 2012). Whereas simple linear regression (SLR) has one basic form, \( y = b_0 + b_1 x \), simple non-linear regression (SNLR) can take many forms, including power (\( y = a x^{b} \)), logarithmic (\( y = a \ln x + b \)) and exponential (\( y = a e^{bx} \)) functions, which is why non-linear regression provides the most flexible curve-fitting functionality (Ceryan and Korkmaz Can 2018).

The general purpose of multiple regression is to learn more about the relationship between several independent or predictor variables and a dependent or criterion variable. The goal of multiple linear regression (MLR) is to model the linear relationship between the explanatory (independent) variables and response (dependent) variable. A linear equation is constructed by adding the results for each term. This constrains the equation to just one basic form such as “Response = constant + parameter * predictor + ... + parameter * predictor” (Ceryan and Korkmaz Can 2018).

To estimate UCS by regression analysis, two simple linear and several simple non-linear relationships were derived in this study; the exponential function (\( y = a e^{bx} \)) gave the highest performance. In addition, MLR analysis was performed.
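
A sketch of the two regression forms used: the exponential model y = a·e^(bx), fitted here by ordinary least squares on ln y, and MLR with an intercept, fitted with `numpy.linalg.lstsq`. The log-linearization is an assumption (the text does not state the fitting procedure, and a direct non-linear least-squares fit can give slightly different coefficients). Function names and data are synthetic and hypothetical.

```python
import numpy as np


def fit_exponential(x, y):
    """Fit y = a * exp(b * x) by linear least squares on ln(y); requires y > 0."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if np.any(y <= 0):
        raise ValueError("log-linearized exponential fit needs y > 0")
    b, ln_a = np.polyfit(x, np.log(y), 1)   # polyfit returns slope first, then intercept
    return float(np.exp(ln_a)), float(b)


def fit_mlr(X, y):
    """Fit y = c0 + c1*x1 + ... + cp*xp; returns [c0, c1, ..., cp]."""
    X = np.asarray(X, dtype=float)
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, np.asarray(y, dtype=float), rcond=None)
    return coef


# Synthetic check: noise-free data are recovered exactly.
x = np.linspace(80.0, 98.0, 10)              # e.g. a slake durability range (%)
a, b = fit_exponential(x, 2.0 * np.exp(0.04 * x))
print(round(a, 3), round(b, 3))              # 2.0 0.04
```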

Least square support vector machine

The basic concept of SVM is to map the input data to a higher-dimensional feature space and find the optimal hyperplane in that space that maximizes the margin between the classes (Lee et al. 2008). Suykens and Vandewalle (1999) modified the traditional SVM algorithm so that a model is found by solving a set of linear equations instead of a quadratic programming problem, and named it the least squares support vector machine (LS-SVM). LS-SVM has advantages similar to those of the traditional SVM but is computationally faster (Sattarib et al. 2014). Following Suykens et al. (2002) and Van Gestel et al. (2004), the LS-SVM method can be described as follows.

Consider a training set of N data points \( \{x_k, y_k\}_{k=1}^{N} \) with input data \( x_k \in \mathbb{R}^{d} \) and output data \( y_k \in \mathbb{R} \), where \( \mathbb{R}^{d} \) is the d-dimensional input space and \( \mathbb{R} \) is the one-dimensional output space. For this training set, the cost function J minimized by LS-SVM is defined as Eq. 4

where \( {e}_k^2 \) is the quadratic loss term and γ is the regularization parameter.

The solution of the optimization problem of LS-SVM is obtained by considering the Lagrangian as Eq. 5

where αk are the Lagrange multipliers. The conditions for optimality are obtained by differentiating the Lagrangian with respect to w, b, ek and αk (Eq. 6)

After eliminating w and ek, the solution can be written as Eq. 7

with \( y = [y_1; \ldots; y_N] \), \( \vec{1} = [1; \ldots; 1] \) and \( \alpha = [\alpha_1; \ldots; \alpha_N] \), where Mercer's theorem (Mercer 1909) is applied so that the inner products \( \varphi(x_k)^{T}\varphi(x_m) \) can be evaluated through a kernel function.

The resulting LS-SVM for function estimation can be expressed as Eq. 8

where \( \alpha_k^{*} \) and \( b^{*} \) are the solutions of Eq. 7, \( K(x_k, x_m) = \varphi(x_k)^{T}\varphi(x_m) \), for k, m = 1, …, N, is the kernel function and b is the bias term. Any kernel function that satisfies Mercer's theorem can be chosen (Gedik 2018).
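
Equations 4 to 8 are not legible in this copy. For reference, the standard LS-SVM regression formulation of Suykens et al. (2002), which the description above follows, is summarized below: the cost function with its equality constraints, the linear system obtained after eliminating w and e_k, and the resulting estimator. This is the textbook form, not a transcription of the original equations.

```latex
\begin{aligned}
&\min_{w,\,b,\,e}\; J(w,e) = \frac{1}{2}\,w^{T}w + \frac{\gamma}{2}\sum_{k=1}^{N} e_k^{2}
\quad \text{subject to} \quad y_k = w^{T}\varphi(x_k) + b + e_k,\quad k = 1,\dots,N \\[4pt]
&\begin{bmatrix} 0 & \vec{1}^{T} \\ \vec{1} & \Omega + \gamma^{-1} I \end{bmatrix}
\begin{bmatrix} b \\ \alpha \end{bmatrix}
=
\begin{bmatrix} 0 \\ y \end{bmatrix},
\qquad \Omega_{km} = \varphi(x_k)^{T}\varphi(x_m) = K(x_k, x_m) \\[4pt]
&y(x) = \sum_{k=1}^{N} \alpha_k^{*}\,K(x, x_k) + b^{*}
\end{aligned}
```

The first row of the linear system enforces \( \sum_k \alpha_k = 0 \), and the optimality conditions give \( \alpha_k = \gamma e_k \), so a larger γ forces smaller training residuals.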

Kernel functions commonly used in LS-SVM modelling include linear, spline, polynomial, sigmoid and Gaussian radial basis functions (Samui 2008; Gedik 2018). The Gaussian kernel is used in this analysis and is given as Eq. 9 (Burges 1998):

where σ is the width of the Gaussian kernel.
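
A compact NumPy sketch of LS-SVM regression: it builds the bordered linear system that Eq. 7 refers to (standard form of Suykens et al. 2002) with a Gaussian kernel and predicts with the Eq. 8 form. The kernel is written here as exp(−‖x − x_k‖²/(2σ²)); some toolboxes use σ² without the factor 2, so a σ value such as the one reported later is only meaningful for a stated convention. Function names and data are hypothetical.

```python
import numpy as np


def gaussian_kernel(A, B, sigma):
    """K[i, j] = exp(-||A[i] - B[j]||^2 / (2 sigma^2))."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    sq_dist = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq_dist, 0.0) / (2.0 * sigma**2))


def lssvm_fit(X, y, gamma, sigma):
    """Solve [[0, 1^T], [1, Omega + I/gamma]] [b; alpha] = [0; y]."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    system = np.zeros((n + 1, n + 1))
    system[0, 1:] = 1.0
    system[1:, 0] = 1.0
    system[1:, 1:] = gaussian_kernel(X, X, sigma) + np.eye(n) / gamma
    solution = np.linalg.solve(system, np.concatenate(([0.0], y)))
    return solution[0], solution[1:]          # b*, alpha*


def lssvm_predict(X_train, alpha, b, X_new, sigma):
    """y(x) = sum_k alpha_k K(x, x_k) + b."""
    return gaussian_kernel(X_new, X_train, sigma) @ alpha + b


# Synthetic two-input example on normalized data (stand-ins for n and Id).
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(30, 2))
y = 1.0 - 0.6 * X[:, 0] + 0.3 * X[:, 1] ** 2
b, alpha = lssvm_fit(X, y, gamma=100.0, sigma=0.5)
print(np.abs(lssvm_predict(X, alpha, b, X, sigma=0.5) - y).max())
```

Because training is a single dense solve of an (N + 1) × (N + 1) system, it is fast for data sets of the size used here.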

MPMR

MPMR has been described as an improved version of SVM (Strohmann and Grudic 2002) in which two data sets are analyzed: one obtained by shifting all of the regression data +ε along the dependent-variable axis and the other by shifting them −ε along the same axis (Deo and Samui 2017). MPMR uses Mercer kernels to obtain non-linear regression models (Gopinath et al. 2018). Assume that the unknown regression function \( f: \mathbb{R}^{d} \rightarrow \mathbb{R} \) has the form (Eq. 10)

where \( x \in \mathbb{R}^{d} \) is the input vector drawn from a bounded distribution Ω, \( y \in \mathbb{R} \) is the output, and ρ is the noise or fitting error, which has zero mean, E(ρ) = 0, and finite variance Var(ρ) = σ², σ ∈ ℝ (Strohmann and Grudic 2002; Lanckriet et al. 2002a; Gopinath et al. 2018). Consider the training examples (Eq. 11)

where, for all i ∈ {1, 2, …, N}, \( x_i = \{x_{i1}, x_{i2}, \ldots, x_{id}\} \in \mathbb{R}^{d} \) and \( y_i \in \mathbb{R} \).

There are two objectives: to find the approximating function \( \hat{y} = \hat{f}(x) \), and to find the error bound ε > 0 together with the minimum probability that the fitting error stays within ±ε (Strohmann and Grudic 2002; Lanckriet et al. 2002b; Gopinath et al. 2018) (Eq. 12)

The MPMR formulation of the approximation \( \hat{y} \) is given by Eq. 13

where \( k(x_i, x) = \varphi(x_i)^{T}\varphi(x) \) is a kernel that satisfies Mercer's conditions, the \( x_i \), i = 1, …, N, are taken from the learning data Γ, and \( \beta_i \) and \( b \in \mathbb{R} \) are the outputs of the MPMR learning algorithm.

One data set is obtained by shifting all of the regression data +ε along the output-variable axis, and the other by shifting all of the regression data −ε along the same axis. The classification boundary between these two classes is taken as the regression surface.
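
The general MPMR of Strohmann and Grudic (2002) uses a kernel and is solved as a second-order cone program. The sketch below only illustrates the shift-and-classify construction in the linear (no-kernel) case, under one stated assumption: both shifted classes share the covariance Σ of the original (x, y) points, which holds because shifting every point by ±ε moves the class means but leaves the covariance unchanged. With equal covariances, the minimax probability machine of Lanckriet et al. (2002a) has the closed-form direction a ∝ Σ⁻¹(μ₊ − μ₋), its boundary passes through the midpoint of the two class means, and its worst-case probability bound is κ²/(1 + κ²). Solving the boundary for y gives the regression line, which in this special case coincides with ordinary least squares; the last line checks this. The function name is hypothetical.

```python
import numpy as np


def mpmr_linear(X, y, eps=0.001):
    """Linear MPMR sketch: MPM boundary between the +eps and -eps shifted data sets."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Z = np.column_stack([X, y])                   # points (x, y) in R^(d+1)
    shift = np.zeros(Z.shape[1])
    shift[-1] = eps
    mu_up = (Z + shift).mean(axis=0)              # class 1: data shifted +eps
    mu_down = (Z - shift).mean(axis=0)            # class 2: data shifted -eps
    cov = np.cov(Z, rowvar=False)                 # shared by both shifted classes
    d = mu_up - mu_down
    a = np.linalg.solve(cov, d)
    a /= a @ d                                    # normalize so that a^T d = 1
    kappa = 1.0 / (2.0 * np.sqrt(a @ cov @ a))
    worst_case_prob = kappa**2 / (1.0 + kappa**2)  # MPM bound of Lanckriet et al.
    offset = a @ (mu_up + mu_down) / 2.0          # boundary: a^T z = offset
    weights = -a[:-1] / a[-1]                     # solve the boundary for y
    intercept = offset / a[-1]
    return weights, intercept, worst_case_prob


rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(40, 2))
y = 0.2 + 0.5 * X[:, 0] - 0.3 * X[:, 1] + rng.normal(0.0, 0.02, 40)
w, c, p = mpmr_linear(X, y)
ols, *_ = np.linalg.lstsq(np.column_stack([np.ones(40), X]), y, rcond=None)
print(np.allclose(np.r_[c, w], ols))  # True
```

In this linear, equal-covariance case ε changes only the probability bound, not the fitted line; the kernel formulation of Eq. 13 is where the two methods differ in practice.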

ELM

This section briefly outlines ELM. The ELM proposed by Huang et al. (2006) is essentially a least-squares-based learning algorithm for “generalized” SLFNs and can be applied as an estimator in regression problems. Because the hidden-layer weights of ELM can be initialized randomly, only the output-layer weights need to be optimized (Liu et al. 2015). This optimization can be carried out by means of the Moore–Penrose generalized inverse (Liu et al. 2015).

In an SLFN, the relation between input (x) and output (y) is given below (Eq. 14):

where wi denotes the weight vector connecting the ith hidden neuron and the input neurons, βi the weight vector connecting the ith hidden neuron and the output neurons, bi the threshold (bias) of the ith hidden neuron, gi the activation function, m the number of hidden nodes and N the number of samples.

Eq. 14 can be written in matrix form as Eq. 15.

where \( H = \{h_{ij}\} \) (i = 1, …, N; j = 1, …, m; \( h_{ij} = g(w_j \cdot x_i + b_j) \)) denotes the hidden-layer output matrix, \( \beta = [\beta_1, \ldots, \beta_m]^{T} \) the matrix of output weights and \( T = [y_1, y_2, \ldots, y_N]^{T} \) the matrix of targets.

The value of β is determined from the following expression (Eq. 16).

where \( H^{\dagger} \) is the Moore–Penrose generalized inverse of H (Serre 2002). Because β is obtained in a single step from this generalized inverse rather than by iterative tuning, ELM learns quickly.
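
The three ELM steps above (random input weights and biases, the hidden-layer output matrix H, and output weights β = H†T) fit in a few lines of NumPy, where `np.linalg.pinv` computes the Moore–Penrose inverse. The sigmoid activation, the uniform [−1, 1] initialization, the function names and the synthetic data are assumptions for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def elm_fit(X, T, n_hidden, seed=0):
    """Random hidden layer, then output weights beta = pinv(H) @ T."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))   # input weights w_i
    b = rng.uniform(-1.0, 1.0, size=n_hidden)                 # hidden biases b_i
    H = sigmoid(X @ W + b)                                    # N x m hidden-layer output matrix
    beta = np.linalg.pinv(H) @ np.asarray(T, dtype=float)
    return W, b, beta


def elm_predict(model, X):
    W, b, beta = model
    return sigmoid(np.asarray(X, dtype=float) @ W + b) @ beta


rng = np.random.default_rng(42)
X = rng.uniform(0.0, 1.0, size=(40, 2))        # e.g. normalized n and Id
T = 0.9 - 0.5 * X[:, 0] + 0.3 * X[:, 1]        # synthetic normalized target
model = elm_fit(X, T, n_hidden=7)              # seven hidden neurons, as reported later
rmse = np.sqrt(np.mean((elm_predict(model, X) - T) ** 2))
print(rmse)
```

Only `beta` is learned; rerunning with a different `seed` changes the hidden layer, which is why ELM results should be checked over several random initializations.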

Results and the prediction performances

Because the studied samples have different degrees of weathering, the changes in porosity (n), slake durability index (Id) and uniaxial compressive strength (UCS) with weathering were determined. These changes are shown in box plots constructed from the minimum, first quartile, median, third quartile and maximum of the data measured for each weathering grade (Fig. 4).

The mean values of n and Id are 2.2% and 97% in fresh samples, 5.0% and 92% in slightly weathered samples, and 8.2% and 81% in moderately weathered samples, respectively. The mean UCS is 194.7 MPa in fresh samples, 136.8 MPa in slightly weathered samples and 84.8 MPa in moderately weathered samples. Thus, porosity increases and the slake durability index decreases as the weathering grade increases, and UCS decreases accordingly (Table 2, Fig. 4).

To evaluate the accuracy of the proposed models, the root mean square error (RMSE), variance accounted for (VAF), coefficient of determination (R2), adjusted coefficient of determination (Adj. R2) and performance index (PI), given in Eqs. 17–21 (Gokceoglu 2002; Gokceoglu and Zorlu 2004; Yagiz et al. 2012; Ceryan 2014), were computed for each model (Tables 3 and 4).

RMSE measures the residuals between the measured and predicted data, and VAF compares the error variance with the variance of the measured data (one minus their ratio, expressed as a percentage). R2 and Adj. R2 evaluate the linear relationship between the measured and predicted data. For a perfect model, VAF is 100%, RMSE is 0, R2 is 1 and PI is 2 (Ceryan 2014). In practice, VAF, RMSE and R2 can each be used separately to examine model accuracy. Because none of these indices is superior to the others, the performance index (PI) can be used to examine the accuracy of statistical models (Yagiz et al. 2012; Ceryan 2014). PI was suggested by Yagiz et al. (2012) and later modified by Ceryan (2014), who used Adj. R2 instead of R2. The RMSE was calculated from the normalized data.

where n is the number of training or testing samples, p is the number of model inputs, and dt and yt are the measured and predicted values, respectively.
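
Eqs. 17–21 are not reproduced in this copy, so the sketch assumes the common definitions from the cited works: VAF = (1 − var(d − y)/var(d)) × 100, R2 as the squared Pearson correlation (the text says R2 measures the linear relation between measured and predicted values), Adj. R2 = 1 − (1 − R2)(n − 1)/(n − p − 1), and the modified PI = Adj. R2 + 0.01·VAF − RMSE. This reading is consistent with Table 4 as quoted later, where VAF is listed as a fraction: for ELM in the testing period, 0.8814 + 0.848 − 0.0934 = 1.636, the reported PI. Function names are hypothetical.

```python
import numpy as np


def performance_index(adj_r2, vaf_percent, rmse):
    """Modified PI: Adj. R2 + 0.01 * VAF - RMSE (ideal value 2)."""
    return adj_r2 + 0.01 * vaf_percent - rmse


def performance_indices(measured, predicted, p):
    """RMSE, VAF (%), R2, Adj. R2 and PI for one data set (assumed definitions)."""
    d = np.asarray(measured, dtype=float)
    y = np.asarray(predicted, dtype=float)
    n = d.size
    if n <= p + 1:
        raise ValueError("need more samples than model inputs + 1")
    rmse = float(np.sqrt(np.mean((d - y) ** 2)))
    vaf = float((1.0 - np.var(d - y) / np.var(d)) * 100.0)
    r2 = float(np.corrcoef(d, y)[0, 1] ** 2)      # squared Pearson correlation
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)
    return {"RMSE": rmse, "VAF": vaf, "R2": r2, "AdjR2": adj_r2,
            "PI": performance_index(adj_r2, vaf, rmse)}


# Consistency check against the ELM testing-period values quoted in the results:
print(round(performance_index(0.8814, 84.8, 0.0934), 3))  # 1.636
```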

The SNLR model with the slake durability index performed better than the SNLR model with porosity. The PI and VAF values of the SNLR model with n are 1.302 and 66.6, respectively, so this model cannot be considered successful (Table 3). Considering all performance indices except RMSE, the MLR model performed better than the SNLR model with the slake durability index: the PI of the SNLR model with Id is 1.532, whereas that of the MLR model is 1.560. None of these regression models predicted the maximum and minimum values successfully.

Considering RMSE, R2, the maximum and minimum values and VAF, the LS-SVM model, which performed worst among the soft computing methods in this study, still performed slightly better than the MLR model, which performed best among the regression models (Tables 3 and 4). The PI of the MLR model is 1.560, while that of the LS-SVM model is 1.573 (Tables 3, 4 and Fig. 6).

The shuffled complex evolution algorithm (Wang et al. 2009) and the grid-search method (Samui and Dixon 2012) were used to determine the optimum range of the LS-SVM parameters. For the developed LS-SVM, the design parameters γ and σ are 0.7 and 50, respectively.
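
The text combines a shuffled complex evolution search with a grid search but does not give the validation scheme. The sketch below shows only a plain grid search over (γ, σ), scoring each pair by k-fold cross-validated RMSE with a self-contained LS-SVM solver; the fold count, candidate grids, seed, kernel convention and function names are illustrative assumptions, and the evolutionary search is not shown.

```python
import itertools

import numpy as np


def _gaussian(A, B, sigma):
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))


def _lssvm_fit_predict(X_tr, y_tr, X_te, gamma, sigma):
    """Train LS-SVM on (X_tr, y_tr) and predict X_te."""
    n = len(y_tr)
    system = np.zeros((n + 1, n + 1))
    system[0, 1:] = 1.0
    system[1:, 0] = 1.0
    system[1:, 1:] = _gaussian(X_tr, X_tr, sigma) + np.eye(n) / gamma
    sol = np.linalg.solve(system, np.concatenate(([0.0], y_tr)))
    return _gaussian(X_te, X_tr, sigma) @ sol[1:] + sol[0]


def grid_search(X, y, gammas, sigmas, k=5, seed=0):
    """Return (cv_rmse, gamma, sigma) with the lowest k-fold cross-validated RMSE."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    folds = np.array_split(np.random.default_rng(seed).permutation(len(y)), k)
    best = None
    for gamma, sigma in itertools.product(gammas, sigmas):
        fold_mse = []
        for i, test_idx in enumerate(folds):
            train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
            pred = _lssvm_fit_predict(X[train_idx], y[train_idx], X[test_idx], gamma, sigma)
            fold_mse.append(np.mean((pred - y[test_idx]) ** 2))
        cv_rmse = float(np.sqrt(np.mean(fold_mse)))
        if best is None or cv_rmse < best[0]:
            best = (cv_rmse, gamma, sigma)
    return best


rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(40, 2))
y = 1.0 - 0.6 * X[:, 0] + 0.3 * X[:, 1] ** 2
best_rmse, best_gamma, best_sigma = grid_search(
    X, y, gammas=[0.7, 10.0, 100.0], sigmas=[0.1, 0.5, 1.0, 50.0])
print(best_gamma, best_sigma, round(best_rmse, 4))
```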

For ELM, a three-layer feedforward neural network (FFNN) was constructed to model UCS. The optimal number of hidden-layer neurons was determined by trial and error, varying the number of neurons from 2 to 10. The ELM performed best with seven neurons in the hidden layer.
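
The trial-and-error search over 2 to 10 hidden neurons can be sketched as below. The train/validation split, the RMSE criterion and the averaging over a few random seeds (ELM results depend on the random hidden weights) are assumptions, since the text does not state how the candidates were compared; the data are synthetic and the function names hypothetical.

```python
import numpy as np


def elm_validation_rmse(X_train, y_train, X_val, y_val, n_hidden, seed=0):
    """Train a sigmoid ELM with n_hidden neurons and return its validation RMSE."""
    rng = np.random.default_rng(seed)
    W = rng.uniform(-1.0, 1.0, size=(X_train.shape[1], n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)

    def hidden(X):
        return 1.0 / (1.0 + np.exp(-(X @ W + b)))

    beta = np.linalg.pinv(hidden(X_train)) @ y_train
    return float(np.sqrt(np.mean((hidden(X_val) @ beta - y_val) ** 2)))


def select_hidden_neurons(X_train, y_train, X_val, y_val,
                          candidates=range(2, 11), seeds=(0, 1, 2)):
    """Trial and error over candidate sizes; scores are averaged over the seeds."""
    scores = {
        m: float(np.mean([elm_validation_rmse(X_train, y_train, X_val, y_val, m, s)
                          for s in seeds]))
        for m in candidates
    }
    return min(scores, key=scores.get), scores


rng = np.random.default_rng(3)
X = rng.uniform(0.0, 1.0, size=(47, 2))
y = 0.9 - 0.5 * X[:, 0] + 0.3 * X[:, 1] ** 2
best, scores = select_hidden_neurons(X[:35], y[:35], X[35:], y[35:])
print(best, round(scores[best], 4))
```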

For MPMR, the design parameters, the error-insensitive zone (ε) and σ, were determined by trial and error; their values are 0.001 and 1.9, respectively.

The R2 values calculated for the MPMR, ELM and LS-SVM models are 0.9597, 0.9587 and 0.9099 during training and 0.9114, 0.8997 and 0.9062 during testing, respectively (Table 4). The Adj. R2 values for the MPMR and ELM models are 0.9570 and 0.9560 during training and 0.8953 and 0.8814 during testing, respectively; for the LS-SVM model they are 0.9039 and 0.8892 during training and testing, respectively. The RMSE values for the MPMR, ELM and LS-SVM models are 0.0590, 0.0597 and 0.0954 during training and 0.0905, 0.0934 and 0.1497 during testing, respectively (Table 4). The best R2 and RMSE values in both the training and testing periods were obtained for the MPMR model. The LS-SVM model gave the worst RMSE values in both periods and the worst R2 value in the training period (Table 4). The VAF values calculated for the MPMR, ELM and LS-SVM models are 0.981, 0.985 and 0.781 during training and 0.853, 0.848 and 0.834 during testing, respectively (Table 4).

For the MPMR and ELM models, the differences in VAF, R2 and Adj. R2 between the training and testing periods range from 5 to 13%, while the difference in PI is 13%. For the LS-SVM model, the differences in VAF, R2, Adj. R2 and PI between the training and testing periods range from 0.5 to 6%, which is very low.

In the training period, the ELM model came closest to the measured maximum and minimum values, while in the testing period the LS-SVM model came closest (Table 4).

According to the results of this study, the MPMR, ELM and LS-SVM methods are useful tools for modeling the UCS of the samples and performed well. The PI values obtained for the MPMR, ELM and LS-SVM models are 1.879, 1.881 and 1.590 during training and 1.658, 1.636 and 1.573 during testing, respectively (Table 4). In terms of PI, the MPMR model performed slightly better than the ELM model in the testing period. Furthermore, the difference in performance between the ELM and LS-SVM models is meaningful in both the training and testing periods.
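As an arithmetic check, the PI values listed above can be recomputed from the Adj. R2, VAF and RMSE values given earlier in this section. The form PI = Adj. R2 + 0.01·VAF − RMSE is an assumption inferred from these numbers rather than quoted from the paper. Table 4 lists VAF as a fraction (e.g. 0.981), so that term enters directly. The model labels in the dictionary are only keys.

```python
# Adj. R2, VAF (as listed, i.e. a fraction), RMSE and reported PI, per model and period
reported = {
    ("MPMR", "training"): (0.9570, 0.981, 0.0590, 1.879),
    ("ELM", "training"): (0.9560, 0.985, 0.0597, 1.881),
    ("LS-SVM", "training"): (0.9039, 0.781, 0.0954, 1.590),
    ("MPMR", "testing"): (0.8953, 0.853, 0.0905, 1.658),
    ("ELM", "testing"): (0.8814, 0.848, 0.0934, 1.636),
    ("LS-SVM", "testing"): (0.8892, 0.834, 0.1497, 1.573),
}

def performance_index(adj_r2, vaf_fraction, rmse):
    """Assumed PI = Adj. R2 + VAF/100 - RMSE, with VAF already given as a fraction."""
    return adj_r2 + vaf_fraction - rmse

recomputed = {}
for (model, period), (adj_r2, vaf, rmse, pi_reported) in reported.items():
    recomputed[(model, period)] = performance_index(adj_r2, vaf, rmse)
    print(f"{model:<7} {period:<9} PI = {recomputed[(model, period)]:.4f} "
          f"(reported {pi_reported:.3f})")
```

Every recomputed value agrees with the reported one to within rounding (at most 0.0005).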

To validate the prediction models suggested in this study, the predicted values are plotted against the measured values in Fig. 5.

The prediction error is represented by the distance of each data point from the 1:1 diagonal line (Fig. 6). The predicted values of the ELM and MPMR models lie almost on the diagonal line, unlike those of the LS-SVM model (Fig. 6).

The regression error characteristic (REC) curve (Bi and Bennett 2003) plots the error tolerance against the percentage of points predicted within that tolerance. The x-axis represents the error tolerance and the y-axis the accuracy of the regression function (Fig. 7). The area over the REC curve (AOC) approximates the expected error: the smaller the AOC, the better the model. REC curves therefore allow an easy and reliable visual assessment of model performance (Fig. 7). Figures 7 and 8 show the REC curves and a bar chart of the AOC values of the different models. The AOC of the LS-SVM model is higher than those of the developed MPMR and ELM models. Hence, MPMR and ELM perform better than the developed LS-SVM model, and their performance is almost the same.
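The REC curve and its AOC can be computed directly from the prediction errors. This is a minimal sketch with two assumptions. It uses absolute deviation as the error measure; Bi and Bennett also discuss squared residuals. It integrates up to the largest observed error. Under those choices, the exact AOC of the step-shaped empirical curve equals the mean absolute error, which is why the AOC approximates the expected error. The function names and data are hypothetical.

```python
import numpy as np

def rec_curve(measured, predicted, n_points=101):
    """REC curve points (tolerance, accuracy) using absolute deviation as the error."""
    err = np.abs(np.asarray(measured, float) - np.asarray(predicted, float))
    tol = np.linspace(0.0, err.max(), n_points)
    # accuracy(t) = fraction of samples whose error is within tolerance t
    acc = np.array([(err <= t).mean() for t in tol])
    return tol, acc

def area_over_curve(measured, predicted):
    """Exact area over the step-shaped REC curve, from 0 to the largest error."""
    err = np.sort(np.abs(np.asarray(measured, float) - np.asarray(predicted, float)))
    n = err.size
    widths = np.diff(np.concatenate(([0.0], err)))    # gaps between successive errors
    heights = 1.0 - np.arange(n) / n                  # 1 - accuracy on each gap
    return float(np.sum(widths * heights))

# Usage with made-up values (not data from this study)
measured = np.array([0.30, 0.42, 0.55, 0.61, 0.74, 0.88])
predicted = np.array([0.28, 0.47, 0.52, 0.66, 0.73, 0.80])
tol, acc = rec_curve(measured, predicted)
print(area_over_curve(measured, predicted), np.mean(np.abs(measured - predicted)))
```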

Taylor diagrams (Taylor 2001) are a simple graphical representation of how well predicted values correspond to observed values. They are used to compare the performance of several prediction models. A Taylor diagram summarizes the statistical comparison of the models in a two-dimensional graph by plotting the standard deviation, the correlation coefficient and the centered root-mean-square (RMS) difference. The standard deviation is given by the radial distance from the origin, and the correlation coefficient by the azimuthal angle. The centered RMS difference is proportional to the distance between the observed and simulated points and is expressed in the same units as the standard deviation (Fig. 9).
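The three Taylor-diagram statistics are linked by the law of cosines, E'² = σ_o² + σ_p² − 2σ_oσ_pR. This relation is why the distance between a model point and the reference point gives the centered RMS difference. The sketch below computes the statistics and the model's polar position. It assumes population (ddof = 0) standard deviations throughout, and its names and data are hypothetical.

```python
import numpy as np

def taylor_statistics(observed, predicted):
    """Quantities plotted on a Taylor diagram (Taylor 2001)."""
    o = np.asarray(observed, float)
    p = np.asarray(predicted, float)
    sd_o, sd_p = o.std(), p.std()                 # population standard deviations
    r = np.corrcoef(o, p)[0, 1]                   # correlation coefficient
    # Centered RMS difference: RMS of the anomalies (means removed)
    e_c = np.sqrt(np.mean(((p - p.mean()) - (o - o.mean())) ** 2))
    angle = np.arccos(np.clip(r, -1.0, 1.0))      # azimuthal position of the model point
    return {"sd_obs": sd_o, "sd_pred": sd_p, "r": r, "crmsd": e_c, "angle_rad": angle}

# Usage with made-up values (not data from this study)
observed = np.array([0.30, 0.42, 0.55, 0.61, 0.74, 0.88])
predicted = np.array([0.28, 0.47, 0.52, 0.66, 0.73, 0.80])
stats = taylor_statistics(observed, predicted)
# Model point in Cartesian coordinates; the observed (reference) point is (sd_obs, 0)
px = stats["sd_pred"] * np.cos(stats["angle_rad"])
py = stats["sd_pred"] * np.sin(stats["angle_rad"])
print(stats, np.hypot(px - stats["sd_obs"], py))
```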

Figure 9 shows the Taylor diagram of the developed MPMR, ELM and LS-SVM models. It is clear from Fig. 9 that the developed MPMR and ELM models perform better than the LS-SVM model.

Discussion and conclusions

This study examines the applicability and capability of the Extreme Learning Machine (ELM) and Minimax Probability Machine Regression (MPMR) approaches for predicting the UCS of volcanic rocks with different degrees of weathering. The results of the developed ELM and MPMR models were compared with those of Least Square Support Vector Machine (LS-SVM) models. In these models, porosity and slake durability index were used as input parameters.

According to the results of this study, the LS-SVM model, which has the worst performance among the soft computing methods used here, performed slightly better than the MLR model, which has the best performance among the regression models. In addition, the regression models are subject to the implementation and generalization difficulties described in the Introduction.

Considering the PI, REC curves and Taylor diagram, the MPMR and ELM models perform better than the developed LS-SVM model, and their performance is almost the same. The prediction performance of the MPMR and ELM models is excellent, while that of the LS-SVM model is good. Hence, the developed soft computing models successfully capture the relation between the inputs and the output.

The developed MPMR tries to keep the predicted output within a bound, so the model controls future predictions of UCS; ELM and LS-SVM have no such control over future predictions. MPMR uses two tuning parameters (error-insensitive zone and width of the radial basis function). LS-SVM also uses two tuning parameters (regularization parameter and kernel width). ELM uses four tuning parameters (activation function, number of hidden neurons, number of training data and size of the data block). ELM is a modified version of the ANN and is therefore based on the empirical risk minimization principle, whereas MPMR and LS-SVM are based on the structural risk minimization principle. MPMR is developed using the concept of probability; ELM and LS-SVM are not probabilistic models.

In general, there is no discernible difference between the Id values of fresh rocks and those of slightly weathered rocks (Ceryan et al. 2008; Wyering et al. 2014; Ceryan 2015; Undul and Tugrul 2016; Udagedara et al. 2017). On the other hand, there is a significant difference between the Id value of moderately weathered rock and that of fresh rock, and this difference is much larger in highly decomposed rocks. The same holds for n. Similarly, UCS decreases with increasing degree of weathering, especially beyond the moderately weathered stage (Wyering et al. 2014; Ceryan 2015; Undul and Tugrul 2016; Udagedara et al. 2017). These observations also apply to this study. Therefore, the soft computing models given in this study are suitable for samples of magmatic and metamorphic rock representing at least three different degrees of weathering, excluding the completely weathered state. They are not recommended for samples that do not span different degrees of weathering. Users can apply the developed models as quick tools for predicting the UCS of magmatic and metamorphic rocks with different degrees of weathering, and the models can also be tried on other problems in weathered rocks.

References

Aboutaleb S, Mahmoud Behnia M, Bagherpour R, Bluekian B (2018) Using non-destructive tests for estimating uniaxial compressive strength and static Young’s modulus of carbonate rocks via some modeling techniques. Bull Eng Geol Environ 77(4):1717–1728

Acarlioglu S, Kadir S, Abdioglu E, Arslan M (2013) Epithermal-alteration geology, mineralogy and geochemistry of Eocene volcanic rocks in the Hasandağ (Giresun) area, eastern Pontides, NE Turkey. N Jb Miner Abh (J.Min. Geochem.) 190(1):79–99

Ali M, Guan W, Ibrahim A (2014) Empirical relations between compressive strength and microfabric properties of amphibolites using multivariate regression, fuzzy inference and neural networks: a comparative study. Eng Geol 183:230–240

Alvarez-Grima M, Babuska R (1999) Fuzzy model for the prediction of unconfined compressive strength of rock samples. Int J Rock Mech Min Sci 36:339–349

Ashtari M, Mousavi SE, Cheshomi A, Khamechian M (2019) Evaluation of the single compressive strength test in estimating uniaxial compressive and Brazilian tensile strengths and elastic modulus of marlstone. Eng Geol 248:256–266

Baud P, Wong T-F, Zhu W (2014) Effects of porosity and crack density on the compressive strength of rocks. Int J Rock Mech Min Sci 64:202–211

Baykasoglu A, Gullu H, Canakci H (2008) Predicting of compressive and tensile strength of limestone via genetic programming. Expert Syst Appl 35(1–2):111–123

Beiki M, Majdi A, Givshad AD (2013) Application of genetic programming to predict the uniaxial compressive strength and elastic modulus of carbonate rocks. Int J Rock Mech Min Sci 63:159–169

Bi J, Bennett KP (2003) Regression error characteristic curves. In: Proceedings of the twentieth international conference on machine learning (ICML-2003), Washington, pp 43–50

BS 5930 (1981) Code of practice for site investigations. British Standards Institution, London

Bubeck A, Walker RJ, Healy D, Dobbs M, Holwell DA (2017) Pore geometry as a control on rock strength. Earth Planet Sci Lett 457:38–48

Burges C (1998) A tutorial on support vector machines for pattern recognition. In: Data mining and knowledge discovery. Kluwer Academic Publishers, Boston

Canakci H, Baykasoglu A, Gullu H (2009) Prediction of compressive and tensile strength of Gaziantep basalts via neural networks and gene expression programming. Neural Comput & Applic 18:1031–1041

Cargill JS, Shakoor A (1990) Evaluation of empirical methods for measuring the uniaxial compressive strength. Int J Rock Mech Min Sci 27:495–503

Celik SB (2019) Prediction of uniaxial compressive strength of carbonate rocks from nondestructive tests using multivariate regression and LS-SVM methods. Arab J Geosci 12:193

Cengiz K, Sertcelik F, Sertcelik I (2018) Estimation of unconfined uniaxial compressive strength using Schmidt hardness ultrasonic pulse velocity. Tehnički Vjesnik/Technical Gazette 25(5):1669–1574

Ceryan S (2008) New chemical weathering indices for estimating engineering properties of rocks: a case study from Kürtün Granodiorite, NE Turkey. Turkish J Earth Sci 17:187–207

Ceryan S (2012) Weathering indices for assessment of weathering effect and classification of weathered rocks: a case study from NE Turkey. In: Dar IA (ed) Earth sciences. InTech, pp 19–44. ISBN 978-953-307-861-8

Ceryan N (2014) Application of support vector machines and relevance vector machines in predicting uniaxial compressive strength of volcanic rocks. J Afr Earth Sci 100:634–644

Ceryan S (2015) New weathering indices for evaluating durability and weathering characterization of crystalline rock material: a case study from NE Turkey. J Afr Earth Sci 103:54–64

Ceryan N, Korkmaz Can N (2018) Prediction of the uniaxial compressive strength of rocks materials. In: Ceryan N (ed) Handbook of Research on Trends and Digital Advances in Engineering Geology. IGI Global United States of America, pp 39–76

Ceryan S, Tudes S, Ceryan N (2008) A new quantitative weathering classification for igneous rocks. Environ Geol 55:1319–1336

Ceryan N, Okkan U, Kesimal A (2013) Prediction of unconfined compressive strength of carbonate rocks using artificial neural networks. Environ Earth Sci 68:807–819

Ceryan N, Berber S, Ceryan S (2016) Prediction of unconfined compressive strength of weathered rocks using p-durability index and porosity in soft computing models. ICENS 2016, 2nd International Conference on Engineering and Natural Sciences, 24-28

Cevik A, Sezer EA, Cabalar AF, Gokceoglu C (2011) Modeling of the uniaxial compressive strength of some clay-bearing rocks using neural network. Appl Soft Comput 11:2587–2594

Chang C, Zoback MD, Khaksar A (2006) Empirical relations between rock strength and physical properties in sedimentary rocks. J Pet Sci Eng 51:223–237

Chaudhary B (2004) Correlation between UCS and penetration strength on weak calcarenite of the Roman mine pillar by TU Delft hand penetrometer. Personal communication. ITC, Delft

Cheshomi A, Ahmadi-Sheshde E (2013) Determination of uniaxial compressive strength of microcrystalline limestone using single particles load test. J Pet Sci Eng 111:121–126

Cheshomi A, Ahmadi-Seshde E, Galandarzade A (2012) Introducing single particle loading apparatus and repeatability of the results. Iran J Eng Geol 5:17–32 (Persian Language)

Cheshomi A, Mousavi E, Ahmadi-Sheshde E (2015) Evaluation of single particle loading test to estimate the uniaxial compressive strength of sandstone. J Pet Sci Eng 135:421–428

Cheshomi A, Hajipour G, Hassanpour J, Dashtaki BB, Firouzei Y, Sheshde EA (2017) Estimation of uniaxial compressive strength of shale using indentation testing. J Pet Sci Eng 151:24–30

Deere DU, Miller RP (1966) Engineering classification and index properties for intact rock. Technical Report AFWL-TR-65-116. Air Force Weapons Lab., Kirtland Air Force Base, New Mexico, 308 p

Dehghan S, Sattari GH, Chehreh Chelgani S, Aliabadi MA (2010) Prediction of uniaxial compressive strength and modulus of elasticity for travertine samples using regression and artificial neural networks. Min Sci Technol 20:41–46

Demirdag S, Sengun N, Ugur I, Altindag R (2018) Estimating the uniaxial compressive strength of rocks with Schmidt rebound hardness by considering the sample size. Arab J Geosci 11:502

Deo RC, Samui P (2017) Forecasting evaporative loss by least-square support-vector regression and evaluation with genetic programming, Gaussian process, and minimax probability machine regression: case study of Brisbane City. J Hydrol Eng 22(6):05017003. https://doi.org/10.1061/(ASCE)HE.1943-5584.0001506

Dincer I, Acar A, Ural S (2008) Estimation of strength and deformation properties of quaternary caliche deposits. Bull Eng Geol Environ 67:353–366

Fattahi H (2017) Applying soft computing methods to predict the uniaxial compressive strength rocks from Schmidt hammer rebound values. Comput Geosci 21(4):665–681

Fener M, Kahraman S, Bilgil A, Gunaydin O (2005) A comparative evaluation of indirect methods to estimate the compressive strength of rocks. Rock Mech Rock Eng 38(4):329–334

Feng X (2015) Application of Bayesian approach in geotechnical engineering. PhD Thesis, Polytechnic University of Madrid, 198 p

Fereidooni D (2016) Determination of the geotechnical characteristics of hornfelsic rocks with a particular emphasis on the correlation between physical and mechanical properties. Rock Mech Rock Eng 49:2595–2608

Gedik N (2018) Least squares support vector mechanics to predict the stability number of rubble-mound breakwaters. Water 10(10):1452. https://doi.org/10.3390/w10101452

Ghasemi E, Kalhori H, Bagherpour R, Yagiz S (2018) Model tree approach for predicting uniaxial compressive strength and Young’s modulus of carbonate rocks. Bull Eng Geol Environ 77:331–343

Gokceoglu C (1997) Approach to eliminate the difficulties encountered in the engineering classification of clay masses containing dense discontinuities. PhD Thesis, Hacettepe University, Department of Geological Engineering, 214 p (in Turkish)

Gokceoglu C (2002) A fuzzy triangular chart to predict the uniaxial compressive strength of the agglomerates from their petrographic composition. Eng Geol 66(1–2):39–51

Gokceoglu C, Zorlu K (2004) A fuzzy model to predict the uniaxial compressive strength and the modulus of elasticity of a problematic rock. Eng Appl Artif Intell 17:61–72

Gokceoglu C, Ulusay R, Sonmez H (2000) Factors affecting the durability of selected weak and clay bearing rocks from Turkey. With particular emphasis on the influence of the number of drying and wetting cycles. Eng Geol 57:215–237

Gokceoglu C, Sonmez H, Zorlu K (2009) Estimating the uniaxial compressive strength of some clay bearing rocks selected from Turkey by nonlinear multivariable regression and rule-based fuzzy models. Expert Syst 26(2):176–190

Gopinath KGS, Pal S, Tambe P (2018) Prediction of weight percentage alumina and pore volume fraction in bio-ceramics using Gaussian process regression and minimax probability machine regression. Mater Today Proc 5(5):12233–12239

Griffiths L, Heap MJ, Xu T, Chen CF, Baud P (2017) The influence of pore geometry and orientation on the strength and stiffness of porous rock. J Struct Geol 96:149–160

Haftani M, Bohloli B, Moosavi M, Nouri A, Moradi M, Javan MRM (2013) A new method for correlating rock strength to indentation tests. J Pet Sci Eng 112:24–31

Haramy KY, De Marco MJ (1985) Use of Schmidt hammer for rock and coal testing. In: Proceedings of the 26th US symposium on rock mechanics, 26–28 June, Rapid City, SD. Balkema, Rotterdam, pp 549–555

Heidari M, Mohseni H, Jalali SH (2018) Prediction of uniaxial compressive strength of some sedimentary rocks by fuzzy and regression models. Geotech Geol Eng 36:401–412

Heidarian M, Jalalifar H, Rafati A (2016) Prediction of rock strength parameters for an Iranian oil field using neurofuzzy method. J AI Data Min 4:2

Huang G-B, Zhu Q-Y, Siew C-K (2006) Extreme learning machine: theory and applications. Neurocomputing 70(1–3):489–501

Huang Y, Lan Y, Thomson SJ, Fang A, Hoffmann WC, Lacey RE (2010) Development of soft computing and applications in agricultural and biological engineering. Comput Electron Agric 71(2):107–127

Huang G, Huang G-B, Song S, You K (2015) Trends in extreme learning machines: a review. Neural Netw 61:32–48

International Society for Rock Mechanics (1981) In: Brown ET (ed) Rock characterization, testing and monitoring-ISRM suggested methods. Oxford Pergamon, p 211

International Society for Rock Mechanics (2007) The complete ISRM suggested methods for rock characterization, testing and monitoring: 1974–2006. In: Ulusay R, Hudson JA (eds) Suggested methods prepared by the commission on testing methods. International Society for Rock Mechanics. ISRM Turkish National Group, Ankara, p 628

Jahed Armaghani D, Tonnizam Mohamad E, Momeni E, Monjezi M, Narayanasamy MS, Amin MFM (2015) An adaptive neuro-fuzzy inference system for predicting unconfined compressive strength and Young’s modulus: a study on Main range granite. Bull Eng Geol Environ 74:1301–1319

Jahed Armaghani D, Amin MFM, Yagiz S, Faradonbeh RS, Abdullah RA (2016a) Prediction of the uniaxial compressive strength of sandstone using various modeling techniques. Int J Rock Mech Min Sci 85:174–186

Jahed Armaghani D, Tonnizam Mohamad E, Momeni E, Monjezi M, Narayanasamy MS (2016b) Prediction of the strength and elasticity modulus of granite through an expert artificial neural network. Arab J Geosci 9:48

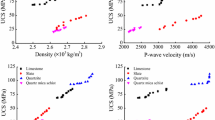

Jamshidi A, Zamanian H, Sahamieh RZ (2018) The effect of density and porosity on the correlation between uniaxial compressive strength and p-wave velocity. Rock Mech Rock Eng 51(4):1279–1286

Kahraman S, Fener M, Kilic CO (2016) A preliminary study on the conversion factor used in the prediction of the UCS from the BPI for pyroclastic rocks. Bull Eng Geol Environ 75(2):771–780

Karakus M, Tutmez B (2006) Fuzzy and multiple regression modelling for evaluation of intact rock strength based on point load, Schmidt hammer and sonic velocity. Rock Mech Rock Eng 39(1):45–57

Kayabali K, Selcuk L (2010) Nail penetration test for determining the uniaxial compressive strength of rock. Int J Rock Mech Min Sci 47:265–275

Koncagul CE, Santi PM (1999) Predicting the unconfined compressive strength of the Breathitt shale using durability, shore hardness and rock structural properties. Int J Rock Mech Min Sci 36:139–153

Koolivand-Salooki M, Esfandyari M, Rabbani E, Koulivand M, Azarmehre A (2017) Application of genetic programing technique for predicting uniaxial compressive strength using reservoir formation properties. J Pet Sci Eng 159:35–48

Kumar M, Samui P, Naithani AK (2013) Determination of uniaxial compressive strength and modulus of elasticity of travertine using machine learning techniques. Int J Adv Soft Comput Appl 5(3):1–15

Lanckriet GRG, Ghaoui LE, Bhattacharyya C, Jordan MI (2002a) Minimax probability machine. In: Dietterich TG, Becker S, Ghahramani Z (eds) Advances in neural information processing systems 14. MIT Press, Cambridge, MA

Lanckriet GRG, Ghaoui LE, Bhattacharyya C, Jordan MI (2002b) A robust minimax approach to classification. J Mach Learn Res 3:555–582

Lee CJ, Lee GSW, Yoon ES (2008) A new estimation algorithm of physical properties based on a group contribution and support vector machine. Korean J Chem Eng 25:568–574

Li W, Tan Z (2017) Research on rock strength prediction based on least squares support vector machine. Geotech Geol Eng 35:385–393

Liu Z, Shao J, Xu W, Wu Q (2015) Indirect estimation of unconfined compressive strength of carbonate rocks using extreme learning machine. Acta Geotech 10:651–663

Madhubabu N, Singh PK, Kainthola A, Mahanta B, Tripathy A, Singh TN (2016) Prediction of compressive strength and elastic modulus of carbonate rocks. Measurement 88:202–213

Maji VB, Sitharam TG (2008) Prediction of elastic modulus of jointed rock mass using artificial neural networks. Geotech Geol Eng 26(4):443–452

Manouchehrian A, Sharifzadeh M, Hamidzadeh Moghadem R, Nouri T (2013) Selection of regression models for predicting strength and deformability properties of rocks using GA. Int J Min Sci Technol 23:495–501

Manouchehrian A, Sharifzadeh M, Marji MF (2014) A bonded particle model for analysis of the flaw orientation effect on crack propagation mechanism in brittle materials under compression. Arch Civ Mech Eng 14:40–52

Maruto Corporation (2006) Penetrometer for soft rock: model SH-70 instruction manual, Tokyo

Mateus J, Saavedra N, Carrillo ZC, Mateus D (2007) Correlation development between indentation parameters and unaxial compressive strength for Colombian sandstones. CT&F-Ciencia Tecnología y Futuro 3(3):125–136

Matin SS, Farahzadi L, Makaremi S, Chehreh Chelgan S, Sattari GH (2018) Variable selection and prediction of uniaxial compressive strength and modulus of elasticity by random forest. Appl Soft Comput 70:980–987

Mazidi MS, Haftani M, Bohloli B, Cheshomi A (2012) Measurement of uniaxial compressive strength of rocks using reconstructed cores from rock cuttings. J Pet Sci Eng 86-87:39–43

Mercer J (1909) Functions of positive and negative type, and their connection with the theory of integral equations. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences

Meulenkamp F, Alvarez Grima M (1999) Application of neural networks for the prediction of the unconfined compressive strength (UCS) from Equotip hardness. Int J Rock Mech Min Sci 36:29–39

Mishra DA, Basu A (2012) Use of the block punch test to predict the compressive and tensile strengths of rocks. Int J Rock Mech Min Sci 51:119–127

Mishra DA, Basu A (2013) Estimation of uniaxial compressive strength of rock materials by index tests using regression analysis and fuzzy inference system. Eng Geol 160:54–68

Mohamad T, Jahed Armaghani D, Momeni E, Yazdavar AH, Ebrahim M (2018) Rock strength estimation: a PSO-based BP approach. Neural Comput and Applic 30:1635–1646

Mokhtari M, Behnia M (2019) Comparison of LLNF, ANN, and COA-ANN techniques in modeling the uniaxial compressive strength and static Young’s modulus of limestone of the Dalan formation. Nat Resour Res 28(1):223–239

Momeni E, Jahed Armaghani D, Hajihassani M, Amin MFM (2015) Prediction of uniaxial compressive strength of rock samples using hybrid particle swarm optimization-based artificial neural networks. Measurement 60:50–63

Monjezi M, Amini Khoshalan H, Razifard M (2012) A neuro-genetic network for predicting uniaxial compressive strength of rocks. Geotech Geol Eng 30:1053–1062

Moos D, Peska P, Finkbeiner T, Zoback MD (2003) Comprehensive wellbore stability analysis utilizing quantitative risk assessment. J Pet Sci Eng 38:97–110

Moradian Z, Behnia M (2009) Predicting the uniaxial compressive strength and static Young's modulus of intact sedimentary rocks using the ultrasonic test. Int J Geomech 9(1):14–19

Nazir R, Momeni E, Jahed Armaghani D, Mohd For M (2013) Correlation between unconfined compressive strength and indirect tensile strength of limestone rock samples. EJGE 18(1):1737–1746

Nefeslioglu HA (2013) Evaluation of geo-mechanical properties of very weak and weak rock materials by using non-destructive techniques: ultrasonic pulse velocity measurements and reflectance spectroscopy. Eng Geol 160:8–20

Ng K, Peiris S, Gerlach R (2014) Estimation and forecasting with logarithmic autoregressive conditional duration models: a comparative study with an application. Expert Syst Appl 41(7):3323–3332

Ngan-Tillard DJM, Verwaal W, Maurenbrecher PM, Paassen LA (2009) Microstructural degradation of Maastrichtian limestones. In: Proceedings of Eurock 2009, Engineering in difficult ground conditions: soft rocks and karst, Leiden. CRC Press, pp 321–326

Ojha VK, Mishra AA (2018) Neural tree for estimating the uniaxial compressive strength of rock materials. In: Abraham A et al (eds) HIS 2017. Advances in Intelligent Systems and Computing, vol 734. Springer, Cham, pp 1–10

Ozbek A, Unsal M, Dikec A (2013) Estimating uniaxial compressive strength of rocks using genetic expression programming. J Rock Mech Geotech Eng 5(4):325–329

Palassi M, Mojtaba Pirpanahi M (2013) Edge load strength test for indirect estimation of unconfined compressive strength. Int J Rock Mech Min Sci 61:111–117

Pollak D, Gulam V, Bostjancic I (2017) A visual determination method for uniaxial compressive strength estimation based on Croatian carbonate rock materials. Eng Geol 231:68–80

Rabbani E, Sharif F, Koolivand Salooki M, Moradzadeh A (2012) Application of neural network technique for prediction of uniaxial compressive strength using reservoir formation properties. Int J Rock Mech Min Sci 56:100–111

Rasool D, Nasser ME, Zahra H (2014) Modeling of uniaxial compressive strength by genetic programming and neuro-fuzzy. J Biodivers Environ Sci (JBES) 5(2):604–611

Rezaei M, Majdi A, Monjezi M (2014) An intelligent approach to predict unconfined compressive strength of rock surrounding access tunnels in longwall coal mining. Neural Comput Appl 24:233–241

Rzhevsky V, Novick G (1971) The physics of rocks. Mir Publishers, Moscow, 320 p

Saedi B, Mohammadi SD, Shahbazi H (2019) Prediction of uniaxial compressive strength and elastic modulus of migmatites using various modeling techniques. Arab J Geosci 11:574

Samui P (2008) Slope stability analysis: a support vector machine approach. Environ Geol 56:255–267

Samui P, Dixon B (2012) Application of support vector machine and relevance vector machine to determine evaporative losses in reservoirs. Hydrol Process 26(9):1361–1369

Sattari M, Gharagheizi F, Ilani-Kashkouli P, Mohammadi AH, Ramjugernath D (2014) Determination of the speed of sound in ionic liquids using a least-squares support vector machine group contribution method. Fluid Phase Equilib 367:188–193

Serre D (2002) Matrices theory and applications. Springer, Berlin

Sharma PK, Singh TN (2008) A correlation between P-wave velocity, impact strength index, slake durability index and uniaxial compressive strength. Bull Eng Geol Environ 67:17–22

Sharma LK, Vishal V, Singh TN (2017) Developing novel models using neural networks and fuzzy systems for the prediction of strength of rocks from key geomechanical properties. Measurement 102:158–169

Sheshde EA, Cheshomi A (2015) New method for estimating unconfined compressive strength (UCS) using small rock samples. J Pet Sci Eng 133:367–375

Shorey PR, Barat D, Das MN, Mukherjee KP, Singh B (1984) Schmidt hammer rebound data for estimation of large scale in situ coal strength. Int J Rock Mech Min Sci Geomech Abstr 21:39–42

Singh R, Umrao RK, Ahmad M, Ansari MK, Sharma LK, Singh TN (2017) Prediction of geomechanical parameters using soft computing and multiple regression. Measurement 99:108–119

Sonmez H, Tuncay E, Gokceoglu C (2004) Models to predict the uniaxial compressive strength and the modulus of elasticity for Ankara agglomerate. Int J Rock Mech Min Sci 41:717–729

Sonmez H, Gokceoglu C, Nefeslioglu HA, Kayabasi A (2006) Estimation of rock modulus: for intact rocks with an artificial neural network and for rock masses with a new empirical equation. Int J Rock Mech Min Sci 43(2):224–235

Sridevi J (2000) Numerical modeling of jointed rocks. PhD Thesis, Department of Civil Engineering, IISc, Bangalore

Strohmann T, Grudic GZ (2002) A formulation for minimax probability machine regression. In: Advances in neural information processing systems. MIT Press, Cambridge, pp 769–776

Sulukcu S, Ulusay R (2001) Evaluation of the block punch index test with particular reference to the size effect, failure mechanism and its effectiveness in predicting rock strength. Int J Rock Mech Min Sci 38:1091–1111

Suykens JAK, Vandewalle J (1999) Least squares support vector machine classifiers. Neural Process Lett 9:293–300

Suykens JAK, De Brabanter J, Lukas L, Vandewalle J (2002) Weighted least squares support vector machines: robustness and sparse approximation. Neurocomputing 48(1–4):85–105

Szwedzicki T (1998) ISRM suggested methods for determining the indentation hardness index of rock materials. Int J Rock Mech Min Sci Geomech Abstr 35:831–835

Taylor KE (2001) Summarizing multiple aspects of model performance in a single diagram. J Geophys Res 106:7183–7192

Tonnizam Mohamad E, Jahed Armaghani D, Momeni E, Alavi Nezhad Khalil Abad SV (2015) Prediction of the unconfined compressive strength of soft rocks: a PSO-based ANN approach. Bull Eng Geol Environ 74:745–757

Torabi-Kaveh M, Naseri F, Saneie S, Sarshari B (2014) Application of artificial neural networks and multivariate statistics to predict UCS and E using physical properties of Asmari limestones. Arab J Geosci 8(5):2889–2897

Tugrul A (2004) The effect of weathering on pore geometry and compressive strength of selected rock types from Turkey. Eng Geol 75(3–4):215–227

Udagedara DT, Oguchi CT, Gunatilake JK (2017) Evaluation of geomechanical and geochemical properties in weathered metamorphic rocks in tropical environment: a case study from Samanalawewa hydropower project, Sri Lanka. Geosci J 21(3):441–452

Ulusay R, Gokceoglu C (1997) The modified block punch index test. Can Geotech J 34(6):991–1001

Umrao RK, Sharma LK, Singh R, Singh TN (2018) Determination of strength and modulus of elasticity of heterogenous sedimentary rocks: an ANFIS predictive technique. Measurement 126:194–201

Undul O, Tugrul A (2016) On the variations of geo-engineering properties of dunites and diorites related to weathering. Environ Earth Sci 75:1326

Usturbelli Z (2008) Determination of weathering degree and weatherability of wall building stone constructed for environmental recreation in engineering time in Karadeniz Technical University central campus (Trabzon), Ms Thesis, Karadeniz Technical University, Department of Geological Engineering, 90p (in Turkish)

Van Gestel T, Suykens JAK, Baesens B, Viaene S, Vanthienen J, Dedene G, De Moor B, Vandewalle J (2004) Benchmarking least squares support vector machine classifiers. Mach Learn 54:5–32

Vapnik VN (1995) The nature of statistical learning theory. Springer

Verwaal W, Mulder A (1993) Estimating rock strength with the Equotip hardness tester. Int J Rock Mech Min Sci Geomech Abstr 30:659–662

Wang Y, Aladejare AE (2015) Selection of site-specific regression model for characterization of uniaxial compressive strength of rock. Int J Rock Mech Min Sci 75:73–81

Wang CW, Chau KW, Cheng CT, Qiu LA (2009) Comparison of performance of several artificial intelligence methods for forecasting monthly discharge time series. J Hydrol 374:294–306

Wyering LD, Villeneuve MC, Wallis IC, Siratovich PA, Kennedy BM, Gravley DM, Cant JL (2014) Mechanical and physical properties of hydrothermally altered rocks, Taupo Volcanic Zone, New Zealand. J Volcanol Geotherm Res 288:76–93

Yagiz S (2009) Predicting uniaxial compressive strength, modulus of elasticity and index properties of rocks using Schmidt hammer. Bull Eng Geol Environ 68:55–63

Yagiz S (2011) Correlation between slake durability and rock properties for some carbonate rocks. Bull Eng Geol Environ 70:377–383

Yagiz S, Sezer EA, Gokceoglu C (2012) Artificial neural networks and nonlinear regression techniques to assess the influence of slake durability cycles on the prediction of uniaxial compressive strength and modulus of elasticity for carbonate rocks. Int J Numer Anal Methods Geomech 36:1636–1650

Yamaguchi Y, Nakamura Y, Nakamura M, Hakoishi N, Yamaya M, Kato Y (2005) Verification of design strength of soft rock foundation by needle penetration testing. J Jpn Soc Eng Geol 46(1):20–27 (in Japanese)

Yang L, Yang B, Jing S, Sun Q (2019) A minimax probability extreme machine framework and its application in pattern recognition. Eng Appl Artif Intell 81:260–269

Yasar E, Erdogan Y (2004) Correlating sound velocity with the density, compressive strength and Young’s modulus of carbonate rocks. Int J Rock Mech Min Sci 41:871–875

Yesiloglu-Gultekin N, Sezer EA, Gokceoglu C, Bayhan H (2013) An application of adaptive neuro fuzzy inference system for estimating the uniaxial compressive strength of certain granitic rocks from their mineral contents. Expert Syst Appl 40(3):921–928

Yilmaz I (2009) A new testing method for indirect determination of the unconfined compressive strength of rocks. Int J Rock Mech Min Sci 46:1349–1357

Yilmaz NG (2013) The influence of testing procedures on uniaxial compressive strength prediction of carbonate rocks from equotip hardness tester (EHT) and proposal of a new testing methodology: hybrid dynamic hardness (HDH). Rock Mech Rock Eng 46:95–106

Yilmaz I, Yuksek AG (2008) An example of artificial neural network (ANN) application for indirect estimation of rock parameters. Rock Mech Rock Eng 41:781–795

Yilmaz I, Yuksek AG (2009) Prediction of the strength and elastic modulus of gypsum using multiple regression, ANN, and ANFIS models. Int J Rock Mech Min Sci 46:803–810

Yuen KV (2010) Recent developments of Bayesian model class selection and applications in civil engineering. Struct Saf 32(5):338–346

Zausa F, Civolani L (2001) Method for selecting drilling parameters. International Application Published under the Patent Cooperation Treaty, p 23

Zong WW, Huang G-B, Chen YQ (2013) Weighted extreme learning machine for imbalance learning. Neurocomputing 101:229–242

Zorlu K, Gokceoglu C, Ocakoglu F, Nefeslioglu HA, Acikalin S (2008) Prediction of uniaxial compressive strength of sandstones using petrography-based models. Eng Geol 96:141–158

Additional information

Responsible Editor: Ali Karrech

About this article

Cite this article

Ceryan, N., Samui, P. Application of soft computing methods in predicting uniaxial compressive strength of the volcanic rocks with different weathering degree. Arab J Geosci 13, 288 (2020). https://doi.org/10.1007/s12517-020-5273-4

DOI: https://doi.org/10.1007/s12517-020-5273-4