Abstract

In this work, we deal with a global optimization problem (P) for which we look for the most preferred extreme point (vertex) of the convex polyhedron according to a new linear criterion, among all efficient vertices of a multi-objective linear programming problem. This problem has been studied for decades and a lot has been done since the 70’s. Our purpose is to propose a new and effective methodology for solving (P) using a branch and bound based technique, in which, at each node of the search tree, new customized bounds are established to delete uninteresting areas from the decision space. In addition, an efficiency test is performed considering the last simplex tableau corresponding to the current visited vertex. A comparative study shows that the proposed method outperforms the most recent and performing method dedicated to solve (P).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The concept of optimal solution in linear programming with single objective function is no longer valid in multi-objective linear programming (MOLP). Indeed, several objective functions, often conflicting, must be optimized simultaneously and as a consequence; the improvement of one of the functions generates the deterioration of at least one another. Hence, the term optimal solution is replaced by efficient solution in the decision space, or non-dominated solution in the criteria space of the program to be solved.

However, in multi-objective optimization, the set \(S_E\) of efficient solutions is generally of considerable size and the choice of a solution that suits the decision maker’s preferences according to a new criterion, becomes a difficult task. Indeed, it is well known that this problem (henceforth denoted (P)) is a difficult global optimization problem (see for instance Benson (1991), Fülöp and Muu (2000), Thoai (2000), Yamamoto (2002)) since the set \(S_E\) of efficient solutions of (P) is nonconvex. Notice that, in this case, the efficient set \(S_E\) has two interesting properties. First, \(S_E\) is connected and second, it contains extreme points of the polyhedron. An extensive literature review allowed us to notice that several studies have been carried out during the end of the last century. In fact, one of the pioneers in this field is Philip (1972) who studied this problem and suggested for solving it an algorithm that moves from an efficient vertex to an efficient adjacent one yielding an increase in the objective function and a cutting plane technique is used to overcome the difficulty of non-convexity of the efficient set. A lot of papers followed his work using somehow the same technique; we cite for example those proposed by Isermann (1974), Bolintineanu (1993), Ecker and Song (1994) and Fülöp and Muu (2000). In a different way, Benson (1993) proposed a nonadjacent extreme point search algorithm which dispenses with the enumeration.

Not long ago, a new study to obtain all efficient extreme points of MOLP with only three objectives has been published Piercy and Steuer (2019). This approach operates in the decision space and especially useful when working with such MOLPs possessing large numbers of efficient extreme points. Whereas, in this case, working in the criteria space can be very beneficial in terms of execution time.

Recently, Liu et al. (2018) proposed two procedures to solve (P) in the criteria space, which the authors called Primal and Dual Algorithms. In fact, problems of interest are divided in two special cases of (P) where in the first case, they consider \(S_E\) as the efficient set of MOLP problem (multi-objective linear program) and in the second case, they consider a CMOP problem (convex multi-objective program). Based on a revised version of Benson’s outer approximation algorithm, they first propose a new primal algorithm for MOLP problem by incorporating a bounding procedure in the primal algorithm described in Hamel et al. (2013). Then, this primal algorithm is extended to solve problem (P) in the criteria space.

In the dual algorithm, they introduce dual algorithms to solve cases one and two in dual objective space. The dual algorithms are derived from dual variants of Benson’s algorithm Hamel et al. (2013), Löhne et al. (2014). Comparison is done with several state of the art algorithms from the literature on a set of randomly generated instances to demonstrate that they are considerably faster than the competitors.

In Boland et al. (2017) the authors described a new algorithm for optimizing a linear function over the set of efficient solutions of a multi-objective integer linear program (MOILP). To generate the non-dominated solutions, the authors developed a novel criteria space decomposition scheme resulting from a limited number of search sub-spaces created as well as that of disjunctive constraints required to define the single-objective integer program that searches for a non-dominated solution. A computational study shows that the proposed algorithm clearly outperforms Jorge’s algorithm Jorge (2009) that defined a sequence of progressively more constrained single-objective integer problems to eliminate not only all the previously generated efficient solutions, but also any other feasible solutions whose criterion vectors are dominated.

In the present study, we propose a branch and bound based method to solve the global optimization problem (P), named BCO-method. In a structured search tree, a node is created to determine an extreme point in the polytope of the MOLP problem, and a branch defines adjacent edges of the polytope along which we move from an extreme point to a neighbour one to improve at least one criterion. Several evaluation process rules to fathom a node are developed; the corresponding extreme point is efficient or has already been visited, no criterion of the MOLP problem can be improved or the value of the function f to be optimized is less than the best value of f previously encountered (for maximization problem). This has the effect to prune uninteresting branches, which allows us to find the optimal solution for (P) without having to check the entire set of efficient solutions that correspond to efficient extreme points of the MOLP problem. Moreover, results in the simplex tableau are used to launch the current node efficiency test run. In the following section (Sect. 2), we give mathematical formulations and necessary notations for a good understanding of the algorithm developed. Also, we provide some preliminaries on multi-objective optimization. In Sect. 3, the principle of the proposed BCO-method is set forth and the different steps of the algorithm are processed. In Sect. 4, theoretical results and proofs on which the algorithm is based are established. Furthermore, in Sect. 5, an illustrative example is performed to exhibit the resolution mechanism. In Sect. 6, a comparative study is carried out and shows that the BCO-method performs better than the Primal method recently described in reference Liu and Ehrgott (2018). Finally, some concluding remarks and prospects are given in Sect. 7.

2 Mathematical formulation

Let \( S=\lbrace x\in {\mathfrak {R}}^n|Ax \le b,\ x\ \ge \ 0\rbrace \) be a nonempty compact polyhedron in \({\mathfrak {R}}^n\), where A is an \((m\times n)-\)real matrix, b an \((m \times 1)\)-real vector and let \(c^i\) be an \((1\times n)-\) real vectors for \(i=1,\ \ldots,\ r;\ r\ge 2\). Let C be the \((r \times n)-\)matrix whose rows are the vectors \(c^i,\ \ i=1,\ \ldots,\ r\).

We consider the following muli-objective linear program (MOLP):

A solution \(x\in S\) is known as efficient for MOLP problem, if there does not exist another solution \(y\in S\) such that \(Cy\ge Cx\) and \(Cy\ne Cx\). The image of an efficient solution in the criteria space is called a non-dominated solution or Pareto optimal solution. Let \(S_E\subset S\) be the set of all efficient vertices of MOLP problem. The number of solutions in \(S_E\) being generally very large, deciding to find all of them becomes a very heavy task and requires an exponential computing time. In addition, the choice of the best solution can be very difficult according to the decision maker’s preference with a long efficient solutions list. Therefore, it ought to be more computationally practical to generate the preferred efficient solution that maximizes a decision maker’s utility, corresponding to the following mathematical programming problem to solve in this work:

where \(f:{\mathfrak {R}}^n \rightarrow {\mathfrak {R}}\) is a continuous linear function over S and \(f(x)=\sum _{j=1}^{n}{f_j x_j}\) .

f is not necessarily a combination of the MOLP problem’s criteria and can be any linear function. The resolution of the program (P) is based on the relaxed linear program (PR) defined by:

Applying the simplex method, let \(x_l^*\) be a basic feasible solution of (PR) obtained at a step \(l,\ l\ge 0\), of the solving procedure dedicated to the program (P). Let’s note:

-

1.

\(B_{l}\) and \(N_{l}\) : the sets of indices of basic variables and non-basic variables respectively of \(x_l^*\),

-

2.

\({{\hat{c}}}_j^i\) and \({{\hat{f}}}_j\) : the reduced costs associated with MOLP problem’s criteria (1), as well as those associated to f in program (2) after the pivoting process,

-

3.

\(H_l\): the set of indices that indicate possible improving directions for the criteria \(c^i,\ i=1,\ldots ,r\), to search efficient extreme points in a structured search tree.

$$\begin{aligned} H_l=\left( H_l^1\cap H_l^3\right) \cup \left( H_l^2\cap H_l^4\right) \end{aligned}$$(4)where: \(H_l^1=\left\{ j\in N_l|\exists i\in \left\{ 1,\ldots ,r\right\} ;\ {{\hat{c}}}_j^i>0\right\} ,\ \ H_l^2=\left\{ j\in N_l|{{\hat{c}}}_j^i=0,\ \ \forall i\in \left\{ 1,\ldots ,r\right\} \right\} \),

\(H_l^3=\left\{ j\in N_l|{{\hat{f}}}_j\le 0\right\} \) and \(H_l^4=\left\{ j\in N_l|{{\hat{f}}}_j<0\right\} \).

-

4.

\((T)_l\) : the following linear program :

$$\begin{aligned} (T)_l\left\{ \begin{array}{ll} Max\ w =\sum _{j=1}^{r}e_j\\ Cx-e=Cx^*_l &{}\\ Ax\le b&{}\\ x\ge 0,\ e_j\ge 0,\ j=1\ldots ,r&{}\\ e=(e_j)_{j=1,\ldots ,r}&{} \end{array} \right. \end{aligned}$$(5)to test the efficiency of \(x_l^*\) like described in Ecker and Kouada (1975). We shall derive below from the program \({(T)}_l\) a simple procedure, called the BCO-efficiency test, so that we can test whether a basic feasible solution is efficient or not.

In the following, we present the principle of the method.

3 Method description

The main subject of our work is to propose an exact method for solving the global optimization program (P). To do this, we focus on the optimization of the function f over the feasible set corresponding to the vertices of S, the relaxed domain of \(S_E\), by solving the linear program (PR) using simplex method, without having to revisit those already visited. Indeed, the simplex table is increased by the r rows of the matrix of criteria C, \(Z_i\left( x\right) =c^{i}x\) for \(i=1,\ldots ,\ r\), to be reevaluated and updated simultaneously with the function f.

Thereby, to solve program (P), our algorithm is developed to rank the basic feasible solutions of the (PR) program. The algorithm is structured in a search tree in which at each node l, \(l \ge 0\), a basic feasible solution \(x_l^*\) of program (PR) is generated. If this \(k-\)th best (\(k\ge 0\)) basic feasible solution of (PR) is the first one to satisfy the efficiency test along the branch ending at node l, then, the corresponding node is fathomed and the best value encountered \(f^*\) of f is updated as well as the optimal solution \(x^*\) which is replaced by \(x_l^*\).

Else, the set \(H_l\) is determined to create as many child nodes as there are elements in \(H_l\) and each of them will corresponds to another vertex of the domain S, obtained from \(x_l^*\) by pivoting with respect to a corresponding direction \(j\in H_l\). This process is repeated until all the created nodes are fathomed.

A slave program is launched to test the efficiency of each visited feasible basic solution.

A node l of the search tree is fathomed if one of the three conditions is satisfied:

-

1.

The corresponding feasible basic solution \(x_l^*\) is efficient or already visited,

-

2.

The set \(H_l\) is empty,

-

3.

\(f\left( x_l^*\right) <f^*\), where \(f^*\) is the best value of f previously encountered.

The algorithm is summarized in the following steps:

3.1 Algorithm of the proposed method

Inputs : A, b, C and f.

Outputs: \(x^*\): optimal solution of (P) and \(f^*=f(x^*)\).

Step 0: (Initial step)

\(l:= 0\); (search tree root); \(f^*=-\infty \); \(VN:=\emptyset \); (set of generated vertices of S).

0.1. Solve (PR) at node 0. If it is not feasible, then terminate, (P) is unfeasible.

0.2. Otherwise, let \(x_0^*\) be an obtained optimal solution for (PR), \(VN:=\left\{ x_0^*\ \right\} \).

0.2.1 If the solution \(x_0^*\) is efficient, \(x^*:= x_0^*\) and \(f^*:=f(x_0^*)\). Terminate.

0.2.2. Else:

- Determine the sets \(N_0\) and \(H_0\).

- For each \(j\in H_0\), a node \(l,l=1,\ldots ,\left| H_0\right| \), will be created in the search tree.

- Go to Step 1.

Step 1: (General step)

As long as there are nodes in the search tree not fathomed, do:

1.1. Choose a node l from the search tree regarding depth first strategy. Suppose that the non-basic variable of index j, \(j\in H_{k}\), is considered at this node, where node k denotes the parent of the node l, \(l\ge k\).

1.2. Consider the simplex tableau at node k and pivot according to the non-basic variable of index \(j\in H_{k}\).

1.3. Let \(x_l^*\) be the obtained basic feasible solution of program (PR):

1.3.1. If \(x_l^*\in VN\), the node l is fathomed.

1.3.2. Else, \(VN:=VN\cup \left\{ x_l^*\ \right\} \) .

1.3.2.1. If \(f^*\ge f(x_l^*)\), then the node l is fathomed. Go to Step 1.

1.3.2.2. Otherwise, applied the efficiency test for the solution \(x_l^*\) :

1.3.2.2.1. If it is efficient, then \(x^*:= x_l^*\) and \(f^*:=f(x^*)\). The node l is fathomed, go to Step 1.

1.3.2.2.2. Else, determine the sets \(N_l\) and \( H_l\).

1.4. For each \(j\in H_l\), a node p, \(p=q+1,\ldots ,q+|H_l|\), will be created in the search tree, where q is the number of nodes already created in the search tree.

1.5. Go to Step 1.

In what follows, we highlight the main theoretical results which ensure the convergence of our algorithm.

4 Theoretical results

In this section, the justifications of the steps described in the above algorithm are established. Let \(x_l^*\) be the basic feasible solution of program (PR) obtained at node l of the search tree.

Theorem 1

Let x, \(x\ne x_l^*\), an efficient feasible basic solution of which \(x_l^*\) is the parent in the search tree. Then x is obtained according to a pivot column \(j\in H_l^1\cup H_l^2\).

Proof

Suppose for any coordinate \(x_j\) of x, \(j\in N_l\), we have : \(j\notin H_l^1\cup H_l^2\). Then \(j\notin H_l^1\) and \(j\notin H_l^2\) hence, for all j in \(N_l\), \({{\hat{c}}}_j^i \le 0\), \(\forall i\in \left\{ 1,\ldots ,r\right\} \) with at least one strict inequality. Then we have: \(\forall i\in \left\{ 1,\ldots ,r\right\} \), \(Z^i\left( x\right) =Z^i(x_l^*)+\sum _{j\in N_l}{{{\hat{c}}}_j^ix_j} \Rightarrow \), \(\forall i\in \left\{ 1,\ldots ,r\right\} \), \(Z^i(x)< Z^i(x^*_l)\) because \(\sum _{j\in N_l}{{{\hat{c}}}_j^ix_j}< 0\).

Thus, the vector Z(x) is dominated by the vector \(Z(x^*_l)\): which means that x is not efficient. \(\square \)

Remark 1

As \(H_l=\left( H_l^1\cap H_l^3\right) \cup \left( H_l^2\cap H_l^4\right) \), then \(H_l\subseteq (H_l^1\cup H_l^2)\). According to theorem 1, only the moves in the directions \(j\in H_l\) can ensure to find efficient solutions of the MOLP problem.

Theorem 2

Let x, \(x\ne x_l^*\), an optimal solution for program (P) of which \(x_l^*\) is the parent solution in the search tree. Then, x is obtained according to a pivot column \(j\in H_l\).

Proof

Suppose that for any coordinate \(x_j\) of x, \(j\in N_l\), \(j\notin H_l\). Then \(j\notin \left( H_l^1\cap H_l^3\right) \) and \(j\notin \left( H_l^2\cap H_l^4\right) \).

So we have the following cases:

1st case:

As \(j\notin {(H}_l^3\cup H_l^4)\), then \({{\hat{f}}}_j>0\) and therefore, the solution x is not optimal.

2nd case:

We obtain a contradiction in this case, because \(j\notin H_l^3\) therefore \({{\hat{f}}}_j<0\) and \(j\in H_l^4\) thus \({{\hat{f}}}_j>0\).

3rd case:

This corresponds to \({{\hat{f}}}_j=0\) and \({{\hat{c}}}_j^i=0\) for all \(i\in \left\{ 1,\ldots ,r\right\} \). Therefore the solution x is alternative to the solution \(x_l^*\) relative to the function f and at the same time to the criteria \(Z^i\,i=1,\ldots ,r\). Thus it has the same status as \(x_l^*\) which is not efficient, otherwise we would have probed the node l.

4th case:

Since \(j\notin (H_l^1\cup H_l^2)\), then the solution x is not efficient according to theorem 1. Thus, theorem 2 is proved by contraposition. \(\square \)

Proposition 1

The vertices of set S generated at the nodes of a branch of the search tree, are all different.

Proof

Remind that \(H_l=\left( H_l^1\cap H_l^3\right) \cup \left( H_l^2\cap H_l^4\right) \) with \(H_l^3=\left\{ j\in N_l\ {{\hat{f}}}_j\le 0\right\} \) and \(H_l^4=\left\{ j\in N_l\ {{\hat{f}}}_j<0\right\} \). Thus, along a branch of the search tree, when one pivots according to the simplex method from \(x_l^*\) towards another vertex x according to a pivot column \(j\in H_l\), i.e. \(j\in H_l^3\) or \(j\in H_l^4\), the function f does not increase. In addition, the instruction 1.3.1. of the general step of the algorithm prohibits processing of a vertex already visited. \(\square \)

Lemma 1

If at a node l of the search tree, we have \(f^*\ge f(x_l^*)\) then, there is no solution \(x\in S\), when we move in the directions of \(H_l\), such that \(f(x)>f^*\).

Proof

From \(x_l^*\), if we move in the directions of \(H_l\), the function f decreases. Thus, \(f(x)\le f(x_l^*)\) for any solution x obtained by moving in the directions of \(H_l\). \(\square \)

Theorem 3

The program \({(T)}_l\) defined by Eq. 5 is equivalent to the following program:

Proof

Recall that program \({(T)}_l\) is :

where \(1_{r\times r}=(1,1,\ldots ,1)\). Its standard form is then written as follows:

Using the current basis \(B_l\) at the basic feasible solution \(x_l^*, C=[C_{B_l},C_{N_l}]\), we can write:

where the Eqs. (10), (11) and (12) are obtained as follows:

\((10) =\ B_l^{-1}.(7)\); \((11) = C_{B_l}.B_l^{-1}(7) - (8)\) since \({C_{B_l}.B}_l^{-1}.b_{m\times 1}=Cx_l^*\) and \((12) = 1_{1\times r}.[C_{B_l}.B_l^{-1}.(7) - (8)] + (9)\).

The corresponding linear program is the follows:

Hence, the obtained program provide a feasible simplex tableau for program \({(T)}_l\) with the basic feasible solution \(\left( x,\ e\right) =\left( x_l^*,\ 0\right) \) when \(y=0\). \(\square \)

In what follows, we propose another particular version of the efficiency-test which exploits the data of the simplex tableau in \(x_l^*\). First of all, solving program \((O)_0\) is a check for efficiency of an optimal solution \(x_0^*\) for the program (PR). To prove the efficiency of the solution \(x_0^*\) at step 0.2.1 of the algorithm, we prove the following theorem:

Theorem 4

The program \({(R)}_l\) defined above and the following program \((O)_l\) have the same admissible regions:

where \(Z\left( x\right) =\left( Z_i(x)\right) _{i=\bar{1,r}}\)

Proof

As described in the principle of the proposed method, the criteria matrix C evolves in the same way according to the pivoting process in the simplex tableau when solving the program (PR). Therefore, the initial program to solve is as follows:

Its standard form is given by:

At any node l of the search tree, by considering the basis \(B_l\) associated with the basic feasible solution \(x_l^*\), we obtain the following linear system :

where the Eqs. (16), (17) and (18) are obtained as follows:

\((16) =\ B_l^{-1}.(13)\); \((17) =C_{B_l}.(16) - (14)\) and \((18) = 1_{1\times m}(16) - (15)\).

Thus, we obtain the standard form of the following linear program:

Note that the programs \({(P)}_l\) and \({(O)}_l\) have the same feasible solutions set and \(g\left( x\right) =-f\left( x\right) \). Thus, we aim to find an optimal solution of the function \(g=-f\) over the corresponding feasible solution set. Furthermore, at node l, \(Z\left( x_l^*\right) =C_{B_l}B_l^{-1}b\) and to test the efficiency of \(x_l^*\), we have to search a solution x in the domain S, such that \(Z(x)\ge C_{B_l}B_l^{-1}b\). Hence, the solution x satisfies the inequality \(Z(x){-C}_{B_l}B_l^{-1}b\ge 0\) and consequently, \(C_{B_l}B_l^{-1}Ax+C_{B_l}B_l^{-1}y\le Cx\) is obtained from the Eq. (17).

Thus, we conclude that the feasible solutions set of the program \({(O)}_l\) is the same as that of the program \({(R)}_l\) defined in Theorem 3. \(\square \)

Theorem 5

\(x_0^*\) is efficient if and only if \(x_0^*\) is the unique optimal solution for program \((O)_0\).

Proof

\(\left. \Leftarrow \right| \) Recall that the admissible domain of \((O)_l\) is not other than \(D(x_l^*)=\lbrace x\in S |Cx\ge Cx_l^* \rbrace \) being the optimal solution of the program (PR) (step 0.1 of the algorithm), it maximizes the function f over the domain S, and therefore over the domain \(D(x_0^*)\). On the other hand, \(x_0^*\) being the unique optimal solution of the program \((O)_0\), it maximizes \((-f(x))\) over \(D(x_0^*)\). So, \(x_0^*\) minimizes f(x) over \(D(x_0^*)\). Thus, both the maximum and the minimum of the function f over the domain \(D(x_0^*)\) are achieved at \(x_0^*\), it is therefore the unique feasible solution in the domain \(D(x_0^*)\) so that \(Cx=Cx_0^*\). For this purpose, the optimal objective value of the program \((T)_0\) is 0 and therefore, \(x_0^*\) is efficient.

\(\left. \Rightarrow \right| \) Proof by contradiction. Suppose that \(x_0^*\) is not an optimal solution of \((O)_0\), then there exists a solution y in \(D(x_0^*)\), y different from \(x_0^*\), such that \(-f(x_0^*)<-f(y)\). Hence, there exists a criterion i in \(\lbrace 1,\cdots ,r \rbrace \), such that \(c^iy-c^ix_0^*=e_i\), with \(e_i= f(x_0^*) - f (y)\), \(e_i>0\) since \(f(x_0^*)> f(y)\). Then, (y, e) is a feasible solution for program \((T)_0\), \(e=(e_j)\), \(j=1,\cdots ,r\), with at least one criterion i such that \(e_i= f (x_0^*) - f (y)\) and \(\sum _{j=1}^{r}{e_j>0}\). Hence, solution \(x_0^*\) is not efficient.

Suppose again that \(x_0^*\) is an optimal solution of \((O)_0\), but not unique. Then there exists a solution y in \(D(x_0^*)\), y different from \(x_0^*\), such that \(f(x_0^*)=f (y)\). Hence, \(x_0^*\) is not the unique optimal solution for program (PR) and y is a basic feasible solution in S. This is a situation where the function f is proportional to both a criterion and a constraint of the admissible region S. There is then a criterion i such that \(c^iy=c^ix_0^*\), and a criterion j, \(j\ne i\), such that \(c^jy>c^jx_0^*\). By setting \({e_j=c}^jy-c^jx_0^*\), \(e_j>0\) and \(\sum _{k=1}^{r}{e_k>0}\), which proves that \(x_0^*\) is not efficient. \(\square \)

We note \((-O)_l\) the program \((O)_l\) with objective function f to maximize instead of g. Hence, programs \((-O)_l\) and \((O)_l\) have the same admissible region \(D(x^*_l)\). Moreover, to ensure the validity of the BCO-efficiency test, program \((-O)_l\) must also be solved taking into account that \(x^*_l\), \(l \ge 1\), is not the optimal solution of the program (PR).

Corollary 1

\(x^*_l\), \(l\ge 1\) is efficient if and only if \(x^*_l\) is the unique optimal solution for both programs \((O)_l\) and \((-O)_l\) .

Proof

If \(x^*_l\) is not the same optimal solution for the two programs \((O)_l\) and \((-O)_l\) , or \(x^*_l\) is not the unique optimal solution for one of the two programs, then the domain \(D(x^*_l)\) admits a feasible solution y other than \(x^*_l\). Hence, solution \(x^*_l\) is not efficient (see Proof of Theorem 5). Conversely, if \(x^*_l\) is the unique optimal solution for each of the two programs, this means that the domain \(D(x^*_l)\) is reduced to the only solution \(x^*_l\), which means that \(x^*_l\) is efficient. \(\square \)

Remark 2

In fact, as soon as one of the two programs \((O)_l\) or \((-O)_l\) encounters a solution u different from \(x^*l\), the computation stops indicating that \(x^*l\) is not efficient. Moreover, if \(x^*l\) is not efficient, no solution obtained from (O)l or \((-O)_l\) can be used because it is not necessarily a basic feasible solution of the admissible region S.

4.1 The BCO-efficiency test algorithm

1. Let \(l=0\) and \(x^*_0\) the optimal solution for program (PR), solve the \((O)_0\) program.

- If \(x^*_0\) is the optimal solution, then \(x^*_0\) is efficient (Theorem 5).

- Else

- If \(x^*_0\) is not optimal for program (PR), then \(x^*_0\) is not efficient,

- Else (\(x^*_0\) is not unique), then \(x^*_0\) is not efficient.

2. For \(l\ge 1\), let \(x^*_l\) be the obtained basic feasible solution for program (PR), solve the \((O)_l\) program,

2.1. If \(x^*_l\) is not an optimal solution, then \(x^*_l\) is not efficient,

2.2. If \(x^*_l\) is not the unique optimal solution, then \(x^*_l\) is not efficient,

2.3. If \(x^*_l\) is the unique optimal solution, then solve the \((-O)_l\) program,

2.3.1. If \(x^*_l\) is not an optimal solution, then \(x^*_l\) is not efficient,

2.3.2. If \(x^*_l\) is not the unique optimal solution, then \(x^*_l\) is not efficient,

2.3.3. If \(x^*_l\) is the unique optimal solution, then \(x^*_l\) is efficient (Corollary 1).

Theorem 6

The algorithm terminates in a finite number of iterations and returns an optimal solution of the program (P).

Proof

The set S of feasible solutions being compact, it contains a finite number of vertices. According to proposition 1 , the algorithm ends in a finite number of iterations. The convergence of the algorithm towards an optimal solution of the program (P) is ensured by lemma 1, corollary 1 and theorems 1, 2 and 5. \(\square \)

5 Illustrative example

Let us consider the following problem of optimizing a linear function over an efficient set defined as:

where \(S_E\) is the efficient vertex set of the following MOLP problem:

The relaxed linear program (PR) of (P) is then written as follows:

Initial step: \(l= 0\); \(f^*=-\infty \); After solving the program (PR), the optimal solution thus obtained is \(x_0^*=\left( 0,0,2\right) \) as shown in the following simplex tableau:

From Table 1\(VN:=\lbrace x_0^*\rbrace \) and the BCO-efficiency test is applied to \(x_0^*\) (see Tables 2 and 3). To do this, f(x) is replaced by \(g(x)=-f(x)\) in the last tableau, the criteria \(Z_1\) and \(Z_2\) are added as constraints and the obtained tableau will be optimized:

The obtained optimal solution is \(u=(8,0,0)\), \(u\ne \ x_0^*\), then \(x_0^*\) is not efficient. \(f^*=-8\). The sets \(N_0=\lbrace 1,2,9\rbrace \) and \(H_0=\lbrace 1,2,9\rbrace \) correspond to \(x_0^*\).

Step 1

The separation starts with the creation of the node 1 corresponding to the first direction of \(H_0\), \(j=1\). By pivoting in this direction, we find the optimal solution \(x_1^*=(8,0,2)\) given by this tableau (Table 4):

\(VN:=VN\cup \lbrace x_1^*\rbrace \). The BCO-efficiency test shows that the solution \(x_1^*\) is not efficient. \(N_1=\lbrace 2,4,9\rbrace \) and \(H_1=\lbrace 9 \rbrace \). Using deep first strategy, we create node 2, corresponding to the single direction of \(H_1\), \(j=9\). By pivoting in this direction, the solution \(x_2^*=(8,0,0)\) given in Table 5 is obtained:

\(VN=VN\cup {x_2^*}\). \(x_2^*\) is efficient by applying the BCO-efficiency test. Therefore \(x^*:=x_2^*\) and \(f^*=- 8\). This current node is fathomed. From node 1, we create node 3 corresponding to the second direction of \(H_0\); \(j=2\). By pivoting in this direction, the solution \(x_3^*=(0,7,2)\) is obtained in Table 6:

\(VN=VN\cup \lbrace x_3^*\rbrace \). \(x_3^*\) is not efficient by applying the BCO-efficiency test. \(N_3=\lbrace 1,8,9\rbrace \) and \(H_3=\lbrace 1,8,9\rbrace \), then we create a new node 4 corresponding to the direction of \(H_3\); \(j=1\) and we execute a pivoting in this direction to obtain the solution \(x_4^*=(3,7,2)\) in Table 7:

\(VN=VN\cup \lbrace x_4^*\rbrace \). By applying the BCO-efficiency test, we prove that solution \(x_4^*\) is efficient. Hence, \(x^*:=x_4^*\) and \(f^*=1\). Node 4 is fathomed. We create a new node 5 corresponding to the second direction of \(H_3\); \(j=8\), and we obtain the solution \(x_5^*=(0,0,2)\), in Table 8:

Since this solution is already visited, then \(VN:=VN\) and this node is fathomed. Node 6 corresponding to the third direction of \(H_3\); \(j=9\) is created and the new solution x\(_6^*=(0,7,0)\) is generated in Table 9:

\(VN=VN\cup \lbrace x_6^*\rbrace \). This node in fathomed since \(f\left( x_6^*\right) <f^*\). Node 7 corresponding to the final direction of \(H_0\); \(j=9\) is then created and the solution \(x_7^*=(0,0,0)\) is obtained in Table 10:

\(VN=VN\cup \lbrace x_7^*\rbrace \) but \(f\left( x_7^*\right) <f^*\). This node is fathomed.

The algorithm terminates since all created nodes are fathomed and the optimal solution for (P) is \(x^*=\left( 3,7,2\right) \) and \(f^*=1\).

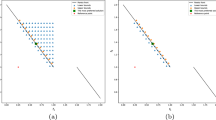

To summarize the proposed method throughout this example, we present a search tree representing states of the nodes during the process in Fig. 1.

6 Computational results and comparative study

The experimental results obtained during the implementation of our algorithm are summarized in this section. We carried out the numerical implementation using MATLAB R2015 software, on an Intel R Core\({}^{\mathrm{TM}}\) i7-5500U, 2.40Ghz and 8GB Ram processor computer. All the procedures of the BCO-method are programmed and none of the optimization packages are used. The algorithm is compared with the Primal method described in reference Liu and Ehrgott (2018) on instances being on the site: https://doi.org/10.17635/lancaster/researchdata/224. For each instance, r is the number of criteria, n is the number of variables and m is the number of constraints. Three instances are given for the same triplet (r, n, m), all used data are real numbers given with \(10^{-16}\) accuracy and distributed over intervals \(\left[ a,b\right] \), with minimum value \(a<0\) and maximum value \(b>0\). The constraints matrix values A belongs to the interval \(\left[ -1, 0\right] \), those of the right-hand side vector b vary according to the size of the instance, the larger it is and the more b increases. For example for instance (4, 100, 100), b belongs to \(\left[ -27,-20\right] \). The criteria coefficients vary in \(\left[ -1,1\right] \). The results of the experimental compilation are given in the following Table 1. We reported the percentage of negative coefficients on average, the intervals of coefficients for the linear function f represented by the max and min values and the mean of CPU time for each method.

Obviously, the computation time increases rapidly as the size of the instances increase. The largest size of the instances tested is 6 objectives, 500 variables and 500 constraints. Even though the authors of the Primal method described in Liu and Ehrgott (2018) praised it because described in the criteria space, it is supposed to support big instances better than in decision space, we observe that the CPU time increases strongly depending on the number of criteria. In this case, the number of criteria has a negative impact on the CPU time since it makes a huge jump of less than one second for less than 5 criteria, at one hour and more for the instances with five and six criteria. Given that the BCO-method solves the problem directly in the decision space, it seems to be influenced just by the described instances particularity unlike what we might expect. Regarding the obtained results comparison, we can see that the CPU time of the Primal method Liu and Ehrgott (2018) exceeds the CPUBCO-method on only six (06) instances of the instances presented in Table 11. However, the BCO-method is better on the remaining instances.

We can see from Fig. 2 that the linear trend curve (blue dashed line) of CPU BCO-method is almost horizontal when we except the instance (4,500,500) which represents a singular point with negative coefficients proportion equal to 51.33%. However, the linear trend curve (red dashed line) for the CPU Primal rapidly grows with the criteria number.

Tacking into account that the Primal method Liu and Ehrgott (2018) is better than several state of the art algorithms from the literature Benson (2011), Fülöp and Muu (2000), Thoai (2000), like observed by the authors, we can confirm the competitive statute to our proposed method.

7 Conclusion

In this study, we have proposed a branch and bound based method to search for an efficient extreme point which optimizes a linear function over the set of efficient extreme points of a convex polyhedron. The separation principle is based on the improving directions of the criteria at each feasible extreme point. Moreover, several tests are identified to fathom nodes whose corresponding domains not contain efficient or optimal extreme points, and thus make it possible to prune entire branches from the search tree. The adapted BCO-efficiency test at a feasible extreme point greatly simplified the computation and, therefore, contributed to accelerating the convergence of the method. The comparative study carried out shows that the BCO-method is very competitive and that the considered number of criteria does not constitute a handicap, unlike many methods cited in the literature. On the other hand, the proposed method can be generalized to other specific functions considered in the program (P), because of its manoeuvrability.

References

Benson HP (1991) An all-linear programming relaxation algorithm for optimizing over the efficient set. J Global Opt 1(1):83–104

Benson HP (1993) A bisection-extreme point search algorithm for optimizing over the efficient set in the linear dependence case. J Global Optim 3(1):95–111

Benson HP (2011) An outcome space algorithm for optimization over the weakly efficient set of a multiple objective nonlinear programming problem. J Global Optim 52(3):553–574

Boland N, Charkhgard H, Savelsbergh M (2017) A new method for optimizing a linear function over the efficient set of a multiobjective integer program. Eur J Oper Res 260(3):904–919

Bolintineanu S (1993) Minimization of a quasi-concave function over an efficient set. Math Program 61(1–3):89–110

Ecker JG, Kouada IA (1975) Finding efficient points for linear multiple objective programs. Math Program 8(1):375–377

Ecker JG, Song JH (1994) Optimizing a linear function over an efficient set. J Optim Theory Appl 83(3):541–563

Fülöp J, Muu LD (2000) Branch-and-bound variant of an outcome-based algorithm for optimizing over the efficient set of a bicriteria linear programming problem. J Optim Theory Appl 105(1):37–54

Hamel AH, Löhne A, Rudloff B (2013) Benson type algorithms for linear vector optimization and applications. J Global Optim 59(4):811–836

Isermann H (1974) Technical note–proper efficiency and the linear vector maximum problem. Oper Res 22(1):189–191

Jorge JM (2009) An algorithm for optimizing a linear function over an integer efficient set. Eur J Oper Res 195(1):98–103

Liu Z, Ehrgott M (2018) Primal and dual algorithms for optimization over the efficient set. Optimization 67(10):1661–1686

Löhne A, Rudloff B, Ulus F (2014) Primal and dual approximation algorithms for convex vector optimization problems. J Global Optim 60(4):713–736

Philip J (1972) Algorithms for the vector maximization problem. Math Program 2(1):207–229

Piercy CA, Steuer RE (2019) Reducing wall-clock time for the computation of all efficient extreme points in multiple objective linear programming. Eur J Oper Res 277(2):653–666

Thoai NV (2000) Conical algorithm in global optimization for optimizing over efficient sets. J Global Optim 18(4):321–336

Yamamoto Y (2002) Optimization over the efficient set: overview. J Global Optim 22:285–317

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Belkhiri, H., Chergui, M.EA. & Ouaïl, F.Z. Optimizing a linear function over an efficient set. Oper Res Int J 22, 3183–3201 (2022). https://doi.org/10.1007/s12351-021-00664-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12351-021-00664-z