Abstract

This paper deals with a bi-objective hybrid flow shop scheduling problem minimizing the maximum completion time (makespan) and total tardiness, in which we consider re-entrant lines, setup times and position-dependent learning effects. The solution method based on genetic algorithm is proposed to solve the problem approximately, which belongs to non-deterministic polynomial-time (NP)-hard class. The solution procedure is categorized through methods where various solutions are found and then, the decision-makers select the most adequate (a posteriori approach). Taguchi method is applied to set the parameters of proposed algorithm. To demonstrate the validation of proposed algorithm, the full enumeration algorithm is used to find the Pareto-optimal front for special small problems. To show the efficiency and effectiveness of the proposed algorithm in comparison with other efficient algorithm in the literature (namely MLPGA) on our problem, the experiments were conducted on three dimensions of problems: small, medium and large. Computational results are expressed in terms of standard multi-objective metrics. The results show that the proposed algorithm is able to obtain more diversified and competitive Pareto sets than the MLPGA.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Scheduling is an important tool for manufacturing and engineering, where it can have a major impact on the productivity of a process. Production scheduling is management and allocation of resources, events and processes to create goods and services. Production scheduling aims to maximize the efficiency of the operation and to minimize the production time and costs, by telling a production facility when to make, with which staff, and on which equipment.

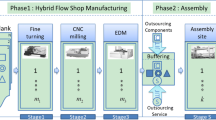

In the literature, the notion of hybrid flow shop (HFS) scheduling problem has emerged in 1970s (Arthanary and Ramaswamy 1971). The HFS can be found in various types of industries. The most representative one is in electronics, such as semiconductor wafer fabrication, printed circuit board (PCB) manufacturing, thin film transistor-liquid crystal display (TFT-LCD) manufacturing, etc. In addition, various traditional industries, such as food, oil, pharmaceutical, tobacco, textile, chemical, steel, paper, and metallurgical industry, have various HFSs (or can be modeled as a HFS). Ruiz and Rodríguez (2010) described the HFS problem in its “standard” form. This paper investigates an HFS problem in standard form with additional features, including: setup times, re-entrant flows, and position-dependent learning effects.

The literature of HFS is filled with different applied industrial assumptions to better represent the real nature of scheduling environments. One of the most prevailing and extremely favored assumptions by many researchers in real scheduling configurations is the integration of sequence-dependent setup times into different shop scheduling environments. The importance and applications of scheduling models with explicit considerations of setup times (costs) have been discussed in several studies (i.e. Chang et al. 2003; Andrés et al. 2005). One of the underlying assumptions in this paper is to consider setup times in scheduling configurations. The setup times considered in this problem are classified into two types: (1) sequence-independent setup time (SIST); and (2) sequence-dependent setup times (SDST). In the former, setup depends only on the job to be processed. In the latter, setup depends on both the job to be processed and the immediate preceding job. Allahverdi et al. (1999, 2008) provided a comprehensive review of the literature on scheduling problems involving setup times (costs). The HFS problem with setup times has been investigated in several studies (Jungwattanakit et al. 2008, 2009; Behnamian et al. 2009; Davoudpour and Ashrafi 2009; Naderi et al. 2009a, b; Rashidi et al. 2010; Karimi et al. 2010; Mousavi et al. 2011a, b, 2012a; b; Hakimzadeh Abyaneh and Zandieh 2012; Pargar and Zandieh 2012; Behnamian and Zandieh 2013; Fadaei and Zandieh 2013; Jolai et al. 2013; Attar et al. 2014; Wang and Liu 2014). For example, Jungwattanakit et al. (2008, 2009) considered the flexible flow shop with unrelated parallel machines and sequence/machine dependent setup times, release date and due date constraints. Davoudpour and Ashrafi (2009) focused on the SDST HFS problems with identical parallel machines, and release date. Rashidi et al. (2010) investigated the HFS problems with unrelated parallel machines, SDST and blocking processor. Mousavi et al. (2011a, b, 2012a, b) studied the problem of scheduling n independent jobs in HFS environment with SDST. Fadaei and Zandieh (2013) investigated group scheduling in the problem of HFS scheduling within the area of sequence-dependent family setup times. Wang and Liu (2014) also found an integrated bi-objective optimization problem with non-resumable jobs for production scheduling and preventive maintenance in a two-stage HFS. They considered the SDST and preventive maintenance on the first stage machine.

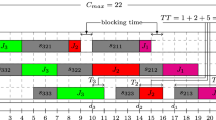

HFSs can be classified into two types according to product flows: (1) those with unidirectional flows; and (2) those with re-entrant flows. The unidirectional flows imply that each job starts at the first stage and finishes at the last stage. On the other hand, in the reentrant flows, each job may visit a serial stage twice or more. Therefore, the re-entrant HFS (RHFS) means that there are n jobs to be processed on g stages and every job must be processed on stages in the order of stage 1, stage 2,…, stage g for l times (l is the number of repetition of jobs on the sequence of stages). Lin and Lee (2011) provided a comprehensive review of the literature on scheduling problems involving re-entrant flows. Also, Dugardin et al. (2010), Cho et al. (2011) and Ying et al. (2014) considered the multi-objective HFS problem with re-entrant flow.

The last underlying assumption in this paper is the consideration of learning effects in scheduling configurations. In classical scheduling, job processing and setup times are assumed to be constant from the first job to be processed until the last job to be completed. Despite the effect of learning in a production environment, the processing and setup times of a given job are shorter if it is scheduled later in the production sequence. The learning effects considered in scheduling environments are classified into two types: (1) position-based learning; and (2) the sum of processing time. Regarding the last underlying assumption in this paper, processing and setup times of a given job depend on its position in the sequence arrived to each stage which means the learning effects are position-based learning. Biskup (2008) provided a comprehensive review of the literature on scheduling problems involving learning effects. The HFS problem with learning effects has been investigated in several studies. For example, Pargar and Zandieh (2012) and Behnamian and Zandieh (2013) investigated the HFS problems with SDST and position-dependent learning effects.

The present study investigates scheduling problem with learning considerations, using the learning curve introduced by Biskup (1999). The learning curve assumed by Biskup (1999) reflects decrease in production time as a function of number of repetitions. As shown in Biskup (1999), we assume that the processing time of job j at stage t of layer l if scheduled in position r, is given by Eq. (1).

where \( - 1 \le a_{jl}^{t} \le 0 \) is a constant learning index, given as the logarithm to the base 2 of the learning rate (LR). In this paper, we assume that all machines and jobs in each stage and layer have the same learning rate \( (a_{jl}^{t} = a) \). Similarly, the setup time of job i to job j if scheduled in position r at stage t of layer l, is given by Eq. (2).

\( P_{jl}^{t} \) actual processing time for job j at stage t of layer l, \( S_{ijl}^{t} \) actual setup time between job j and job i at stage t of layer l while job j is scheduled immediately after job i, \( P_{jrl}^{t} \) the processing time for job j in position r at stage t of layer l, \( S_{ijrl}^{t} \) the setup time of job i to job j, scheduled in position r at stage t of layer l.

Nowadays, because of the intensely competitive markets and limited resources, many manufacturers have come to appreciate the importance of scheduling by attempting to reduce their production expenses and the final costs of their products. The scheduling objective in such industries may vary. Three types of decision-making goals are prevalent in scheduling: (1) efficient utilization of resources; (2) rapid response to demands, or minimizing the work-in-progress; and (3) close conformance to prescribed deadlines (Pinedo 2008). According to just-in-time (JIT) concept, production managers should consider more than one criterion in scheduling problems. Therefore, simultaneous minimization of two conflicted objective functions that are makespan and total tardiness. In fact, minimizing the makespan causes internal efficiency and maintains the work-in-process inventory at a low level. Minimizing the total tardiness causes external efficiency and reduces the penalties incurred for late jobs.

According to the best of our knowledge, bi-objective RHFS with SDST and learning effect problem have never been investigated in the scheduling problems. Therefore, the aim of this paper is to develop a solution method for the proposed problem that search a set of non-dominated solutions.

It has been shown in several studies that some of scheduling problems are belong to NP-hard class; for example, a single machine SDST scheduling problem is equivalent to a traveling salesman problem (TSP) and is NP-hard (Pinedo 1995). The HFS problem is significantly more complex than the regular single machine scheduling. On the other hand, Gupta (1988) showed the flow shop with multiple processors (FSMP) problem with only two stages to be NP-hard even when one of the two stages contains a single machine. The FSMP problem can be considered as a specific case of the HFS. Also, the re-entrant permutation flowshop scheduling problem for minimizing makespan has already been proven to be NP-hard (Wang et al. 1997). According to the research presented, we can easily conclude that our proposed problem is also an NP-hard problem which is not easy to solve by a traditional mathematical model. The exact methods are unable to render feasible solutions even for small instances of this problem in a reasonable computational time. Therefore, this inability justifies the need for employment of a variety of heuristics and meta-heuristics to solve these problems to optimality or near optimality. In this paper, we are going to use a meta-heuristic algorithm to solve scheduling problem. The proposed meta-heuristic and the details of it are explained in Sect. 2. The rest of the paper is organized as follows: Sect. 3 presents the computational results and numerical comparisons. Finally, Sect. 4 is devoted to conclusion and future works.

2 The proposed algorithm

In this paper, a genetic algorithm is proposed for solving bi-objective optimization problem. The main reason for using this approach is that the problem under study is NP-hard and genetic algorithm approach has been demonstrated cost- effective for solving this kind of problem.

The term “genetic algorithm”, almost universally abbreviated to GA, was first used by Holland (1975). Different approaches of GA appear in the literature of multi-objective optimization problem (MOP), some of them are Vector Evaluated Genetic Algorithm (VEGA) (Schaffer 1985), Multi-Objective Genetic Algorithm (MOGA) (Fonseca and Fleming 1993), Niched Pareto Genetic Algorithm (NPGA) (Horn et al. 1994), Non-dominated Sorting Genetic Algorithm (NSGA & NSGA-II) (Srinivas and Deb 1995; Deb et al. 2002), Pareto Stratum-Niche Cubicle Genetic Algorithm (PS-NC GA) (Hyun et al. 1998), Multiple Objective Genetic Local Search (MOGLS) (Ishibuchi and Murata 1998), Strength Pareto Evolutionary Algorithm (SPEA & SPEA-II) (Zitzler and Thiele 1999; Zitzler et al. 2001), Pareto Archive Evolution Strategy (PAES) (Knowles and Corne 1999), Elitist Non-dominated Sorting Genetic Algorithm (ENGA) (Bagchi 1999), The Pareto Envelope based Selection Algorithm (PESA & PESA-II) (Corne et al. 2000, 2001), and so on.

Although many studies have provided valuable developments and applications for GA, improvements still can be made in designing GA for MOPs. In this paper, the proposed algorithm is somewhat similar to the NSGA-II. The NSGA-II algorithm has been developed by Deb et al. (2002) as a fast and efficient multi-objective genetic algorithm. The aim of this algorithm is to find a set of non-dominated solutions based on the Pareto dominance relationship. The main concept of the NSGA-II is the creation of an initial population, the selection of parents, the creation of children and the finding of non-dominated solutions. In the following, we extensively describe the structure and details of the proposed algorithm.

2.1 The structure of the proposed algorithm

The steps of proposed algorithm are shown below.

Step 1: Encoding

The application of an algorithm requires the representation of a solution. We apply a scheme using integers that shows the number of job. In this kind of representation, a single row array of the size equals to the number of the jobs to be scheduled. The value of the first element of the array shows which job is scheduled first. The second value shows a job which is scheduled secondly and so on. For example, consider a problem with five jobs (n = 5), two stages (g = 2), two machines at stage one (m 1 = 2), and three machines at stage two (m 2 = 3). Suppose a solution is generated according to integer coding as [3 1 4 2 5]. It is known that the machines in parallel are identical in capability and processing rate. Therefore, job 3 is process on machine 1 and job 1 is process on machine 2 at stage one. Then, the job 4 is assigned to the machine of which the completion time is smaller than other machines. This process continues like this, until all jobs are assigned to the first stage machines. For determining the order of jobs in the second stage, first in first out (FIFO) rule is used.

Step 2: Initialization

-

(i)

Initialize the number of initial population (N pop ), Probability of crossover (P c ), Probability of mutation (P m ), Number of generation (N g ).

-

(ii)

Initialize an initial population (P0) randomly.

Step 3: Non-dominated sorting and ranking procedure

First, the objective values of all chromosomes in the current population (Pi) is evaluated, then the population of solutions is classified into successive non-dominated fronts (primary rank), F1 is the set of non-dominated solutions in Pi which is the first frontier and F2 is the set of non-dominated solutions in Pi\F1 which is the second frontier and so on, Ft is the set of non-dominated solutions in the last frontier, and finally, the crowding distance of each solution with respect to every other solution on the same front (secondary rank) will computed.

Step 4: Dividing the population

The current population is divided into 3 categories: (1) Set ‘A’ contains the non-dominated solutions in F1, and the size of A (|F1|) is N A. (2) Set ‘B’ contains the non-dominated solutions in F1, F2, …, and Fq, and the size of B (|F1| + |F2| + ⋯ + |Fq|) is N B. (3) Set ‘C’ contains the non-dominated solutions in Fq+1, Fq+2, …, Ft, and the size of C (|Fq+1| + |Fq+2| + ⋯ + |Ft|) is N C. If t fronts are obtained by primary rank, then q is half of fronts. It is known that the number of fronts (t) is different in each generation.

Step 5: Selection scheme

The binary-tournament selection is employed at the selection operation to reproduce the next generation. According to this selection scheme, between two solutions with differing non-domination ranks (primary rank), we prefer the solution with the lower rank. Otherwise, if both solutions belong to the same front, then the solution located in a lesser crowded region is preferred (secondary rank).

Step 6: Neighborhood operator

First, a neighborhood relation on the search space is defined, and then k-neighborhood solutions (k = 2) of each solution in set ‘A’ are generated. Inversion, swap, shift and k-exchange moves are applied in this paper. In each generation, neighborhood operator is selected randomly among introduced operators.

Step 7: Crossover operator

Select (N pop − (k + 1) × N A ) × P c pairs of parents from set ‘B’ based on the binary-tournament selection, and perform crossover on the parents. Order crossover (OX) is applied in this paper.

Step 8: Mutation operator

Select (N pop − (k + 1) × N A ) × P m parents from set ‘C’ based on the binary-tournament selection, and perform mutation on the parents. Inversion, swap, and k-exchange moves are applied in this paper. In each generation, mutation operator is selected randomly among introduced operators.

Step 9: Replacement

Set A (include F1) with solutions obtained from the previous steps (include steps 6, 7 and 8) are combined as new population (Pi+1).

Step 10: Stopping Rule

If there is not improvement in F1 obtained of two successive generations (Pi and Pi+1), then U count increases by one. If counter U equals to the pre-specified number (N g) then stop, otherwise go to step 3. Data envelopment analysis (DEA) is used to design the stopping criterion.

Graphically, the proposed algorithm so-called NSGA-DEA can be presented as in Fig. 1. In the following subsections, we describe the details of the computation of crowding distance and stopping criterion.

2.2 Crowding distance

The introduced method by Pasupathy et al. (2007) is used for the computation of crowding distance for a bi-objective problem. The crowding distance of the ith solution in its front, called \( cd_{i} \) is computed as given in Eq. (3). In order to compute the \( cd_{i} \) first the “normalized Euclidean-distance based on crowding distance” (NEDCD) between solutions i and j, called D ij , is computed as given in Eq. (4).

In Eq. (3), f i denotes the front on which solution i lies, and \( \left[ {F_{{f_{i} }} } \right] \) denotes the set of solutions that lie on the same front as that of solution i. In Eq. (4), \( Z_{bi} \) and \( Z_{bj} \) denote the values with respect to the bth objective function for solutions i and j, respectively. The solution with the largest value of crowding distance is assigned with the highest secondary rank (i.e., rank 1) in comparison to other solutions on the same front.

2.3 Proposed stop criterion

Suppose F 1 and \( F_{1}^{{\prime }} \) are the first frontier of two successive generations. Two cases can be expected of comparison between F 1 and \( F_{1}^{{\prime }} \) which are as follows: (1) \( F_{1}^{{\prime }} \) is better than F 1, (2) \( F_{1}^{{\prime }} \) and F 1 are the same. In order to compare two sets of F 1 and \( F_{1}^{{\prime }} \) quantitatively, the introduced method by Ruiz-Torres and Lopez (2004) is applied. They used free disposal hull (FDH) formulation that is a particular case of DEA. In this subsection, only the stages of method are briefly described. It is noted that each scheduling solution is a decision making unit (DMU), and inputs are the makespan and the total tardiness.

-

1.

The scheduling solutions (or DMUs) of F 1 and \( F_{1}^{{\prime }} \) are combined in one single data set to generate an ‘FDH problem’ set.

-

2.

In this stage, each DMU is comparable to the other DMUs on a one-to-one basis. DMUs which dominate the others but do not dominate themselves are efficient DMUs that are collected in a set so-called T. Efficient DMUs (or scheduling solutions) always have a degree of efficiency equal to one. Now, we want to calculate the degree of efficiency for DMU G, which is inefficient.

F 1and \( F_{1}^{{\prime }} \) | Set of DMUs provided by two successive generations |

\( \left| {F_{1} } \right| \) and \( \left| {F_{1}^{{\prime }} } \right| \) | Number of DMUs in sets F 1 and \( F_{1}^{{\prime }} \) |

\( C_{max}^{s} \) | Makespan corresponding to DMU S |

\( \bar{T}^{s} \) | Total tardiness corresponding to DMU S |

\( w_{i} \) | Relative weight of criteria i, with \( \sum\nolimits_{i = 1}^{2} {w_{i} = 1} \) |

T | Set of all efficient DMUs |

\( Q^{G} \) | Set of DMUs in T that make schedule G inefficient |

\( E^{G} \) | Degree of efficiency of DMU G |

The degree of efficiency of any DMU (efficient or inefficient) is computed as given in Eq. (5).

-

3.

The ‘efficiency’ of F 1 and \( F_{1}^{{\prime }} \) are calculated as given in Eq. (6) (called A 1 and \( A_{1}^{{\prime }} \) respectively).

$$ A_{1} = \frac{{\sum\nolimits_{{G \in F_{1} }} {E^{G} } }}{{\left| {F_{1} } \right|}}\quad {\text{and}}\quad A_{1}^{{\prime }} = \frac{{\sum\nolimits_{{G \in F_{1}^{{\prime }} }} {E^{G} } }}{{\left| {F_{1}^{{\prime }} } \right|}} $$(6)

Two cases can be expected of comparison between A 1 and \( A_{1}^{{\prime }} \) which are as follows: (1) \( A_{1}^{{\prime }} \) value is bigger than A 1. (2) \( A_{1}^{{\prime }} \) value is equal to A 1. In the first case, improvement has been observed in the process. In the second, this result shows that no improvement has been reported. Therefore, U count increases by one. The stopping criterion for algorithm is terminated while counter U is equal to N g (pre-specified number).

2.4 Discussion on the structure of algorithm

In this subsection, ideas used in the structure of algorithm are discussed in the form of questions and answers. The most important questions can be expressed as follows: (1) Why is a new operator so-called neighborhood operator added to search the neighborhood first frontier (F 1) in each generation? (2) Why are several neighborhood and mutation operators introduced to select among alternatives randomly in each generation? (3) Why is crossover operator on solutions in high level fronts applied? (4) Why is mutation operator on solutions in low level fronts applied? (5) Why is stop condition as stated in Sect. 2.3 considered?

Now we will respond to questions. In response to the first question, solutions belonging to the best non-dominant set F 1 are of best solutions in the population and must be emphasized more than any other solutions in the population. In fact, set F 1 is the closest front to the Pareto-optimal front. We make slight changes in solutions of set F 1 with the hope to find better results (closest to the Pareto-optimal set). These slight changes are done through neighborhood operator. This corresponds to the concept of the exploitation.

In response to the second question, the main reason for using this approach is that the algorithm is able to guide the search to another promising region through different types of moves. Therefore, the performance of algorithm with cited characteristic can be better. This corresponds to the concept of the diversification.

Concerning the third question, genetic algorithms have a recombination operation so-called crossover which is probably closest to the natural paragon. The crossover operator is used to mimic biological recombination between two single chromosome organisms. Therefore, offspring has the information from two parents. According to nature, competition among individuals for scanty resources results in the fittest individuals dominating over the weaker ones. Therefore, the population is modified with the natural law. We are going to modify population more quickly. In order to increase the selection probability of fittest individuals, crossover operator is applied to the solutions in high level fronts.

Regarding the fourth question, the main concept of the mutation is the changing of the structure of a gene, resulting in a variant form which may be transmitted to subsequent generations, caused by the alteration of single base units in DNA, or the deletion, insertion, or rearrangement of larger sections of genes or chromosomes. In the nature, mutation is usually employed to eliminate defects occurred in individuals. The defects here are considered to be as equivalent solutions in low level fronts. Therefore, mutation operator is applied on the solutions in low-level fronts.

In response to the last question, suppose our aim is to solve a single-objective minimization problem with GA. The objective value of the best solution in each generation will improve/remain constant when the algorithm is running. The relative difference (Δ) between the best solutions in two successive generations is simply calculated. Two cases can occur as follows: (1) Δ = 0, (2) Δ < 0. In the first, the best solutions remain constant in two successive generations (improvement has not been observed). In the second case, the algorithm has found a better solution for the next generation (improvement has been observed). The Δ value can be used to design two stop conditions as follows: (1) algorithm has reached a plateau such that successive iterations no longer produce better results (i.e. Δ = 0 in successive iterations for the pre-specified number), (2) in non-consecutive U-generation, improvement has not been observed (i.e. Δ = 0 in non-consecutive U iterations for the pre-specified number). Now, our aim is to solve a MOP with GA. In a MOP, non-dominated solutions in each generation will improve/remain constant as the algorithm is running. However, there is no straightforward manner such as Δ. Therefore, the terminating condition is proposed based on DEA to express the improvement or lack of improvement in the non-dominated solutions of two successive generations. The proposed approach is similar to Δ. The proposed stop condition is a new approach in the design of the stop condition.

3 Computational experiments

This section contains the method of generating data sets, performance criteria, the parameter setting with Taguchi method, validation of the proposed algorithm, running data sets by proposed algorithm and algorithm in the literature, and then expresses the results of the comparisons.

3.1 Test problems

The numerical data should be created to test the performance of the algorithm. Data required for a problem consists of number of jobs, number of stages, number of machines per stage, number of re-entrants, processing times, setup times, due dates, and learning indices. Note that, the largest number of machines in a stage must be less than the number of jobs \( \left( {n > max\left\{ {m^{t} ;t \in g} \right\}} \right) \). Designing range of levels of each factor is illustrated in Table 1. The number of machines, processing times and setup times (20–40 % of the mean of the processing time) are randomly generated from a discrete uniform distribution as described in Table 1. This table is divided into four categories: (1) special small problems, (2) small problems, (3) medium problems, and (4) large problems. Special small problems are designed to assess the validity of the proposed algorithm. The term “specific” is given to these problems because they cover small problems of single machine, parallel machine, flow shop, and two-stage HFS. To demonstrate the effectiveness of proposed NSGA-DEA compared to algorithm in the literature, the experiments were conducted on three sizes of problems: small, medium and large. Due to levels of factor, the twenty-four problems are produced for the special small problems. The eighteen problems of multiplication levels of factors (n × g × L) are produced for the small, medium, and large problems. Learning indices −0.152 and −0.514 were selected with respect to the learning curve of 90 and 70 %, respectively. Also, we consider RHFS with SDST scheduling problems with no learning effect \( (a_{jl}^{t} = 0) \). In general, all problems are tested with regard to the level of learning indices. To generate due dates of all n jobs, we proposed the following steps:

Compute total processing time of each job on all g stages.

Compute average setup time for all possible subsequent jobs and sum it for all g stages.

Determine a due date for each job.

where random is a random number from a uniform distribution over range (0, 1).

3.2 Performance criteria

In the literature of multi-objective optimization problems, several performance criteria have been presented to evaluate the quality of the obtained non-dominated front and to assess the performance of multi-objective optimizers. Each criterion has its advantages and disadvantages. There is no agreement as to which criteria should be used. In general, the quality of the approximated sets must be measured by qualitative and quantitative criteria. The following performance criteria are applied to compare the results of multi-objective algorithms quantitatively.

A. Qualitative metrics

-

1.

Number of Pareto solutions (NPS): This performance criterion presents the number of non-dominated solutions obtained from each algorithm. The larger the number, the better the performance of the algorithm will be.

-

2.

Quality metric 1 (QM1): To calculate the value of this criterion, first, the net non-dominated solutions (NDS) are generated by a set of all non-dominated solutions obtained from all algorithms (whose members should be also non-dominated in relation to one another) and then the percentage of non-dominated solutions of each algorithm in NDS to NPS is calculated. The larger the number, the better the performance of the algorithm will be.

-

3.

Quality metric 2 (QM2): To calculate the value of this criterion, the percentage of non-dominated solutions of each algorithm in NDS to the number of NDS is calculated. The third metric signifies the percentage of the solutions in the net non-dominated Pareto set obtained by a certain algorithm. The larger the number, the better the performance of the algorithm will be.

B. Quantitative metrics

-

1.

Mean ideal distance (MID): This measure presents the closeness between Pareto solution and ideal point (0, 0) which can be shown as Eq. (10).

$$ MID = \frac{{\sum\nolimits_{i = 1}^{n} {c_{i} } }}{n} $$(10)where n is the number of non-dominated set and \( c_{i} = \sqrt {z_{1i}^{2} + z_{2i}^{2} } \). The lower value of MID, the better of solutions quality we have.

-

2.

Spread of non-dominated solution (SNS): This metric indicates the measure of diversity of Pareto-solutions, and more diversity of solutions is desirable. The value of SNS is measured as Eq. (11).

$$ SNS = \sqrt {\frac{{\sum\nolimits_{i = 1}^{n} {(MID - c_{i} )^{2} } }}{n - 1}} $$(11) -

3.

Triangle method (TM): This measure presents the area under linear regression curve which can be calculated as Eq. (12) (Mousavi et al. 2012).

$$ z_{1} = b + a \times z_{2} $$(12)where a and b are calculated as Eqs. (13) and (14):

$$ a = \bar{z}_{1} - b \times \bar{z}_{2} $$(13)$$ b = \frac{{\sum\nolimits_{i = 1}^{n} {(z_{2i} - \bar{z}_{2} )(z_{1i} - \bar{z}_{1} )} }}{{\sum\nolimits_{i = 1}^{n} {(z_{2i} - \bar{z}_{2} )} }} $$(14)

Therefore, a smaller value of this metric for an algorithm proves to be better.

(B-4) Free Disposal Hull approach (FDH): In order to calculate this metric, all non-dominated solutions obtained by the algorithms are combined and the efficiency of these points is obtained by the FDH approach, which was proposed by Ruiz-Torres and Lopez (2004).

3.3 Parameter setting

Algorithm parameter values vary depending on different problem types when applying algorithm to achieve efficient solutions, so appropriate value selection has significant impact on the efficiency of algorithm. In this paper, the existing parameters in algorithm are determined by Taguchi method. Taguchi’s method applies the quality loss function to evaluate product quality along with an orthogonal array to reduce the number of experiments.

In parameter setting, you first choose control factors and their levels and choose an orthogonal array appropriate for these control factors. The control factors comprise the inner array. In this paper, the parameters and their levels are shown in Table 2. The square matrix with 4 parameters in 3 levels used in the Taguchi method is L9, which is given in Table 3.

The experiment is carried out by running several times each combination of control factor settings. The response data from each run in the outer array are usually aligned in a row, next to the factors settings for that run of the control factors in the inner array. Then, measured values are transferred in the form of S/N value.

Now, let us confirm the research characteristic-anticipating minimizing the makespan and total tardiness of jobs; namely, the smaller the cost values the better. Therefore, S/N ratio must be calculated using lower-is-better formula as Eq. (15).

The problem is that the response data from each run in MOPs are usually as set (non-dominated solutions). Since the Taguchi function should be assessed by one criterion, then a function which has shown the combination of all indexes is defined. The introduced utility function by Jolai et al. (2013) is used as follows (Eq. 16):

This utility function is comprised of three qualitative and four quantitative criteria. The weight of 1 is allocated to qualitative criteria and the weight of 2 is allocated to quantitative criteria. This function has the role of a variable Y in Eq. (15).

Table 4 shows the data which is transformed into S/N value. The rate of S/N is shown in Fig. 2. As it can be seen in Table 4, factor C (probability of crossover) is prominent in the execution process of determining NSGA-DEA. In addition, the influence of four factors on minimizing makespan and the total tardiness in NSGA-DEA is, in the order of: probability of crossover, number of initial population, number of generation, and probability of mutation. As it can be seen in Fig. 2, the optimal factors are: A (3), B (3), C (2), and D (1).

3.4 The validation of proposed algorithm

To demonstrate the validation of proposed algorithm, the experiments were conducted on special small problems. It is impossible to find the Pareto-optimal solutions using the enumeration algorithm because of extreme complexity of the problems. The full enumeration algorithm is used to find the Pareto-optimal solutions for only several special small problems. The total of possible states of problems with n = 5, 7 and 10 is the 120, 5040 and 3,628,800 solutions to explore the full enumeration algorithm, respectively. Details special small size problems and the results are shown in Table 5. In there, the first column indicates the abbreviation codes of each test problem, the second and third columns describe the details problems (number of jobs × number of stages × number of re-entrants, and number of machines per stage), the fourth describes learning indices, the fifth and sixth columns describe number of Pareto-optimal solutions and the number of non-dominated solutions obtained from algorithm respectively, the seventh describes the number of non-dominated solutions obtained from algorithm in Pareto-optimal solutions, and the last column describes the average CPU time (second unit). Based on the results of given in Table 5, the following observations can be made.

Due to the fifth and seventh columns, the proposed algorithm is able to find all solutions in Pareto-optimal in 62.5 % cases (the Pareto-optimal solutions exactly). In other cases, more solutions were found, except for one or two. This result indicates that the proposed algorithm has a very high reliability (excellent performance) to solve the problems. Due to the sixth and seventh columns, the all solutions obtained of proposed algorithm are efficient in 83.3 % cases, because they exist at Pareto-optimal. Due to the last column, the proposed algorithm is able to solve the problems in the length of the interval from 26.4266 to 41 s. The full enumeration algorithm has spent the interval from 0.0780 to 2713.5281 s. This result indicates that the proposed algorithm has a shorter range in solving problem.

3.5 Numerical result

The performance of the proposed NSGA-DEA is compared with a MLPGA algorithm proposed by Cho et al. (2011). It is noticeable that all of algorithms are implemented in MATLAB 2009a, and run on a PC with 2.30 GHz Intel Core and 4 GB of RAM memory. To show the efficiency and effectiveness of the proposed algorithm in comparison with a MLPGA, computational experiments were done on various test problems (i.e. small, medium and large). For each algorithm, we run each test problem ten times and four qualitative metrics and four quantitative metrics are computed for each of them. The comparisons are performed on the basis of the sets of non-dominant solutions obtained by each algorithm.

Tables 6, 7 and 8 represent the values of the four qualitative metrics for various problems. The value of NPS, NDS, QM1 and QM2 are shown in these tables. Now, the first line of Table 6 is explained. The results shown in NPS column only evaluate the number of non-dominated solutions found by each algorithm, not their quality. However, the quality of solutions can be measured by NDS, QM1 and QM2, as summarized in Tables. The values of NPS of NSGA-DEA and MLPGA are equal to 9 and 8 respectively. Consequently, the NSGA-DEA front has more non-dominated solutions than MLPGA front, corresponding the value of NPS. The values of NDS of NSGA-DEA and MLPGA are equal to 9 and 4 respectively. It shows number of the net non-dominated solutions (NDS) of each algorithm. The larger value of NDS, the better of solution quality we have. The values of QM1 of NSGA-DEA and MLPGA are equal to 100 and 50 % respectively. The value of QM1 of NSGA-DEA is calculated by divided NDS = 9 with the NPS = 9. It means that all solutions obtained of the NSGA-DEA are efficient. Consequently, there are none solutions in NSGA-DEA front that are dominated by at least one solution from MLPGA front. The value of QM1 of MLPGA is calculated by divided NDS = 4 with the NPS = 8. It means that four solutions of the MLPGA are efficient. Consequently, four solutions on the MLPGA front are dominated by at least one solution on the NSGA-DEA front. The values of QM2 of NSGA-DEA and MLPGA are equal to 100 and 44.4 % respectively. The value of QM2 of NSGA-DEA is calculated by divided “NDS of algorithm = 9” with the “NDS = 9”. It means that the all solutions in NSGA-DEA front have been included in net non-dominated solutions. The value of QM2 of MLPGA is calculated by divided “NDS of algorithm = 4” with the “NDS = 9”. It means that the four solutions in MLPGA front have been included in net non-dominated solutions. It is notable that, net non-dominated solutions may be both algorithms. For example, 4 solutions of net non-dominated solutions (NDS) are both algorithms.

As it can be observed, four metrics (NDS, NPS, QM1 and QM2) have better values for NSGA-DEA in comparing with other algorithm in more cases. The averages and summarized results of these experiments are shown in Table 12. As it can be seen in average row in Table 12, the number of non-dominated solutions of MLPGA is less than that of NSGA-DEA, 79 % of Pareto members of NSGA-DEA are efficient. Also, 87.1 % of the net Pareto set members made by members of the Pareto set which belongs to NSGA-DEA, while the solutions of MLPGA only cover 8.6 % of the members of the Pareto set. As shown in Table 12, the proposed algorithm is more effective than the MLPGA algorithm in terms NDS, NPS, QM1 and QM2 for small, medium and large-sized problems.

Tables 9, 10 and 11 present the values of four quantitative metrics on all test problems for each algorithm. The averages and summarized results of these experiments are shown in Table 12. As it can be seen in average row in Table 12, algorithm NSGA-DEA can obtain better performances on metrics MID and TM than other algorithm. The values of MID and TM of NSGA-DEA are less than that of other algorithm in 75.3 and 49.4 % cases respectively. It indicates that the obtained solutions of NSGA-DEA converge towards the ideal point (0, 0). It is closer to the ideal point. Consequently, the Pareto set of NSGA-DEA algorithm is better than the other one. The values of SNS of NSGA-DEA are also more than that of other algorithm in 51.2 % cases. It indicates that the non-dominated solutions of NSGA-DEA are compacted in greater space than that of other algorithm. Similarly, the values of FDH of NSGA-DEA are also more than that of other algorithm in 81.5 % cases. It indicates that the non-dominated solutions of NSGA-DEA dominate some members of the Pareto set which belongs to MLPGA. These results show that the proposed algorithm works effectively in all size of problems. Therefore, the average values of all the metrics in Table 12 show that the NSGA-DEA is able to obtain more diversified and competitive Pareto sets than the MLPGA.

Graphical representation is provided to demonstrate output results of the NSGA-DEA and MLPGA. Figures 3, 4 and 5 represent the non-dominated solutions of a single run by proposed algorithm and MLPGA for problems of small, medium and large size respectively. It is obvious that the solutions obtained in Pareto-front by the NSGA-DEA algorithm are more desirable. Also, we plot the initial set and the final set of solutions obtained by each algorithm for an instance in Fig. 6. The initial set is the same for all algorithms. This figure shows that NSGA-DEA generates more efficient solutions. Therefore, these figures illustrate and confirm the conclusion derived from the numerical results based on the performance criteria.

4 Conclusion and further researches

This paper considers the problem of scheduling n independent jobs in a hybrid flow shop with the objectives of minimizing both the makespan and the total tardiness. There are considerable numbers of practical assumptions in real world scheduling settings. To address the realistic assumptions of the proposed problem, three additional traits were added to the scheduling problem. These include re-entrant lines, setup times and position-dependent learning effects. A genetic algorithm is proposed for solving this bi-objective optimization problem. The performance of the proposed algorithm is compared with a genetic algorithm proposed in the literature on a set of test problems. Several computational tests are used to evaluate the effectiveness and efficiency of the proposed algorithm in finding good quality schedules. Computational results show that the proposed algorithm provides better results than genetic algorithm in the literature by qualitative and quantitative criteria. For future study, the scheduling with other system characteristics, which have not been included in this paper, such as release date, limited intermediate buffers, machine availability constraints, and unrelated parallel machines at each stage can be a practical extension, although the problem would be very difficult to solve.

References

Allahverdi A, Gupta JND, Aldowaisan T (1999) A review of scheduling research involving setup considerations. OMEGA Int J Manag Sci 27(2):219–239

Allahverdi A, Ng CT, Cheng TCE, Kovalyov MY (2008) A survey of scheduling problems with setup times or costs. Eur J Oper Res 187(3):985–1032

Andrés C, Albarracín JM, Tormo G, Vicens E, García-Sabater JP (2005) Group technology in a hybrid flowshop environment: a case study. Eur J Oper Res 167:272–281

Arthanary TS, Ramaswamy KG (1971) An extension of two machine sequencing problems. Oper Res 8(1):10–22

Attar SF, Mohammadi M, Tavakkoli-Moghaddam R, Yaghoubi S (2014) Solving a new multi-objective hybrid flexible flowshop problem with limited waiting times and machine-sequence-dependent set-up time constraints. Int J Comput Integr Manuf 27(5):450–469

Bagchi TP (1999) Multi-objective scheduling by genetic algorithms. Kluwer, Boston

Behnamian J, Zandieh M (2013) Earliness and tardiness minimizing on a realistic hybrid flowshop scheduling with learning effect by advanced metaheuristic. Arab J Sci Eng 38:1229–1242

Behnamian J, Fatemi Ghomi SMT, Zandieh M (2009) A multi-phase covering Pareto-optimal front method to multi-objective scheduling in a realistic hybrid flowshop using a hybrid metaheuristic. Expert Syst Appl 36:11057–11069

Biskup DA (1999) Single machine scheduling with learning considerations. Eur J Oper Res 115(1):173–178

Biskup DA (2008) A state-of-the-art review on scheduling with learning effects. Eur J Oper Res 188:315–329

Chang PC, Hsieh JC, Wang YW (2003) Genetic algorithms applied in BOPP film scheduling problems: minimizing total absolute deviation and setup times. Appl Soft Comput 3:139–148

Cho H-M, Bae S-J, Kim J, Jeong I-J (2011) Bi-objective scheduling for reentrant hybrid flow shop using Pareto genetic algorithm. Comput Ind Eng 61:529–541

Corne DW, Knowles JD, Oates MJ (2000) The Pareto envelope based selection algorithm for multi-objective optimization. In: Proceedings of the parallel problem solving from nature VI conference, Springer Lecture Notes in Computer Science, vol 1917/2000, pp 839–848

Corne DW, Jerram NR, Knowles JD, Oates MJ (2001) PESA-II: region-based selection in evolutionary multiobjective optimization. In: Proceedings of the genetic and evolutionary computation conference, (GECCO-2001). Morgan Kaufmann, San Francisco, CA, pp 283–290

Davoudpour H, Ashrafi M (2009) Solving multi-objective SDST flexible flow shop using GRASP algorithm. Int J Adv Manuf Technol 44(7–8):737–747

Deb K, Pratap A, Agarwal S, Meyarivan T (2002) A fast and elitist multi-objective genetic algorithm: NSGA II. IEEE Trans Evolut Comput 6(2):182–197

Dugardin F, Yalaoui F, Amodeo L (2010) New multi-objective method to solve reentrant hybrid flow shop scheduling problem. Eur J Oper Res 203:22–31

Fadaei M, Zandieh M (2013) Scheduling a bi-objective hybrid flow shop with sequence-dependent family setup times using metaheuristics. Arab J Sci Eng 38:2233–2244

Fonseca CM, Fleming PJ (1993) Genetic algorithms for multi-objective optimization: formulation, discussion and generalization. In: Proceedings of the fifth international conference on genetic algorithms, July 1993. Morgan Kaufmann, San Mateo, pp 416–423

Gupta JND (1988) Two-stage, hybrid flowshop scheduling problem. J Oper Res Soc 39(4):359–364

Hakimzadeh Abyaneh S, Zandieh M (2012) Bi-objective hybrid flow shop scheduling with sequence-dependent setup times and limited buffers. Int J Adv Manuf Technol 58:309–325

Holland JH (1975) Adaptation in natural and artificial systems. University of Michigan, Ann Arbor

Horn J, Nafpliotis N, Goldberg DE (1994) A niched Pareto genetic algorithm for multi-objective optimization. In: Proceedings of the 1st IEEE-ICEC conference, computational computation, 27–29 June 1994, Orlando, FL, vol 1, pp 82–87

Hyun CJ, Kim Y, Kim YK (1998) A genetic algorithm for multiple objective sequencing problems in mixed model assembly lines. Comput Oper Res 25(7–8):675–690

Ishibuchi H, Murata T (1998) A multi-objective genetic local search algorithm and its application to flowshop scheduling. IEEE Trans Syst Manuf Cybern 28(3):392–403

Jolai F, Asefi H, Rabiee M, Ramezani P (2013) Bi-objective simulated annealing approaches for no-wait two-stage flexible flow shop scheduling problem. Sci Iran E 20(3):861–872

Jungwattanakit J, Reodecha M, Chaovalitwongse P, Werner F (2008) Algorithms for flexible flow shop problems with unrelated parallel machines, setup times, and dual criteria. Int J Adv Manuf Technol 37(3–4):354–370

Jungwattanakit J, Reodecha M, Chaovalitwongse P, Werner F (2009) A comparison of scheduling algorithms for flexible flow shop problems with unrelated parallel machines, setup times, and dual criteria. Comput Oper Res 36(2):358–378

Karimi N, Zandieh M, Karamooz HR (2010) Bi-objective group scheduling in hybrid flexible flowshop: a multi-phase approach. Expert Syst Appl 37(6):4024–4032

Knowles JD, Corne DW (1999) The Pareto archived evolution strategy: a new baseline algorithm for multiobjective optimization. In: Proceedings of the 1999 congress on evolutionary computation, 6–9 July, IEEE Service Center, Washington, DC, pp 98–105

Lin D, Lee CKM (2011) A review of the research methodology for the re-entrant scheduling problem. Int J Prod Res 49(8):2221–2242

Mousavi SM, Zandieh M, Amiri M (2011a) An efficient bi-objective heuristic for scheduling of hybrid flow shops. Int J Adv Manuf Technol 54(1–4):287–307

Mousavi SM, Zandieh M, Amiri M (2011b) Comparisons of bi-objective genetic algorithms for the hybrid flowshop scheduling with sequence-dependent setup times. Int J Prod Res 50(10):2570–2591

Mousavi SM, Mousakhani M, Zandieh M (2012a) Bi-objective hybrid flow shop scheduling: a new local search. Int J Adv Manuf Technol 64(5–8):933–950

Mousavi SM, Zandieh M, Yazdani M (2012b) A simulated annealing/local search to minimize the makespan and total tardiness on a hybrid flowshop. Int J Adv Manuf Technol 64(1–4):369–388

Naderi B, Zandieh M, Balagh AKG, Roshanaei V (2009a) An improved simulated annealing for hybrid flowshops with sequence-dependent setup and transportation times to minimize total completion time and total tardiness. Expert Syst Appl 36(6):9625–9633

Naderi B, Zandieh M, Roshanaei V (2009b) Scheduling hybrid flowshops with sequence-dependent setup times to minimize makespan and maximum tardiness. Int J Adv Manuf Technol 41:1186–1198

Pargar F, Zandieh M (2012) Bi-criteria SDST hybrid flow shop scheduling with learning effect of setup times: water flow-like algorithm approach. Int J Prod Res 50(10):2609–2623

Pasupathy T, Rajendran C, Suresh RK (2007) A multi-objective genetic algorithm for scheduling in flowshops to minimize the makespan and total flow time of jobs. Int J Adv Manuf Technol 27(7–8):804–815

Pinedo ML (1995) Scheduling theory, algorithms, and systems. Prentice-Hall, Englewood Cliffs

Pinedo ML (2008) Theory, algorithms, and systems, 3rd edn. Springer Science + Business Media, New York

Rashidi E, Jahandar M, Zandieh M (2010) An improved hybrid multi-objective parallel genetic algorithm for hybrid flow shop scheduling with unrelated parallel machines. Int J Adv Manuf Technol 49:1129–1139

Ruiz R, Vázquez-Rodríguez JA (2010) The hybrid flow shop scheduling problem. Eur J Oper Res 205:1–18

Ruiz-Torres AJ, Lopez FJ (2004) Using the FDH formulation of DEA to evaluate a multi-criteria problem in parallel machine scheduling. Comput Ind Eng 47:107–121

Schaffer JD (1985) Multi-objective optimization with vector evaluated genetic algorithms. In: Grefenstette J (ed) Proceedings of the international conference on genetic algorithms and their applications. Lawrence Erlbaum, Hillsdale, pp 93–100

Srinivas N, Deb K (1995) Multi-objective function optimization using non-dominated sorting genetic algorithms. IEEE Trans Evol Comput 2(3):221–248

Wang S, Liu M (2014) Two-stage hybrid flow shop scheduling with preventive maintenance using multi-objective tabu search method. Int J Prod Res 52(5):1495–1508

Wang MY, Sethi SP, Van de Velde SL (1997) Minimizing makespan in a class of reentrant shops. Oper Res 45(5):702–712

Ying KC, Lin SW, Wan SY (2014) Bi-objective reentrant hybrid flowshop scheduling: an iterated Pareto greedy algorithm. Int J Prod Res. doi:10.1080/00207543.2014.910627

Zitzler E, Thiele L (1999) Multi-objective evolutionary algorithm: a comparative case study and the strength Pareto approach. IEEE Trans Evol Comput 3(4):251–257

Zitzler E, Laumanns M, Thiele L (2001) SPEA2: improving the strength Pareto evolutionary algorithm. Report 103. Computer Engineering and Networks Laboratory (TIK), Zurich

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Mousavi, S.M., Mahdavi, I., Rezaeian, J. et al. An efficient bi-objective algorithm to solve re-entrant hybrid flow shop scheduling with learning effect and setup times. Oper Res Int J 18, 123–158 (2018). https://doi.org/10.1007/s12351-016-0257-6

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12351-016-0257-6