Abstract

In this paper we extend three results about polycycles (also known as graphics) of planar smooth vector fields to planar non-smooth vector fields (also known as piecewise vector fields, or Filippov systems). The polycycles considered here may contain hyperbolic saddles, semi-hyperbolic saddles, saddle-nodes and tangential singularities of any degree. We determine when the polycycle is stable or unstable. Under some conditions we prove that at most one limit cycle bifurcates from the polycycle, and under other conditions we prove that at least one limit cycle bifurcates for each singularity.


1 Introduction and Description of the Results

The field of Dynamical Systems has grown into many branches. One of them is the field of non-smooth vector fields (also known as piecewise vector fields, or Filippov systems), which lies on the common frontier between mathematics, physics and engineering. See [3, 11] for the pioneering works in this area. For applications, see [4, 16, 17] and the references therein. In this paper we are interested in the qualitative theory of non-smooth vector fields, and more precisely in the qualitative theory of polycycles in non-smooth vector fields. A polycycle is a simple closed curve composed of a collection of singularities and regular orbits, inducing a first return map. There are many works in the literature about polycycles in smooth vector fields. Examples include works on their stability [6, 8, 12, 27], on the number of limit cycles that bifurcate from them [9, 10, 14, 21, 28], on the displacement maps [7, 13, 15, 25] and on some bifurcation diagrams [9, 22]. There is also some literature about polycycles in non-smooth vector fields, dealing for example with bifurcation diagrams [1, 23, 24] and the Dulac problem [2].

The goal of this paper is to extend three results about polycycles in smooth vector fields to non-smooth vector fields. To do this we rely, as in the smooth case, mainly on the idea of obtaining global properties of the polycycle from local properties of its singularities. To describe the results briefly, let Z be a non-smooth vector field with a polycycle \(\Gamma ^n\) with n singularities \(p_i\), each of them being either a hyperbolic saddle or a tangential singularity. To each \(p_i\) we associate a positive real number \(r_i\) such that if \(r_i>1\) (resp. \(r_i<1\)), then \(p_i\) locally contracts (resp. repels) the flow. Our first main result deals with the stability of \(\Gamma ^n\). It states that if \(r(\Gamma ^n)=\prod _{i=1}^{n}r_i\) satisfies \(r(\Gamma ^n)>1\) (resp. \(r(\Gamma ^n)<1\)), then the polycycle contracts (resp. repels) the flow. Our second and third main results deal with the number of limit cycles that can bifurcate from \(\Gamma ^n\). More precisely, in our second main result we give sufficient conditions for the cyclicity of \(\Gamma ^n\) to be one, and in our third main result we give sufficient conditions for the cyclicity of \(\Gamma ^n\) to be at least n.

The paper is organized as follows. In Sect. 2 we state the main theorems. In Sect. 3 we present some preliminaries about the transition maps near a hyperbolic saddle, a semi-hyperbolic singularity with a hyperbolic sector, and a tangential singularity. Theorems 1 and 2 are proved in Sect. 4. In Sects. 5 and 6 we develop some tools needed for Theorem 3, which is proved in Sect. 7.

2 Main Results

Let \(h_i:{\mathbb {R}}^2\rightarrow {\mathbb {R}}\), \(i\in \{1,\dots ,N\}\), \(N\geqslant 1\), be \(C^\infty \)-real functions. For these functions, define \(\Sigma _i=h_i^{-1}(\{0\})\). Suppose also that 0 is a regular value of each \(h_i\), i.e. \(\nabla h_i(x)\ne 0\) for every \(x\in \Sigma _i\), \(i\in \{1,\dots ,N\}\). Define \(\Sigma =\cup _{i=1}^{N}\Sigma _i\) and let \(A_1,\dots , A_M\), \(M\geqslant 2\), be the connected components of \({\mathbb {R}}^2\backslash \Sigma \). For each \(j\in \{1,\dots ,M\}\), let \(\overline{A}_j\) be the topological closure of \(A_j\) and let \(X_j\) be a \(C^\infty \)-planar vector field defined on \(\overline{A}_j\).

Definition 1

Given \(\Sigma \), \(A_1,\dots , A_M\) and \(X_1,\dots ,X_M\) as above, the associated planar non-smooth vector field \(Z=(X_1,\dots , X_M;\Sigma )\), with discontinuity \(\Sigma \), is given by \(Z(x,\mu )=X_j(x,\mu )\) if \(x\in A_j\), for some \(j\in \{1,\dots ,M\}\). In this case, we say that the vector fields \(X_1,\dots ,X_M\) are the components of Z and \(\Sigma _1,\dots ,\Sigma _N\) are the components of \(\Sigma \).

From now on, let p denote a point of \(\Sigma \) for which there exists a unique \(i\in \{1,\dots ,N\}\) such that \(p\in \Sigma _i\), and let X be one of the two components of Z defined at p. The Lie derivative of \(h_i\) in the direction of the vector field X at p is defined as

$$\begin{aligned} Xh_i(p)=\left<X(p),\nabla h_i(p)\right>, \end{aligned}$$

where \(\left<,\;\right>\) denotes the standard inner product of \({\mathbb {R}}^2\). Under these conditions, we say that p is a tangential singularity if

$$\begin{aligned} X_ah_i(p)\,X_bh_i(p)=0, \end{aligned}$$

where \(X_a\) and \(X_b\) are the two components of Z defined at p. Let \(x\in \Sigma \). We say that x is a crossing point if there exists a unique \(i\in \{1,\dots ,N\}\) such that \(x\in \Sigma _i\) and \(X_ah_i(x)X_bh_i(x)>0\), where \(X_a\) and \(X_b\) are the two components of Z defined at x.
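These definitions reduce to sign tests on two Lie derivatives. The Python sketch below classifies a point of a single discontinuity curve \(\Sigma =h^{-1}(0)\). It assumes that tangency means \(X_ah(p)\,X_bh(p)=0\), which is the zero-product complement of the crossing condition. The components and \(\nabla h\) are passed as callables. The remaining case (negative product) is labelled simply "other", because it is not used here. All function names are illustrative.

```python
from typing import Callable, Tuple

Vec = Tuple[float, float]
Field = Callable[[Vec], Vec]


def lie_derivative(X: Field, grad_h: Field, p: Vec) -> float:
    """Xh(p) = <X(p), grad h(p)>."""
    vx, vy = X(p)
    gx, gy = grad_h(p)
    return vx * gx + vy * gy


def classify_point(Xa: Field, Xb: Field, grad_h: Field, p: Vec, tol: float = 1e-12) -> str:
    """Classify p in Sigma = h^{-1}(0) by the sign of Xa h(p) * Xb h(p)."""
    prod = lie_derivative(Xa, grad_h, p) * lie_derivative(Xb, grad_h, p)
    if abs(prod) <= tol:
        return "tangential"   # assumed condition: Xa h(p) * Xb h(p) = 0
    if prod > 0:
        return "crossing"     # both components cross Sigma in the same direction
    return "other"            # product < 0: not needed in this section


# Example: Sigma = {y = 0}, h(x, y) = y; Xa = (1, x) is tangent to Sigma at the origin.
grad_h = lambda p: (0.0, 1.0)
Xa = lambda p: (1.0, p[0])
Xb = lambda p: (0.0, 1.0)
print(classify_point(Xa, Xb, grad_h, (0.0, 0.0)))  # tangential
print(classify_point(Xa, Xb, grad_h, (1.0, 0.0)))  # crossing
```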

Definition 2

A graphic of Z is a subset formed by singularities \(p_1,\dots ,p_n,p_{n+1}=p_1\) (not necessarily distinct) and regular orbits \(L_1,\dots , L_n\) such that \(L_i\) is a stable characteristic orbit of \(p_i\) and an unstable characteristic orbit of \(p_{i+1}\) (i.e. \(\omega (L_i)=p_i\) and \(\alpha (L_i)=p_{i+1}\)), oriented in the sense of the flow. A polycycle is a graphic with a first return map. A polycycle \(\Gamma ^n\) is semi-elementary if it satisfies the following conditions.

(a) Each regular orbit \(L_i\) intersects \(\Sigma \) in at most a finite number of points \(\{x_{i,0},x_{i,1},\dots ,x_{i,n(i)}\}\), each \(x_{i,j}\) being a crossing point;

(b) \(\Gamma ^n\) is homeomorphic to \({\mathbb {S}}^1\);

(c) Each singularity \(p_i\) satisfies exactly one of the following conditions:

(i) \(p_i\) is semi-hyperbolic and \(p_i\not \in \Sigma \);

(ii) \(p_i\) is a hyperbolic saddle and \(p_i\not \in \Sigma \);

(iii) \(p_i\) is a tangential singularity.

A polycycle is elementary if it satisfies conditions (a) and (b) and each of its singularities satisfies either (ii) or (iii).

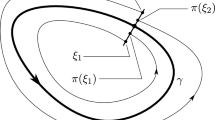

From now on, let \(\Gamma ^n\) denote an elementary or semi-elementary polycycle with n distinct singularities \(p_1,\dots ,p_n\). See Fig. 1.

Observe that \(\Gamma ^n\) divides the plane into two connected components, only one of which is bounded. Let A denote the component in which the first return map is defined. Observe that A can be either the bounded or the unbounded component delimited by \(\Gamma ^n\). See Fig. 2.

Definition 3

Let \(p\in \Sigma _i\) be a tangential singularity and X one of the components of Z defined at p. Let \(X^1h_i(p)=Xh_i(p)\) and \(X^kh_i(p)=\left<X(p),\nabla X^{k-1}h_i(p)\right>\), \(k\geqslant 2\). We say that X has contact of order m with \(\Sigma \) at p, \(m\geqslant 1\), if m is the smallest positive integer such that \(X^mh_i(p)\ne 0\).
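For polynomial data, the iterated Lie derivatives of Definition 3 can be computed exactly. Below is a minimal, hypothetical Python sketch; the dictionary representation of polynomials and all names are ours. It returns the contact order m. For \(\Sigma =\{y=0\}\), the fields \((1,1)\), \((1,x)\) and \((1,x^2)\) have contact orders 1, 2 and 3 at the origin, i.e. transversal, fold-type and cusp-type contact.

```python
from fractions import Fraction
from typing import Dict, Tuple

# A polynomial in (x, y) is stored as {(i, j): c}, meaning the sum of c * x**i * y**j.
Poly = Dict[Tuple[int, int], Fraction]


def add(p: Poly, q: Poly) -> Poly:
    r = dict(p)
    for k, c in q.items():
        r[k] = r.get(k, Fraction(0)) + c
    return {k: c for k, c in r.items() if c != 0}


def mul(p: Poly, q: Poly) -> Poly:
    r: Poly = {}
    for (i1, j1), c1 in p.items():
        for (i2, j2), c2 in q.items():
            k = (i1 + i2, j1 + j2)
            r[k] = r.get(k, Fraction(0)) + c1 * c2
    return {k: c for k, c in r.items() if c != 0}


def d_dx(p: Poly) -> Poly:
    return {(i - 1, j): c * i for (i, j), c in p.items() if i > 0}


def d_dy(p: Poly) -> Poly:
    return {(i, j - 1): c * j for (i, j), c in p.items() if j > 0}


def evaluate(p: Poly, pt) -> Fraction:
    x, y = Fraction(pt[0]), Fraction(pt[1])
    return sum((c * x**i * y**j for (i, j), c in p.items()), Fraction(0))


def lie(P: Poly, Q: Poly, f: Poly) -> Poly:
    """Lie derivative Xf = P * df/dx + Q * df/dy of f along X = (P, Q)."""
    return add(mul(P, d_dx(f)), mul(Q, d_dy(f)))


def contact_order(P: Poly, Q: Poly, h: Poly, pt, max_order: int = 20) -> int:
    """Smallest m >= 1 with X^m h(pt) != 0, as in Definition 3."""
    f = h
    for m in range(1, max_order + 1):
        f = lie(P, Q, f)  # now f = X^m h
        if evaluate(f, pt) != 0:
            return m
    raise ValueError("no nonzero iterated Lie derivative up to max_order")


one = {(0, 0): Fraction(1)}
h = {(0, 1): Fraction(1)}      # h(x, y) = y, so Sigma = {y = 0}
fold = {(1, 0): Fraction(1)}   # second component x
cusp = {(2, 0): Fraction(1)}   # second component x**2
print(contact_order(one, one, h, (0, 0)), contact_order(one, fold, h, (0, 0)),
      contact_order(one, cusp, h, (0, 0)))  # 1 2 3
```

Exact rational arithmetic is used so that the test \(X^mh(p)\ne 0\) is not affected by rounding.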

Let \(p\in \Sigma _i\) be a tangential singularity of \(\Gamma ^n\), and let \(L_s\) and \(L_u\) be the regular orbits of \(\Gamma ^n\) such that \(\omega (L_s)=p\) and \(\alpha (L_u)=p\). Let \(X_a\) and \(X_b\) be the two components of Z defined at p. Let \(A_a\), \(A_b\) be the respective connected components of \({\mathbb {R}}^2\backslash \Sigma \) such that \(X_a\) and \(X_b\) are defined on \(\overline{A_a}\) and \(\overline{A_b}\). Given two parametrizations \(\gamma _s(t)\) and \(\gamma _u(t)\) of \(L_s\) and \(L_u\) such that \(\gamma _s(0)=\gamma _u(0)=p\), let \(A_s\), \(A_u\in \{A_a,A_b\}\) be such that \(A_s\cap \gamma _s([-\varepsilon ,0])\ne \emptyset \) and \(A_u\cap \gamma _u([0,\varepsilon ])\ne \emptyset \) for every sufficiently small \(\varepsilon >0\). Let also \(X_s\), \(X_u\in \{X_a,X_b\}\) denote the components of Z defined on \(A_s\) and \(A_u\). Observe that we may have \(A_s=A_u\) and thus \(X_s=X_u\). See Fig. 3.

Definition 4

Given a tangential singularity p, let \(X_s\) and \(X_u\) be as above. We define the stable and unstable contact orders of p as the contact orders \(n_s\) and \(n_u\) of \(X_s\) and \(X_u\) with \(\Sigma \) at p, respectively. Furthermore, we say that \(X_s\) and \(X_u\) are the stable and unstable components of Z defined at p.

Definition 5

Let \(\Gamma ^n\) be an elementary polycycle with distinct singularities \(p_1,\dots ,p_n\). The hyperbolicity ratio \(r_i>0\) of \(p_i\) is defined as follows.

(a) If \(p_i\) is a tangential singularity, then \(r_i=\frac{n_{i,u}}{n_{i,s}}\), where \(n_{i,s}\) and \(n_{i,u}\) are the stable and unstable contact orders of \(p_i\).

(b) If \(p_i\) is a hyperbolic saddle, then \(r_i=\frac{|\nu _i|}{\lambda _i}\), where \(\nu _i<0<\lambda _i\) are the eigenvalues of \(p_i\).
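As a small computational aid (a sketch, not part of the paper), the ratio of Definition 5 can be evaluated in two ways. At a saddle, it comes from the Jacobian via its trace and determinant, since \(\det <0\) guarantees real eigenvalues of opposite signs. At a tangential singularity, it comes from the two contact orders. The function names are hypothetical.

```python
import math
from fractions import Fraction


def saddle_ratio(jacobian) -> float:
    """r = |nu| / lambda for a hyperbolic saddle with eigenvalues nu < 0 < lambda.

    `jacobian` is the 2x2 matrix [[a, b], [c, d]] of the field at the saddle.
    """
    (a, b), (c, d) = jacobian
    tr, det = a + d, a * d - b * c
    if det >= 0:
        raise ValueError("det < 0 is required for a saddle")
    disc = math.sqrt(tr * tr - 4 * det)  # real, since det < 0
    lam = (tr + disc) / 2   # positive eigenvalue
    nu = (tr - disc) / 2    # negative eigenvalue
    return abs(nu) / lam


def tangential_ratio(n_s: int, n_u: int) -> Fraction:
    """r = n_u / n_s, from the stable and unstable contact orders."""
    return Fraction(n_u, n_s)


print(saddle_ratio([[1.0, 0.0], [0.0, -2.0]]))  # 2.0
print(tangential_ratio(2, 3))                   # 3/2
```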

In the case of smooth vector fields, Cherkas [6] proved the following. Let \(\Gamma \) be a polycycle composed of n hyperbolic saddles \(p_1,\ldots ,p_n\), with hyperbolicity ratios \(r_1,\dots ,r_n\). Then \(\Gamma \) is stable if

$$\begin{aligned} r=\prod _{i=1}^{n}r_i>1, \end{aligned}$$

and unstable if \(r<1\). Our first main theorem extends this classical result to non-smooth vector fields.

Theorem 1

Let \(Z=(X_1,\dots ,X_M;\Sigma )\) be a planar non-smooth vector field with an elementary polycycle \(\Gamma ^n\). Let also

$$\begin{aligned} r(\Gamma ^n)=\prod _{i=1}^{n}r_i. \end{aligned}$$

If \(r(\Gamma ^n)>1\) (resp. \(r(\Gamma ^n)<1\)), then there is a neighborhood \(N_0\) of \(\Gamma ^n\) such that the orbit of Z through any point \(p\in N_0\cap A\) has \(\Gamma ^n\) as its \(\omega \)-limit (resp. \(\alpha \)-limit).
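Theorem 1 only involves the product of the local ratios. The hypothetical helper below evaluates \(r(\Gamma ^n)\) and reports which alternative of the theorem applies. It makes no claim when \(r(\Gamma ^n)=1\), where the theorem gives no conclusion. Exact fractions keep tangential ratios exact; a float ratio from a saddle simply turns the product into a float.

```python
from fractions import Fraction
from typing import Iterable, Union

Ratio = Union[int, float, Fraction]


def polycycle_ratio(ratios: Iterable[Ratio]) -> Ratio:
    """r(Gamma^n) = r_1 * r_2 * ... * r_n."""
    r: Ratio = 1
    for r_i in ratios:
        r *= r_i
    return r


def theorem1_conclusion(ratios: Iterable[Ratio], tol: float = 1e-12) -> str:
    r = polycycle_ratio(ratios)
    if abs(r - 1) <= tol:
        return "inconclusive: r(Gamma^n) = 1"
    return "attracting: omega-limit" if r > 1 else "repelling: alpha-limit"


# Two tangential singularities with (n_s, n_u) = (2, 3) and (3, 2), plus a saddle with r = 1.2.
print(theorem1_conclusion([Fraction(3, 2), Fraction(2, 3), 1.2]))  # attracting: omega-limit
```

In this example the two tangential singularities compensate each other exactly, so the conclusion is decided by the saddle alone.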

Definition 6

Let \(\Gamma ^n\) be a semi-elementary polycycle with n distinct singularities \(p_1,\dots ,p_n\). We say that \(p_i\) is a stable (resp. unstable) singularity of \(\Gamma ^n\) if it satisfies one of the following conditions.

(a) \(p_i\) is a semi-hyperbolic singularity and \(\lambda _i<0\) (resp. \(\lambda _i>0\)), where \(\lambda _i\) is the unique non-zero eigenvalue of \(p_i\);

(b) \(p_i\) is a hyperbolic saddle and \(r_i>1\) (resp. \(r_i<1\)), where \(r_i\) is the hyperbolicity ratio of \(p_i\);

(c) \(p_i\) is a tangential singularity and \(n_{i,s}=1\) (resp. \(n_{i,u}=1\)), where \(n_{i,s}\) and \(n_{i,u}\) are the stable and unstable contact orders of \(p_i\).

Let \(\Gamma ^n\) be a semi-elementary polycycle. We say that the cyclicity of \(\Gamma ^n\) is k if at most k limit cycles can bifurcate from \(\Gamma ^n\) under an arbitrarily small perturbation. In the case of smooth vector fields, Dumortier et al [9] proved that if \(\Gamma \) is a polycycle of a smooth vector field composed only of stable (resp. unstable) singular points, then \(\Gamma \) has cyclicity one. Furthermore, if a small perturbation of \(\Gamma \) has a limit cycle, then it is hyperbolic and stable (resp. unstable). Our second main theorem extends this result to the realm of non-smooth vector fields.

Theorem 2

Let \(Z=(X_1,\dots ,X_M;\Sigma )\) be a planar non-smooth vector field with a semi-elementary polycycle \(\Gamma ^n\). Suppose that each singularity \(p_i\) is a stable (resp. unstable) singularity of \(\Gamma ^n\). If a small perturbation of \(\Gamma ^n\) has a limit cycle, then it is unique, hyperbolic and stable (resp. unstable). In particular, the cyclicity of \(\Gamma ^n\) is one.
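The hypothesis of Theorem 2 is a purely local check, one singularity at a time. The following sketch encodes Definition 6 and reports whether every singularity is stable, or every one is unstable. The data class and its field names are hypothetical. One design choice is ours: when a tangential singularity has \(n_s=n_u=1\), the sketch treats it as undetermined (returns None) instead of picking a side.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Singularity:
    kind: str                # "semi-hyperbolic", "saddle" or "tangential"
    eigenvalue: float = 0.0  # unique non-zero eigenvalue (semi-hyperbolic case)
    ratio: float = 1.0       # hyperbolicity ratio r_i (saddle case)
    n_s: int = 1             # stable contact order (tangential case)
    n_u: int = 1             # unstable contact order (tangential case)


def stability(p: Singularity) -> Optional[str]:
    """'stable', 'unstable' or None, following Definition 6."""
    if p.kind == "semi-hyperbolic":
        if p.eigenvalue != 0:
            return "stable" if p.eigenvalue < 0 else "unstable"
    elif p.kind == "saddle":
        if p.ratio != 1:
            return "stable" if p.ratio > 1 else "unstable"
    elif p.kind == "tangential":
        if p.n_s == 1 and p.n_u != 1:
            return "stable"
        if p.n_u == 1 and p.n_s != 1:
            return "unstable"
    return None  # no condition met (or, for n_s = n_u = 1, treated as undetermined)


def theorem2_hypothesis(singularities: List[Singularity]) -> Optional[str]:
    """Common type if all singularities are stable (or all unstable), else None."""
    labels = {stability(p) for p in singularities}
    return labels.pop() if len(labels) == 1 and None not in labels else None


gamma = [Singularity("saddle", ratio=1.5), Singularity("tangential", n_s=1, n_u=2),
         Singularity("semi-hyperbolic", eigenvalue=-0.3)]
print(theorem2_hypothesis(gamma))  # stable
```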

Let \(\Gamma \) be a polycycle of a smooth vector field composed of n hyperbolic saddles \(p_1,\dots ,p_n\), with hyperbolicity ratios \(r_1,\dots ,r_n\). Let also \(R_i=\prod _{j=1}^{i}r_j\). Han et al [14] proved that if \((R_i-1)(R_{i+1}-1)<0\), \(i\in \{1,\dots ,n-1\}\), then there exists an arbitrarily small \(C^\infty \)-perturbation of \(\Gamma \) with at least n limit cycles. In our third main result, we extend this result to non-smooth vector fields.

Theorem 3

Let \(Z=(X_1,\dots ,X_M;\Sigma )\) be a planar non-smooth vector field with an elementary polycycle \(\Gamma ^n\) and let \(R_i=\prod _{j=1}^{i}r_j\), \(i\in \{1,\dots ,n\}\). Suppose \(R_n\ne 1\) and, if \(n\geqslant 2\), suppose \((R_i-1)(R_{i+1}-1)<0\) for \(i\in \{1,\dots ,n-1\}\). Then there exists an arbitrarily small perturbation of Z such that at least n limit cycles bifurcate from \(\Gamma ^n\). In particular, the cyclicity of \(\Gamma ^n\) is at least n.
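The hypotheses of Theorem 3 require two things. First, the partial products \(R_i\) must alternate around 1. Second, the full product \(R_n\) must differ from 1. A minimal sketch of that check follows; the function names are hypothetical.

```python
from itertools import accumulate
from operator import mul
from typing import List, Sequence


def partial_products(ratios: Sequence[float]) -> List[float]:
    """R_i = r_1 * ... * r_i, i = 1, ..., n."""
    return list(accumulate(ratios, mul))


def theorem3_hypotheses(ratios: Sequence[float], tol: float = 1e-12) -> bool:
    """R_n != 1 and (R_i - 1)(R_{i+1} - 1) < 0 for i = 1, ..., n - 1."""
    R = partial_products(ratios)
    if not R or abs(R[-1] - 1) <= tol:
        return False
    return all((R[i] - 1) * (R[i + 1] - 1) < 0 for i in range(len(R) - 1))


r = [2.0, 0.25, 4.0, 0.25]
print(partial_products(r), theorem3_hypotheses(r))  # [2.0, 0.5, 2.0, 0.5] True
```

With these (hypothetical) ratios the theorem would predict at least four limit cycles after a suitable small perturbation.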

3 Preliminaries

3.1 Transition Map Near a Hyperbolic Saddle

Let \(X_\mu \) be a \(C^\infty \) planar vector field depending in a \(C^\infty \)-way on a parameter \(\mu \in {\mathbb {R}}^r\), \(r\geqslant 1\), defined in a neighborhood of a hyperbolic saddle \(p_0\) at \(\mu =\mu _0\). Let \(\Lambda \subset {\mathbb {R}}^r\) be a small enough neighborhood of \(\mu _0\). Let \(\nu (\mu )<0<\lambda (\mu )\) be the eigenvalues of the hyperbolic saddle \(p(\mu )\), \(\mu \in \Lambda \), and let \(r(\mu )=\frac{|\nu (\mu )|}{\lambda (\mu )}\) be the hyperbolicity ratio of \(p(\mu )\). Let B be a small enough neighborhood of \(p_0\), and let \(\Phi :B\times \Lambda \rightarrow {\mathbb {R}}^2\) be a \(C^\infty \)-change of coordinates that sends the hyperbolic saddle \(p(\mu )\) to the origin and its unstable and stable manifolds \(W^u(\mu )\) and \(W^s(\mu )\) to the axes Ox and Oy, respectively. Let \(\sigma \) and \(\tau \) be two small enough cross sections of \(Oy^+\) and \(Ox^+\), respectively. We can suppose that \(\sigma \) and \(\tau \) are parametrized by \(x\in [0,x_0]\) and \(y\in [0,y_0]\), with \(x=0\) and \(y=0\) corresponding to \(Oy^+\cap \sigma \) and \(Ox^+\cap \tau \), respectively. The flow of \(X_\mu \) in the first quadrant in this new coordinate system defines a transition map

$$\begin{aligned} D:\sigma \times \Lambda \rightarrow \tau ,\quad (x,\mu )\mapsto D(x,\mu ), \end{aligned}$$

called the Dulac map [8]. See Fig. 4. Observe that D is of class \(C^\infty \) for \(x\ne 0\) and that it can be continuously extended by \(D(0,\mu )=0\) for all \(\mu \in \Lambda \).
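For the linear saddle \(X=(\lambda x,\nu y)\) the Dulac map is explicit. Take \(\sigma =\{y=y_0\}\) and \(\tau =\{x=x_0\}\), which are hypothetical concrete sections. The orbit starting at \((x,y_0)\) reaches \(\tau \) at the time t with \(xe^{\lambda t}=x_0\), so \(D(x)=y_0(x/x_0)^{r}\) with \(r=|\nu |/\lambda \). This is already of the form \(x^{r}A\) with a constant A and no correction term. The sketch below approximates D by integrating the flow with a classical Runge-Kutta scheme and compares the result with the closed form. The step size and eigenvalues are illustrative choices.

```python
def rk4_step(f, z, dt):
    """One classical Runge-Kutta step for z' = f(z) in the plane."""
    k1 = f(z)
    k2 = f([z[0] + dt / 2 * k1[0], z[1] + dt / 2 * k1[1]])
    k3 = f([z[0] + dt / 2 * k2[0], z[1] + dt / 2 * k2[1]])
    k4 = f([z[0] + dt * k3[0], z[1] + dt * k3[1]])
    return [z[i] + dt / 6 * (k1[i] + 2 * k2[i] + 2 * k3[i] + k4[i]) for i in range(2)]


def dulac_numeric(f, x, x0=1.0, y0=1.0, dt=1e-3, t_max=100.0):
    """Follow the orbit from (x, y0) on sigma = {y = y0} until it hits tau = {x = x0}."""
    z, t = [x, y0], 0.0
    while t < t_max:
        z_new = rk4_step(f, z, dt)
        if z_new[0] >= x0:
            s = (x0 - z[0]) / (z_new[0] - z[0])  # linear interpolation onto tau
            return z[1] + s * (z_new[1] - z[1])
        z, t = z_new, t + dt
    raise RuntimeError("orbit did not reach tau")


lam, nu = 1.0, -1.5                  # hypothetical eigenvalues, so r = 1.5
r = abs(nu) / lam
linear_saddle = lambda z: [lam * z[0], nu * z[1]]
for x in (1e-1, 1e-2, 1e-3):
    print(x, dulac_numeric(linear_saddle, x), x ** r)  # numeric vs y0 * (x / x0)**r
```

The transit time grows like \(\ln (x_0/x)/\lambda \), which is why D decays like a power of x as the starting point approaches the stable manifold.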

Definition 7

Let \(I^k\), \(k\geqslant 0\), denote the set of functions \(f:[0,x_0]\times \Lambda _k\rightarrow {\mathbb {R}}\), with \(\Lambda _k\subset \Lambda \), satisfying the following properties.

(a) f is \(C^\infty \) on \((0,x_0]\times \Lambda _k\);

(b) For each \(j\in \{0,\dots ,k\}\), the function \(\varphi _j(x,\mu )=x^j\frac{\partial ^j f}{\partial x^j}(x,\mu )\) is continuous on \((0,x_0]\times \Lambda _k\) and \(\varphi _j(x,\mu )\rightarrow 0\) as \(x\rightarrow 0\), uniformly in \(\mu \).

A function \(f:[0,x_0]\times \Lambda \rightarrow {\mathbb {R}}\) is said to be of class I if f is \(C^\infty \) on \((0,x_0]\times \Lambda \) and for every \(k\geqslant 0\) there exists a neighborhood \(\Lambda _k\subset \Lambda \) of \(\mu _0\) such that f is of class \(I^k\) on \((0,x_0]\times \Lambda _k\).

Theorem 4

(Mourtada, [9, 21]) Let \(X_\mu \), \(\sigma \), \(\tau \), and D be as above. Then, for \((x,\mu )\in (0,x_0]\times \Lambda \), we have

$$\begin{aligned} D(x,\mu )=x^{r(\mu )}\big (A(\mu )+\varphi (x,\mu )\big ), \end{aligned}$$(2)

with \(\varphi \in I\) and A a positive \(C^\infty \)-function.

Following Dumortier et al [9], we call Mourtada’s form the expression (2) of the Dulac map and denote by \({\mathfrak {D}}\) the class of maps given by (2).

Proposition 1

([9, 21]) Given \(D(x,\mu )=x^{r(\mu )}(A(\mu )+\varphi (x,\mu ))\in {\mathfrak {D}}\), the following statements hold.

(a) \(D^{-1}\) is well defined and \(D^{-1}(x,\mu )=x^{\frac{1}{r(\mu )}}(B(\mu )+\psi (x,\mu ))\in {\mathfrak {D}}\);

(b) \(\frac{\partial D}{\partial x}\) is well defined and

$$\begin{aligned} \frac{\partial D}{\partial x}(x,\mu )=r(\mu )x^{r(\mu )-1}(A(\mu )+\xi (x,\mu )), \end{aligned}$$(3)

with \(\xi \in I\).
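In the simplest case \(\varphi \equiv 0\), Proposition 1 can be checked by hand. If \(D(x)=Ax^{r}\), then \(D^{-1}(y)=y^{1/r}A^{-1/r}\), i.e. \(B=A^{-1/r}\) and \(\psi \equiv 0\), and \(D'(x)=rx^{r-1}A\), i.e. \(\xi \equiv 0\). The sketch below verifies both identities numerically for hypothetical values of r and A. It does not cover the correction terms \(\psi \) and \(\xi \) of the general case.

```python
def dulac(x: float, r: float, A: float) -> float:
    """Model Dulac map in Mourtada form with phi = 0: D(x) = x**r * A."""
    return x ** r * A


def dulac_inverse(y: float, r: float, A: float) -> float:
    """D^{-1}(y) = y**(1/r) * B with B = A**(-1/r) (Proposition 1(a) with psi = 0)."""
    return y ** (1.0 / r) * A ** (-1.0 / r)


def dulac_derivative(x: float, r: float, A: float) -> float:
    """dD/dx = r * x**(r - 1) * A, i.e. formula (3) with xi = 0."""
    return r * x ** (r - 1) * A


r, A = 1.7, 0.8  # hypothetical ratio and coefficient
for x in (1e-1, 1e-3):
    h = 1e-6 * x
    fd = (dulac(x + h, r, A) - dulac(x - h, r, A)) / (2 * h)  # central difference
    print(x, dulac_inverse(dulac(x, r, A), r, A), dulac_derivative(x, r, A), fd)
```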

For a complete characterization of the Dulac map, see [19, 20]. The following is another classical result about the Dulac map.

Proposition 2

Let X be a vector field of class \(C^\infty \) with a hyperbolic saddle p at the origin, with eigenvalues \(\nu<0<\lambda \). Suppose also that the unstable and stable manifolds \(W^u\) and \(W^s\) of p are given by the axes Ox and Oy, respectively, and let \(D=D(x)\) be the Dulac map associated with p. Then, given \(\varepsilon >0\), there is \(\delta >0\) such that,

The proof of Proposition 2 is due to Sotomayor [27, Section 2.2]. A similar result was also proved by Cherkas [6]. Since neither reference is in English, and as far as we know no translations are available, we include a proof of Proposition 2 here.

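Proof of Proposition 2. Let \(X=(P,Q)\) be given by

$$\begin{aligned} P(x,y)=\lambda x+r_1(x,y),\qquad Q(x,y)=\nu y+r_2(x,y), \end{aligned}$$

where \(r_1\) and \(r_2\) are \(C^\infty \)-functions vanishing, together with their first derivatives, at the origin.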

Since \(W^s\) and \(W^u\) are given by the coordinate axes, it follows that \(r_1(0,y)=r_2(x,0)=0\) for every x and y. Observe that,

Hence, we can write \(r_1(x,y)=x\overline{r}_1(x,y)\), with \(\overline{r}_1\) continuous and such that \(\overline{r}_1(0,0)=0\). Similarly, we have \(r_2(x,y)=y\overline{r}_2(x,y)\), with \(\overline{r}_2(0,0)=0\). Given \(\varepsilon >0\), consider the linear vector field \(X_\varepsilon =(P_\varepsilon ,Q_\varepsilon )\) given by

$$\begin{aligned} P_\varepsilon (x,y)=(\lambda +\varepsilon )x,\qquad Q_\varepsilon (x,y)=\nu y. \end{aligned}$$

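Let

$$\begin{aligned} J(x,y)=\begin{pmatrix} P(x,y) & P_\varepsilon (x,y)\\ Q(x,y) & Q_\varepsilon (x,y)\end{pmatrix} \end{aligned}$$

and observe that

$$\begin{aligned} \det J(x,y)=xy\big (|\nu |\varepsilon +\nu \,\overline{r}_1(x,y)-(\lambda +\varepsilon )\overline{r}_2(x,y)\big ). \end{aligned}$$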

Therefore, there is \(\delta >0\) such that if \(0<x<\delta \) and \(0<y<\delta \), then \(\det J(x,y)>0\) and thus the vectors X(x, y) and \(X_\varepsilon (x,y)\) have positive orientation. Hence, if \(D_\varepsilon \) is the Dulac map associated with \(X_\varepsilon \), it follows that \(D(x)\leqslant D_\varepsilon (x)\), for every \(0<x<\delta \). See Fig. 5.

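Since \(X_\varepsilon \) is linear, its flow \(\varphi \) is given by

$$\begin{aligned} \varphi (t,(x,y))=\big (xe^{(\lambda +\varepsilon )t},\,ye^{\nu t}\big ), \end{aligned}$$

and thus \(D_\varepsilon (x)=ye^{\nu t_0}\), where \(t_0>0\) is such that \(xe^{(\lambda +\varepsilon )t_0}=\delta \). Hence, \(t_0=\frac{1}{\lambda +\varepsilon }\ln \frac{\delta }{x}\) and thus

$$\begin{aligned} D(x)\leqslant D_\varepsilon (x)=ye^{\nu t_0}=y\left( \frac{x}{\delta }\right) ^{\frac{|\nu |}{\lambda +\varepsilon }}. \end{aligned}$$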

This proves the first inequality of the proposition. The other one can be obtained by considering \(X_\varepsilon (x,y)=((\lambda -\varepsilon )x,\nu y)\). \(\square \)
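The computation at the end of this proof can be checked numerically. The comparison field is \(X_\varepsilon =((\lambda +\varepsilon )x,\nu y)\), as indicated by the proof and by the field \(((\lambda -\varepsilon )x,\nu y)\) used for the other inequality. Its explicit flow is evaluated at \(t_0=\frac{1}{\lambda +\varepsilon }\ln \frac{\delta }{x}\). The sketch checks that the first coordinate then equals \(\delta \), and that \(ye^{\nu t_0}\) agrees with \(y(x/\delta )^{|\nu |/(\lambda +\varepsilon )}\). All numerical values are hypothetical.

```python
import math


def linear_flow(t, x, y, lam, nu, eps):
    """Flow of the comparison field X_eps(x, y) = ((lam + eps) x, nu y)."""
    return x * math.exp((lam + eps) * t), y * math.exp(nu * t)


def d_eps(x, y, lam, nu, eps, delta):
    """D_eps(x) = y * exp(nu * t0), where x * exp((lam + eps) * t0) = delta."""
    t0 = math.log(delta / x) / (lam + eps)
    return y * math.exp(nu * t0)


lam, nu, eps, delta, y = 1.0, -2.0, 0.1, 0.5, 0.5  # hypothetical values
x = 1e-3
t0 = math.log(delta / x) / (lam + eps)
print(linear_flow(t0, x, y, lam, nu, eps))          # first coordinate equals delta
print(d_eps(x, y, lam, nu, eps, delta),
      y * (x / delta) ** (abs(nu) / (lam + eps)))   # the two expressions agree
```

The exponent \(|\nu |/(\lambda +\varepsilon )\) is slightly smaller than the hyperbolicity ratio \(r=|\nu |/\lambda \). For small x this makes the comparison map larger than \(x^{r}\), which is why it yields an upper bound.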

3.2 Transition Map Near a Semi-hyperbolic Singularity

Theorem 5

(Theorem 3.2.2 of [9]) Let \(X_\mu \) be a \(C^\infty \)-planar vector field depending in a \(C^\infty \)-way on a parameter \(\mu \in \Lambda \subset {\mathbb {R}}^r\), \(r\geqslant 1\). Suppose that at \(\mu =\mu _0\) we have a semi-hyperbolic singularity at the origin O. Let also B be a small enough neighborhood of O. If \(\Lambda \) is a small enough neighborhood of \(\mu _0\), then for each \(k\geqslant 1\), \(k\in {\mathbb {N}}\), there exists a \(C^k\)-family of diffeomorphisms on B such that, in this new coordinate system, \(X_\mu \) is given by

up to multiplication by a positive \(C^k\)-function. Furthermore, g is a function of class \(C^k\) satisfying,

Let \(X_\mu \) be a \(C^\infty \)-planar vector field depending in a \(C^\infty \)-way on a parameter \(\mu \in \Lambda \subset {\mathbb {R}}^r\), \(r\geqslant 1\). Suppose that at \(\mu =\mu _0\) we have a semi-hyperbolic singularity \(p_0\) with a hyperbolic sector (e.g. a saddle-node or a degenerate saddle). At \(\mu =\mu _0\), let \(\lambda \in {\mathbb {R}}\backslash \{0\}\) be the unique non-zero eigenvalue of \(p_0\). Reversing the time if necessary, we can assume that \(\lambda <0\). Locally at \(p_0\), it follows from Theorem 5 that we can suppose that \(X_\mu \) is given by

with g of class \(C^k\) (for any k large enough) and satisfying,

In this new coordinate system given by Theorem 5, and at \(\mu =\mu _0\), let \(\sigma \) and \(\tau \) be two small cross sections of the axes \(Oy^+\) and \(Ox^+\), which are, respectively, the stable and the center manifolds of \(p_0\). As in Sect. 3.1, we can suppose that \(\sigma \) and \(\tau \) are parametrized by \(x\in [0,x_0]\) and \(y\in [0,y_0]\), with \(x=0\) and \(y=0\) corresponding to \(Oy^+\cap \sigma \) and \(Ox^+\cap \tau \), respectively (see Fig. 4). Let \(x^*(\mu )\) be the largest solution of \(g(x,\mu )=0\) and observe that \(x^*(\mu _0)=0\). For each \(\mu \in \Lambda \), let \(\sigma (\mu )\subset \sigma \) be given by \(x\in [x^*(\mu ),x_0]\) and let

As in Sect. 3.1, in this new coordinate system the flow of \(X_\mu \) defines a transition map \(F:C\rightarrow (0,y_0]\).

Theorem 6

(Theorem 3 of [9]) Let \(X_\mu \) and F be as above. Then

where \(Y>0\) and \(T:C\rightarrow {\mathbb {R}}^+\) is the time function from \(\sigma (\mu )\) to \(\tau \). Moreover, if \(\mu =(\mu _1,\dots ,\mu _r)\), then for any k, \(m\in {\mathbb {N}}\) and for any \((i_0,\dots ,i_r)\in {\mathbb {N}}^{r+1}\) with \(i_0+\dots +i_r=m\), we have

3.3 Transition Map Near a Tangential Singularity

Let \(p_0\) be a tangential singularity of \(\Gamma ^n\) and \(X_s\), \(X_u\) be the stable and unstable components of Z defined at \(p_0\) with \(\mu =\mu _0\). Let B be a small enough neighborhood of \(p_0\) and \(\Phi :B\times \Lambda \rightarrow {\mathbb {R}}^2\) be a \(C^\infty \) change of coordinates such that \(\Phi (p_0,\mu _0)=(0,0)\) and \(\Phi (B\cap \Sigma )=Ox\). Let \(l_s=\Phi (B\cap L_s)\), \(l_u=\Phi (B\cap L_u)\) and \(\tau _s\), \(\tau _u\) two small enough cross sections of \(l_s\) and \(l_u\), respectively. Let also,

depending on \(\Gamma ^n\). It follows from Andrade et al [1] that \(\Phi \) can be chosen so that the transition maps \(T^{s,u}:\sigma \times \Lambda \rightarrow \tau _{s,u}\), given by the flow of \(X_{s,u}\) in this new coordinate system, are well defined and given by,

with \(\lambda _i^{s,u}(\mu _0)=0\), \(k_{s,u}(\mu _0)\ne 0\), \(h_\mu :{\mathbb {R}}\rightarrow {\mathbb {R}}\) a diffeomorphism and with \(h_\mu \) and \(\lambda _i^{s,u}\) depending continuously on \(\mu \). For examples of such maps, see Fig. 6.

4 Proofs of Theorems 1 and 2

Proof of Theorem 1

For simplicity, we assume that \(\Sigma =h^{-1}(0)\) has one component and thus \(Z=(X_1,X_2;\Sigma )\) has two components. Moreover, we assume that \(\Gamma ^n=\Gamma ^3\) is composed of two tangential singularities \(p_1\), \(p_2\) and a hyperbolic saddle \(p_3\). See Fig. 7. The general case follows similarly. Let \(B_i\) be a small enough neighborhood of \(p_i\) and let \(\Phi _i:B_i\times \{\mu _0\}\rightarrow {\mathbb {R}}^2\) be the change of variables chosen as in Sect. 3.3, \(i\in \{1,2\}\). Let also \(B_3\) be a neighborhood of \(p_3\) and \(\Phi _3:B_3\times \{\mu _0\}\rightarrow {\mathbb {R}}^2\) be the change of variables chosen as in Sect. 3.1. Given the transition maps \(T_i^{s,u}:\sigma _i\times \{\mu _0\}\rightarrow \tau _i^{s,u}\) and \(D:\sigma \times \{\mu _0\}\rightarrow \tau \), let,

with \(i\in \{1,2\}\). Let also,

be defined by the flow of \(X_1\) and \(X_2\). See Fig. 7. Finally let,

and,

with \(i\in \{1,2\}\). See Fig. 7.

Illustration of the maps used in the proof of Theorem 1

Let \(\nu<0<\lambda \) be the eigenvalues of \(p_3\) and denote \(r=\frac{|\nu |}{\lambda }\). Let also \(n_{i,s}\) and \(n_{i,u}\) denote the stable and unstable contact orders of \(p_i\), \(i\in \{1,2\}\). Suppose \(r(\Gamma ^n)>1\). Given \(\varepsilon >0\), it follows from Sects. 3.1 and 3.3 that,

with \(k_{i,s}\), \(k_{i,u}\), \(a_j\), \(a\ne 0\), \(C>0\), \(i\in \{1,2\}\) and \(j\in \{1,2,3\}\). Since \(\varepsilon >0\) is arbitrary, it follows that if we define

then one can conclude that,

with \(K\ne 0\) and \(1<r_0<r(\Gamma ^n)\). Hence, if x is small enough we conclude that \(\pi (x)<x\). The result now follows from the fact that the first return map

satisfies \(P=\Phi _3^{-1}\circ \pi \circ \Phi _3\). If \(r(\Gamma ^n)<1\), the result follows by reversing the time variable. \(\square \)
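The last step only uses that, near 0, \(\pi \) is dominated by a power \(Kx^{r_0}\) with \(r_0>1\). We do not reproduce the exact estimate. Instead, consider the toy model \(\pi (x)=Kx^{r_0}\) with hypothetical constants \(K>0\) and \(r_0>1\). It satisfies \(\pi (x)<x\) exactly for \(0<x<K^{-1/(r_0-1)}\), and its iterates decrease to 0. This corresponds to orbits accumulating on \(\Gamma ^n\).

```python
def orbit_of(pi, x, n):
    """Return [x, pi(x), pi(pi(x)), ...] with n iterations."""
    orbit = [x]
    for _ in range(n):
        x = pi(x)
        orbit.append(x)
    return orbit


K, r0 = 5.0, 1.3                    # hypothetical constants with r0 > 1
pi = lambda x: K * x ** r0          # toy model of an estimate of the form K x^{r0}
x_star = K ** (-1.0 / (r0 - 1.0))   # pi(x) < x exactly when 0 < x < x_star
print(x_star)
print(orbit_of(pi, 1e-3, 6))        # strictly decreasing towards 0
```

The convergence is superlinear but slow at first, because \(r_0\) is close to 1. A larger \(r(\Gamma ^n)\) gives a faster approach to the polycycle.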

Proof of Theorem 2

Let us suppose that every singularity of \(\Gamma ^n\) is stable (see Definition 6). Following the proof of Theorem 1, we observe that the Poincaré map, when well defined, can be written as the composition

$$\begin{aligned} P_\mu =G_k\circ F_k\circ \dots \circ G_1\circ F_1, \end{aligned}$$

where each \(F_i\) is the transition map near a hyperbolic saddle (given by (2)), a semi-hyperbolic singularity (given by (4)), or a tangential singularity (given by (6)), and each \(G_i\) is a regular transition given by the flow of Z, i.e. a \(C^\infty \)-diffeomorphism in x. We write \(y_1=F_1(x_1)\), \(x_2=G_1(y_1)\), \(\dots \), \(y_k=F_k(x_k)\), \(x_{k+1}=G_k(y_k)\). Thus, by the chain rule,

$$\begin{aligned} P_\mu '(x_1)=\prod _{i=1}^{k}G_i'(y_i)\,F_i'(x_i). \end{aligned}$$

Therefore, it follows from (3), (5) and (6) that, for all \(\varepsilon >0\), there exist a neighborhood \(\Lambda \) of \(\mu _0\) and neighborhoods \(W_i\) of \(x_i=0\), \(i\in \{1,\dots ,k+1\}\), with the following property. If \(x_1\in W_1\), then \(x_i\in W_i\) for all \(i\in \{1,\dots ,k+1\}\), and \(|F_i'(x_i)|<\varepsilon \) for all \(i\in \{1,\dots ,k\}\) and all \(\mu \in \Lambda \). Also, if \(\Lambda \) and each \(W_i\) are small enough, then each \(G_i'(y_i)\) is bounded and bounded away from zero. Since \(\varepsilon >0\) is arbitrary, \(P_\mu '(x_1)\) is also arbitrarily small for \((x_1,\mu )\in W_1\times \Lambda \). Consider the displacement map \(d_\mu (x_1)=P_\mu (x_1)-x_1\). Its derivative \(d_\mu '(x_1)=P_\mu '(x_1)-1\) is negative, so \(d_\mu \) is strictly decreasing and has at most one zero. Thus at most one limit cycle bifurcates from \(\Gamma ^n\). Moreover, if it does, it is hyperbolic and stable, since \(|P_\mu '|<1\) there. The other case follows by reversing the time variable. \(\square \)
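The whole argument rests on the chain rule \(P_\mu '(x_1)=\prod G_i'(y_i)F_i'(x_i)\). The sketch below checks it on hypothetical toy maps. The two transitions \(F(x)=ax^{r}\) have exponents 1.5 and 1.2, both greater than 1, which mimics stable singularities. They are interleaved with the local diffeomorphism \(G(y)=y+y^2\). The chain-rule derivative is compared with a finite difference. Near \(x_1=0\) it is far below 1, so the displacement derivative \(P'-1\) is negative.

```python
from typing import Callable, List, Tuple

Map = Tuple[Callable[[float], float], Callable[[float], float]]  # (map, its derivative)


def compose_with_derivative(maps: List[Map], x: float) -> Tuple[float, float]:
    """Evaluate P = ... o G_1 o F_1 at x, and P'(x) by the chain rule."""
    value, deriv = x, 1.0
    for f, df in maps:
        deriv *= df(value)  # multiply by the derivative at the current point
        value = f(value)
    return value, deriv


def power_map(a: float, r: float) -> Map:
    """Toy transition F(x) = a x^r (Mourtada form with phi = 0)."""
    return (lambda x: a * x ** r, lambda x: a * r * x ** (r - 1))


G: Map = (lambda y: y + y * y, lambda y: 1 + 2 * y)  # toy regular transition, G(0) = 0
maps = [power_map(1.0, 1.5), G, power_map(2.0, 1.2), G]

x1 = 1e-3
P, dP = compose_with_derivative(maps, x1)
h = 1e-9
fd = (compose_with_derivative(maps, x1 + h)[0] - compose_with_derivative(maps, x1 - h)[0]) / (2 * h)
print(P, dP, fd, dP - 1)  # dP is small, so the displacement derivative dP - 1 is negative
```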

Unlike Theorems 1 and 2, the proof of Theorem 3 requires some technical results about the displacement maps of a polycycle. We deal with them in Sects. 5 and 6.

5 The Displacement Map

Let \(Z=(X_1,\dots ,X_M;\Sigma )\) be a planar non-smooth vector field, depending in a \(C^\infty \)-way on a parameter \(\mu \in {\mathbb {R}}^r\), such that Z has an elementary polycycle \(\Gamma ^n\) at \(\mu =\mu _0\). Let also \(\Lambda \subset {\mathbb {R}}^r\) be a small neighborhood of \(\mu _0\), and from now on assume \(\mu \in \Lambda \). In this section, we study the displacement map between two singularities \(p_i\) and \(p_{i+1}\) of \(\Gamma ^n\). We begin with the case in which both \(p_i\) and \(p_{i+1}\) are hyperbolic saddles. To simplify the notation, at \(\mu =\mu _0\), let \(p_1\in A_1\) and \(p_2\in A_2\) be two hyperbolic saddles of \(\Gamma ^n\) with a heteroclinic connection \(L_0\) such that \(\omega (L_0)=p_1\), \(\alpha (L_0)=p_2\) and \(L_0\cap \Sigma =\{x_0\}\), where \(\Sigma =h^{-1}(0)\). Let \(\gamma _0(t)\) be a parametrization of \(L_0\) such that \(\gamma _0(0)=x_0\). Let \(u_0\) be a unit vector orthogonal to \(T_{x_0}\Sigma \) such that \(sign(\left<u_0,\nabla h(x_0)\right>)=sign(X_1h(x_0))=sign(X_2h(x_0))\). See Fig. 8.

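Also, define \(\omega _0\in \{-1,1\}\) by \(\omega _0=1\) if the orientation of \(\Gamma ^n\) is counterclockwise and \(\omega _0=-1\) if it is clockwise. We denote by \(DX(p,\mu ^*)\) the Jacobian matrix of \(X|_{\mu =\mu ^*}\) at p, i.e. if \(X=(P,Q)\), then

$$\begin{aligned} DX(p,\mu ^*)=\begin{pmatrix} \frac{\partial P}{\partial x_1}(p,\mu ^*) & \frac{\partial P}{\partial x_2}(p,\mu ^*)\\ \frac{\partial Q}{\partial x_1}(p,\mu ^*) & \frac{\partial Q}{\partial x_2}(p,\mu ^*)\end{pmatrix}. \end{aligned}$$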

If \(\Lambda \) is a small enough neighborhood of \(\mu _0\), then it follows from the Implicit Function Theorem that if \(\mu \in \Lambda \), then the perturbation \(p_i(\mu )\) of \(p_i\) is well defined and it is a hyperbolic saddle of \(X_i\), with \(p_i(\mu )\rightarrow p_i\) as \(\mu \rightarrow \mu _0\), and with \(p_i(\mu )\) of class \(C^\infty \), \(i\in \{1,2\}\). Let \((y_{i,1},y_{i,2})=(y_{i,1}(\mu ),y_{i,2}(\mu ))\) be a coordinate system with its origin at \(p_i(\mu )\) and such that the \(y_{i,1}\)-axis and the \(y_{i,2}\)-axis are the one-dimensional stable and unstable spaces \(E_i^s(\mu )\) and \(E_i^u(\mu )\) of the linearization of \(X_i(\cdot ,\mu )\) at \(p_i(\mu )\), \(i\in \{1,2\}\). It follows from the Center-Stable Manifold Theorem (see [18]) that the stable and unstable manifolds \(S_i^\mu \) and \(U_i^\mu \) of \(X_i(\cdot ,\mu )\) at \(p_i(\mu )\) are given by,

where \(\Psi _{i,1}\) and \(\Psi _{i,2}\) are \(C^\infty \)-functions, \(i\in \{1,2\}\). Restricting \(\Lambda \) if necessary, there exists \(\delta >0\) such that,

\(i\in \{1,2\}\). If \(C_i(\mu )\) is the diagonalization of \(DX_i(p_i(\mu ),\mu )\), then at the original coordinate system \((x_1,x_2)\) we obtain,

\(i\in \{1,2\}\). Furthermore, \(x_i^s(\mu )\) and \(x_i^u(\mu )\) are also \(C^\infty \) on \(\Lambda \). Let \(\phi _i(t,\xi ,\mu )\) be the flow of \(X_i(\cdot ,\mu )\) such that \(\phi _i(0,\xi ,\mu )=\xi \). Let \(L^s_0=L^s_0(\mu )\) and \(L^u_0=L^u_0(\mu )\) be the perturbations of \(L_0\) such that \(\omega (L^s_0(\mu ))=p_1(\mu )\) and \(\alpha (L^u_0(\mu ))=p_2(\mu )\). Then,

are parametrizations of \(L^s_0(\mu )\) and \(L^u_0(\mu )\), respectively. Since \(L_0\) intersects \(\Sigma \), it follows that there are \(t_0^s<0\) and \(t_0^u>0\) such that \(x^s(t_0^s,\mu _0)=x_0=x^u(t_0^u,\mu _0)\) and thus by the uniqueness of solutions we have,

for \(t\in [0,+\infty )\) and \(t\in (-\infty ,0]\), respectively.

Lemma 1

Taking a small enough neighborhood \(\Lambda \) of \(\mu _0\), there exist unique \(C^\infty \)-functions \(\tau ^s(\mu )\) and \(\tau ^u(\mu )\) such that \(\tau ^s(\mu )\rightarrow t_0^s\) and \(\tau ^u(\mu )\rightarrow t_0^u\) as \(\mu \rightarrow \mu _0\), and \(x_0^s(\mu )=x^s(\tau ^s(\mu ),\mu )\in \Sigma \) and \(x_0^u(\mu )=x^u(\tau ^u(\mu ),\mu )\in \Sigma \) for all \(\mu \in \Lambda \). See Fig. 9.

Proof

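Let \(X_1\) also denote a \(C^\infty \)-extension of \(X_1\) to a neighborhood of \(\overline{A_1}\), and observe that \(x^s(t,\mu _0)\) is now well defined for \(|t-t_0^s|\) small enough. Since \(\Sigma =h^{-1}(0)\), define \(S(t,\mu )=h(x^s(t,\mu ))\) and observe that \(S(t_0^s,\mu _0)=h(x_0)=0\) and

$$\begin{aligned} \frac{\partial S}{\partial t}(t_0^s,\mu _0)=\left<\nabla h(x_0),X_1(x_0,\mu _0)\right>=X_1h(x_0)\ne 0, \end{aligned}$$

since \(x_0\) is a crossing point.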

It then follows from the Implicit Function Theorem that there exists a \(C^\infty \)-function \(\tau ^s(\mu )\) such that \(\tau ^s(\mu _0)=t_0^s\) and \(S(\tau ^s(\mu ),\mu )=0\), and thus \(x_0^s(\mu )=x^s(\tau ^s(\mu ),\mu )\in \Sigma \). The existence of \(\tau ^u\) is proved in the same way. \(\square \)
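In practice \(\tau ^s(\mu )\) can be computed by Newton's method on \(S(t,\mu )=0\), using \(\partial S/\partial t=X_1h\). The non-vanishing of this derivative at a crossing point is exactly what the Implicit Function Theorem needs. The data in the sketch are hypothetical and chosen so that the root is explicit. The field is the constant \(X_1=(1,-1)\), the orbit is \(x^s(t,\mu )=(t,\mu -t)\), and \(\Sigma =\{y=x^2\}\), which gives \(S(t,\mu )=\mu -t-t^2\) and \(\tau ^s(\mu )=(-1+\sqrt{1+4\mu })/2\).

```python
import math


def orbit(t: float, mu: float):
    """Toy orbit of X_1 = (1, -1) through (0, mu): x^s(t, mu) = (t, mu - t)."""
    return (t, mu - t)


def h(p):
    x, y = p
    return y - x * x  # Sigma = {y = x^2}


def grad_h(p):
    x, _ = p
    return (-2.0 * x, 1.0)


X1 = (1.0, -1.0)


def tau_s(mu: float, t_init: float = 0.0, tol: float = 1e-14, max_iter: int = 50) -> float:
    """Newton's method for S(t, mu) = h(x^s(t, mu)) = 0, with dS/dt = X_1 h."""
    t = t_init
    for _ in range(max_iter):
        p = orbit(t, mu)
        gx, gy = grad_h(p)
        dS = X1[0] * gx + X1[1] * gy  # Lie derivative X_1 h; nonzero near a crossing point
        step = h(p) / dS
        t -= step
        if abs(step) < tol:
            return t
    raise RuntimeError("Newton iteration did not converge")


for mu in (0.0, 0.01, -0.01, 0.1):
    print(mu, tau_s(mu), (-1 + math.sqrt(1 + 4 * mu)) / 2)
```

If the orbit were tangent to \(\Sigma \) at \(x_0\), then dS would vanish and both the Newton step and the lemma would break down. This is why the crossing hypothesis appears.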

Definition 8

It follows from Lemma 1 that the displacement function,

where \((x_1,x_2)\wedge (y_1,y_2)=x_1y_2-y_1x_2\), is well defined near \(\mu _0\) and it is of class \(C^\infty \). See Fig. 10.

Remark 1

We observe that \(L_0\) can intersect \(\Sigma \) multiple times. In this case, following Sect. 2, we write \(L_0\cap \Sigma =\{x_0,x_1,\dots ,x_n\}\) and let \(\gamma _0(t)\) be a parametrization of \(L_0\) such that \(\gamma _0(t_i)=x_i\), with \(t_n<\dots<t_1<t_0=0\). Applying Lemma 1, we obtain \(x_n^u(\mu )\). Applying the Implicit Function Theorem repeatedly, we then obtain \(x_{i}^u(\mu )\) as a function of \(x_{i+1}^u(\mu )\), \(i\in \{0,\dots ,n-1\}\). Thus the displacement function is still well defined at \(x_0\).

Let us define,

new parametrizations of \(L^s_0(\mu )\) and \(L^u_0(\mu )\), respectively. In the following lemma we denote by \(X_i\) a \(C^\infty \)-extension of \(X_i\) to a neighborhood of \(\overline{A_i}\), \(i\in \{1,2\}\), so that \(x^s_\mu (t)\) and \(x^u_\mu (t)\) are well defined for |t| small enough.

Lemma 2

For any \(\mu ^*\in \Lambda \) and any \(i\in \{1,\dots ,r\}\) the maps

are bounded as \(t\rightarrow +\infty \) and \(t\rightarrow -\infty \), respectively.

Proof

Let us consider a small perturbation of the parameter in the form,

where \(e_i\) is the ith vector of the canonical basis of \({\mathbb {R}}^r\). The corresponding perturbation of the singularity \(p_2(\mu ^*)\) takes the form,

Knowing that \(X_2(p_2(\mu ),\mu )=0\) for any \(\varepsilon \) it follows that,

and thus, letting \(\varepsilon \rightarrow 0\), we obtain,

where \(F_0=DX_2(p_2(\mu ^*),\mu ^*)\) and \(G_0=\frac{\partial X_2}{\partial \mu _i}(p_2(\mu ^*),\mu ^*)\). Observe that \(F_0\) is invertible because \(p_2\) is a hyperbolic saddle. Hence,

Therefore, it follows from the \(C^\infty \)-differentiability of the flow near \(p_2(\mu )\) that,

and thus we have the proof for \(x_{\mu ^*}^u\). The proof for \(x_{\mu ^*}^s\) is similar. \(\square \)
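The key identity behind this proof is the implicit-differentiation formula \(\frac{\partial p_2}{\partial \mu _i}=-F_0^{-1}G_0\). It is obtained by differentiating \(X_2(p_2(\mu ),\mu )=0\) in the direction \(e_i\). We state it in this standard form as an assumption about the displays omitted above. The sketch checks it on a hypothetical one-parameter family \(X_2(x,\mu )=(x_1-\mu ,\,-x_2+\mu x_1)\). Its saddle is \(p(\mu )=(\mu ,\mu ^2)\), with eigenvalues 1 and \(-1\), so the exact derivative is \((1,2\mu )\).

```python
def solve2(A, b):
    """Solve the 2x2 linear system A z = b by Cramer's rule."""
    (a11, a12), (a21, a22) = A
    det = a11 * a22 - a12 * a21
    return ((b[0] * a22 - a12 * b[1]) / det, (a11 * b[1] - a21 * b[0]) / det)


def field(x, mu):
    """Toy family X_2(x, mu) = (x1 - mu, -x2 + mu x1), with saddle p(mu) = (mu, mu^2)."""
    return (x[0] - mu, -x[1] + mu * x[0])


def jacobian_x(x, mu):
    return ((1.0, 0.0), (mu, -1.0))  # F_0, eigenvalues 1 and -1


def d_field_d_mu(x, mu):
    return (-1.0, x[0])  # G_0


def singularity(mu):
    return (mu, mu * mu)


def dp_dmu(mu):
    """dp/dmu = -F_0^{-1} G_0, from differentiating X_2(p(mu), mu) = 0."""
    p = singularity(mu)
    z = solve2(jacobian_x(p, mu), d_field_d_mu(p, mu))
    return (-z[0], -z[1])


print(dp_dmu(0.5))  # (1.0, 1.0), the derivative of (mu, mu^2) at mu = 0.5
```

Boundedness of \(\partial p_2/\partial \mu _i\) is what makes the parameter derivatives of the perturbed orbits bounded as \(t\rightarrow -\infty \), by smooth dependence of the flow near the saddle.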

Let \(\theta _i\in (-\pi ,\pi )\) be the angle between \(X_i(x_0)\) and \(u_0\), \(i\in \{1,2\}\). See Fig. 11.

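For \(i\in \{1,2\}\) we denote by \(M_i\) the rotation matrix of angle \(\theta _i\), i.e.

$$\begin{aligned} M_i=\begin{pmatrix} \cos \theta _i & -\sin \theta _i\\ \sin \theta _i & \cos \theta _i\end{pmatrix}. \end{aligned}$$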
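Numerically, \(\theta _i\) and \(M_i\) are conveniently obtained with atan2, using the wedge product for the sine part and the inner product for the cosine part. The sketch assumes that \(\theta _i\) is measured counterclockwise from \(u_0\) to \(X_i(x_0)\), and that the rotation matrix is counterclockwise. The paper's exact sign convention may differ. The vectors below are hypothetical.

```python
import math


def signed_angle(u, v):
    """Signed angle from u to v in (-pi, pi], computed as atan2(u ^ v, <u, v>)."""
    wedge = u[0] * v[1] - u[1] * v[0]
    dot = u[0] * v[0] + u[1] * v[1]
    return math.atan2(wedge, dot)


def rotation(theta):
    """Counterclockwise rotation matrix of angle theta."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s), (s, c))


def apply(M, v):
    return (M[0][0] * v[0] + M[0][1] * v[1], M[1][0] * v[0] + M[1][1] * v[1])


u0 = (0.0, 1.0)              # hypothetical unit normal to Sigma at x_0
X = (1.0, 1.0)               # hypothetical value of X_i(x_0)
theta = signed_angle(u0, X)  # -pi/4: X is u0 rotated clockwise by 45 degrees
print(theta, apply(rotation(theta), u0))
```

Using atan2 instead of an arccosine of the normalized inner product keeps the sign of the angle, which is needed to tell on which side of \(u_0\) the vector field points.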

Following Perko [25], we define

It then follows from Definition 8 that,

and thus,

Therefore, to understand the displacement function \(d(\mu )\), it is enough to understand \(n^u\) and \(n^s\). Let \(X_i=(P_i,Q_i)\), \(i\in \{1,2\}\). Knowing that \(\gamma _0\) is a parametrization of \(L_0\) such that \(\gamma _0(0)=x_0\), let \(L_0^+=\{\gamma _0(t):t>0\}\subset A_1\) and,

where,

and,

\(i\in \{1,2\}\), \(j\in \{1,\dots ,r\}\).

Proposition 3

For any \(j\in \{1,\dots ,r\}\) it follows that,

Proof

From now on in this proof we denote by \(X_1\) a \(C^\infty \)-extension of \(X_1\) to a neighborhood of \(\overline{A_1}\), so that \(x_\mu ^s(t)\) is well defined for |t| small enough. Let \(j\in \{1,\dots ,r\}\). Defining,

it then follows that,

Let \((s,n)=(s(t,\mu ),n(t,\mu ))\) be the coordinate system with origin at \(x_\mu ^s(t)\) and such that the angle between \(X_1(x_\mu ^s(t),\mu )\) and s equals \(\theta _1\), and n is orthogonal to s, pointing outwards in relation to G. See Fig. 12.

Write \(\xi (t,\mu )=\xi _s(t,\mu )s+\xi _n(t,\mu )n\) in this new coordinate system and observe that \(\xi _n\) (i.e. the component of \(\xi \) in the direction of n) is given by

where \(\rho (t,\mu )=\xi \wedge M_1X_1(x_\mu ^s(t),\mu )\). Since \(n(0,\mu )\) is precisely equal to the normal direction of \(u_0\), it follows that,

Denoting \(M_1X_1=(P_0,Q_0)\), \(\xi =(\xi _1,\xi _2)\) and,

we conclude that,

where,

Hence,

Knowing that,

we conclude,

Substituting (10) and (14) into (13), we conclude,

Solving (15) we obtain,

Observe that \(X_1(x_\mu ^s(t),\mu )\rightarrow 0\) as \(t\rightarrow +\infty \). Therefore, it follows from (11) and (12) that \(\rho (t,\mu )\rightarrow 0\) as \(t\rightarrow +\infty \) (since, by Lemma 2, \(\frac{\partial n^s}{\partial \mu _j}\) is bounded). Thus, taking \(t_0=0\) and letting \(t_1\rightarrow +\infty \) in (16), it follows that,

and thus, by (11) and (12),

\(\square \)
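In Perko-type derivations of this kind, the quantity playing the role of \(\rho \) satisfies a scalar linear differential equation, and a formula like (16) follows from it by variation of constants. Assuming (15) has the form \(\rho '=a(t)\rho +b(t)\), its solution on \([t_0,t_1]\) is \(\rho (t_1)=e^{A(t_1)}\big (\rho (t_0)+\int _{t_0}^{t_1}e^{-A(s)}b(s)\,ds\big )\), with \(A(t)=\int _{t_0}^{t}a\). The Python sketch below compares this formula with a direct Runge-Kutta integration; the coefficients a and b are hypothetical and are not those of (15).

```python
import numpy as np

# Hypothetical coefficients (not those of (15)).
def a(t):
    return -1.0 + 0.5 * np.sin(t)

def b(t):
    return np.cos(2.0 * t)

def rk4(f, y0, t0, t1, n):
    """Classical fourth-order Runge-Kutta for a scalar ODE y' = f(t, y)."""
    h = (t1 - t0) / n
    t, y = t0, y0
    for _ in range(n):
        k1 = f(t, y)
        k2 = f(t + h / 2, y + h * k1 / 2)
        k3 = f(t + h / 2, y + h * k2 / 2)
        k4 = f(t + h, y + h * k3)
        y = y + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t = t + h
    return y

def cumulative_trapezoid(values, h):
    """Cumulative trapezoidal integral on a uniform grid, starting from 0."""
    out = np.zeros_like(values)
    out[1:] = np.cumsum(h * (values[1:] + values[:-1]) / 2)
    return out

def variation_of_constants(rho0, t0, t1, n):
    """rho(t1) = exp(A(t1)) * (rho0 + int_{t0}^{t1} exp(-A(s)) b(s) ds), A' = a."""
    t = np.linspace(t0, t1, n + 1)
    h = t[1] - t[0]
    A = cumulative_trapezoid(a(t), h)
    integral = cumulative_trapezoid(np.exp(-A) * b(t), h)[-1]
    return np.exp(A[-1]) * (rho0 + integral)

rho0, t0, t1 = 0.3, 0.0, 5.0
rho_rk4 = rk4(lambda t, y: a(t) * y + b(t), rho0, t0, t1, 2000)
rho_formula = variation_of_constants(rho0, t0, t1, 20000)
print(rho_rk4, rho_formula)
```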

Remark 2

Observe that even if \(L_0\) intersects \(\Sigma \) in multiple points, \(L_0^+\) was defined in such a way that there are no discontinuities on it. Moreover, if \(L_0\cap \Sigma =\{x_0\}\), then \(L_0^-=\{\gamma _0(t):t<0\}\) also has no discontinuities and thus, as in Proposition 3, one can prove that,

with \(\overline{\rho }\) satisfying (15), but with \(x_\mu ^u\) instead of \(x_\mu ^s\) and \(X_2\) instead of \(X_1\). Furthermore we have \(\overline{\rho }(t,\mu )\rightarrow 0\) as \(t\rightarrow -\infty \) and thus by setting \(t_1=0\) and letting \(t_0\rightarrow -\infty \) we obtain,

Hence, in the simple case where \(L_0\) intersects \(\Sigma \) in a unique point \(x_0\), it follows from (8) and from Proposition 3 that,

with,

Remark 3

If instead of a non-smooth vector field we suppose that \(Z=X\) is smooth, then we can assume \(X_1=X_2\) and take \(u_0=\frac{X(x_0,\mu _0)}{||X(x_0,\mu _0)||}\). In this case we would have \(\theta _1=\theta _2=0\) and therefore conclude that,

as in the works of Perko, Holmes and Guckenheimer [13, 15, 25].
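To make Remark 3 concrete: in the smooth case the derivative of the displacement function with respect to \(\mu _j\) is, up to a nonzero normalizing factor whose sign depends on orientation conventions, the integral along the unperturbed connection of \(X\wedge \partial X/\partial \mu _j\) weighted by \(e^{-\int _0^t \text {div}X}\). The Python sketch below evaluates this integral for a hypothetical example not taken from the paper: \(x'=y\), \(y'=x-x^3+\mu (\alpha y+\beta x^2y)\). Its unperturbed part is divergence-free (so the weight is 1) and has the homoclinic loop \(x_0=\sqrt{2}\,\text {sech}\,t\), \(y_0=-\sqrt{2}\,\text {sech}\,t\tanh t\). Along this loop \(X\wedge \partial X/\partial \mu =\alpha y_0^2+\beta x_0^2y_0^2\), whose integral is \(4\alpha /3+16\beta /15\).

```python
import numpy as np

def homoclinic(t):
    """Homoclinic loop of x' = y, y' = x - x^3, passing through (sqrt(2), 0) at t = 0."""
    sech = 1.0 / np.cosh(t)
    return np.sqrt(2.0) * sech, -np.sqrt(2.0) * sech * np.tanh(t)

def wedge(P, Q, R, S):
    """Planar wedge product (P, Q) ∧ (R, S) = P S - Q R."""
    return P * S - Q * R

def melnikov_integral(alpha, beta, T=40.0, n=80001):
    """Trapezoidal approximation of the integral of X ∧ dX/dmu along the loop."""
    t = np.linspace(-T, T, n)
    x, y = homoclinic(t)
    P, Q = y, x - x**3                                   # unperturbed field X
    P_mu, Q_mu = np.zeros_like(t), alpha * y + beta * x**2 * y   # dX/dmu
    # div X = d(y)/dx + d(x - x^3)/dy = 0, so the exponential weight equals 1.
    f = wedge(P, Q, P_mu, Q_mu)
    h = t[1] - t[0]
    return h * (f.sum() - 0.5 * (f[0] + f[-1]))

M_alpha = melnikov_integral(1.0, 0.0)   # analytic value 4/3
M_beta = melnikov_integral(0.0, 1.0)    # analytic value 16/15
print(M_alpha, M_beta)
```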

Remark 4

Within this section, the hypothesis of a polycycle is not necessary. In fact, if we assume only a heteroclinic connection between saddles, then we can define the displacement function as

and therefore obtain,

one only needs to keep track of the direction in which \(d(\mu )>0\) or \(d(\mu )<0\).

Let us now study the case where at least one of the endpoints of the heteroclinic connection is a tangential singularity. In the case of the hyperbolic saddle, we use the Center-Stable Manifold Theorem [18] to take a point \(x_1^s(\mu )\) within the stable manifold of the hyperbolic saddle. Then, we define,

where \(\phi _1\) is the flow of \(X_1\), the component of Z which contains the hyperbolic saddle. Then, we use the Implicit Function Theorem, as in Lemma 1, to obtain a smooth function \(\tau ^s(\mu )\) such that,

for every \(\mu \in \Lambda \), where \(\Lambda \) is a small enough neighborhood of \(\mu _0\). Therefore, in the case of the tangential singularity, we define

where \(T^s\) and \(h_\mu \) are given by (6). However, in the case of a hyperbolic saddle the definition of \(x^s(t,\mu )\) given by (17) works essentially because the hyperbolic saddle is structurally stable, which may not be the case for a tangential singularity. Moreover, the parameters in (6), related to the transition maps near tangential singularities, depend only continuously on the parameter \(\mu \), and hence we cannot, in general, differentiate them with respect to \(\mu \). To avoid these problems, it is sufficient to assume that Z is constant, with respect to \(\mu \), in a neighborhood of the tangential singularity.

Remark 5

From now on, given a tangential singularity p of the planar non-smooth vector field Z, we suppose that Z is constant, in relation to \(\mu \), in a neighborhood B of p.

In this case, let \(x_1^s(\mu )\in B\) be given by (19). It follows from Remark 5 that \(x_1^s(\mu )=x_1^s\) is constant. Now, similarly to the case of the hyperbolic saddle, let \(x^s(t,\mu )\) be given by (17) (i.e. \(x^s(t,\mu )\) is the parametrization of the regular orbit \(L_0^s(\mu )\)). Let \(x_0=x^s(t_0^s,\mu _0)\) be the intersection of \(L_0(\mu _0)\) and \(\Sigma \). See Fig. 13.

Similarly to Lemma 1, it follows from the Implicit Function Theorem that there exists a \(C^\infty \)-function \(\tau ^s(\mu )\) such that \(\tau ^s(\mu )\rightarrow t_0^s\), as \(\mu \rightarrow \mu _0\), and such that \(x_0^s(\mu )=x^s(\tau ^s(\mu ),\mu )\in \Sigma \), for all \(\mu \in \Lambda \). Similarly, one can define \(x_1^u(\mu )\) and then obtain \(\tau ^u(\mu )\) and \(x_0^u(\mu )\). Therefore, we have obtained an analogous version of Lemma 1 for the case of tangential singularities. Since Z is constant, with respect to \(\mu \), in a neighborhood of \(p_1\), the partial derivatives of \(x^s\) with respect to \(\mu \) are zero near \(p_1\). Hence, Lemma 2 also holds in this case. Although the assumption made in Remark 5 is strong, we observe that to prove Theorem 3 we will use bump functions to construct perturbations that do not affect any tangential singularity. We now prove the analogous version of Proposition 3.

Proposition 4

Let \(p_1\) be a tangential singularity satisfying Remark 5. Then for any \(j\in \{1,\dots ,r\}\) it follows that,

where \(I_j^+\) is given by (9)

and \(\gamma _0\) is a parametrization of \(L_0^+\) such that \(\gamma _0(0)=x_0\) and \(\gamma _0(t_1)=p_1\).

Proof

Let \(\gamma _0\) be a parametrization of \(L_0\) such that \(\gamma _0(0)=x_0\) and \(\gamma _0(t_1)=p_1\). It follows from Proposition 3 that,

with,

satisfying,

Observe that \(t_1>0\) and thus we can define \(L_0^+=\{\gamma _0(t):0<t<t_1\}\). Then, it follows from (20) that,

Since Z is constant in a neighborhood of \(p_1\), it follows that \(\xi (t_1,\mu )=0\) and thus \(\rho (t_1,\mu _0)=0\). This finishes the proof. \(\square \)

In the following proposition we use the Poincaré-Bendixson theory for non-smooth vector fields (see Buzzi et al. [5]) and the displacement maps to prove, under some conditions, the bifurcation of limit cycles. This result will be used in an induction argument in the proof of Theorem 3.

Proposition 5

Let Z and \(\Gamma ^n\) be as in Sect. 2, with the tangential singularities satisfying Remark 5, and let \(d_i:\Lambda \rightarrow {\mathbb {R}}\), \(i\in \{1,\dots ,n\}\), be the displacement maps defined on the regular orbits of \(\Gamma ^n\). Let \(\sigma _0\in \{-1,1\}\) be such that \(\sigma _0=1\) (resp. \(\sigma _0=-1\)) if the Poincaré map is defined in the bounded (resp. unbounded) region of \(\Gamma ^n\). Then the following statements hold.

(a) If \(r(\Gamma ^n)>1\) and \(\mu \in \Lambda \) is such that \(\sigma _0d_1(\mu )\leqslant 0,\dots , \sigma _0d_n(\mu )\leqslant 0\) with \(\sigma _0d_i(\mu )<0\) for some \(i\in \{1,\dots ,n\}\), then at least one stable limit cycle \(\Gamma \) bifurcates from \(\Gamma ^n\).

(b) If \(r(\Gamma ^n)<1\) and \(\mu \in \Lambda \) is such that \(\sigma _0d_1(\mu )\geqslant 0,\dots ,\sigma _0d_n(\mu )\geqslant 0\) with \(\sigma _0d_i(\mu )>0\) for some \(i\in \{1,\dots ,n\}\), then at least one unstable limit cycle \(\Gamma \) bifurcates from \(\Gamma ^n\).
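The hypotheses of Proposition 5 are sign conditions that can be checked mechanically once \(r(\Gamma ^n)=\prod _{i=1}^{n}r_i\) and the values \(d_i(\mu )\) are known. The Python sketch below (the function name and data are hypothetical) returns which statement, if any, applies; it only checks the hypotheses and does not locate the limit cycle.

```python
from math import prod

def proposition5_case(r_values, d_values, sigma0):
    """Return "a", "b" or None according to the hypotheses of Proposition 5.

    r_values: the ratios r_i of the singularities p_i of Gamma^n.
    d_values: the values d_i(mu) of the displacement maps at a fixed mu.
    sigma0:   +1 if the Poincare map is defined in the bounded region, else -1.
    """
    r_gamma = prod(r_values)                  # r(Gamma^n) = r_1 * ... * r_n
    signed = [sigma0 * d for d in d_values]
    if r_gamma > 1 and all(s <= 0 for s in signed) and any(s < 0 for s in signed):
        return "a"    # at least one stable limit cycle bifurcates
    if r_gamma < 1 and all(s >= 0 for s in signed) and any(s > 0 for s in signed):
        return "b"    # at least one unstable limit cycle bifurcates
    return None       # neither statement applies to these data

# Hypothetical data for a polycycle with two singularities.
print(proposition5_case([2.0, 0.8], [-0.01, 0.0], sigma0=1))    # r = 1.6
print(proposition5_case([0.5, 1.5], [-0.02, 0.0], sigma0=-1))   # r = 0.75
print(proposition5_case([0.5, 1.5], [0.02, 0.01], sigma0=-1))   # r = 0.75
```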

Proof

For simplicity we use the same polycycle \(\Gamma ^3\) used in the proof of Theorem 1. Let \(x_{i,0}\in L_i\) be as in Sect. 2 and let \(l_i\) be transversal sections of \(L_i\) through \(x_{i,0}\), \(i\in \{1,2,3\}\). Let \(R_i:l_i\times \Lambda \rightarrow l_{i-1}\) be the maps given by the compositions of the maps used in the proof of Theorem 1, \(i\in \{1,2,3\}\). See Fig. 14.

Hence, if \(d_i(\mu )\leqslant 0\), \(i\in \{1,2,3\}\), then the Poincaré map \(P:l_1\times \Lambda \rightarrow l_1\) can be written as \(P(x,\mu )=R_3(R_2(R_1(x,\mu ),\mu ),\mu )\). We observe that P is \(C^\infty \) in x and continuous in \(\mu \), and it follows from the proof of Theorem 1 that \(P(\cdot ,\mu _0)\) is non-flat. It follows from Theorem 1 that there is an open ring \(A_0\) in the bounded region delimited by \(\Gamma ^3\) such that the orbit through any point \(q\in A_0\) spirals towards \(\Gamma ^3\) as \(t\rightarrow +\infty \). Let p be the intersection of \(\Gamma ^3\) and \(l_1\), let \(q_0\in A_0\cap l_1\), and let \(\xi \) be a coordinate system along \(l_1\) such that \(\xi =0\) at p and \(\xi >0\) at \(q_0\); we identify this coordinate system with \({\mathbb {R}}_+\). Observe that \(P(q_0,\mu _0)<q_0\) and thus, by continuity, \(P(q_0,\mu )<q_0\) for any \(\mu \in \Lambda \). See Fig. 15.

Therefore, it follows from the Poincaré-Bendixson theory and from the non-flatness of P that at least one stable limit cycle \(\Gamma _0\) bifurcates from \(\Gamma ^3\). Statement (b) can be proved by reversing time. \(\square \)
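In one dimension, the final step of this proof rests on the intermediate value theorem on \(l_1\): if the return map satisfies \(P(\xi )>\xi \) at one end of an interval and \(P(\xi )<\xi \) at the other, it has a fixed point in between, i.e. a closed orbit. The Python sketch below uses a hypothetical return map \(P(\xi )=\delta +c\,\xi ^{r}\) with \(r>1\), mimicking the contraction near a stable polycycle after a small perturbation that pushes \(\xi =0\) into \(A_0\) (\(P(0)=\delta >0\)), and locates the fixed point by bisection. It is a toy model, not the map constructed in the proof.

```python
def return_map(xi, delta=1e-3, c=1.0, r=2.0):
    """Hypothetical return map on l_1 (xi > 0 points into the ring A_0)."""
    return delta + c * xi**r

def bisect_fixed_point(P, lo, hi, tol=1e-12):
    """Find xi in [lo, hi] with P(xi) = xi, given P(lo) > lo and P(hi) < hi."""
    g = lambda x: P(x) - x
    if not (g(lo) > 0 and g(hi) < 0):
        raise ValueError("sign condition of the intermediate value theorem fails")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

q0 = 0.5                                  # plays the role of q_0, with P(q0) < q0
xi_star = bisect_fixed_point(return_map, 0.0, q0)
# The fixed point is attracting here: P'(xi_star) = 2 * xi_star < 1.
print(xi_star, return_map(xi_star))
```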

6 The Further Displacement Map

Let Z and \(\Gamma ^n\) be as in Sect. 2, with the tangential singularities satisfying Remark 5. Let \(L_i^u(\mu )\) and \(L_i^s(\mu )\) be the perturbations of \(L_i\) such that \(\alpha (L_i^u)=p_{i+1}\) and \(\omega (L_i^s)=p_i\), \(i\in \{1,\dots ,n\}\), with indices taken modulo n. Following Han et al. [14], let \(C_i=x_{i,0}\). If \(C_i\notin \Sigma \), then let \(v_i\) be the unique unit vector orthogonal to \(Z(C_i,\mu _0)\) and pointing outwards with respect to \(\Gamma ^n\). See Fig. 16.
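The choice of \(v_i\), and of the coordinate \(\lambda \) on \(l_i\) introduced next, can be sketched in a few lines. The Python sketch below assumes that \(\Gamma ^n\) is traversed counterclockwise, so that its interior lies to the left of the flow and the outward normal is the flow direction rotated by \(-90^\circ \); for a clockwise polycycle the sign flips. The coordinate of a point B is obtained by orthogonal projection, \(\lambda _B=(B-C_i)\cdot v_i\), which applies equally when \(C_i\in \Sigma \) and \(v_i\) is tangent to \(\Sigma \). The data are hypothetical.

```python
import numpy as np

def outward_normal(flow_direction, counterclockwise=True):
    """Unit vector orthogonal to the flow, pointing out of the polycycle.

    Assumes the interior lies to the left of the flow when the polycycle is
    traversed counterclockwise, so the outward normal is the flow direction
    rotated by -90 degrees; the sign is reversed for clockwise traversal.
    """
    tx, ty = np.asarray(flow_direction, dtype=float) / np.linalg.norm(flow_direction)
    right = np.array([ty, -tx])
    return right if counterclockwise else -right

def lambda_coordinate(B, C, v):
    """Coordinate lambda_B of the orthogonal projection of B onto the line C + lambda v."""
    return float(np.dot(np.asarray(B, dtype=float) - C, v))

# Hypothetical data: a polycycle locally like the unit circle, traversed
# counterclockwise, with C_i = (1, 0) and flow direction (0, 1) there.
C = np.array([1.0, 0.0])
v = outward_normal([0.0, 1.0])
lam_out = lambda_coordinate([1.1, 0.05], C, v)    # positive: B is outside
lam_in = lambda_coordinate([0.9, -0.02], C, v)    # negative: B is inside
print(v, lam_out, lam_in)
```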

On the other hand, if \(C_i\in \Sigma \), then let \(v_i\) be the unique unit vector in \(T_{C_i}\Sigma \) pointing outwards with respect to \(\Gamma ^n\). In both cases, let \(l_i\) be the transversal section normal to \(L_i\) at \(C_i\). Clearly, any point \(B\in l_i\) can be written as \(B=C_i+\lambda v_i\), with \(\lambda \in {\mathbb {R}}\). Moreover, let \(N_i\) be a small enough neighborhood of \(C_i\) and \(J_i=N_i\cap \Sigma \). Then any point \(B\in J_i\) can be orthogonally projected onto the line \(l_i:C_i+\lambda v_i\), \(\lambda \in {\mathbb {R}}\), and thus it can be uniquely and smoothly identified with \(C_i+\lambda _B v_i\), for some \(\lambda _B\in {\mathbb {R}}\). In both cases, \(C_i\in \Sigma \) or \(C_i\not \in \Sigma \), observe that if \(\lambda >0\), then B is outside \(\Gamma ^n\), and if \(\lambda <0\), then B is inside \(\Gamma ^n\). For each \(i\in \{1,\dots ,n\}\) we define,

Therefore, it follows from Sect. 5 that,

\(i\in \{1,\dots ,n\}\). Let \(r_i=\frac{|\nu _i(\mu _0)|}{\lambda _i(\mu _0)}\) if \(p_i\) is a hyperbolic saddle or \(r_i=\frac{n_{i,u}}{n_{i,s}}\) if \(p_i\) is a tangential singularity, \(i\in \{1,\dots ,n\}\). If \(r_i>1\) and \(d_i(\mu )<0\), then following [14], we observe that,

is well defined and thus we define the further displacement map as,

See Fig. 17.

On the other hand, if \(r_i<1\) and \(d_{i-1}(\mu )>0\), then,

is well defined and thus we define the further displacement map as,

Proposition 6

For \(i\in \{1,\dots ,n\}\) and \(\Lambda \subset {\mathbb {R}}^r\) small enough we have,

Proof

For simplicity let us assume \(i=n\) and \(r_n>1\). It follows from the definition of \(d_{n-1}^*\) and \(d_{n-1}\) that,

Let \(B=B_n^s+\lambda v_n\in l_n\), \(\lambda <0\), with \(|\lambda |\) small enough and observe that the orbit through B will intersect \(l_{n-1}\) in a point C which can be written as,

Therefore, we have a function \(F:l_n\rightarrow l_{n-1}\) with \(F(\lambda ,\mu )<0\) for \(\lambda <0\), \(|\lambda |\) small enough, such that \(F(\lambda ,\mu )\rightarrow 0\) as \(\lambda \rightarrow 0\). From (21) we have,

Since \(B_{n-1}^*\) is the intersection of the positive orbit through \(B_n^u\) with \(l_{n-1}\) it follows from (23) that,

Therefore, it follows from (22) that,

If \(p_i\) is a hyperbolic saddle, then F is, up to composition with some diffeomorphisms given by the flows of the components of Z, the Dulac map \(D_i\) defined in Sect. 3.1. If \(p_i\) is a tangential singularity (recall that we are under the hypothesis of Remark 5), then F is, up to composition with some diffeomorphisms given by the flows of the components of Z, the composition \(T_i^u\circ (T_i^s)^{-1}\) defined in Sect. 3.3. In either case, it follows from Sect. 3 that,

with \(A(\mu _0)\ne 0\). Since \(d_n=O(||\mu ||)\), it follows from (25) that,

and thus from (24) we have the result. The case \(r_n<1\) follows similarly from the fact that the inverse \(F^{-1}\) has order \(r_n^{-1}\) in \(\mu \). \(\square \)
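The mechanism behind this proof is an order-of-magnitude comparison. Assuming, as for Dulac maps of hyperbolic saddles, that F has a leading term of the form \(A|\lambda |^{r_n}\) with \(A\ne 0\), composing it with \(d_n=O(||\mu ||)\) gives a term of order \(||\mu ||^{r_n}\), which is \(o(||\mu ||)\) when \(r_n>1\) and therefore does not contribute to the first-order partial derivatives at \(\mu _0\). The Python sketch below illustrates this with hypothetical maps F and \(d_n\); with an exponent smaller than 1 the same ratio blows up instead, which is consistent with treating the case \(r_n<1\) through \(F^{-1}\).

```python
import numpy as np

def F(lam, A=0.7, r=1.5):
    """Hypothetical leading-order transition map: F(lam) = -A |lam|^r for lam < 0."""
    return -A * np.abs(lam) ** r

def d_n(mu, c=2.0):
    """Hypothetical displacement map with d_n < 0 and d_n = O(|mu|) for mu > 0."""
    return -c * mu

mus = np.array([1e-2, 1e-4, 1e-6])

# With r > 1, |F(d_n(mu))| / mu = A c^r mu^(r - 1) -> 0: the composed term is o(mu).
ratios = np.abs(F(d_n(mus))) / mus

# With an exponent below 1 the same ratio grows as mu -> 0.
ratios_small_r = np.abs(F(d_n(mus), r=0.5)) / mus

print(ratios)
print(ratios_small_r)
```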

Corollary 1

For each \(i\in \{1,\dots ,n\}\) the further displacement map \(d_i^*\) is continuously differentiable, with its j-th partial derivative given either by the j-th partial derivative of \(d_i\) or by that of \(d_{i+1}\). Furthermore, a connection between \(p_i\) and \(p_{i-2}\) exists if and only if \(d_{i-2}^*(\mu )=0\) and \(d_{i-1}(\mu )\ne 0\).

7 Proof of Theorem 3

Proof of Theorem 3

Let \(Z=(X_1,\dots ,X_M;\Sigma )\) and denote \(X_i=(P_i,Q_i)\), \(i\in \{1,\dots ,M\}\). Let \(\{p_1,\dots ,p_n\}\) be the singularities of \(\Gamma ^n\) and \(L_i\) the regular orbits between them such that \(\omega (L_i)=p_i\) and \(\alpha (L_i)=p_{i+1}\). If \(L_i\cap \Sigma =\emptyset \), then take \(x_{i,0}\in L_i\) and \(\gamma _i(t)\) a parametrization of \(L_i\) such that \(\gamma _i(0)=x_{i,0}\). If \(L_i\cap \Sigma \ne \emptyset \), then let \(L_i\cap \Sigma =\{x_{i,0},\dots ,x_{i,n(i)}\}\) and take \(\gamma _i(t)\) a parametrization of \(L_i\) such that \(\gamma _i(t_{i,j})=x_{i,j}\) with \(0=t_{i,0}>t_{i,1}>\dots >t_{i,n(i)}\). In either case denote \(L_i^+=\{\gamma _i(t):t>0\}\) if \(p_i\) is a hyperbolic saddle or \(L_i^+=\{\gamma _i(t):0<t<t_i\}\), where \(t_i\) is such that \(\gamma _i(t_i)=p_i\), if \(p_i\) is a \(\Sigma \)-singularity. Following [14], for each \(i\in \{1,\dots ,n\}\) let \(G_{i,j}\), \(j\in \{1,2\}\), be two compact disks small enough such that,

(1) \(\Gamma ^n\cap G_{i,j}=L_i^+\cap G_{i,j}\ne \emptyset \), \(j\in \{1,2\}\);

(2) \(G_{i,1}\subset \text {Int}G_{i,2}\);

(3) \(G_{i,2}\cap G_{s,2}=\emptyset \) for any \(i\ne s\);

(4) \(G_{i,j}\cap \Sigma =\emptyset \).

Let \(k_i:{\mathbb {R}}^2\rightarrow [0,1]\) be a \(C^\infty \)-bump function such that,

See Fig. 18.
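A standard way to obtain a \(C^\infty \) bump of this type, assuming (as is usual) that \(k_i\equiv 1\) on \(G_{i,1}\) and \(k_i\equiv 0\) outside \(G_{i,2}\), is to build it from the classical function \(e^{-1/s}\). The Python sketch below constructs such a bump for hypothetical concentric disks of radii \(r_1<r_2\); it is one possible construction, not necessarily the one used in the paper.

```python
import numpy as np

def _exp_ramp(u):
    """exp(-1/u) for u > 0 and 0 otherwise: C-infinity, all derivatives 0 at u = 0."""
    out = np.zeros_like(u)
    pos = u > 0
    out[pos] = np.exp(-1.0 / u[pos])
    return out

def smooth_step(s):
    """C-infinity step equal to 0 for s <= 0 and to 1 for s >= 1."""
    s = np.atleast_1d(np.asarray(s, dtype=float))
    return _exp_ramp(s) / (_exp_ramp(s) + _exp_ramp(1.0 - s))

def bump(points, center, r1, r2):
    """Hypothetical k_i: 1 on the disk of radius r1 about `center` (playing G_{i,1}),
    0 outside the disk of radius r2 (playing G_{i,2}), smooth in between; r1 < r2."""
    dist = np.linalg.norm(np.asarray(points, dtype=float) - center, axis=-1)
    return smooth_step((r2 - dist) / (r2 - r1))

points = np.array([[0.0, 0.0], [0.5, 0.0], [1.5, 0.0], [3.0, 0.0]])
k = bump(points, center=np.zeros(2), r1=1.0, r2=2.0)
print(k)  # 1 inside the small disk, 0 outside the large one
```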

Let \(\mu \in {\mathbb {R}}^n\) and let \(g_i:{\mathbb {R}}^2\rightarrow {\mathbb {R}}^2\), \(i\in \{1,\dots ,n\}\), be maps to be defined below. Let also,

and from now on let us denote \(X_i=X_i+g\). Let \(\Lambda \) be a small enough neighborhood of the origin of \({\mathbb {R}}^n\). It follows from Sect. 5 that each displacement map \(d_i:\Lambda \rightarrow {\mathbb {R}}\) controls the bifurcations of \(L_i\) near \(x_{i,0}\). It follows from Definition 8 that,

where \(\eta _i\) is the analogue of \(u_0\) in Fig. 8. By the definition of g, each \(x_{i,0}^u(\mu )\) does not depend on \(\mu \) and thus \(x_{i,0}^u\equiv x_{i,0}\). Furthermore, it follows from the definition of \(k_i\) that each singularity \(p_i\) of \(\Gamma ^n\) also does not depend on \(\mu \) and thus \(\frac{\partial \gamma _i}{\partial \mu _j}(t_i)=0\) for every tangential singularity \(p_i\). Therefore, it follows from Propositions 3 and 4 that,

with,

and,

i, \(j\in \{1,\dots ,n\}\). We observe that if \(L_i\cap \Sigma =\emptyset \), then \(\theta _i=0\). It follows from the definition of the sets \(G_{i,j}\) that \(\frac{\partial d_i}{\partial \mu _j}(0)=0\) if \(i\ne j\). Let \(M_iX_i=(\overline{P}_i,\overline{Q}_i)\) and \(R_{i,i}=\frac{\partial P_i}{\partial \mu _i}F_{i,1}+\frac{\partial Q_i}{\partial \mu _i}F_{i,2}\), where,

Let \(g_i=(g_{i,1},g_{i,2})\) and observe that,

Therefore, if we take \(g_i=-\omega _0(-\overline{Q}_i-\sin \theta _iF_{i,1},\;\overline{P}_i-\sin \theta _iF_{i,2})\), then we can conclude that,

with \(a_i=\frac{\partial d_i}{\partial \mu _i}(0)>0\), \(i\in \{1,\dots ,n\}\). If \(n=1\), then it follows from Proposition 5 that any arbitrarily small \(\mu \in {\mathbb {R}}\) such that \((R_1-1)\sigma _0\mu <0\) results in the bifurcation of at least one limit cycle. We now proceed by induction on n: suppose \(n\geqslant 2\) and that the result has been proved for \(n-1\). For definiteness we can assume \(R_n>1\), and therefore \(R_{n-1}<1\) and thus \(r_n>1\). Moreover, it follows from Theorem 1 that \(\Gamma ^n\) is stable. Define,

It follows from Proposition 6 and from (26) that we can apply the Implicit Function Theorem on D and thus obtain unique \(C^\infty \)-functions \(\mu _i=\mu _i(\mu _n)\), \(\mu _i(0)=0\), \(i\in \{1,\dots ,n-1\}\), such that,

for \(|\mu _n|\) small enough. It also follows from (26) that \(d_n\ne 0\) if \(\mu _n\ne 0\), with \(|\mu _n|\) small enough. Therefore, if \(\mu _i=\mu _i(\mu _n)\) and \(\mu _n\ne 0\), then it follows from \(D=0\) that there exists a polycycle \(\Gamma ^{n-1}=\Gamma ^{n-1}(\mu _n)\) formed by \(n-1\) singularities and \(n-1\) regular orbits \(L_i^*=L_i^*(\mu _n)\) such that,

(1) \(\Gamma ^{n-1}\rightarrow \Gamma ^n\),

(2) \(L_{n-1}^*\rightarrow L_n\cup L_{n-1}\) and,

(3) \(L_i^*\rightarrow L_i\), \(i\in \{1,\dots ,n-2\}\),

as \(\mu _n\rightarrow 0\). See Fig. 19.
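The Implicit Function Theorem step that produces the branch \(\mu _i=\mu _i(\mu _n)\) can be mimicked numerically on a toy model. Assuming, for illustration, that the Jacobian of D with respect to \((\mu _1,\dots ,\mu _{n-1})\) at the origin is diagonal with positive entries \(a_i\) (consistent with \(\frac{\partial d_i}{\partial \mu _j}(0)=0\) for \(i\ne j\)), Newton's method started at the origin recovers a branch with \(\mu _i(0)=0\). The map D in the Python sketch below is hypothetical (n = 4), with a diagonal linear part and small nonlinear couplings.

```python
import numpy as np

# Hypothetical toy model with n = 4: unknowns mu_1, mu_2, mu_3, parameter mu_4.
a = np.array([1.0, 2.0, 0.5])      # diagonal entries a_i > 0
b = np.array([0.3, -0.1, 0.7])     # linear dependence on mu_n

def D(x, mu_n):
    """Toy map D(mu_1, ..., mu_{n-1}; mu_n) with diagonal linear part."""
    coupling = 0.2 * x**2 + 0.1 * mu_n * np.roll(x, 1)
    return a * x + b * mu_n + coupling

def jacobian(x, mu_n):
    """Exact Jacobian of D with respect to x = (mu_1, ..., mu_{n-1})."""
    shift = np.roll(np.eye(len(x)), 1, axis=0)    # derivative of np.roll(x, 1)
    return np.diag(a + 0.4 * x) + 0.1 * mu_n * shift

def solve_branch(mu_n, tol=1e-14, max_iter=50):
    """Newton's method for x(mu_n) with D(x(mu_n), mu_n) = 0, starting at x = 0."""
    x = np.zeros_like(a)
    for _ in range(max_iter):
        step = np.linalg.solve(jacobian(x, mu_n), -D(x, mu_n))
        x = x + step
        if np.linalg.norm(step) < tol:
            break
    return x

branch = {mu_n: solve_branch(mu_n) for mu_n in (1e-2, 1e-3, 1e-4)}
for mu_n, x in branch.items():
    # To first order, x(mu_n) is approximately -(b / a) * mu_n.
    print(mu_n, x, D(x, mu_n))
```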

Let,

\(j\in \{1,\dots ,n-1\}\). Then it follows from the hypothesis,

for \(i\in \{1,\dots ,n-1\}\) and from the hypothesis \(R_{n-1}<1\), that,

for \(i\in \{1,\dots ,n-2\}\) and \(R_{n-1}^*<1\) for \(\mu _n\ne 0\) small enough. Thus, it follows from Theorem 1 that \(\Gamma ^{n-1}\) is unstable while \(\Gamma ^n\) is stable. It then follows from the Poincaré-Bendixson theory, and from the fact that the first return map is non-flat, that at least one stable limit cycle \(\overline{\gamma }_n(\mu _n)\) exists near \(\Gamma ^{n-1}\). In fact, both the limit cycle and \(\Gamma ^{n-1}\) bifurcate from \(\Gamma ^n\). Now fix \(\mu _n\ne 0\), \(|\mu _n|\) arbitrarily small, and define the non-smooth system,

where \(Z^*(x)=Z(x)+g(x,\mu _1(\mu _n),\dots ,\mu _{n-1}(\mu _n),\mu _n)\) and,

with \(\overline{\mu }_i=\mu _i-\mu _i(\mu _n)\). It then follows by the definitions of \(G_{i,j}\) and \(L_i^*\) that,

\(i\in \{1,\dots ,n-1\}\) and \(j\in \{1,2\}\). In this new parameter coordinate system the bump functions \(k_i\) still ensure that \(\frac{\partial d_i}{\partial \mu _j}(\mu )=0\) if \(i\ne j\). Since \(a_i>0\), at the origin of this new coordinate system we still have \(\frac{\partial d_i}{\partial \mu _i}(0)>0\). Therefore, by the induction hypothesis, at least \(n-1\) crossing limit cycles \(\overline{\gamma }_j(\overline{\mu })\), \(j\in \{1,\dots ,n-1\}\), bifurcate near \(\Gamma ^{n-1}\) for arbitrarily small \(|\overline{\mu }|\). Furthermore, \(\overline{\gamma }_n(\mu _n)\) persists for \(\overline{\mu }\) small enough because it has odd multiplicity. \(\square \)
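The persistence argument at the end of this proof is the usual one for limit cycles of odd multiplicity: the displacement function changes sign at such a cycle, and a sign change survives any sufficiently small perturbation, whereas a zero of even multiplicity may disappear. The Python sketch below illustrates this with hypothetical polynomial displacement functions, \(x^3+\varepsilon \) and \(x^2+\varepsilon \); it is not a model of the system in the proof.

```python
import numpy as np

def has_small_real_zero(coeffs, window=1.0, imag_tol=1e-9):
    """True if the polynomial (coefficients, highest degree first) has a real
    zero in [-window, window]."""
    roots = np.roots(coeffs)
    real_roots = roots[np.abs(roots.imag) < imag_tol].real
    return bool(np.any(np.abs(real_roots) <= window))

perturbations = [1e-3, -1e-3]

# Toy displacement with a zero of odd multiplicity at 0: x^3 + eps.
odd_survives = [has_small_real_zero([1.0, 0.0, 0.0, eps]) for eps in perturbations]

# Toy displacement with a zero of even multiplicity at 0: x^2 + eps.
even_survives = [has_small_real_zero([1.0, 0.0, eps]) for eps in perturbations]

print(odd_survives)   # the cubic keeps a real zero for both signs of eps
print(even_survives)  # the quadratic loses its real zeros when eps > 0
```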

References

Andrade, K., Gomide, O., Novaes, D.: Qualitative Analysis of Polycycles in Filippov Systems, arXiv:1905.11950v2 (2019)

Andrade, K., Jeffrey, M., Martins, R., Teixeira, M.: On the Dulac’s problem for piecewise analytic vector fields. J. Diff. Equ. 4, 2259–2273 (2019)

Andronov, A., Vitt, A., Khaikin, S.: Theory of Oscillators, Translated from the Russian by F. Immirzi; translation edited and abridged by W. Fishwick. Pergamon Press, Oxford (1966)

Bonet, C., Jeffrey, M.R., Martín, P., Olm, J.M.: Ageing of an oscillator due to frequency switching. Commun. Nonlinear Sci. Numer. Simul. 102, 105950 (2021). https://doi.org/10.1016/j.cnsns.2021.105950

Buzzi, C., Carvalho, T., Euzébio, R.: On Poincaré-Bendixson theorem and non-trivial minimal sets in planar nonsmooth vector fields. Publicacions Matemàtiques 62, 113–131 (2018)

Cherkas, L.: The stability of singular cycles. Differ. Uravn. 4, 1012–1017 (1968)

Duff, G.: Limit-cycles and rotated vector fields. Ann. Math. 57, 15–31 (1953)

Dulac, H.: Sur les cycles limites. Bull. Soc. Math. France 62, 45–188 (1923)

Dumortier, F., Roussarie, R., Rousseau, C.: Elementary graphics of cyclicity 1 and 2. Nonlinearity 7, 1001–1043 (1994)

Dumortier, F., Morsalani, M., Rousseau, C.: Hilbert’s 16th problem for quadratic systems and cyclicity of elementary graphics. Nonlinearity 9, 1209–1261 (1996)

Filippov, A.: Differential Equations with Discontinuous Right-hand Sides, Translated from the Russian, Mathematics and its Applications (Soviet Series) 18. Kluwer Academic Publishers Group, Dordrecht (1988)

Gasull, A., Mañosa, V., Mañosas, F.: Stability of certain planar unbounded polycycles. J. Math. Anal. Appl. 269, 332–351 (2002)

Guckenheimer, J., Holmes, P.: Nonlinear oscillations, dynamical systems, and bifurcations of vector fields. Springer-Verlag, New York (1983)

Han, M., Wu, Y., Bi, P.: Bifurcation of limit cycles near polycycles with n vertices. Chaos, Solitons Fractals 22, 383–394 (2004)

Holmes, P.: Averaging and chaotic motions in forced oscillations. SIAM J. Appl. Math. 38, 65–80 (1980)

Jeffrey, M.: Modeling with Nonsmooth Dynamics. Springer Nature, Switzerland AG (2020)

Jeffrey, M.R., Seidman, T.I., Teixeira, M.A., Utkin, V.I.: Into higher dimensions for nonsmooth dynamical systems. Phys. D: Nonlinear Phenomena 434, 133222 (2022). https://doi.org/10.1016/j.physd.2022.133222

Kelley, A.: The stable, center-stable, center, center-unstable, unstable manifolds. J. Diff. Equ. 3, 546–570 (1967)

Marin, D., Villadelprat, J.: Asymptotic expansion of the Dulac map and time for unfoldings of hyperbolic saddles: Local setting. J. Diff. Equ. 269, 8425–8467 (2020)

Marin, D., Villadelprat, J.: Asymptotic expansion of the Dulac map and time for unfoldings of hyperbolic saddles: General setting. J. Diff. Equ. 275, 684–732 (2021)

Mourtada, A.: Cyclicite finie des polycycles hyperboliques de champs de vecteurs du plan mise sous forme normale. In: Bifurcations of Planar Vector Fields (Luminy Meeting Proceedings, France), Lecture Notes in Mathematics, vol. 1455, pp. 272–314. Springer-Verlag, Berlin Heidelberg (1990)

Mourtada, A.: Degenerate and non-trivial hyperbolic polycycles with two vertices. J. Diff. Equ. 113, 68–83 (1994)

Novaes, D., Rondon, G.: On limit cycles in regularized Filippov systems bifurcating from homoclinic-like connections to regular-tangential singularities. Physica D 442, 133526 (2022)

Novaes, D., Teixeira, M., Zeli, I.: The generic unfolding of a codimension-two connection to a two-fold singularity of planar Filippov systems. Nonlinearity 31, 2083–2104 (2018)

Perko, L.: Homoclinic loop and multiple limit cycle bifurcation surfaces. Trans. Amer. Math. Soc. 344, 101–130 (1994)

Perko, L.: Differential Equations and Dynamical Systems. Texts in Applied Mathematics. Springer-Verlag, New York (2001)

Sotomayor, J.: Curvas Definidas por Equações Diferenciais no Plano. Instituto de Matemática Pura e Aplicada, Rio de Janeiro (1981)

Ye, Y., Cai, S., Lo, C.: Theory of Limit Cycles. American Mathematical Society, Providence, R.I. (1986)

Acknowledgements

We thank the reviewers for their comments and suggestions, which helped us to improve the presentation of this paper. The author is supported by São Paulo Research Foundation (FAPESP), grants 2019/10269-3 and 2021/01799-9.

Author information


Contributions

All authors contributed equally to this work.


Ethics declarations

Conflicts of interest

The authors declare no competing interests.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Santana, P. Stability and Cyclicity of Polycycles in Non-smooth Planar Vector Fields. Qual. Theory Dyn. Syst. 22, 142 (2023). https://doi.org/10.1007/s12346-023-00838-4


DOI: https://doi.org/10.1007/s12346-023-00838-4