Abstract

To obtain a lower bound for the number of limit cycles of near-Hamiltonian systems, one often faces the difficulty of verifying the independence of the coefficients of certain polynomials. The difficulty mainly comes from tedious iterative computations. In the present paper, we provide an approach to verifying this independence that considerably reduces the amount of calculation, and we illustrate the method by perturbing a piecewise smooth Hamiltonian system with a homoclinic loop. Using this method, we prove that the maximal number of limit cycles of this system bifurcating from the period annulus, as predicted by the first order Melnikov function, is \(n+[\frac{n+1}{2}]\), and that this bound can be reached.

1 Introduction and Main Results

Abrupt changes following slow evolution are ubiquitous in natural and artificial systems and are usually described by piecewise smooth mathematical models. Such models have long attracted much attention in nonlinear science. As a class of nonlinear dynamical systems, piecewise smooth dynamical systems are applied in many fields of applied science and engineering, such as vibro-impact systems in mechanical engineering, stick-slip vibration systems with dry friction, and circuit systems with controllable switches; see [1, 8, 17].

As for smooth differential systems, the number and distribution of limit cycles are among the important problems for non-smooth differential systems. As far as we know, there are two basic methods for studying the number of limit cycles: the Melnikov function method developed in [6, 14] and the averaging method established in [12, 13]. Using these two methods, many researchers have extensively studied the upper or lower bound of the number of limit cycles for the following piecewise smooth near-Hamiltonian system

where \(0<|\varepsilon |\ll 1\), \(H^\pm (x,y)\) are polynomials in x and y of degree \(m+1\), and

To obtain the upper bound, one usually analyzes the algebraic structure of the first order Melnikov function M(h) of system (1.1) with the help of Picard-Fuchs equations. The upper bound is then obtained by applying the methods of [7, 9, 22, 26], the argument principle, the Chebyshev criterion, etc.; see [2, 5, 10, 16, 20, 21, 23, 25] for works that have used these methods. Fortunately, for the upper bound one does not need to verify the independence of the coefficients of the coefficient polynomials of the generators of M(h), which is a very intricate task. For the lower bound, however, this verification is unavoidable. In [11, 14, 24], the authors obtained lower bounds for the number of limit cycles bifurcating from the period annulus of system (1.1) with a homoclinic loop, a heteroclinic loop or an eye-figure loop. To establish the independence of the coefficients, they chose special perturbation polynomials \(f^\pm (x,y)\) and \(g^\pm (x,y)\) to simplify the calculation. In [4, 15, 18, 19], the authors obtained lower bounds for general perturbation polynomials, but their proofs of the independence of the coefficients involve lengthy computations. With this in mind, in the present paper we provide a way to verify the independence of the coefficients that considerably reduces the amount of calculation, and we illustrate the method with a concrete example.

Consider the following perturbed piecewise smooth Hamiltonian system

The corresponding Hamiltonian functions for system (1.2)\(|_{\varepsilon =0}\) are

and

When \(\varepsilon =0\), system (1.2) has a family of periodic orbits as follows

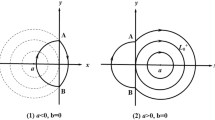

with \(h\in (0,\frac{1}{2})\). As h tends to 0, \(\Gamma _h\) approaches the origin, and as h tends to \(\frac{1}{2}\), \(\Gamma _h\) approaches a homoclinic loop passing through the saddle point (1,0); see Fig. 1.

Fig. 1 The phase portrait of system (1.2) with \(\varepsilon =0\)

By [6, 14], the first order Melnikov function of system (1.2) corresponding to the periodic orbits \(\{\Gamma _h|h\in (0,\frac{1}{2})\}\) is given by

and the number of zeros of M(h) controls the number of limit cycles of system (1.2) if M(h) is not identically zero. In [10] the authors posed the following conjecture.

Conjecture

By using the first order Melnikov function, the maximal number of limit cycles of system (1.2) bifurcating from the period annulus around the origin is \(n+[\frac{n+1}{2}]\).

We confirm the conjecture with the following theorem.

Theorem 1.1

By using the first order Melnikov function, the number of limit cycles of system (1.2) bifurcating from the period annulus around the origin is not more than \(n+[\frac{n+1}{2}]\), and this bound can be reached.

This paper is organized as follows. In Sect. 2, we obtain the detailed expression of the first order Melnikov function M(h) and verify the independence of the coefficients of the coefficient polynomials of the generators of M(h) by mathematical induction. Section 3 is devoted to the proof of Theorem 1.1. Finally, conclusions are drawn in Sect. 4.

2 The Algebraic Structure of the First Order Melnikov Function

In order to estimate the number of zeros of the first order Melnikov function M(h), one should study the algebraic structure of M(h). To this end, we denote

Since the orbits \(\Gamma ^\pm _h\) are symmetric with respect to the x-axis, \(I_{i,2j+1}(h)=J_{i,2j+1}(h)\equiv 0\). So we only need to consider \(I_{i,2j}(h)\) and \(J_{i,2j}(h)\).
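The vanishing of the odd-in-y integrals uses only the symmetry of the arc. The following Python sketch checks this numerically under two assumptions that are not taken from the text: that \(I_{i,j}(h)\) integrates the one-form \(x^iy^jdy\) along \(\Gamma _h^+\) (consistent with the one-forms used later in this section), and that \(\Gamma _h^+\) is replaced by a hypothetical stand-in, the right half of the unit circle traversed from (0,1) to (0,-1), which is likewise symmetric with respect to the x-axis.

```python
import math


def simpson(f, a, b, n=2000):
    """Composite Simpson rule for the signed integral of f from a to b (n even)."""
    if n % 2:
        raise ValueError("n must be even")
    step = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * step)
    return total * step / 3


def arc_integral(i, k):
    """Integral of x^i y^k dy along a hypothetical arc symmetric about the x-axis.

    The arc is the right half of the unit circle, x = cos t, y = sin t,
    traversed from (0, 1) to (0, -1), i.e. t runs from pi/2 down to -pi/2,
    so dy = cos t dt.
    """
    integrand = lambda t: math.cos(t) ** i * math.sin(t) ** k * math.cos(t)
    return simpson(integrand, math.pi / 2, -math.pi / 2)


# Odd powers of y integrate to zero; even powers generally do not.
for i in range(3):
    print(i, [round(arc_integral(i, k), 6) for k in range(4)])
```

Reflecting \(y\mapsto -y\) maps the arc onto itself with reversed orientation, which is why only the odd powers of y cancel for a dy-form.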

The next lemma shows that M(h) can be expressed as a combination of some generator integrals with polynomial coefficients, and that the coefficients of these polynomials can be taken as free parameters.

Lemma 2.1

For \(h\in (0,\frac{1}{2})\) and any \(n\in \mathbb {N} \) it holds that

where \(\alpha _i\), \(\beta _i\) and \(\gamma _i\) are constants and can be chosen arbitrarily.

Proof

Let D be the interior of \(\Gamma _{h}^+\cup \overrightarrow{BA}\); see Fig. 1. Using Green's formula, one has

Thus,

Similarly, one has

By (1.5), (2.2) and (2.3), one obtains

where

It is easy to check that \(\xi _{i,j}\) and \(\eta _{i,j}\) can be taken as free parameters.

\(\square \)

Now we claim that

where \({\bar{\alpha }}_i\), \(i=0,1,2,\cdots ,[\frac{n}{2}]\) and \({\bar{\beta }}_j\), \(j=0,1,2,\cdots ,[\frac{n-1}{2}]\) can be taken as free parameters.

In fact, differentiating both sides of \(H^+(x,y)=h\) with respect to y, one obtains

Multiplying (2.5) by the one-form \(x^{i}y^{j-1}dy\) and integrating over \(\Gamma _h^+\), one obtains the relation

Similarly, multiplying both sides of \(H^+(x,y)=h\) by \(x^{i-2}y^{j}dy\) and integrating over \(\Gamma _h^+\), one gets another relation

Elementary manipulations reduce equations (2.6) and (2.7) to

and

We now prove the claim by induction on n. Without loss of generality, we only treat the case where n is even; the case where n is odd can be handled similarly. A direct computation using the two equalities (2.8) and (2.9) gives

Hence, one has for \(n=2,3\)

That is, the claim holds for \(n=2,3\).

Now assume that the claim holds for all \(i+j\le n-1\). Then, taking \((i,j)=(0,n),(2,n-2),\cdots ,(n-2,2)\) in (2.9) and \((i,j)=(n,0)\) in (2.8), respectively, one has

Therefore, by the induction hypothesis and (2.11), one obtains

where \({\tilde{\alpha }}_i\), \({\tilde{\beta }}_i\), \({\bar{\alpha }}_i\) and \({\bar{\beta }}_i\) are constants.

Next, we prove that \({\bar{\alpha }}_i\), \(i=0,1,2,\cdots ,[\frac{n}{2}]\) and \({\bar{\beta }}_j\), \(j=0,1,2,\cdots ,[\frac{n-1}{2}]\) can be taken as free parameters. By the induction hypothesis, the constants \({\tilde{\alpha }}_i\), \(i=0,1,2,\cdots ,[\frac{n-1}{2}]\) and \({\tilde{\beta }}_j\), \(j=0,1,2,\cdots ,[\frac{n-2}{2}]\) are independent of each other; that is, the determinant of the following Jacobian matrix

is nonzero, where the sum of the subscripts of \(\xi _{i,j}\) in this Jacobian matrix is at most \(n-1\). A direct calculation yields the following Jacobian matrix

where \({\mathbf {0}}\) is a row vector and \({\mathbf {B}}\) is a column vector. It is easy to see that

which yields \({\bar{\alpha }}_i\), \(i=0,1,2,\cdots ,[\frac{n}{2}]\) and \({\bar{\beta }}_j\), \(j=0,1,2,\cdots ,[\frac{n-1}{2}]\) can be taken as free parameters.
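The determinant step is an instance of the bordering identity \(\det \begin{pmatrix} A & {\mathbf {B}}\\ {\mathbf {0}} & d\end{pmatrix}=d\det A\). The NumPy sketch below assumes, as a hypothetical layout, that the enlarged Jacobian consists of the previous Jacobian A, a column \({\mathbf {B}}\), a zero row and one new diagonal entry d; random entries stand in for the actual partial derivatives. It also checks the parameter count: the \(n+1\) barred constants exceed the n tilde constants of the previous step by exactly one, so exactly one row and one column are added.

```python
import numpy as np


def barred_count(n):
    """Number of alpha_bar_i (i <= [n/2]) plus beta_bar_j (j <= [(n-1)/2])."""
    return (n // 2 + 1) + ((n - 1) // 2 + 1)


def tilde_count(n):
    """Number of alpha_tilde_i (i <= [(n-1)/2]) plus beta_tilde_j (j <= [(n-2)/2])."""
    return ((n - 1) // 2 + 1) + ((n - 2) // 2 + 1)


def bordered(A, B, d):
    """Assemble the hypothetical block matrix [[A, B], [0, d]]."""
    k = A.shape[0]
    return np.block([[A, B.reshape(k, 1)], [np.zeros((1, k)), np.array([[d]])]])


rng = np.random.default_rng(0)
n = 6
size = tilde_count(n)
A = rng.standard_normal((size, size))  # stands in for the step-(n-1) Jacobian
B = rng.standard_normal(size)          # stands in for the column vector B
d = 1.7                                # stands in for the new diagonal entry
J = bordered(A, B, d)
print(J.shape, np.linalg.det(J), d * np.linalg.det(A))
```

So the new determinant is nonzero exactly when \(\det A\ne 0\) (the induction hypothesis) and the new diagonal entry is nonzero.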

In a similar way, one can prove that

where \({\hat{\alpha }}_i\), \(i=0,1,2,\cdots ,[\frac{n}{2}]\) and \({\hat{\beta }}_j\), \(j=0,1,2,\cdots ,[\frac{n-1}{2}]\) can be taken as free parameters.

Since \({\bar{\alpha }}_i\) and \({\bar{\beta }}_j\) are expressed in terms of \(\xi _{i,j}\) only, while \({\hat{\alpha }}_i\) and \({\hat{\beta }}_j\) are expressed in terms of \(\eta _{i,j}\) only, and the \(\xi _{i,j}\) and \(\eta _{i,j}\) are free parameters, the constants \({\bar{\alpha }}_i\), \({\bar{\beta }}_j\), \({\hat{\alpha }}_i\) and \({\hat{\beta }}_j\) are independent of each other. A straightforward calculation yields that

In view of (2.4), (2.13) and (2.14), one gets the equality (2.1), where

which means that \(\alpha _i\), \(\beta _i\) and \(\gamma _i\) can be chosen arbitrarily. This ends the proof. \(\lozenge \)

Remark 2.1

In the proof of Lemma 2.1, we have verified by mathematical induction that the coefficients of the coefficient polynomials of \(I_{0,0}(h)\), \(I_{1,0}(h)\), \(J_{0,0}(h)\) and \(J_{1,0}(h)\) are independent of each other under general polynomial perturbations. Compared with the verification processes in the previous literature, the calculation in this paper is simpler.

3 Proof of Theorem 1.1

In order to obtain the lower bound of the number of zeros of M(h), we resort to a result of Coll, Gasull and Prohens published in [3]. We review this result here for the convenience of the reader.

Lemma 3.1

[3]. Consider \(p+1\) linearly independent analytic functions \(f_i:U\rightarrow {\mathbb {R}},\ i=0,1,2,\cdots , p\), where \(U\subset {\mathbb {R}}\) is an interval. Suppose that there exists \(j\in \{0,1,\cdots ,p\}\) such that \(f_j\) has constant sign. Then there exist \(p+1\) constants \(\delta _i,\ i=0,1,\cdots ,p\), such that \(f(x)=\sum _{i=0}^p\delta _if_i(x)\) has at least p simple zeros in U.
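The linear-algebra idea behind Lemma 3.1 can be illustrated numerically: with \(p+1\) independent functions, p prescribed zeros impose only p homogeneous linear conditions on the coefficients \(\delta _i\), so a nonzero solution exists. The Python sketch below is not the proof from [3]; it uses a toy family (the monomials \(u,u^2,\cdots ,u^{p+1}\) on \((0,\frac{\sqrt{2}}{2})\), where \(f_0=u\) has constant sign) and hypothetical nodes, and it obtains the coefficients from a null vector computed with NumPy's SVD.

```python
import numpy as np

p = 3
# Toy family f_i(u) = u^(i+1), i = 0..p; f_0(u) = u > 0 on (0, sqrt(2)/2).
basis = [lambda u, e=i + 1: u**e for i in range(p + 1)]
nodes = np.linspace(0.1, 0.6, p)  # hypothetical zeros to impose

# Row k is (f_0(u_k), ..., f_p(u_k)); we need A @ delta = 0 with delta != 0.
A = np.array([[f(u) for f in basis] for u in nodes])
_, _, vh = np.linalg.svd(A)  # vh is (p+1) x (p+1); its last row spans ker A
delta = vh[-1]


def combo(u):
    """The combination sum_i delta_i f_i(u)."""
    return sum(c * f(u) for c, f in zip(delta, basis))


for u in nodes:
    # value at the node, and whether the combination changes sign across it
    print(u, combo(u), combo(u - 1e-3) * combo(u + 1e-3) < 0)
```

For this toy family the combination is \(u\) times a cubic with the three prescribed roots, so each zero is simple; for general analytic families, simplicity is exactly what the argument in [3] has to secure.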

To apply Lemma 3.1, one should show that the first order Melnikov function M(h) can be expressed as a combination of some linearly independent functions. To this end, we start with some preliminary considerations.

Let \(u=\sqrt{h},\ h\in (0,\frac{1}{2})\). Then (2.1) can be written as

where \(\delta _i\) are constants and can be chosen as free parameters. Therefore, one finds that

in view of

where

It is easy to check that \(\varphi (u)\) satisfies the following differential equation

In order to show the linear independence of the generating functions of M(u) in (3.1), we extend M(u) to a complex domain. From now on, we suppose that u is complex. The function \(\varphi (u)\) can be analytically extended to the complex domain \(\Omega = {\mathbb {C}}\backslash \{u\in {\mathbb {R}}|\, |u|\ge \frac{\sqrt{2}}{2}\}\). For \(|u|>\frac{\sqrt{2}}{2}\), let \(\varphi ^\pm (u)\) denote the analytic continuation of \(\varphi (u)\) along an arc with \(\pm Im (u)>0\), respectively. Then, by (3.2), the functions \(\varphi ^\pm (u)\) satisfy

where \(\mathbf{i}^2=-1\) and c is a nonzero real number.

The following proposition plays a key role in estimating the lower bound of the number of zeros of M(h).

Proposition 3.1

The Melnikov function M(u) in (3.1) can be represented as a linear combination of the following \(n+[\frac{n+1}{2}]+1\) linearly independent generating functions

Proof

We assume that there exist constants \(\sigma _1,\cdots ,\sigma _{n+1},\mu _0,\mu _1,\cdots ,\mu _{[\frac{n-1}{2}]}\) such that

To show the linear independence of the generating functions, we only need to prove that all the coefficients in (3.4) are zero.

Since \(\varphi (u)\) can be analytically extended to the domain \(\Omega \), the Melnikov function can also be analytically extended to \(\Omega \). For \(u\in (-\infty ,-\frac{\sqrt{2}}{2})\cup (\frac{\sqrt{2}}{2},+\infty )\), by (3.3) and (3.4), one has that

which implies \(\mu _i=0\), \(i=0,1,2,\cdots ,[\frac{n-1}{2}].\) Then \({\overline{M}}(u)\) in (3.4) reduces to

Since the functions \(u,u^2,\cdots ,u^{n+1}\) are linearly independent, one obtains that \(\sigma _i=0\), \(i=1,2,\cdots ,n+1\). This ends the proof.

\(\square \)
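The linear independence of \(u,u^2,\cdots ,u^{n+1}\) used above can be confirmed by evaluating them at \(n+1\) distinct nonzero points: the resulting matrix factors as a diagonal matrix times a Vandermonde matrix, so its determinant is \(\prod _k u_k\prod _{k<l}(u_l-u_k)\ne 0\), and a vanishing combination must have all coefficients equal to zero. A minimal exact check with Python's fractions module, using the hypothetical sample points \(u_k=k/10\), all inside \((0,\frac{\sqrt{2}}{2})\):

```python
from fractions import Fraction
from math import prod


def det(mat):
    """Exact determinant via Gaussian elimination over the rationals."""
    m = [row[:] for row in mat]
    size = len(m)
    result = Fraction(1)
    for col in range(size):
        pivot = next((r for r in range(col, size) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result  # a row swap flips the sign
        result *= m[col][col]
        for r in range(col + 1, size):
            factor = m[r][col] / m[col][col]
            for c in range(col, size):
                m[r][c] -= factor * m[col][c]
    return result


def monomial_matrix(n):
    """Rows: points u_k = k/10 (k = 1..n+1); columns: u^1, ..., u^(n+1)."""
    points = [Fraction(k, 10) for k in range(1, n + 2)]
    return points, [[u**j for j in range(1, n + 2)] for u in points]


def closed_form(points):
    """prod(u_k) times the Vandermonde product prod_{a<b} (u_b - u_a)."""
    size = len(points)
    return prod(points) * prod(points[b] - points[a] for a in range(size) for b in range(a + 1, size))


for n in range(1, 7):
    points, mat = monomial_matrix(n)
    print(n, det(mat) == closed_form(points), det(mat) != 0)
```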

The following lemma is useful for estimating the upper bound of the number of zeros of M(h).

Lemma 3.2

Let \(f^{(n)}(h)\) denote the nth-order derivative of f(h). Then for any \(m,n\in \mathbb {N}\) with \(n\ge m+1\), it holds that

where \(P_{n-1}(h)\) is a polynomial of degree \(n-1\).

Proof

By induction on k, it is easy to get that

where \(Q_{k-1}(h)\) is a polynomial of degree \(k-1\). Hence, by the Leibniz formula and the above equality, one finds that

The proof is completed. \(\square \)
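The Leibniz formula is effective here because \(\frac{d^k}{dh^k}h^m=0\) for \(k>m\), so when \(n\ge m+1\) only the terms with \(k=0,\cdots ,m\) survive in \((h^m g)^{(n)}=\sum _{k}\binom{n}{k}(h^m)^{(k)}g^{(n-k)}\). The SymPy sketch below checks this truncated form against direct differentiation; the factor \(g(h)=\sqrt{1-2h}\) is a hypothetical placeholder, chosen only because it is singular at \(h=\frac{1}{2}\), and is not the function appearing in Lemma 3.2.

```python
import sympy as sp

h = sp.symbols("h")


def nth_derivative(expr, k):
    """k-th derivative with respect to h; k = 0 returns expr unchanged."""
    return expr if k == 0 else sp.diff(expr, h, k)


def leibniz_truncated(m, n, g):
    """n-th derivative of h**m * g via the Leibniz formula.

    Terms with k > m vanish because the k-th derivative of h**m is zero,
    so the sum runs over k = 0..min(m, n) only.
    """
    return sum(
        sp.binomial(n, k) * nth_derivative(h**m, k) * nth_derivative(g, n - k)
        for k in range(min(m, n) + 1)
    )


g = sp.sqrt(1 - 2 * h)  # hypothetical placeholder factor
m, n = 2, 5             # any pair with n >= m + 1
direct = sp.diff(h**m * g, h, n)
print(sp.simplify(direct - leibniz_truncated(m, n, g)))
```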

Proof of Theorem 1.1

In accordance with Lemma 2.1, Lemma 3.1 and Proposition 3.1, and since the generating function u has constant sign on \((0,\frac{\sqrt{2}}{2})\), M(u) in (3.1) can have \(n+[\frac{n+1}{2}]\) zeros on \((0,\frac{\sqrt{2}}{2})\), which means that M(h) in (2.1) can have \(n+[\frac{n+1}{2}]\) zeros on the interval \((0,\frac{1}{2})\). Therefore, system (1.2) can have \(n+[\frac{n+1}{2}]\) limit cycles for \(h\in (0,\frac{1}{2})\).
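The count used in this step can be checked mechanically. Proposition 3.1 provides the \(n+1\) generating functions \(u,\cdots ,u^{n+1}\) and one further generating function for each coefficient \(\mu _0,\cdots ,\mu _{[\frac{n-1}{2}]}\) in (3.4); Lemma 3.1 applied to \(p+1\) functions then yields p zeros. A short Python check, where the integer part [x] of a nonnegative quantity is computed by floor division:

```python
def generator_count(n):
    """Number of generating functions in Proposition 3.1."""
    monomials = n + 1            # u, u^2, ..., u^(n+1)
    mu_terms = (n - 1) // 2 + 1  # one per coefficient mu_0, ..., mu_[(n-1)/2]
    return monomials + mu_terms


def lower_bound(n):
    """Zeros guaranteed by Lemma 3.1: p zeros from p + 1 independent functions."""
    return generator_count(n) - 1


for n in range(1, 9):
    print(n, generator_count(n), lower_bound(n), n + (n + 1) // 2)
```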

Next, we derive the upper bound of the number of limit cycles of system (1.2). Equation (2.1) can be written as

where \(\zeta _i\) are constants. Differentiating (3.5) \([\frac{n-1}{2}]+2\) times and using Lemma 3.2 gives

Thus \(M^{\left( {\left[ {\frac{n-1}{2}}\right] +2}\right) }(h)\) has at most \([\frac{n}{2}]+\left[ {\frac{n-1}{2}}\right] +1\) zeros on \((0,\frac{1}{2})\). Therefore, by Rolle's theorem, M(h) has at most \(n+\left[ {\frac{n+1}{2}}\right] +1\) zeros on \([0,\frac{1}{2})\). Since \(M(0)=0\), M(h) has at most \(n+[\frac{n+1}{2}]\) zeros on \((0,\frac{1}{2})\). This completes the proof of Theorem 1.1. \(\square \)
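The zero counting at the end of the proof combines Rolle's theorem with the zero \(M(0)=0\): if M(h) had \(N+k+1\) zeros on \([0,\frac{1}{2})\), its kth derivative would have at least \(N+1\) zeros on \((0,\frac{1}{2})\), so a bound of N zeros for the kth derivative gives at most \(N+k\) zeros for M(h). A short Python check of the resulting arithmetic for many n:

```python
def rolle_bound(n):
    """Upper bound for the zeros of M(h) on [0, 1/2) obtained via Rolle's theorem."""
    k = (n - 1) // 2 + 2                          # number of differentiations
    derivative_zeros = n // 2 + (n - 1) // 2 + 1  # bound for the k-th derivative
    return derivative_zeros + k


def upper_bound(n):
    """Discard the zero at h = 0 to get the bound on (0, 1/2)."""
    return rolle_bound(n) - 1


for n in range(1, 9):
    print(n, rolle_bound(n), upper_bound(n))
```

For every n the upper bound coincides with the lower bound obtained from Lemma 3.1, which is why the bound in Theorem 1.1 is sharp.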

4 Conclusion

The motivation of this work is to find a simple approach to verifying the independence of the coefficients of the coefficient polynomials of the generators of the first order Melnikov function M(h) of system (1.1), since this is an essential step in estimating the lower bound of the number of zeros of M(h) and the existing methods for verifying independence are cumbersome.

To achieve this goal, we have illustrated the approach by estimating the number of limit cycles of a near-Hamiltonian system with a homoclinic loop. Using this method, we have proved that the near-Hamiltonian system (1.2) has at most \(n+[\frac{n+1}{2}]\) limit cycles bifurcating from the period annulus and that this number can be reached. This is a new result on the number of limit cycles for such systems with a homoclinic loop, and the method in this paper can also be applied to the study of limit cycle bifurcations of integrable differential systems.

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

di Bernardo, M., Budd, C., Champneys, A., Kowalczyk, P.: Piecewise-Smooth Dynamical Systems: Theory and Applications. Springer-Verlag, London (2008)

Chen, Y., Yu, J.: The study on cyclicity of a class of cubic systems. Discrete Contin. Dyn. Syst.-B 27(11), 6233–6256 (2022)

Coll, B., Gasull, A., Prohens, R.: Bifurcation of limit cycles from two families of centers. Dyn. Contin. Discrete Impuls. Syst. Ser. A Math. Anal. 12, 275–287 (2005)

Gong, S., Han, M.: An estimate of the number of limit cycles bifurcating from a planar integrable system. Bull. Sci. Math. 176, 103118 (2022)

Grau, M., Mañosas, F., Villadelprat, J.: A Chebyshev criterion for Abelian integrals. Trans. Amer. Math. Soc. 363, 109–129 (2011)

Han, M., Sheng, L.: Bifurcation of limit cycles in piecewise smooth systems via Melnikov function. J. Appl. Anal. Comput. 5, 809–815 (2015)

Horozov, E., Iliev, I.: Linear estimate for the number of zeros of Abelian integrals with cubic Hamiltonians. Nonlinearity 11, 1521–1537 (1998)

Kunze, M.: Non-Smooth Dynamical Systems. Springer-Verlag, Berlin (2000)

Li, W., Zhao, Y., Li, C., Zhang, Z.: Abelian integrals for quadratic centers having almost all their orbits formed by quartics. Nonlinearity 15, 863–885 (2002)

Liang, F., Han, M., Romanovski, V.: Bifurcation of limit cycles by perturbing a piecewise linear Hamiltonian system with a homoclinic loop. Nonlinear Anal. 75, 4355–4374 (2012)

Liang, F., Han, M.: On the number of limit cycles in small perturbations of a piecewise linear Hamiltonian system with a heteroclinic loop. Chin. Ann. Math. 37B(2), 267–280 (2016)

Llibre, J., Mereu, A., Novaes, D.: Averaging theory for discontinuous piecewise differential systems. J. Differ. Equ. 258, 4007–4032 (2015)

Llibre, J., Novaes, D., Teixeira, M.: On the birth of limit cycles for non-smooth dynamical systems. Bull. Sci. Math. 139, 229–244 (2015)

Liu, X., Han, M.: Bifurcation of limit cycles by perturbing piecewise Hamiltonian systems. Int. J. Bifurc. Chaos 20, 1379–1390 (2010)

Shi, H., Bai, Y., Han, M.: On the maximum number of limit cycles for a piecewise smooth differential system. Bull. Sci. Math. 163, 102887 (2020)

Sui, S., Yang, J., Zhao, L.: On the number of limit cycles for generic Lotka-Volterra system and Bogdanov-Takens system under perturbations of piecewise smooth polynomials. Nonlinear Anal. Real World Appl. 49, 137–158 (2019)

Teixeira, M.: Perturbation theory for non-smooth systems. In: Encyclopedia of Complexity and Systems Science. Springer, New York (2009)

Xiong, Y., Han, M.: Limit cycles appearing from a generalized heteroclinic loop with a cusp and a nilpotent saddle. J. Differ. Equ. 303, 575–607 (2021)

Xiong, Y., Han, M.: Limit cycle bifurcations by perturbing a class of planar quintic vector fields. J. Differ. Equ. 269, 10964–10994 (2020)

Xiong, Y., Hu, J.: A class of reversible quadratic systems with piecewise polynomial perturbations. Appl. Math. Comput. 362, 124527 (2019)

Yang, J., Zhao, L.: Bounding the number of limit cycles of discontinuous differential systems by using Picard-Fuchs equations. J. Differ. Equ. 264, 5734–5757 (2018)

Yang, J., Zhao, L.: The cyclicity of period annuli for a class of cubic Hamiltonian systems with nilpotent singular points. J. Differ. Equ. 263, 5554–5581 (2017)

Yang, J.: Bifurcation of limit cycles of the nongeneric quadratic reversible system with discontinuous perturbations. Sci. China Math. 63(5), 873–886 (2020)

Yang, J., Zhao, L.: Limit cycle bifurcations for piecewise smooth Hamiltonian systems with a generalized eye-figure loop. Int. J. Bifurc. Chaos 26, 1650204 (2016)

Zhao, L., Qi, M., Liu, C.: The cyclicity of period annuli of a class of quintic Hamiltonian systems. J. Math. Anal. Appl. 403, 391–407 (2013)

Zhao, Y., Zhang, Z.: Linear estimate of the number of zeros of Abelian integrals for a kind of quartic Hamiltonians. J. Differ. Equ. 155, 73–88 (1999)

Acknowledgements

Supported by the National Natural Science Foundation of China (12161069, 12071037); the Ningxia Natural Science Foundation of China (2022AAC05044, 2020AAC03264); the Construction of First-class Disciplines of Higher Education of Ningxia (Pedagogy) (NXYLXK2021B10); and the Scientific Research Program of Higher Education of Ningxia (NGY2020074).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yang, J., Zhao, L. Bifurcation of Limit Cycles of a Piecewise Smooth Hamiltonian System. Qual. Theory Dyn. Syst. 21, 142 (2022). https://doi.org/10.1007/s12346-022-00674-y
